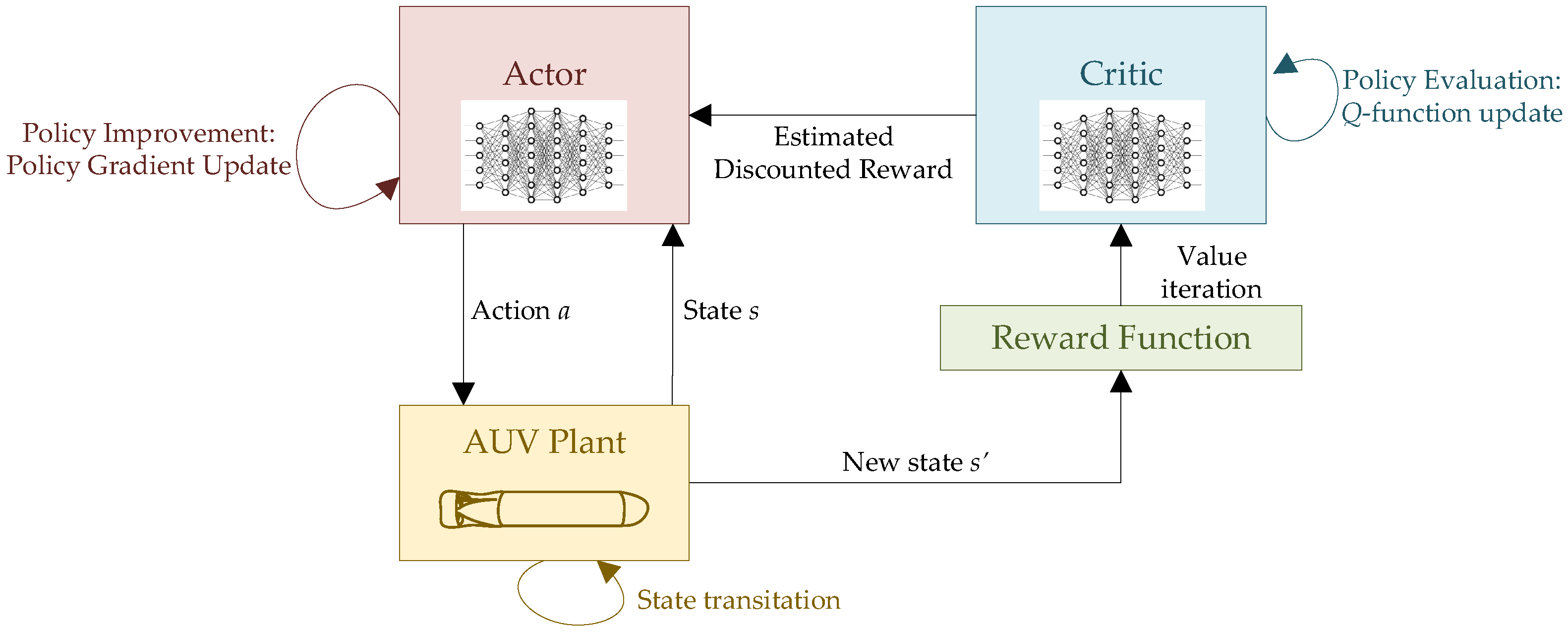

4.1. Reinforcement Learning Statement

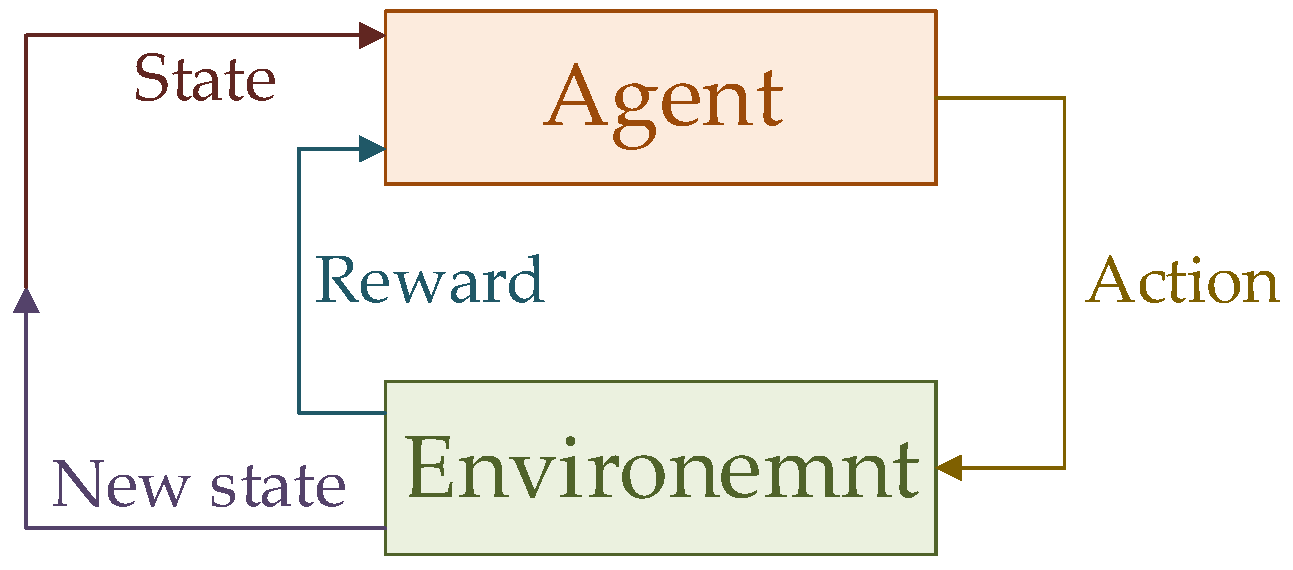

RL is a decision-making framework in which an agent learns a desired behaviour, or policy $\pi$, from direct interactions with the environment [41]. At each time step, the agent is in a state $s$ and takes an action $a$. As a result, it lands in a new state while receiving a reward $r$. A Markov decision process is used to model the action selection depending on the value function, which represents an estimate of the future reward. By interacting with the environment for a long time, the agent learns an optimal policy, which maximises the total expected reward. This is shown graphically in Figure 3.

The on-policy action-value function yields the expected reward if the agent starts in state $s$, takes an action $a$ and afterwards acts according to policy $\pi$ [41]:

where the trajectory is a sequence of actions and states, $\gamma$ is a discount factor and $\mathbb{E}$ expresses the expected, discounted value. Conversely, the optimal action-value function yields the expected reward if the optimal policy is followed after starting from state $s$ and taking action $a$. Since the optimal policy will result in the selection of the action that maximises the expected reward starting from $s$, it is possible to obtain the optimal action from the optimal action-value function as follows:

As a result, it is possible to express the Bellman equation for the optimal action-value function as follows [41]:

where $P$ indicates state transitions according to a stochastic policy.
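For reference, the three relations introduced in this subsection can be sketched in the standard notation of [41]. The symbols are assumptions chosen to agree with the surrounding definitions: $\tau$ denotes a trajectory of states and actions, $\gamma$ the discount factor, $r_t$ the reward at step $t$, $Q^{\pi}$ and $Q^{*}$ the on-policy and optimal action-value functions, and $s' \sim P$ the next state drawn from the state-transition distribution.

```latex
\begin{align}
Q^{\pi}(s,a) &= \mathbb{E}_{\tau \sim \pi}\left[\sum_{t=0}^{\infty} \gamma^{t} r_{t} \,\middle|\, s_{0}=s,\ a_{0}=a\right], \\
a^{*}(s) &= \arg\max_{a} Q^{*}(s,a), \\
Q^{*}(s,a) &= \mathbb{E}_{s' \sim P}\left[r(s,a) + \gamma \max_{a'} Q^{*}(s',a')\right].
\end{align}
```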

RL algorithms can be subdivided into model-based and model-free schemes [42]. Model-based strategies enable the agent to plan by exploiting a model of the environment, thus greatly improving its behaviour; however, exact models of the environment are generally unavailable in practice. Any existing bias in the model is likely to cause significant problems with generalising the agent's behaviour to the actual environment. As a result, RL research has focused on model-free approaches to date.

Within model-free schemes, RL algorithms can be further categorised based on what they learn. On the one hand, the strategies in the policy optimisation group directly represent the policy and optimise its parameters. Since the policy update only relies on data collected while following the policy, these approaches are known as on-policy. Note that policies can be either stochastic or deterministic. On the other hand, Q-learning strategies learn an approximation of the optimal action-value function based on the Bellman equation. As the update of the optimal Q-value may employ data collected at any point during training, these schemes are known as off-policy. Whereas policy optimisation schemes are more robust, since they directly optimise what is being sought, Q-learning methods can be significantly more sample-efficient when they work.
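As an illustration of why Q-learning is off-policy, the following minimal sketch applies one tabular Q-learning update. The target uses the maximum over next actions, so it does not depend on which policy generated the transition. The function name, the learning rate `alpha` and the table layout are assumptions made for this example and are not taken from the algorithms used later in this work.

```python
import numpy as np

def q_learning_update(q_table, s, a, r, s_next, done, alpha=0.1, gamma=0.99):
    """Apply one tabular Q-learning update in place and return the TD error."""
    # Bellman target: immediate reward plus the discounted best next value.
    # No bootstrapping is applied when the episode has ended.
    best_next = 0.0 if done else np.max(q_table[s_next])
    target = r + gamma * best_next
    td_error = target - q_table[s, a]
    q_table[s, a] += alpha * td_error
    return td_error

# Toy table with 3 states and 2 actions (hypothetical sizes).
q = np.zeros((3, 2))
err = q_learning_update(q, s=0, a=1, r=1.0, s_next=1, done=False)
```

Because the transition `(s, a, r, s_next)` can come from any stage of training, the same update can be replayed on stored data.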

Additionally, RL algorithms can be subdivided into on-line and off-line types. With on-line schemes, learning occurs in real time from the data stream. In off-line strategies, the policy is updated only at regular intervals and stored in memory. Both types of algorithms have much lower computational cost at deployment time than during training.

The first RL algorithms dealt with discretised state and action spaces [41]. Subsequently, linear features were introduced to approximate the state space to enable the treatment of complex control problems [43]. More recently, deep neural networks have been shown to provide better generalisation and improved learning, allowing scientists to develop systems capable of outperforming humans in complex games [44]. However, only recently have deep neural networks been used to approximate the action selection process, thus enabling the inclusion of continuous action signals, which are typical of realistic control problems, in the RL framework [45].

4.2. Problem Formulation

RL has been successfully applied to control applications (see, e.g., [43,46,47]). In these tasks, the controller represents the agent, while the system plant corresponds to the environment. The control output is the action, whereas the observable and estimated states may represent the state. The reward is typically expressed through a cost function. Most applications of RL to control tasks to date refer to episodic problems, i.e., the task can be defined as an episode with a specific desired outcome signalling its completion. Expressing the control problem as an episodic task greatly reduces the complexity of the problem formulation, as it enables the designer to exploit the classical RL framework.

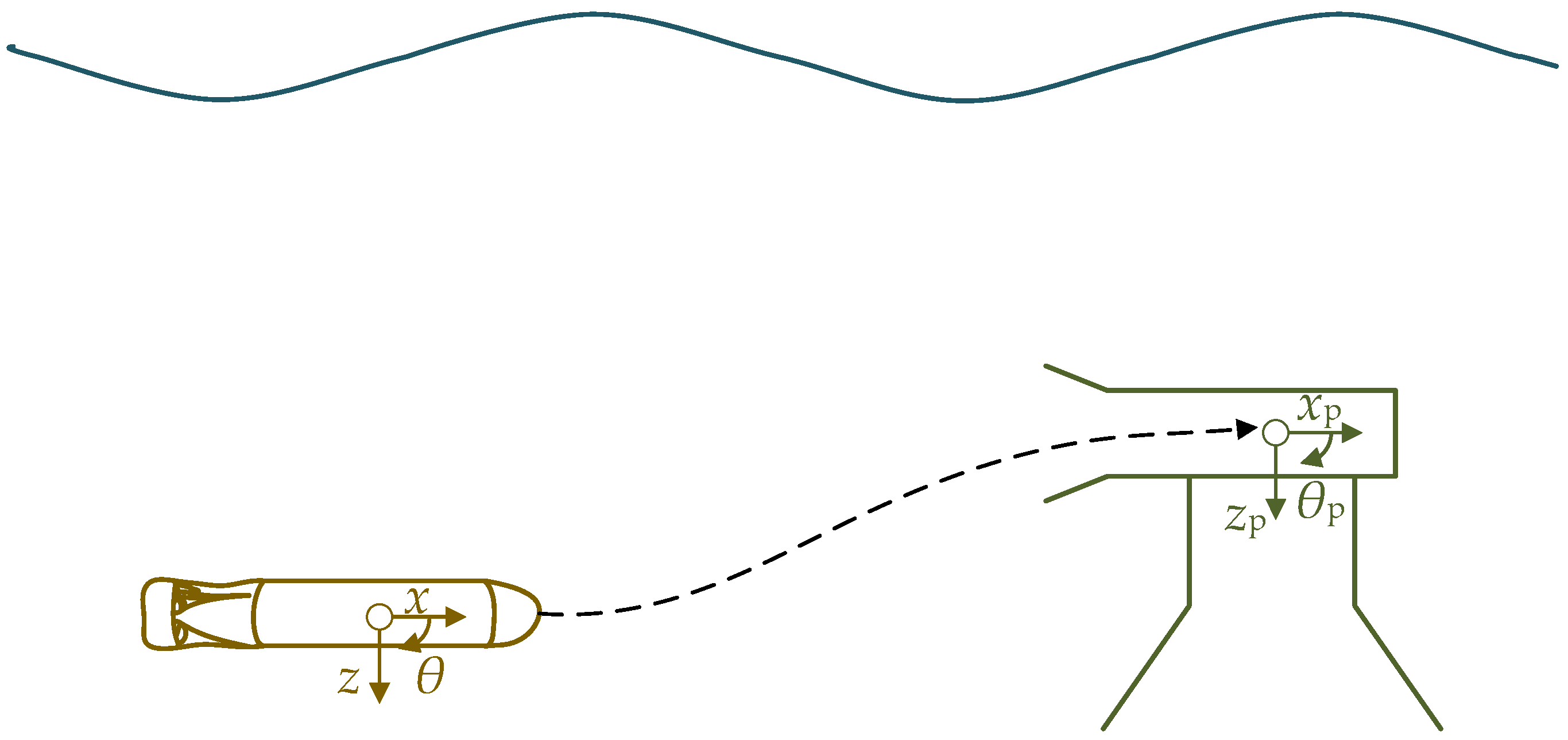

For the specific application to the docking of the AUV onto a fixed platform in surge, heave and pitch, it is possible to define the RL state space as follows:

It is necessary to add the propeller revolutions, $n$, to the state space to provide an indication of the propeller thrust. Changes in motor torque will affect the thrust, but with a delay. Hence, ideally, the controller should learn to reduce the propeller revolutions as it approaches the goal position and orientation. Similarly, the action space is identical to the control output:

However, to prevent stall and because of torque limitations, the selected continuous action should be constrained between minimum and maximum values.

Specifying an appropriate reward function is fundamental for the agent to learn the desired behaviour. Although it is possible to inverse-engineer reward functions from examples or expert pilots [48], here a reward function is designed similarly to cost functions for control problems. In particular, the reward function for the docking problem should comprise different elements: a continuous cost on the action to limit excessive power expenditure, a continuous cost on position so that the AUV is guided towards the docking point, a continuous cost on velocity so that a small terminal speed is achieved, a high penalty for exceeding realistic environmental boundaries and high rewards once the AUV is close enough to the docking point and at low speed. The penalty and rewards make the reward function discontinuous and reliant on a conditional statement. Inspiration for the reward function is taken from [32], where the authors employed RL for the landing of a spacecraft. Similarly, the cost on the velocity is expressed as a function of the distance to the desired docking point. This is because at low speeds the sternplane becomes ineffective due to the drop in lift force. Hence, a higher speed away from the docking point is necessary to achieve the desired level of control. Therefore, the reward function that has been designed for the docking of an AUV to a fixed or moving platform is

The constants in the reward function indicate weights, and $l$ indicates limiting values. A further parameter is an additional reward achieved at the end of the episode for successful docking. Note that $f$ is a boolean that expresses whether docking is achieved to the desired level of accuracy:

Additionally, the boolean $h$ describes whether the episode should be terminated because the AUV exceeds sensible environmental boundaries:

The limiting values as well as the weights and other parameters in the reward function need to be tuned until the desired performance is achieved. In particular, a compromise needs to be found between docking time, accuracy and the end speed. The boolean variables $f$ and $h$ are combined into an additional variable $d$ that determines whether the episode ends:

The variable $d$ is used in the following sections to determine the RL algorithm behaviour.
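The structure of such a reward can be sketched as follows. This is a hypothetical illustration of the listed ingredients only: the weights, limiting values, bonus, penalty and the distance-dependent target speed are placeholder assumptions, not the values or the exact functional form used in this work. The episode-end flag is taken here to be the logical OR of $f$ and $h$, which is one natural reading of the text.

```python
def is_docked(pos_err, speed, l_pos=0.1, l_vel=0.05):
    """Flag f: close enough to the docking point and slow enough (hypothetical limits)."""
    return pos_err < l_pos and abs(speed) < l_vel

def out_of_bounds(x, z, l_x=50.0, l_z=20.0):
    """Flag h: the vehicle has left a sensible operating box (hypothetical limits)."""
    return abs(x) > l_x or abs(z) > l_z

def episode_done(f, h):
    """Flag d: the episode ends on successful docking or on a boundary violation."""
    return f or h

def docking_reward(pos_err, speed, action_mag, f, h,
                   w_pos=1.0, w_vel=0.5, w_act=0.1,
                   v_max=1.0, bonus=100.0, penalty=100.0):
    """Shaped reward: continuous costs plus discontinuous bonus and penalty terms."""
    # The desired speed grows with distance, so that the sternplane keeps
    # enough authority far from the dock (assumed linear profile, capped).
    target_speed = min(v_max, pos_err)
    cost = (w_pos * pos_err
            + w_vel * abs(speed - target_speed)
            + w_act * action_mag)
    reward = -cost
    if f:       # successful docking
        reward += bonus
    if h:       # environmental boundary exceeded
        reward -= penalty
    return reward
```

In practice, the placeholder constants would be tuned jointly, since they trade docking time against accuracy and terminal speed.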

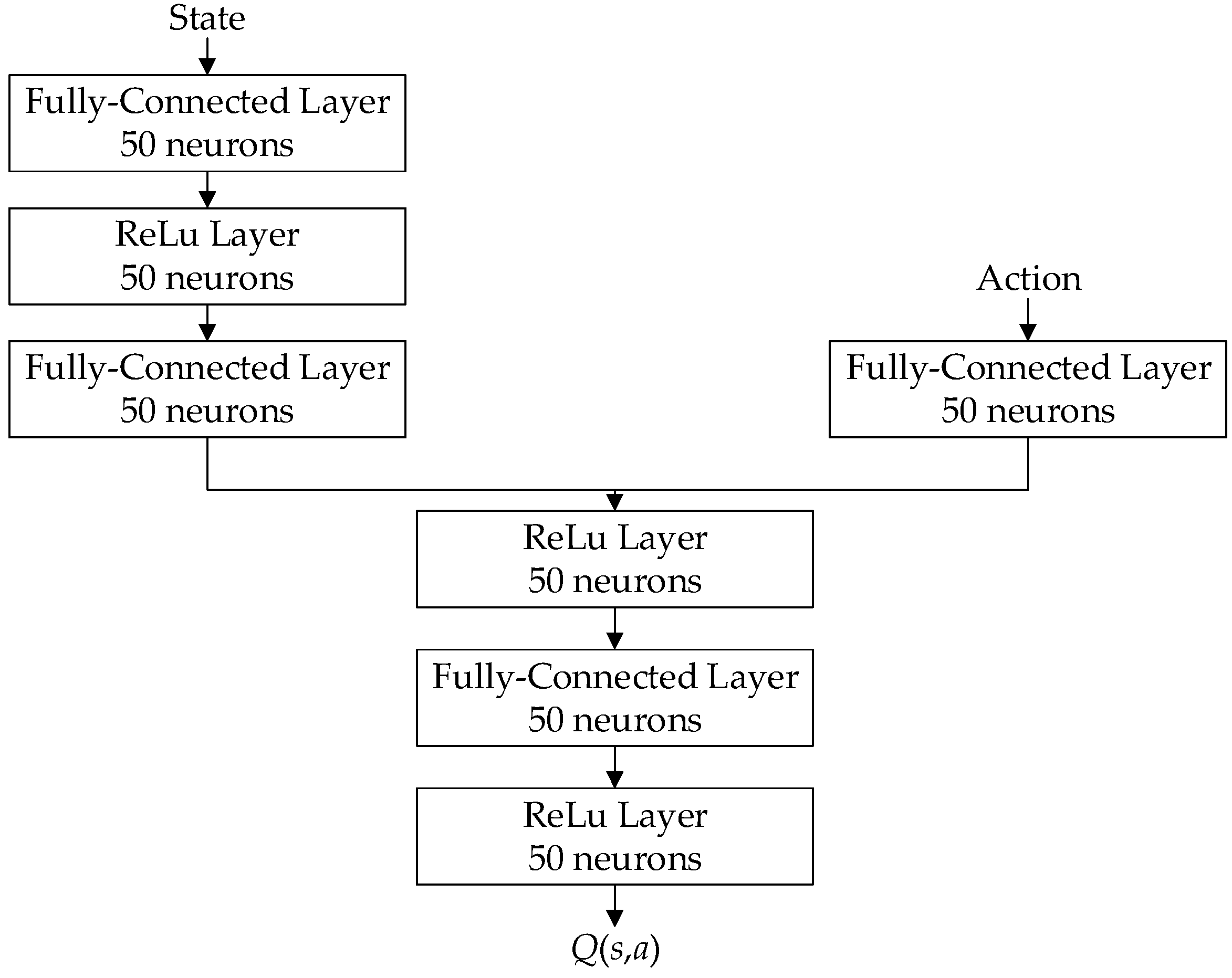

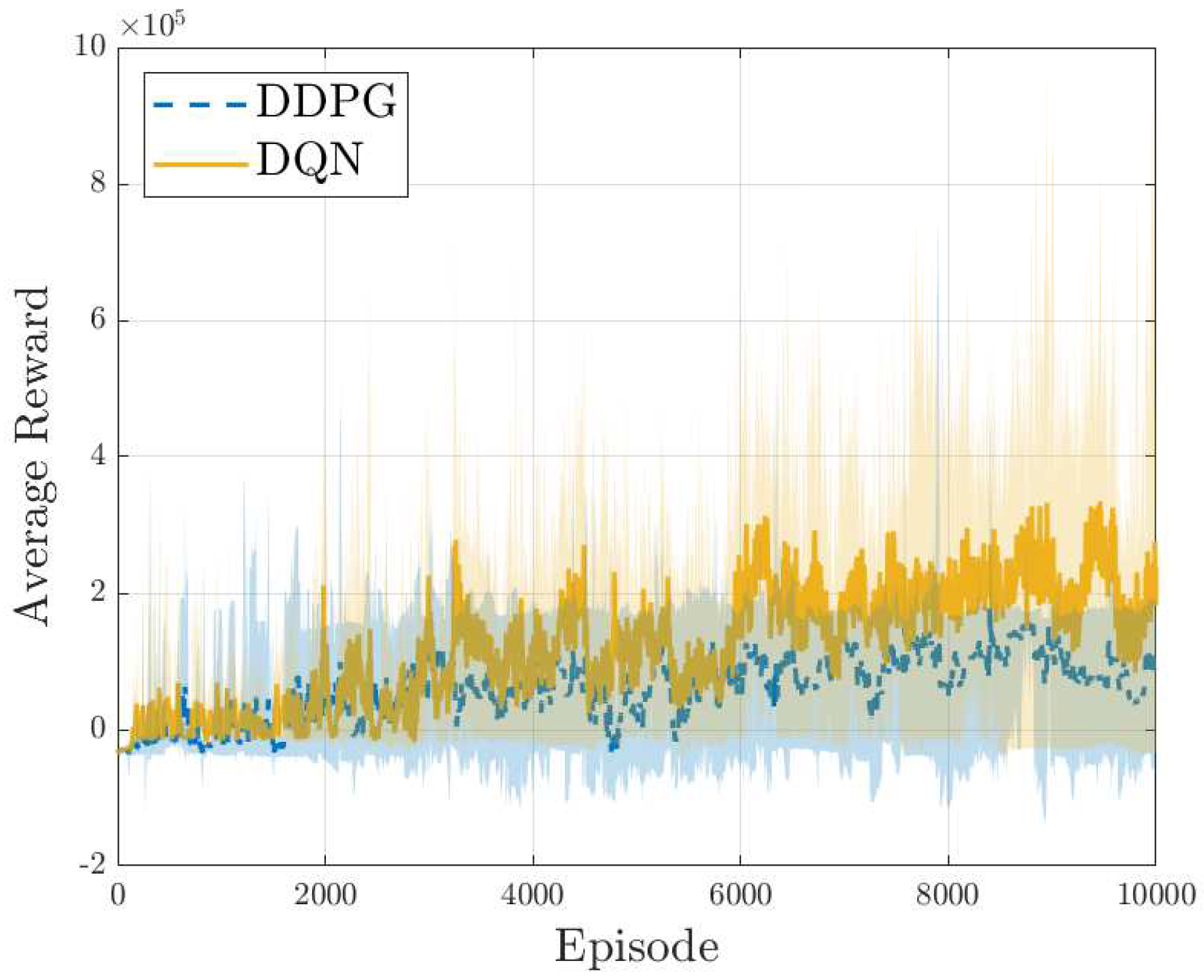

4.3. Deep Q-Network

The first RL algorithm investigated is the deep Q-network (DQN), a popular model-free, on-line, off-policy strategy [44,49]. DQN trains a critic to approximate the action-value (or Q-) function, i.e., to estimate the return of future rewards. Hence, the algorithm relies on a discrete action space and a continuous state space. As a result, the action is selected with an $\epsilon$-greedy policy [41]:

In this expression, the action space is a vector of all possible actions, the random number is drawn at each time step and $\epsilon$ is defined as the exploration rate. The exploration rate is typically set to a high value close to 1 at the start of the training process, and a decay function is employed to lower its value as learning progresses. This ensures that the agent focuses on exploring the environment at the beginning and then shifts to exploiting the optimal actions as learning progresses.
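The selection rule and the decay of the exploration rate can be sketched as below. The multiplicative decay with a lower floor is one common schedule and is an assumption here, as are the function names and the default values.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Return a random action index with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=lambda i: q_values[i])  # exploit

def decay_epsilon(epsilon, decay=0.995, epsilon_min=0.01):
    """Multiplicative decay with a floor (assumed schedule)."""
    return max(epsilon_min, epsilon * decay)

# Example: greedy choice among three hypothetical Q-values.
greedy_action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
```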

In DQN, the critic approximator is represented by a deep neural network. Two features ensure that training occurs smoothly. Firstly, the algorithm relies on the concept of an experience replay buffer [44,45]. With this strategy, at each step, the state, action, reward, new state and flag for episode end from the previous step are saved as a transition inside the experience memory buffer. During training, a mini-batch of experiences is then sampled from the memory to update the deep neural networks. If only the latest experience is used, the neural network is likely to suffer from overfitting. To ensure stability, the replay buffer should be large and contain data from a wide range for each variable. However, only a limited number of data points can be stored in memory. Hence, new experience should be stored only if it describes a data point different enough from the existing data. Furthermore, using batches sampled randomly from the experience buffer offers a good compromise between computational performance and overfitting avoidance. Since learning occurs without relying on the current experience, DQN is described as an off-policy scheme.
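A minimal sketch of an experience replay buffer is given below. For simplicity, it discards the oldest transition once the capacity is reached, which is a common first-in-first-out simplification and not the novelty-based storage criterion mentioned above. The class and method names are assumptions for this example.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-capacity store of (s, a, r, s_next, d) transitions."""

    def __init__(self, capacity):
        # A deque with maxlen drops the oldest transition automatically.
        self.memory = deque(maxlen=capacity)

    def store(self, s, a, r, s_next, d):
        self.memory.append((s, a, r, s_next, d))

    def sample(self, batch_size):
        """Draw a uniform random mini-batch without replacement."""
        batch = random.sample(list(self.memory), batch_size)
        # Transpose into (states, actions, rewards, next_states, dones).
        return tuple(zip(*batch))

    def __len__(self):
        return len(self.memory)

# Example: a buffer of capacity 3 keeps only the latest 3 of 5 transitions.
buffer = ReplayBuffer(capacity=3)
for k in range(5):
    buffer.store(k, 0, 1.0, k + 1, False)
states, actions, rewards, next_states, dones = buffer.sample(2)
```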

Secondly, the DQN algorithm exploits the concept of a target network for the critic to improve learning and enhance the stability of the optimisation. This target network has the same structure and parameterisation as the critic network. The value function target is thus set as

which indicates the sum of the immediate and discounted future reward. Without a separate target network, the value function target would depend on the same parameters that are being learned in training, thus making the minimisation of the mean-squared Bellman error unstable. For this reason, the target network is used, as it has the same set of parameters as the critic network, but lags it in time. Hence, the target network is updated once per main network update by Polyak averaging [45]:

Here, the weighting coefficient of the average is the smoothing factor.
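The target computation and the Polyak update can be sketched with NumPy as follows. The convention assumed here is that the smoothing factor weights the main-network parameters, so a small value makes the target network lag strongly; other references weight the target parameters instead. The function names, the argument layout and the use of the episode-end flag to switch off bootstrapping are assumptions for this example.

```python
import numpy as np

def dqn_target(r, d, q_next_target, gamma=0.99):
    """y = r + gamma * max_a' Q_target(s', a'), without bootstrapping when d is set."""
    r = np.asarray(r, dtype=float)
    not_done = 1.0 - np.asarray(d, dtype=float)
    return r + gamma * not_done * np.max(q_next_target, axis=1)

def polyak_update(target_params, main_params, smoothing=0.001):
    """Move each target parameter a fraction `smoothing` towards the main one."""
    return [smoothing * p + (1.0 - smoothing) * p_t
            for p, p_t in zip(main_params, target_params)]

# Example with a batch of two transitions and two discrete actions.
y = dqn_target(r=[1.0, 2.0], d=[False, True],
               q_next_target=np.array([[0.0, 2.0], [5.0, 1.0]]), gamma=0.5)
new_target = polyak_update([np.zeros(2)], [np.ones(2)], smoothing=0.1)
```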

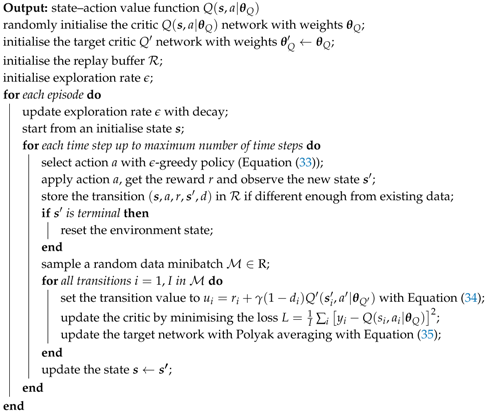

The DQN algorithm is summarised in Algorithm 1, which is taken from [44,49] with the addition of Polyak averaging as in the MATLAB implementation used here.

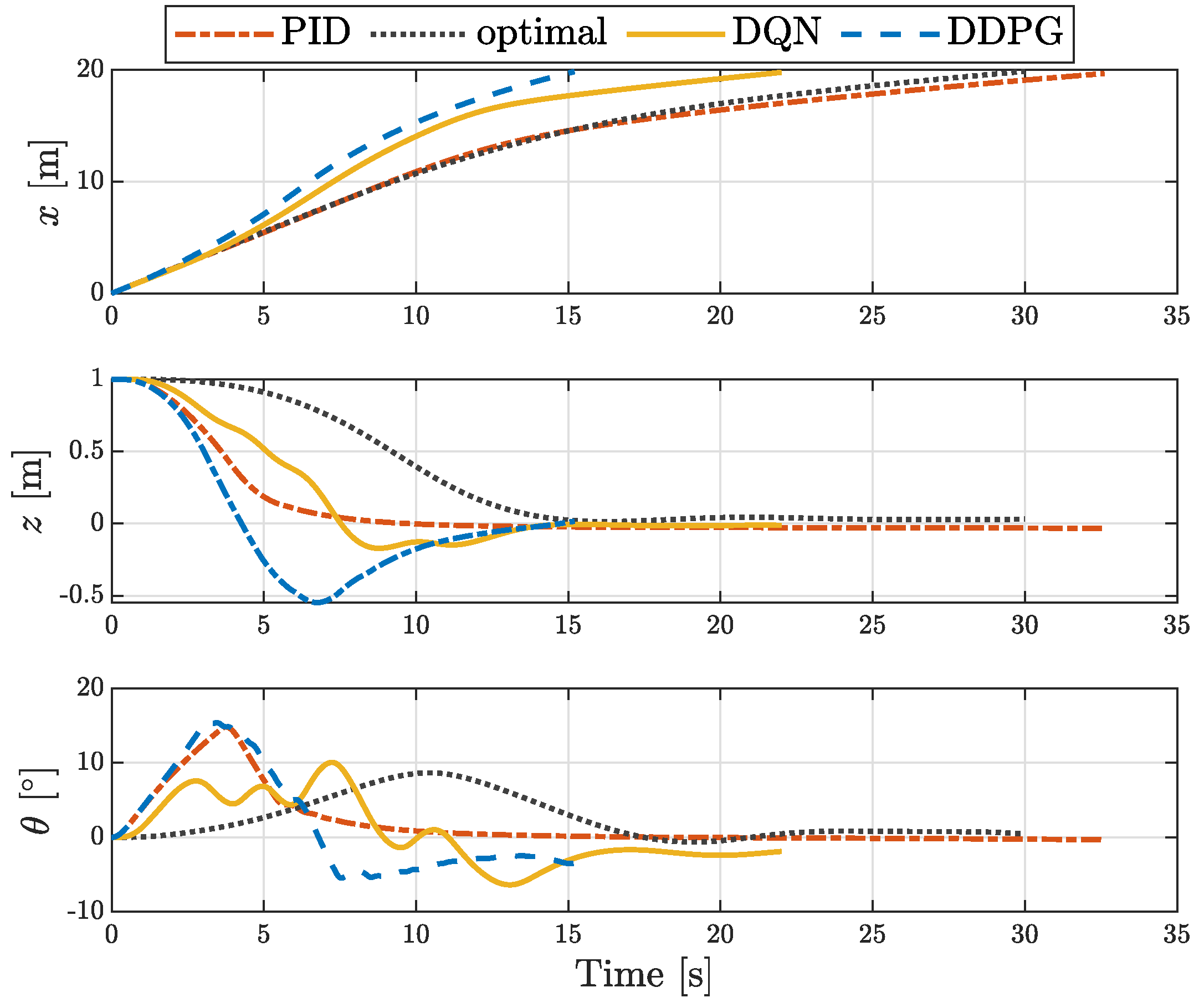

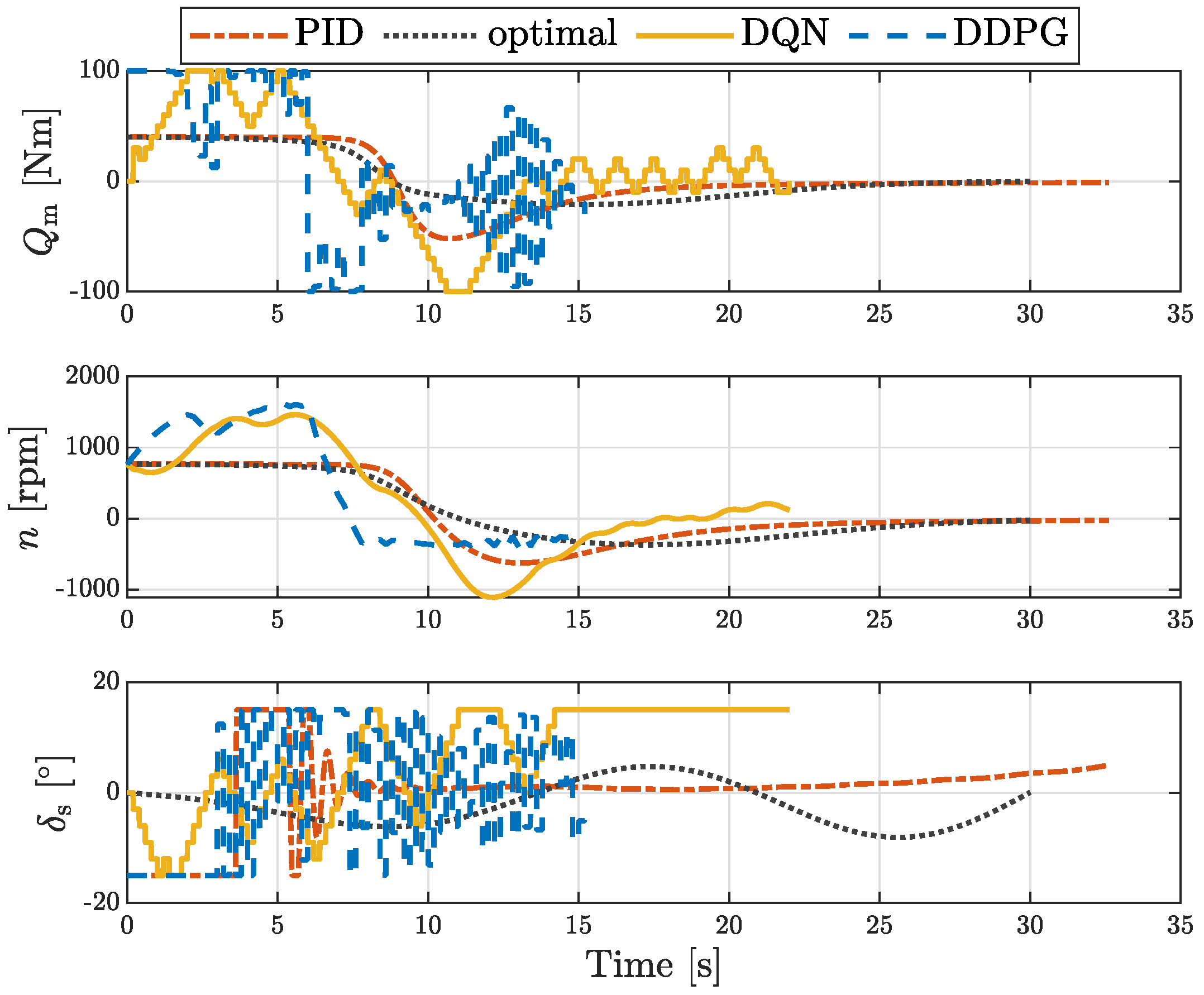

DQN Docking Control of an AUV in 3 DOF

Since DQN relies on discrete actions, only a limited set of actions can be selected by the controller. One common approach for RL schemes with discrete actions is to select three actions per action signal: the maximum, minimum and zero values of the signal [43]. This results in bang-bang control, which can be proven to be optimal with Pontryagin's principle [36]. Here, however, a different approach is adopted, similar to [50,51].

The action space is selected as the combination of positive, negative and zero step changes in the motor torque and sternplane angle, as described in Section 5.1. Therefore, at each time step, the motor torque and sternplane angle are changed by a fixed amount, which should be selected as a realistic value based on the physical constraints of the system. To ensure learning occurs, the values of the motor torque and sternplane angle must be tracked. Otherwise, the action information, i.e., a relative change in the control input, is not sufficient for the agent to successfully learn a policy, since information on the absolute (positive or negative) value of the motor torque and sternplane angle is required. Hence, the state space in Equation (26) is modified as follows for the DQN algorithm for the docking control of the AUV in 3 DOF:

Note that upper and lower limits are imposed on the values of the motor torque and sternplane angle to reflect realistic physical boundaries. Hence, even if the action corresponding to a positive change in motor torque is selected when the motor torque is already at its upper limit, no change will be applied, since its value is saturated.
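The incremental action set and the saturation of the tracked control values can be sketched as follows. The text is read here as implying the nine combinations of negative, zero and positive step changes in the two control signals; the step sizes, limits and function names are hypothetical placeholders.

```python
from itertools import product

def build_action_set(d_torque, d_angle):
    """All combinations of negative, zero and positive step changes (3 x 3 = 9)."""
    steps = (-1, 0, 1)
    return [(i * d_torque, j * d_angle) for i, j in product(steps, steps)]

def apply_action(torque, angle, action, torque_lim, angle_lim):
    """Add the selected step changes and saturate at the physical limits."""
    d_torque, d_angle = action
    new_torque = min(max(torque + d_torque, torque_lim[0]), torque_lim[1])
    new_angle = min(max(angle + d_angle, angle_lim[0]), angle_lim[1])
    return new_torque, new_angle

# Example with placeholder step sizes and limits: the torque is already at its
# upper limit, so a positive torque step leaves it unchanged.
actions = build_action_set(d_torque=1.0, d_angle=0.5)
torque, angle = apply_action(10.0, 0.0, (1.0, 0.5),
                             torque_lim=(0.0, 10.0), angle_lim=(-0.3, 0.3))
```

The returned torque and angle are the quantities that would be appended to the state vector, so that the agent can tell where it sits within the limits.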

Algorithm 1: DQN algorithm adapted from [49].

The DQN algorithm for the docking control of the AUV onto a fixed platform in surge, heave and pitch is shown graphically in Figure 4.

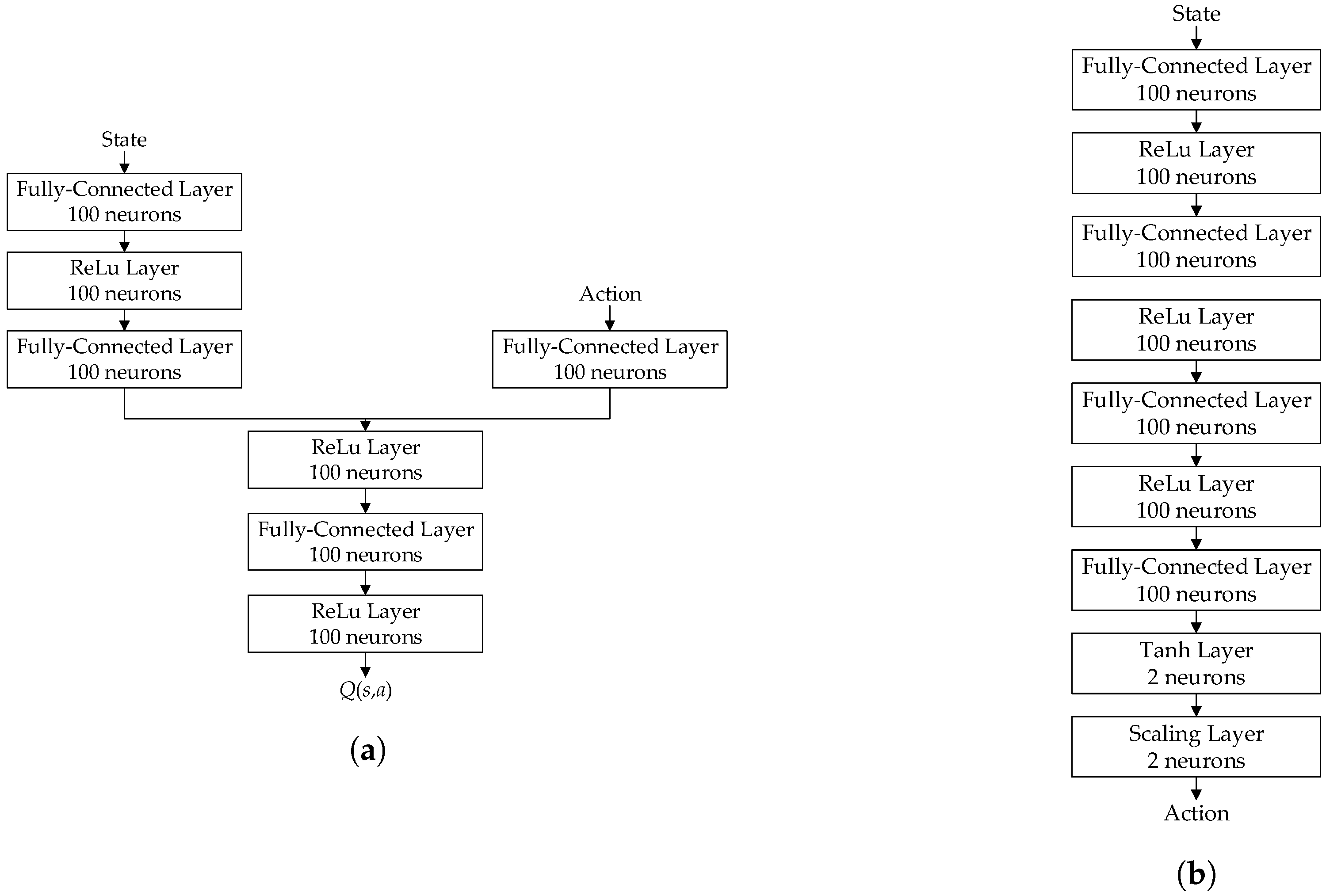

4.4. Deep Deterministic Policy Gradient

A popular model-free, on-line, off-policy RL algorithm known as deep deterministic policy gradient (DDPG) is adopted here [45]. Although DDPG has been found to be sensitive to hyperparameter tuning and to suffer from overestimating the Q-values [46,47], it has been successfully applied to the depth and low-level control of AUVs [30,31].

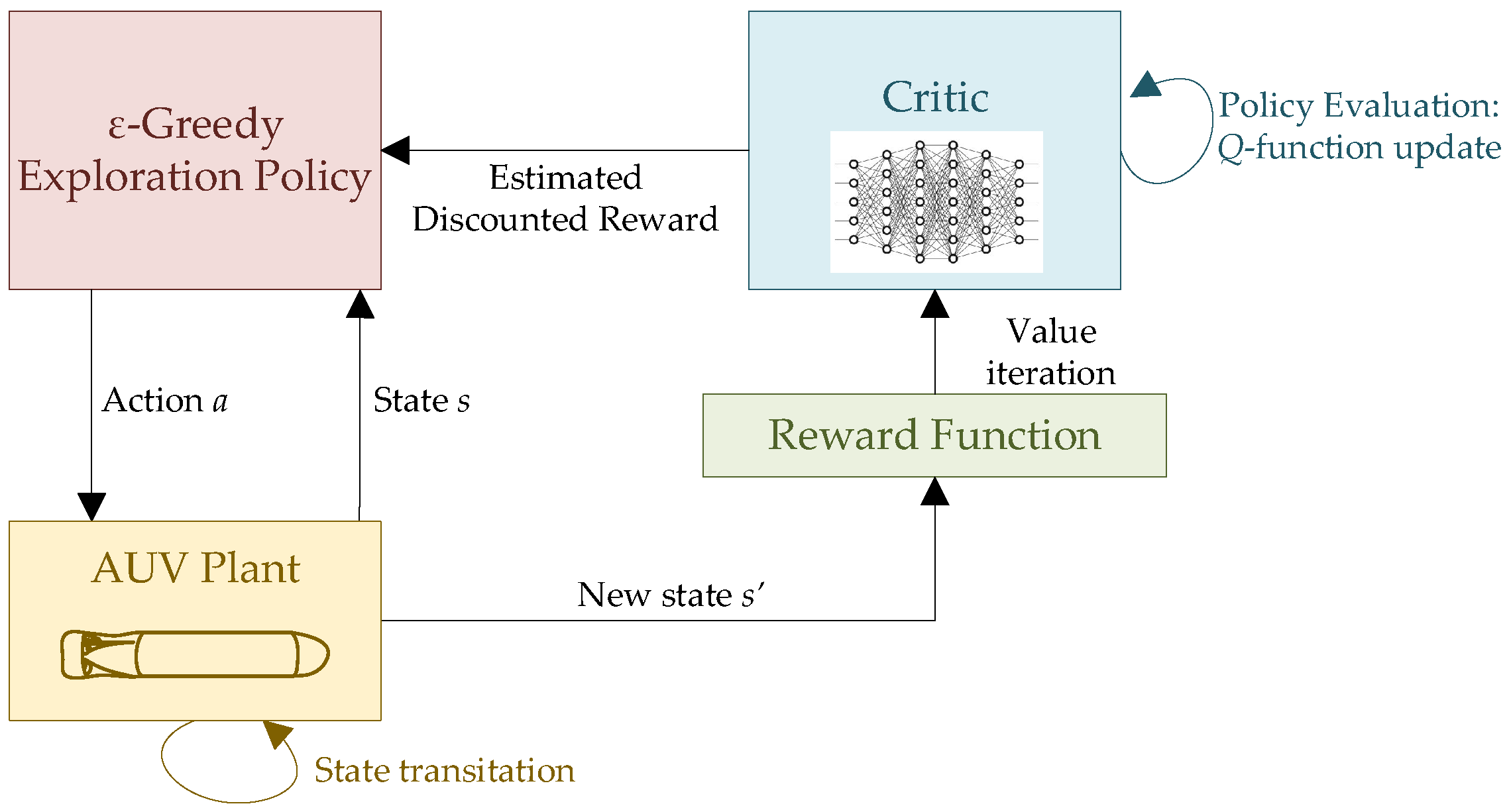

DDPG is a type of actor-critic strategy developed by Lillicrap et al. [45]. As shown in Figure 5, the critic evaluates the action-value (or Q-) function, while the actor improves the policy in the direction suggested by the critic. In DDPG, a deep neural network is used by the critic to approximate the expectation of the long-term reward based on the state observation $s$ and action $a$. Hence, the state–action value function can be expressed through the features of the critic neural network. An additional deep neural network is employed by the actor for the approximation of the optimal policy: based on state $s$, the network returns the continuous action $a$ that is expected to maximise the long-term reward or state–action value. Thus, the policy can be expressed through the features of the actor neural network. Hence, in the DDPG algorithm, the agent learns a deterministic policy and the Q-function concurrently.

Furthermore, similar to DQN, the DDPG algorithm exploits target networks for the critic and actor to improve learning and enhance the stability of the optimisation. These target networks have the same structure and parameterisation as the critic and actor networks, respectively. The value function target is thus set as

which indicates the sum of the immediate and discounted future reward. In Equation (37), the agent first obtains the next action by passing the new observation through the target actor. Then, the cumulative reward is found by passing the new observation and the next action through the target critic. As for DQN, the target networks are updated once per main network update by Polyak averaging [45] with the following equation in addition to Equation (35):

Similar to DQN, DDPG is an off-policy strategy as it relies on an experience replay buffer. Using the samples in the data batch, the parameters of the deep neural network for the critic are updated by minimising the loss over all $I$ samples [45]:

Conversely, the parameters of the deep neural network for the actor are updated by maximising the expected discounted reward:
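The three quantities involved in a DDPG update (value target, critic loss and actor objective) can be sketched with NumPy as below. The actor and critic are stand-in callables rather than neural networks, and the function names, the toy linear models and the use of the episode-end flag to switch off bootstrapping are assumptions for this illustration. In a real implementation, the gradients of the loss and of the objective would be obtained by automatic differentiation.

```python
import numpy as np

def ddpg_targets(r, d, s_next, target_actor, target_critic, gamma=0.99):
    """y = r + gamma * (1 - d) * Q_target(s', mu_target(s'))."""
    a_next = target_actor(s_next)            # next action from the target actor
    q_next = target_critic(s_next, a_next)   # its value from the target critic
    return r + gamma * (1.0 - d) * q_next

def critic_loss(critic, s, a, y):
    """Mean-squared Bellman error over the mini-batch (to be minimised)."""
    return np.mean((y - critic(s, a)) ** 2)

def actor_objective(actor, critic, s):
    """Mean critic value of the actor's own actions (to be maximised)."""
    return np.mean(critic(s, actor(s)))

# Toy stand-ins for the networks (hypothetical linear models).
actor = lambda s: 0.5 * s
critic = lambda s, a: s + a

s = np.array([1.0, 2.0])
a = np.array([0.5, 1.0])
r = np.array([1.0, 1.0])
d = np.array([0.0, 1.0])          # second transition ends the episode
s_next = np.array([2.0, 4.0])

y = ddpg_targets(r, d, s_next, actor, critic, gamma=0.5)
loss = critic_loss(critic, s, a, y)
objective = actor_objective(actor, critic, s)
```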

The off-policy nature of the DDPG algorithm is further exploited to deal with the long-standing RL trade-off between exploration and exploitation. Especially during the initial stages of learning, it is fundamental for the agent to explore the state–action space to optimise performance and avoid getting stuck in a local optimum. As learning progresses, the agent can focus on exploiting the action that yields the maximum overall reward. Since the policy provided by the actor is deterministic, noise needs to be added to the action during training to ensure sufficient levels of exploration. Ornstein–Uhlenbeck noise is selected as in [45].
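A minimal sketch of a discretised Ornstein–Uhlenbeck process is given below. It produces temporally correlated noise that reverts towards a mean value, which suits systems with inertia. The class name, the Euler discretisation and the default parameter values are illustrative assumptions and are not the settings used in this work.

```python
import numpy as np

class OUNoise:
    """Euler-discretised Ornstein-Uhlenbeck process for action exploration."""

    def __init__(self, size, mu=0.0, theta=0.15, sigma=0.2, dt=1.0, seed=None):
        self.mu, self.theta, self.sigma, self.dt = mu, theta, sigma, dt
        self.rng = np.random.default_rng(seed)
        self.x = np.full(size, mu, dtype=float)   # start at the mean

    def sample(self):
        # Mean-reverting drift plus a scaled Gaussian increment.
        drift = self.theta * (self.mu - self.x) * self.dt
        diffusion = (self.sigma * np.sqrt(self.dt)
                     * self.rng.standard_normal(self.x.shape))
        self.x = self.x + drift + diffusion
        return self.x

# Example: noise for a two-dimensional action (e.g. torque and sternplane angle).
noise = OUNoise(size=2, seed=0)
noisy_action = np.array([0.0, 0.0]) + noise.sample()
```

During training, the noisy action would still need to be clipped to the admissible range before being applied.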

The DDPG algorithm is summarised in Algorithm 2.

Algorithm 2: DDPG algorithm adapted from [45].