1. Introduction

Future air traffic control (ATC) scenarios involve not only simple increases in traffic volume but also new forms of cooperation and adjustment among air traffic controllers. For aviation safety, the International Civil Aviation Organization (ICAO) is working to convert air traffic management (ATM) systems into systems based on information technology. This is expected to provide more information than is currently available to the many personnel who operate the entire ATM system. As seen in ergonomics, excessive amounts of data call for new forms of work distribution and new cooperation strategies. That is, future air traffic requires changes in existing ATC/M paradigms. Air traffic controllers, who are in charge of ATC/M work, analyze numerous pieces of information arising in dynamic environments in real time and make judgments while maintaining flow, safety, and security. ATC can be described as multi-mode cooperative work with computer support. It includes the processing of various unstructured composite data, such as voice communication, along with the real-time visualization of aircraft data. The main purpose of ATC is to maintain safe distances between aircraft and seamless traffic flows. The ICAO’s long-term prediction of air traffic demand indicates steady increases every year throughout the world, so future air traffic will become much more complex than it is today and the demands on ATC/M are expected to increase further. Now that the role of ATC/M in the national airspace has become more important, research and development is necessary to present various complex navigation data effectively to air traffic controllers in preparation for future air traffic.

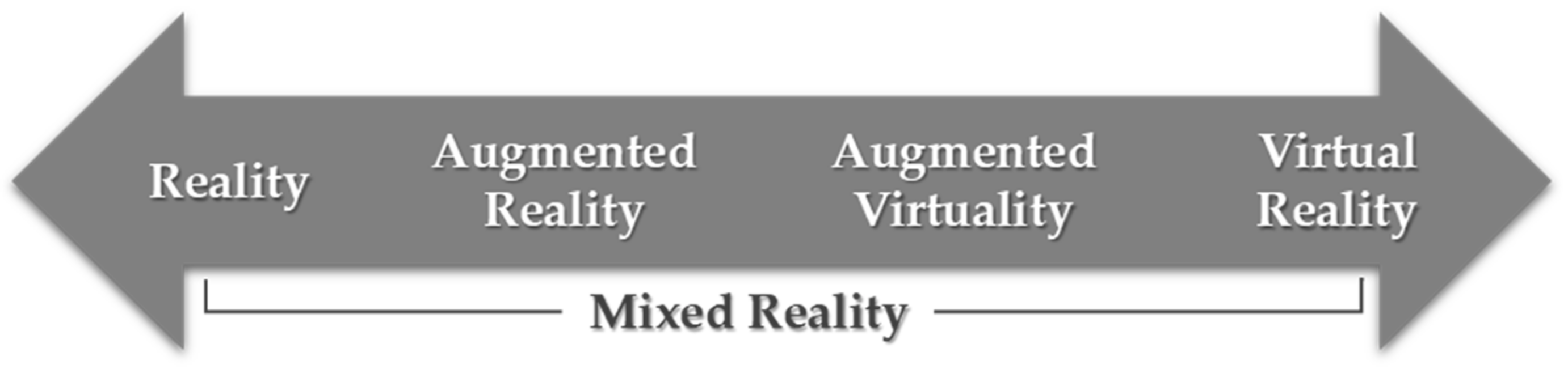

To be prepared for future air traffic, new solutions can be presented using technologies such as mixed reality. The concept of mixed reality, which is being highlighted as one of the core technologies of the fourth industrial revolution, is defined by Milgram [1] as a state where the real world and virtual world are mixed. The ultimate goal of mixed reality is to reinforce the user’s recognition of, and interaction with, virtual objects (also termed holograms) using a head-mounted display in 3D real-world space [2]. The terms augmented reality and mixed reality are often confused, as both augment the real world with virtual objects. Mixed reality is the extended form of augmented reality. Augmented reality uses handheld flat LCD screens to augment the real world with virtual objects and shows an indirect view of the real world, whereas mixed reality provides a hybrid environment by combining the real and virtual environments into a single environment. Here, the physical and virtual objects coexist, and a direct view is provided using a head-mounted holographic display. The continuum from reality to virtuality is shown in Figure 1.

Mixed reality is a technology intended to overcome the limitations of both virtual reality and actual reality. As the technology has developed, it has come to the forefront of the entertainment field and is rapidly spreading to industrial fields such as manufacturing, healthcare, education, and aerospace, where it presents new potential. Mixed reality technology can provide augmented real-world information to users and can provide a new user experience as a medium for real-time interaction. Based on these characteristics, this paper proposes a mixed reality-based air traffic control system that improves and supports air traffic controllers’ workflow, since mixed reality is effective for delivering information such as complex navigation data. Because this system enables complex navigation data to be provided effectively, more efficient work by air traffic controllers can be expected. The system can also serve as an alternative means of carrying out control work when unforeseen obstacles reduce visibility, whether through environmental problems such as air pollution around the airport or through weather deterioration such as fog and wind. The major contributions of this study to the field of ATC/M are as follows.

A preliminary study for current air traffic controllers for system development.

A real time simulation of flight trajectories within the mixed reality (MR)-based application.

The provision of MR-based 4D multi view of aerodromes to present effective and clear flight information.

Intuitive interaction design for rapid judgment and response according to emergency situations.

The provision of better interaction paradigms in order to keep the productivity of the work flow high in case of low visibility conditions.

2. Literature Review

Air traffic control is a very hectic and sensitive job. Many researchers have worked on making this job easier to perform. Approximately 25 years ago, Lloyd Hitchcock, a member of the Federal Aviation Administration (FAA), proposed the idea of performing tasks in air traffic control towers using augmented reality technology [3]. A prototype was not made at that time, although many researchers recalled Hitchcock surmising various methods that could facilitate controllers [4]. In 1996, an advanced interface for the human-computer interaction for air traffic management was presented by Ronald et al. [5]. Moreover, another concept under scrutiny is the development of remotely located air traffic services. This is based on a rendering of a recreated 360° tower view in real time in virtual reality [6,7,8,9].

Many researchers have investigated 3D interfaces and compared them with 2D interfaces based on the controllers’ performance with air traffic management systems [10,11,12]. In 2010, Sara Bagassi et al. proposed a 4D interface rendered over a 2D screen for better interpretation of flight data by controllers [13]. For early detection of conflicts among flights, that study proposed a method to evaluate and display the airplanes in their future locations. In 2014, Ronald J. Reisman et al. conducted a flight test to measure the registration error in the use of augmented reality within the control tower [14]. They observed errors in the registration due to the latency in the airplanes’ surveillance transport. In 2015, another method based on rendering the 3D information of flight data on 2D displays was proposed by Arif Abdul Rahman et al. [15]. They also targeted the provision of better interaction paradigms to air traffic controllers using more focused visuals for better understanding of flights around the control tower.

In 2016, Nicola Masotti et al. also proposed an augmented reality-based method for air traffic control towers [16]. They presented a rendering pipeline for multiple head-up displays to overlay outside views of the control tower with a visual symbol representing the location of an airplane outside the tower. In the same year, Maxime et al. introduced a virtual reality-based immersive control tower and investigated future challenges as well as multiple scenarios for the air traffic control system [17]. In September 2016, Sara Bagassi et al. evaluated various augmented reality systems for the assessment of their application to on-site control towers [18]. In their project, they concentrated on the placement of the airplanes’ information in the windows of the air traffic control tower. In 2017, Ezequiel R. Zorzal et al. presented a discussion on the development of a prototype that could merge real-time ground radar information on the airplanes with the airplanes in captured images from a live IP camera [19]. In addition to the support of augmented reality for an air traffic control and management system, Chris et al. also developed an augmented reality-based interface for supporting joint air tactical controllers [20].

All of the existing systems discussed above played a vital role in enhancing the performance of air traffic controllers. However, there are still many limitations in terms of human-computer interaction in air traffic management services. The three-dimensional interfaces provided to visualize the traffic in an airport still use 2D screens for rendering [13,15,19]. Compared to conventional approaches, these methods have significantly improved the performance of air traffic controllers. However, because 3D information is rendered on 2D displays, the interpretation power of controllers is still limited. Until now, no efficient and interactive interface has been provided for visualizing the radar data of traffic in the air. Virtual reality-based immersive systems [17] also did not perform well because controllers had less confidence in a virtual environment disconnected from the outer world. The previously proposed pipeline for augmented reality systems overlaid the real windows with visual information through head-up displays [16]. Only a mathematical formulation was provided in that work, with no real-time or simulation-based experimental results. Later, in 2018, under the RETINA concept, they validated the concept of superposing a synthetic overlay carrying the flight’s information on the outside tower view [21]. The concept proposed in that method was not applicable to viewing air traffic from remote locations in the case of bad weather conditions. In contrast, our method provided the entire view of the airfield in holographic mixed reality along with the important information tagged to each flight. In this way, our proposed method dealt with low visibility conditions as well as with monitoring the air traffic from remote locations. Moreover, the method presented in [21] assisted controllers in low visibility conditions but did not reduce the burden on air traffic controllers of interacting with multiple computer screens for air traffic management. Most of the research has addressed only the visualization of air traffic and not the interaction mechanisms, whereas in our work, along with the improvement in the visualization of air traffic, air traffic controllers were enabled to easily switch between multiple interfaces and interact with them. Keeping in mind the limitations of existing systems, we proposed a mixed reality system to overcome these problems. The 3D information in our system was not confined to a 2D display or a static real-world location. Air traffic controllers could move around and view the 3D spatial information for better analysis of the air traffic situation. Moreover, by using this system, controllers could move the whole interface with them instead of being tied to a particular physical location. As the 3D information of air traffic was combined with the real world, our system provided 3D sound to give a better understanding of the location of an airplane with respect to the airport in mixed reality. Moreover, unlike other systems, our proposed system could be used remotely from any location and was not tied to the control tower. Therefore, in the case of bad weather conditions, our system enabled air traffic controllers to view air traffic even from remote locations. The multiple interfaces to view the air traffic in our system were provided within one device, so less effort was required to interact with them.

3. User Survey

This study proposes a new air traffic control system. Prior to system development, Jeju International Airport was selected to extract sample data. Jeju International Airport is considered to be among the airports with high traffic density. It has four runways; however, only two of them are functional for ILS CAT I, CAT II operations. A questionnaire survey was conducted with air traffic controllers at the airport to identify problems with the current control system and areas where it should be complemented. The air traffic control towers of all airports, including Jeju International Airport, are designated as restricted access areas, and there were many restrictions on access to information on the control system and on visits to it. After requesting cooperation for a visit and obtaining permission from the Ministry of Land, Transport and Maritime Affairs, information collection was allowed only within a limited range. In addition, because the work is directly connected with safety, information collection and interviews were allowed only within limits so as not to interfere with the control work. The survey was conducted with the air traffic controllers who were able to respond to the questionnaire when we visited the control tower. Considering the small number of participants, the questionnaire was composed of selected questions appropriate for in-depth interviews. The participants who responded to the questionnaire consisted of four males and one female aged 40 years on average, and their average service period was 16.2 years.

The questionnaire was generally composed of the following contents. The detailed questionnaire results are presented in Table 1.

Identify basic work procedures of air traffic controllers and the flight information referred to for work.

Identify the problems faced while air traffic controllers are performing their work, and the related work procedures and countermeasures.

Identify the content of work procedures in emergency situations.

Identify problems with the current control system.

Survey of demand for the proposed system.

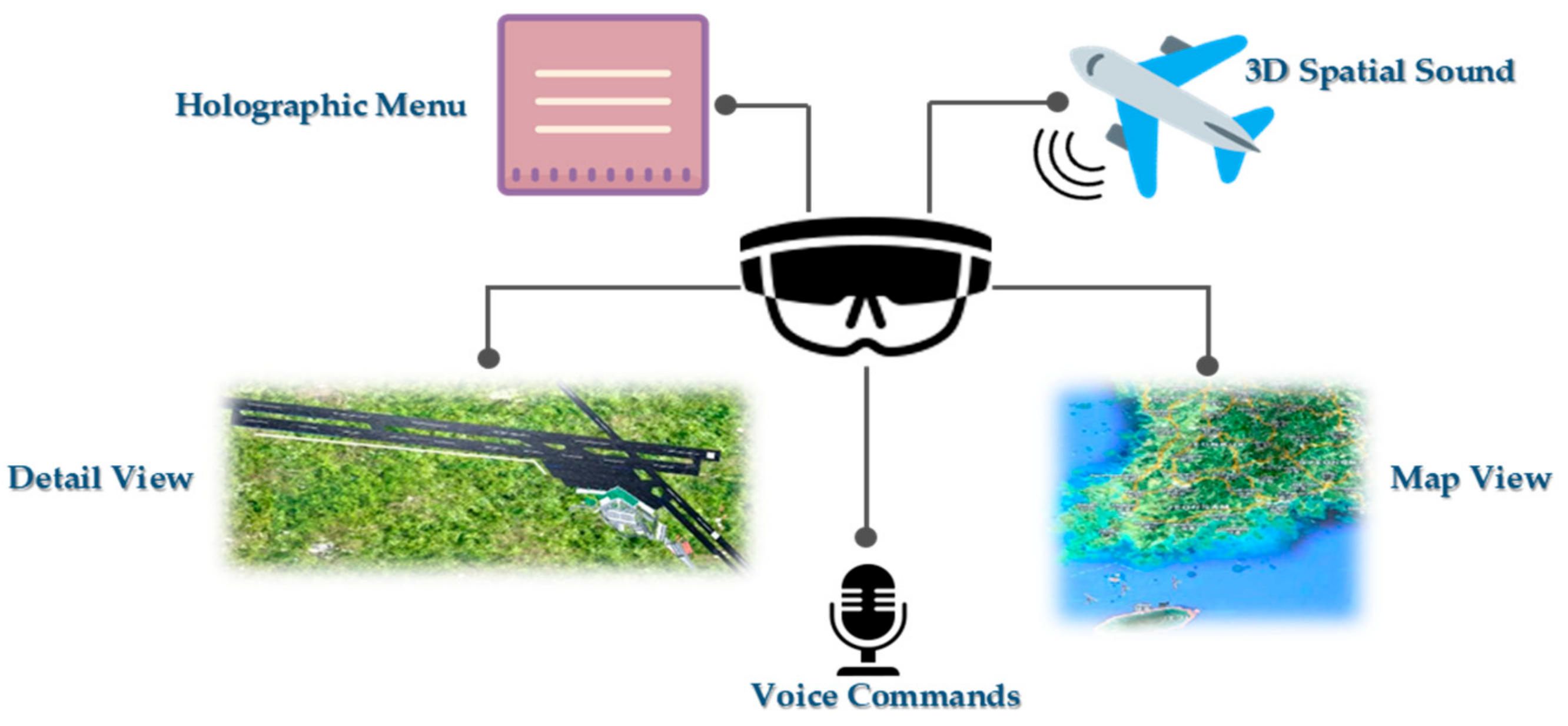

4. Proposed Mixed Reality System

This study proposed a 4D (3D + time) holographic mixed reality system for air traffic controllers to visualize and manage air traffic. Within the control tower, controllers watch and monitor a considerable amount of information. This information mainly concerns the landings, takeoffs, traffic in the air, ground traffic, etc. of airplanes. On close observation, the information monitored by air traffic controllers can be divided into two categories: traffic on the ground and traffic in the air. Ground traffic mainly consists of the landings, takeoffs, traffic on the taxiways, etc. of airplanes. To monitor the traffic in this category, controllers use outside views through the windows of the tower. With this conventional method of monitoring, controllers face many problems, including bad weather conditions. Traffic in the air, by contrast, is conventionally monitored on a map using 2D monitor screens. These two-dimensional interfaces are less effective at giving a true interpretation of air traffic. Moreover, it is very hectic and time consuming for air traffic controllers to switch among different monitor screens and real-world views of an airfield. Hence, a 4D mixed reality system was proposed for the efficient management of airfields using both visual and voice interaction through a single hardware unit. Within a single head-mounted mixed reality device, interaction mechanisms were provided to control traffic in the air as well as on the ground. These mechanisms include switching between multiple interfaces within a single display device using a holographic display or voice commands. Moreover, keeping in mind that sound is an important medium for communicating information, the functionality of giving voice commands was provided through the head-mounted mixed reality device. Furthermore, 3D sound was used to enable the air traffic controller to interpret the location of airplanes within the system. Our proposed system consisted of multiple interaction modules and holographic spatial interfaces to support the air traffic controller’s various tasks. These tasks mainly include viewing radar information on a map, monitoring the airport’s runways through the windows of the control tower, switching between both views, communication with voice, etc.

Figure 2 shows all the modules included in the proposed system.

Each module is described in detail as follows:

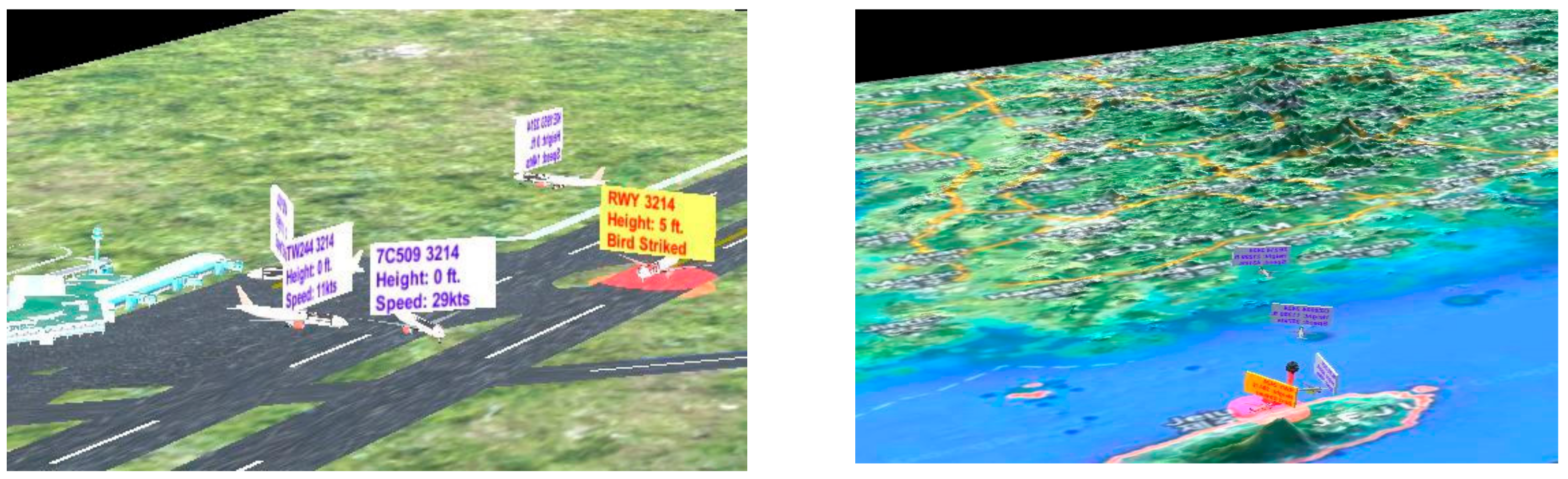

4.1. Detail View

A detailed 3D scenery file of the airfield was included in the detail view. The scenery file held the 3D information of each object on the airfield including the airport’s buildings, taxiways, runways, airport apron and major surroundings. The air traffic controller could place the 3D model of the airfield on the real world’s plane surface and view the information from different angles using a head-mounted mixed reality display. Instead of depending on the outside views from the control tower, the controller could monitor the airfield using a 4D holographic view of the airfield through the head-mounted mixed reality display. The controller could place the holographic view of the airfield on any horizontal surface inside the control tower. This view presented every single ongoing activity of the airplanes in the airport. It included 4D information about the following types of air traffic:

Preparing to take off.

Traffic on hold in the air and preparing to land.

Traffic on the taxiways after landing or before takeoff.

Traffic in the airport’s apron.

Moreover, the necessary information related to an air flight was displayed in a 3D text box attached to the respective airplane. The information consisted of the flight name, speed, altitude, transponder etc. The detail view gave a close interpretation of all the activities of the airplanes going on the ground and around the airport’s close vicinity in the air.

Figure 3a,b present a detailed holographic view of the airfield.

4.2. Map View

The map view mainly focused on the air traffic that was far from the airport. It gave a 4D holographic visual presentation of the air traffic over the map, presenting the big picture, or abstract-level information, on the air traffic on a world map. A 3D height map was created for this view, covering the area within a bounding box around the city of the target airport. The 3D map generator atlas extension [22] of Adobe Photoshop was used to generate a real 3D model from a 2D Google Maps image and the height map information. Instead of making the effort to understand air traffic information over world maps on 2D screens, controllers could visualize four-dimensional information for easy understanding of the current situation of the air traffic around the globe. An air traffic controller could move around the 3D world map and perform better analysis of the air traffic. The information on flights displayed with the airplanes was similar to that in the detail view, i.e., flight name, squawk code, etc. With the holographic mixed reality view, air traffic controllers could easily understand the distances between airplanes and their individual distances from the airport. This enabled them to understand which flights were approaching the airport soon and which flights were far from the airport based on their distances in the mixed reality world. Moreover, the controllers could easily identify an airplane heading in the wrong direction and predict the possible outcomes before an incident could happen. This was made possible with a 4D presentation of flight trajectories in mixed reality.

Figure 4a,b show the map view of air traffic.

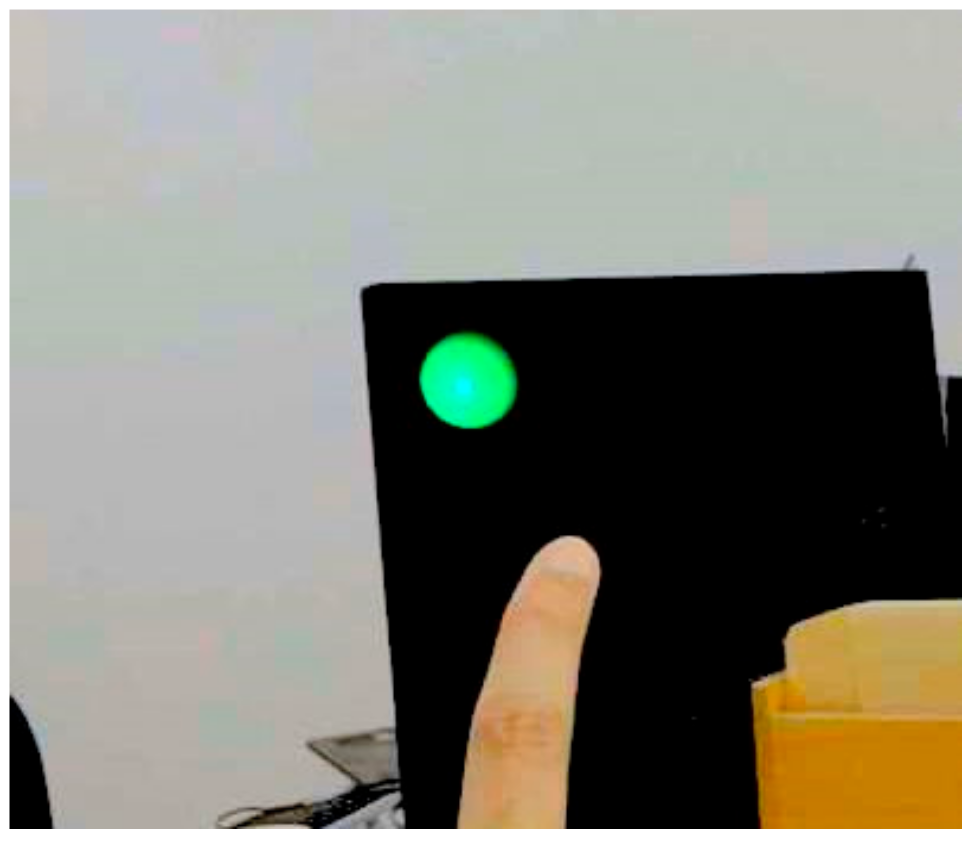

4.3. Holographic Menu

One of the issues faced by air traffic controllers is interacting with multiple computer screens to analyze different information. Moreover, they have to move close to the windows of the control tower most of the time to monitor the airplanes in the airport. Therefore, it is difficult for them to switch between the view of the airfield and multiple computer screens. In the proposed system, all of the interfaces were provided within a single device. Air traffic controllers could easily switch between the airfield’s detailed view and the map view within the mixed reality head-mounted display. For easy access to the interfaces, a holographic menu (Figure 5) was provided to select and view the desired information related to air traffic. Moreover, for easy and more realistic interaction, direct hand manipulation was provided to select the desired option from the menu, as shown in Figure 6.

4.4. Voice Commands

Voice is one of the main mediums of communication used by air traffic controllers. They usually use voice to communicate with pilots and ground staff. The functionality of giving voice commands was provided using the same head-mounted device that was being used to view air traffic. In this way, the controllers did not have to use a separate device for sending voice commands. Moreover, as discussed in Section 4.3, the controller had to view the menu and switch among multiple displays. Therefore, with the help of our system, the controller could easily open or close the menu and change the view using simple voice commands such as “open menu”, “close menu”, “map view”, “detail view”, etc.
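The phrase-to-action mapping described above can be sketched as a small dispatch table. This is only an illustration in Python, not the authors’ implementation: the `ViewState` class and the function names are hypothetical, and the speech recognition step itself is assumed to have already produced a text phrase.

```python
from typing import Callable, Dict


class ViewState:
    """Hypothetical UI state: the active view and whether the menu is visible."""

    def __init__(self) -> None:
        self.view = "detail"
        self.menu_open = False


def build_commands(state: ViewState) -> Dict[str, Callable[[], None]]:
    """Map each spoken phrase named in the text to an action on the state."""
    return {
        "open menu": lambda: setattr(state, "menu_open", True),
        "close menu": lambda: setattr(state, "menu_open", False),
        "map view": lambda: setattr(state, "view", "map"),
        "detail view": lambda: setattr(state, "view", "detail"),
    }


def handle_phrase(state: ViewState, phrase: str) -> bool:
    """Run the action for a recognized phrase; return False if it is unknown."""
    action = build_commands(state).get(phrase.strip().lower())
    if action is None:
        return False
    action()
    return True
```

Normalizing the phrase to lower case before the lookup means that “open Menu” and “open menu” trigger the same action, and unknown phrases are ignored rather than raising an error.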

4.5. Three-Dimensional Spatial Audio Signals

Immersive audio plays a vital role in giving a signal to someone because it gives a sense about the direction of sound to the listener. The 3D spatial sound was also made available with the airplane. It helped the air traffic controller to easily understand the location of an airplane within the mixed reality environment while monitoring the airfield. There were two main audio signals: The airplane’s engine sound in normal cases and the alarm sound in the case of an emergency situation. In this way, the controller could get an alarm about approaching flights or any emergency situation in a timely way. Moreover, with spatial sound effects, the controller could easily navigate to the right location of the airplane.

4.6. Interface for Emergency Situations

There can be many emergency situations with flights. To give a timely alarm to air traffic controllers about emergency situations, an interface design was provided in our proposed mixed reality system. Although the 3D spatial sound played a good role in providing the location of an airplane that was in an emergency, this interface design made things clearer. In case of an emergency, the color of the 3D text box (discussed in Section 4.1) containing information about the flight was changed. A message in the text box about the type of emergency was also added. Moreover, a blinking red light was provided around the airplane that was having an emergency. It gave a better understanding to the controller about which airplane was in an emergency situation. The interface design for airplanes in emergency situations is shown in Figure 7.
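The three visual changes described above (text box color, emergency message, blinking red light) can be summarized as a small state update. The following Python sketch is illustrative only: the `FlightLabel` type, the field names, and the specific label colors are assumptions, since the text states that the color changes but not which colors are used.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlightLabel:
    """Hypothetical state of the 3D text box attached to one airplane."""

    flight_name: str
    color: str = "white"  # assumed default color, not given in the text
    message: str = ""
    blinking_red_light: bool = False


def set_emergency(label: FlightLabel, emergency_type: Optional[str]) -> FlightLabel:
    """Switch a label into or out of its emergency presentation."""
    if emergency_type is None:
        # No emergency: restore the normal presentation.
        label.color = "white"
        label.message = ""
        label.blinking_red_light = False
    else:
        # Emergency: change the box color (assumed red), add the message,
        # and enable the blinking red light around the airplane.
        label.color = "red"
        label.message = f"EMERGENCY: {emergency_type}"
        label.blinking_red_light = True
    return label
```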

5. Design and Development Process

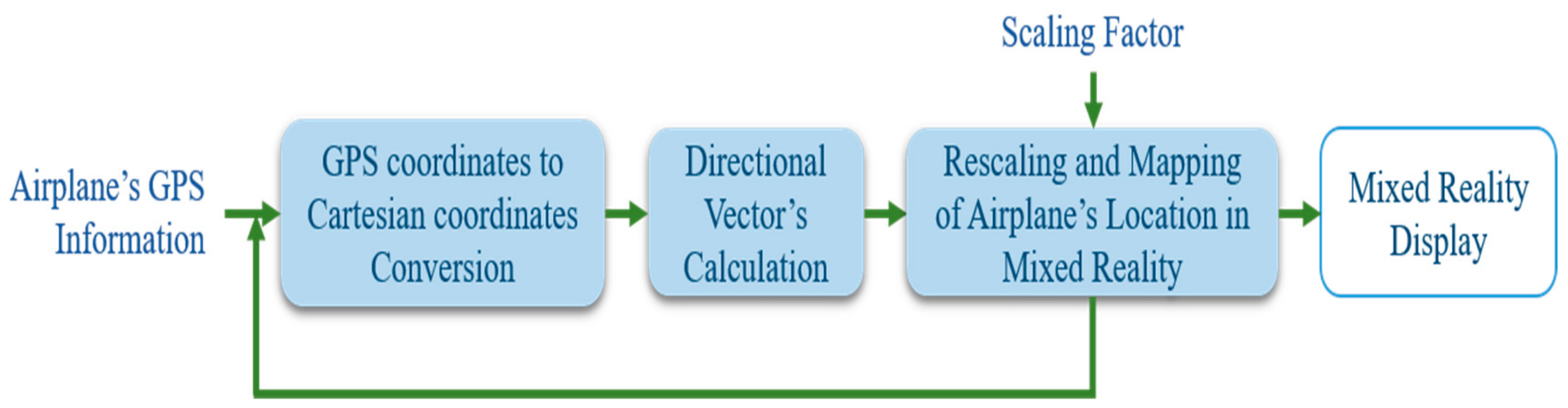

Real-world distances are very large. Therefore, in order to map the air traffic in a small mixed reality world with respect to each other’s location in the real world, a complete process of rescaling the actual world’s large distances and remapping them was required. The procedure for remapping air traffic in a holographic mixed reality world with respect to their positions in the real world comprised multiple modules. A flow chart with respect to the execution order of each module is shown in Figure 8.

Each module of the entire processing pipeline is described as follows:

5.1. Calculation of Scaling Factor

In order to map the real-world locations in a mixed reality world, the actual distances were scaled down based on a derived scaling factor. The scaling factor decided the number of units in the real world represented by one unit in the mixed reality world. If the distance or units for representing the real-world distance in the mixed reality world is denoted by D1 and the actual real-world distance is denoted by D2, the scaling factor can be derived using Equation (1).

“D2” depended on the level of detail in the view. There were two main views: a detail view that covered each minor visual detail of the airport, and a map view that was an abstract representation of air traffic on the world map. The detail view focused on closely located positions with less distance between the airplanes, in contrast to the map view. Therefore, comparatively larger distances had to be rescaled and remapped for the map view than for the detail view. As a result, slightly different methods were used to find the actual distance for the scaling factor of each view, as discussed below:

5.1.1. Detail View

This view was composed of 3D scenery of the airport. Therefore, it was required to map the air traffic on runways, taxiways etc. Hence, for calculating the scaling factor for this view, the actual distance/length of one of the runways of the airport was considered. This distance could also have been calculated using the GPS coordinates of an airport runway’s starting and ending points. The scaling factor was derived for this view by substituting that distance at D2 in Equation (1) against one unit of the mixed reality world represented as D1.

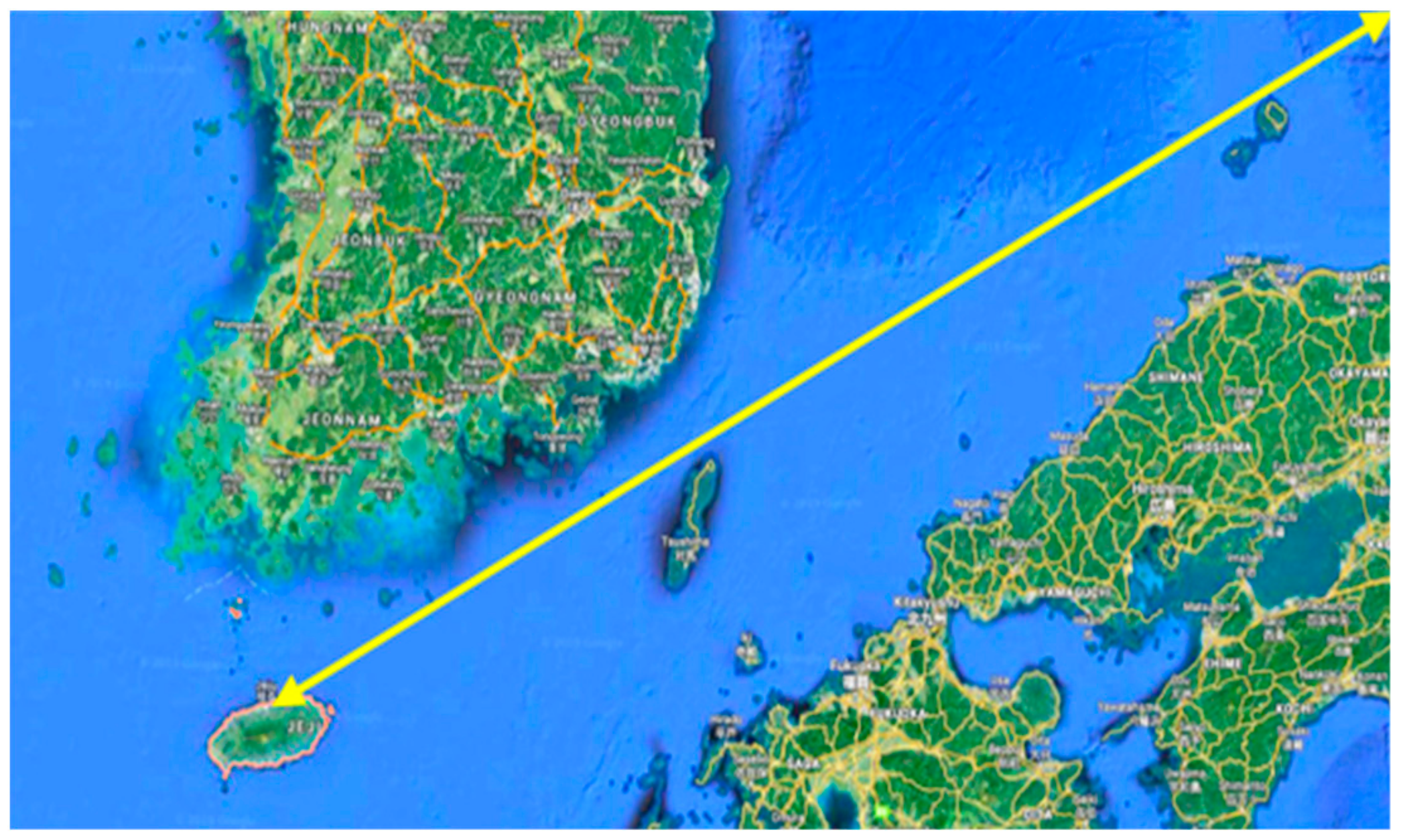

5.1.2. Map View

The 3D height map for this view was generated using the 2D image and the height map from Google Maps. While taking the 2D image from Google Maps, the distance was calculated between the control tower and the GPS coordinates of one corner of the bounding box selected for the 2D image used to generate the 3D model of the map view area, as shown with the arrows in Figure 9. For calculating the scaling factor, that distance was substituted for D2 in Equation (1), while D1 was one scale unit of the mixed reality world.
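The scaling-factor calculation for both views can be sketched as follows. Equation (1) is not reproduced in this text, so the sketch assumes the form S = D1 / D2, which is consistent with Section 5.3, where the real-world distance is downscaled by multiplying it by the scaling factor. The haversine great-circle formula is used here as one possible way to obtain D2 from two GPS points; the text does not state which distance formula was used, and the function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; an approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def scaling_factor(d1_mr_units: float, d2_real_m: float) -> float:
    """Assumed form of Equation (1): mixed reality units per real-world meter."""
    if d2_real_m <= 0:
        raise ValueError("the real-world distance D2 must be positive")
    return d1_mr_units / d2_real_m


# Detail view: D2 is a runway length (illustrative value, not measured data).
detail_scale = scaling_factor(1.0, 3000.0)

# Map view: D2 is the tower-to-corner distance of the bounding box
# (illustrative coordinates, not the ones used in the study).
map_scale = scaling_factor(1.0, haversine_m(33.5, 126.5, 33.9, 127.0))
```

Because the map view covers a much larger D2 than the detail view, its scaling factor is correspondingly smaller.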

5.2. Conversion of WGS-84 Geodetic Locations (GPS Values) to Cartesian Coordinates

The radar system gave the data about the location of the airplanes in the real world according to the World Geodetic System (GPS values). However, the Unity 3D engine used for the development of the mixed reality world followed a Cartesian coordinate system. Hence, for mapping the air traffic in the mixed reality world, the positions were required in the form of x, y and z coordinates. Therefore, in order to find the Cartesian coordinates for the positions of the airplanes, it was required to convert the geodetic coordinates given by the radar system to the local tangent plane [23]. The basis for the implementation of the conversion between the coordinate systems was a book by J. Farrell and M. Barth [24]. The Cartesian coordinates in terms of x, y and z were derived from the GPS information by using the system of equations described below.

where in Equations (2)–(4), the height is denoted by h, latitude by λ and longitude by φ, while M and e were calculated by using the equation below:

where ellipsoid flatness is denoted by f and calculated using Equation (6).

For calculating the ellipsoid flatness, the WGS-84 Earth semi-major axis was required, which is denoted as a, and the derived Earth’s semi-minor axis, denoted as b in Equation (6). The value of M used in Equations (2)–(4), can be calculated by using the e in Equation (7).

The value of s can be calculated as follows:

Therefore, the Cartesian coordinates for an airport’s control tower and airplane locations were calculated by substituting their GPS locations in Equations (2)–(4).
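Equations (2)–(7) are not reproduced in this text, so the sketch below uses the standard WGS-84 geodetic-to-Cartesian (Earth-centered, Earth-fixed) conversion rather than the paper’s exact notation. It is an assumption that this matches the authors’ formulation; in particular, the code uses plain `lat_deg` and `lon_deg` names instead of the λ and φ symbols above, and `n` for the prime vertical radius of curvature.

```python
import math

WGS84_A = 6378137.0                # semi-major axis a, in meters
WGS84_F = 1.0 / 298.257223563      # flattening f = (a - b) / a
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared, e^2


def geodetic_to_cartesian(lat_deg: float, lon_deg: float, h_m: float):
    """Convert latitude, longitude (degrees) and height (meters) to x, y, z."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    # Prime vertical radius of curvature at this latitude.
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + h_m) * math.cos(lat) * math.cos(lon)
    y = (n + h_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h_m) * math.sin(lat)
    return x, y, z
```

The same function would be applied to the control tower and to each airplane reading, so that both positions are expressed in one Cartesian frame before the directional vector is computed.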

5.3. Directional Vector

In this step, the directional vector was calculated between the Cartesian coordinates of the control tower and airplane in order to map the airplane with respect to the tower’s location. If the coordinates of the control tower are represented as C(x1, y1, z1) and the airplane as A(x2, y2, z2), the directional vector can be calculated as follows:

After calculating the directional vector, the magnitude of this vector was calculated. This magnitude was the distance between the control tower and airplane in the actual world. Later, the calculated magnitude, i.e., the control tower-airplane distance, was downscaled by multiplying it with the scaling factor calculated in Section 5.1.
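The step above can be sketched in a few lines. The equation for the directional vector is not reproduced in this text; the sketch assumes the natural reading, namely the component-wise difference A − C = (x2 − x1, y2 − y1, z2 − z1). The function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def directional_vector(tower: Vec3, airplane: Vec3) -> Vec3:
    """Vector pointing from the control tower C to the airplane A."""
    return (airplane[0] - tower[0], airplane[1] - tower[1], airplane[2] - tower[2])


def magnitude(v: Vec3) -> float:
    """Euclidean length of the vector: the tower-airplane distance."""
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def downscale(distance_m: float, scaling_factor: float) -> float:
    """Real-world distance expressed in mixed reality units."""
    return distance_m * scaling_factor
```

For example, with the tower at the origin and an airplane at (3000, 4000, 0) meters, the magnitude is 5000 m, and a scaling factor of 0.001 maps it to 5 mixed reality units.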

5.4. Mapping

Finally, the airplane in the mixed reality world was mapped with the help of the directional vector and its magnitude calculated in Section 5.3. The directional vector provided the direction, and its magnitude gave the distance of the airplane from the control tower, so that the airplane could be mapped at its exact location with respect to the control tower in the mixed reality world. After finding the exact location, a 3D airplane was instantiated and mapped on that location in the mixed reality world (Figure 1 and Figure 2).

Modules 5.2-5.4 were executed for each airplane whenever a new GPS reading was received for it. However, the process described in module 5.1 was used only once in order to calculate the scaling factor for rescaling of the real world’s large distances.
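A minimal sketch of the mapping step is given below, assuming that the airplane is placed at the tower’s position in the mixed reality world plus the unit direction multiplied by the downscaled distance. The function name and the `tower_anchor` parameter are hypothetical, and any axis reordering needed for the Unity coordinate convention is not described in the text and is therefore left out.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def place_airplane(
    tower_xyz: Vec3,
    airplane_xyz: Vec3,
    scaling_factor: float,
    tower_anchor: Vec3 = (0.0, 0.0, 0.0),
) -> Vec3:
    """Position of the airplane hologram relative to the tower hologram."""
    d = tuple(a - t for a, t in zip(airplane_xyz, tower_xyz))
    dist = math.sqrt(sum(c * c for c in d))
    if dist == 0.0:
        # The airplane coincides with the tower: no direction is defined.
        return tower_anchor
    unit = tuple(c / dist for c in d)      # direction only
    scaled = dist * scaling_factor         # distance in mixed reality units
    return tuple(o + u * scaled for o, u in zip(tower_anchor, unit))


# The scaling factor is computed once (Section 5.1) and reused for every
# new GPS reading, which is converted and mapped again (Sections 5.2-5.4).
SCALE = 0.001  # illustrative value
tower = (0.0, 0.0, 0.0)
for reading in [(3000.0, 4000.0, 0.0), (1500.0, 2000.0, 0.0)]:
    position = place_airplane(tower, reading, SCALE)
```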

6. Flight Scenarios and User Tests

The 3D scenery files and flight trajectories of Jeju Airport in the Republic of Korea were used in the flight tests. Eleven flights were included in the test. Two types of flight trajectories were simulated in our holographic mixed reality system: the first type consisted of real flights at Jeju Airport; the second type was created by the authors with the help of a flight simulator. The real flight trajectories were recorded and collected from an online source called FlightRadar24 [25], whereas the second type of flight was scenario-based and created with the help of the X-Plane 11 [26] software. A total of three scenario-based flight trajectories were created, as described below:

An emergency situation for an airplane occurred while landing at Jeju Airport due to the collision of a bird with the wind screen. After landing, the airplane stopped right in the middle of the runway.

An airplane was approaching the runway to take off and was blocked by the emergency that happened in scenario 1. Hence, this airplane returned to the gate.

There was another airplane that was supposed to land on the same runway after the airplane’s takeoff discussed in scenario 2. Therefore, due to the blockage of the runway, this airplane was on hold and flying around the airport until it received the clearance signal to land.

The flight data were retrieved as a CSV file for each flight trajectory. They mainly consisted of the longitude, latitude, altitude, speed and heading of the airplane with a timestamp for the whole flight trajectory. One of the main concerns in testing was to analyze the accurate mapping of the airplanes’ location in mixed reality. In the flight tests, the flight trajectories were mapped accurately in the mixed reality view with respect to real world locations. As a result of very accurate mapping of the airplanes’ location in mixed reality, air traffic controllers could easily rely on the proposed system to monitor the runways and the rest of the airfield. The outside view from the control tower was not required to monitor the airfield anymore. This would facilitate the air traffic controllers in controlling flights during bad weather. The 4D holographic view increased the interpretation power. Moreover, 3D spatial sound provided with the airplanes played a vital role in giving signals about an airplane’s location in the mixed reality world. A controller could easily find the location of an airplane in the mixed reality world with the help of spatial sound. Another main purpose of testing was to analyze the ease of interaction with multiple interfaces using a single head-mounted see-through display. With the help of a holographic menu and voice commands, it was very comfortable to switch between multiple interfaces in no time.
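Reading one trajectory file of the kind described above could look like the following sketch. The column names (`timestamp`, `latitude`, `longitude`, `altitude`, `speed`, `heading`) and the `TrackPoint` type are assumptions, since the text lists the fields but not the exact header of the CSV files, and the sample rows are made-up values rather than recorded flight data.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class TrackPoint:
    """One timestamped sample of a flight trajectory."""

    timestamp: str
    latitude: float
    longitude: float
    altitude: float
    speed: float
    heading: float


def read_track(lines: Iterable[str]) -> List[TrackPoint]:
    """Parse CSV text with a header row into a list of track points."""
    reader = csv.DictReader(lines)
    return [
        TrackPoint(
            timestamp=row["timestamp"],
            latitude=float(row["latitude"]),
            longitude=float(row["longitude"]),
            altitude=float(row["altitude"]),
            speed=float(row["speed"]),
            heading=float(row["heading"]),
        )
        for row in reader
    ]


# Made-up sample rows used only to exercise the parser.
SAMPLE = """timestamp,latitude,longitude,altitude,speed,heading
2019-01-01T00:00:00Z,33.50,126.50,1200,180,70
2019-01-01T00:00:05Z,33.51,126.52,1150,175,72
"""
track = read_track(io.StringIO(SAMPLE))
```

Each parsed point can then be passed through the conversion and mapping steps of Section 5 in timestamp order to replay the trajectory.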

In emergency situations, it is necessary to convey a timely message to the air traffic controller about the emergency with all information on the flight. The main purpose for creating emergency scenarios in flight tests was to analyze the performance of the system in emergency situations. With the help of 3D spatial sound and the interface presented in Section 4.6, the proposed system alerted the user to the emergency situation and the location of the airplane at the right time.

For safety reasons, it was difficult to evaluate the system’s performance in the control tower with real-time air traffic. Hence, pre-recorded and specially designed scenario-based flight trajectories were used to test the system in a controlled environment. For this testing, two experienced former air traffic controllers were invited to visualize the flight trajectories and interact with the system in order to assess its usability.

The proposed system was deployed on the HoloLens and tested by the two former air traffic controllers, whose average age was 62 years. Because they were unfamiliar with the HoloLens device, the authors first demonstrated how to use it and then carried out the test.

The usability test mainly covered four areas: usefulness, ease of use, ease of learning, and satisfaction. In designing the questionnaire, the usability of the HoloLens itself was also taken into account, as shown in Table 2.

The results of the conducted user test are as follows:

As shown in Figure 10, the usefulness category of the questionnaire achieved higher scores than the remaining three categories. The lower scores in the other categories are likely associated with the holographic device’s field of view and its ease of use. During the experiments, the subjects pointed out the narrow field of view, the unfamiliar interactions, and similar issues. The subjects were unfamiliar with the HoloLens device’s gesture input method and found it difficult to learn. These limitations of the device may have affected the system usability results.

The user test yielded positive feedback about the applicability of the system, along with constructive criticism for improving it. The participants also recorded their views on the hardware used. The overall feedback of each expert is as follows:

<Participant 1>

As a tower cab, it is a good way to monitor the work of the tower team.

The narrow field of view (FOV) of the HoloLens makes it difficult to analyze a wider area, which may make it difficult to identify objects.

While a flight was in progress, detailed information (e.g., altitude and speed of the aircraft) was shown alongside the aircraft. However, the participant suggested showing the required information only when it is needed.

<Participant 2>

It would be good to use it for simulation of flight operations as it enhanced the analysis power pretty well.

The narrow FOV of the HoloLens was a problem. Interaction was quite difficult, and extensive training on how to use the HoloLens is required.

The overall feedback from the experts included both positive and negative points. The positive comments point to new possibilities opened up by the proposed system, which are as follows:

First, the system can be used in the tower cab of the control tower for real-time monitoring of the control tasks performed by the entire team. Such monitoring makes it possible to detect work situations quickly and to respond efficiently to emergency situations in less time. Second, the system can also be used as an educational tool to teach and train students in operations related to air navigation. The proposed system was developed using data that are actually used by real controllers. Hence, it can support simulated control work.

On the other hand, negative feedback was also received from the experts, and it was mainly related to the limitations of the HoloLens device discussed above. First, there was an opinion that the narrow FOV made the overall system inconvenient to use. The narrow viewing angle is a hardware-related problem and can be addressed by using a more recent mixed reality head-mounted device (HMD), such as the one developed by Microsoft [27]. The new HoloLens offers almost double the FOV of the previous version. The second opinion concerned the difficult and unfamiliar interactions. Initially, the gesture-based interactions provided by the HoloLens may be unfamiliar. However, this problem can be partially solved by expanding speech recognition in the future. Complementing the interaction methods and providing information selectively are expected to be carried out in future work.

7. Conclusions

Thus far, research and development on MR technology has concentrated on a narrow range of fields, such as entertainment. However, recognizing the need for MR technology in the operating environment of real equipment, this study proposed a future-type air traffic control system using MR technology. Applying this technology in a complex work environment such as ATC/M is very meaningful for the development of related technologies, because MR is regarded at the national level as one of the driving forces of the fourth industrial revolution. Therefore, in this study, the characteristics of MR technology were examined, and appropriate interaction methods were designed to propose a system that improves the work efficiency and productivity of air traffic controllers. The system is designed to be operated directly by air traffic controllers and thereby to provide new user experiences.

The expected effects that can be obtained from the outcome of the development in this study are as follows.

The possibility of using the system for education and training: The International Civil Aviation Organization (ICAO) recommends the expansion of simulator education for air traffic controllers, because training in advance cultivates their ability both to prevent accidents and to cope with accidents that have already occurred. In countries with advanced aviation sectors, aerodrome control simulators have been introduced, and periodic training is carried out to improve the ability of air traffic controllers to cope with normal and abnormal situations. Reflecting this international trend, the use of this system as an ATC simulator or training tool can help to meet this need to a great extent.

The possibility of using the system as a tool to analyze accident sequences: In order to understand the sequence of an accident, it is important to substitute the various unit events and environmental conditions and to analyze the causal relations accurately. The survey indicated that air traffic controllers are also called to meetings to analyze accident sequences and receive training. Systematic methods or tools are needed to establish preventive measures against the recurrence of accidents with similar causes. This system can be expected to serve as a tool for analyzing accident sequences.

The possibility of overcoming control facility constraints: While efforts are being made to replace or add control equipment to solve problems caused by air traffic density increasing every year, there are always financial limitations. Continuous research on control systems is needed to overcome these physical constraints and reduce the probability of safety accidents. This system can reduce the purchase costs incurred in constructing a new control system and replacing equipment, and it can be used as a complementary tool that supports control work in actual operating environments.

The improvement of the work efficiency of air traffic controllers through system integration: Since air traffic control is generally carried out by air traffic controllers, it is important to reduce the complexity of their work. Currently, there are various control systems with the same functions, but providing a vast amount of unfiltered information can increase the complexity of air traffic control. This system integrates the available information and presents only the information that air traffic controllers actually use. In addition, it supports intuitive interactions such as gestures and voice commands, as well as multiple views and 3D spatial sound, which are effective in improving the understanding of information. By selectively providing information that is helpful for control judgments, this system can improve the efficiency of control work, for example by reducing the workload of air traffic controllers.

Assistance for control judgments in emergency situations: The burden of control work increases under low-visibility conditions caused by severe weather and similar factors. Since this system actively provides additional information, it can be expected to support quick judgments about, and responses to, emergency situations. The system can also provide an environment in which the air traffic controller can easily and logically recognize inputs, requirements, and warnings.

This study differs from existing studies in the following respects. First, because the actual operating environment is complex, preliminary surveys of air traffic controllers were conducted before system development to understand their work and to identify problems in the control system. Second, this study applies real-time data of aircraft flight trajectories and provides a 4D multi-view for the clear and effective presentation of flight information. By providing real-time flight trajectories visually, the user’s understanding of the information can be improved. Third, the system was designed after identifying the equipment, control screens, and familiar interaction types used in the actual operating environment. This approach was intended to minimize the sense of unfamiliarity in the air traffic controller’s work, given that the system may be used in actual operating environments, and it can help with quick judgments and responses in emergency situations. Fourth, the productivity of control work can be improved because the interactive environment is provided in the actual workspace rather than in a separate space.

However, because the system is intended for use in actual operating environments, its stability and reliability must be assured. This requires appropriate indicators for the quantitative evaluation of its quality level, along with the measurement of user experience. Evaluation indicators suitable for MR environment software must therefore be developed first. Accordingly, the authors will develop measurement tools in future work, based on studies of evaluation items suitable for this system.