On Data Protection Regulations, Big Data and Sledgehammers in Higher Education

Abstract

1. Introduction

- Data must be collected for specified, explicit and legitimate purposes and not further processed in a manner that is incompatible with those purposes. Archiving purposes in the public interest, just like scientific or historical research purposes or statistical purposes, are not considered incompatible.

- Data must be adequate, relevant and limited to what is necessary in relation to the purposes for which they are processed.

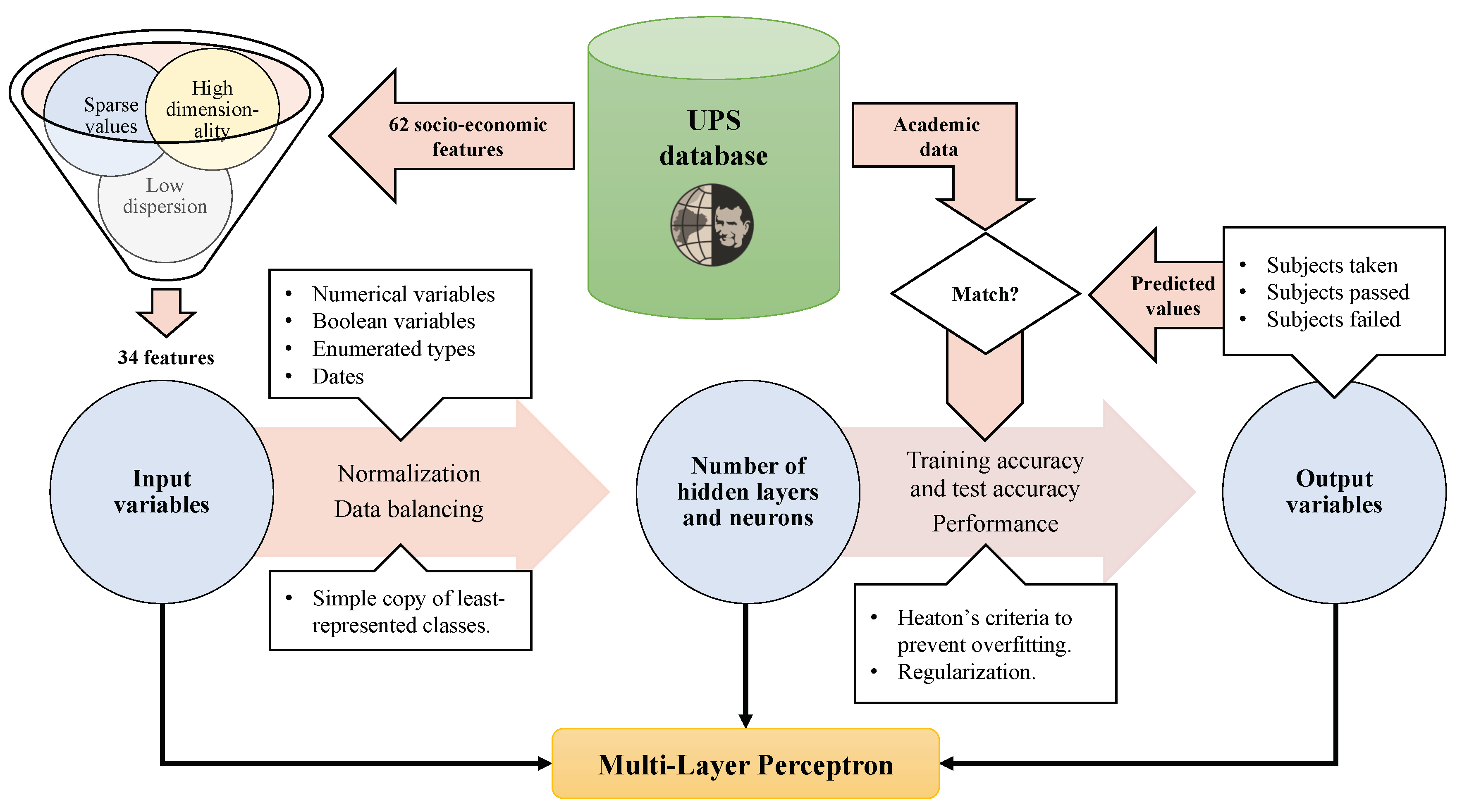

2. Selecting Inputs and Outputs to/from the MLP

- Low dispersion parameters. Some parameters (such as nationality, country of birth or country of residence) were treated separately, because very few records had values other than ‘Ecuadorean’ or ‘Ecuador’. Although studying the differences between nationalities would be interesting, such skewed inputs would hinder the convergence of the neural network in both speed and quality, and most future estimations would be made for Ecuadorean students anyway. Besides, the choice of mobile operator was not considered relevant, even though it did exhibit some correlation with economic variables.

- Dependent parameters. In a first approach, we decided to use the overall monthly expenses as the only indicator of economic level. Individual items of expenditure were considered in a second stage. Likewise, we did not consider at first the ‘Diploma level’ field, which only gets values when ‘Has another diploma?’ stores ‘Yes’.

- Missing or sparse values. We noticed that several fields about the students’ origin (e.g., province or city) were not systematically filled in, which was not the case for residence data. We thought it was undesirable to handle fields with too many gaps, because we would have to assign some value during the normalization and there would be no clear policies to follow.

- Dates. In general, temporal variables ought to be treated carefully before being used as input to a neural network, because their magnitude and range hamper normalization. Incorrect treatment can lead to overfitting and, thereby, nullify the informative value of the variables. (Overfitting happens when a neural network models the training data very accurately but fails to provide proper outputs for unknown data.) We used the normalized value of the student’s age at high school graduation. A categorization of dates, corresponding to different generations, would be of little interest because all the intended predictions would be made for new records, corresponding to later dates.

- High dimensionality parameters. Fields like ‘High school of origin’, ‘Parish of residence’ or ‘Neighborhood of residence’ take tens of different values. We did not consider them at first whenever less granular fields conveyed similar information. Thus, for example, in the first stages we used ‘Type of high school’, ‘Province of high school’ and ‘City of residence’ to assess the influence of the locations of pre-University studies and residence on the predictions. Likewise, ‘Type of disability’ was not considered because it took too many different values, and the Boolean ‘Has any disability?’ was used instead.

- Infrastructure services. The students’ access to potable water, sewage system, electricity supply, landline phone, Internet and cable TV was treated as an accumulated numerical value, from 0 to 6, instead of managing the 64 different combinations of the six yes/no flags. We tried configurations in which the six variables were given the same weight and others in which water, sewage and electricity got double importance.

- Enumerated types: in general, the method that adds the lowest topological dispersion to the inputs of a neural network for enumerated types is one-of-K (one-hot) encoding. As a shortcoming, the dimensionality of the input layer grows with each possible value, since every value requires one additional input neuron.

- Binary variables: for simplicity, we chose to encode Boolean variables as enumerated types of 2 values.

- Numerical variables: we decided to use Gaussian normalization for the numerical fields, preprocessing the data (based on normalized median) in order to remove out-of-range values and false zeros that could have detrimental effects.

- Number of subjects taken.

- Average qualification.

- Number of subjects passed.

- Number of subjects failed.
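The input encodings described in the list above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the dictionary keys for the six services and the median-based outlier rule (deviations scaled by the median absolute deviation, with a threshold of 3) are assumptions made for illustration, and the handling of false zeros is not shown.

```python
from statistics import mean, median, pstdev


def one_of_k(value, categories):
    """One-of-K (one-hot) encoding: one input per possible value."""
    return [1.0 if value == c else 0.0 for c in categories]


def infrastructure_score(services, weights=None):
    """Accumulate the six yes/no service flags into a single number.

    With unit weights the score ranges from 0 to 6. Passing weights of 2
    for water, sewage and electricity reproduces the 'double importance'
    variant (the score then ranges from 0 to 9).
    """
    weights = weights or {name: 1 for name in services}
    return sum(weights[name] for name, present in services.items() if present)


def gaussian_normalize(values, max_deviations=3.0):
    """Z-score normalization after a median-based outlier filter.

    The filter (assumed here) drops values whose distance to the median
    exceeds max_deviations times the median absolute deviation.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values) or 1.0
    kept = [v for v in values if abs(v - med) / mad <= max_deviations]
    mu = mean(kept)
    sigma = pstdev(kept) or 1.0
    return [(v - mu) / sigma for v in kept]


# Hypothetical record fragments, for illustration only.
school_types = ["foreign", "fiscal", "fiscommisional", "particular"]
services = {
    "water": True, "sewage": True, "electricity": True,
    "landline": False, "internet": True, "cable_tv": False,
}
double_basic = {
    "water": 2, "sewage": 2, "electricity": 2,
    "landline": 1, "internet": 1, "cable_tv": 1,
}

encoded_type = one_of_k("fiscal", school_types)
plain_score = infrastructure_score(services)
weighted_score = infrastructure_score(services, double_basic)
z_scores = gaussian_normalize([10, 12, 11, 13, 12, 500])
```

Note that one-of-K encoding adds one input neuron per category, which is why fields with tens of values were initially left out.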

3. Configuration of the MLP

- It should fall between the numbers of neurons at the input and output layers.

- It should be close to 2/3 of the size of the inputs plus the size of the outputs.

- It should be lower than twice the number of input neurons.
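As a rough illustration, the three rules of thumb can be turned into concrete numbers for a given network. The sketch below assumes that the second rule refers to the common heuristic of two thirds of the input size plus the output size; the function name and the layer sizes in the example are hypothetical.

```python
def hidden_size_candidates(n_inputs, n_outputs):
    """Return the values suggested by the three rules of thumb."""
    low, high = sorted((n_inputs, n_outputs))
    return {
        # Rule 1: somewhere between the output and input layer sizes.
        "between": (low, high),
        # Rule 2 (assumed 2/3 heuristic): 2/3 of the inputs plus the outputs.
        "two_thirds_rule": round(2 * n_inputs / 3) + n_outputs,
        # Rule 3: strictly below twice the number of input neurons.
        "upper_bound": 2 * n_inputs,
    }


# Hypothetical network with 130 input neurons and 4 outputs.
candidates = hidden_size_candidates(130, 4)
```

The rules only narrow down the search; the final number of hidden neurons still has to be chosen empirically.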

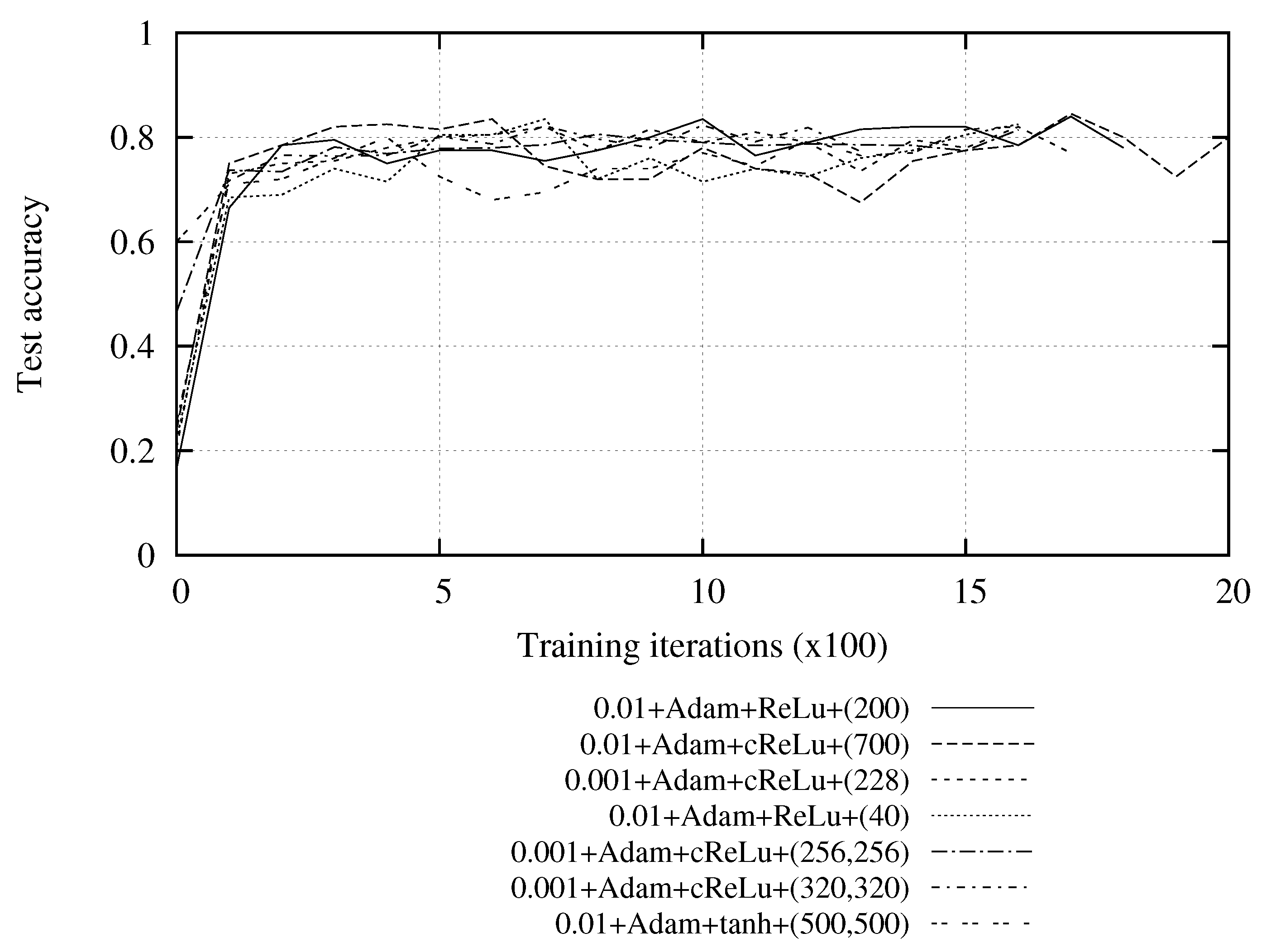

4. Results

- Training accuracy monitors the speed at which the neural network adjusts to the training data; the value must tend to 1.

- Test accuracy monitors the speed at which the neural network learns to predict the correct outputs for a set of input data for which it has not been trained.
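Both metrics are the same computation applied to different data: the fraction of correct predictions on the records used for training, and on records held out from it. A minimal sketch, with hypothetical labels and a hypothetical function name:

```python
def accuracy(predicted, expected):
    """Fraction of predictions that match the expected outputs."""
    if len(predicted) != len(expected) or not expected:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(p == e for p, e in zip(predicted, expected))
    return hits / len(expected)


# Hypothetical predictions on training records and on held-out records.
train_acc = accuracy(["pass", "fail", "pass", "pass"],
                     ["pass", "fail", "pass", "pass"])
test_acc = accuracy(["pass", "fail", "pass", "pass"],
                    ["pass", "fail", "fail", "pass"])
```

A training accuracy close to 1 combined with a clearly lower test accuracy is the usual symptom of overfitting.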

5. Discussion vs. Related Work

- The authors of Reference [7] ran a comparative study on a dataset of 257 student records, showing that Bayesian networks (76.8% accuracy) outperformed decision trees (73.9%) and these in turn outperformed the Multi-Layer Perceptron (71.2%).

- Similarly, a study was presented in Reference [8] with data about 280 students, making predictions with 10 off-the-shelf algorithms implemented in the Weka data mining framework (https://www.cs.waikato.ac.nz/ml/weka/). The Naive Bayes classifier was found to be the best predictor (65% accuracy).

- Another comparison was made in Reference [9] on a dataset containing 225 student records, with 10 attributes of academic performance each. Once again, a Bayesian network (92% accuracy) turned out to be slightly better than other classifiers (Naive Bayes, ID3 and J48) and than the Multi-Layer Perceptron.

- Mishra et al. conducted a study including some social and emotional parameters in the students’ profiles, which the evaluation showed to be much less relevant to the predictions than the records of previous academic results. Random Tree happened to be the most accurate algorithm [10].

- The authors of Reference [12] applied a range of classifiers and clustering methods on the academic results of 480 students in order to predict the outcomes of 25 others in new subjects, taking as input the recent grades obtained by the latter in the preceding semesters too. They attained an accuracy of 80% in classifying the students’ performance as low, medium or high.

- Alsheddy and Habib [13] used the J48 classifier to predict (with 85.8% accuracy) the students’ probability of abandoning the University at the end of the year, working with some demographic variables as well as the results of preceding semesters. To the best of our knowledge, this study used the most extensive dataset in the literature to date, with records of 1980 students.

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| BFGS | Broyden-Fletcher-Goldfarb-Shanno algorithm |
| cReLu | Concatenated Rectified Linear Unit |
| GPU | Graphics Processing Unit |
| MLP | Multi-Layer Perceptron |
| ReLu | Rectified Linear Unit |
| SGD | Stochastic Gradient Descent |
| UPS | Universidad Politécnica Salesiana |
| USD | United States Dollars |

References

1. ICO (Information Commissioner’s Office). Data protection rights: What the public want and what the public want from Data Protection Authorities. In Proceedings of the European Conference of Data Protection Authorities, Manchester, UK, 18–20 May 2015.

2. Cory, N. Cross-Border Data Flows: Where Are the Barriers, and What Do They Cost? Information Technology & Innovation Foundation: Washington, DC, USA, 2017; pp. 1–42.

3. Ross, W. EU Data Privacy Laws are Likely to Create Barriers to Trade. Financial Times, 30 May 2018.

4. del Casino, V. Machine Learning, Big Data and the future of higher ed. Inside Higher Ed, 21 March 2018.

5. Fendley, B. Artificial Intelligence in Higher Education. Medium, 8 March 2018.

6. Blackwood, J. How one Artificial Intelligence is Changing Higher Education Curriculum. Tech Decisions, 23 January 2018.

7. Osmanbegović, E.; Suljić, M. Data mining approach for predicting student performance. Econ. Rev. 2012, 10, 3–12.

8. Romero, C.; Zafra, A.; Gibaja, E.; Luque, M.; Ventura, S. Predicción del rendimiento académico en las nuevas titulaciones de grado de la EPS de la Universidad de Córdoba. In Proceedings of the Jornadas de Enseñanza de la Informática, Ciudad Real, Spain, 10–13 July 2012.

9. Almarabeh, H. Analysis of students’ performance by using different data mining classifiers. Int. J. Mod. Educ. Comput. Sci. 2017, 9, 1–9.

10. Mishra, T.; Kumar, D.; Gupta, S. Mining students’ data for performance prediction. In Proceedings of the International Conference on Advanced Computing & Communication Technologies, Rohtak, India, 8–9 February 2014.

11. Ruby, J.; David, K. Predicting the Performance of Students in Higher Education Using Data Mining Classification Algorithms: A Case Study. Int. J. Res. Appl. Sci. Eng. Technol. 2014, 2, 173–180.

12. Amrieh, E.A.; Hamtini, T.; Aljarah, I. Mining educational data to predict student’s academic performance using ensemble methods. Int. J. Database Theory Appl. 2016, 9, 119–136.

13. Alsheddy, A.; Habib, M. On the application of data mining algorithms for predicting student performance: A case study. Int. J. Comput. Sci. Netw. Secur. 2017, 17, 189–197.

14. Devasia, T.; Vinushree, T.P.; Hegde, V. Prediction of students performance using Educational Data Mining. In Proceedings of the International Conference on Data Mining and Advanced Computing (SAPIENCE), Ernakulam, India, 16–18 March 2016.

15. Son, L.; Fujita, H. Neural-fuzzy with representative sets for prediction of student performance. Appl. Intell. 2018, 49, 1–16.

16. Yang, F.; Li, F.W.B. Study on student performance estimation, student progress analysis, and student potential prediction based on data mining. Comput. Educ. 2018, 123, 97–108.

17. Hamoud, A.; Hashim, A.S.; Awadh, W.A. Predicting student performance in Higher Education Institutions using Decision Tree analysis. Int. J. Interact. Multimed. Artif. Intell. 2018, 5, 26–31.

18. Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991, 4, 251–257.

19. Belue, L.M.; Bauer, K.W. Determining input features for multilayer perceptrons. Neurocomputing 1995, 7, 111–121.

20. Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

21. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

22. Heaton, J. The Number of Hidden Layers. Heaton Research. 1 June 2017. Available online: https://www.heatonresearch.com/2017/06/01/hidden-layers.html (accessed on 27 March 2018).

23. Ramchoun, H.; Idrissi, M.A.J.; Ghanou, Y.; Ettaouil, M. New modeling of Multilayer Perceptron architecture optimization with regularization: An application to pattern classification. Int. J. Comput. Sci. 2017, 44, 261–269.

24. Demyanov, S. Regularization Methods for Neural Networks and Related Models. Ph.D. Thesis, Department of Computing and Information Systems, The University of Melbourne, Melbourne, Australia, September 2015.

25. Vukicevic, M.; Jovanovic, M.Z.; Delibasic, B.; Suknovic, M. Recommender system for selection of the right study program for Higher Education students. In RapidMiner: Data Mining Use Cases and Business Analytics Applications; Hofmann, M., Klinkenberg, R., Eds.; Chapman & Hall: London, UK, October 2013.

26. Bakhshinategh, B.; Spanakis, G.; Zaiane, O.; Elatia, S. A course recommender system based on graduating attributes. In Proceedings of the 9th International Conference on Computer Supported Education (CSEDU), Porto, Portugal, 21–23 April 2017.

27. Lin, J.; Pu, H.; Li, Y.; Lian, J. Intelligent recommendation system for course selection in smart education. Procedia Comput. Sci. 2018, 129, 449–453.

| Field | Type | Values |
|---|---|---|
| Gender | Enum | Male/female. |
| Birthdate | Date | Dates from 1970 to 1996. |
| Marital status | Enum | Single/married/divorced/widow/domestic partnership. |
| Country of birth | Enum | Several countries—most commonly, Ecuador. |
| City of birth | Enum | City names. |
| Nationality | Enum | Several nationalities—most commonly, Ecuadorean. |
| Race | Enum | White/indigenous/mestizo/black/Afro-Ecuadorean/unknown. |
| Blood type | Enum | Includes ‘unknown’. |
| Mobile operator | Enum | Claro/CNT/Movistar/Others. |
| Country of origin | Enum | Several countries—most commonly, Ecuador. |
| High school of origin | Enum | Names of many different high schools. |
| Type of high school | Enum | Foreign/fiscal/fiscommisional/particular. |
| Province of high school | Enum | Province names. |
| City of high school | Enum | City names. |
| High school diploma | Enum | Many official denominations. |
| High school graduation year | Enum | Dates from 1984 to 2018. |
| High school grade | Float | From 0 to 20, except for foreign students. |
| Has another diploma? | Boolean | Yes/no. |
| Diploma level | Enum | Several values, if the above is ‘yes’. |
| Is currently studying another degree? | Boolean | Yes/no. |
| Country of residence | Enum | Ecuador. |
| Province of residence | Enum | Province names. |
| City of residence | Enum | City names. |
| Parish of residence | Enum | Parish names. |
| Type of parish | Enum | Rural/urban/marginal urban. |
| Neighborhood | Enum | Neighborhood names. |
| Area of residence | Enum | Center/north/south/valleys/rural/suburbs. |
| Country of origin | Enum | Several countries—most commonly, Ecuador. |
| Province of origin | Enum | Province names. |
| City of origin | Enum | City names. |
| Parish of origin | Enum | Parish names. |
| Type of parish | Enum | Rural/urban/marginal urban. |
| Neighborhood | Enum | Neighborhood names. |
| Area of origin | Enum | Center/north/south/valleys/rural/suburbs. |
| Head of family | Boolean | Yes/no. |
| Economically dependent | Boolean | Yes/no. |
| Who covers expenses | Enum | Self/parents/siblings/NGO/… |
| Has health problems? | Boolean | Yes/no. |
| Has any disability? | Boolean | Yes/no. |
| Type of disability | Enum | Disability denomination from taxonomy. |
| Member of CONADIS | Boolean | Yes/no. |
| Housing | Enum | Own/leased/rented/… |
| Type of housing | Enum | House/apartment/residence/… |
| Housing structure | Enum | Bricks/blocks/concrete/wood/substandard/… |
| Potable water | Boolean | Yes/no. |
| Sewage system | Boolean | Yes/no. |
| Electricity supply | Boolean | Yes/no. |
| Landline phone | Boolean | Yes/no. |
| Internet | Boolean | Yes/no. |
| Cable TV | Boolean | Yes/no. |
| Additional real estate properties | Integer | 0/1/2/… |
| Value of additional properties | Float | Value in USD. |
| Number of family-owned vehicles | Integer | 0/1/2/… |
| Value of vehicles | Float | Value in USD. |
| Monthly expenses in housing | Float | Value in USD. |
| Monthly expenses in food | Float | Value in USD. |
| Monthly expenses in education | Float | Value in USD. |
| Monthly expenses in transport | Float | Value in USD. |
| Monthly expenses in health | Float | Value in USD. |
| Monthly expenses in commodity services | Float | Value in USD. |
| Other monthly expenses | Float | Value in USD. |
| Overall monthly expenses | Float | Sum of the above. |

| Hyperparameter | Effect | Range |
|---|---|---|
| Learning rate | Rate of change of the internal weights of the neural network. | {constant, inverse, adaptive} |
| Penalty factor | Used in regularization, to prevent excessive variations in the internal weights of the network. | 0.001–125 |
| Optimizer | Functions that decide the direction and gradient size to minimize the chosen error metrics. | {BFGS, Adam, Adadelta, SGD} |
| Activation functions | Shape of the output of any neuron, given an input or set of inputs. | {ReLu, cReLu, tanh} |
| Hidden layers and neurons | General architecture of the network. | 1 or 2 hidden layers, their numbers of neurons equal to 0.7 or 0.8 times the number of neurons at the input layer. |
| Momentum | It helps stabilize the neural network, controlling the impact of feedback information. If it takes an excessively low value, the internal weights of the network vary too much and the convergence process takes longer. In contrast, if its value is too high, the network may converge too far from the best point. | 0.05, 0.1, … 0.9 |
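The discrete part of this search space can be enumerated mechanically. The following sketch only generates configurations; it does not train anything and is not tied to any particular library. The dictionary keys, the `width_factor` name and the input size of 100 are assumptions, and the continuous ranges (penalty factor, momentum) are left out for brevity.

```python
from itertools import product

# Discrete choices taken from the hyperparameter table above.
search_space = {
    "learning_rate": ["constant", "inverse", "adaptive"],
    "optimizer": ["BFGS", "Adam", "Adadelta", "SGD"],
    "activation": ["ReLu", "cReLu", "tanh"],
    "hidden_layers": [1, 2],
    "width_factor": [0.7, 0.8],
}


def configurations(space, n_inputs):
    """Yield one dict per combination of the discrete hyperparameters."""
    names = list(space)
    for combo in product(*(space[name] for name in names)):
        cfg = dict(zip(names, combo))
        # Each hidden layer has width_factor times the number of inputs.
        width = round(cfg.pop("width_factor") * n_inputs)
        cfg["hidden_layer_sizes"] = (width,) * cfg.pop("hidden_layers")
        yield cfg


# Hypothetical input layer of 100 neurons.
configs = list(configurations(search_space, 100))
```

Even without the continuous parameters, the grid already contains 144 combinations, which is why the search was restricted to a few values per hyperparameter.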

| Learning Rate | Penalty Factor | Optimizer | Activation Function | Hidden Layers | Training Accuracy | Test Accuracy |
|---|---|---|---|---|---|---|
| 0.01 | 0.48 | Adam | cReLu | (560) | 1 | 0.825 |
| 0.01 | 0.12 | Adam | ReLu | (160) | 1 | 0.815 |
| 0.01 | 0.576 | Adam | ReLu | (128) | 0.965 | 0.83 |
| 0.1 | 0.01 | SGD | tanh | (200) | 0.785 | 0.81 |
| 0.1 | 0.1 | SGD | tanh | (200) | 0.734 | 0.82 |
| 0.001 | 0.01 | Adadelta | ReLu | (200,200) | 0.5225 | 0.832 |
| 0.01 | 0.21 | Adam | cReLu | (81,81) | 1 | 0.81 |
| 0.01 | 0.4 | SGD | cReLu | (320) | 0.87 | 0.82 |
| 0.1 | 0.025 | SGD | cReLu | (104) | 0.955 | 0.825 |
| 0.001 | 0.358 | Adam | ReLu | (104) | 0.99 | 0.825 |
| 0.01 | 0.0358 | Adam | ReLu | (104) | 1 | 0.81 |
| 0.1 | 0.25 | SGD | ReLu | (163,163) | 1 | 0.82 |
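One way to read these results is to rank the runs by test accuracy and to look at the gap between training and test accuracy as a rough indicator of overfitting. The sketch below does this with the accuracy values from the table; the `Run` type and the `gap` measure are illustrative choices, not part of the original evaluation.

```python
from typing import NamedTuple


class Run(NamedTuple):
    optimizer: str
    activation: str
    hidden_layers: tuple
    train_acc: float
    test_acc: float

    @property
    def gap(self):
        """Training minus test accuracy; large values hint at overfitting."""
        return self.train_acc - self.test_acc


# Values copied from the results table, in the same row order.
runs = [
    Run("Adam", "cReLu", (560,), 1.0, 0.825),
    Run("Adam", "ReLu", (160,), 1.0, 0.815),
    Run("Adam", "ReLu", (128,), 0.965, 0.83),
    Run("SGD", "tanh", (200,), 0.785, 0.81),
    Run("SGD", "tanh", (200,), 0.734, 0.82),
    Run("Adadelta", "ReLu", (200, 200), 0.5225, 0.832),
    Run("Adam", "cReLu", (81, 81), 1.0, 0.81),
    Run("SGD", "cReLu", (320,), 0.87, 0.82),
    Run("SGD", "cReLu", (104,), 0.955, 0.825),
    Run("Adam", "ReLu", (104,), 0.99, 0.825),
    Run("Adam", "ReLu", (104,), 1.0, 0.81),
    Run("SGD", "ReLu", (163, 163), 1.0, 0.82),
]

best = max(runs, key=lambda r: r.test_acc)
most_overfit = max(runs, key=lambda r: r.gap)
```

All test accuracies fall between 0.81 and 0.832, so the ranking mostly separates runs by how closely they fit the training data rather than by how well they generalize.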

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

García-Vélez, R.A.; López-Nores, M.; González-Fernández, G.; Robles-Bykbaev, V.E.; Wallace, M.; Pazos-Arias, J.J.; Gil-Solla, A. On Data Protection Regulations, Big Data and Sledgehammers in Higher Education. Appl. Sci. 2019, 9, 3084. https://doi.org/10.3390/app9153084
