A New Approach to Information Extraction in User-Centric E-Recruitment Systems

Abstract

1. Introduction

2. Related Work

2.1. Information Extraction

2.2. Knowledge Base Construction

2.3. Data Enrichment and Linked Open Data

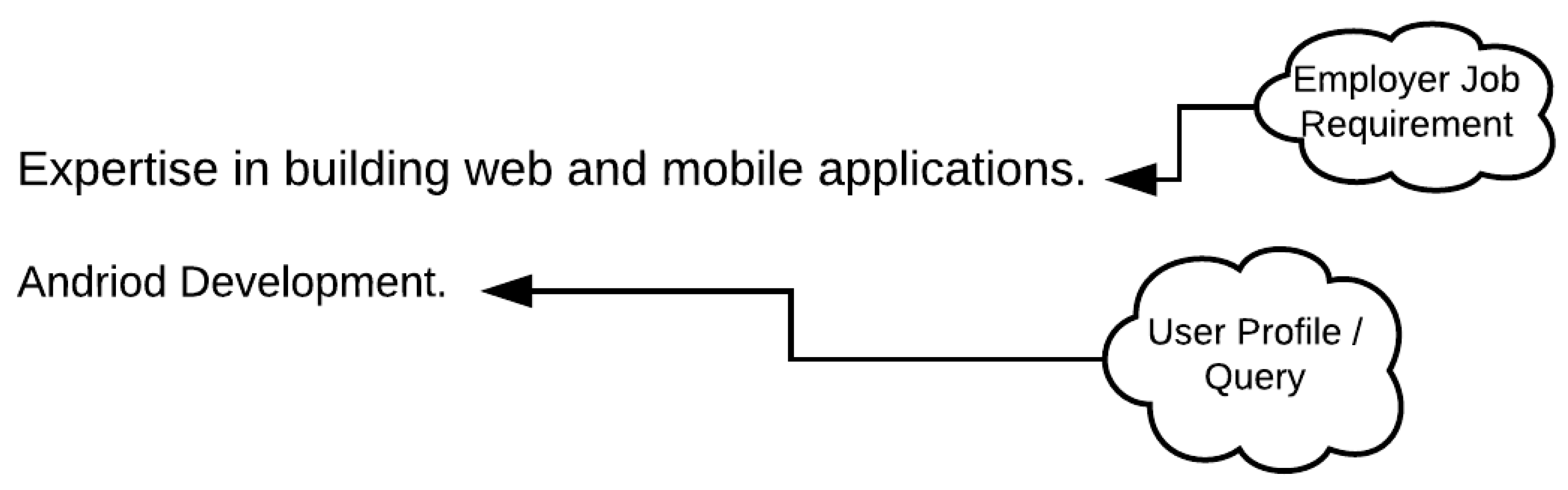

2.4. E-Recruitment Systems

2.5. Critical Analysis

- Information loss in the extraction of domain-specific e-recruitment entities from job descriptions, because their context, or their inter- and intra-document linkages, are unavailable.

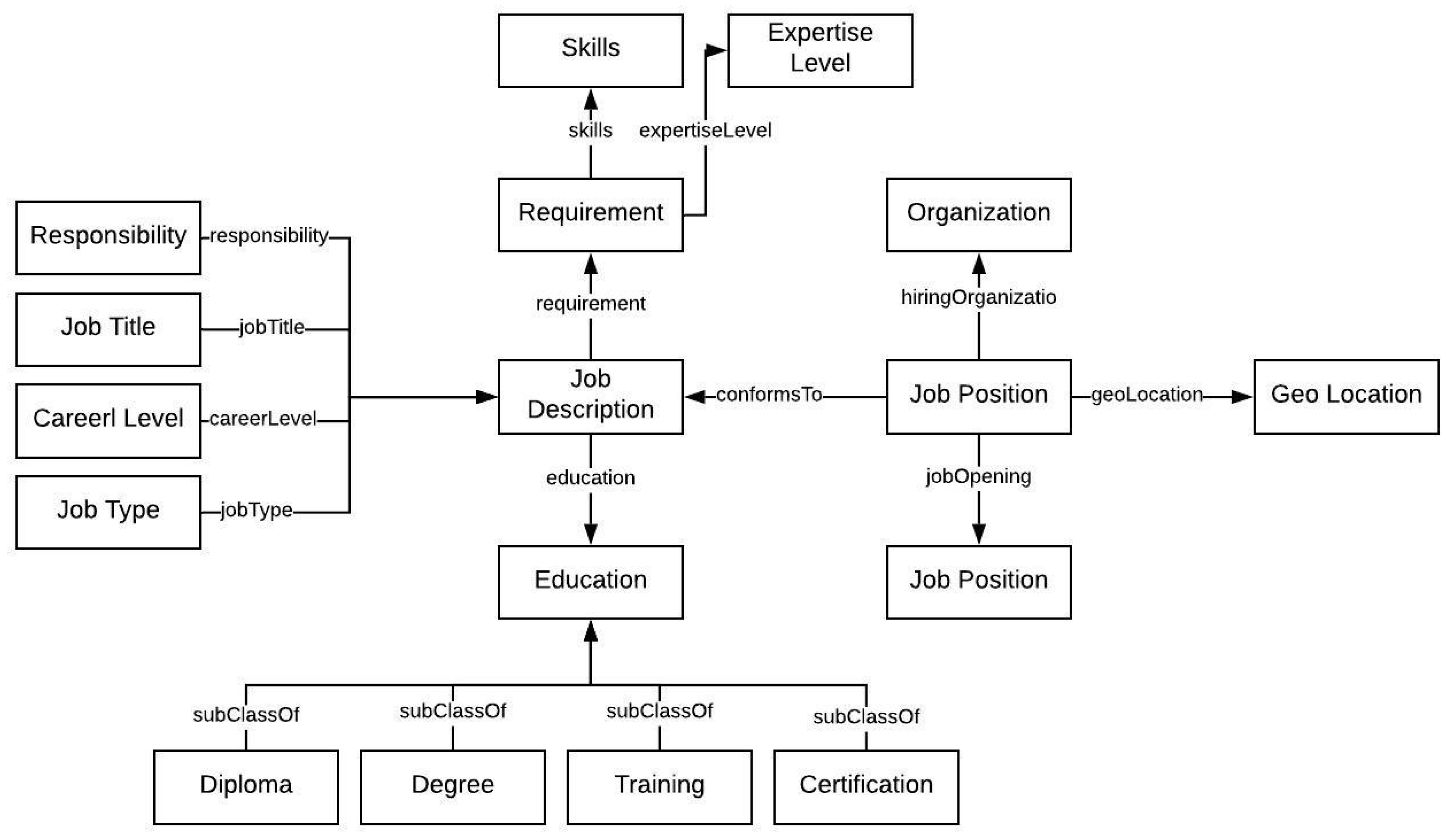

- Information loss due to the absence of a comprehensive schema-level domain ontology for e-recruitment that would relate the entities extracted from job descriptions, both hierarchically and associatively.

- Use of static sources for data enrichment (expansion), which results in stale data.

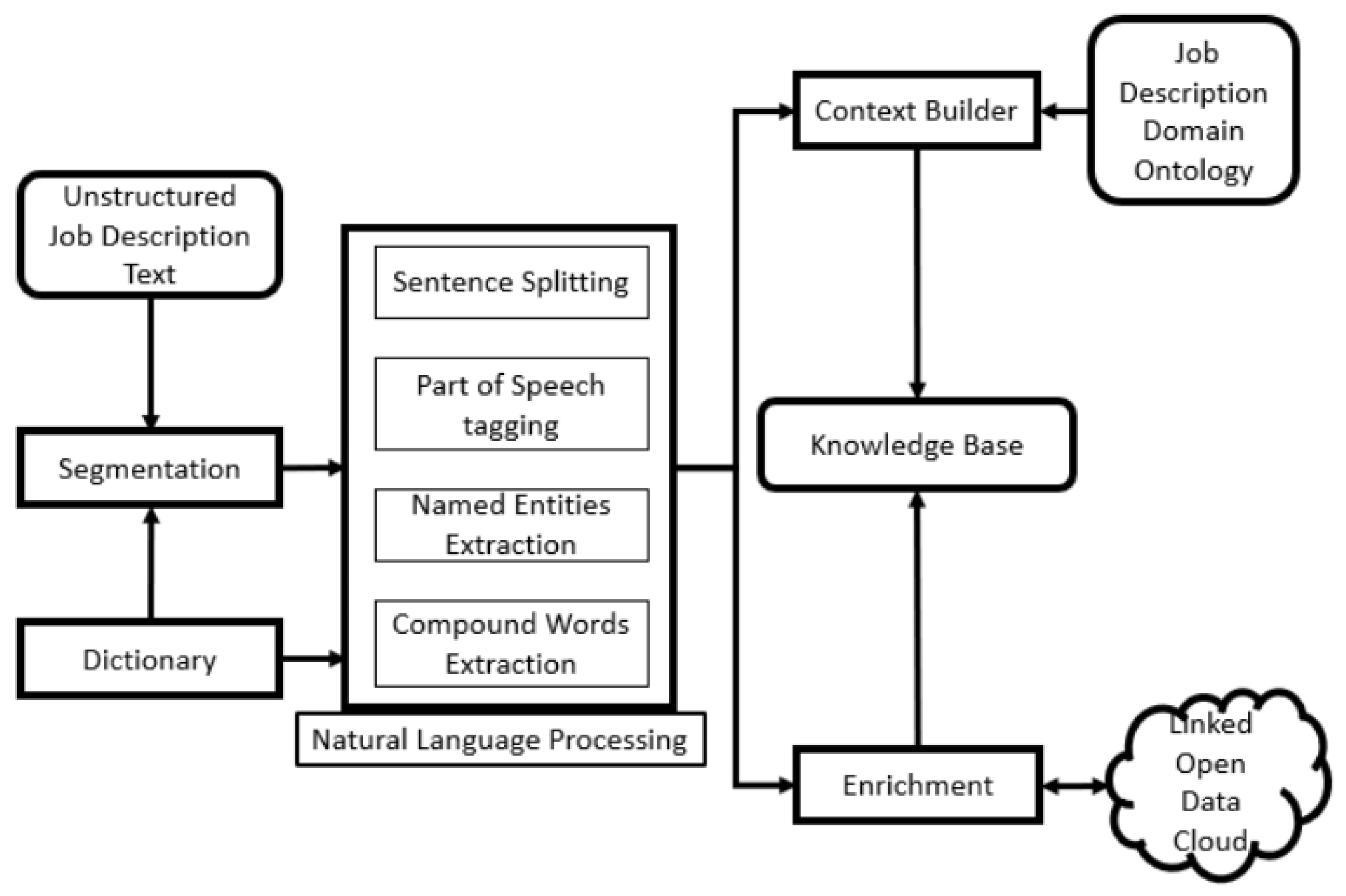

3. The SAJ System

3.1. Dictionary

3.2. Segmentation

3.3. Entity Extraction

3.4. Context Builder

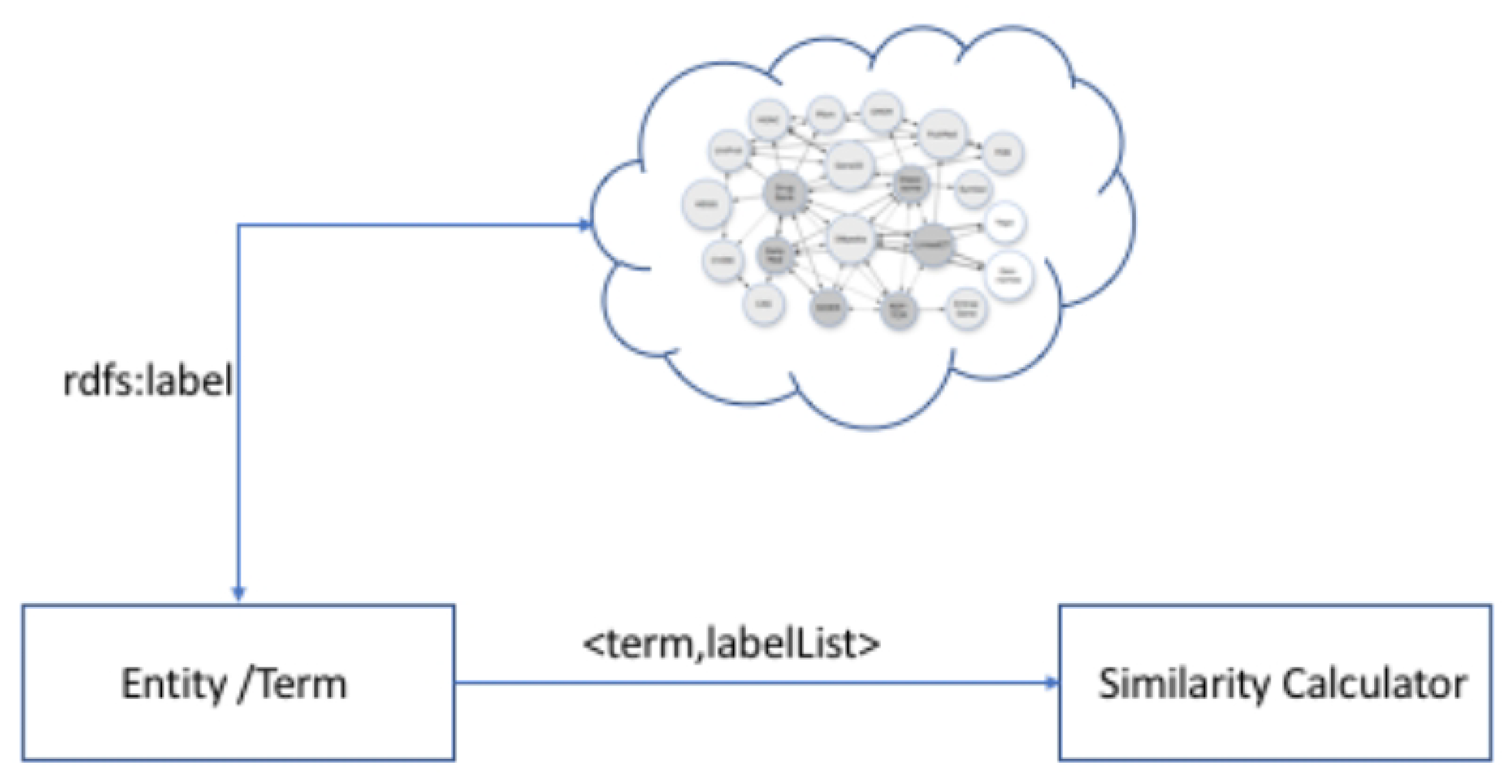

3.5. Enrichment

3.6. Knowledge Base

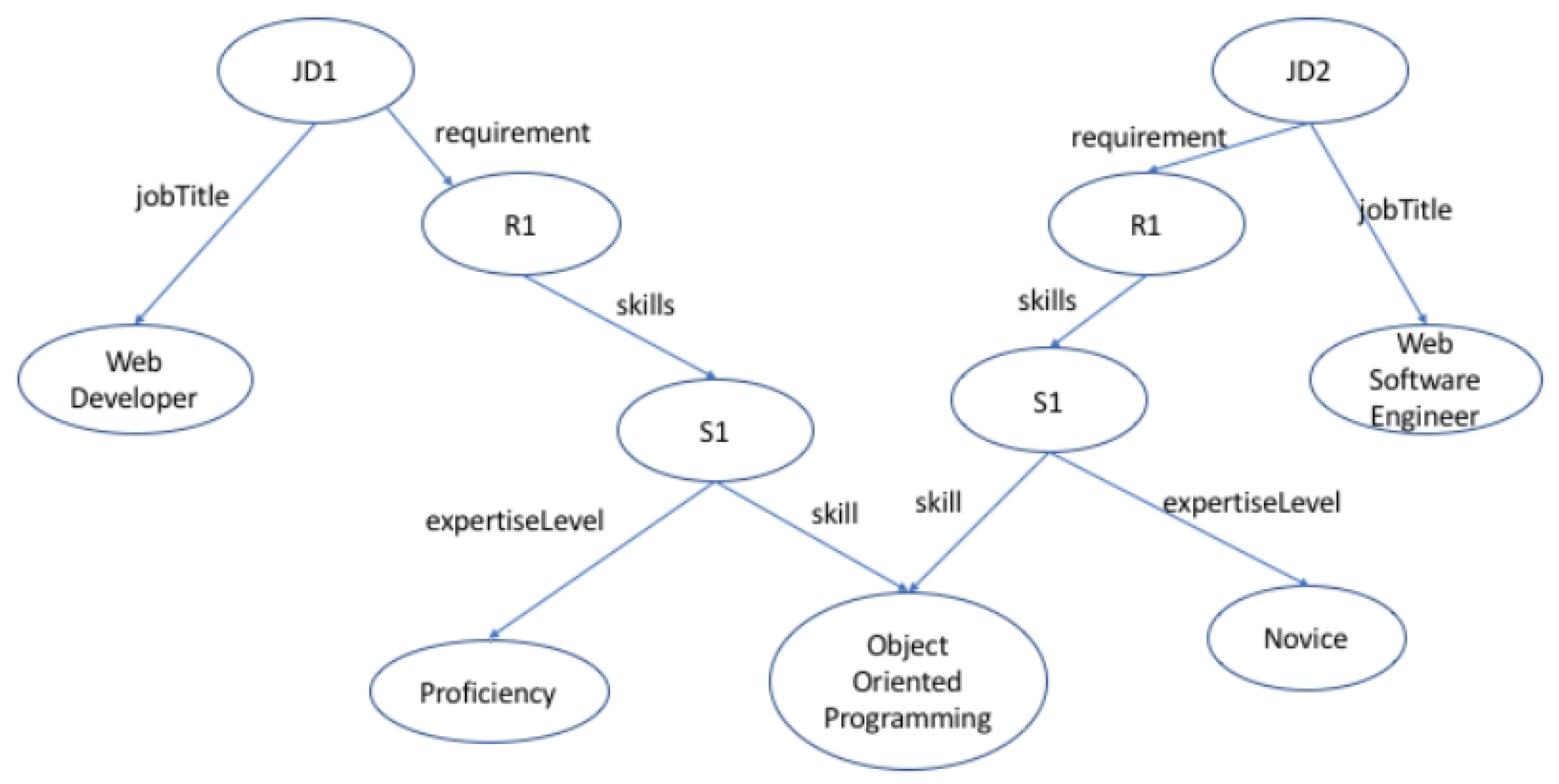

4. Extraction and Enrichment in SAJ

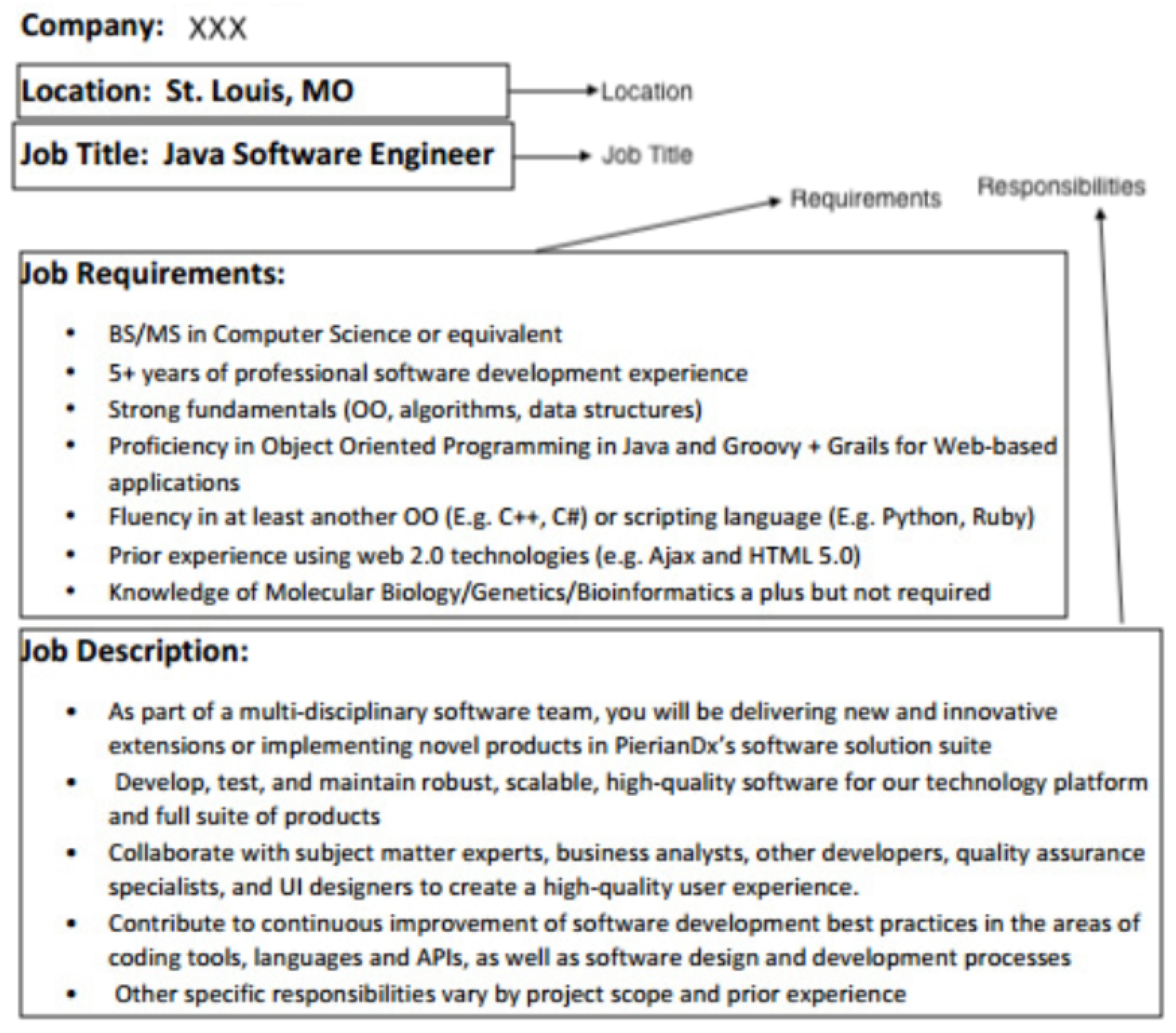

4.1. Basic Information Extraction

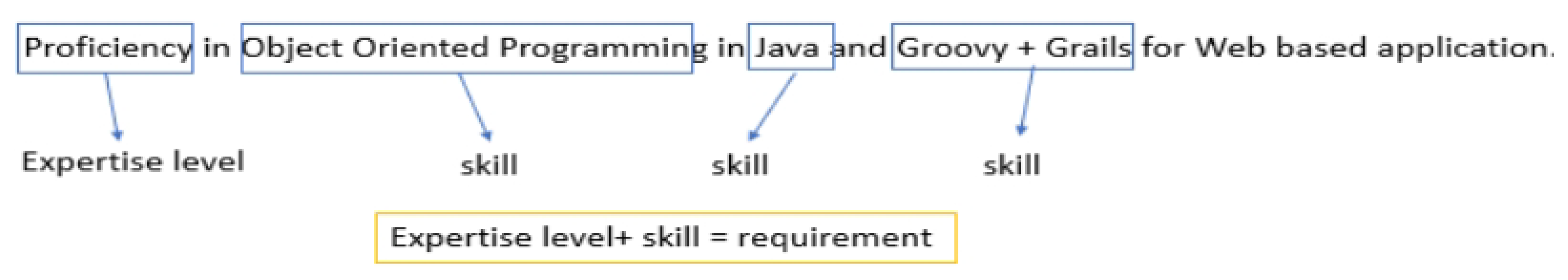

4.2. Requirements Extraction

4.2.1. Responsibilities’ Extraction

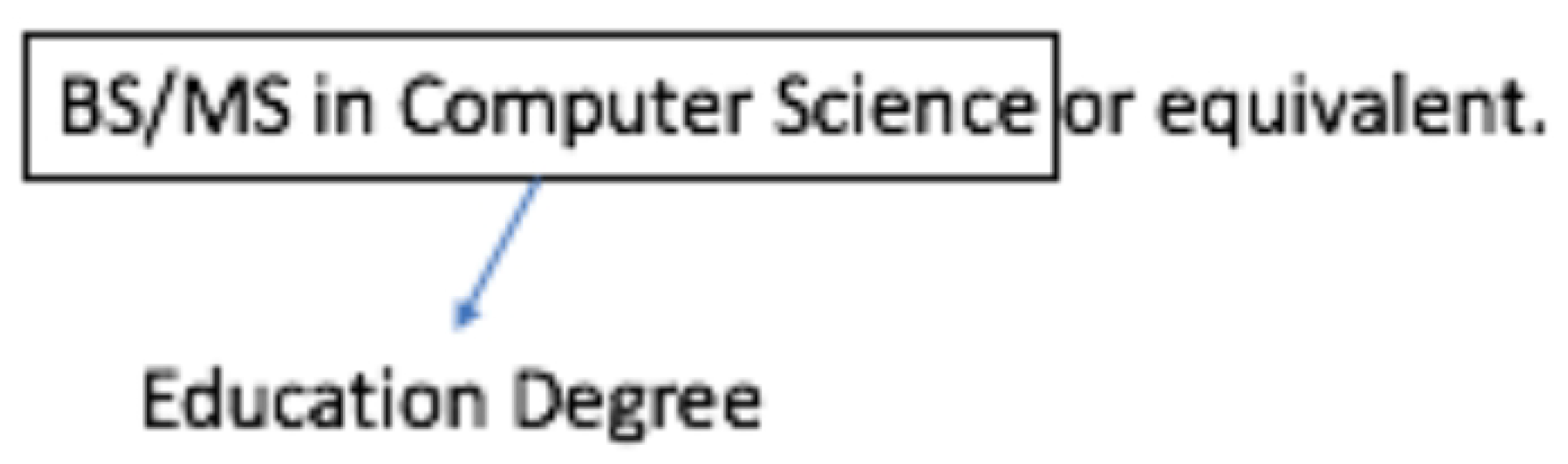

4.2.2. Education Extraction

4.3. Entities Enrichment

5. Evaluation

5.1. Data-Set Acquisition

5.2. Evaluation Metrics

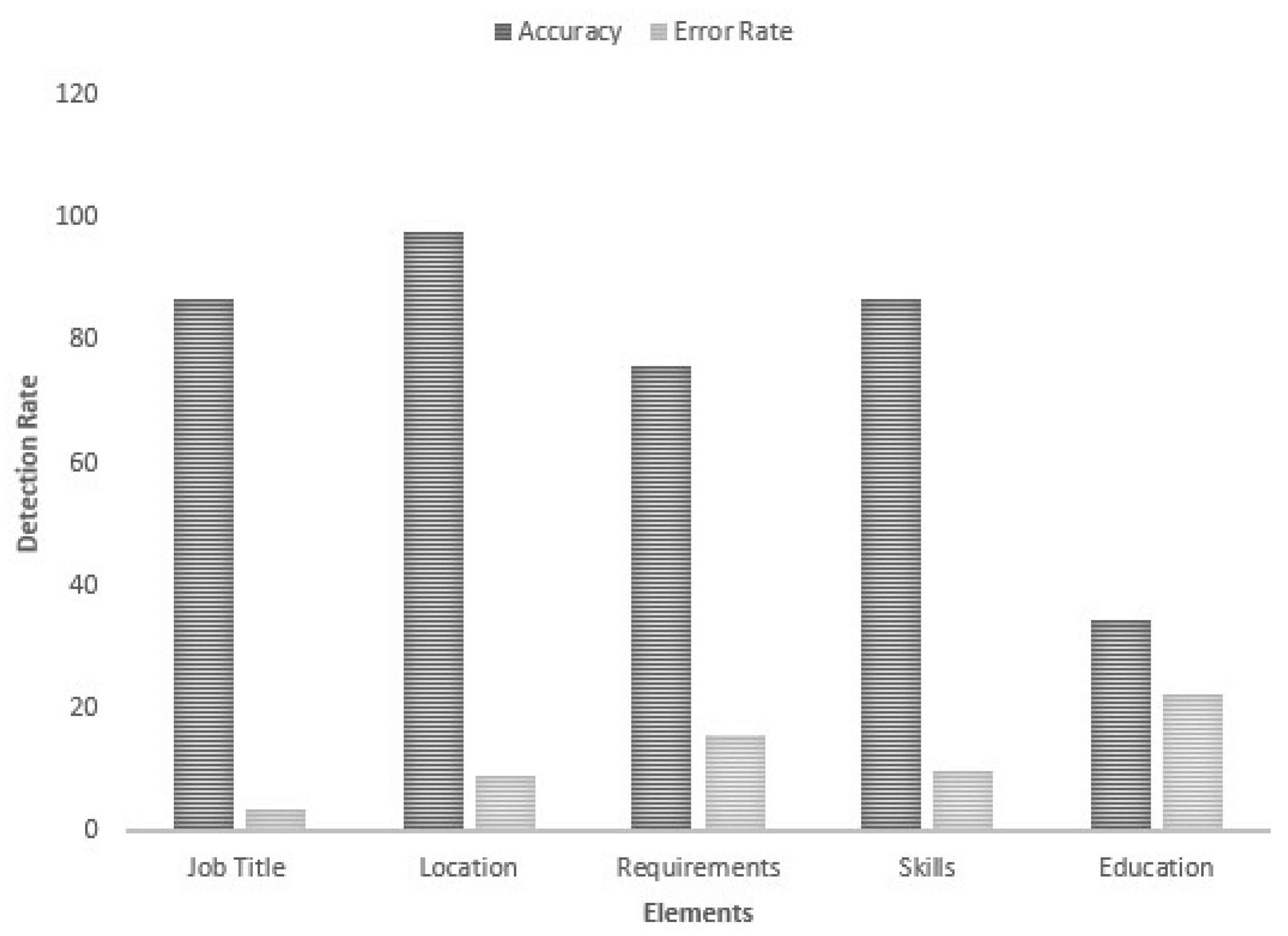

5.3. Evaluation Results

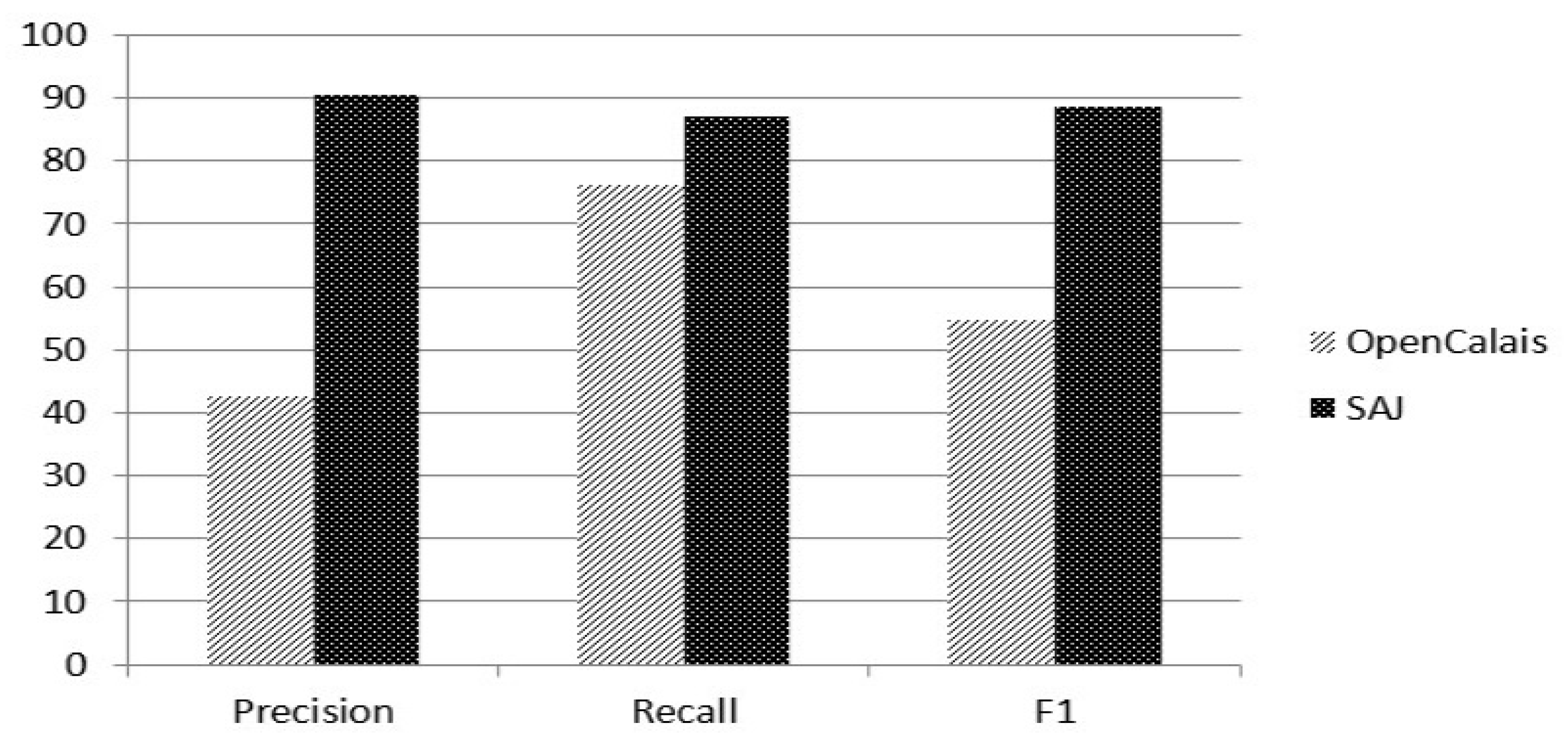

5.4. Comparative Analysis

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| Segmentation Rules | |
|---|---|
| Rule | Description |
| text.sentence.index == 1 | Job title as the first line of text |
| text.sentence.token < 4 | Heading line has no other text |
| Extraction Rule | |
| Rule | Description |
| Rule: expDurationForSkill ({Token.kind==number} {Token.string=="+"} {SpaceToken} ({Token.string=="years"} \| {Token.string=="yrs"})):exp --> :exp.ExpDuration = {rule = "expDurationForSkill"} | The rule detects the experience required for a skill, e.g., "2+ years of experience is required in Java" |
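The two segmentation rules in the table can be illustrated with a minimal Python sketch. It is not the SAJ implementation: it assumes that each line of the job description is one sentence, that a token is a whitespace-separated word, and the function name `segment` and the `preamble` bucket are hypothetical.

```python
from typing import Dict, List, Tuple


def segment(text: str) -> Tuple[str, Dict[str, List[str]]]:
    """Split a job description into a title and heading-keyed segments."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return "", {}

    # Rule 1 (text.sentence.index == 1): the first line is the job title.
    title = lines[0]

    segments: Dict[str, List[str]] = {}
    current = "preamble"  # hypothetical bucket for text before any heading
    for line in lines[1:]:
        # Rule 2 (text.sentence.token < 4): a short line is treated as a heading.
        if len(line.split()) < 4:
            current = line.rstrip(":")
            segments.setdefault(current, [])
        else:
            segments.setdefault(current, []).append(line)
    return title, segments


sample = """Java Software Engineer
Requirements:
2+ years of experience required in Java.
Responsibilities:
Design and maintain backend services for the recruitment portal.
"""
title, segments = segment(sample)
print(title)
print(segments)
```

Keeping the heading text as the dictionary key means later extraction steps can be applied per segment, for example only to the lines stored under `Requirements`.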

| Rules | Description |
|---|---|
| Every compound word contains a noun | |
| A noun followed by a noun: the terms are joined | |
| A noun followed by an adjective: the noun term is saved and the adjective is compared with the next token | |
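One possible reading of the compound-word rules above is sketched below in Python. The sketch assumes the input is already POS-tagged with Penn Treebank tags, and it interprets "compared with the next token" as: a held adjective is kept only if a noun follows it. Both points are assumptions, as is the function name `compound_terms`.

```python
from typing import Iterable, List, Tuple

NOUN_TAGS = {"NN", "NNS", "NNP", "NNPS"}
ADJ_TAGS = {"JJ", "JJR", "JJS"}


def compound_terms(tagged: Iterable[Tuple[str, str]]) -> List[str]:
    """Join adjacent nouns (optionally led by adjectives) into compound terms."""
    terms: List[str] = []
    current: List[str] = []
    has_noun = False
    # A sentinel token guarantees that the last open term is flushed.
    for word, tag in list(tagged) + [("", "END")]:
        if tag in NOUN_TAGS:
            # Noun followed by noun: the terms are joined.
            current.append(word)
            has_noun = True
            continue
        # Any non-noun token closes a term that already contains a noun.
        if has_noun:
            terms.append(" ".join(current))
        # Held adjectives without a noun are dropped unless more adjectives follow.
        if has_noun or tag not in ADJ_TAGS:
            current, has_noun = [], False
        if tag in ADJ_TAGS:
            # Hold the adjective; it is kept only if a noun follows.
            current.append(word)
    return terms


tagged_sentence = [
    ("Java", "NNP"), ("software", "NN"), ("engineer", "NN"), ("with", "IN"),
    ("strong", "JJ"), ("communication", "NN"), ("skills", "NNS"),
]
print(compound_terms(tagged_sentence))
```

Because a term is emitted only once it contains a noun, the first rule (every compound word contains a noun) holds by construction.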

1. 2+ years of experience required in Java.
2. Must have worked at least 2 years in Java development.
3. Experience of 2+ years is required in Java development.
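To see how the `expDurationForSkill` rule behaves on these three sentences, the pattern can be approximated with a Python regular expression. This is an illustrative stand-in for the JAPE rule, not the rule itself; the names `EXP_DURATION` and `experience_durations` are hypothetical.

```python
import re
from typing import List

# Number, a literal "+", one whitespace character, then "years" or "yrs",
# mirroring the token sequence of the expDurationForSkill rule.
EXP_DURATION = re.compile(r"(\d+)\+\s(?:years|yrs)\b")


def experience_durations(sentence: str) -> List[int]:
    """Return the year counts found in expressions such as '2+ years'."""
    return [int(m.group(1)) for m in EXP_DURATION.finditer(sentence)]


examples = [
    "2+ years of experience required in Java.",
    "Must have worked at least 2 years in Java development.",
    "Experience of 2+ years is required in Java development.",
]
for sentence in examples:
    print(experience_durations(sentence), sentence)
```

Under this approximation the first and third sentences match, while the second does not because it has no "+" after the number. Covering it would need an additional pattern.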

| Entity | Example |
|---|---|
| Job Title | Java Software Engineer |
| Location | St. Louis, MO |
| Career Level | Mid-Level |
| Organization | Google, Inc. |

| Rule | Description |
|---|---|
| Rule: requirementboundarymarker Priority: 100 {Lookup.majorType==Req_BeginKeywords} ({SpaceToken})[0,2] ({Token.category==IN} \| {Token.category==TO} \| {Token.category==VBG} \| {Token.category==VB} \| {Token.category==VBZ} \| {Token.category==DT})? ((({SpaceToken})[0,3] ({Token.kind==word, !Lookup.majorType==Req_NotAfterKeywords} \| {Token.kind==symbol} \| {Token.kind==number} \| {Token.kind==punctuation, !Token.string=="."}))+):req --> :req.Requirement = {rule = "requirementboundarymarker"} | The rule marks the boundary of the requirement. It detects the token categories as part-of-speech (POS) tags. The tokens are either verbs (VBZ, VBG, VB), determiners, or prepositions. Besides the POS requirement, the keyword placement in the sentence is verified. |
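The boundary logic of this rule (start at a begin keyword, continue until a period or a "not after" keyword) can be sketched in Python as follows. The keyword sets are invented placeholders, since the contents of `Req_BeginKeywords` and `Req_NotAfterKeywords` are not listed here, and the POS check of the JAPE rule is omitted. Splitting sentences on "." is a simplification.

```python
from typing import List

# Hypothetical stand-ins for the Req_BeginKeywords and Req_NotAfterKeywords dictionaries.
BEGIN_KEYWORDS = {"must", "requires", "required"}
NOT_AFTER_KEYWORDS = {"preferred", "optional"}


def requirement_spans(text: str) -> List[str]:
    """Return spans that start at a begin keyword and stop at a period or stop keyword."""
    spans: List[str] = []
    for sentence in text.split("."):  # a period always ends a requirement
        words = sentence.split()
        lowered = [w.lower() for w in words]
        for i, word in enumerate(lowered):
            if word in BEGIN_KEYWORDS:
                j = i + 1
                while j < len(words) and lowered[j] not in NOT_AFTER_KEYWORDS:
                    j += 1
                if j > i + 1:  # at least one token must follow the keyword
                    spans.append(" ".join(words[i:j]))
                break  # one requirement span per sentence in this sketch
    return spans


text = "Candidates must have 3 years of Java experience. Python is optional."
print(requirement_spans(text))
```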

| Rule | Description |
|---|---|
| Phase: requirementSubParts Input: RequirementsBeg Token RequirementsNot RequirementsMid RequirementsEnd Skill Split ToolsAndTechnology OperatingSystem Database Course TechnicalLanguage Protocol ExpertiseLevel MandatoryConditionTrue MandatoryConditionFalse ExpDuration Options: control = appelt Rule: requirementSubPartsStart Priority: 50 {RequirementsBeg} (((({Token})* \| {Skill} \| {ToolsAndTechnology} \| {OperatingSystem} \| {Database} \| {Course} \| {TechnicalLanguage} \| {Protocol} \| {ExpertiseLevel} \| {MandatoryConditionTrue} \| {MandatoryConditionFalse} \| {ExpDuration} \| {ExpertiseLevel}))+ {Split}):req --> :req.Requirements = {rule = "requirementSubPartsStart"} | The rule uses various dictionaries (ToolsAndTechnology, OperatingSystem, Database, Course, TechnicalLanguage, and others) to detect the entities. Besides these entities, the rule also detects the experience duration and the expertise level. The rule is dynamic, i.e., the placement of these entities in the sentence does not affect the rule. |
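The dictionary-driven part of this rule can be illustrated with a small Python lookup. The dictionary names follow the rule, but their entries below are invented examples, and the function name `annotate` is hypothetical. The sketch only handles single-word terms.

```python
from typing import Dict, List, Set, Tuple

# Dictionary names come from the rule; the entries are illustrative assumptions.
DICTIONARIES: Dict[str, Set[str]] = {
    "TechnicalLanguage": {"java", "python"},
    "Database": {"mysql", "oracle"},
    "OperatingSystem": {"linux", "windows"},
}


def annotate(requirement: str) -> List[Tuple[str, str]]:
    """Tag each word of a requirement with the dictionary it appears in."""
    found: List[Tuple[str, str]] = []
    for raw in requirement.split():
        surface = raw.strip(".,;:()")
        for entity_type, terms in DICTIONARIES.items():
            if surface.lower() in terms:
                found.append((entity_type, surface))
    return found


print(annotate("Experience with Java and MySQL on Linux."))
print(annotate("Linux, MySQL and Java"))
```

Because every word is checked against every dictionary, the order in which the entities appear in the sentence does not matter, which mirrors the "dynamic" property described for the rule.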

| Rule | Description |
|---|---|
| Phase: Responsibility Input: Lookup Token SpaceToken Options: control = appelt debug = true Rule: keywordResponsibility Priority: 10 {Lookup.majorType==Responsibilty_BeginKeywords} ({SpaceToken})[0,2] ({Token.category==IN} \| {Token.category==TO} \| {Token.category==VBG} \| {Token.category==VB} \| {Token.category==DT} \| {Token.category==NN} \| {Token.category==NNS})? ((({SpaceToken})[0,3] ({Token.kind==word, !Lookup.majorType==Res_NotAfterKeywords} \| {Token.kind==symbol} \| {Token.kind==number} \| {Token.kind==punctuation, !Token.string=="."}))*):req --> :req.Responsibility = {rule = "keywordResponsibility"} | The rule marks the boundary of the responsibility. It detects the token categories as POS tags. The tokens are either verbs (VBZ, VBG, VB), determiners, or prepositions. Besides the POS requirement, keyword placement in the sentence is verified. |

| Rule | Description |
|---|---|
| Rule: degreeextractioninfull Priority: 40 ({Degree} {Token.string=="in"} ({Token.category==NNP, !Lookup.majorType==date})+ {Token.string=="and"} ({Token.category==NNP, !Degree})+):Degree --> :Degree.FullDegree = {rule = "degreeextractioninfull"} | The rule extracts the educational requirement categorized as Degree. It uses the POS tag (NNP) and the degree dictionaries. The rule also verifies the existence of the token "in". |
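The shape of this rule (a degree term, the token "in", one or more proper nouns, "and", one or more proper nouns) can be approximated in Python. The sketch assumes that a capitalised word stands in for an NNP token and uses an invented degree list. It does not reproduce the date and degree exclusions of the JAPE rule.

```python
import re
from typing import Optional

DEGREES = ["Bachelor", "Master", "PhD", "BS", "MS"]  # hypothetical dictionary entries
PROPER = r"[A-Z][a-z]+"  # assumption: a capitalised word approximates an NNP token

FULL_DEGREE = re.compile(
    r"\b(?:{deg})\sin\s{nnp}(?:\s{nnp})*\sand\s{nnp}(?:\s{nnp})*".format(
        deg="|".join(DEGREES), nnp=PROPER
    )
)


def full_degree(sentence: str) -> Optional[str]:
    """Return the first 'Degree in X and Y' expression, or None if absent."""
    match = FULL_DEGREE.search(sentence)
    return match.group(0) if match else None


sentence = (
    "Applicants need a Master in Computer Science and Software Engineering "
    "from a recognised university."
)
print(full_degree(sentence))
```

As in the rule, a degree with a single field ("Master in Computer Science") is not matched by this pattern, because the "and" token is mandatory.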

| Source | Number of Job Descriptions |
|---|---|
| Personforce.com (https://www.personforce.com/) | 101 |
| DBWorld (https://research.cs.wisc.edu/dbworld/browse.html) | 139 |
| Indeed.com (https://www.indeed.com.pk/?r=us) | 620 |
| Total | 860 |

| Job Category | Count |
|---|---|
| Engineering and Technical Services | 55 |
| Business Operations | 20 |
| Computer and Information Technology | 125 |
| Internet | 73 |
| Project Management | 85 |
| Health-care and Safety | 9 |
| Arts, Design and Entertainment | 26 |
| Sales and Marketing | 38 |
| Office Support and Administrative | 203 |
| Architecture and Engineering | 10 |
| Construction and Production | 9 |
| Customer Care | 21 |
| Management and Executive | 22 |
| Financial Services | 9 |
| Government and Policy | 6 |
| Post-doctoral | 45 |
| Research and Teaching | 66 |
| Others | 38 |
| Total | 860 |

| No. | Entity Type | Precision (%) | Recall (%) | F-1 (%) |
|---|---|---|---|---|
| 1 | Requirements | 90.5 | 87.90 | 88.76 |
| 2 | Responsibilities | 76.14 | 75.00 | 75.76 |
| 3 | Education | 38 | 100 | 93.60 |
| 4 | Job Title | 100 | 90.67 | 95.00 |
| 5 | Job Category | 79.24 | 97.67 | 87.50 |
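For readers unfamiliar with the three measures in the table, the sketch below computes them under the standard definitions, with F-1 as the harmonic mean of precision and recall. Whether the reported figures were computed or averaged in exactly this way is an assumption, and the counts in the example are made up for illustration, not taken from the evaluation.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall and F-1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1


# Hypothetical counts: 8 correct extractions, 2 spurious, 2 missed.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
print(f"precision={p:.2%} recall={r:.2%} f1={f1:.2%}")
```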

| Category | Manual | System Retrieved |
|---|---|---|
| Job Titles | 25 | 25 |
| Requirements | 33 | 33 |
| Career Level | 45 | 45 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ahmed Awan, M.N.; Khan, S.; Latif, K.; Khattak, A.M. A New Approach to Information Extraction in User-Centric E-Recruitment Systems. Appl. Sci. 2019, 9, 2852. https://doi.org/10.3390/app9142852
