Global Maximum Power Point Tracking of PV Systems under Partial Shading Condition: A Transfer Reinforcement Learning Approach

Abstract

1. Introduction

- High convergence randomness: Unlike deterministic optimization algorithms, meta-heuristic algorithms rely on random search mechanisms, so the final solution can differ from run to run. This causes large fluctuations in the output power, which is undesirable for the operation of PV systems;

- Difficulty in balancing optimum quality and computation time: To obtain a high-quality optimum, meta-heuristic algorithms usually need a large initial population and many iterations, which results in a heavy computational burden and a long computing time. However, because the MPPT control cycle is extremely short, the population size and the number of iterations must be reduced, which significantly degrades the quality of the optimum.
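Both drawbacks can be illustrated with a toy experiment. The sketch below is not one of the algorithms compared in this paper: it uses a plain uniform random search as the simplest stand-in for a stochastic optimizer, and the three-peak power curve `pv_power`, its peak positions, and the evaluation budgets are all hypothetical values chosen only for illustration.

```python
import math
import random


def pv_power(duty):
    """Hypothetical multi-peak power curve (W) over a duty cycle in [0, 1].

    The peak centres and heights are invented; they only mimic the shape of a
    P-V characteristic with several local maxima under partial shading.
    """
    peaks = [(0.25, 30.0), (0.55, 50.0), (0.80, 40.0)]  # (centre, height)
    return max(h * math.exp(-((duty - c) / 0.08) ** 2) for c, h in peaks)


def random_search(seed, evaluations):
    """Return the best power found by uniform random sampling."""
    rng = random.Random(seed)
    return max(pv_power(rng.uniform(0.0, 1.0)) for _ in range(evaluations))


if __name__ == "__main__":
    for budget in (5, 200):
        results = [random_search(seed, budget) for seed in range(5)]
        spread = max(results) - min(results)
        print(f"budget={budget:3d}  best powers={[round(p, 2) for p in results]}"
              f"  spread={spread:.2f} W")
```

With the same seed, the larger budget can never return a worse result than the smaller one, because its samples include those of the smaller budget. The price is more evaluations per control cycle, which is the trade-off described in the second item above.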

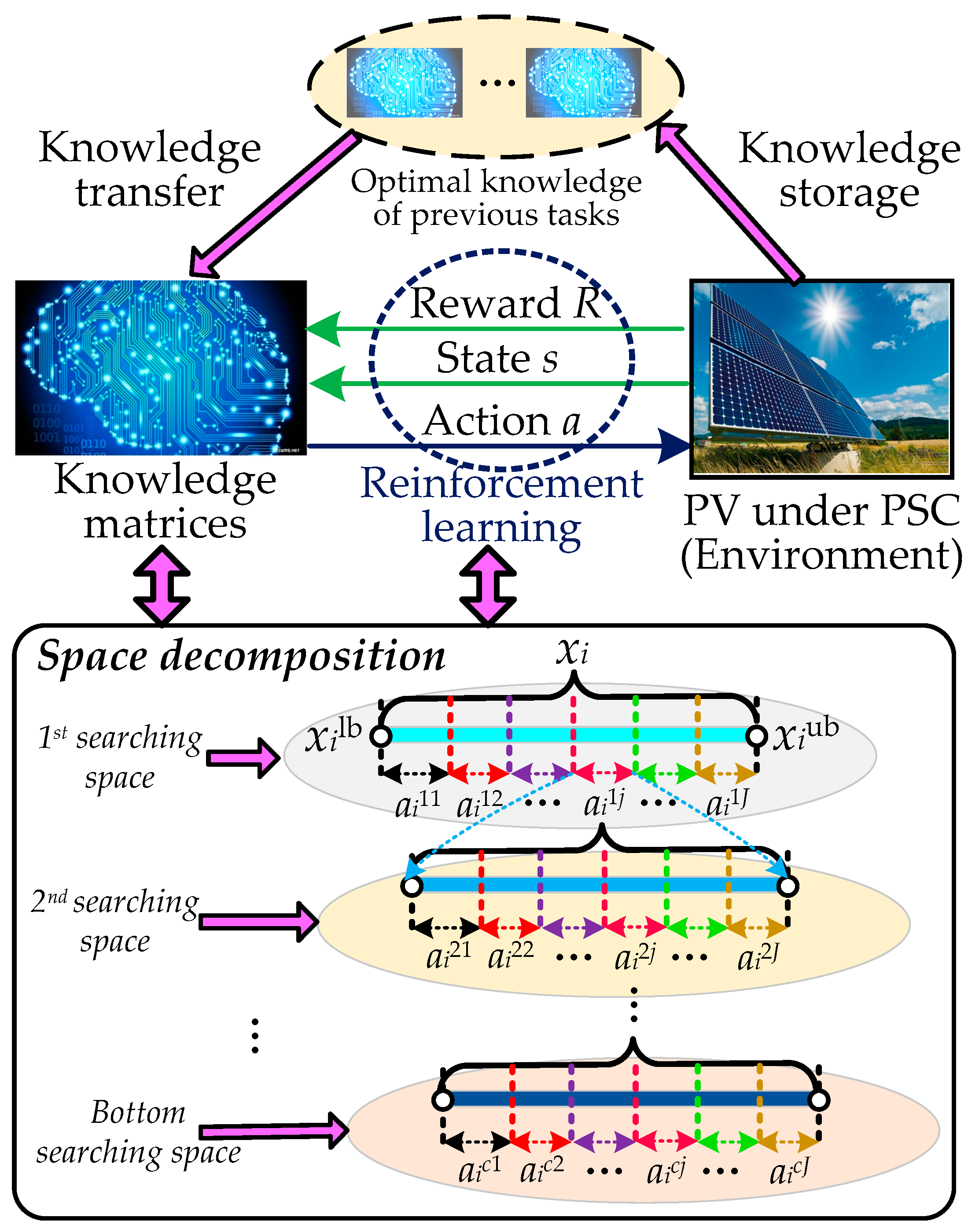

- Capability of knowledge transfer: Through positive knowledge transfer from past optimization tasks, TRL can approximate the optimal knowledge matrices of a new optimization task, and can therefore efficiently obtain a high-quality optimum;

- Capability of online learning: Based on RL, TRL continuously learns new knowledge from its interactions with the environment, so it can rapidly adapt the MPPT to different solar irradiation, temperatures, and PSC.
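The two capabilities above can be sketched with a generic tabular Q-learning update and a warm start from earlier tasks. This is a minimal illustration under stated assumptions, not the formulation used in this paper: the weighted average in `transfer_init`, the function names, and the numbers in the usage lines are all hypothetical, and the space decomposition of the knowledge matrices is not modelled.

```python
def q_update(q, state, action, reward, next_state, alpha, gamma):
    """One standard tabular Q-learning step (online learning).

    q is a list of rows, one per state, each holding one value per action.
    alpha is the learning rate and gamma the discount factor.
    """
    target = reward + gamma * max(q[next_state])
    q[state][action] += alpha * (target - q[state][action])


def transfer_init(source_tables, weights):
    """Warm-start a new task from past tasks (knowledge transfer).

    Assumption for illustration: the initial table is a weighted average of
    the tables learned on earlier tasks, with weights that sum to one.
    """
    n_states = len(source_tables[0])
    n_actions = len(source_tables[0][0])
    return [
        [sum(w * table[s][a] for table, w in zip(source_tables, weights))
         for a in range(n_actions)]
        for s in range(n_states)
    ]


if __name__ == "__main__":
    # Two made-up source tasks with 2 states and 2 actions each.
    past_a = [[1.0, 3.0], [0.0, 2.0]]
    past_b = [[3.0, 5.0], [2.0, 4.0]]
    q = transfer_init([past_a, past_b], weights=[0.5, 0.5])
    q_update(q, state=0, action=1, reward=1.0, next_state=1,
             alpha=0.5, gamma=0.9)
    print(q)
```

Starting from a transferred table rather than from zeros means that the first greedy actions in the new task are already informed by earlier tasks, while the update rule keeps refining the table as new rewards arrive.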

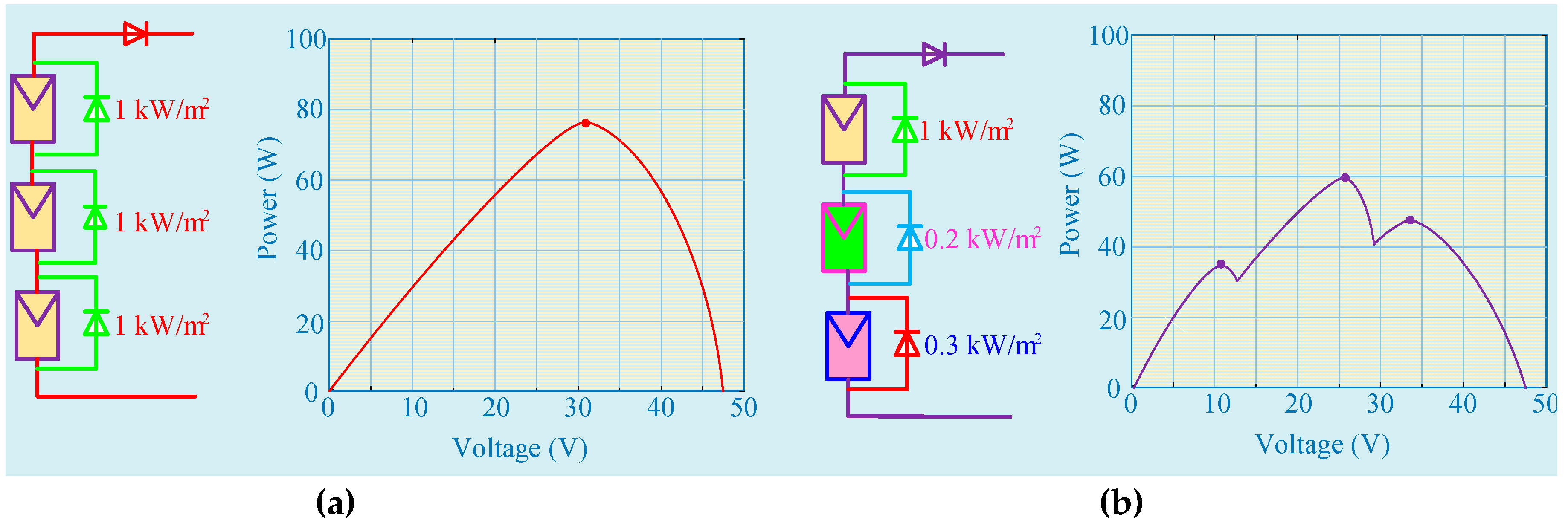

2. Modelling of PV Systems under PSC

2.1. PV Cell Model

2.2. PSC Effect

3. Transfer Reinforcement Learning with Space Decomposition

3.1. Space Decomposition Based Reinforcement Learning

3.2. Knowledge Update

3.3. Exploration and Exploitation

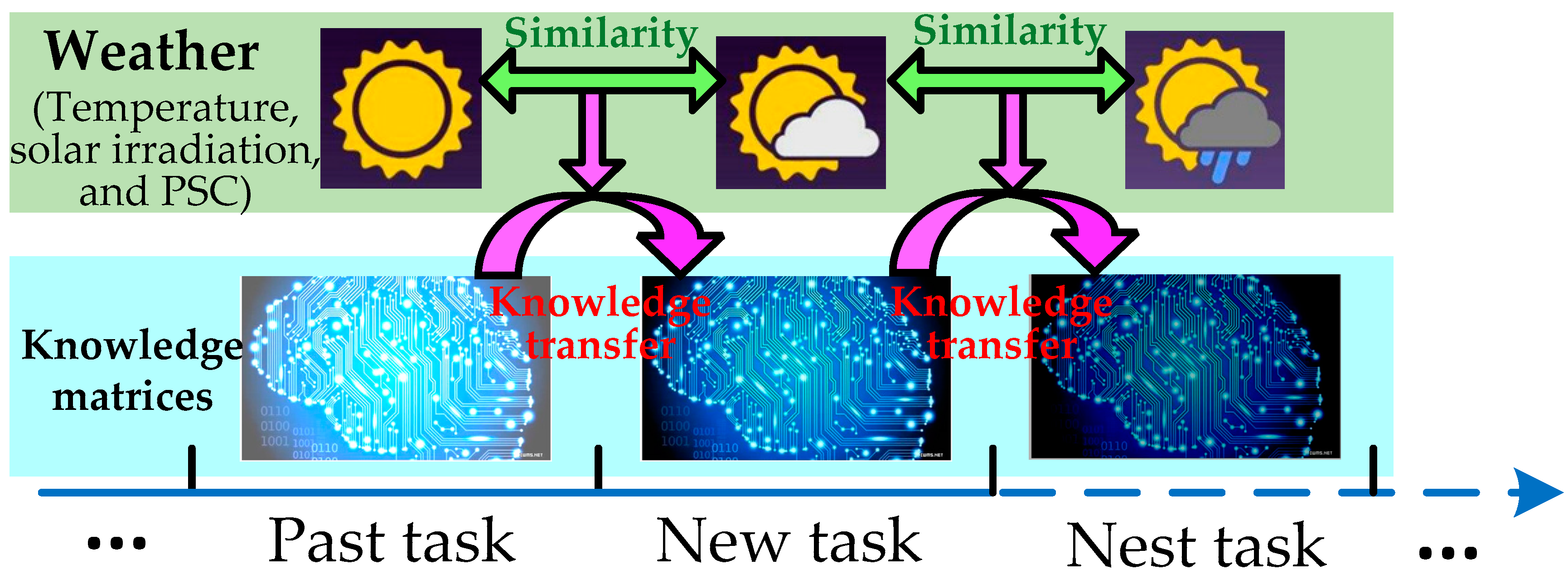

3.4. Knowledge Transfer

4. TRL Design of PV Systems for MPPT

4.1. Control Variable and Action Space

4.2. Reward Function

4.3. Knowledge Transfer

4.4. Overall Execution Procedure

5. Case Studies

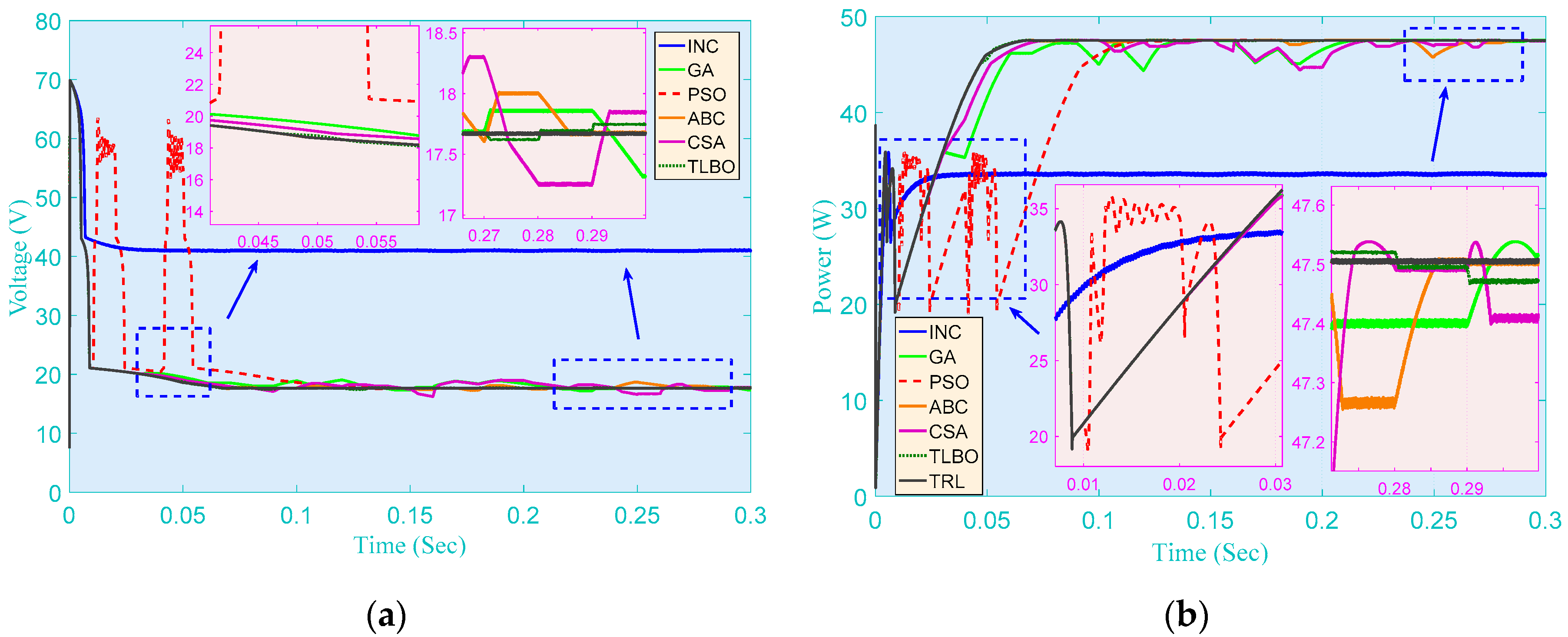

5.1. Start-Up Test

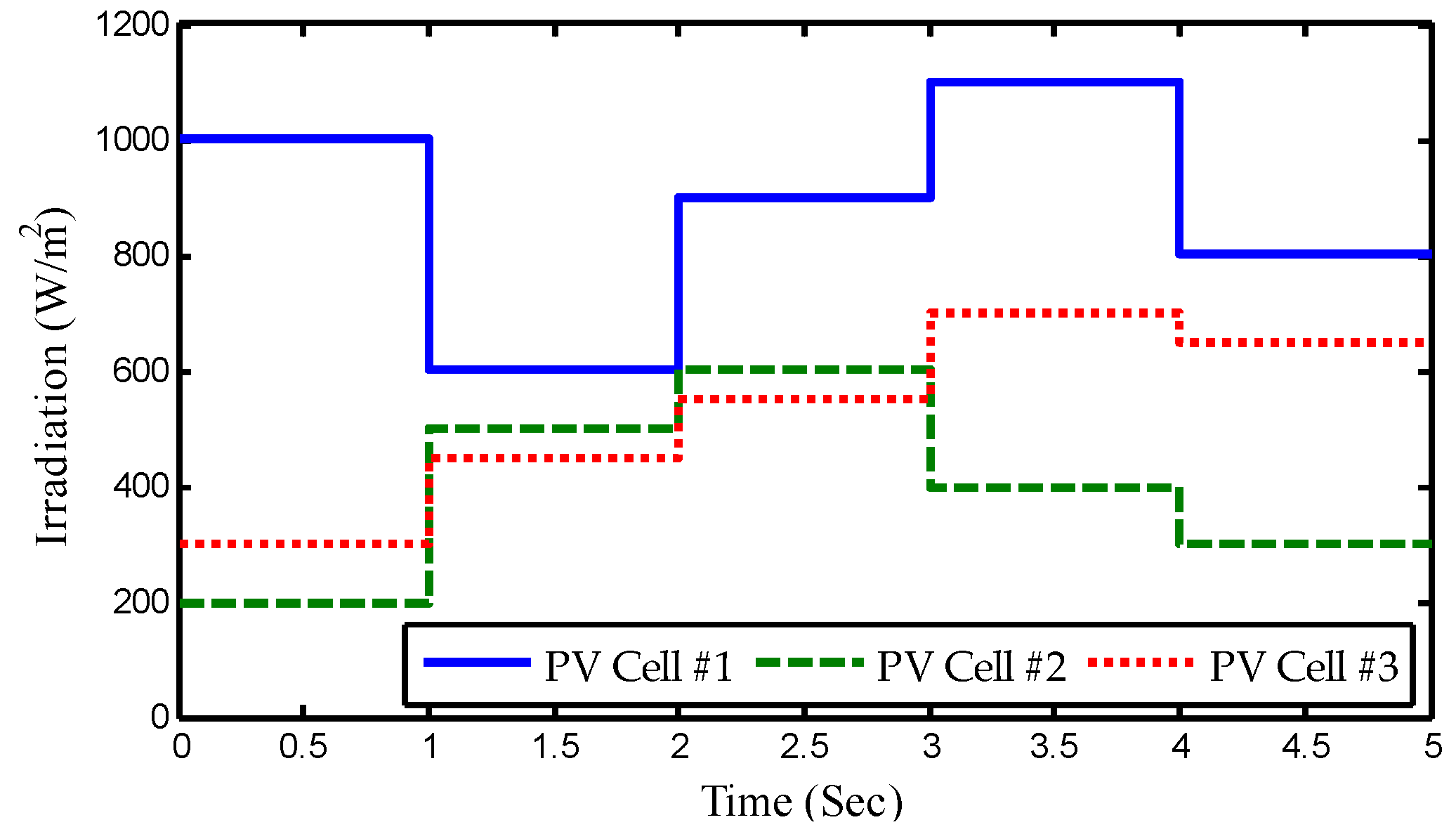

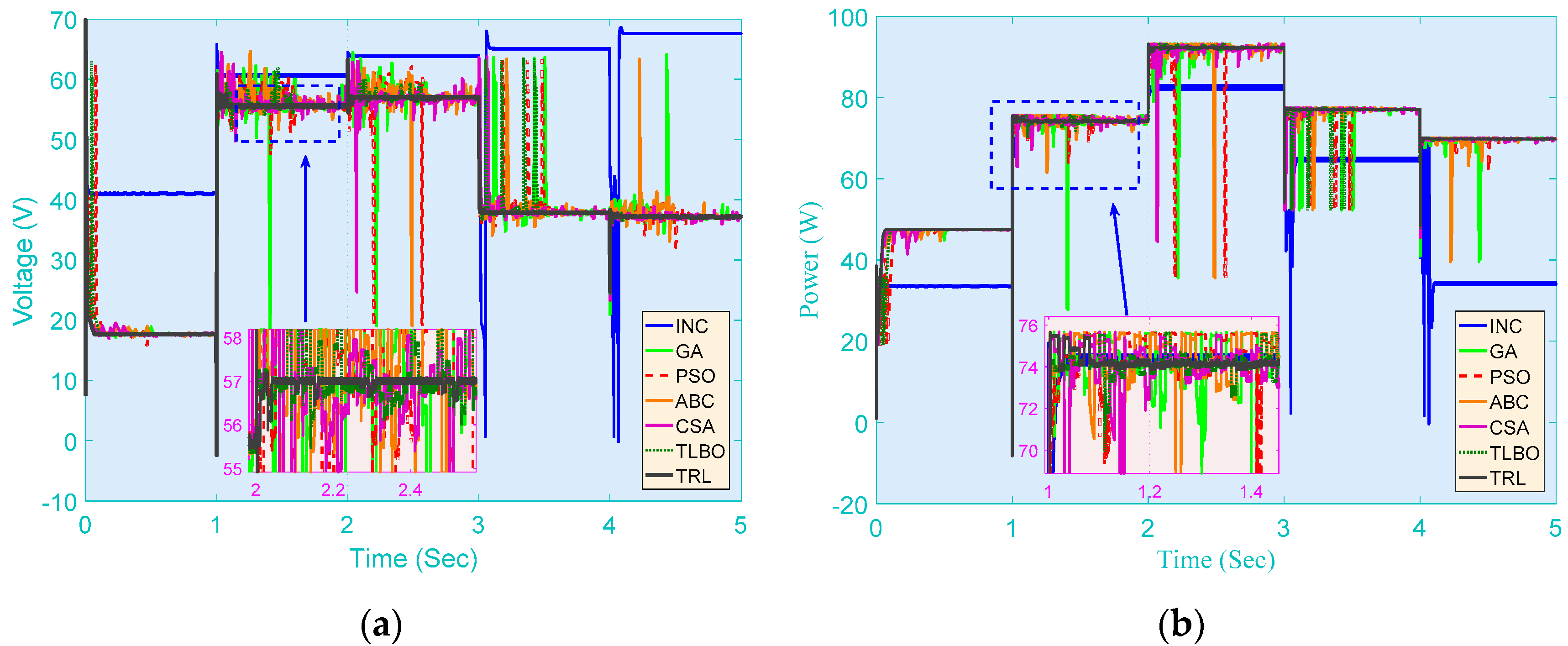

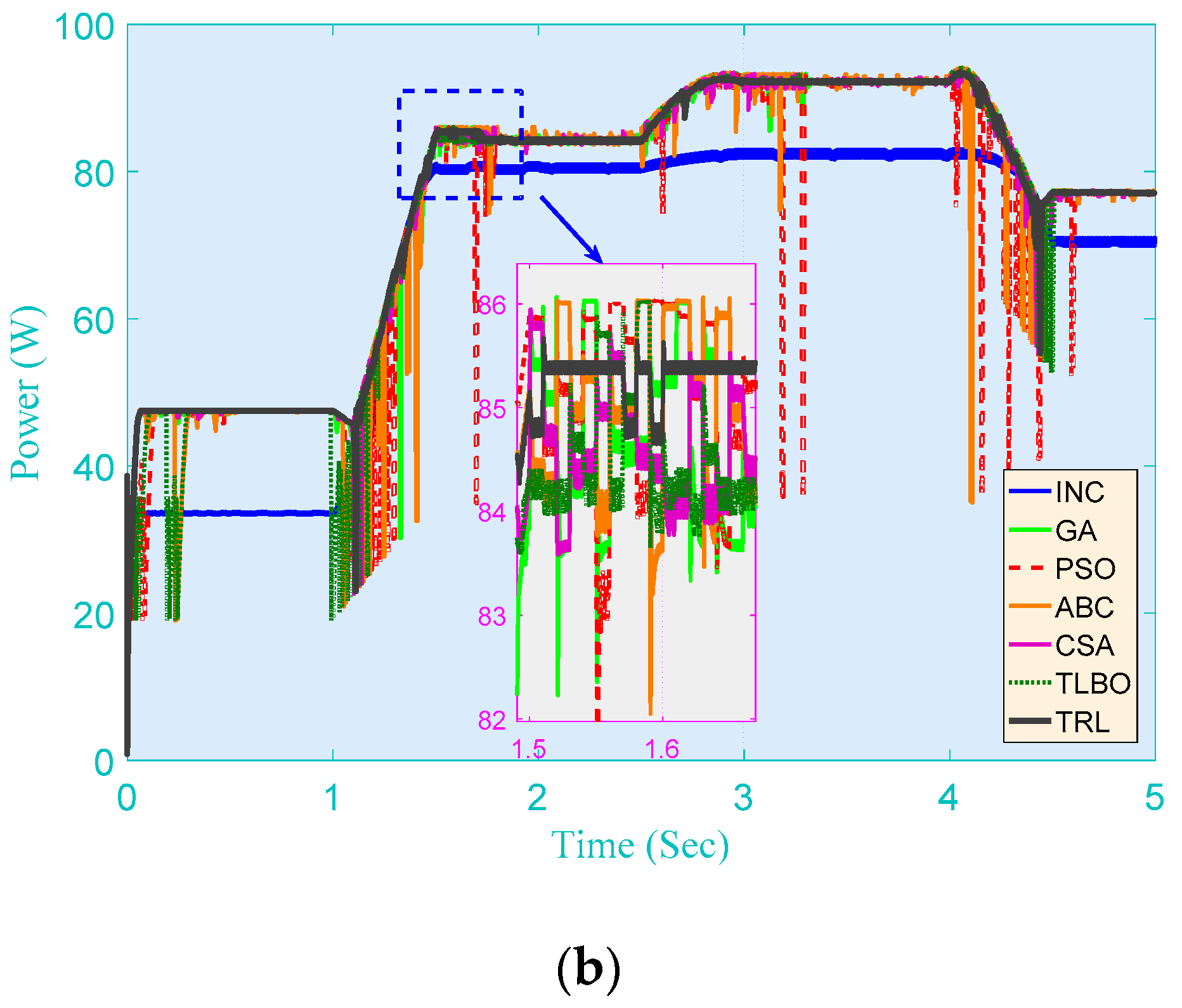

5.2. Step Change in Solar Irradiation with Constant Temperature

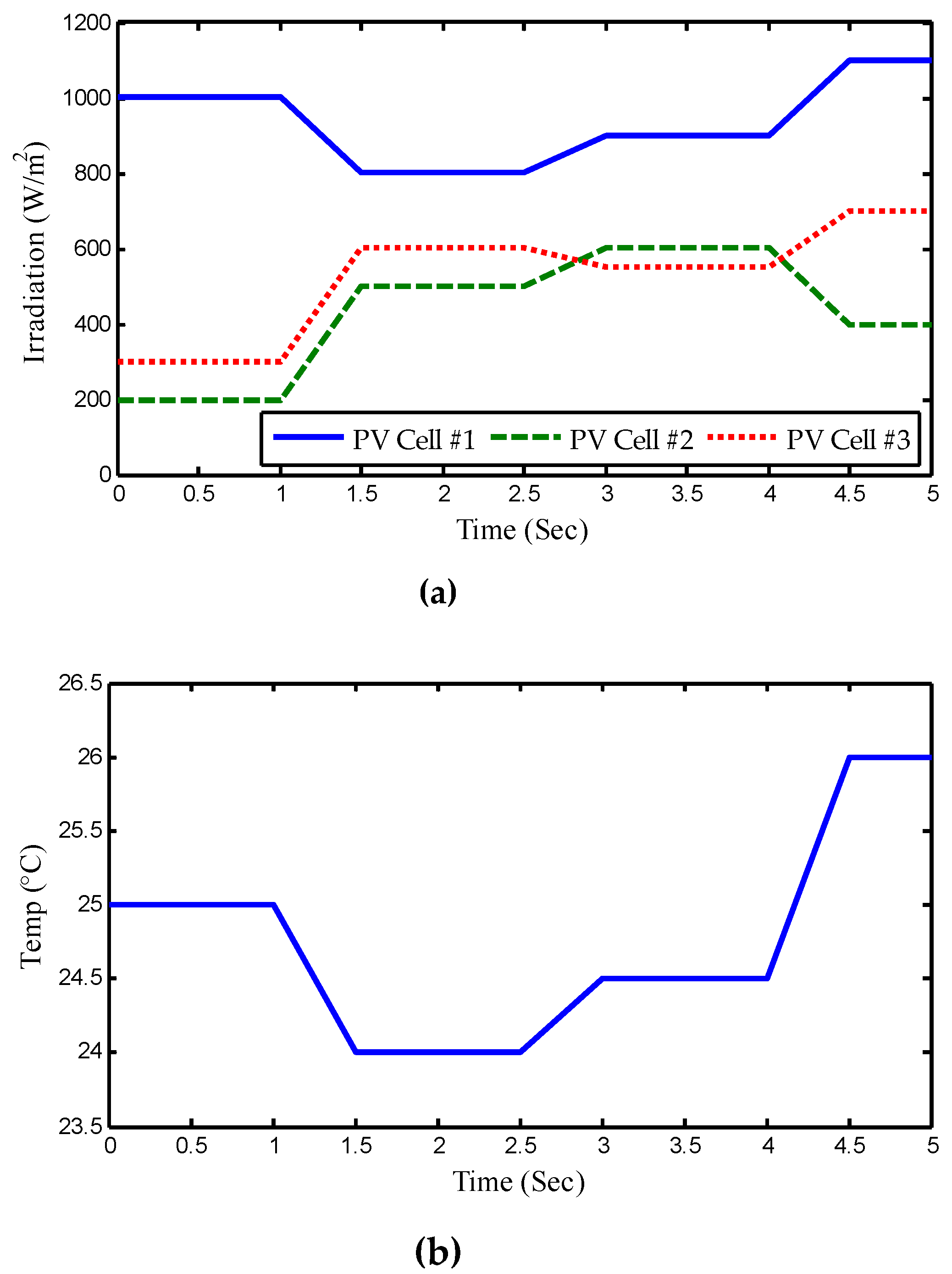

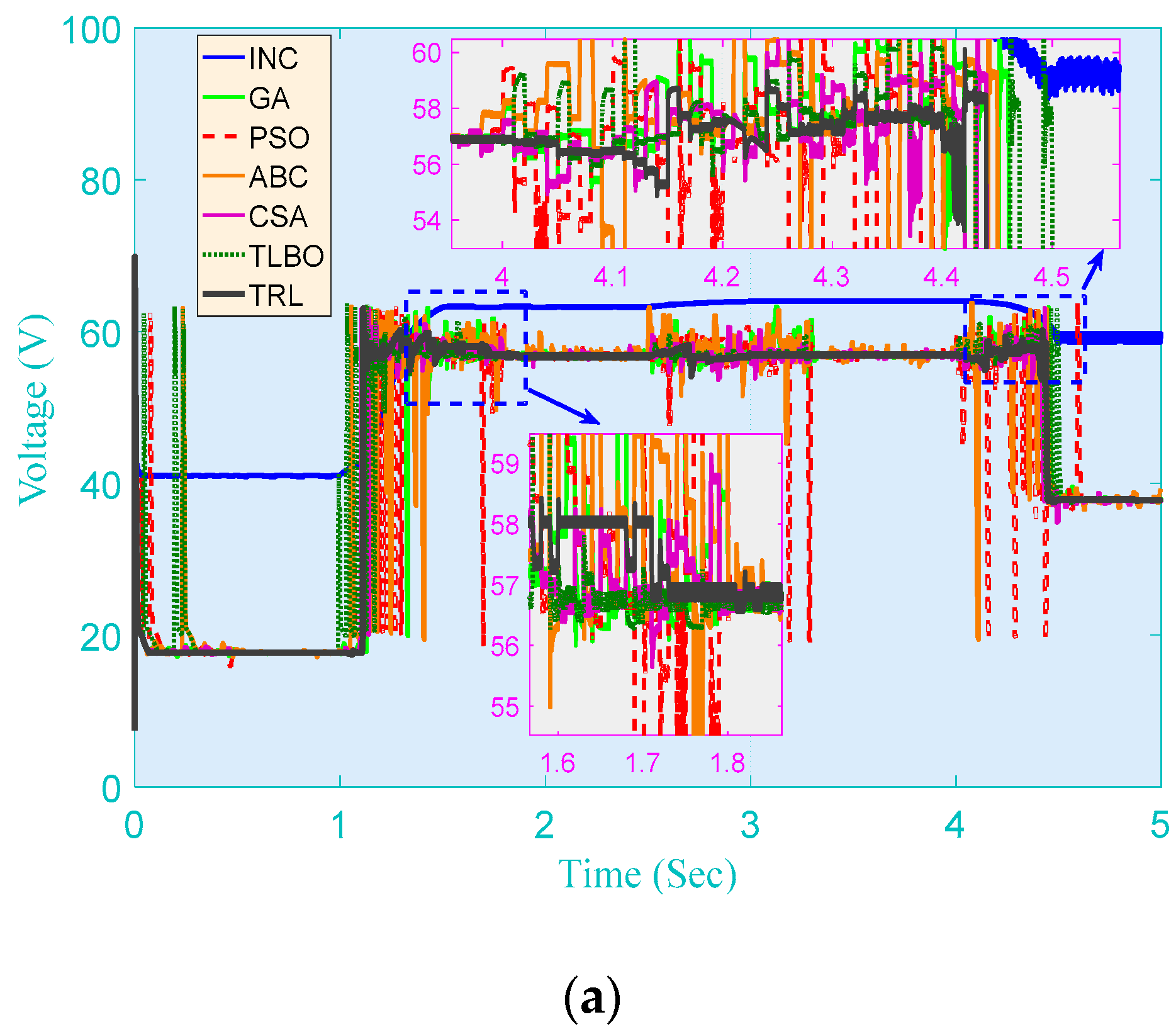

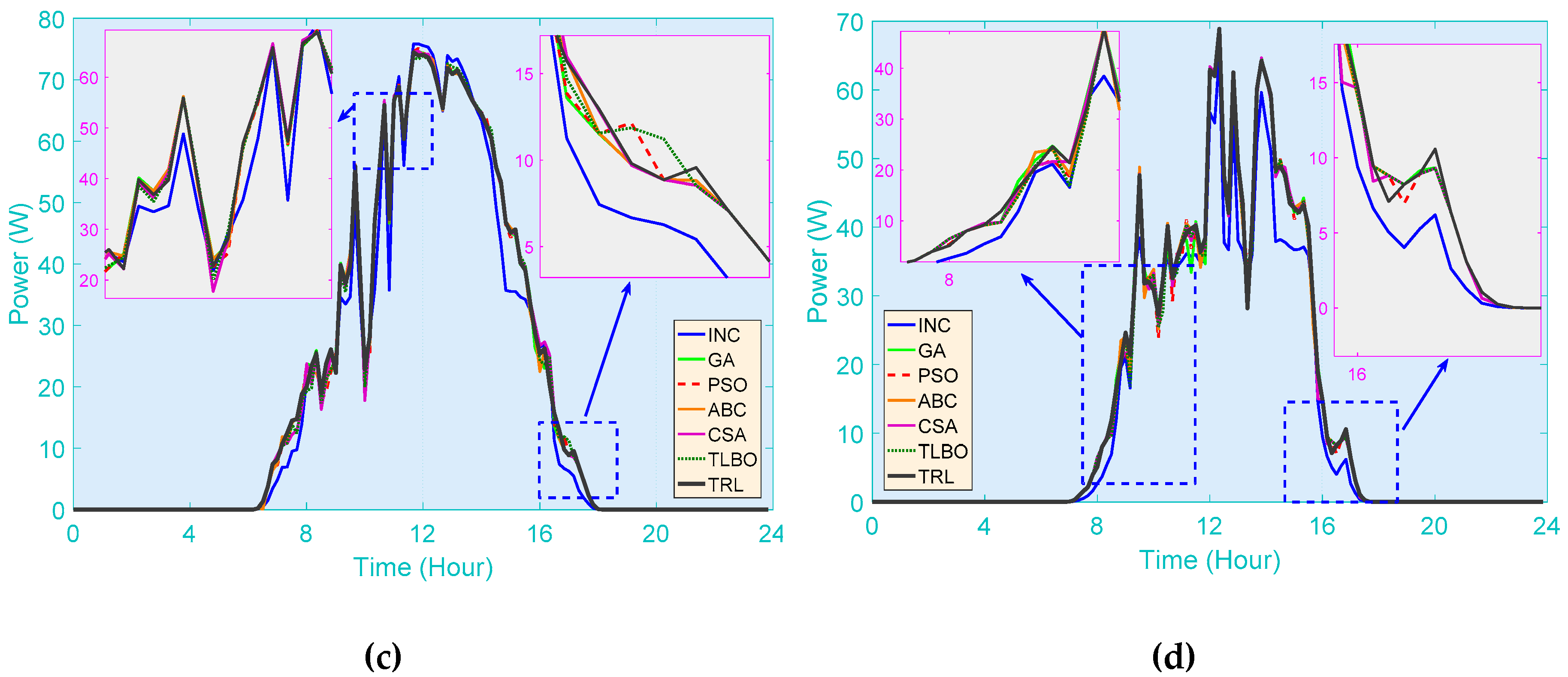

5.3. Gradual Change in Both Solar Irradiation and Temperature

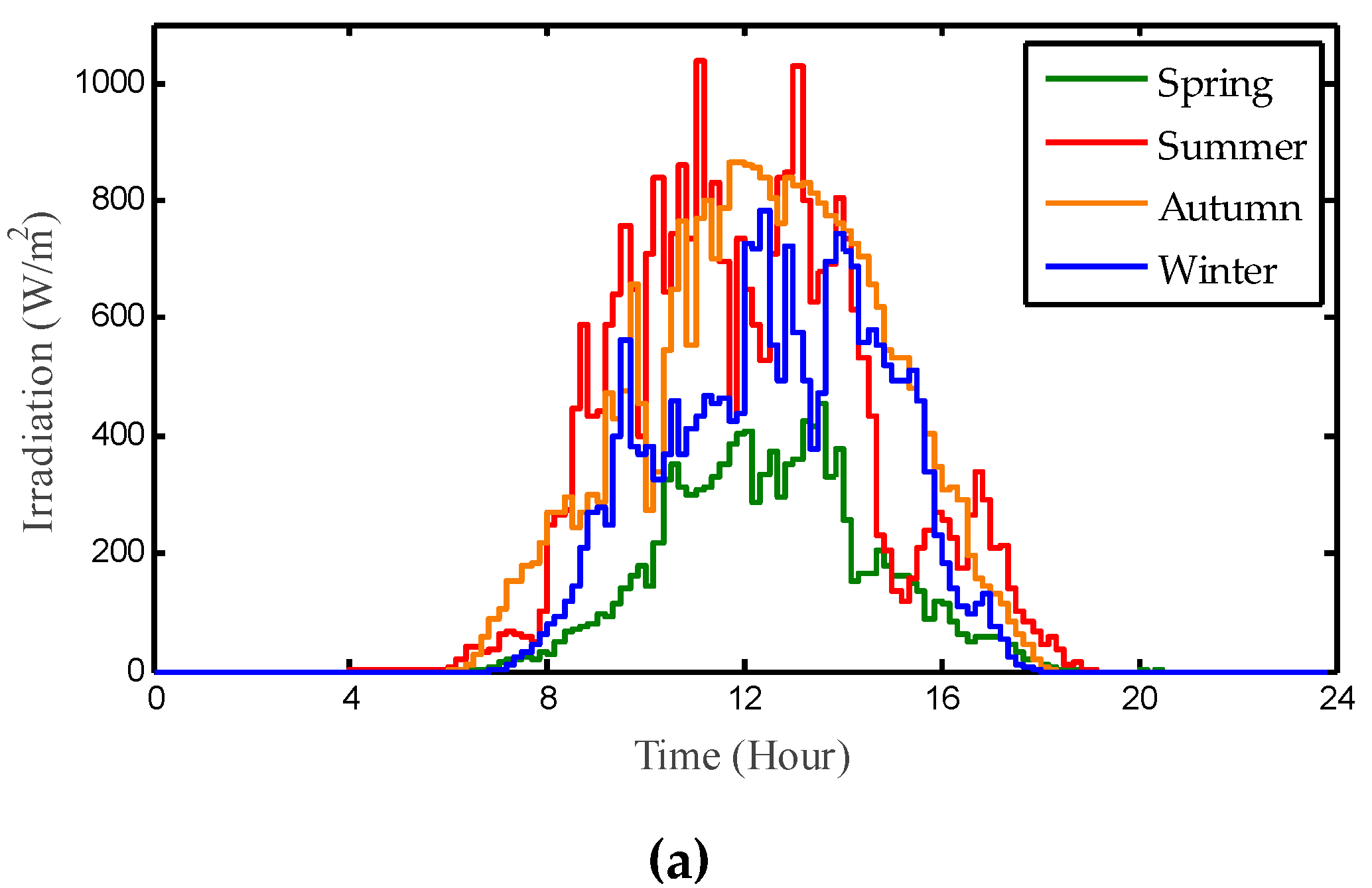

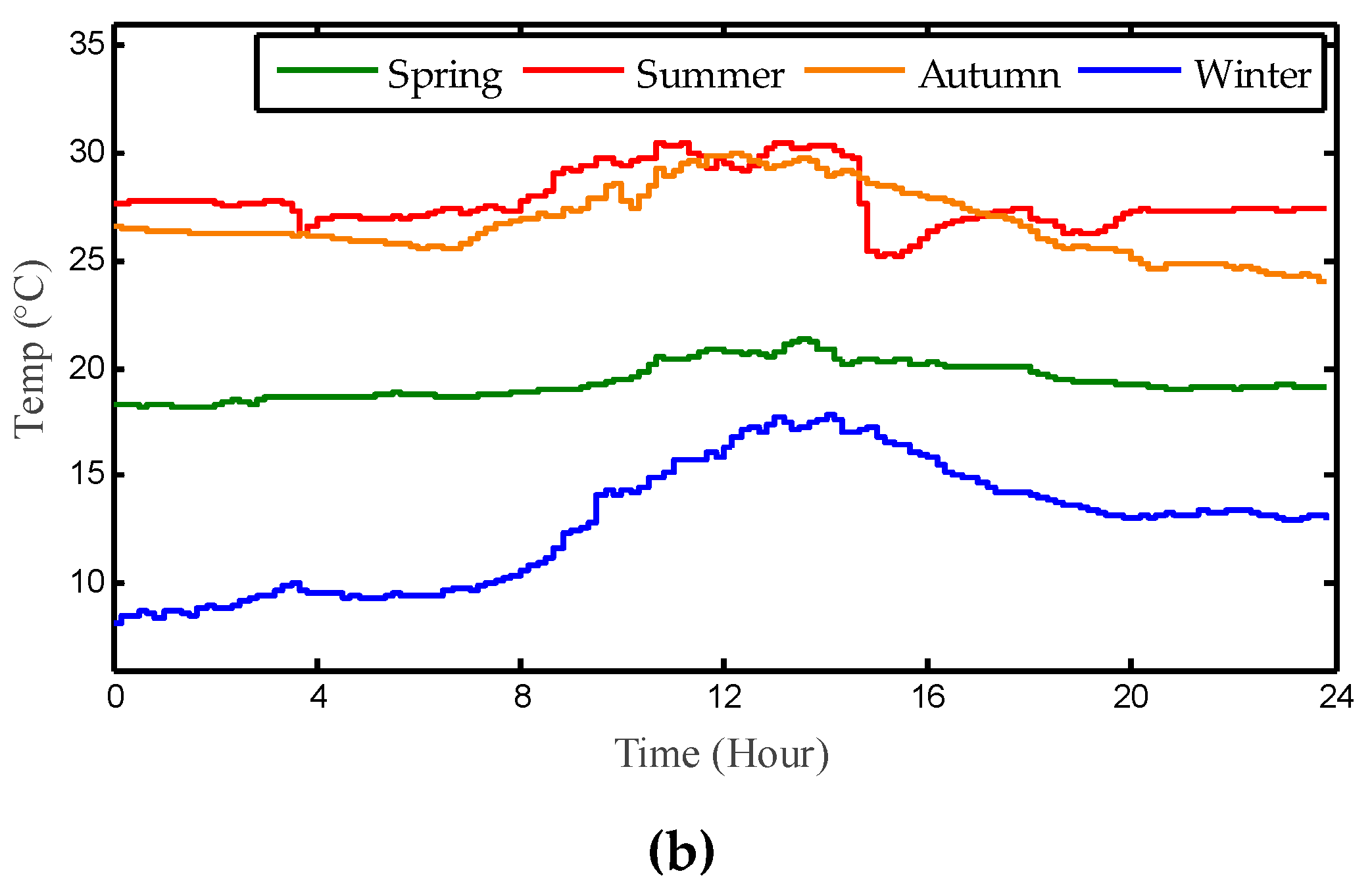

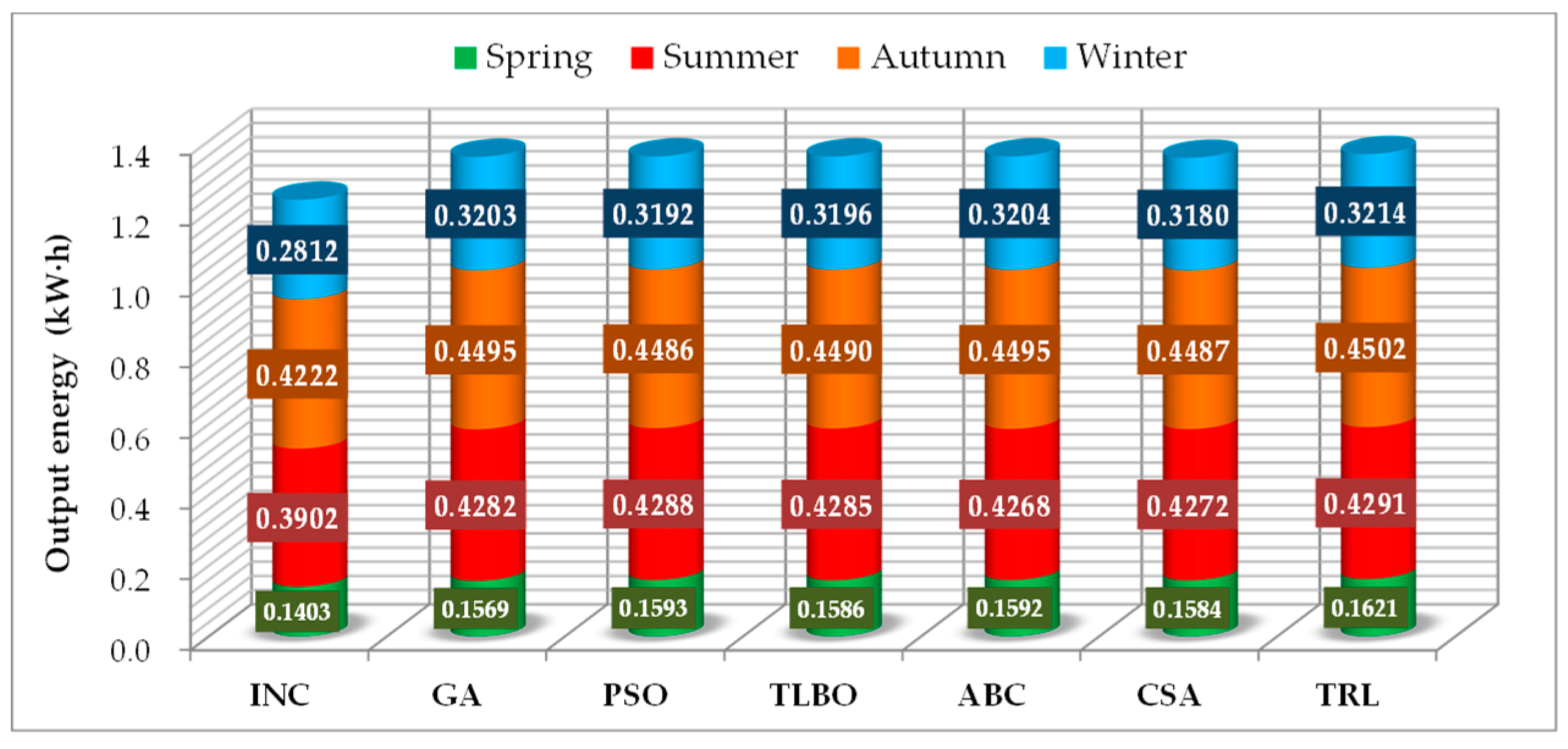

5.4. Daily Field Profile of Solar Irradiation and Temperature in Hong Kong

6. Conclusions

- (1) Through space decomposition, TRL can efficiently learn the knowledge for MPPT under PSC in real time; a high-quality optimum can thus be obtained, so that the PV system produces more energy under various environmental conditions;

- (2) Knowledge transfer effectively avoids a blind, random search and provides beneficial guidance to TRL, which results in fast convergence and high convergence stability. Therefore, not only is the output power of the PV system maximized under various scenarios, but the power fluctuation is also significantly reduced as the weather conditions vary;

- (3) Compared with the conventional INC and other typical meta-heuristic algorithms, the TRL-based MPPT algorithm produces the largest amount of output energy in the presence of PSC and other time-varying atmospheric conditions, which can bring considerable long-term economic benefit in operation.

Author Contributions

Acknowledgments

Conflicts of Interest

| Parameter | Range | Value |
|---|---|---|
| J | J > 1 | 10 |
| c | c > 1 | 4 |
| α | 0 < α < 1 | 0.01 |
| γ | 0 < γ < 1 | 0.0001 |
| ϵ | 0 < ϵ < 1 | 0.9 |
| kmax | kmax > 1 | 5 |
| M | M > 1 | 5 |

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Typical peak power | 51.716 W | Nominal operation cell temperature (Tref) | 25 °C |
| Voltage at peak power | 18.47 V | Factor of PV technology (A) | 1.5 |
| Current at peak power | 2.8 A | Switching frequency (f) | 100 kHz |
| Short-circuit current (Isc) | 1.5 A | Inductor (L) | 500 mH |
| Open-circuit voltage (Voc) | 23.36 V | Resistive load (R) | 200 Ω |
| Temperature coefficient of Isc (k1) | 3 mA/°C | Capacitor (C1, C2) | 1 μF |
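
As a quick consistency check on the PV module ratings listed above, the typical peak power should equal the product of the voltage and the current at peak power. The symbols P_mpp, V_mpp, and I_mpp are introduced here only for this check and do not appear in the table.

```latex
P_{\mathrm{mpp}} = V_{\mathrm{mpp}} \, I_{\mathrm{mpp}}
                 = 18.47\ \mathrm{V} \times 2.8\ \mathrm{A}
                 = 51.716\ \mathrm{W}
```

The result matches the tabulated typical peak power of 51.716 W.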

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ding, M.; Lv, D.; Yang, C.; Li, S.; Fang, Q.; Yang, B.; Zhang, X. Global Maximum Power Point Tracking of PV Systems under Partial Shading Condition: A Transfer Reinforcement Learning Approach. Appl. Sci. 2019, 9, 2769. https://doi.org/10.3390/app9132769
