Construction of an Industrial Knowledge Graph for Unstructured Chinese Text Learning

Abstract

1. Introduction

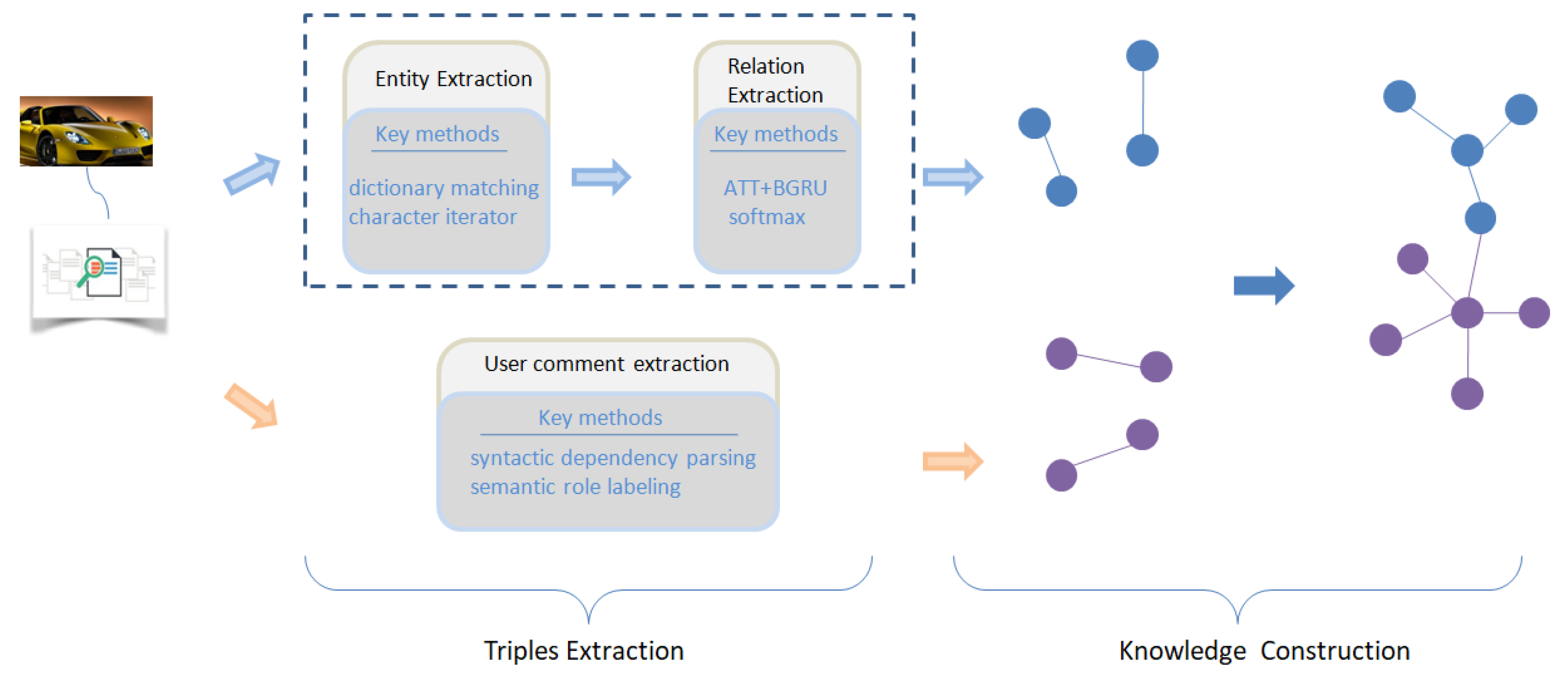

- (1)

- A feasible method is proposed to achieve automatic extraction of triples from unstructured Chinese text by combining entity extraction and relationship extraction.

- (2)

- An approach is proposed to extract structured user evaluation information from unstructured Chinese text.

- (3)

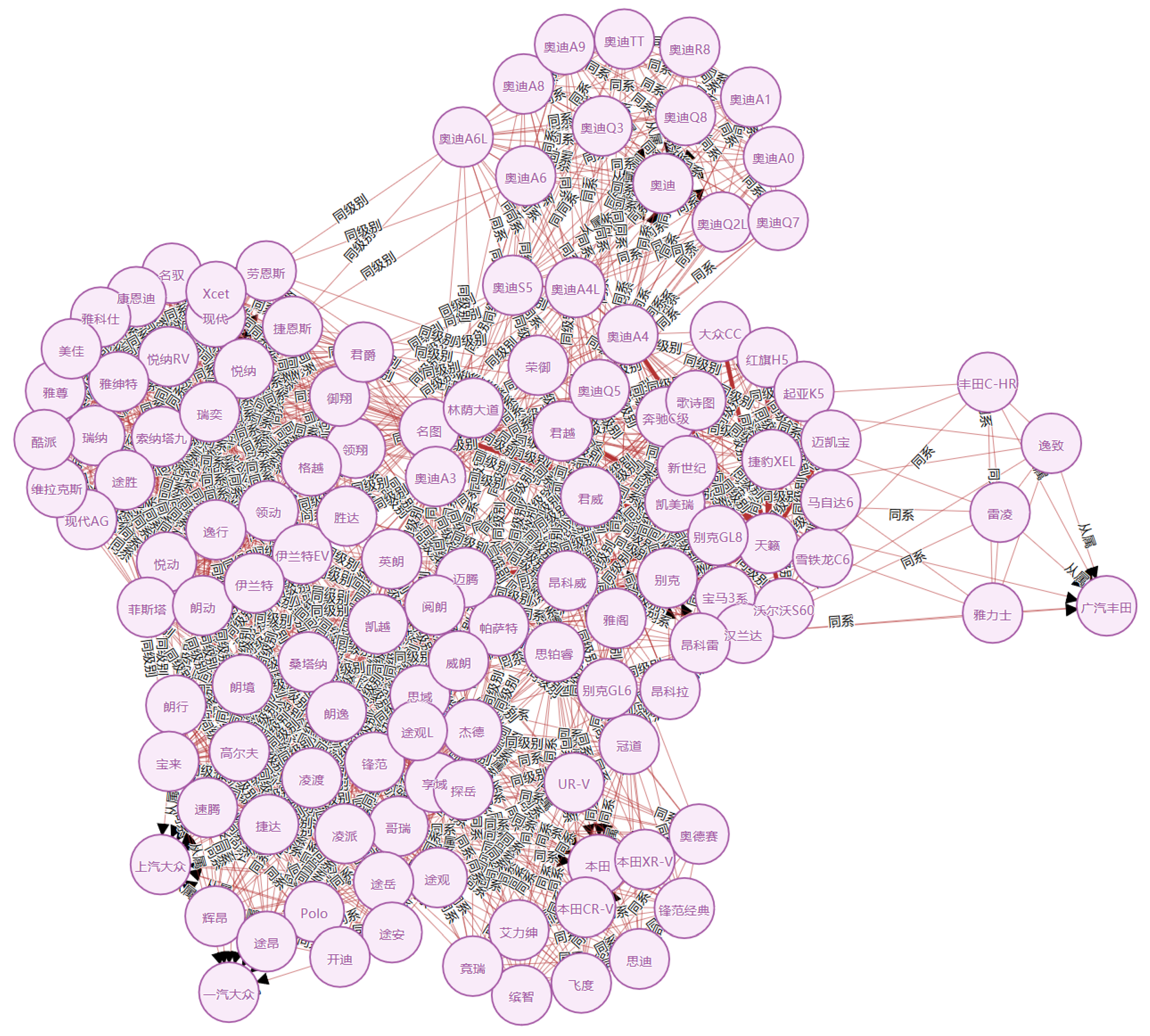

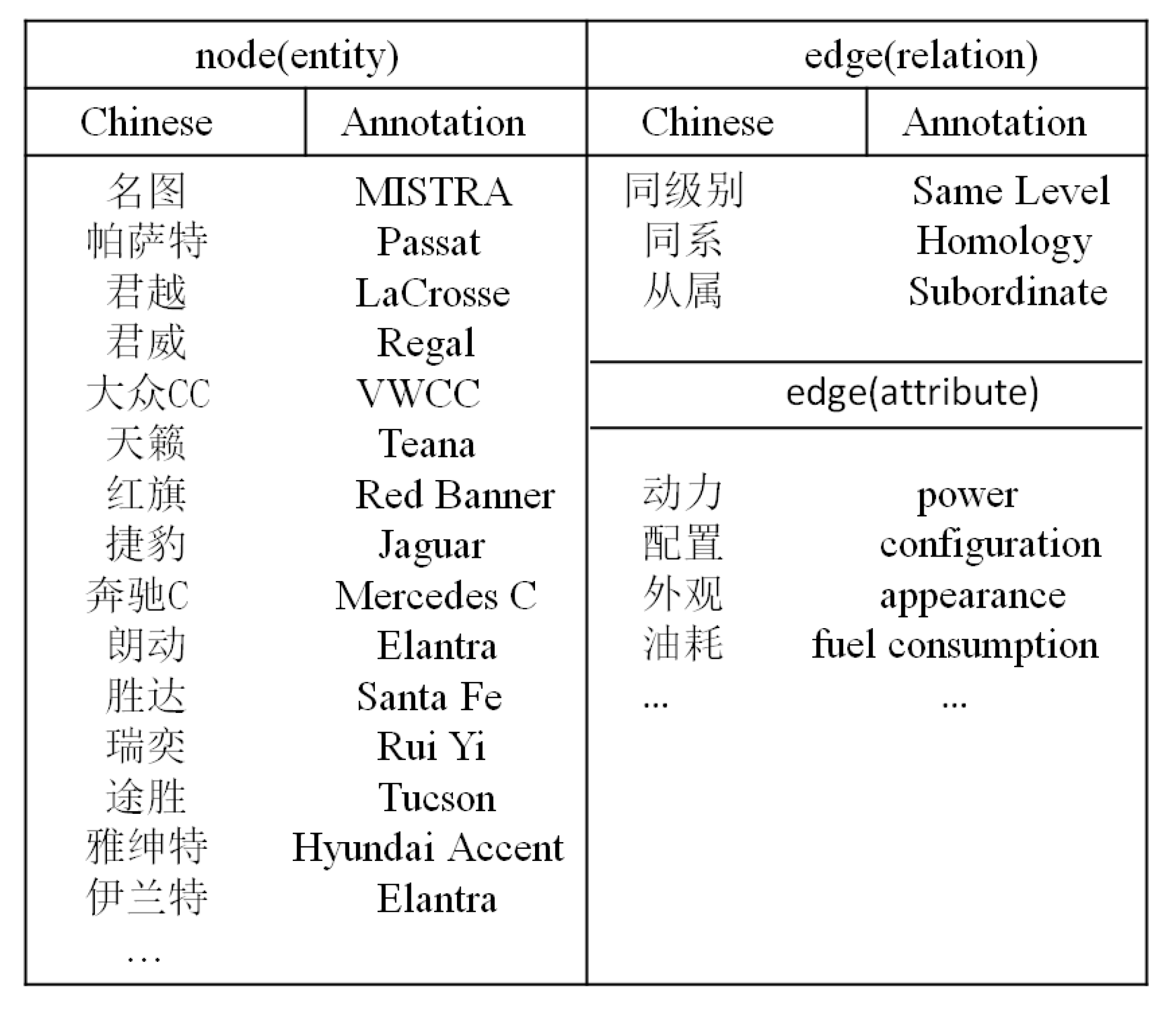

- A knowledge graph of the automobile industry is constructed.

2. Related Work

2.1. Entity Extraction

2.2. Relation Extraction

2.3. Knowledge Graph

2.4. Automated Knowledge Base Management

3. Methods

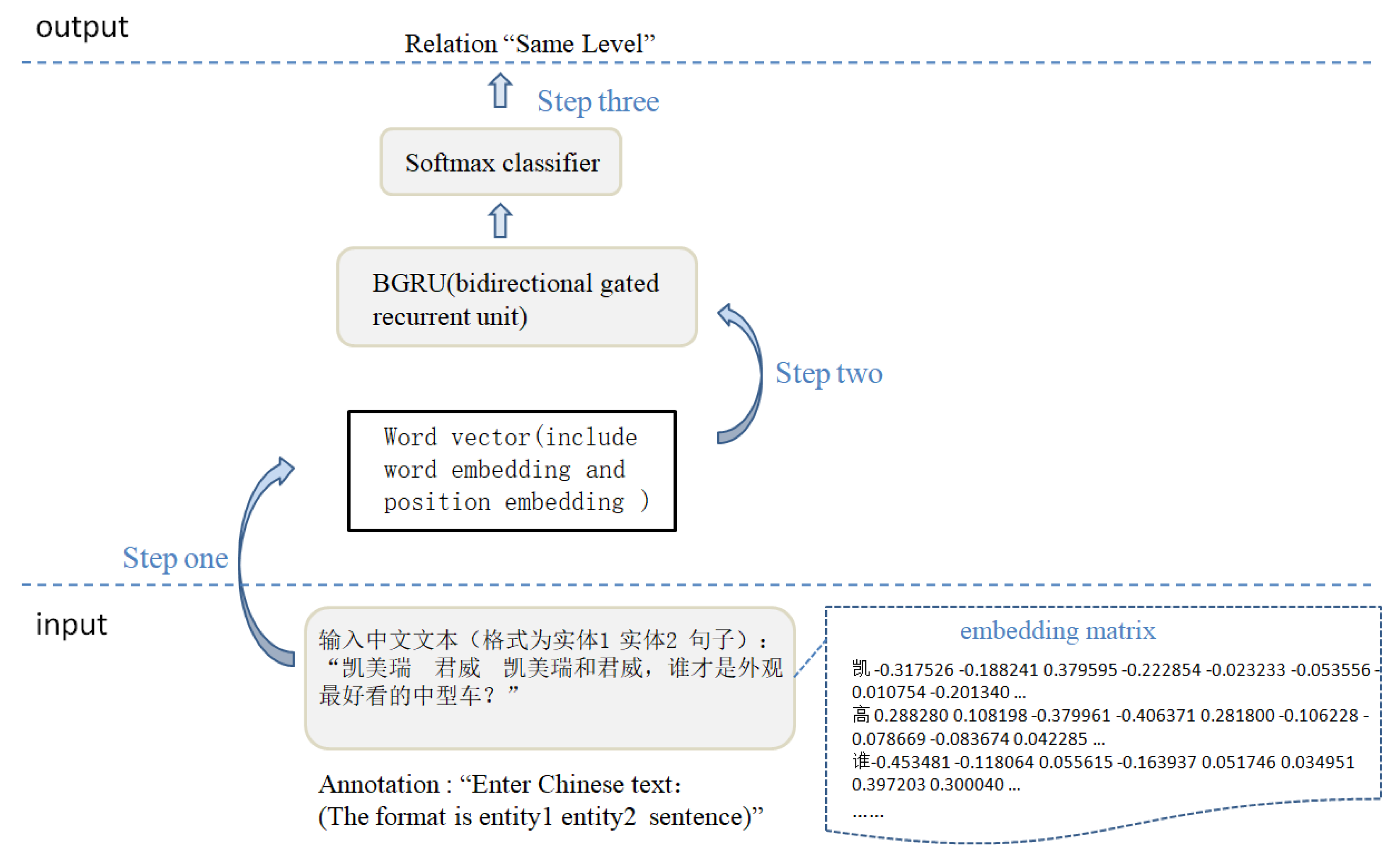

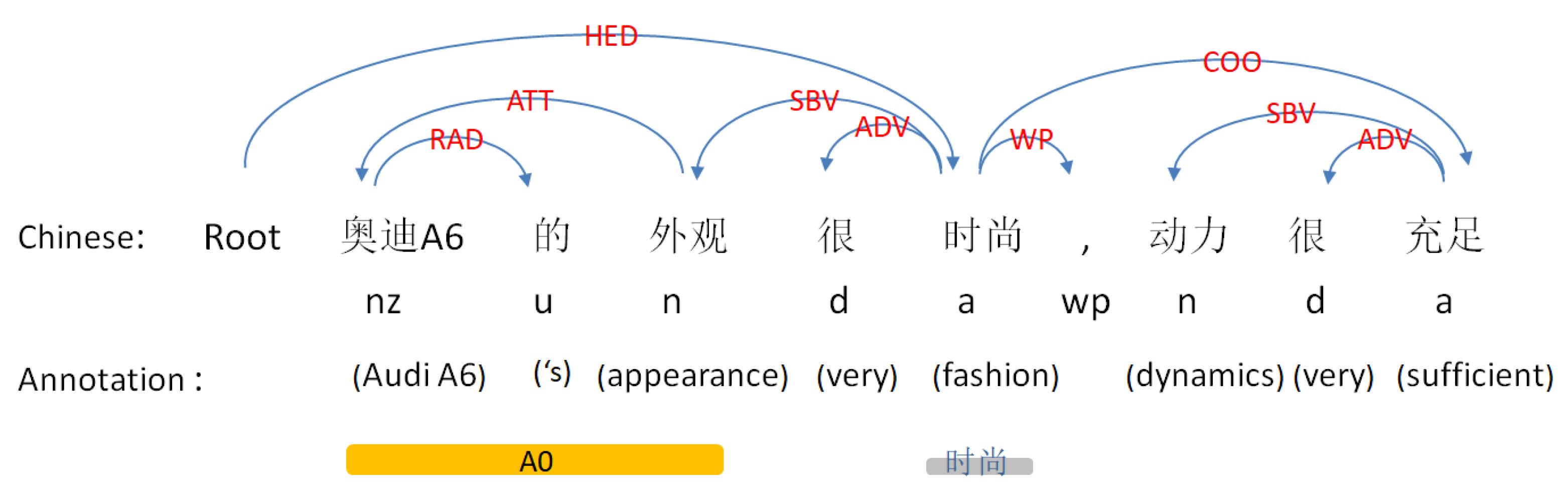

3.1. Semantic Relation Extraction

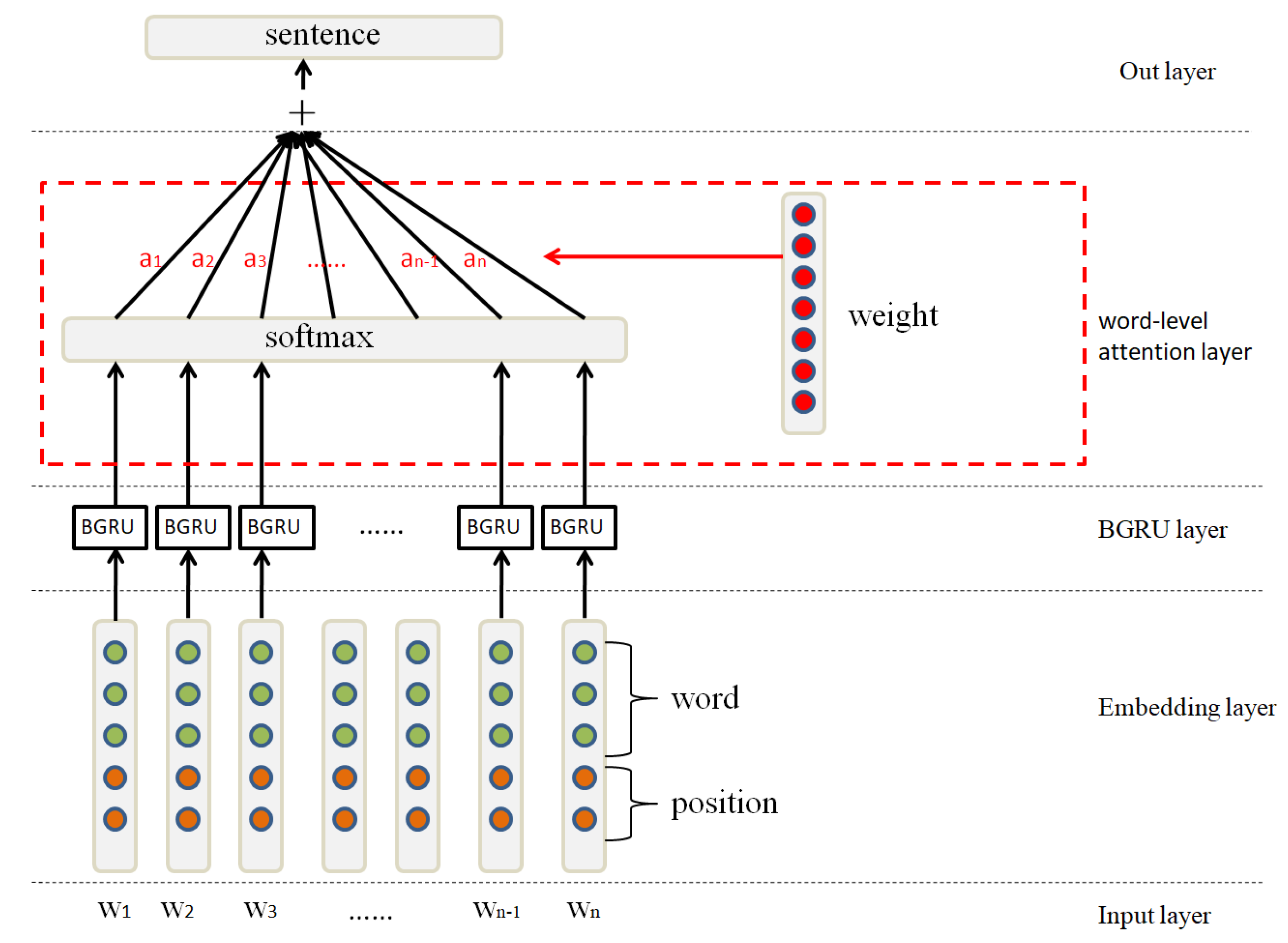

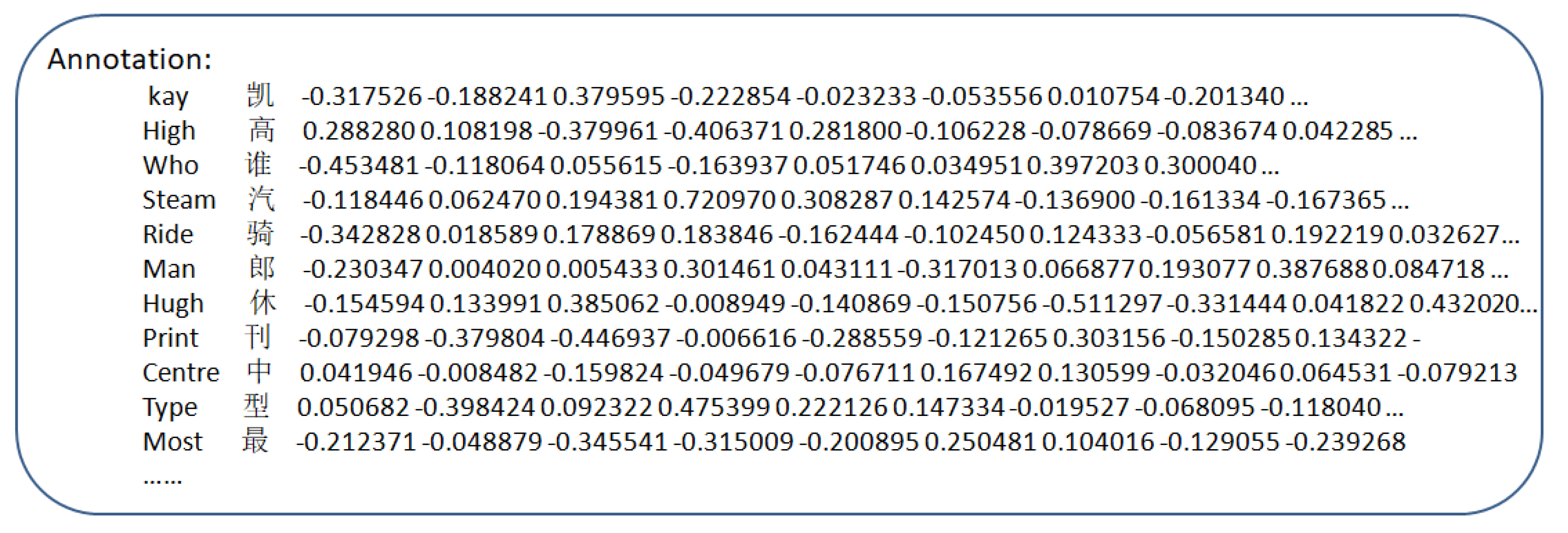

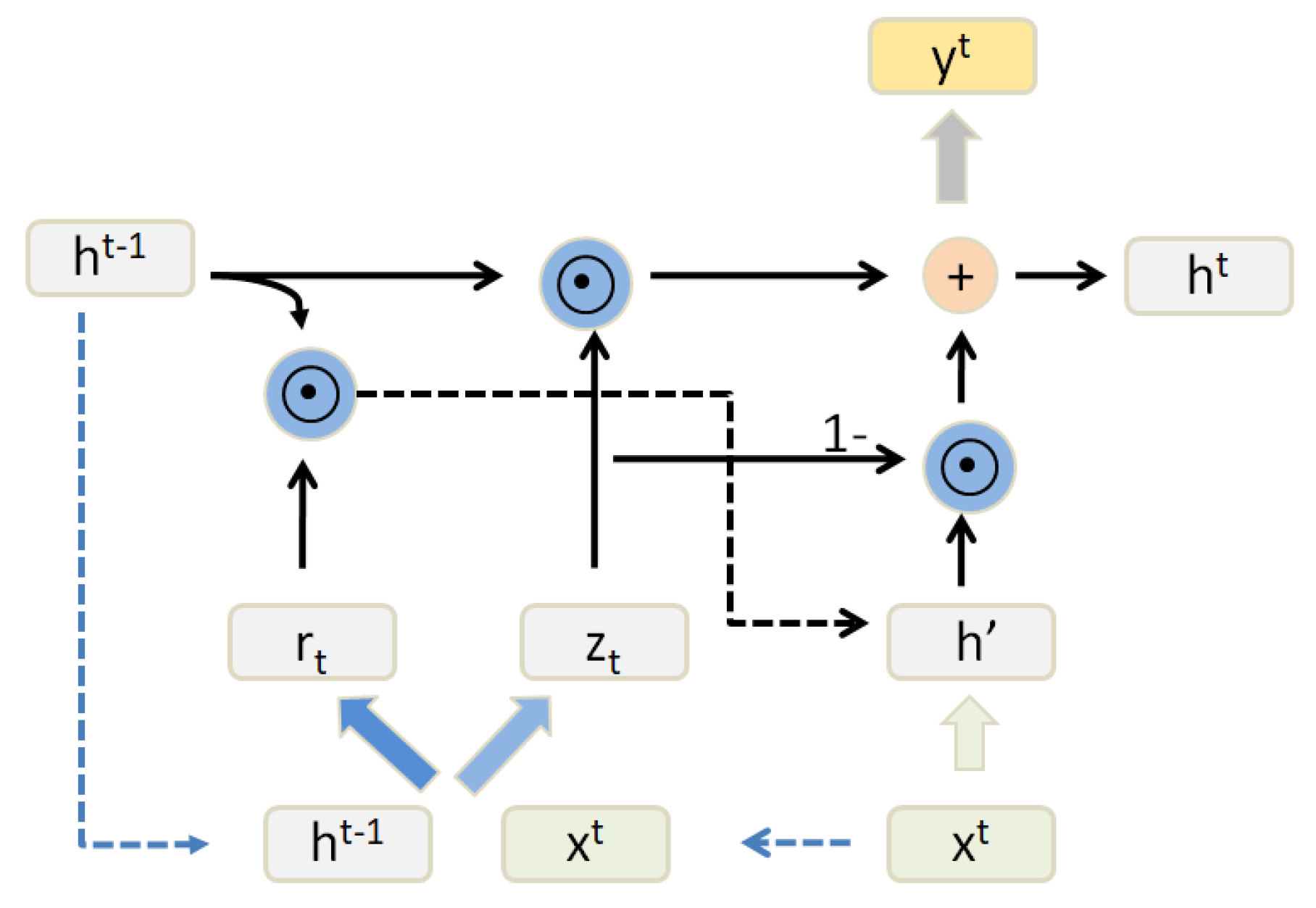

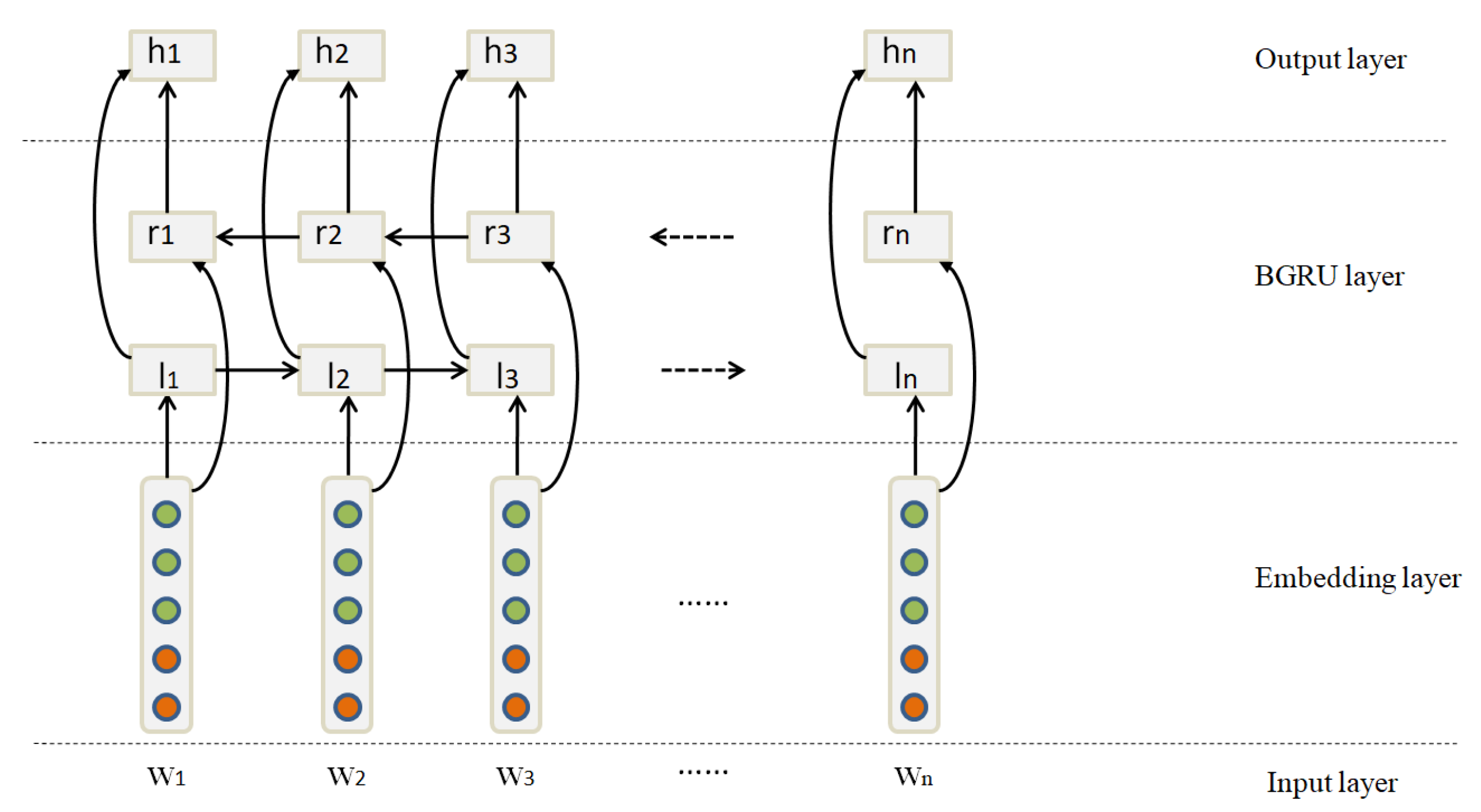

3.1.1. Sentence Encoder

- Input layer,

- Embedding layer,

- BGRU layer,

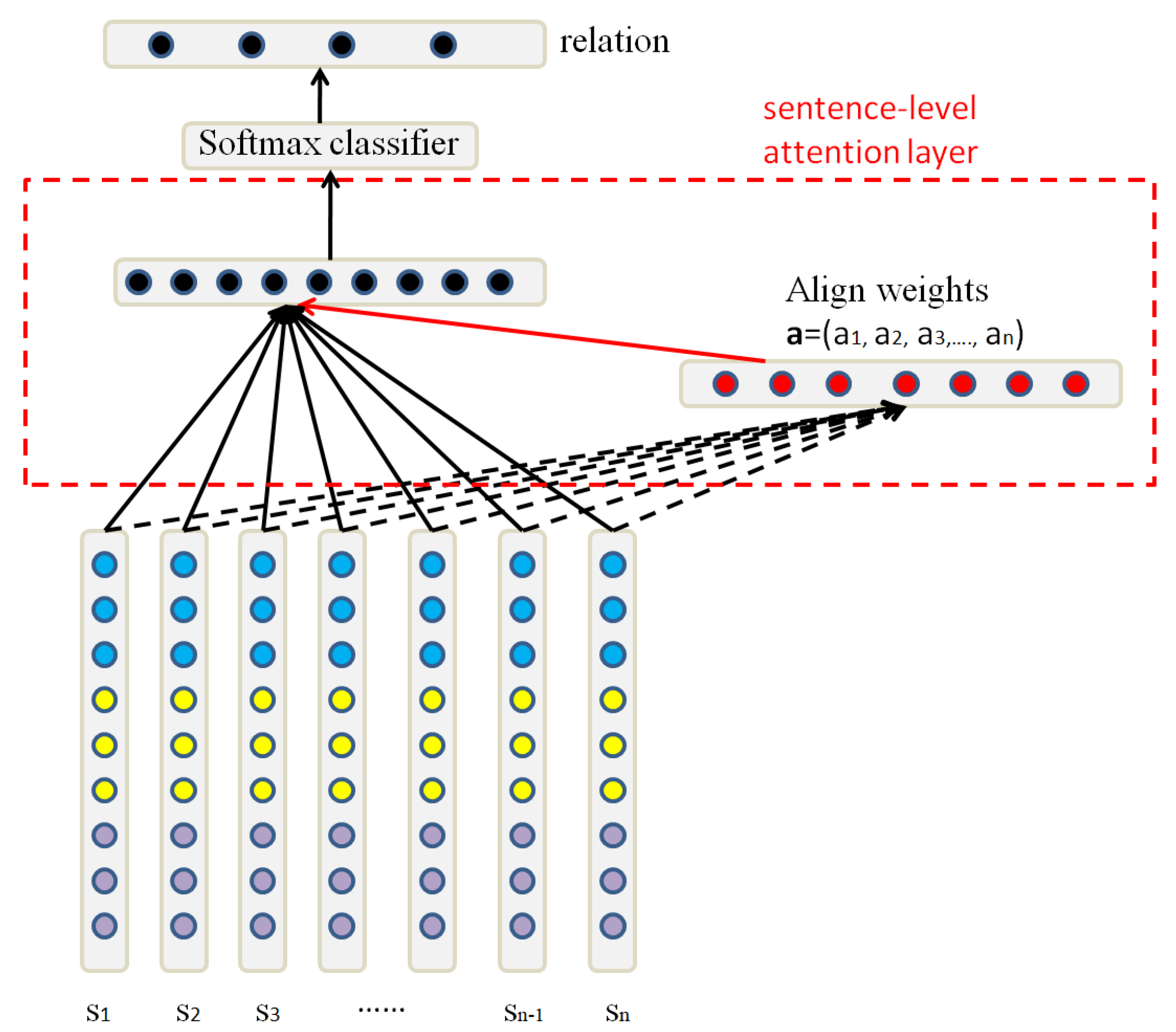

- Attention layer,

- Output layer.
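The five layers listed above can be traced as a single forward pass. The following is a minimal plain-Python sketch under stated assumptions, not the authors' implementation. The position features (each token's distance to the two entities, suggested by the "Position dimension" setting in the parameter table), the concatenation of forward and backward GRU states, and the attention form of Zhou et al. (softmax over `w · tanh(h_t)`, then `tanh` of the weighted sum) are all assumptions. All weights are random and untrained, and every function name (`embed`, `bgru`, `attention`, `classify`) is hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

Vec = List[float]
Mat = List[List[float]]

def rand_vec(n: int, rng: random.Random) -> Vec:
    return [rng.uniform(-0.1, 0.1) for _ in range(n)]

def rand_mat(rows: int, cols: int, rng: random.Random) -> Mat:
    return [rand_vec(cols, rng) for _ in range(rows)]

def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def add(u: Vec, v: Vec) -> Vec:
    return [a + b for a, b in zip(u, v)]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def softmax(v: Vec) -> Vec:
    top = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def embed(word_vecs: List[Vec], e1: int, e2: int, pos_dim: int,
          rng: random.Random) -> List[Vec]:
    """Input + embedding layers (assumed): word vector followed by two position
    vectors encoding the token's distance to entity 1 and to entity 2."""
    n = len(word_vecs)
    tables: List[Dict[int, Vec]] = [
        {d: rand_vec(pos_dim, rng) for d in range(1 - n, n)} for _ in range(2)]
    return [wv + tables[0][i - e1] + tables[1][i - e2]
            for i, wv in enumerate(word_vecs)]

class GRU:
    """One-direction GRU, h_t = (1 - z) * h_{t-1} + z * h~ (Chung et al.); no biases."""

    def __init__(self, n_in: int, n_hid: int, rng: random.Random):
        self.n_hid = n_hid
        self.W = {g: rand_mat(n_hid, n_in, rng) for g in "zrh"}
        self.U = {g: rand_mat(n_hid, n_hid, rng) for g in "zrh"}

    def _pre(self, g: str, x: Vec, h: Vec) -> Vec:
        return add(matvec(self.W[g], x), matvec(self.U[g], h))

    def run(self, xs: List[Vec]) -> List[Vec]:
        h: Vec = [0.0] * self.n_hid
        states = []
        for x in xs:
            z = [sigmoid(v) for v in self._pre("z", x, h)]  # update gate
            r = [sigmoid(v) for v in self._pre("r", x, h)]  # reset gate
            cand = [math.tanh(v) for v in self._pre("h", x, [a * b for a, b in zip(r, h)])]
            h = [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]
            states.append(h)
        return states

def bgru(fwd: GRU, bwd: GRU, xs: List[Vec]) -> List[Vec]:
    """BGRU layer: concatenate each forward state with the aligned backward state."""
    backward = bwd.run(xs[::-1])[::-1]
    return [f + b for f, b in zip(fwd.run(xs), backward)]

def attention(states: List[Vec], w: Vec) -> Tuple[Vec, Vec]:
    """Attention layer: alpha = softmax(w . tanh(h_t)); output tanh(sum_t alpha_t * h_t)."""
    alpha = softmax([sum(wi * math.tanh(hi) for wi, hi in zip(w, h)) for h in states])
    pooled = [sum(a * h[d] for a, h in zip(alpha, states)) for d in range(len(states[0]))]
    return [math.tanh(v) for v in pooled], alpha

def classify(word_vecs: List[Vec], e1: int, e2: int, n_hid: int, n_rel: int,
             pos_dim: int = 5, seed: int = 0) -> Tuple[Vec, Vec]:
    """Output layer: softmax over relation types. Weights are random, not trained."""
    rng = random.Random(seed)
    xs = embed(word_vecs, e1, e2, pos_dim, rng)
    fwd, bwd = GRU(len(xs[0]), n_hid, rng), GRU(len(xs[0]), n_hid, rng)
    sentence, alpha = attention(bgru(fwd, bwd, xs), rand_vec(2 * n_hid, rng))
    return softmax(matvec(rand_mat(n_rel, 2 * n_hid, rng), sentence)), alpha
```

Calling `classify(word_vecs, e1=0, e2=4, n_hid=4, n_rel=4)` on a six-word sentence returns a probability distribution over four relation types plus one attention weight per word. The default `pos_dim=5` mirrors the position dimension in the parameter table. The tiny hidden size keeps the pure-Python loops fast; a real model would use a tensor library and learned weights.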

3.1.2. Relation Classification

3.2. User Comment Information Extraction

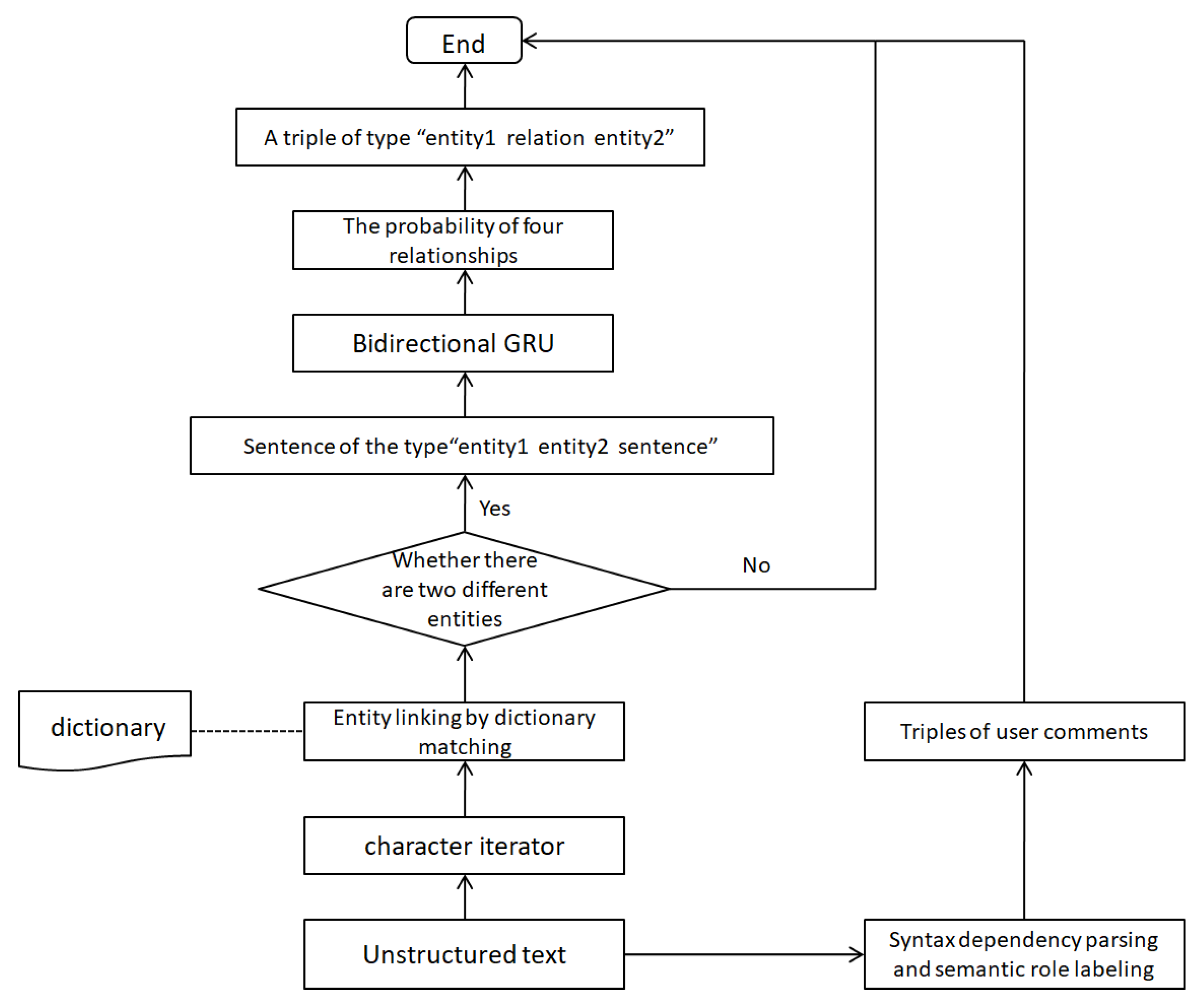

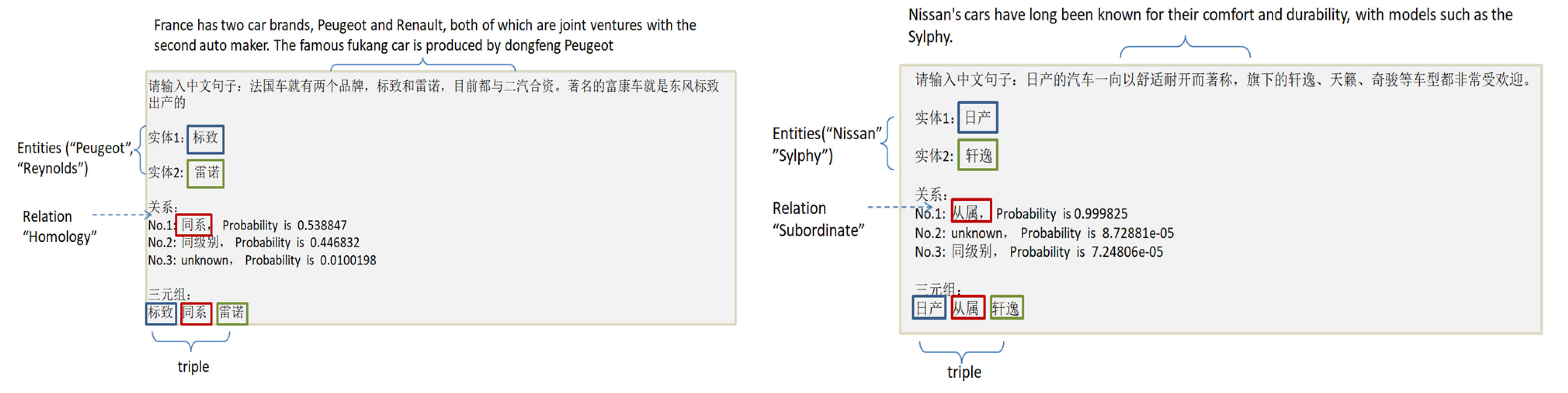

3.3. Automatic Triples Extraction

4. Experiment

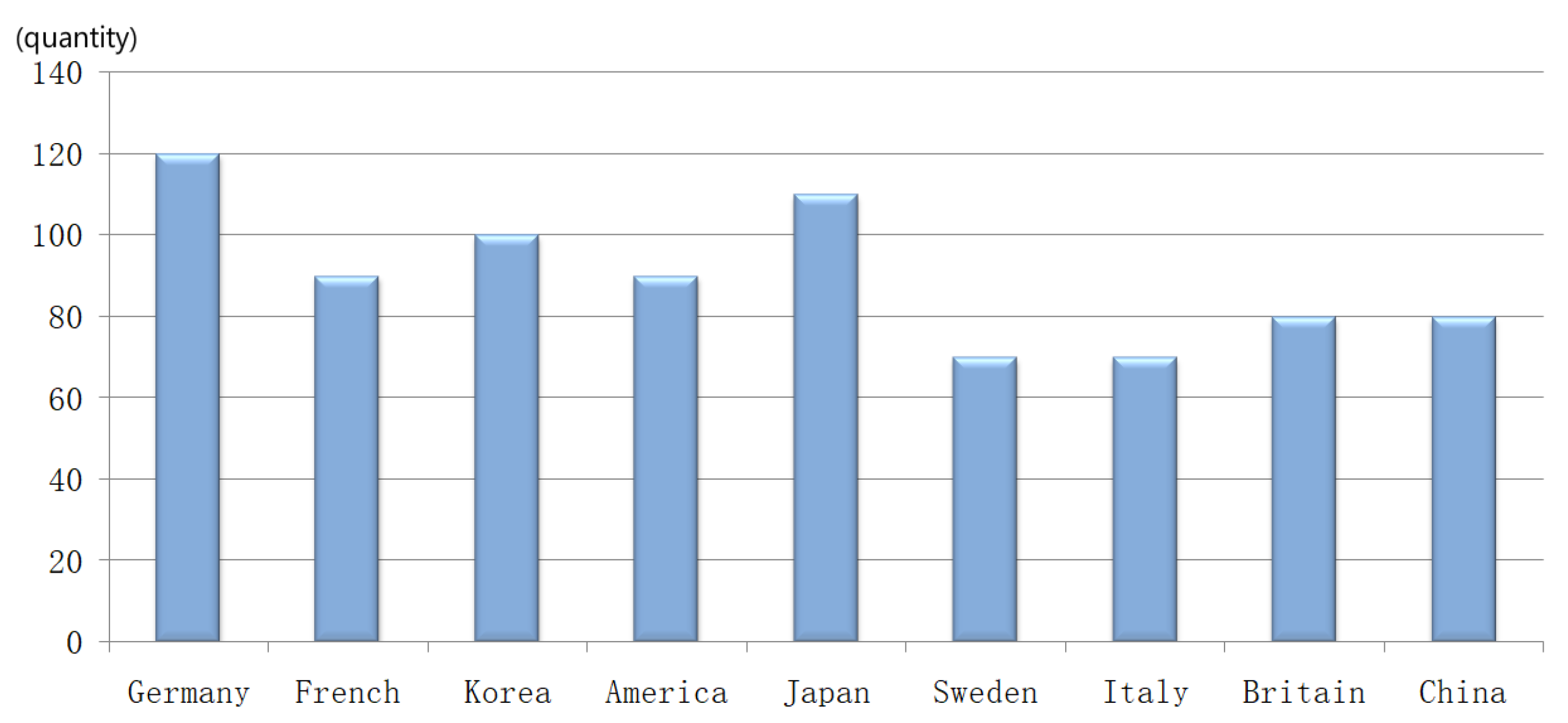

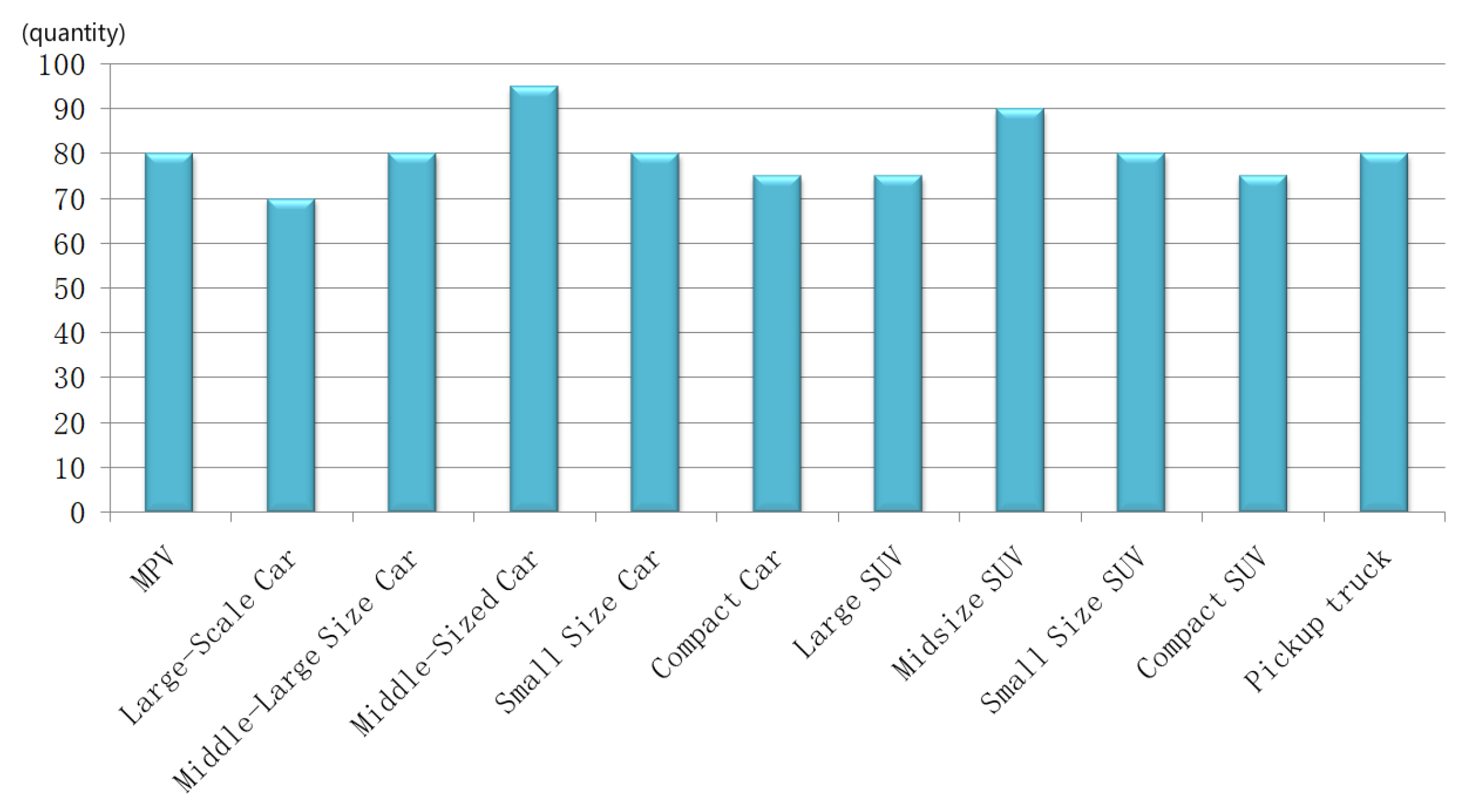

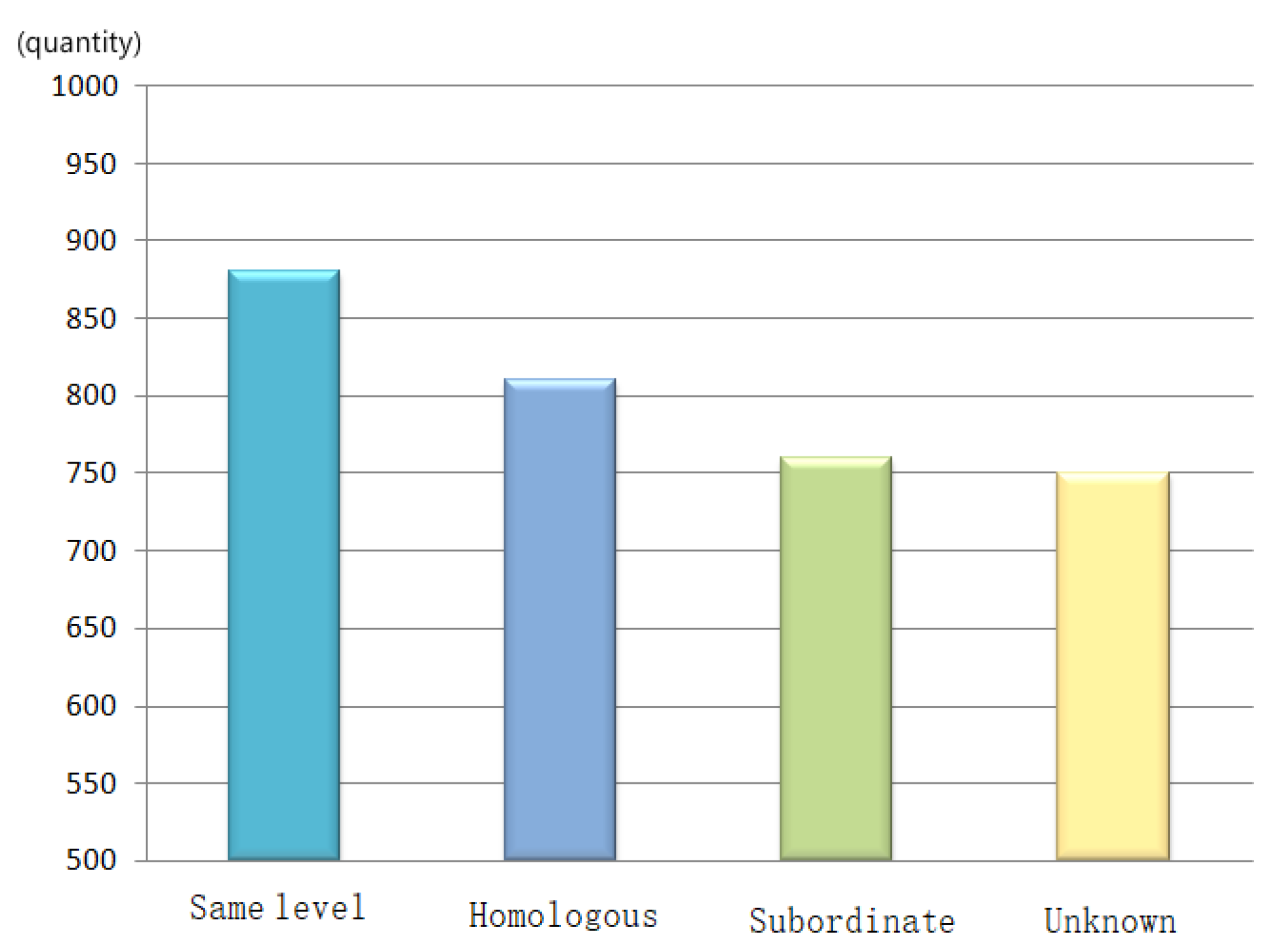

4.1. Dataset

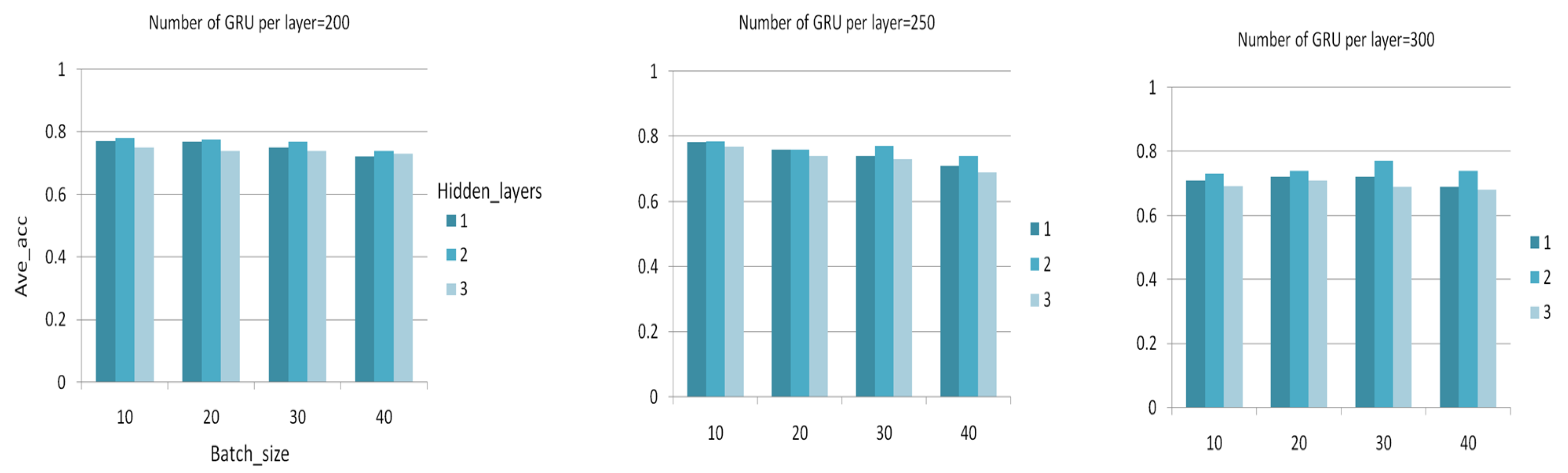

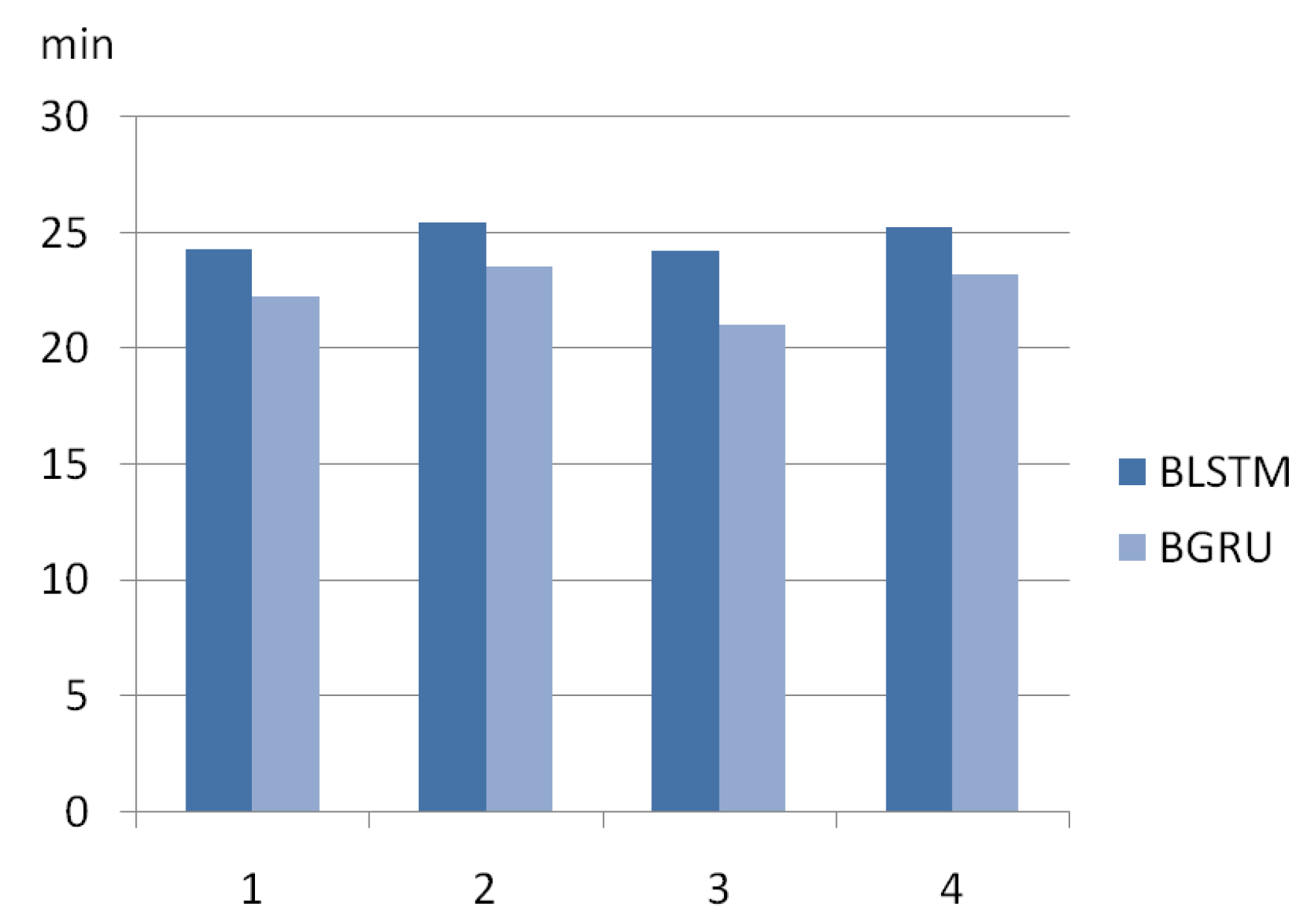

4.2. Model Training

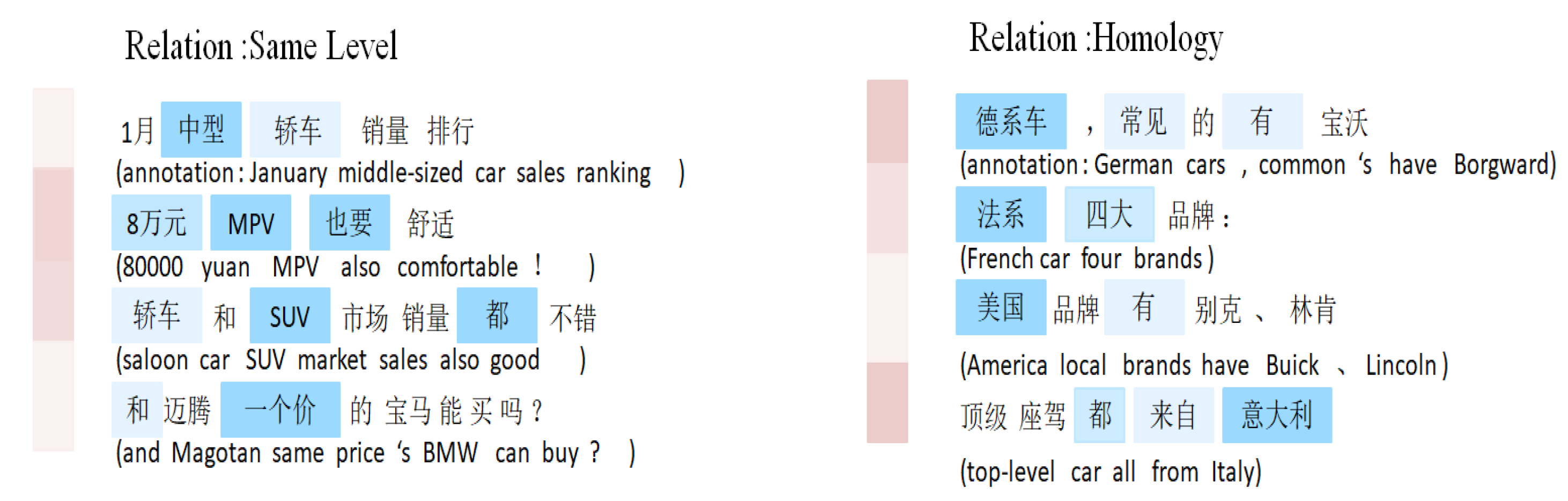

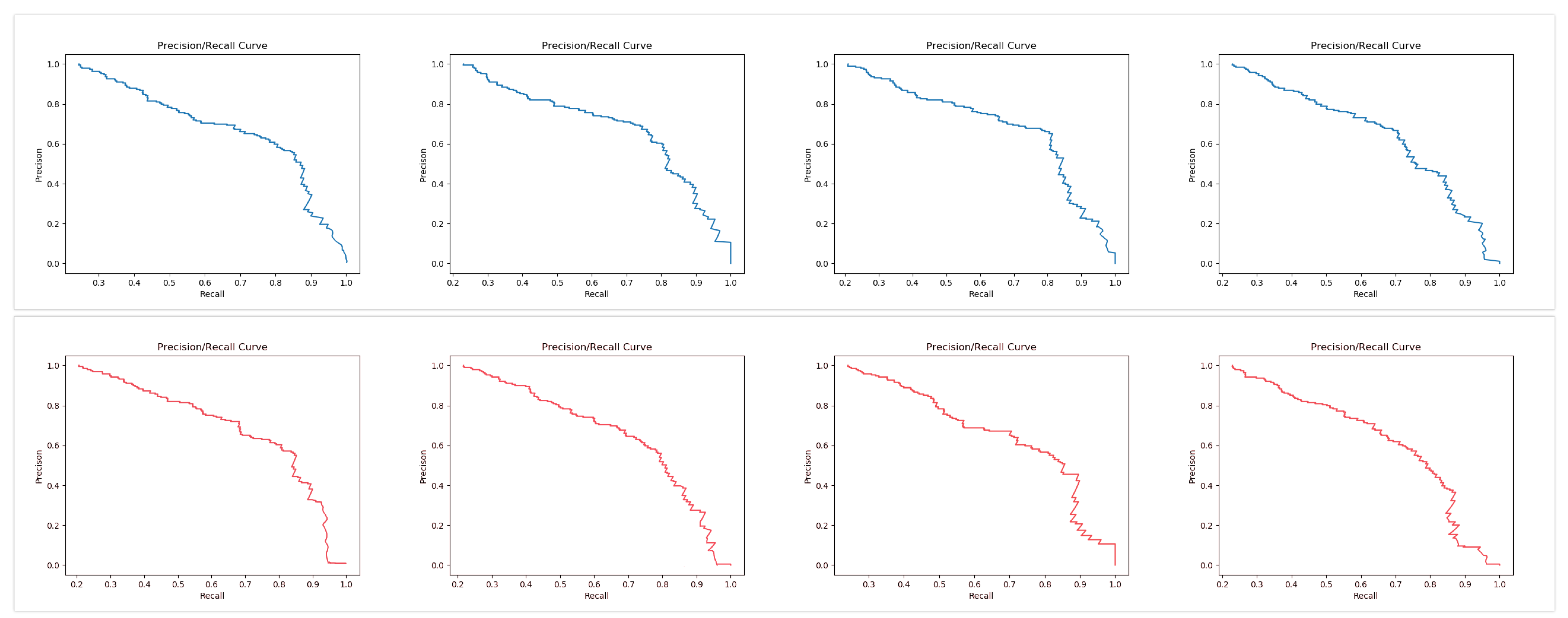

4.3. The Result of Triple Extraction

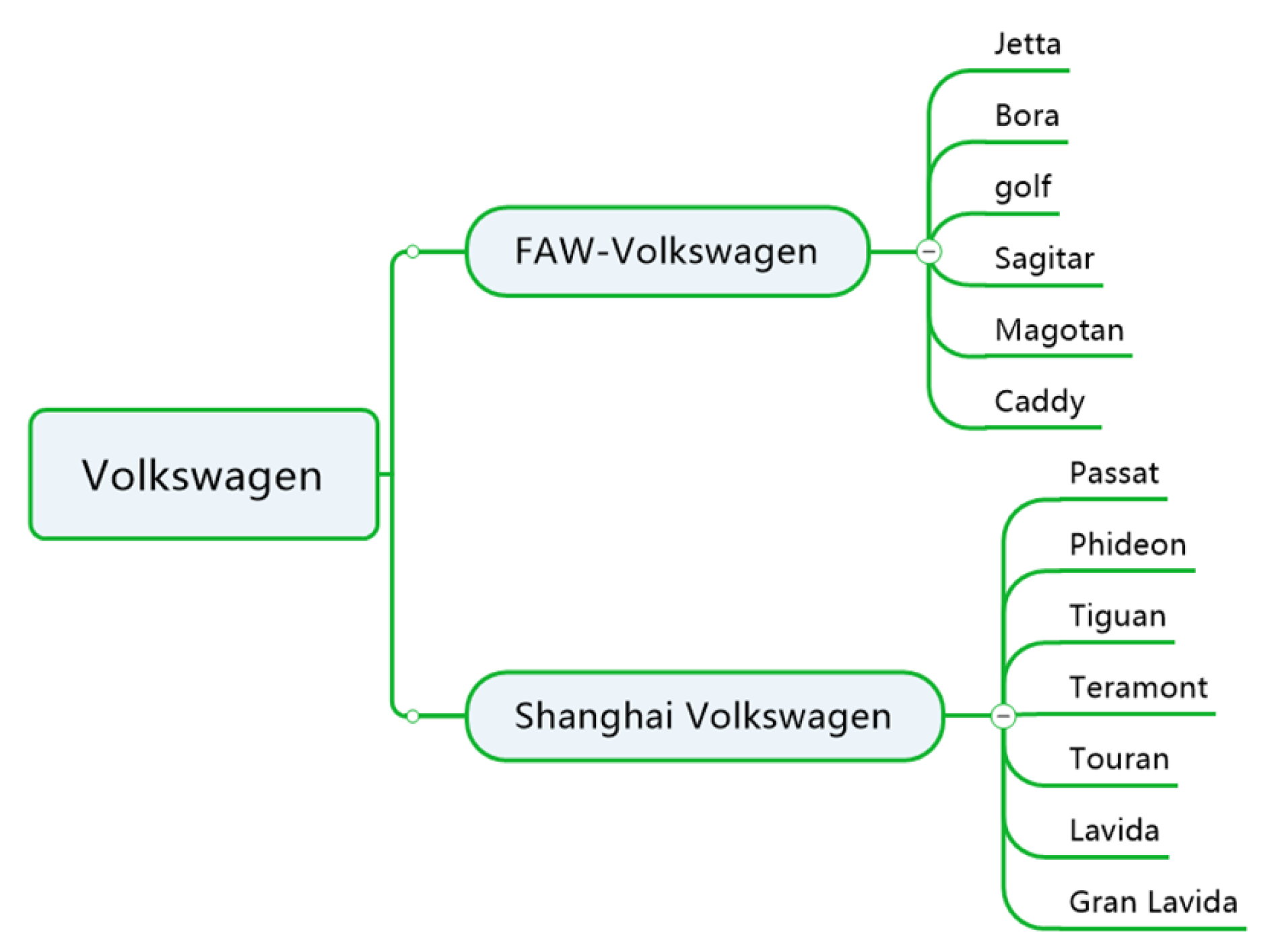

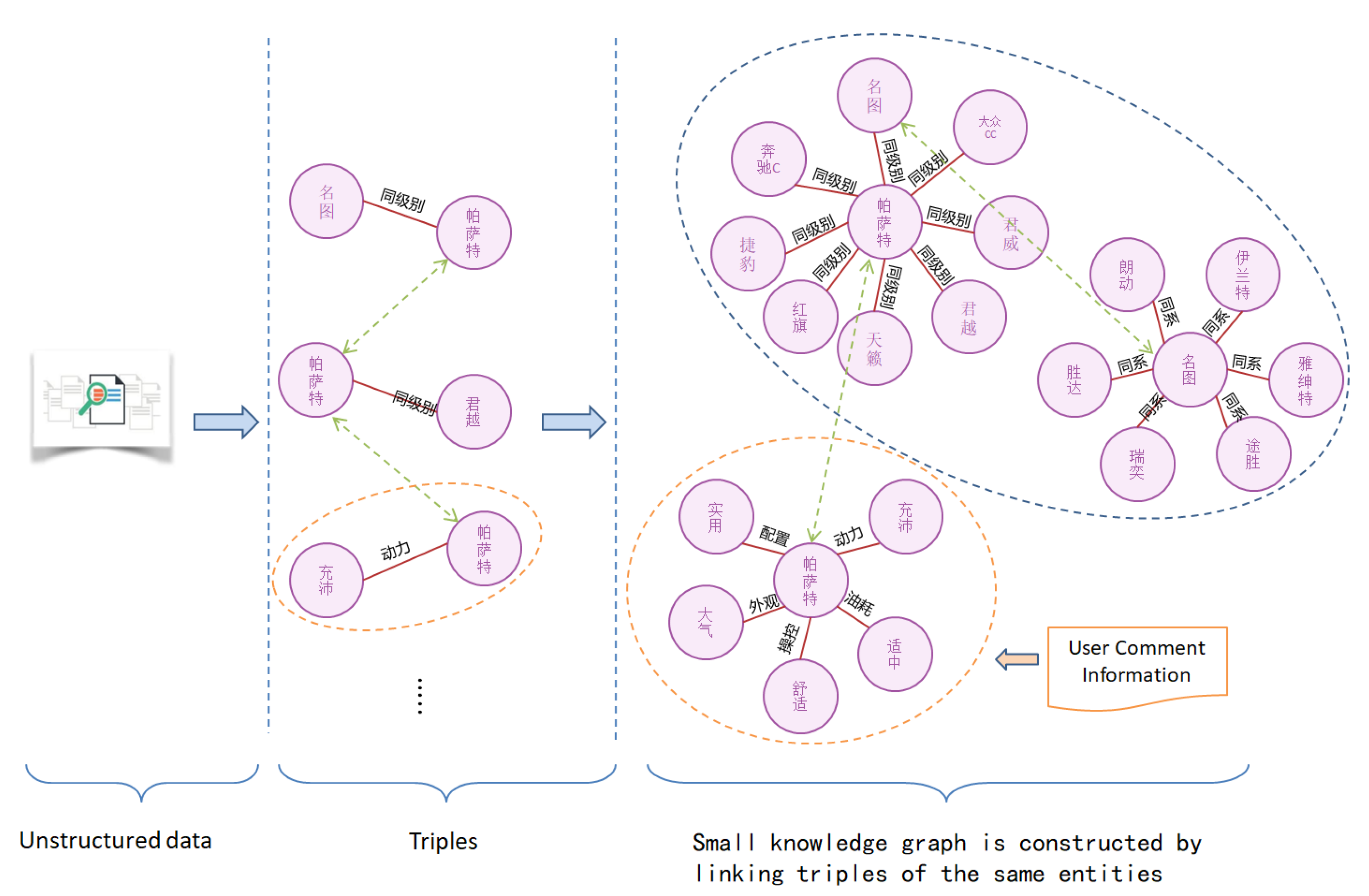

4.4. Knowledge Graph Construction

4.5. Discussion

5. Conclusions

- A feasible method is proposed to automatically extract triples from unstructured Chinese text by combining entity extraction and relation extraction.

- An approach is proposed to extract structured user evaluation information from unstructured Chinese text.

- A knowledge graph of the automobile industry is constructed.

- (1) Our data were mainly crawled from BBS (bulletin board system) forums and automobile sales websites. In future work, we will expand the data to other sources, such as unstructured objective data from the automobile manufacturing process or unstructured data from other industrial fields.

- (2) When constructing the industrial knowledge graph, we aligned only entities with identical names and did not consider ambiguous entities, that is, entities that share a name but differ in meaning. Nor did we merge entities that have different names but the same meaning. In future work, we will study entity disambiguation and fusion in knowledge graph construction.

- (3) We have constructed a knowledge graph of the automobile industry. In future work, we will design applications based on this knowledge graph; for example, KBQA (knowledge base question answering) in the automobile domain is a promising direction.
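Limitation (2) can be made concrete with a minimal sketch of exact-name alignment. This is an illustration under assumptions, not the authors' code: `align_by_name` is a hypothetical helper, and the triples, including `"A6"` as a synonym of `"Audi A6"`, are hypothetical examples. Keying nodes on the raw string merges every identical mention, whether or not the mentions mean the same thing. It also leaves differently named synonyms as separate nodes.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set, Tuple

Triple = Tuple[str, str, str]
Graph = DefaultDict[str, Set[Tuple[str, str]]]


def align_by_name(triples: List[Triple]) -> Graph:
    """Merge triples into one adjacency map keyed by the exact entity string.

    Two mentions become the same node only if their strings are identical, so
    homonyms are (wrongly) merged and synonyms are (wrongly) kept apart.
    """
    graph: Graph = defaultdict(set)
    for head, relation, tail in triples:
        graph[head].add((relation, tail))
        graph.setdefault(tail, set())  # register the tail as a node, too
    return graph


# Hypothetical triples; "A6" stands in for a synonym of "Audi A6".
TRIPLES: List[Triple] = [
    ("Audi A6", "appearance", "fashion"),
    ("Audi A6", "dynamics", "sufficient"),
    ("A6", "appearance", "fashion"),
]
graph = align_by_name(TRIPLES)
print(sorted(graph))  # ['A6', 'Audi A6', 'fashion', 'sufficient']
```

The two car mentions end up as separate nodes. Entity fusion would have to map them to one canonical node before insertion, and disambiguation would have to split a shared string into several nodes.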

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NLP | Natural Language Processing |
| LOD | Linking Open Data |
| GRU | Gated Recurrent Unit |
| CNN | Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| LSTM | Long Short-Term Memory |
| BGRU | Bidirectional Gated Recurrent Unit |
| BLSTM | Bidirectional Long Short-Term Memory |
| ATT | Attribute |
| RAD | Right Adjunct |
| SBV | Subject-Verb |
| ADV | Adverbial |
| HED | Head |
| COO | Coordinate |
| KBQA | Knowledge Base Question Answering |

References

- Quintana, G.; Campa, F.J.; Ciurana, J. Productivity improvement through chatter-free milling in workshops. Proc. Inst. Mech. Eng. 2011, 225, 1163–1174.

- Li, B.; Hou, B.; Yu, W. Applications of artificial intelligence in intelligent manufacturing: A review. Front. Inf. Technol. Electron. Eng. 2017, 18, 86–96.

- Bahrin, M.A.K.; Othman, M.F.; Azli, N.H.N. Industry 4.0: A review on industrial automation and robotic. J. Teknol. 2016, 78, 137–143.

- Zhong, R.Y.; Xu, X.; Klotz, E. Intelligent manufacturing in the context of industry 4.0: A review. Engineering 2017, 3, 616–630.

- Barrio, H.G.; Morán, I.C.; Ealo, J.A.; Barrena, F.S.; Beldarrain, T.O.; Zabaljauregui, M.C.; Zabala, A.M.; Arriola, P.J.A. Proceso de mecanizado fiable mediante uso intensivo de modelización y monitorización del proceso: Enfoque 2025. DYNA 2018, 93, 689–696.

- Wang, J.; Ma, Y.; Zhang, L. Deep learning for smart manufacturing: Methods and applications. J. Manuf. Syst. 2018, 48, 144–156.

- Bustillo, A.; Urbikain, G.; Perez, J.M.; Pereira, O.M.; de Lacalle, L.N.L. Smart optimization of a friction-drilling process based on boosting ensembles. J. Manuf. Syst. 2018, 48, 108–121.

- Wang, C.; Gao, M.; He, X. Challenges in Chinese knowledge graph construction. In Proceedings of the 2015 31st IEEE International Conference on Data Engineering Workshops, Seoul, Korea, 13–17 April 2015; pp. 59–61.

- Sintek, M.; Decker, S. TRIPLE—A query, inference, and transformation language for the semantic web. In Proceedings of the International Semantic Web Conference, Sardinia, Italy, 9–12 June 2002; pp. 364–378.

- Mihalcea, R.; Csomai, A. Wikify!: Linking documents to encyclopedic knowledge. In Proceedings of the Sixteenth ACM Conference on Conference on Information and Knowledge Management, Lisbon, Portugal, 6–10 November 2007; pp. 233–242.

- Ferragina, P.; Scaiella, U. TAGME: On-the-fly annotation of short text fragments (by Wikipedia entities). In Proceedings of the 19th ACM International Conference on Information and Knowledge Management, Toronto, ON, Canada, 26–30 October 2010; pp. 1625–1628.

- Hasibi, F.; Balog, K.; Bratsberg, S.E. On the reproducibility of the TAGME entity linking system. In Proceedings of the European Conference on Information Retrieval, London, UK, 10–12 April 2016; pp. 436–449.

- Mendes, P.N.; Jakob, M.; García-Silva, A. DBpedia Spotlight: Shedding light on the web of documents. In Proceedings of the 7th International Conference on Semantic Systems, Graz, Austria, 7–9 September 2011; pp. 1–8.

- Meng, Z.; Yu, D.; Xun, E. Chinese microblog entity linking system combining Wikipedia and search engine retrieval results. In Natural Language Processing and Chinese Computing; Springer: Berlin/Heidelberg, Germany, 2014; pp. 449–456.

- Yuan, J.; Yang, Y.; Jia, Z. Entity recognition and linking in Chinese search queries. In Natural Language Processing and Chinese Computing; Springer: Cham, Switzerland, 2015; pp. 507–519.

- Xie, C.; Yang, P.; Yang, Y. Open knowledge accessing method in IoT-based hospital information system for medical record enrichment. IEEE Access 2018, 6, 15202–15211.

- He, Z. Chinese entity attributes extraction based on bidirectional LSTM networks. Int. J. Comput. Sci. Eng. 2019, 18, 65–71.

- Enhanced Entity Extraction Using Big Data Mechanics. Available online: https://link.springer.com/chapter/10.1007/978-981-13-2673-8_8 (accessed on 5 July 2019).

- Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation Classification via Convolutional Deep Neural Network. 2014, pp. 2335–2344. Available online: https://scholar.google.com.hk/scholar?hl=zh-CN&as_sdt=0%2C5&q=Relation+Classification+via+Convolutional+Deep+Neural+Network.&btnG= (accessed on 5 July 2019).

- Rink, B.; Harabagiu, S. UTD: Classifying semantic relations by combining lexical and semantic resources. In Proceedings of the 5th International Workshop on Semantic Evaluation, Los Angeles, CA, USA, 15–16 July 2010; pp. 256–259.

- Relation Classification via Convolutional Deep Neural Network. Available online: http://ir.ia.ac.cn/bitstream/173211/4797/1/Relation%20Classification%20via%20Convolutional%20Deep%20Neural%20Network.pdf (accessed on 5 July 2019).

- Mikolov, T.; Karafiát, M.; Burget, L.; Černocký, J.; Khudanpur, S. Recurrent neural network based language model. In Proceedings of the 11th Annual Conference of the International Speech Communication Association, Chiba, Japan, 26–30 September 2010; pp. 1045–1048.

- Zhang, D.; Wang, D. Relation classification via recurrent neural network. arXiv 2015, arXiv:1508.01006.

- Zhang, S.; Zheng, D.; Hu, X. Bidirectional long short-term memory networks for relation classification. In Proceedings of the 29th Pacific Asia Conference on Language, Information and Computation, Shanghai, China, 30 October–1 November 2015; pp. 73–78.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chung, J.; Gulcehre, C.; Cho, K.H. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Mintz, M.; Bills, S.; Snow, R. Distant supervision for relation extraction without labeled data. In Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP, Singapore, 2 August 2009; pp. 1003–1011.

- Riedel, S.; Yao, L.; McCallum, A. Modeling relations and their mentions without labeled text. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Barcelona, Spain, 20–24 September 2010; pp. 148–163.

- Hoffmann, R.; Zhang, C.; Ling, X. Knowledge-based weak supervision for information extraction of overlapping relations. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 541–550.

- Surdeanu, M.; Tibshirani, J.; Nallapati, R. Multi-instance multi-label learning for relation extraction. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Jeju Island, Korea, 13 July 2012; pp. 455–465.

- Zeng, D.; Liu, K.; Chen, Y. Distant supervision for relation extraction via piecewise convolutional neural networks. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1753–1762.

- Lin, Y.; Shen, S.; Liu, Z. Neural relation extraction with selective attention over instances. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 2124–2133.

- Niu, X.; Sun, X.; Wang, H. Zhishi.me - weaving Chinese linking open data. In Proceedings of the International Semantic Web Conference, Bonn, Germany, 23–27 October 2011; pp. 205–220.

- Auer, S.; Bizer, C.; Kobilarov, G. DBpedia: A nucleus for a web of open data. In The Semantic Web; Springer: Berlin/Heidelberg, Germany, 2007; pp. 722–735.

- Bollacker, K.; Evans, C.; Paritosh, P. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 9–12 June 2008; pp. 1247–1250.

- Wikidata: A Free Collaborative Knowledge Base. Available online: https://ai.google/research/pubs/pub42240 (accessed on 5 July 2019).

- Chen, W.; Zhang, Y.; Isahara, H. Chinese named entity recognition with conditional random fields. In Proceedings of the Fifth SIGHAN Workshop on Chinese Language Processing, Sydney, Australia, 22–23 July 2006; pp. 118–121.

- Rudas, I.J.; Pap, E.; Fodor, J. Information aggregation in intelligent systems: An application oriented approach. Knowl.-Based Syst. 2013, 38, 3–13.

- Felfernig, A.; Wotawa, F. Intelligent engineering techniques for knowledge bases. AI Commun. 2013, 26, 1–2.

- Martinez-Gil, J. Automated knowledge base management: A survey. Comput. Sci. Rev. 2015, 18, 1–9.

- Zhou, P.; Shi, W.; Tian, J. Attention-based bidirectional long short-term memory networks for relation classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Berlin, Germany, 7–12 August 2016; Volume 2, pp. 207–212.

- Teaching Machines to Read and Comprehend. Available online: http://papers.nips.cc/paper/5945-teaching-machines-to-read-and-comprehend (accessed on 5 July 2019).

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Xu, K.; Ba, J.; Kiros, R. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057.

| System | Language | Online API (application programming interface) | Status | Commercial |
|---|---|---|---|---|
| Wikify! | English | Yes | Active | No |
| TAGME | English | Yes | Active | No |
| Spotlight | English | Yes | Active | No |
| CMEL | Chinese | No | Updated until 2014 | No |
| Yuan et al. | Chinese | No | Updated until 2015 | No |
| CN-EL | Chinese | Yes | Active | Yes |

| Knowledge Creation | Knowledge Exploitation | Knowledge Maintenance |
|---|---|---|
| Knowledge acquisition | Knowledge reasoning | Knowledge meta-modeling |
| Knowledge representation | Knowledge retrieval | Knowledge integration |
| Knowledge storage and manipulation | Knowledge sharing | Knowledge validation |

| n | Match Word | Match Word n | POS | Relation | Word | Tuple Word |
|---|---|---|---|---|---|---|
| 0 | appearance | 2 | nz | ATT (attribute) | Audi A6 | Audi A6 - appearance |
| 1 | Audi A6 | 0 | u | RAD (right adjunct) | ’s | Audi A6 - ’s |
| 2 | fashion | 4 | n | SBV (subject-verb) | appearance | appearance - fashion |
| 3 | fashion | 4 | d | ADV (adverbial) | very | very - fashion |
| 4 | root | root | a | HED (head) | fashion | root - fashion |
| 5 | fashion | 4 | wp | WP (punctuation) | , | fashion - , |
| 6 | sufficient | 8 | n | SBV (subject-verb) | dynamics | dynamics - sufficient |
| 7 | sufficient | 8 | d | ADV (adverbial) | very | very - sufficient |
| 8 | fashion | 4 | a | COO (coordinate) | sufficient | sufficient - fashion |
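As an illustration of how a parse like the one in the table above can yield structured user evaluations, here is a hypothetical rule-based sketch. It is not the extraction procedure of Section 3.2. The rules are assumptions chosen only to fit this example: an adjective with an SBV subject is an opinion on that subject (the aspect), and a proper noun (`nz`) attached to the aspect by ATT is the entity. A clause joined by COO borrows the entity of the clause it attaches to, and ADV dependents supply a degree word. The class and function names are hypothetical.

```python
from typing import List, NamedTuple, Optional, Tuple


class Token(NamedTuple):
    word: str
    pos: str
    head: Optional[int]  # index of the head word; None marks the root (HED)
    rel: str


# The example parse, transcribed from the table above (English glosses).
SENTENCE = [
    Token("Audi A6", "nz", 2, "ATT"),
    Token("’s", "u", 0, "RAD"),
    Token("appearance", "n", 4, "SBV"),
    Token("very", "d", 4, "ADV"),
    Token("fashion", "a", None, "HED"),
    Token(",", "wp", 4, "WP"),
    Token("dynamics", "n", 8, "SBV"),
    Token("very", "d", 8, "ADV"),
    Token("sufficient", "a", 4, "COO"),
]


def dependents(tokens: List[Token], head: int, rel: str) -> List[int]:
    """Indices of the words attached to `head` by relation `rel`."""
    return [i for i, t in enumerate(tokens) if t.head == head and t.rel == rel]


def entity_of(tokens: List[Token], aspect: int) -> Optional[str]:
    """Hypothetical rule: the entity is a proper noun (pos 'nz') modifying the aspect via ATT."""
    for i in dependents(tokens, aspect, "ATT"):
        if tokens[i].pos == "nz":
            return tokens[i].word
    return None


def extract_evaluations(tokens: List[Token]) -> List[Tuple[Optional[str], str, str]]:
    """Return (entity, aspect, opinion) triples from one parsed comment."""
    results = []
    for idx, tok in enumerate(tokens):
        subjects = dependents(tokens, idx, "SBV")
        if tok.pos != "a" or not subjects:
            continue  # only adjectives with a subject express an opinion here
        aspect = subjects[0]
        entity = entity_of(tokens, aspect)
        node = idx
        # A coordinated clause (COO) borrows the entity of the clause it attaches to.
        while entity is None and tokens[node].rel == "COO" and tokens[node].head is not None:
            node = tokens[node].head
            for subj in dependents(tokens, node, "SBV"):
                entity = entity or entity_of(tokens, subj)
        degree = [tokens[i].word for i in dependents(tokens, idx, "ADV")]
        results.append((entity, tokens[aspect].word, " ".join(degree + [tok.word])))
    return results
```

On this parse, `extract_evaluations(SENTENCE)` returns `[('Audi A6', 'appearance', 'very fashion'), ('Audi A6', 'dynamics', 'very sufficient')]`. The second pair only receives its entity through the COO arc, which is why the coordination rule is needed for this example.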

| Tag of Relationship Types | Description |
|---|---|
| ATT | attribute |
| RAD | right adjunct |
| SBV | subject-verb |
| ADV | adverbial |
| HED | head |
| COO | coordinate |

| GRU units per layer | 200 | 200 | 200 | 250 | 250 | 250 | 300 | 300 | 300 |
|---|---|---|---|---|---|---|---|---|---|
| Hidden layers | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 |
| Batch size 10 | 0.77 | 0.78 | 0.75 | 0.783 | 0.79 | 0.769 | 0.71 | 0.73 | 0.691 |
| Batch size 20 | 0.768 | 0.776 | 0.74 | 0.76 | 0.77 | 0.74 | 0.72 | 0.74 | 0.71 |
| Batch size 30 | 0.75 | 0.768 | 0.74 | 0.74 | 0.77 | 0.73 | 0.72 | 0.77 | 0.69 |
| Batch size 40 | 0.72 | 0.74 | 0.73 | 0.71 | 0.74 | 0.69 | 0.69 | 0.74 | 0.68 |
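Reading the grid above as a search over three hyperparameters, a short sketch can locate the best cell. The table does not name its metric, so the code simply assumes that higher is better. The constant and function names are hypothetical, and the values are copied from the table.

```python
from typing import Dict, List, Tuple

GRU_UNITS = [200, 200, 200, 250, 250, 250, 300, 300, 300]
HIDDEN_LAYERS = [1, 2, 3, 1, 2, 3, 1, 2, 3]
# One row per batch size, one entry per (GRU units, hidden layers) column.
SCORES: Dict[int, List[float]] = {
    10: [0.77, 0.78, 0.75, 0.783, 0.79, 0.769, 0.71, 0.73, 0.691],
    20: [0.768, 0.776, 0.74, 0.76, 0.77, 0.74, 0.72, 0.74, 0.71],
    30: [0.75, 0.768, 0.74, 0.74, 0.77, 0.73, 0.72, 0.77, 0.69],
    40: [0.72, 0.74, 0.73, 0.71, 0.74, 0.69, 0.69, 0.74, 0.68],
}


def best_setting(scores: Dict[int, List[float]]) -> Tuple[float, int, int, int]:
    """Return (score, batch size, GRU units per layer, hidden layers) of the top cell."""
    return max(
        (score, batch, GRU_UNITS[col], HIDDEN_LAYERS[col])
        for batch, row in scores.items()
        for col, score in enumerate(row)
    )
```

`best_setting(SCORES)` returns `(0.79, 10, 250, 2)`. This is the same batch size, GRU size and layer count listed in the parameter settings table, so the chosen configuration is the maximum of this grid.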

| Parameter | Value |
|---|---|
| Word dimension | 100 |
| Position dimension | 5 |
| Dropout probability | 0.5 |
| Batch size | 10 |
| BGRU (bidirectional gated recurrent unit) layer number | 2 |
| GRU (gated recurrent unit) size of each layer | 250 |

| Model | 1 | 2 | 3 | 4 | Mean |
|---|---|---|---|---|---|
| BLSTM (bidirectional long short-term memory) | 0.752 | 0.767 | 0.76 | 0.75 | 0.757 |
| BGRU (bidirectional gated recurrent unit) | 0.785 | 0.781 | 0.776 | 0.77 | 0.778 |

| All Entities | Extracted | Correct | F1-Measure |
|---|---|---|---|
| 1428 | 1400 | 1400 | 0.99 |

| Relation | Ground Truth | Extracted | Correct | Precision | F1-Measure |
|---|---|---|---|---|---|
| Same Level | 230 | 203 | 168 | 0.83 | 0.78 |
| Homology | 150 | 102 | 74 | 0.73 | 0.59 |
| Subordinate | 92 | 63 | 49 | 0.78 | 0.63 |
| Unknown | 228 | 192 | 145 | 0.76 | 0.69 |
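The precision and F1 columns in the two result tables above follow from the raw counts: precision = correct / extracted, recall = correct / ground truth, and F1 is their harmonic mean. A short sketch recomputes them. `COUNTS` and `prf` are hypothetical names, and the counts are copied from the tables.

```python
from typing import Dict, Tuple

# (ground truth, extracted, correct) counts copied from the two tables above.
COUNTS: Dict[str, Tuple[int, int, int]] = {
    "Entities": (1428, 1400, 1400),
    "Same Level": (230, 203, 168),
    "Homology": (150, 102, 74),
    "Subordinate": (92, 63, 49),
    "Unknown": (228, 192, 145),
}


def prf(ground_truth: int, extracted: int, correct: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 (harmonic mean of precision and recall)."""
    precision = correct / extracted if extracted else 0.0
    recall = correct / ground_truth if ground_truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


for name, counts in COUNTS.items():
    p, r, f = prf(*counts)
    print(f"{name:12s} P={p:.2f} R={r:.2f} F1={f:.2f}")
```

Rounded to two decimals, the printed precision and F1 match the tables. The implied recall, which the tables do not list, ranges from 0.49 (Homology) to 0.73 (Same Level) for relations and is 0.98 for entities. This explains why every relation F1 sits below its precision.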

| Texts | Triples |
|---|---|
| 53,200 | 30,500 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, M.; Wang, H.; Guo, J.; Liu, D.; Xie, C.; Liu, Q.; Cheng, Z. Construction of an Industrial Knowledge Graph for Unstructured Chinese Text Learning. Appl. Sci. 2019, 9, 2720. https://doi.org/10.3390/app9132720
