Data Augmentation for Speaker Identification under Stress Conditions to Combat Gender-Based Violence

Abstract

1. Introduction

1.1. Gender-Based Violence

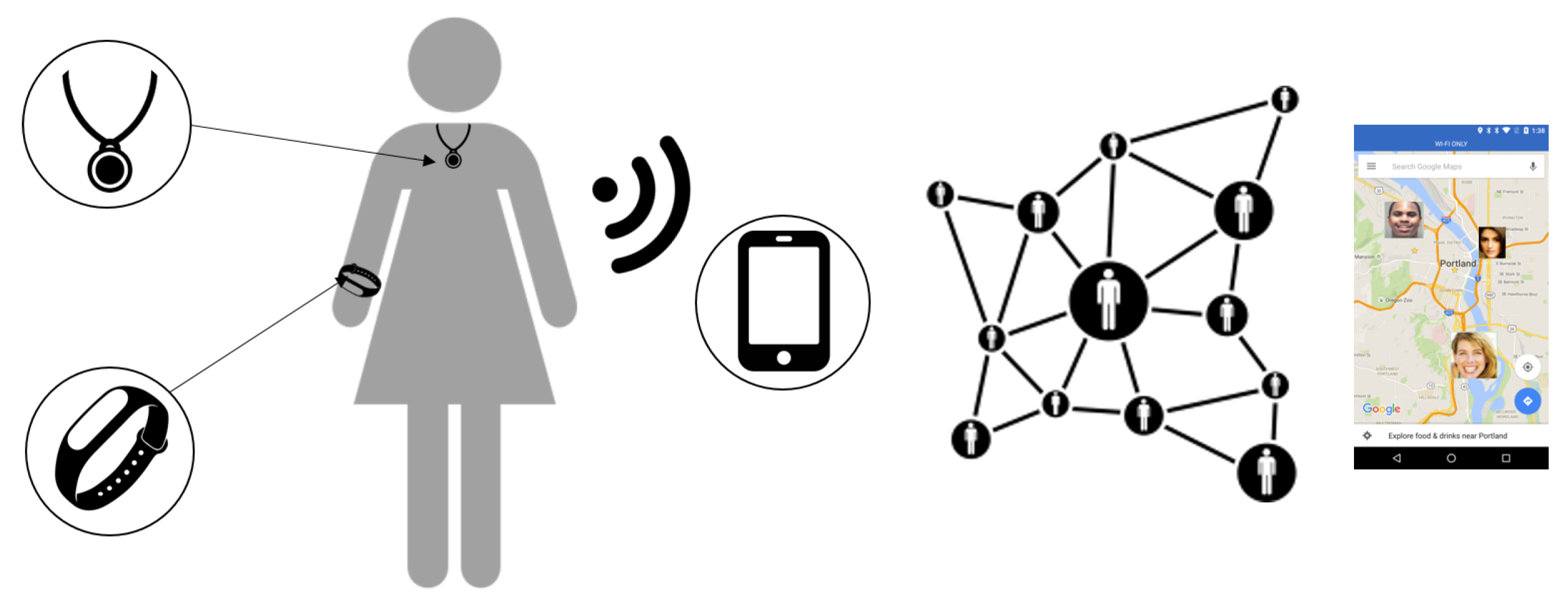

1.2. UC3M4Safety and Bindi

1.3. Technological Challenges

1.4. Contributions

2. Speaker Identification Related Work

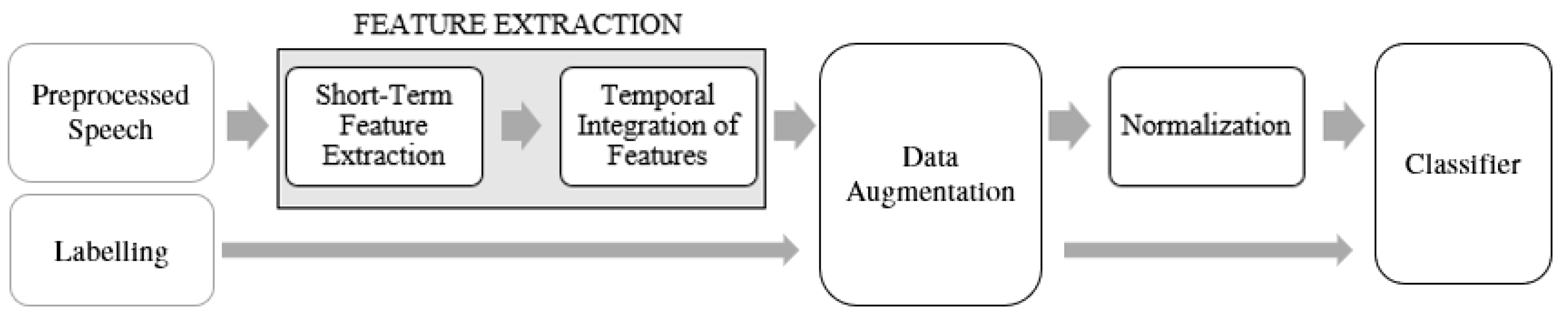

2.1. Feature Extraction

2.2. Data Augmentation

2.3. Classifiers

3. Methods

3.1. Corpus Database

3.2. Data Preprocessing

3.3. Automatic Stress Labelling

3.4. Feature Extraction

3.5. Data Augmentation

3.6. Normalization

4. Experiments

4.1. Balanced Data

4.2. Match and Mismatch Conditions

4.3. Pitch and Speed Modifications

4.4. Preliminary Experiments

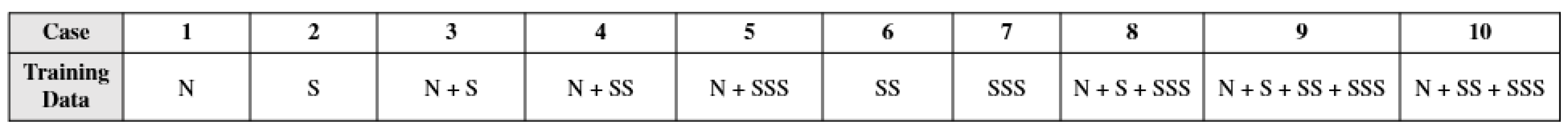

4.5. Synthetic Stress Combinations

5. Conclusions and Future Work

Future Work

- Our target in this research is a Speaker Identification task, a multiclass problem. However, the device to be built in Bindi requires a Speaker Verification system, so the next step would be to recast the system in a binary setting. The two approaches are not directly comparable, but we believe that the problems and solutions of one carry over to the other.

- Although stress seems to be an emotional condition that usually precedes more intense emotions, we aim to find or record a database that includes the emotions present in speech during an assault situation, such as panic, anxiety or fear, to work with.

- Finding techniques to make the system more robust by degrading the audio as if it had been recorded in a real environment. To simulate real-world situations in which the recorded voice is not clean, we could add noise to the same database and analyze its effect, using either sounds recorded in outdoor and indoor environments or white/pink noise.

- Further analyzing the differences between neutral and stressed speech to find new modifications that transform neutral speech into appropriate synthetically stressed speech.

- Implementing new methods for recording stressed speech in the training phase using Bindi, such as Stroop Effect games [45] in which the speaker would experience stress, would provide genuinely stressed samples for the training stage.

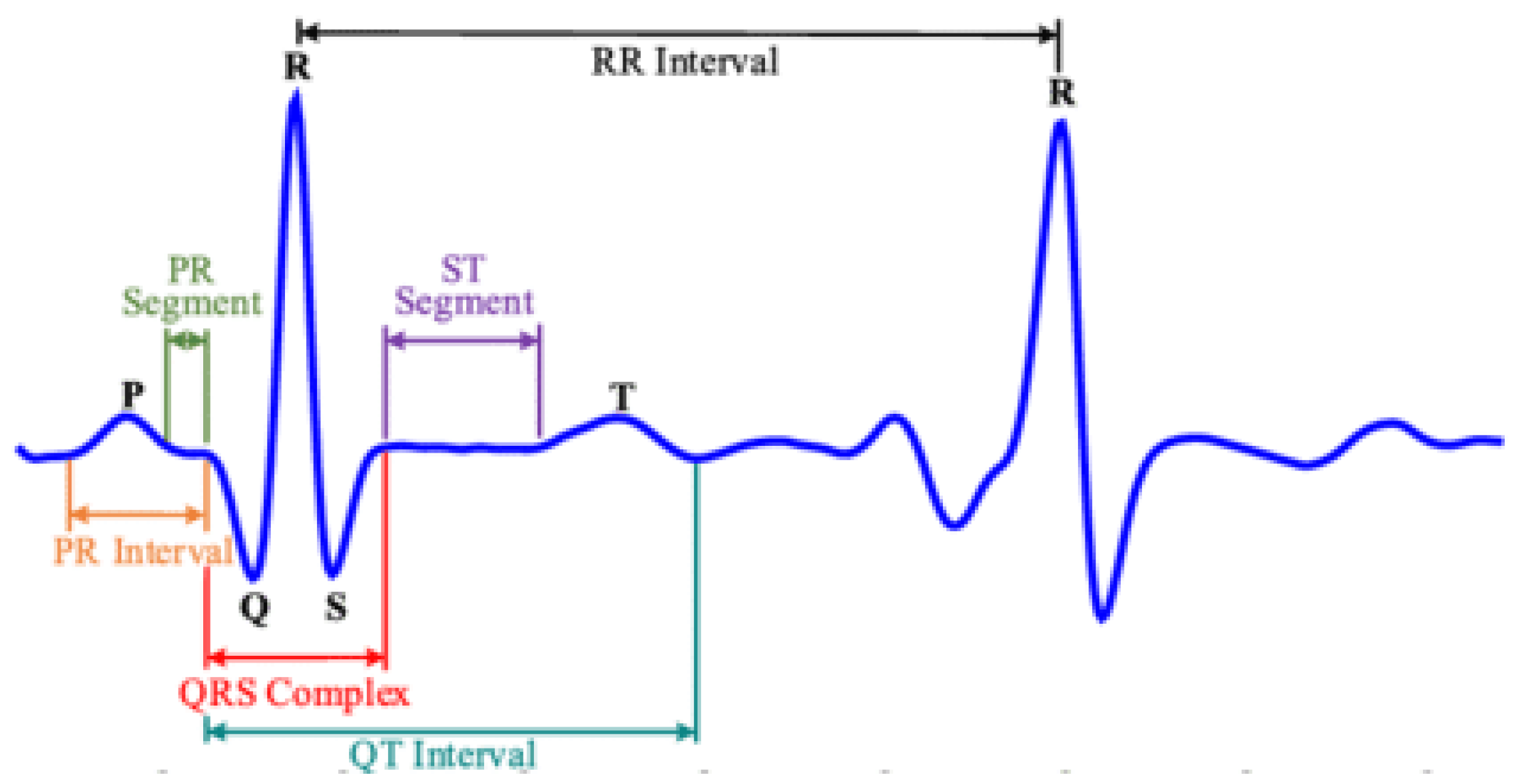

- Heart Rate Variability (HRV) is known to have a strong relationship with stress. To investigate this further, we could look for correlations between HRV and stress and add this additional biometric feature to the stress-robust SI system.

- With the use of data augmentation techniques we have obtained a much larger database, and we could therefore employ more powerful Deep Learning algorithms in the future, provided the device employed is able to run them in real time.
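
The noise-degradation idea in the list above (adding white or pink noise to the clean recordings) could be prototyped along the following lines. This is a minimal sketch under stated assumptions, not the authors' method: the function names, the 16 kHz sample rate, the test tone and the 10 dB signal-to-noise ratio (SNR) are all illustrative, and pink noise is approximated here by shaping the spectrum of white noise with a 1/sqrt(f) weighting.

```python
import numpy as np


def white_noise(n_samples, rng):
    """Gaussian white noise (flat power spectrum)."""
    return rng.standard_normal(n_samples)


def pink_noise(n_samples, rng):
    """Approximate pink noise: white noise whose power falls off as 1/f."""
    spectrum = np.fft.rfft(rng.standard_normal(n_samples))
    freqs = np.fft.rfftfreq(n_samples)
    freqs[0] = freqs[1]  # avoid dividing by zero at the DC bin
    spectrum /= np.sqrt(freqs)  # amplitude ~ 1/sqrt(f)  =>  power ~ 1/f
    return np.fft.irfft(spectrum, n=n_samples)


def add_noise(signal, noise, snr_db):
    """Mix `noise` into `signal`, scaled so the result has the requested SNR in dB."""
    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    target_noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    return signal + noise * np.sqrt(target_noise_power / noise_power)


# Hypothetical usage: a 1 s, 220 Hz tone stands in for a clean utterance.
rng = np.random.default_rng(0)
sample_rate = 16000  # assumed sample rate, not taken from the paper
t = np.arange(sample_rate) / sample_rate
clean = np.sin(2 * np.pi * 220 * t)
noisy = add_noise(clean, pink_noise(len(clean), rng), snr_db=10.0)
```

Sweeping `snr_db` over a range of values and re-running the identification experiments on the degraded copies would give one way to measure how sensitive the system is to noise; recorded indoor or outdoor ambiences could be passed as `noise` in the same way.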

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Rituerto-González, E.; Gallardo-Antolín, A.; Peláez-Moreno, C. Speaker Recognition under Stress Conditions. In Proceedings of IberSPEECH 2018, Barcelona, Spain, 21–23 November 2018; pp. 15–19.

- World Health Organization. Global and Regional Estimates of Violence against Women: Prevalence and Health Effects of Intimate Partner Violence and Non-Partner Sexual Violence; World Health Organization: Geneva, Switzerland, 2013.

- UC3M4Safety—Multidisciplinary Team for Detecting, Preventing and Combating Violence against Women; University Carlos III of Madrid: Leganes, Spain, 2017.

- Miranda, J.A.; Canabal, M.F.; Portela-García, M.; Lopez-Ongil, C. Embedded Emotion Recognition: Autonomous Multimodal Affective Internet of Things; CEUR Workshop: Tenerife, Spain, 2018; Volume 2208, pp. 22–29.

- Miranda, J.A.; Canabal, M.F.; Lanza, J.M.; Portela-García, M.; López-Ongil, C.; Alcaide, T.R. Meaningful Data Treatment from Multiple Physiological Sensors in a Cyber-Physical System. In Proceedings of the DCIS 2017: XXXII Conference on Design of Circuits and Integrated Systems, Barcelona, Spain, 22–24 November 2017.

- Miranda-Calero, J.A.; Marino, R.; Lanza-Gutierrez, J.M.; Riesgo, T.; Garcia-Valderas, M.; Lopez-Ongil, C. Embedded Emotion Recognition within Cyber-Physical Systems using Physiological Signals. In Proceedings of the DCIS 2018: XXXIII Conference on Design of Circuits and Integrated Systems, Lyon, France, 14–16 November 2018.

- Minguez-Sanchez, A. Detección de Estrés en Señales de voz. Bachelor’s Thesis, University Carlos III Madrid, Madrid, Spain, 2017.

- Schuller, B.W. Speech emotion recognition: Two decades in a nutshell, benchmarks, and ongoing trends. Commun. ACM 2018, 61, 90–99.

- Anagnostopoulos, C.N.; Iliou, T.; Giannoukos, I. Features and classifiers for emotion recognition from speech: A survey from 2000 to 2011. Artif. Intell. Rev. 2015, 43, 155–177.

- Noroozi, F.; Kaminska, D.; Sapinski, T.; Anbarjafari, G. Supervised Vocal-Based Emotion Recognition Using Multiclass Support Vector Machine, Random Forests, and Adaboost. J. Audio Eng. Soc. 2017, 65, 562–572.

- Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Provost, E.M.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359.

- Haq, P.J.S. Surrey Audio-Visual Expressed Emotion (SAVEE) Database; University of Surrey: Guildford, UK, 2014.

- Vryzas, N.; Kotsakis, R.; Liatsou, A.; Dimoulas, C.; Kalliris, G. Speech Emotion Recognition for Performance Interaction. J. Audio Eng. Soc. 2018, 66, 457–467.

- Vryzas, N.; Vrysis, L.; Kotsakis, R.; Dimoulas, C. Speech Emotion Recognition Adapted to Multimodal Semantic Repositories. In Proceedings of the 2018 13th International Workshop on Semantic and Social Media Adaptation and Personalization (SMAP), Zaragoza, Spain, 6–7 September 2018; pp. 31–35.

- Kahou, S.E.; Bouthillier, X.; Lamblin, P.; Gülçehre, Ç.; Michalski, V.; Konda, K.R.; Jean, S.; Froumenty, P.; Dauphin, Y.; Boulanger-Lewandowski, N.; et al. EmoNets: Multimodal deep learning approaches for emotion recognition in video. J. Multimodal User Interfaces 2016, 10, 99–111.

- Hansen, J.H.; Patil, S. Speaker Classification. Speech Under Stress: Analysis, Modeling and Recognition; Springer: Berlin/Heidelberg, Germany, 2007; pp. 108–137.

- Murray, I.; Arnott, J.L. Toward the simulation of emotion in synthetic speech: A review of the literature on human vocal emotion. J. Acoust. Soc. Am. 1993, 93, 1097–1108.

- Wu, W.; Zheng, F.; Xu, M.; Bao, H. Study on speaker verification on emotional speech. In Proceedings of the Ninth International Conference on Spoken Language Processing, Pittsburgh, PA, USA, 17–21 September 2006.

- Hansen, J.H.L. Speech under Simulated and Actual Stress (SUSAS) Database; Linguistic Data Consortium: Philadelphia, PA, USA, 1999.

- Aguiar, A.; Kaiseler, M.; Cunha, M.; Meinedo, H.; Almeida, P.R.; Silva, J. VOCE Corpus: Ecologically Collected Speech Annotated with Physiological and Psychological Stress Assessments. In Proceedings of the LREC 2014: 9th International Conference on Language Resources and Evaluation, Reykjavik, Iceland, 26–31 May 2014.

- Ikeno, A.; Varadarajan, V.; Patil, S.; Hansen, J.H.L. UT-Scope: Speech under Lombard Effect and Cognitive Stress. In Proceedings of the 2007 IEEE Aerospace Conference, Big Sky, MT, USA, 3–10 March 2007; pp. 1–7.

- Steeneken, H.J.M.; Hansen, J.H.L. Speech under stress conditions: Overview of the effect on speech production and on system performance. In Proceedings of the 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing, Phoenix, AZ, USA, 15–19 March 1999; Volume 4, pp. 2079–2082.

- Poddar, A.; Sahidullah, M.; Saha, G. Speaker verification with short utterances: A review of challenges, trends and opportunities. IET Biom. 2018, 7, 91–101.

- Reynolds, D.A.; Quatieri, T.F.; Dunn, R.B. Speaker Verification Using Adapted Gaussian Mixture Models. Digit. Signal Process. 2000, 10, 19–41.

- Campbell, J.P. Speaker recognition: A tutorial. Proc. IEEE 1997, 85, 1437–1462.

- Li, Y.; Zhao, Y. Recognizing Emotions in Speech Using Short-term and Long-term Features. In Proceedings of the International Conference on Spoken Language Processing, Sydney, Australia, 30 November–4 December 1998; Volume 6, pp. 2255–2258.

- Senthil Raja, G.; Dandapat, S. Speaker recognition under stressed condition. Int. J. Speech Technol. 2010, 13, 141–161.

- Zheng, N.; Lee, T.; Ching, P.C. Integration of Complementary Acoustic Features for Speaker Recognition. IEEE Signal Process. Lett. 2007, 14, 181–184.

- Trigeorgis, G.; Ringeval, F.; Brueckner, R.; Marchi, E.; Nicolaou, M.A.; Schuller, B.; Zafeiriou, S. Adieu features? End-to-end speech emotion recognition using a deep convolutional recurrent network. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5200–5204.

- Zhang, S.; Zhang, S.; Huang, T.; Gao, W. Speech Emotion Recognition Using Deep Convolutional Neural Network and Discriminant Temporal Pyramid Matching. IEEE Trans. Multimed. 2018, 20, 1576–1590.

- Simard, P.Y.; Steinkraus, D.; Platt, J.C. Best practices for convolutional neural networks applied to visual document analysis. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, UK, 3–6 August 2003; pp. 958–963.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Rebai, I.; BenAyed, Y.; Mahdi, W.; Lorré, J.P. Improving speech recognition using data augmentation and acoustic model fusion. Procedia Comput. Sci. 2017, 112, 316–322.

- Meuwly, D.; Drygajlo, A. Forensic speaker recognition based on a Bayesian framework and Gaussian mixture modelling (GMM). In Proceedings of A Speaker Odyssey: The Speaker Recognition Workshop, Crete, Greece, 18–22 June 2001; pp. 145–150.

- Campbell, W.M.; Sturim, D.E.; Reynolds, D.A. Support vector machines using GMM supervectors for speaker verification. IEEE Signal Process. Lett. 2006, 13, 308–311.

- Abdalmalak, K.A.; Gallardo-Antolín, A. Enhancement of a text-independent speaker verification system by using feature combination and parallel structure classifiers. Neural Comput. Appl. 2018, 29, 637–651.

- Lei, Y.; Scheffer, N.; Ferrer, L.; McLaren, M. A novel scheme for speaker recognition using a phonetically-aware deep neural network. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 1695–1699.

- Yu, D.; Seltzer, M.L.; Li, J.; Huang, J.; Seide, F. Feature Learning in Deep Neural Networks: A Study on Speech Recognition Tasks. arXiv 2013, arXiv:1301.3605.

- He, R.; Wang, K.; Li, Q.; Yuan, Y.; Zhao, N.; Liu, Y.; Zhang, H. A Novel Method for the Detection of R-peaks in ECG Based on K-Nearest Neighbors and Particle Swarm Optimization. EURASIP J. Adv. Signal Process. 2017, 2017, 82.

- Spielberger, C.D.; Gorsuch, R.L.; Lushene, P.R.; Vagg, P.R.; Jacobs, G.A. State-Trait Anxiety Inventory (STAI); Consulting Psychologists Press: Palo Alto, CA, USA, 1968.

- Brookes, M. Voicebox: Speech Processing Toolbox for Matlab [Software]. Available online: www.ee.ic.ac.uk/hp/staff/dmb/voicebox/voicebox (accessed on 4 June 2019).

- Tirumala, S.S.; Shahamiri, S.R.; Garhwal, A.S.; Wang, R. Speaker identification features extraction methods: A systematic review. Expert Syst. Appl. 2017, 90, 250–271.

- Bittner, R.; Humphrey, E.; Bello, J. PySOX: Leveraging the Audio Signal Processing Power of SOX in Python. In Proceedings of the International Conference on Music Information Retrieval (ISMIR-16) Conference Late Breaking and Demo Papers, New York, NY, USA, 8–11 August 2016.

- Zhang, A. Speech Recognition Library for Python (Version 3.8) [Software]. 2017. Available online: https://github.com/Uberi/speech_recognition (accessed on 4 June 2019).

- Stroop, J.R. Studies of Interference in Serial Verbal Reactions. J. Exp. Psychol. Gen. 1992, 121, 15–23.

| Set Label | Neutral | Stressed | Total |
|---|---|---|---|
| Set 1 | 1389 | 3989 | 5378 |
| Set 2 | 1716 | 4858 | 6574 |
| Total | 3105 | 8847 | 11,952 |

| Row Index | Feature |
|---|---|
| 0–12 | Mean MFCC |
| 13–25 | Standard Deviation MFCC |
| 26–28 | Mean first three formants |
| 29–31 | Standard Deviation first three formants |
| 32 | Mean Pitch |
| 33 | Standard Deviation Pitch |
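
The layout in the table above (34 entries per utterance: means and standard deviations of 13 MFCCs, three formants and pitch) can be illustrated with a short sketch. It assumes the frame-level MFCC, formant and pitch tracks have already been extracted by some front end; the function name `utterance_features`, the array shapes and the use of the population standard deviation are assumptions made for this example, not details confirmed by the source.

```python
import numpy as np


def utterance_features(mfcc, formants, pitch):
    """Stack per-utterance statistics in the row order of the feature table.

    mfcc:     array of shape (n_frames, 13)
    formants: array of shape (n_frames, 3), first three formants
    pitch:    array of shape (n_frames,)
    """
    mfcc = np.asarray(mfcc, dtype=float)
    formants = np.asarray(formants, dtype=float)
    pitch = np.asarray(pitch, dtype=float)
    return np.concatenate([
        mfcc.mean(axis=0),      # rows 0-12: mean MFCC
        mfcc.std(axis=0),       # rows 13-25: standard deviation MFCC
        formants.mean(axis=0),  # rows 26-28: mean of first three formants
        formants.std(axis=0),   # rows 29-31: standard deviation of formants
        [pitch.mean()],         # row 32: mean pitch
        [pitch.std()],          # row 33: standard deviation pitch
    ])


# Hypothetical usage with random frame-level tracks standing in for real audio.
rng = np.random.default_rng(0)
n_frames = 50
vec = utterance_features(
    rng.standard_normal((n_frames, 13)),
    rng.uniform(300.0, 3000.0, size=(n_frames, 3)),
    rng.uniform(80.0, 300.0, size=n_frames),
)
```

Collapsing each utterance to fixed-length statistics like this yields one vector per sample regardless of duration, which is what a conventional classifier expects as input.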

| Training Set | Test Set | Mean (%) | Std (%) |
|---|---|---|---|
| Neutral | Neutral | 96.73 | 0.33 |
| Neutral | Stressed | 79.21 | 0.90 |
| Stressed | Stressed | 95.87 | 0.28 |
| Stressed | Neutral | 90.89 | 0.49 |
| Mixed | Mixed | 96.05 | 0.12 |

| Case | Set 1 Mean | Set 1 Std | Set 1 + 2 Mean | Set 1 + 2 Std |
|---|---|---|---|---|
| 1 | 89.71 | 0.56 | 78.55 | 0.60 |
| 2 | 98.59 | 0.16 | 97.37 | 0.21 |
| 3 | 98.48 | 0.23 | 97.21 | 0.26 |
| 4 | 89.97 | 0.39 | 80.46 | 0.53 |
| 5 | 99.93 | 0.05 | 99.16 | 0.11 |
| 6 | 89.72 | 0.53 | 78.19 | 0.71 |
| 7 | 99.88 | 0.07 | 99.21 | 0.13 |
| 8 | 99.91 | 0.07 | 99.45 | 0.08 |
| 9 | 99.94 | 0.06 | 99.22 | 0.11 |
| 10 | 99.91 | 0.07 | 98.97 | 0.14 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rituerto-González, E.; Mínguez-Sánchez, A.; Gallardo-Antolín, A.; Peláez-Moreno, C. Data Augmentation for Speaker Identification under Stress Conditions to Combat Gender-Based Violence. Appl. Sci. 2019, 9, 2298. https://doi.org/10.3390/app9112298
