Abstract

This study proposes a novel quality function deployment (QFD) design methodology based on customers’ emotions conveyed by facial expressions. Recent advances in pattern recognition, and in face recognition techniques in particular, have fostered cross-fertilization between this field and others, such as product design and human-computer interaction. In particular, current technologies for monitoring human emotions have supported the birth of advanced emotional design techniques, whose main focus is to feed users’ emotional feedback into the design of novel products. As quality function deployment aims at transforming the voice of customers into engineering features of a product, it appears to be an appropriate and promising framework in which to embed users’ emotional feedback through new emotional design methodologies, such as facial expression recognition. Accordingly, the present methodology consists of interviewing the user while acquiring his/her face with a depth camera (which provides three-dimensional (3D) data), classifying the facial information into different emotions with a support vector machine classifier, and assigning weights to the customers’ needs based on the detected facial expressions. The proposed method has been applied to a case study in the context of agriculture and validated by the project consortium. The approach appears sound and capable of capturing the interviewee’s unconscious feedback.

1. Introduction

Donald A. Norman, in his book “Emotional Design: Why We Love (or Hate) Everyday Things” and in other publications, has stated that a design succeeds only when the final product makes customers buy it, use it, enjoy it, and spread the word about it to others [1,2]. He argued that designers need to ensure that the design satisfies people’s needs in terms of function, usability, and the ability to deliver emotional satisfaction, pride, and delight. To design a product that elicits positive emotions, it is necessary to define, and to be able to predict, the target users’ emotional responses early in the design process.

Emotional design emerged with the aim of promoting positive emotions [1] or pleasure in users [3,4] through the design properties of products and services [5]. According to Van Gorp and Adams [6], since emotions influence decision making, affect memory and attention, and generate meaning, they can deeply affect the overall user experience. The capability of understanding users’ feelings and emotions is an essential part of the design process [7], and for this reason, more and more designers and companies recognize user experience (UX) as a significant advantage in product design [8,9,10]. Studies that investigated specific constructs of UX and their interrelations, such as hedonic quality and emotion [11], often collect and analyse users’ ratings of these constructs together with information such as users’ preferences, usage context, and product features [10]. In the literature, UX is considered a multifaceted phenomenon that involves subjective feelings (changes in core affect) [12] as well as behavioural, expressive, and physiological reactions [13,14]. Today, a number of methods, such as quality function deployment (QFD) [15], semantic environment description (SMB or SD) [16], conjoint analysis [17], the Kano model (Sauerwein et al., 1996) [18], and Kansei engineering [19,20], are used in practical applications to capture customers’ considerations and feelings about products and to translate these emotional aspects into concrete product design [21,22,23,24,25,26,27,28].

Indeed, in the design of a product or service, it is important to translate users’ emotional features into product characteristics. Emotional engineering is a recent discipline that has introduced methods for predicting, and possibly controlling, users’ emotional responses to product attributes so that these attributes can be designed and engineered accordingly [29]. The three main groups of methods for capturing emotional feedback and using it in product classification are questioning methods, physiological measurements, and observing methods. Questioning methods aim to capture the customer’s written or oral emotional feedback in the form of answers to specific questions. Closed questions allow only a limited number of answers, usually five to seven, placed on an increasing or decreasing Likert scale; they are restrictive and require intensive conscious cognitive processing of affective reactions. Open questions allow free, creative answers. Physiological measurements are based on the quasi-continuous acquisition of central signals (electroencephalography) or peripheral physiological signals (electromyography, blood volume pulse, electrodermal activity, etc.). Observing (or behavioural) methods rely on objective or subjective insights gained from careful observation of individuals experiencing the product. Measures of expressive behaviour include the description of facial expressions, bodily movements, gestures, and posture [30], as well as nonverbal vocal parameters. Pattern recognition of facial expressions can be used for emotion analysis, but it cannot be the only measure of emotion, as a large amount of emotional information is conveyed through channels other than the face [31]. Therefore, these methods are usually used as complementary tools in, for example, Kansei studies.

The aim of the methodology proposed in this paper is to define 1) how to capture human emotions so that consumers’ emotional requirements can be identified; 2) how to identify the relationships between products and emotional needs; and 3) how to improve products so that new products better fit consumers’ emotional needs. The proposed approach has been specifically applied to QFD, a quality tool that improves customer satisfaction by translating customer requirements into appropriate technical measures [32,33]. The method relies on recent three-dimensional (3D) face analysis techniques based on the study of the geometrical shape of the facial surface [34,35].

The suggested methodology has been applied in the design of an e-learning path to be used by farmers who want to experience new technologies within the context of the European Erasmus+ project “Farmer 4.0”.

2. Method

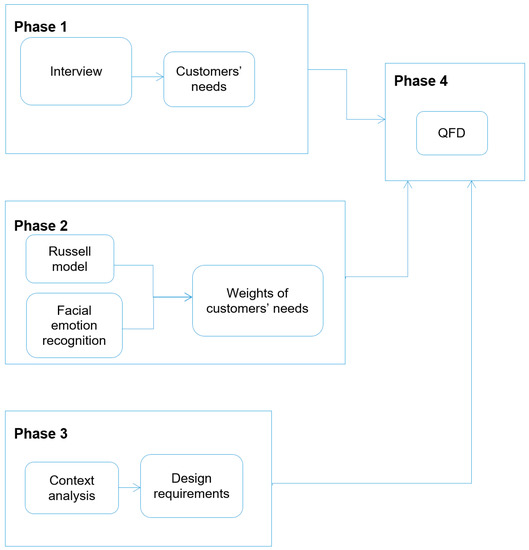

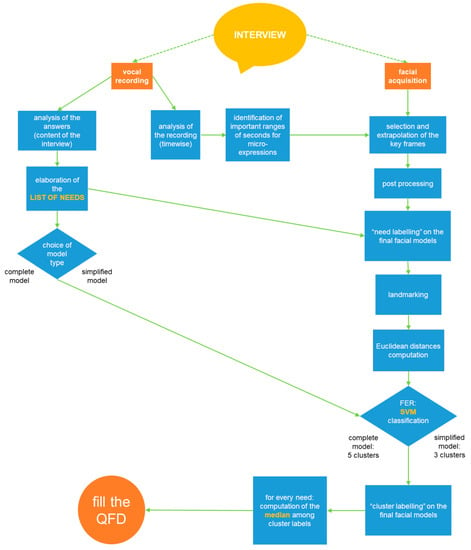

The QFD approach we propose in this work is a four-phase process that introduces a novel method for assigning weights to customers’ needs. The first phase identifies the customer’s needs through an interview. The second phase defines the weights to be assigned to these needs through the adoption of Russell’s model and emotion recognition. The third phase defines the design requirements, namely the services to be offered in response to customers’ needs. The fourth phase is the application of QFD. The method is schematized in Figure 1.

Figure 1.

Layout of the proposed quality function deployment (QFD) methodology.

Specific numerical values were assigned by matching the answers given by a potential customer in a tailored interview with the results of a facial expression recognition (FER) procedure applied to the customer’s face, acquired with a depth camera during the interview. In the proposed method, each interview is both audio-recorded and recorded with the depth camera.

The depth camera measures distance using coded-light (or structured-light) technology, which projects a known pattern onto the surface of an object, such as a face, and infers the depth of the surface from the way in which the pattern is deformed. This technology is designed specifically for close-range applications, and this kind of sensor works best within a range of 0.2–1.5 m. The projected pattern is infrared (IR) and therefore outside the visible spectrum. Both the depth video format and the frame rate are adjustable; typically, the recommended setting is the highest available resolution (640 × 480) at 30 frames per second (FPS). Each frame yields a depth map.
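To make the notion of a depth map concrete, the sketch below back-projects each valid pixel into a 3D point with a standard pinhole camera model. This step is an illustrative assumption and is not described in the method: the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical parameters that would come from the camera's calibration, depth is assumed to be in millimetres with missing returns encoded as zero, and the function name `depth_to_points` is ours.

```python
import numpy as np

def depth_to_points(depth_mm, fx, fy, cx, cy, z_min=200.0, z_max=1500.0):
    """Back-project a depth map (millimetres) into an N x 3 array of 3D points.

    Assumption: zero depth marks a missing coded-light return. Pixels with zero
    depth or outside [z_min, z_max] are discarded; the defaults mirror the
    0.2-1.5 m working range. fx, fy, cx, cy are hypothetical pinhole intrinsics.
    """
    depth = np.asarray(depth_mm, dtype=float)
    v, u = np.indices(depth.shape)  # v = row index, u = column index
    valid = (depth > 0) & (depth >= z_min) & (depth <= z_max)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx    # pinhole model: X = (u - cx) * Z / fx
    y = (v[valid] - cy) * z / fy    # pinhole model: Y = (v - cy) * Z / fy
    return np.column_stack([x, y, z])
```

Restricting points to the 0.2–1.5 m band discards both missing returns and background pixels lying beyond the range in which the sensor is reliable.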

Emotions were monitored and evaluated through facial expressions in order to measure the user’s emotional involvement in every topic, so that weights could be assigned according to a quantification of the user’s engagement.

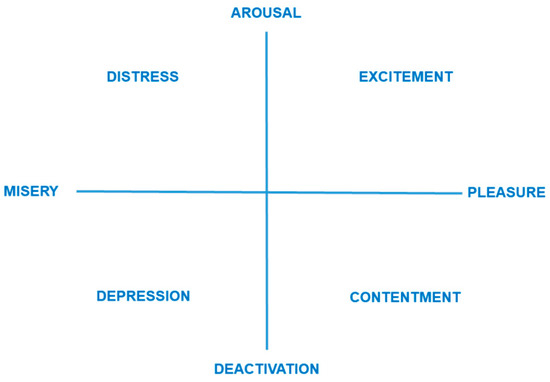

In a traditional QFD, the weights of the needs are usually attributed on a five-point Likert scale (1 to 5). Unlike the standard approach, users were not asked to rate the importance of their needs through a questionnaire; instead, the degree of importance was obtained as the output of the facial expression recognition algorithm. We therefore searched the literature for a model that matches weights (numerical values or degrees) to specific emotions, especially from the perspective of user involvement. Russell proposed an emotional model, the “circumplex model” shown in Figure 2, which can be discretized because it is placed on a Cartesian plane [36,37]. The x-axis quantifies the positivity/negativity of the emotion (valence); the y-axis quantifies emotional activation and involvement.

Figure 2.

The eight affect concepts elaborated by Russell [36].

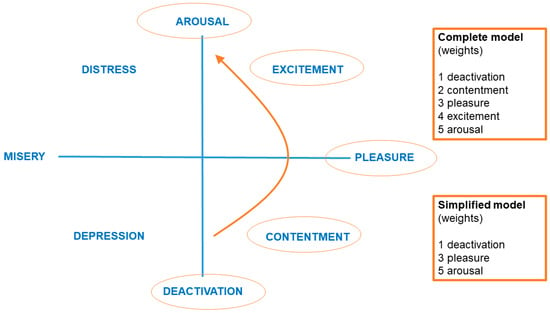

Considering the typical emotional tone of a professional interview about a product to be conceptualized, we considered only the “positive quadrants” of the model, namely the first and the fourth. Weights 1, 2, 3, 4, and 5 were assigned to the emotions “deactivation”, “contentment”, “pleasure”, “excitement”, and “arousal”, respectively, according to the circumplex model. We also considered a simplified model with weights 1, 3, and 5 assigned to “deactivation”, “pleasure”, and “arousal”, respectively, which could be adopted instead of the complete one depending on the degree of emotional involvement of the scenario, the topic, and the interviewed person. The two models are shown in Figure 3.

Figure 3.

Chosen emotions from Russell’s model for the QFD and the two weight models.
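The two weight models can be encoded as look-up tables. In the method the emotion class comes directly from the classifier; purely for illustration, the sketch below assumes that a point on the circumplex is available as (valence, activation) coordinates and snaps it to the nearest anchor emotion in the positive half-plane. The anchor angles (deactivation at -90°, contentment at -45°, pleasure at 0°, excitement at 45°, arousal at 90°) are our assumption based on the 45° spacing of Russell’s eight affect concepts, and `emotion_weight` is a hypothetical name.

```python
import math

# Assumed anchor angles (degrees, counter-clockwise from the positive valence
# axis) and QFD weights; only the first and fourth quadrants are modelled.
COMPLETE_MODEL = {"deactivation": (-90, 1), "contentment": (-45, 2),
                  "pleasure": (0, 3), "excitement": (45, 4), "arousal": (90, 5)}
SIMPLIFIED_MODEL = {"deactivation": (-90, 1), "pleasure": (0, 3), "arousal": (90, 5)}

def emotion_weight(valence, activation, model=COMPLETE_MODEL):
    """Snap a (valence, activation) point to the nearest modelled emotion.

    Returns (emotion name, weight). Points with negative valence, or the
    neutral origin, fall outside the modelled quadrants and raise ValueError.
    """
    if valence < 0 or (valence == 0 and activation == 0):
        raise ValueError("point lies outside the positive quadrants")
    angle = math.degrees(math.atan2(activation, valence))  # within [-90, 90]
    name = min(model, key=lambda e: abs(model[e][0] - angle))
    return name, model[name][1]
```

Switching from the complete to the simplified model only changes the table passed as `model`, which mirrors how the text treats the two models as interchangeable depending on the interview scenario.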

During the interview, which is organized with the potential user to define his/her needs, a depth camera should be placed in front of him/her to acquire the face frame by frame. The camera is started together with the audio recording when the interview begins. The audio recording is then analysed, and the exact time interval (in seconds) in which the interviewer’s question is about to finish and the interviewee starts answering is noted. This is assumed to be the very moment in which the face displays the micro-expression of the inner emotion (the “ground truth feeling”). These are therefore the moments in which the user’s actual emotional involvement can be measured to define the numerical weights of the needs in QFD.
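Converting the annotated time intervals into frame indices is simple arithmetic at a fixed frame rate. The sketch below assumes the 30 FPS setting mentioned for the depth stream, half-open intervals [start, end), and hypothetical function names; at 30 FPS, for example, the window from 12.0 s to 12.5 s covers frames 360 to 374.

```python
import math

FPS = 30  # assumed frame rate of the depth stream

def frames_in_window(start_s, end_s, fps=FPS):
    """Indices of the frames captured in the half-open time window [start_s, end_s)."""
    if end_s <= start_s:
        raise ValueError("end_s must be greater than start_s")
    return list(range(math.floor(start_s * fps), math.ceil(end_s * fps)))

def label_frames(windows, fps=FPS):
    """Map each frame index to the need discussed in its annotated window.

    windows: list of (start_s, end_s, need) tuples taken from the notes on the
    audio recording. If windows overlap, the later window's label wins.
    """
    labels = {}
    for start_s, end_s, need in windows:
        for idx in frames_in_window(start_s, end_s, fps):
            labels[idx] = need
    return labels
```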

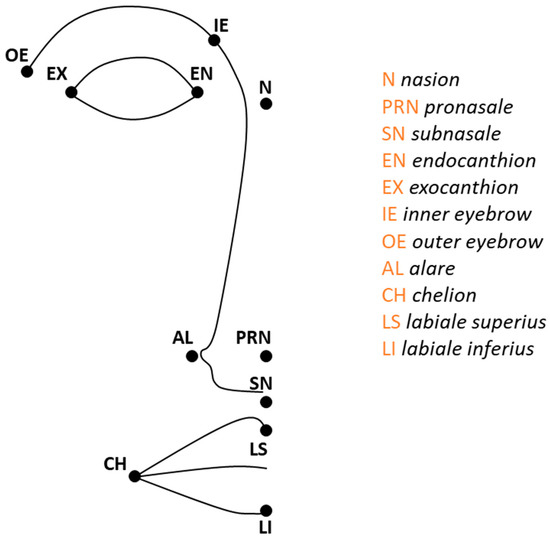

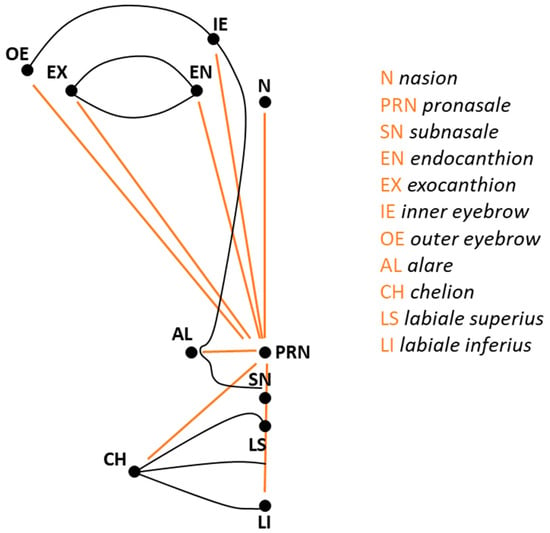

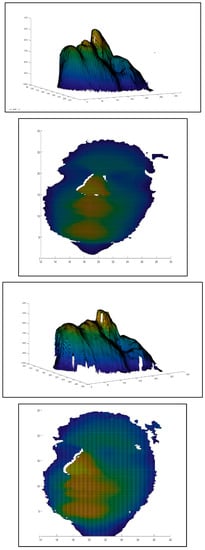

Significant frames are selected from the facial data and post-processed so that only a depth map framing the face remains. An algorithm is then run on the facial depth map to automatically localize 17 landmarks [38], shown in Figure 4, with a thresholding methodology [39] based on differential geometry descriptors [40].

Figure 4.

Set of anatomical landmarks adopted in this study. The formal definitions of every landmark are provided by Swennen et al. [41], with the exception of the eyebrow points.
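The landmark localization relies on differential geometry descriptors [40] combined with thresholding [39]; the exact descriptor set and thresholds are defined in those works, not here. As a hedged illustration of the kind of descriptor involved, the sketch below computes the Gaussian curvature K, the mean curvature H, and the shape index on a depth map treated as a height surface z = f(x, y), using the Monge-patch formulas with central finite differences. It is not the authors’ implementation; the function names and the shape-index sign convention are our assumptions.

```python
import numpy as np

def curvature_maps(z, spacing=1.0):
    """Gaussian (K) and mean (H) curvature of a height surface z = f(x, y).

    z: 2D array (e.g. a facial depth map converted to height); spacing: grid
    step. Derivatives use np.gradient (central differences in the interior).
    """
    z = np.asarray(z, dtype=float)
    zy, zx = np.gradient(z, spacing)    # axis 0 = rows (y), axis 1 = columns (x)
    zxy, zxx = np.gradient(zx, spacing)
    zyy, _ = np.gradient(zy, spacing)
    g = 1.0 + zx**2 + zy**2
    K = (zxx * zyy - zxy**2) / g**2
    H = ((1 + zx**2) * zyy - 2 * zx * zy * zxy + (1 + zy**2) * zxx) / (2 * g**1.5)
    return K, H

def shape_index(K, H):
    """Shape index in [-1, 1] from principal curvatures k1 >= k2.

    With this sign convention a dome such as the nose tip (H < 0, K > 0)
    gives values close to -1.
    """
    disc = np.sqrt(np.maximum(H**2 - K, 0.0))  # clip small negative round-off
    k1, k2 = H + disc, H - disc
    return (2 / np.pi) * np.arctan2(k1 + k2, k1 - k2)
```

A thresholding scheme would then keep, for instance, the regions whose descriptor values fall in a prescribed range; those ranges are not reported in this paper and would have to be taken from [39,40].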

Euclidean distances are then computed between each landmark and the pronasale (PRN), which is adopted as the anchor point, as shown in Figure 5.

Figure 5.

Computed Euclidean distances using pronasale (PRN) as an anchor point.
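The feature extraction step amounts to one distance per landmark. The sketch below assumes the landmarks are stored in a dictionary of (x, y, z) coordinates keyed by hypothetical names, with the pronasale under the key "PRN"; it uses `math.dist` (Python 3.8 or later).

```python
import math

def pronasale_distances(landmarks, anchor="PRN"):
    """Euclidean distance from each landmark to the anchor landmark.

    landmarks: dict mapping a (hypothetical) landmark name to (x, y, z).
    Returns a dict name -> distance in insertion order, excluding the anchor,
    so 17 landmarks yield a 16-element feature vector.
    """
    origin = landmarks[anchor]
    return {name: math.dist(xyz, origin)
            for name, xyz in landmarks.items() if name != anchor}
```

Distances are unchanged by rotating or translating the whole face, so head pose during the interview does not alter these features, although they do depend on the scale of the acquisition.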

These distances are used as input features for a classifier based on the support vector machine (SVM). Other classifiers could also be adopted, depending on the size of the dataset. The number of clusters (i.e., classes) into which the data are funnelled is set in advance. These clusters represent the emotions; thus, there are three or five of them, depending on whether the simplified or the complete model is chosen.
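The paper does not report the SVM kernel, library, or hyperparameters. As a self-contained stand-in, the sketch below trains a linear one-vs-rest SVM with the Pegasos stochastic sub-gradient method in NumPy; in practice an off-the-shelf implementation (for example scikit-learn’s `SVC`) would normally be used. Because an SVM is a supervised classifier, the sketch assumes a set of training frames whose emotion class is already known. The regularization strength `lam`, the number of epochs, and the function names are illustrative choices.

```python
import numpy as np

def train_ovr_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """One-vs-rest linear SVM trained with Pegasos sub-gradient steps.

    X: (n_samples, n_features) distance features; y: emotion labels (e.g. 1-5).
    Features are standardized; a constant column acts as the bias, which is
    regularized together with the weights for simplicity.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mean, std = X.mean(axis=0), X.std(axis=0) + 1e-12
    Xb = np.hstack([(X - mean) / std, np.ones((len(X), 1))])
    classes = np.unique(y)
    rng = np.random.default_rng(seed)
    W = np.zeros((len(classes), Xb.shape[1]))
    for k, c in enumerate(classes):
        t = np.where(y == c, 1.0, -1.0)  # +1 for this emotion, -1 otherwise
        w = np.zeros(Xb.shape[1])
        step = 0
        for _ in range(epochs):
            for i in rng.permutation(len(Xb)):
                step += 1
                eta = 1.0 / (lam * step)                # Pegasos step size
                violates = t[i] * (Xb[i] @ w) < 1.0     # hinge loss active?
                w *= 1.0 - eta * lam                    # shrink (regularization)
                if violates:
                    w += eta * t[i] * Xb[i]             # hinge sub-gradient step
        W[k] = w
    return classes, W, mean, std

def predict(model, X):
    """Assign each sample to the class with the highest one-vs-rest score."""
    classes, W, mean, std = model
    X = np.asarray(X, dtype=float)
    Xb = np.hstack([(X - mean) / std, np.ones((len(X), 1))])
    return classes[np.argmax(Xb @ W.T, axis=1)]
```

A linear kernel keeps the example short; with only a few hundred frames and 16 distance features, a kernel SVM or another classifier may perform differently, which is consistent with the remark that the choice of classifier depends on the dataset.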

Once the list of needs has been defined according to the interview topics and answers, every facial frame used in the facial expression recognition methodology is labelled with a specific need, so that the emotion analysis provides an emotional involvement outcome for that specific customer need. If a facial frame labelled with need X falls in cluster 4, representing excitement, then the value 4 is added to the set of candidate weight values of need X. When the final weight of need X is assigned for the QFD, the median of the values obtained with the clustering is computed. The whole methodology concerning needs is summarized in Figure 6.

Figure 6.

Method for defining the list of needs and respective weights via FER, relying on the interview.
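The median aggregation rule can be written in a few lines. The dictionaries `frame_needs` and `frame_classes` are hypothetical containers for the need labels and the classifier output. Note that `statistics.median` returns the mean of the two middle values when a need has an even number of frames, so a weight such as 3.5 can appear unless it is rounded.

```python
from collections import defaultdict
from statistics import median

def need_weights(frame_needs, frame_classes):
    """Final QFD weight per need: the median of the weights of its frames.

    frame_needs: dict frame index -> need label (from the interview notes).
    frame_classes: dict frame index -> class weight (1-5, or 1/3/5).
    Frames without a classification result are skipped.
    """
    values = defaultdict(list)
    for idx, need in frame_needs.items():
        if idx in frame_classes:
            values[need].append(frame_classes[idx])
    return {need: median(v) for need, v in values.items()}
```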

Case Study

The method has been applied to a case study in the agricultural field. The final objective is to design an e-learning path for farmers so that they could embed recent technologies in their daily farming practices. The QFD adoption appeared suitable for understanding the current needs in the field and for correlating needs with services.

Thirteen people from the field of interest were interviewed using an interview form developed in the European project “Farmer 4.0”. The interview, reported in Appendix A, lasts approximately 40 min and is composed of 74 questions. All the interviews were audio-recorded; one interviewee was also recorded with an Intel RealSense SR300 depth camera. Thus, the 13 audio recordings supported the conceptualization of the list of needs, and the 3D video recording was used for assigning weights. The person recorded with the depth camera was the most representative of the whole group, as he was used to interviews for agriculture projects, to public speaking, and to social events and media in general. He was therefore evaluated as the most suitable candidate for the emotional involvement analysis.

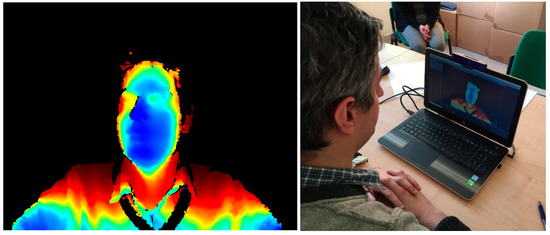

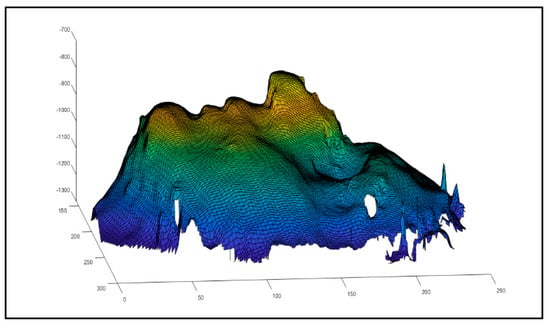

Figure 7 shows the unprocessed depth map given by the camera and a picture taken during the interview.

Figure 7.

Left: Depth map acquired by the sensor prior to processing. Right: The interview set-up.

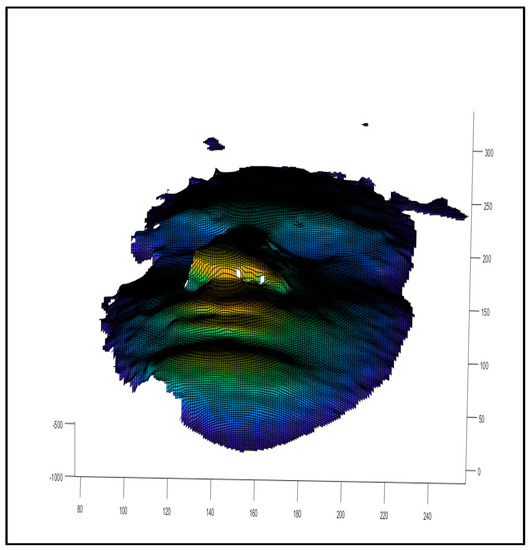

A total of 138 frames, with the corresponding facial depth maps, were selected and post-processed in MATLAB, based on the identification of the key moments of the interview between each question and its answer. The frames were “need labelled” so that the results of the FER method could support the corresponding assignment of weights. Figure 8 shows some processed depth maps in which the face has been framed.

Figure 8.

Examples of post-processed facial depth maps coming from the interview. The yellow parts correspond to the highest Z coordinates of the matrix, i.e., the most prominent facial parts; the blueish parts are the lowest.
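The framing step can be sketched as follows. The paper performs it in MATLAB without detailing the procedure, so this NumPy version rests on our own assumptions: the face is the object nearest to the camera, the nearest valid pixel serves as a rough nose-tip estimate, a square window is cropped around it (the half-width `half_size` is a hypothetical parameter), and depth is flipped into height so that, as in Figure 8, the most prominent parts get the highest Z values.

```python
import numpy as np

def crop_face(depth_mm, half_size=60):
    """Frame the face in a depth map and convert it to a height map.

    Assumes the face is the object closest to the camera and that zero depth
    marks missing data. Returns (Z, (row, col)) where Z = max_depth - depth
    inside the window (invalid pixels become NaN) and (row, col) is the
    nearest valid pixel, used as a rough nose-tip estimate.
    """
    depth = np.asarray(depth_mm, dtype=float)
    masked = np.where(depth > 0, depth, np.inf)  # ignore missing returns
    r, c = np.unravel_index(np.argmin(masked), depth.shape)
    r0, c0 = max(r - half_size, 0), max(c - half_size, 0)
    window = depth[r0:r + half_size + 1, c0:c + half_size + 1]
    window = np.where(window > 0, window, np.nan)
    return np.nanmax(window) - window, (int(r), int(c))
```

The nearest-pixel heuristic is fragile if hands or objects come closer to the camera than the nose, which is one reason why significant frames are selected before processing.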

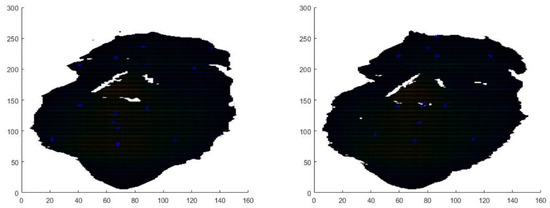

The landmark localization algorithm was then run on the faces (Figure 9) and Euclidean distances were computed.

Figure 9.

Landmark localization on two frames. Landmarks are displayed with blue asterisks.

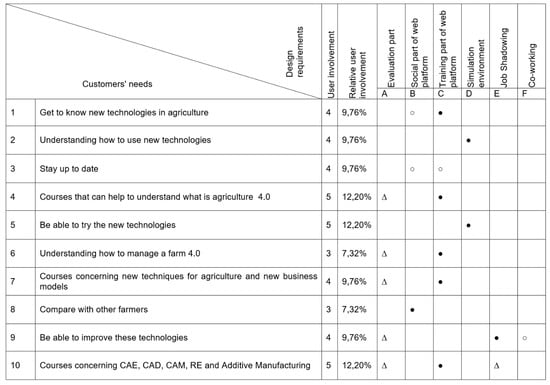

Given the type of data and the emotions displayed by the interviewee’s face, the complete model with weights 1, 2, 3, 4, and 5 was chosen in this case, so five clusters were adopted. The clustering results allowed us to “cluster label” every facial frame, and the median of the obtained weights was then computed for every need. The resulting QFD matrix is shown in Figure 10.

Figure 10.

QFD matrix obtained with our proposed methodology in which weights were obtained with FER.
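Figure 10 reports the resulting matrix without spelling out the row and column arithmetic. In a conventional house of quality, the absolute importance of each design requirement is the sum, over all needs, of the need weight multiplied by the relationship strength, commonly scored on a 9-3-1 scale. The sketch below assumes that convention; the need and service names used with it are placeholders.

```python
def technical_importance(weights, relationships):
    """Absolute and relative importance of each design requirement.

    weights: dict need -> customer weight (e.g. the FER-derived medians).
    relationships: dict need -> dict requirement -> strength (9, 3, 1 or 0).
    Absolute importance of a requirement = sum of weight * strength over needs.
    """
    absolute = {}
    for need, w in weights.items():
        for req, strength in relationships.get(need, {}).items():
            absolute[req] = absolute.get(req, 0) + w * strength
    total = sum(absolute.values())
    relative = {req: v / total for req, v in absolute.items()} if total else {}
    return absolute, relative
```

Because the FER-derived weights enter only through `weights`, they can replace Likert scores without altering the rest of the matrix computation.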

3. Discussion

The obtained final weights were validated by drawing on the competencies of the project consortium, which examined the results and judged them suitable for every need considered in this QFD. In the literature, the development of QFD models that derive technical design elements from psychological and behavioural needs has been studied in several fields of application [42,43,44,45].

Generally speaking, the information obtained through QFD helps to determine directions for product improvement and to set targets and specifications for the product. The QFD approach reduces time to market and costs, as it lowers the probability of mid-course corrections and implementation errors [43,46,47,48,49].

In particular, the advantage of the present methodology over traditional QFD approaches is that weight assignment requires no effort from the interviewee, since the method determines the weight values solely from the detected facial expressions. On the other hand, the proposed technique requires a depth camera, which, despite its low cost, may not be available to every research group. It also requires some data processing and knowledge of clustering techniques. Nonetheless, several open software packages and routines for these tasks are already available to the community.

The methodology is still preliminary and not yet ready for all application contexts. The next steps of the research will strengthen the FER methodology by adding new geometrical features to make the clustering more robust. A psychologist will be asked to evaluate the customer’s emotions during the interview in order to validate the SVM classification.

4. Conclusions

A new QFD methodology is proposed in this work, in which the weights of needs are assigned based on detected facial expressions. Russell’s circumplex model of emotions is used as the theoretical background to discretize the emotion space and map it to numerical values representing degrees of emotional involvement. During interviews, the user’s face is acquired frame by frame with a depth camera, which allows 3D models of the face to be obtained. These data are processed and classified with a support vector machine, and weights are assigned according to the result of this classification.

The approach has been applied to a case study of a European project in agriculture in order to understand the needs of agricultural entrepreneurs. The method, validated by the project consortium, appears to weight the inner needs accurately, and the resulting QFD served the project’s purposes appropriately.

This technique is new in the field of product design, as it merges knowledge from user-centred design with pattern recognition techniques from computer vision. Even if the method is still preliminary, this approach could open a new avenue for designing products that respond to the customer’s inner needs.

Author Contributions

Conceptualization, M.G.V. and F.M.; data curation, M.G.V., F.M., L.U., G.B., and L.D.G.; formal analysis, M.G.V., F.M., G.B., and L.D.G.; funding acquisition, M.G.V.; investigation, M.G.V. and F.M.; methodology, M.G.V. and F.M.; software, L.U.; supervision, M.G.V., F.M., and E.V.; validation, M.G.V., F.M., G.B., and L.D.G.; visualization, M.G.V., F.M., G.B., and L.D.G.; writing—original draft, M.G.V. and F.M.; writing—review and editing, F.M.

Funding

The research undertaken for this work and the analysis of the case study has been possible thanks to the funds of the European Erasmus+ project “Farmer 4.0”, project number: 2018-1-IT01-KA202-006775.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A

Interview for Project Farmer 4.0

Suggestions for Farmer 4.0 Focus Groups with Farmers and Professionals/Stakeholders in Agricultural Matters

- Do you know the different legal forms to give to a company (family, individual, small company, and so on)?

- What is your knowledge about new technologies in the agricultural field?

- What advantages in production do you think are derived from a systematic use of ground and air robots?

- How much can the innovative cultivation processes favour the presence of women in agricultural entrepreneurship?

- The importance of new technologies for agricultural safety?

- What are the advantages for farms that use underground sensors?

- The Internet of Things (IoT) and the chips in animals?

- The IoT to improve the efficiency of farms in terms of monitoring the production and organization of daily activities: the campaign notebook?

- Agriculture 4.0 and the new business models?

- The new Arduino software for remote control via smartphone (lights, sprinkler, music, etc.)?

- How easy is it to become aware of new equipment/technologies mentioned above to facilitate farm work? And those for the transformation of the product?

- And, knowing the new equipment/technologies mentioned above, what are the difficulties in obtaining and using them?

- How easy is it to stay up to date?

- How suitable are new product transformation technologies for small businesses? (Here, it must be kept in mind that a young man who intends to open a micro-farm will have small quantities of product to be processed and, if the machinery is not suitable even for small quantities, it will be difficult to close the supply chain. Moreover, above all at the beginning, he cannot afford to have employees).

- How useful (on a scale of 1 to 10) are the following competences from the perspective of the Global Farm 4.0?

- Knowing funding for starting, maintaining, and expanding own business______________

- Understanding the business culture of the country where you work______________________________

- Entering local and/or international markets_________________________________________

- Developing fundamental skills for being a successful entrepreneur_____________________

- Information and Communications Technology (ICT) knowledge for managing a Farm 4.0____________________________________________

- Are there other competences to take into consideration?

- In the area of “actions”: which of the following skills do you think are to be developed more in a 4.0 perspective? (Select one or more alternatives).

- taking the initiative________________________________________________________

- planning and management__________________________________________________

- coping with ambiguity, uncertainty, and risk____________________

- working with others____________________

- learning through experiences_________________________________________________

- In the area of “resources”: which of the following skills do you think are to be developed more in a 4.0 perspective? (Select one or more alternatives).

- self-awareness and self-efficacy__________________________________________________

- motivation and perseverance____________________________________________________

- mobilising resources___________________________________________________________

- financial and economic literacy___________________________________________________

- mobilising others_______________________________________________________________

- In the area of “ideas and opportunities”: which of the following skills do you think are to be developed more in a 4.0 perspective? (Select one or more alternatives).

- ethical and sustainable thinking___________________________________________________

- valuing ideas__________________________________________________________________

- vision and creativity____________________________________________________________

- spotting opportunities__________________________________________________________

- Which social networks do you consider most important? Facebook or Instagram?

- How much and how can social networks give visibility to your company?

- Do you believe that social networks can be used for your business in terms of: (Select yes or no)

- Buying raw materials Yes No

- Selling your production Yes No

- Exchanging best practices Yes No

- Asking opinions from other farmers to solve practical problems Yes No

- How much do you know about FabLab?

- Do you know what the CAE/CAD/CAM/RE are?

- Do you know what 3D printers are? How are they used?

- Regarding the FabLab approach, on a scale from 1 to 10, how much do you consider the following design and manufacturing tools to be useful?

- CAD: Computer aided design_____________________________________________

- CAE: Computer aided engineering__________________________________________

- RE: Reverse engineering__________________________________________________

- CAM: Computer aided manufacturing________________________________________

- Additive Manufacturing—3D Printing________________________________________

- Do you think these tools can be used to support your work?

- Can they be useful and efficient in the agricultural sector? If yes, how?

- What do you think should be the main topics to be included in an interactive entrepreneurship course for Farmer 4.0?

- Regarding our scheme of the project, how important do you consider the following phases? (from 1 to 10):

- The evaluation path of the user in terms of skills and abilities________________________________________________________________________

- The training path________________________________________________________________________

- The simulative environment________________________________________________________________________

- The job shadowing________________________________________________________________________

- The co-working________________________________________________________________________

- Could it be useful to represent in the simulation environment a virtual FabLab in which the user can move at 360° to view typical FabLab equipment and tools and learn how they work?

References

1. Norman, D.A. Emotional Design: Why We Love (or Hate) Everyday Things; Basic Civitas Books: New York, NY, USA, 2004.

2. Norman, D.A. Cognitive engineering. User Cent. Syst. Des. 1986, 31, 61.

3. Jordan, P.W. Designing Pleasurable Products: An Introduction to the New Human Factors; CRC Press: London, UK, 2003.

4. Green, W.S.; Jordan, P.W. Pleasure with Products: Beyond Usability; CRC Press: London, UK, 2002.

5. Triberti, S.; Chirico, A.; la Rocca, G.; Riva, G. Developing emotional design: Emotions as cognitive processes and their role in the design of interactive technologies. Front. Psychol. 2017, 8, 1773.

6. Van Gorp, T.; Adams, E. Design for Emotions; Elsevier: Waltham, MA, USA, 2012.

7. Sáenz, D.C.; Domínguez, C.E.D.; Llorach-Massana, P.; García, A.A.; Arellano, J.L.H. A series of recommendations for industrial design conceptualizing based on emotional design. In Managing Innovation in Highly Restrictive Environments; di Cortés-Robles, G., García-Alcaraz, J.L., Alor-Hernández, G., Eds.; Springer: Cham, Switzerland, 2019; pp. 167–185.

8. Lin, C.J.; Cheng, L.Y. Product attributes and user experience design: How to convey product information through user-centered service. J. Intell. Manuf. 2017, 28, 1743–1754.

9. Pucillo, F.; Cascini, G. A framework for user experience, needs and affordances. Des. Stud. 2014, 35, 160–179.

10. Yang, B.; Liu, Y.; Liang, Y.; Tang, M. Exploiting user experience from online customer reviews for product design. Int. J. Inf. Manag. 2019, 46, 173–186.

11. Hassenzahl, M. The interplay of beauty, goodness, and usability in interactive products. Hum.-Comput. Interact. 2004, 19, 319–349.

12. Mugge, R.; Schifferstein, H.N.; Schoormans, J.P. Product attachment and satisfaction: Understanding consumers’ post-purchase behavior. J. Consum. Mark. 2010, 27, 271–282.

13. Desmet, P.M.; Hekkert, P. Framework of product experience. Int. J. Des. 2007, 1, 57–66.

14. Mahut, T.; Bouchard, C.; Omhover, J.F.; Favart, C.; Esquivel, D. Interdependency between user experience and interaction: A kansei design approach. Int. J. Interact. Des. Manuf. (IJIDeM) 2018, 12, 105–132.

15. Vezzetti, E.; Marcolin, F.; Guerra, A.L. QFD 3D: A new C-shaped matrix diagram quality approach. Int. J. Qual. Reliab. Manag. 2016, 33, 178–196.

16. Osgood, C.; Suci, G.; Tannenbaum, P. The Measurement of Meaning; University of Illinois Press: Urbana-Champaign, IL, USA, 1967.

17. Green, P.E.; Srinivasan, V. Conjoint Analysis in consumer research: Issues and outlook. J. Consum. Res. 1978, 5, 103–123.

18. Violante, M.G.; Vezzetti, E. Kano qualitative vs quantitative approaches: An assessment framework for products attributes analysis. Comput. Ind. 2017, 86, 15–25.

19. Nagamachi, M. Kansei/Affective Engineering; CRC Press: London, UK, 2016.

20. Hartono, M.; Tan, K.C.; Peacock, J.B. Applying Kansei Engineering, the Kano model and QFD to services. Int. J. Serv. Econ. Manag. 2013, 5, 256–274.

21. Lee, S.; Harada, A.; Stappers, P.J. Pleasure with products: Design based on Kansei. In Pleasure with Products: Beyond Usability; CRC Press: London, UK, 2002; pp. 219–229.

22. Huang, Y.; Chen, C.H.; Khoo, L.P. Products classification in emotional design using a basic-emotion based semantic differential method. Int. J. Ind. Ergon. 2012, 42, 569–580.

23. Barone, S.; Lombardo, A.; Tarantino, P. A weighted logistic regression for conjoint analysis and Kansei engineering. Qual. Reliab. Eng. Int. 2007, 23, 689–706.

24. Violante, M.G.; Vezzetti, E. Virtual interactive e-learning application: An evaluation of the student satisfaction. Comput. Appl. Eng. Educ. 2015, 23, 72–91.

25. Violante, M.G.; Vezzetti, E. A methodology for supporting requirement management tools (RMt) design in the PLM scenario: An user-based strategy. Comput. Ind. 2014, 65, 1065–1075.

26. Violante, M.G.; Vezzetti, E.; Alemanni, M. An integrated approach to support the Requirement Management (RM) tool customization for a collaborative scenario. Int. J. Interact. Des. Manuf. (IJIDeM) 2017, 11, 191–204.

27. Violante, M.G.; Vezzetti, E. Implementing a new approach for the design of an e-learning platform in engineering education. Comput. Appl. Eng. Educ. 2014, 22, 708–727.

28. Violante, M.G.; Vezzetti, E. Guidelines to design engineering education in the twenty-first century for supporting innovative product development. Eur. J. Eng. Educ. 2017, 42, 1344–1364.

29. Fukuda, S. Emotional Engineering: Service Development; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

30. Castellano, G.; Mortillaro, M.; Camurri, A.; Volpe, G.; Scherer, K. Automated analysis of body movement in emotionally expressive piano performances. Music Percept. Interdiscip. J. 2008, 26, 103–119.

31. Schmidt, S.; Stock, W.G. Collective indexing of emotions in images. A study in emotional information retrieval. J. Am. Soc. Inf. Sci. Technol. 2009, 60, 863–876.

32. Sullivan, L.P. Quality Function Deployment; Quality Progress (ASQC): Milwaukee, WI, USA, 1986; pp. 39–50.

33. Akao, Y.; Mazur, G.H. The leading edge in QFD: Past, present and future. Int. J. Qual. Reliab. Manag. 2003, 20, 20–35.

34. Vezzetti, E.; Tornincasa, S.; Moos, S.; Marcolin, F. 3D Human Face Analysis: Automatic Expression Recognition, Biomedical Engineering; ACTA Press: Innsbruck, Austria, 2016.

35. Tornincasa, S.; Vezzetti, E.; Moos, S.; Violante, M.G.; Marcolin, F.; Dagnes, N.; Ulrich, L.; Tregnaghi, G.F. 3D Facial Action Units and Expression Recognition using a Crisp Logic. Comput.-Aided Des. Appl. 2019, 16, 256–268.

36. Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161.

37. Russell, J.A.; Fehr, B. Relativity in the perception of emotion in facial expressions. J. Exp. Psychol. Gen. 1987, 116, 223.

38. Marcolin, F. Miscellaneous expertise of 3D facial landmarks in recent literature. Int. J. Biom. 2017, 9, 279–304.

39. Vezzetti, E.; Marcolin, F.; Tornincasa, S.; Ulrich, L.; Dagnes, N. 3D geometry-based automatic landmark localization in presence of facial occlusions. Multimed. Tools Appl. 2017.

40. Marcolin, F.; Vezzetti, E. Novel descriptors for geometrical 3D face analysis. Multimed. Tools Appl. 2017, 76, 13805–13834.

41. Swennen, G.R.; Schutyser, F.A.; Hausamen, J.E. Three-Dimensional Cephalometry: A Color Atlas and Manual; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2005.

42. Avikal, S.; Singh, R.; Rashmi, R. QFD and fuzzy kano model based approach for classification of aesthetic attributes of SUV car profile. J. Intell. Manuf. 2018, 1–14.

43. Dolgun, L.E.; Köksal, G. Effective use of quality function deployment and Kansei engineering for product planning with sensory customer requirements: A plain yogurt case. Qual. Eng. 2018, 30, 569–582.

44. Han, D.I.D.; Jung, T.; Tom Dieck, M.C. Translating tourist requirements into mobile AR application engineering through QFD. Int. J. Hum.–Comput. Int. 2019, 1–17.

45. Ho, W.C.; Lee, A.W.; Lee, S.J.; Lin, G.T. The Application of quality function deployment to smart watches–the house of quality for improved product design. J. Sci. Ind. Res. 2018, 77, 149–152.

46. Benner, M.; Linnemann, A.R.; Jongen, W.M.F.; Folstar, P. Quality Function Deployment (QFD)—Can it be used to develop food products? Food Qual. Prefer. 2003, 14, 327–339.

47. Bouchereau, V.; Rowlands, H. Methods and techniques to help quality function deployment (QFD). Benchmarking Int. J. 2000, 7, 8–20.

48. Chan, L.K.; Wu, M.L. Quality function deployment: A comprehensive review of its concepts and methods. Qual. Eng. 2002, 15, 23–35.

49. Zare Mehrjerdi, Y. Quality function deployment and its extensions. Int. J. Qual. Reliab. Manag. 2010, 27, 616–640.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).