Featured Application

This algorithm can be applied to various kinds of sparse channel estimation problems, e.g., room impulse responses, early reflections, and underwater channel responses.

Abstract

This paper presents a new l1-RLS method for sparse impulse response estimation. A new method for calculating the regularization factor of l1-RLS is proposed that requires no prior information about the true channel response. In addition, we derive a new model that compensates for uncertainty in the regularization factor. Estimation results for many different kinds of sparse impulse responses show that the proposed method without a priori channel information is comparable to the conventional method with a priori channel information.

1. Introduction

Room impulse response (RIR) estimation is a problem in many applications that use acoustic signal processing. RIR identification [1] is fundamental for various applications such as room-geometry-related spatial audio [2,3,4,5], acoustic echo cancellation (AEC) [6], speech enhancement [7], and dereverberation [8]. As described in [9], an RIR has relatively large magnitudes during the early part of the reverberation and decays to small values during the later part, which means that most RIR entries are close to zero. Therefore, the RIR has a sparse structure. The sparse RIR model is useful for estimating RIRs in real acoustic environments when the source signal is given a priori [10]. There has been recent interest in adaptive algorithms that exploit sparsity in various signals and systems [11,12,13,14,15,16,17,18,19,20,21,22]. Many adaptive algorithms based on least mean squares (LMS) [11,12] and recursive least squares (RLS) [14,15,16,17] have been reported with different penalty functions. Eksioglu and Tanc [17] proposed a sparse RLS algorithm, l1-RLS, which is fully recursive like the plain RLS algorithm, together with a calculation method for its regularization factor. These recursive algorithms have potential for sparse RIR estimation; however, the regularization factor must be established before the algorithms are applied, and the calculation method requires information about the true sparse channel response to perform well. The authors of [18,19] also proposed recursive regularization factor selection methods; however, these methods still need the true impulse response in advance.

In this paper, we propose a new regularization factor calculation method for the l1-RLS algorithm in [17]. The new calculation requires no information about the true channel response in advance, which makes it possible to apply l1-RLS in various room environments. In addition, we derive a new model equation for l1-RLS in [17] with uncertainty in the regularization factor and show that this model is similar to the total least squares (TLS) model, which can compensate for the uncertainty of a regularization factor calculated without the true channel response. For the performance evaluation, we simulate four different sparse channels and compare the channel estimation performance. We show that, without any information about the true channel impulse response, the performance of the proposed algorithm is comparable to that of l1-RLS with information about the true channel impulse response.

This paper is organized as follows. Section 2 summarizes l1-RLS [17]. Section 3 summarizes the measure of sparsity. Section 4 proposes a new method for calculating the regularization factor. Section 5 shows that l1-RLS with uncertainty in the regularization factor can be modeled as a TLS model. Section 6 summarizes the l1-RTLS (recursive total least squares) algorithm as a solution for l1-RLS with uncertainty in the regularization factor. Section 7 presents simulation results that show the performance of the proposed algorithm. Finally, Section 8 concludes the paper.

2. Summary of l1-RLS

In the sparse channel estimation problem of interest, the system observes a signal represented by an $M \times 1$ vector $\mathbf{x}(n)$ at time instant $n$, performs filtering, and obtains the output $y(n) = \mathbf{w}^T\mathbf{x}(n)$, where $\mathbf{w}$ is the $M$-dimensional actual system of finite impulse response (FIR) type. For system estimation, an adaptive filter with the $M$-dimensional coefficient vector $\hat{\mathbf{w}}(n)$ is applied to the same signal vector to produce the estimated output $\hat{y}(n) = \hat{\mathbf{w}}^T(n)\mathbf{x}(n)$, and the error signal $e(n) = y(n) + v(n) - \hat{y}(n)$ is calculated, where $v(n)$ is the measurement noise, $y(n)$ is the output of the actual system, and $\hat{y}(n)$ is the estimated output. To estimate the channel impulse response, an adaptive algorithm minimizes the cost function defined by

From the gradient-based minimization, Equation (1) leads to

where $\mathbf{R}(n)$ is the input autocorrelation matrix and $\mathbf{r}(n)$ is the cross-correlation vector between the input vectors and the observed output. This equation is the normal equation of the least squares solution. In particular, $\mathbf{w}$ is considered a sparse system when the number of its nonzero coefficients is less than the system order $M$. To estimate a sparse system, most estimation algorithms exploit the nonzero coefficients of the system [11,12,13,14,15,16,17]. In [17], Eksioglu and Tanc proposed a fully recursive l1-regularized algorithm that minimizes the objective function in Equation (3).
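The normal equation above is normally solved recursively rather than by inverting the correlation matrix at every step. The following minimal pure-Python sketch shows the standard exponentially weighted RLS recursion that l1-RLS builds on. The forgetting factor `lam`, the initialization `P(0) = delta * I`, the tapped-delay-line regressor, and all function names are illustrative assumptions, not settings taken from this paper.

```python
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def matvec(P, x):
    """Matrix-vector product P x for a list-of-lists matrix."""
    return [dot(row, x) for row in P]


def regressors(u, M):
    """Tapped-delay-line vectors [u(n), u(n-1), ..., u(n-M+1)], zero-padded at the start."""
    padded = [0.0] * (M - 1) + list(u)
    return [padded[n:n + M][::-1] for n in range(len(u))]


def rls(xs, ds, M, lam=0.99, delta=100.0):
    """Standard exponentially weighted RLS; returns the final weight vector."""
    P = [[delta if i == j else 0.0 for j in range(M)] for i in range(M)]  # P(0) = delta * I
    w = [0.0] * M
    for x, d in zip(xs, ds):
        Px = matvec(P, x)
        k = [v / (lam + dot(x, Px)) for v in Px]   # gain vector
        e = d - dot(w, x)                          # a priori error
        w = [wi + ki * e for wi, ki in zip(w, k)]
        # P(n) = (P(n-1) - k x^T P(n-1)) / lam; x^T P equals (P x)^T because P is symmetric
        P = [[(P[i][j] - k[i] * Px[j]) / lam for j in range(M)] for i in range(M)]
    return w


rng = random.Random(0)
w_true = [0.0, 0.8, 0.0, -0.4]
u = [rng.gauss(0.0, 1.0) for _ in range(500)]
xs = regressors(u, len(w_true))
ds = [dot(w_true, x) + rng.gauss(0.0, 0.01) for x in xs]   # observed output y(n) + v(n)
w_hat = rls(xs, ds, len(w_true))
```

In the sketch, `P` plays the role of the inverse of the input correlation matrix, so each update costs O(M^2) operations instead of the O(M^3) of a direct solve of the normal equation.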

where $f(\mathbf{w}) = \|\mathbf{w}\|_1$ is the l1-norm penalty. Minimizing Equation (3) yields the modified normal equation in Equation (4).

To solve Equation (4), the regularization factor is selected as in Equation (5).

where the subgradient of $f(\mathbf{w}) = \|\mathbf{w}\|_1$ is $\operatorname{sgn}(\mathbf{w})$. In Equation (5), the regularization factor depends on a parameter ρ that must be set beforehand. In [17], this parameter was set as $\rho = \|\mathbf{w}\|_1$, where $\mathbf{w}$ is the impulse response of the true channel, and there was no further discussion of how to set ρ. However, knowing the true channel in advance is not practical.
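To make the role of ρ concrete, the sketch below runs a plain RLS estimate and an l1-regularized estimate side by side and recomputes a regularization factor at every step. It mirrors the general structure of the update in [17] as we understand it: a subgradient correction scaled by γ, where γ grows with the gap between the l1-norm of the current estimate and ρ. The exact expression, the clamp of γ at zero, the parameter values, and all names are assumptions, so the code illustrates how ρ enters the recursion rather than reproducing Equation (5) verbatim.

```python
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def matvec(P, x):
    """Matrix-vector product for a list-of-lists matrix."""
    return [dot(row, x) for row in P]


def sgn(v):
    """Subgradient of |v| (taken as 0 at v = 0)."""
    return 1.0 if v > 0 else (-1.0 if v < 0 else 0.0)


def l1_rls(xs, ds, M, rho, lam=0.99, delta=100.0):
    """Hypothetical l1-regularized RLS sketch; rho is the constant discussed for Eq. (5)."""
    P = [[delta if i == j else 0.0 for j in range(M)] for i in range(M)]
    w = [0.0] * M       # l1-regularized estimate
    w_rls = [0.0] * M   # plain RLS estimate, run in parallel
    for x, d in zip(xs, ds):
        # Regularization factor from the previous state (assumed form, see lead-in).
        g = [sgn(v) for v in w]
        Pg = matvec(P, g)
        gamma = 0.0
        if dot(Pg, Pg) > 0.0:
            eps = [a - b for a, b in zip(w, w_rls)]
            trace_p = sum(P[i][i] for i in range(M))
            gap = sum(abs(v) for v in w) - rho + dot(g, matvec(P, eps))
            gamma = max(0.0, 2.0 * trace_p / M * gap / dot(Pg, Pg))  # clamp at 0: assumption
        # Shared gain vector and inverse-correlation update.
        Px = matvec(P, x)
        k = [v / (lam + dot(x, Px)) for v in Px]
        P = [[(P[i][j] - k[i] * Px[j]) / lam for j in range(M)] for i in range(M)]
        # Plain RLS update.
        e_rls = d - dot(w_rls, x)
        w_rls = [a + b * e_rls for a, b in zip(w_rls, k)]
        # l1-RLS update: RLS correction minus a scaled subgradient step.
        e = d - dot(w, x)
        Pg_new = matvec(P, g)
        w = [a + b * e - gamma * (1.0 - lam) * c for a, b, c in zip(w, k, Pg_new)]
    return w, w_rls


def regressors(u, M):
    """Tapped-delay-line vectors [u(n), ..., u(n-M+1)], zero-padded at the start."""
    padded = [0.0] * (M - 1) + list(u)
    return [padded[n:n + M][::-1] for n in range(len(u))]


rng = random.Random(0)
w_true = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, -0.5, 0.0]
u = [rng.gauss(0.0, 1.0) for _ in range(1000)]
xs = regressors(u, len(w_true))
ds = [dot(w_true, x) + rng.gauss(0.0, 0.01) for x in xs]
# Oracle choice described for [17]: rho = ||w||_1 of the true channel.
w_l1, w_plain = l1_rls(xs, ds, len(w_true), rho=sum(abs(v) for v in w_true))
```

The last line uses the true channel to set `rho`, which is exactly the impractical requirement discussed above; replacing it with a value computed from the running estimate is the subject of Section 4.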

3. Measure of Sparseness

In [20], the sparseness of a channel impulse response is measured by Equation (6).

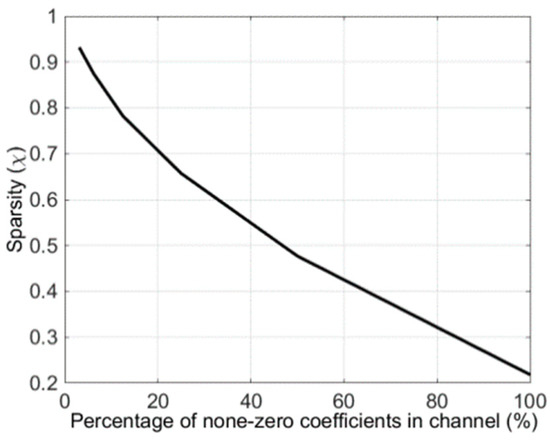

where $\|\mathbf{h}\|_p$ is the p-norm of $\mathbf{h}$ and L is the dimension of $\mathbf{h}$. The value of $\xi(\mathbf{h})$ lies in the range $0 \le \xi(\mathbf{h}) \le 1$ and depends on the sparseness of $\mathbf{h}$: as $\mathbf{h}$ becomes sparser, $\xi(\mathbf{h})$ approaches 1, and as $\mathbf{h}$ becomes denser, $\xi(\mathbf{h})$ approaches 0. Even for a dense channel, $\xi(\mathbf{h})$ often takes a small but nonzero value. For example, Figure 1 shows the relation between $\xi(\mathbf{h})$ and the percentage of nonzero components in $\mathbf{h}$ with L = 215, covering all possible numbers of nonzero components.
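As a rough illustration, the function below computes an l1/l2-based sparseness measure with the properties described above: it ranges from 0 to 1, equals 1 for a single nonzero tap, and equals 0 when all taps have the same magnitude. We assume that Equation (6) has this widely used form, ξ(h) = L/(L - √L) · (1 - ‖h‖₁/(√L‖h‖₂)). The helper `xi_equal_taps` is hypothetical; it evaluates ξ for k equal-magnitude nonzero taps, which shows how ξ falls as the share of nonzero taps grows, in the spirit of Figure 1.

```python
import math


def sparsity(h):
    """l1/l2 sparseness measure: 1 for one nonzero tap, 0 for equal-magnitude taps."""
    L = len(h)
    l1 = sum(abs(v) for v in h)
    l2 = math.sqrt(sum(v * v for v in h))
    if L < 2 or l2 == 0.0:
        raise ValueError("need L >= 2 and a nonzero vector")
    return L / (L - math.sqrt(L)) * (1.0 - l1 / (math.sqrt(L) * l2))


def xi_equal_taps(k, L):
    """Sparsity of a length-L vector with k equal-magnitude nonzero taps."""
    return sparsity([1.0] * k + [0.0] * (L - k))


if __name__ == "__main__":
    L = 64
    for k in (1, 4, 16, 64):
        print(k, round(xi_equal_taps(k, L), 3))
```

For equal-magnitude taps the measure reduces to (√L - √k)/(√L - 1), so it decays with the square root of the number of nonzero taps rather than linearly; real channels with unequal tap magnitudes give somewhat higher values for the same k.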

Figure 1.

Sparsity (ξ) vs. the percentage of nonzero coefficients in the channel impulse response.

4. New Selection Method for the Sparsity Regularization Constant

Section 2 shows that the regularization constant ρ in Equation (5) needs to be set as $\rho = \|\mathbf{w}\|_1$. However, this setting is not practical because the true channel is unknown, so a new selection method for the constant is needed. This section proposes such a method.

As a practical way to select the constant, we can use the estimated vector $\hat{\mathbf{w}}(n)$ instead of the true vector $\mathbf{w}$, because $\hat{\mathbf{w}}(n)$, the solution with the l1-norm penalty, is closer to the sparse true vector than the solution of conventional RLS. The more iterations are performed, the closer $\hat{\mathbf{w}}(n)$ converges to the true value. Conventional RLS also converges to the true value; however, the l1-regularized solution is closer to the sparse true value. Therefore, we can use the sparse estimate $\hat{\mathbf{w}}(n)$ instead of $\mathbf{w}$ when we set ρ, and the resulting uncertainty is compensated through the TLS solution described in the following sections. When we determine ρ from the estimate $\hat{\mathbf{w}}(n)$, we choose between a running average and the l1-norm of the current estimate $\hat{\mathbf{w}}(n)$. Table 1 summarizes the selection steps.

Table 1.

Selection method for the sparsity regularization constant ρ.

The value of ρ in Table 1 is determined as follows. In Step 1, the sparsity of the estimate $\hat{\mathbf{w}}(n)$ is calculated; the sparsity expresses the sparseness of $\hat{\mathbf{w}}(n)$ as a single number [23]. In Step 2, the l1-norm of $\hat{\mathbf{w}}(n)$ is scaled, and the scaled value is averaged with the previous value. The scaling factor approaches 1 as the sparsity ξ approaches 1 and decreases as ξ approaches 0. Therefore, the scaling leaves the l1-norm essentially unchanged for a sparse $\hat{\mathbf{w}}(n)$ and reduces it for a dense $\hat{\mathbf{w}}(n)$. In Step 3, the smaller of the averaged value and the l1-norm of $\hat{\mathbf{w}}(n)$ is selected as the new ρ. If the l1-norm of the current estimate is selected, ρ is completely renewed; otherwise, the previous trend is maintained. According to Figure 1, the reference value 0.75 used in Step 3 corresponds to fewer than 16% of all impulse response taps being nonzero.
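The three steps described above can be sketched as a small update function. The exact scaling rule, the averaging weight, and the way the 0.75 reference value enters Step 3 are specified in Table 1 and are not reproduced here, so the scaling `s(ξ) = ξ` (which equals 1 for ξ = 1 and shrinks the l1-norm of dense estimates, as described) and the equal-weight average are hypothetical choices, and the 0.75 threshold is left out. The sketch only shows the data flow: sparsity of the current estimate, a scaled and averaged l1-norm, and then the smaller of that average and the current l1-norm.

```python
import math


def sparsity(h):
    """l1/l2 sparseness measure in [0, 1] (assumed form of Equation (6))."""
    L = len(h)
    l1 = sum(abs(v) for v in h)
    l2 = math.sqrt(sum(v * v for v in h))
    if l2 == 0.0:
        return 0.0  # hypothetical convention: an all-zero estimate carries no sparsity information
    return L / (L - math.sqrt(L)) * (1.0 - l1 / (math.sqrt(L) * l2))


def update_rho(w_hat, rho_prev, scale=lambda xi: xi, weight=0.5):
    """Hypothetical sketch of the three selection steps for rho.

    `scale` and `weight` are illustrative assumptions, not the values in Table 1.
    """
    xi = sparsity(w_hat)                                            # Step 1
    l1 = sum(abs(v) for v in w_hat)
    rho_avg = weight * rho_prev + (1.0 - weight) * scale(xi) * l1   # Step 2
    return min(rho_avg, l1)                                         # Step 3


# A sparse estimate keeps its l1-norm; a dense one is pulled down by the scaling.
rho_sparse = update_rho([0.0, 0.0, 2.0, 0.0], 4.0)
rho_dense = update_rho([1.0, 1.0, 1.0, 1.0], 4.0)
```

Inside an l1-RLS loop, the returned value would replace the oracle setting ρ = ‖w‖₁ at each iteration, with `rho_prev` carried over from the previous call.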

5. New Modeling for l1-RLS with Uncertainty in the Regularization Factor

If ρ is set from the estimate $\hat{\mathbf{w}}(n)$ instead of the true $\mathbf{w}$, the regularization factor becomes

Then,

Using Equation (8), Equation (4) becomes

is represented as

By applying Equation (10) to Equation (9),

where is i-th element of . Then it is simplified as

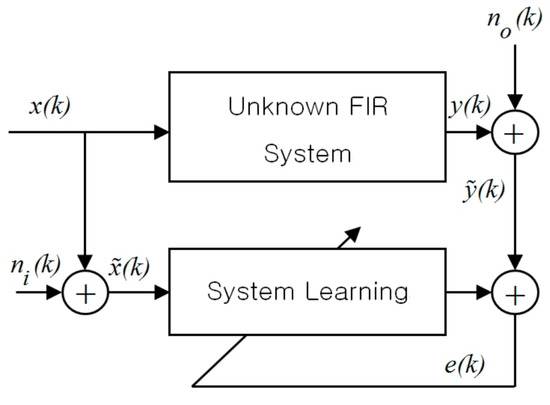

Equation (12) closely resembles the model in Figure 2, a system whose input and output are both contaminated by noise. Suppose that the system in Figure 2 is represented as

where is , is , and is . Equation (13) is simplified as

Figure 2.

The model of a noisy input and noisy output system.

If we multiply Equation (14) by A and average it, we get

We can rewrite Equation (15) as follows

Then, it can be represented as

Comparing Equation (12) with Equation (17) shows that the two system models have almost the same form. Therefore, it is feasible to apply the TLS method to Equation (12) [24,25,26,27,28,29,30]. Accordingly, we expect to obtain almost the same performance as l1-RLS with the true channel response if we apply the TLS method with the regularization factor computed from the new ρ in Table 1. In the next section, we summarize the l1-RTLS (recursive total least squares) algorithm in [30].

6. Summary of l1-RTLS as the Solution of l1-RLS with Uncertainty in the Regularization Factor

Lim, one of the authors of this paper, proposed a TLS solution for l1-RLS known as l1-RTLS [30]. In this section, we summarize l1-RTLS [30] as the solution of Equation (11).

The TLS system model assumes that both the input and the output are contaminated by additive noise, as in Figure 2. The output is given by

where the output noise is the Gaussian white noise with variance . The noisy input vector in the system is modeled by

where and the input noise is the Gaussian white noise with variance . For the TLS solution, we set the augmented data vector as

The correlation matrix is represented as

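where $\mathbf{R}$, $\mathbf{p}$, and $c$ denote the autocorrelation matrix of the noisy input, the cross-correlation vector between the noisy input and the noisy output, and the power of the noisy output, respectively. As shown in [27,28], the TLS problem reduces to finding the eigenvector associated with the smallest eigenvalue of $\bar{\mathbf{R}}$. Equation (22) is the typical cost function for finding this eigenvector.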
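A small numerical illustration of this TLS property: when the augmented correlation matrix is built from a noise-free linear model plus white noise of equal variance on the input and the output, its smallest-eigenvalue eigenvector is proportional to [w; -1], so the estimate is recovered by scaling the last entry to -1. The sketch finds that eigenvector by power iteration on c·I - R̄ with c = trace(R̄), which bounds the largest eigenvalue of a positive semidefinite matrix. The construction of R̄ and all names are illustrative assumptions; a practical recursive TLS such as l1-RTLS [30] updates the estimate sample by sample instead of forming the matrix explicitly.

```python
import math


def smallest_eigvec(R, iters=500):
    """Eigenvector of the smallest eigenvalue of a symmetric PSD matrix R.

    Power iteration on c*I - R, whose dominant eigenvector is the one we want
    because c = trace(R) is at least the largest eigenvalue of R.
    """
    n = len(R)
    c = sum(R[i][i] for i in range(n))
    v = [1.0] * n
    for _ in range(iters):
        v = [c * v[i] - sum(R[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(t * t for t in v))
        v = [t / norm for t in v]
    return v


def tls_estimate(R_bar):
    """Scale the eigenvector so that its last entry is -1 and return the first M entries."""
    v = smallest_eigvec(R_bar)
    return [-t / v[-1] for t in v[:-1]]


# Illustrative augmented matrix: R_bar = [[R, R w], [w^T R, w^T R w]] + sigma2 * I
R = [[2.0, 0.5], [0.5, 1.0]]
w_true = [0.7, -0.3]
sigma2 = 0.1
Rw = [sum(R[i][j] * w_true[j] for j in range(2)) for i in range(2)]
wRw = sum(w_true[i] * Rw[i] for i in range(2))
R_bar = [
    [R[0][0] + sigma2, R[0][1], Rw[0]],
    [R[1][0], R[1][1] + sigma2, Rw[1]],
    [Rw[0], Rw[1], wRw + sigma2],
]
w_tls = tls_estimate(R_bar)
```

Because the noise adds σ² to every eigenvalue equally, it shifts the spectrum without rotating the target eigenvector, which is why TLS stays unbiased here while ordinary least squares on the noisy input would not.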

where $\bar{\mathbf{R}}(k)$ is the sample correlation matrix at the k-th instant and $\bar{\mathbf{w}}(k) = [\hat{\mathbf{w}}^T(k), -1]^T$, in which $\hat{\mathbf{w}}(k)$ is the estimate of the unknown system at the k-th instant. We modify the cost function by adding a penalty function to reflect prior knowledge about the true sparse system.

where is the Lagrange multiplier and is the regularization parameter in [13]. We solve the equations by and simultaneously.

where the subgradient of is . From (24), we obtain

Substituting Equation (26) in Equation (25), we get

or

Substituting in Equation (26) by Equation (28) leads to

Equation (29) can be expressed in a simple form as

where . Because asymptotically as , Equation (29) can be approximated as the following two equations.

Finally, we obtain the estimated parameter of the unknown system as

For Equation (23), we can use the modified regularization factor in [30]

where , , , and is the estimated parameter by recursive least squares (RLS). As , the subgradient of is

7. Simulation Results

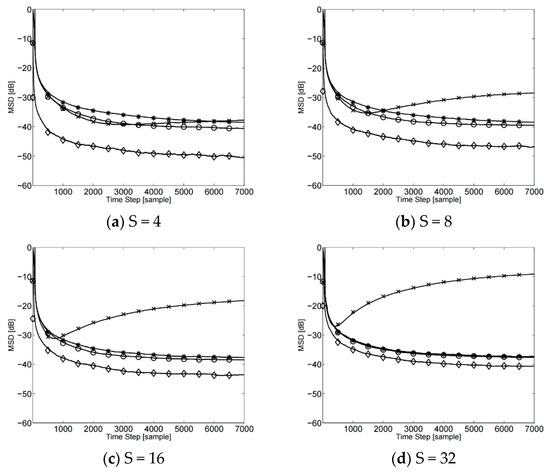

This section confirms the performance of the proposed algorithm in sparse channel estimation. In the first experiment, the channel estimation performance is compared with that of other algorithms using randomly generated sparse channels, following the same scenario as the experiments in [17]. The true system vector has 64 dimensions. To generate a sparse channel, we set the number S of nonzero coefficients among the 64 coefficients and place them at random positions. The values of the nonzero coefficients are drawn from a normal distribution, as in [17]. In the simulation, we estimate the channel impulse response with the proposed algorithms, namely l1-RLS using ρ from Table 1 and l1-RTLS using ρ from Table 1. For comparison, we estimate the channel impulse response with l1-RLS using the true channel response; in addition, we run the regular RLS algorithm in an oracle setting (oracle-RLS), in which the positions of the true nonzero system parameters are assumed to be known. For the estimated channels, we calculate the mean square deviation (MSD), $\mathrm{MSD}(n) = E\{\|\hat{\mathbf{w}}(n) - \mathbf{w}\|_2^2\}$, where $\hat{\mathbf{w}}(n)$ is the estimated channel response and $\mathbf{w}$ is the true channel response. For the performance evaluation, we simulate the algorithms in sparse channels with S = 4, 8, 16, and 32.
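The channel generation and the MSD metric described above can be sketched as follows. The sketch draws S distinct tap positions uniformly at random and assigns Gaussian values; the zero mean and the variance 1/S are illustrative assumptions, since the exact distribution follows [17] and is not restated here. `msd` returns the squared deviation for a single run; averaging it over independent runs approximates the expectation, and reporting the average in dB through `msd_db` is a common convention assumed here.

```python
import math
import random


def sparse_channel(M, S, rng):
    """Length-M channel with S nonzero taps at random positions.

    Assumption: nonzero values ~ N(0, 1/S); the paper follows [17] for this choice.
    """
    w = [0.0] * M
    for pos in rng.sample(range(M), S):
        w[pos] = rng.gauss(0.0, math.sqrt(1.0 / S))
    return w


def msd(w_hat, w):
    """Squared deviation ||w_hat - w||_2^2 for a single run."""
    return sum((a - b) ** 2 for a, b in zip(w_hat, w))


def msd_db(values):
    """Average per-run MSD values and convert the mean to dB (10 log10)."""
    return 10.0 * math.log10(sum(values) / len(values))


rng = random.Random(1)
channels = {S: sparse_channel(64, S, rng) for S in (4, 8, 16, 32)}
```

Sampling positions without replacement (`rng.sample`) guarantees exactly S nonzero taps, which matches the description of a fixed sparsity level per experiment.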

Figure 3 illustrates the MSD curves. For S = 4, Figure 3a shows that the estimation performance of l1-RTLS using the regularization factor with ρ from Table 1 is almost the same as that of l1-RLS using the regularization with the true channel impulse response. However, the performance of l1-RLS using the regularization factor with ρ from Table 1 gradually degrades, showing a kind of uncertainty accumulation effect. The MSD curves for the other values of S show the same trend. Therefore, we can confirm that the new regularization factor selection method and the new modeling for l1-RLS estimate the sparse channel as well as l1-RLS using the regularization with the true channel impulse response. In all simulation scenarios, the oracle-RLS algorithm produces the lowest MSD, as expected.

Figure 3.

Steady-state MSD for S = 4, 8, 16, and 32 when applying the new method to the regularization factor (-o-: l1-RLS with the true channel response, -×-: l1-RLS with the new method, -*-: proposed l1-RTLS with the new method, --: oracle-RLS).

Table 2 summarizes the steady-state MSD values for S from 4 to 32. The results show that the proposed l1-RTLS with the new ρ is comparable to l1-RLS with the true channel.

Table 2.

MSD (mean square deviation) comparison.

In the second experiment, we compare the channel estimation performance using room impulse responses. The room size is 7.49 m × 6.24 m × 3.88 m. The sound source is located at (1.53, 0.96, 1.12) m and the receiver at (1.81, 5.17, 0.71) m. T60 is set to 100 ms and 400 ms. The room impulse responses are generated using the program in [31]. We focus on the direct path and the early reflection part of the RIR because these parts have a sparse property. This is the part that is estimated in AEC applications [32], and it is also related to localization and clarity in room acoustics [33,34,35]. Compared with the 64-coefficient channels used in the first experiment, the impulse response (IR) generated with T60 = 100 ms is equivalent to S = 4 in a channel with 64 coefficients. In the same manner, the IR generated with T60 = 400 ms is equivalent to S = 10.

Table 3 summarizes the steady-state MSD values. The results show the same trend as Table 2: in RIR estimation, the proposed l1-RTLS with the new ρ is also comparable to l1-RLS with the true channel.

Table 3.

MSD (mean square deviation) comparison in sparse RIR estimations.

8. Conclusions

In this paper, we have proposed a regularization factor for recursive adaptive estimation that needs no prior knowledge of the true channel impulse response. We have also reformulated the recursive estimation algorithm as an l1-RTLS type, which is robust to the uncertainty in the regularization factor without a priori knowledge of the true channel impulse response. Simulations show that the proposed regularization factor and the l1-RTLS algorithm provide performance comparable to that of l1-RLS with knowledge of the true channel impulse response.

Author Contributions

Conceptualization, J.L.; Methodology, J.L.; Validation, J.L. and S.L.; Formal analysis, J.L.; Investigation, J.L. and S.L.; Writing—original draft preparation, J.L.; Writing—review and editing, S.L.; Visualization, J.L.; Project administration, J.L.; Funding acquisition, J.L. and S.L.

Funding

This research received no external funding.

Acknowledgments

This research was supported by Agency for Defense Development (ADD) in Korea (UD160015DD).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Benichoux, A.; Simon, L.; Vincent, E.; Gribonval, R. Convex regularizations for the simultaneous recording of room impulse responses. IEEE Trans. Signal Process. 2014, 62, 1976–1986.

- Merimaa, J.; Pulkki, V. Spatial impulse response I: Analysis and synthesis. J. Audio Eng. Soc. 2005, 53, 1115–1127.

- Dokmanic, I.; Parhizkar, R.; Walther, A.; Lu, Y.M.; Vetterli, M. Acoustic echoes reveal room shape. Proc. Natl. Acad. Sci. USA 2013, 110, 12186–12191.

- Remaggi, L.; Jackson, P.; Coleman, P.; Wang, W. Acoustic reflector localization: Novel image source reversion and direct localization methods. IEEE Trans. Audio Speech Lang. Process. 2017, 25, 296–309.

- Baba, Y.; Walther, A.; Habets, E. 3D room geometry inference based on room impulse response stacks. IEEE Trans. Audio Speech Lang. Process. 2018, 26, 857–872.

- Goetze, S.; Xiong, F.; Jungmann, J.O.; Kallinger, M.; Kammeyer, K.; Mertins, A. System Identification of Equalized Room Impulse Responses by an Acoustic Echo Canceller using Proportionate LMS Algorithms. In Proceedings of the 130th AES Convention, London, UK, 13 May 2011; pp. 1–13.

- Yu, M.; Ma, W.; Xin, J.; Osher, S. Multi-channel l1 regularized convex speech enhancement model and fast computation by the split Bregman method. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 661–675.

- Lin, Y.; Chen, J.; Kim, Y.; Lee, D.D. Blind channel identification for speech dereverberation using l1-norm sparse learning. In Proceedings of the Twenty-First Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; pp. 921–928.

- Naylor, P.A.; Gaubitch, N.D. Speech Dereverberation; Springer: London, UK, 2010; pp. 219–270.

- Duttweiler, D.L. Proportionate normalized least-mean-squares adaptation in echo cancelers. IEEE Trans. Speech Audio Process. 2000, 8, 508–518.

- Gu, Y.; Jin, J.; Mei, S. Norm Constraint LMS Algorithm for Sparse System Identification. IEEE Signal Process. Lett. 2009, 16, 774–777.

- Chen, Y.; Gu, Y.; Hero, A.O. Sparse LMS for system identification. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 3125–3128.

- He, X.; Song, R.; Zhu, W.P. Optimal pilot pattern design for compressed sensing-based sparse channel estimation in OFDM systems. Circuits Syst. Signal Process. 2012, 31, 1379–1395.

- Babadi, B.; Kalouptsidis, N.; Tarokh, V. SPARLS: The sparse RLS algorithm. IEEE Trans. Signal Process. 2010, 58, 4013–4025.

- Angelosante, D.; Bazerque, J.A.; Giannakis, G.B. Online adaptive estimation of sparse signals: Where RLS meets the l1-norm. IEEE Trans. Signal Process. 2010, 58, 3436–3447.

- Eksioglu, E.M. Sparsity regularised recursive least squares adaptive filtering. IET Signal Process. 2011, 5, 480–487.

- Eksioglu, E.M.; Tanc, A.L. RLS algorithm with convex regularization. IEEE Signal Process. Lett. 2011, 18, 470–473.

- Sun, D.; Liu, L.; Zhang, Y. Recursive regularisation parameter selection for sparse RLS algorithm. Electron. Lett. 2018, 54, 286–287.

- Chen, Y.; Gui, G. Recursive least square-based fast sparse multipath channel estimation. Int. J. Commun. Syst. 2017, 30, e3278.

- Kalouptsidis, N.; Mileounis, G.; Babadi, B.; Tarokh, V. Adaptive algorithms for sparse system identification. Signal Process. 2011, 91, 1910–1919.

- Candes, E.J.; Wakin, M.; Boyd, S. Enhancing sparsity by reweighted l1 minimization. J. Fourier Anal. Appl. 2008, 14, 877–905.

- Lamare, R.C.; Sampaio-Neto, R. Adaptive reduced-rank processing based on joint and iterative interpolation, decimation, and filtering. IEEE Trans. Signal Process. 2009, 57, 2503–2514.

- Petraglia, M.R.; Haddad, D.B. New adaptive algorithms for identification of sparse impulse responses—Analysis and comparisons. In Proceedings of the Wireless Communication Systems, York, UK, 19–22 September 2010; pp. 384–388.

- Golub, G.H.; Van Loan, C.F. An analysis of the total least squares problem. SIAM J. Numer. Anal. 1980, 17, 883–893.

- Dunne, B.E.; Williamson, G.A. Stable simplified gradient algorithms for total least squares filtering. In Proceedings of the 34th Annual Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 29 October–1 November 2000; pp. 1762–1766.

- Feng, D.Z.; Bao, Z.; Jiao, L.C. Total least mean squares algorithm. IEEE Trans. Signal Process. 1998, 46, 2122–2130.

- Davila, C.E. An efficient recursive total least squares algorithm for FIR adaptive filtering. IEEE Trans. Signal Process. 1994, 42, 268–280.

- Soijer, M.W. Sequential computation of total least-squares parameter estimates. J. Guid. Control Dyn. 2004, 27, 501–503.

- Choi, N.; Lim, J.S.; Sung, K.M. An efficient recursive total least squares algorithm for training multilayer feedforward neural networks. Lect. Notes Comput. Sci. 2005, 3496, 558–565.

- Lim, J.S.; Pang, H.S. l1-regularized recursive total least squares based sparse system identification for the error-in-variables. SpringerPlus 2016, 5, 1460–1469.

- Lehmann, E. Image-Source Method: MATLAB Code Implementation. Available online: http://www.eric-lehmann.com/ (accessed on 17 December 2018).

- Gay, S.L.; Benesty, J. Acoustic Signal Processing for Telecommunication; Kluwer Academic Publisher: Norwell, MA, USA, 2000; pp. 6–7.

- Swanson, D.C. Signal Processing for Intelligent Sensor Systems with MATLAB, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2012; p. 70.

- Kuttruff, H. Room Acoustics, 6th ed.; CRC Press: Boca Raton, FL, USA, 2017; p. 168.

- Bai, H.; Richard, G.; Daudet, L. Modeling early reflections of room impulse responses using a radiance transfer method. In Proceedings of the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, New Paltz, NY, USA, 20–23 October 2013; pp. 1–4.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).