Abstract

Humans have an innate tendency to anthropomorphize surrounding entities and have always been fascinated by the creation of machines endowed with human-inspired capabilities and traits. In the last few decades, this has become a reality with enormous advances in hardware performance, computer graphics, robotics technology, and artificial intelligence. New interdisciplinary research fields have brought forth cognitive robotics, which aims to build a new generation of control systems and to provide robots with social, empathetic, and affective capabilities. This paper presents the design, implementation, and testing of a human-inspired cognitive architecture for social robots. State-of-the-art design approaches and methods are thoroughly analyzed and discussed, and cases in which the developed system has been successfully used are reported. The tests demonstrated the system’s ability to endow a social humanoid robot with human social behaviors and with in-silico robotic emotions.

1. Introduction

“We have found that individuals’ interactions with computers, television and new media are fundamentally social and natural, just like interactions in real life. [...] Everyone expects media to obey a wide range of social and natural rules. All these rules come from the world of interpersonal interaction, and from studies [on] how people interact with [the] real world. But all of them apply equally well to media...” (The Media Equation Theory, Reeves and Nass 1996) [1].

Humans have an innate tendency to anthropomorphize surrounding entities [2], regardless of whether they are living or non-living beings. Similarly, we have always been fascinated by the creation of machines that have not only human traits but also emotional, sensitive, and communicative capabilities similar to those of humankind. The idea of artificial creatures able to interact with us and to move around our physical and social spaces has inspired writers, producers, and directors since the dawn of the science fiction genre. From the robots in Karel Čapek’s R.U.R. to the droids of Star Wars and Asimov’s positronic robots, up to Philip K. Dick’s replicants, science fiction novels, plays, and movies have illustrated how this robotic technology may live together with us, benefiting society but also raising questions about ethics and responsibility.

In the last few decades, this imagination has become a reality with enormous advances in hardware performance, computer graphics, robotics technology, and artificial intelligence (AI). There are many reasons to build robots able to interact with people in a human-centered way. We are a profoundly social species, and understanding our sociality can help us to better understand ourselves and our humanity [3]. Such robots can be a test-bed for modeling human social behaviors, and the parameters of those models could be systematically varied to study and analyze behavioral disorders [3]. If we could interact with robots in a natural and familiar way, they could be used to enhance the quality of our lives. In the future, a personal social robot could assist people in a wide range of activities, from domestic and service tasks to educational and medical assistance. Moreover, given the emerging trends of the Internet of Things (IoT) and the evolution of smart environments that receive and process enormous sets of data, social robots could become the next generation of interfaces that enable humans to relate to the world of information by means of an empathic and immediate interaction. Because of its importance, this emerging scientific trend has given rise to a novel research field: cognitive robotics.

In this paper, we will introduce several key points of this new discipline with particular focus on the design of human-inspired cognitive systems for social robots. We will analyze the state of the art of control systems and architectures for robotics and compare them with the new needs outlined by cognitive robotics. Finally, we will report a detailed description of the implementation of a social robot as a case study, i.e., the FACE (Facial Automaton for Conveying Emotions) robot, which is a highly expressive humanoid robot endowed with a bio-inspired actuated facial mask.

2. The Mind of a Social Robot

Before dealing with principles and methods to develop the mind of a social robot, we should examine what we mean by the word “mind” in the context of humanoid social robots. From now on, we will use the term “mind” to denote a computational infrastructure designed to control a robot so that it can interpret and convey human-readable social cues and express a variety of behavioral and communicative skills, especially aimed at engaging with people in social interactions.

Because of its complexity, the creation of a cognitive architecture for robots requires additional knowledge from different research fields, such as social psychology, affective computing, computer science, and AI, which influence the design of the underlying control framework. Social psychology provides information on how people react to stimuli, which provides guidelines for modeling the robot’s behavior. Computer science deals with the development of software systems that control the behavior of the robot and its interaction with people and the world. Affective computing is a new interdisciplinary field focused on giving machines the ability to interpret the emotional state of humans and to adapt their own state and behavior accordingly [4]. AI is fundamental for enhancing the robot’s capabilities and its believability. Models and algorithms are needed that allow the robot to iteratively learn from human behaviors, to process environmental information about the interlocutors’ affective state, and to determine which action to take at a given moment on the basis of the current social context.

There are important scientific trends supporting a purely biomimetic design of a robot’s mind, based on the conviction that a successful AI would be possible only by means of a faithful reproduction of the biological structure of the human brain [5,6]. Nonetheless, in the last decade, the investigation of the main functions of the human brain and a more general study of human behaviors have led to the development of simplified models that have produced good results.

On the other hand, we must be careful not to move too far away from the biological model. Neuroscience has taught us that human intelligence does not depend on monolithic internal models, on a monolithic control, or on general-purpose processing [7]. Humans perceive the external world and their internal state through multiple sensory modalities that in parallel acquire an enormous amount of information used to create multiple internal representations. Moreover, behaviors and skills are not innate knowledge but are assimilated by means of a developmental process, i.e., performing incrementally more difficult tasks in complex environments [7]. There is also evidence that pure rational reasoning is not sufficient for making decisions, since human beings without emotional capabilities often show cognitive deficits [8].

Following this bio-inspired direction, over the last 60 years, AI has dramatically changed its paradigm from a computational perspective, which includes research topics such as problem solving, knowledge representation, formal games, and search techniques, to an embodied perspective, which concerns the development of systems that are embedded in the physical and social world. These embodied systems are designed to deal with real and physical problems that cannot be taken into consideration by a pure computational design perspective.

This new multidisciplinary field, called “embodied artificial intelligence,” has given AI other meanings in addition to the traditional algorithmic approach, also known as GOFAI (Good Old-Fashioned Artificial Intelligence). In this new sense, the term designates a paradigm aimed at understanding biological systems, abstracting general principles of intelligent behavior, and applying this knowledge to build intelligent artificial systems [9,10].

Along this line of research, promoters of embodied intelligence began to build autonomous agents able to interact in a complex and dynamic world, always taking the human being as a reference. An embodied agent should be able to act in and react to the environment by building a “world model,” i.e., a dynamic map of information acquired through its sensors that changes over time. As in the case of the human being, the body plays a key role in the exchange of information between the agent and the environment. The world is affected by the agent through the actions of its body, and the agent’s goals (or “intentions”) can be affected by the world through the agent’s body sensors. However, building a world model also requires the ability to simulate and make abstract representations of what is possible in certain situations, which entails “having a mind.”

In order to underline the importance of the body in this process of representation of the world, we must cite one of the major figures who outlined the tight bond between mind and body, Antonio Damasio:

“Mind is not something disembodied, it is something that is, in total, essential, [and] intrinsic ways, embodied. There would not be a mind if you did not have in the brain the possibility of constructing maps of our own organism [...] you need the maps in order to portray the structure of the body, portray the state of the body, so that the brain can construct a response that is adequate to the structure and state and generate some kind of corrective action.”

In conclusion, we claim that, by combining biological and robotic perspectives, building an intelligent embodied agent requires both a body and a mind. For a robot, as well as for a human being, the body represents the means through which the agent acquires knowledge of the external world, and the mind represents the means through which the agent models the knowledge and controls its behavior.

Requirements

Building the mind of a social robot is a long-term project that involves scientists from different academic fields who can integrate technical knowledge of hardware and software, psychological knowledge of interaction dynamics, and domain-specific knowledge of the target application [11]. Therefore, the process of building such a cognitive system requires many prototyping steps aimed at addressing new challenges that are unique to social robots and empathic machines, such as sensory information processing, multimodal human communication design, and the application of behavioral models based on acceptable rules and social norms. Indeed, robots with social abilities are designed to interact and cooperate with humans in a shared space [12]. This means that a social robot must be able to express its own state and perceive the state of its social environment in a human-like way. Bionics research is focusing on the development of so-called “social intelligence” for autonomous machines in order to make these social robots able to establish lifelike empathic relationships with their partners. The term “social intelligence” implies the ability to interact with other people or machines, to interpret and convey emotional signals, and to perceive and react to interlocutors’ intentions so as to maintain the illusion of dealing with a real human being [13].

From a technical point of view, the following elements are required for the development of a cognitive architecture aimed at becoming the mind of a social robot:

- [R. 1] a distributed modular architecture that allows for the design of a system with multiple abstract and physical layers, with parallel processing and distributed computational loads;

- [R. 2] an imperative control architecture aimed at controlling low-level procedures such as motor control, sensor reading, kinematics calculation, and signal processing;

- [R. 3] a robot-independent low-level control architecture that can be easily adapted to various hardware platforms and consequently used in various research, commercial, and therapeutic setups;

- [R. 4] a deliberative reasoning high-level architecture aimed at implementing the robot’s behavioral and emotional models;

- [R. 5] a pattern-matching engine able to conduct search and analysis procedures that are not necessarily describable with Boolean comparisons or mathematical analyses;

- [R. 6] an intuitive and easy-to-use behavior definition language that allows neuroscientists and behavioral psychologists to easily convert their theoretical models into executable scripts in the cognitive architecture;

- [R. 7] a high-level perception system aimed at extracting high-level social, emotional, and empathic parameters from the perceived scene, with particular focus on the interpretation of humans’ emotional and behavioral signs;

- [R. 8] an object-oriented meta-data communication and storage system in which data of heterogeneous categories can be easily managed and processed.

In summary, certain requirements are mandatory for the development of the social and emotional intelligence of a humanoid robot: a sensory apparatus able to perceive the social and emotional world, a platform-independent actuation and animation system able to properly control the robot’s movements and gestures, and a “smart brain” able to manipulate the incoming flow of information in order to generate fast and suitable responses. All these features need to be implemented while keeping in mind that these robots represent powerful research tools for studying human intelligence and behavioral models and for investigating the social and emotional dynamics of human–robot interaction [3,14,15].

3. Robot Control Paradigms and Cognitive Architectures

In order to explain the [R. 1] and [R. 2] requirements, it is necessary to discuss the main paradigms used for building robot control architectures. From a robotic point of view, humans are sophisticated autonomous agents able to work in complex environments through a combination of reactive behaviors and deliberative reasoning. A control system for an autonomous robot must perform tasks based on complex information processing in real time. Typically, a robot has a number of inputs and outputs that have to be handled simultaneously, and it operates in an environment in which the boundary conditions, as determined through its sensors, change rapidly. The robot must be able to react to these changes in order to reach a stable state [16].

Over the years, many approaches have been used in AI to control robotic machines. The three most common paradigms are the hierarchical, the reactive, and the hybrid deliberative/reactive paradigm. All of them are defined by the relationship among the three primitives, i.e., SENSE, PLAN, and ACT, and by the way the system processes sensory data [17].

3.1. The Hierarchical Paradigm

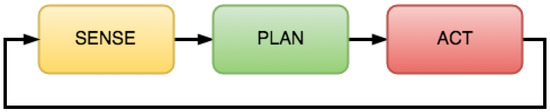

The hierarchical paradigm is historically the oldest method used in robotics, beginning in 1967 with the first AI robot, Shakey [18]. In the hierarchical paradigm, the robot senses the world to construct a model, plans its next actions to reach a certain goal, and carries out the first directive. This sequence of activities is repeated in a loop in which the goal may or may not have changed (Figure 1).

Figure 1.

The hierarchical paradigm based on a repetitive cycle of SENSE, PLAN, and ACT.

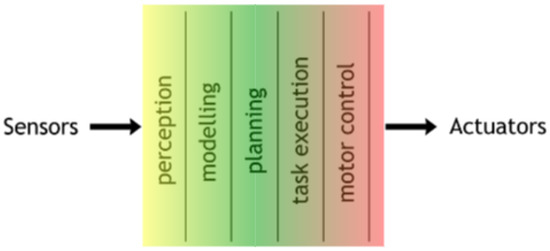

Figure 2 shows an example of a hierarchical paradigm characterized by a horizontal decomposition, as summarized by Rodney Brooks in [19]. The first module consists of collecting and processing the environmental data received through the robot’s sensors. The processed data are used to either construct or update an internal world model. The model is usually constituted by a set of symbols composed of predicates and values that can be manipulated by a logical system. The third module, i.e., the planner, uses the world model and the current perception to decide on a feasible plan of actions to be executed to achieve the desired goal. Once a suitable set of actions has been found, the fourth and fifth modules execute the actions by converting the high-level commands into low-level commands that control the actuators of the robot. This process is repeated continuously until the main goal of the robot has been achieved.

Figure 2.

An example of the traditional decomposition of a mobile robot control system into functional modules.

Because it relies on a top-down design and sequential modules, the hierarchical paradigm lacks robustness: every subsystem must work, and the failure of any one of the sub-modules causes the entire chain to fail. Moreover, it requires greater computational resources due to the modeling and planning phases.
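The SENSE-PLAN-ACT cycle and the horizontal decomposition described above can be sketched in a few lines. This is a minimal illustration under assumed conditions (a toy one-dimensional corridor in which the robot must reach a goal cell), not the control code of any real robot; the names `World`, `build_model`, `plan`, and `run_hierarchical` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class World:
    """Toy 1-D corridor (hypothetical): the robot moves left or right toward a goal cell."""
    robot: int
    goal: int

    def read_sensor(self) -> dict:
        # SENSE: raw reading of the robot's position and of the goal position
        return {"robot": self.robot, "goal": self.goal}

    def apply(self, action: str) -> None:
        # ACT: the motor layer turns a symbolic command into motion
        self.robot += {"right": 1, "left": -1, "stay": 0}[action]


def build_model(reading: dict) -> dict:
    # World modeling: symbolic predicates derived from the sensor reading
    return {"at": reading["robot"], "goal_at": reading["goal"]}


def plan(model: dict) -> list[str]:
    # PLAN: a complete action sequence from the current state to the goal
    delta = model["goal_at"] - model["at"]
    step = "right" if delta > 0 else "left"
    return [step] * abs(delta) or ["stay"]


def run_hierarchical(world: World, max_cycles: int = 100) -> list[str]:
    executed = []
    for _ in range(max_cycles):
        model = build_model(world.read_sensor())
        if model["at"] == model["goal_at"]:
            break
        actions = plan(model)
        # Only the first directive is carried out; then the whole cycle restarts
        world.apply(actions[0])
        executed.append(actions[0])
    return executed
```

For example, `run_hierarchical(World(robot=2, goal=5))` executes three `"right"` steps. Every cycle passes through all modules in sequence, so an exception raised in `build_model` or `plan` would stop the robot from acting at all, which mirrors the fragility discussed above.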

3.2. The Reactive Paradigm

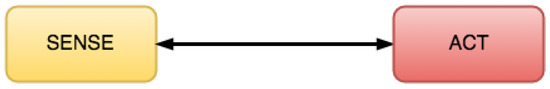

Starting from the 1970s, many roboticists in the field of AI explored biological and cognitive sciences in order to understand and replicate the different aspects of intelligence that animals use to live in an “open world,” overcoming the previous “closed world” assumption. They tried to develop robot control paradigms with a tighter link between perception and action, i.e., SENSE and ACT components, and discarded the PLAN component (Figure 3).

Figure 3.

The reactive paradigm based on a direct link between SENSE and ACT.

From a philosophical point of view, the reactive paradigm is very close to behaviorist approaches and theories [20]. In this paradigm, the system is decomposed into “task-achieving behaviors” that operate in parallel and independently of any other behavior. Each “behavior module” implements a complete and functional robot behavior rather than one single aspect of an overall control task, and it has access to sensors and actuators independently of any other module. The fundamental idea of a behavior-based decomposition is that intelligent behavior is not achieved by designing one complex, monolithic control structure but by bringing together the “right” type of simple behaviors; i.e., it is an emergent functionality.

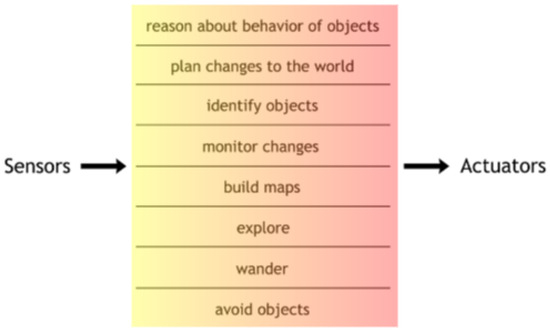

The subsumption architecture developed by Rodney Brooks in 1986 [19] is perhaps the best-known representative of the reactive paradigm for controlling a robot. The model is based on the idea that cognition can be observed simply through perception and action systems that interact directly with each other in a feedback loop through the environment. The subsumption architecture is built around the idea of removing centralized control structures in order to build a robot control system with increasing levels of competence. Each layer of the behavior-based controller is responsible for producing one or a few independent behaviors. All layers except the bottom one presuppose the existence of the lower layers, but none of the layers presupposes the existence of higher layers. In other words, if the robot is built with a bottom-up approach, the system is operational at each stage of its development. This architecture entails that a basic control system can be established for the lowest hardware-level functionality of the robot and that additional levels of competence can be built on top without compromising the whole system. Figure 4 shows an example of a behavior-based decomposition of a mobile robot control system with the subsumption architecture.

Figure 4.

An example of decomposition of a mobile robot control system based on task-achieving behaviors.
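The layering principle can be illustrated with a deliberately simplified arbitration scheme. In Brooks’ original design, layers are networks of augmented finite state machines connected by suppression and inhibition wires; the sketch below replaces that wiring with a plain priority rule (a higher layer that produces an output suppresses all layers below it). The behaviors `wander` and `avoid`, their ordering, and the 0.5 m threshold are hypothetical.

```python
from typing import Callable, Optional

# A behavior maps a sensor snapshot to a motor command, or None when it is inactive.
Behavior = Callable[[dict], Optional[str]]


def wander(sensors: dict) -> Optional[str]:
    # Level 0 (hypothetical): always active, keeps the robot moving
    return "forward"


def avoid(sensors: dict) -> Optional[str]:
    # Level 1 (hypothetical): fires only when an obstacle is closer than 0.5 m
    if sensors.get("obstacle_distance", float("inf")) < 0.5:
        return "turn_left"
    return None


def arbitrate(layers: list[Behavior], sensors: dict) -> str:
    """Return the command of the highest active layer.

    layers[0] is the lowest level of competence; a higher layer that produces
    an output suppresses every layer below it.
    """
    for behavior in reversed(layers):
        command = behavior(sensors)
        if command is not None:
            return command
    return "stop"
```

The point of the design is incremental competence: `arbitrate([wander], ...)` is already a working controller, and appending `avoid` adds a new capability without modifying `wander`.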

3.3. The Hybrid Deliberative/Reactive Paradigm

Because the reactive paradigm eliminated planning and any other reasoning functions, a robot with this kind of control architecture could not select the best behavior to accomplish a task or follow a person on the basis of specific criteria. Thus, at the beginning of the 1990s, AI roboticists tried to reintroduce the PLAN component without disrupting the success of reactive behavioral control, which was considered the correct way to perform low-level control [17]. From that moment on, architectures that used reactive behaviors and incorporated planning activities were said to be using a hybrid deliberative/reactive paradigm (Figure 5).

Figure 5.

The hybrid deliberative/reactive paradigm, which reintroduces the PLAN component and combines a behavior-based reactive layer with a logic-based deliberative layer.

The hybrid deliberative/reactive paradigm can be described as PLAN, then SENSE-ACT: the robot first plans how to best decompose a task into sub-tasks, and it then decides which behaviors would appropriately accomplish each sub-task. The robot instantiates a set of behaviors to be executed as in the reactive paradigm. Planning is done in one step, while sensing and acting are done together. The system is conceptually divided into a reactive layer and a deliberative layer.

In a hybrid deliberative/reactive system, the three primitives are not clearly separated. Sensing remains local and behavior-specific, as it was in the reactive paradigm, but it is also used to create the world model, which is required by the planner. Therefore, some sensors can be shared between the model-making processes and the perceptual system of each behavior. On the other hand, other sensors can be dedicated to providing observations that are useful for world modeling and are not used by any active behavior. Here, the term “behavior” has a slightly different connotation than it does in the reactive paradigm: whereas “behavior” indicates a purely reflexive action in the reactive paradigm, in the hybrid deliberative/reactive paradigm the term is closer to the concept of “skill.”
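The PLAN, then SENSE-ACT scheme can be sketched as a one-shot task decomposition followed by the reactive execution of one skill per sub-task. The task `"welcome_visitor"`, the decomposition table, the skill names, and the 1 m stopping distance are all hypothetical; a real planner would derive the decomposition from the world model instead of looking it up in a table.

```python
from typing import Callable

# A skill reads the current sensor snapshot and returns (command, done).
Skill = Callable[[dict], tuple[str, bool]]


def approach_person(s: dict) -> tuple[str, bool]:
    # Hypothetical skill: move toward the person until within 1 m
    done = s["person_distance"] <= 1.0
    return ("stop" if done else "move_forward", done)


def greet(s: dict) -> tuple[str, bool]:
    # Hypothetical skill: a single expressive action that completes immediately
    return ("smile_and_say_hello", True)


# Hypothetical skill library and task decompositions available to the planner
SKILLS: dict[str, Skill] = {"approach": approach_person, "greet": greet}
DECOMPOSITIONS = {"welcome_visitor": ["approach", "greet"]}


def plan(task: str) -> list[str]:
    # PLAN (once): decompose the task into an ordered list of sub-tasks
    return DECOMPOSITIONS[task]


def execute(task: str, sense: Callable[[], dict], max_steps: int = 50) -> list[str]:
    commands = []
    for subtask in plan(task):
        skill = SKILLS[subtask]
        # SENSE-ACT: run the instantiated skill reactively until it reports completion
        for _ in range(max_steps):
            command, done = skill(sense())
            commands.append(command)
            if done:
                break
    return commands
```

With a sensor that reports distances of 3.0, 2.0, and 1.0 m, `execute("welcome_visitor", sense)` yields two `"move_forward"` commands, a `"stop"`, and then the greeting: planning happens once, while each skill keeps sensing and acting on its own.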

On the basis of Brooks’ theory, the robot cognitive system can be divided into two main blocks: the low-level reactive control and the high-level deliberative control.

The low-level reactive control is managed by an ad-hoc animation engine designed to receive and merge multiple requests coming from the higher modular layer. Since the behavior of the robot is inherently concurrent, multiple modules are expected to send movement requests, and parallel requests can involve the same actuators, generating conflicts. Thus, the animation engine is responsible for mixing reflexes, such as eye blinking or head turning to follow a person, with more deliberate actions, such as facial expressions. For example, in an expressive robot control system, eye blinking conflicts with the expression of surprise, since people who are amazed normally react by opening their eyes wide.
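One possible way to resolve such conflicts is per-actuator priority arbitration: requests that drive different actuators are merged, while on a shared actuator the higher-priority request wins. The sketch below assumes this scheme together with hypothetical actuator names, normalized positions, and priority values; the FACE animation engine may well blend requests rather than arbitrate between them.

```python
from dataclasses import dataclass


@dataclass
class MotionRequest:
    source: str                # module that issued the request
    targets: dict[str, float]  # actuator name -> normalized position in [0, 1]
    priority: int              # higher wins when two requests drive the same actuator


def merge(requests: list[MotionRequest]) -> dict[str, float]:
    """Combine concurrent requests into a single actuator frame.

    Requests on different actuators are simply merged; on an actuator claimed
    by several requests, the highest-priority one wins (ties keep the first).
    """
    frame: dict[str, float] = {}
    owner: dict[str, int] = {}
    for req in requests:
        for actuator, value in req.targets.items():
            if actuator not in owner or req.priority > owner[actuator]:
                frame[actuator] = value
                owner[actuator] = req.priority
    return frame
```

With a blink reflex (`{"eyelid_upper": 0.0}`, priority 1), a gaze-following request on `"neck_yaw"`, and a surprise expression that opens the eyelids (`{"eyelid_upper": 1.0, ...}`, priority 5), the merged frame keeps the head movement but drops the blink, which reproduces the blinking-versus-surprise example above.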

As the robot’s abilities increase in number, it becomes difficult to predict the overall behavior due to the complex interaction of different modules. Acting in a dynamic environment requires the robot to analyze its observations so as to update its internal state, to decide when and how to act, and to resolve conflicting behaviors. Therefore, knowledge processing systems are becoming increasingly important resources for hybrid deliberative/reactive controlled robots. Such systems are used to emulate the reasoning process of human experts through decision-making mechanisms, in which expert knowledge in a given domain is modeled using a symbolic syntax [21]. These systems, called expert systems, are functionally equivalent to a human expert in a specific problem domain in terms of ability to reason over representations of human knowledge, to solve problems by heuristic or approximation techniques, and to explain and justify solutions based on known facts. These considerations led us to choose an expert system as a core element of the high-level deliberative control of the described architecture.

In his book “Introduction to Expert Systems,” Peter Jackson gave a good definition of an expert system: “a computer system that emulates the decision-making ability of a human expert” [22]. The main difference between expert systems and conventional computer programs is that the roots of expert systems lie in many disciplines, including the area of psychology concerned with human information processing, i.e., cognitive science. Indeed, expert systems are intrinsically designed to solve complex problems by reasoning about knowledge, represented as if–then–else rules, rather than through conventional high-level procedural languages, such as C, Pascal, COBOL, or Python [23].

The first expert systems were created in the 1970s and rapidly proliferated starting from the 1980s as the first truly successful form of AI software [24]. They were introduced by the Stanford Heuristic Programming Project led by Feigenbaum, who is sometimes referred to as “the father of expert systems.” The Stanford researchers tried to identify domains where expertise was highly valued and complex, such as diagnosing infectious diseases (MYCIN) [25] and identifying unknown organic molecules (DENDRAL) [26].

An expert system is divided into two subsystems: the inference engine and the knowledge base. The knowledge base is represented by facts and rules that can be activated by conditions on facts. The inference engine applies the rules activated by known facts to deduce new facts or to invoke an action. Inference engines can also include explanation, debugging capabilities, and conflict-resolution strategies.

A widely used public-domain software tool for building expert systems is CLIPS (C Language Integrated Production System) (http://clipsrules.sourceforge.net/). CLIPS is a rule-based production system that was developed in 1984 at NASA’s Johnson Space Center. Like other expert system languages, CLIPS deals with rules and facts. Asserting facts can make a rule applicable. An applicable rule is then fired following the “agenda,” a list of activated rules whose order of execution is decided by the inference engine. Rules are defined using a symbolic syntax where information is described as a set of facts and decisions are taken through a set of simple rules in the form: IF certain conditions are true THEN execute the following actions.
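The fact/rule/agenda cycle can be illustrated with a naive forward-chaining loop. This is not CLIPS: CLIPS matches rule patterns efficiently with the Rete algorithm and supports variables and several conflict-resolution strategies, whereas this sketch uses ground facts (plain strings), recomputes the agenda from scratch on every cycle, and resolves conflicts only by a salience value. The `Rule` class, the `run` function, and the example rules are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset  # facts that must all be present (IF part)
    asserts: frozenset     # facts added when the rule fires (THEN part)
    salience: int = 0      # priority of the rule on the agenda


def run(facts: set, rules: list[Rule]) -> list[str]:
    """Fire the highest-salience activated rule until the agenda is empty.

    `facts` is updated in place; the names of the fired rules are returned in order.
    """
    fired: list[str] = []
    while True:
        # Agenda: rules whose IF part is satisfied and that have not fired yet
        agenda = [r for r in rules if r.conditions <= facts and r.name not in fired]
        if not agenda:
            return fired
        rule = max(agenda, key=lambda r: r.salience)
        facts |= rule.asserts  # asserting new facts may activate further rules
        fired.append(rule.name)
```

For instance, starting from the facts `{"face_detected", "person_smiling"}`, a rule that derives `person_present` from `face_detected` fires first, and its new fact then activates a second rule that asserts `robot_smiles`: the chain of activations is driven entirely by the facts.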

In a hybrid deliberative/reactive architecture in which an expert system implements the high-level deliberative control, data perceived and processed by the sensor units are streamed through the connection bus of the cognitive subsystem and then asserted in the rule engine as a knowledge base (facts), generating the first part of the robot cognition, i.e., the primary cognition [8]. In this case, the robot’s behavior is described through a set of primary rules, which are triggered by the continuously asserted facts and can either fire the assertion of new facts, i.e., the secondary cognition [8], or call actuation functions, which change the robot state, i.e., the motor control. Facts of the secondary cognition are analyzed by a higher-level rule set, which represents the emotion rule set. The emotion rule set triggers events that are related to the secondary cognition, such as changes in the emotional state of the robot, a parameter that can influence its behavior.
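The flow from perceived facts to primary rules, secondary facts, and the emotion rule set can be sketched with plain functions standing in for the two rule sets. The specific rules, fact names, and the two-dimensional (valence, arousal) emotional state are hypothetical assumptions made only for illustration; they are not the rule sets or the emotion model used in the described architecture.

```python
def primary_rules(facts: set[str]) -> tuple[set[str], list[str]]:
    """Primary rule set (hypothetical): react to perceived facts.

    Returns new secondary-cognition facts and the motor commands to issue.
    """
    secondary, motor = set(), []
    if "face_detected" in facts:
        motor.append("look_at_face")           # actuation: changes the robot state
    if "person_smiling" in facts:
        secondary.add("positive_interaction")  # secondary-cognition fact
    if "loud_noise" in facts:
        secondary.add("unexpected_event")
    return secondary, motor


def _clamp(x: float) -> float:
    return max(-1.0, min(1.0, x))


def emotion_rules(secondary: set[str], valence: float, arousal: float) -> tuple[float, float]:
    """Emotion rule set (hypothetical): update a (valence, arousal) state bounded to [-1, 1]."""
    if "positive_interaction" in secondary:
        valence += 0.2
    if "unexpected_event" in secondary:
        arousal += 0.5
    return _clamp(valence), _clamp(arousal)


def cognitive_step(perceived: set[str], state: tuple[float, float]):
    # Primary cognition produces motor commands and secondary facts,
    # which the emotion rule set then turns into an updated emotional state.
    secondary, motor = primary_rules(perceived)
    return motor, emotion_rules(secondary, *state)
```

The separation mirrors the layering in the text: the motor commands leave the cognitive loop immediately, while the secondary facts only affect the robot indirectly, through the emotional state that later rules can read.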

4. Robot Control Frameworks

The human being can be seen as a distributed system composed of multiple subsystems working independently but communicating with each other at different scales and levels, e.g., apparatus, organs, cells, and molecules. In the previous section, we considered the brain as the “director” of the human orchestra, highlighting how the body is not just a medium but rather the essential substratum required for the existence of the mind as we know it. At this point, we must devote a few words to what can be defined as the third fundamental aspect of a human (or human-inspired) being: communication.

Taking inspiration from our brain and body, humanoid robots are conceived as modular systems. Robots can always be described as composed of parts that constitute the sensory apparatus, the motor apparatus, and the cognitive system, which in turn are divided into modules that receive, process, and stream information. Such a modular and distributed architecture allows for both the simultaneous functioning of many simple features and the fulfillment of very complex tasks with high computational costs. Robustness and responsiveness can be guaranteed precisely because the workload is distributed among the subsystems that compose the overall architecture. Everything related to the management of the intercommunication among the subsystems is what, in computer science, is called middleware. The key role of a robotics middleware is to provide a convenient API and to automate as much as possible. Moreover, the middleware has to support cross-platform compilation and multiple programming languages.
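The publish/subscribe pattern that many robotics middlewares build on can be sketched as an in-process message bus. This is only an illustration of the decoupling idea: real middleware adds networking, serialization, discovery, and cross-language bindings, none of which is shown here. The `MessageBus` class and the topic names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable


class MessageBus:
    """Minimal in-process publish/subscribe bus (hypothetical, illustrative only).

    Modules never call each other directly: they publish messages on named
    topics and register callbacks for the topics they are interested in.
    """

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        # Deliver the message to every subscriber; return how many received it
        for callback in self._subscribers[topic]:
            callback(message)
        return len(self._subscribers[topic])
```

For example, a vision module could publish detected faces on a `"faces"` topic while the cognitive subsystem subscribes to it; either side can be replaced, or moved to another process in a real middleware, without changes to the other.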

5. Case Study: The FACE Humanoid Emotional Mind

The following section describes the design and implementation of the FACE humanoid control architecture highlighting links with the requirements, theories, and architectures introduced in the previous sections.

5.1. The FACE Robot

The FACE (Facial Automaton for Conveying Emotions) robot is a humanoid robot endowed with a believable facial display system based on biomimetic engineering principles and equipped with a passive articulated body [27]. The latest prototype of the FACE robot’s head has been fabricated by David Hanson (http://www.hansonrobotics.com/) through a life-casting technique. Aesthetically, it is a copy of the head of a female subject, both in shape and texture, and the final result appears extremely realistic (Figure 6). The FACE actuation system is based on 32 electric servo motors, which are integrated into the skull and the upper torso, mimicking the major facial muscles. Facial movements are very responsive, with no noticeable latency, because the system exploits the control boards up to their hardware limits (i.e., 3 Pololu Mini Maestro 12-Channel USB Servo Controllers (https://www.pololu.com/product/1352)). Thanks to the physical and mechanical characteristics of the materials, the FACE robot is able to reproduce a full range of realistic human facial expressions [28].
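Three 12-channel boards provide 36 channels, enough for the 32 servos. The sketch below assumes a hypothetical sequential wiring (servos 0 to 11 on the first board, 12 to 23 on the second, 24 to 31 on the third) and builds the “Set Target” command of the Maestro compact serial protocol as described in Pololu’s user guide, where the target pulse width is given in quarter-microseconds and split into two 7-bit data bytes. It is not taken from the FACE control software; `locate` and `set_target_command` are illustrative names, and the returned bytes would still have to be written to the board’s serial port.

```python
SERVOS_PER_BOARD = 12  # Mini Maestro 12-channel controller
NUM_SERVOS = 32        # FACE actuation system


def locate(servo: int) -> tuple[int, int]:
    """Map a global servo index (0..31) to (board index, channel); hypothetical wiring."""
    if not 0 <= servo < NUM_SERVOS:
        raise ValueError(f"servo index out of range: {servo}")
    return divmod(servo, SERVOS_PER_BOARD)


def set_target_command(channel: int, pulse_us: float) -> bytes:
    """Compact-protocol 'Set Target' command for a Pololu Maestro.

    The target is expressed in quarter-microseconds and sent as two 7-bit
    bytes: the low 7 bits first, then the next 7 bits.
    """
    target = round(pulse_us * 4)
    return bytes([0x84, channel, target & 0x7F, (target >> 7) & 0x7F])
```

For example, `set_target_command(2, 1500)` produces the bytes `0x84 0x02 0x70 0x2E`, i.e., a 1500 µs pulse on channel 2.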

Figure 6.

The FACE humanoid robot.

5.2. Sensing the Social World

In their semiotic theories, Uexküll and Sebeok define the concept of “Umwelt,” i.e., the “self-centered world.” According to Uexküll, organisms can have different Umwelten, even though they share the same environment [29]. We perceive the world through our senses, which interpret it and create a subjective view of the environment around us that includes objective data, e.g., colors, light, and sounds, and subjective information, e.g., the tone of voice and the body gestures of our interlocutors. Similarly, the perception system of a social robot cannot be limited to the acquisition of low-level information from the environment. It has to extract and interpret the social and emotional meaning of the perceived scene. A robot observing people talking to each other has to determine who is speaking, as well as the facial expressions, gender, body gestures, and other data needed to understand the social context. All this information has to be analyzed through the “body filter,” i.e., from the robot’s point of view [5].

The FACE robot is equipped with a rich set of sensors to acquire information from the environment. Raw data are processed and organized to create “meta-maps,” i.e., structured representations of the robot itself (proprioception), of the world (exteroception), and of its social partners (social perception), which together form the knowledge base [R. 8]. Representing knowledge as structured objects helps the robot to manipulate it at a higher level of abstraction and in a more flexible and natural way, including through rule-based declarative languages.
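A possible object-oriented layout for the three meta-maps is sketched below, together with a method that flattens them into (subject, attribute, value) facts that a rule engine could consume. All class names, fields, and the fact format are hypothetical; the actual FACE meta-data system [R. 8] is not described in this detail here.

```python
from dataclasses import dataclass, field


@dataclass
class Proprioception:  # the robot's own state
    servo_positions: dict[str, float] = field(default_factory=dict)


@dataclass
class Exteroception:  # the physical world around the robot
    light_level: float = 0.0
    sound_level_db: float = 0.0


@dataclass
class SocialPartner:  # one perceived interlocutor
    person_id: int
    expression: str = "neutral"
    speaking_probability: float = 0.0


@dataclass
class MetaMaps:
    self_map: Proprioception = field(default_factory=Proprioception)
    world_map: Exteroception = field(default_factory=Exteroception)
    social_map: list[SocialPartner] = field(default_factory=list)

    def as_facts(self) -> list[tuple]:
        """Flatten the structured maps into (subject, attribute, value) facts."""
        facts = [("world", "light_level", self.world_map.light_level),
                 ("world", "sound_level_db", self.world_map.sound_level_db)]
        facts += [("self", name, pos) for name, pos in self.self_map.servo_positions.items()]
        for p in self.social_map:
            subject = f"person{p.person_id}"
            facts += [(subject, "expression", p.expression),
                      (subject, "speaking_probability", p.speaking_probability)]
        return facts
```

Keeping the structured objects as the primary representation and deriving flat facts on demand lets procedural modules work with typed fields while the declarative layer still sees simple facts.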

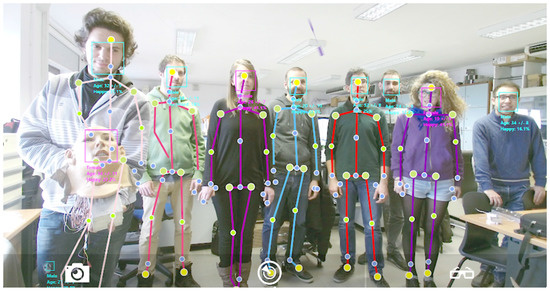

In particular, the FACE robot is equipped with a social scene analysis system [30,31] aimed at acquiring “the robot Umwelt” by extracting social information related to the current context [R. 7]. The perception system of the FACE control architecture creates contextualized representations of the FACE robot’s Umwelt called social meta scenes (SMSs). High-level information, such as postures, facial expressions, estimated age, gender, and speaking probability, is extracted and “projected” into the cognitive system of the FACE robot, which thereby becomes aware of what is happening in the social environment (Figure 7).

Figure 7.

The FACE scene analyzing system is tracking interlocutors at the “Enrico Piaggio” Research Center (University of Pisa, Italy). Faces are recognized and highlighted with squares (blue for male; pink for female) together with social information, e.g., estimated age and facial expression. Body skeletons of the six closest subjects are also highlighted with lines linking their joint coordinates.

5.3. Reasoning and Planning: The Social Robot Awareness

Animals show an awareness of external sensory stimuli. Human beings, in particular, are also aware of their own body states and of feelings related to the social context [32]. In the context of social robots, awareness consists not only in being conscious of motor positions but also in the ability to perceive the inner state, or “unconscious proprioception,” that evolves as a consequence of exteroceptive sensory stimulation. The continuous generation of inner state representations is the core of a suitable cognitive system, which allows the social robot to project itself into the social context [33]. In a similar manner, the mind of the FACE robot has been conceived so that it can participate in its social environment, interpret social cues, and interact with other interlocutors in an active way.

As in the human nervous system, planning is the slowest part of the control. Rule-based expert systems can deal with a large number of rules, but they require time to compute the final action. Meanwhile, sensors and actuators have to be linked through direct communication channels to perform fast reactive actions. Thus, a hybrid deliberative/reactive paradigm, which supports heterogeneous knowledge representations, is a good solution for designing the control architecture of a social robot [R. 1]. Integrating a logic-based deliberative system with a behavior-based reactive system ensures that the robot can handle the real-time challenges of its environment appropriately [R. 2], while performing high-level tasks that require reasoning processes [34] [R. 4].

In this way, the FACE robot can react immediately to simple visual and auditory stimuli, e.g., an unexpected noise or a sudden movement in the scene, while at the same time extracting from the acquired raw data high-level information that requires more reasoning. The result of this slower but more complex reasoning process can modulate or even completely change the behavior of the social robot.
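The interplay between the two layers can be illustrated with a small discrete-time sketch, assuming a controller that is stepped once per "tick": reflexes answer within the same tick, while a deliberation takes several ticks and only then changes the ongoing goal. The reflex table, the goal names, and the three-tick delay are hypothetical values chosen for illustration, not parameters of the FACE architecture.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical reflex table for the fast SENSE-ACT path: stimulus -> action.
REFLEXES: Dict[str, str] = {
    "sudden_noise": "turn_head_to_sound",
    "sudden_motion": "look_at_motion",
}


@dataclass
class Deliberation:
    """A slow reasoning job that needs a fixed number of ticks to finish."""
    goal: str
    ticks_left: int


class HybridController:
    def __init__(self, deliberation_ticks: int = 3) -> None:
        self.deliberation_ticks = deliberation_ticks
        self.pending: Optional[Deliberation] = None
        self.current_goal = "idle"

    def tick(self, stimulus: Optional[str], situation: Optional[str]) -> str:
        # Deliberative layer: keeps reasoning in the background on every tick.
        if situation is not None and self.pending is None:
            self.pending = Deliberation(f"engage_{situation}", self.deliberation_ticks)
        if self.pending is not None:
            self.pending.ticks_left -= 1
            if self.pending.ticks_left == 0:
                # The slower result replaces the ongoing behavior once it is ready.
                self.current_goal = self.pending.goal
                self.pending = None
        # Reactive layer: a matching reflex pre-empts the goal for this tick only.
        if stimulus in REFLEXES:
            return REFLEXES[stimulus]
        return self.current_goal
```

For example, starting a "conversation" deliberation and then hearing a sudden noise yields `idle`, `turn_head_to_sound`, and finally `engage_conversation`: the reflex fires at once, while the reasoning result arrives later and changes the behavior.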

The FACE mind has been biomimetically designed on the basis of a formalization of Damasio’s theory presented by Bosse et al. [35], who provided fundamental indications for implementing the three main concepts of Damasio’s theory, i.e., emotion, feeling, and feeling of a feeling. The cognitive system has also been conceived to endow the robot with primary and secondary cognition, i.e., what Damasio defines as the proto-self and the self of a human being. Indeed, all of the information gathered by the perception system of the robot, e.g., the noise level, the sound direction, RGB images, and depth images, is processed and identified only if the robot has matching templates pre-defined in the cognitive block. In the case of a successful match, this chunk of raw low-level information becomes an entity of the world perceived by the robot, such as a subject or a particular object [R. 5]. The robot itself is also an entity of its own world, and its “bodily” state is continuously perceived in terms of, for example, power consumption or motor positions. This is the first layer of the FACE robot’s knowledge, which can be defined as primary cognition, or the proto-self. By comparing its own state with this primary information about the surrounding scenario, the FACE robot, by means of its rule-based reasoning capability, can invoke an immediate action and build new knowledge. This is the second layer of the FACE robot’s knowledge, produced by its symbolic rule-based reasoning and by the fundamental relation between the robot’s state and the robot’s social world, i.e., the robot’s Umwelt. This secondary, higher level of knowledge can be considered a synthetic extended consciousness [36], i.e., the kind that leads humans to the creation of a self, simulating a journey that starts from a perceived bodily state and arrives at a conscious feeling, passing through emotions.
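The two knowledge layers can be sketched as two small functions, under clearly hypothetical assumptions: a template is modeled as a set of required feature names, the robot's bodily state is reduced to a power reading, and the "new knowledge" is a list of symbolic facts. None of these names or thresholds come from the FACE implementation; the sketch only shows the flow from template matching (primary cognition) to reasoning over self and world (secondary cognition).

```python
from typing import Dict, List, Optional, Set, Tuple

Percept = Dict[str, object]

# Hypothetical templates: an entity type is recognized only if a raw percept
# carries every feature the template requires.
TEMPLATES: Dict[str, Set[str]] = {
    "subject": {"face", "skeleton"},
    "object": {"depth_blob", "color_blob"},
}

FATIGUE_POWER_W = 40.0  # hypothetical threshold on the robot's own power draw


def match(percept: Percept) -> Optional[str]:
    """Return the first template the percept satisfies, or None."""
    for entity_type, required in TEMPLATES.items():
        if required.issubset(percept):
            return entity_type
    return None


def primary_layer(percepts: List[Percept]) -> List[Tuple[str, Percept]]:
    """Proto-self: unmatched raw data never becomes an entity of the world."""
    entities = []
    for percept in percepts:
        entity_type = match(percept)
        if entity_type is not None:
            entities.append((entity_type, percept))
    return entities


def secondary_layer(entities: List[Tuple[str, Percept]], robot_state: Dict[str, float]) -> List[str]:
    """Relate the robot's bodily state to its world and derive new facts."""
    has_company = any(entity_type == "subject" for entity_type, _ in entities)
    tired = robot_state["power_w"] > FATIGUE_POWER_W
    if not has_company:
        return ["alone"]
    return ["tired_but_has_company"] if tired else ["ready_to_interact"]
```

A percept carrying only a noise level matches no template and is silently discarded, which mirrors the statement that information is identified only when a pre-defined template exists.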

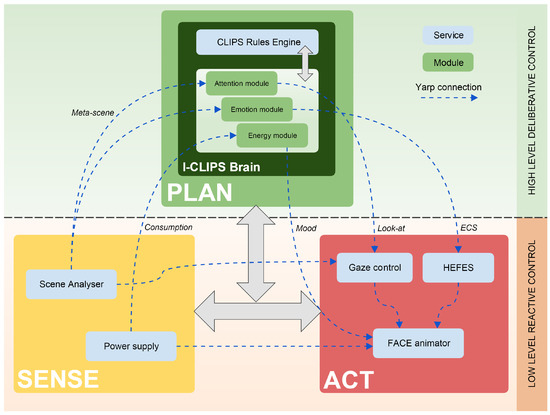

In summary, the FACE robot’s cognitive architecture is based on a modular hybrid deliberative/reactive paradigm [37] where specific functions are encapsulated into modules. Procedural modules collect and elaborate raw data gathered from sensors or received from other modules, while declarative modules process high-level information through a rule-based language.

The proposed architecture, shown in Figure 8, can be described in terms of the three main functional blocks (SENSE, PLAN, and ACT) introduced in Section 3. The sensory subsystem acquires and processes incoming data and makes the output available both to the actuation subsystem, which manages fast and instinctive ‘stimulus–response’ behaviors (SENSE-ACT), and to the deliberative system, which creates meta-maps of the social world and of the robot itself (SENSE-PLAN). Based on these meta-maps, the deliberative system plans and computes the next goal (PLAN-ACT). For instance, an unexpected sound could suddenly shift the robot’s attention regardless of everything else, or a high energy consumption interpreted by the robot as “fatigue” could directly influence its motor control system. Concurrently, the deliberative system uses the same information to reason and choose subsequent actions according to the robot’s current knowledge.
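The fan-out of sensory output to both subsystems can be sketched as a simple publish/subscribe pattern. The sound-level and power thresholds, the 0.5 speed factor used to model "fatigue," and all class names are hypothetical; the PLAN-ACT path is omitted to keep the example short.

```python
from typing import Callable, Dict, List

Reading = Dict[str, float]
Handler = Callable[[Reading], None]


class SensorySubsystem:
    """Publishes every processed reading to all subscribed subsystems."""

    def __init__(self) -> None:
        self.subscribers: List[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self.subscribers.append(handler)

    def publish(self, reading: Reading) -> None:
        for handler in self.subscribers:
            handler(reading)


class ActuationSubsystem:
    """SENSE-ACT: instinctive stimulus-response behaviors."""

    LOUD_SOUND = 0.8            # hypothetical normalized sound level
    FATIGUE_THRESHOLD_W = 40.0  # hypothetical power threshold

    def __init__(self) -> None:
        self.gaze_target_deg = 0.0
        self.speed_scale = 1.0

    def on_reading(self, reading: Reading) -> None:
        if reading.get("sound_level", 0.0) > self.LOUD_SOUND:
            # An unexpected sound captures attention immediately.
            self.gaze_target_deg = reading["sound_direction_deg"]
        if "power_w" in reading:
            # High consumption is read as "fatigue" and slows the motors directly.
            self.speed_scale = 0.5 if reading["power_w"] > self.FATIGUE_THRESHOLD_W else 1.0


class DeliberativeSubsystem:
    """SENSE-PLAN: accumulates the same readings into a meta-map for reasoning."""

    def __init__(self) -> None:
        self.meta_map: Dict[str, float] = {}

    def on_reading(self, reading: Reading) -> None:
        self.meta_map.update(reading)
```

Usage: subscribe `act.on_reading` and `plan.on_reading` to the same `SensorySubsystem`; a single `publish` call then both turns the gaze and updates the deliberative meta-map, so the two paths work on identical data concurrently.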

Figure 8.

The FACE cognitive architecture based on the hybrid deliberative/reactive paradigm.

5.4. FACE Control Architecture Services

The system includes a set of services, i.e., standalone applications interconnected through the network. Each service collects and processes data gathered from sensors or directly from the network and sends new data over the network. The information flow is formalized as XML packets that represent a serialized form of structured data objects. This enables a modular and scalable architecture, since services that receive and send data through the network can be developed in different programming languages and run on different hardware devices [R. 3].
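As an illustration of serializing a structured object into an XML packet and back, the sketch below uses Python's standard `xml.etree.ElementTree`. The element and attribute names (`MetaScene`, `Person`, `timestamp`, `expression`) form a hypothetical schema; the actual FACE packet format is not specified in this section.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List


@dataclass
class Person:
    person_id: int
    x: float
    y: float
    z: float
    expression: str


@dataclass
class MetaScene:
    timestamp: float
    people: List[Person]


def to_xml(scene: MetaScene) -> str:
    """Serialize a meta-scene into an XML packet (hypothetical schema)."""
    root = ET.Element("MetaScene", timestamp=str(scene.timestamp))
    for p in scene.people:
        ET.SubElement(root, "Person", id=str(p.person_id), x=str(p.x),
                      y=str(p.y), z=str(p.z), expression=p.expression)
    return ET.tostring(root, encoding="unicode")


def from_xml(packet: str) -> MetaScene:
    """Deserialize an XML packet back into the corresponding structured object."""
    root = ET.fromstring(packet)
    people = [
        Person(int(e.get("id")), float(e.get("x")), float(e.get("y")),
               float(e.get("z")), e.get("expression"))
        for e in root.findall("Person")
    ]
    return MetaScene(float(root.get("timestamp")), people)
```

Since the packet is plain text, a receiver written in another language only needs an XML parser and knowledge of the schema, which is what makes this choice convenient for a heterogeneous set of services.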

The network infrastructure is based on YARP, an open-source middleware designed for the development of distributed robot control systems [38]. YARP manages the connections by using Port objects, i.e., active objects managing multiple asynchronous input and output connections for a given unit of data. Each service can open many different ports for sending and receiving data through the network. Each structured data object is serialized as an XML packet and sent over the network through a dedicated YARP port. Conversely, each XML packet received from the network through a YARP port is deserialized into the corresponding structured object.
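The one-to-many, non-blocking behavior of ports can be illustrated with a toy in-process model. This is explicitly not the YARP API: the `Port` and `Network` classes below are hypothetical stand-ins that only mimic named ports, connections, and buffered delivery, and the port names merely follow a YARP-like naming style.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class Port:
    """Toy stand-in for a named port; NOT the YARP API."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.inbox: Deque[str] = deque()
        self.connections: List["Port"] = []

    def write(self, packet: str) -> None:
        # Deliver to every connected input without waiting for a reader.
        for port in self.connections:
            port.inbox.append(packet)

    def read(self) -> Optional[str]:
        # Return the oldest buffered packet, or None if nothing has arrived.
        return self.inbox.popleft() if self.inbox else None


class Network:
    """Registry of named ports and their connections."""

    def __init__(self) -> None:
        self.ports: Dict[str, Port] = {}

    def open(self, name: str) -> Port:
        port = Port(name)
        self.ports[name] = port
        return port

    def connect(self, src: str, dst: str) -> None:
        self.ports[src].connections.append(self.ports[dst])
```

In this model, a scene analyzer output port connected to both a gaze-control input and a brain input delivers one packet to each, and each reader consumes it at its own pace.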

The current stage of the architecture includes the following services (Figure 8):

SENSE: The scene analyzer is the core of the SENSE block. It processes the information acquired through the Microsoft Kinect camera (https://developer.microsoft.com/en-us/windows/kinect) to extract a set of features used to create a meta-scene object. The extracted features include a wide range of high-level verbal/non-verbal cues of the people present in the scene, such as facial expressions, gestures, position, and speaker identification, together with the most relevant points of the observed scene, computed from a low-level analysis of the visual saliency map. Finally, the meta-scene is serialized and sent over the network through its corresponding YARP port. Details of the scene analyzer algorithms and processes are reported in [30,39].
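One common way to pick the "most relevant points" from a saliency map is greedy peak selection with non-maximum suppression, sketched below on a plain list-of-lists map. This is a swapped-in, generic technique chosen for illustration; the algorithm actually used by the scene analyzer is described in [30,39], and the parameters `k` and `radius` are hypothetical.

```python
from typing import List, Tuple


def top_salient_points(saliency: List[List[float]], k: int, radius: int) -> List[Tuple[int, int]]:
    """Pick up to k (row, col) peaks, suppressing cells within `radius` of a chosen peak."""
    # Visit cells from the most to the least salient.
    cells = sorted(
        ((value, r, c) for r, row in enumerate(saliency) for c, value in enumerate(row)),
        reverse=True,
    )
    picked: List[Tuple[int, int]] = []
    for _value, r, c in cells:
        if len(picked) == k:
            break
        # Non-maximum suppression with a Chebyshev (square) neighborhood.
        if all(max(abs(r - pr), abs(c - pc)) > radius for pr, pc in picked):
            picked.append((r, c))
    return picked
```

The suppression radius prevents the robot from returning several points that all belong to the same salient blob.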

The power supply service is the energy monitor of the FACE robot. It manages the connection with the robot’s power supply and monitors the current consumption and voltage levels of the robot’s four power channels. The service calculates the power consumption in watts at a frequency of 1 Hz and serializes this information to be sent over the network.
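The power figure follows directly from the monitored quantities: for each channel, electrical power is voltage times current, P = V · I, and the total is the sum over the four channels. The sketch below computes one such 1 Hz sample; the `ChannelReading` type and report keys are hypothetical, and reading the hardware itself is not shown because the power-supply interface is not described here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ChannelReading:
    """One sample of a power channel: voltage (V) and current (A)."""
    voltage_v: float
    current_a: float


def power_report(channels: List[ChannelReading]) -> Dict[str, object]:
    """Per-channel power P = V * I and their sum, in watts (one sample, e.g. once per second)."""
    per_channel = [ch.voltage_v * ch.current_a for ch in channels]
    return {"channels_w": per_channel, "total_w": sum(per_channel)}
```

For instance, channels at 12 V/1.5 A, 12 V/0.5 A, 5 V/2.0 A, and 5 V/0.5 A give 18, 6, 10, and 2.5 W, i.e., 36.5 W in total.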

Gaze control is the control system of the robot’s neck and eyes [39]. This module receives meta-scene objects containing a list of the people in the robot’s field of view, each identified by a unique ID and associated with spatial coordinates (x, y, z). This service also listens to the “look at” YARP port, which the deliberative subsystem uses to send the ID of the subject on which the robot must focus its attention (the attention model is described in [37]).
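Turning a subject ID into a head orientation requires looking up that subject's coordinates and converting them to pan and tilt angles. The sketch assumes a head-centered frame with x to the right, y up, and z forward; this frame convention, like the function names, is an assumption and not the gaze controller of [39].

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float, float]


def gaze_angles(x: float, y: float, z: float) -> Tuple[float, float]:
    """Return (pan, tilt) in degrees toward a point.

    Assumed frame: x to the right, y up, z forward from the robot's head.
    """
    pan = math.degrees(math.atan2(x, z))
    tilt = math.degrees(math.atan2(y, math.hypot(x, z)))
    return pan, tilt


def look_at(subject_id: int, people: Dict[int, Point]) -> Tuple[float, float]:
    # The deliberative layer sends only an ID; coordinates come from the latest meta-scene.
    x, y, z = people[subject_id]
    return gaze_angles(x, y, z)
```

A subject 1 m to the right and 1 m ahead at eye level yields a pan of 45 degrees and a tilt of 0 degrees.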

ACT: HEFES (Hybrid Engine for Facial Expressions Synthesis) is a software engine dedicated to the emotional control of the FACE robot [27]. This service receives a point (v, a) of the ECS (emotional circumplex space), expressed in terms of valence and arousal according to Russell’s “circumplex model of affect” [40], and calculates the corresponding facial expression, i.e., a configuration of servo motors, which is sent over the network to the FACE animator.
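A minimal way to map a (v, a) point to a servo configuration is to place a few basic expressions in the valence/arousal plane and blend their configurations according to distance. The sketch below uses inverse-distance weighting as a stand-in technique; the interpolation scheme actually used by HEFES is described in [27]. The anchor positions, servo names, and values are all hypothetical.

```python
import math
from typing import Dict, Tuple

Config = Dict[str, float]

# Hypothetical anchors: basic expressions placed in the (valence, arousal) plane,
# each with a made-up normalized servo configuration in [0, 1].
ANCHORS: Dict[Tuple[float, float], Config] = {
    (0.0, 0.0): {"mouth_corners": 0.5, "brow_inner": 0.5, "eyelids": 0.5},    # neutral
    (0.8, 0.5): {"mouth_corners": 0.9, "brow_inner": 0.4, "eyelids": 0.6},    # happiness
    (-0.7, -0.4): {"mouth_corners": 0.1, "brow_inner": 0.8, "eyelids": 0.3},  # sadness
    (-0.6, 0.7): {"mouth_corners": 0.2, "brow_inner": 0.1, "eyelids": 0.9},   # anger
}


def expression_for(valence: float, arousal: float, power: float = 2.0) -> Config:
    """Blend anchor configurations by inverse-distance weighting in the ECS."""
    weights: Dict[Tuple[float, float], float] = {}
    for (v, a), config in ANCHORS.items():
        d = math.hypot(valence - v, arousal - a)
        if d == 0.0:
            return dict(config)  # exactly on an anchor: use it unchanged
        weights[(v, a)] = 1.0 / d ** power
    total = sum(weights.values())
    servos = next(iter(ANCHORS.values())).keys()
    # Each servo value is a convex combination of the anchor values.
    return {
        s: sum(w * ANCHORS[p][s] for p, w in weights.items()) / total
        for s in servos
    }
```

Because the result is a weighted average, every servo value stays within the range spanned by the anchor expressions, and moving smoothly through the ECS produces smoothly changing faces.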

The FACE animator is the low-level control system of the FACE robot. This service receives multiple requests from the other services, such as facial expressions and neck movements. Since the behavior of the robot is inherently concurrent, parallel requests can generate conflicts. The animation engine is responsible for blending multiple actions, taking into account the time and priority of each incoming request.
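One simple conflict-resolution policy is per-servo priority arbitration: for every servo, the highest-priority request that is currently active wins, ties go to the most recent request, and servos untouched by higher-priority requests keep following lower-priority ones. This is a hypothetical policy for illustration (a real blend might also interpolate between competing targets), and the `Request` fields are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    source: str
    priority: int
    start_s: float
    duration_s: float
    targets: Dict[str, float]  # servo name -> normalized position


def resolve(requests: List[Request], now_s: float) -> Dict[str, float]:
    """Per servo, the highest-priority active request wins; ties go to the newest."""
    winners: Dict[str, Request] = {}
    for req in requests:
        if not (req.start_s <= now_s < req.start_s + req.duration_s):
            continue  # expired or not yet started
        for servo in req.targets:
            best = winners.get(servo)
            if best is None or (req.priority, req.start_s) > (best.priority, best.start_s):
                winners[servo] = req
    return {servo: req.targets[servo] for servo, req in winners.items()}
```

For example, a short high-priority reflex can override the brow of an ongoing facial expression while the mouth keeps following the expression, and once the reflex expires the expression regains full control.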

PLAN: The I-CLIPS Brain is the core of the PLAN block. This service embeds a rule-based expert system called the I-CLIPS rules engine and works as a gateway between the procedural and deliberative subsystems [37]. I-CLIPS allows the FACE robot’s behavior and social interaction models to be defined with an intuitive, easy-to-use behavior definition scripting language based on the CLIPS syntax. Thanks to this intuitive language, neuroscientists and behavioral psychologists can easily convert their theoretical models into executable scripts for the FACE robot [R. 6].

I-CLIPS behavioral and emotional rules can be grouped into modules (as reported in Figure 8) that can be managed, activated, and deactivated by other rules at run time, which makes the entire architecture highly modular and versatile.
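The idea of rule modules that other rules switch on and off at run time can be sketched with a tiny forward-chaining engine in Python. This is a CLIPS-inspired illustration, not I-CLIPS syntax or its engine: facts are plain strings, each rule fires at most once (a simplified form of refraction), and the module and rule names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Rule:
    name: str
    condition: Callable[[Set[str]], bool]
    action: Callable[["Engine"], None]


@dataclass
class Engine:
    modules: Dict[str, List[Rule]]
    active: Set[str]
    facts: Set[str] = field(default_factory=set)
    fired: Set[str] = field(default_factory=set)

    def step(self) -> bool:
        """Fire the first eligible rule of any active module; report whether one fired."""
        for module in sorted(self.active):
            for rule in self.modules[module]:
                # Simplified refraction: each rule fires at most once.
                if rule.name not in self.fired and rule.condition(self.facts):
                    self.fired.add(rule.name)
                    rule.action(self)
                    return True
        return False

    def run(self) -> None:
        while self.step():
            pass


def notice_person(engine: Engine) -> None:
    # A rule in one module switches the set of active modules at run time.
    engine.facts.add("has_partner")
    engine.active.discard("idle")
    engine.active.add("social")


def smile(engine: Engine) -> None:
    engine.facts.add("smiling")


MODULES: Dict[str, List[Rule]] = {
    "idle": [Rule("notice_person", lambda facts: "person_seen" in facts, notice_person)],
    "social": [Rule("smile", lambda facts: "has_partner" in facts, smile)],
}
```

Starting with only the `idle` module active and the fact `person_seen`, the engine fires `notice_person`, which activates the `social` module; its `smile` rule then fires on the next step.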

In the proposed cognitive architecture, thanks to I-CLIPS, the sensory system can be partially simulated, giving the agents the ability to perceive in-silico parameters, e.g., heartbeat, respiration rate, and stamina. These simulated physiological parameters can be used to create a virtual extension of the proto-self in order to develop more complex cognitive models that take into account inner states such as “stamina.”
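A simulated physiological parameter is essentially a small dynamical model updated over time. The sketch below assumes, purely for illustration, that stamina drains linearly with activity and recovers slowly at rest, and that heart rate relaxes toward a target that rises with arousal and effort; all rates, ranges, and the 0.3 "tired" threshold are made up.

```python
from dataclasses import dataclass


@dataclass
class VirtualBody:
    """Hypothetical in-silico physiology driven by arousal and activity."""
    stamina: float = 1.0          # 1.0 = fully rested, 0.0 = exhausted
    heart_rate_bpm: float = 70.0

    def update(self, arousal: float, activity: float, dt_s: float) -> None:
        # Stamina drains with activity and recovers slowly at rest (made-up rates).
        drain = 0.02 * activity
        recovery = 0.01 * (1.0 - activity)
        self.stamina = min(1.0, max(0.0, self.stamina + (recovery - drain) * dt_s))
        # Heart rate relaxes toward a target that rises with arousal and effort.
        target = 60.0 + 40.0 * max(0.0, arousal) + 30.0 * activity
        self.heart_rate_bpm += (target - self.heart_rate_bpm) * min(1.0, 0.1 * dt_s)

    @property
    def tired(self) -> bool:
        # A derived inner state that rules could treat as a perceived fact.
        return self.stamina < 0.3
```

Fifty seconds of intense, aroused activity leave the virtual body tired with an elevated heart rate; a longer rest restores stamina and brings the heart rate back toward its baseline.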

6. Test and Results

Thanks to this control architecture and to its unique sensory and actuation system, the FACE robot is now a social robot with remarkable empathic capabilities. The peculiarities and versatility of its control architecture make it possible to use the FACE robot for different and heterogeneous purposes, which tangibly demonstrates the potential of the overall architecture.

6.1. Robot Therapy

Autism spectrum disorders (ASDs) are pervasive developmental disorders characterized by social and communicative impairments, e.g., in communication, shared attention, and turn-taking. Using FACE as a social interlocutor, the psychologist can emulate real-life scenarios and infer the psycho-physiological state of the interlocutor through a multi-parametric analysis of physiological signals, behavioral signs, and therapists’ annotations, obtaining a deeper understanding of subjects’ reactions to treatments (Figure 9). The statistical analysis of the physiological signals highlighted that the children with ASDs, who showed affective reactions different from those of the control group, were more sensitive to the treatment [41,42]. These preliminary results indicated that the FACE robot was well accepted by patients with ASDs and could be used as a novel tool for social skills training.

Figure 9.

The FACE robot involved in a therapist-driven ASD therapeutic session aimed at teaching the subject how to recognize human facial expressions. Picture by Enzo Cei.

6.2. Human–Robot Emotional Interaction

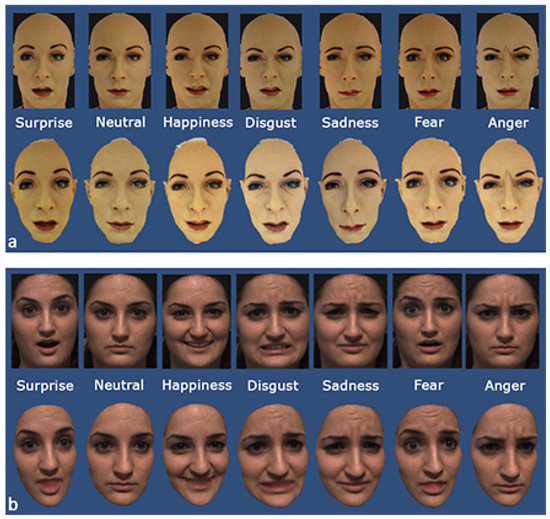

Since it is aesthetically and expressively similar to humans, the FACE robot has been used as an emotional communication tool to test its visual expressiveness. Facial expressiveness is indeed a crucial issue for realistic humanoid robots that are meant to be intuitively perceived by humans as no different from themselves. Two different experiments have been conducted. In the first experiment, static emotional signs performed by the humanoid robot were compared with the corresponding human facial expressions shown as 2D photos and 3D virtual models (Figure 10) in terms of recognition rate and response time. Fifteen subjects (10 males and 5 females) aged 19–31 years (mean age ), all but one of whom were studying scientific disciplines, were recruited for the experiment. Preliminary results showed a tendency for expressions performed by the physical robot to be recognized better than both the human stimuli and the robot’s own 2D photos and 3D models. This supports our hypothesis that the robot is able to convey expressions at least as well as human 2D photos and 3D models [43].

Figure 10.

The 2D photos and 3D models used in the experiment: (a) FACE robot expressions and (b) human expressions.

The second experiment was based on the consideration that facial expressions in the real world are rarely static and mute, so it was hypothesized that the dynamics inherent in a facial expression, and the sounds or vocalizations normally associated with it, could be fundamental to understanding its meaning. The experiment involved a total of 25 volunteer students and researchers (14 males and 11 females) aged 19–37 years (mean age ) working in the scientific area. Results showed that the presence of motion makes facial expressions easier to recognize, even in the case of a humanoid robot. Moreover, auditory information (non-linguistic vocalizations and verbal sentences) helped to discriminate facial expressions in the cases of a virtual 3D avatar and of a humanoid robot. In both experiments, the recognition results for the FACE robot’s facial expressions were comparable to those for human facial expressions, which indicates that the FACE robot could be used as an expressive and interactive social partner (publication under review).

6.3. Robot Entertainment

The extremely realistic facial expressions of FACE and its ability to interact in a completely automatic mode have attracted the interest of several important directors working in show business. For example, the FACE robot has been invited as a special guest by Beppe Grillo, an Italian comedian and one of the biggest influencers on the Internet. The android, controlled by our architecture, played a prominent role in a play shown in major theaters in Rome and Milan and interacted with the main actor in front of tens of thousands of people. Moreover, the FACE robot has turned out to be a potential actress in cinema. The robot was asked by 20th Century Fox to watch a teaser for the movie Morgan, produced by Ridley Scott. On this occasion, the facial automaton reacted expressively to the movie trailer, empathizing with the main character, an android capable of feeling and conveying emotions similar to the FACE robot (Figure 11).

Figure 11.

The FACE robot expressing emotions while watching Morgan’s trailer, a film by Luke Scott released on September 2, 2016, by 20th Century Fox [44].

6.4. Human Behavior and Mind Theory Simulator

In more recent studies, the FACE cognitive system has been endowed with a model of the somatic marker theory [8] and tested with a simulation of the Iowa Gambling Task [45], an experiment conceived by Bechara to assess the emotional and decision-making capabilities of patients with brain injuries. With this experiment, we validated the ability of the FACE robot to make decisions according to the emotions felt during present and previous interactions with certain entities: in this case, the card decks of a gambling card game. This emotion-biased decision process is completely human-inspired and gives the robot the ability to label objects, subjects, or events depending on the influence they have on the robot’s artificial mood. Such a mind theory simulation helps the android make decisions that will be advantageous for itself in the future and create its own beliefs autonomously. Detailed results and a description can be found in [46].
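The core mechanism can be sketched as an agent that attaches a marker to each deck, namely the average outcome it has "felt" when drawing from it, and lets those markers bias later choices. Several simplifications are assumptions of this sketch and not the implementation in [46]: payoffs follow a fixed, repeating 10-card schedule instead of the randomized task, the marker is a plain running mean, and the agent samples every deck ten times before relying on its markers. In line with the classic task, decks A and B pay more per card but lose money over a cycle, while C and D pay less but win.

```python
from typing import Dict, List

# Net outcome (gain + loss) of each card over a repeating 10-card cycle.
# Per cycle: decks A and B lose 250 in total, decks C and D win 250.
SCHEDULES: Dict[str, List[int]] = {
    "A": [100, 100, -50, 100, -200, 100, -100, 100, -150, -250],
    "B": [100] * 8 + [-1150, 100],
    "C": [50, 50, 0, 50, 0, 50, 0, 50, 0, 0],
    "D": [50] * 9 + [-200],
}


class SomaticAgent:
    """Labels each deck with a marker: the mean 'felt' outcome of past draws."""

    def __init__(self, explore_rounds: int = 10) -> None:
        self.explore_rounds = explore_rounds
        self.totals = {deck: 0 for deck in SCHEDULES}
        self.draws = {deck: 0 for deck in SCHEDULES}

    def marker(self, deck: str) -> float:
        return self.totals[deck] / self.draws[deck] if self.draws[deck] else 0.0

    def choose(self, trial: int) -> str:
        decks = list(SCHEDULES)
        if trial < self.explore_rounds * len(decks):
            return decks[trial % len(decks)]  # sample every deck in turn first
        return max(decks, key=self.marker)  # then follow the best marker

    def draw(self, deck: str) -> int:
        outcome = SCHEDULES[deck][self.draws[deck] % 10]
        self.totals[deck] += outcome
        self.draws[deck] += 1
        return outcome


def play(trials: int = 100) -> List[str]:
    agent = SomaticAgent()
    picks = []
    for t in range(trials):
        deck = agent.choose(t)
        agent.draw(deck)
        picks.append(deck)
    return picks
```

After the exploration phase, the negative markers attached to decks A and B keep the agent on the advantageous decks C and D, which is the behavior the task is designed to reveal.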

7. Conclusions

We have provided social robotics definitions, descriptions, methods, and use cases. Clearly, this field is an entire universe in itself, and it is unfeasible to summarize all of it in one paper, but we believe that this work provides a good introduction to developing the brain and mind of a social and emotional robot.

We have addressed the ambitious challenge of reproducing human features and behaviors on a robotic artifact. Biological systems provide an extraordinary source of inspiration, but a technical design approach is still necessary.

First of all, we can conclude that an intelligent embodied agent requires a strong, reciprocal, and dynamic coupling between mind (the control) and body (the means). Moreover, from the embodied perspective, in order to give our robots the ability to cope with uncertain situations and react to unexpected events, it is necessary to avoid centralized monolithic control systems. The embodied design principles point out that behavior emerges from the interaction between these two pillars and from the continuous flows of information that underlie sensory-motor coordination. These principles were applied when our humanoid FACE robot was designed and built, and the use cases reported in the paper show satisfactory results.

In addition, the FACE robot is currently used as the main robotic platform in the European Project EASEL (Expressive Agents for Symbiotic Education and Learning) (http://easel.upf.edu/). In this educational context, the FACE robot plays the role of a synthetic tutor for pupils. For this purpose, the robot control system has been extended to other platforms (Aldebaran Nao and Hanson Robotics Zeno), demonstrating the platform versatility of the architecture.

We want to conclude this work with a vision of the potential opened up by this area in conjunction with other emerging fields. The Internet of Things (IoT), for instance, is no longer a futuristic scenario. In a few years, billions of objects will be able to transfer data over a network without requiring human supervision or interaction. Such a network will be able to analyze the human environment from various points of view, extending robots’ perceptual capabilities. This process is opening up the possibility of developing user-oriented scenarios, e.g., the smart home/office [47], in which heterogeneous collections of objects are able to collect, exchange, analyze, and convey information to and through robots. To cope with such a large volume of data, some kind of artificial intelligence will be needed to process data about our houses, our work, and our daily life activities and to select which information is important.

At this point, a question arises: Is there a machine or an interface better suited to this challenge than a social robot? We believe that this is one of the most exciting developments in the future of social robotics, i.e., building a new generation of emotional machines that, in turn, become the human-centered interfaces of the upcoming smart world.

Acknowledgments

This work was partially funded by the European Commission under the 7th Framework Program project EASEL, “Expressive Agents for Symbiotic Education and Learning,” under Grant 611971-FP7-ICT-2013-10.

Author Contributions

All the authors conceived and designed the architecture from the scientific point of view. N.L., D.M. and L.C. developed and tested the platform performing the experiments and the data analysis. A.C. and D.D.R. supervised the research activity. All the authors contributed to the paper writing.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Reeves, B.; Nass, C. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places; Cambridge University Press: New York, NY, USA, 1996.

2. Hutson, M. The 7 Laws of Magical Thinking: How Irrational Beliefs Keep Us Happy, Healthy, and Sane; Penguin/Hudson Street Press: New York, NY, USA, 2012.

3. Breazeal, C.; Scassellati, B. Challenges in building robots that imitate people. In Imitation in Animals and Artifacts; Chapter 4; Dautenhahn, K., Nehaniv, C.L., Eds.; The MIT Press: Cambridge, MA, USA, 2002; pp. 363–389.

4. Picard, R.W. Affective Computing; The MIT Press: Cambridge, MA, USA, 1997.

5. Pfeifer, R.; Lungarella, M.; Iida, F. Self-organization, embodiment, and biologically inspired robotics. Science 2007, 318, 1088–1093.

6. Bar-Cohen, Y.; Breazeal, C. (Eds.) Biologically-Inspired Intelligent Robots; SPIE, International Society for Optical Engineering: Bellingham, WA, USA, 2003.

7. Brooks, R.A.; Breazeal, C.; Marjanovic, M.; Scassellati, B.; Williamson, M.M. The Cog Project: Building a Humanoid Robot. In Computation for Metaphors, Analogy, and Agents; Lecture Notes in Computer Science; Nehaniv, C., Ed.; Springer: Berlin/Heidelberg, Germany, 1999; Volume 1562, pp. 52–87.

8. Damasio, A. Descartes’ Error: Emotion, Reason, and the Human Brain; Grosset/Putnam: New York, NY, USA, 1994.

9. Pfeifer, R.; Bongard, J.C. How the Body Shapes the Way We Think: A New View of Intelligence; Bradford Books; The MIT Press: Cambridge, MA, USA, 2006.

10. Vernon, D.; Metta, G.; Sandini, G. Embodiment in Cognitive Systems: On the Mutual Dependence of Cognition & Robotics. Cognition 2010, 8, 17.

11. Glas, D.; Satake, S.; Kanda, T.; Hagita, N. An Interaction Design Framework for Social Robots. In Robotics: Science and Systems; The MIT Press: Cambridge, MA, USA, 2011.

12. Horton, T.E.; Chakraborty, A.; St. Amant, R. Affordances for robots: A brief survey. AVANT 2012, 3, 70–84.

13. Dautenhahn, K. Socially intelligent robots: Dimensions of human–robot interaction. Philos. Trans. B Biol. Sci. 2007, 362, 679–704.

14. Breazeal, C. Emotion and sociable humanoid robots. Int. J. Hum.-Comput. Stud. 2003, 59, 119–155.

15. Breazeal, C. Socially intelligent robots. Interactions 2005, 12, 19–22.

16. Arkin, R.C.; Mackenzie, D.C. Planning to Behave: A Hybrid Deliberative/Reactive Robot Control Architecture for Mobile Manipulation. In Proceedings of the International Symposium on Robotics and Manufacturing, Maui, HI, USA, 14–18 August 1994; pp. 5–12.

17. Murphy, R.R. Introduction to AI Robotics, 1st ed.; MIT Press: Cambridge, MA, USA, 2000.

18. Nilsson, N.J. Shakey The Robot. In Technical Report 323; AI Center, SRI International: Menlo Park, CA, USA, 1984.

19. Brooks, R. A robust layered control system for a mobile robot. IEEE J. Robot. Autom. 1986, 2, 14–23.

20. Arkin, R.C. Behavior-Based Robotics; The MIT Press: Cambridge, MA, USA, 1998.

21. Giarratano, J.C.; Riley, G.D. Expert Systems: Principles and Programming; Brooks/Cole Publishing Co.: Pacific Grove, CA, USA, 2005.

22. Jackson, P. Introduction to Expert Systems; Addison-Wesley Pub. Co.: Reading, MA, USA, 1986.

23. Giarratano, J.C.; Riley, G. Expert Systems, 3rd ed.; PWS Publishing Co.: Boston, MA, USA, 1998.

24. Leondes, C.T. Expert Systems, Six-Volume Set: The Technology of Knowledge Management and Decision Making for the 21st Century; Academic Press: Cambridge, MA, USA, 2001.

25. Shortliffe, E.H.; Davis, R.; Axline, S.G.; Buchanan, B.G.; Green, C.C.; Cohen, S.N. Computer-based consultations in clinical therapeutics: Explanation and rule acquisition capabilities of the MYCIN system. Comput. Biomed. Res. 1975, 8, 303–320.

26. Lindsay, R.K.; Buchanan, B.G.; Feigenbaum, E.A.; Lederberg, J. DENDRAL: A case study of the first expert system for scientific hypothesis formation. Artif. Intell. 1993, 61, 209–261.

27. Mazzei, D.; Lazzeri, N.; Hanson, D.; De Rossi, D. HEFES: A hybrid engine for facial expressions synthesis to control human-like androids and avatars. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), IEEE, Rome, Italy, 24–27 June 2012; pp. 195–200.

28. Lazzeri, N.; Mazzei, D.; De Rossi, D. Development and testing of a multimodal acquisition platform for human–robot interaction affective studies. J. Hum.-Robot Interact. 2014, 3, 1–24.

29. Von Uexküll, J. Umwelt und Innenwelt der Tiere; Springer: Berlin/Heidelberg, Germany, 2014.

30. Zaraki, A.; Pieroni, M.; De Rossi, D.; Mazzei, D.; Garofalo, R.; Cominelli, L.; Dehkordi, M.B. Design and Evaluation of a Unique Social Perception System for Human-Robot Interaction. IEEE Trans. Cognit. Dev. Syst. 2016, 9, 341–355.

31. Cominelli, L.; Mazzei, D.; Carbonaro, N.; Garofalo, R.; Zaraki, A.; Tognetti, A.; De Rossi, D. A Preliminary Framework for a Social Robot “Sixth Sense”. In Proceedings of the Conference on Biomimetic and Biohybrid Systems, Edinburgh, UK, 19–22 July 2016; pp. 58–70.

32. Critchley, H.D.; Wiens, S.; Rotshtein, P.; Öhman, A.; Dolan, R.J. Neural systems supporting interoceptive awareness. Nat. Neurosci. 2004, 7, 189–195.

33. Parisi, D. The other half of the embodied mind. Embodied Gr. Cognit. 2011.

34. Qureshi, F.; Terzopoulos, D.; Gillett, R. The cognitive controller: A hybrid, deliberative/reactive control architecture for autonomous robots. In Innovations in Applied Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1102–1111.

35. Bosse, T.; Jonker, C.M.; Treur, J. Formalisation of Damasio’s theory of emotion, feeling and core consciousness. Conscious. Cognit. 2008, 17, 94–113.

36. Damasio, A. Self Comes to Mind: Constructing the Conscious Brain, 1st ed.; Pantheon Books: New York, NY, USA, 2010; p. 367.

37. Mazzei, D.; Cominelli, L.; Lazzeri, N.; Zaraki, A.; De Rossi, D. I-CLIPS Brain: A hybrid cognitive system for social robots. In Biomimetic and Biohybrid Systems; Springer: Berlin/Heidelberg, Germany, 2014; pp. 213–224.

38. Metta, G.; Fitzpatrick, P.; Natale, L. YARP: Yet Another Robot Platform. Int. J. Adv. Robot. Syst. 2006, 3, 43–48.

39. Zaraki, A.; Mazzei, D.; Giuliani, M.; De Rossi, D. Designing and Evaluating a Social Gaze-Control System for a Humanoid Robot. IEEE Trans. Hum.-Mach. Syst. 2014, PP, 1–12.

40. Russell, J.A. The circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

41. Mazzei, D.; Billeci, L.; Armato, A.; Lazzeri, N.; Cisternino, A.; Pioggia, G.; Igliozzi, R.; Muratori, F.; Ahluwalia, A.; De Rossi, D. The FACE of autism. In Proceedings of the 19th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2010), Viareggio, Italy, 13–15 September 2010; pp. 791–796.

42. Mazzei, D.; Greco, A.; Lazzeri, N.; Zaraki, A.; Lanatà, A.; Igliozzi, R.; Mancini, A.; Scilingo, P.E.; Muratori, F.; De Rossi, D. Robotic Social Therapy on Children with Autism: Preliminary Evaluation through Multi-parametric Analysis. In Proceedings of the 2012 International Conference on Social Computing (SocialCom) Privacy, Security, Risk and Trust (PASSAT), Amsterdam, The Netherlands, 3–5 September 2012; pp. 955–960.

43. Lazzeri, N.; Mazzei, D.; Greco, A.; Rotesi, A.; Lanatà, A.; De Rossi, D.E. Can a Humanoid Face be Expressive? A Psychophysiological Investigation. Front. Bioeng. Biotechnol. 2015, 3, 64.

44. 20th Century FOX. Morgan | Robots React to the Morgan Trailer. Available online: https://www.youtube.com/watch?v=JqS8DVPiV0E (accessed on 25 August 2016).

45. Bechara, A.; Damasio, H.; Tranel, D.; Damasio, A.R. Deciding advantageously before knowing the advantageous strategy. Science 1997, 275, 1293–1295.

46. Cominelli, L.; Mazzei, D.; Pieroni, M.; Zaraki, A.; Garofalo, R.; De Rossi, D. Damasio’s Somatic Marker for Social Robotics: Preliminary Implementation and Test. In Biomimetic and Biohybrid Systems; Springer: Berlin/Heidelberg, Germany, 2015; pp. 316–328.

47. Atzori, L.; Iera, A.; Morabito, G. The internet of things: A survey. Comput. Netw. 2010, 54, 2787–2805.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).