Estimation of the Lateral Distance between Vehicle and Lanes Using Convolutional Neural Network and Vehicle Dynamics

Abstract

Featured Application

1. Introduction

- An image synthesis algorithm is proposed that generates a large number of high-quality images from only a few images of the real scene.

- An automatic label annotation algorithm is presented that produces, from the synthetic images, the label images used to train the CNN model.

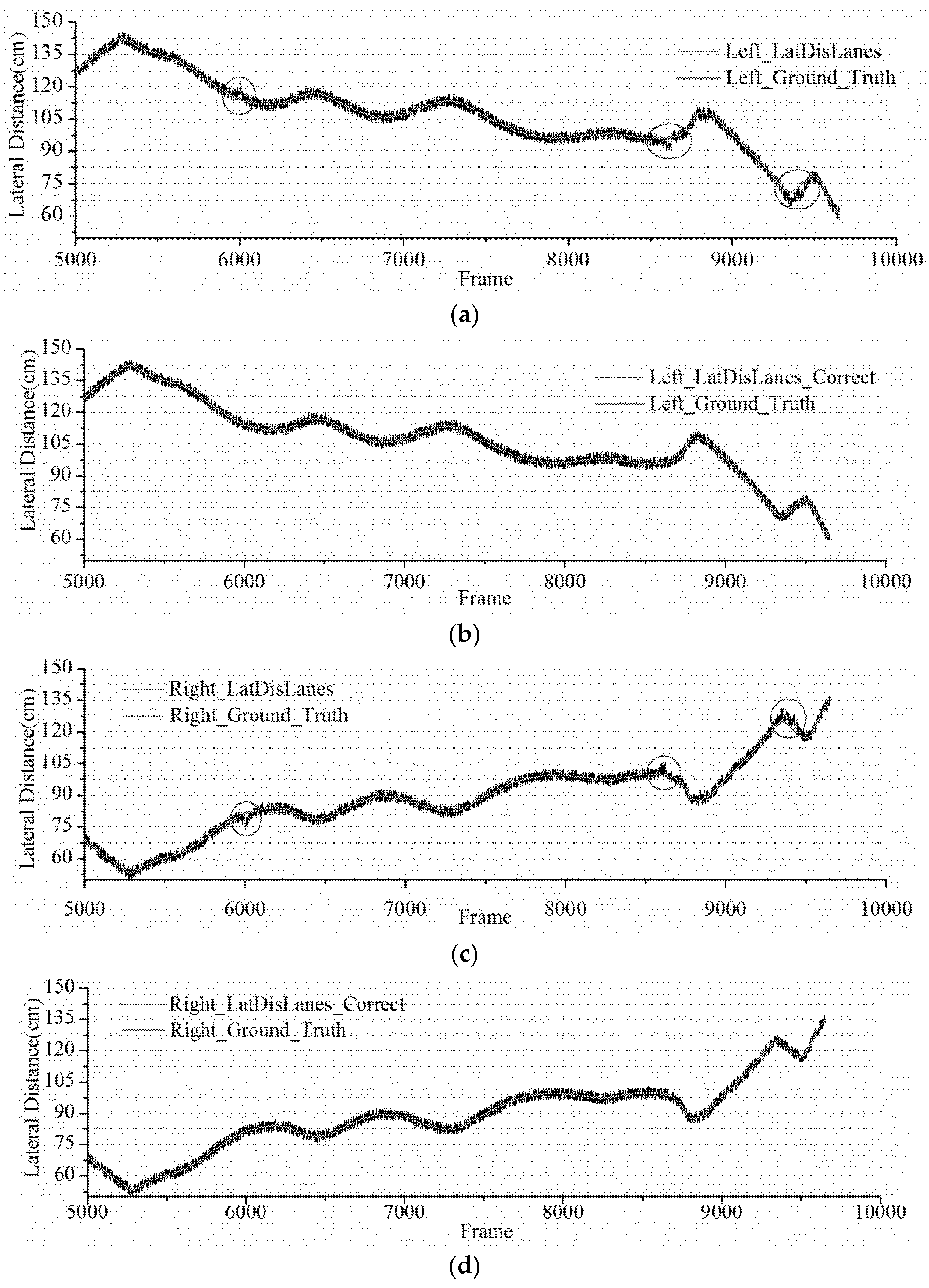

- A CNN model, LatDisLanes, is designed to recognize the lateral distance. Furthermore, a dynamic correction model is proposed to reduce the influence of the vehicle's inclination angle on recognition accuracy.

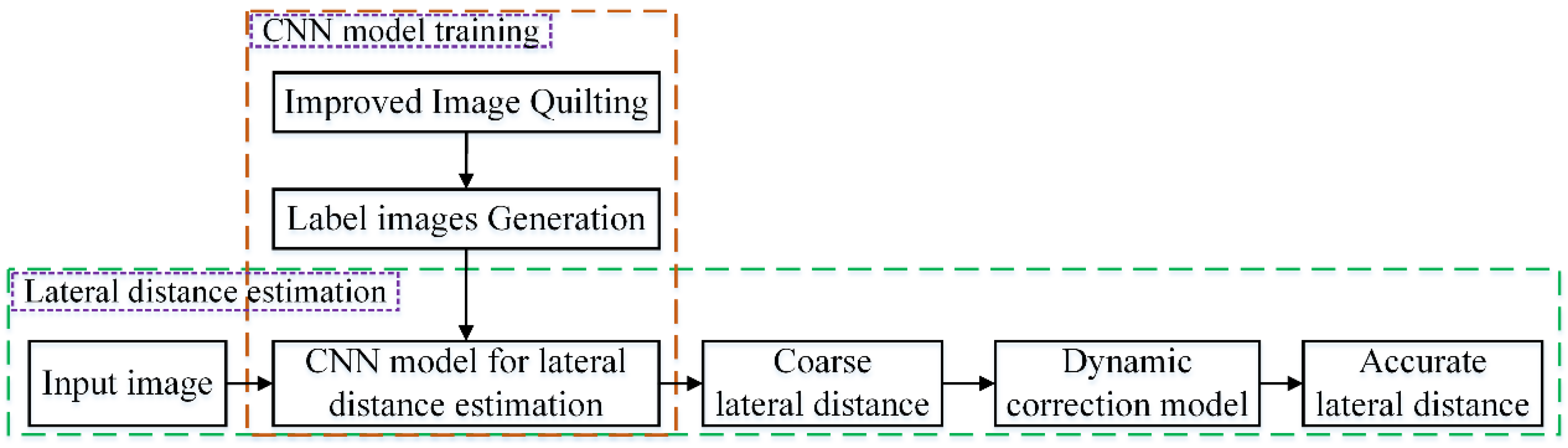

2. Method

2.1. Method of Label Images Synthesis

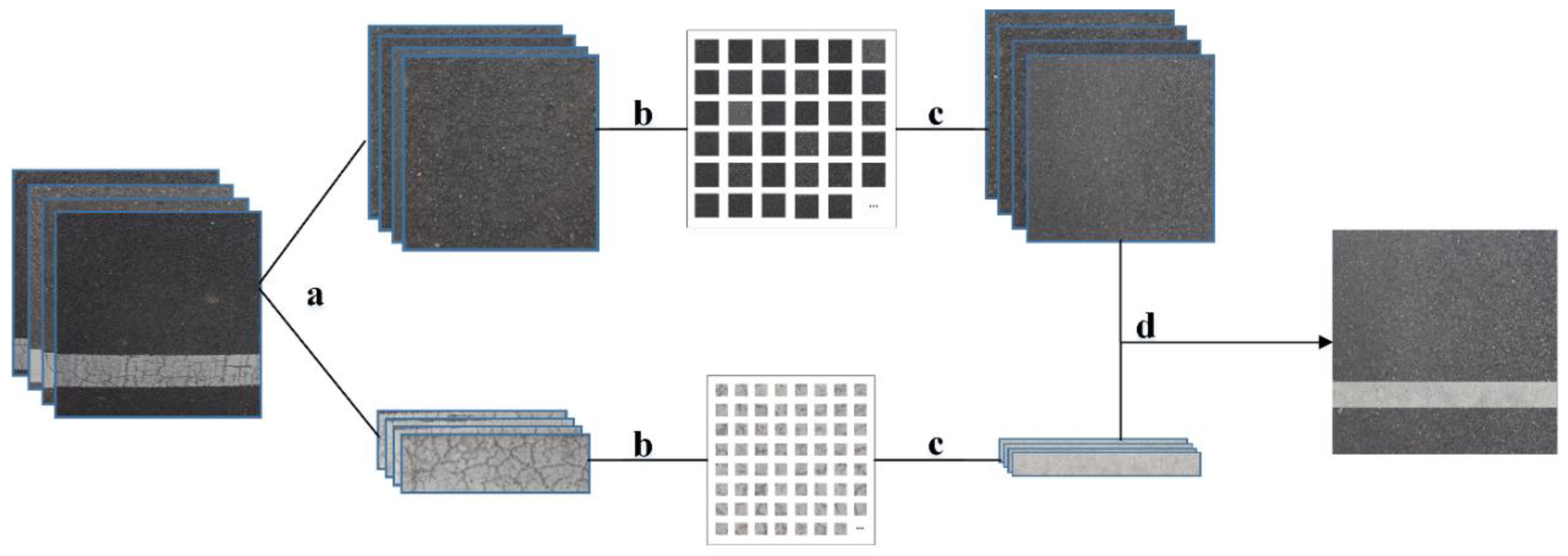

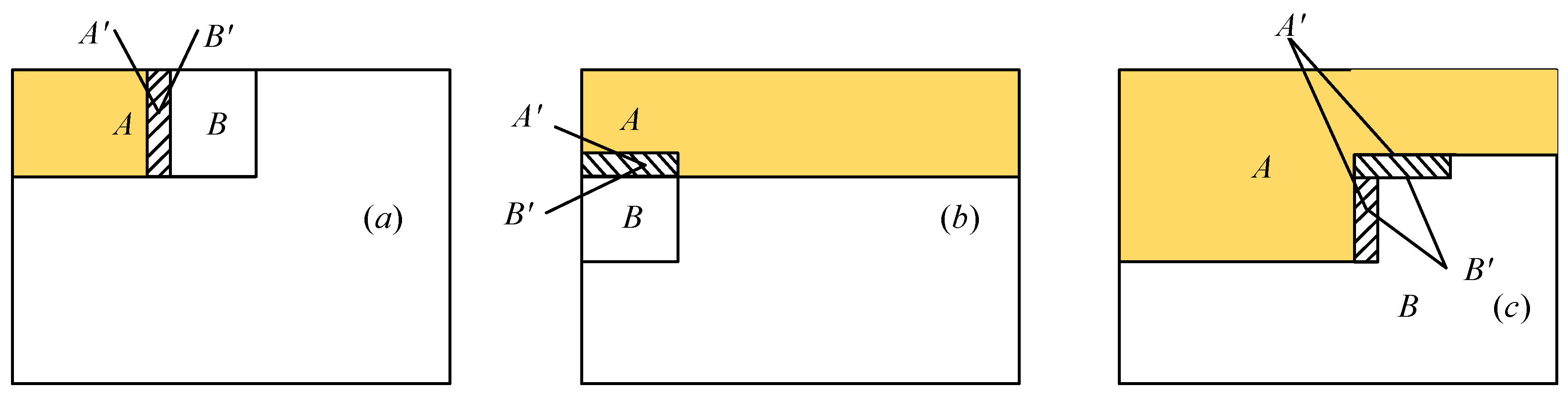

2.1.1. Improved Image Quilting

- Improvement of similarity matching metric

- Improvement of texture block selection
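For orientation, the two steps that this section modifies can be sketched for the classic Image Quilting baseline of Efros and Freeman: blocks are scored by the sum of squared differences (SSD) over the overlap strip, one block is drawn at random from those within a tolerance of the best score, and a minimum-error seam is then cut through the overlap. This is a minimal sketch of that baseline only, not the improved metric or selection rule proposed here; the function names, the `tolerance` parameter, and the left-to-right overlap layout are assumptions made for illustration.

```python
import numpy as np

def overlap_ssd(candidate, left_neighbor, overlap):
    """SSD between the candidate's left strip and the neighbor's right strip."""
    diff = (candidate[:, :overlap].astype(float)
            - left_neighbor[:, -overlap:].astype(float))
    return float(np.sum(diff ** 2))

def pick_block(blocks, left_neighbor, overlap, tolerance=0.1, rng=None):
    """Return the index of a block drawn at random from those whose
    overlap error is within (1 + tolerance) of the smallest error."""
    rng = np.random.default_rng() if rng is None else rng
    errors = np.array([overlap_ssd(b, left_neighbor, overlap) for b in blocks])
    threshold = errors.min() * (1.0 + tolerance)
    candidates = np.flatnonzero(errors <= threshold)
    return int(rng.choice(candidates))

def min_error_cut(err):
    """Minimum-cost vertical seam through an (H, W) error surface.

    Returns, for each row, the column index of the seam. The seam may
    move by at most one column between consecutive rows.
    """
    height, width = err.shape
    cost = err.astype(float).copy()
    # Forward pass: accumulate the cheapest path cost from the top row.
    for i in range(1, height):
        for j in range(width):
            lo, hi = max(j - 1, 0), min(j + 2, width)
            cost[i, j] += cost[i - 1, lo:hi].min()
    # Backward pass: trace the cheapest path from the bottom row upward.
    seam = np.empty(height, dtype=int)
    seam[-1] = int(np.argmin(cost[-1]))
    for i in range(height - 2, -1, -1):
        j = seam[i + 1]
        lo, hi = max(j - 1, 0), min(j + 2, width)
        seam[i] = lo + int(np.argmin(cost[i, lo:hi]))
    return seam
```

A typical call would pass the list of candidate texture blocks and the block already placed to the left to `pick_block`, then compute the squared difference over the overlap strip and hand it to `min_error_cut` to decide which pixels come from which block.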

2.1.2. Generation of Raw Labelled Images

- Generation of road shape

- Lane edge filtering

- Uniform wear

- Texture consistency

2.1.3. Label Image Process

- Dirt addition

- Shadow addition

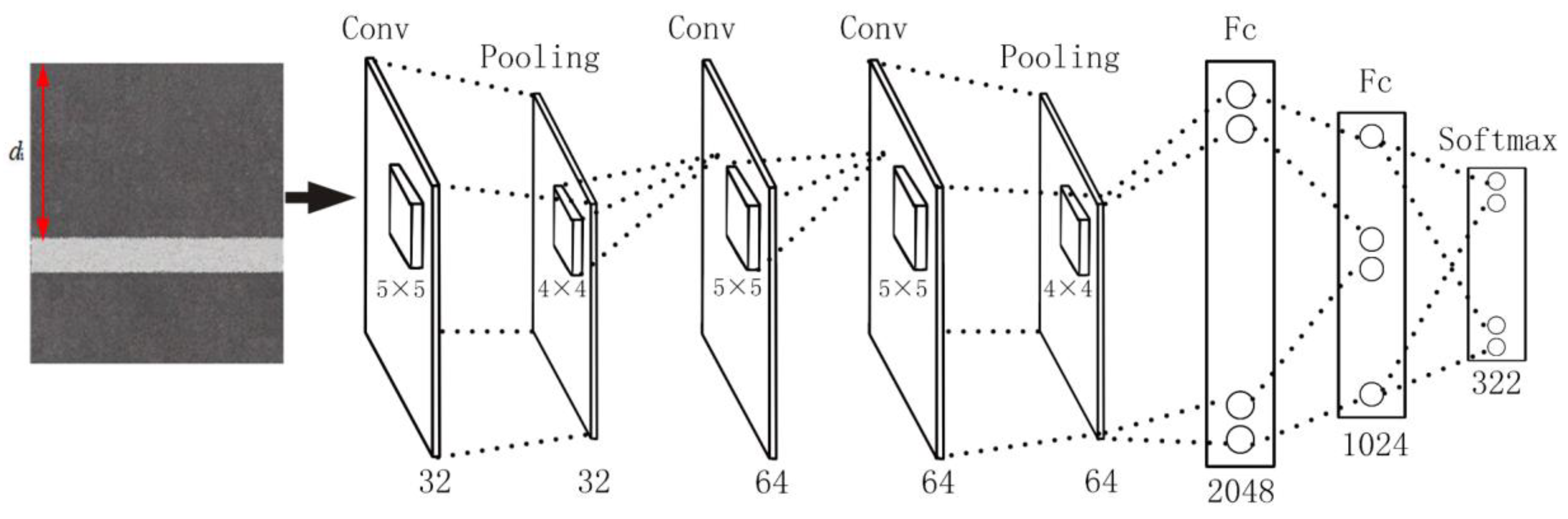

2.2. Recognition Model for Estimating Lateral Distance

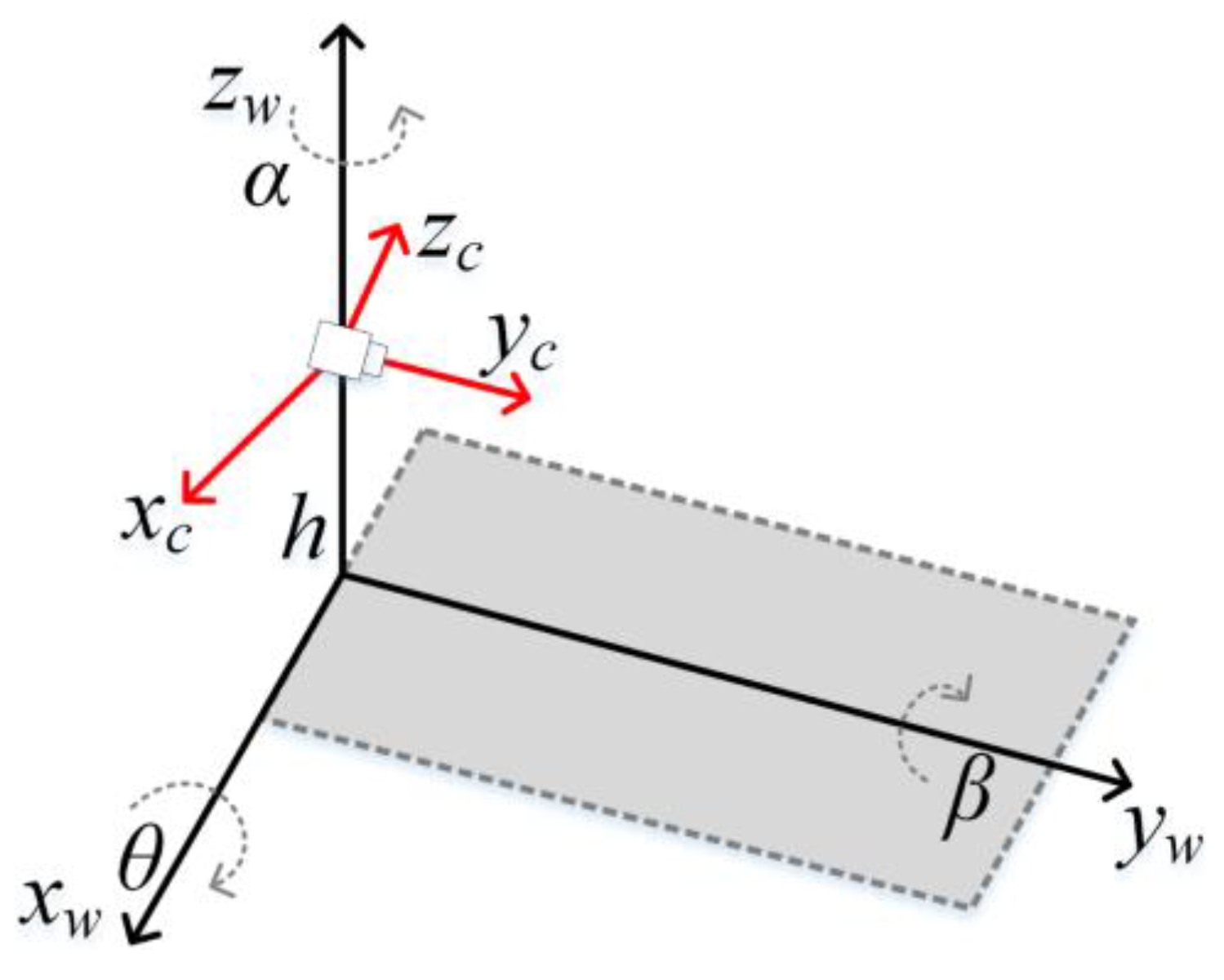

2.3. Dynamic Correction Model

- Transformation from world coordinates to camera coordinates

- Transformation from camera coordinates to image coordinates

- Transformation from image coordinates to pixel coordinates
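The three transformations listed above form the standard pinhole camera chain, which can be sketched as follows. This is a generic illustration under stated assumptions, not the dynamic correction model itself: the rotation `R`, translation `t`, focal length `f`, pixel pitches `dx` and `dy`, and principal point `(u0, v0)` are hypothetical parameter names, and lens distortion is ignored.

```python
import numpy as np

def world_to_camera(p_world, R, t):
    """Rigid transform into the camera frame: X_c = R @ X_w + t."""
    return R @ np.asarray(p_world, dtype=float) + t

def camera_to_image(p_cam, f):
    """Perspective projection onto the image plane (same length unit as f)."""
    X, Y, Z = p_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return np.array([f * X / Z, f * Y / Z])

def image_to_pixel(p_img, dx, dy, u0, v0):
    """Scale metric image coordinates by the pixel pitch and shift the
    origin to the principal point to obtain pixel coordinates (u, v)."""
    x, y = p_img
    return np.array([x / dx + u0, y / dy + v0])

def project(p_world, R, t, f, dx, dy, u0, v0):
    """Chain the three steps: world -> camera -> image -> pixel."""
    p_cam = world_to_camera(p_world, R, t)
    p_img = camera_to_image(p_cam, f)
    return image_to_pixel(p_img, dx, dy, u0, v0)
```

With an identity rotation and zero translation, for example, `project([0.5, 0.25, 10.0], np.eye(3), np.zeros(3), f=0.004, dx=2e-6, dy=2e-6, u0=640.0, v0=360.0)` maps a point 10 m ahead of the camera to a pixel to the right of and below the principal point. A tilt of the vehicle body changes `R`, which is why an inclination angle shifts where a lane edge appears in the image.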

3. Experiment and Discussion

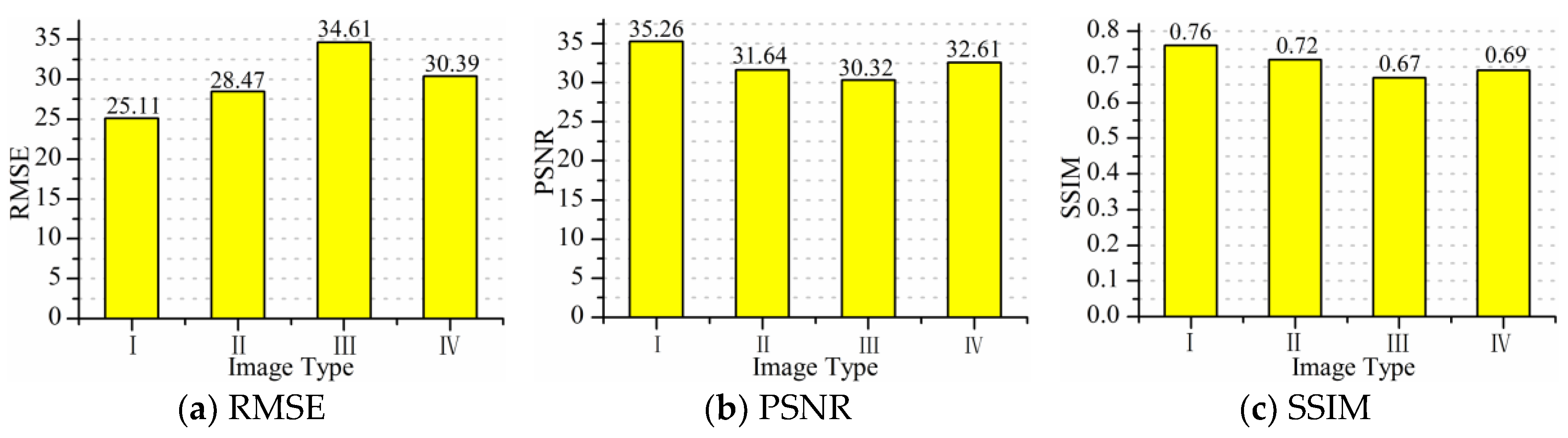

3.1. Evaluation of the Improved Image Quilting

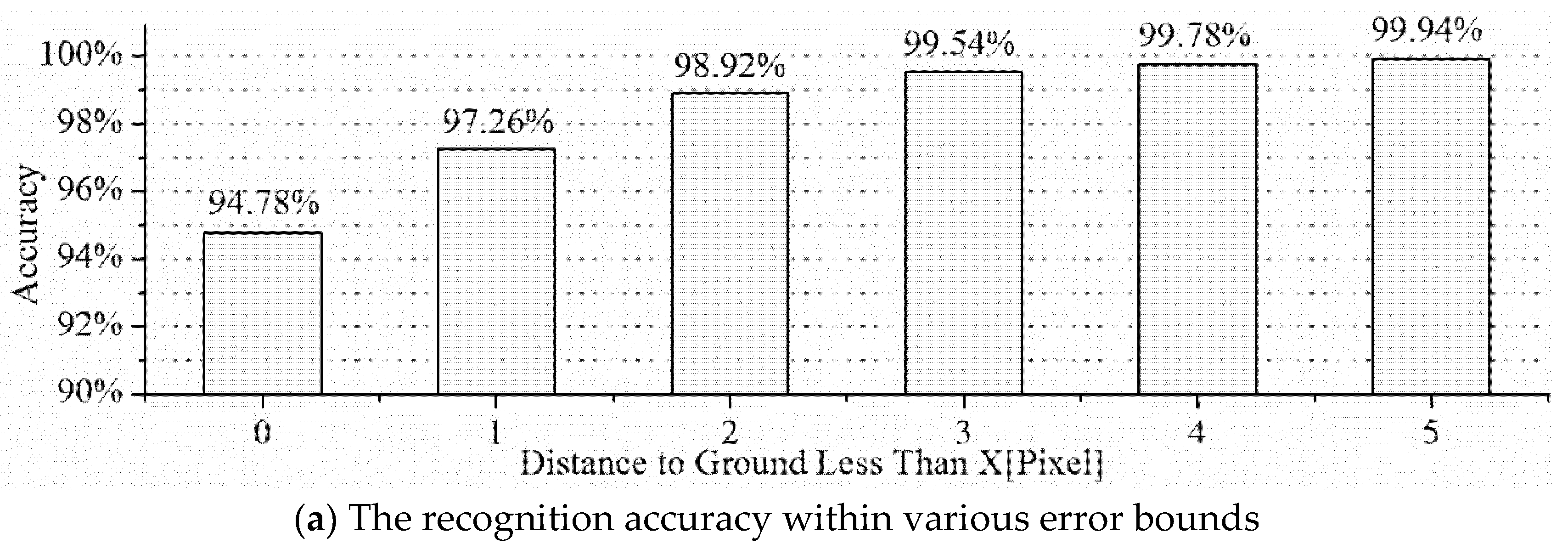

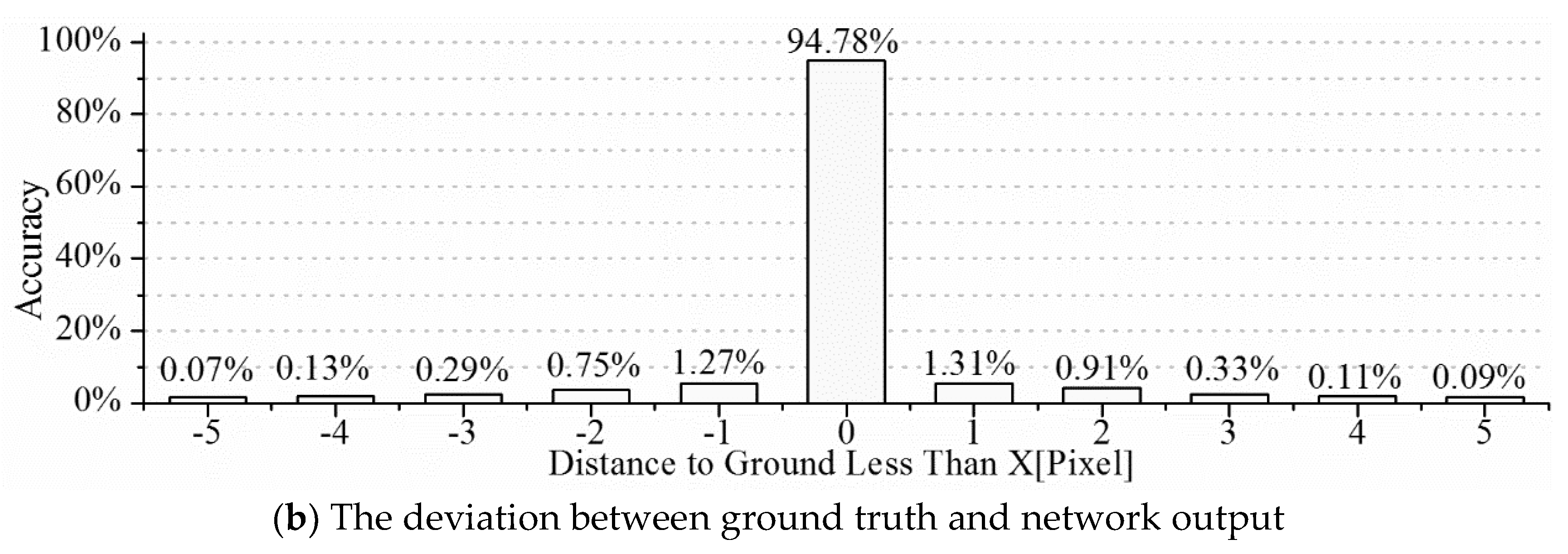

3.2. Evaluation of the LatDisLanes

3.3. Experiment of the Corrected Model

4. Conclusions

- An image synthesis algorithm based on Image Quilting is proposed. By improving the texture block matching metric and the block selection method, it can rapidly synthesize numerous high-quality lane and asphalt images.

- An automatic label annotation algorithm is presented, in which the distance between the lane edge and the asphalt is set as the label and the effects of light, road wear, and dirt are taken into account. The experiment shows that the resulting label images are of high quality and can be used for model training.

- A deep CNN, LatDisLanes, is constructed that recognizes the lateral distance with sub-centimeter precision. Furthermore, a dynamic correction model is proposed to reduce the recognition error caused by the inclination angle of the vehicle. The experiment, conducted at i-VISTA, showed that an inclination angle greater than 3° causes a larger error, which the proposed correction model reduces.

Author Contributions

Funding

Conflicts of Interest

| Image Type | Texture Block Size (pixel × pixel) | Block Number | α | β | Output Image Size (pixel × pixel) | Synthesis Time (s) |
|---|---|---|---|---|---|---|
| Lane | 8 × 30 | 2500 | 0.91 | 0.09 | 32 × 360 | 7.73 |
| Road | 30 × 30 | 2500 | 0.65 | 0.35 | 360 × 360 | 20.18 |

| Image Category | Description | Nl | Lth | Hth | γ | Ls | c |
|---|---|---|---|---|---|---|---|
| I | No wear, no dirt, no shadows | 2 | 130 | 120 | 0.3 | 20 | 5 |
| II | Light wear, dirt, no shadows | 2 | 97 | 159 | 0.5 | 20 | 5 |
| III | Middle wear, dirt, shadows | 2 | 10 | 215 | 0.6 | 20 | 75 |
| IV | Severe wear, no dirt, shadows | 2 | 10 | 215 | 0.6 | 20 | 82 |

| Dataset Name | Number |
|---|---|
| Training data | 175,000 |
| Validation data | 37,500 |
| Test data | 37,500 |
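The counts in the table above imply the split proportions, which the short check below makes explicit. Only the three counts come from the table; the variable names are illustrative.

```python
# Image counts taken from the dataset table.
splits = {"training": 175_000, "validation": 37_500, "test": 37_500}

total = sum(splits.values())
fractions = {name: count / total for name, count in splits.items()}

for name, frac in fractions.items():
    print(f"{name}: {splits[name]:,} images ({frac:.0%} of {total:,})")
```

The three sets add up to 250,000 images, divided 70% / 15% / 15% between training, validation, and test.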

| Light Condition | Average Error (cm) |
|---|---|
| Lateral supplementary light | 0.825 |
| Streetlights | 4.296 |
| No streetlights | 53.472 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Yang, W.; Tang, X.; He, Z. Estimation of the Lateral Distance between Vehicle and Lanes Using Convolutional Neural Network and Vehicle Dynamics. Appl. Sci. 2018, 8, 2508. https://doi.org/10.3390/app8122508
