Convolution Neural Network with Selective Multi-Stage Feature Fusion: Case Study on Vehicle Rear Detection

Abstract

1. Introduction

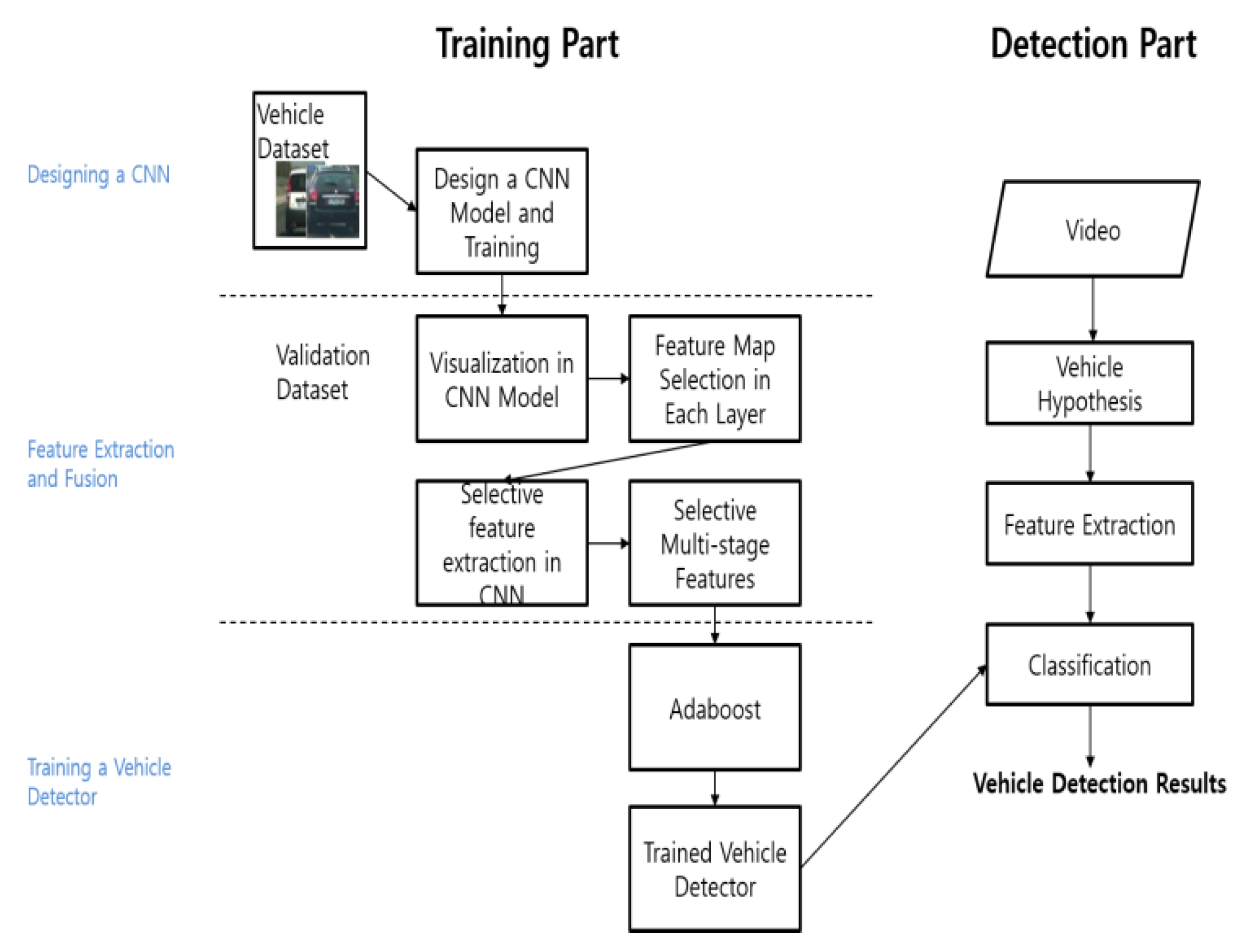

2. Vehicle Detection Framework Using Selective Multi-Stage Features

2.1. Related Works

2.2. Methodology Overview

- CNN model design. We designed a CNN model for the proposed vehicle detection framework. The design matters because the features that can be extracted depend on the model structure, which must allow the characteristic information in vehicle images to be learned. We trained the CNN model on a prepared vehicle dataset using stochastic gradient descent with momentum and back-propagation.

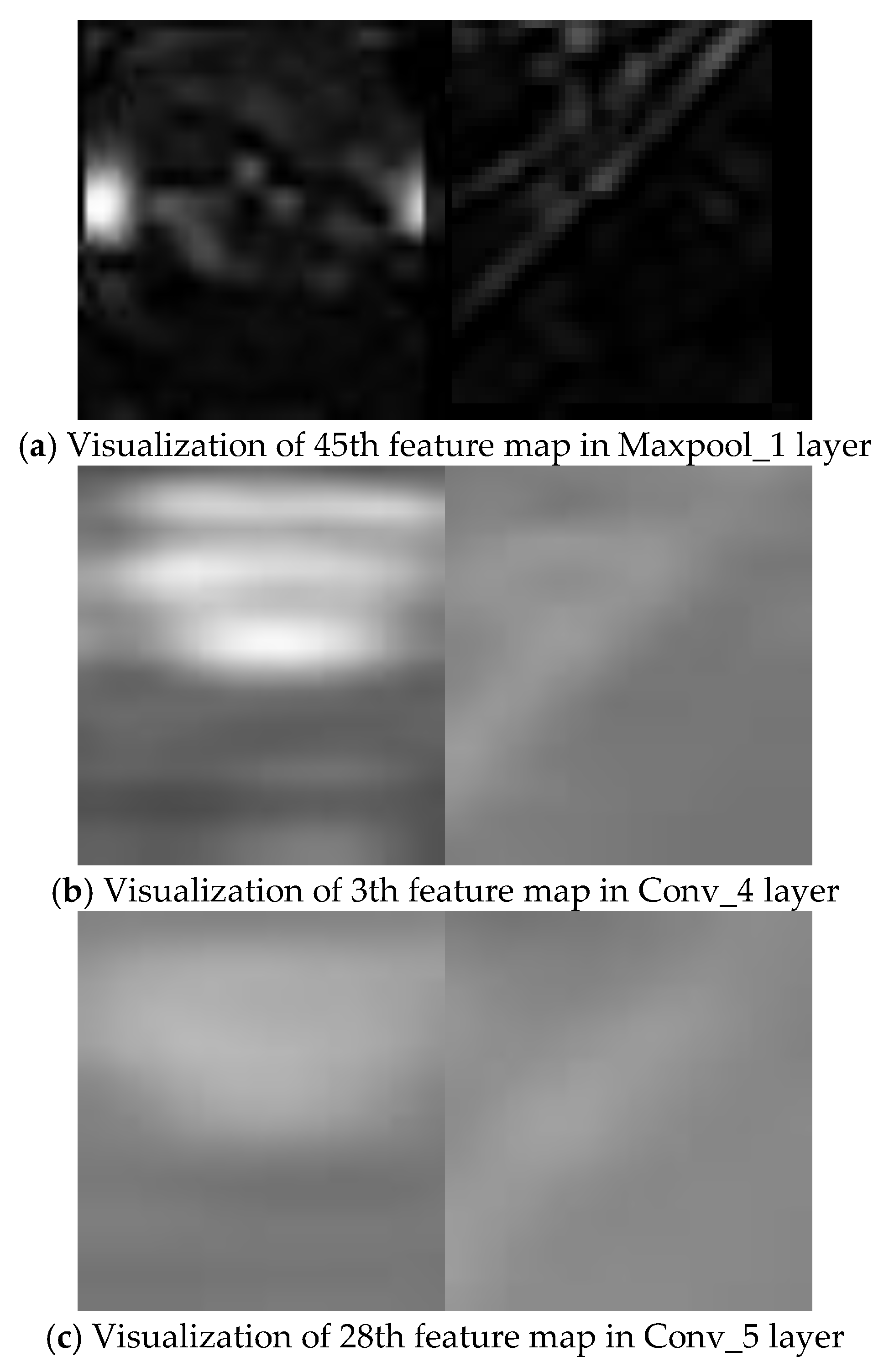

- Feature extraction and fusion. We extracted and fused the features containing the characteristic vehicle image information from the trained CNN model, using a visualization technique that examined the feature maps of each model layer. This step is critical because the visualization indicated that we do not need to use all the available feature maps; we can selectively extract only those that contain the characteristic vehicle image information.

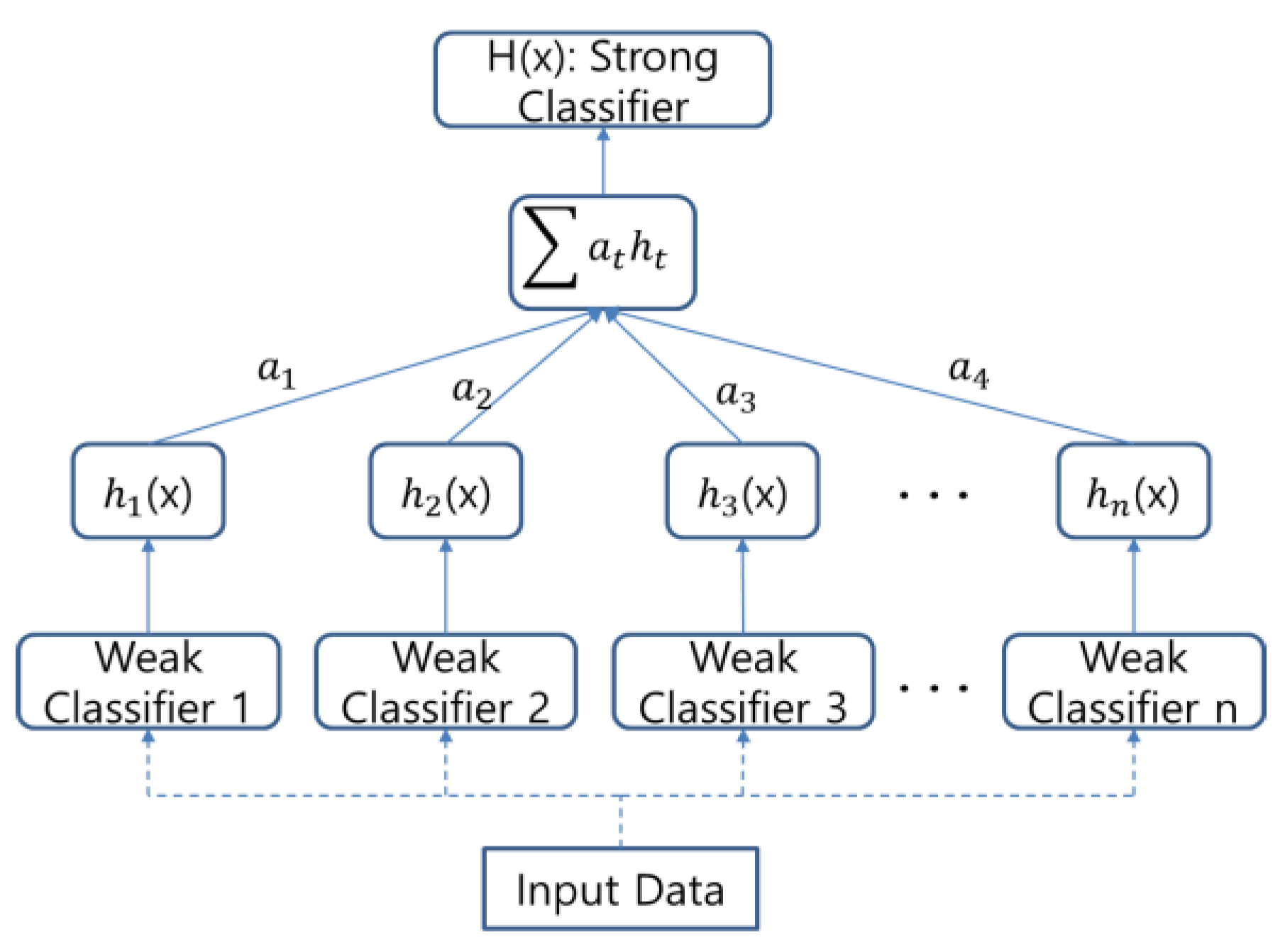

- Detector training. We trained the detector with the extracted features using the AdaBoost algorithm.
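The detector-training step can be illustrated with a minimal sketch of discrete AdaBoost over decision stumps. This is not the authors' implementation: the stump weak learner, the function names (`fit_stump`, `adaboost_fit`, `adaboost_predict`), and the label convention (+1 for vehicle, -1 for non-vehicle) are assumptions made for illustration, and `X` stands in for the fused feature vectors.

```python
import numpy as np

def stump_predict(X, j, thr, pol):
    """Predict +1/-1 by thresholding feature j (pol flips the direction)."""
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)

def fit_stump(X, y, w):
    """Exhaustively search for the stump with the lowest weighted error."""
    best = (np.inf, 0, 0.0, 1)  # (error, feature, threshold, polarity)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                pred = stump_predict(X, j, thr, pol)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, j, thr, pol)
    return best

def adaboost_fit(X, y, n_rounds=10):
    """Train discrete AdaBoost; y must contain labels in {-1, +1}."""
    n = X.shape[0]
    w = np.full(n, 1.0 / n)            # start from uniform sample weights
    model = []
    for _ in range(n_rounds):
        err, j, thr, pol = fit_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)   # avoid log(0) and division by zero
        alpha = 0.5 * np.log((1 - err) / err)  # weight of this weak learner
        pred = stump_predict(X, j, thr, pol)
        w *= np.exp(-alpha * y * pred)         # up-weight misclassified samples
        w /= w.sum()
        model.append((alpha, j, thr, pol))
    return model

def adaboost_predict(model, X):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * stump_predict(X, j, thr, pol) for a, j, thr, pol in model)
    return np.where(score >= 0, 1, -1)

if __name__ == "__main__":
    # Synthetic stand-in for fused CNN features (hypothetical data).
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 8))
    y = np.where(X[:, 2] + X[:, 5] > 0, 1, -1)
    model = adaboost_fit(X, y, n_rounds=20)
    print("training accuracy:", (adaboost_predict(model, X) == y).mean())
```

The exhaustive stump search is quadratic in the number of samples per feature, so it only suits small illustrative datasets; a practical detector would use a library implementation or sorted-threshold search.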

2.3. Designing and Training the CNN Model

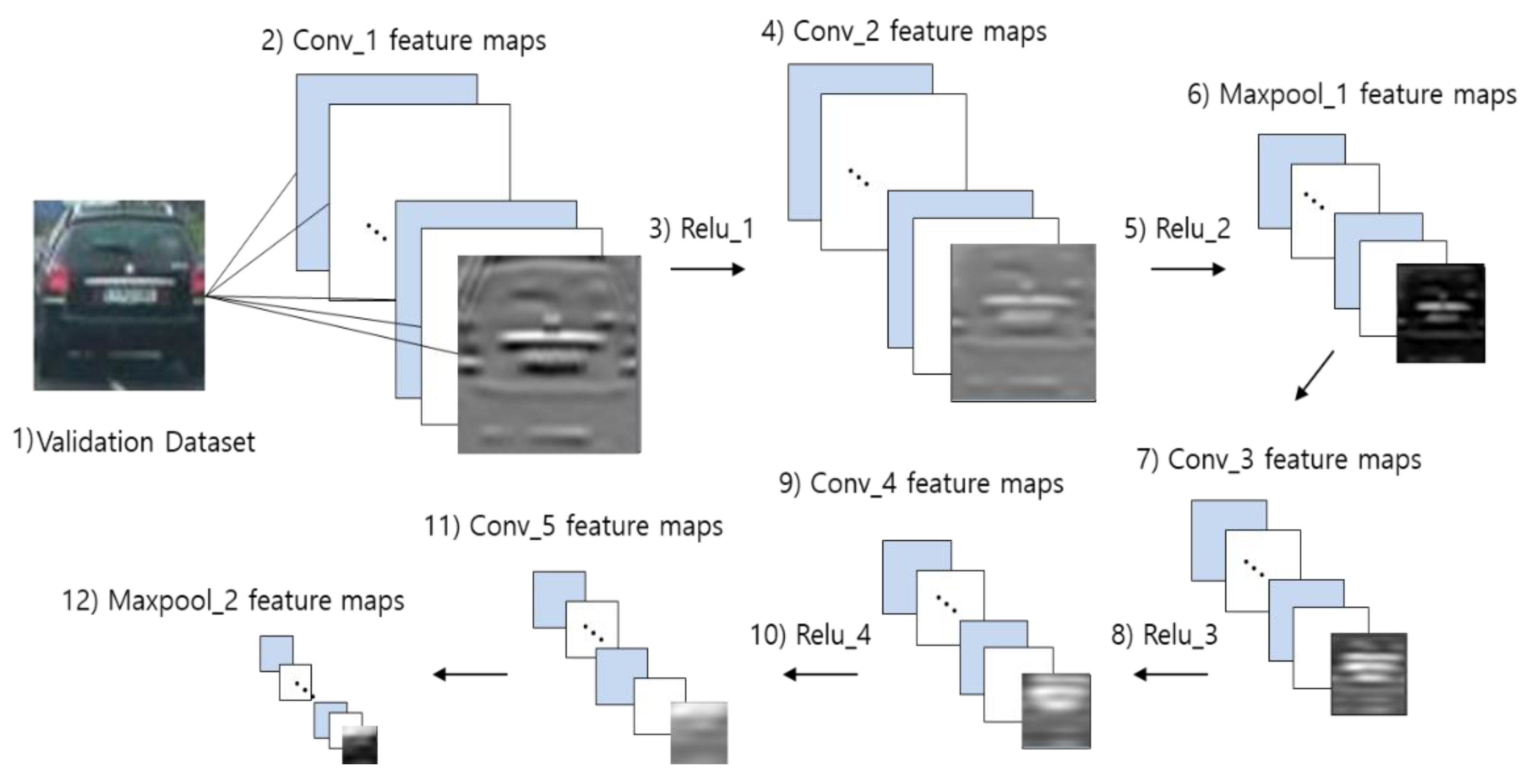

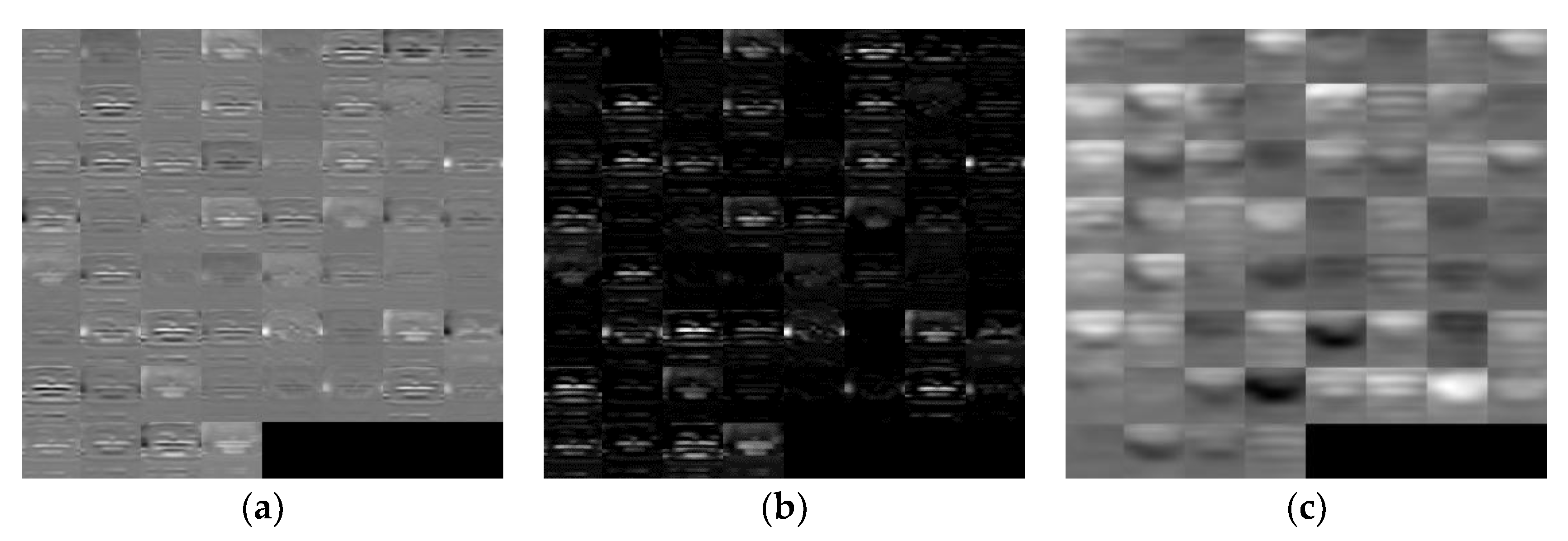

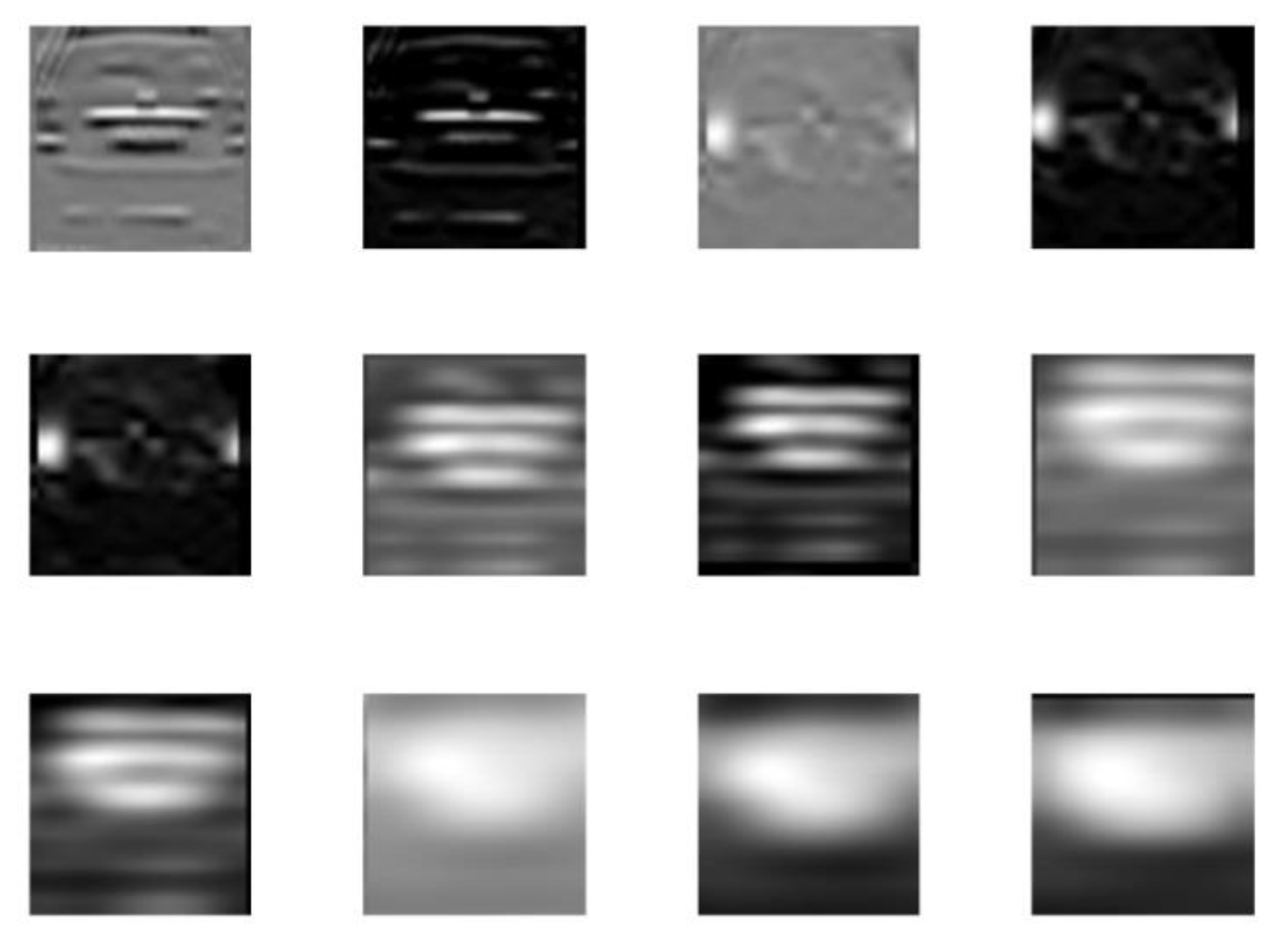

2.4. Selective Multi-Stage Feature Extraction

- The second convolution layer learns more edge information in the x direction. Its feature maps therefore represent richer low-level features than those of the first convolution layer, so when low-level features are included in the fusion process, the second-layer feature maps are the more appropriate choice.

- Some feature maps respond to the vehicle head lamp, because the second convolution layer also captures low-level color information. The head lamp area carries characteristic vehicle information that distinguishes vehicles from other objects, and this information was not found in the other layers. Therefore, the feature map that contains the head lamp information must be included in the fusion process.
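Selecting only some feature maps from several layers and joining them into one descriptor can be sketched as follows. The layer names, channel counts, spatial sizes, and selected channel indices are all hypothetical placeholders, and plain flatten-and-concatenate is an assumed fusion rule rather than the one confirmed by the source.

```python
import numpy as np

def fuse_selected_maps(activations, selection):
    """Flatten the chosen feature maps of each layer and concatenate them.

    activations: dict mapping layer name -> array of shape (C, H, W)
    selection:   dict mapping layer name -> list of channel indices to keep
    """
    parts = []
    for layer, channels in selection.items():
        maps = activations[layer][channels]   # shape (k, H, W), k = len(channels)
        parts.append(maps.reshape(-1))        # flatten to a 1-D vector
    return np.concatenate(parts)

# Hypothetical activations for one input image.
rng = np.random.default_rng(0)
activations = {
    "conv2": rng.random((16, 56, 56)),
    "maxp1": rng.random((16, 28, 28)),
    "conv5": rng.random((32, 16, 16)),
}
# Hypothetical choice of informative channels per layer.
selection = {"conv2": [3, 7], "maxp1": [0], "conv5": [1, 4, 5]}

fused = fuse_selected_maps(activations, selection)
print(fused.shape)
```

Because only the selected channels are kept, the fused vector is much shorter than the concatenation of every feature map, which is the practical benefit the selective approach aims for.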

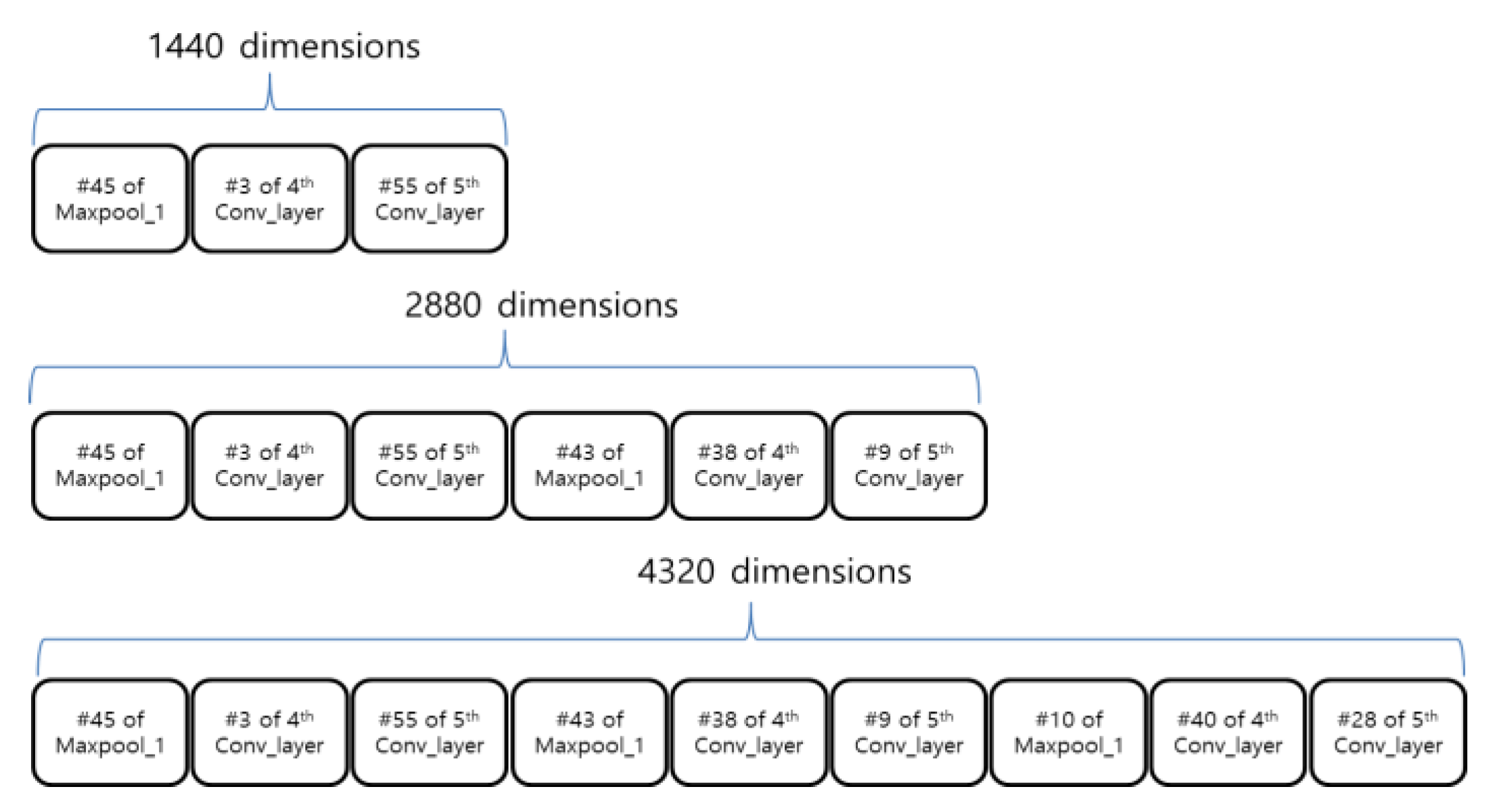

2.5. Selective Multi-Stage Feature Fusion

2.6. Vehicle Detector Trained by AdaBoost

3. Experiments and Comparisons

3.1. Environments

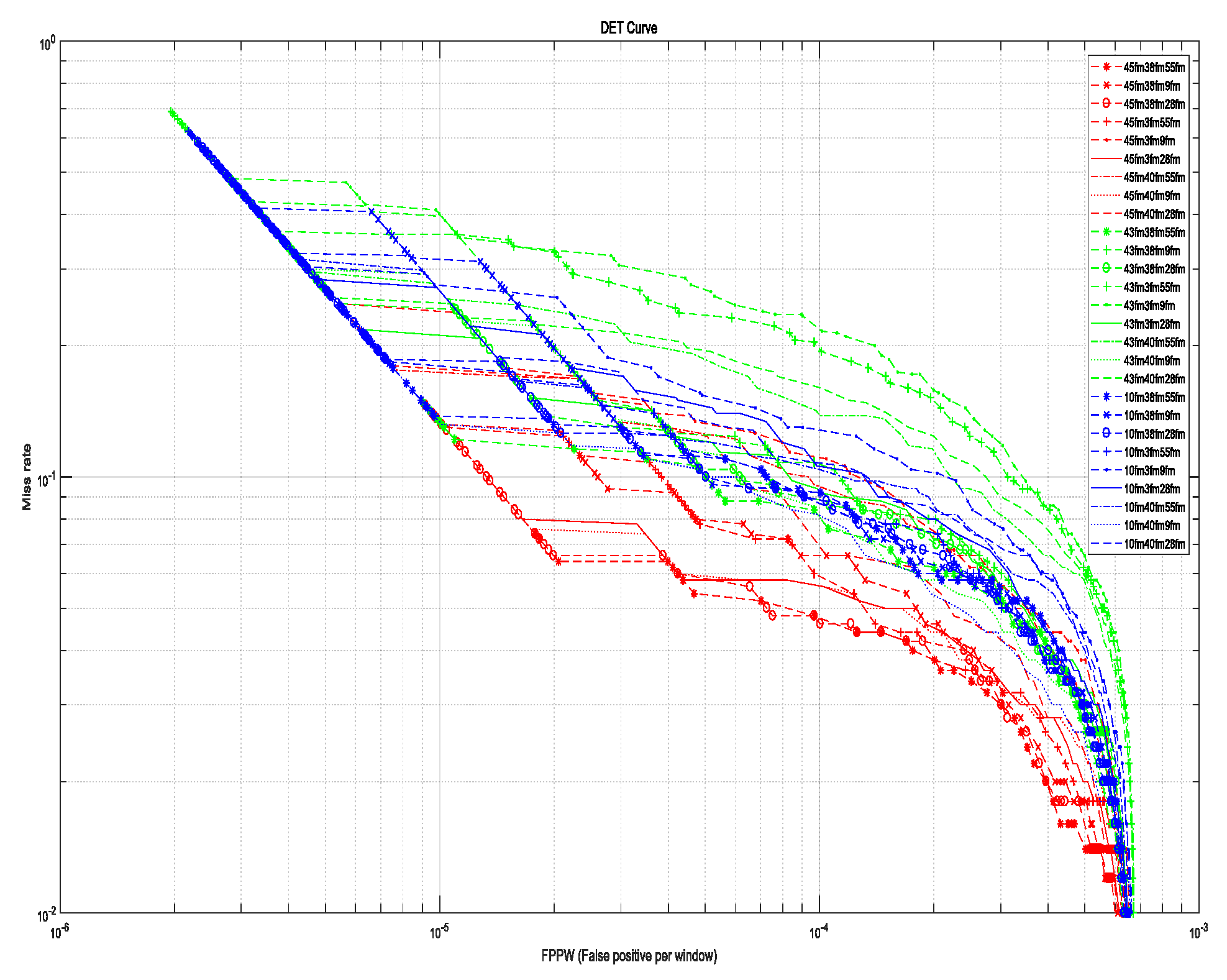

3.2. Feature Sets

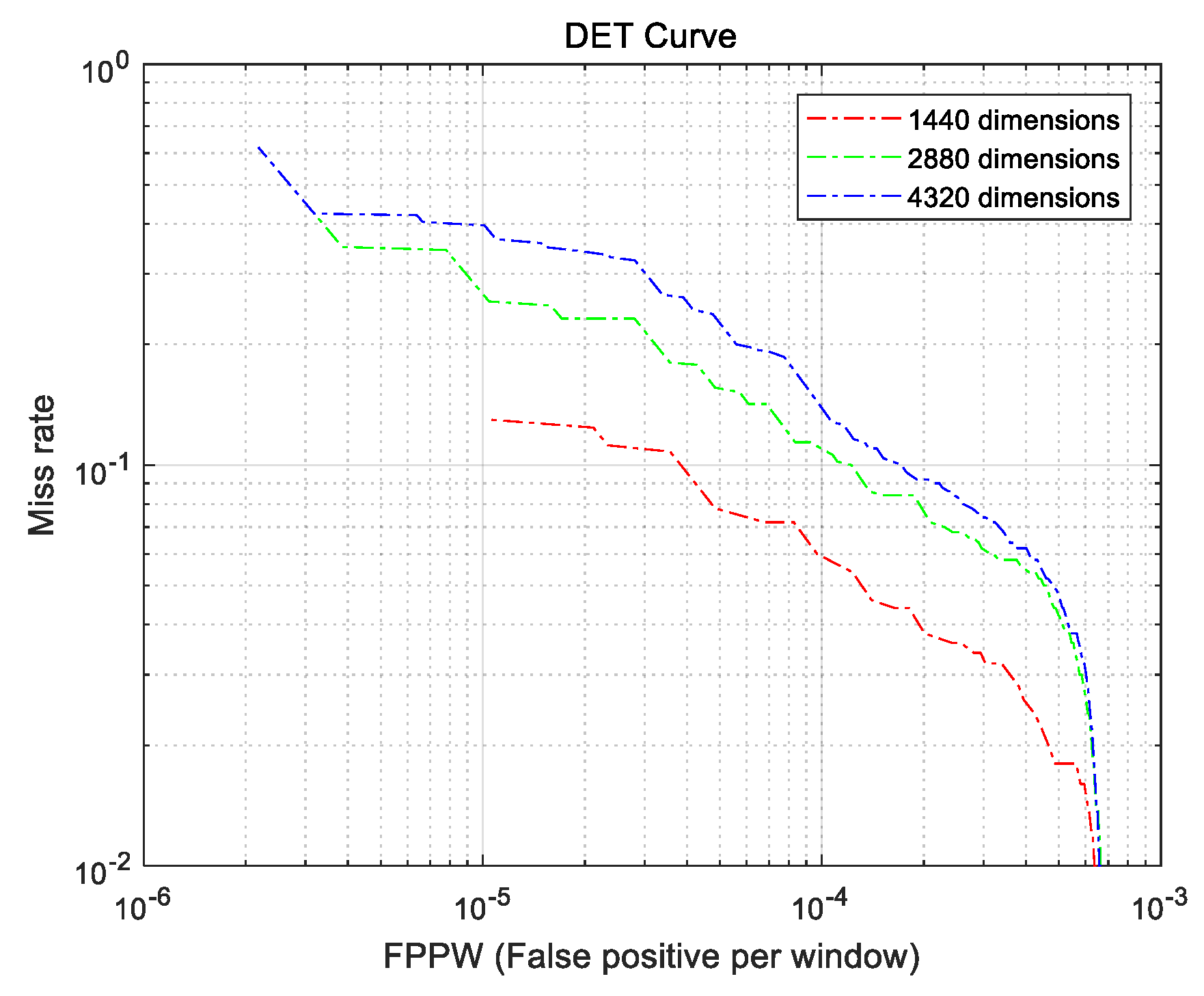

3.3. Experiment 1

3.4. Experiment 2

3.5. Performance Comparisons

4. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Bila, C.; Sivrikaya, F.; Khan, M.A.; Albayrak, S. Vehicles of the future: A survey of research on safety issues. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1046–1065.

- Sivaraman, S.; Trivedi, M.M. Looking at vehicles on the road: A survey of vision-based vehicle detection, tracking and behavior analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795.

- Zhu, H.; Yuen, K.V.; Mihaylova, L.; Leung, H. Overview of environment perception for intelligent vehicles. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2584–2601.

- Teoh, S.S.; Bräunl, T. Symmetry-based monocular vehicle detection system. Mach. Vis. Appl. 2012, 23, 831–842.

- Neumann, D.; Langner, T.; Ulbrich, F.; Spitta, D.; Goehring, D. Online vehicle detection using Haar-like, LBP and HOG feature based image classifiers with stereo vision preselection. In Proceedings of the Intelligent Vehicles Symposium (IV), Anchorage, AK, USA, 11–14 June 2017; pp. 773–778.

- Martinez, E.; Diaz, M.; Melenchon, J.; Montero, J.; Iriondo, I.; Socoro, J. Driving assistance system based on the detection of head-on collisions. In Proceedings of the IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 913–918.

- Perrollaz, M.; Yoder, J.D.; Negre, A.; Spalanzani, A.; Laugier, C. A visibility-based approach for occupancy grid computation in disparity space. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1383–1393.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Simard, P.Y.; Steinkraus, D.; Platt, J.C. Best practices for convolutional neural networks applied to visual document analysis. In Proceedings of the 7th International Conference on Document Analysis and Recognition, Edinburgh, UK, 3–6 August 2003.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8689.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 2nd International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014; pp. 1–14.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- The GTI-UPM Vehicle Image Database. Available online: https://www.gti.ssr.upm.es/data/Vehicle_database.html (accessed on 1 December 2017).

- Martin, A.; Doddington, A.G.; Kamm, T.; Ordowski, M.; Przybocki, M. The DET curve in assessment of detection task performance. In Proceedings of the 5th European Conference on Speech Communication and Technology (EUROSPEECH ’97), Rhodes, Greece, 22–25 September 1997; pp. 1895–1898.

| Layer | Conv_1 | Conv_2 | Maxp_1 | Conv_3 | Conv_4 | Conv_5 | Maxp_2 | Full_1, 2 |
|---|---|---|---|---|---|---|---|---|
| Size | 5 × 5 | 5 × 5 | 2 × 2 | 5 × 5 | 5 × 5 | 5 × 5 | 2 × 2 | 2500 |
| Stride | 1 | 1 | 2 | 1 | 1 | 1 | 2 | |
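As a worked check of the layer table above, the spatial size after each layer follows from the kernel size and stride. The sketch below assumes a 64 × 64 input and no padding; neither is stated in the table, so the resulting sizes are illustrative only.

```python
def out_size(n, kernel, stride):
    """Output width of a convolution or pooling layer without padding."""
    return (n - kernel) // stride + 1

# (name, kernel size, stride) taken from the layer table.
LAYERS = [
    ("Conv_1", 5, 1),
    ("Conv_2", 5, 1),
    ("Maxp_1", 2, 2),
    ("Conv_3", 5, 1),
    ("Conv_4", 5, 1),
    ("Conv_5", 5, 1),
    ("Maxp_2", 2, 2),
]

def trace_sizes(input_size, layers=LAYERS):
    """Return [(layer name, output width)] for a square input."""
    sizes = []
    n = input_size
    for name, kernel, stride in layers:
        n = out_size(n, kernel, stride)
        sizes.append((name, n))
    return sizes

# Assumed 64 x 64 input (not given in the table).
for name, n in trace_sizes(64):
    print(f"{name}: {n} x {n}")
```

Under these assumptions each 5 × 5 convolution trims four pixels per side length and each 2 × 2 pooling layer halves it, so the maps entering the fully connected layers are far smaller than the input.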

| | Maxpool_1 | 4th Convolution Layer | 5th Convolution Layer |
|---|---|---|---|
| Max 1 | 45 | 3 | 55 |
| Max 2 | 43 | 38 | 9 |
| Max 3 | 10 | 40 | 28 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, W.-J.; Kim, D.W.; Kang, T.-K.; Lim, M.-T. Convolution Neural Network with Selective Multi-Stage Feature Fusion: Case Study on Vehicle Rear Detection. Appl. Sci. 2018, 8, 2468. https://doi.org/10.3390/app8122468