Adaptive Framework for Multi-Feature Hybrid Object Tracking

Abstract

1. Introduction

2. Materials and Methods

- The proposed AMF-MSPF framework adds a feature ranking module on top of the MSPF (mean shift embedded particle filter) methodology. The result is an adaptive multi-feature framework that selects features on demand, which enables the object tracking algorithm to discriminate the object locally. The feature ranking module re-initializes the mean shift (MS) procedure with new features and is triggered by re-sampling: whenever re-sampling occurs, the ranking module selects a new set of features, which are then used to update the target model.

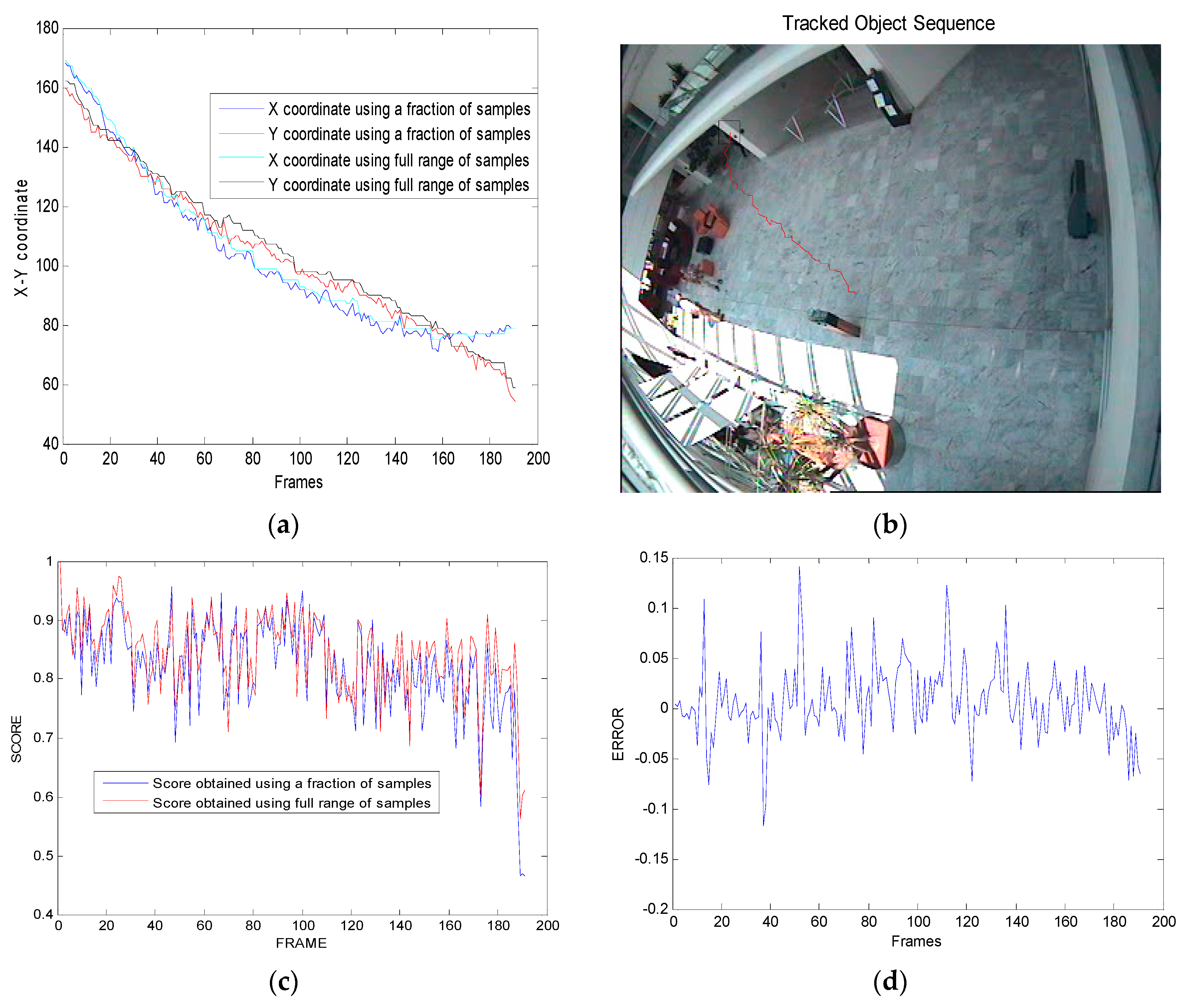
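The re-sampling-triggered feature selection described above can be illustrated with a minimal sketch. The ranking criterion is not given in this text, so the sketch assumes a simple one: each candidate feature is scored by the Bhattacharyya distance between its object histogram and its background histogram, and the top `k` features are kept. The function names, the histogram inputs, and `k` are all hypothetical.

```python
import math

def normalize(hist):
    """Scale a histogram so that its bins sum to 1."""
    total = float(sum(hist))
    return [h / total for h in hist] if total > 0 else [0.0] * len(hist)

def bhattacharyya_distance(p, q):
    """Distance in [0, 1] between two normalized histograms (0 = identical)."""
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return math.sqrt(max(0.0, 1.0 - bc))

def rank_features(object_hists, background_hists):
    """Return feature names sorted from most to least discriminative.

    Assumption: discriminability = object/background histogram distance.
    """
    scores = {
        name: bhattacharyya_distance(normalize(object_hists[name]),
                                     normalize(background_hists[name]))
        for name in object_hists
    }
    return sorted(scores, key=scores.get, reverse=True)

def select_features(resampled, current, object_hists, background_hists, k=2):
    """Re-rank only when re-sampling occurred; otherwise keep the current set."""
    if not resampled:
        return current
    return rank_features(object_hists, background_hists)[:k]

# Toy histograms: "hue" separates object from background, "edge" does not.
object_hists = {"hue": [10, 0, 0, 0], "edge": [5, 5, 0, 0], "texture": [3, 3, 2, 2]}
background_hists = {"hue": [0, 0, 0, 10], "edge": [5, 5, 0, 0], "texture": [2, 2, 3, 3]}
print(select_features(True, ["edge"], object_hists, background_hists))
```

Keeping the ranking behind a `resampled` flag means the cost of scoring all candidate features is paid only on frames where the particle set is redistributed.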

- Because the particle filter (PF) algorithm is itself computationally intensive, embedding MS into its particle validation process further increases the computational load. The complexity of the MS method must therefore be reduced for the proposed framework to run in real time. We propose a novel MS optimization method based on the observation that MS requires only a fraction of the samples to track accurately. This substantially reduces the computational load without introducing significant error.
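To make the idea of running MS on a fraction of the samples concrete, here is a minimal sketch. How the fraction is chosen is not specified in this text, so the sketch assumes uniform random subsampling of the pixels inside a circular window with a flat kernel; `fraction`, `bandwidth`, and the per-pixel `weights` (for example, histogram back-projection values) are illustrative assumptions.

```python
import math
import random

def mean_shift_step(center, pixels, weights, bandwidth, fraction, rng):
    """One mean-shift update using only a random fraction of window pixels."""
    cx, cy = center
    # Indices of the pixels that fall inside the circular kernel window.
    inside = [i for i, (x, y) in enumerate(pixels)
              if (x - cx) ** 2 + (y - cy) ** 2 <= bandwidth ** 2]
    if not inside:
        return center
    # Assumption: uniform random subsampling of the window.
    k = max(1, int(len(inside) * fraction))
    sample = rng.sample(inside, k)
    total = sum(weights[i] for i in sample)
    if total == 0:
        return center
    # Flat-kernel mean shift: move to the weighted centroid of the sample.
    nx = sum(weights[i] * pixels[i][0] for i in sample) / total
    ny = sum(weights[i] * pixels[i][1] for i in sample) / total
    return (nx, ny)

def mean_shift(center, pixels, weights, bandwidth,
               fraction=0.25, max_iter=20, tol=0.5, seed=0):
    """Iterate until the shift is below tol or max_iter is reached."""
    rng = random.Random(seed)
    for _ in range(max_iter):
        new = mean_shift_step(center, pixels, weights, bandwidth, fraction, rng)
        if math.hypot(new[0] - center[0], new[1] - center[1]) < tol:
            return new
        center = new
    return center

# Toy weight image: a Gaussian blob centred at (25, 20) on a 41 x 41 grid.
pixels = [(x, y) for x in range(41) for y in range(41)]
weights = [math.exp(-((x - 25) ** 2 + (y - 20) ** 2) / 18.0) for x, y in pixels]
full = mean_shift((18.0, 18.0), pixels, weights, bandwidth=10, fraction=1.0)
sub = mean_shift((18.0, 18.0), pixels, weights, bandwidth=10, fraction=0.25)
print(full, sub)
```

With `fraction=0.25` each iteration touches roughly a quarter of the window pixels, at the cost of a noisier centroid estimate; whether that trade-off matches the reported results depends on details not given here.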

3. Proposed Framework

3.1. Feature Ranking

3.2. MS Optimization Procedure

3.3. MS Embedded Particle Filter

3.4. Pseudo Code of AMF-MSPF

- Step 1. Particle initialization step.

- Step 2. Feature ranking step.

- Step 3. Propagation step.

- Step 4. MS optimization step.

- Step 5. Weight calculation and normalization step.

- Step 6. Estimation step (posterior estimation).

- Step 7. Re-sampling step (particle redistribution and weight re-initialization). If re-sampling occurs, go to Step 2 and repeat; else go to Step 3 and repeat.
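The control flow of the seven steps can be sketched as a toy one-dimensional tracker. This is not the authors' implementation: the equations for each step are not available in this text, so the sketch assumes a random-walk motion model, a Gaussian likelihood, an effective-sample-size test as the re-sampling criterion, and placeholder `rank_features` and `ms_refine` functions. All names and parameters are hypothetical.

```python
import math
import random

def rank_features(observation):
    """Step 2 placeholder: a real tracker would score candidate features."""
    return ["intensity"]

def ms_refine(x, observation, step=0.5):
    """Step 4 placeholder: pull a particle toward the local mode."""
    return x + step * (observation - x)

def likelihood(x, observation, sigma=2.0):
    """Assumed Gaussian observation likelihood."""
    return math.exp(-0.5 * ((x - observation) / sigma) ** 2)

def track(observations, n_particles=100, motion_std=1.5, seed=0):
    rng = random.Random(seed)
    uniform = [1.0 / n_particles] * n_particles
    # Step 1: initialize particles around the first observation.
    particles = [observations[0] + rng.gauss(0, motion_std)
                 for _ in range(n_particles)]
    weights = list(uniform)
    features = rank_features(observations[0])  # Step 2
    estimates, rerank_count = [], 0
    for z in observations[1:]:
        # Step 3: propagate with an assumed random-walk motion model.
        particles = [p + rng.gauss(0, motion_std) for p in particles]
        # Step 4: refine each particle with the (placeholder) MS procedure.
        particles = [ms_refine(p, z) for p in particles]
        # Step 5: weight calculation and normalization.
        weights = [w * likelihood(p, z) for w, p in zip(weights, particles)]
        total = sum(weights)
        weights = [w / total for w in weights] if total > 0 else list(uniform)
        # Step 6: posterior estimate as the weighted mean.
        estimates.append(sum(w * p for w, p in zip(weights, particles)))
        # Step 7: re-sample when the effective sample size is low (assumption).
        n_eff = 1.0 / sum(w * w for w in weights)
        if n_eff < n_particles / 2:
            particles = rng.choices(particles, weights=weights, k=n_particles)
            weights = list(uniform)
            features = rank_features(z)  # re-sampling occurred: back to Step 2
            rerank_count += 1
        # Otherwise the loop continues from Step 3 with the same features.
    return estimates, rerank_count

observations = [float(t) for t in range(30)]  # target moving 1 unit per frame
estimates, rerank_count = track(observations)
print(round(estimates[-1], 2), rerank_count)
```

The point of the sketch is the branch in Step 7: feature ranking is re-run only on frames where re-sampling happens, so its cost is not paid on every frame.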

4. Experimental Results

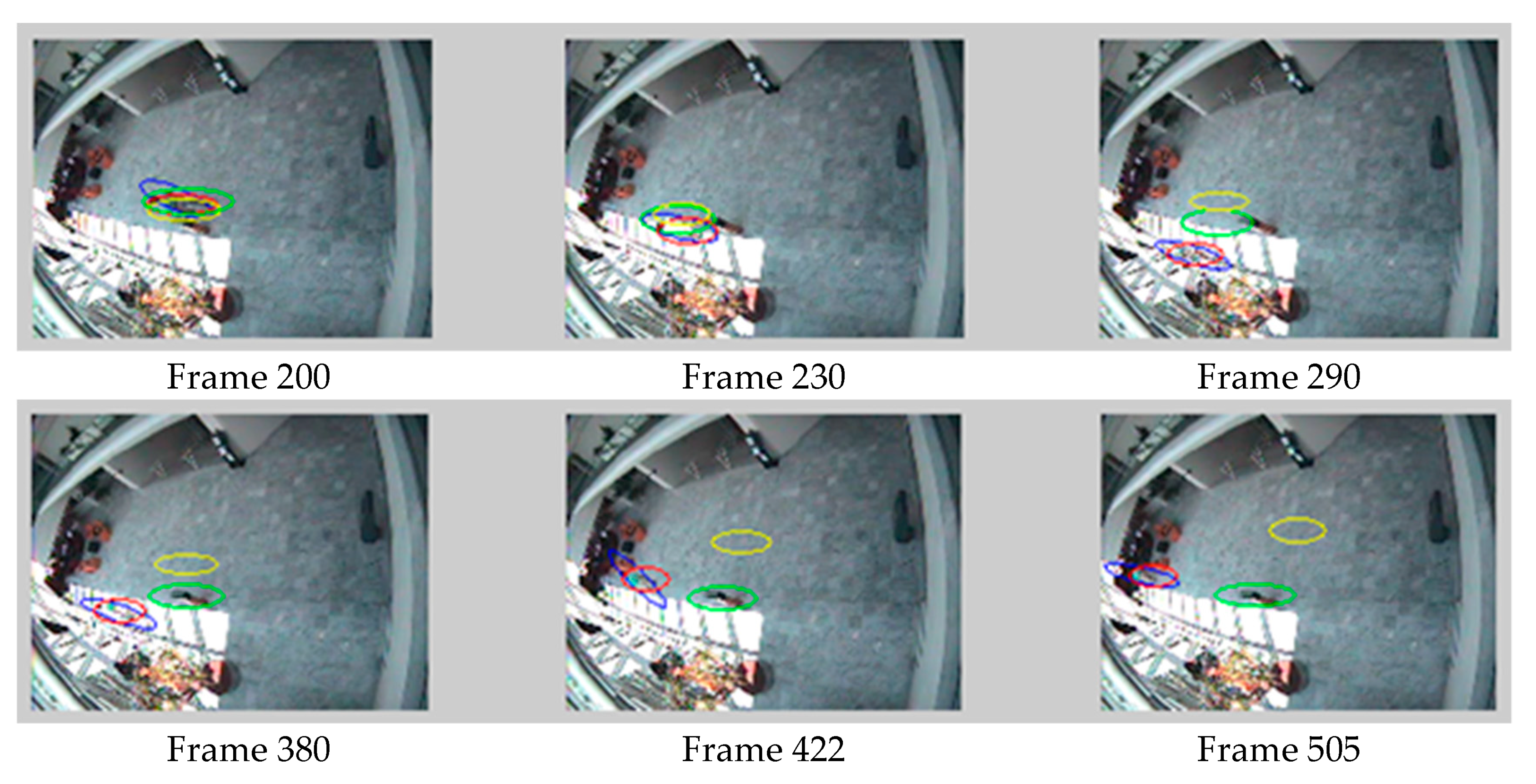

4.1. Visual Tracking Result

4.2. Computational Complexity

5. Summary

Author Contributions

Conflicts of Interest

References

- Khattak, A.S.; Raja, G.; Anjum, N.; Qasim, M. Integration of Meanshift and Particle Filter: A Survey. In Proceedings of the 2014 12th International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 17–19 December 2014; pp. 286–291.

- Sahoo, P.K.; Kanungo, P.; Parvathi, K. Three frame based adaptive background subtraction. In Proceedings of the 2014 International Conference on High Performance Computing and Applications (ICHPCA), Bhubaneswar, India, 22–24 December 2014; pp. 1–5.

- Rasmussen, C.; Hager, G.D. Probabilistic data association methods for tracking complex visual objects. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 560–576.

- Fukunaga, K.; Hostetler, L. The estimation of the gradient of a density function, with applications in pattern recognition. IEEE Trans. Inf. Theory 1975, 21, 32–40.

- Comaniciu, D.; Ramesh, V.; Meer, P. Real-time tracking of non-rigid objects using mean shift. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2000), Hilton Head Island, SC, USA, 15 June 2000; Volume 2, pp. 142–149.

- Comaniciu, D.; Ramesh, V.; Meer, P. Kernel-based object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 564–577.

- Yilmaz, A.; Shafique, K.; Shah, M. Target tracking in airborne forward looking infrared imagery. Image Vis. Comput. 2003, 21, 623–635.

- Yilmaz, A. Kernel-based object tracking using asymmetric kernels with adaptive scale and orientation selection. Mach. Vis. Appl. 2011, 22, 255–268.

- Collins, R.T.; Liu, Y.; Leordeanu, M. Online selection of discriminative tracking features. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1631–1643.

- Fang, J.; Yang, J.; Liu, H.; Lv, J.; Zhou, Y. Robust fragments-based tracking with adaptive feature selection. Opt. Eng. 2010, 49.

- Dulai, A.; Stathaki, T. Mean shift through scale and occlusion. IET Signal Process. 2012, 6, 534–540.

- Gordon, N.; Ristic, B.; Arulampalam, S. Beyond the Kalman Filter: Particle Filters for Tracking Applications; Artech House: London, UK, 2004.

- Doucet, A.; Godsill, S.; Andrieu, C. On sequential Monte Carlo sampling methods for Bayesian filtering. Stat. Comput. 2000, 10, 197–208.

- Gordon, N.J.; Salmond, D.J.; Smith, A.F.M. Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proc. F Radar Signal Process. 1993, 140, 107–113.

- Czyz, J.; Ristic, B.; Macq, B. A particle filter for joint detection and tracking of color objects. Image Vis. Comput. 2007, 25, 1271–1281.

- Nummiaro, K.; Koller-Meier, E.; Van Gool, L. An adaptive color-based particle filter. Image Vis. Comput. 2003, 21, 99–110.

- Yang, C.; Duraiswami, R.; Davis, L. Fast multiple object tracking via a hierarchical particle filter. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2005), Beijing, China, 17–21 October 2005; Volume 1, pp. 212–219.

- Isard, M.; MacCormick, J. BraMBLe: A Bayesian multiple-blob tracker. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2001), Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 34–41.

- Pérez, P.; Hue, C.; Vermaak, J.; Gangnet, M. Color-based probabilistic tracking. In Proceedings of the European Conference on Computer Vision (ECCV 2002); Springer: Berlin/Heidelberg, Germany, 2002; pp. 661–675.

- Dou, J.; Li, J. Robust visual tracking based on interactive multiple model particle filter by integrating multiple cues. Neurocomputing 2014, 135, 118–129.

- Arulampalam, M.S.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 2002, 50, 174–188.

- Cheng, C.; Ansari, R. Kernel particle filter for visual tracking. IEEE Signal Process. Lett. 2005, 12, 242–245.

- Shan, C.; Tan, T.; Wei, Y. Real-time hand tracking using a mean shift embedded particle filter. Pattern Recognit. 2007, 40, 1958–1970.

- Yao, A.; Lin, X.; Wang, G.; Yu, S. A compact association of particle filtering and kernel based object tracking. Pattern Recognit. 2012, 45, 2584–2597.

- Chia, Y.S.; Kow, W.Y.; Khong, W.L.; Kiring, A.; Teo, K.T.K. Kernel-based object tracking via particle filter and mean shift algorithm. In Proceedings of the 2011 11th International Conference on Hybrid Intelligent Systems (HIS), Malacca, Malaysia, 5–8 December 2011; pp. 522–527.

- Wang, Z.; Yang, X.; Xu, Y.; Yu, S. CamShift guided particle filter for visual tracking. Pattern Recognit. Lett. 2009, 30, 407–413.

- Maggio, E.; Cavallaro, A. Hybrid particle filter and mean shift tracker with adaptive transition model. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Philadelphia, PA, USA, 23 March 2005; Volume 2, pp. 221–224.

- Guo, W.; Zhao, Q.; Gu, D. Visual tracking using an insect vision embedded particle filter. Math. Probl. Eng. 2015, 2015, 573131.

- Yin, M.; Zhang, J.; Sun, H.; Gu, W. Multi-cue-based CamShift guided particle filter tracking. Expert Syst. Appl. 2011, 38, 6313–6318.

- Wei, Q.; Xiong, Z.; Li, C.; Ouyang, Y.; Sheng, H. A robust approach for multiple vehicles tracking using layered particle filter. AEU-Int. J. Electron. Commun. 2011, 65, 609–618.

- Shi, J.; Malik, J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 888–905.

- Li, T.; Sun, S.; Sattar, T.P.; Corchado, J.M. Fight sample degeneracy and impoverishment in particle filters: A review of intelligent approaches. Expert Syst. Appl. 2014, 41, 3944–3954.

| Ref. | Search Mechanism | Feature Models | Robustness Towards Various Constraints |
|---|---|---|---|
| [5] | Deterministic (Mean shift) | Color + Texture | Partial occlusion, clutter, and size/scale |
| [6] | Deterministic (Mean shift) | Color | Partial occlusion, size and scale |
| [7] | Deterministic (Mean shift) | Edge + Texture | Small objects |
| [8] | Deterministic (Mean shift) | Color | Orientation scale and position |
| [9] | Deterministic (Mean shift) | Color | Fast object motion, partial occlusion |
| [10] | Deterministic (Mean shift) | Color | Full occlusion, size and scale |
| [15] | Statistical (Particle Filters) | Color | Non-rigid deformations, partial occlusions and cluttered background |
| [16] | Statistical (Particle Filters) | Color | Clutter background, occlusion, size/scale variation and light illumination |
| [17] | Statistical (Particle Filters) | Color and edge orientation | Clutter background and short time period occlusion |
| [18] | Statistical (Particle Filters) | Color and shape | Large object motion, partial occlusion |
| [19] | Statistical (Particle Filters) | Color | Cluttered background, occlusion and size/scale variation |
| [28] | Statistical (Particle Filters) | Motion model | Illumination variation and partial occlusion |
| [20] | MSPF based hybrid systems | Color + motion cue | Fast motion, light clutter, illumination change |
| [21] | MSPF based hybrid systems | Color | Multiple hypothesis, clutter background |
| [22] | MSPF based hybrid systems | HSV color | Clutter background, size and scale |
| [23] | MSPF based hybrid systems | Color + motion model | Occlusion, clutter and fast motion |
| [24] | MSPF based hybrid systems | Color + motion model | Fast object motion and clutter background |
| [25] | MSPF based hybrid systems | Color | Background clutter, full occlusion |
| [26] | MSPF based hybrid systems | HSV color components | Size/scale, fast object motion, occlusion |
| [27] | MSPF based hybrid systems | Color + edge orientation histogram | Size/scale, fast motion |
| [29] | MSPF based hybrid systems | Color + motion model | Clutter background, light illumination variation and full occlusion |
| [30] | MSPF based hybrid systems | Color + local integral orientation | Scale and pose variation |

| Video Sequence | Description | Characteristic | Frame Size | No. of Frames |
|---|---|---|---|---|
| WalkByShop1cor | Couple walking along a corridor browsing | Regular mild and severe occlusion | 384 × 288 | 2359 |
| Browse4 | Person moves in an area with abrupt intensity change | Abrupt illumination change with non-linear motion | 384 × 288 | 1138 |
| PETS 2007 | People walking in crowd with different obstacles | Severe occlusion by abrupt intensity variations | 576 × 720 | 3000 |

| Video Sequence | RMSE (PFKBOT) | RMSE (TSHT) | RMSE (Proposed) | FAR (PFKBOT) | FAR (TSHT) | FAR (Proposed) | F-Score (PFKBOT) | F-Score (TSHT) | F-Score (Proposed) |
|---|---|---|---|---|---|---|---|---|---|
| Browse4 | 55.80 | 41.30 | 9.18 | 0.49 | 0.47 | 0.17 | 0.67 | 0.71 | 0.91 |
| WalkByShop1cor | 30.70 | 7.76 | 5.76 | 0.35 | 0.24 | 0.13 | 0.79 | 0.86 | 0.93 |
| PETS 2007 | 24.70 | 15.30 | 11.12 | 0.35 | 0.27 | 0.15 | 0.71 | 0.79 | 0.91 |
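The definitions of RMSE, FAR, and F-score are not given in this text, so the following is only a sketch of how such metrics are commonly computed. It assumes RMSE is the root-mean-square Euclidean distance between tracked and ground-truth object centres, FAR is the false alarm rate FP / (TP + FP), and F-score is the harmonic mean of precision and recall. The function names and the toy inputs are hypothetical and unrelated to the reported numbers.

```python
import math

def rmse(tracked, truth):
    """Root-mean-square Euclidean error between paired (x, y) centres."""
    sq = [(tx - gx) ** 2 + (ty - gy) ** 2
          for (tx, ty), (gx, gy) in zip(tracked, truth)]
    return math.sqrt(sum(sq) / len(sq))

def far(tp, fp):
    """False alarm rate, assumed here to be FP / (TP + FP)."""
    return fp / (tp + fp) if tp + fp else 0.0

def f_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Toy inputs for illustration only.
print(rmse([(0, 0), (3, 4)], [(0, 0), (0, 0)]))
print(far(tp=8, fp=2), f_score(tp=8, fp=2, fn=2))
```

Under these assumed definitions, lower RMSE and FAR and a higher F-score indicate better tracking, which is the direction in which the proposed method differs from PFKBOT and TSHT in the table.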

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Khattak, A.S.; Raja, G.; Anjum, N. Adaptive Framework for Multi-Feature Hybrid Object Tracking. Appl. Sci. 2018, 8, 2294. https://doi.org/10.3390/app8112294
