Malware Collusion Attack against SVM: Issues and Countermeasures

Abstract

1. Introduction

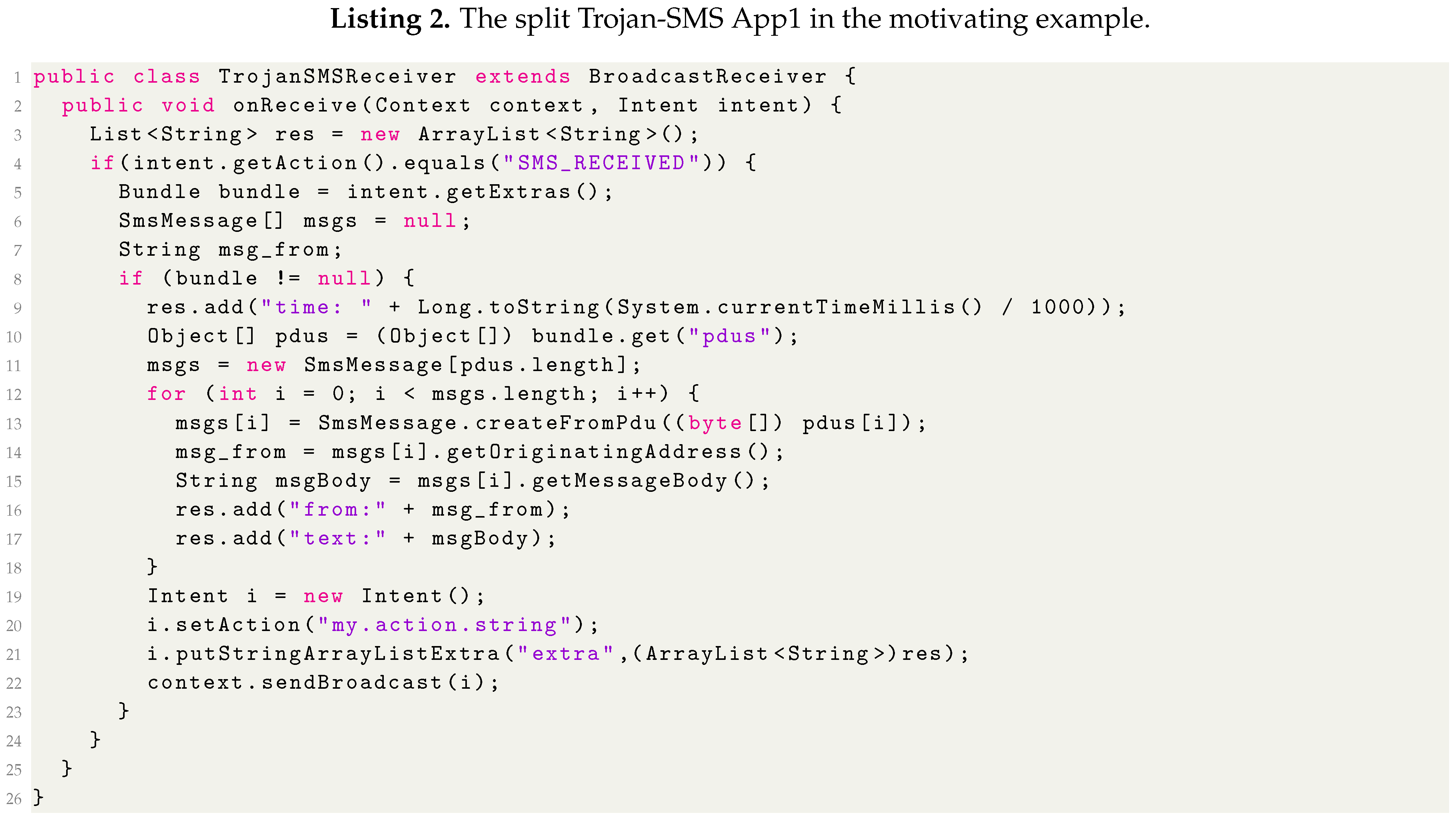

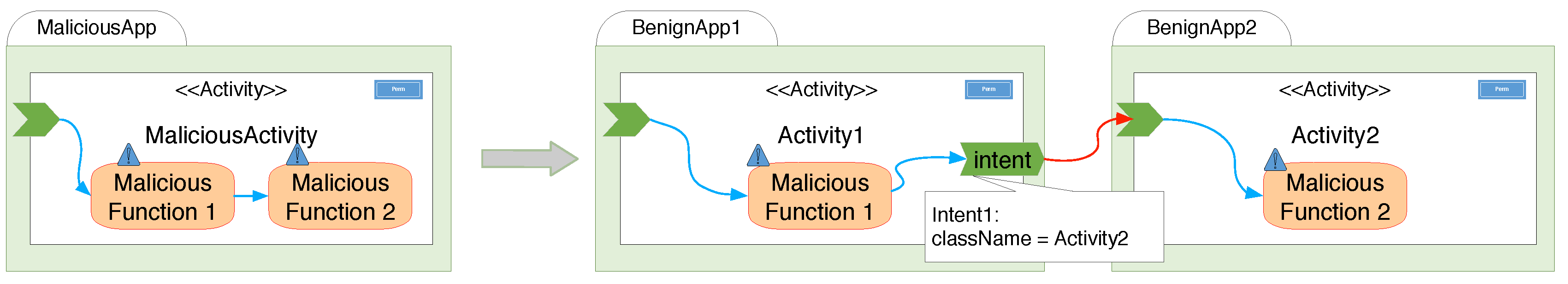

- Introducing the Collusion Attack on SVM: By splitting one app into two, malware developers can easily evade detection by current SVM-based methods. We also write four example apps to demonstrate the idea of splitting.

- Proposing a method to detect the Collusion Attack: We present a method called ColluDroid to counter the Collusion Attack. ColluDroid analyzes all possible communication links between apps to detect collusion.

- Evaluating the performance of ColluDroid with different learning methods: We develop a prototype and present results from experiments on our data set. The results show that ColluDroid is effective against both collusion and evasion attacks, and that ColluDroid with Sec-SVM performs best. Furthermore, we analyze the efficiency of ColluDroid; the results show that it is fast enough to be applied at large scale.
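To make the splitting idea concrete, the sketch below uses a toy linear SVM over binary features with decision function f(x) = w·x + b, where a positive value means malware. The feature names, weights, and bias are hypothetical values chosen for illustration, not results from our experiments. The two split apps mirror the first row of the table of example apps (SMS → Intent and Intent → Remote Server).

```python
# Hypothetical weights of a trained linear SVM over binary features
# (illustrative values, not results from the paper).
WEIGHTS = {
    "READ_SMS": 0.9,
    "sms_api": 0.8,
    "INTERNET": 0.4,
    "http_api": 0.5,
    "send_intent": 0.05,
    "recv_intent": 0.05,
}
BIAS = -2.0


def decision(features):
    """Linear SVM decision value w.x + b for a set of active binary features."""
    return sum(WEIGHTS.get(f, 0.0) for f in features) + BIAS


def is_malware(features):
    return decision(features) > 0


# Original app: SMS -> Remote Server inside a single APK.
original = {"READ_SMS", "sms_api", "INTERNET", "http_api"}
# Split App1: SMS -> Intent; Split App2: Intent -> Remote Server.
app1 = {"READ_SMS", "sms_api", "send_intent"}
app2 = {"recv_intent", "INTERNET", "http_api"}

for name, feats in [("original", original), ("app1", app1), ("app2", app2)]:
    print(name, round(decision(feats), 2), is_malware(feats))
```

With these values the original app scores above zero, while each split app scores below it, so both halves evade detection. Scoring the union `app1 | app2` yields a positive value again. This illustrates why analyzing linked apps together can help.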

2. Background

2.1. Android Background

2.1.1. AndroidManifest.xml and Components

2.1.2. Inter-App Communication Channels

- An action string specifies the generic action to perform. Usually, an app uses action constants defined by the Intent class or other framework classes. However, an app can also define its own actions; in this case, malware could use its own unique action string to launch a particular component.

- A category string contains additional information about the kind of component that should handle the intent. For example, the CATEGORY_APP_GALLERY category indicates that the intent should be delivered to a gallery application, whose target activity should be able to view and manipulate image and video files stored on the device.

- A set of data fields specifies the data to be acted upon (as a URI) and/or the Multipurpose Internet Mail Extensions (MIME) type of that data. The type of data supplied is generally dictated by the intent's action. For example, if the action is ACTION_EDIT, the data should contain the URI of the document to edit.
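Android uses the action, category, and data fields to resolve an implicit intent to a component whose intent filter accepts it. The following is a simplified model of that resolution. It assumes only the action, category, and MIME-type tests; URI schemes, hosts, and paths are ignored, as is the CATEGORY_DEFAULT that startActivity() adds to implicit intents. The classes and the custom action string com.example.collude.LEAK are hypothetical and are not Android APIs. The example shows how a unique custom action lets one app reach a specific component of another.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import FrozenSet, Optional


@dataclass
class Intent:  # hypothetical model, not android.content.Intent
    action: Optional[str] = None
    categories: FrozenSet[str] = frozenset()
    mime_type: Optional[str] = None


@dataclass
class IntentFilter:  # hypothetical model, not android.content.IntentFilter
    actions: FrozenSet[str] = frozenset()
    categories: FrozenSet[str] = frozenset()
    mime_types: FrozenSet[str] = frozenset()


def action_test(intent, flt):
    # A filter that declares no actions matches no intent; an intent
    # without an action passes if the filter declares at least one action.
    if not flt.actions:
        return False
    return intent.action is None or intent.action in flt.actions


def category_test(intent, flt):
    # Every category in the intent must also appear in the filter.
    return intent.categories <= flt.categories


def type_test(intent, flt):
    # Simplified data test: MIME types only, with wildcards such as "image/*".
    if intent.mime_type is None:
        return not flt.mime_types
    return any(fnmatchcase(intent.mime_type, p) for p in flt.mime_types)


def matches(intent, flt):
    return action_test(intent, flt) and category_test(intent, flt) and type_test(intent, flt)


# A colluding receiver declares a unique custom action string.
receiver = IntentFilter(actions=frozenset({"com.example.collude.LEAK"}),
                        categories=frozenset({"android.intent.category.DEFAULT"}))
sent = Intent(action="com.example.collude.LEAK")
print(matches(sent, receiver))                                         # True
print(matches(Intent(action="android.intent.action.VIEW"), receiver))  # False
```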

3. Motivation

3.1. Formal Definition of Collusion

- There are communication links between the apps . The link is denoted by . The actions of app z are denoted by . The union of the actions conducted through the link of app is denoted by , where and .

- There exists an extended action sequence .

3.2. Motivation Example

3.3. Collusion Apps to Evade SVM Detection

3.3.1. SVM Based Malware Detection

3.3.2. Collusion Attack on SVM

3.3.3. Evasion Attack on SVM

Algorithm 1. Gradient-based Evasion Algorithm.
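The sketch below illustrates gradient-based evasion, in the spirit of the attacks studied by Biggio et al., against a linear SVM on binary features. It is a minimal sketch and not necessarily the exact procedure of Algorithm 1. It assumes the attacker can only add features (0 → 1), so that the malicious payload is preserved, and the feature names and weights are hypothetical. For a linear model, the gradient of f(x) = w·x + b with respect to x is simply w. Each step therefore switches on the absent feature with the most negative weight, until the score drops to zero or below.

```python
def linear_score(features, weights, bias):
    """Decision value w.x + b of a linear SVM on a binary feature set."""
    return sum(weights.get(k, 0.0) for k in features) + bias


def gradient_evasion(features, weights, bias, max_changes=10):
    """Greedy gradient-based evasion against a linear SVM (sketch).

    The gradient of w.x + b with respect to x is w, so the steepest
    descent step on a binary vector is to switch on the absent feature
    with the most negative weight. Only additions (0 -> 1) are allowed,
    assuming that removing features could break the malicious payload.
    """
    x = set(features)
    for _ in range(max_changes):
        if linear_score(x, weights, bias) <= 0:
            break  # already classified as benign
        options = [k for k, wk in weights.items() if wk < 0 and k not in x]
        if not options:
            break  # no remaining feature can lower the score
        x.add(min(options, key=weights.get))
    return x, linear_score(x, weights, bias)


# Hypothetical model and malware sample.
weights = {"READ_SMS": 1.0, "sms_api": 0.8, "INTERNET": 0.4,
           "ui_widget": -0.5, "game_engine": -0.7, "ads_sdk": -0.2}
malware = {"READ_SMS", "sms_api", "INTERNET"}
evasive, score = gradient_evasion(malware, weights, bias=-1.1)
print(sorted(evasive - malware), round(score, 2))  # ['game_engine', 'ui_widget'] -0.1
```

Two added features suffice here because the model puts large negative weight on only a few benign-looking features. This is the weakness that bounding the weights is meant to reduce.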

4. Architecture of ColluDroid

4.1. Training Phase

4.1.1. Feature Extraction

4.1.2. Secure Learning Method
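Sec-SVM, proposed by Demontis et al., constrains each weight of a linear SVM to a bounded interval [w_lb, w_ub]. The intent is that the classifier cannot rely on a few heavily weighted features, so an attacker must change more features to evade it. The sketch below implements that idea under two simplifying assumptions: a single scalar bound for all features, and a plain projected subgradient solver. The original work may use a different optimizer and per-feature bounds. The function name and the toy data set are hypothetical.

```python
def train_bounded_svm(X, y, w_lb, w_ub, C=1.0, lr=0.05, epochs=300):
    """Linear SVM with box-bounded weights, trained by projected subgradient descent.

    Minimizes 0.5*||w||^2 + C*sum_i max(0, 1 - y_i*(w.x_i + b))
    subject to w_lb <= w_k <= w_ub, projecting w back onto the box after
    every step. Labels are +1 (malware) and -1 (benign).
    """
    d = len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = list(w), 0.0  # gradient of the 0.5*||w||^2 term
        for xi, yi in zip(X, y):
            if yi * (sum(wk * xk for wk, xk in zip(w, xi)) + b) < 1:
                # Hinge loss is active for this sample: add its subgradient.
                for k in range(d):
                    grad_w[k] -= C * yi * xi[k]
                grad_b -= C * yi
        # Gradient step on w, then projection (clipping) onto [w_lb, w_ub].
        w = [min(max(wk - lr * gk, w_lb), w_ub) for wk, gk in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical binary feature vectors and labels.
X = [[1, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 1]]
y = [1, 1, -1, -1]
w, b = train_bounded_svm(X, y, w_lb=-0.3, w_ub=0.3)
print(w, b)  # [0.3, 0.0, -0.3] 0.0
```

On this toy data, the weights of the first and third features saturate at the bounds ±0.3. No single feature can then contribute more than 0.3 to the decision value, which is the property Sec-SVM relies on.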

4.2. Detection Phase

4.2.1. Extract Communication Links

4.2.2. Collusion Malware Detection
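One plausible reading of this step works in three stages. It enumerates every pair of apps, keeps the pairs joined by a communication link, and classifies the union of their features with the trained model. Everything else in the sketch is hypothetical: the link criterion (a shared custom intent action or a shared preference file name), the app data, and the weights. It is not necessarily how ColluDroid implements the step.

```python
from itertools import combinations

# Hypothetical weights and bias of a trained linear SVM (illustrative only).
WEIGHTS = {"READ_SMS": 0.9, "sms_api": 0.8, "INTERNET": 0.4,
           "http_api": 0.5, "send_intent": 0.05, "recv_intent": 0.05}
BIAS = -2.0

# Hypothetical apps: binary features plus the custom intent actions they
# send or receive and the shared preference files they use.
APPS = {
    "app1": {"features": {"READ_SMS", "sms_api", "send_intent"},
             "sends": {"com.example.collude.LEAK"}, "receives": set(), "prefs": set()},
    "app2": {"features": {"recv_intent", "INTERNET", "http_api"},
             "sends": set(), "receives": {"com.example.collude.LEAK"}, "prefs": {"cfg"}},
    "app3": {"features": {"INTERNET", "http_api"},
             "sends": set(), "receives": set(), "prefs": {"cfg"}},
}


def linked(a, b):
    """Simplified link test: a matching custom action in either direction, or a shared preference file."""
    return bool(a["sends"] & b["receives"] or b["sends"] & a["receives"]
                or a["prefs"] & b["prefs"])


def decision(features):
    """Linear SVM decision value w.x + b."""
    return sum(WEIGHTS.get(f, 0.0) for f in features) + BIAS


def detect_collusion(apps):
    """Return every linked pair of apps whose combined features are classified as malware."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(sorted(apps.items()), 2):
        if linked(a, b) and decision(a["features"] | b["features"]) > 0:
            flagged.append((name_a, name_b))
    return flagged


print(detect_collusion(APPS))  # [('app1', 'app2')]
```

Here app2 and app3 share a preference file, but their combined features stay below the decision threshold. A link alone is therefore not reported as collusion. Enumerating all pairs requires O(n²) link checks for n apps.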

5. Evaluation

5.1. Q1&Q3: Effectiveness and Performance of ColluDroid

5.1.1. Effectiveness of ColluDroid

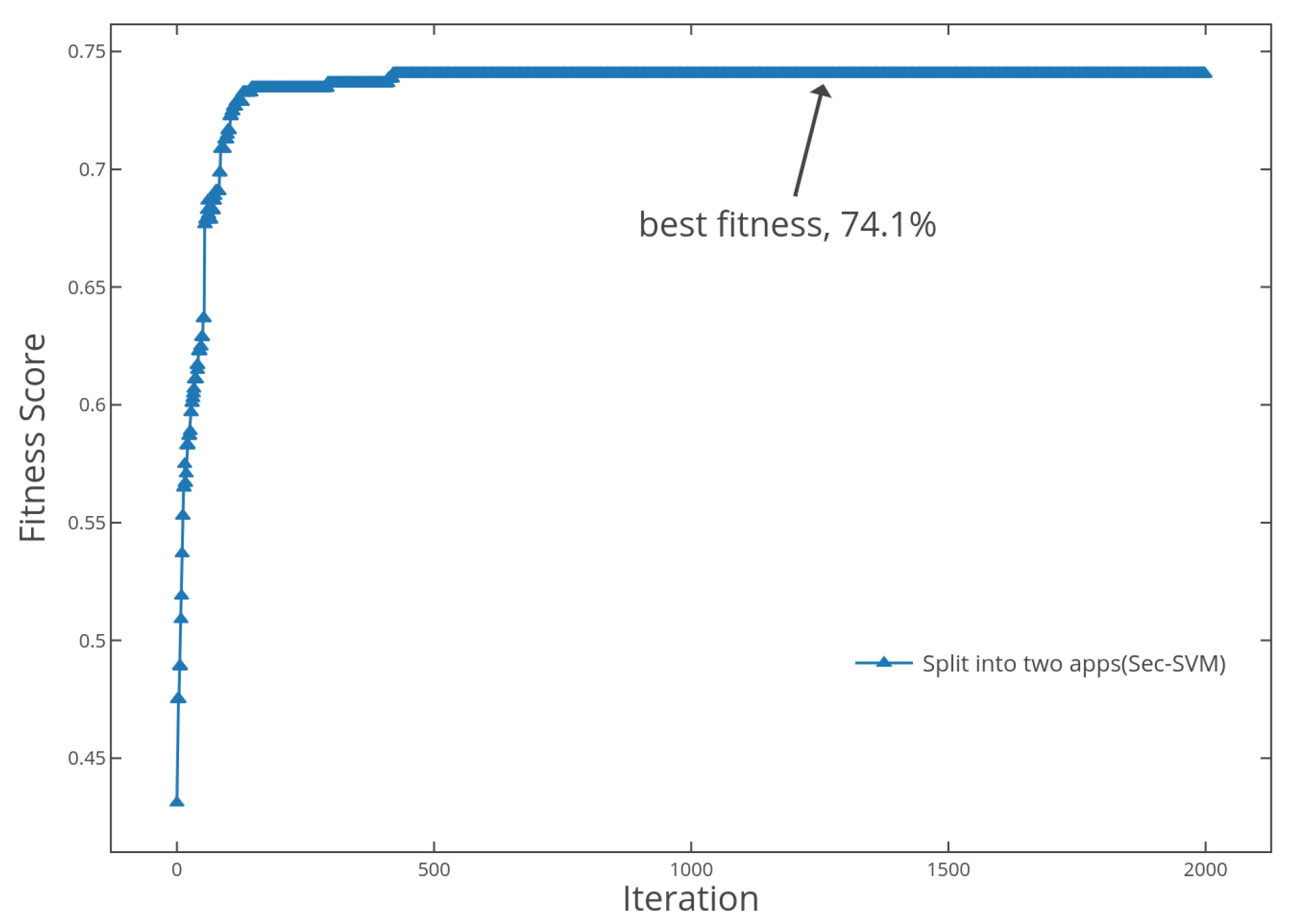

5.1.2. Estimate the Performance of ColluDroid against Collusion and Evasion Attack

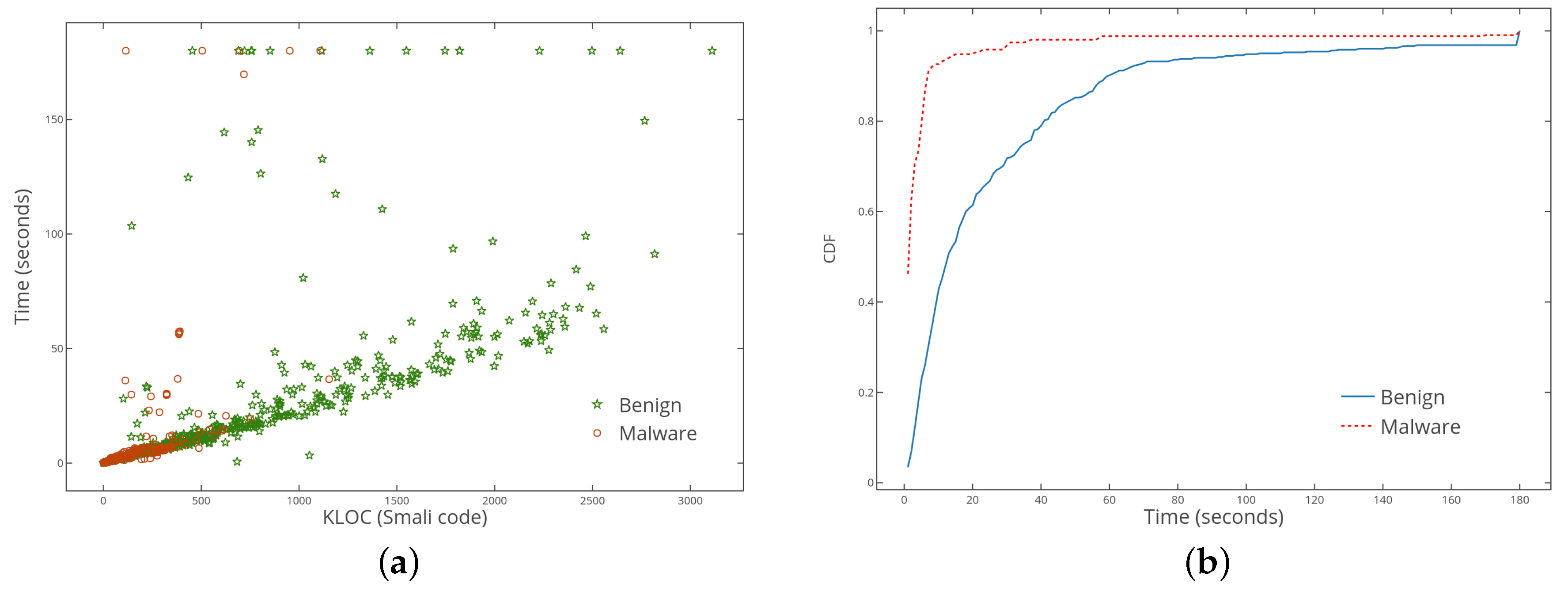

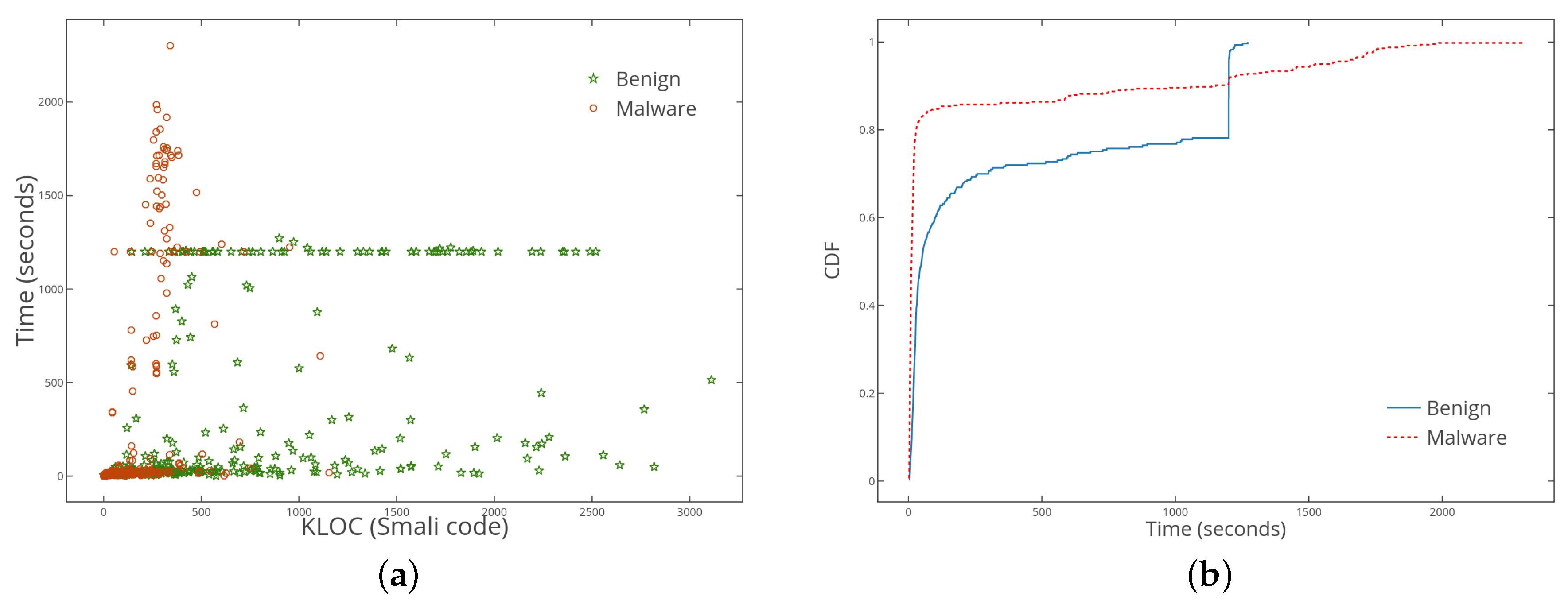

5.2. The Efficiency of ColluDroid

6. Related Works

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| App Name | Malicious Payload | Payload of Split App1 | Payload of Split App2 |
|---|---|---|---|
|  | Short Message Service (SMS) → Remote Server | SMS → Intent | Intent → Remote Server |
|  | Read SMS → Send SMS | SMS → Intent | Intent → Send SMS |
|  | Remote Command → SMS → Send SMS | Remote Command → SMS → Intent | Intent → Send SMS |
|  | Location → Remote Server | Location → Shared Preference | Shared Preference → Remote Server |
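The rows of the table above can be read as information flows that only become malicious when chained through a communication channel. The sketch below makes two assumptions: each split app is summarized by (source, sink) pairs, and Intent and Shared Preference are the only channels. Under these assumptions, composing the flows of two linked apps recovers the original end-to-end payload. The function and variable names are hypothetical.

```python
# Channels through which one app can hand data to another (assumption:
# only the two channels that appear in the table are modeled).
CHANNELS = {"Intent", "Shared Preference"}


def compose(flows_a, flows_b):
    """Chain app A's (source, sink) flows into app B's flows via a shared channel."""
    combined = set()
    for source, out_channel in flows_a:
        for in_channel, sink in flows_b:
            if out_channel == in_channel and out_channel in CHANNELS:
                combined.add((source, sink))
    return combined


# Row 1 of the table: SMS -> Intent, then Intent -> Remote Server.
sms_app1 = [("SMS", "Intent")]
sms_app2 = [("Intent", "Remote Server")]
# Row 4 of the table: Location -> Shared Preference -> Remote Server.
loc_app1 = [("Location", "Shared Preference")]
loc_app2 = [("Shared Preference", "Remote Server")]

print(compose(sms_app1, sms_app2))  # {('SMS', 'Remote Server')}
print(compose(loc_app1, loc_app2))  # {('Location', 'Remote Server')}
```

Neither split app contains an (SMS, Remote Server) flow on its own. That flow appears only once the link between the two apps is taken into account.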

| Methods | Collusion | Collusion & Evasion |
|---|---|---|
| ColluDroid-Linear Support Vector Machine (SVM) (PK) | 6.1% | 42.9% |
| ColluDroid-Sec-SVM (PK) | 7.3% | 18.5% |
| Linear SVM (PK) | 87.4% | 96.4% |
| Sec-SVM (PK) | 74.1% | 88.0% |
| ColluDroid-Linear SVM (LK) | 8.1% | 66.2% |
| ColluDroid-Sec-SVM (LK) | 9.3% | 25.2% |
| Linear SVM (LK) | 91.4% | 99.8% |
| Sec-SVM (LK) | 97.2% | 99.6% |

| Items | Time |
|---|---|
| feature extraction | Refer to Figure 7a |
| model training | Linear SVM: 0.45 s; Sec-SVM: 23.45 s |
| communication links computing | 20.53 s |
| malware detection | 0.02 ms |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, H.; Su, J.; Qiao, L.; Xin, Q. Malware Collusion Attack against SVM: Issues and Countermeasures. Appl. Sci. 2018, 8, 1718. https://doi.org/10.3390/app8101718
