Abstract

In nature, snakes can gracefully traverse a wide range of different and complex environments. Snake robots that can mimic this behaviour could be fitted with sensors and transport tools to hazardous or confined areas that other robots and humans are unable to access. In order to carry out such tasks, snake robots must have a high degree of awareness of their surroundings (i.e., perception-driven locomotion) and be capable of efficient obstacle exploitation (i.e., obstacle-aided locomotion) to gain propulsion. These aspects are pivotal in order to realise the large variety of possible snake robot applications in real-life operations such as fire-fighting, industrial inspection, search-and-rescue, and more. In this paper, we survey and discuss the state of the art, challenges, and possibilities of perception-driven obstacle-aided locomotion for snake robots. To this end, different levels of autonomy are identified for snake robots and categorised into environmental complexity, mission complexity, and external system independence. From this perspective, we present a step-wise approach on how to increment snake robot abilities within guidance, navigation, and control in order to target the different levels of autonomy. Pertinent to snake robots, we focus on current strategies for snake robot locomotion in the presence of obstacles. Moreover, we put obstacle-aided locomotion into the context of perception and mapping. Finally, we present an overview of relevant key technologies and methods within environment perception, mapping, and representation that constitute important aspects of perception-driven obstacle-aided locomotion.

1. Introduction

Bio-inspired robots have developed rapidly in recent years. Despite the great success of bio-robotics in mimicking biological snakes, there is still a large gap between the performance of bio-mimetic robot snakes and biological snakes. In nature, snakes are capable of performing an astounding variety of tasks. They can locomote, swim, climb, and, in some species, even glide through the air [1]. However, one of the most interesting features of biological snakes is their ability to exploit roughness in the terrain for locomotion [2], which allows them to be remarkably adaptable to different types of environments. To achieve this adaptability and to locomote more efficiently, biological snakes may push against rocks, stones, branches, obstacles, or other environmental irregularities. They can also exploit the walls and surfaces of narrow passages or pipes for locomotion.

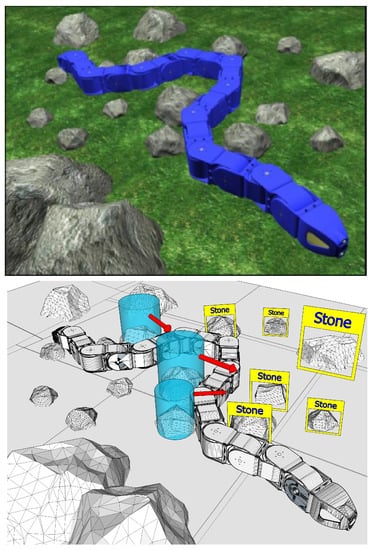

Building a robotic snake with such agility is one of the most attractive steps towards fully mimicking the movement of biological snakes. The development of such a robot is motivated by the range of applications that may be realised in challenging real-life operations, such as work in earthquake-hit areas, pipe inspection for the oil and gas industry, fire-fighting operations, and search-and-rescue. Snake robot locomotion in a cluttered environment where the snake robot utilises walls or external objects other than the flat ground as means of propulsion can be defined as obstacle-aided locomotion [3,4]. To achieve such a challenging control scheme, a mathematical model that includes the interaction between the snake robot and the surrounding operational environment is beneficial. This model can take into account the external objects that the snake robot uses in the environment as push-points to propel itself forwards. From this perspective, environment perception, mapping, and representation are of fundamental importance for the model. To highlight this concept even further, we adopt the term perception-driven obstacle-aided locomotion for locomotion where the snake robot utilises a sensory-perceptual system to exploit the surrounding operational space and identify walls, obstacles, or other external objects as means of propulsion [5]. Consequently, we can provide a more comprehensive characterisation of the whole scientific problem considered in this work. The underlying idea is shown in Figure 1. The snake robot exploits the environment for locomotion by using augmented information: obstacles are recognised, potential push-points are chosen (shown as cylinders), and achievable normal contact forces are illustrated by arrows.

Figure 1.

The underlying idea of snake robot perception-driven obstacle-aided locomotion: a snake robot perceives and understands its environment in order to utilise it optimally for locomotion.

The goal of this paper is to further raise awareness of the possibilities with perception-driven obstacle-aided locomotion for snake robots and provide an up-to-date stepping stone for continued research and development within this field.

In this paper, we survey the state of the art within perception-driven obstacle-aided locomotion for snake robots, and provide an overview of challenges and possibilities within this area of snake robotics. We identify different levels of autonomy and categorise them in terms of environmental complexity, mission complexity, and external system independence. We provide a step-wise approach on how to increment snake robot abilities within guidance, navigation, and control in order to target the different levels of autonomy. We review current strategies for snake robot locomotion in the presence of obstacles. Moreover, we discuss and present an overview of relevant key technologies and methods within environment perception, mapping, and representation which constitute important aspects of perception-driven obstacle-aided locomotion. The contribution of this work is summarised by three fundamental remarks:

- necessary conditions for lateral undulation locomotion in the presence of obstacles;

- lateral undulation is highly dependent on the actuator torque output and environmental friction;

- knowledge about the environment and its properties, in addition to its geometric representation, can be successfully exploited for improving locomotion performance for obstacle-aided locomotion.

The paper is organised as follows. A survey and classification of snake robots is given in Section 2. Subsequently, the state of the art concerning control strategies for obstacle-aided locomotion is described in Section 3. Challenges related to environment perception, mapping, and representation are described in Section 4. Finally, conclusions and remarks are outlined in Section 5.

2. Challenges and Possibilities in the Context of Autonomy Levels for Unmanned Systems

In this section, we survey snake robots as unmanned vehicle systems to highlight challenges and possibilities in the context of autonomy levels for unmanned systems. Moreover, we focus on the ability of snake robots to perform a large variety of tasks in different operational environments. When designing such systems, different levels of autonomy can be identified from an operational point of view. Based on this idea, the so-called framework of autonomy levels for unmanned systems (ALFUS) [6] is then adopted and applied to provide a more in-depth overview for the design of snake robot perception-driven obstacle-aided locomotion. Following this, the autonomy and technology readiness assessment (ATRA) framework [7,8] is adopted and presented to better understand the design of these systems.

2.1. Classification of Snake Robots as Unmanned Vehicle Systems

An uncrewed or unmanned vehicle (UV) is a mobile system not having or needing a person, a crew, or staff operator on board [9]. UV systems can either be remote-controlled or remote-guided vehicles, or they can be autonomous vehicles capable of sensing their environment and navigating on their own. UV systems can be categorised according to their operational environment as follows: unmanned ground vehicle (UGV; e.g., autonomous cars or legged robots); unmanned surface vehicle (USV; i.e., unmanned systems used for operation on the surface of water); autonomous underwater vehicle (AUV) or unmanned undersea/underwater vehicle (UUV) for the operation underwater; unmanned aerial vehicle (UAV), such as unmanned aircraft generally known as “drones”; unmanned spacecraft, both remote-controlled (“unmanned space mission”) and autonomous (“robotic spacecraft” or “space probe”).

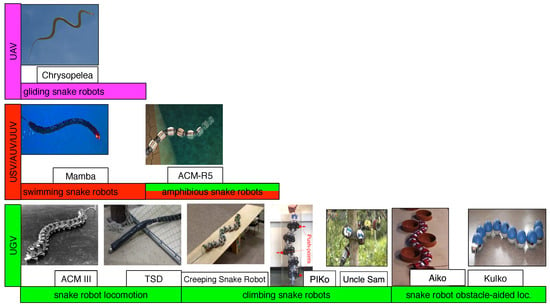

According to this terminology, snake robots can be classified as uncrewed vehicle (UV) systems. In particular, a snake robot constitutes a highly adaptable UV system due to its potential ability to perform a large variety of tasks in different operational environments. Following the standard nomenclature for UV systems, snake robots can be classified as shown in Figure 2, where some of the most significant systems are reported based on the literature for the sake of illustration. Several snake robots are implemented as unmanned ground vehicle (UGV) systems that are capable of performing the following tasks:

Figure 2.

The variety of possible application scenarios for snake robots. AUV: autonomous underwater vehicle; TSD: toroidal skin drive snake robot; UAV: unmanned aerial vehicle; UGV: unmanned ground vehicle; USV: unmanned surface vehicle; UUV: unmanned undersea/underwater vehicle.

- locomotion on flat or slightly rough surfaces, such as the ACM III snake robot [10], which was the world’s first snake robot, or the toroidal skin drive (TSD) snake robot [11], which is equipped with a skin drive propulsion system;

- climbing slopes, pipes, or trees, such as the Creeping snake Robot [12], which is capable of obtaining an environmentally-adaptable body shape to climb slopes, or the PIKo snake robot [13], which is equipped with a mechanism for navigating complex pipe structures, or the Uncle Sam snake robot [14], which is provided with a strong and compact joint mechanism for climbing trees;

- locomoting in the presence of obstacles, such as the Aiko snake robot [3], which is capable of pushing against external obstacles apart from a flat ground, or the Kulko snake robot [15], which is provided with a contact force measurement system for obstacle-aided locomotion.

To better assess the different working environments in which these robotic systems operate, we may consider various metrics. For instance, the environmental complexity (EC), a measure of entropy and the compressibility of the environment as seen by the robot’s sensors [16], may be used. Another useful metric is the mission complexity (MC), which is an estimation of the complicatedness of the environment as seen by the robot’s perception system [6]. Both EC and MC gradually increase when moving from the first examples above to the last ones. With a slight additional increase in terms of EC and MC, other snake robot systems are designed as unmanned surface vehicle (USV), autonomous underwater vehicle (AUV), or unmanned undersea/underwater vehicle (UUV) systems. An example of such systems is the Mamba snake robot [17], which is capable of performing underwater locomotion. Another notable example is the ACM-R5 snake robot [18], which is an amphibious snake-like robot characterised by its hermetic dust- and waterproof body structure.

With an additional increase in terms of both EC and MC, flying or gliding snake robots may be designed as unmanned aerial vehicle (UAV) systems. These systems can be inspired by the study and analysis of the gliding capabilities in flying snakes, such as the Chrysopelea, more commonly known as the flying snake or gliding snake [19]. This category of snake robot still does not exist, but perhaps one day it will be realised when the necessary technological advances become a reality. However, other designs may be more practical for flying.

2.2. Similarities and Differences between Traditional Snake Robots and Snake Robots for Perception-Driven Obstacle-Aided Locomotion

Some similarities can be identified between traditional snake robots and snake robots specifically designed to achieve perception-driven obstacle-aided locomotion. Both have a number of links serially attached to each other by means of joints that can be moved by some type of actuator. Therefore, they share similar structures from a kinematic point of view. However, snake robots specifically designed for obstacle-aided locomotion must be capable of exploiting roughness in the terrain for locomotion. To achieve this, both their sensory system and their control system differ fundamentally from those of traditional snake robots, which instead aim at avoiding or at most accommodating obstacles. The design guidelines for snake robot perception-driven obstacle-aided locomotion are described in the following sections of the paper.

2.3. The ALFUS Framework for Snake Robot Perception-Driven Obstacle-Aided Locomotion

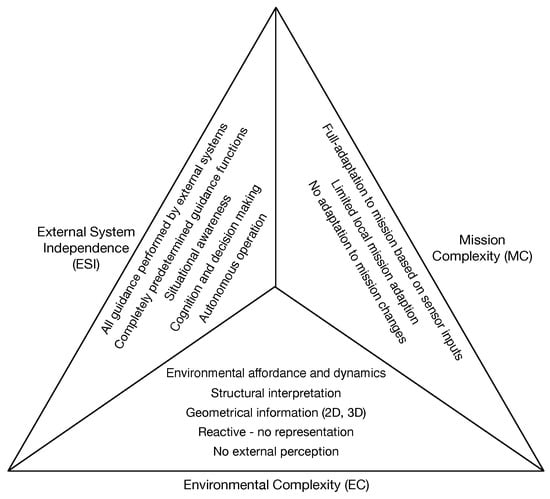

In this work, the focus is on snake robots designed as unmanned ground vehicle (UGV) systems with the aim of achieving perception-driven obstacle-aided locomotion. To the best of our knowledge, little research has been done in the past concerning this topic. When designing such systems, different levels of autonomy can be identified from an operational point of view. In Section 2.1, we have already briefly touched upon the concepts of environmental complexity (EC) and mission complexity (MC) to better categorise existing snake robots. By additionally considering the external system independence (ESI) metric, which represents the independence of snake robots from other external systems or from human operators, the so-called ALFUS framework [6] can be adopted and applied to provide a more in-depth overview for the design of snake robot perception-driven obstacle-aided locomotion, as shown in Figure 3.

Figure 3.

The autonomy levels for unmanned systems (ALFUS) framework [6] applied to snake robot perception-driven obstacle-aided locomotion.

The levels of autonomy for a system can be defined for various aspects of the system and categorised according to several taxonomies [20]. Regarding the external system independence for snake robots, we introduce the following autonomy levels (AL) according to gradually increased complexity:

- All guidance performed by external systems. In order to successfully accomplish the assigned mission within a defined scope, the snake robot requires full guidance and interaction with either a human operator or other external systems;

- Completely predetermined guidance functions. All planning, guidance, and navigation actions are predetermined in advance based on perception. The snake robot is capable of very low adaptation to environmental changes;

- Situational awareness [21]. The snake robot has a higher level of perception and autonomy with high adaptation to environmental changes. The system is not only capable of comprehending and understanding the current situation, but it can also make an extrapolation or projection of the actual information forward in time to determine how it will affect future states of the operational environment;

- Cognition and decision making. The snake robot has higher levels of prehension, intrinsically safe cognition, and decision-making capacity for reacting to unknown environmental changes;

- Autonomous operation. The snake robot is capable of fully autonomous operation. The system can achieve its assigned mission successfully without any intervention from a human or any other external system while adapting to different environmental conditions.

Concerning the environmental complexity (EC), the following autonomy levels (AL) can be identified according to gradually increased complexity:

- No external perception. The snake robot executes a set of preprogrammed or planned actions in an open loop manner;

- Reactive—no representation. The snake robot does not generate an explicit environment representation, but the motion planner is able to react to sensor input feedback;

- Geometrical information (2D, 3D). Starting from sensor data, the snake robot can generate a geometric representation of the environment which is used for planning—typically for obstacle avoidance;

- Structural interpretation. The environment representation includes structural relationships between objects in the environment;

- Environmental affordance and dynamics. Higher-level entities and properties can be derived from the environment perception, including separate treatment for static and dynamic elements; different properties from the objects which the snake robot is interacting with might be of interest according to the specific task being performed.

With reference to mission complexity (MC), the following autonomy levels (AL) can be identified according to gradually increased complexity:

- No adaptation to mission changes. The mission plan is predetermined; the snake robot is not capable of any adaptation to mission changes;

- Limited local mission adaptation. The snake robot has low adaptation capabilities to small, externally-commanded mission changes;

- Full adaptation to the mission based on sensor inputs. The snake robot has high and independent adaptation capabilities.

Clearly, in all three cases, an increase of the complexity generates more challenging problems to solve; however, it opens new possibilities for snake robot applications as autonomous systems.
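
As a minimal sketch of how the three metrics above could be recorded for a given robot, the snippet below encodes each list as an ordered enumeration and combines them into one profile. The class names, member names, and integer values are assumptions introduced only for illustration; the text fixes the ordering of the levels, not any numbering.

```python
from dataclasses import dataclass
from enum import IntEnum


class ESI(IntEnum):
    """External system independence levels, in the order listed above."""
    EXTERNAL_GUIDANCE = 1
    PREDETERMINED_GUIDANCE = 2
    SITUATIONAL_AWARENESS = 3
    COGNITION_AND_DECISION_MAKING = 4
    AUTONOMOUS_OPERATION = 5


class EC(IntEnum):
    """Environmental complexity levels, in the order listed above."""
    NO_EXTERNAL_PERCEPTION = 1
    REACTIVE_NO_REPRESENTATION = 2
    GEOMETRICAL_INFORMATION = 3
    STRUCTURAL_INTERPRETATION = 4
    AFFORDANCE_AND_DYNAMICS = 5


class MC(IntEnum):
    """Mission complexity levels, in the order listed above."""
    NO_ADAPTATION = 1
    LIMITED_LOCAL_ADAPTATION = 2
    FULL_ADAPTATION = 3


@dataclass(frozen=True)
class AutonomyProfile:
    """Hypothetical container for one point in the (ESI, EC, MC) space."""
    esi: ESI
    ec: EC
    mc: MC

    def at_least(self, other: "AutonomyProfile") -> bool:
        # True when this profile meets or exceeds the other on every axis.
        return (self.esi >= other.esi
                and self.ec >= other.ec
                and self.mc >= other.mc)


if __name__ == "__main__":
    reactive = AutonomyProfile(ESI.PREDETERMINED_GUIDANCE,
                               EC.REACTIVE_NO_REPRESENTATION,
                               MC.NO_ADAPTATION)
    mapped = AutonomyProfile(ESI.SITUATIONAL_AWARENESS,
                             EC.GEOMETRICAL_INFORMATION,
                             MC.LIMITED_LOCAL_ADAPTATION)
    print(mapped.at_least(reactive))
```

Keeping the three axes separate, rather than collapsing them into a single score, mirrors the way the metrics are treated independently in the text.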

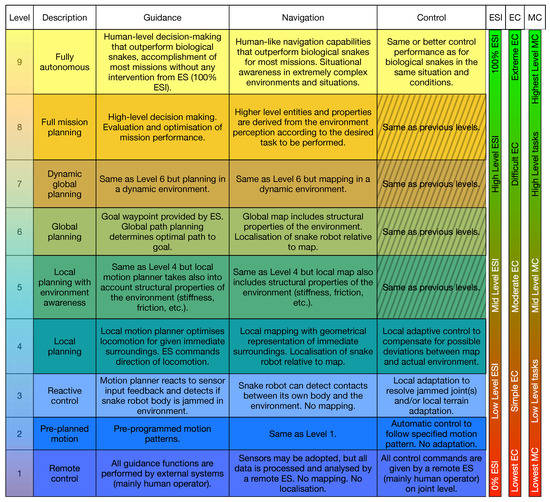

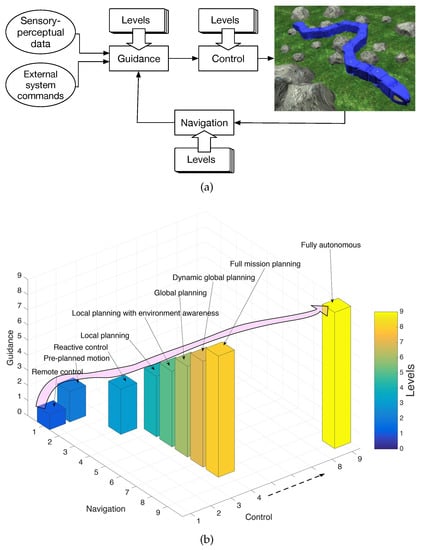

2.4. A Framework for Autonomy and Technology Readiness Assessment

To better understand the design of snake robot perception-driven obstacle-aided locomotion, some design examples of similarly demanding systems can be considered as sources of inspiration and for prototyping purposes. For instance, the design of autonomous unmanned aircraft systems (UAS) may provide solid directions for establishing a flexible design and prototyping framework. In particular, to systematically evaluate the autonomy levels (AL) of a UAS and to correctly measure the maturity of its autonomy-enabling technologies, the autonomy and technology readiness assessment (ATRA) framework may be adopted [7,8]. The ATRA framework combines both autonomy levels (AL) and technology readiness level (TRL) metrics. Borrowing this idea from UAS, the same framework concept can be used to provide a comprehensive picture of how snake robot perception-driven obstacle-aided locomotion may be realised in a realistic operational environment, as shown in Figure 4.

Figure 4.

The ATRA framework [7,8] applied to snake robot perception-driven obstacle-aided locomotion with the different levels of external system independence (ESI), of environmental complexity (EC) and of mission complexity (MC). ES refers to “External System”.

When considering snake robot perception-driven obstacle-aided locomotion, identifying and differentiating between consecutive autonomy levels is very challenging from a design point of view. Nevertheless, it is crucial to clearly distinguish autonomy levels during the design process in order to provide the research community with a useful evaluation and comparison tool. Inspired by similarly demanding systems [7], a nine-level scale is proposed based on gradual increase (autonomy as a gradual property) of guidance, navigation, and control (GNC) functions and capabilities. Referring to Figure 4, the key GNC functions that enable each autonomy level are verbally described along with their correspondences with mission complexity (MC), environmental complexity (EC), and external system independence (ESI) metrics (illustrated with a colour gradient). It should be noted that the motion planner is assumed to take the environment representation as input (i.e., a map of the environment built as a fusion between different sensor data and previous stored knowledge). Then, the control function is supposed to compensate with adjustments for any possible deviations between map and actual environment.

To a certain degree, the three key GNC functions can be independently considered with respect to the information flow, as shown in Figure 5a. The snake robot’s sensory-perceptual data and external system commands are used to provide an input for the guidance system, which is responsible for decision-making, path-planning, and mission planning activities. The navigation system is responsible for achieving all the functions of perception, mapping, and localisation. The processed information is then adopted by the control system, which is responsible for low-level adaptation and control tasks.

Figure 5.

(a) Information flow through the different functions and capabilities of guidance, navigation, and control (GNC); (b) the possible design levels depicted in Figure 4 are represented in a three-dimensional space.

This design approach allows a good level of modularity to be achieved. For instance, concerning level 6 (global planning), the expected guidance function could first be carried out for a map which only includes a geometrical representation. At a later stage of the prototyping process, some structural properties of the environment may be gradually considered, such as stiffness, friction, and other parameters. Different combinations are possible, and they depend upon the particular prototyping approach that designers adopt. The possible design levels depicted in Figure 4 are only provided for the sake of illustration. Our goal is to provide an overview of some of the main combinations of the three key GNC functions which we think are the most important steps in order to achieve fully autonomous snake robot operations.

Regarding our choice of describing a nine-level scale, it should be noted that the amount of effort needed to move from one level to the next is far from equal for all levels. In particular, a significant and more challenging leap in functionality will be required to move from level 7 to level 8, and from level 8 to level 9. We have chosen not to further refine the potentially existing sub-levels between these main levels (i.e., levels 7–9) in this paper. Instead, we have chosen an agile development-based and rapid-prototyping approach where we currently focus on refining the steps necessary to reach the next immediate levels (compared to the state of the art), and then leave the details for the higher levels until more information is available on the exact requirements and functionalities needed for these levels.

To provide a more intuitive overview of the design guidelines depicted in Figure 4, the same design levels can be represented in a three-dimensional space as shown in Figure 5b. It should be noted that control level 4 enables levels 4–8 concerning both guidance and navigation. The possible path that can be followed to achieve the desired level of independence is highlighted in the same figure.

It should be noted that the framework discussed in this work provides possible guidelines on how to design a system capable of adapting to more difficult terrains as the autonomy level of the robot increases. In particular, in Figure 4, the environmental complexity (EC) increases with the rise of complexity of the different design levels in the ATRA framework. Note that these design levels are also visualised in 3D in Figure 5b.

Referring to Figure 4 and Figure 5b, to the best of the authors’ knowledge, current cutting-edge snake robots exhibit level 4 or at most level 5 characteristics. In fact, the authors believe that the current technology for snake robots falls a little behind the current cutting-edge technology of non-snake-type robots.

3. Control Strategies for Obstacle-Aided Locomotion

The greater part of the existing literature on the control of snake robots considers motion across smooth—usually flat—surfaces. Different research groups have extensively investigated this particular operational scenario. Various approaches to mathematical modelling of the snake robot kinematics and dynamics have been presented as a means to simulate and analyse different control strategies [22]. In particular, many of the models presented in the early literature focus purely on kinematic aspects of locomotion [23,24], while more recent studies also include the dynamics of motion [25,26].

Among the different locomotion patterns inspired by biological snakes, lateral undulation is the fastest and most commonly implemented locomotion gait for robotic snakes in the literature [27]. This particular pattern can be realised through phase-shifted sinusoidal motion of each joint of the robotic snake [28]. This approach has been investigated for planar snake robots with metallic ventral scales [29] placed on the outer body of the robot, passive wheels [30], or for snake robots with anisotropic ground friction properties [31].
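
As a minimal sketch of the phase-shifted sinusoidal joint motion described above, the snippet below computes one reference angle per joint. The function name and the parameters `amplitude`, `omega`, `phase_shift`, and `offset` are illustrative assumptions rather than notation taken from [28].

```python
import math


def lateral_undulation_angles(t, n_joints, amplitude, omega, phase_shift, offset=0.0):
    """Return one reference angle (rad) per joint at time t (s).

    Joint i follows amplitude * sin(omega * t + i * phase_shift) + offset,
    so neighbouring joints run the same sinusoid with a constant phase lag.
    All parameter names are hypothetical and chosen for illustration.
    """
    return [
        amplitude * math.sin(omega * t + i * phase_shift) + offset
        for i in range(n_joints)
    ]


if __name__ == "__main__":
    # Four joints with a 90 degree phase shift between neighbours (assumed values).
    for t in (0.0, 0.5, 1.0):
        angles = lateral_undulation_angles(t, 4, 0.5, 2.0, math.pi / 2)
        print(t, [round(a, 3) for a in angles])
```

In formulations of this kind, a non-zero `offset` is typically used to bias the body curvature and thereby steer the robot, while `phase_shift` sets how many wave periods fit along the body.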

Even though these previous studies have provided researchers with a better understanding of snake robot dynamics, most of the past works on snake robot locomotion have almost exclusively considered motion across smooth surfaces. However, many real-life environments are not smooth, but cluttered with obstacles and irregularities. When the operational scenario is characterised by a surface that is no longer assumed to be flat and which has obstacles present, snake robots can move by sensing the surrounding environment. In the existing literature, not much work has been done to develop control tools specifically designed for this particular operational scenario. Next, we analyse and group relevant literature for snake robot locomotion in environments with obstacles, as shown in Table 1.

Table 1.

Snake locomotion in unstructured environments.

3.1. Obstacle Avoidance

A traditional approach to dealing with obstacles consists of trying to avoid them. Collisions may make the robot unable to progress and cause mechanical stress or damage to equipment. Therefore, different studies have focused on obstacle avoidance locomotion. For instance, principles of artificial potential field (APF) theory [32] have been adopted to effectively model imaginary force fields around objects that act either repulsively or attractively on the robot. The target position emits an attractive force field while obstacles, other robots, or the robot itself emit repulsive force fields. The strength of these forces may increase as the robot gets closer. Based on these principles, a controller capable of obstacle avoidance was presented in [33]. However, the standard APF approach may cause the robot to end up trapped in a local minimum. In this case, the repulsive forces from nearby obstacles may leave the robot unable to move. To escape local minima, a hybrid control methodology using APF integrated with a modified simulated annealing (SA) optimisation algorithm for motion planning of a team of multi-link snake robots was proposed in [34]. An alternative methodology was developed in [35]. Central pattern generators (CPGs) were employed to allow the robot to avoid obstacles or barriers by turning the robot’s body from its trajectory. A phase transition method was also presented in the same work, utilising the phase difference control parameter to realise the turning motion. This methodology also provides a way to incorporate sensory feedback into the CPG model, allowing for the detection of possible collisions.
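
To make the APF idea concrete, the following is a minimal sketch of a textbook-style potential field force for a point robot in the plane. It is not the controller of [33] or [34]; the quadratic attractive potential, the repulsive term with influence radius `rho0`, the gains `k_att` and `k_rep`, and the point-robot simplification are all assumptions made for illustration.

```python
import math


def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=1.0, rho0=1.0):
    """Return the (fx, fy) artificial force acting on a point robot.

    pos, goal: (x, y) tuples; obstacles: list of (x, y) point obstacles.
    The goal attracts with a force proportional to the distance to it.
    Each obstacle closer than rho0 repels with a force that grows as the
    robot approaches it. All gains are hypothetical tuning parameters.
    """
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        rho = math.hypot(dx, dy)
        if 0.0 < rho <= rho0:
            # Magnitude of the repulsive force along the obstacle-to-robot direction.
            mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho ** 2
            fx += mag * dx / rho
            fy += mag * dy / rho
    return fx, fy


if __name__ == "__main__":
    goal = (2.0, 0.0)
    # Free space: the force points straight at the goal.
    print(apf_force((0.0, 0.0), goal, []))
    # An obstacle on the line to the goal can outweigh the attraction.
    print(apf_force((0.5, 0.0), goal, [(1.0, 0.0)]))
```

In the second call the repulsive term dominates and the net force points away from the goal. Somewhere between the two positions the forces cancel, which is the kind of local minimum discussed above.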

3.2. Obstacle Accommodation

By using sensory feedback, a more relaxed approach to obstacle avoidance can be considered. Rather than absolutely avoiding collisions, the snake robot may be allowed to collide with obstacles, but collisions must be controlled so that no damage to the robot occurs. This approach was first investigated in [36], where a motion planning system was implemented to provide a snake-like robot with the possibility of accommodating environmental obstructions by continuing the motion towards the target while in contact with the obstacles. In [37], a general formulation of the motion constraints due to contact with obstacles was presented. Based on this formulation, a new inverse kinematics model was developed that provides joint motion for snake robots under contact constraints. By using this model, a motion planning algorithm for snake robot motion in a cluttered environment was also proposed.

3.3. Obstacle-Aided Locomotion

Even though obstacle avoidance and obstacle accommodation are useful features for snake robot locomotion in unstructured environments, these control approaches are not sufficient to fully exploit obstacles as means of propulsion. As observed in nature, biological snakes exploit the terrain irregularities and push against them so that a more efficient locomotion gait can be achieved. In particular, the snake’s entire body bends, and all sections consistently follow the path taken by the head and neck [2]. Snake robots may adopt a similar strategy. A key aspect of practical snake robots is therefore obstacle-aided locomotion [3,4]. To understand the mechanism underlying the functionalities of biological snakes on the basis of a synthetic approach, a model of a serpentine robot with viscoelastic properties was presented in [38,39]. It should be noted that the authors adopted the term scaffold-assisted serpentine locomotion, which is conceptually similar to the idea of obstacle-aided locomotion. The authors also designed an autonomous decentralised control scheme that employs local sensory feedback based on the muscle length and strain of the snake body, the latter of which is generated by the body’s softness. Through modelling and simulations, the authors demonstrated that only two local reflexive mechanisms, which exploit sensory information about the stretching of muscles and the pressure on the body wall, are crucial for realising locomotion. This finding may help develop robots that work in undefined environments and shed light on the fundamental principles underlying adaptive locomotion in animals.

However, to the best of our knowledge, little research has been done concerning the possibility of applying this locomotion approach to snake robots. For instance, a preliminary study aimed at understanding snake-like locomotion through a novel push-point approach was presented in [40].

Remark 1.

In [40], an overview of the lateral undulation as it occurs in nature was first formalised according to the following conditions:

- it occurs over irregular ground with vertical projections;

- propulsive forces are generated from the lateral interaction between the mobile body and the vertical projections of the irregular ground, called push-points;

- at least three simultaneous push-points are necessary for this type of motion to take place;

- during the motion, the mobile body slides along its contacted push-points.
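
The conditions listed above can be illustrated with a small sketch that sums the contact forces at a set of push-points and checks the three-contact condition. It assumes frictionless contacts, so that each force acts along the contact normal, and it ignores the moment balance on the body. The function names and the `(normal_angle, magnitude)` representation are hypothetical and are not taken from [40].

```python
import math

MIN_PUSH_POINTS = 3  # minimum number of simultaneous push-points stated above


def net_contact_force(contacts):
    """Sum planar contact forces.

    contacts: list of (normal_angle_rad, magnitude) pairs, where the angle
    is the direction of the contact normal in the world frame, measured
    from the desired direction of travel (the x axis).
    Returns (fx, fy): propulsive and lateral components.
    """
    fx = sum(f * math.cos(a) for a, f in contacts)
    fy = sum(f * math.sin(a) for a, f in contacts)
    return fx, fy


def enough_push_points(contacts):
    """True when at least MIN_PUSH_POINTS contacts carry a positive force."""
    return sum(1 for _, f in contacts if f > 0.0) >= MIN_PUSH_POINTS


if __name__ == "__main__":
    # Push-points alternating on the left and right side of the body (assumed values).
    contacts = [(math.pi / 3, 1.0), (-math.pi / 3, 2.0), (math.pi / 3, 1.0)]
    print(enough_push_points(contacts), net_contact_force(contacts))
```

In this example the lateral components cancel while the forward components add up, which is the effect that alternating push-points are meant to produce.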

Based on the conditions described in Remark 1, the authors of the same work considered a generic planar mechanism and a related environment suited to reproducing the fundamental mechanical phenomenon observed in the locomotion of terrestrial snakes. A simple control law was applied and tested via dynamic simulations with the purpose of calculating the contact forces required to propel the snake robot model in a desired direction. Subsequently, these findings were tested with practical experiments in [41], where closed-loop control of snake-like locomotion through lateral undulation was presented and applied to a wheel-less snake-like mobile mechanism. To sense the environment and to implement this closed-loop control approach, simple switch sensors located on the side of each module were adopted. A more accurate sensing approach was introduced in [42], where a design process for the electrical, sensing, and mechanical systems needed to build a functional robotic snake capable of tactile and force sensing was presented. Through manipulation of the body shape, the robot was able to move in the horizontal plane by pushing off obstacles to create propulsive forces. Instead of using additional hardware, an alternative and low-cost sensing approach was examined in [43], where robot actuators were used as sensors to allow the system to traverse an elastically deformable channel with no need for external tactile sensors.

Some researchers have focused on asymmetric pushing against obstacles. For instance, a control method with a predetermined and fixed pushing pattern was presented in [44]. In this method, contact information affects not only the joints adjacent to a contacting link but also a couple of neighboring joints further away. Furthermore, the distribution of the joint torques is empirically set asymmetrically in order to propel the snake robot forward. Later on, a more general and randomised control method that prevents the snake robot from getting stuck among crowded obstacles was proposed by the same research group in [45].

When locomoting through environments with obstacles, it is also important to achieve body shape compliance for the snake robot. Some researchers have focused on shape-based control approaches, where a simple motion pattern is propagated along the snake’s body and dynamically adjusted according to the surrounding obstacles. For instance, a general motion planning framework for body shape control of snake robots was presented in [46]. The applicability of this framework was demonstrated for straight line path following control, and for implementing body shape compliance in environments with obstacles. Compliance is achieved by assigning mass-spring-damper dynamics to the shape curve defining the motion of the robot.
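To make the notion of mass-spring-damper compliance concrete, the following minimal sketch simulates a single scalar deviation of the shape curve under a contact force. It is an illustration only, not the framework of [46]: the function name, the parameter values, and the choice of a semi-implicit Euler integrator are all assumptions made for this example.

```python
def simulate_compliant_offset(external_force, mass=1.0, damping=4.0,
                              stiffness=4.0, dt=0.001, duration=10.0):
    """Integrate m*x'' + c*x' + k*x = f(t) and return the final offset x.

    x is a hypothetical scalar deviation of the shape curve from its
    nominal position; f(t) is the contact force acting on it.
    All default values are illustrative assumptions.
    """
    x, v = 0.0, 0.0
    steps = int(round(duration / dt))
    for i in range(steps):
        f = external_force(i * dt)
        a = (f - damping * v - stiffness * x) / mass
        v += a * dt  # semi-implicit Euler: update the velocity first
        x += v * dt  # then the position, using the new velocity
    return x


# A constant push of 2 (force units) settles at the static deflection f / k.
final_offset = simulate_compliant_offset(lambda t: 2.0)
```

With these assumed values the system is critically damped, so the shape yields to the obstacle and settles without oscillating; a stiffer spring makes the body hold its nominal shape more firmly.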

The idea of adopting a shape-based control approach to achieve compliance is particularly challenging when considering snake robots with many degrees of freedom (DOF). In fact, having many DOF is both a potential benefit and a possible disadvantage. A snake robot with a large number of DOF can better comply with and therefore better move in complex environments. Yet, possessing many degrees of freedom is only an advantage if the system is capable of coordinating them to achieve desired goals in real-time. In practice, there is a trade-off between the capability of highly articulated robots to navigate complex unstructured environments and the high computational cost of coordinating their many DOF. To facilitate the coordination of many DOF, the robot shape may be used as an important component in creating a middle layer that links high-level motion planning to low-level control for the robust locomotion of articulated systems in complex terrains. This idea was presented in [47], where shape functions were introduced as the layer of abstraction that formed the basis for creating this middle layer. In particular, these shape functions were used to capture joint-to-joint coupling and provide an intuitive set of controllable parameters that adapt the system to the environment in real-time. This approach was later extended to the definition of spatial frequency and temporal phase serpenoid shape parameters [48]. The extended method provides a way to intuitively adapt the shape of highly articulated robots using joint-level torque feedback control, allowing a robot to navigate its way autonomously through unknown, irregular environment scenarios.
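As an illustration of how a few shape parameters can drive many joints, the sketch below evaluates a generic serpenoid wave in which each joint angle is a sinusoid of the joint index and time. The function name, the argument names, and the sign convention are assumptions for this example; the exact parameterisation used in [47,48] may differ.

```python
import math


def serpenoid_joint_angles(n_joints, t, amplitude, spatial_freq,
                           temporal_freq, offset=0.0):
    """Return one commanded angle (rad) per joint at time t.

    amplitude     : peak joint angle (rad)
    spatial_freq  : phase shift between neighbouring joints (rad/joint)
    temporal_freq : how fast the wave travels along the body (rad/s)
    offset        : constant bias that makes the robot turn (rad)
    """
    return [
        amplitude * math.sin(spatial_freq * i + temporal_freq * t) + offset
        for i in range(n_joints)
    ]


# Four joints, a quarter wave of phase lag between neighbours, at t = 0.
angles = serpenoid_joint_angles(4, t=0.0, amplitude=1.0,
                                spatial_freq=math.pi / 2, temporal_freq=1.0)
```

Whatever the number of joints, the controller only has to adjust the four scalars above, which is the kind of low-dimensional interface a shape-based middle layer is meant to provide.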

Remark 2.

Most of the previous studies highlight the fact that lateral undulation is highly dependent on the actuator torque output and environmental friction.

Based on Remark 2, interesting approaches were discussed in [4,49,50]. The authors focused on how to optimally use the motor torque inputs, which result in obstacle forces suitable to achieve a user-defined desired path for a snake robot. Less importance was given to the forces generated by the environmental friction. In detail, assuming a desired snake robot trajectory and the desired link angles at the obstacles in contact, the contribution was on how to map the given trajectory to obstacle contact forces, and these forces to control inputs. Based on a 2D-model of the snake robot, a convex quadratic programming problem was solved, minimizing the power consumption and satisfying the maximum torque constraint and the obstacle constraints. To implement this strategy in practice, the optimization problem needs to be solved on-line recursively. In addition, when the configuration of the contact points changes, some of the parameters need to be updated accordingly. Finally, as the authors pointed out, there are two main issues to practically using their method for obstacle-aided locomotion. The first is the definition of an automatic method for finding the desired link angles at the obstacles. The second is the automatic calculation of the desired path. However, an interesting result is that one could use the approach in [4] to check the quality of a given path by verifying if useful forces can be generated by the interaction with a number of obstacles for that path, and this could be done off-line.
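The structure of such an optimisation can be illustrated with a deliberately simplified toy problem. The sketch below is not the formulation of [4,49,50]: it assumes a single linear constraint linking joint torques to one required propulsive force, uses the sum of squared torques as a stand-in for power consumption, and only checks the torque limit afterwards instead of enforcing it. The names `force_gains`, `min_effort_torques`, and `within_torque_limit` are hypothetical.

```python
def min_effort_torques(force_gains, required_force):
    """Solve  min sum(u_i^2)  subject to  sum(g_i * u_i) = required_force.

    For this equality-constrained convex quadratic program the Lagrangian
    conditions give the closed form  u = g * F / (g . g).
    """
    norm_sq = sum(g * g for g in force_gains)
    if norm_sq == 0.0:
        raise ValueError("no joint can contribute to the required force")
    scale = required_force / norm_sq
    return [g * scale for g in force_gains]


def within_torque_limit(torques, max_torque):
    """Check (not enforce) the actuator limit |u_i| <= max_torque."""
    return all(abs(u) <= max_torque for u in torques)


gains = [1.0, 2.0, 2.0]          # assumed torque-to-force gains per joint
torques = min_effort_torques(gains, required_force=9.0)
feasible = within_torque_limit(torques, max_torque=2.5)
```

In a realistic setting the inequality constraints would be handed to a quadratic programming solver, and the gains would have to be recomputed whenever the set of contact points changes, which is why the problem needs to be re-solved on-line.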

4. Environment Perception, Mapping, and Representation for Locomotion

In order for robots to be able to operate autonomously and interact with the environment in any of the ways mentioned in Section 3 (obstacle avoidance, obstacle accommodation, or obstacle-aided locomotion), they need to acquire information about the environment that can be used to plan their actions accordingly. This task can be divided into three different challenges that need to be solved:

- sensing, using the adequate sensor or sensor combinations to capture information about the environment;

- mapping, which combines and organises the sensing output in order to create a representation that can be exploited for the specific task to be performed by the robot;

- localisation, which estimates the robot’s pose in the environment representation according to the sensor inputs.

These topics are well studied for different types of robots and environments, and are tackled by the Simultaneous Localisation And Mapping (SLAM) community, which has been one of the most active research areas in robotics in recent years [51]. However, comparatively little work has been done in this field for snake robots, as research has been focused on understanding the fundamentals of snake locomotion, and on the development of the control techniques.

Table 2 summarises the sensors most commonly found in the robotics literature for environment perception aimed at navigation. The table also contains some basic evaluation of the suitability of the specific sensor or sensing technology for the requirements and limitations of snake robots. For the sake of completeness, we have included references to representative robots different from snake robots for those technologies where we were not able to find examples of applications involving snake robots.

Table 2.

Sensors for environment perception

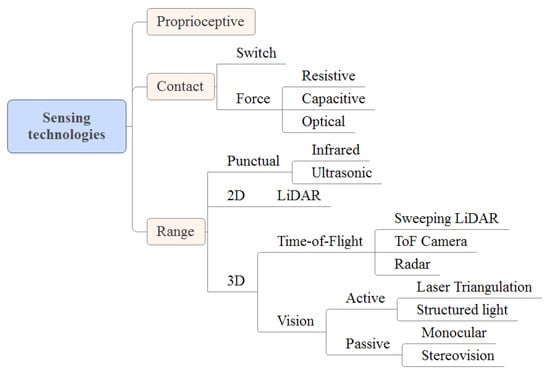

4.1. Sensor Technologies for Environment Perception for Navigation in Robotics

The classification of sensors in Table 2 considers both the measuring principles and sensing devices involved. For more clarity, Figure 6 summarises the sensor technologies that have been taken into account as the most representative ones found in literature regarding environment perception for navigation.

Figure 6.

A taxonomy of sensors for environment perception for navigation in robotics.

The qualifier proprioceptive is used in robotics to distinguish sensors that measure values internal to the robot (e.g., motor speed) from exteroceptive sensors, which obtain information from the robot’s environment (e.g., distance to objects). Contact sensors can inform whether the robot is touching elements from the environment in some specific locations, or even measure the pressure being applied. Range sensors provide distance measurements to nearby objects.

Proximity sensors [76] are intended for short-range distance measurements. They are active sensors that emit either an ultrasound (US) or infrared (IR) pulse and calculate the distance to the closest obstacle based on the time-of-flight principle; i.e., measuring the time it takes for the pulse to travel from the emitter to the obstacle, and then back to the receiver. For infrared-based sensors, the electronics are sometimes simplified by modulating the emitted signal instead of measuring the actual travelling time; the distance is then estimated from the shift in the phase of the received signal with respect to the emitted one. Light detection and ranging (LiDAR) sensors are also based on the time-of-flight principle, emitting a pulsed laser beam which is deflected by a rotating mirror. In this way, instead of obtaining a single measurement, the LiDAR system is able to scan the surroundings and provide measurements in a plane at specified angle intervals. Often in the literature, LiDARs are mounted on an additional rotating element [77] that allows a full 3D reconstruction to be produced by aligning the subsequent scans in a single point cloud. Radars operate under similar principles to LiDARs, but use radio waves instead of relying on optical signals.
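The two ranging principles described above reduce to short formulas, sketched here for illustration. The function names and the example values are assumptions; the speed of sound is taken as roughly 343 m/s in air at about 20 °C.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s
SPEED_OF_SOUND = 343.0          # m/s, approximate value in air at ~20 °C


def distance_from_round_trip(time_s, wave_speed):
    """Pulse time of flight: the wave travels to the obstacle and back."""
    return wave_speed * time_s / 2.0


def distance_from_phase_shift(phase_rad, modulation_hz,
                              wave_speed=SPEED_OF_LIGHT):
    """Modulated signal: distance from the phase lag of the echo."""
    return wave_speed * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz, wave_speed=SPEED_OF_LIGHT):
    """Largest distance before the phase wraps around by 2*pi."""
    return wave_speed / (2.0 * modulation_hz)


ultrasound_m = distance_from_round_trip(0.01, SPEED_OF_SOUND)
infrared_m = distance_from_phase_shift(math.pi, modulation_hz=10e6)
max_range_m = unambiguous_range(10e6)
```

The phase-based variant trades timing electronics for a limited unambiguous range: at the assumed 10 MHz modulation the phase wraps at roughly 15 m, so targets beyond that distance alias to shorter readings.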

Active 3D imaging techniques are also referred to as structured light [78]; the term “active” is used here in opposition to “passive” to denote that a controlled light source is projected onto the scene. In Table 2, we distinguish between laser triangulation, structured light, and light coding. Laser triangulation sensors consist of a laser source calibrated with respect to a camera or imaging sensor (position and orientation). The laser generates a single point or narrow stripe that is projected onto the scene or object being inspected. The projected element is deformed according to the shape of the scene; this deformation can be observed by the imaging sensor, and then the depth profile is estimated by a simple triangulation principle. By providing an additional scanning movement, the different scans can be stitched together to generate a point cloud representation. In order to avoid the scanning movement, two-dimensional patterns of non-coherent light are used. In the literature there are multiple proposals for ways of designing the projected patterns to achieve better reconstructions (e.g., binary codes, gray codes, phase shifting). A clear distinction can be made between methods that perform a temporal coding (which require a sequence of patterns that are successively projected onto the scene) and spatial coding methods, which concentrate the whole coding scheme in a single pattern. Following a rather widespread nomenclature, we denote temporal coding methods just by structured light, and spatial methods by light coding [79]. Light coding methods have been popularized in the last years by the introduction of low-cost devices in the consumer market, such as the Microsoft Kinect. Those devices are also often referred to as RGB-D cameras. Despite the name, most commercially available time-of-flight cameras (ToF camera) [80] operate by measuring the phase shift of the modulated infrared signal bounced back.
The main difference with respect to LiDAR systems is that in ToF cameras the whole scene is captured with each pulse, as opposed to one-point measurements in the case of LiDARs. ToF cameras can provide 3D measurements at high rates, but at the cost of low resolutions and accuracies in the range of a few centimetres.
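As an example of the temporal coding mentioned above, the following sketch generates binary-reflected Gray code stripe patterns and decodes the projector column seen at one pixel. It is a minimal illustration under idealised assumptions (perfect thresholding of each captured image, one pattern per bit); the function names are hypothetical.

```python
def to_gray(n):
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def from_gray(g):
    """Invert to_gray by folding the shifted code back onto itself."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(width, n_bits):
    """Return n_bits stripe patterns (lists of 0/1), most significant first."""
    return [[(to_gray(x) >> bit) & 1 for x in range(width)]
            for bit in reversed(range(n_bits))]


def decode_column(bits_seen):
    """Recover the projector column from the bit sequence seen at a pixel."""
    g = 0
    for b in bits_seen:
        g = (g << 1) | b
    return from_gray(g)


patterns = stripe_patterns(width=8, n_bits=3)
# A pixel lit by projector column 5 observes one bit per projected pattern.
column = decode_column([p[5] for p in patterns])
```

Neighbouring columns differ in exactly one bit, so a thresholding error at a stripe boundary shifts the decoded column by at most one, which is the usual reason for preferring Gray codes over plain binary codes.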

Referring to passive 3D imaging techniques, stereovision [81] consists of two calibrated cameras that acquire images of a scene from two different viewpoints. Calibration involves both the optical characteristics of the cameras and the relative position and orientation between them. Points in the scene need to be recognized or matched across the images from the two cameras, and then their position is triangulated to calculate the depth. Stereovision methods can be classified as sparse or dense, according to the nature of the point cloud generated: sparse methods select certain interest points from the images to be triangulated, while dense methods operate on a per-pixel basis. A single camera can also be used to recover the 3D structure of an environment: structure from motion (SfM) [82] or visual SLAM (vSLAM) [83] methods use different images from a moving camera (or images taken from different viewpoints) to simultaneously estimate the positions from where the images were taken and the 3D structure of the environment.
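For a rectified stereo pair, the triangulation step reduces to a single relation between depth and disparity, sketched below. The sketch assumes ideal pinhole cameras with parallel optical axes and a focal length expressed in pixels; the function name and the example values are illustrative.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair.

    disparity_px    : horizontal shift of the matched point between images
    focal_length_px : focal length in pixels (assumed equal for both cameras)
    baseline_m      : distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


near = depth_from_disparity(35.0, focal_length_px=700.0, baseline_m=0.1)
far = depth_from_disparity(3.5, focal_length_px=700.0, baseline_m=0.1)
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for close ones, and the short baseline that fits on a snake robot module limits the useful range.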

4.2. Survey of Environment Perception for Locomotion in Snake Robots

Many of the snake robots found in literature are equipped with a camera located in the head of the robot and pointing in the same direction as the navigation direction. However, in most cases, the images captured by the camera are not used for visual feedback for the robot’s control, but sent to the operator for her/him to plan the robot’s trajectory or to solve the high level applications, which in the case of snake robots are usually inspection or search-and-rescue operations.

One way a snake robot can have a very simple perception of the surrounding environment is by using proprioception; i.e., using the robot’s own internal state (e.g., joint angles, motor current levels, etc.) to derive some properties of the environment. As an example, [53] showed that the tilt angle of a slope can be inferred by only using the snake robot’s state estimated from the joint angles, and used this information to adapt the behaviour of sidewinding and maximise the travelling speed. In [52], a heavily bioinspired method was developed based on the mechanics and neural control of locomotion of a worm, which also uses a serpentine motion. The objective in this case is to react and adapt to obstacles in the environment. The work in [58] uses strain gauges to measure the deformation in the joint actuators; from these measurements, the contact forces applied to a waterproof snake robot are calculated.

The sensing modality that is most commonly explored in snake robots for environment perception is force or contact sensors. The first snake robot in 1972 [10] already used contact switches along the body of the snake robot, and demonstrated lateral inhibition with respect to external obstacles. Apart from reacting to contact with obstacles, the main use of force sensors is to adapt the body of the snake robot to the irregularities of the terrain [56,57]. The authors in [54] claim to feature the first full-body 3D-sensing snake. The robot is equipped with 3-DOF force sensors integrated in the wheels (which are also actuated), and uses this data to equally distribute the weight of all the segments, in addition to moving away from obstacles at the sides. In [55], a different application of contact sensors is shown, in which lateral switches distributed along the body of the snake are used as touch sensors to guarantee that there are enough push-point contacts so that propulsion can be performed. If the last contact point is lost, the robot performs an exploratory movement. The snake robot in [15] is designed specifically for obstacle-aided locomotion and includes two main features: a smooth exterior surface that allows the robot to glide, and contact force sensing using four force-sensing resistors on each side of the joint module, under the assumption that for locomotion on horizontal surfaces it is enough to know on which side of the robot the contact happens.
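The push-point logic described for lateral switch sensors can be sketched as a simple decision rule. This is an illustration only and not the controller of [55]: the threshold of three contacts is borrowed from the conditions in Remark 1, and the function names and the two behaviour labels are hypothetical.

```python
MIN_PUSH_POINTS = 3  # assumption: taken from the conditions in Remark 1


def count_push_points(left_switches, right_switches):
    """Count modules whose left or right contact switch is pressed."""
    return sum(1 for left, right in zip(left_switches, right_switches)
               if left or right)


def select_behaviour(left_switches, right_switches,
                     min_push_points=MIN_PUSH_POINTS):
    """Propel when enough push-points exist, otherwise search for contact."""
    contacts = count_push_points(left_switches, right_switches)
    return "propel" if contacts >= min_push_points else "explore"


# Five modules: contacts on modules 0 (left), 2 (right), and 4 (left).
left = [True, False, False, False, True]
right = [False, False, True, False, False]
behaviour = select_behaviour(left, right)
```

Binary switches only reveal where contact occurs, not how hard the robot is pushing, which is the limitation that force-sensing designs such as [15] address.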

Robots that base their environment perception on contact-based sensors exclusively allow for limited motion planning. For example, when aiming at obstacle avoidance, it is impossible to achieve full avoidance, as the robot needs to make contact with the obstacle in order to realise its presence. However, in [84,85], it is demonstrated that environment representation can be achieved purely by contact sensors. In this case, whisker-like contact sensors were used in a SLAM framework to produce an environment representation that could potentially be used for planning and obstacle-aided locomotion purposes.

The use of range or proximity sensors allows snake robots to not rely on contact in order to perceive the environment, and thus perform obstacle avoidance. The works in [60,61] feature active infrared sensors used to implement reactive behaviours for avoiding obstacles, either by selecting an obstacle-free trajectory in the first case, or by adapting the undulatory motion for narrow corridor-like passages in the second one. It is worth noting that the snake robot in [60] is equipped with actively-driven tracks for propulsion in slippery terrains. Ultrasound sensors are used in a similar fashion, and their data can also be used to estimate the snake robot’s speed in case it is approaching an obstacle [59]. A combination of ultrasound sensors for mapping and obstacle avoidance and passive infrared sensors for the detection of human life in urban search-and-rescue applications is proposed in [63], though details on how the sensor information is exploited are not provided.

A more detailed and accurate representation of the environment can be achieved by the use of LiDAR sensors, sometimes combined with ultrasound sensors as in [62]. The use of LiDAR even allows for the generation of richer and more complete maps. The use of such sensors in a SLAM framework is demonstrated in [64]: the snake robot is equipped with a LiDAR sensor in the head, and a camera and infrared sensors in the sides. The camera is used to provide position information to the remote operator who controls the desired velocity of the snake robot using a joystick. The snake then uses the LiDAR to perform SLAM, producing a map and an estimated position of the robot itself in that map. The robot then uses the output from the SLAM to navigate the environment, while using the infrared sensor information in a reactive way to avoid obstacles not detected by the LiDAR and overcome the errors in the SLAM. The work in [66] studies the use of SLAM in snake robots. It emphasizes two important challenges of SLAM in this type of robot in comparison with existing approaches of SLAM: on one hand, the use of odometry-less models, and on the other hand, the lack of features or landmarks that characterizes navigation environments such as the inside of tunnels and pipes, which is a typical focus for snake robots. A rotating LiDAR is used in [65] to scan the environment and generate a 2.5 dimensional map that then can be used to perform motion planning in 3D. The main objective of this system is to overcome challenging obstacles such as stairs, for which the robot also relies on active wheels. The point clouds generated by the LiDAR are also matched across time, estimating the relative localisation that is then used to correct the robot odometry. The work in [67] also approaches step climbing using a LiDAR sensor, which is used to calculate the relative position of the snake’s head to the step and its height. 
Several measurements of the LiDAR are fused through an Extended Kalman Filter in order to reduce the measurement’s uncertainty and detect the line segments that correspond to the different planes of the step. This information is then fed into a model predictive control (MPC) algorithm to generate a collision-free trajectory. In [66], the influence of two different SLAM algorithms using serpentine locomotion in a featureless environment is presented.
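The benefit of fusing repeated range measurements can be illustrated with the simplest possible filter. The sketch below is a scalar, linear Kalman update for a static quantity such as a step height, not the Extended Kalman Filter of [67]; the noise variance, the vague prior, and the sample readings are assumed values.

```python
def fuse_measurements(measurements, measurement_var,
                      prior_mean=0.0, prior_var=1e6):
    """Sequential Kalman update for a constant scalar state.

    Returns the fused estimate and its variance. A large prior_var
    encodes that nothing is known before the first measurement.
    """
    mean, var = prior_mean, prior_var
    for z in measurements:
        gain = var / (var + measurement_var)  # Kalman gain
        mean += gain * (z - mean)             # correct with the innovation
        var *= (1.0 - gain)                   # uncertainty shrinks each step
    return mean, var


# Three noisy readings of a step height (metres), assumed variance 0.01 m^2.
height, height_var = fuse_measurements([0.20, 0.22, 0.18],
                                       measurement_var=0.01)
```

With a vague prior the estimate approaches the sample mean and the variance falls roughly as one over the number of readings; an extended filter follows the same predict-and-correct pattern but linearises a non-linear measurement model at each step.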

The use of onboard vision systems to perceive the environment and influence the snake robot’s motion is limited in the literature. In a simplified example [86], a camera mounted in the head of the snake robot is used to detect a black tape attached to the ground, and that information is then used as the desired trajectory for the snake. Time-of-flight (ToF) cameras provide 3D information of the environment in the form of depth images or point clouds, without requiring any additional scanning movement. A modified version of the iterative closest point (ICP) algorithm is used in [69] to combine the information of an inertial measurement unit (IMU) with a ToF camera. The ICP [87] is a well-known algorithm that calculates the transformation (translation and rotation) that aligns two point clouds by minimising the mean squared error between the point pairs of the point clouds. The modifications proposed for the ICP are intended to speed it up and increase its robustness. The objective of this process is to perform localisation and mapping. Localisation is demonstrated at four frames per second, while map construction was done offline. The concept is demonstrated in a very challenging scenario, the Collapsed House Simulation Facility, using the IRS Souryu snake robot, which uses actively driven tracks for propulsion. The use of ToF cameras is also demonstrated in [70] in a pipe inspection snake robot. The camera is used to detect key aspects of the pipe geometry, such as bends, junctions, and pipe radius. The snake robot’s shape can then be adapted to the pipe’s features, allowing it to navigate efficiently, even through vertical pipes.
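The alignment step at the core of each ICP iteration has a closed-form solution once point pairs are fixed. The sketch below shows that step in 2D with known correspondences; a complete ICP would additionally re-match nearest neighbours and repeat until convergence. It is a generic illustration, not the modified algorithm of [69], and the function names are hypothetical.

```python
import math


def align_rigid_2d(source, target):
    """Least-squares rotation angle and translation mapping source to target.

    source and target are equal-length lists of (x, y) pairs, where
    source[i] is assumed to correspond to target[i].
    """
    n = len(source)
    if n == 0 or n != len(target):
        raise ValueError("need two non-empty point lists of equal length")
    cx_s = sum(p[0] for p in source) / n
    cy_s = sum(p[1] for p in source) / n
    cx_t = sum(p[0] for p in target) / n
    cy_t = sum(p[1] for p in target) / n
    dot = cross = 0.0
    for (xs, ys), (xt, yt) in zip(source, target):
        xs, ys, xt, yt = xs - cx_s, ys - cy_s, xt - cx_t, yt - cy_t
        dot += xs * xt + ys * yt
        cross += xs * yt - ys * xt
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated source centroid onto the target one.
    tx = cx_t - (c * cx_s - s * cy_s)
    ty = cy_t - (s * cx_s + c * cy_s)
    return theta, (tx, ty)


def transform(points, theta, translation):
    """Apply a 2D rotation followed by a translation to a list of points."""
    c, s = math.cos(theta), math.sin(theta)
    tx, ty = translation
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


scan = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
moved = transform(scan, math.radians(30.0), (1.0, 2.0))
theta_est, t_est = align_rigid_2d(scan, moved)
```

In practice the correspondences are unknown and noisy, so the quality of the nearest-neighbour matching, and an initial guess such as the IMU orientation, largely determine whether the iteration converges to the correct pose.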

Laser triangulation is a well-known sensing technology in industry, providing very high resolution and high accuracy measurements. The work in [68] focuses on increasing the snake robot’s autonomy, which is demonstrated by autonomous pole climbing, a behaviour that is difficult to achieve by teleoperation. The authors have custom-designed a laser triangulation sensor to fit into the size and power constraints of the snake robot. The robot adopts a stable position with the head raised, and rotates the head to perform environment scanning. The resulting point cloud is filtered and processed to detect pole-like elements in the environment that the snake robot can climb. Once the pole and its relative position have been determined, the robot pose is estimated by using the forward kinematics and IMU data.

For the sake of completeness, we also mention offboard vision systems, which might not be applicable to more realistic applications such as exploration, search-and-rescue, or inspection. In [88], two cameras with a top-down view of the operating area are used to detect the obstacles and calculate the snake robot’s pose. The pose estimation is simplified by placing fiducials on the snake robot (10 orange blocks along the snake robot’s length). In a similar setup, [89] uses a stereovision system to measure the position of the robot’s head and the target coordinates.

4.3. Other Relevant Sensor Technologies for Navigation in Non-Snake Robots

Multiple examples of robots with shapes and propulsion mechanisms other than those of snake robots, and which use different sensor modalities from the ones introduced above, are common in the literature. Structured light sensors based on temporal coding are seldom used for navigation because of their limited ability to cope with movement, whether due to dynamic elements in the environment or to a moving sensor. However, an example of a moving robot for search-and-rescue applications is introduced in [71]. The work in [72] focuses on improving the performance of SLAM using RGB-D sensors in large-scale environments. The use of stereovision for mapping and navigating in very challenging outdoor scenarios is shown in [73]. A single camera together with an IMU is demonstrated to be enough to produce a map and calculate a pose estimate at 40 Hz in a micro aerial vehicle (MAV) in [74]. Among the most relevant limitations in MAVs are the constraints on the size, power, and weight of the payload. These constraints are addressed by [75] by designing and building a radar sensor based on ultra-wide band (UWB), used for mapping and obstacle detection.

Remark 3.

Knowledge about the environment and its properties, in addition to its geometric representation, can be successfully exploited for improving locomotion performance for obstacle-aided locomotion.

While knowledge about the environment’s geometry might seem an obvious requirement for obstacle avoidance, other kinds of interaction with the environment—including obstacle-aided locomotion—require some further task-relevant knowledge about the environment. From a cognitive perspective, this has been acknowledged by the robotics community by the creation of semantic maps [90], which capture higher-level information about the environment, usually linked or grounded to knowledge from other sources. For the Snake-2s robot [64], the authors also claim that for planning the trajectory, they require the nature of the surrounding obstacles to be considered, as contact with some elements (e.g., fragile, high heat, electrically-charged, or sticky obstacles) might pose a safety risk to the robot and must then be completely avoided. However, safety is not the only reason. The biologically-inspired hexapod robot in [91] represents a good example of how knowledge about the environment is exploited for enhanced navigation. Information about certain terrain characteristics is captured in the environment model, and later adopted as part of the cost function used by the RRT* planner [92]. This way, the planned trajectory considers factors such as terrain roughness, terrain inclination, or mapping uncertainty.
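How terrain knowledge can enter a planner's cost function is sketched below as a weighted sum over path segments. This is a hypothetical illustration and not the cost used in [91]: the three terrain terms follow the factors named above, while the weights, the data layout, and the function names are assumptions.

```python
def segment_cost(length_m, roughness, inclination_rad, map_variance,
                 w_rough=2.0, w_incl=1.5, w_unc=0.5):
    """Length of a segment inflated by penalties for difficult terrain."""
    penalty = (w_rough * roughness
               + w_incl * abs(inclination_rad)
               + w_unc * map_variance)
    return length_m * (1.0 + penalty)


def path_cost(segments):
    """Sum the cost of (length, roughness, inclination, variance) tuples."""
    return sum(segment_cost(*seg) for seg in segments)


short_but_rough = [(2.0, 0.5, 0.0, 0.0)]
long_but_smooth = [(1.5, 0.0, 0.0, 0.0), (1.5, 0.0, 0.0, 0.0)]
best = min((short_but_rough, long_but_smooth), key=path_cost)
```

With these assumed weights the longer, smoother path is preferred. For obstacle-aided locomotion the sign of some terms could plausibly be reversed, since irregularities that a legged robot avoids may provide useful push-points for a snake robot.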

5. Concluding Remarks

In this paper we have surveyed and discussed the state-of-the-art, challenges, and possibilities with perception-driven obstacle-aided locomotion. We have proposed a division of levels of autonomy for snake robots along three main axes: environmental complexity, mission complexity, and external system independence. Moreover, we have further expanded the description to suggest a step-wise approach to increasing the level of autonomy within three main robot technology areas: guidance, navigation, and control. We have reviewed existing literature relevant for perception-driven obstacle-aided locomotion. This includes snake robot obstacle avoidance, obstacle accommodation, and obstacle-aided locomotion, as well as methods and technologies for environment perception, mapping, and representation.

Perception-driven obstacle-aided locomotion is still in its infancy. However, there are strong results within both the snake robot community in particular, and the robotics community in general, which can be used to build further upon. One of the fundamental targets of this paper is to further increase global efforts to realise the large variety of application possibilities offered by snake robots and to provide an up-to-date reference as a stepping-stone for new research and development within this field.

Acknowledgments

This work is supported by the Research Council of Norway through the Young research talents funding scheme, project title “SNAKE—Control Strategies for Snake Robot Locomotion in Challenging Outdoor Environments”, project number 240072.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Perrow, M.R.; Davy, A.J. Handbook of Ecological Restoration: Volume 1, Principles of Restoration; Cambridge University Press: Cambridge, UK, 2008.

- Gray, J. The Mechanism of Locomotion in Snakes. J. Exp. Biol. 1946, 23, 101–120.

- Transeth, A.; Leine, R.; Glocker, C.; Pettersen, K.; Liljebäck, P. Snake Robot Obstacle-Aided Locomotion: Modeling, Simulations, and Experiments. IEEE Trans. Robot. 2008, 24, 88–104.

- Holden, C.; Stavdahl, Ø.; Gravdahl, J.T. Optimal dynamic force mapping for obstacle-aided locomotion in 2D snake robots. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, IL, USA, 14–18 September 2014; pp. 321–328.

- Sanfilippo, F.; Azpiazu, J.; Marafioti, G.; Transeth, A.A.; Stavdahl, Ø.; Liljebäck, P. A review on perception-driven obstacle-aided locomotion for snake robots. In Proceedings of the 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1–7.

- Huang, H.M.; Pavek, K.; Albus, J.; Messina, E. Autonomy levels for unmanned systems (ALFUS) framework: An update. In Proceedings of the 2005 SPIE Defense and Security Symposium, Orlando, FL, USA, 28 March–1 April 2005; International Society for Optics and Photonics: Orlando, FL, USA, 2005; pp. 439–448.

- Kendoul, F. Towards a Unified Framework for UAS Autonomy and Technology Readiness Assessment (ATRA). In Autonomous Control Systems and Vehicles; Nonami, K., Kartidjo, M., Yoon, K.J., Budiyono, A., Eds.; Springer: Tokyo, Japan, 2013; pp. 55–71.

- Nonami, K.; Kartidjo, M.; Yoon, K.J.; Budiyono, A. Autonomous Control Systems and Vehicles: Intelligent Unmanned Systems; Springer Science & Business Media: Tokyo, Japan, 2013.

- Blasch, E.P.; Lakhotia, A.; Seetharaman, G. Unmanned vehicles come of age: The DARPA grand challenge. Computer 2006, 39, 26–29.

- Hirose, S. Biologically Inspired Robots: Snake-Like Locomotors and Manipulators; Oxford University Press: Oxford, UK, 1993.

- McKenna, J.C.; Anhalt, D.J.; Bronson, F.M.; Brown, H.B.; Schwerin, M.; Shammas, E.; Choset, H. Toroidal skin drive for snake robot locomotion. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA 2008), Pasadena, CA, USA, 19–23 May 2008; pp. 1150–1155.

- Ma, S.; Tadokoro, N. Analysis of Creeping Locomotion of a Snake-like Robot on a Slope. Auton. Robots 2006, 20, 15–23.

- Fjerdingen, S.A.; Liljebäck, P.; Transeth, A.A. A snake-like robot for internal inspection of complex pipe structures (PIKo). In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2009), Saint Louis, MO, USA, 11–15 October 2009; pp. 5665–5671.

- Wright, C.; Buchan, A.; Brown, B.; Geist, J.; Schwerin, M.; Rollinson, D.; Tesch, M.; Choset, H. Design and architecture of the unified modular snake robot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 4347–4354.

- Liljebäck, P.; Pettersen, K.; Stavdahl, Ø. A snake robot with a contact force measurement system for obstacle-aided locomotion. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–8 May 2010; pp. 683–690.

- Anderson, G.T.; Yang, G. A proposed measure of environmental complexity for robotic applications. In Proceedings of the 2007 IEEE International Conference on Systems, Man and Cybernetics, Montreal, QC, Canada, 7–10 October 2007; pp. 2461–2466.

- Kelasidi, E.; Liljebäck, P.; Pettersen, K.Y.; Gravdahl, J.T. Innovation in Underwater Robots: Biologically Inspired Swimming Snake Robots. IEEE Robot. Autom. Mag. 2016, 23, 44–62.

- Chigisaki, S.; Mori, M.; Yamada, H.; Hirose, S. Design and control of amphibious Snake-like Robot ACM-R5. In Proceedings of the 2005 JSME Conference on Robotics and Mechatronics, Kobe, Japan, 9–11 June 2005.

- Socha, J.J.; O’Dempsey, T.; LaBarbera, M. A 3-D kinematic analysis of gliding in a flying snake, Chrysopelea paradisi. J. Exp. Biol. 2005, 208, 1817–1833.

- Vagia, M.; Transeth, A.A.; Fjerdingen, S.A. A literature review on the levels of automation during the years. What are the different taxonomies that have been proposed? Appl. Ergon. 2016, 53 Pt A, 190–202.

- Yanco, H.A.; Drury, J. “Where am I?” Acquiring situation awareness using a remote robot platform. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics, The Hague, The Netherlands, 10–13 October 2004; Volume 3, pp. 2835–2840.

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. Snake Robots: Modelling, Mechatronics, and Control; Springer Science & Business Media: London, UK, 2012.

- Chirikjian, G.S.; Burdick, J.W. The kinematics of hyper-redundant robot locomotion. IEEE Trans. Robot. Autom. 1995, 11, 781–793. [Google Scholar] [CrossRef]

- Ostrowski, J.; Burdick, J. The Geometric Mechanics of Undulatory Robotic Locomotion. Int. J. Robot. Res. 1998, 17, 683–701. [Google Scholar] [CrossRef]

- Prautsch, P.; Mita, T.; Iwasaki, T. Analysis and Control of a Gait of Snake Robot. IEEJ Trans. Ind. Appl. 2000, 120, 372–381. [Google Scholar]

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. Controllability and Stability Analysis of Planar Snake Robot Locomotion. IEEE Trans. Autom. Control 2011, 56, 1365–1380. [Google Scholar]

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. A review on modelling, implementation, and control of snake robots. Robot. Auton. Syst. 2012, 60, 29–40. [Google Scholar]

- Hu, D.L.; Nirody, J.; Scott, T.; Shelley, M.J. The mechanics of slithering locomotion. Proc. Natl. Acad. Sci. USA 2009, 106, 10081–10085. [Google Scholar] [CrossRef] [PubMed]

- Saito, M.; Fukaya, M.; Iwasaki, T. Serpentine locomotion with robotic snakes. IEEE Control Syst. 2002, 22, 64–81. [Google Scholar] [CrossRef]

- Tang, W.; Reyes, F.; Ma, S. Study on rectilinear locomotion based on a snake robot with passive anchor. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 950–955. [Google Scholar]

- Transeth, A.A.; Pettersen, K.Y.; Liljebäck, P. A survey on snake robot modeling and locomotion. Robotica 2009, 27, 999–1015. [Google Scholar] [CrossRef]

- Lee, M.C.; Park, M.G. Artificial potential field based path planning for mobile robots using a virtual obstacle concept. In Proceedings of the 2003 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003), Port Island, Japan, 20–24 July 2003; Volume 2, pp. 735–740. [Google Scholar]

- Ye, C.; Hu, D.; Ma, S.; Li, H. Motion planning of a snake-like robot based on artificial potential method. In Proceedings of the 2010 IEEE International Conference on Robotics and Biomimetics (ROBIO), Tianjin, China, 14–18 December 2010; pp. 1496–1501. [Google Scholar]

- Yagnik, D.; Ren, J.; Liscano, R. Motion planning for multi-link robots using Artificial Potential Fields and modified Simulated Annealing. In Proceedings of the 2010 IEEE/ASME International Conference on Mechatronics and Embedded Systems and Applications (MESA), Qingdao, China, 15–17 July 2010; pp. 421–427. [Google Scholar]

- Nor, N.M.; Ma, S. CPG-based locomotion control of a snake-like robot for obstacle avoidance. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 347–352. [Google Scholar]

- Shan, Y.; Koren, Y. Design and motion planning of a mechanical snake. IEEE Trans. Syst. Man Cybern. 1993, 23, 1091–1100. [Google Scholar] [CrossRef]

- Shan, Y.; Koren, Y. Obstacle accommodation motion planning. IEEE Trans. Robot. Autom. 1995, 11, 36–49. [Google Scholar] [CrossRef]

- Kano, T.; Sato, T.; Kobayashi, R.; Ishiguro, A. Decentralized control of scaffold-assisted serpentine locomotion that exploits body softness. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 5129–5134. [Google Scholar]

- Kano, T.; Sato, T.; Kobayashi, R.; Ishiguro, A. Local reflexive mechanisms essential for snakes’ scaffold-based locomotion. Bioinspir. Biomim. 2012, 7, 046008. [Google Scholar] [CrossRef] [PubMed]

- Bayraktaroglu, Z.Y.; Blazevic, P. Understanding snakelike locomotion through a novel push-point approach. J. Dyn. Syst. Meas. Control 2005, 127, 146–152. [Google Scholar] [CrossRef]

- Bayraktaroglu, Z.Y.; Kilicarslan, A.; Kuzucu, A. Design and control of biologically inspired wheel-less snake-like robot. In Proceedings of the First IEEE/RAS-EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob 2006), Pisa, Italy, 20–22 February 2006; pp. 1001–1006. [Google Scholar]

- Gupta, A. Lateral undulation of a snake-like robot. Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2007. [Google Scholar]

- Andruska, A.M.; Peterson, K.S. Control of a Snake-Like Robot in an Elastically Deformable Channel. IEEE/ASME Trans. Mechatron. 2008, 13, 219–227. [Google Scholar] [CrossRef]

- Kamegawa, T.; Kuroki, R.; Travers, M.; Choset, H. Proposal of EARLI for the snake robot’s obstacle aided locomotion. In Proceedings of the 2012 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), College Station, TX, USA, 5–8 November 2012; pp. 1–6. [Google Scholar]

- Kamegawa, T.; Kuroki, R.; Gofuku, A. Evaluation of snake robot’s behavior using randomized EARLI in crowded obstacles. In Proceedings of the 2014 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Toyako-cho, Japan, 27–30 October 2014; pp. 1–6. [Google Scholar]

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. Compliant control of the body shape of snake robots. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 4548–4555. [Google Scholar]

- Travers, M.; Whitman, J.; Schiebel, P.; Goldman, D.; Choset, H. Shape-Based Compliance in Locomotion. In Proceedings of the Robotics: Science and Systems (RSS), Cambridge, MA, USA, 12–16 July 2016; Number 5, p. 10. [Google Scholar]

- Whitman, J.; Ruscelli, F.; Travers, M.; Choset, H. Shape-based compliant control with variable coordination centralization on a snake robot. In Proceedings of the IEEE 55th Conference on Decision and Control (CDC), Las Vegas, NV, USA, 12–14 December 2016; pp. 5165–5170. [Google Scholar]

- Ma, S.; Ohmameuda, Y.; Inoue, K. Dynamic analysis of 3-dimensional snake robots. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 1, pp. 767–772. [Google Scholar]

- Sanfilippo, F.; Stavdahl, Ø.; Marafioti, G.; Transeth, A.A.; Liljebäck, P. Virtual functional segmentation of snake robots for perception-driven obstacle-aided locomotion. In Proceedings of the IEEE Conference on Robotics and Biomimetics (ROBIO), Qingdao, China, 3–7 December 2016; pp. 1845–1851. [Google Scholar]

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- Boyle, J.H.; Johnson, S.; Dehghani-Sanij, A.A. Adaptive Undulatory Locomotion of a C. elegans Inspired Robot. IEEE/ASME Trans. Mechatron. 2013, 18, 439–448. [Google Scholar] [CrossRef]

- Gong, C.; Tesch, M.; Rollinson, D.; Choset, H. Snakes on an inclined plane: Learning an adaptive sidewinding motion for changing slopes. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014; pp. 1114–1119. [Google Scholar]

- Taal, S.; Yamada, H.; Hirose, S. 3 axial force sensor for a semi-autonomous snake robot. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA ’09), Kobe, Japan, 12–17 May 2009; pp. 4057–4062. [Google Scholar]

- Bayraktaroglu, Z.Y. Snake-like locomotion: Experimentations with a biologically inspired wheel-less snake robot. Mech. Mach. Theory 2009, 44, 591–602. [Google Scholar] [CrossRef]

- Gonzalez-Gomez, J.; Gonzalez-Quijano, J.; Zhang, H.; Abderrahim, M. Toward the sense of touch in snake modular robots for search and rescue operations. In Proceedings of the ICRA 2010 Workshop on Modular Robots: State of the Art, Anchorage, AK, USA, 3–8 May 2010; pp. 63–68. [Google Scholar]

- Wu, X.; Ma, S. Development of a sensor-driven snake-like robot SR-I. In Proceedings of the 2011 IEEE International Conference on Information and Automation (ICIA), Shenzhen, China, 6–8 June 2011; pp. 157–162. [Google Scholar]

- Liljebäck, P.; Stavdahl, Ø.; Pettersen, K.; Gravdahl, J. Mamba—A waterproof snake robot with tactile sensing. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014; pp. 294–301. [Google Scholar]

- Paap, K.; Christaller, T.; Kirchner, F. A robot snake to inspect broken buildings. In Proceedings of the 2000 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2000), Takamatsu, Japan, 30 October–5 November 2000; Volume 3, pp. 2079–2082. [Google Scholar]

- Caglav, E.; Erkmen, A.M.; Erkmen, I. A snake-like robot for variable friction unstructured terrains, pushing aside debris in clearing passages. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2007), San Diego, CA, USA, 29 October–2 November 2007; pp. 3685–3690. [Google Scholar]

- Sfakiotakis, M.; Tsakiris, D.P.; Vlaikidis, A. Biomimetic Centering for Undulatory Robots. In Proceedings of the First IEEE/RAS-EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob 2006), Pisa, Italy, 20–22 February 2006; pp. 744–749. [Google Scholar]

- Wu, Q.; Gao, J.; Huang, C.; Zhao, Z.; Wang, C.; Su, X.; Liu, H.; Li, X.; Liu, Y.; Xu, Z. Obstacle avoidance research of snake-like robot based on multi-sensor information fusion. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 1040–1044. [Google Scholar]

- Chavan, P.; Murugan, M.; Vikas Unnikkannan, E.; Singh, A.; Phadatare, P. Modular Snake Robot with Mapping and Navigation: Urban Search and Rescue (USAR) Robot. In Proceedings of the 2015 International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 26–27 February 2015; pp. 537–541. [Google Scholar]

- Tanaka, M.; Kon, K.; Tanaka, K. Range-Sensor-Based Semiautonomous Whole-Body Collision Avoidance of a Snake Robot. IEEE Trans. Control Syst. Technol. 2015, 23, 1927–1934. [Google Scholar] [CrossRef]

- Pfotzer, L.; Staehler, M.; Hermann, A.; Roennau, A.; Dillmann, R. KAIRO 3: Moving over stairs & unknown obstacles with reconfigurable snake-like robots. In Proceedings of the 2015 European Conference on Mobile Robots (ECMR), Lincoln, UK, 2–4 September 2015; pp. 1–6. [Google Scholar]

- Tian, Y.; Gomez, V.; Ma, S. Influence of two SLAM algorithms using serpentine locomotion in a featureless environment. In Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 182–187. [Google Scholar]

- Kon, K.; Tanaka, M.; Tanaka, K. Mixed Integer Programming-Based Semiautonomous Step Climbing of a Snake Robot Considering Sensing Strategy. IEEE Trans. Control Syst. Technol. 2016, 24, 252–264. [Google Scholar] [CrossRef]

- Ponte, H.; Queenan, M.; Gong, C.; Mertz, C.; Travers, M.; Enner, F.; Hebert, M.; Choset, H. Visual sensing for developing autonomous behavior in snake robots. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2779–2784. [Google Scholar]

- Ohno, K.; Nomura, T.; Tadokoro, S. Real-Time Robot Trajectory Estimation and 3D Map Construction using 3D Camera. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5279–5285. [Google Scholar]

- Fjerdingen, S.A.; Mathiassen, J.R.; Schumann-Olsen, H.; Kyrkjebø, E. Adaptive Snake Robot Locomotion: A Benchmarking Facility for Experiments. In European Robotics Symposium 2008; Bruyninckx, H., Přeučil, L., Kulich, M., Eds.; Number 44 in Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2008; pp. 13–22. [Google Scholar]

- Mobedi, B.; Nejat, G. 3-D Active Sensing in Time-Critical Urban Search and Rescue Missions. IEEE/ASME Trans. Mechatron. 2012, 17, 1111–1119. [Google Scholar]

- Labbé, M.; Michaud, F. Online global loop closure detection for large-scale multi-session graph-based SLAM. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 2661–2666. [Google Scholar]

- Agrawal, M.; Konolige, K.; Bolles, R.C. Localization and Mapping for Autonomous Navigation in Outdoor Terrains: A Stereo Vision Approach. In Proceedings of the IEEE Workshop on Applications of Computer Vision (WACV ’07), Austin, TX, USA, 21–22 February 2007. [Google Scholar]

- Weiss, S.; Achtelik, M.W.; Lynen, S.; Chli, M.; Siegwart, R. Real-time onboard visual-inertial state estimation and self-calibration of MAVs in unknown environments. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 957–964. [Google Scholar]

- Fontana, R.J.; Richley, E.A.; Marzullo, A.J.; Beard, L.C.; Mulloy, R.W.T.; Knight, E.J. An ultra wideband radar for micro air vehicle applications. In Proceedings of the 2002 IEEE Conference on Ultra Wideband Systems and Technologies, Digest of Papers, Baltimore, MD, USA, 21–23 May 2002; pp. 187–191. [Google Scholar]

- Dudek, G.; Jenkin, M. Computational Principles of Mobile Robotics; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar]

- Surmann, H.; Nüchter, A.; Hertzberg, J. An autonomous mobile robot with a 3D laser range finder for 3D exploration and digitalization of indoor environments. Robot. Auton. Syst. 2003, 45, 181–198. [Google Scholar] [CrossRef]

- Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-the-art and applications of 3D imaging sensors in industry, cultural heritage, medicine, and criminal investigation. Sensors 2009, 9, 568–601. [Google Scholar] [CrossRef] [PubMed]

- Henry, P.; Krainin, M.; Herbst, E.; Ren, X.; Fox, D. RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments. Int. J. Robot. Res. 2012, 31, 647–663. [Google Scholar] [CrossRef]

- Foix, S.; Alenya, G.; Torras, C. Lock-in Time-of-Flight (ToF) Cameras: A Survey. IEEE Sens. J. 2011, 11, 1917–1926. [Google Scholar] [CrossRef]

- Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42. [Google Scholar] [CrossRef]

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-Time Single Camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef] [PubMed]

- Fox, C.; Evans, M.; Pearson, M.; Prescott, T. Tactile SLAM with a biomimetic whiskered robot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 4925–4930. [Google Scholar]

- Pearson, M.; Fox, C.; Sullivan, J.; Prescott, T.; Pipe, T.; Mitchinson, B. Simultaneous localisation and mapping on a multi-degree of freedom biomimetic whiskered robot. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; pp. 586–592. [Google Scholar]