1. Introduction

In the application of satellite technology, high-accuracy geolocation and joint observation of multi-source data have emerged as core issues in photogrammetry and remote sensing. Although the global positioning precision of satellites has improved steadily, as seen with ZY3, the overall positioning precision of some satellites remains low and unstable owing to different design purposes and deficiencies in hardware configuration, which restricts their application. ZY-1-02C is a land-resource survey satellite equipped with a multispectral (MS) camera with 10 m resolution, a panchromatic (PAN) camera with 5 m resolution, and a high-resolution PAN camera with 2.36 m resolution. The overall positioning precision of its imagery at the sensor-geometry processing level is approximately 100 m, and it degrades to about 1000 m under extreme conditions. The conventional approach of manually selecting a large number of control points cannot meet the demands of mass data processing. In contrast, automatic control point matching, namely multi-source satellite image matching, allows different data to be processed jointly, opening many possibilities for multi-source applications [1]. This study used multi-source image registration to compensate for the positioning deficiencies of ZY-1-02C sensor-geometry products, turning them into a richer data source and a more useful Earth observation tool.

Automatic multi-source image matching (AMSIM), which is widely used in photogrammetry and remote sensing, has been studied for decades [2,3,4,5,6,7]. Compared with manual measurement of control points, image matching effectively reduces the workload and is suitable for processing mass data. Image matching methods can be broadly classified into two categories: area-based matching (ABM) and feature-based matching (FBM) [8]. ABM measures similarity using gray-level or phase data in fixed-size windows of the two images. The most commonly used ABM algorithms are based on mutual information [9,10], frequency-domain correlation [11], and cross-correlation [12,13]. However, ABM is computationally inefficient. In contrast, FBM extracts salient features from the images, can achieve sub-pixel accuracy, and allows rapid processing.

Well-known feature extraction operators [14], such as SIFT [15] and SURF [16,17], have been widely used in image matching. Methods based on other features, such as lines [18], edges [8], contours [19], and shapes [20], are also used in numerous applications. Feature points have shown excellent performance and have been applied successfully to various matching tasks, including AMSIM [21,22]. However, problems remain, such as uneven precision control, mismatches, and high cost. Frequency-domain correlation has also been applied to some AMSIM cases. Phase correlation is known to recover integer-pixel displacements, and several Fourier-domain methods, together with closely related spatial-domain variants, have been proposed for estimating translational shifts between image pairs with sub-pixel accuracy [23,24,25]. Liu et al. proposed robust phase correlation methods for sub-pixel feature matching based on singular value decomposition [26]. Nevertheless, phase correlation results may contain gross errors caused by minor noise and by differences between the images.

Gross errors in image matching, namely mismatched point pairs, must be addressed. Many experiments [27] have indicated that mismatches are inevitable in areas with repeated or insufficient texture, clouds, or shadows. Hence, gross error elimination has become another key criterion for judging matching algorithms [28]. Conventional methods for eliminating gross errors fall into two categories. The first relies on complex iterative computation within automatic adjustment [29]; however, it depends strongly on data quality and is costly and unreliable. The second eliminates gross errors manually and is therefore unsuitable for automatic photogrammetry [30]. Kang et al. proposed an outlier detection method based on a Triangulated Irregular Network (TIN) structure, which was successfully applied to the automatic registration of terrestrial laser scanning point clouds [31,32]. This method effectively estimates complex local distortions in point cloud data and eliminates gross errors that are not apparent in the global statistics.

When conducting AMSIM, existing satellite orthoimages can provide a georeference for satellite images with unsatisfactory positioning precision. Enhanced Thematic Mapper Plus (ETM+) images, acquired from the Landsat 7 platform, have been widely used in remote sensing [33,34]. The Landsat 7 satellite, launched in 1999, carries the eight-band ETM+ sensor, whose panchromatic band has a resolution of 15 m. ETM+ images have broad coverage and relatively high positioning precision. Thus, ETM+ Digital Orthophoto Maps (DOMs), also called orthorectified images, were adopted as georeferenced images in our approach, and AMSIM was conducted to achieve high and uniform global positioning accuracy for ZY-1-02C images.

Even though the positions of satellite platforms and sensors are rigorously controlled, images of the same ground target acquired from different sources still differ in rotation, scale, and translation [30,35]. These errors are strongly correlated, which restricts image matching algorithms. The performance of image matching methods depends on predicting the initial positions of the points to be matched and on eliminating gross errors. In other words, initial position prediction and gross error elimination are the two crucial requirements for a stable, precise, and effective image matching method.

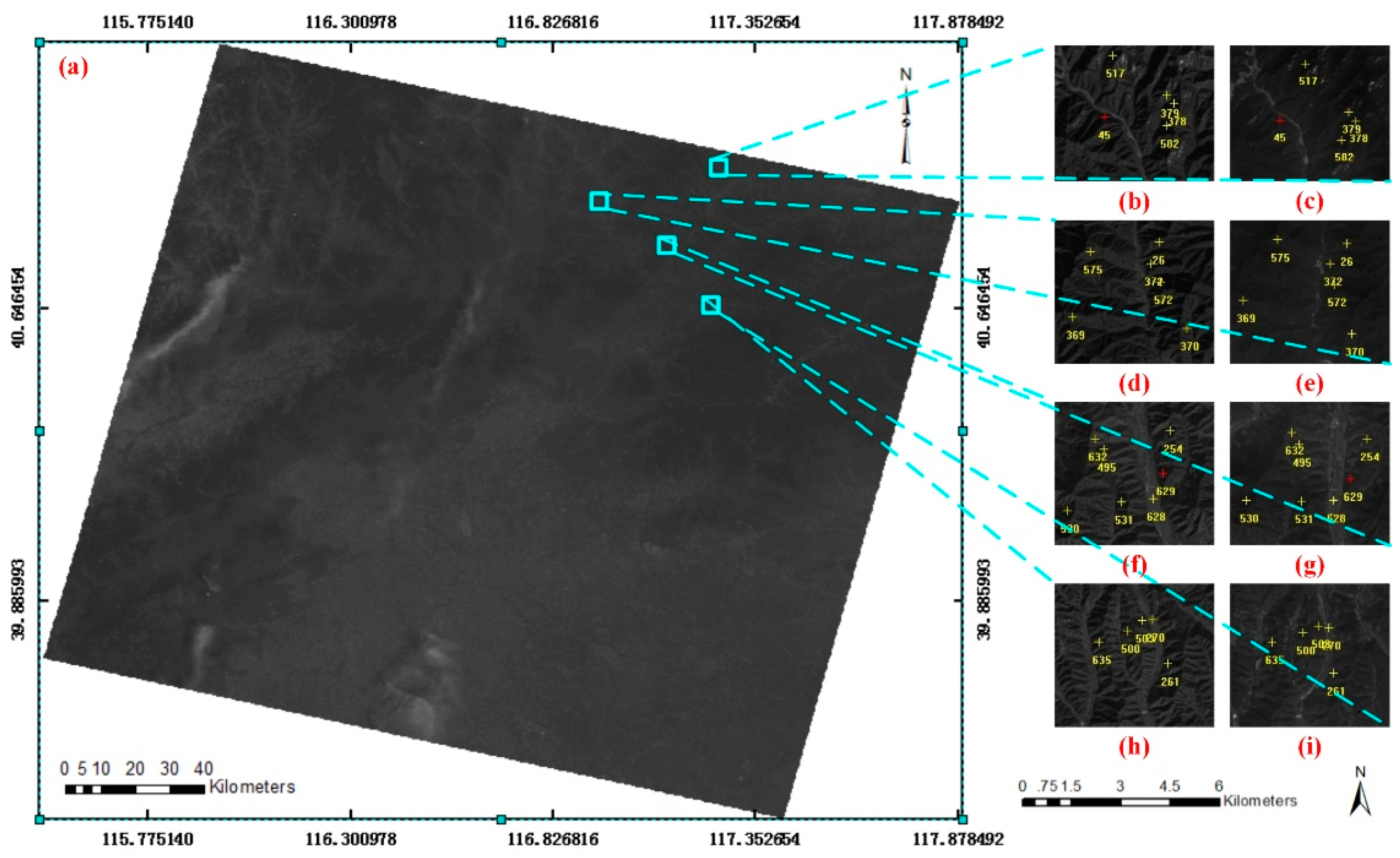

In this study, AMSIM was used to co-register ZY-1-02C Level 1 images with the DOMs of ETM+. Corresponding point pairs in image space were first obtained from the rational polynomial coefficient (RPC) model, and the rotation and scale differences between the images were calculated from these point pairs. The ZY-1-02C images were then resampled using the obtained rotation and scale parameters. The resampled images were divided into blocks and matched against the ETM+ orthoimages by overall phase correlation (OPC) to obtain the translation parameters for initial matching. The Harris detector was applied to extract feature points from the resampled ZY-1-02C images, and these points were matched against the ETM+ DOM using normalized cross-correlation (NCC) and least-squares matching (LSM). Finally, the matched points were used to construct a triangle network, from which the local statistic vectors (LSV) of the point pairs were computed to detect and eliminate gross errors. The experimental results indicate that the proposed approach achieves stable and correct matching when the resolution difference is moderate (1:3). After matching, the positioning precision of the ZY-1-02C images was better than 10 m.

2. Methods

The two crucial requirements for an effective AMSIM method are initial matching value prediction and matching error elimination. Our work focused on these requirements, as shown in Figure 1. Initial matching value prediction includes two steps. In the first step, two or more object points within the overlapping area were projected onto both images using the RPCs or another direct model. Because the resolution of the ETM+ orthoimages is lower than that of the ZY-1-02C images, these projections were used to calculate the parameters of the resampling model. In the second step, the resampled ZY-1-02C images were divided into blocks and matched against the ETM+ orthoimages by OPC to generate the translation parameters, which provide the shift values for NCC. Image matching was then conducted by NCC and LSM. Finally, the LSV of the matched point pairs were computed statistically to detect and eliminate gross errors.

2.1. Initial Matching Point Detection

Considering image differences, the mathematical model between two satellite images can be described based on three aspects: rotation, scale, and translation. For automatic matching of multi-source satellite images, these aspects are usually unknown before processing.

Given the inconsistent positioning precision of the RPCs, directly predicting the initial values for image matching with the RPC model is generally unreliable. In the RPC model, rotation and scale can typically be regarded as systematic parameters, whereas translation is the random parameter. Because the three parameters are strongly correlated, they should be separated as far as possible to simplify image matching. Thus, the image to be matched is first resampled using the rotation and scale parameters, and the initial positions of the matching points are then predicted using the translation parameters. The key to predicting the initial values for image matching is to use the RPCs to obtain the relatively stable prediction parameters [36,37] and overall correlation to determine the relatively random ones.

2.1.1. Estimation of Variation in Rotation and Scale

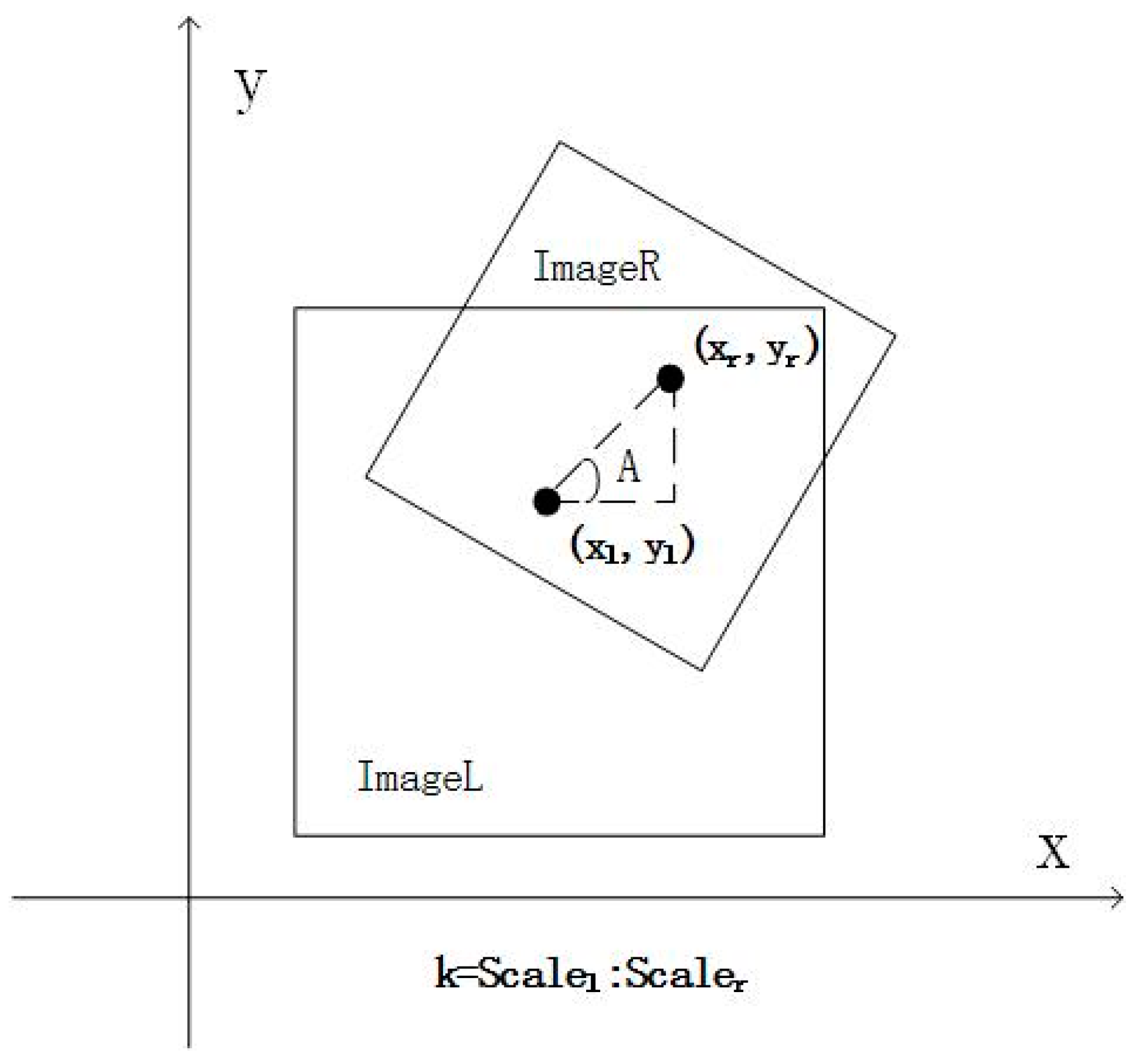

To address the rotation and scale differences between images, establishing a uniform spatial reference is crucial. Image point pairs that correspond to the same ground tie point can be determined from the RPCs of the ZY-1-02C imagery and from the geographic information of the ETM+ DOMs and the SRTM elevation data, with the points first detected by the Harris detector. As shown in Figure 2, a uniform image coordinate system was adopted: the coordinate system of one image was selected as the reference so that the rotation, scale, and translation differences would be reflected in the coordinates of the corresponding points.

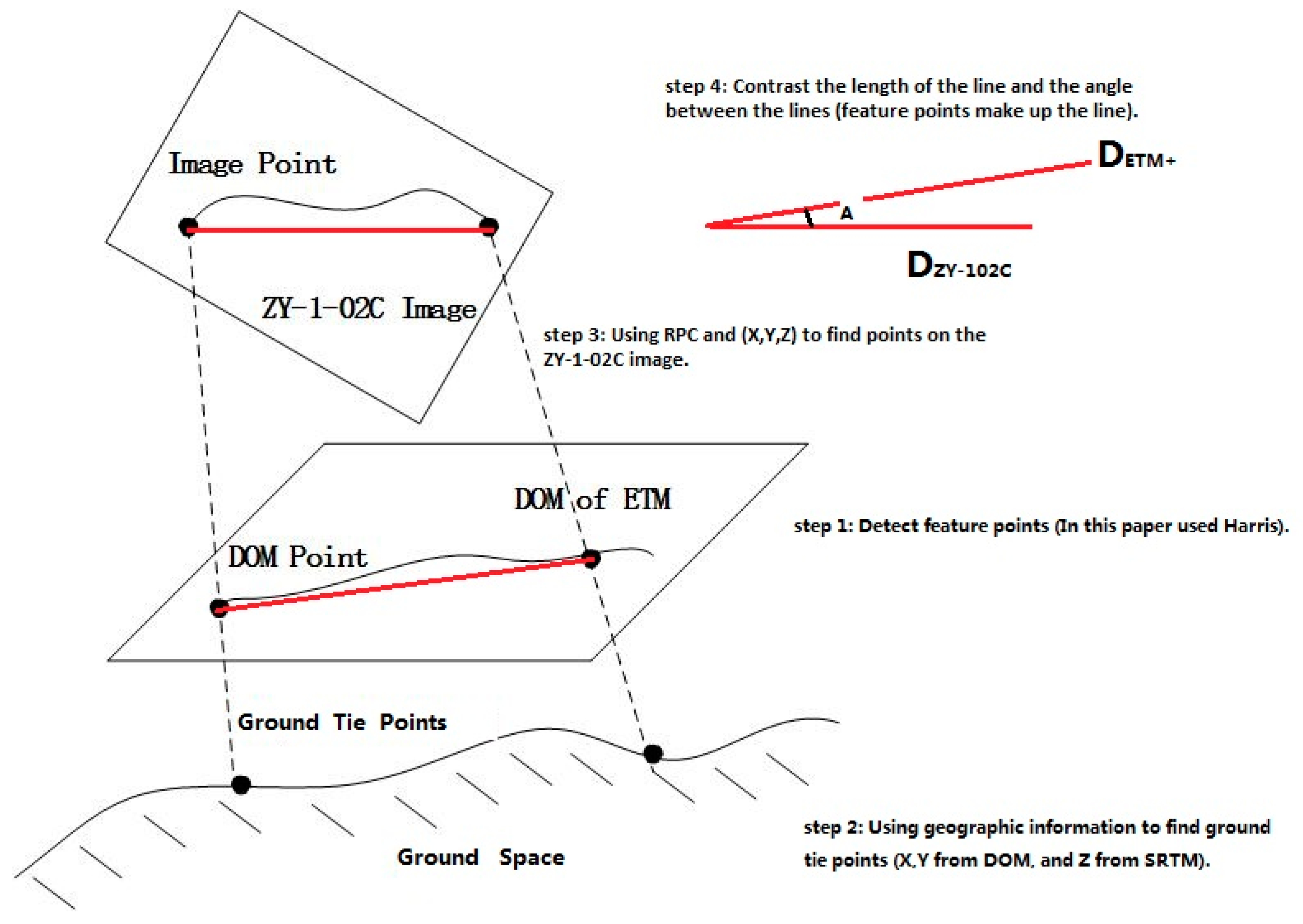

Figure 3 shows the model for transforming one image space into another. The process has four steps. Step 1: Detect feature points on the ETM+ DOM with the Harris detector. Step 2: Use the geographic information to obtain the ground tie point (X, Y, Z): X and Y are taken from the DOM, and Z is taken from the SRTM. Step 3: Project the ground tie point (X, Y, Z) into the ZY-1-02C imagery using the RPCs, as in Equation (1). Step 4: Connect pairs of feature points to form lines, where $D_{ETM+}$ and $D_{ZY}$ denote the lengths of a line in the ETM+ and ZY-1-02C imagery, respectively, and compare the line lengths and the angles between the lines using Equation (2).

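$$ r_n = \frac{P_1(X_n, Y_n, Z_n)}{P_2(X_n, Y_n, Z_n)}, \qquad c_n = \frac{P_3(X_n, Y_n, Z_n)}{P_4(X_n, Y_n, Z_n)} \qquad (1) $$

where $(r_n, c_n)$ and $(X_n, Y_n, Z_n)$ are the normalized coordinates of the image space and ground space points, respectively, and $P_1, \ldots, P_4$ are cubic polynomials that approximate the rigorous geometric sensor model of the satellite imagery.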
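To make the ground-to-image projection of Equation (1) concrete, the following Python sketch evaluates an RPC model. It is a minimal illustration, not the authors' implementation: it assumes the 20-term cubic polynomials follow the common RPC00B term ordering, maps $(X_n, Y_n, Z_n)$ to normalized longitude, latitude, and height, and uses hypothetical dictionary keys (`lon_off`, `line_num`, and so on) and a hypothetical toy coefficient set.

```python
import numpy as np


def rpc_terms(L, P, H):
    """The 20 cubic-polynomial terms, in the common RPC00B order (assumed)."""
    return np.array([
        1.0, L, P, H, L * P, L * H, P * H, L * L, P * P, H * H,
        P * L * H, L ** 3, L * P * P, L * H * H, L * L * P, P ** 3,
        P * H * H, L * L * H, P * P * H, H ** 3,
    ])


def rpc_project(lon, lat, h, rpc):
    """Project a ground point (lon, lat, h) to image (line, sample)."""
    # Normalize the ground coordinates (X_n, Y_n, Z_n in the text).
    L = (lon - rpc["lon_off"]) / rpc["lon_scale"]
    P = (lat - rpc["lat_off"]) / rpc["lat_scale"]
    H = (h - rpc["h_off"]) / rpc["h_scale"]
    t = rpc_terms(L, P, H)
    # Ratios of cubic polynomials give the normalized image coordinates (r_n, c_n).
    r_n = t @ rpc["line_num"] / (t @ rpc["line_den"])
    c_n = t @ rpc["samp_num"] / (t @ rpc["samp_den"])
    # De-normalize to pixel coordinates.
    line = r_n * rpc["line_scale"] + rpc["line_off"]
    samp = c_n * rpc["samp_scale"] + rpc["samp_off"]
    return float(line), float(samp)


def toy_rpc():
    """Hypothetical coefficients: sample follows longitude, line follows -latitude."""
    den = np.zeros(20)
    den[0] = 1.0
    line_num = np.zeros(20)
    line_num[2] = -1.0
    samp_num = np.zeros(20)
    samp_num[1] = 1.0
    return {
        "lon_off": 116.0, "lon_scale": 0.5, "lat_off": 40.0, "lat_scale": 0.5,
        "h_off": 0.0, "h_scale": 500.0,
        "line_off": 6000.0, "line_scale": 6000.0,
        "samp_off": 6000.0, "samp_scale": 6000.0,
        "line_num": line_num, "line_den": den,
        "samp_num": samp_num, "samp_den": den,
    }


print(rpc_project(116.25, 39.75, 100.0, toy_rpc()))  # (9000.0, 9000.0)
```

Real ZY-1-02C RPC files would supply all 80 coefficients and the ten offset/scale values; the toy set only exercises the arithmetic.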

The rotation angles of the lines were used to obtain the rotation parameter $A$, and the line lengths were used to obtain the scale parameter $k$, as shown in Figure 2. Equation (2) is a simple and effective model of the relationship between the two images, and the RPCs and the geographic information of the DOMs play important roles in calculating it. As shown in Figures 2 and 3, two or more pairs of image points are needed to form lines in the two image spaces. These sample lines are used to calculate $A$ and $k$ in preparation for image matching.

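$$ A = \frac{1}{n}\sum_{i=1}^{n}\left(\alpha_{ETM+,i} - \alpha_{ZY,i}\right), \qquad k = \frac{1}{n}\sum_{i=1}^{n}\frac{D_{ETM+,i}}{D_{ZY,i}} \qquad (2) $$

where $\alpha_{ETM+,i}$ and $\alpha_{ZY,i}$ are the rotation angles of the $i$-th line in the two images, $D_{ETM+,i}$ and $D_{ZY,i}$ are the lengths of the line in the two images, $A$ is the rotation angle, $k$ is the scale parameter, and $n$ is the number of statistical samples.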

Owing to the inconsistent positioning precision, the projected point pairs may not correspond exactly and may lie far apart. However, the relative geometry of the point pairs within the ZY-1-02C image space tends to be consistent regardless of whether the geographic positioning is accurate. For instance, the lengths and angles of lines connecting two groups of point pairs reveal the rotation and scale differences between the images. In this study, the lengths and angles of lines formed by image point pairs with identical geographic coordinates were calculated to determine the rotation and scale relationship between the images.
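The rotation and scale estimation described above can be sketched in Python as follows. This is a sketch under stated assumptions, not the authors' code: it assumes a line is formed between every pair of points, that $A$ is the mean wrapped angle difference and $k$ the mean length ratio (ETM+ over ZY-1-02C), and the function names are hypothetical.

```python
import numpy as np


def estimate_rotation_scale(pts_etm, pts_zy):
    """Estimate rotation A (radians) and scale k from corresponding image points.

    pts_etm: (n, 2) feature points (x, y) detected on the ETM+ DOM
    pts_zy:  (n, 2) RPC projections of the same ground points into ZY-1-02C
    Every pair of points forms one line (assumption); n must be at least 2.
    """
    pts_etm = np.asarray(pts_etm, dtype=float)
    pts_zy = np.asarray(pts_zy, dtype=float)
    i, j = np.triu_indices(len(pts_etm), k=1)
    v_etm = pts_etm[j] - pts_etm[i]          # line vectors in the ETM+ DOM
    v_zy = pts_zy[j] - pts_zy[i]             # the same lines in ZY-1-02C
    ang_etm = np.arctan2(v_etm[:, 1], v_etm[:, 0])
    ang_zy = np.arctan2(v_zy[:, 1], v_zy[:, 0])
    # Wrap each angle difference to (-pi, pi] before averaging.
    d_ang = np.angle(np.exp(1j * (ang_etm - ang_zy)))
    A = float(np.mean(d_ang))
    k = float(np.mean(np.hypot(v_etm[:, 0], v_etm[:, 1]) /
                      np.hypot(v_zy[:, 0], v_zy[:, 1])))
    return A, k


def similarity_matrix(A, k):
    """2x2 matrix that rotates ZY-1-02C offsets by A and scales them by k."""
    c, s = np.cos(A), np.sin(A)
    return k * np.array([[c, -s], [s, c]])
```

Because translation cancels in the line vectors, the estimate is insensitive to a constant RPC positioning offset, which is the property the paragraph above relies on. The resulting matrix could then drive the resampling of the ZY-1-02C image onto the ETM+ grid with any image-warping routine.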

2.1.2. Estimation of Variation in Translation

OPC is a nonlinear frequency-domain correlation technique based on the Fourier shift property: a shift between two functions in the spatial domain results in a linear phase difference between their Fourier transforms (FTs), as shown in the following equations:

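$$ f_2(x, y) = f_1(x - x_0,\, y - y_0) $$

$$ F_2(u, v) = F_1(u, v)\, e^{-j 2\pi (u x_0 + v y_0)} $$

$$ \frac{F_2(u, v)\, F_1^{*}(u, v)}{\left| F_2(u, v)\, F_1^{*}(u, v) \right|} = e^{-j 2\pi (u x_0 + v y_0)} $$

where $f_1$ and $f_2$ are the two image functions in the spatial domain, $F_1$ and $F_2$ are their FTs in the frequency domain, $F_1^{*}$ is the complex conjugate of $F_1$, and $x_0$ and $y_0$ are the shift parameters [25]. The inverse FT of the normalized cross-power spectrum in the last equation is a Dirac delta located at $(x_0, y_0)$.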

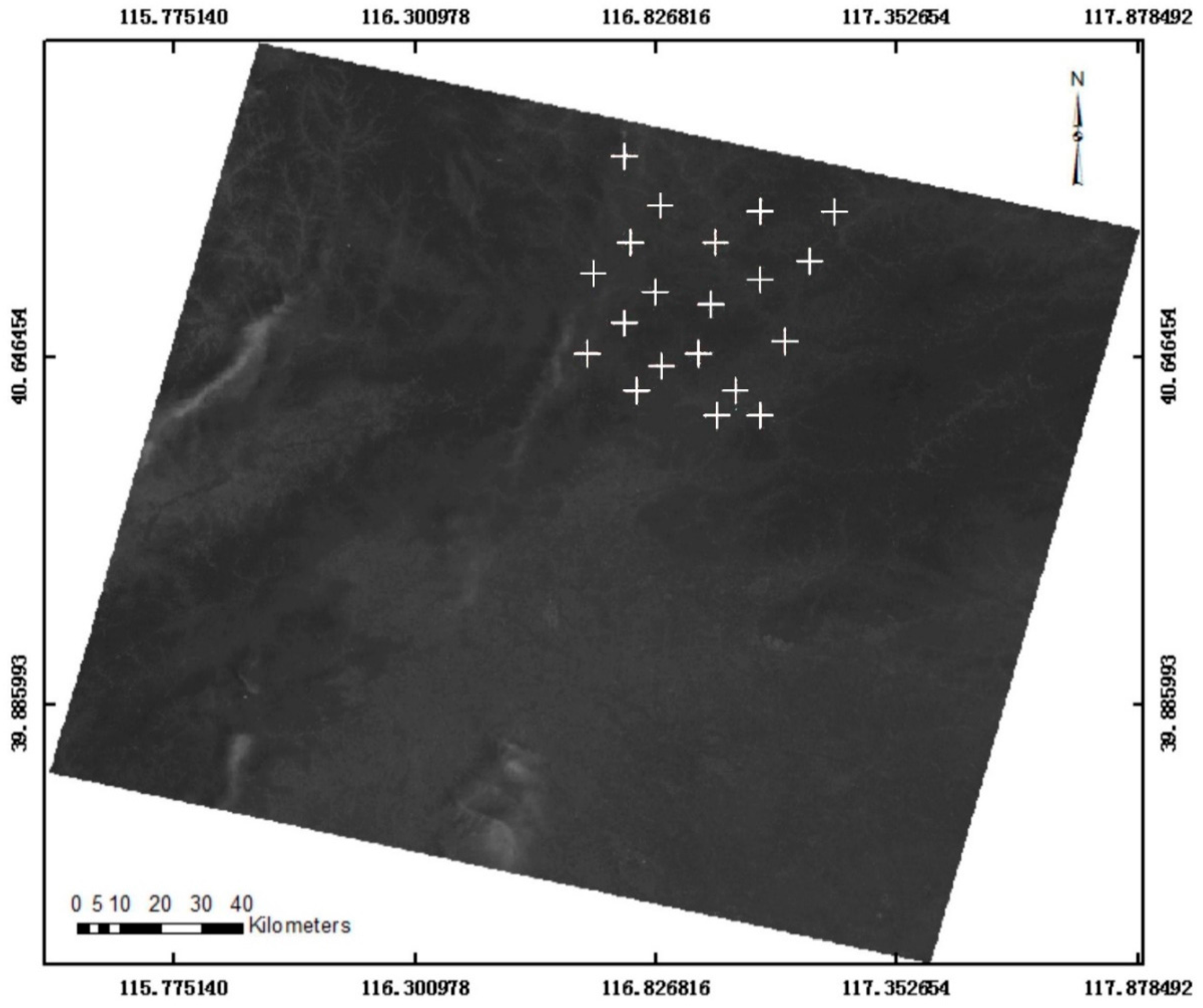

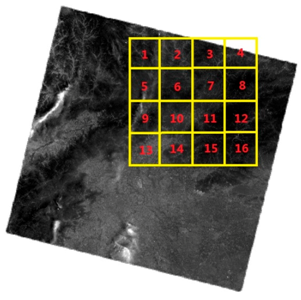

Between the resampled ZY-1-02C image and the ETM+ DOM, OPC yields only the overall translation parameters in the x and y directions, and a high level of accuracy is not required. Considering the complex matching conditions, an OPC accuracy of about 20 pixels is sufficient to initialize NCC. Given the large size of a scene (a ZY-1-02C scene is approximately 12,000 × 12,000 pixels), OPC is conducted on several image blocks of each scene to improve efficiency, and each block receives its own translation parameters for image matching.
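The phase correlation equations and the block-wise strategy above can be sketched with NumPy's FFT routines. This is an integer-pixel illustration, not the authors' implementation: it assumes both images are already resampled onto the same grid with the same shape, and the function names and the default block size of 1024 pixels are hypothetical choices.

```python
import numpy as np


def phase_correlation(f1, f2, eps=1e-12):
    """Integer shift (dy, dx) such that f2(y, x) ~= f1(y - dy, x - dx)."""
    F1 = np.fft.fft2(f1)
    F2 = np.fft.fft2(f2)
    cross = F2 * np.conj(F1)
    Q = cross / (np.abs(cross) + eps)          # normalized cross-power spectrum
    corr = np.real(np.fft.ifft2(Q))            # ideally a delta at the shift
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the half-size wrap around and correspond to negative shifts.
    dy, dx = (int(p) if p <= n // 2 else int(p) - n
              for p, n in zip(peak, corr.shape))
    return dy, dx


def blockwise_shifts(zy, etm, block=1024):
    """Phase-correlate co-located blocks of two same-sized images.

    Returns {(row0, col0): (dy, dx)} with etm(y, x) ~= zy(y - dy, x - dx),
    i.e. an ETM+ position is the ZY-1-02C position plus the block shift.
    Incomplete edge blocks are skipped.
    """
    shifts = {}
    rows, cols = zy.shape
    for r0 in range(0, rows - block + 1, block):
        for c0 in range(0, cols - block + 1, block):
            a = zy[r0:r0 + block, c0:c0 + block]
            b = etm[r0:r0 + block, c0:c0 + block]
            shifts[(r0, c0)] = phase_correlation(a, b)
    return shifts
```

Working per block keeps each FFT small and lets the shift vary slowly across a 12,000 × 12,000 scene; since only coarse shifts are needed, no sub-pixel peak interpolation is attempted.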

2.2. Improving Matching-Point Location Estimation by NCC and LSM

With the translation parameters obtained by OPC, the positions of the conjugate points were predicted, so the conjugate points could be searched for in a relatively small window around each predicted position. For remote sensing image matching with small rotation and scale differences and good initial values, Harris-supported NCC and LSM have been shown to be highly efficient and precise [38]. Thus, our approach adopted feature extraction based on the Harris detector and matching based on NCC and LSM for precise image matching [14,39]. The Harris detector is known for its speed and stability. We used it to extract distinct features and refined the feature points to sub-pixel level with the Förstner operator. To obtain an appropriate number of evenly distributed feature points, the image was divided into grid cells of 50 × 50 pixels, and the feature with the strongest Harris response in each cell was extracted. Because the goal is to improve the positioning precision of the original ZY-1-02C image, each feature point had to be traceable to that image. Therefore, feature points were extracted from the resampled ZY-1-02C image at positions that map back to the original image, and the corresponding points were then searched for in the ETM+ orthoimage. After feature extraction, the coordinates of the corresponding points on the ETM+ DOMs were estimated using the following equation:

$$ x_E = x_Z + \Delta x, \qquad y_E = y_Z + \Delta y $$

where $(x_E, y_E)$ is the point on the ETM+ DOM, $(x_Z, y_Z)$ is the feature point in the resampled ZY-1-02C image, and $(\Delta x, \Delta y)$ represents the translation parameters.
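The grid-based Harris selection described above can be sketched with NumPy alone. It is illustrative only: the Gaussian window (sigma = 1), the Harris constant 0.04, and the helper names are assumptions not given in the text, and the Förstner sub-pixel refinement is omitted.

```python
import numpy as np


def _smooth(img, sigma=1.0):
    """Separable Gaussian smoothing using only NumPy."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    g = np.exp(-x ** 2 / (2 * sigma ** 2))
    g /= g.sum()
    out = np.apply_along_axis(lambda r: np.convolve(r, g, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, g, mode="same"), 0, out)


def harris_response(img, sigma=1.0, kappa=0.04):
    """Harris corner measure det(M) - kappa * trace(M)^2 of the structure tensor M."""
    gy, gx = np.gradient(img.astype(float))   # derivatives along rows, then columns
    sxx = _smooth(gx * gx, sigma)
    syy = _smooth(gy * gy, sigma)
    sxy = _smooth(gx * gy, sigma)
    return sxx * syy - sxy * sxy - kappa * (sxx + syy) ** 2


def grid_features(img, cell=50, min_response=0.0):
    """Keep the strongest Harris response in each cell x cell block as (row, col)."""
    R = harris_response(img)
    feats = []
    for r0 in range(0, img.shape[0], cell):
        for c0 in range(0, img.shape[1], cell):
            block = R[r0:r0 + cell, c0:c0 + cell]
            r, c = np.unravel_index(np.argmax(block), block.shape)
            if block[r, c] > min_response:   # skip cells without any corner
                feats.append((int(r0 + r), int(c0 + c)))
    return feats
```

Taking one maximum per cell enforces the even spatial distribution the text asks for, at the cost of keeping weak corners in textureless cells; the `min_response` threshold is one simple guard against that.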

Only a small search window around the estimated position was needed to find the conjugate points, which ensured efficient and reliable NCC-based matching. To achieve sub-pixel matching precision, the corresponding points obtained by NCC were used as initial values for LSM. LSM, proposed by Ackermann [40], exploits the full information in the image window and uses a second-degree polynomial model in the adjustment calculation.
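The NCC step can be sketched as an exhaustive search in a small window around the position predicted from the OPC shift. This is a minimal integer-pixel sketch, not the authors' code: the window half-size of 7 pixels and the function names are hypothetical, the default search radius of 20 pixels mirrors the OPC accuracy quoted earlier, and the LSM refinement that follows in the text is not shown.

```python
import numpy as np


def ncc(a, b):
    """Normalized cross-correlation of two equally sized windows."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def ncc_search(zy, etm, pt, shift, half_win=7, search=20):
    """Search etm around pt + shift for the window most similar to zy at pt.

    pt: (row, col) feature in the resampled ZY-1-02C image
    shift: (dy, dx) translation of the block from OPC
    Returns ((row, col), score); the integer result would seed LSM.
    """
    r, c = pt
    tmpl = zy[r - half_win:r + half_win + 1, c - half_win:c + half_win + 1]
    pr, pc = r + shift[0], c + shift[1]          # predicted position x_E = x_Z + dx
    best_score, best_pos = -2.0, None
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            rr, cc = pr + dr, pc + dc
            if rr < half_win or cc < half_win:
                continue                         # window would leave the image
            win = etm[rr - half_win:rr + half_win + 1, cc - half_win:cc + half_win + 1]
            if win.shape != tmpl.shape:
                continue                         # clipped at the far border
            score = ncc(tmpl, win)
            if score > best_score:
                best_score, best_pos = score, (rr, cc)
    return best_pos, best_score
```

Because the search area shrinks from the whole scene to a (2 × 20 + 1)² neighborhood, the brute-force loop stays cheap per feature point.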

2.3. Finalizing the Matching Process by LSV

Differences in acquisition time and undulating terrain between the ZY-1-02C images and the ETM+ DOMs can cause errors in image matching. Such mismatches must be eliminated before the matches can be used to refine the RPCs of ZY-1-02C. However, they are difficult to eliminate when a single mathematical model is used to describe the image relationship directly. In most cases, the effects of these differences can be minimized by reducing the judging area to a relatively small region [27]. The local image relationship can then be accurately approximated on a small surface, as shown in Figure 3, and a transformation vector consistent with statistical laws can be fitted to the coordinate differences between correct matches on that surface.

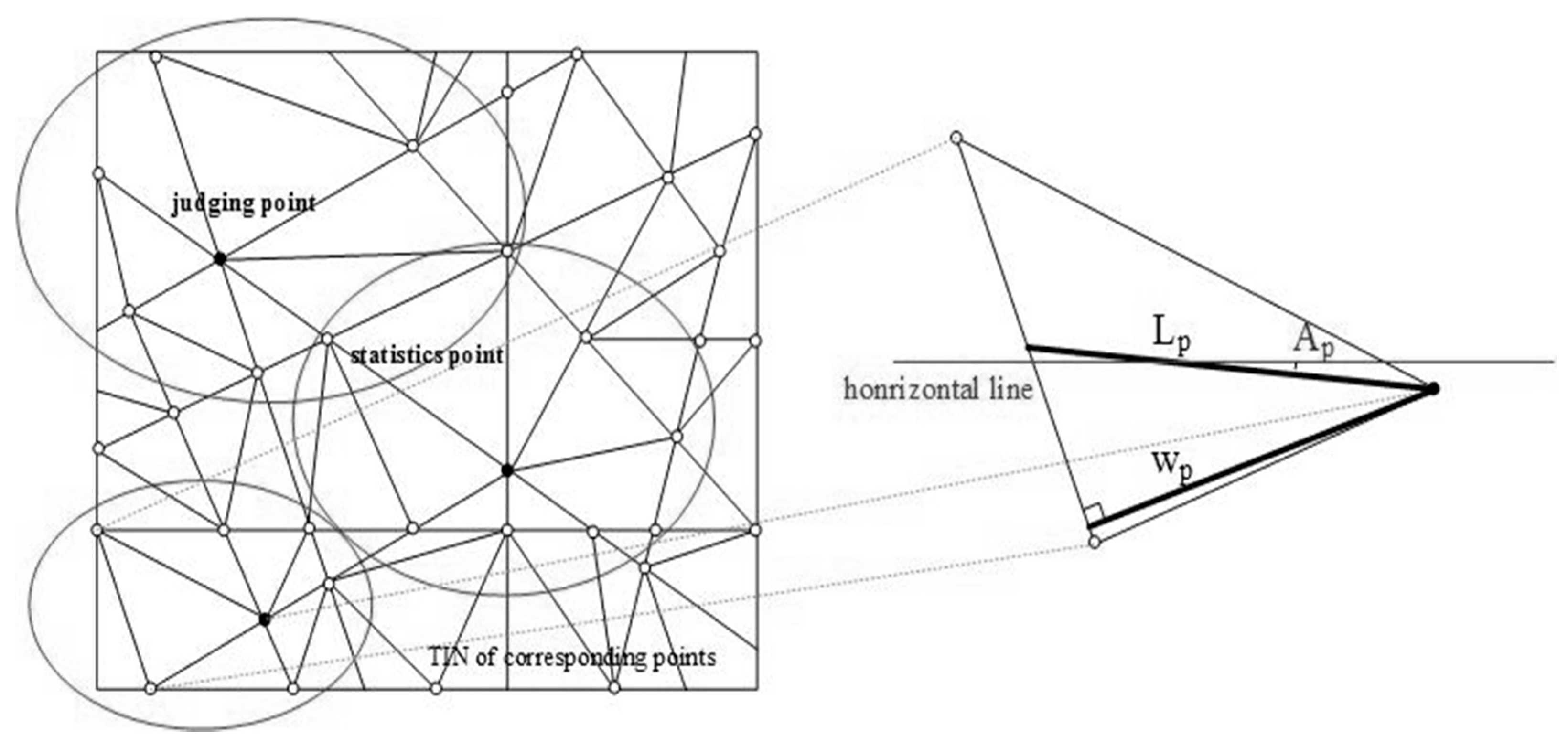

Figure 4 shows that, when the judging area is limited to a small local region, the relationships between corresponding points organized in a Triangulated Irregular Network (TIN) must be analogous in the two images. The median lines of the triangles in the TIN are used to build the LSV, which is composed of the length of the triangle median lines, the angle between the medians and the horizontal, and a distance-weight parameter. For each point to be judged, located at the center of a local facet, the LSV can be calculated from the corresponding point pairs in the triangle network. Reliability coefficients are obtained from the statistics of the points to be judged in the triangle networks of the left and right images, and points with gross errors are eliminated on the basis of a given reliability coefficient threshold.

In this situation, the corresponding points are compared for similarity one at a time. The vector differences of correct matches fit well between the two images, whereas those of mismatches do not. Weighting and voting strategies were designed to relate the surrounding points to the judging point, as shown in Equation (6), and the decision to eliminate the judging point as a gross error is given by Equation (7).

In Equation (6), the inputs are the LSV of the left image, the LSV of the right image, and the absolute value of the length difference of the vertical segment through the judging point, and the output is the judging value. In Equation (7), the judging value is compared with a threshold that determines whether the point is a gross error; this threshold is usually set to three times the root-mean-square error (RMSE) of the judging values.

Based on the preceding analysis, mismatches can be eliminated effectively using statistics of the LSV. The proposed error elimination procedure is as follows. (1) A TIN was constructed from the coordinates of the matched points using a divide-and-conquer algorithm, and the points in the TIN were then judged individually in the following steps. (2) Several nearest neighbors of the current judging point were collected as candidate points based on the TIN structure and selected according to their distance from the judging point; neighbors more than 300 pixels away were disregarded. (3) The LSV of the left and right images were calculated in a small local area based on the points selected in Step 2. The statistics were computed with Equation (5), and the current point was judged using Equation (6); this was repeated until every point pair had been judged. (4) After all points in the TIN had been traversed, Step 1 was repeated to build a new TIN from the remaining points. The process continued until the residual errors of all points met the requirements.
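The iterative procedure above can be approximated by a simplified local-consistency filter. This sketch is explicitly not the paper's LSV statistic: it swaps the TIN neighborhood for the k nearest neighbors (k = 6, an assumption) and compares each match's displacement with the inverse-distance-weighted mean displacement of its neighbors. It keeps the ingredients the text names: a 300-pixel neighbor cut-off, a 3 × RMSE threshold, and re-evaluation on the remaining points until no point is rejected. The function name is hypothetical.

```python
import numpy as np


def local_consistency_filter(left, right, k=6, max_dist=300.0, n_sigma=3.0, max_iter=10):
    """Iteratively reject matches whose displacement disagrees with their neighbors.

    left, right: (n, 2) matched coordinates in the two images.
    Returns a boolean mask of the matches that are kept.
    """
    left = np.asarray(left, dtype=float)
    disp = np.asarray(right, dtype=float) - left      # per-match displacement vector
    keep = np.ones(len(left), dtype=bool)
    for _ in range(max_iter):
        idx = np.flatnonzero(keep)
        pts = left[idx]
        dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=2)
        np.fill_diagonal(dist, np.inf)                # a point is not its own neighbor
        resid = np.full(len(idx), np.nan)
        for a in range(len(idx)):
            nb = np.argsort(dist[a])[:k]
            nb = nb[dist[a, nb] <= max_dist]          # disregard neighbors beyond 300 px
            if nb.size == 0:
                continue
            w = 1.0 / np.maximum(dist[a, nb], 1e-9)   # inverse-distance weights
            pred = (w[:, None] * disp[idx[nb]]).sum(axis=0) / w.sum()
            resid[a] = np.linalg.norm(disp[idx[a]] - pred)
        valid = ~np.isnan(resid)
        if not valid.any():
            break
        rmse = np.sqrt(np.mean(resid[valid] ** 2))
        bad = valid & (resid > n_sigma * rmse)        # 3 x RMSE rule
        if not bad.any():
            break
        keep[idx[bad]] = False                        # drop, then rebuild neighborhoods
    return keep
```

The brute-force distance matrix is quadratic in the number of matches; a Delaunay triangulation (as in the TIN-based original) or a spatial index would be the natural replacement for scene-sized point sets.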

4. Comparison with Other Methods

Experiments were conducted on a personal computer with an Intel® Core™ i3 M380 CPU @ 2.53 GHz and 4 GB of RAM, running 32-bit Windows 7; the software was compiled with Visual C++ 6.0.

A comparison of the SIFT, A-SIFT, and SURF algorithms is shown in Table 6. Dataset 2 (12,000 × 12,000 pixels) and Dataset 3 (15,321 × 14,921 pixels) were used for image matching. When the images to be matched are related by an affine transformation, that is, when rotation, scale, and translation differences exist simultaneously, the stability and accuracy of SIFT and SURF decrease. A-SIFT performs better under this condition but has the longest processing time. Our method showed the best performance in this experiment. The mismatched points were caused partly by changes in ground objects and partly by incorrect matching, and the gross error elimination algorithm proposed in this paper detected and eliminated these mismatched point pairs effectively.

5. Discussion

An AMSIM method with high precision and efficiency was proposed and applied to a case study on ZY-1-02C and ETM+ images. The advantageous characteristics of the proposed method include relatively independent initial matching value prediction models and an effective method to eliminate gross errors.

5.1. Image Matching and Error Elimination

Related studies have identified two representative problems in implementing AMSIM. The first is the correlation among rotation, scale, and translation in image matching. For multi-source images, the transformation between images is too complex for image matching to be implemented reliably. Numerous approaches, such as SIFT, SURF, and A-SIFT, have been used for multi-source image matching; however, they consume excessive memory and time and are also unreliable, which makes them technological bottlenecks for fully automatic image processing.

A rough estimation of the transformation between images was achieved via geographic information and OPC-based matching. The most important finding was that the geographic information of images could be used to search for tie points. Tie points were obtained in Datasets 2 and 3 to calculate

A and

k (

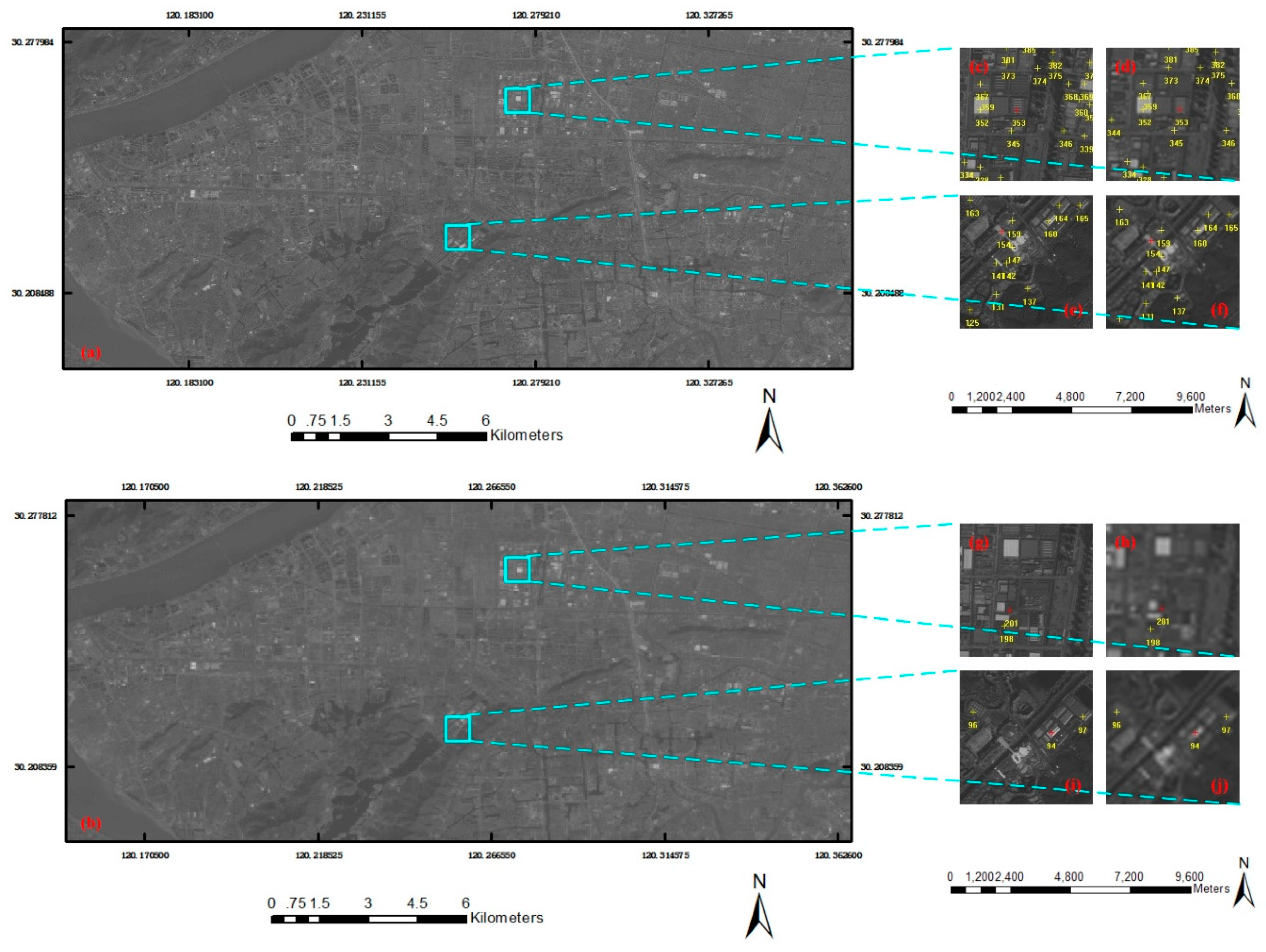

Table 4). The transformation parameters between the images that were calculated in this way were not very accurate, which we attribute to large topographic variations, image resampling, and processing noise. Given that the viewpoints changed drastically between ZY-1-02C and the DOM of ETM+, the relief parameters were available for the initial matching value prediction. After OPC-based matching, the search region was narrowed. The experimental results proved that the method can solve the problem of transformation between images. Thus, numerous evenly distributed corresponding points could be rapidly obtained and efficiently based on NCC and LSM. The results shown in

Figure 5 imply that sufficient conjugate points were obtained. In

Section 3.3.4, the matching accuracy was proven to be improved with this image matching strategy. The first experiment also showed that high accuracy matching between ZY-1-02C and ETM+ could only be obtained when the resolutions of the two images were 5 and 15 m.

The other problem in image matching is error elimination. In most engineering practices, an image matching result contains gross errors. Conventional processing of gross errors is generally categorized into two types: automatic and complex iterative computation in block adjustment [

41] and artificial error processing, which are obviously unsuitable for automation in photogrammetry. To solve the problem of automatic elimination of gross errors in image matching, a local statistical estimation, which differs from the approaches reported in most studies, was adopted in this study. Calculating the LSV of the judging points ensured that false matches were detected correctly from the matching results. In the second experiment, the false matches detected in Datasets 2 and 3 were evidently greater in number than the real errors because of the processing noise. Further, the false matches were eliminated in all the results. The high matching precision, which is shown in

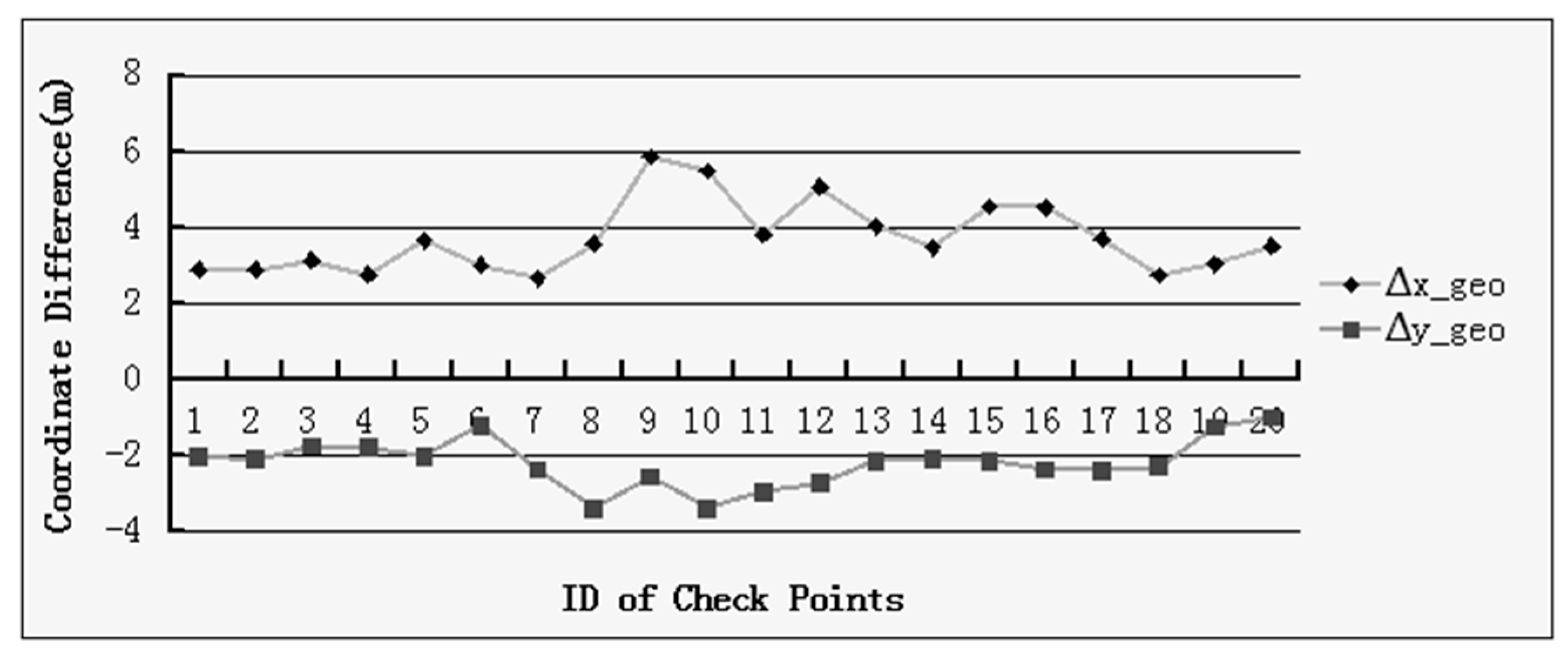

Figure 10, confirmed the accuracy and practicability of the proposed gross error elimination method.

Unlike related AMSIM approaches [7,42], this approach has two distinctive design features. First, RPC-based image resampling was applied to handle data with uncertain resolution. Second, the OPC-based matching strategy compensates for RPCs that contain positioning errors. The main purpose of this study was to achieve high global positioning accuracy for ZY-1-02C images using ETM+ DOMs as georeferences. However, the proposed method also has limitations: it cannot handle significant landscape changes between the input and reference images or resolution differences larger than a ratio of 1:6. In addition, when the positioning accuracies of the satellite images differ only slightly, NCC and LSM can be performed directly, and the corresponding points can easily be found using the geographic information of the images.

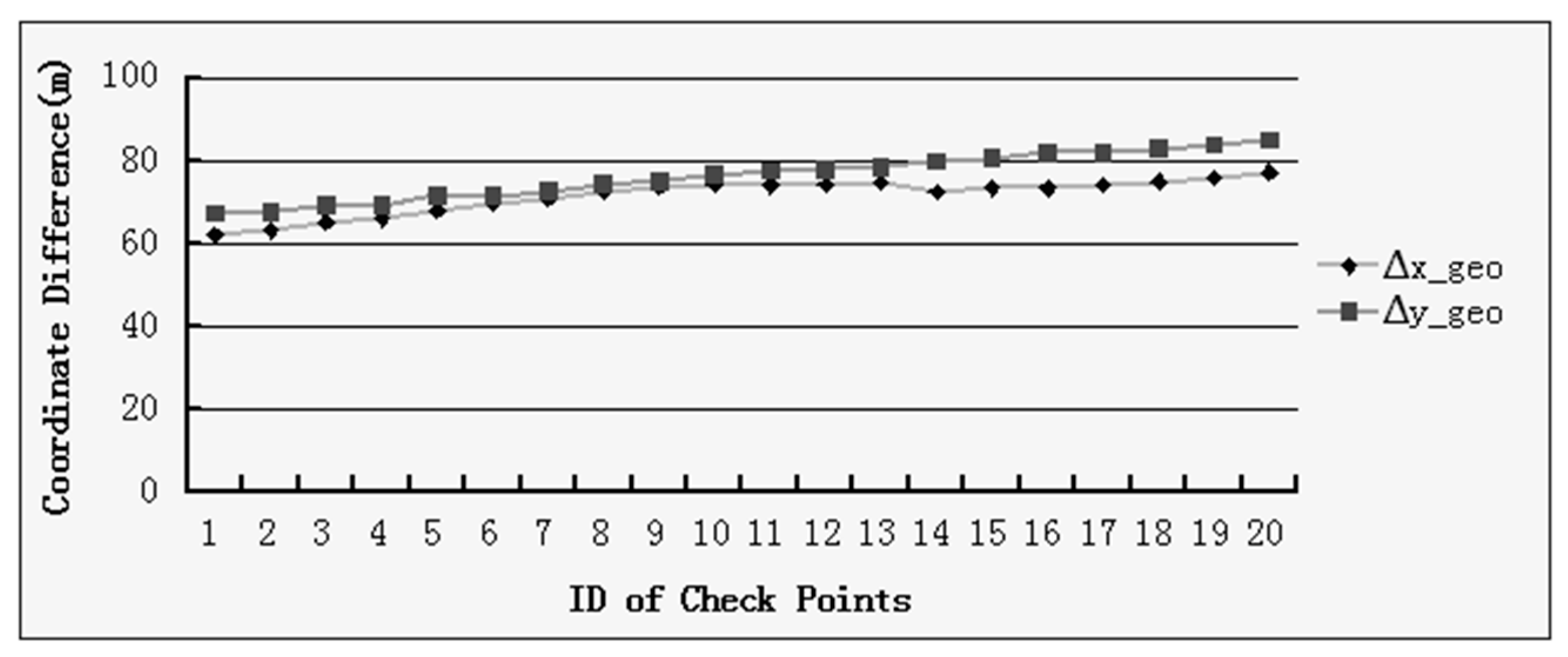

5.2. Accuracies, Errors, and Uncertainties

In the first experiment, different resolution discrepancies, with original-to-down-sampled ratios of 1:3 and 1:6, were selected for the matching tests. The comparative analysis showed that images with a larger resolution difference are more difficult to match. In the second experiment, 20 checkpoint pairs were manually selected to analyze matching accuracy. The original positioning precision of approximately 100 m was improved to within 8 m using the image matching results. In these tests, our method was more precise and efficient than conventional SIFT, SURF, and A-SIFT.
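The checkpoint evaluation reduces to simple arithmetic on residuals. A minimal sketch, assuming the checkpoint coordinates are already in a metric map projection (the text does not state the coordinate frame); the function and key names are hypothetical:

```python
import numpy as np


def checkpoint_accuracy(est_xy, ref_xy):
    """Accuracy statistics for checkpoint pairs given in metric map coordinates.

    est_xy: (n, 2) checkpoint positions (x, y) from the corrected ZY-1-02C image
    ref_xy: (n, 2) positions of the same checkpoints on the reference image
    """
    res = np.asarray(est_xy, dtype=float) - np.asarray(ref_xy, dtype=float)
    abs_res = np.abs(res)
    rmse_x = float(np.sqrt(np.mean(res[:, 0] ** 2)))
    rmse_y = float(np.sqrt(np.mean(res[:, 1] ** 2)))
    return {
        "rmse_x": rmse_x,
        "rmse_y": rmse_y,
        "rmse_plane": float(np.hypot(rmse_x, rmse_y)),   # planimetric RMSE
        "range_x": (float(abs_res[:, 0].min()), float(abs_res[:, 0].max())),
        "range_y": (float(abs_res[:, 1].min()), float(abs_res[:, 1].max())),
    }
```

Reporting per-axis ranges alongside the RMSE matches the way the x- and y-direction errors are summarized in the conclusions.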

The matching error in this study is mainly attributed to four factors. First, the two images were acquired on different dates. Second, the error estimation may fail: estimation based on local statistics may be insufficient when more than 20% of the matched points are erroneous. Third, image resampling, including the bilinear interpolation adopted in our approach, may introduce errors. Fourth, differences in the level of image rectification and the effect of terrain-induced projection errors may lead to mismatches.

The reported matching accuracy of the proposed method depends strongly on the checkpoints used for evaluation. The maximum resolution discrepancy in our test datasets was approximately a factor of three, and the first experiment leaves it uncertain whether the proposed approach can maintain accuracy at larger resolution differences. The georeference accuracy of the ETM+ DOM is also uncertain, because its geometric correction used a DEM of unknown accuracy.

6. Conclusions

The essence of image matching lies in the prediction model for corresponding points and the elimination of matching gross errors. In contrast to related studies, we designed dedicated processing for both problems. Calculating the rotation, scale, and translation parameters with relatively independent models provides stable prediction results. Using the RPCs to obtain the rotation and scale parameters led to satisfactory image positioning precision, and the translation parameters generated by OPC exploit the stability of overall correlation. During gross error elimination, the local facet vectors of the points to be judged are obtained statistically. Consequently, both local properties and overall statistics are taken into account, which avoids the failure of large-scale adjustment calculations caused by strong correlation among the observations.

As shown in the first experiment, the proposed method achieves precise matching even when the resolution of one image is three times that of the other. The accuracy and efficiency of this method were compared with those of SIFT, SURF, and A-SIFT, and the proposed method fully met the application requirements. The results indicated that SIFT and SURF produce more mismatched points and are less efficient than our method. The A-SIFT results are satisfactory; however, A-SIFT is time-consuming, which makes it unsuitable for automated processing. The matching results obtained by the proposed method improved the direct positioning precision from 100 m to better than 8 m. Compared with the reference image, the checkpoint position errors were between 2.7 and 6 m in the x direction and between 1 and 3.5 m in the y direction. These results verify the effectiveness of our matching strategy. The proposed method has several limitations that require further investigation. First, the TIN-based error elimination procedure could be simplified to improve processing efficiency. Second, the model used to calculate tie points for image resampling has many drawbacks that may introduce excessive noise. Future studies will focus on solving these problems.