2D Gaze Estimation Based on Pupil-Glint Vector Using an Artificial Neural Network

Abstract

1. Introduction

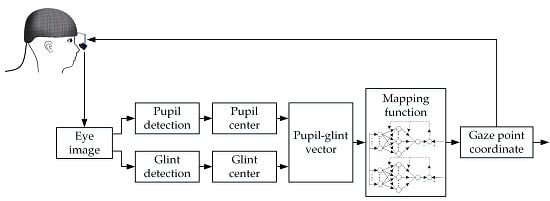

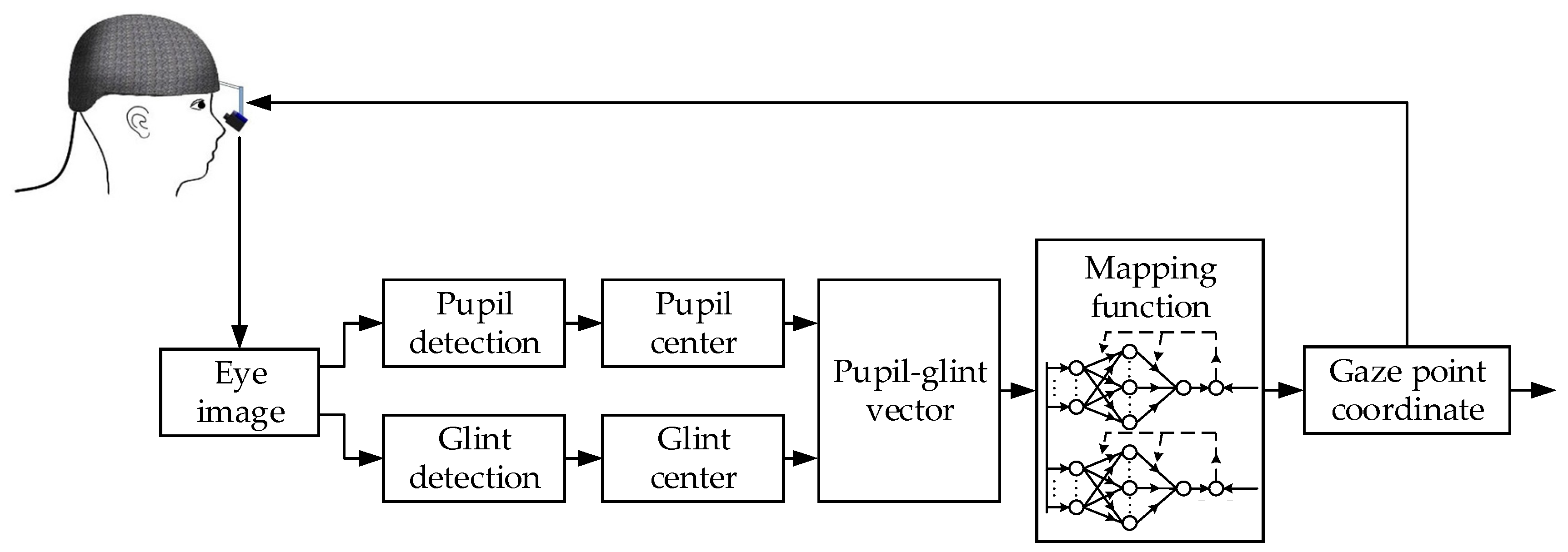

2. Proposed Methods for Gaze Estimation

3. Experimental System and Results

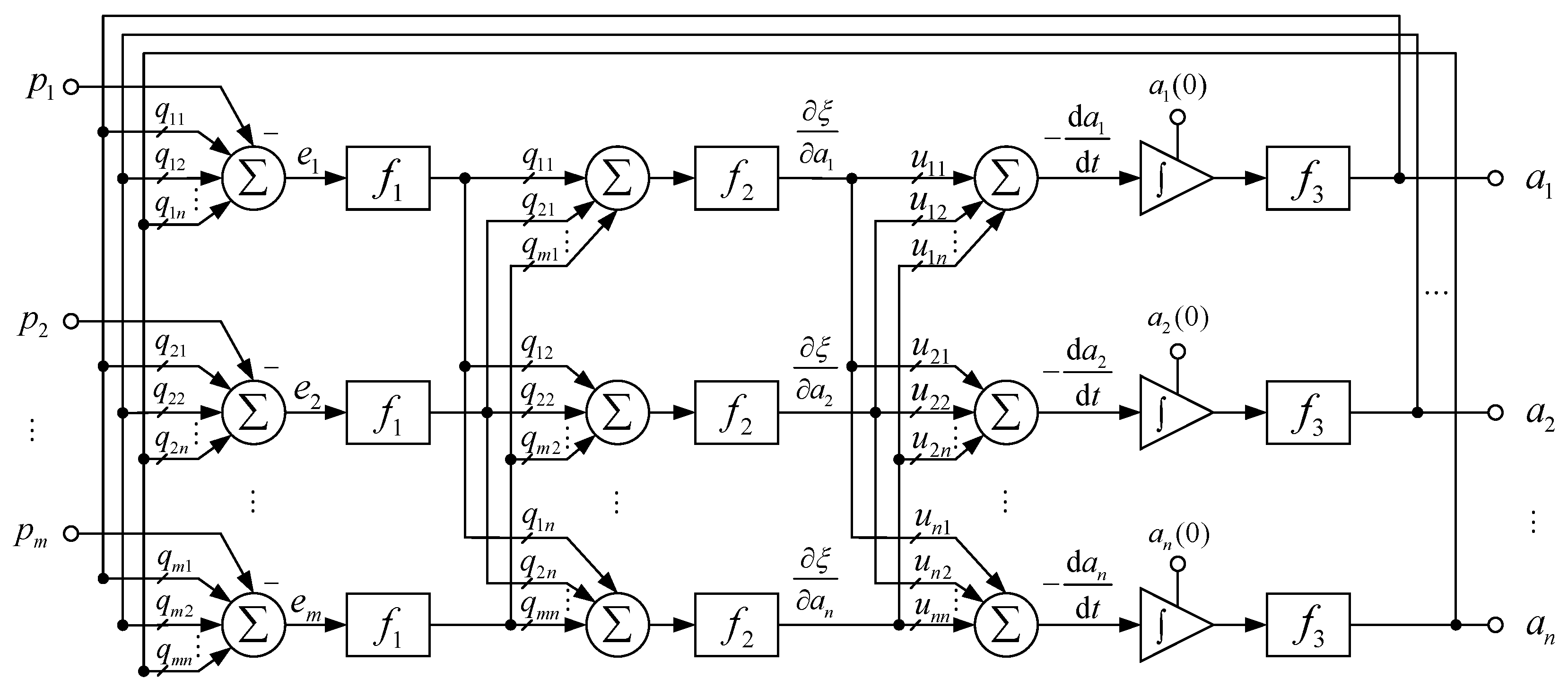

3.1. Experimental System

3.2. Pupil and Glint Detection

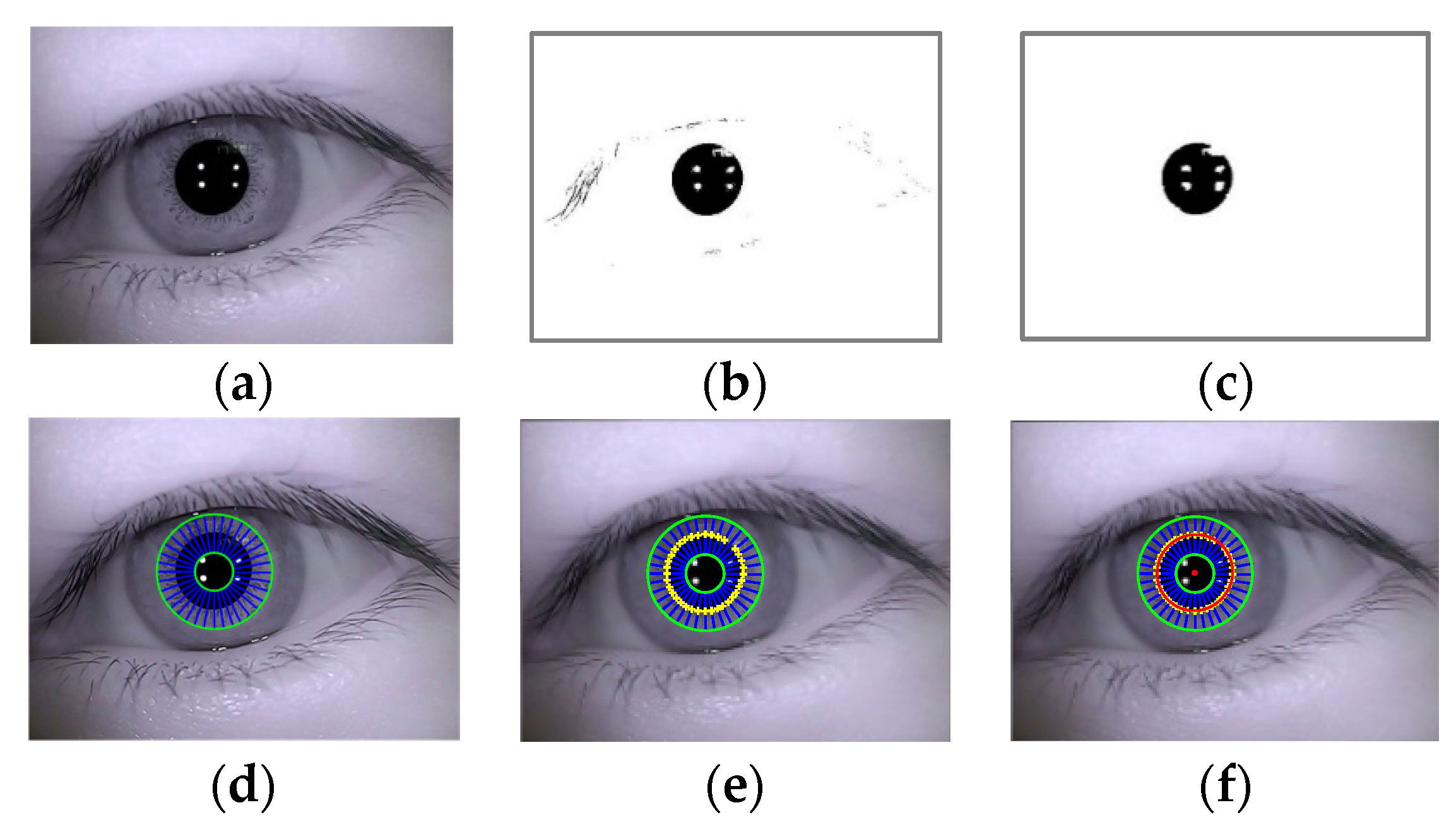

3.2.1. Pupil Detection

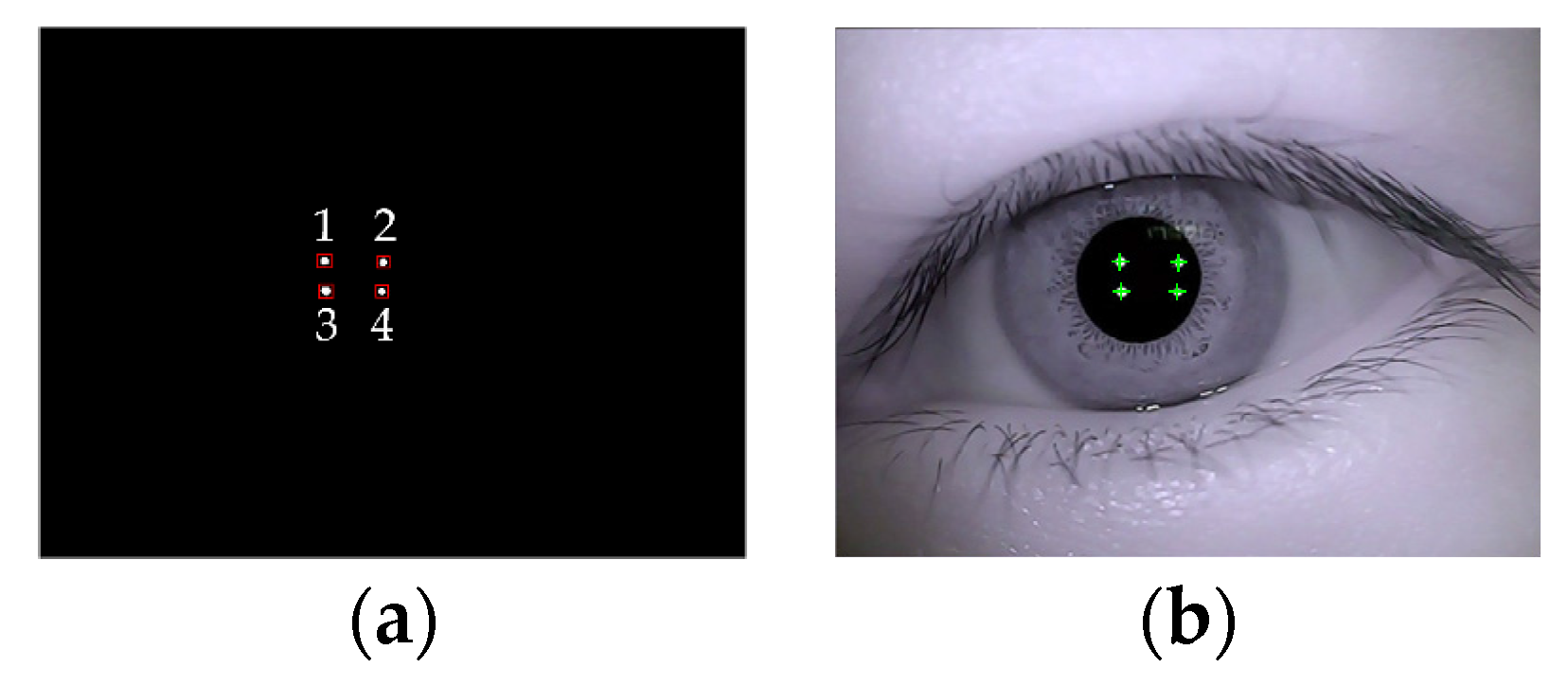

3.2.2. Glint Detection

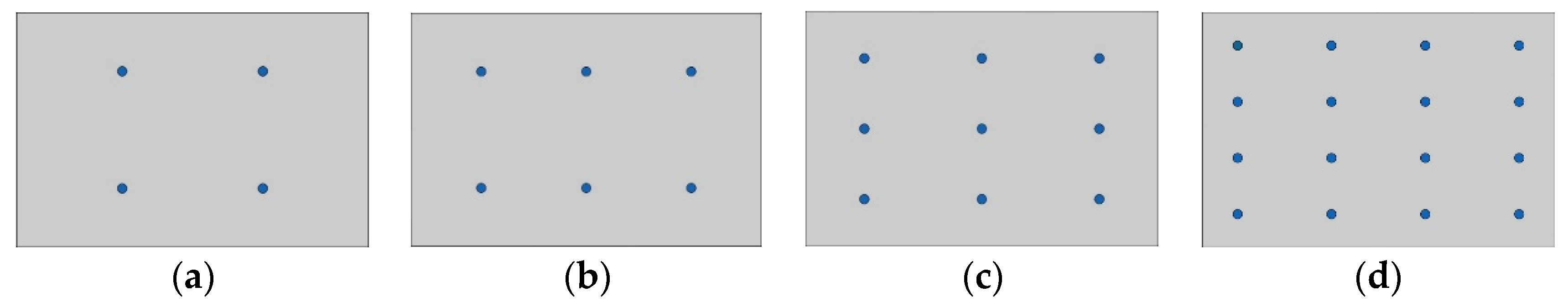

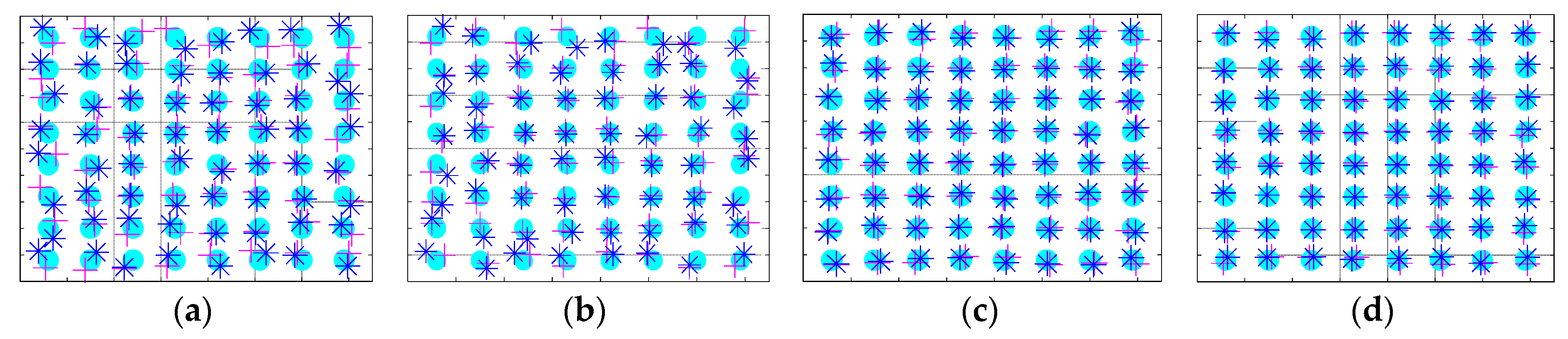

3.3. Calibration Model

3.4. Gaze Point Estimation

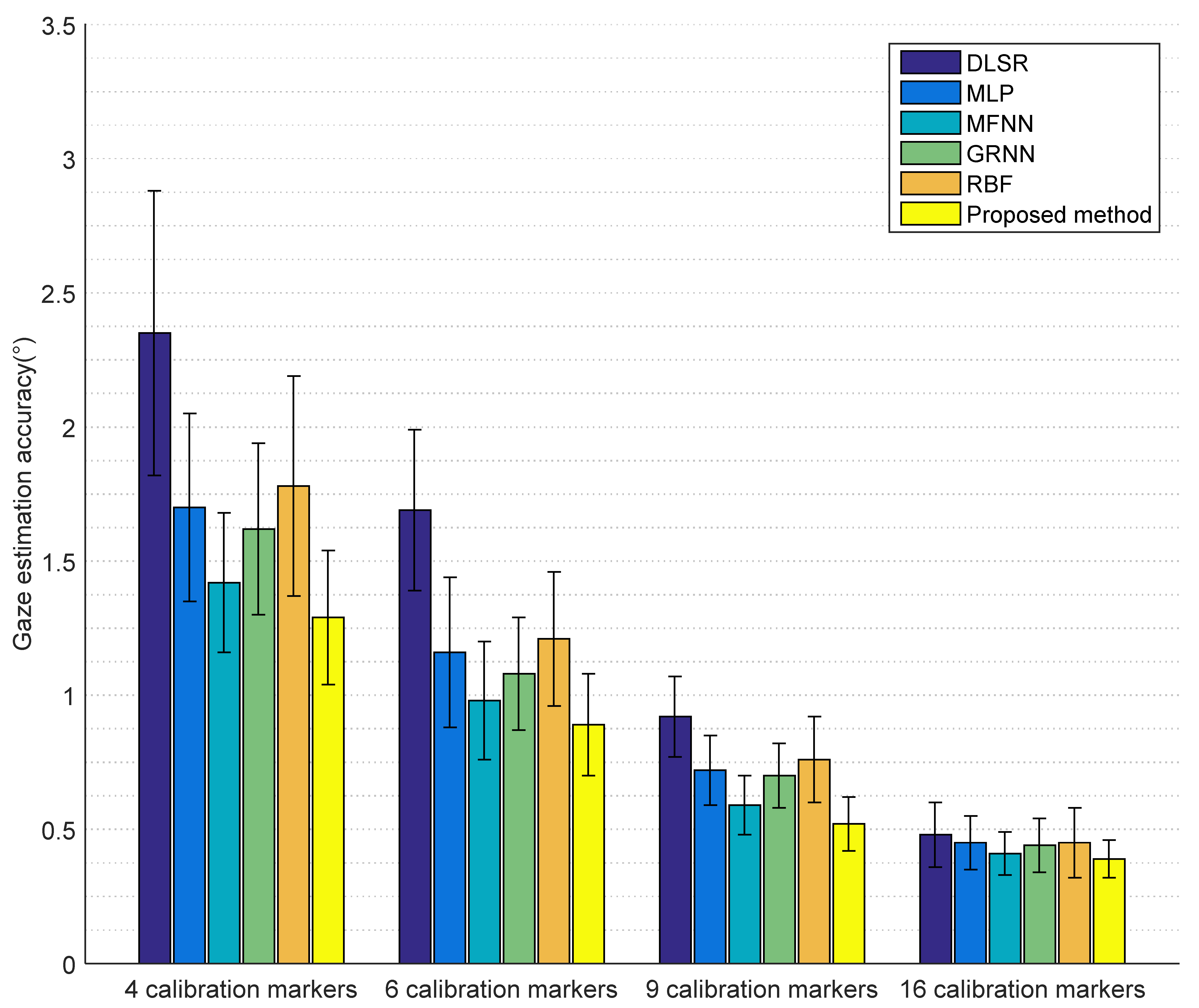

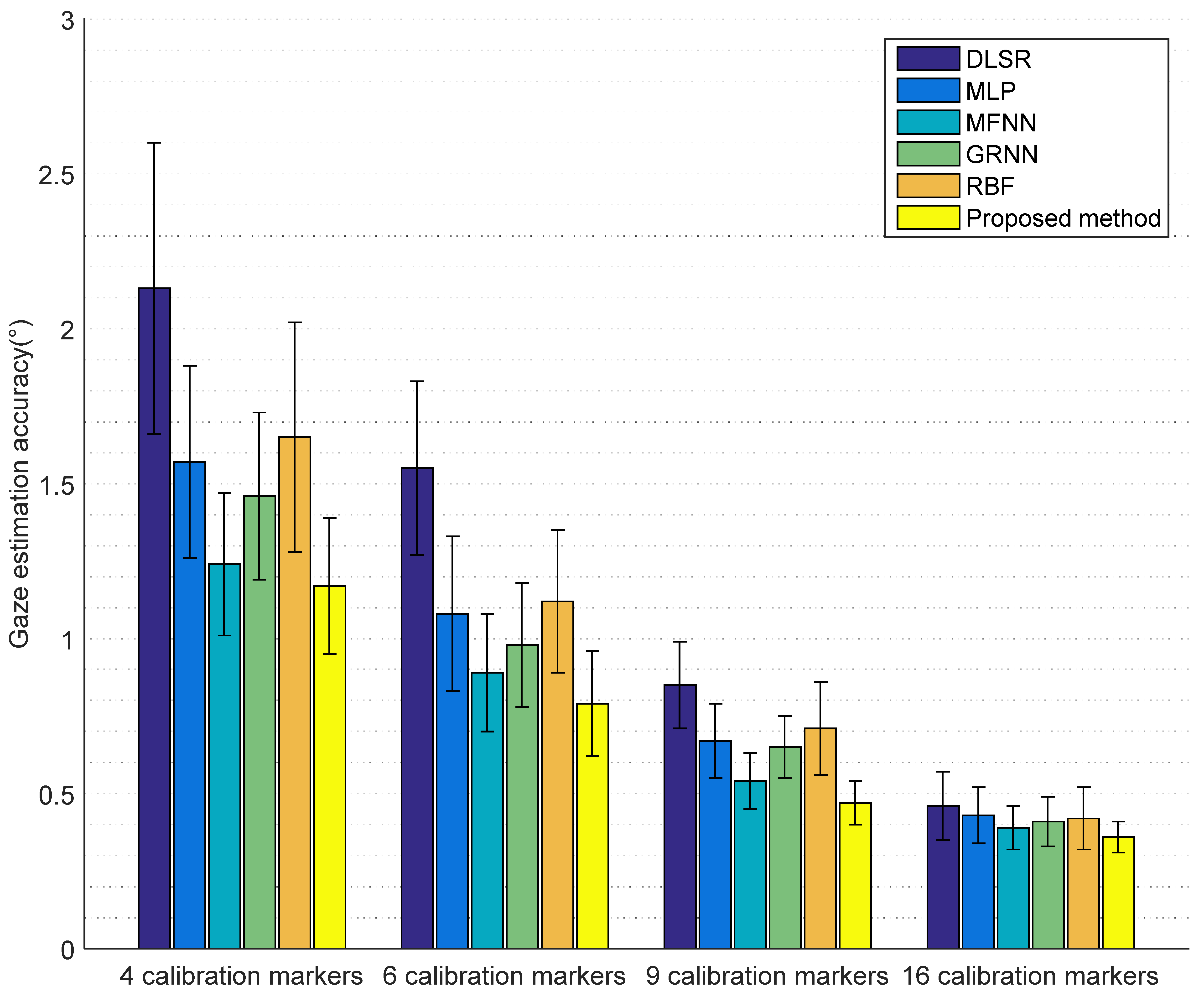

3.5. Gaze Estimation Accuracy Comparison of Different Methods

3.5.1. Gaze Estimation Accuracy without Considering Error Compensation

3.5.2. Gaze Estimation Accuracy Considering Error Compensation

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix

| Calibration Markers | Method | Subject 1 | Subject 2 | Subject 3 | Subject 4 | Subject 5 | Subject 6 | Average Error |
|---|---|---|---|---|---|---|---|---|
| 4 | DLSR [55] | 2.32° ± 0.54° | 2.41° ± 0.58° | 2.48° ± 0.62° | 2.24° ± 0.45° | 2.29° ± 0.48° | 2.37° ± 0.51° | 2.35° ± 0.53° |
|  | MLP [66] | 1.71° ± 0.35° | 1.74° ± 0.39° | 1.64° ± 0.32° | 1.72° ± 0.33° | 1.76° ± 0.40° | 1.62° ± 0.29° | 1.70° ± 0.35° |
|  | MFNN [67] | 1.38° ± 0.27° | 1.45° ± 0.30° | 1.43° ± 0.24° | 1.49° ± 0.29° | 1.34° ± 0.22° | 1.40° ± 0.25° | 1.42° ± 0.26° |
|  | GRNN [68] | 1.63° ± 0.32° | 1.52° ± 0.28° | 1.69° ± 0.35° | 1.72° ± 0.36° | 1.55° ± 0.30° | 1.61° ± 0.33° | 1.62° ± 0.32° |
|  | RBF [69] | 1.81° ± 0.43° | 1.92° ± 0.48° | 1.85° ± 0.44° | 1.74° ± 0.37° | 1.67° ± 0.33° | 1.72° ± 0.41° | 1.78° ± 0.41° |
|  | Proposed | 1.36° ± 0.24° | 1.28° ± 0.19° | 1.26° ± 0.31° | 1.31° ± 0.25° | 1.21° ± 0.30° | 1.32° ± 0.20° | 1.29° ± 0.25° |
| 6 | DLSR [55] | 1.68° ± 0.29° | 1.62° ± 0.25° | 1.64° ± 0.28° | 1.72° ± 0.31° | 1.71° ± 0.33° | 1.74° ± 0.31° | 1.69° ± 0.30° |
|  | MLP [66] | 1.15° ± 0.26° | 1.23° ± 0.33° | 1.17° ± 0.25° | 1.06° ± 0.24° | 1.10° ± 0.27° | 1.26° ± 0.35° | 1.16° ± 0.28° |
|  | MFNN [67] | 0.98° ± 0.22° | 0.96° ± 0.20° | 1.05° ± 0.27° | 1.03° ± 0.25° | 0.95° ± 0.18° | 0.93° ± 0.19° | 0.98° ± 0.22° |
|  | GRNN [68] | 1.07° ± 0.19° | 1.16° ± 0.27° | 1.02° ± 0.15° | 1.05° ± 0.19° | 1.12° ± 0.26° | 1.08° ± 0.18° | 1.08° ± 0.21° |
|  | RBF [69] | 1.20° ± 0.26° | 1.17° ± 0.24° | 1.23° ± 0.27° | 1.24° ± 0.29° | 1.15° ± 0.19° | 1.18° ± 0.25° | 1.21° ± 0.25° |
|  | Proposed | 0.88° ± 0.16° | 0.94° ± 0.19° | 0.78° ± 0.25° | 0.86° ± 0.14° | 0.92° ± 0.21° | 0.95° ± 0.18° | 0.89° ± 0.19° |
| 9 | DLSR [55] | 0.91° ± 0.15° | 0.89° ± 0.16° | 0.97° ± 0.18° | 0.96° ± 0.15° | 0.86° ± 0.13° | 0.94° ± 0.14° | 0.92° ± 0.15° |
|  | MLP [66] | 0.73° ± 0.13° | 0.78° ± 0.16° | 0.74° ± 0.16° | 0.67° ± 0.11° | 0.64° ± 0.10° | 0.75° ± 0.14° | 0.72° ± 0.13° |
|  | MFNN [67] | 0.58° ± 0.09° | 0.57° ± 0.12° | 0.64° ± 0.11° | 0.56° ± 0.14° | 0.59° ± 0.09° | 0.62° ± 0.13° | 0.59° ± 0.11° |
|  | GRNN [68] | 0.71° ± 0.11° | 0.74° ± 0.12° | 0.77° ± 0.16° | 0.65° ± 0.09° | 0.64° ± 0.10° | 0.67° ± 0.12° | 0.70° ± 0.12° |
|  | RBF [69] | 0.77° ± 0.17° | 0.72° ± 0.14° | 0.84° ± 0.21° | 0.80° ± 0.20° | 0.76° ± 0.15° | 0.70° ± 0.12° | 0.76° ± 0.16° |
|  | Proposed | 0.51° ± 0.08° | 0.49° ± 0.09° | 0.48° ± 0.12° | 0.56° ± 0.10° | 0.51° ± 0.11° | 0.47° ± 0.07° | 0.52° ± 0.10° |
| 16 | DLSR [55] | 0.50° ± 0.12° | 0.47° ± 0.10° | 0.49° ± 0.13° | 0.48° ± 0.15° | 0.49° ± 0.09° | 0.51° ± 0.14° | 0.48° ± 0.12° |
|  | MLP [66] | 0.44° ± 0.11° | 0.48° ± 0.13° | 0.49° ± 0.11° | 0.46° ± 0.09° | 0.44° ± 0.10° | 0.46° ± 0.08° | 0.45° ± 0.10° |
|  | MFNN [67] | 0.39° ± 0.09° | 0.42° ± 0.08° | 0.44° ± 0.12° | 0.39° ± 0.07° | 0.40° ± 0.07° | 0.42° ± 0.08° | 0.41° ± 0.08° |
|  | GRNN [68] | 0.46° ± 0.12° | 0.41° ± 0.09° | 0.45° ± 0.10° | 0.47° ± 0.13° | 0.40° ± 0.08° | 0.43° ± 0.11° | 0.44° ± 0.10° |
|  | RBF [69] | 0.48° ± 0.15° | 0.46° ± 0.13° | 0.41° ± 0.11° | 0.42° ± 0.12° | 0.46° ± 0.14° | 0.44° ± 0.15° | 0.45° ± 0.13° |
|  | Proposed | 0.36° ± 0.06° | 0.42° ± 0.09° | 0.38° ± 0.08° | 0.40° ± 0.07° | 0.43° ± 0.08° | 0.37° ± 0.06° | 0.39° ± 0.07° |

| Calibration Markers | Method | Subject 1 | Subject 2 | Subject 3 | Subject 4 | Subject 5 | Subject 6 | Average Error |
|---|---|---|---|---|---|---|---|---|
| 4 | DLSR [55] | 2.11° ± 0.48° | 2.20° ± 0.52° | 2.24° ± 0.55° | 2.03° ± 0.40° | 2.06° ± 0.42° | 2.15° ± 0.44° | 2.13° ± 0.47° |
|  | MLP [66] | 1.56° ± 0.31° | 1.64° ± 0.35° | 1.52° ± 0.28° | 1.58° ± 0.30° | 1.63° ± 0.36° | 1.51° ± 0.26° | 1.57° ± 0.31° |
|  | MFNN [67] | 1.23° ± 0.24° | 1.21° ± 0.26° | 1.28° ± 0.21° | 1.26° ± 0.25° | 1.18° ± 0.20° | 1.25° ± 0.22° | 1.24° ± 0.23° |
|  | GRNN [68] | 1.48° ± 0.29° | 1.37° ± 0.22° | 1.45° ± 0.31° | 1.57° ± 0.28° | 1.41° ± 0.26° | 1.49° ± 0.28° | 1.46° ± 0.27° |
|  | RBF [69] | 1.65° ± 0.39° | 1.77° ± 0.43° | 1.79° ± 0.40° | 1.54° ± 0.34° | 1.52° ± 0.29° | 1.61° ± 0.36° | 1.65° ± 0.37° |
|  | Proposed | 1.23° ± 0.21° | 1.17° ± 0.18° | 1.14° ± 0.26° | 1.18° ± 0.22° | 1.09° ± 0.28° | 1.21° ± 0.19° | 1.17° ± 0.22° |
| 6 | DLSR [55] | 1.54° ± 0.28° | 1.49° ± 0.23° | 1.51° ± 0.26° | 1.57° ± 0.30° | 1.56° ± 0.31° | 1.61° ± 0.29° | 1.55° ± 0.28° |
|  | MLP [66] | 1.06° ± 0.24° | 1.15° ± 0.30° | 1.08° ± 0.21° | 1.01° ± 0.22° | 1.03° ± 0.23° | 1.14° ± 0.29° | 1.08° ± 0.25° |
|  | MFNN [67] | 0.87° ± 0.21° | 0.88° ± 0.18° | 0.96° ± 0.23° | 0.94° ± 0.21° | 0.86° ± 0.16° | 0.84° ± 0.17° | 0.89° ± 0.19° |
|  | GRNN [68] | 0.95° ± 0.19° | 1.05° ± 0.24° | 0.91° ± 0.15° | 0.94° ± 0.19° | 1.01° ± 0.23° | 0.99° ± 0.18° | 0.98° ± 0.20° |
|  | RBF [69] | 1.11° ± 0.23° | 1.09° ± 0.21° | 1.15° ± 0.25° | 1.14° ± 0.27° | 1.07° ± 0.18° | 1.18° ± 0.22° | 1.12° ± 0.23° |
|  | Proposed | 0.78° ± 0.13° | 0.82° ± 0.17° | 0.71° ± 0.23° | 0.73° ± 0.12° | 0.81° ± 0.20° | 0.87° ± 0.17° | 0.79° ± 0.17° |
| 9 | DLSR [55] | 0.84° ± 0.14° | 0.81° ± 0.15° | 0.89° ± 0.17° | 0.88° ± 0.13° | 0.80° ± 0.12° | 0.86° ± 0.13° | 0.85° ± 0.14° |
|  | MLP [66] | 0.68° ± 0.12° | 0.74° ± 0.14° | 0.70° ± 0.15° | 0.62° ± 0.10° | 0.61° ± 0.09° | 0.69° ± 0.12° | 0.67° ± 0.12° |
|  | MFNN [67] | 0.53° ± 0.08° | 0.52° ± 0.10° | 0.60° ± 0.09° | 0.51° ± 0.11° | 0.54° ± 0.08° | 0.56° ± 0.09° | 0.54° ± 0.09° |
|  | GRNN [68] | 0.66° ± 0.09° | 0.69° ± 0.11° | 0.71° ± 0.15° | 0.61° ± 0.08° | 0.60° ± 0.09° | 0.62° ± 0.10° | 0.65° ± 0.10° |
|  | RBF [69] | 0.71° ± 0.16° | 0.66° ± 0.13° | 0.78° ± 0.18° | 0.73° ± 0.19° | 0.70° ± 0.14° | 0.65° ± 0.11° | 0.71° ± 0.15° |
|  | Proposed | 0.46° ± 0.07° | 0.45° ± 0.06° | 0.47° ± 0.10° | 0.51° ± 0.08° | 0.48° ± 0.09° | 0.43° ± 0.05° | 0.47° ± 0.07° |
| 16 | DLSR [55] | 0.43° ± 0.09° | 0.48° ± 0.12° | 0.45° ± 0.10° | 0.43° ± 0.09° | 0.49° ± 0.13° | 0.46° ± 0.10° | 0.46° ± 0.11° |
|  | MLP [66] | 0.47° ± 0.11° | 0.42° ± 0.09° | 0.40° ± 0.08° | 0.44° ± 0.07° | 0.45° ± 0.10° | 0.41° ± 0.11° | 0.43° ± 0.09° |
|  | MFNN [67] | 0.36° ± 0.08° | 0.41° ± 0.07° | 0.38° ± 0.05° | 0.40° ± 0.09° | 0.41° ± 0.06° | 0.38° ± 0.08° | 0.39° ± 0.07° |
|  | GRNN [68] | 0.42° ± 0.11° | 0.39° ± 0.07° | 0.42° ± 0.10° | 0.38° ± 0.06° | 0.41° ± 0.06° | 0.44° ± 0.10° | 0.41° ± 0.08° |
|  | RBF [69] | 0.38° ± 0.09° | 0.44° ± 0.12° | 0.45° ± 0.13° | 0.43° ± 0.09° | 0.39° ± 0.08° | 0.41° ± 0.11° | 0.42° ± 0.10° |
|  | Proposed | 0.33° ± 0.05° | 0.35° ± 0.04° | 0.39° ± 0.06° | 0.38° ± 0.08° | 0.35° ± 0.04° | 0.37° ± 0.05° | 0.36° ± 0.05° |

References

- Jacob, R.J.K. What you look at is what you get: Eye movement-based interaction techniques. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Seattle, WA, USA, 1–5 April 1990; pp. 11–18.

- Jacob, R.J.K. The use of eye movements in human-computer interaction techniques: What you look at is what you get. ACM Trans. Inf. Syst. 1991, 9, 152–169.

- Schütz, A.C.; Braun, D.I.; Gegenfurtner, K.R. Eye movements and perception: A selective review. J. Vis. 2011, 11, 89–91.

- Miriam, S.; Anna, M. Do we track what we see? Common versus independent processing for motion perception and smooth pursuit eye movements: A review. Vis. Res. 2011, 51, 836–852.

- Blondon, K.; Wipfli, R.; Lovis, C. Use of eye-tracking technology in clinical reasoning: A systematic review. Stud. Health Technol. Inform. 2015, 210, 90–94.

- Higgins, E.; Leinenger, M.; Rayner, K. Eye movements when viewing advertisements. Front. Psychol. 2014, 5, 1–15.

- Spakov, O.; Majaranta, P.; Spakov, O. Scrollable keyboards for casual eye typing. Psychol. J. 2009, 7, 159–173.

- Noureddin, B.; Lawrence, P.D.; Man, C.F. A non-contact device for tracking gaze in a human computer interface. Comput. Vis. Image Underst. 2005, 98, 52–82.

- Biswas, P.; Langdon, P. Multimodal intelligent eye-gaze tracking system. Int. J. Hum. Comput. Interact. 2015, 31, 277–294.

- Lim, C.J.; Kim, D. Development of gaze tracking interface for controlling 3d contents. Sens. Actuator A-Phys. 2012, 185, 151–159.

- Ince, I.F.; Jin, W.K. A 2D eye gaze estimation system with low-resolution webcam images. EURASIP J. Adv. Signal Process. 2011, 1, 589–597.

- Kumar, N.; Kohlbecher, S.; Schneider, E. A novel approach to video-based pupil tracking. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Hyatt Regency Riverwalk, San Antonio, TX, USA, 11–14 October 2009; pp. 1255–1262.

- Lee, E.C.; Min, W.P. A new eye tracking method as a smartphone interface. KSII Trans. Internet Inf. Syst. 2013, 7, 834–848.

- Kim, J. Webcam-based 2D eye gaze estimation system by means of binary deformable eyeball templates. J. Inform. Commun. Converg. Eng. 2010, 8, 575–580.

- Dong, L. Investigation of Calibration Techniques in video based eye tracking system. In Proceedings of the 11th international conference on Computers Helping People with Special Needs, Linz, Austria, 9–11 July 2008; pp. 1208–1215.

- Fard, P.J.M.; Moradi, M.H.; Parvaneh, S. Eye tracking using a novel approach. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering 2006, Seoul, Korea, 27 August–1 September 2006; pp. 2407–2410.

- Yamazoe, H.; Utsumi, A.; Yonezawa, T.; Abe, S. Remote gaze estimation with a single camera based on facial-feature tracking without special calibration actions. In Proceedings of the 2008 symposium on Eye tracking research and applications, Savannah, GA, USA, 26–28 March 2008; pp. 245–250.

- Lee, E.C.; Kang, R.P.; Min, C.W.; Park, J. Robust gaze tracking method for stereoscopic virtual reality systems. Hum.-Comput. Interact. 2007, 4552, 700–709.

- Lee, H.C.; Lee, W.O.; Cho, C.W.; Gwon, S.Y.; Park, K.R.; Lee, H.; Cha, J. Remote gaze tracking system on a large display. Sensors 2013, 13, 13439–13463.

- Ohno, T.; Mukawa, N.; Yoshikawa, A. Free gaze: A gaze tracking system for everyday gaze interaction. In Proceedings of the Symposium on Eye Tracking Research and Applications Symposium, New Orleans, LA, USA, 25–27 March 2002; pp. 125–132.

- Chen, J.; Ji, Q. 3D gaze estimation with a single camera without IR illumination. In Proceedings of the International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

- Sheng-Wen, S.; Jin, L. A novel approach to 3D gaze tracking using stereo cameras. IEEE Trans. Syst. Man Cybern. Part B-Cybern. 2004, 34, 234–245.

- Ki, J.; Kwon, Y.M.; Sohn, K. 3D gaze tracking and analysis for attentive Human Computer Interaction. In Proceedings of the Frontiers in the Convergence of Bioscience and Information Technologies, Jeju, Korea, 11–13 October 2007; pp. 617–621.

- Ji, W.L.; Cho, C.W.; Shin, K.Y.; Lee, E.C.; Park, K.R. 3D gaze tracking method using Purkinje images on eye optical model and pupil. Opt. Lasers Eng. 2012, 50, 736–751.

- Lee, E.C.; Kang, R.P. A robust eye gaze tracking method based on a virtual eyeball model. Mach. Vis. Appl. 2009, 20, 319–337.

- Wang, J.G.; Sung, E.; Venkateswarlu, R. Estimating the eye gaze from one eye. Comput. Vis. Image Underst. 2005, 98, 83–103.

- Ryoung, P.K. A real-time gaze position estimation method based on a 3-d eye model. IEEE Trans. Syst. Man Cybern. Part B-Cybern. 2007, 37, 199–212.

- Topal, C.; Dogan, A.; Gerek, O.N. A wearable head-mounted sensor-based apparatus for eye tracking applications. In Proceedings of the IEEE Conference on Virtual Environments, Human-computer Interfaces and Measurement Systems, Istanbul, Turkey, 14–16 July 2008; pp. 136–139.

- Ville, R.; Toni, V.; Outi, T.; Niemenlehto, P.-H.; Verho, J.; Surakka, V.; Juhola, M.; Lekkahla, J. A wearable, wireless gaze tracker with integrated selection command source for human-computer interaction. IEEE Trans. Inf. Technol. Biomed. 2011, 15, 795–801.

- Noris, B.; Keller, J.B.; Billard, A. A wearable gaze tracking system for children in unconstrained environments. Comput. Vis. Image Underst. 2011, 115, 476–486.

- Stengel, M.; Grogorick, S.; Eisemann, M.; Eisemann, E.; Magnor, M. An affordable solution for binocular eye tracking and calibration in head-mounted displays. In Proceedings of the ACM Multimedia conference for 2015, Brisbane, Queensland, Australia, 26–30 October 2015; pp. 15–24.

- Takemura, K.; Takahashi, K.; Takamatsu, J.; Ogasawara, T. Estimating 3-D point-of-regard in a real environment using a head-mounted eye-tracking system. IEEE Trans. Hum.-Mach. Syst. 2014, 44, 531–536.

- Schneider, E.; Dera, T.; Bard, K.; Bardins, S.; Boening, G.; Brand, T. Eye movement driven head-mounted camera: It looks where the eyes look. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Waikoloa, HI, USA, 12 October 2005; pp. 2437–2442.

- Min, Y.K.; Yang, S.; Kim, D. Head-mounted binocular gaze detection for selective visual recognition systems. Sens. Actuator A-Phys. 2012, 187, 29–36.

- Fuhl, W.; Kübler, T.; Sippel, K.; Rosenstiel, W.; Kasneci, E. Excuse: Robust pupil detection in real-world scenarios. In Proceedings of the 16th International Conference on Computer Analysis of Images and Patterns (CAIP), Valletta, Malta, 2–4 September 2015; pp. 39–51.

- Fuhl, W.; Santini, T.; Kasneci, G.; Kasneci, E. PupilNet: Convolutional Neural Networks for Robust Pupil Detection. arXiv preprint arXiv:1601.04902. Available online: http://arxiv.org/abs/1601.04902 (accessed on 19 January 2016).

- Fuhl, W.; Santini, T.; Kübler, T.; Kasneci, E. ElSe: Ellipse selection for robust pupil detection in real-world environments. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications (ETRA '16), Charleston, SC, USA, 14–17 March 2016; pp. 123–130.

- Dong, H.Y.; Chung, M.J. A novel non-intrusive eye gaze estimation using cross-ratio under large head motion. Comput. Vis. Image Underst. 2005, 98, 25–51.

- Mohammadi, M.R.; Raie, A. Selection of unique gaze direction based on pupil position. IET Comput. Vis. 2013, 7, 238–245.

- Arantxa, V.; Rafael, C. A novel gaze estimation system with one calibration point. IEEE Trans. Syst. Man Cybern. Part B-Cybern. 2008, 38, 1123–1138.

- Coutinho, F.L.; Morimoto, C.H. Free head motion eye gaze tracking using a single camera and multiple light sources. In Proceedings of the SIBGRAPI Conference on Graphics, Patterns and Images, Manaus, Amazon, Brazil, 8–11 October 2006; pp. 171–178.

- Beymer, D.; Flickner, M. Eye gaze tracking using an active stereo head. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; pp. 451–458.

- Zhu, Z.; Ji, Q. Eye gaze tracking under natural head movements. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 20–25 June 2005; pp. 918–923.

- Magee, J.J.; Betke, M.; Gips, J.; Scott, M.R. A human–computer interface using symmetry between eyes to detect gaze direction. IEEE Trans. Syst. Man Cybern. Part A-Syst. Hum. 2008, 38, 1248–1261.

- Scott, D.; Findlay, J.M. Visual Search, Eye Movements and Display Units; IBM UK Hursley Human Factors Laboratory: Winchester, UK, 1991.

- Wen, Z.; Zhang, T.N.; Chang, S.J. Eye gaze estimation from the elliptical features of one iris. Opt. Eng. 2011, 50.

- Wang, J.G.; Sung, E. Gaze determination via images of irises. Image Vis. Comput. 2001, 19, 891–911.

- Ebisawa, Y. Unconstrained pupil detection technique using two light sources and the image difference method. WIT Trans. Inf. Commun. Technol. 1995, 15, 79–89.

- Morimoto, C.; Koons, D.; Amir, A.; Flicker, M. Framerate pupil detector and gaze tracker. In Proceedings of the International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1–6.

- Wang, C.W.; Gao, H.L. Differences in the infrared bright pupil response of human eyes. In Proceedings of ETRA Eye Tracking Research and Applications Symposium, New Orleans, LA, USA, 25–27 March 2002; pp. 133–138.

- Dodge, R.; Cline, T.S. The angle velocity of eye movements. Psychol. Rev. 1901, 8, 145–157.

- Tomono, A.; Iida, M.; Kobayashi, Y. A TV camera system which extracts feature points for non-contact eye movement detection. In Proceedings of the SPIE Optics, Illumination, and Image Sensing for Machine Vision, Philadelphia, PA, USA, 1 November 1989; pp. 2–12.

- Ebisawa, Y. Improved video-based eye-gaze detection method. IEEE Trans. Instrum. Meas. 1998, 47, 948–955.

- Hu, B.; Qiu, M.H. A new method for human-computer interaction by using eye gaze. In Proceedings of the IEEE International Conference on Systems, Man, & Cybernetics, Humans, Information & Technology, San Antonio, TX, USA, 2–5 October 1994; pp. 2723–2728.

- Hutchinson, T.E.; White, K.P.; Martin, W.N.; Reichert, K.C.; Frey, L.A. Human-computer interaction using eye-gaze input. IEEE Trans. Syst. Man Cybern. 1989, 19, 1527–1534.

- Cornsweet, T.N.; Crane, H.D. Accurate two-dimensional eye tracker using first and fourth Purkinje images. J. Opt. Soc. Am. 1973, 63, 921–928.

- Glenstrup, A.J.; Nielsen, T.E. Eye Controlled Media: Present and Future State. Master’s Thesis, University of Copenhagen, Copenhagen, Denmark, 1 June 1995.

- Mimica, M.R.M.; Morimoto, C.H. A computer vision framework for eye gaze tracking. In Proceedings of XVI Brazilian Symposium on Computer Graphics and Image Processing, Sao Carlos, Brazil, 12–15 October 2003; pp. 406–412.

- Morimoto, C.H.; Mimica, M.R.M. Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 2005, 98, 4–24.

- Jian-Nan, C.; Peng-Yi, Z.; Si-Yi, Z.; Chuang, Z.; Ying, H. Key Techniques of Eye Gaze Tracking Based on Pupil Corneal Reflection. In Proceedings of the WRI Global Congress on Intelligent Systems, Xiamen, China, 19–21 May 2009; pp. 133–138.

- Feng, L.; Sugano, Y.; Okabe, T.; Sato, Y. Adaptive linear regression for appearance-based gaze estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2033–2046.

- Cherif, Z.R.; Nait-Ali, A.; Motsch, J.F.; Krebs, M.O. An adaptive calibration of an infrared light device used for gaze tracking. In Proceedings of the IEEE Instrumentation and Measurement Technology Conference, Anchorage, AK, USA, 21–23 May 2002; pp. 1029–1033.

- Cerrolaza, J.J.; Villanueva, A.; Cabeza, R. Taxonomic study of polynomial regressions applied to the calibration of video-oculographic systems. In Proceedings of the 2008 symposium on Eye Tracking Research and Applications, Savannah, GA, USA, 26–28 March 2008; pp. 259–266.

- Cerrolaza, J.J.; Villanueva, A.; Cabeza, R. Study of polynomial mapping functions in video-oculography eye trackers. ACM Trans. Comput.-Hum. Interact. 2012, 19, 602–615.

- Baluja, S.; Pomerleau, D. Non-intrusive gaze tracking using artificial neural networks. Neural Inf. Process. Syst. 1994, 6, 753–760.

- Piratla, N.M.; Jayasumana, A.P. A neural network based real-time gaze tracker. J. Netw. Comput. Appl. 2002, 25, 179–196.

- Demjen, E.; Abosi, V.; Tomori, Z. Eye tracking using artificial neural networks for human computer interaction. Physiol. Res. 2011, 60, 841–844.

- Coughlin, M.J.; Cutmore, T.R.H.; Hine, T.J. Automated eye tracking system calibration using artificial neural networks. Comput. Meth. Programs Biomed. 2004, 76, 207–220.

- Sesin, A.; Adjouadi, M.; Cabrerizo, M.; Ayala, M.; Barreto, A. Adaptive eye-gaze tracking using neural-network-based user profiles to assist people with motor disability. J. Rehabil. Res. Dev. 2008, 45, 801–817.

- Gneo, M.; Schmid, M.; Conforto, S.; D’Alessio, T. A free geometry model-independent neural eye-gaze tracking system. J. NeuroEng. Rehabil. 2012, 9, 17025–17036.

- Zhu, Z.; Ji, Q. Eye and gaze tracking for interactive graphic display. Mach. Vis. Appl. 2002, 15, 139–148.

- Kiat, L.C.; Ranganath, S. One-time calibration eye gaze detection system. In Proceedings of International Conference on Image Processing, Singapore, 24–27 October 2004; pp. 873–876.

- Wu, Y.L.; Yeh, C.T.; Hung, W.C.; Tang, C.Y. Gaze direction estimation using support vector machine with active appearance model. Multimed. Tools Appl. 2014, 70, 2037–2062.

- Fredric, M.H.; Ivica, K. Principles of Neurocomputing for Science and Engineering; McGraw Hill: New York, NY, USA, 2001.

- Oyster, C.W. The Human Eye: Structure and Function; Sinauer Associates: Sunderland, MA, USA, 1999.

- Sliney, D.; Aron-Rosa, D.; DeLori, F.; Fankhauser, F.; Landry, R.; Mainster, M.; Marshall, J.; Rassow, B.; Stuck, B.; Trokel, S.; et al. Adjustment of guidelines for exposure of the eye to optical radiation from ocular instruments: Statement from a task group of the International Commission on Non-Ionizing Radiation Protection. Appl. Opt. 2005, 44, 2162–2176.

- Wang, J.Z.; Zhang, G.Y.; Shi, J.D. Pupil and glint detection using wearable camera sensor and near-infrared LED array. Sensors 2015, 15, 30126–30141.

- Lee, J.W.; Heo, H.; Park, K.R. A novel gaze tracking method based on the generation of virtual calibration points. Sensors 2013, 13, 10802–10822.

- Gwon, S.Y.; Jung, D.; Pan, W.; Park, K.R. Estimation of Gaze Detection Accuracy Using the Calibration Information-Based Fuzzy System. Sensors 2016.

| Eye Image | Pupil Center (x, y) | Glint 1 Center (x, y) | Glint 2 Center (x, y) | Glint 3 Center (x, y) | Glint 4 Center (x, y) |
|---|---|---|---|---|---|
| 1 | (290.15, 265.34) | (265.31, 298.65) | (294.56, 300.87) | (266.41, 310.28) | (296.25, 312.49) |
| 2 | (251.42, 255.93) | (245.58, 292.36) | (276.54, 295.13) | (246.19, 305.67) | (277.51, 307.26) |
| 3 | (203.34, 260.81) | (221.95, 297.32) | (252.49, 298.61) | (221.34, 309.17) | (253.65, 310.28) |
| 4 | (297.74, 275.62) | (271.25, 300.56) | (301.58, 300.67) | (270.91, 315.66) | (300.85, 315.46) |
| 5 | (247.31, 277.58) | (243.25, 302.62) | (273.55, 303.46) | (242.81, 317.54) | (274.26, 318.19) |
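
The detected centers in the table above are the raw inputs from which pupil-glint vectors are formed. As a minimal sketch only, assuming each vector is taken as the pupil center minus the glint center in image pixels (the sign convention and the helper name `pupil_glint_vectors` are assumptions for illustration, not definitions taken from the paper), the vectors for eye image 1 could be computed as follows:

```python
from typing import List, Tuple

Point = Tuple[float, float]


def pupil_glint_vectors(pupil: Point, glints: List[Point]) -> List[Point]:
    """Return one (dx, dy) vector per glint, pointing from the glint to the pupil.

    Assumption: vector = pupil center - glint center, in image pixels.
    Values are rounded to two decimals to match the precision of the table.
    """
    px, py = pupil
    return [(round(px - gx, 2), round(py - gy, 2)) for gx, gy in glints]


# Eye image 1 from the table: pupil center and the four glint centers.
pupil_1: Point = (290.15, 265.34)
glints_1: List[Point] = [
    (265.31, 298.65),
    (294.56, 300.87),
    (266.41, 310.28),
    (296.25, 312.49),
]

if __name__ == "__main__":
    for index, vector in enumerate(pupil_glint_vectors(pupil_1, glints_1), start=1):
        print(f"glint {index}: {vector}")
```

Flipping the sign convention (glint minus pupil) only negates each component, so a mapping trained on one convention must be used consistently with it.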

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, J.; Zhang, G.; Shi, J. 2D Gaze Estimation Based on Pupil-Glint Vector Using an Artificial Neural Network. Appl. Sci. 2016, 6, 174. https://doi.org/10.3390/app6060174