Enhancing Robustness in Skin Lesion Detection: A Benchmark of 32 Models on a Novel Dataset Including Healthy Skin Images

Abstract

1. Introduction

- Creation and public release of a novel, comprehensive dataset for skin lesion detection. To our knowledge, the dataset is the first to include a dedicated, balanced ‘background’ class of 1000 healthy skin images, which is critical for training robust models that minimize false positives in a real-world clinical setting.

- A large-scale benchmark of 32 state-of-the-art object detection models. This study provides a comparative analysis of modern architectures, primarily from the YOLO family (v5–v12) and RT-DETR, quantifying their effectiveness on a challenging, multi-class medical imaging task.

- Development of a systematic, multi-stage evaluation methodology. We present an original approach to model selection that considers not only performance metrics such as mAP and Recall but also computational complexity, so that the recommended solution is both accurate and efficient for practical deployment.

- Introduction of a novel scoring system for model selection. To objectively identify the superior architecture, we developed a unique scoring system based on per-class recall performance, which allowed for a granular and fair comparison between the final candidate models.

- Establishing a new benchmark and identifying the best-performing model. Based on this analysis, the YOLOv9c model was identified as the most powerful and efficient solution. Its performance on our custom dataset establishes a new, more realistic benchmark for future research in automated dermatological diagnosis.

2. Related Works

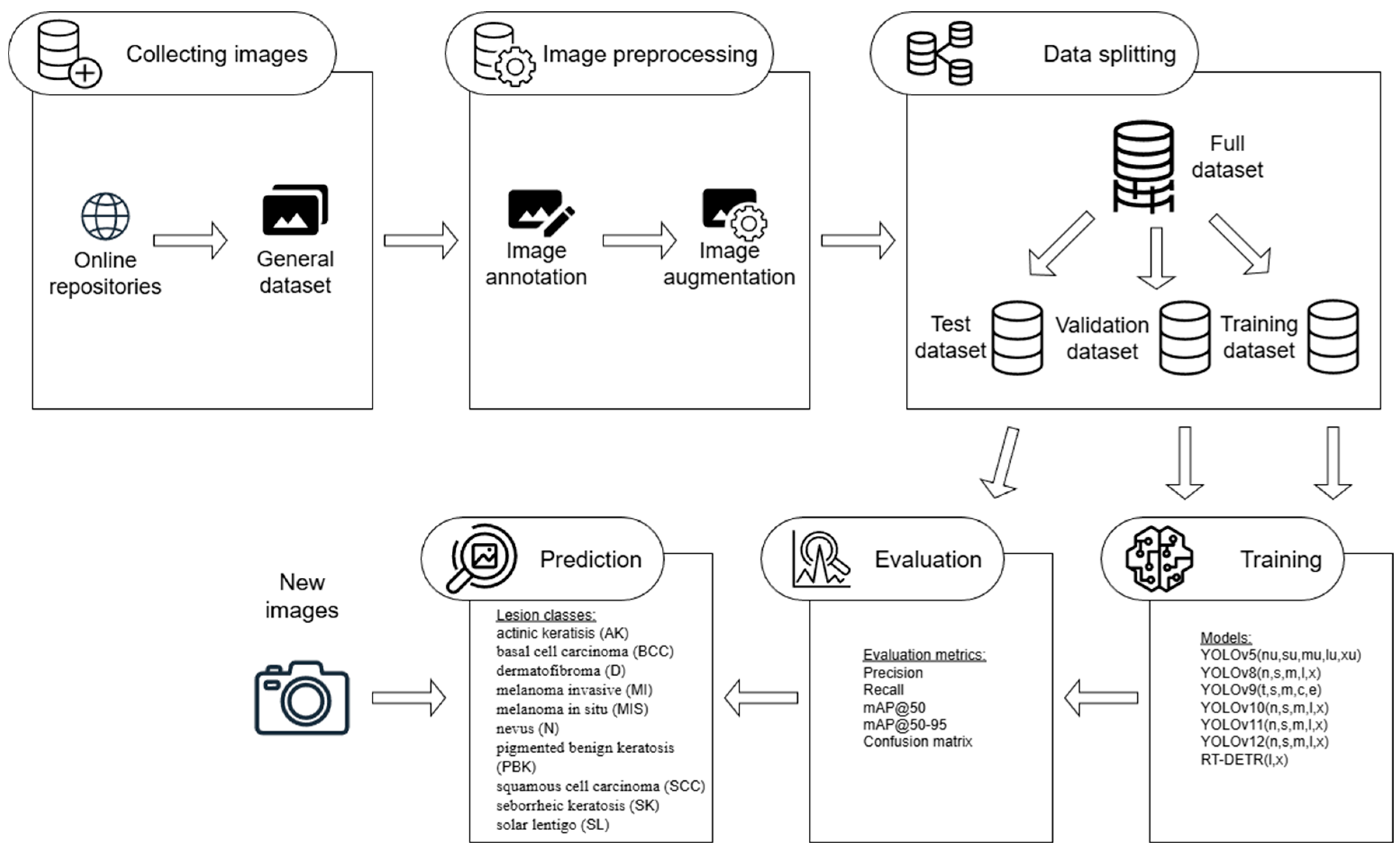

3. Material and Methods

3.1. Dataset

3.2. Dataset Preparation

3.3. Metrics

- TP (True Positives)—Cases where the model correctly predicted the positive class.

- TN (True Negatives)—Cases where the model correctly predicted the negative class.

- FP (False Positives)—Cases where the model incorrectly predicted the positive class (a false alarm).

- FN (False Negatives)—Cases where the model incorrectly predicted the negative class (an oversight or a miss).

- IoU (Intersection over Union) is a value from 0 to 1 that measures how much the predicted bounding box overlaps with the ground-truth bounding box (1). A higher IoU value means a better, more precise detection.
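The quantities listed above can be illustrated with a minimal sketch. It assumes axis-aligned boxes in corner format and the standard definitions IoU = intersection area / union area, Precision = TP / (TP + FP), and Recall = TP / (TP + FN). The `Box` class and the function names are illustrative and are not taken from the study's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its top-left (x1, y1) and bottom-right (x2, y2) corners."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        # A degenerate or inverted box has zero area.
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(pred: Box, truth: Box) -> float:
    """Intersection over Union: overlap area divided by the area of the union."""
    inter = Box(
        max(pred.x1, truth.x1), max(pred.y1, truth.y1),
        min(pred.x2, truth.x2), min(pred.y2, truth.y2),
    ).area()
    union = pred.area() + truth.area() - inter
    return inter / union if union > 0 else 0.0


def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that the model found."""
    return tp / (tp + fn) if (tp + fn) else 0.0
```

In detection benchmarks a prediction typically counts as a TP only when its IoU with a ground-truth box of the same class reaches a chosen threshold (0.5 for mAP@50), which is how the IoU value feeds into Precision and Recall.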

3.4. The YOLO Family of Models

3.5. RT-DETR Architecture

4. Results

4.1. Training Progress

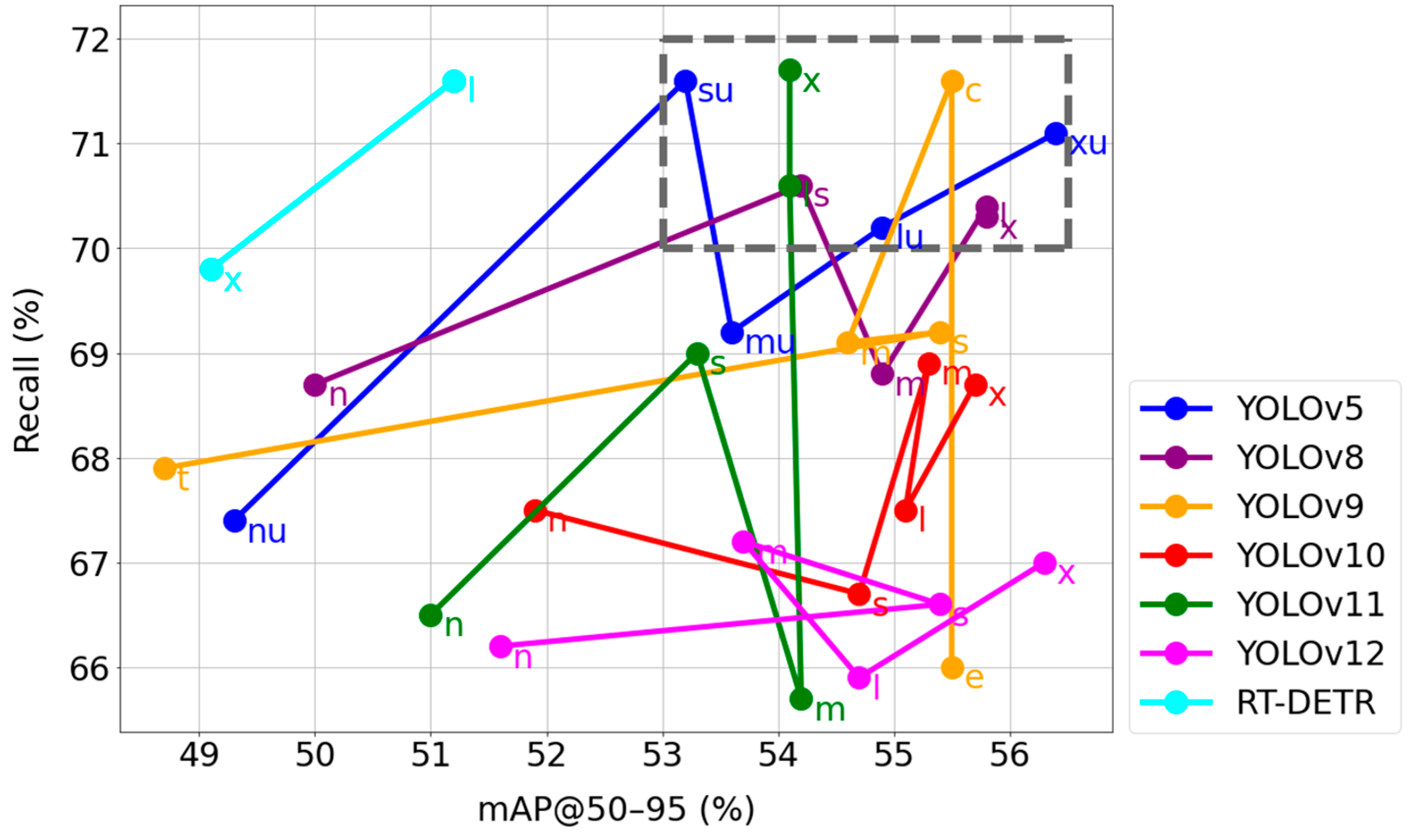

4.2. Initial Performance Benchmark

4.3. Efficiency-Based Selection

4.4. Per-Class Performance Analysis

4.5. Final Model Selection Using a Scoring System

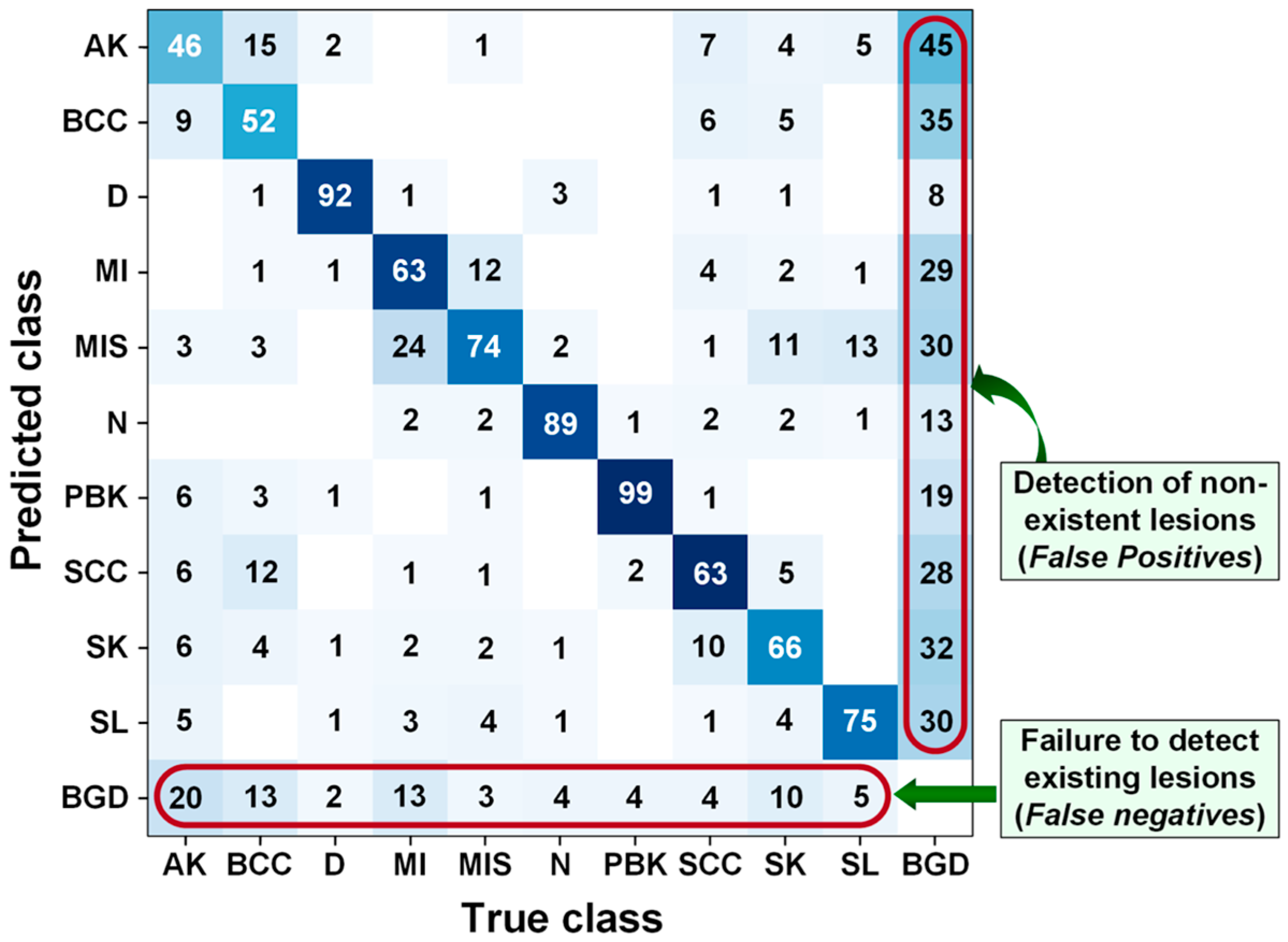

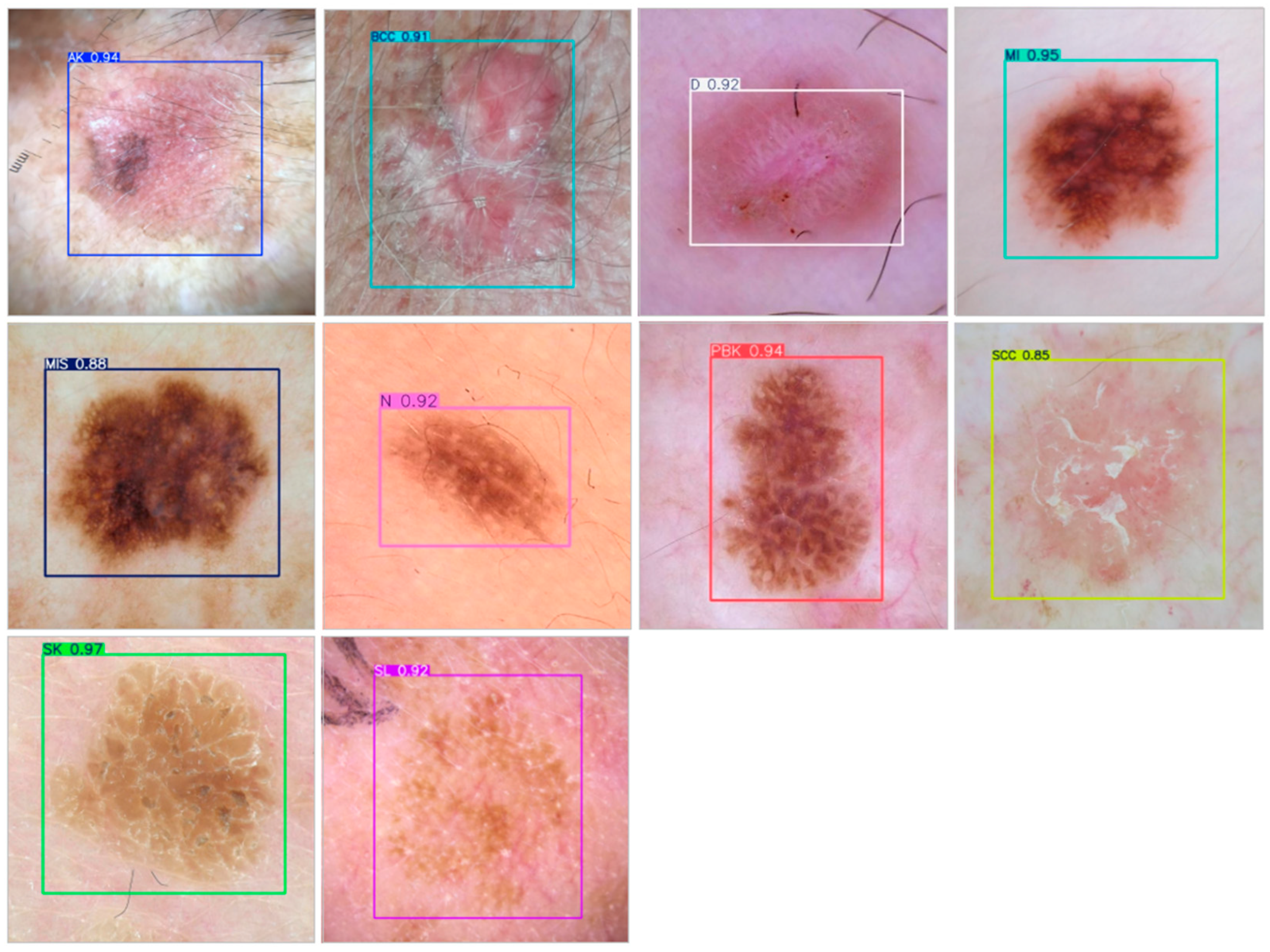

4.6. Results of the Best Model

5. Discussion

5.1. Comparison of Results with Those of Other Authors

5.2. Possibilities for Improving the Model’s Effectiveness

5.3. Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Urban, K.; Mehrmal, S.; Uppal, P.; Giesey, R.L.; Delost, G.R. The global burden of skin cancer: A longitudinal analysis from the Global Burden of Disease Study, 1990–2017. JAAD Int. 2021, 2, 98–108.

- World Health Organization. The Effect of Occupational Exposure to Solar Ultraviolet Radiation on Malignant Skin Melanoma and Non-Melanoma Skin Cancer: A Systematic Review and Meta-Analysis from the WHO/ILO Joint Estimates of the Work-Related Burden of Disease and Injury; World Health Organization: Geneva, Switzerland, 2021. Available online: https://iris.who.int/handle/10665/350569 (accessed on 1 October 2025).

- Voss, R.K.; Woods, T.N.; Cromwell, K.D.; Nelson, K.C.; Cormier, J.N. Improving outcomes in patients with melanoma: Strategies to ensure an early diagnosis. Patient Relat. Outcome Meas. 2015, 6, 229–242.

- Naik, P.P. Cutaneous Malignant Melanoma: A Review of Early Diagnosis and Management. World J. Oncol. 2021, 12, 7–19.

- Waseh, S.; Lee, J.B. Advances in melanoma: Epidemiology, diagnosis, and prognosis. Front. Med. 2023, 10, 1268479.

- Aishwarya, N.; Prabhakaran, K.M.; Debebe, F.T.; Reddy, M.S.S.A.; Pranavee, P. Skin Cancer diagnosis with Yolo Deep Neural Network. Procedia Comput. Sci. 2023, 220, 651–658.

- AlSadhan, N.A.; Alamri, S.A.; Ben Ismail, M.M.; Bchir, O. Skin Cancer Recognition Using Unified Deep Convolutional Neural Networks. Cancers 2024, 16, 1246.

- Stefan, Ć.; Nikola, S. Application of the YOLO algorithm for Medical Purposes in the Detection of Skin Cancer. In Proceedings of the 10th International Scientific Conference Technics, Informatics and Education-TIE 2024, Čačak, Serbia, 20–24 September 2024; pp. 83–88.

- Riyadi, M.A.; Ayuningtias, A.; Isnanto, R.R. Detection and Classification of Skin Cancer Using YOLOv8n. In Proceedings of the 2024 11th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Yogyakarta, Indonesia, 26–27 September 2024; pp. 9–15.

- Dalawai, N.; Prathamraj, P.; Dayananda, B.; Joythi, A.; Suresh, R. Real-Time Skin Disease Detection and Classification Using YOLOv8 Object Detection for Healthcare Diagnosis. Int. J. Multidiscip. Res. Anal. 2024, 7, 231–235.

- Elshahawy, M.; Elnemr, A.; Oproescu, M.; Schiopu, A.G.; Elgarayhi, A.; Elmogy, M.M.; Sallah, M. Early Melanoma Detection Based on a Hybrid YOLOv5 and ResNet Technique. Diagnostics 2023, 13, 2804.

- Takashi, N. Improved Skin Lesion Segmentation in Dermoscopic Images Using Object Detection and Semantic Segmentation. Clin. Cosmet. Investig. Dermatol. 2025, 18, 1191–1198.

- Gül, S.; Cetinel, G.; Aydin, B.M.; Akgün, D.; Öztaş Kara, R. YOLOSAMIC: A Hybrid Approach to Skin Cancer Segmentation with the Segment Anything Model and YOLOv8. Diagnostics 2025, 15, 479.

- Aljohani, K.; Turki, T. Automatic Classification of Melanoma Skin Cancer with Deep Convolutional Neural Networks. AI 2022, 3, 512–525.

- Alzahrani, S.M. SkinLiTE: Lightweight Supervised Contrastive Learning Model for Enhanced Skin Lesion Detection and Disease Typification in Dermoscopic Images. Curr. Med. Imaging 2024, 20, e15734056313837.

- Manoj, S.O.; Abirami, K.R.; Victor, A.; Arya, M. Automatic Detection and Categorization of Skin Lesions for Early Diagnosis of Skin Cancer Using YOLO-v3-DCNN Architecture. Image Anal. Stereol. 2023, 42, 101–117.

- Abdullah, K.; Muhammad, S.; Nauman, K.; Ayman, Y.; Qaisar, A. CAD-Skin: A Hybrid Convolutional Neural Network–Autoencoder Framework for Precise Detection and Classification of Skin Lesions and Cancer. Bioengineering 2025, 12, 326.

- Wang, F.; Ju, M.; Zhu, X.; Zhu, Q.; Wang, H.; Qian, C.; Wang, R. A Geometric algebra-enhanced network for skin lesion detection with diagnostic prior. J. Supercomput. 2024, 81, 327.

- Rashid, J.; Ishfaq, M.; Ali, G.; Saeed, M.R.; Hussain, M.; Alkhalifah, T.; Alturise, F.; Samand, N. Skin Cancer Disease Detection Using Transfer Learning Technique. Appl. Sci. 2022, 12, 5714.

- Adekunle, A. Hybrid Skin Lesion Detection Integrating CNN and XGBoost for Accurate Diagnosis. J. Ski. Cancer 2025, 53, 14–71.

- Ashraf, R.; Afzal, S.; Rehman, A.U.; Gul, S.; Baber, J.; Bakhtyar, M.; Mehmood, I.; Song, O.-Y.; Maqsood, M. Region-of-Interest Based Transfer Learning Assisted Framework for Skin Cancer Detection. IEEE Access 2020, 8, 147858–147871.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Khanam, R.; Hussain, M. What is YOLOv5: A deep look into the internal features of the popular object detector. arXiv 2024, arXiv:2407.20892.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-Time Flying Object Detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the 18th European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Lv, W.; Xu, S.; Zhao, Y.; Wang, G.; Wei, J.; Cui, C.; Du, Y.; Dang, Q.; Liu, Y. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2023, arXiv:2304.08069.

- Ultralytics Homepage. Available online: https://docs.ultralytics.com/models/ (accessed on 5 December 2025).

| Model | Key Innovation & Architecture | Relevance to This Study |
|---|---|---|
| YOLOv5 | Introduces CSPDarknet53 backbone and Focus layer. PyTorch-native (Version 1.5) implementation. | Acts as a stable, high-performance baseline for speed/accuracy trade-off. |
| YOLOv8 | Anchor-free detection with a decoupled head and C2f module. | Represents the current industry standard for object detection tasks. |
| YOLOv9 | Introduces Programmable Gradient Information (PGI) and GELAN backbone. | Designed to prevent information loss in deep networks, crucial for detecting subtle lesion features. |
| YOLOv10 | NMS-free training using consistent dual assignment. | Tested for efficiency by eliminating post-processing steps. |
| YOLOv11 | Features C3k2 block and C2PSA (Parallel Spatial Attention). | Evaluated for enhanced feature extraction capabilities. |
| YOLOv12 | Incorporates Area Attention Module (A2) and FlashAttention. | Tested to see if attention mechanisms improve medical imaging accuracy. |

| Name | Description | Value |
|---|---|---|
| epochs | Total number of training epochs | 200 |
| patience | Number of epochs to wait without improvement in validation metrics before early stopping the training | 100 |
| batch | Batch size | 16 |
| imgsz | Target image size for training | 640 |
| optimizer | Choice of optimizer for training | SGD |
| momentum | Momentum factor influencing the incorporation of past gradients in the current update | 0.937 |
| lr0 | Initial learning rate | 0.01 |
| lrf | Final learning rate as a fraction of the initial rate (lr0 * lrf), used in conjunction with schedulers to adjust the learning rate over time | 0.01 |
| weight_decay | L2 regularization term, penalizing large weights to prevent overfitting | 0.0005 |
| warmup_epochs | Number of epochs for learning rate warmup, gradually increasing the learning rate from a low value to the initial learning rate to stabilize training early on | 3.0 |
| warmup_momentum | Initial momentum for warmup phase, gradually adjusting to the set momentum over the warmup period | 0.8 |
| warmup_bias_lr | Learning rate for bias parameters during the warmup phase, helping stabilize model training in the initial epochs | 0.1 |
| box | Weight of the box loss component in the loss function, influencing how much emphasis is placed on accurately predicting bounding box coordinates | 7.5 |
| cls | Weight of the classification loss in the total loss function, affecting the importance of correct class prediction relative to other components | 0.5 |
| dfl | Weight of the distribution focal loss, used in certain YOLO versions for fine-grained classification | 1.5 |
| iou | Intersection over Union threshold for Non-Maximum Suppression | 0.7 |
| max_det | Maximum number of detections per image | 300 |
| augment | Enables test-time augmentation during validation | false |
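As a hedged illustration of how the settings in the table above might be passed to a training run, the sketch below assumes the Ultralytics Python API (`YOLO(...).train(...)` and `.val(...)`), whose argument names match the table. The weights file and the dataset YAML path are placeholders, and the split into training and validation arguments is our reading of the table rather than the authors' script.

```python
# Training hyperparameters transcribed from the table; keys follow Ultralytics naming.
TRAIN_ARGS = {
    "epochs": 200,
    "patience": 100,
    "batch": 16,
    "imgsz": 640,
    "optimizer": "SGD",
    "momentum": 0.937,
    "lr0": 0.01,
    "lrf": 0.01,
    "weight_decay": 0.0005,
    "warmup_epochs": 3.0,
    "warmup_momentum": 0.8,
    "warmup_bias_lr": 0.1,
    "box": 7.5,
    "cls": 0.5,
    "dfl": 1.5,
}

# Settings from the table that govern NMS and validation rather than optimization.
VAL_ARGS = {"iou": 0.7, "max_det": 300, "augment": False}


def final_learning_rate(args: dict) -> float:
    """Learning rate reached at the end of the schedule: lr0 * lrf."""
    return args["lr0"] * args["lrf"]


def train_and_validate(weights: str = "yolov9c.pt", data_yaml: str = "lesions.yaml"):
    """Train and validate one model; both default paths are hypothetical placeholders."""
    # Imported lazily so that this module loads even where ultralytics is not installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    model.train(data=data_yaml, **TRAIN_ARGS)
    return model.val(data=data_yaml, **VAL_ARGS)
```

With `lr0 = 0.01` and `lrf = 0.01`, the schedule ends at a learning rate of 0.0001. Note also that `patience = 100` allows up to half of the 200 epochs to pass without validation improvement before early stopping triggers.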

| Architecture | Version | Precision | Recall | mAP@50 | mAP@50–95 |
|---|---|---|---|---|---|
| YOLOv5 | nu | 0.648 | 0.674 | 0.680 | 0.493 |
| YOLOv5 | su | 0.680 | 0.716 | 0.723 | 0.532 |
| YOLOv5 | mu | 0.695 | 0.692 | 0.721 | 0.536 |
| YOLOv5 | lu | 0.701 | 0.702 | 0.728 | 0.549 |
| YOLOv5 | xu | 0.718 | 0.711 | 0.740 | 0.564 |
| YOLOv8 | n | 0.659 | 0.687 | 0.689 | 0.500 |
| YOLOv8 | s | 0.725 | 0.706 | 0.727 | 0.542 |
| YOLOv8 | m | 0.728 | 0.688 | 0.730 | 0.549 |
| YOLOv8 | l | 0.722 | 0.704 | 0.735 | 0.558 |
| YOLOv8 | x | 0.726 | 0.703 | 0.736 | 0.558 |
| YOLOv9 | t | 0.610 | 0.679 | 0.670 | 0.487 |
| YOLOv9 | s | 0.704 | 0.692 | 0.731 | 0.554 |
| YOLOv9 | m | 0.691 | 0.691 | 0.724 | 0.546 |
| YOLOv9 | c | 0.665 | 0.716 | 0.725 | 0.555 |
| YOLOv9 | e | 0.753 | 0.660 | 0.734 | 0.555 |
| YOLOv10 | n | 0.673 | 0.675 | 0.707 | 0.519 |
| YOLOv10 | s | 0.735 | 0.667 | 0.732 | 0.547 |
| YOLOv10 | m | 0.710 | 0.689 | 0.732 | 0.553 |
| YOLOv10 | l | 0.714 | 0.675 | 0.730 | 0.551 |
| YOLOv10 | x | 0.722 | 0.687 | 0.732 | 0.557 |
| YOLOv11 | n | 0.666 | 0.665 | 0.692 | 0.510 |
| YOLOv11 | s | 0.677 | 0.690 | 0.710 | 0.533 |
| YOLOv11 | m | 0.720 | 0.657 | 0.720 | 0.542 |
| YOLOv11 | l | 0.674 | 0.706 | 0.711 | 0.541 |
| YOLOv11 | x | 0.656 | 0.717 | 0.717 | 0.541 |
| YOLOv12 | n | 0.690 | 0.662 | 0.693 | 0.516 |
| YOLOv12 | s | 0.746 | 0.666 | 0.729 | 0.554 |
| YOLOv12 | m | 0.701 | 0.672 | 0.710 | 0.537 |
| YOLOv12 | l | 0.750 | 0.659 | 0.718 | 0.547 |
| YOLOv12 | x | 0.759 | 0.670 | 0.734 | 0.563 |
| RT-DETR | l | 0.733 | 0.716 | 0.680 | 0.512 |
| RT-DETR | x | 0.734 | 0.698 | 0.672 | 0.491 |

| Lesion Group | Lesion Class | 2 Points | 1 Point |
|---|---|---|---|
| MALIGNANT | AK | YOLOv5su | YOLOv9c |
| MALIGNANT | BCC | YOLOv11l | YOLOv9c |
| MALIGNANT | MI | YOLOv5su | YOLOv9c |
| MALIGNANT | MIS | YOLOv9c | YOLOv8s |
| MALIGNANT | SCC | YOLOv9c | YOLOv5su |
| BENIGN | D | YOLOv5su | YOLOv8s |
| BENIGN | N | YOLOv8s | YOLOv5su |
| BENIGN | PBK | YOLOv9c | YOLOv11l |
| BENIGN | SK | YOLOv8s | YOLOv9c |
| BENIGN | SL | YOLOv5su, YOLOv8s | YOLOv9c |

| Model | Points (a) | Points (b) |
|---|---|---|
| YOLOv5su | 5 | 10 |
| YOLOv8s | 1 | 8 |
| YOLOv9c | 7 | 11 |
| YOLOv11l | 2 | 3 |
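The point totals above can be reproduced from the per-class ranking table with a short sketch. It assumes that each model listed under "2 Points" receives two points, that each model under "1 Point" receives one, and that both tied models for SL receive the full two points. Under those assumptions the totals in (a) match a sum over the five malignant classes and the totals in (b) match a sum over all ten classes. The `RANKING` dictionary and `score` function are illustrative names, not the authors' code.

```python
from collections import Counter

# (models awarded 2 points, models awarded 1 point) per lesion class,
# transcribed from the ranking table.
RANKING = {
    "AK": (["YOLOv5su"], ["YOLOv9c"]),
    "BCC": (["YOLOv11l"], ["YOLOv9c"]),
    "MI": (["YOLOv5su"], ["YOLOv9c"]),
    "MIS": (["YOLOv9c"], ["YOLOv8s"]),
    "SCC": (["YOLOv9c"], ["YOLOv5su"]),
    "D": (["YOLOv5su"], ["YOLOv8s"]),
    "N": (["YOLOv8s"], ["YOLOv5su"]),
    "PBK": (["YOLOv9c"], ["YOLOv11l"]),
    "SK": (["YOLOv8s"], ["YOLOv9c"]),
    "SL": (["YOLOv5su", "YOLOv8s"], ["YOLOv9c"]),  # tie for the top place
}

MALIGNANT = ("AK", "BCC", "MI", "MIS", "SCC")


def score(classes) -> dict:
    """Sum the points each model collects over the given lesion classes."""
    totals = Counter()
    for name in classes:
        two_point_models, one_point_models = RANKING[name]
        for model in two_point_models:
            totals[model] += 2
        for model in one_point_models:
            totals[model] += 1
    return dict(totals)


malignant_totals = score(MALIGNANT)  # corresponds to column (a)
overall_totals = score(RANKING)      # corresponds to column (b)
```

YOLOv9c leads in both tallies (7 and 11 points), which is consistent with its selection as the final model.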

| Class | Images | Instances | Precision | Recall | mAP@50 | mAP@50–95 |
|---|---|---|---|---|---|---|
| all | 1100 | 1030 | 0.665 | 0.716 | 0.725 | 0.555 |
| AK | 100 | 101 | 0.448 | 0.485 | 0.444 | 0.275 |
| BCC | 100 | 104 | 0.588 | 0.510 | 0.578 | 0.377 |
| D | 100 | 100 | 0.863 | 0.900 | 0.933 | 0.669 |
| MI | 100 | 109 | 0.640 | 0.606 | 0.668 | 0.530 |
| MIS | 100 | 100 | 0.511 | 0.770 | 0.679 | 0.584 |
| N | 100 | 100 | 0.832 | 0.894 | 0.930 | 0.741 |
| PBK | 100 | 106 | 0.823 | 0.953 | 0.958 | 0.800 |
| SCC | 100 | 100 | 0.612 | 0.632 | 0.611 | 0.403 |
| SK | 100 | 110 | 0.622 | 0.614 | 0.642 | 0.515 |
| SL | 100 | 100 | 0.711 | 0.800 | 0.812 | 0.652 |
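The "all" row above appears to be the unweighted (macro) average of the ten per-class rows, which the following sketch checks against the tabulated values. The malignant/benign grouping is taken from the scoring table, and the names `PER_CLASS` and `macro_average` are illustrative. Small differences in the third decimal are expected because the per-class inputs are already rounded.

```python
from statistics import mean

# Per-class (precision, recall, mAP@50, mAP@50-95) for the best model,
# transcribed from the table.
PER_CLASS = {
    "AK": (0.448, 0.485, 0.444, 0.275),
    "BCC": (0.588, 0.510, 0.578, 0.377),
    "D": (0.863, 0.900, 0.933, 0.669),
    "MI": (0.640, 0.606, 0.668, 0.530),
    "MIS": (0.511, 0.770, 0.679, 0.584),
    "N": (0.832, 0.894, 0.930, 0.741),
    "PBK": (0.823, 0.953, 0.958, 0.800),
    "SCC": (0.612, 0.632, 0.611, 0.403),
    "SK": (0.622, 0.614, 0.642, 0.515),
    "SL": (0.711, 0.800, 0.812, 0.652),
}

MALIGNANT = ("AK", "BCC", "MI", "MIS", "SCC")
BENIGN = ("D", "N", "PBK", "SK", "SL")


def macro_average(classes) -> tuple:
    """Unweighted mean of each metric column over the given classes."""
    rows = [PER_CLASS[name] for name in classes]
    return tuple(mean(column) for column in zip(*rows))


overall = macro_average(PER_CLASS)
malignant_recall = macro_average(MALIGNANT)[1]
benign_recall = macro_average(BENIGN)[1]
```

Averaged this way, recall comes to roughly 0.60 for the malignant classes against roughly 0.83 for the benign ones, so the clinically critical classes are the harder ones for the model.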

| Article | Task | Dataset | No. of Classes | Models | Precision | Recall | mAP@50 |
|---|---|---|---|---|---|---|---|
| Our model | Detection | ISIC (custom) | 10 + BGD | YOLOv9c | 66.5% | 71.6% | 72.5% |
| [6] | Detection | ISIC | 9 | YOLOv4 | 92.75% | 91% | 86.52% |
| [8] | Detection | HAM10000 | 7 | YOLOv5 + ResNet50 | 99% | 98.6% | 98.3% ¹ |
| [14] | Detection | private | 2 | YOLOv8 | 78.2% | 81.6% | N/A ⁴ |
| [7] | Detection | ISIC | 10 | YOLOv7 | N/A | N/A | 72.5% |
| [18] | Detection | ISIC/Private | N/A | YOLOv8 | 96.2% | 94.4% | 98.3% |
| [19] | Detection/Class. | ISIC 2019 | 9 | YOLOv8n | 93.5% | 93.7% | N/A |
| [16] | Classification ² | HAM10000 | 7 | SqueezeNet | 98.5% | 99.6% | N/A |
| [17] | Classification ² | ISIC19 & 20 | N/A | SkinLiTE | 94.6% | 83.6% | N/A |
| [15] | Classification ² | ISIC 2020 | 2 | MobileNetV2 | 98.0% | N/A | N/A |
| [10] | Classification ² | ISIC 2020 | N/A | GRNet50 | N/A | 92.4% ³ | N/A |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Share and Cite

Krukar, N.; Omiotek, Z. Enhancing Robustness in Skin Lesion Detection: A Benchmark of 32 Models on a Novel Dataset Including Healthy Skin Images. Appl. Sci. 2026, 16, 99. https://doi.org/10.3390/app16010099
