Dynamic Multi-Core Task Scheduling for Real-Time Hybrid Simulation Model in Power Grid: A Deep Reinforcement Learning-Based Method

Abstract

1. Introduction

- Task Modeling: We model the simulation tasks of the security and stability control system (SSCS) as a Directed Acyclic Graph (DAG). The DAG captures the dependency relationships between tasks explicitly, which gives the scheduler a well-defined model to work with in highly concurrent scenarios.

- Algorithm Framework: We introduce a deep reinforcement learning (DRL) framework that adjusts task priorities and resource allocation dynamically and adaptively. By employing multi-head and heterogeneous attention mechanisms, the proposed method overcomes the rigidity of traditional rule-based scheduling.

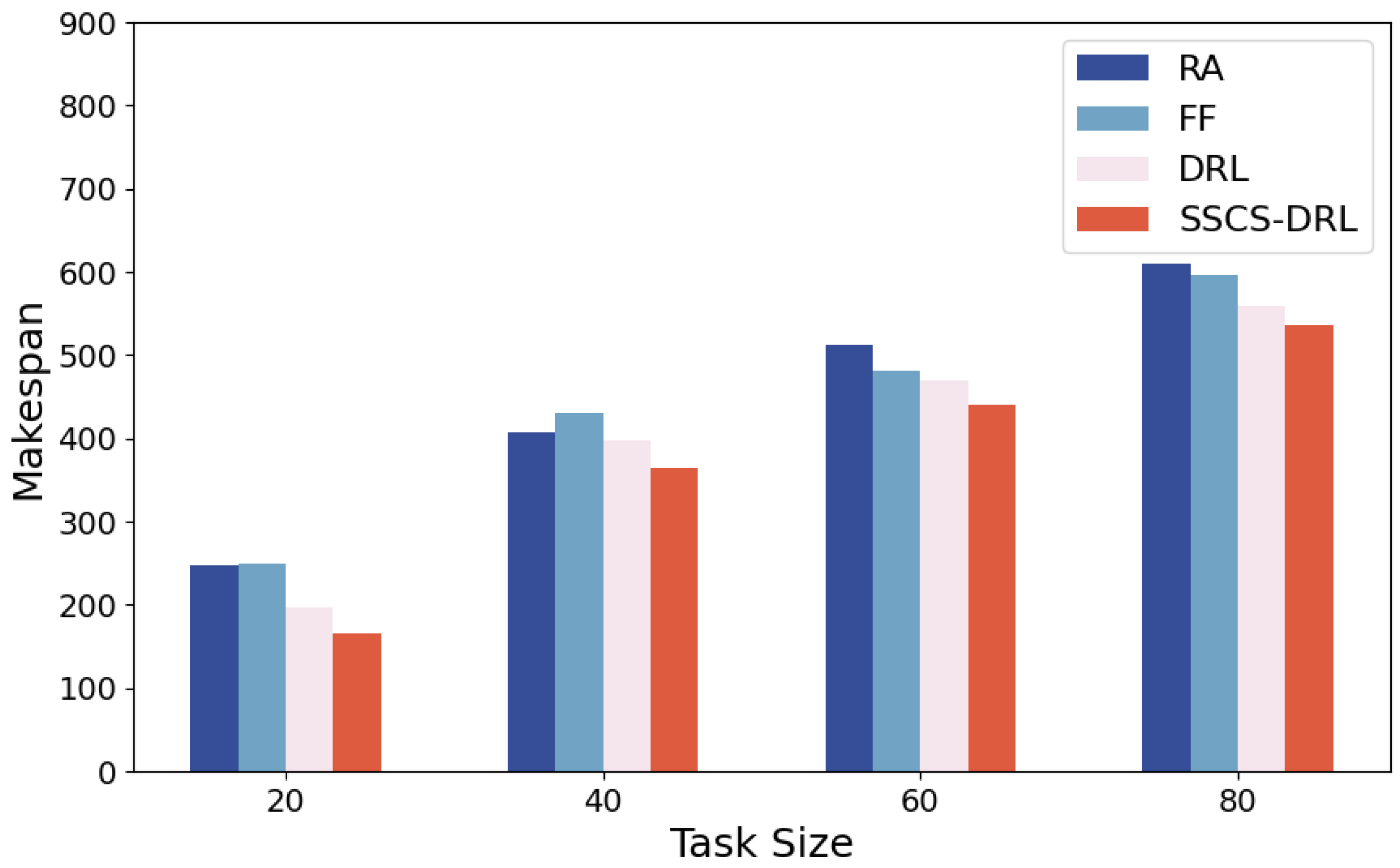

- Performance Optimization: Simulations show that the proposed method improves scheduling efficiency, shortening the total execution time (makespan) and raising resource utilization compared with traditional baselines.
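To make the DAG task model and the makespan metric concrete, the following is a minimal sketch of a static, rule-based list scheduler of the kind the contributions above are contrasted with. It is not the proposed DRL method. The function name `list_schedule`, the task names, the durations, and the assumption of identical cores with no context-switching cost are all illustrative choices, not taken from the paper.

```python
import heapq
from collections import defaultdict


def list_schedule(durations, edges, num_cores):
    """Greedy list scheduling of a task DAG on identical cores.

    durations: dict task -> execution time (hypothetical values)
    edges:     iterable of (u, v) pairs meaning "u must finish before v starts"
    Returns (makespan, start_times).
    """
    succ = defaultdict(list)
    indeg = {t: 0 for t in durations}
    for u, v in edges:
        succ[u].append(v)
        indeg[v] += 1

    # Earliest time each task may start, i.e. when all predecessors are done.
    ready_time = {t: 0.0 for t in durations}
    ready = [t for t in durations if indeg[t] == 0]

    core_free = [0.0] * num_cores  # min-heap of times at which cores become idle
    heapq.heapify(core_free)

    start = {}
    while ready:
        # Static rule: earliest-ready task first, ties broken by task name.
        ready.sort(key=lambda t: (ready_time[t], t))
        task = ready.pop(0)
        begin = max(heapq.heappop(core_free), ready_time[task])
        finish = begin + durations[task]
        start[task] = begin
        heapq.heappush(core_free, finish)
        for nxt in succ[task]:
            ready_time[nxt] = max(ready_time[nxt], finish)
            indeg[nxt] -= 1
            if indeg[nxt] == 0:
                ready.append(nxt)

    if len(start) != len(durations):
        raise ValueError("dependency graph contains a cycle")

    makespan = max((start[t] + durations[t] for t in durations), default=0.0)
    return makespan, start


if __name__ == "__main__":
    tasks = {"A": 2, "B": 3, "C": 1, "D": 2}
    deps = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D")]
    print(list_schedule(tasks, deps, num_cores=2))
```

On this four-task diamond, two cores give a makespan of 7 time units and a single core gives 8. The priority rule is fixed in advance, which is the rigidity that a learned policy is meant to remove.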

2. Related Work

3. Methodology

3.1. Problem Formulation

- : set of cores;

- : set of task nodes;

- : set of directed edges;

- : set of high-priority tasks;

- : set of low-priority tasks;

- : an indicator. It equals 1 if core k schedules task j immediately after task i; otherwise, it equals 0. The index 0 denotes a virtual start node, representing the initial idle state of a core, while the index denotes a virtual end node. Thus, = 1 indicates that task j is the first task scheduled on core k.

- : context-switching cost of core k when transitioning from task i to task j (excluding task execution costs). The resource requirement for executing task j itself is denoted by ;

- : scheduling cost of core k from i to j;

- : resource requirement of task j;

- D: resource capacity of a core.
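The notation above can be illustrated with a small sketch that builds the indicator from per-core task sequences and checks a schedule against the core capacity D. Everything here is an assumption made for illustration: the function names, the choice of `num_tasks + 1` as the virtual end index, the rule that every task is entered exactly once, and the reading of D as a bound on the summed resource requirements of the tasks on one core. The paper's actual constraints may differ.

```python
def build_indicator(routes, num_tasks):
    """Return the set of (i, j, k) triples for which the indicator equals 1.

    routes: dict core k -> ordered list of task indices in 1..num_tasks.
    Index 0 is the virtual start node; num_tasks + 1 is used here as a
    hypothetical index for the virtual end node.
    """
    end = num_tasks + 1
    x = set()
    for k, seq in routes.items():
        path = [0] + list(seq) + [end]
        for i, j in zip(path, path[1:]):
            x.add((i, j, k))
    return x


def is_feasible(x, num_tasks, demand, capacity):
    """Check two assumed constraints: each real task is entered exactly once,
    and the summed resource requirement on each core stays within capacity."""
    entered = {}
    load = {}
    for i, j, k in x:
        if 1 <= j <= num_tasks:
            entered[j] = entered.get(j, 0) + 1
            load[k] = load.get(k, 0) + demand[j]
    each_once = all(entered.get(j, 0) == 1 for j in range(1, num_tasks + 1))
    within_capacity = all(v <= capacity for v in load.values())
    return each_once and within_capacity


def total_switch_cost(x, switch_cost):
    """Sum the context-switching costs over all active transitions.
    Transitions missing from switch_cost are treated as free (an assumption)."""
    return sum(switch_cost.get(triple, 0.0) for triple in x)


if __name__ == "__main__":
    routes = {0: [1, 3], 1: [2]}        # core 0 runs task 1 then 3; core 1 runs task 2
    x = build_indicator(routes, num_tasks=3)
    demand = {1: 2, 2: 4, 3: 1}         # hypothetical resource requirements
    print((0, 1, 0) in x)               # task 1 is the first task on core 0
    print(is_feasible(x, 3, demand, capacity=5))
```

Representing the indicator as a set of active triples keeps the sketch short; a dense three-dimensional array would be the natural choice when feeding a solver or a neural network.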

3.2. DRL Problem Analysis

3.2.1. State Space

3.2.2. Action Space

3.2.3. Transition Dynamics

3.2.4. Reward Function

3.3. A DRL Solution for Multi-Core Task Scheduling

3.3.1. Master Core

3.3.2. Main Cores

3.3.3. Auxiliary Cores

4. Evaluation

4.1. Experimental Settings

4.2. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method Type | Priority Handling | Multi-Core Optimization | Dynamic Adaptability | Power System Application |
|---|---|---|---|---|
| Traditional Heuristic Scheduling | Static Priority | Limited | Weak | General Method |
| Classic DRL Scheduling | Dynamic Adjustment | Partially Optimized | Medium | General Method |
| Proposed Method (SSCS-DRL) | Dynamic Priority + Attention Mechanism | Deeply Optimized | Excellent | Specially Designed |

| Scenario | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Number of Cores | 2 | 4 | 6 | 8 | 8 | 8 | 8 | 8 |
| Number of Tasks | 100 | 100 | 100 | 100 | 20 | 40 | 60 | 80 |
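The eight scenarios in the table can be written down as a small configuration, which also makes the two sweeps visible: Scenarios 1 to 4 vary the core count at a fixed 100 tasks, and Scenarios 4 to 8 vary the task count at a fixed 8 cores. The class and function names below are hypothetical; only the core and task counts come from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    scenario_id: int
    num_cores: int
    num_tasks: int


# Values copied from the scenario table.
CORES = [2, 4, 6, 8, 8, 8, 8, 8]
TASKS = [100, 100, 100, 100, 20, 40, 60, 80]

SCENARIOS = [
    Scenario(i + 1, cores, tasks)
    for i, (cores, tasks) in enumerate(zip(CORES, TASKS))
]


def core_sweep():
    """Scenarios with 100 tasks, ordered by increasing core count."""
    picked = [s for s in SCENARIOS if s.num_tasks == 100]
    return sorted(picked, key=lambda s: s.num_cores)


def task_sweep():
    """Scenarios with 8 cores, ordered by increasing task count."""
    picked = [s for s in SCENARIOS if s.num_cores == 8]
    return sorted(picked, key=lambda s: s.num_tasks)


if __name__ == "__main__":
    for s in core_sweep():
        print("core sweep:", s)
    for s in task_sweep():
        print("task sweep:", s)
```

Scenario 4 (8 cores, 100 tasks) appears in both sweeps, so it serves as the shared reference point when comparing the effect of core count against the effect of task count.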

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Share and Cite

Hu, D.; Wang, Z.; Liu, Q.; Xu, J.; Zhang, L.; Shen, B. Dynamic Multi-Core Task Scheduling for Real-Time Hybrid Simulation Model in Power Grid: A Deep Reinforcement Learning-Based Method. Appl. Sci. 2026, 16, 192. https://doi.org/10.3390/app16010192
