Multi-Module Combination for Underwater Image Enhancement

Abstract

1. Introduction

- We present an algorithm that uses color bias detection to identify color-biased images and then applies a white balance technique to them. This preprocessing step corrects the detected color bias, thereby improving the accuracy of subsequent image processing tasks.

- We employ a defogging and contrast enhancement algorithm based on a rank-one prior matrix and a curve transformation. The underwater image is converted to the LAB color space, fog is removed with the rank-one prior matrix, and contrast is enhanced through the curve transformation, which improves the clarity and legibility of the image.

- The defogged image is combined with a contrast-enhanced image to produce a clearer and more recognizable underwater image.
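The flow described in these contributions (detect color bias, white-balance only the flagged images, then fuse two enhanced versions) can be illustrated with a minimal NumPy sketch. Everything named here is an assumption made for illustration and not the authors' implementation: `channel_cast_score` is a hypothetical detector based on the spread of channel means, gray-world scaling stands in for the white balance step, `threshold` and `sigma` are arbitrary values, and `fuse_by_intensity` is a generic intensity-weighted average. The rank-one prior defogging and the curve transformation are not reproduced.

```python
import numpy as np


def channel_cast_score(img: np.ndarray) -> float:
    """Hypothetical color-bias score: spread of the per-channel means
    relative to their average (0 means perfectly balanced)."""
    means = img.reshape(-1, 3).mean(axis=0)
    return float((means.max() - means.min()) / (means.mean() + 1e-8))


def gray_world_white_balance(img: np.ndarray) -> np.ndarray:
    """Gray-world white balance: scale each channel so that its mean
    matches the average of the three channel means."""
    means = img.reshape(-1, 3).mean(axis=0)
    gains = means.mean() / (means + 1e-8)
    return np.clip(img * gains, 0.0, 1.0)


def preprocess(img: np.ndarray, threshold: float = 0.2) -> np.ndarray:
    """White-balance the image only if it is flagged as color-biased.
    The threshold is an assumed value, not taken from the paper."""
    if channel_cast_score(img) > threshold:
        return gray_world_white_balance(img)
    return img


def fuse_by_intensity(a: np.ndarray, b: np.ndarray,
                      sigma: float = 0.2, eps: float = 1e-8) -> np.ndarray:
    """Fuse two images with per-pixel weights that favor pixels whose
    mean intensity is close to mid-gray (0.5)."""
    wa = np.exp(-((a.mean(axis=2) - 0.5) ** 2) / (2 * sigma ** 2))
    wb = np.exp(-((b.mean(axis=2) - 0.5) ** 2) / (2 * sigma ** 2))
    w = (wa / (wa + wb + eps))[..., None]  # normalized weight for image a
    return w * a + (1.0 - w) * b
```

Images are expected as float arrays of shape (H, W, 3) with values in [0, 1]. In a pipeline like the one outlined above, `a` and `b` would be the defogged and the contrast-enhanced versions of the white-balanced input.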

2. Related Work

3. Proposed Method

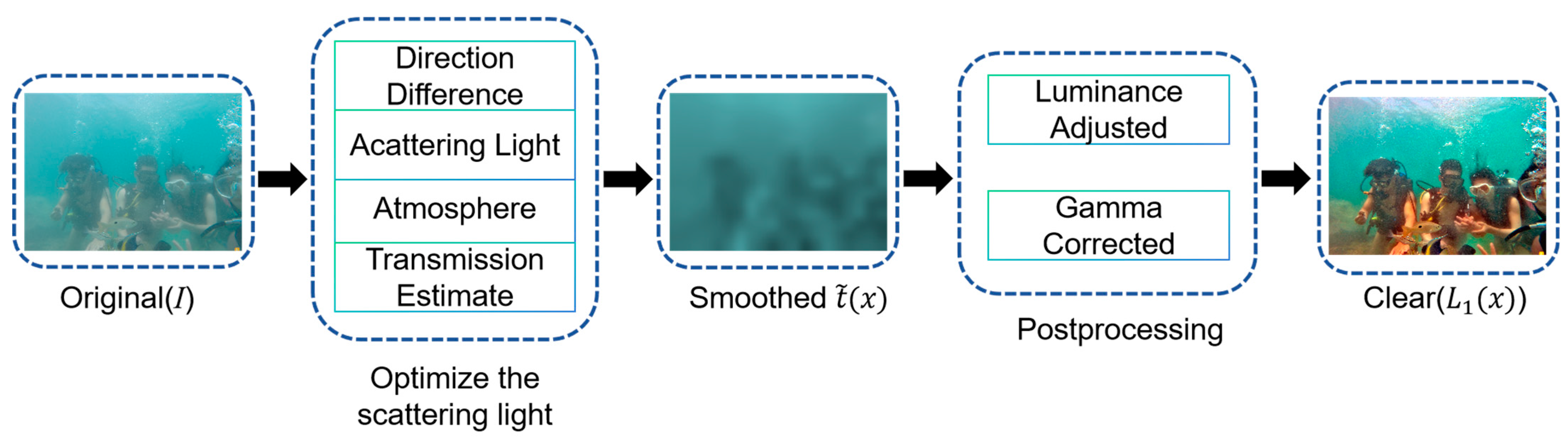

3.1. Framework Architecture

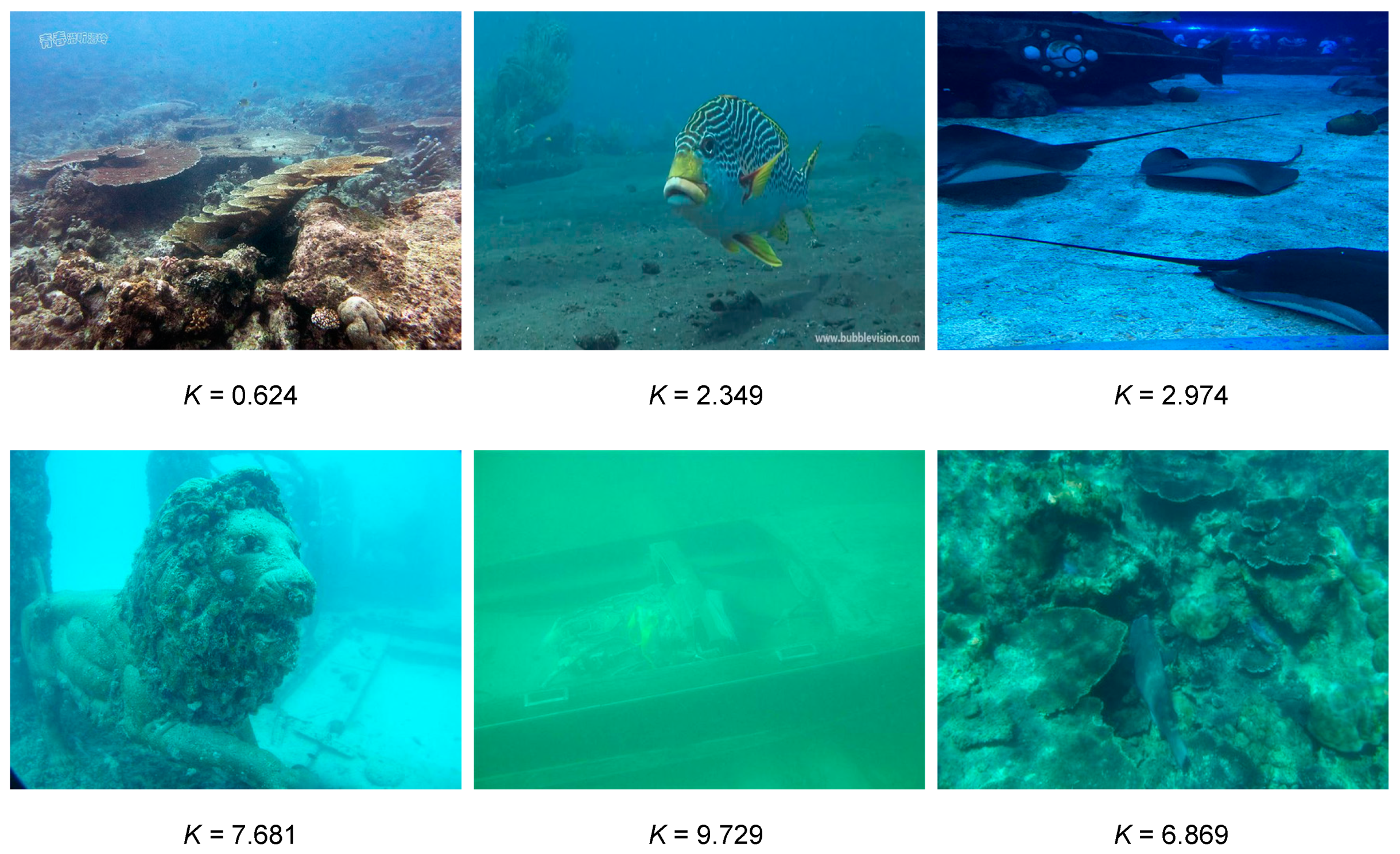

3.2. Color Deviation Detection Module

3.3. Color and White Balance Correction Module

3.4. Visibility Restoration Module

3.5. Comparative Enhancement Module

3.6. Fusion Module

3.6.1. Weighted Design Based on Pixel Intensity

3.6.2. Weighted Design Based on Global Gradient

4. Experiment

4.1. Experimental Details

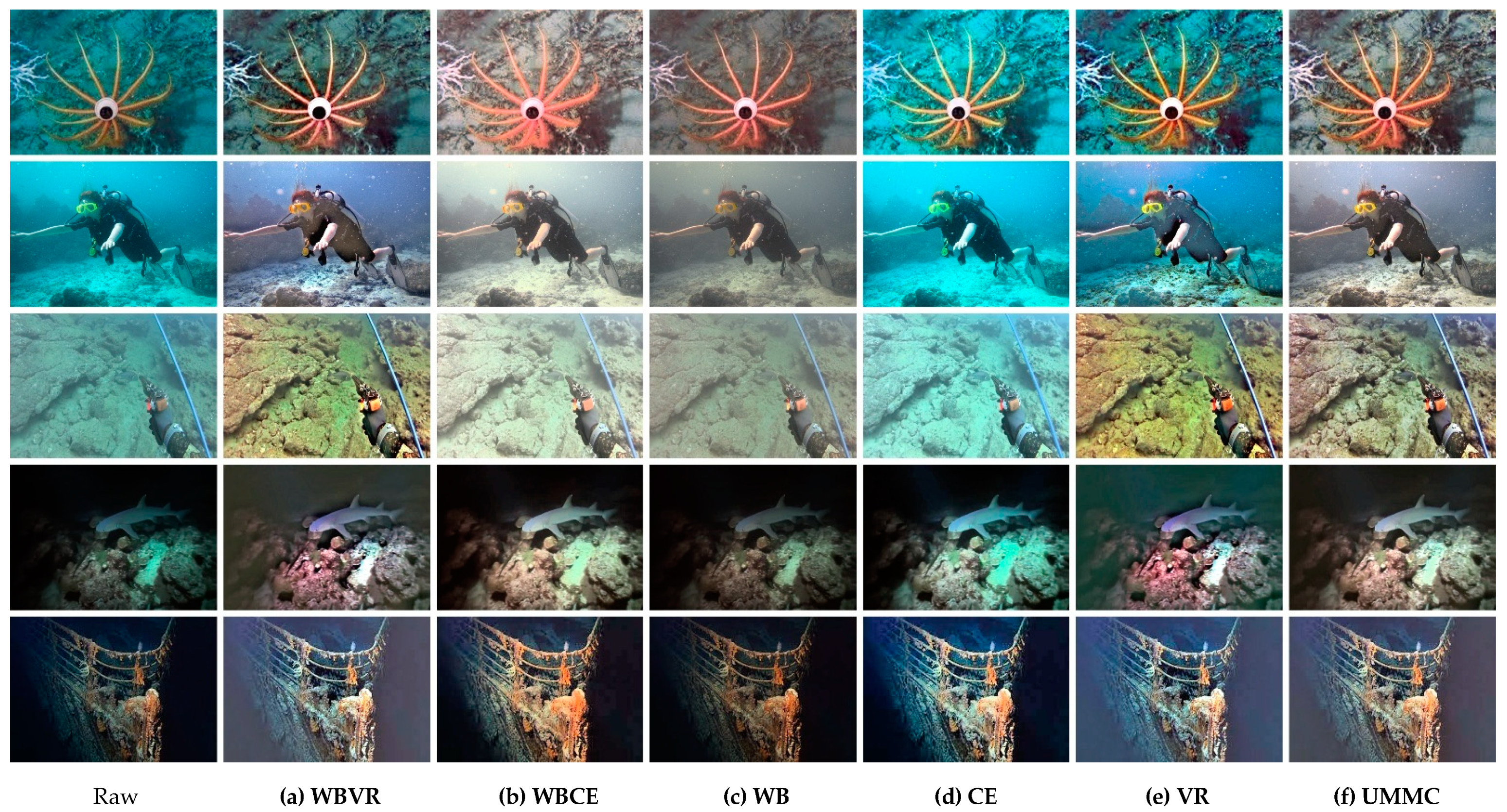

4.1.1. Comparative Methods

4.1.2. Metrics

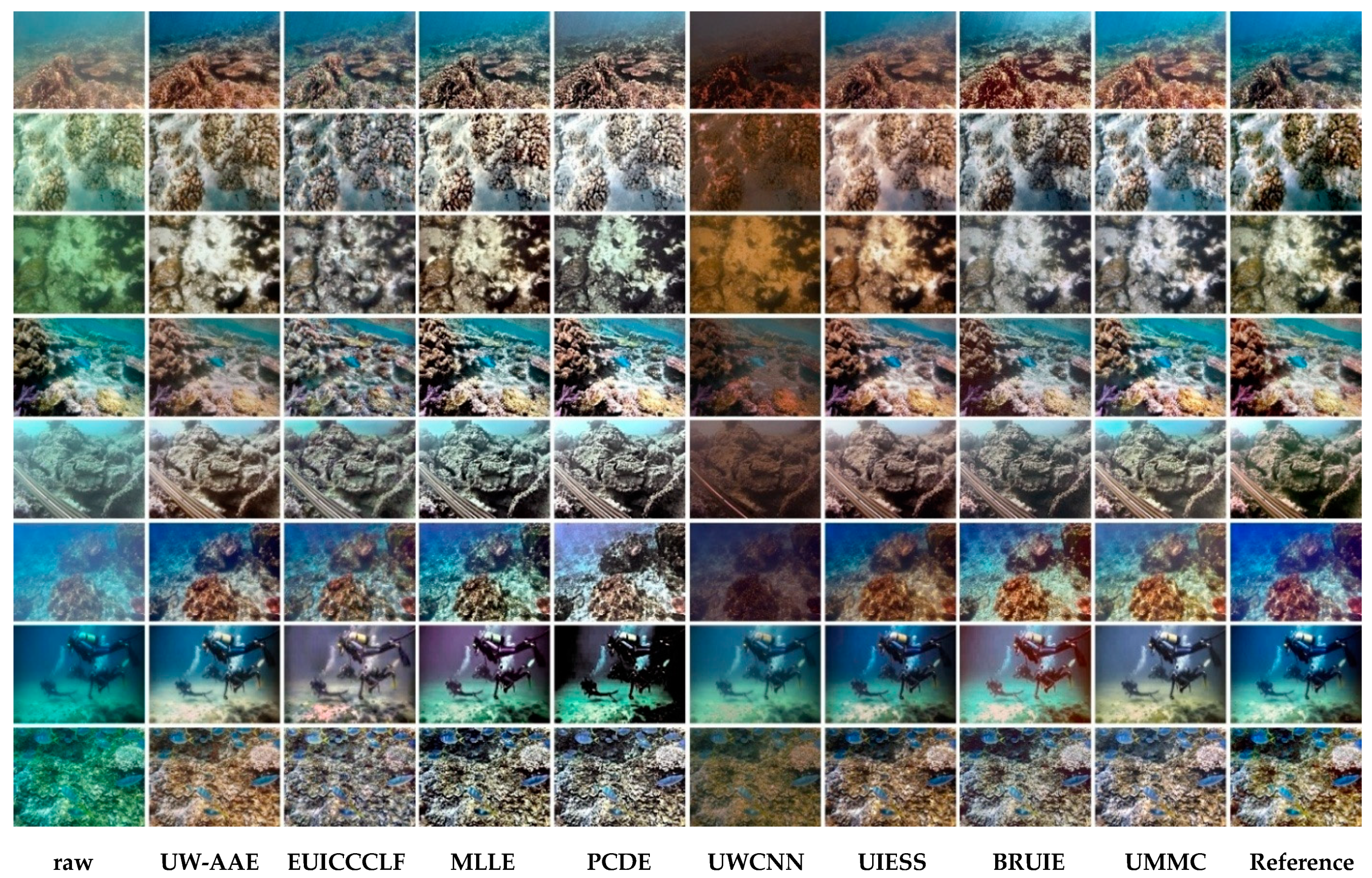

4.2. UIEB Dataset Evaluation

4.3. SUID Dataset Evaluation

4.4. Keypoint Matching

4.5. Ablation Study

4.6. Parameters and FLOPs

4.7. Summary

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement


| Methods | NIQE | UCIQE | UIQM |
|---|---|---|---|
| UW-AAE | 15.705 | 0.789 | 115.897 |
| EUICCCLF | 15.406 | 0.704 | 116.363 |
| MLLE | 12.771 | 2.475 | 114.243 |
| PCDE | 14.085 | 1.453 | 126.915 |
| UWCNN | 15.328 | 0.897 | 69.118 |
| UIESS | 16.896 | 1.059 | 99.772 |
| BRUIE | 12.970 | 0.812 | 115.0823 |
| UMMC | 12.612 | 2.532 | 124.220 |

| Methods | PSNR | SSIM |
|---|---|---|
| UW-AAE | 9.679 | 0.361 |
| EUICCCLF | 16.125 | 0.786 |
| MLLE | 18.010 | 0.752 |
| PCDE | 14.703 | 0.636 |
| UWCNN | 16.162 | 0.792 |
| UIESS | 16.071 | 0.866 |
| BRUIE | 17.422 | 0.758 |
| UMMC | 18.258 | 0.824 |
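For readers unfamiliar with the PSNR column in the table above, the following sketch shows the standard definition, PSNR = 10 log10(MAX² / MSE), computed against a reference image. It is a generic illustration only: the evaluation code behind the reported numbers is not shown in this document, and the function name `psnr` and the 8-bit default `max_value=255.0` are assumptions.

```python
import numpy as np


def psnr(reference, test, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between a reference image and a
    test image of the same shape. Higher values mean the test image is
    closer to the reference."""
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    mse = np.mean((ref - tst) ** 2)  # mean squared error over all pixels
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

Because PSNR requires a reference image, it applies only to datasets that provide one, unlike no-reference scores such as NIQE.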

| Methods | Left Match Point | Right Match Point |
|---|---|---|
| Raw | 1110 | 1128 |
| UW-AAE | 2367 | 2341 |
| EUICCCLF | 4149 | 4216 |
| MLLE | 3132 | 3104 |
| PCDE | 4031 | 4054 |
| UWCNN | 258 | 233 |
| UIESS | 1637 | 1599 |
| BRUIE | 4260 | 4260 |
| UMMC | 3562 | 3474 |

| Methods | NIQE | UCIQE | UIQM |
|---|---|---|---|
| WBVR | 15.254 | 0.782 | 104.244 |
| WBCE | 14.332 | 0.633 | 104.375 |
| WB | 16.417 | 0.703 | 100.291 |
| CE | 12.979 | 1.757 | 113.581 |
| VR | 12.778 | 0.784 | 104.663 |
| UMMC | 12.612 | 2.532 | 124.220 |

| Methods | Platform | #Param. | FLOPs | Time (s) | PSNR | SSIM |
|---|---|---|---|---|---|---|
| UW-AAE | TensorFlow | 148.77 M | 2805.34 G | 6.97 | 17.12 | 0.85 |
| EUICCCLF | MATLAB | - | - | 0.02 | 16.82 | 0.71 |
| MLLE | MATLAB | - | - | 0.08 | 16.26 | 0.64 |
| PCDE | MATLAB | - | - | 0.16 | 15.13 | 0.60 |
| UWCNN | PyTorch | 0.04 M | 2.61 G | 0.12 | 11.08 | 0.30 |
| UIESS | PyTorch | 4.2 M | 26.35 G | 0.43 | 23.37 | 0.73 |
| BRUIE | MATLAB | - | - | 0.15 | 15.13 | 0.60 |
| UMMC | MATLAB | - | - | 0.07 | 18.03 | 0.87 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jiang, Z.; Wang, H.; He, G.; Chen, J.; Feng, W.; Luo, G. Multi-Module Combination for Underwater Image Enhancement. Appl. Sci. 2025, 15, 5200. https://doi.org/10.3390/app15095200
