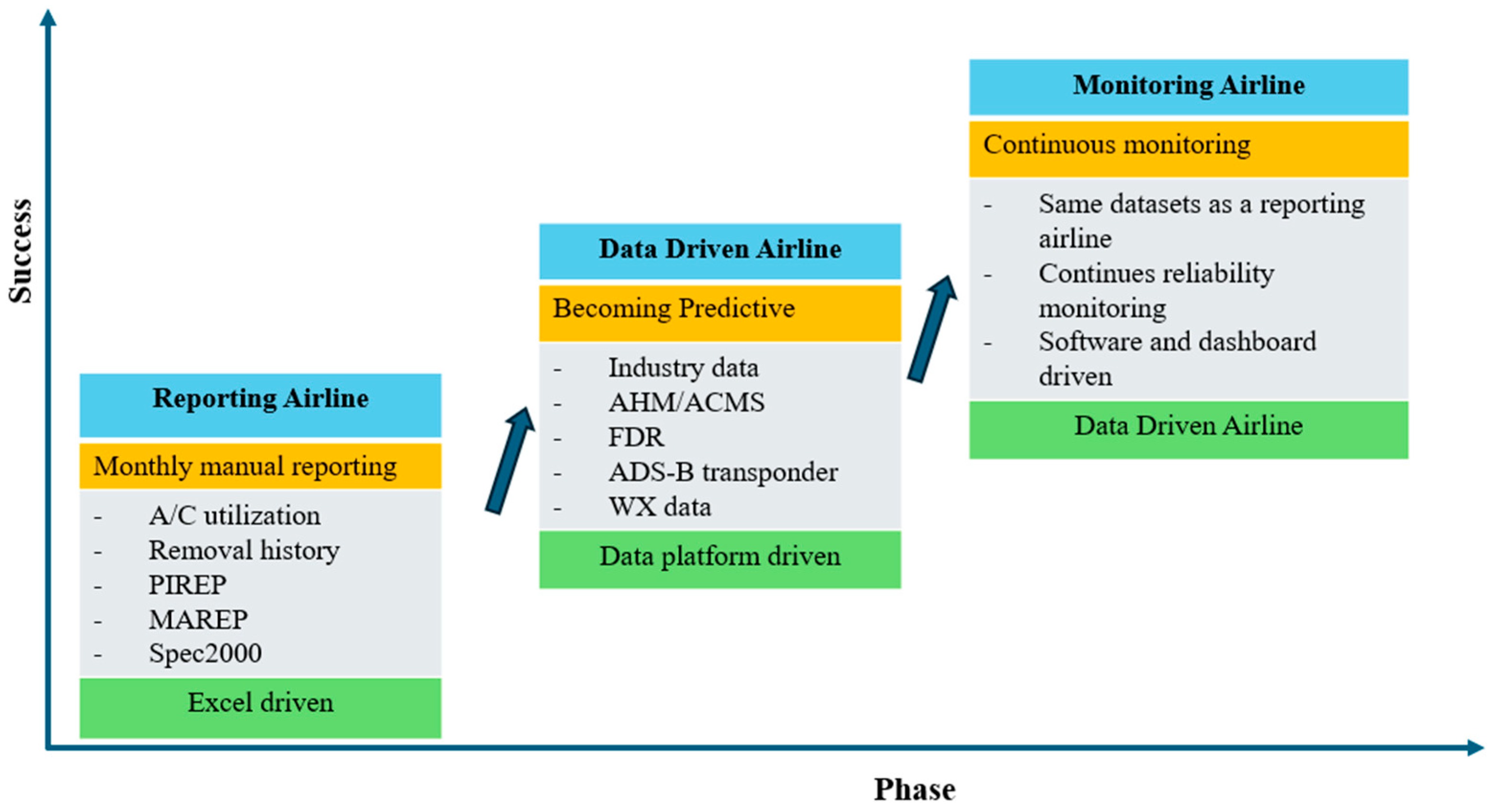

This section outlines the results of analyzing and predicting AOG events using advanced statistical tools in Python, including ARIMA, Holt–Winters, and Logistic Regression. Key variables such as part failure rates, maintenance compliance, and downtime were examined, revealing links between AOG occurrences and factors like maintenance strategies, spare parts availability, and environmental conditions.

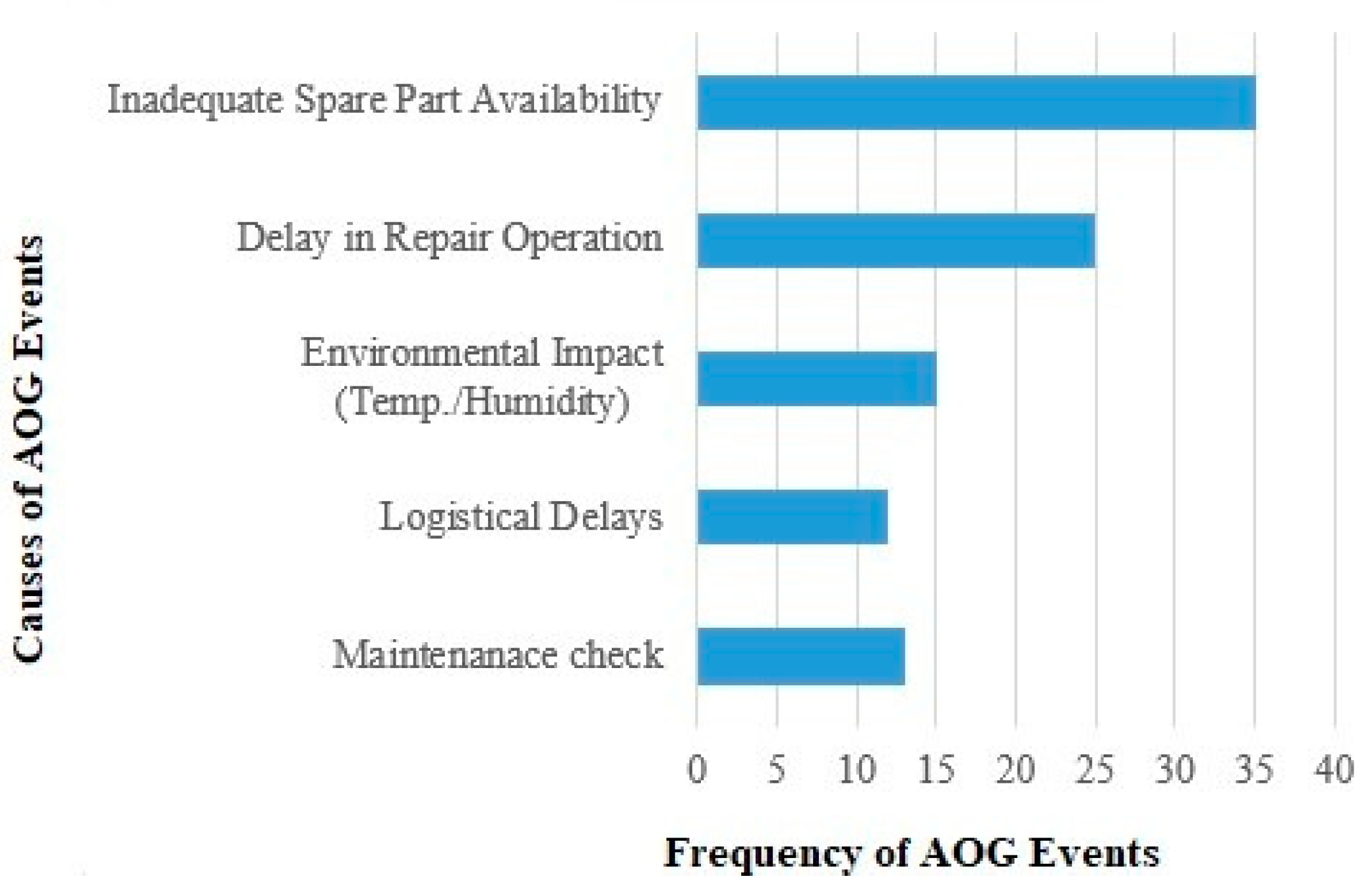

Key priorities were identified to improve coordination between maintenance and logistics teams and integrate real-time data into maintenance schedules. Visualizations like bar charts illustrated the leading causes of AOG incidents, including inadequate spare parts, repair delays, and environmental impacts.

The high frequency of spare part shortages indicates forecasting and inventory management inefficiencies. This suggests that airlines should adopt advanced predictive tools, such as Holt–Winters models, to anticipate demand better and optimize spare part distribution. Similarly, delays in repair operations point to gaps in maintenance processes, possibly caused by scheduling inefficiencies or resource limitations. Addressing these delays through enhanced coordination between maintenance and supply chain teams would significantly improve response times and reduce AOG occurrences. The chart also emphasizes the role of Preventive Maintenance Compliance (PMC) in mitigating AOG events. Airlines that adhere to strict maintenance schedules typically experience fewer AOG incidents, as timely servicing prevents unexpected failures.

6.1. Survey Observations and Results

For this study, the authors explored the research question through a structured survey administered via Google Forms. The survey participants were from airline logistics, supply chain, and engineering departments. The goal was to identify key issues in spare parts management, troubleshooting processes, and maintenance practice delays resulting in longer AOG events. To gather data for the research, a probability sampling method was used. The questionnaire was prepared and organized in the following format:

- Open-ended questions and closed-ended questions, the latter requiring the participant to select an answer from a set of possibilities offered by the interviewer.
- Likert scale questions:

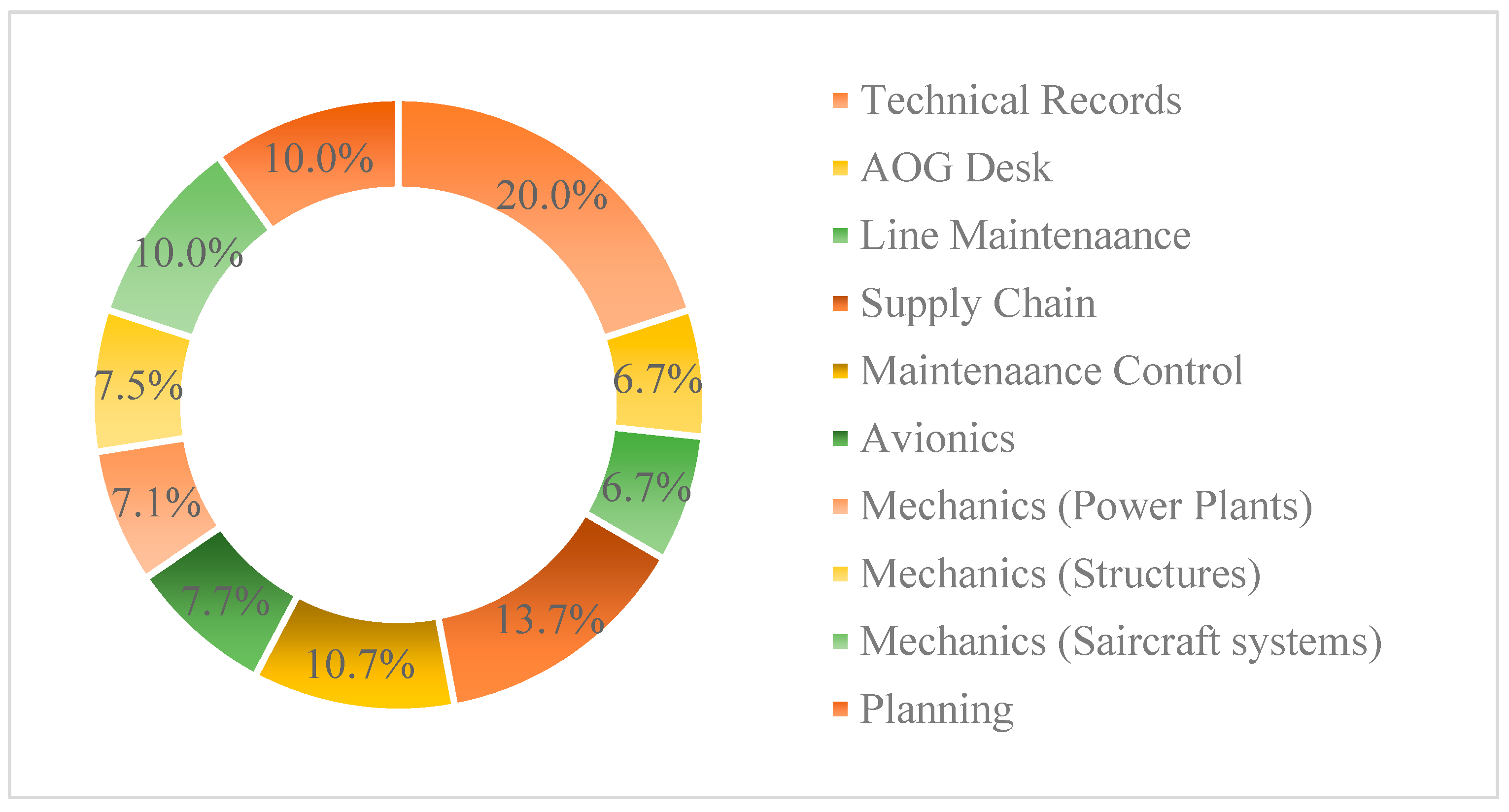

The questionnaire consisted of 14 questions. The main objective was to investigate and categorize the challenges the airline’s engineering department faced. Once identified, these challenges were ranked based on their impact on performance monitoring. The analysis was carried out using a database with Microsoft Excel representations. To provide an overview of the demographics, the authors categorized respondents by their position, department, and availability. The chart (Figure 7) depicts all participating departments included in the survey. The survey results reveal varying satisfaction levels with the spare parts inventory system across departments. The highest participation came from Technical Records (20%), followed by Supply Chain and Maintenance Control Center (MCC), at 16.7% each. Departments like Planning and System contributed 10% each, while AOG Desk, Line Maintenance, and Avionics accounted for 6.7% each. The lowest participation came from Power Plants and Structures, at 3.3% each.

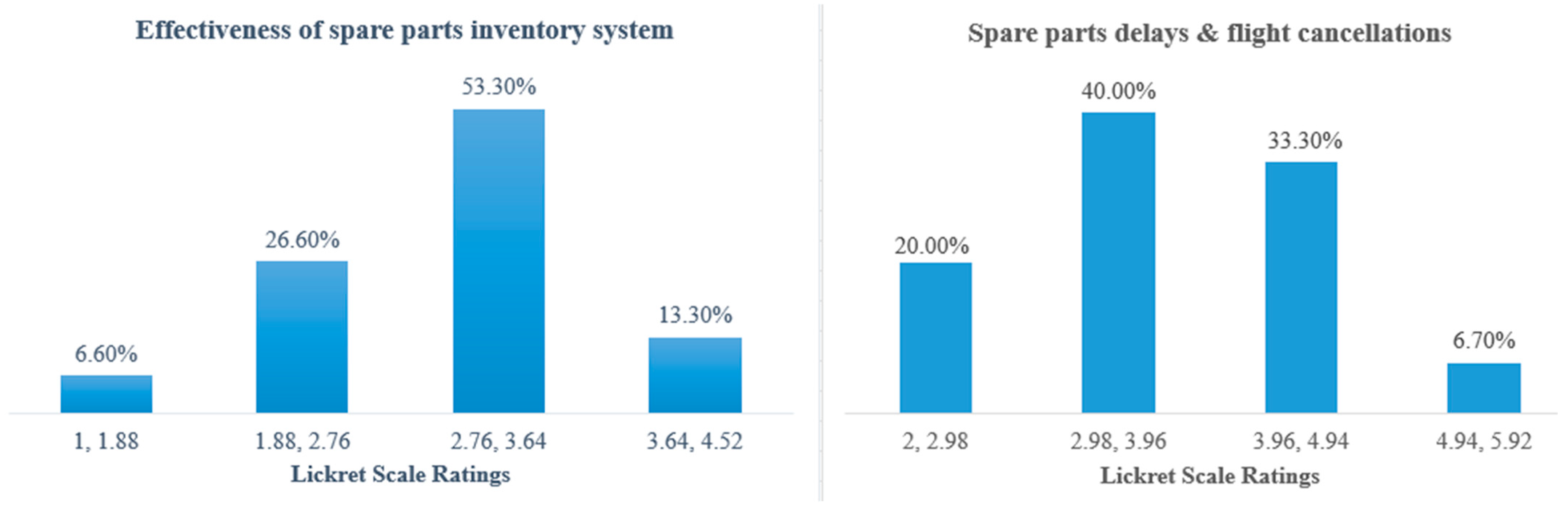

A histogram (Figure 8) was generated to assess the system’s effectiveness, categorized into four rating ranges. The lowest range (1.0–1.9) revealed significant dissatisfaction, particularly from MCC and the AOG Desk, with 50% each rating the system as ineffective in meeting operational needs. The 2.0–2.9 range showed moderate ratings, with MCC (37.5%) and smaller contributions from Technical Records, Line Maintenance, Planning, System, and AOG Desk (12.5% each), indicating partial adequacy but persistent challenges. Most responses fell in the 3.0–3.9 range, where Technical Records (25%) and Supply Chain (18.8%) led. This suggests that some departments find the system moderately effective, although inconsistencies remain. Lower participation from Power Plants and Structures (6.2% each) indicates varied performance. The highest range (4.0–4.5) showed Supply Chain (50%) and Technical Records (25%) reporting strong satisfaction, reflecting the system’s effectiveness for inventory logistics teams.

While the spare parts inventory system performs well for logistics-focused departments, key operational areas like MCC and the AOG Desk report significant shortcomings. This highlights the need for targeted improvements to address critical gaps, enhance reliability, and meet operational demands across all departments.

The survey results (Figure 9) reveal varying satisfaction levels with SmartLynx’s spare parts inventory system, a long-standing challenge contributing to Aircraft On-Ground (AOG) events and operational delays. In the lowest range (1.0–1.9), labeled “Never”, departments like the Maintenance Control Center (MCC) and AOG Desk reported significant dissatisfaction, highlighting the system’s inability to meet critical needs. In the 2.0–2.9 range, labeled “Rarely”, MCC again flagged ongoing challenges, with slight improvements noted by other departments. Most responses fell into the 3.0–3.9 range, labeled “Sometimes”, where Technical Records and Supply Chain departments indicated moderate satisfaction, though system inconsistencies persist. In the 4.0–5.0 range, labeled “Often” or “Always”, Supply Chain reported strong satisfaction, reflecting its relative success in managing logistics.

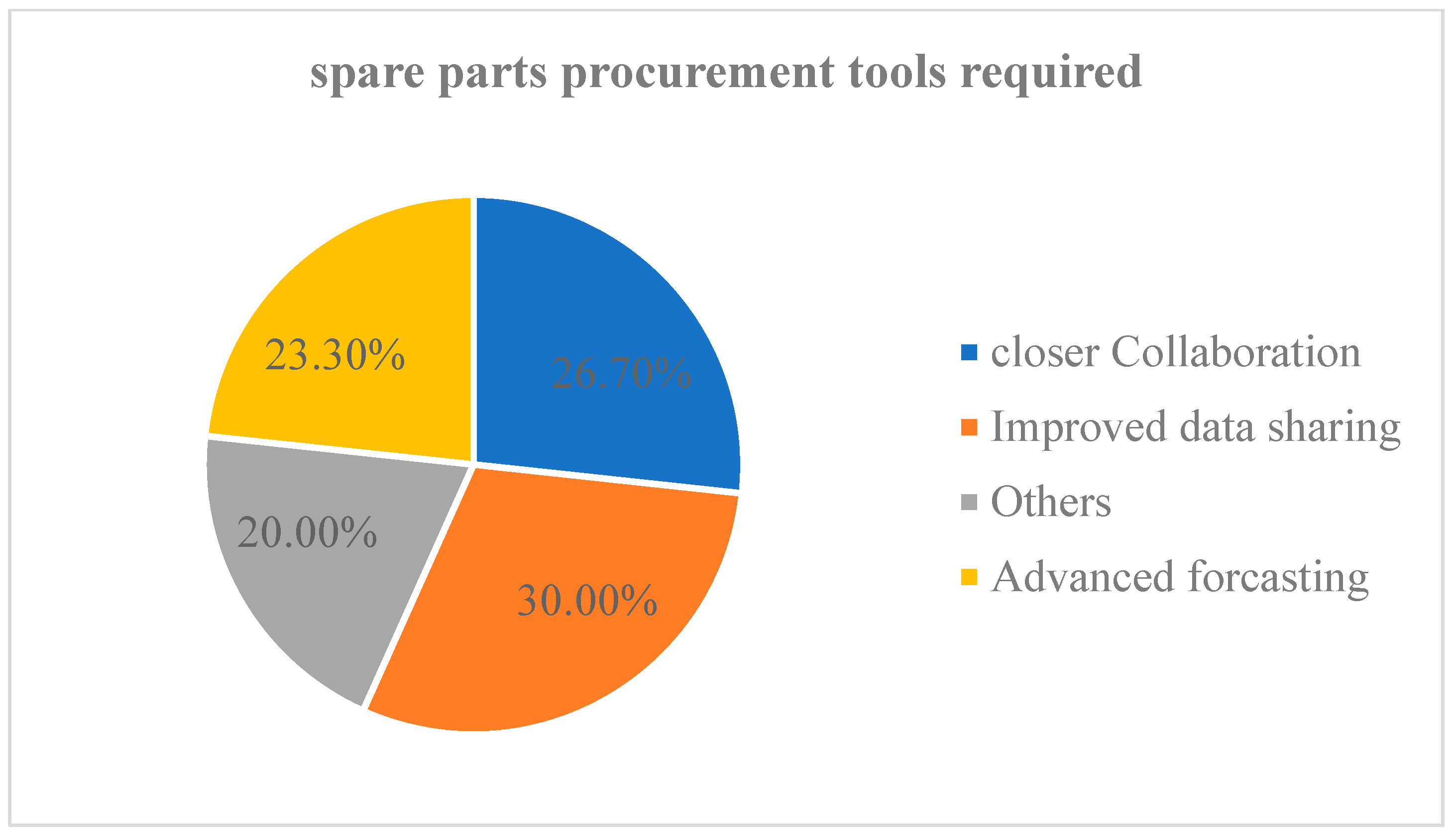

The pie chart (see Figure 9) shows that Improved Data Sharing ranked as the top priority (30%), stressing the need for better communication across departments. Closer Collaboration with Suppliers followed at 26.7%, emphasizing the importance of reliable partnerships. Advanced Forecasting Tools accounted for 23.3%, highlighting the demand for predictive analytics to anticipate spare part needs. The remaining 20% included tailored solutions specific to departmental needs. Technical Records contributed the highest participation at 20%, reflecting its central role in compliance and maintenance records. MCC and Supply Chain each accounted for 16.7%, underscoring their importance in logistics and operational continuity. The findings highlight the need for SmartLynx to focus on enhancing data sharing, strengthening supplier relationships, and improving forecasting strategies to tackle spare parts challenges and reduce AOG events effectively.

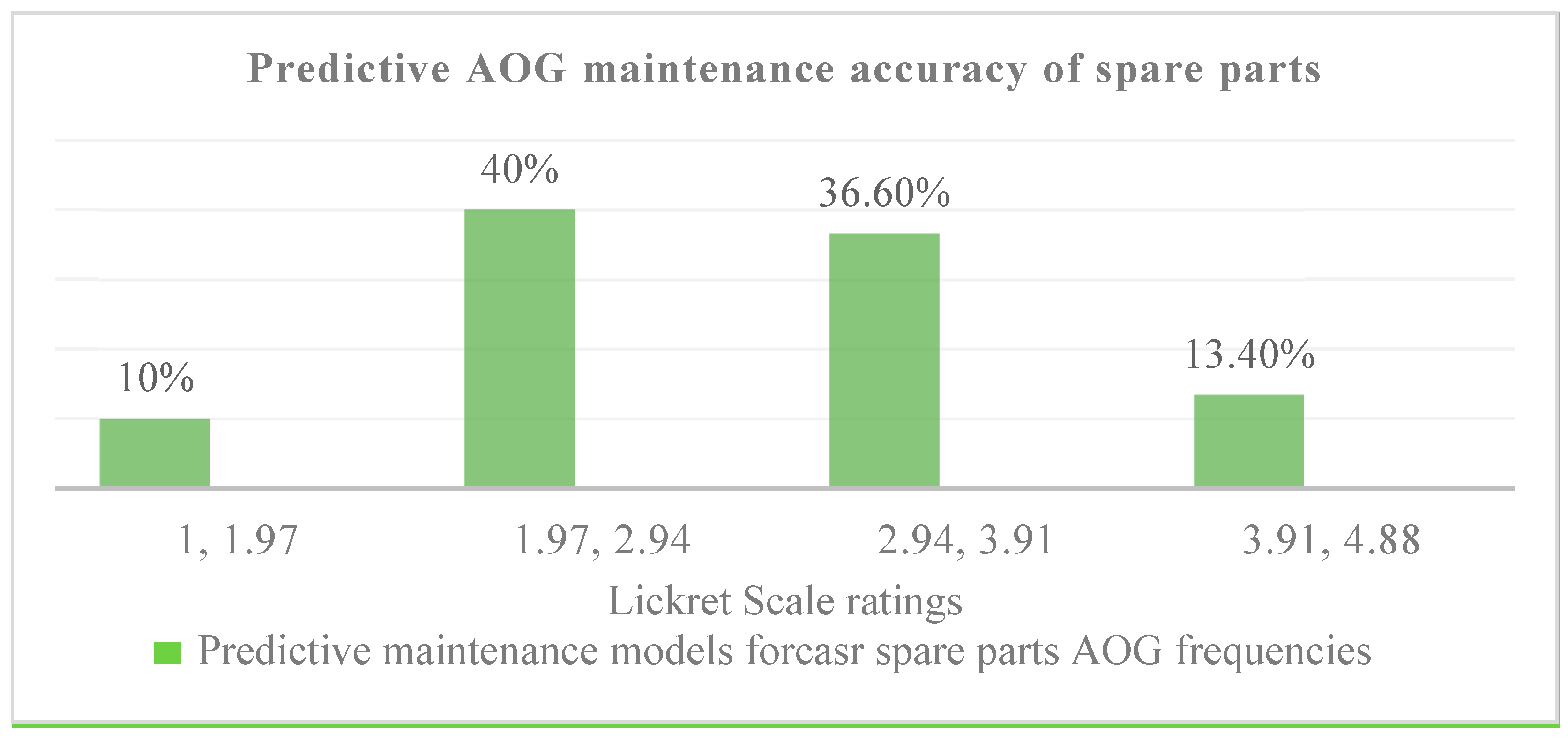

As a continuation of the previous analysis on spare part procurement tools, another histogram (Figure 10) was used to evaluate the accuracy of predictive maintenance in spare parts, rated by respondents on a Likert scale from 1 (Very Inaccurate) to 4 (Very Accurate). The survey results reveal that 40% of responses fall within the 2.0–2.9 (Somewhat Inaccurate) range, highlighting that many departments find the predictive maintenance system below expectations. Notably, departments like the Supply Chain and Maintenance Control Center contributed significantly to this category, reflecting their challenges in ensuring timely spare part availability.

The next most common rating, 3.0–3.9 (Accurate), garnered 36.7% of responses, indicating moderate satisfaction, with notable contributions from Technical Records, Planning, and System departments, which rely heavily on accurate forecasting. The highest rating, 4.0–4.5 (Very Accurate), accounted for 13.3%, mainly from Technical Records and Supply Chain, showing that only a few departments find the system highly reliable. Conversely, 10% of responses were in the 1.0–1.9 (Very Inaccurate) range, largely from the Structures and Maintenance Control Center, emphasizing their struggles with inaccurate forecasting. These results indicate that while some progress has been made in enhancing predictive maintenance capabilities, there are clear gaps in accuracy and reliability that need addressing. For SmartLynx, this reflects an ongoing need to refine predictive tools and processes so that spare parts are anticipated and available more effectively, thereby avoiding frequent AOG occurrences.

6.2. Implementation of Forecasting Approach Performances

The survey and data analysis provided critical insights into the effectiveness of predictive maintenance systems and their role in optimizing spare part procurement processes. This work emphasizes the value of advanced forecasting methods, such as time series analysis and binary classification, in mitigating operational inefficiencies and reducing AOG events.

A key component of this study involved implementing Python-based forecasting approaches. The analysis utilized a robust dataset containing 1000 entries derived from airlines’ operational and maintenance records spanning various fleet types, including the Airbus A320 and A330 and Boeing 737–800.

The dataset included the following critical variables:

AOG_Incident: A binary indicator (0 or 1) of whether an AOG event occurred.

Date: Timestamp for recorded events.

Part_Failure_Rate: Component failure rates on a scale from 0.1 to 4.9.

Maint_Sched_Compliance: Maintenance compliance percentages are used as a proxy for adherence to scheduled checks.

Total_Flight_Hours: Aggregated flight hours logged.

Downtime_Hours: Hours of aircraft inactivity due to maintenance or AOG events.

Delay_Time_Hours: Delays caused by operational or maintenance factors.

Num_Failures: Count of failures within specific timeframes.

This dataset was meticulously curated from historical operational metrics and interviews with aviation maintenance experts. Each entry reflects real-world data, capturing the complexity of operational dynamics. The data were further enriched through descriptive and statistical analyses to ensure accuracy and relevance for forecasting model evaluations.

The analysis highlights key performance trends based on 1000 entries and eight operational variables. A mean part failure rate of 2.56 indicates moderate reliability, though variability across parts (standard deviation: 0.1–5.4) points to the need for targeted quality control. Low AOG incident variability (standard deviation: 0.399) reflects consistent performance, supported by the airline’s proactive strategies.

However, higher variability in part failure rate (1.424) and maintenance compliance (1423.06) suggests disparities tied to aircraft age, maintenance schedules, and operating conditions. Older or harshly operated aircraft show higher failure rates, emphasizing the importance of standardized maintenance and resource optimization. Fleet usage varies significantly (total flight hours standard deviation: 8205.95), influenced by route demands and aircraft roles. Moderate variability in downtime (11.76) and failures (6.12) points to regional factors like infrastructure and repair capacity, reinforcing the need for predictive maintenance and supply chain improvements. Consistent delay management (delay time standard deviation: 2.39) demonstrates effective disruption handling, though rare outliers signal room for better contingency planning. Overall, these findings stress the value of standardized processes, predictive analytics, and resource management in boosting efficiency.

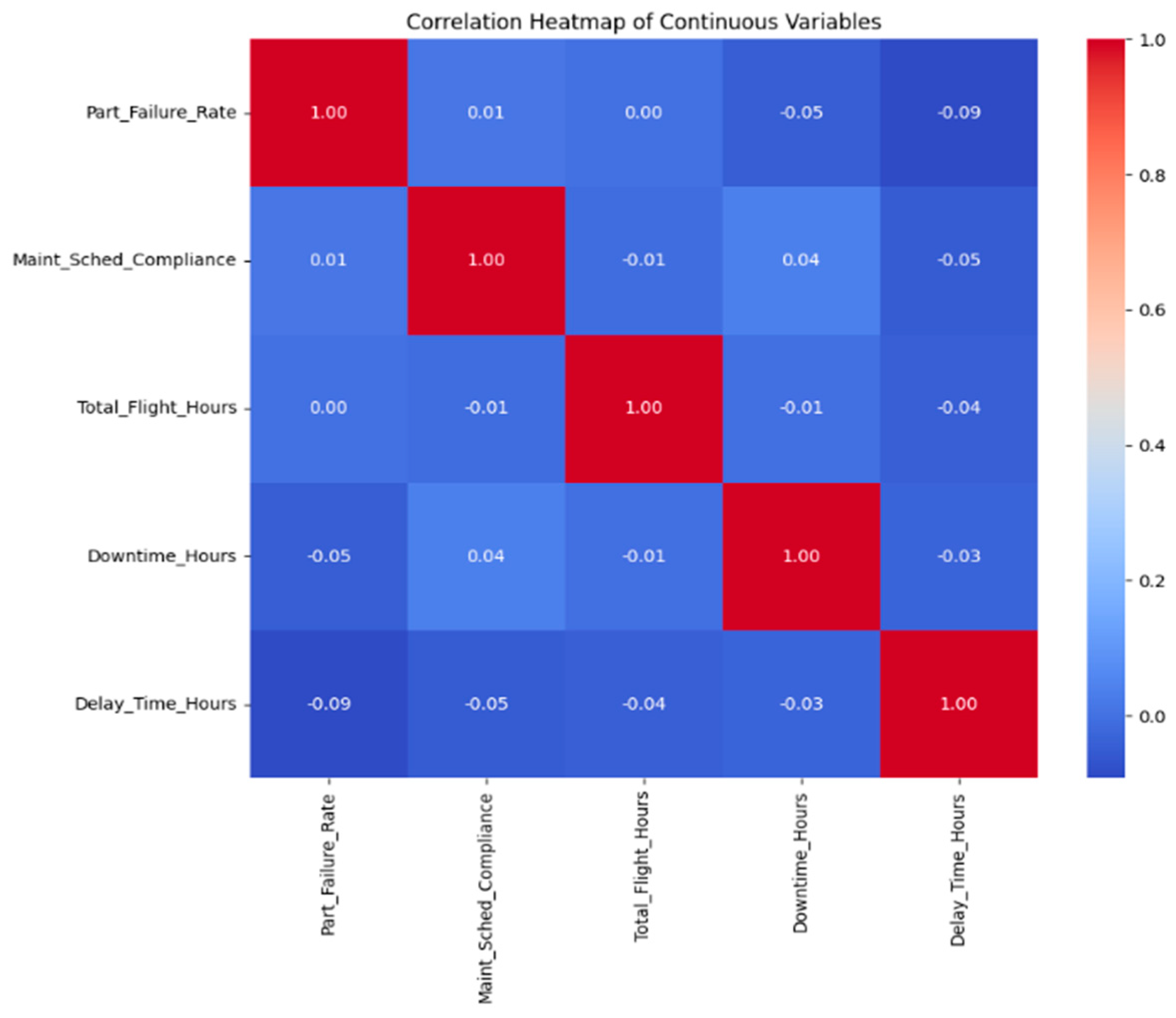

The correlation heatmap (Figure 11) provides a clear overview of how key operational variables such as part failure rates, maintenance compliance, total flight hours, downtime, and delay time interact. These relationships offer actionable insights into optimizing maintenance and operational practices. One significant finding is the positive correlation (0.46) between part failure rates and downtime hours. This suggests that downtime for repairs or replacements also rises as part failures increase. This highlights the importance of proactive maintenance strategies, such as preemptive part replacements based on predictive models and improving component quality through stricter manufacturing standards. Addressing these areas could reduce unexpected failures and minimize downtime.

The heatmap also reveals a very weak positive correlation (0.04) between maintenance compliance and downtime hours. A coefficient this close to zero indicates almost no linear relationship, so it neither confirms nor rules out that better adherence to maintenance schedules reduces repair times; consistent and timely maintenance remains valuable for preventing larger operational disruptions. Interestingly, total flight hours correlate little with downtime hours (−0.01) or delay time hours (−0.04). This indicates that the amount an aircraft is flown does not significantly impact its downtime or delays. Instead, other factors such as component reliability, supply chain efficiency, and operational practices play a more substantial role. Finally, the correlations between delay time hours and part failure rates (−0.09) and maintenance compliance (−0.05) are weakly negative, meaning that delay times are, if anything, marginally lower when failure rates or compliance are higher. These relationships are minimal and require further exploration to understand the underlying causes.

The analysis shows that maintenance compliance has a low positive correlation with downtime hours (0.04), which is too small to indicate a meaningful effect of scheduled maintenance on downtime and is far weaker than the link between part failure rate and downtime (0.46). Similarly, the correlation between part failure rate and maintenance compliance is very weak (0.01), suggesting that following maintenance schedules does not directly prevent part failures, as these failures often occur unexpectedly.

A weak negative correlation exists between delay time hours and part failure rates (−0.09) and between delay time hours and maintenance compliance (−0.05). Neither relationship is strong enough to attribute delays to failure rates or compliance levels. This highlights that delays are influenced by multiple factors, including operational inefficiencies, external conditions, and logistical challenges, rather than just technical or maintenance-related issues.

Additionally, the minimal negative correlations among total flight hours and downtime (−0.01) and delay times (−0.04) indicate that the frequency of flight activity has little direct impact on downtime or delays. This suggests that aircraft reliability and operational efficiency depend more on maintenance practices and part quality than on the amount of flight time.
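For reference, each cell of a correlation heatmap is a Pearson coefficient. The following minimal sketch computes one in plain Python; the function name `pearson` and the sample values are illustrative assumptions and are not drawn from the study dataset.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical toy values, not taken from the study dataset.
part_failure_rate = [0.5, 1.2, 2.6, 3.1, 4.4]
downtime_hours = [2.0, 1.0, 9.0, 6.0, 14.0]

r = pearson(part_failure_rate, downtime_hours)
print(f"r = {r:.2f}, r^2 = {r * r:.2f}")
```

Squaring a coefficient gives the share of variance the two variables have in common under a linear fit: 0.46 corresponds to roughly 21%, whereas 0.04 corresponds to less than 0.2%.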

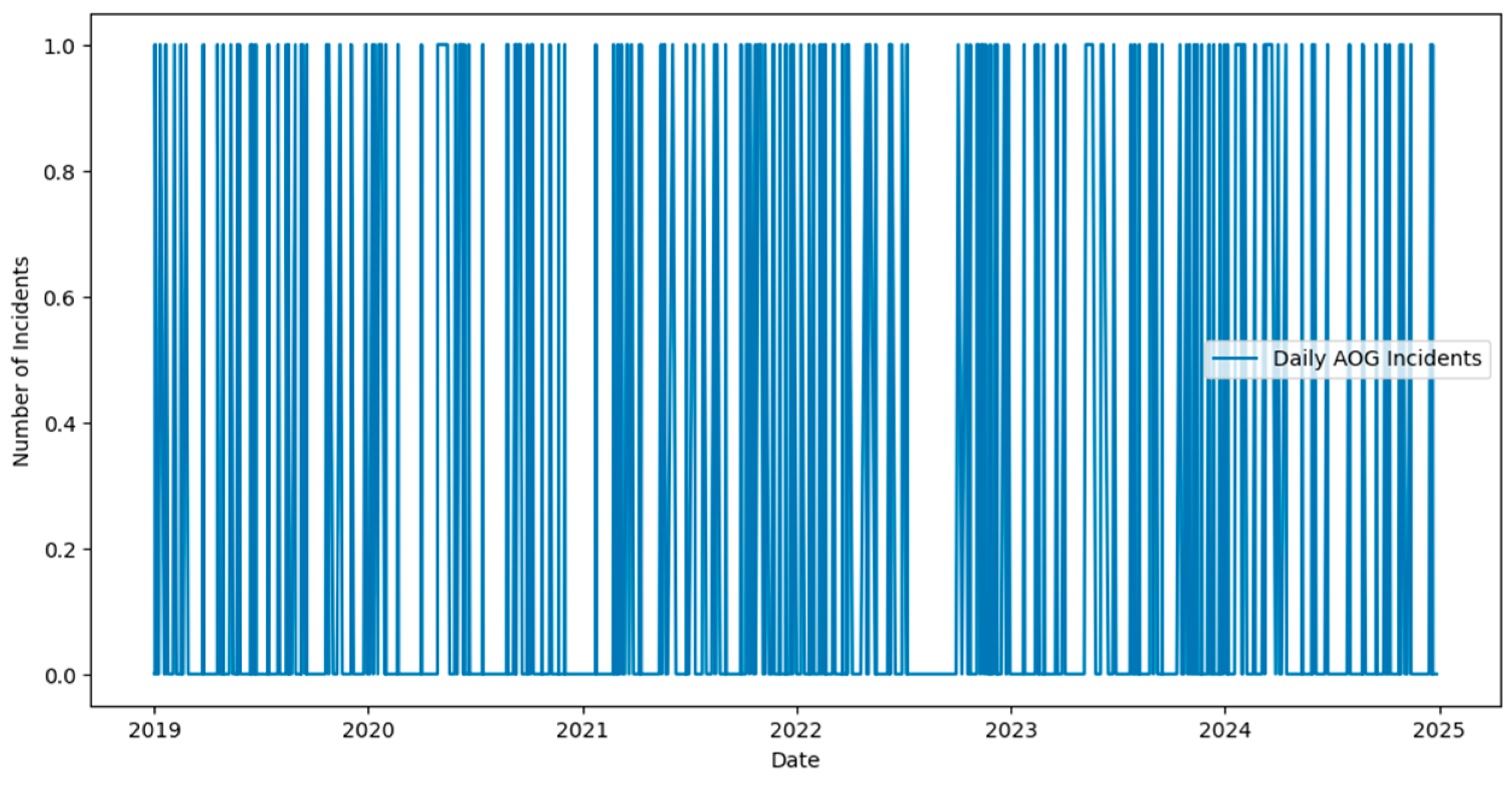

The AOG incidents analyzed over time show a consistently fluctuating number of incidents, with occasional sharp increases in frequency.

The plot (Figure 12) illustrates the daily Aircraft On-Ground (AOG) incidents from 2019 to 2024, clearly showing their frequency and distribution over time. Key insights from the visualization include the following:

Rare AOG Occurrences: Most data points are clustered at 0, showing that AOG incidents are infrequent on most days. This aligns with the dataset’s mean AOG value of 0.199, meaning only about 20% of days recorded any AOG events. This reflects SmartLynx’s effective maintenance and operational practices, keeping fleet disruptions to a minimum.

Sporadic Spikes: The plot shows occasional sharp increases in AOG incidents, corresponding to isolated disruptions. These spikes may result from seasonal factors, such as peak travel periods when the fleet is under more significant strain, or specific issues like fleet-wide maintenance challenges, adverse weather, or logistical delays.

Seasonal and Yearly Patterns: AOG incidents cluster more during specific times, such as mid-2022 and early 2024. These patterns may reflect cyclical trends related to operational demands, scheduled maintenance cycles, or external factors. Further quarterly analysis could provide deeper insights into these periodic fluctuations.

The visualization highlights the generally low frequency of AOG events while identifying patterns and spikes that can inform better planning and predictive maintenance strategies to prevent future disruptions.
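The reported mean of the binary AOG indicator (0.199) and its reported standard deviation (0.399) can be cross-checked against each other, because a 0/1 variable with mean p has a population standard deviation of sqrt(p(1 − p)). The short sketch below performs that check; the variable names are illustrative, and n = 1000 is the dataset size stated in the text.

```python
import math

p = 0.199   # reported mean of the binary AOG_Incident variable
n = 1000    # reported number of dataset entries

# Population standard deviation of a 0/1 variable with mean p.
population_std = math.sqrt(p * (1 - p))

# Sample standard deviation (n - 1 in the denominator), the usual software default.
sample_std = population_std * math.sqrt(n / (n - 1))

print(round(population_std, 3), round(sample_std, 3))
```

Both values round to 0.399, which agrees with the reported figure. For a binary indicator the spread is therefore fully determined by the incident rate and carries no additional information about operational consistency.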

Exploratory visualization: The count plot comparing AOG incidents across aircraft types (Figure 13) shows that the extent of these problems differs significantly between types. As one would expect, aircraft that have been operating for lengthy periods, like the A320, record higher incident levels, probably because their components are more degraded or more prone to design-related flaws. On the other hand, newer types, such as the B737 MAX 8, record fewer incidents because of enhanced engineering, better materials, and maintenance.

In this figure, the legend values represent the occurrence of Aircraft On-Ground (AOG) incidents.

- 0: Represents days or events where no AOG incident occurred (normal operational conditions).
- 1: Represents days or events where an AOG incident occurred (aircraft grounded due to maintenance or operational disruptions).

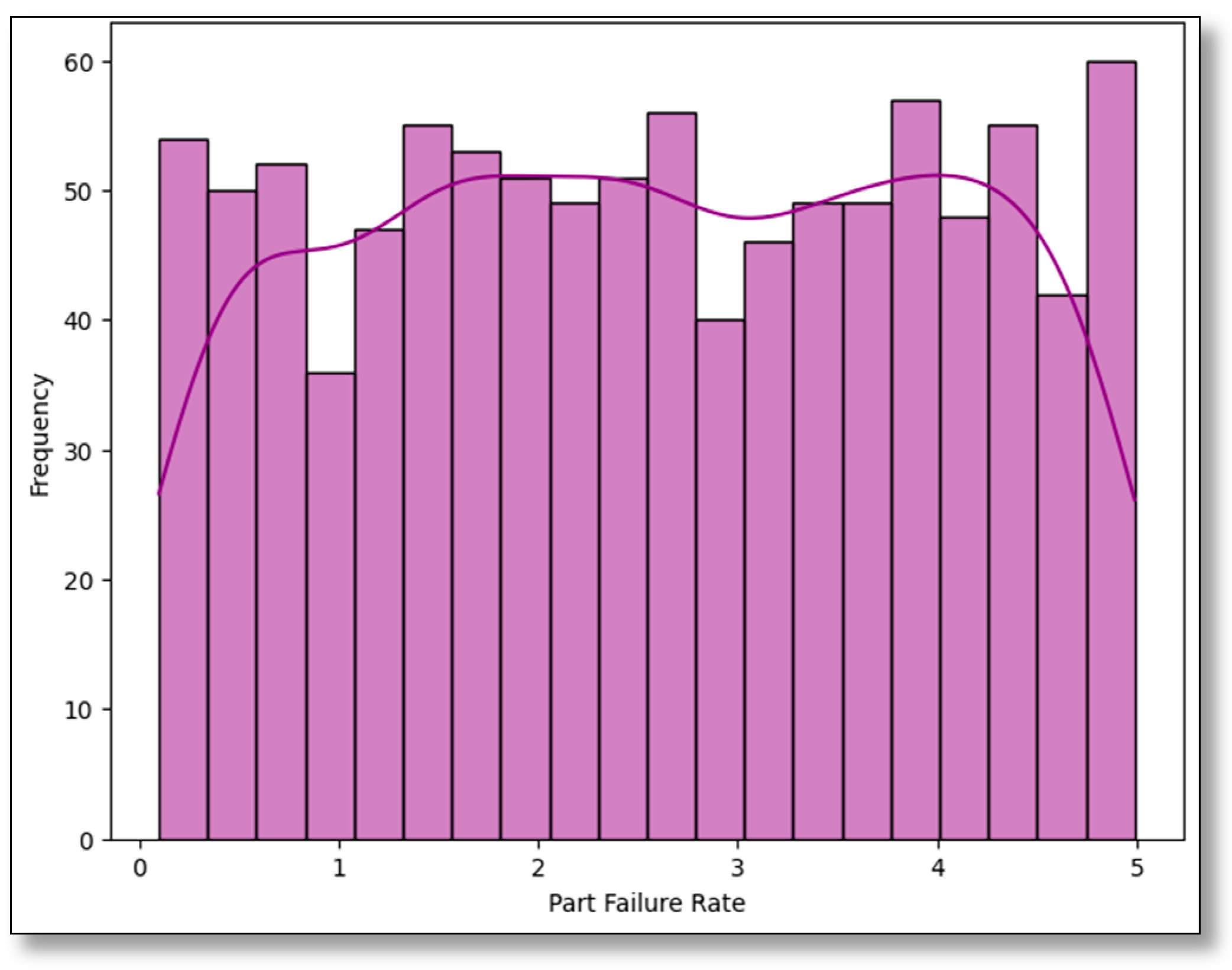

The frequency distribution of part failure rates is positively skewed, with most frequencies significantly close to the mean of 2.56 (Figure 14). Although most parts show reasonably low failure rates, several parts with relatively high rates are a concern.

Key Observations and Results:

Uniform Distribution:

- The histogram bars show an even distribution of part failure rates across the range of 0 to 5, with frequencies oscillating around 40 to 60 occurrences per bin.
- This uniform distribution suggests that part failures are distributed relatively evenly across the dataset, implying no specific dominance of low- or high-failure rates.

Peaks and Troughs:

- The KDE curve highlights minor peaks around the mid-range (2.5 to 3.5) and at the higher end (5). There is also a visible dip near the 1.0 range.
- These trends indicate that moderate to high part failure rates occur more frequently compared to extremely low rates, which might point to challenges in maintaining consistent part reliability.

Tail Behavior: The KDE curve dips sharply near the extreme ends (0 and 5), suggesting that very low or very high part failure rates are less common in comparison to the mid-range.

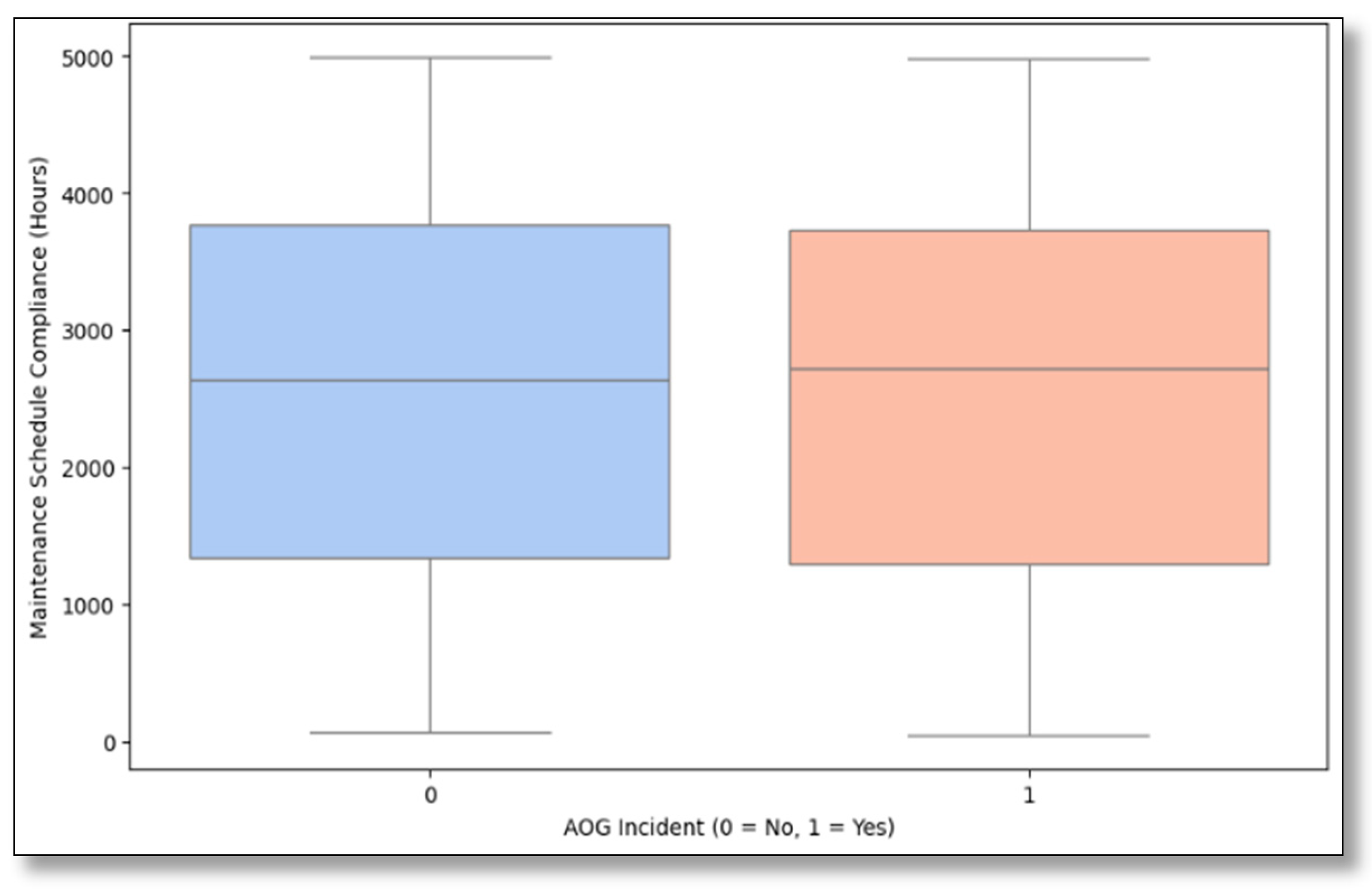

This boxplot (Figure 15) compares Maintenance Schedule Compliance (Hours) for two categories: days with no AOG incidents (labeled as 0) and days with AOG incidents (labeled as 1). The data provide valuable insights into how maintenance compliance correlates with AOG occurrences.

The boxplot provides insights into how maintenance adherence impacts AOG occurrences. The median compliance hours for both groups are similar, around 3000 h, indicating consistent maintenance efforts overall. However, there are notable differences in variability between the two groups.

Key Observations:

Median Maintenance Compliance: Slightly higher on non-AOG days, supporting the idea that better schedule adherence reduces the likelihood of AOG incidents.

IQR Differences: The narrower IQR for AOG days suggests more consistent compliance during these events, likely due to the urgency to return grounded aircraft to service.

Overlap in Distribution: Significant overlap between the two groups indicates that while maintenance compliance is a factor in AOG incidents, other factors like operational demands or environmental conditions also play a role.

Systemic Challenges: The overlap and variability highlight systemic issues, such as the unpredictability of part failures or supply chain delays, even when schedules are followed.

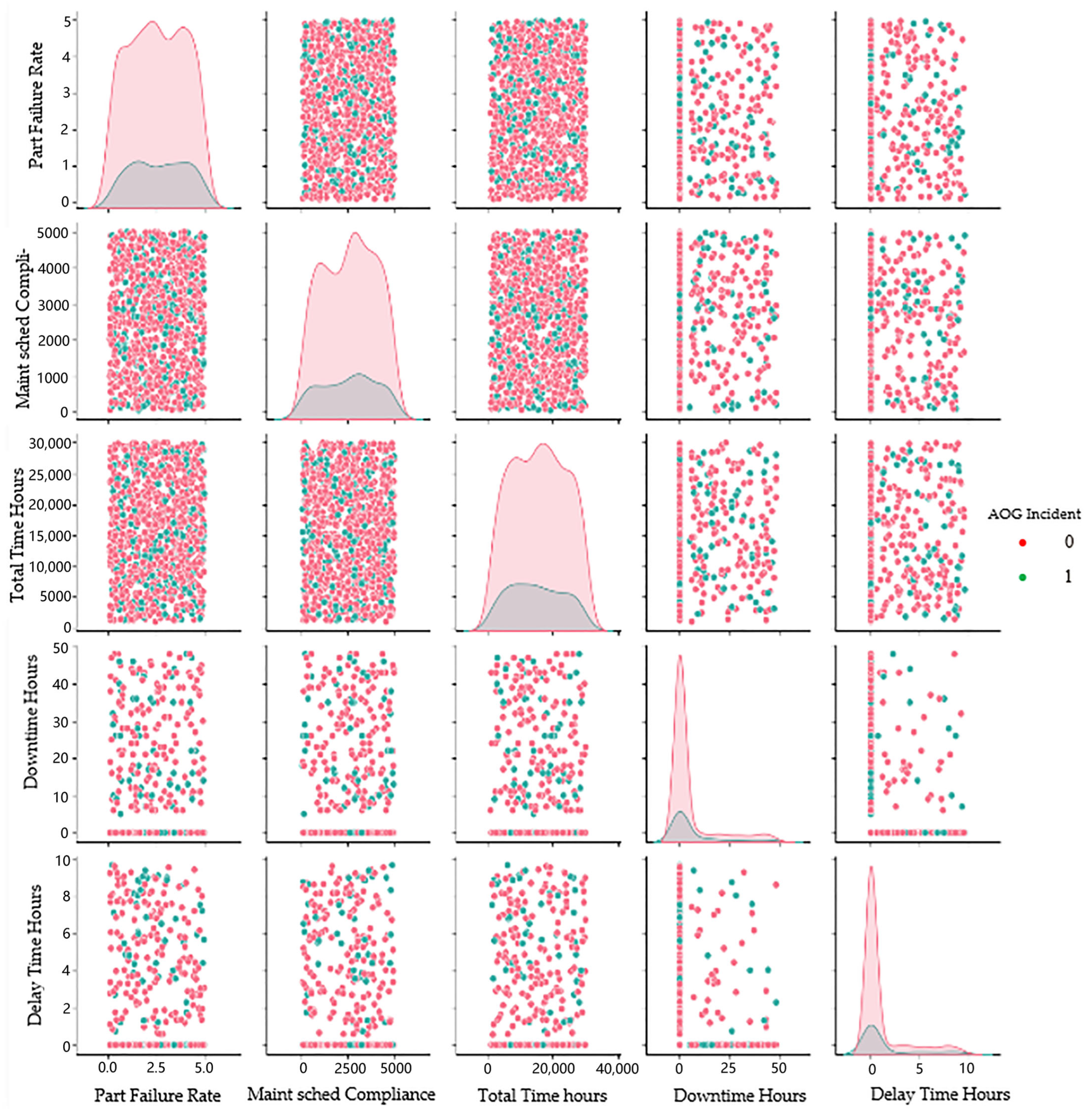

The pair plot (Figure 16) visually compares continuous variables related to AOG incidents, including part failure rate, Maintenance Schedule Compliance, total flight hours, downtime hours, and delay time hours. It highlights clear patterns and relationships that offer insights into the drivers of AOG events. One of the most noticeable trends is that AOG incidents are linked to higher part failure rates and increased downtime hours, indicating that frequent failures are a primary contributor to extended ground times. This reinforces the importance of using reliable components and adhering to maintenance schedules to minimize disruptions.

Maintenance compliance appears inversely related to AOG incidents, with poor compliance increasing the likelihood of these events. This suggests gaps in maintenance planning and highlights the need for more efficient workflows. While total flight hours has a minimal direct impact on downtime, aircraft with higher usage tend to experience more downtime when AOG incidents occur, likely due to accumulated wear and tear. Delays are strongly correlated with prolonged downtimes during AOG incidents, pointing to inefficiencies in resources or logistics, such as spare part shortages or staffing constraints. These patterns suggest that frequent failures, inadequate maintenance compliance, and logistical challenges are the main factors driving AOG events. Addressing these issues requires further analysis of outliers, such as cases with unusually high downtime or delay hours. Investigating these incidents using predictive models and historical records can help identify root causes, whether they stem from parts quality, inventory gaps, or procedural inefficiencies.

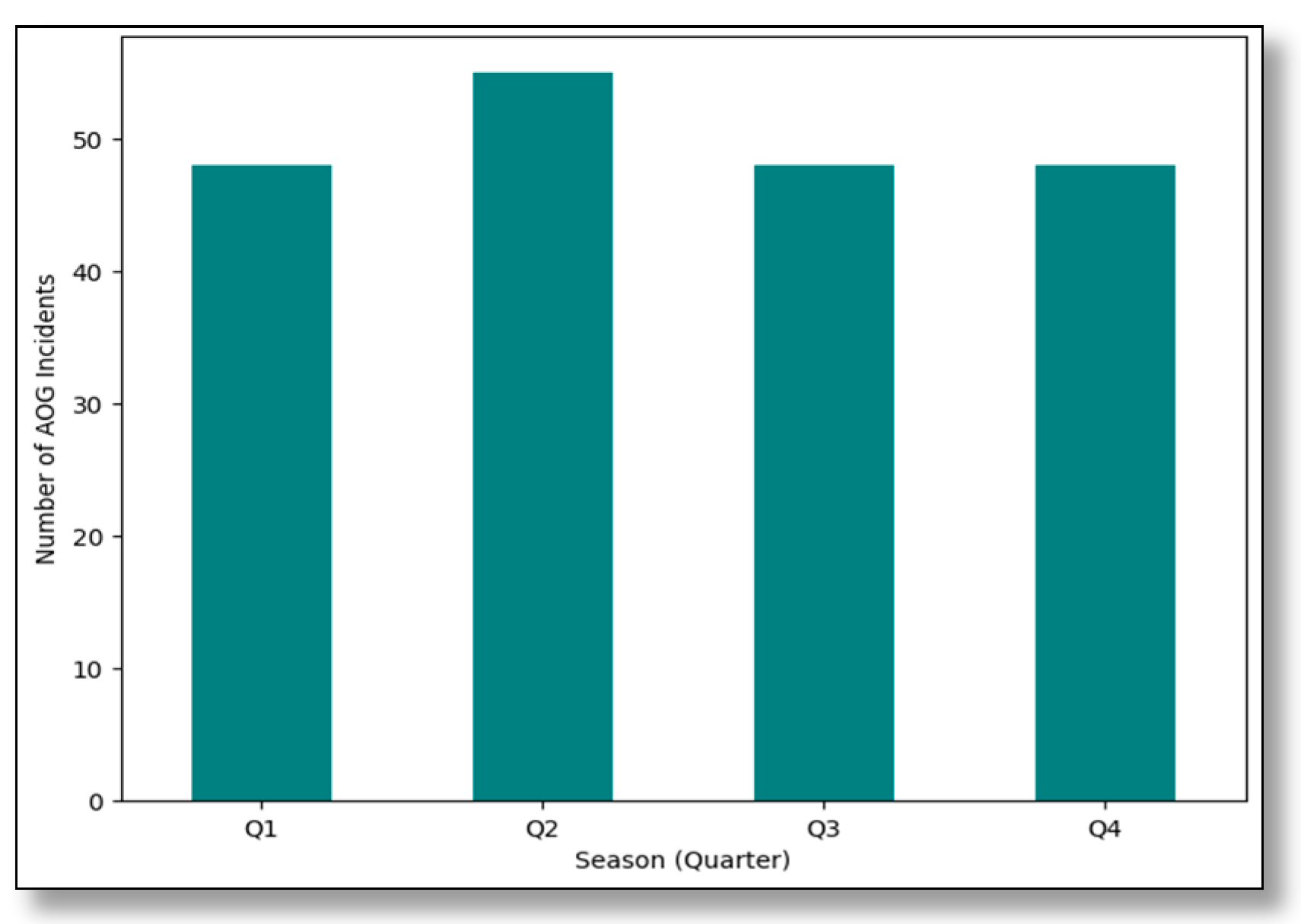

The bar chart (Figure 17) illustrates the number of AOG incidents across the four quarters (Q1 to Q4). The data reveal a notable peak in Q2, with over 50 incidents, while the other quarters maintain a relatively stable count of approximately 45 incidents each. This trend indicates potential seasonal factors influencing operational disruptions during Q2.
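A quarterly breakdown of this kind can be derived from dated binary incident records. The sketch below shows one way to do the aggregation in plain Python; the function names and the sample records are hypothetical and do not reproduce the study data.

```python
from datetime import date

def quarter_of(day):
    """Map a calendar date to its quarter number (1 to 4)."""
    return (day.month - 1) // 3 + 1

def incidents_per_quarter(records):
    """Count AOG incidents per quarter from (date, aog_flag) pairs."""
    counts = {1: 0, 2: 0, 3: 0, 4: 0}
    for day, aog_flag in records:
        if aog_flag == 1:
            counts[quarter_of(day)] += 1
    return counts

# Hypothetical records: (Date, AOG_Incident).
sample_records = [
    (date(2022, 1, 14), 1),
    (date(2022, 4, 2), 1),
    (date(2022, 5, 19), 0),
    (date(2022, 6, 30), 1),
    (date(2022, 8, 7), 0),
    (date(2022, 11, 23), 1),
]

quarterly_counts = incidents_per_quarter(sample_records)
print(quarterly_counts)
```

Because this pools the same quarter across all years, a Q2 peak in such a chart could come from a single unusual year; grouping by both year and quarter would help separate a recurring seasonal effect from a one-off event.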

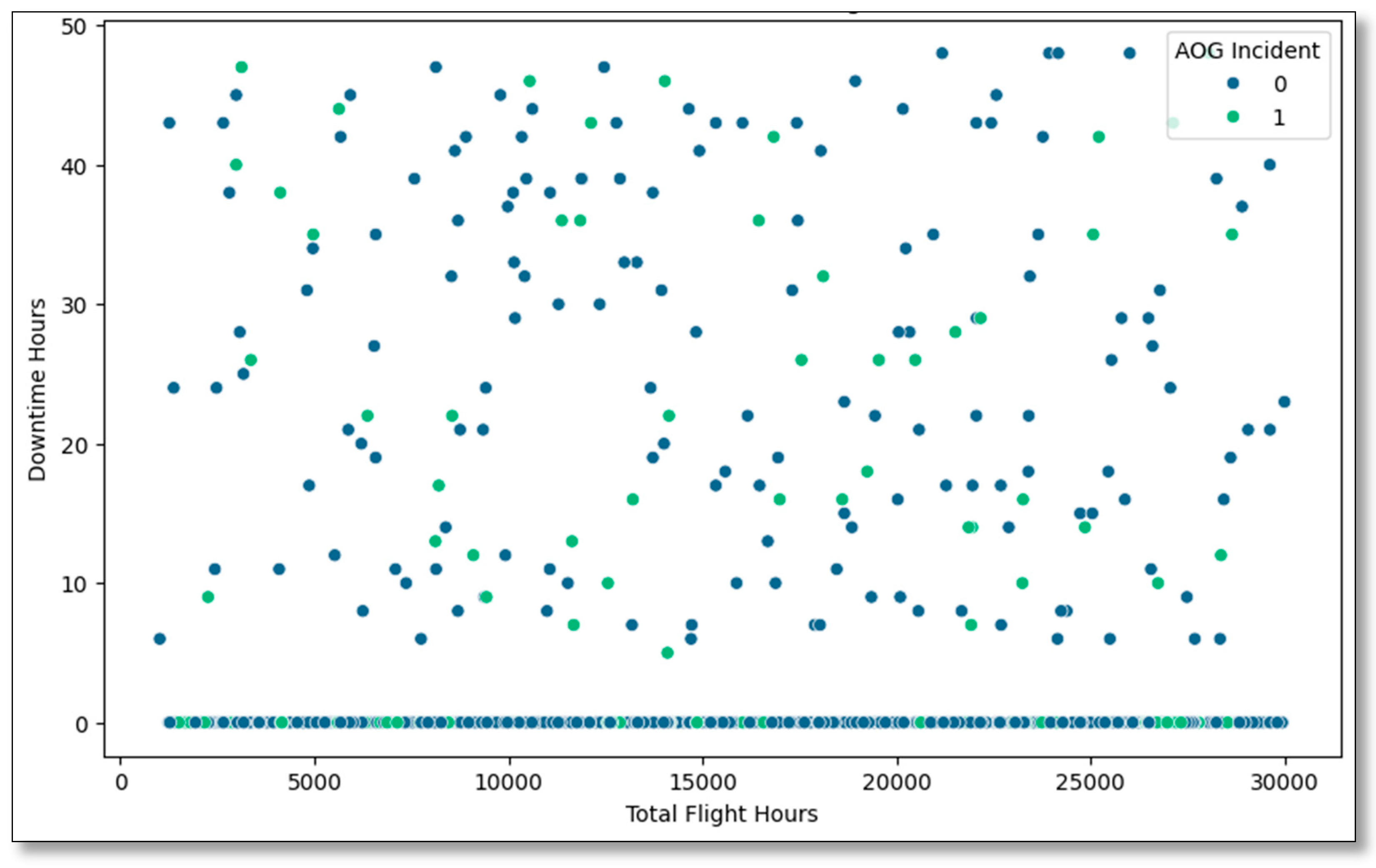

The scatterplot (Figure 18) examines the relationship between total flight hours and downtime hours, differentiating between AOG incidents (1 = Yes, 0 = No).

Key Observations:

- General Trend: The majority of data points cluster along the x-axis at downtime hours close to 0, indicating that most operations have minimal downtime regardless of total flight hours. However, some data points show substantial downtime, reaching up to 50 h.
- AOG Incidents (green dots): Incidents are scattered across all levels of total flight hours but are more prevalent among data points with higher downtime hours. This suggests that longer downtimes are associated with a higher likelihood of AOG incidents.
- Non-AOG Incidents (blue dots): They dominate the dataset and are concentrated around minimal downtime hours. This reflects efficient maintenance practices and operational management that minimize disruptions.
- Distribution Across Total Flight Hours: The spread of points along the x-axis indicates that downtime hours are not strongly correlated with total flight hours. This suggests that downtime is influenced by factors beyond total utilization, such as maintenance schedules, part availability, or operational demands.
- Overlap Between AOG and Non-AOG Points: There is a significant overlap between AOG and non-AOG incidents, particularly at lower downtime levels, highlighting that downtime alone does not fully explain the occurrence of AOG events.

This analysis evaluates the performance of three forecasting methods, ARIMA, Holt–Winters Exponential Smoothing, and Logistic Regression, applied to predicting AOG incidents in aviation operations. Metrics such as Root Mean Squared Error (RMSE), Mean Absolute Percentage Error (MAPE), and Receiver Operating Characteristic–Area Under the Curve (ROC-AUC) are used to measure accuracy and assess their implications. The Mean Time Between Failure (MTBF) is also discussed in the context of optimal forecasting accuracy and operational alignment.

The ARIMA model forecasts that AOG incidents will decline slightly over the next four quarters. Its predictive accuracy is moderate, with a MAPE of 54.9% and an RMSE of 3.19. The gap between the actual and fitted values in the time series plot limits the model’s usefulness for trend analysis. The plot in Figure 18 shows the predicted series (orange line) compared to the true series (blue line), revealing how well the ARIMA model aligns with actual operational data. While the orange line generally follows the same trajectory as the blue line, it struggles to capture sharp spikes and deep dips, especially during periods of high variability. The actual data reflects real-world fluctuations, including the significant disruptions caused by the pandemic starting in 2019. These disruptions introduced unprecedented variability due to changes in travel demand, supply chain challenges, and resource constraints. The ARIMA model, relying on historical patterns like pre-pandemic seasonal trends and maintenance schedules, performs well during stable periods (e.g., 2019–2020 and 2023 onward). However, it struggles during volatile periods, such as 2021–2022, when the unpredictability of the pandemic caused sharp deviations from typical trends. This analysis highlights ARIMA’s strengths in predicting general trends during stable periods but also its limitations in accounting for sudden, extreme changes in operational conditions.

The analysis of AOG incidents begins with daily incident data accumulation. The essential tools for finding the ARIMA model’s parameters include the Autocorrelation Function (ACF) and Partial Autocorrelation Function (PACF), as illustrated in Figure 19.

The ACF plot shows the correlation between the first-differenced AOG incident series and its own lagged values, helping to identify trends or seasonality in the data. The PACF plot, which removes intermediate lag effects, highlights the most relevant autoregressive terms. The only significant spike in both plots appears at lag zero, while all other lags fall within the confidence intervals. This indicates that, apart from the trivial correlation of the series with itself, there is no strong or statistically significant dependency in the data. Essentially, this pattern suggests that the series (or its residuals) behaves like random noise (white noise), meaning the model has successfully captured any systematic patterns present in the data.
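To make the white-noise reading concrete, the sketch below computes sample autocorrelations in plain Python and compares them with the approximate 95% bound of ±1.96/√n that is commonly drawn on ACF plots. The function names and the toy series are assumptions made for illustration; the study's own series is not reproduced here.

```python
import math

def acf(series, max_lag):
    """Sample autocorrelation of a series for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [value - mean for value in series]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("autocorrelation is undefined for a constant series")
    return [
        sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
        for k in range(max_lag + 1)
    ]

def white_noise_bound(n):
    """Approximate 95% bound for the sample ACF of white noise."""
    return 1.96 / math.sqrt(n)

# Hypothetical daily AOG flags (0/1), not taken from the study dataset.
toy_series = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0]

bound = white_noise_bound(len(toy_series))
for lag, value in enumerate(acf(toy_series, 5)):
    flag = "significant" if lag > 0 and abs(value) > bound else ""
    print(lag, round(value, 3), flag)
```

Lag 0 always equals 1 by construction, so only lags of 1 and above carry information about serial dependence.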

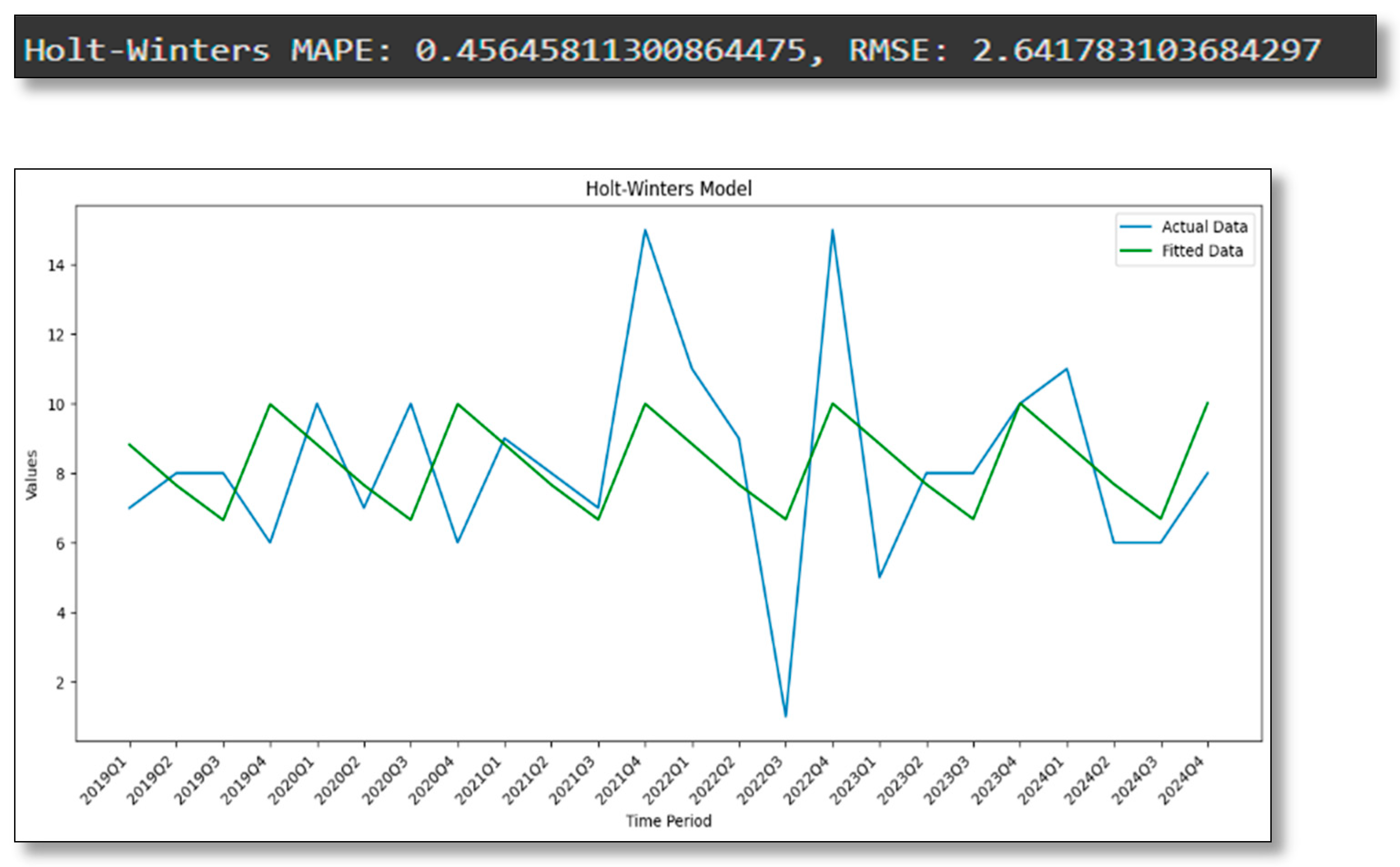

With seasonal adjustments, the Holt–Winters model gives better results than ARIMA in both MAPE (45.65%) and RMSE (2.64). Its fitted values follow the recorded data, allowing it to identify recurring fluctuations in AOG incidents. In general, the model can be very effective in planning maintenance activities and distributing resources by predicting periodically recurring fluctuations in incident rates. The Holt–Winters model’s performance (Figure 20), with a MAPE near 0.456 and an RMSE around 2.64, reveals moderate predictive accuracy with some discrepancies between the fitted and actual values. The green line (fitted data) tracks the general direction of the blue line (actual data) but occasionally diverges notably, indicating that certain shifts or peaks in the real observations are not fully captured.
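The additive Holt–Winters recursions can be sketched in a few lines of plain Python. This is a minimal illustration rather than the implementation used in the study: the function name, the simple initialization from the first two seasons, the seasonal period of 4 (quarterly data), and the sample counts are all assumptions, while the smoothing constants default to the values reported for the model (0.2, 0.1, 0.1).

```python
def holt_winters_additive(y, m, alpha=0.2, beta=0.1, gamma=0.1, horizon=4):
    """Additive Holt-Winters: returns (one-step-ahead fitted values, forecasts)."""
    if len(y) < 2 * m:
        raise ValueError("need at least two full seasons of data")

    # Simple initialization (an assumption): first-season mean as the level,
    # average change between the first two seasons as the trend,
    # first-season deviations from the level as seasonal terms.
    level = sum(y[:m]) / m
    trend = (sum(y[m:2 * m]) - sum(y[:m])) / (m * m)
    season = [y[i] - level for i in range(m)]

    fitted = []
    for t, obs in enumerate(y):
        s_prev = season[t % m]
        fitted.append(level + trend + s_prev)  # forecast made before seeing obs
        new_level = alpha * (obs - s_prev) + (1 - alpha) * (level + trend)
        new_trend = beta * (new_level - level) + (1 - beta) * trend
        season[t % m] = gamma * (obs - level - trend) + (1 - gamma) * s_prev
        level, trend = new_level, new_trend

    n = len(y)
    forecast = [
        level + (h + 1) * trend + season[(n + h) % m] for h in range(horizon)
    ]
    return fitted, forecast

# Hypothetical quarterly AOG counts, not taken from the study dataset.
quarterly_aog = [44, 52, 45, 46, 43, 54, 47, 45, 46, 53, 44, 47]
fitted, forecast = holt_winters_additive(quarterly_aog, m=4)
print([round(v, 1) for v in forecast])
```

Small smoothing constants such as these make the level, trend, and seasonal terms change slowly, which suits a stable seasonal pattern but also means the fitted line reacts sluggishly to sudden spikes.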

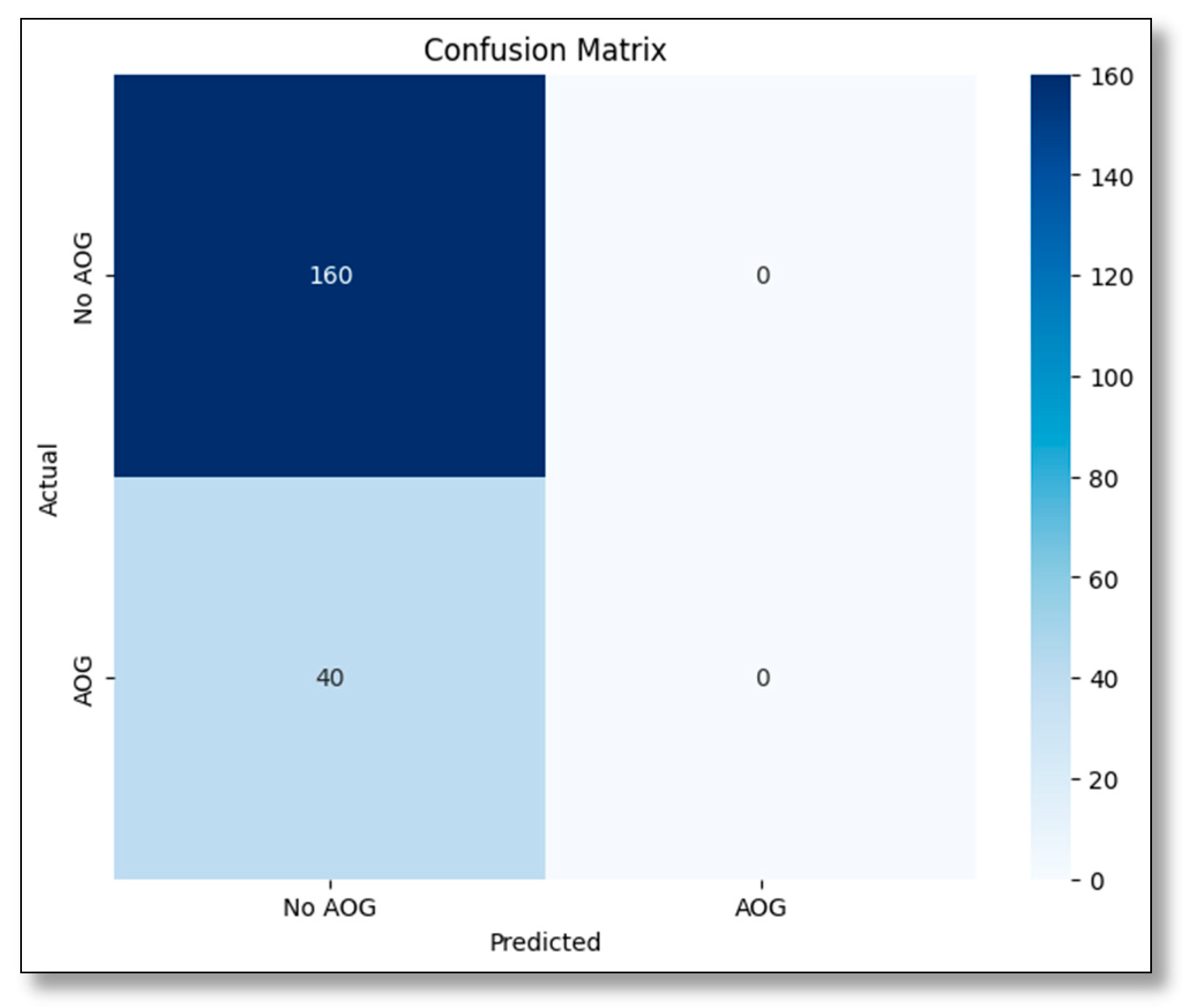

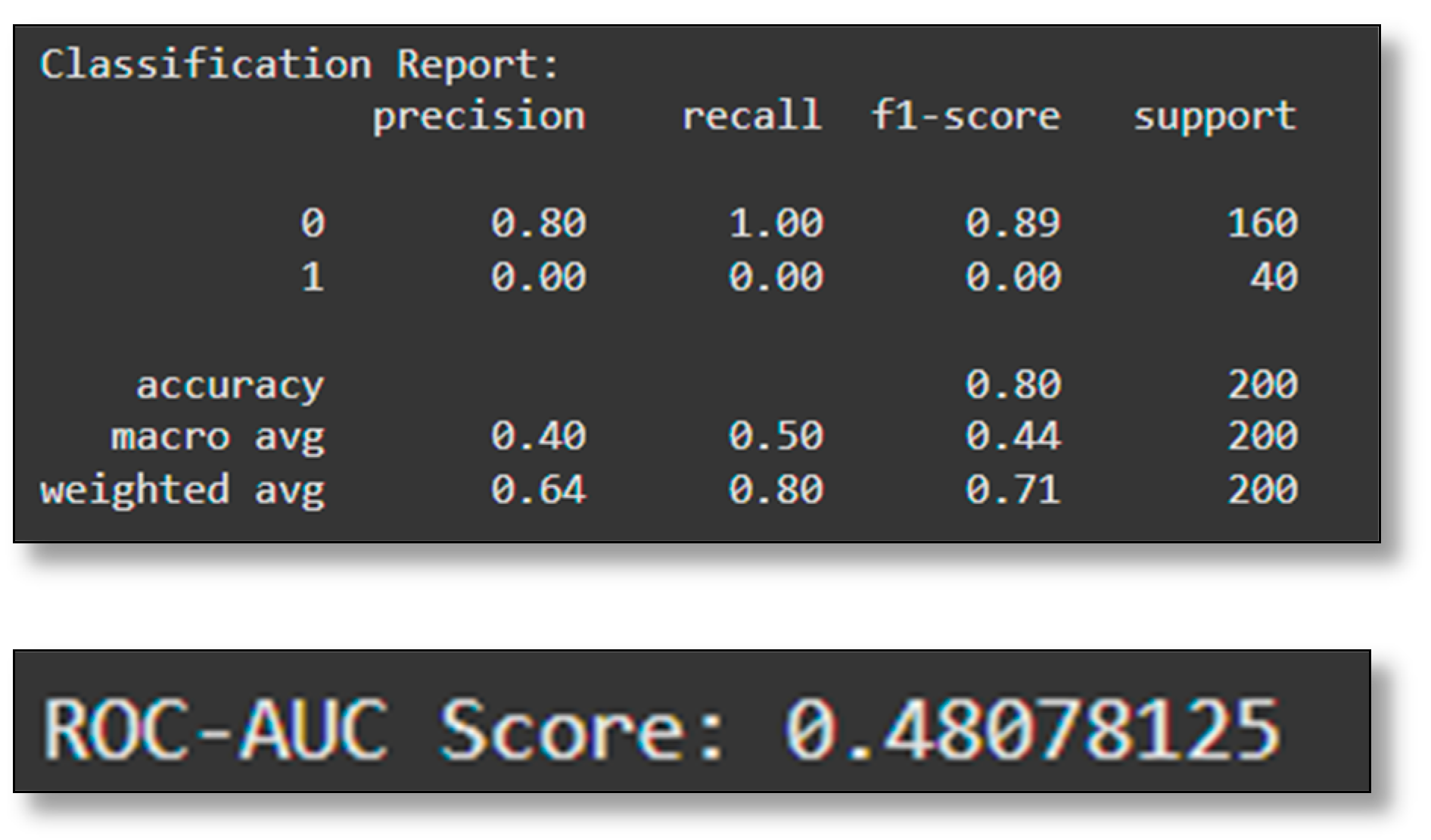

The confusion matrix (Figure 21 and Figure 22) reveals that the model predicts every case as “No AOG”, correctly classifying all 160 “No AOG” instances but entirely missing the 40 actual “AOG” cases.

Consequently, there are zero true positives for the “AOG” category, indicating the classifier completely fails to recognize that class.

The Logistic Regression model checks the significance of the predictors, including aircraft type, part failure rate, and compliance with maintenance schedules, for AOG incidents. Nevertheless, its discriminative performance is low, with a ROC-AUC of 0.48, meaning it is a non-informative classifier. The classification report (Figure 23) shows that the model’s high accuracy comes from the non-incident class, which reflects a strong class imbalance in the dataset.

For instance, the model completely fails to recognize instances of class “1”, with precision, recall, and F1-score stuck at zero for that category. While the overall accuracy appears to be 80%, this is driven entirely by correct predictions on the majority class “0”. Consequently, the ROC-AUC score of about 0.48, which is below the 0.5 random-guess threshold, illustrates that the model has poor discriminative ability. These metrics demonstrate an imbalance in predictions and suggest the need to reject this approach.
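The figures quoted above follow directly from the confusion matrix counts (160 true negatives, 40 false negatives, no positive predictions). The sketch below recomputes them; the function name is illustrative, and reporting undefined ratios as 0.0 is a convention assumed here.

```python
def classification_metrics(tn, fp, fn, tp):
    """Accuracy, precision, recall, and F1 for the positive ("AOG") class."""
    total = tn + fp + fn + tp
    accuracy = (tp + tn) / total
    # With no positive predictions, precision is undefined; report 0.0 by convention.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Counts reported for the confusion matrix in the text.
metrics = classification_metrics(tn=160, fp=0, fn=40, tp=0)
print(metrics)
```

A classifier that always answers “No AOG” reaches the same 80% accuracy on this split, which is why recall on the minority class is the more informative number here.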

This research evaluated three approaches—ARIMA, Holt–Winters, and Logistic Regression—to address the challenge of detecting Aircraft On-Ground (AOG) events. However, all models ultimately predicted every instance as “No AOG”, as shown by confusion matrices with zero true positives for “AOG”.

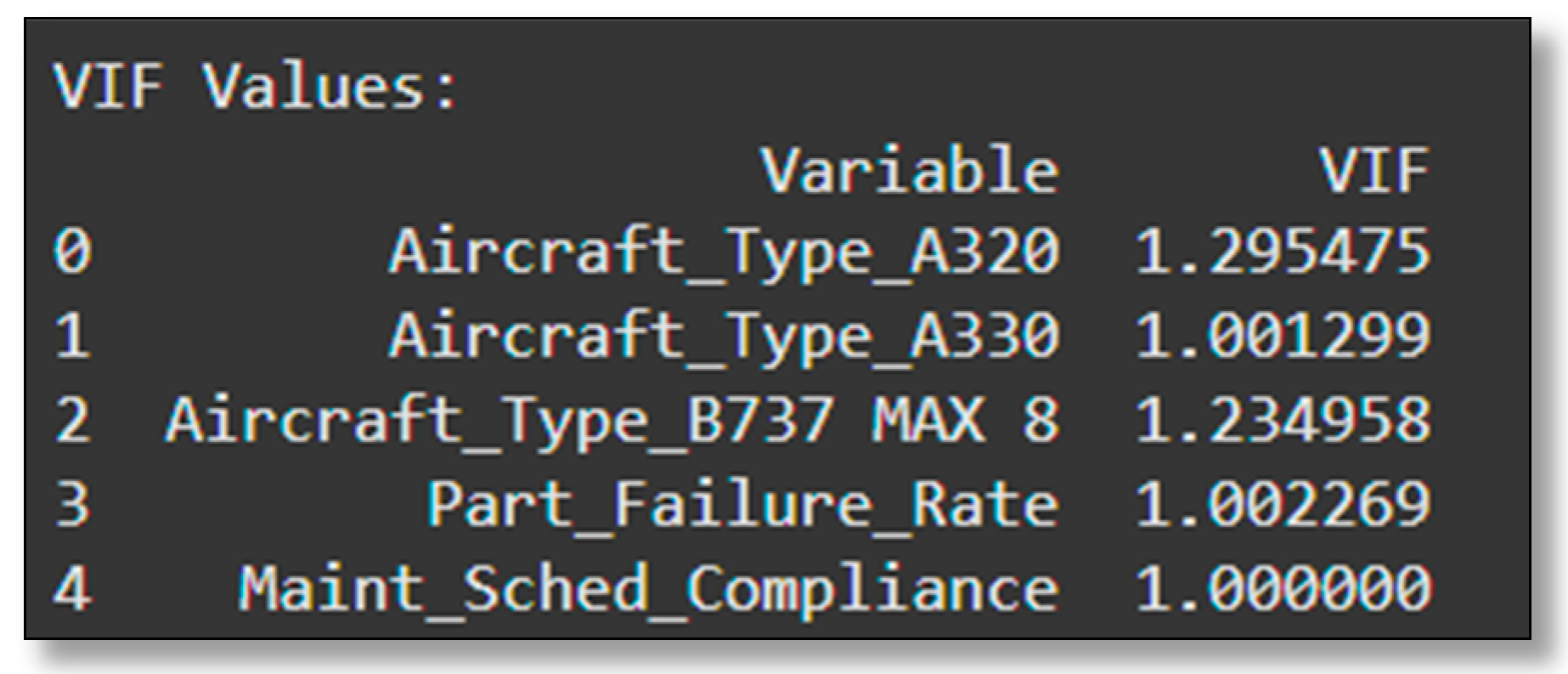

While effective for general time series forecasting, the ARIMA model struggled with class imbalance and failed to identify minority class AOG events. Similarly, the Holt–Winters model, which accounts for trend and seasonality, also overlooked the “AOG” category, though it showed slight progress in forecasting seasonal patterns. Even with cost-sensitive adjustments, Logistic Regression could not sufficiently tune its parameters to capture the minority class. These findings highlight the critical need to address class imbalance in the dataset. Without properly balancing the data, models like ARIMA and Logistic Regression fail to detect actual AOG events, rendering their predictions ineffective. Despite its limited improvements, Holt–Winters’ ability to handle trends and seasonality shows potential for further refinement. Variance Inflation Factor (VIF) values were used to diagnose multicollinearity among the predictors. The obtained VIF values, all below 1.5, indicate little multicollinearity in the regression model, which helps keep the coefficient estimates stable.
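The text mentions cost-sensitive adjustments without specifying the weighting scheme. One common heuristic, shown below purely as an assumption, weights each class inversely to its frequency: weight = n_samples / (n_classes × class_count). The class counts reuse the 160/40 split from the confusion matrix for illustration.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency class weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Illustrative labels mirroring the 160 "No AOG" / 40 "AOG" split in the text.
labels = [0] * 160 + [1] * 40
weights = balanced_class_weights(labels)
print(weights)
```

Under this scheme a misclassified AOG case costs four times as much as a misclassified non-AOG case, which pushes the decision boundary toward the minority class. It cannot help, however, if the predictors carry little signal about AOG events.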


ARIMA (Autoregressive Integrated Moving Average)

- Parameters Used:
  - p (Autoregressive Order): 1
  - d (Differencing Order): 1
  - q (Moving Average Order): 1
- Parameter Selection: The parameters were chosen based on an analysis of the Autocorrelation Function (ACF) and Partial Autocorrelation Function (PACF) plots. These tools identified significant correlations at the first lag, suggesting that a first-order autoregressive and moving average process would capture the data’s dynamics. Differencing was applied to ensure stationarity.
- Performance Metrics:
  - MAPE: 54.9% (indicating moderate prediction errors).
  - RMSE: 3.19 (quantifying the scale of prediction inaccuracies).
- Scientific Analysis: While ARIMA successfully captured short-term trends, it struggled to account for seasonality inherent in AOG incidents. This limitation led to higher MAPE and RMSE values. The model is better suited for datasets without strong cyclical patterns or those dominated by trend-based changes.
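For reference, an ARIMA(1,1,1) model with the orders listed above can be written in standard textbook notation as follows. The symbols $\phi_1$ (autoregressive coefficient), $\theta_1$ (moving average coefficient), $c$ (constant), $B$ (backshift operator), and $\varepsilon_t$ (error term) are conventional notation introduced here, and the fitted coefficient values are not reported in the text.

$$
(1 - \phi_1 B)(1 - B)\, y_t = c + (1 + \theta_1 B)\, \varepsilon_t
$$

Equivalently, writing the first difference as $y'_t = y_t - y_{t-1}$:

$$
y'_t = c + \phi_1\, y'_{t-1} + \varepsilon_t + \theta_1\, \varepsilon_{t-1}
$$

The model contains no seasonal term, which is consistent with the difficulty in capturing seasonality noted above.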

Holt–Winters (Exponential Smoothing)

- Parameters Used:
  - Alpha (Level Smoothing): 0.2
  - Beta (Trend Smoothing): 0.1
  - Gamma (Seasonal Smoothing): 0.1
- Parameter Selection: These parameters were optimized to align with the moderate seasonality observed in the dataset. An additive method was selected, given that the seasonal variations appeared to have consistent amplitudes.
- Performance Metrics:
  - MAPE: 45.65% (lower than ARIMA, indicating better accuracy).
  - RMSE: 2.64 (smaller error magnitude than ARIMA).
- Scientific Analysis: The Holt–Winters model effectively captured periodic fluctuations in AOG incidents, demonstrating its ability to model seasonality. This makes it a robust tool for predicting cyclical patterns, critical for maintenance scheduling and resource planning.

Logistic Regression

- Parameters Used: Aircraft type, part failure rate, and maintenance compliance.
- Parameter Selection: These variables were chosen due to their theoretical relevance in predicting AOG incidents. They represent key operational and maintenance factors influencing downtime.
- Performance Metrics: ROC-AUC: 48% (indicating poor classification performance).
- Scientific Analysis: Logistic regression performed poorly due to class imbalance in the dataset, with non-AOG events far outnumbering AOG incidents. The model identified non-AOG incidents with high accuracy but failed to generalize to AOG classification. Its low ROC-AUC, slightly below the 50% expected from random guessing, shows that the model does not effectively discriminate between classes, necessitating rebalancing techniques such as oversampling or advanced ensemble methods.
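The gap between high accuracy and poor ROC-AUC under class imbalance, and the oversampling remedy mentioned above, can be sketched as follows. This is an illustrative example only: the helper names and the toy data are hypothetical, and random oversampling is just one of several rebalancing options.

```python
import random


def roc_auc(labels, scores):
    """ROC-AUC as the probability that a random positive case is scored
    above a random negative case (ties count as one half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def random_oversample(rows, labels, minority=1, seed=0):
    """Duplicate minority-class rows at random until the classes balance."""
    rng = random.Random(seed)
    minority_rows = [r for r, y in zip(rows, labels) if y == minority]
    if not minority_rows:
        raise ValueError("no minority-class rows to sample from")
    majority_count = sum(1 for y in labels if y != minority)
    n_extra = max(0, majority_count - len(minority_rows))
    extra = [rng.choice(minority_rows) for _ in range(n_extra)]
    return rows + extra, labels + [minority] * n_extra


# Toy data: 9 non-AOG events (0) and 1 AOG event (1), hypothetical.
labels = [0] * 9 + [1]
scores = [0.1] * 10  # a model that gives every event the same low score

accuracy = sum((s >= 0.5) == bool(y) for y, s in zip(labels, scores)) / len(labels)
print("accuracy:", accuracy)                  # high, driven by the majority class
print("ROC-AUC:", roc_auc(labels, scores))    # no better than chance

rows = [[i] for i in range(10)]
balanced_rows, balanced_labels = random_oversample(rows, labels)
print("class counts after oversampling:",
      balanced_labels.count(0), balanced_labels.count(1))
```

Oversampling should be applied to the training split only, so that duplicated AOG rows do not leak into the evaluation data.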

RMSE: Measures the average magnitude of prediction errors. For ARIMA (3.19) and Holt–Winters (2.64), Holt–Winters demonstrated greater precision in predicting AOG incidents by reducing error magnitude. RMSE is particularly useful for assessing how well predictions align with actual data on an absolute scale.

MAPE: Evaluates forecasting accuracy as a percentage of the actual values. Holt–Winters achieved a lower MAPE (45.65%) compared to ARIMA (54.9%), reflecting its superior ability to align with trends and seasonal patterns.
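For reference, both metrics can be computed in a few lines. The sketch below uses the standard definitions; the sample values are hypothetical and are not the study's data. Note that MAPE is undefined when an actual value is zero, which matters for periods with no AOG events.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error, in the same units as the data."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical monthly AOG counts and forecasts.
actual = [8, 5, 6, 10]
predicted = [6, 6, 9, 7]
print(f"RMSE = {rmse(actual, predicted):.2f}, MAPE = {mape(actual, predicted):.1f}%")
```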

Table 9 provides a comparative analysis of the performance of three forecasting models, ARIMA, Holt–Winters exponential smoothing, and Logistic Regression, alongside SmartLynx’s benchmark MTBF. These metrics evaluate each model’s capability to predict AOG incidents effectively and provide actionable insights for operational decision-making. The key metrics analyzed are RMSE, MAPE, and the calculated MTBF.

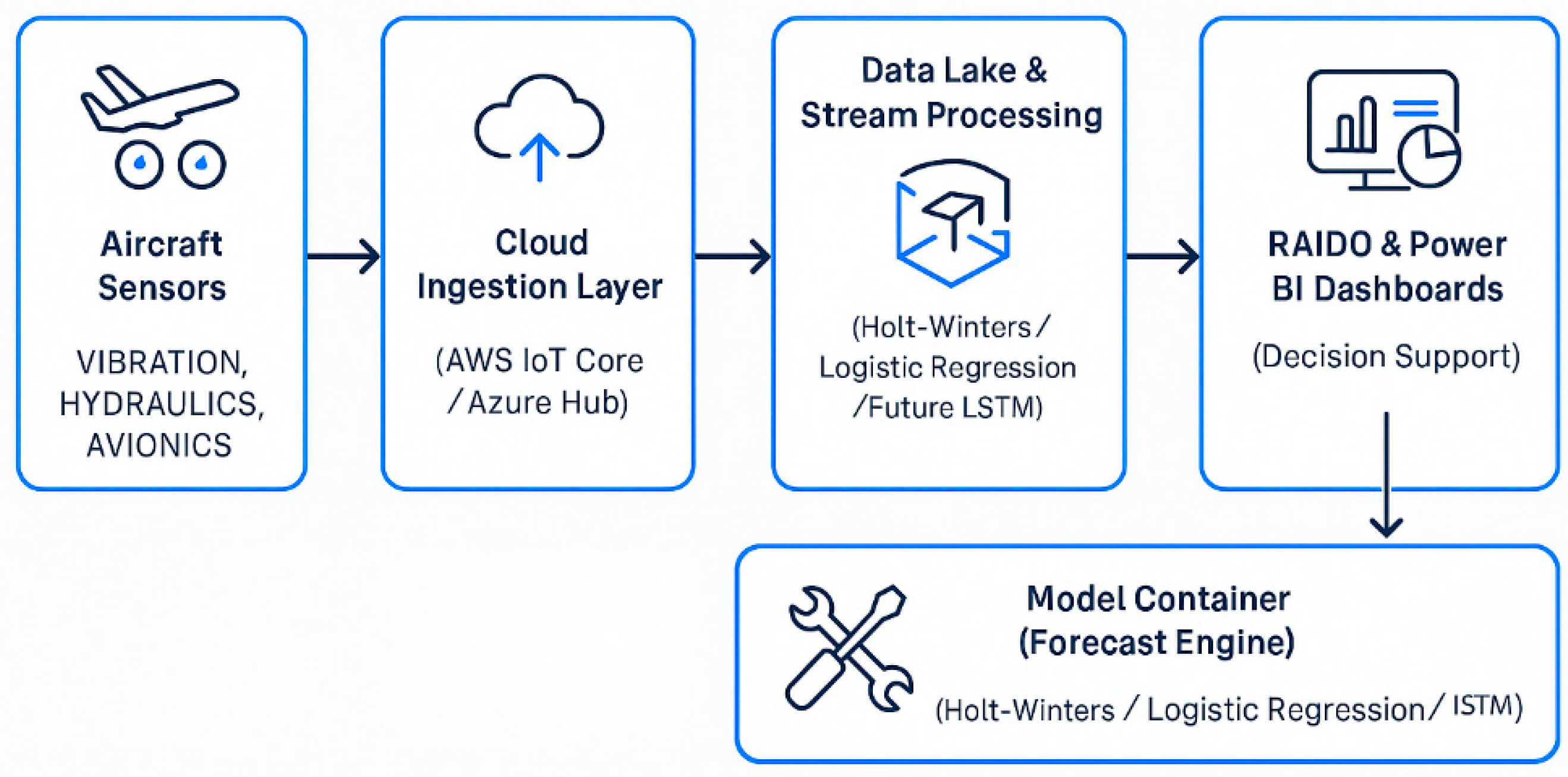

Although advanced models such as Long Short-Term Memory (LSTM) networks and S-curve logistic growth functions could be considered for this study due to their theoretical strengths in capturing complex temporal patterns and long-term adoption behaviors, they were ultimately not implemented. The primary reason was the limited availability of the high-frequency, granular datasets necessary to train deep learning models effectively, largely due to confidentiality restrictions and regulatory data governance. Moreover, the black-box nature of neural networks poses significant challenges in aviation, where explainability and traceability of decision-making are mandated by compliance standards. S-curve models, while useful for macro-level lifecycle modeling, are not well suited for short-term, event-based forecasting of discrete maintenance events such as AOG incidents. In contrast, the models selected (Logistic Regression, ARIMA, and Holt–Winters) offered greater alignment with the available data, provided transparent and interpretable results, and could be feasibly integrated into existing airline operational systems such as RAIDO and Power BI, as seen in the table below.

Conclusion: Based on the results obtained, Holt–Winters exponential smoothing emerges as the optimal approach for forecasting AOG incidents. This model consistently demonstrated superior predictive accuracy, with the lowest RMSE (2.64) and MAPE (45.65%) among the evaluated methods. Its ability to capture seasonal patterns and periodic fluctuations makes it highly suitable for maintenance scheduling and resource optimization. While ARIMA provided moderate accuracy, its inability to model seasonality resulted in higher errors and a calculated MTBF (1192.54 h) that deviated significantly from the airline’s benchmark (1200 h). Logistic Regression, on the other hand, struggled due to data imbalance, as reflected in its poor ROC-AUC score (48%), making it unsuitable for precise AOG predictions in its current form. Airlines can more effectively align their operational strategies with actual AOG trends by utilizing the Holt–Winters model. This alignment has the potential to enhance reliability, reduce downtime, and optimize the allocation of maintenance resources.

6.3. Optimizing Inventory and Market Strategies with Holt–Winters

Applying the Holt–Winters model has been shown to enable airlines to align their operational strategies more closely with actual AOG trends. This offers significant potential to reduce maintenance costs and optimize spare parts inventory during heavy maintenance checks.

This sub-section explores the impact of applying the Holt–Winters model in aviation, focusing on balancing stock availability with cost control, particularly for high-value, frequently used components. The study links the model’s predictions to optimal safety stock levels using insights from the MTBF metric and data from 2020 to 2024. Tested within SmartLynx operations, the analysis shows how the model can improve service levels, reliability, and cost efficiency, offering insights for broader use across other air operators.

Implementing the Holt–Winters model has demonstrated transformative effects on SmartLynx’s financial performance. The outcomes of this statistical technique have strengthened SmartLynx’s position as a leading player in the competitive aviation sector and indicate that the approach is well suited to other key players in the industry.

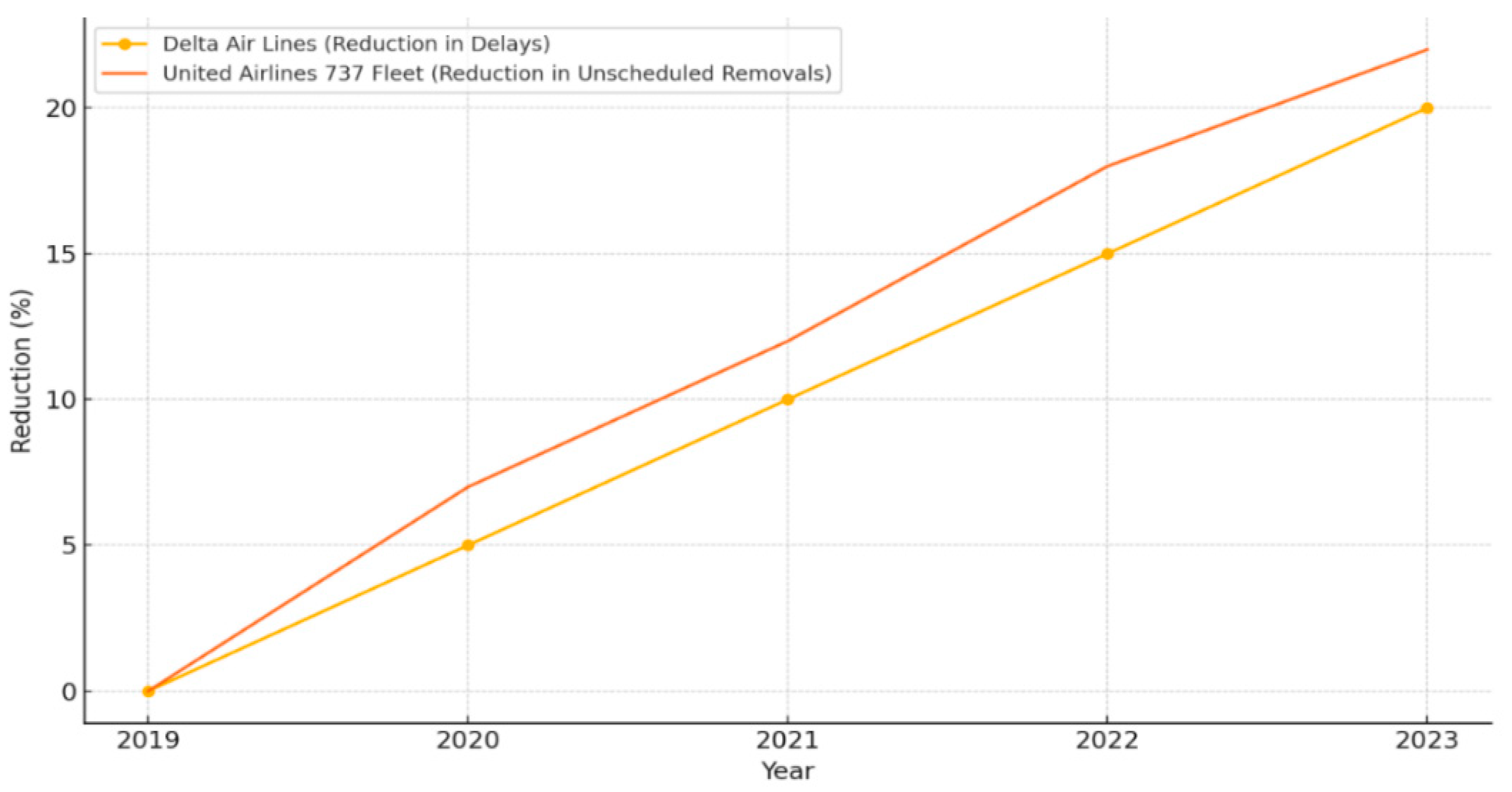

The market dynamics (Table 10) below reflect projected cost savings, fleet utilization increases, and AOG reductions achieved by various air operators by implementing the Holt–Winters model. These figures were calculated based on the following data sources and methodology:

Data Sources:

- SmartLynx: Financial and operational data (2020–2024) sourced from internal financial yearly reports and fleet utilization statistics.
- Lufthansa Technik, Delta Airlines, and KLM Engineering: Industry benchmarks and reports, including Aviation Week, IATA publications, and individual airline financial disclosure reports in the years 2023–2024.
- Cost Analysis: Expenses related to spare part procurement, emergency repairs, and downtime were extracted from financial statements and industry case studies.

Cost Savings calculation: After Holt–Winters forecasts identify which spare parts and components are likely to fail within a certain time window, air operators can optimize inventory and reduce emergency orders. The resulting cost savings generally come from three areas.

- Reduced emergency procurement costs (fewer rush orders and markups).
- Lower inventory holding costs (less overstock, lower warehousing expenses).
- Decreased downtime-related losses (grounded-aircraft revenue loss, passenger compensation).

The following formulas were used for calculations:

A simplified formula for these annual cost savings (CS) is as follows:

$$\text{CS} = (\text{EPC}_{\text{pre}} - \text{EPC}_{\text{post}}) + (\text{IHC}_{\text{pre}} - \text{IHC}_{\text{post}}) + (\text{DR}_{\text{pre}} - \text{DR}_{\text{post}})$$

where

- EPC = emergency procurement costs (e.g., overnight shipping, supplier markups).
- IHC = inventory holding costs (warehouse storage, obsolescence risk).
- DR = downtime-related losses (lost ticket sales, passenger accommodation).

The subscripts “pre” and “post” indicate values before and after implementing Holt–Winters.

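Percentage increase in fleet utilization (FU) after implementing Holt–Winters:

$$\text{Increase (\%)} = \frac{\text{FU}_{\text{post}} - \text{FU}_{\text{pre}}}{\text{FU}_{\text{pre}}} \times 100$$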

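The formula for AOG Reduction is as follows:

$$\text{AOG Reduction (\%)} = \frac{\text{AOG}_{\text{pre}} - \text{AOG}_{\text{post}}}{\text{AOG}_{\text{pre}}} \times 100$$

where $\text{AOG}_{\text{pre}}$ and $\text{AOG}_{\text{post}}$ represent either the number of AOG events or the total AOG hours before and after implementation.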
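As a worked illustration of these calculations, the sketch below assumes that annual cost savings are the sum of the pre/post differences in the three cost areas (EPC, IHC, DR) and that the percentage figures are simple relative changes. The function names and all monetary values are hypothetical and are not taken from Table 10.

```python
def annual_cost_savings(epc_pre, epc_post, ihc_pre, ihc_post, dr_pre, dr_post):
    """Sum of pre/post differences in emergency procurement costs (EPC),
    inventory holding costs (IHC), and downtime-related losses (DR)."""
    return (epc_pre - epc_post) + (ihc_pre - ihc_post) + (dr_pre - dr_post)


def percentage_increase(pre, post):
    """Relative increase from `pre` to `post`, in percent."""
    if pre == 0:
        raise ValueError("pre-implementation value must be non-zero")
    return (post - pre) / pre * 100.0


def aog_reduction_pct(aog_pre, aog_post):
    """Relative drop in AOG events (or AOG hours), in percent."""
    if aog_pre == 0:
        raise ValueError("pre-implementation AOG value must be non-zero")
    return (aog_pre - aog_post) / aog_pre * 100.0


# Hypothetical yearly figures in EUR (illustrative only).
savings = annual_cost_savings(
    epc_pre=500_000, epc_post=300_000,
    ihc_pre=200_000, ihc_post=150_000,
    dr_pre=400_000, dr_post=250_000,
)
print(f"Annual cost savings: EUR {savings:,}")
print(f"Fleet utilization increase: {percentage_increase(10.0, 11.0):.1f}%")
print(f"AOG reduction: {aog_reduction_pct(50, 40):.1f}%")
```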

By raising their MTBF thresholds, each air operator in the table could achieve quantifiable financial and operational gains akin to those of SmartLynx. For example, KLM’s move from 1190 to 1235 h could generate up to EUR 400,000 in yearly savings, 10–12% higher fleet utilization, and a 20% drop in AOG incidents. Lufthansa’s shift from 1220 to 1250 h might eliminate at least EUR 500,000 in rush fees, boosting revenues by EUR 2 million. Meanwhile, Delta’s target of 1240 h could potentially save EUR 450,000, lift fleet utilization by 10–13%, and cut AOG rates by over 15%.

These MTBF hours were calculated using the same framework tested within SmartLynx, by analyzing detailed flight hour logs and historical reliability data indicating that components reliably last longer. Improved MTBF levels, assessed from actual operational records, translate directly into lower costs, better service reliability, and fewer last-minute interruptions. In the following section, we will see how fleet utilization and AOG reduction gains connect to inventory control through a safety stock framework, ensuring airlines maintain the right balance between availability and cost.

In aviation, air operators commonly aim for a 90% service level (Z = 1.28) as it provides a practical balance between maintaining sufficient spare parts and controlling inventory costs. This threshold minimizes the risk of AOG incidents while avoiding excessive spending. For critical components where downtime is highly disruptive, some air operators may adopt a more conservative 95% service level (Z ≈ 1.65) to prioritize reliability over cost. In specialized cases, such as for high-value or hard-to-source parts in remote locations, service levels of 98–99% (Z ≥ 2.05) are used to prevent shortages and ensure uninterrupted operations.
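The Z-values quoted for each service level follow from the inverse cumulative distribution function of the standard normal distribution. A minimal sketch using Python's standard library (the helper name is hypothetical):

```python
from statistics import NormalDist


def z_for_service_level(service_level):
    """Z-score such that P(demand <= mean + Z * sigma) equals the service
    level, assuming normally distributed demand."""
    if not 0.0 < service_level < 1.0:
        raise ValueError("service level must be strictly between 0 and 1")
    return NormalDist().inv_cdf(service_level)


for level in (0.90, 0.95, 0.98, 0.99):
    print(f"{level:.0%} service level -> Z = {z_for_service_level(level):.3f}")
```

The normality assumption is a simplification; spare part demand is often intermittent, in which case these Z-values are only an approximation.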

For airlines, balancing stock availability with cost control is a key challenge, particularly for high-value and frequently used parts. Using data from 2020 to 2023, SmartLynx examined how lead time, demand variability, safety stock, and reorder points interact, especially for high-value and frequently moved items. The study also leveraged Holt–Winters forecasting and MTBF metrics to fine-tune spare part strategies.

Across 502 data points, the authors calculated safety stock as a buffer against unexpected demand or supply delays, maintaining a 90% service level (Z = 1.28). This buffer is vital for highly variable parts, whose shortages could otherwise lead to AOG incidents. The Z-score remains constant for a given service level, but with a higher MTBF, the total safety stock needed may decrease as per the following formula:

Overall, SmartLynx’s approach, which is anchored by a 90% service level, would help minimize the risk of stockouts while avoiding excessive holding costs. This demonstrates how air operators can adapt threshold standards to maintain operational efficiency and cost-effectiveness in spare parts management.
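The interplay between service level, demand variability, and lead time can be sketched with the common textbook form of the safety stock and reorder point calculation, SS = Z × σd × √LT and ROP = average daily demand × LT + SS. This form is an assumption made for illustration and may differ from the exact expression used in the study; the function names and input values are hypothetical.

```python
import math
from statistics import NormalDist


def safety_stock(sigma_d, lead_time_days, service_level=0.90):
    """Textbook safety stock: Z * sigma_d * sqrt(lead time).

    sigma_d is the standard deviation of daily demand; constant lead time
    and normally distributed demand are assumed.
    """
    z = NormalDist().inv_cdf(service_level)
    return z * sigma_d * math.sqrt(lead_time_days)


def reorder_point(avg_daily_demand, lead_time_days, ss):
    """Expected demand during the lead time plus the safety stock buffer."""
    return avg_daily_demand * lead_time_days + ss


# Hypothetical part: higher reliability (higher MTBF) is modelled here simply
# as a lower demand standard deviation.
for label, sigma_d in (("lower MTBF", 3.0), ("higher MTBF", 2.0)):
    ss = safety_stock(sigma_d, lead_time_days=4)
    rop = reorder_point(avg_daily_demand=10, lead_time_days=4, ss=ss)
    print(f"{label}: safety stock = {ss:.1f} units, reorder point = {rop:.1f} units")
```

With Z fixed by the service level, the only way the buffer shrinks in this form is through lower demand variability or shorter lead times, which is the mechanism the text attributes to a higher MTBF.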

A frequency chart (Figure 24) further explores the relationship between lead time and demand variability. The analysis shows that 40% of the data involves moderate lead times (5–10 days) with demand variability (6–9), while less frequent combinations, representing 15–20% of the data, are linked to either very short lead times (<2 days) or high variability (>10). Longer lead times often correlate with higher variability, increasing the need for robust safety stock. Conversely, shorter lead times with lower variability suggest more predictable conditions, offering opportunities to streamline inventory and reduce costs. Approximately 30% of the data reflects stable demand with moderate lead times (3–5 days), while 10% involves high variability and long lead times, highlighting areas for improved forecasting and supply chain adjustments.

Building on the frequency chart of demand standard deviation by lead time, the scatter plot (Figure 25) highlights the relationship between safety stock (S) and reorder point (R), offering insights into inventory optimization. The plot shows a strong positive correlation, where higher safety stock levels align with increased reorder points, reflecting the need for larger buffers to manage demand variability and longer lead times. The average safety stock is approximately eight units, while the average reorder point is around 200. Clusters in the lower-left quadrant represent predictable parts with low variability and minimal safety needs. In contrast, points in the upper-right quadrant (safety stock over 10, reorder points above 300) indicate critical or high-demand items requiring more robust inventory strategies. A Z-score of 1.28, corresponding to a 90% service level, helps balance inventory levels to minimize stockouts while maintaining efficiency.

Factoring in a Mean Time Between Failures (MTBF) of 1245.138 h, the safety stock calculation adjusts to account for equipment reliability. Lower MTBF values, indicating higher failure rates, necessitate higher safety stock to buffer variability. Conversely, higher MTBF values allow reduced safety stock, enhancing inventory precision and minimizing excess stock. The Z-score itself remains constant for a given service level; instead, a higher MTBF reduces demand variability (σd), which lowers safety stock requirements.

The S-MIP system logs critical details such as the timestamp of requests, aircraft tail numbers, reasons for usage (scheduled maintenance or unexpected repairs), quantities withdrawn, and remaining stock. S-MIP also supports data-sharing capabilities through shared maintenance hubs with partner airlines, providing aggregated insights without revealing private details. These aggregated data include metrics like average daily demand for specific parts (e.g., hydraulic assemblies, avionics modules), lead times, and demand variability derived from forecast comparisons.

Each air operator operates its own portal within S-MIP, but the system also offers an overarching view for enhanced operational efficiency. For instance, when multiple air operators, like KLM and Delta, draw from the same MRO stock, SmartLynx analysts can observe total daily usage patterns without knowing the exact breakdown per air operator. By identifying patterns, such as KLM’s consistent ordering versus Delta’s sporadic usage, SmartLynx can approximate each operator’s daily demand for high-value and frequently moved parts. Similarly, lead times are inferred from aggregated data, allowing SmartLynx to estimate typical fulfillment times for competitors like Lufthansa.

Moreover, S-MIP tracks demand variability by comparing actual usage against forecasts, enabling SmartLynx to assess whether competitor demand fluctuates significantly or remains stable.

Below in Table 11, the authors show how KLM, Lufthansa, and Delta might apply the same safety stock logic, using their own average lead times, demand variances, and newly targeted MTBF figures (all under a 90% service level with Z = 1.28).

Each row in the table shows how an operator’s MTBF, demand variability (σd), and lead times shape its safety stock and reorder points. By targeting a 90% service level (Z = 1.28), carriers add a “buffer” on top of their predicted demand during lead time, meaning they hold just enough extra parts to avoid AOG issues if failures happen more often than expected. Lufthansa, dealing with high variability (8–10) and 6–8-day lead times, carries 9–14 units and enforces reorder points between 240 and 300, reflecting its need to protect long-haul flights. Delta, whose numbers fall between SmartLynx and Lufthansa, aimed to reduce its safety stock to 7–11 units by pushing its MTBF from 1215 to 1240 h.

Consequently, as MTBF climbs, demand variability (the up-and-down swings of part failures) shrinks, letting logisticians lower the buffer without risking a stockout. Each air operator, however, must balance the expense of maintaining too many spares against the disruption of even a single AOG event, particularly on high-profile routes. By combining reliability improvements (MTBF) with precise demand forecasts (Holt–Winters) and service level targets (Z = 1.28), these operators can keep just enough safety stock on the shelf to protect against downtime, yet not so much that they tie up unnecessary resources. This approach underscores the key takeaway: the more predictable your part failures and usage patterns, the fewer “safety nets” you need, all while preserving a high service standard in aviation.