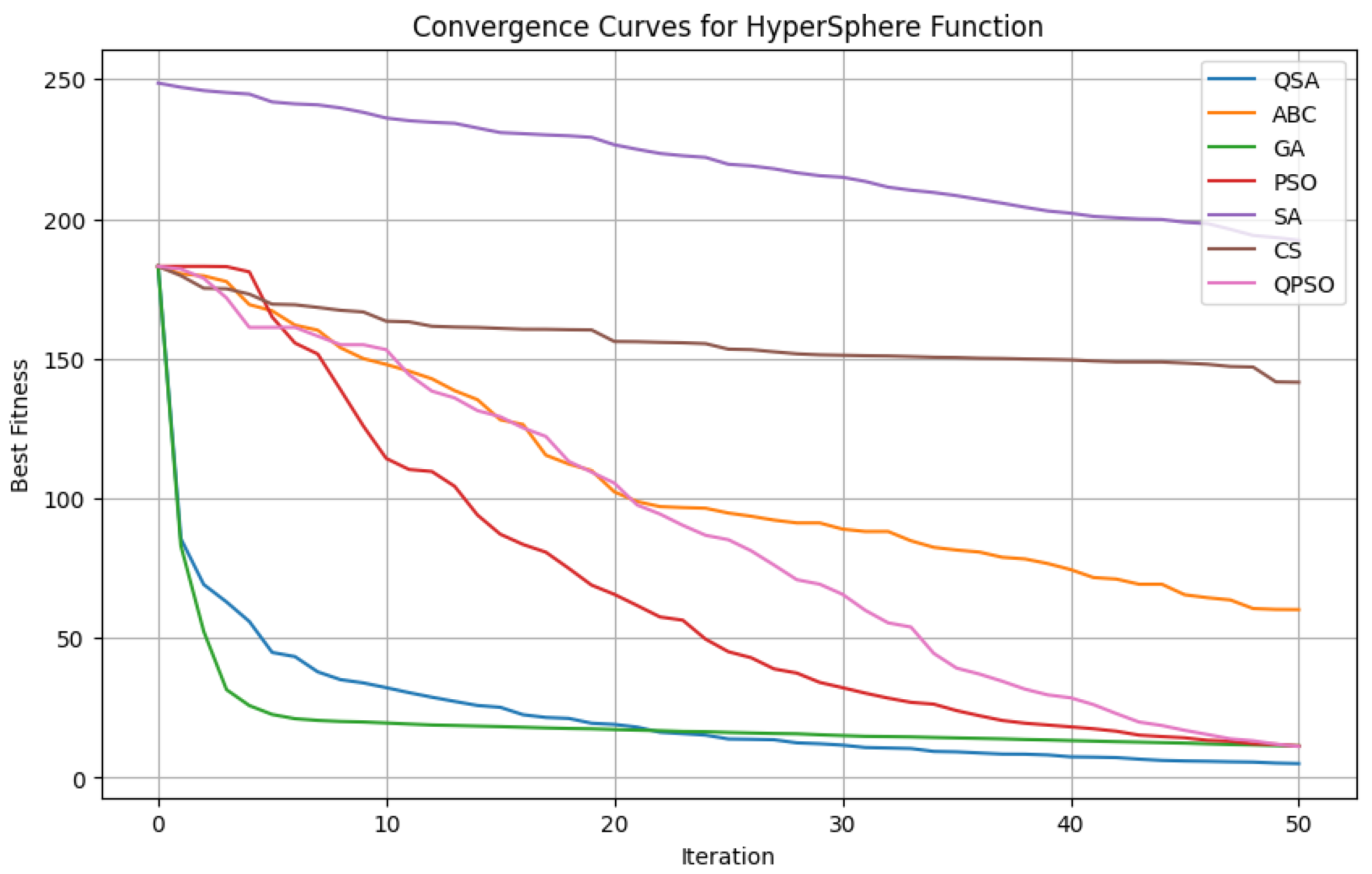

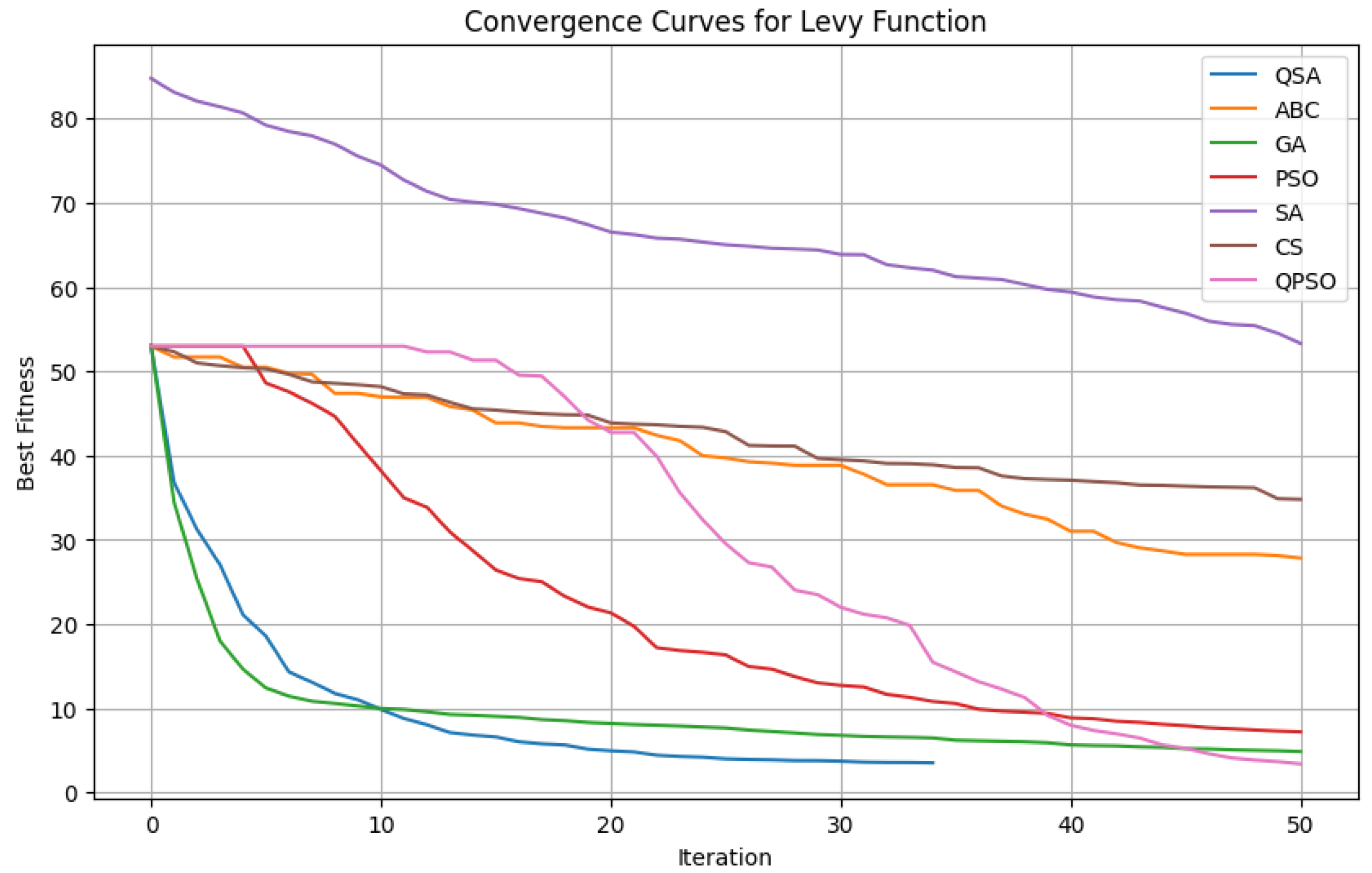

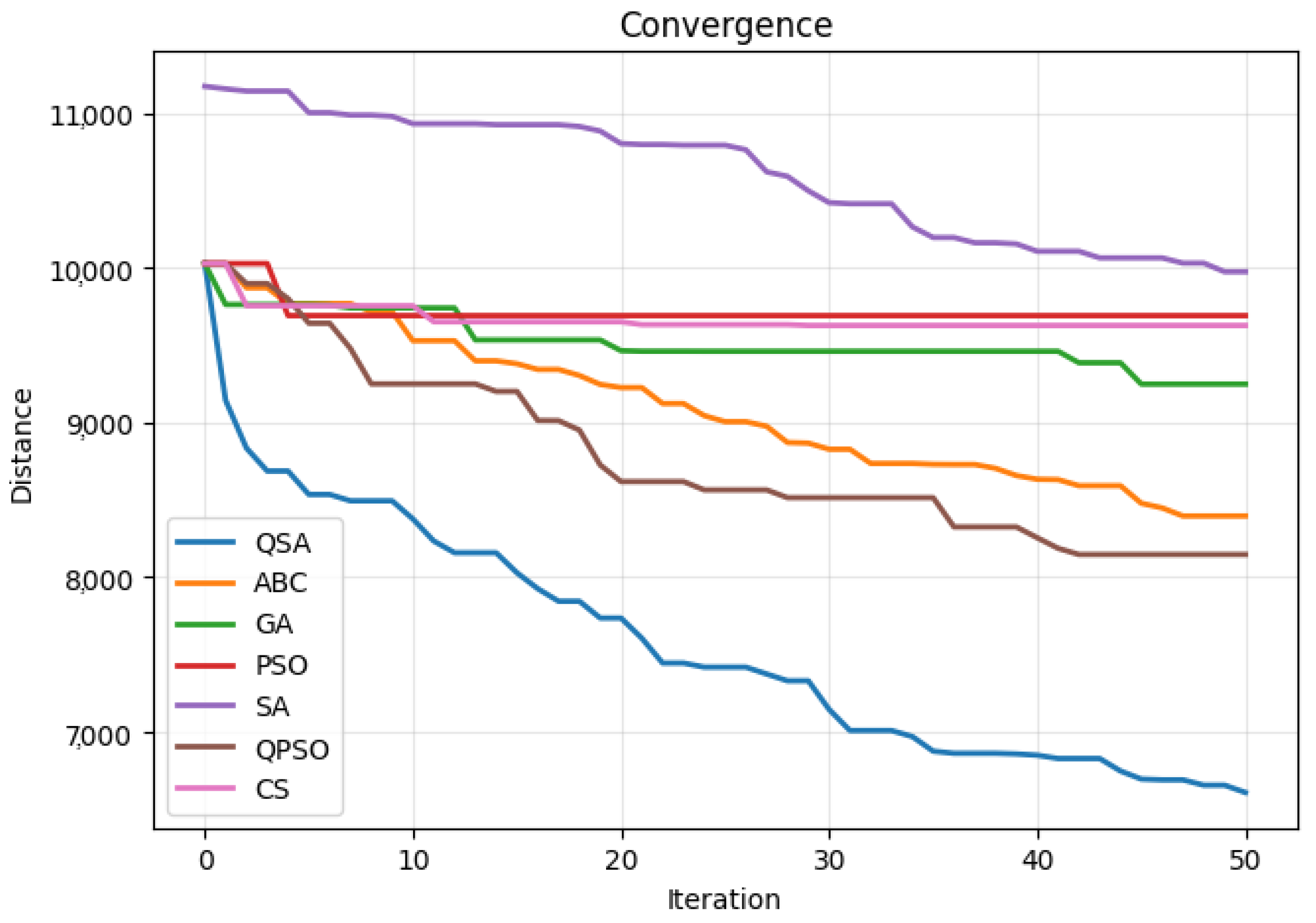

Optimization is the process of systematically examining or solving a problem by selecting real or integer values from a defined range and substituting them into a real-valued function in order to minimize or maximize that function. In experimental terms, it is the process of adjusting the inputs or characteristics of a device to find the minimum or maximum output or result of the experiment [1,2]. The function being optimized is called the cost function, objective function, or fitness function; its inputs are the decision variables and its output is the cost or fitness value [3]. Because of combinatorial explosion, many optimization problems become intractable as their size increases, which compels researchers to use heuristic or approximation techniques that generate near-optimal solutions in a reasonable amount of time [4]. Many different optimization algorithms exist for such problems. The Traveling Salesman Problem (TSP) is one of the standard test problems for discrete optimization [5]. Although the TSP is a canonical benchmark for evaluating the performance of metaheuristics in permutation-based discrete optimization, it does not represent all problem classes [6]. In discrete domains, problems such as the multidimensional knapsack [7], job-shop scheduling [8], and cutting stock [9] problems serve as established benchmarks, each targeting distinct algorithmic features such as constraint handling, resource allocation, or combinatorial explosion. Therefore, while the TSP provides a meaningful framework for testing the exploration–exploitation balance in discrete settings, it cannot be generalized to all metaheuristic benchmarking scenarios. Likewise, in continuous optimization, standard test functions such as Lévy [10], HyperSphere [11], Rastrigin [12], Rosenbrock, Griewank, and Ackley [13] are widely used because their landscapes exhibit a range of properties, including multimodality, separability, and non-convexity. The TSP asks for the shortest possible route that visits each of a set of cities exactly once and returns to the origin [14]. It is only one prominent example, however; other well-known applications include the Vehicle Routing Problem (VRP) for fleet management [15], the Quadratic Assignment Problem (QAP) [16] for facility location, and other intricate scheduling tasks [17]. Test functions show how well an optimization technique performs in continuous optimization settings; they are useful for assessing the precision, robustness, convergence rate, and overall performance of optimization methods [18]. Test functions such as Lévy and HyperSphere (Sphere) are often used to evaluate the performance of algorithms on continuous optimization problems [19].

Many metaheuristic methods exist for solving optimization problems, and some are inspired by natural processes. As noted in [20], traditional exact algorithms can solve small instances optimally, but because the solution space grows exponentially, they frequently struggle with large-scale variants. Metaheuristics, whether or not they are inspired by nature, have therefore proven invaluable for large-scale and highly complex discrete optimization tasks [21]. Over the past three decades, nature-inspired algorithms have become increasingly popular as reliable approaches to combinatorial problems in a variety of fields [22]. In this work, we introduced a new nature-inspired optimization technique. We evaluated its performance on discrete optimization problems using the TSP and showed how it performs on continuous optimization problems using the Lévy and HyperSphere test functions.

Toaza and Esztergár-Kiss [23] thoroughly reviewed metaheuristic techniques for TSP-based scheduling optimization problems. They presented the 15 metaheuristics with the greatest impact on TSP solutions. Their review indicated that the Genetic Algorithm (GA) has the greatest impact on the TSP; GA also outperformed the second-ranked algorithm by 38%. Their review listed Simulated Annealing (SA) third and Particle Swarm Optimization (PSO) fourth. The Artificial Bee Colony (ABC), Cuckoo Search (CS), and Quantum Particle Swarm Optimization (QPSO) algorithms were among the other popular algorithms. Accordingly, we used SA, GA, PSO, ABC, CS, and QPSO as benchmark algorithms and the TSP as our benchmark problem. We also evaluated these approaches on continuous optimization problems using test functions.

SA was developed by Kirkpatrick et al. [24] as a general optimization technique and has since been applied to a range of combinatorial and continuous optimization problems. Inspired by metallurgical annealing, SA gradually lowers a “temperature” parameter, shifting from exploratory random moves to opportunistic local improvements. Despite its simplicity, SA has shown remarkable performance on combinatorial problems such as circuit design, pattern recognition, and layout optimization [24,25].

Holland [26] developed the idea of the GA and investigated how it could be used for allocating trials. According to Holland [26], the GA draws inspiration from the fact that most organisms evolve through two fundamental processes: natural selection and sexual reproduction. Natural selection determines which individuals in a population survive to reproduce, while sexual reproduction allows genes to mix and recombine among offspring. When sperm and egg unite, matching chromosomes align and then partially cross over, exchanging genetic material. Katoch et al. [27] carried out a thorough study of genetic algorithms, covering their historical evolution, current trends, and possible future developments.

The PSO algorithm was first proposed by Kennedy and Eberhart [28], who took inspiration from the social behavior of fish schools and bird flocks. Each candidate solution is represented as a “particle” in the search space, and the method optimizes through cooperation among the particles. Since Kennedy and Eberhart's original work, many variants of PSO have been introduced. Jain et al. [29] gave a thorough review of PSO developments and discussed how well they work on various optimization problems.

ABC is a swarm intelligence method inspired by the foraging behavior of honey bees [30,31]. In ABC, candidate solutions correspond to food sources, and bees are divided into employed, onlooker, and scout bees, each of which plays a role in exploring and exploiting different regions of the search space. ABC has proven successful on a range of continuous and discrete optimization problems, including bin packing, flow shop scheduling, and global numerical optimization [32].

The Discrete Cuckoo Search (DCS) algorithm is an enhanced adaptation of the original Cuckoo Search (CS) method, tailored to combinatorial optimization problems such as the TSP. By reconstructing the population structure and introducing a new category of cuckoos, its authors demonstrated that DCS outperforms several conventional metaheuristic algorithms on benchmark TSP instances from TSPLIB [33].
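The annealing idea described above (random moves that are accepted with a temperature-dependent probability, with the temperature lowered over time) can be sketched for the TSP as follows. This is a minimal illustration, not the SA configuration used in the experiments: the segment-reversal (2-opt style) move, the geometric cooling rate `alpha`, the starting temperature `t0`, and the function names are all assumptions.

```python
import math
import random


def tour_length(tour, coords):
    """Total length of a closed tour that returns to its starting city."""
    return sum(
        math.dist(coords[tour[i]], coords[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def simulated_annealing_tsp(coords, t0=1.0, alpha=0.995, steps=20000, seed=0):
    """Illustrative SA for the TSP (assumed settings): segment-reversal moves,
    Metropolis acceptance, and geometric cooling T <- alpha * T."""
    rng = random.Random(seed)
    n = len(coords)
    current = list(range(n))
    rng.shuffle(current)
    current_len = tour_length(current, coords)
    best, best_len = current[:], current_len
    t = t0
    for _ in range(steps):
        # Propose a neighbor by reversing a random segment of the tour.
        i, j = sorted(rng.sample(range(n), 2))
        candidate = current[:i] + current[i:j + 1][::-1] + current[j + 1:]
        cand_len = tour_length(candidate, coords)
        delta = cand_len - current_len
        # Always accept improvements; accept worse tours with prob. exp(-delta / T).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current, current_len = candidate, cand_len
            if current_len < best_len:
                best, best_len = current[:], current_len
        # Cooling schedule; the floor keeps T strictly positive.
        t = max(t * alpha, 1e-12)
    return best, best_len
```

Early on, a high `t` lets the search accept many uphill moves (exploration); as `t` shrinks, almost only improving moves survive (exploitation). For a 200-city instance, an incremental 2-opt length update would be much faster than recomputing the full tour length at every step; the full recomputation only keeps the sketch short.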

More recently, quantum-inspired metaheuristics have offered fresh perspectives on local minimization in large-scale discrete and continuous optimization problems [34]. Approaches such as quantum-behaved PSO (QPSO) introduce wave-function-based position sampling, which can stochastically “jump” through the search space in a more diverse way than classical PSO [35]. Similarly, quantum annealing frameworks use quantum tunneling to cross high energy barriers more efficiently than purely thermal simulated annealing [36,37]. Despite these advances, most quantum-inspired algorithms target continuous spaces or specialized discrete mappings, and they often lack robust multi-agent collision or repulsion strategies for maintaining population diversity in combinatorial landscapes [38]. Preserving diversity within a swarm or population is a longstanding challenge in metaheuristics [39]. Premature convergence, in which all solutions cluster around a sub-optimal local region, can be particularly severe in multimodal or deceptive problems [40]. For example, adding repulsive forces to swarm-based methods helps prevent swarm collapse [41], but such collision-based repulsion is not always combined with quantum leaps or local search in a unified framework. Against this background, we present the QSA for optimization challenges. The QSA aims to create a synergy among several elements that are rarely integrated in existing methods, namely the following:

Collision-Inspired Repulsion: The QSA formulates this mechanism by considering the probability of collisions between snowflakes in the atmosphere during a snowfall. It introduces a collision term that pushes solutions apart in a continuous auxiliary space. This term helps preserve diversity by reducing the probability that the population gathers in a single region.

Quantum Tunneling: The QSA introduces “tunneling” jumps that help solutions escape from local minima, especially when they are far from the global optimum. This mechanism is inspired by quantum annealing and other quantum-inspired metaheuristics; in other words, the thermal-based search is augmented by this quantum jumping mechanism.

Discrete-Continuous Mapping: Each discrete solution is embedded in a continuous vector that undergoes the collision, tunneling, and thermal motions of the QSA, in a way similar to some discrete PSO adaptations. The new position is then remapped to a discrete solution, so the benefits of swarm-based random motion and quantum leaps apply consistently across permutations, binary vectors, or other combinatorial structures.

The QSA's core collision and diversity maintenance mechanisms are conceptually driven by the snowflake analogy, which reflects rare collisions and the unique crystalline forms of snowflakes. Quantum tunneling, which resembles burrowing through energy barriers and is often missing or understudied in classical swarm intelligence, is driven by a time-varying schedule.
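The three mechanisms listed above can be illustrated with a small NumPy sketch. Everything in it is hypothetical: the text describes the mechanisms only conceptually, so the random-key decoding (argsort of the continuous vector, the smallest-position-value rule used in some discrete PSO variants), the inverse-distance repulsion, the linearly decaying tunneling probability, and all names and constants (`strength`, `p0`, `sigma_thermal`, `jump_scale`) are assumptions rather than the QSA's actual update equations.

```python
import numpy as np


def decode_random_keys(x):
    """Assumed continuous-to-discrete mapping: the k-th visited city is the
    index of the k-th smallest key (random-key / smallest-position-value rule)."""
    return np.argsort(x, kind="stable")


def collision_repulsion(positions, strength=0.01, eps=1e-9):
    """Hypothetical repulsion: each agent is pushed away from every other agent
    with a magnitude proportional to 1 / distance."""
    diff = positions[:, None, :] - positions[None, :, :]     # (m, m, d): p_i - p_j
    dist2 = np.sum(diff ** 2, axis=-1, keepdims=True) + eps  # (m, m, 1) squared distances
    return strength * (diff / dist2).sum(axis=1)             # self-pairs contribute 0


def tunneling_probability(t, t_max, p0=0.3):
    """Hypothetical time-varying schedule: jump probability decays linearly to 0."""
    return max(0.0, p0 * (1.0 - t / t_max))


def qsa_like_step(positions, t, t_max, rng, sigma_thermal=0.05, jump_scale=1.0):
    """One illustrative update: thermal noise + repulsion + occasional tunneling jump."""
    m, d = positions.shape
    new = positions + rng.normal(0.0, sigma_thermal, size=(m, d))  # thermal motion
    new += collision_repulsion(positions)                          # diversity pressure
    jump = rng.random(m) < tunneling_probability(t, t_max)         # who tunnels now
    new[jump] += rng.normal(0.0, jump_scale, size=(int(jump.sum()), d))
    return new
```

In such a scheme, each agent's updated position would be decoded with `decode_random_keys` into a tour and scored with a TSP objective. The repulsion is computed from the pre-update positions so that all agents move simultaneously, which keeps the pairwise forces antisymmetric.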

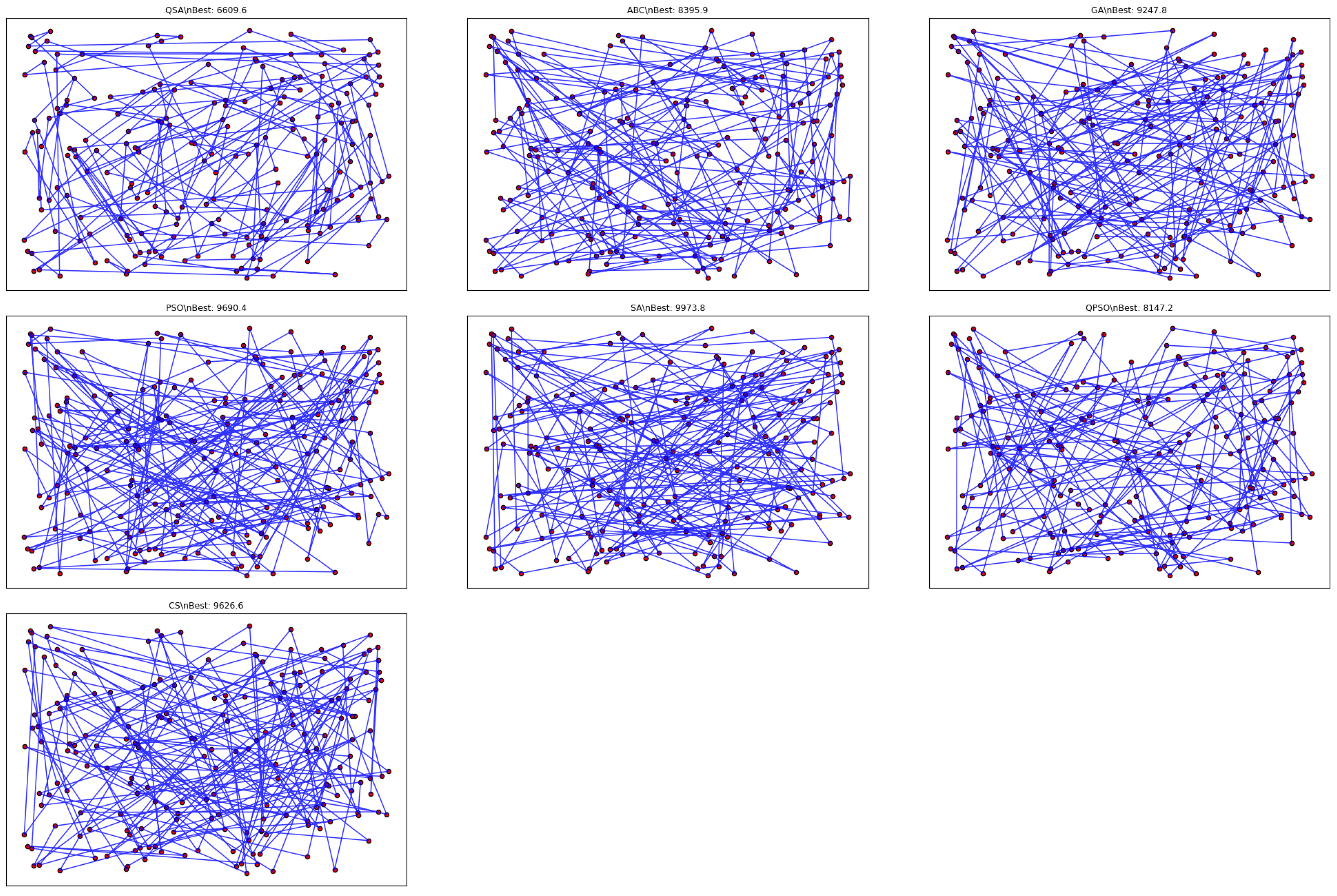

We used a 200-city TSP instance to compare QSA with ABC, GA, SA, PSO, CS, and QPSO. In this experiment, QSA performed better than the other algorithms. QSA also outperformed the other algorithms on the Lévy and HyperSphere test functions.
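For reference, the two continuous benchmarks mentioned above can be written out using their standard textbook definitions. This is a sketch for orientation only; the dimension and search bounds used in the experiments are not given here, so they are left to the caller.

```python
import numpy as np


def sphere(x):
    """HyperSphere (Sphere) function: f(x) = sum_i x_i^2, global minimum 0 at x = 0."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))


def levy(x):
    """Lévy function, global minimum 0 at x = (1, ..., 1), with w_i = 1 + (x_i - 1) / 4:
    f = sin^2(pi w_1) + sum_{i<d} (w_i - 1)^2 [1 + 10 sin^2(pi w_i + 1)]
        + (w_d - 1)^2 [1 + sin^2(2 pi w_d)]."""
    w = 1.0 + (np.asarray(x, dtype=float) - 1.0) / 4.0
    first = np.sin(np.pi * w[0]) ** 2
    wi = w[:-1]
    middle = np.sum((wi - 1.0) ** 2 * (1.0 + 10.0 * np.sin(np.pi * wi + 1.0) ** 2))
    last = (w[-1] - 1.0) ** 2 * (1.0 + np.sin(2.0 * np.pi * w[-1]) ** 2)
    return float(first + middle + last)
```

The Sphere function is unimodal and separable, so it mainly probes convergence speed, whereas the Lévy function has many local minima and tests an algorithm's ability to escape them.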

By combining these mechanisms, the QSA aims to increase search efficiency, achieve a well-rounded exploration–exploitation balance, and provide greater flexibility for combinatorial and high-dimensional optimization tasks. This design is intended to extend the algorithm's use beyond the well-known TSP into a variety of distinct domains, including job-shop scheduling, subset selection in feature engineering, and graph-based network design problems.