Featured Application

The adaptive neuro-fuzzy inference model for video quality assessment can be applied in the real-time monitoring of multimedia streaming services to predict user-perceived quality based on the following objective video parameters: compression rate, frame rate, and spatiotemporal information. This enables dynamic adjustment of streaming session parameters (e.g., designing a bitrate ladder or optimizing video compression settings) to maintain or improve perceived quality and ensures optimal Quality of Experience (QoE) for end-users.

Abstract

Video content and streaming services have become integral to modern networks, driving increases in data traffic and necessitating effective methods for evaluating Quality of Experience (QoE). Accurately measuring QoE is critical for ensuring user satisfaction in multimedia applications. In this study, an optimized adaptive neuro-fuzzy inference model that leverages subtractive clustering for high frame rate video quality assessment is presented. The model was developed and validated using the publicly available LIVE-YT-HFR dataset, which comprises 480 high-frame-rate video sequences and quality ratings provided by 85 subjects. The subtractive clustering parameters were optimized to strike a balance between model complexity and predictive accuracy. A targeted evaluation against the LIVE-YT-HFR subjective ratings yielded a root mean squared error of 2.9091, a Pearson correlation of 0.9174, and a Spearman rank-order correlation of 0.9048, underscoring the model’s superior accuracy compared to existing methods.

1. Introduction

This section provides context for the study. It first presents the motivation for the investigation, then delineates the key research contributions, and concludes with an outline of the manuscript’s organization.

1.1. Motivation

Modern networks are increasingly characterized by a surge in video content, which dominates global data traffic. This proliferation of video is driven by the extensive growth of multimedia streaming, online gaming, digital advertising, and the pervasive influence of social networks [1]. Platforms such as Netflix, YouTube, and social media channels have transformed how audiences consume content, making video an indispensable medium for communication, entertainment, and marketing. In this context, high-frame-rate (HFR) video has drawn significant attention due to its ability to deliver smoother motion and enhanced visual clarity, both essential for immersive streaming experiences, realistic virtual environments, and dynamic advertisements [2]. For instance, recently, HFR videos have been gaining momentum in sports event broadcasting, providing enhanced spectating experiences for the viewers [3]. Moreover, modern consumer devices, ranging from smartphones with high-refresh-rate displays to large, high-definition televisions, are increasingly capable of rendering HFR content, further driving demand for superior video quality. The advent of next-generation mobile networks, such as 5G and beyond, has further accelerated this trend by providing higher data rates at lower latency. These improved network capabilities make it feasible to stream HFR content seamlessly, thereby meeting the rising consumer demand for high-quality, real-time video.

However, despite these technological advancements, accurately assessing the Quality of Experience (QoE) for video streaming remains a formidable challenge. QoE encompasses the overall satisfaction of the user, reflecting not only objective transmission parameters but also the subjective perceptual quality of the content [4]. In the context of HFR content, this challenge is even more pronounced. HFR videos require substantially higher bandwidth and lower latency compared to standard videos, and even minor issues such as subtle motion artifacts, slight compression distortions, or occasional frame drops can disrupt the smooth, fluid motion that is essential for an immersive experience. These transmission impairments, which may go unnoticed in standard metrics, can significantly degrade user satisfaction by reducing the perceived realism and visual clarity [3]. Traditional objective quality metrics often fail to capture these intricate nuances of human perception, especially when HFR content’s demanding nature magnifies the impact of even small errors in transmission [5].

To address these challenges, robust and accurate models for video quality assessment (VQA) are imperative. Such models not only facilitate the optimization of streaming services to ensure a superior viewer experience but also enable network operators and content providers to monitor and dynamically adjust delivery parameters in real time. This work presents an optimized adaptive neuro-fuzzy inference system that leverages subtractive clustering for the accurate prediction of QoE in multimedia applications. The approach integrates key video parameters (video compression rate, frame rate, spatial information, and temporal information) to estimate the Mean Opinion Score (MOS) that closely reflects user perception. These parameters were chosen because compression rate directly influences visible artifacts, while video frame rate determines the smoothness of motion, spatial information captures the complexity and detail within scenes, and temporal information reflects the intensity of movement. Together, they encapsulate the technical and perceptual factors that impact overall video quality.

1.2. Key Research Contributions

This research represents a continuation of our previous work, where a complex fuzzy logic-based model for VQA was developed (as published in [6]). In the current study, that model is enhanced by incorporating neural network techniques into the inference system development. Hence, the following contributions of the current work can be identified:

- The integration of neural network techniques within the fuzzy inference system allows the model to simultaneously learn membership function parameters and rules, resulting in a more adaptive and robust system.

- By employing subtractive clustering with optimized parameters such as range of influence, squash factor, and accept/reject ratios, the model generates a lightweight, compact rule base that minimizes computational demands without sacrificing accuracy.

- The new model achieves lower RMSE (Root Mean Squared Error) and higher PCC (Pearson Correlation Coefficient) and SROCC (Spearman Rank-Order Correlation Coefficient) compared to traditional, as well as recently developed, VQA objective models, enhancing the accuracy of MOS prediction.

1.3. Organization of the Manuscript

The remainder of the paper is organized as follows. Section 2 provides a brief overview of the LIVE-YT-HFR database [3,7], discussing the dataset used for model development and validation. Section 3 describes the design and implementation of the optimized adaptive neuro-fuzzy inference system, highlighting the integration of video parameters and the application of subtractive clustering. Section 4 reviews the comparable VQA models and evaluates their performance metrics on the LIVE-YT-HFR dataset, thus allowing a direct comparison with the developed model. Finally, Section 5 concludes and discusses future research directions.

2. Dataset Used for the Model Development

As discussed earlier, this study relies on the publicly available LIVE-YT-HFR video database, detailed in [3,7]. The database comprises 480 video sequences generated from 16 source videos, each modified in terms of compression and frame rate.

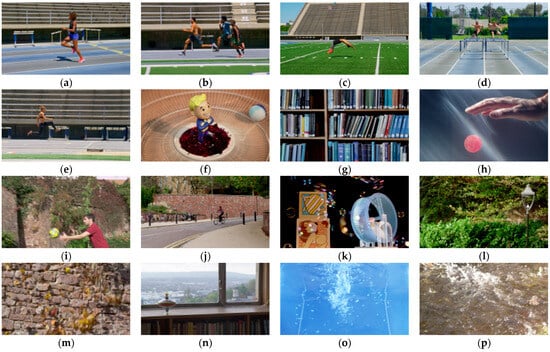

In Figure 1, representative frames from each source video are displayed, highlighting a variety of genres, including sports, natural landscapes, urban settings, and interior environments.

Figure 1.

Extracted frames from the LIVE-YT-HFR database [7]: (a) an individual running; (b) three people running; (c) an individual performing flips; (d) runners clearing hurdles; (e) a long jump; (f) a spinning bobblehead; (g) a bookcase filled with volumes; (h) an underwater ball bounce; (i) two individuals passing a ball; (j) a street cyclist; (k) a hamster running on a wheel; (l) a lamppost in a park; (m) falling leaves; (n) a spinning top; (o) clusters of underwater bubbles; (p) water waves.

2.1. Video-Sequence Preparation and Subjective Quality Assessment

The source material of the video database originates from two video collections. Eleven uncompressed source videos were obtained from the Bristol Vision Institute HFR collection [8], originally recorded at 3840 × 2160 pixels and 120 frames per second (fps). For public release, these videos were spatially downsampled to 1920 × 1080 pixels in YUV 4:2:0 8-bit format and have a duration of 10 s each. In addition, the dataset incorporates five high-motion sports videos captured by Fox Media Group at the same high resolution in YUV 4:2:0 10-bit format, with durations ranging between 6 and 8 s. To ensure a diverse set of visual content, measures such as Spatial Information (SI) and Temporal Information (TI) were computed using the MSU Quality Measurement Tool (version 14.1), compensating for metrics not provided in the public release of the database. In [3], the LIVE-YT-HFR database authors report that the test sequences were generated by applying a frame-dropping technique, which avoids motion blur and produces sequences at varied frame rates. Specifically, 30 test sequences per source were derived by combining six frame rates (24, 30, 60, 82, 98, and 120 fps) with VP9 compression at five Constant Rate Factor (CRF) levels (6 × 5 = 30, giving 16 × 30 = 480 sequences in total). The CRF levels range from lossless (CRF = 0) to very high compression (CRF = 63), with intermediate settings chosen to ensure a uniform bitrate difference.

Alongside test sequences, the database holds the results of the subjective experiments. The subjective study employed the Single-Stimulus Continuous Quality Evaluation (SSCQE) method [9]. A total of 85 undergraduate students (14 females, 71 males, aged 20–30) participated under standardized conditions on a 27-inch display (3840 × 2160 resolution) with a viewing distance of approximately 75 cm. To minimize bias, the sequences were divided into four subsets (120 videos each), and every participant rated 240 videos across two sessions using uniquely randomized playlists. A continuous five-point quality scale was used to collect ratings, and analyses were performed to verify both inter- and intra-subject consistency. The raw data of these experiments are available on the author’s GitHub page [7].

2.2. Dataset Limitations

Conducting subjective QoE studies is inherently resource-intensive and logistically complex, so every publicly available database reflects design trade-offs. LIVE-YT-HFR was selected because it offers a large open-access corpus that pairs high-frame-rate video with collected MOS. Nevertheless, it focuses on a single compression format (VP9) and was generated under impairment-free network conditions; consequently, it does not capture the influence of alternative codecs (e.g., H.264/AVC, H.265/HEVC) or packet loss, rebuffering, and jitter. The accompanying MOS was obtained from 85 university students, which, despite high inter- and intra-subject consistency reported by the database authors, may not fully represent the broader viewing population. These factors could limit the direct generalization of results presented in this work to other delivery scenarios. Future work will therefore retrain and validate the developed VQA model on additional datasets that include multiple codecs, network impairments, and more heterogeneous subject pools, providing a wider assessment of the model’s robustness.

3. VQA Model Design

This section describes how the LIVE-YT-HFR data were partitioned using five-fold cross-validation and multiple random splits, then details the systematic tuning of subtractive clustering parameters and the ensuing ANFIS (Adaptive Neuro-Fuzzy Inference System) training procedure. The section continues by presenting the learned membership functions, the resulting Sugeno FIS (Fuzzy Inference System), the surface visualizations, and sensitivity analysis that reveal how the input variables jointly influence the predicted MOS.

3.1. Data Preparation and Cross-Validation Strategy

To ensure that the sensitivity analysis is not biased by a single, possibly fortuitous, division of the LIVE-YT-HFR data, the 480 video sequences were repartitioned into multiple, statistically independent training/validation pairs before each ANFIS run. First, a stratified five-fold cross-validation scheme was generated: after permuting the sample indices with a fixed pseudo-random seed, the dataset was divided into five folds of equal size (96 samples per fold). For each fold $k$ ($k = 1, \dots, 5$), the $k$-th subset served as the checking (validation) set, while the remaining four folds were used for training, so every video sequence appeared exactly once in validation and four times in training. Second, 30 additional random 80/20 hold-out splits were produced. For each repetition, a new permutation of the indices was drawn, and the first 80% of samples were assigned to the training set and the remaining 20% to the validation set.

In total, 35 distinct partitions (five cross-validation and 30 random splits) were obtained. During the sensitivity study, each candidate clustering configuration was evaluated on each split, and the resulting performance metrics were averaged, as discussed in the next section.
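The partitioning scheme described above can be sketched as follows. This is a minimal illustration in Python rather than the MATLAB code used in the study; the function name `make_partitions`, the seed value, and the use of a plain (non-stratified) shuffle are assumptions made for the example.

```python
import random

def make_partitions(n_samples=480, n_folds=5, n_holdouts=30,
                    train_frac=0.8, seed=0):
    """Return (train_idx, val_idx) pairs: k folds plus random hold-outs.

    The seed value and the plain shuffle are illustrative assumptions;
    no stratification is attempted in this sketch.
    """
    rng = random.Random(seed)
    partitions = []

    # k-fold part: permute once, then cut into equally sized folds.
    idx = list(range(n_samples))
    rng.shuffle(idx)
    fold_size = n_samples // n_folds
    folds = [idx[k * fold_size:(k + 1) * fold_size] for k in range(n_folds)]
    for k in range(n_folds):
        val = folds[k]
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        partitions.append((train, val))

    # Hold-out part: a fresh permutation per repetition, first 80% for training.
    n_train = int(round(train_frac * n_samples))
    for _ in range(n_holdouts):
        perm = list(range(n_samples))
        rng.shuffle(perm)
        partitions.append((perm[:n_train], perm[n_train:]))
    return partitions

partitions = make_partitions()
```

With the default arguments, the first five pairs are the cross-validation folds (384 training and 96 validation samples each) and the remaining 30 are the random 80/20 hold-outs.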

3.2. Subtractive Clustering Parameter Selection

The modeling process begins by generating an initial fuzzy inference system (FIS) with MATLAB’s Fuzzy Logic Toolbox (MATLAB version R2024b) [10], where subtractive clustering is first applied to the normalized input space. The purpose of this step is to group similar data points into compact clusters whose centers will become the prototypes of the fuzzy membership functions; each center, therefore, marks a distinct region of the four-dimensional space spanned by compression rate, frame rate, spatial information, and temporal information. Subtractive clustering is well suited to this task because it selects cluster centers purely from the local data-density distribution, automatically determining both the number and the shape of the resulting fuzzy sets [11].

Conceptually, this data-driven separation is analogous to the blind-source-separation strategy used by Chang et al. to suppress range ambiguities in space-borne SAR (Synthetic Aperture Radar) imagery: by first disentangling mixed-signal components, the subsequent processing stages operate on cleaner, better-isolated inputs, leading to improved performance [12]. In the ANFIS framework created in this study, the clustering phase likewise “decouples” the strongly coupled video parameters, providing a compact yet expressive foundation on which ANFIS can later learn an adaptive rule base. Key parameters in this process and their significance are as follows.

- Range of influence (RoI) defines the radius within the normalized data space in which an individual data point influences the “potential” of a candidate cluster center. Thus, it determines how far the influence of a potential cluster center is computed. A larger range of influence means that each data point affects a broader area, which can lead to a smaller number of clusters.

- After the first cluster center is chosen, the potential of all other data points in the vicinity is reduced (“squashed”) around that center. The squash factor (SF) determines how much the potential is diminished in these regions. A higher squash factor results in a stronger reduction of potential near the selected center, which can prevent overlapping clusters and ensure a clearer separation.

- The accept ratio (AR) sets a threshold (relative to the potential of the first selected cluster center) above which a candidate is automatically accepted as the new center.

- In contrast to the accept ratio, the reject ratio (RR) establishes a threshold below which a candidate is automatically rejected.
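To make the roles of the four parameters concrete, the following is a minimal sketch of subtractive clustering in its textbook (Chiu-style) formulation. It is written in Python for illustration and is not the MATLAB toolbox routine used in the study: it assumes inputs already normalized to [0, 1], a single radius shared by all dimensions, and 0 < RR < AR. The function name `subtractive_clustering` is hypothetical.

```python
import math

def subtractive_clustering(points, roi=0.8, sf=1.1, ar=0.5, rr=0.1):
    """Textbook subtractive clustering on points scaled to [0, 1].

    Illustrative sketch only; assumes 0 < rr < ar and one shared radius.
    """
    alpha = 4.0 / roi ** 2           # neighbourhood weight from the range of influence
    beta = 4.0 / (sf * roi) ** 2     # squash radius = squash factor * range of influence

    def sqdist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Initial potential: how densely each point is surrounded by neighbours.
    potential = [sum(math.exp(-alpha * sqdist(p, q)) for q in points)
                 for p in points]
    first_peak = max(potential)
    centers = []
    while True:
        k = max(range(len(points)), key=potential.__getitem__)
        ratio = potential[k] / first_peak
        if ratio > ar:               # clearly significant: accept
            accept = True
        elif ratio < rr:             # clearly negligible: stop searching
            break
        else:                        # grey zone: trade potential against distance
            d_min = min(math.sqrt(sqdist(points[k], c)) for c in centers)
            accept = d_min / roi + ratio >= 1.0
        if not accept:
            potential[k] = 0.0       # discard this candidate and try the next one
            continue
        centers.append(points[k])
        peak = potential[k]
        # Squash the potential around the newly accepted center.
        potential = [p - peak * math.exp(-beta * sqdist(q, points[k]))
                     for p, q in zip(potential, points)]
    return centers

demo_points = [(0.10, 0.10), (0.12, 0.10), (0.10, 0.12), (0.11, 0.11),
               (0.90, 0.90), (0.88, 0.90), (0.90, 0.88), (0.89, 0.89)]
demo_centers = subtractive_clustering(demo_points, roi=0.5)
```

In this toy example, the two well-separated groups each contribute one center. A larger `roi` widens each point's neighbourhood and tends to yield fewer centers, while a larger `sf` suppresses candidates over a wider area around each accepted center.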

As described in Section 3.1, the video-sequence data were divided into 35 distinct partitions, and the ANFIS framework was implemented in MATLAB (version R2024b). To avoid an arbitrary choice of subtractive clustering parameters, a grid sweep was conducted for every training/validation split over RoI ∈ {0.5, 0.6, 0.7, 0.8}, SF ∈ {1.1, 1.3, 1.5}, AR ∈ {0.5, 0.6, 0.7}, and RR ∈ {0.1, 0.2, 0.3}. RoI values below 0.5 produce small radii and an excessive number of clusters, while values above 0.8 merge dissimilar regions and over-smooth the input space. Furthermore, moderate squash values (1.1–1.5) ensure that neighboring clusters are sufficiently separated, preventing redundant, highly overlapping membership functions. The chosen AR and RR thresholds accept candidate centers whose potential is significant and reject those whose potential is negligible, stabilizing the rule count and avoiding spurious clusters caused by noise. The parameter ranges were therefore selected a priori to balance model compactness and descriptive power.

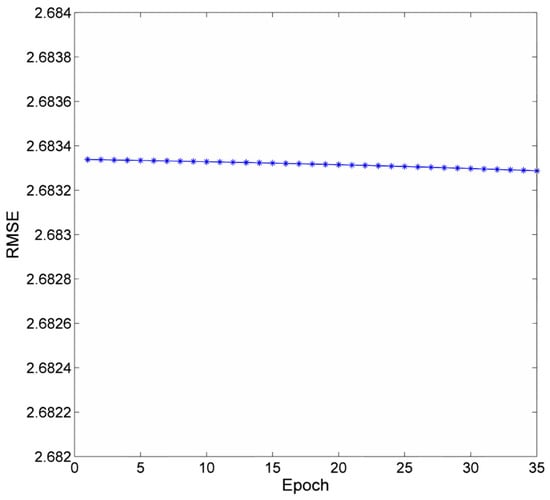

This yielded 4 × 3 × 3 × 3 = 108 configurations per split. A total of 3780 ANFIS trainings were executed (35 partitions × 108 configurations) for 35 epochs each. The combination RoI = 0.8, SF = 1.1, AR = 0.5, and RR = 0.1 achieved the lowest average RMSE (3.080) and the smallest standard deviation (0.317). The ranking of the five best configurations is reported in Table 1, while Figure 2 shows one example of RMSE changes during ANFIS training.
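The size of the search can be checked with a short enumeration. The snippet below is an illustrative Python sketch; the helper `rank_configs` and the layout of its `rmse_log` argument (one list of per-partition RMSE values per configuration) are assumptions, not the study's actual logging format.

```python
from itertools import product
from statistics import mean

roi_values = [0.5, 0.6, 0.7, 0.8]
sf_values = [1.1, 1.3, 1.5]
ar_values = [0.5, 0.6, 0.7]
rr_values = [0.1, 0.2, 0.3]

# Every (RoI, SF, AR, RR) combination is one candidate configuration.
configs = list(product(roi_values, sf_values, ar_values, rr_values))
n_partitions = 35
total_runs = n_partitions * len(configs)

def rank_configs(rmse_log):
    """Sort configurations by mean RMSE across partitions (hypothetical log format)."""
    return sorted(rmse_log, key=lambda cfg: mean(rmse_log[cfg]))
```

Here `len(configs)` evaluates to 108 and `total_runs` to 3780, matching the counts reported above.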

Table 1.

Top-5 subtractive clustering parameter configurations averaged over 35 train/test partitions. Values shown are the mean ± standard deviation (SD) of RMSE and mean PCC. Bold-faced values indicate the chosen parameters used for the ANFIS generation.

Figure 2.

RMSE progression over 35 training epochs on one arbitrarily selected data partition, using the top-ranked subtractive clustering configuration from Table 1.

To verify that the choice of RoI, SF, AR, and RR materially influences prediction accuracy, a non-parametric one-factor Kruskal–Wallis test was run on the complete grid-search log. The test confirms that RoI, SF, and RR needed to be tuned, whereas AR could have been fixed at its middle value without a loss of accuracy (Table 2).

Table 2.

One-factor Kruskal–Wallis analysis of the influence of subtractive clustering parameters (RoI, SF, AR, RR) on RMSE.

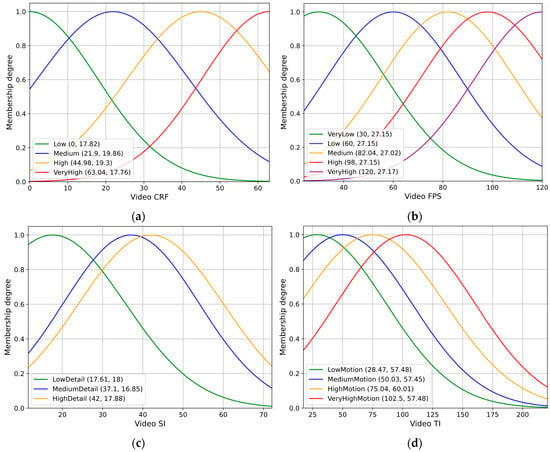

3.3. Membership Functions and Compact Rule Base

The resulting membership functions for the chosen set of RoI, SF, AR, and RR values are depicted in Figure 3; the subplots also illustrate the linguistic interpretations that were assigned to the fuzzy clusters that serve as antecedents in the rule base.

Figure 3.

Membership functions that describe the fuzzy clusters of the input parameters. Note that the subplot legends show linguistic meanings of the fuzzy clusters, as well as the properties of the Gaussian functions in a form (mean, st. dev.): (a) video CRF; (b) video FPS; (c) video SI; (d) video TI.

In addition, the ANFIS framework derives a set of fuzzy IF-THEN rules, wherein each rule associates a unique combination of input membership functions with a corresponding output value using a weighted average approach [10,13]. The final rule set, presented in Table 3, maps these combinations to the predicted MOS values, encapsulating the optimized decision-making process of the model. As seen from Table 3, the model operates on seven rules, making it lightweight and less complex compared to the previous model [6], which relied on 36 rules. Furthermore, it is important to note that, since the ANFIS framework employs a Sugeno-type FIS, the output variable is generated as a weighted combination of rule consequents rather than through membership functions.

Table 3.

The fuzzy rules used in the ANFIS model. The header row lists the four input parameters and the model’s output, predicted MOS rating. Each rule represents a conjunction of input conditions (using the AND operator). For example, rule 1 indicates that IF Video CRF is High AND Video FPS is Low AND SI is HighDetail AND TI is HighMotion THEN the MOS rating corresponds to the OutputFunction1 whose parameters are listed in the last column of the table (i.e., the coefficients used in Equation (4)).

The Sugeno-type FIS is particularly well-suited for VQA due to its computational efficiency and streamlined rule evaluation [14]. In Mamdani systems, each rule’s fuzzy output must be aggregated and then defuzzified, for instance by a resource-intensive centroid computation. The Sugeno framework instead computes each rule’s crisp output via a simple linear function, as exemplified in Table 3, and then forms the final prediction as a weighted average of those rule outputs. In this study, only seven rules are evaluated per inference, compared to 36 in the previous Mamdani-based model [6]. Since each rule in a Sugeno FIS requires only membership-degree lookups and a small number of additions for the consequent’s linear function, the overall inference complexity scales approximately as $O(R \cdot n)$, with $R$ rules and $n$ inputs, rather than requiring the much heavier integration steps demanded by Mamdani’s centroid method, whose cost additionally grows with the resolution $M$ of the output universe. This reduction in rule count and the use of weighted-average defuzzification translate directly into lower per-sample compute and memory overhead, making the ANFIS-VQA model more suitable for real-time monitoring of streaming services.

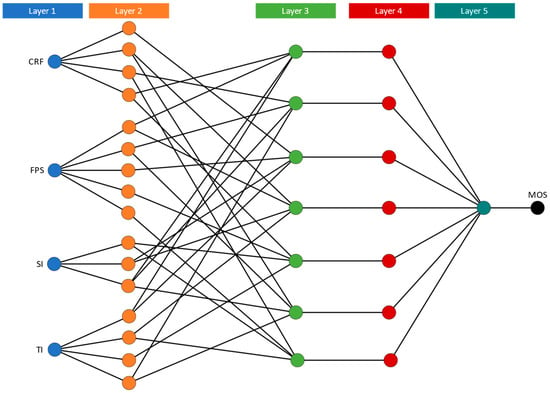

After fuzzification and the formulation of fuzzy rules, the Sugeno-type FIS employed in the ANFIS model produces a crisp output, the predicted MOS rating, through defuzzification. Specifically, the fuzzy inference process is structured into five layers (Figure 4) that collectively convert the raw input values into this output. The mathematical description of the inference logic in Equations (1)–(5) follows [13].

Figure 4.

ANFIS structure of the VQA model.

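In Layer 1, each node computes the membership degree of an input using Gaussian membership functions (Figure 3), thereby mapping the raw input $x_j$ to a membership value via:

$$\mu_{ij}(x_j) = \exp\left(-\frac{(x_j - c_{ij})^2}{2\sigma_{ij}^2}\right) \tag{1}$$

where $c_{ij}$ and $\sigma_{ij}$ are the center and spread of the Gaussian function, respectively. Layer 2 determines the firing strength of each fuzzy rule by applying the AND operator with the product method. For a rule with $n$ inputs, the firing strength is calculated as:

$$w_i = \prod_{j=1}^{n} \mu_{ij}(x_j) \tag{2}$$

where $\mu_{ij}(x_j)$ is the membership degree of the $j$-th input in the $i$-th fuzzy set. In Layer 3, these firing strengths are normalized by dividing each by the sum of all firing strengths (with $R$ being the total number of rules):

$$\bar{w}_i = \frac{w_i}{\sum_{k=1}^{R} w_k} \tag{3}$$

Layer 4 computes the crisp output for each rule using a linear function of the inputs:

$$f_i = \sum_{j=1}^{n} p_{ij}\, x_j + r_i \tag{4}$$

where the coefficients $p_{ij}$ and $r_i$ represent the consequent parameters (see the last column in Table 3). Finally, Layer 5 aggregates these outputs through weighted average defuzzification:

$$\mathrm{MOS}_{\mathrm{pred}} = \sum_{i=1}^{R} \bar{w}_i f_i = \frac{\sum_{i=1}^{R} w_i f_i}{\sum_{i=1}^{R} w_i} \tag{5}$$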

This layered process ensures that the entire fuzzy reasoning, including the embedded implication and aggregation steps characteristic of Sugeno-type systems, is efficiently synthesized into a single, interpretable output that can be directly compared with actual quality measurements.
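The five layers can be traced in a few lines of code. The sketch below is an illustrative Python forward pass for a first-order Sugeno system with Gaussian membership functions and product AND. The rule container (a dictionary with `"mf"` and `"coef"` entries), the function names, and the numbers in `demo_rules` are placeholders and do not reproduce the trained parameters of Table 3.

```python
import math

def gaussian(x, center, sigma):
    """Layer 1: Gaussian membership degree of a single input."""
    return math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2))

def sugeno_predict(x, rules):
    """First-order Sugeno inference.

    x     : list of n input values
    rules : list of dicts with
            "mf"   -> n (center, sigma) pairs, one per input
            "coef" -> n linear coefficients followed by the constant term
    """
    firing, outputs = [], []
    for rule in rules:
        # Layers 1-2: product of the membership degrees gives the firing strength.
        w = 1.0
        for xj, (c, s) in zip(x, rule["mf"]):
            w *= gaussian(xj, c, s)
        firing.append(w)
        # Layer 4: linear consequent of the rule.
        *p, r = rule["coef"]
        outputs.append(sum(pj * xj for pj, xj in zip(p, x)) + r)
    # Layer 3: normalize the firing strengths (assumes at least one rule fires).
    total = sum(firing)
    normalized = [w / total for w in firing]
    # Layer 5: weighted average of the rule outputs.
    return sum(wn * f for wn, f in zip(normalized, outputs))

# Placeholder rule base with one input and two constant-output rules.
demo_rules = [
    {"mf": [(0.0, 0.5)], "coef": [0.0, 10.0]},
    {"mf": [(1.0, 0.5)], "coef": [0.0, 20.0]},
]
demo_prediction = sugeno_predict([0.5], demo_rules)
```

Because the toy input lies midway between the two rule centers, both rules fire equally and the prediction is the average of the two constant outputs. The cost per inference is one pass over the rules and inputs, which is the source of the low per-sample overhead discussed earlier.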

3.4. Surface Visualisation and Sensitivity Analysis

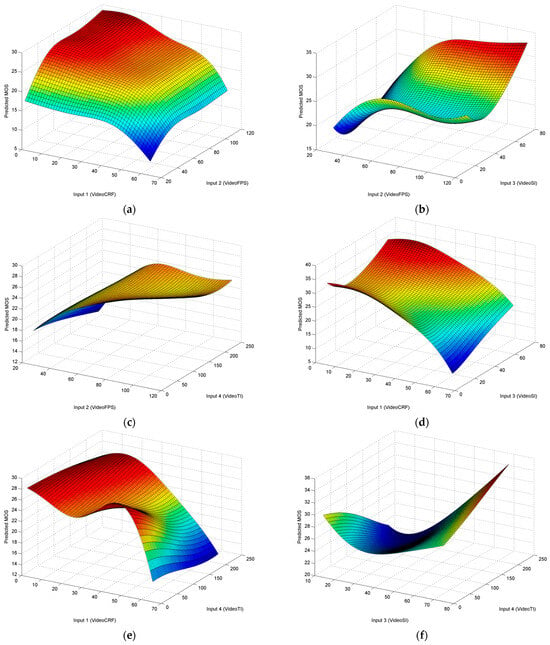

Based on the process described above, as well as the membership functions shown in Figure 3 and the fuzzy rule set listed in Table 3, insight can be gained into how the input parameters collectively influence the output. Figure 5 presents a series of surface subplots that illustrate the relationship between all possible pairs of input parameters and the predicted MOS rating, revealing the nonlinear interactions captured by the neuro-fuzzy inference system.

Figure 5.

The relationship between pairs of input parameters and the output (Predicted MOS): (a) video FPS and CRF; (b) video FPS and SI; (c) video FPS and TI; (d) video CRF and SI; (e) video CRF and TI; (f) video SI and TI.

The visualizations demonstrate that the interplay among variables (specifically, compression rate expressed via CRF levels, frame rate, spatial information, and temporal information) is highly complex. For example, the effect of compression on perceived quality can vary dramatically depending on the frame rate (Figure 5a), the level of spatial detail present in the video (Figure 5d), or the amount of motion in the scene (Figure 5e). In addition, the combined impact of spatial and temporal information on user perception is not simply additive, but rather synergistic, resulting in subtle distortions that are challenging to predict with traditional models (Figure 5f).

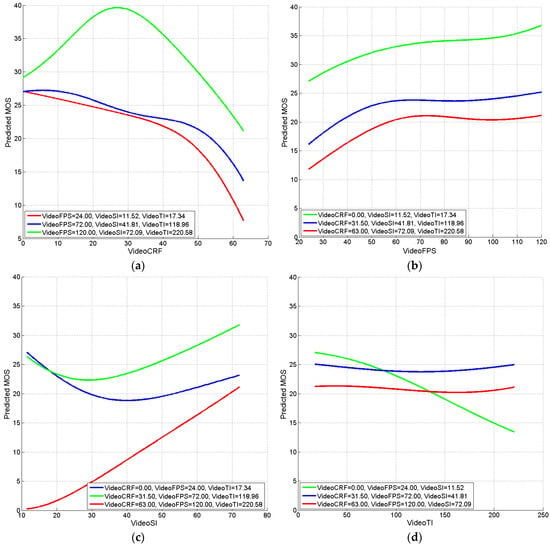

Building on these insights, a targeted sensitivity analysis was conducted to quantify how each input alone drives the MOS prediction under varying context levels of the other three parameters. In Figure 6, four subplots (a–d) show the predicted MOS as one input sweeps from its minimum to maximum value, while the remaining inputs are fixed at low, medium, or high settings.

Figure 6.

Sensitivity analysis of the ANFIS-VQA model. Three input variables remained fixed while varying: (a) VideoCRF; (b) VideoFPS; (c) VideoSI; (d) VideoTI.

As seen in Figure 6a, an increase in VideoCRF leads to a sharp decline in MOS, most dramatically when frame rate, spatial information, and temporal information are all low. This behavior aligns with expectations since heavily compressed video with fast scene changes and dynamic content will suffer more perceptible artifacts. Under high-quality settings, the model remains somewhat robust yet still exhibits quality loss at extreme compression, reflecting its sensitivity to subtle distortions. Figure 6b shows that as the frame rate rises, the MOS prediction increases in a nonlinear manner, especially when other parameters are low, because fluid motion at high FPS mitigates the impact of spatial or temporal shortcomings; improvements taper off beyond around 100 fps, indicating a saturation of the smoothness benefit. In Figure 6c, boosting spatial information yields a consistent uplift in MOS regardless of compression, frame rate, or motion complexity, demonstrating that richer scene detail uniformly enhances perceived quality. Finally, Figure 6d reveals that temporal information has a mildly nonmonotonic effect in low-quality contexts. Predicted MOS improves at moderate motion levels, then plateaus or slightly decreases at very high motion, reflecting the model’s recognition that excessive motion complexity can overwhelm viewers, whereas in high-quality scenarios, increased temporal detail produces a steady gain in perceived quality.
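A one-at-a-time sweep of this kind can be sketched as follows. The Python code is illustrative only: `sweep_input` is a hypothetical helper, `toy_model` is a placeholder and not the trained ANFIS-VQA model, and fixing the remaining inputs at the same fraction of their ranges (0, 0.5, 1) is an assumed way of defining the low, medium, and high contexts. The CRF and frame-rate ranges follow Section 2.1.

```python
def sweep_input(predict, ranges, vary, context_levels=(0.0, 0.5, 1.0), steps=50):
    """Sweep one input across its range for several fixed contexts.

    predict : callable taking a dict of input values
    ranges  : dict mapping input name -> (min, max)
    vary    : name of the input being swept
    Returns {context_level: [(value, prediction), ...]}; steps must be >= 2.
    """
    lo, hi = ranges[vary]
    curves = {}
    for level in context_levels:
        # Fix every other input at the same fraction of its own range.
        fixed = {name: a + level * (b - a)
                 for name, (a, b) in ranges.items() if name != vary}
        curve = []
        for s in range(steps):
            value = lo + (hi - lo) * s / (steps - 1)
            curve.append((value, predict({**fixed, vary: value})))
        curves[level] = curve
    return curves

def toy_model(v):
    """Placeholder predictor used only to exercise the sweep."""
    return 100.0 - v["crf"] + 0.1 * v["fps"]

demo_ranges = {"crf": (0.0, 63.0), "fps": (24.0, 120.0)}
demo_curves = sweep_input(toy_model, demo_ranges, vary="crf", steps=5)
```

Each entry of `demo_curves` is one curve of the kind plotted in a sensitivity subplot: the swept input on the horizontal axis and the prediction on the vertical axis, for one context level.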

This complexity underscores the fact that user perception of HFR video quality is influenced by multiple interdependent factors and that a sophisticated modeling approach, like the neuro-fuzzy system, is required to capture these multidimensional relationships. Ultimately, the surface plots (Figure 5) and the sensitivity analysis (Figure 6) not only illustrate the direct effects of individual inputs but also highlight how their interactions drive the overall quality assessment, emphasizing the necessity for a robust, adaptive model in real-world multimedia applications. Moreover, the need for such a model becomes even more critical in scenarios involving HFR streaming over mobile networks, where rapidly changing radio conditions can significantly impact end-user performance. In these environments, timely adjustments of streaming parameters, such as redesigning the bitrate ladder or optimizing video compression settings, are essential to ensure uninterrupted video playback and an optimal user experience.

4. Review and Comparative Evaluation of VQA Models

This section first reviews related work on VQA models, highlighting their strengths and limitations from the perspective of HFR video quality evaluation. The models under consideration include traditional metrics such as PSNR (Peak Signal-to-Noise Ratio), SSIM (Structural Similarity Index) [15], MS-SSIM (Multi-Scale SSIM) [16], and FSIM (Feature Similarity Index) [17], as well as advanced approaches like ST-RRED (Spatio-Temporal Reduced Reference Entropy Difference) [18], SpEED (Spatial Efficient Entropic Differencing) [19], FRQM (Frame Rate Quality Metric) [20], DeepVQA [21], GSTI (Gradient-based Spatio-Temporal Index) [22], several variants of AVQBits [23] (AVQBits|M3, AVQBits|M1, AVQBits|M0, AVQBits|H0|s, AVQBits|H0|f), VMAF (Video Multi-method Assessment Fusion) [5,24], and the recently developed FLAME-VQA [6] and BH-VQA (Blind High Frame Rate Video Quality Assessment) [2]. Second, all models were evaluated on the LIVE-YT-HFR video database, and their performances were compared against the current model using standard metrics (namely RMSE, PCC, and SROCC). The test results for the models were taken directly from the experiments reported in [3], except BH-VQA, FLAME-VQA, and AVQBits, whose results were taken from [2,6,23], respectively.
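For reference, the three comparison metrics can be computed as in the following Python sketch. It uses the standard definitions (RMSE, Pearson correlation, and Spearman correlation as the Pearson correlation of average ranks). The function names are chosen for the example, and no score mapping or fitting step is applied before the metrics are computed, which is an assumption of this sketch.

```python
import math

def rmse(pred, target):
    """Root mean squared error between predicted and subjective scores."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred))

def pearson(x, y):
    """Pearson correlation coefficient (PCC)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman rank-order correlation coefficient (SROCC)."""
    return pearson(average_ranks(x), average_ranks(y))
```

RMSE measures absolute prediction error on the MOS scale, PCC measures linear agreement, and SROCC measures whether the predicted ordering of sequences matches the subjective ordering, so a monotone but nonlinear predictor can reach an SROCC of 1 with a PCC below 1.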

4.1. Review of VQA Models

This subsection begins by reviewing the most commonly used objective metric for video quality evaluation: PSNR. PSNR is a full-reference metric that quantifies video quality by comparing the original video with a distorted version. It is computed as a logarithmic ratio of the squared maximum possible pixel value to the mean squared error (MSE) between the two images or video frames [25]. While PSNR is straightforward and computationally efficient, its primary limitation lies in its inability to capture perceptual quality, particularly for HFR content. In HFR videos, the smoothness of motion and subtle temporal artifacts play a crucial role in user experience, yet PSNR only measures pixel-level differences without considering these temporal dynamics or taking into account a user’s perceptual experience. As a result, PSNR may report high quality despite the presence of perceptible motion artifacts or compression distortions, making it less reliable for evaluating HFR video content in terms of human visual perception.
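As a brief illustration, the standard PSNR definition, 10·log10(MAX² / MSE), can be written in Python as below. The function name `psnr`, the flat-list frame representation, and the default peak value of 255 (8-bit content) are assumptions made for the example.

```python
import math

def psnr(reference, distorted, max_value=255.0):
    """PSNR in dB between two equally sized frames given as flat pixel lists.

    max_value is the peak pixel value (255 for 8-bit content, an assumption here).
    """
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)
```

The computation involves only per-pixel differences within a single frame pair, which is why the metric is cheap to evaluate but blind to the temporal effects discussed above.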

The SSIM metric, introduced by Wang et al. [15], represents an advancement over traditional PSNR metrics by evaluating video quality based on perceptual phenomena. SSIM assesses the similarity between the original and distorted images by comparing luminance, contrast, and structural information within local windows. This approach is designed to align more closely with human visual perception, as it accounts for structural distortions that are often more noticeable to the human eye than simple pixel-level errors. Building on the strengths and limitations of SSIM, MS-SSIM [16] further refines quality assessment by evaluating image quality across multiple scales. In this approach, images are decomposed into several resolutions, and the SSIM index is computed at each scale. These individual scores are then aggregated into a single overall quality measure that captures both coarse and fine perceptual details more effectively. MS-SSIM generally achieves a higher correlation with human subjective assessments compared to standard SSIM, as it is more sensitive to distortions that affect image details at different resolutions.

The next step in the evolution of similarity indexes is FSIM. It leverages phase congruency, which serves as a robust measure of feature significance, alongside gradient magnitude to quantify the similarity between the original and distorted images [17]. Unlike SSIM, which primarily compares luminance, contrast, and structural information, FSIM emphasizes the preservation of visual features, thus providing a more perceptually relevant quality assessment. This metric has demonstrated superior performance in capturing subtle details and distortions, particularly in scenarios where fine structural information is paramount. However, SSIM, MS-SSIM, and FSIM all exhibit limitations when applied to HFR video content. While SSIM generally provides a better correlation with subjective quality ratings than PSNR, it does not capture the temporal dynamics of HFR sequences where, as discussed earlier, temporal smoothness and subtle motion artifacts are critical to user experience. MS-SSIM and FSIM improve the spatial evaluation but, as frame-based measures, share the same inability to account for temporal dynamics.

Contrary to models reviewed thus far, which focus on pixel differences, structural similarity, or feature preservation, both ST-RRED [18] and SpEED [19] utilize entropy-based approaches to assess video quality by measuring the differences in information content between the reference and distorted signals. ST-RRED focuses on computing entropy differences over local spatiotemporal regions using a reduced-reference strategy, which highlights changes in texture and motion consistency. In contrast, SpEED extends this concept by evaluating entropy differences across multiple scales and temporal windows, thereby providing a more comprehensive view of both spatial and temporal distortions while emphasizing computational efficiency. Together, these metrics offer complementary insights into how subtle degradations, such as compression artifacts and motion irregularities, affect perceived video quality, especially in HFR content.

The FRQM introduces a different approach to evaluating video quality with a focus on frame rate variations. As discussed by Zhang et al. in [20], it is designed to capture the impact of frame rate on the overall perceptual quality by jointly assessing spatial fidelity and temporal smoothness. Unlike metrics such as SpEED, which evaluate general spatio-temporal entropy differences, FRQM explicitly models the influence of frame rate variations, addressing the unique challenges posed by HFR video content. It quantifies how deviations from a target frame rate, along with associated artifacts, degrade the visual experience. The authors report that FRQM improved the prediction accuracy over all other tested VQA methods (available at the time) with relatively low complexity.

DeepVQA represents a modern, deep-learning-based approach to video quality assessment that significantly departs from traditional entropy or signal-based metrics. DeepVQA leverages convolutional neural networks to automatically learn hierarchical features from video data, capturing both spatial details and temporal dynamics in a unified framework [21]. The network is trained end-to-end on large datasets of video sequences paired with subjective quality scores, enabling it to directly predict the MOS based on complex visual and motion characteristics. Another modern VQA model suitable for HFR video quality evaluation is GSTI. GSTI computes local gradients to capture the loss of spatial detail, as well as variations in motion dynamics that indicate temporal distortions [22]. By emphasizing the changes in gradient magnitudes, GSTI can differentiate between perceptually significant degradations and minor, non-intrusive variations. Moreover, the method is designed with computational efficiency in mind, facilitating its use in real-time applications.

Ramachandra Rao et al. [23] propose the AVQBits model in several variants, namely AVQBits|M3, AVQBits|M1, AVQBits|M0, AVQBits|H0|s, and AVQBits|H0|f, to predict video quality more precisely by accounting for bit rate variations and content complexity. The “M” versions primarily differ in their modeling of the bit rate impact on perceptual quality, with each variant (M3, M1, M0) representing a different parameter configuration that balances model complexity and sensitivity. In contrast, the “H0” variants, distinguished by the suffixes “s” and “f”, incorporate additional considerations: the “s” version emphasizes spatial characteristics, while the “f” variant focuses on finer-grained features. Another state-of-the-art VQA model is VMAF, developed by Netflix [24]. Unlike traditional metrics such as PSNR or SSIM, which each focus on a single aspect of quality, VMAF fuses multiple quality indicators, combining pixel-level fidelity, structural similarity, and perceptual features, using machine-learning techniques. This fusion process results in a more robust assessment of both spatial and temporal artifacts, which is particularly valuable for high-resolution and HFR content [5].

Lu et al. introduced BH-VQA, the first blind metric specifically designed for HFR VQA [2]. BH-VQA extracts multi-scale spatiotemporal features, such as motion coherence and texture complexity, and employs a trained regression model to predict MOS without requiring a reference video. When evaluated on the LIVE-YT-HFR dataset, it achieved high correlations with subjective ratings, demonstrating its suitability for real-time, no-reference quality monitoring in HFR streaming scenarios. Lastly, FLAME-VQA is a comprehensive VQA model developed in earlier work [6]. This model is based on an FIS that uses the same input parameters as the current model but relies on a Mamdani rule-based framework with membership functions generated via FCM (Fuzzy C-Means) clustering. In FLAME-VQA, the rules were manually defined and subsequently adjusted through domain expertise and rule induction, resulting in a relatively complex rule base of 36 rules. Moreover, to increase the model accuracy, a weight was assigned to each rule to fine-tune its contribution to the aggregated and defuzzified result. In contrast, the current model employs the ANFIS framework to automatically generate fuzzy rules and optimize membership functions, yielding a more compact and efficient model with fewer rules while maintaining prediction accuracy.

In the next subsection, the discussed VQA models are evaluated by comparing their predicted MOS ratings against user MOS ratings from the LIVE-YT-HFR database for 480 video sequences, using RMSE, PCC, and SROCC as performance metrics. Due to their inherent simplicity and reliance on basic pixel-level or similarity measures, traditional metrics like PSNR and SSIM are expected to yield higher prediction errors when estimating MOS, as they often fail to capture the nuanced distortions in HFR content. In contrast, advanced metrics that incorporate multi-scale analysis, feature preservation, and/or spatio-temporal dynamics (such as FSIM, ST-RRED, SpEED, GSTI, or VMAF) are anticipated to more accurately reflect the perceptual quality. While conventional methods offer computational efficiency, their limited sensitivity to the temporal and structural complexities of modern video signals suggests that they may not perform as well under conditions demanding fine-grained quality prediction.
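The three performance metrics named above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the evaluation code used in this study; the function names and the sample values at the bottom are hypothetical, and any score-mapping step that a particular benchmark might apply before computing RMSE or PCC is deliberately left out.

```python
import math


def rmse(pred, truth):
    """Root mean squared error between predicted and subjective scores."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(truth))


def pearson(x, y):
    """Pearson correlation coefficient (PCC) of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank-order correlation (SROCC): PCC computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Made-up scores for illustration only; not taken from LIVE-YT-HFR.
    user_mos = [35.2, 48.9, 61.0, 72.4, 80.1]
    pred_mos = [38.0, 47.5, 58.3, 75.0, 79.2]
    print("RMSE :", rmse(pred_mos, user_mos))
    print("PCC  :", pearson(pred_mos, user_mos))
    print("SROCC:", spearman(pred_mos, user_mos))
```

RMSE measures the absolute deviation on the MOS scale, PCC measures linear agreement, and SROCC measures only whether the predicted ordering of sequences matches the subjective ordering, which is why the three are usually reported together.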

4.2. Performance Comparison

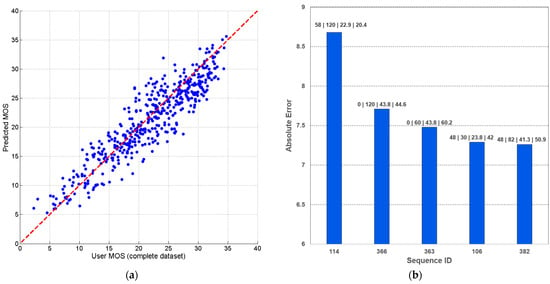

In this section, the performance of the VQA models reviewed earlier is evaluated using the LIVE-YT-HFR dataset. For the sake of clarity, the model developed within this study is referred to as the new ANFIS-VQA model. The discussion starts with Figure 7a, which illustrates how the new ANFIS-VQA model’s predictions align with the ground truth MOS ratings from the complete LIVE-YT-HFR dataset. The points follow an upward-sloping linear trend, indicating that the model consistently approximates viewer perception. This tight clustering around the best-fit line indicates that the model effectively captures the nonlinear relationships among the input variables used for the modeling, yielding accurate MOS predictions across a diverse range of video content.

Figure 7.

Performance of the new ANFIS-VQA model: (a) correlation between the user MOS ratings in the LIVE-YT-HFR database and the predicted MOS for 480 video sequences; (b) top five test sequences with the largest prediction error (the sequence properties are listed on top of the bars in the format: CRF | FPS | SI | TI).

To investigate the limitations of the new ANFIS-VQA model, the five video sequences with the largest absolute prediction errors were identified (Figure 7b). The worst-case sequences reveal two primary failure modes: sequences with very high compression (e.g., sequence ID 114, CRF = 58, FPS = 120, SI = 22.9, TI = 20.4, error = 8.68) and sequences with low spatial detail or moderate motion (e.g., sequences 366 and 363, CRF = 0 but SI ≈ 44, TI ≈ 44–60, error ≈ 7.7). These cases suggest that, under extreme codec settings or atypical combinations of spatial/temporal statistics, the model’s generalization is challenged. In parallel, rule-activation sparsity was measured by counting, for each of the 480 samples, how many of the seven rules had non-negligible firing strength (above 0.01). On average, only 4.76 ± 1.36 rules are active per prediction, demonstrating that the inference remains sparse and that most outputs are driven by a subset of the rule base. This sparsity underpins the model’s computational efficiency and interpretability: even though seven rules exist, each prediction typically engages fewer than five, reducing evaluation overhead while preserving predictive power.
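The rule-activation count described above can be sketched as follows. This is a hedged illustration rather than the procedure actually used in this study: it assumes Gaussian membership functions, a product t-norm for the rule antecedents, and firing strengths normalized to sum to one before the 0.01 threshold is applied. The three-rule base `EXAMPLE_RULES`, its centers and widths, and the inputs scaled to [0, 1] are all hypothetical.

```python
import math
import statistics


def gaussian(x, center, sigma):
    """Gaussian membership degree of x for a fuzzy set (center, sigma)."""
    return math.exp(-0.5 * ((x - center) / sigma) ** 2)


def firing_strengths(sample, rules):
    """Firing strength per rule: product of the per-input memberships."""
    strengths = []
    for rule in rules:
        w = 1.0
        for x, (center, sigma) in zip(sample, rule):
            w *= gaussian(x, center, sigma)
        strengths.append(w)
    return strengths


def active_rule_count(sample, rules, threshold=0.01, normalize=True):
    """Number of rules whose (optionally normalized) strength exceeds threshold."""
    w = firing_strengths(sample, rules)
    if normalize:
        total = sum(w)
        if total > 0:
            w = [wi / total for wi in w]
    return sum(1 for wi in w if wi > threshold)


# Hypothetical rule base: one (center, sigma) pair per input, in the order
# (CRF, FPS, SI, TI), with all inputs assumed to be scaled to [0, 1].
EXAMPLE_RULES = [
    [(0.1, 0.15), (0.2, 0.15), (0.3, 0.15), (0.3, 0.15)],
    [(0.5, 0.15), (0.5, 0.15), (0.5, 0.15), (0.5, 0.15)],
    [(0.9, 0.15), (0.8, 0.15), (0.7, 0.15), (0.7, 0.15)],
]

if __name__ == "__main__":
    # Made-up samples for illustration only.
    samples = [(0.1, 0.2, 0.3, 0.3), (0.3, 0.35, 0.4, 0.4), (0.9, 0.8, 0.7, 0.7)]
    counts = [active_rule_count(s, EXAMPLE_RULES) for s in samples]
    print("active rules per sample:", counts)
    print("mean:", statistics.mean(counts), "sample SD:", statistics.stdev(counts))
```

Whether the threshold is applied to raw or normalized firing strengths changes the count, so the `normalize` flag is exposed explicitly; the text above does not state which convention was used.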

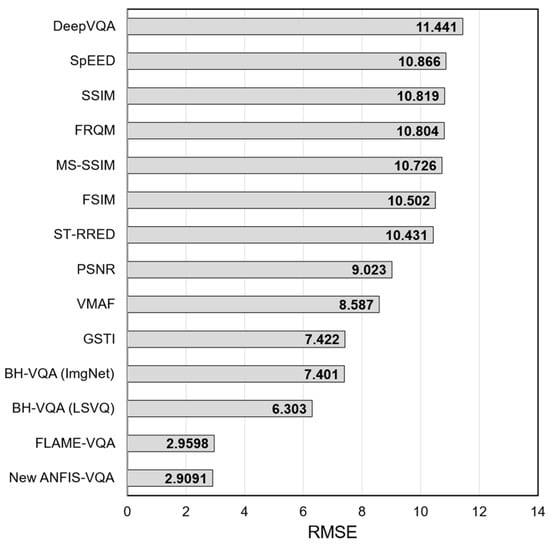

Figure 8 presents the RMSE for each of the evaluated VQA models, except AVQBits, for which RMSE values were not reported in the original research [23]. The two best-performing methods, i.e., the new ANFIS-VQA (2.9091) and FLAME-VQA (2.9598), stand out with markedly lower RMSE scores than all other approaches. Their close scores suggest that both fuzzy logic-based frameworks successfully capture key perceptual factors, with the new ANFIS-VQA demonstrating competitive accuracy while potentially offering advantages in rule generation and interpretability. By contrast, the two BH-VQA versions (6.303 and 7.401 for ImgNet and LSVQ, respectively), GSTI (7.422), and VMAF (8.587) register moderately higher RMSE, indicating they still struggle with certain aspects of the dataset’s content. Traditional or less perceptually driven metrics such as PSNR (9.023), ST-RRED (10.431), FSIM (10.502), and MS-SSIM (10.726) exhibit larger deviations from subjective ratings, reflecting limitations in capturing motion- and detail-related distortions. Finally, FRQM (10.804), SSIM (10.819), SpEED (10.866), and DeepVQA (11.441) occupy the higher end of the RMSE spectrum, implying these methods are less aligned with the subjective scores for the database under these conditions.

Figure 8.

Achieved RMSE values between different VQA models (lower is better).

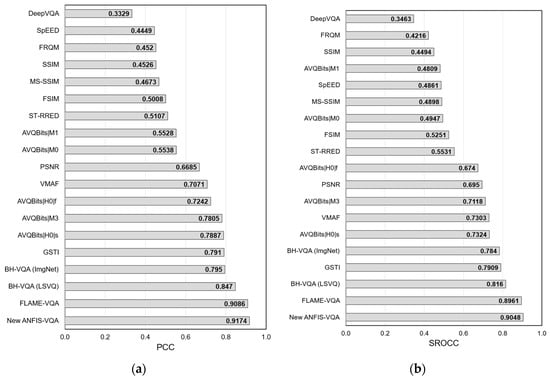

Furthermore, the performance of the VQA models was compared using both PCC and SROCC metrics (Figure 9a and Figure 9b, respectively). The top performers, the new ANFIS-VQA (PCC = 0.9174, SROCC = 0.9048) and FLAME-VQA (PCC = 0.9086, SROCC = 0.8961), demonstrate the strongest correlations with user ratings. Additionally, BH-VQA (LSVQ version) shows a strong correlation (PCC = 0.847, SROCC = 0.816), suggesting that its deep neural network-based quality-aware feature extractor effectively captures the temporal distortions brought by frame-rate variations and object and camera motion. GSTI (PCC = 0.791, SROCC = 0.7909) and some AVQBits variants (e.g., AVQBits|H0|s and AVQBits|M3) achieve moderate correlations, indicating that their designs partially capture the perceptual aspects of HFR video quality. VMAF shows a respectable correlation, though it does not match the performance of the fuzzy logic-based approaches. In contrast, classical metrics such as PSNR, SSIM, and MS-SSIM exhibit weaker alignment with subjective perception due to their limited ability to capture subtle motion artifacts and high-frame-rate dynamics. Advanced methods like ST-RRED, FSIM, FRQM, SpEED, and DeepVQA display varying performance but generally fall short compared to the leading models. For the sake of clarity, Table 4 summarizes the results depicted in Figure 8 and Figure 9.

Figure 9.

Achieved PCC (a) and SROCC (b) values (higher is better) on the LIVE-YT-HFR video database with different VQA models.

Table 4.

Comparative table of the achieved performance metrics.

These results align with earlier discussions, which anticipated that simpler, pixel-level metrics would yield higher prediction errors and weaker correlations with MOS. Notably, the new ANFIS-VQA model achieves comparable or superior accuracy with seven rules, in contrast to the 36-rule complexity of FLAME-VQA. This significant reduction in rule complexity not only minimizes computational overhead but also highlights the effectiveness of integrating adaptive neuro-fuzzy techniques to capture the perceptual quality of HFR video content.

All comparative metrics reported for BH-VQA, DeepVQA, GSTI, VMAF, and related models were evaluated on the LIVE-YT-HFR database. However, the models vary in their use of input data: deep-learning approaches process raw video frames and learn hierarchical feature representations, whereas the new ANFIS-VQA consumes only four precomputed video features. As a result, observed differences in predictive performance may partly arise from these distinct input modalities rather than purely from algorithmic effectiveness. This distinction should be borne in mind when interpreting the presented results.

5. Conclusions

This study was motivated by the increasing demand for effective video quality assessment methods amid the rapid growth of HFR video streaming. The proposed adaptive neuro-fuzzy inference system, developed by integrating subtractive clustering with ANFIS, demonstrated superior performance on the LIVE-YT-HFR dataset, achieving lower RMSE and higher correlation coefficients compared to traditional metrics. This research contributes to the field by showing that a compact, data-driven fuzzy logic model with a streamlined rule base can accurately capture the complex interplay among video compression rate, frame rate, spatial information, and temporal information. Moreover, the model’s efficiency and robustness offer significant advantages for perceptual quality prediction in HFR video content. Future work will proceed in two complementary directions. First, ANFIS-VQA will be retrained and benchmarked on additional public databases covering a wider spectrum of resolutions, codecs (H.264/H.265), and realistic network degradations, so that the model’s generalizability across heterogeneous streaming environments can be rigorously validated. Second, the present fuzzy framework will be combined with advanced machine-learning techniques to refine temporal analysis and further improve predictive accuracy.

Author Contributions

Conceptualization, M.M.; methodology, M.M. and Š.M.; software, M.M. and M.P.; validation, M.M. and Š.M.; formal analysis, Š.M. and M.M.; investigation, Š.M. and M.M.; resources, M.M. and I.G.; data curation, M.M. and Š.M.; writing—original draft preparation, Š.M. and M.M.; writing—review and editing, M.M., Š.M., M.P. and I.G.; visualization, M.M. and Š.M.; supervision, Š.M. and M.M. Both M.P. and I.G. acknowledge that the lead authors on this manuscript are M.M. and Š.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by the University of Zagreb through the grants for core financing of scientific and artistic activities of the University of Zagreb in the academic year 2023/2024, under the project (210275) “Modeling Framework for the Development and Application of Assistive Technologies”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The analyzed video database and the related subjective ratings of the videos used for the modeling can be found at this link: https://live.ece.utexas.edu/research/LIVE_YT_HFR/LIVE_YT_HFR/index.html (accessed on 3 February 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ANFIS | Adaptive Neuro-Fuzzy Inference System |
| AR | Accept Ratio |
| BH-VQA | Blind High Frame Rate Video Quality Assessment |
| CRF | Constant Rate Factor |
| FCM | Fuzzy C-Means |
| FIS | Fuzzy Inference System |
| FPS | Frames per Second |
| FRQM | Frame Rate Quality Metric |
| FSIM | Feature Similarity Index |
| GSTI | Gradient-based Spatio-Temporal Index |
| HFR | High Frame Rate |
| MOS | Mean Opinion Score |
| MS-SSIM | Multi-Scale Structural Similarity |
| PCC | Pearson Correlation Coefficient |
| PSNR | Peak Signal-to-Noise Ratio |
| QoE | Quality of Experience |
| RMSE | Root Mean Squared Error |
| RoI | Range of Influence |
| RR | Reject Ratio |
| SAR | Synthetic Aperture Radar |
| SD | Standard Deviation |
| SF | Squash Factor |
| SI | Spatial Information |
| SpEED | Spatio-Temporal Entropic Differencing |
| SROCC | Spearman Rank-Order Correlation Coefficient |
| SSCQE | Single-Stimulus Continuous Quality Evaluation |
| SSIM | Structural Similarity Index |
| ST-RRED | Spatio-Temporal Reduced Reference Entropy Difference |
| TI | Temporal Information |
| VMAF | Video Multi-method Assessment Fusion |
| VQA | Video Quality Assessment |

References

- AppLogic Networks. Global Internet Phenomena Report. 2025. Available online: https://www.applogicnetworks.com/phenomena (accessed on 19 March 2025).

- Lu, W.; Sun, W.; Zhang, Z.; Tu, D.; Min, X.; Zhai, G. BH-VQA: Blind High Frame Rate Video Quality Assessment. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 2501–2506.

- Madhusudana, P.C.; Yu, X.; Birkbeck, N.; Wang, Y.; Adsumilli, B.; Bovik, A.C. Subjective and Objective Quality Assessment of High Frame Rate Videos. IEEE Access 2021, 9, 108069–108082.

- Matulin, M.; Mrvelj, Š. Modelling User Quality of Experience from Objective and Subjective Data Sets Using Fuzzy Logic. Multimed. Syst. 2018, 24, 645–667.

- Madhusudana, P.C.; Birkbeck, N.; Wang, Y.; Adsumilli, B.; Bovik, A.C. High Frame Rate Video Quality Assessment Using VMAF and Entropic Differences. In Proceedings of the 2021 Picture Coding Symposium (PCS), Virtual, 29 June–2 July 2021; pp. 1–5.

- Mrvelj, Š.; Matulin, M. FLAME-VQA: A Fuzzy Logic-Based Model for High Frame Rate Video Quality Assessment. Future Internet 2023, 15, 295.

- Chennagiri, P.; Yu, X.; Birkbeck, N.; Wang, Y.; Adsumilli, B.; Bovik, A. LIVE YouTube High Frame Rate (LIVE-YT-HFR) Database. Available online: https://live.ece.utexas.edu/research/LIVE_YT_HFR/LIVE_YT_HFR/index.html (accessed on 3 February 2025).

- Mackin, A.; Zhang, F.; Bull, D.R. A Study of Subjective Video Quality at Various Frame Rates. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3407–3411.

- ITU-R. ITU-R BT.500-11: Methodology for the Subjective Assessment of the Quality of Television Pictures; International Telecommunication Union, Radiocommunication Sector: Geneva, Switzerland, 2000; Available online: https://www.itu.int/dms_pubrec/itu-r/rec/bt/R-REC-BT.500-11-200206-S!!PDF-E.pdf (accessed on 20 April 2025).

- MathWorks. Neuro-Adaptive Learning and ANFIS. Available online: https://www.mathworks.com/help/fuzzy/neuro-adaptive-learning-and-anfis.html (accessed on 11 March 2025).

- Rao, U.M.; Sood, Y.R.; Jarial, R.K. Subtractive Clustering Fuzzy Expert System for Engineering Applications. Procedia Comput. Sci. 2015, 48, 77–83.

- Chang, S.; Deng, Y.; Zhang, Y.; Zhao, Q.; Wang, R.; Zhang, K. An Advanced Scheme for Range Ambiguity Suppression of Spaceborne SAR Based on Blind Source Separation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Ross, T.J. Fuzzy Logic with Engineering Applications, 4th ed.; Wiley: Hoboken, NJ, USA, 2016.

- Al-Hadithi, B.M.; Gómez, J. Fuzzy Control of Multivariable Nonlinear Systems Using T–S Fuzzy Model and Principal Component Analysis Technique. Processes 2025, 13, 217.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale Structural Similarity for Image Quality Assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Soundararajan, R.; Bovik, A.C. Video Quality Assessment by Reduced Reference Spatio-Temporal Entropic Differencing. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 684–694.

- Bampis, C.G.; Gupta, P.; Soundararajan, R.; Bovik, A.C. SpEED-QA: Spatial Efficient Entropic Differencing for Image and Video Quality. IEEE Signal Process. Lett. 2017, 24, 1333–1337.

- Zhang, F.; Mackin, A.; Bull, D.R. A Frame Rate Dependent Video Quality Metric Based on Temporal Wavelet Decomposition and Spatiotemporal Pooling. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 300–304.

- Kim, W.; Kim, J.; Ahn, S.; Kim, J.; Lee, S. Deep Video Quality Assessor: From Spatio-Temporal Visual Sensitivity to A Convolutional Neural Aggregation Network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Madhusudana, P.C.; Birkbeck, N.; Wang, Y.; Adsumilli, B.; Bovik, A.C. Capturing Video Frame Rate Variations via Entropic Differencing. IEEE Signal Process. Lett. 2020, 27, 1809–1813.

- Ramachandra Rao, R.R.; Göring, S.; Raake, A. AVQBits—Adaptive Video Quality Model Based on Bitstream Information for Various Video Applications. IEEE Access 2022, 10, 80321–80351.

- Netflix. VMAF—Video Multi-Method Assessment Fusion. Available online: https://github.com/Netflix/vmaf (accessed on 12 January 2025).

- Kotevski, Z.; Mitrevski, P. Experimental Comparison of PSNR and SSIM Metrics for Video Quality Estimation. In Proceedings of the ICT Innovations 2009, Ohrid, Macedonia, 28–30 September 2009; Davcev, D., Gómez, J.M., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 357–366.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).