Abstract

Arabic poetry follows intricate rhythmic patterns known as ‘arūḍ’ (prosody), which makes its automated categorization particularly challenging. While earlier studies primarily relied on conventional machine learning and recurrent neural networks, this work evaluates the effectiveness of transformer-based models, an area not extensively explored for this task. We investigate several pretrained transformer models, including Arabic Bidirectional Encoder Representations from Transformers (Arabic-BERT), BERT base Arabic (AraBERT), Arabic Efficiently Learning an Encoder that Classifies Token Replacements Accurately (AraELECTRA), Computational Approaches to Modeling Arabic BERT (CAMeLBERT), Multi-dialect Arabic BERT (MARBERT), and Modern Arabic BERT (ARBERT), alongside deep learning models such as Bidirectional Long Short-Term Memory (BiLSTM) and Bidirectional Gated Recurrent Units (BiGRU). This study uses half-verse data across 14 meters. The CAMeLBERT model achieved the highest performance, with an accuracy of 90.62% and an F1-score of 0.91, outperforming other models. We further analyze feature significance and model behavior using the Local Interpretable Model-Agnostic Explanations (LIME) interpretability technique. The LIME-based analysis highlights key linguistic features that most influence model predictions. These findings demonstrate the strengths and limitations of each method and pave the way for further advancements in Arabic poetry analysis using deep learning.

1. Introduction

Language is a fundamental component of human intelligence and plays a crucial role in cognitive processes. Among its various forms, poetry stands out as a refined and sophisticated artistic expression. It transcends national boundaries and cultural variations, maintaining its popularity across generations and significantly influencing the development of human society [1].

Poetry represents the earliest form of literary expression in the Arabic language, serving as a powerful medium for articulating Arab self-identity, collective memory, and aspirations. Arabic poetry is typically classified into classical and contemporary forms [2]. Much of the research on Arabic prosody—also known as ‘arud’—has historically focused on morphology and phonetics, a trend that has continued for several years. Analyzing poetic meters is essential to determining whether a piece follows a consistent rhythmic pattern or contains metrical deviations [3].

Modern and traditional Arabic differ in their usage of vowels: short vowels are indicated through diacritics in writing, while long vowels are explicitly represented by letters [4]. In the eighth century, the renowned philologist Al-Farahidi pioneered the study of ancient Arabic poetry by developing a system of poetic meters [5]. The metric structure of Arabic poetry encompasses sixteen distinct meters. Several key terms are used in Arabic prosody, including:

- Tafilah: A distinct metrical foot

- Bayt: A poetic line composed of two half-verses

- Sadr: The first half-verse of a Bayt

- Ajuz: The second half-verse of a Bayt

- Arud: The final part of the Sadr

- Darb: The final part of the ‘Ajuz’

Due to the diversity and complexity of the Arabic language, categorizing Arabic poetry requires advanced natural language processing (NLP) techniques [6]. The morphological, syntactic, and semantic intricacies of Arabic often exceed the capabilities of traditional rule-based and statistical approaches.

The recent advancements in artificial intelligence (AI)—particularly in deep learning (DL) and transformer-based models—have significantly improved outcomes in tasks such as text classification and sentiment analysis. Applying these cutting-edge methods to Arabic poetry classification offers a promising pathway toward improved understanding and model accuracy [7,8,9].

However, the Arabic NLP landscape remains challenging due to the language’s structural complexity and regional variations. Nonetheless, ongoing research and the development of specialized tools and datasets have facilitated more robust and efficient NLP solutions [10]. As the field continues to grow, Arabic NLP is being applied more widely across domains such as healthcare, social media analysis, and even poetry generation [11,12].

Recent studies have increasingly adopted machine learning (ML) and deep learning (DL) techniques to tackle the complex task of poetic meter classification. Recurrent neural networks (RNNs) and various ML and DL models have been employed to classify poems based on their metrical patterns [13,14,15].

For meter categorization, handcrafted features such as word length, phonological patterns, and n-grams have traditionally been used with classic ML techniques, including Support Vector Machines (SVMs), Random Forests, and Decision Trees [16,17,18]. While these approaches are efficient, their adaptability across different languages and poetic forms is limited, as they depend heavily on manual feature engineering. In contrast, DL models like BiGRU and BiLSTM can automatically learn auditory and rhythmic patterns directly from raw text. These models have demonstrated strong performance across diverse literary traditions, effectively capturing both sequential and semantic patterns without requiring extensive preprocessing [19,20,21].

Transformer models have revolutionized NLP since the Transformer architecture was introduced in ‘Attention Is All You Need’ in 2017 [22]. These models process input data in parallel using self-attention mechanisms, enabling them to capture long-range dependencies and contextual relationships within text. As a result, transformers have become foundational in many advanced NLP applications, including text classification.

Recent advancements in NLP, particularly the emergence of transformer-based models such as Bidirectional Encoder Representations from Transformers (BERT) and its Arabic counterparts, have significantly enhanced Arabic text processing. Comparative analyses have shown improved accuracy in text classification across various domains with the adoption of updated BERT models [23,24].

In this study, we used a public dataset for Arabic meter classification, MetRec [25], and evaluated several transformer and DL models on it. We employed the BERT base Arabic model (AraBERT), Arabic Efficiently Learning an Encoder that Classifies Token Replacements Accurately (AraELECTRA), Multi-dialect Arabic BERT (MARBERT), Modern Arabic BERT (ARBERT), Computational Approaches to Modeling Arabic BERT (CAMeLBERT), and Arabic-BERT for meter classification. In addition to transformer-based models, we used DL architectures such as Bidirectional Long Short-Term Memory (BiLSTM) and Bidirectional Gated Recurrent Units (BiGRU). These networks are capable of capturing long-range dependencies in sequential data, allowing the classification model to learn both spatial and contextual features, an essential requirement for handling the intricacies of Arabic poetry.

We also assessed feature importance and model behavior using the Local Interpretable Model-agnostic Explanations (LIME) interpretability technique. The findings provide meaningful insights into the effectiveness of different architectures for Arabic poetry classification, supporting the development of more advanced NLP solutions for the Arabic language.

The major contributions of this study are as follows:

- We compare and evaluate the performance of various pretrained transformer models—including Arabic-BERT, AraBERT, MARBERT, AraELECTRA, CAMeLBERT, and ARBERT—alongside BiLSTM and BiGRU DL models.

- We evaluate the models on half-verse data, tuning the DL models over different hidden-layer configurations and the transformer models over different batch sizes.

- We apply multiple encoding strategies, including pretrained tokenizers (WordPiece and SentencePiece) for transformer models and character-level encoding for DL models.

- We investigate model behavior and feature significance using LIME to gain insights into the model’s decision-making processes.

- We contribute to the growing field of Arabic NLP by assessing the interpretability and applicability of different modeling strategies for poetry classification.

The remainder of this paper is structured as follows: Section 2 presents a comprehensive literature review of transformer models and DL methodologies. Section 3 outlines the proposed techniques and model architecture. Section 4 describes the experimental results, followed by an in-depth discussion in Section 5, which also highlights potential avenues for further research. Finally, Section 6 concludes this study.

2. Literature Review

Deep learning methods have been extensively utilized in numerous studies to address Arabic meter classification. Notably, the work by Al-shaibani et al. [26] demonstrated significant progress by modeling the sequential nature of poetic lines using bidirectional recurrent neural networks (RNNs). Their approach effectively captured rhythmic patterns in Arabic poetry, providing a strong foundation for further exploration using advanced models such as Transformers.

Several efforts have aimed to develop algorithms for identifying meters in contemporary Arabic poetry [4]. Some approaches focus on detecting features of classical poetry, including rhythm, punctuation, and alignment; however, they merely differentiate poetic from non-poetic texts [27]. Other techniques attempt to identify specific meters by converting verses into ‘Arud’ script using Mel-Frequency Cepstral Coefficient (MFCC) and Linear Predictive Cepstral Coefficient (LPCC) features [28].

Achieving accuracy of up to 75%, Berkani et al. [29] emphasized that automated meter recognition requires intricate data processing. Their method involves syllable segmentation, phoneme conversion, and the alignment of the resulting patterns with predefined meter templates. Building on El-Katib’s approach, Khalaf et al. [30] made a noteworthy contribution to automating Arabic meter classification. Their method helps educators and students understand prosody and accurately identify poetic meters, even in complex cases.

Albaddawi et al. [31] achieved high accuracy in meter detection by employing a model architecture comprising an embedding layer, five Bi-LSTM layers, a softmax activation function, and an output layer. Similarly, Abandah et al. [32] developed a neural network focused on enhancing diacritical understanding, which is crucial for improving both understanding and meter recognition accuracy.

Talghalit et al. [33] introduced a robust method that leverages contextual semantic embeddings and transformer models for an Arabic biomedical search application. Their methodology involved preprocessing biomedical text, generating vector representations, and fine-tuning several transformer variants (including BERT, AraBERT, Biomedical BERT, RoBERTa, and Distilled) on a specialized Arabic biomedical dataset. Their model achieved an F1-score of 93.35% and an accuracy of 93.31%. Despite this strong performance, the authors identified key limitations, including Arabic’s complex morphology and the scarcity of domain-specific datasets. They emphasized the necessity of enriched contextual embeddings and expanded datasets to further improve transformer model performance in Arabic biomedical NLP applications.

Combining AraBERT’s contextual comprehension with Long Short-Term Memory (LSTM) for sequence modeling, Alosaimi et al. [34] proposed a sentiment analysis model. Its performance was benchmarked against traditional ML and DL models using various vectorization strategies across four Arabic benchmark datasets. Al-Onazi et al. [13] applied LSTM and convolutional neural network models, optimized using the Hawks optimization method, on the MetRec dataset for meter classification, achieving an accuracy of 98% with precision and recall scores of 86.5%.

In sentiment analysis, ensemble learning—where multiple models are combined to enhance classification accuracy—has proven highly effective. Studies show that integrating multiple deep learning models can outperform individual architectures in Arabic sentiment analysis tasks [35,36]. For instance, Zhou et al. [37] employed a hybrid model combining convolutional and LSTM networks, where the convolutional layers captured local features and LSTM layers captured global context.

AraGPT-2, the Arabic adaptation of Generative Pre-trained Transformer 2, has been used to generate synthetic text that improves Arabic classification tasks. Refai et al. [38] outlined a three-part methodology: applying similarity metrics to evaluate sentence quality, generating refined Arabic samples using AraGPT-2, and assessing sentiment classification using AraBERT. Their research also addressed class imbalance issues prevalent in Arabic sentiment datasets.

Al Deen et al. [10] explored Arabic Natural Language Inference (NLI) using transformer models such as AraBERT and RoBERTa. The study introduced a novel pretraining strategy incorporating Named Entity Recognition, allowing for better identification of contradictions and entailments. Using a custom dataset derived from publicly available resources and linguistically enriched pretraining, AraBERT achieved an accuracy of 88.1%, outperforming RoBERTa enhanced with language-specific features.

In another study, Qarah et al. [39] developed a language model for Arabic poetry analysis based on a BERT architecture pretrained from scratch on an extensive Arabic poetry corpus. This model incorporated 768 hidden units, 10 encoder layers, and 12 attention heads per layer, along with a vocabulary of 50,000 words, enabling it to capture the full breadth of poetic forms and idiomatic expressions in Arabic literature.

A hybrid methodology integrating deep learning with text representation techniques has been proposed to enhance the classification of Arabic news articles [9]. After comprehensive preprocessing steps including text cleaning, tokenization, lemmatization, and data augmentation, a custom attention embedding layer was employed to capture contextual relationships in the text. With data augmentation, the model surpassed the state-of-the-art Arabic language model AraBERTv2, achieving a classification accuracy of 97.69% [40].

In a study by Alshammari et al. [41], the authors introduced a novel AI text classifier designed to address the specific challenges in detecting AI-generated Arabic texts. This approach fine-tuned two transformer models: the Cross-lingual Language Model and AraELECTRA [42]. Achieving an accuracy of 81%, the proposed models outperformed existing AI detectors. CAMeLBERT, when trained on an Arabic poetry dataset, achieved an accuracy of 80.9% [43].

Alzaidi et al. [44] enhanced Arabic text representation by utilizing FastText word embeddings, followed by an attention-based BiGRU classification model. This approach included a complete preprocessing pipeline and incorporated hyperparameter tuning to efficiently explore the search space and improve model performance. Similarly, Badri et al. [45] applied NLP techniques to detect hate speech in Arabic text across various dialects, leveraging BERT-based pretrained models to capture the linguistic complexity of the Arabic language.

Al-Shaibani and Ahmad [46] proposed a dotless Arabic text representation to reduce vocabulary size while maintaining comparable NLP performance. Their approach demonstrated up to a 50% reduction in vocabulary, with successful results across tasks such as language modeling and translation. Recent efforts in Arabic text-to-speech synthesis have also focused on enhancing emotional expressiveness, particularly in applications designed to assist visually impaired people [47].

Transformer-based architectures have also been adapted for domain-specific applications. For instance, Wang et al. [48] developed a graph transformer model for detecting fraudulent financial transactions. While their work targets the financial sector, it highlights the increasing trend of tailoring transformer models for intricate and domain-specific frameworks. Mustafa et al. [49] explored the use of explainable AI methods—such as LIME and SHapley Additive exPlanations (SHAP)—to clarify model decision-making in Arabic linguistic applications. The integration of explainability tools is considered essential for broader adoption and trust in transformer-based models within domain-specific contexts.

CAMeLBERT, designed specifically for Arabic NLP, is capable of handling linguistic variations, including Classical Arabic (CA), Dialectal Arabic (DA), and Modern Standard Arabic (MSA). It has demonstrated strong performance in MSA tasks, particularly in emotion analysis and named entity recognition. Studies indicate that, when paired with DL techniques, fine-tuned CAMeLBERT models outperform other models in sentiment analysis [50] and achieve high accuracy, recall, and F1-scores in named entity recognition [51,52]. Its capacity to manage morphological and linguistic diversity also makes it suitable for dialect detection, translation, and the processing of Classical Arabic literature, an essential step in applying NLP techniques to historical texts [53]. Although BERT has gained prominence in NLP, studies [54,55,56] suggest that its application to Arabic text classification remains limited. However, transformer models trained specifically on extensive Arabic corpora, such as CAMeLBERT, AraBERT, and MARBERT, have shown superior performance. This growing body of research not only reveals promising avenues for addressing current challenges but also highlights the potential for expanding the efficacy of Arabic language applications through the use of specialized transformer architectures.

3. Materials and Methods

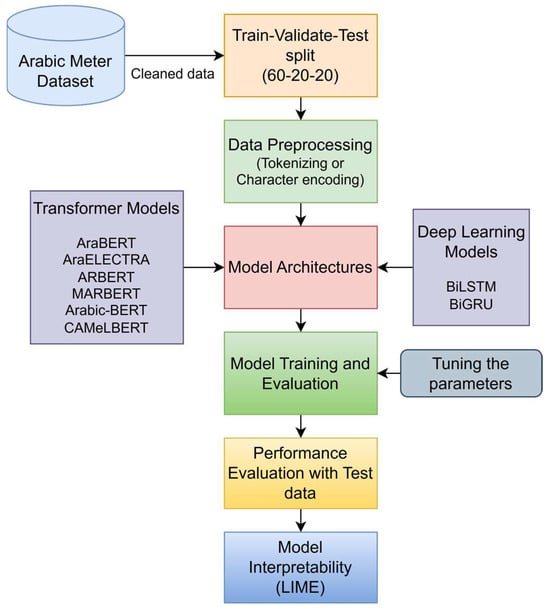

The proposed study comprises several phases: data preprocessing, data splitting into training and testing sets, model implementation, evaluation using various metrics, model testing, and interpretability analysis using the LIME technique. The workflow is illustrated in Figure 1.

Figure 1.

The methodology of the proposed study.

3.1. Dataset and Train-Test Split

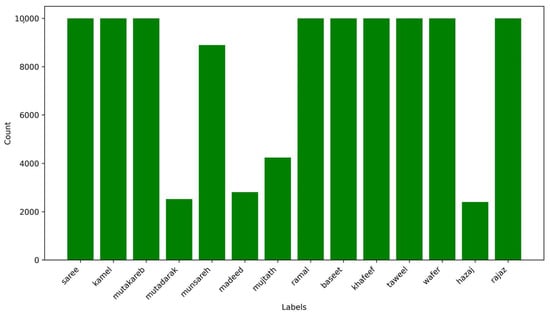

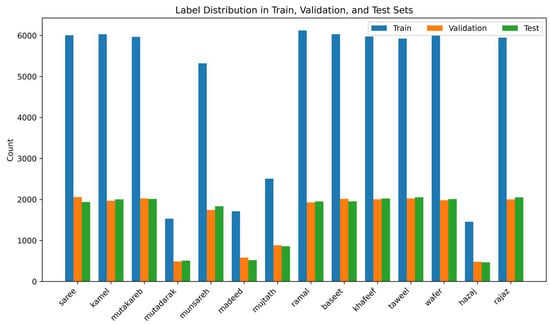

This study utilizes the MetRec Arabic poetry dataset, which comprises 55,440 verses classified across 14 meters [26]. The symbol ‘#’ marks the separation between the left and right halves of each verse. Splitting at this symbol and stacking the halves vertically yields one sample per half-verse, for a total of 110,880 half-verses, as depicted in Figure 2.

Figure 2.

Distribution of meter labels in half-verse data. The highest count is 10,002 for the khafeef meter, and the lowest is 2402 for the hazaj meter.

The dataset exhibits moderate class imbalance: the saree, kamel, mutakareb, ramal, baseet, taweel, wafer, and rajaz meters each have 10,000 half-verses; mutadarak has 2524; munsareh, 8896; madeed, 2812; mujtath, 4244; khafeef, 10,002; and hazaj, 2402. Figure 3 presents a word cloud generated from the dataset, while Figure 4 illustrates sample verses along with their associated meter labels.

Figure 3.

Word cloud of the MetRec half-verse dataset.

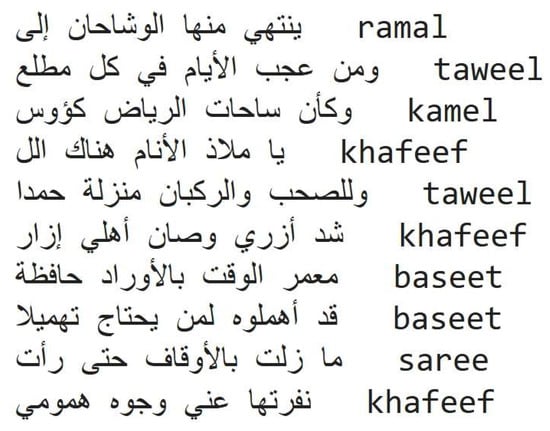

Figure 4.

Sample verses from the dataset with their corresponding meter labels.
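As a quick arithmetic check on the per-meter counts listed above, the totals should add up to the 110,880 half-verses. The minimal sketch below also computes the ratio between the largest and smallest classes and the "balanced" class weights used by scikit-learn's class_weight="balanced" heuristic (n_samples / (n_classes × n_c)). The study does not state that it used class weighting, so those weights are shown only as one possible remedy for the imbalance, not as part of the reported pipeline.

```python
# Per-meter half-verse counts as reported for MetRec.
counts = {
    "saree": 10000, "kamel": 10000, "mutakareb": 10000, "ramal": 10000,
    "baseet": 10000, "taweel": 10000, "wafer": 10000, "rajaz": 10000,
    "mutadarak": 2524, "munsareh": 8896, "madeed": 2812,
    "mujtath": 4244, "khafeef": 10002, "hazaj": 2402,
}

total = sum(counts.values())  # should equal the 110,880 half-verses
imbalance_ratio = max(counts.values()) / min(counts.values())

# "Balanced" weights: n_samples / (n_classes * n_c); rare meters get weights > 1.
weights = {meter: total / (len(counts) * n) for meter, n in counts.items()}

print(f"total half-verses: {total}")
print(f"largest/smallest class ratio: {imbalance_ratio:.2f}")
print(f"weight for hazaj: {weights['hazaj']:.3f}")
```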

After removing unwanted characters, non-Arabic letters, and punctuation, the cleaned data are split into training, validation, and testing sets using a 60:20:20 ratio. The training set contains 66,528 half-verses, while the validation and test sets each contain 22,176. The meter-wise distribution across these splits is shown in Figure 5.

Figure 5.

Meter-wise distribution for the training, validation, and test sets.
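The 60:20:20 split can be sketched as follows, assuming a single random shuffle of the half-verses. The helper name split_60_20_20 and the seed are hypothetical, and the study does not state whether the split was stratified by meter (applying scikit-learn's train_test_split twice with stratify=labels would be one way to do that). Integer arithmetic reproduces the set sizes reported above.

```python
import random

def split_60_20_20(samples, seed=42):
    """Shuffle once, then cut into 60% train, 20% validation, 20% test."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_train = n * 60 // 100          # integer arithmetic avoids float rounding
    n_val = n * 20 // 100
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]   # remainder goes to the test set
    return train, val, test

# Indices stand in for the 110,880 half-verses.
train, val, test = split_60_20_20(range(110880))
print(len(train), len(val), len(test))
```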

3.2. Data Preprocessing

The performance of the proposed models depends significantly on effective data preprocessing. Given the linguistic complexity of Arabic and differing model architectures, two preprocessing strategies were employed: character-level encoding for deep learning (DL) models and tokenization for transformer-based models. In both approaches, label encoding was applied to the target categories.

3.2.1. Tokenization

In natural language processing (NLP), tokenization—the process of breaking text into smaller components—is a crucial first step. Depending on the method, tokens may represent characters, subwords, or words. Among the various tokenization techniques, WordPiece and SentencePiece have become particularly popular for their adaptability to multiple languages and applications [57].

WordPiece, developed for the BERT architecture, is a subword tokenization method [58,59]. It initializes the vocabulary with the individual characters in the text and then repeatedly merges pairs of existing tokens into new tokens, choosing at each step the pair whose merge most increases the likelihood of the training data (rather than simply the most frequent pair). This iterative process continues until either no additional pairs can be merged or a predefined vocabulary size is reached.
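Once a WordPiece vocabulary has been learned, BERT-style tokenizers apply it with a greedy longest-match-first search, marking pieces that continue a word with "##". The sketch below illustrates only this application step, not vocabulary training, and it is not the Hugging Face implementation used in the study. The function name and the tiny vocabulary are hypothetical, and the word is written in Latin transliteration (walkitabu, "and the book") for readability.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]", prefix="##"):
    """Greedy longest-match-first WordPiece segmentation of a single word."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, shrinking until it is in vocab.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = prefix + candidate  # word-internal pieces carry "##"
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # BERT maps the whole word to [UNK] if any part fails
        tokens.append(piece)
        start = end
    return tokens

vocab = {"wal", "kitab", "##kitab", "##u", "##a"}
print(wordpiece_tokenize("walkitabu", vocab))
```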

SentencePiece, developed by Google, is another subword tokenization tool. Unlike WordPiece as used in BERT, which first splits text on whitespace and punctuation, SentencePiece applies a data-driven, language-independent strategy by treating the input as a raw sequence of characters, including whitespace. SentencePiece supports Byte Pair Encoding (BPE) and Unigram Language Models to produce subword tokens suitable for various NLP applications [57].
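Of the two algorithms SentencePiece supports, BPE is the simpler to illustrate: it repeatedly merges the most frequent adjacent pair of symbols (whereas WordPiece scores pairs by likelihood). The sketch below learns BPE merges on a toy corpus. It is a simplification for illustration only: the function name learn_bpe is hypothetical, and real SentencePiece additionally encodes whitespace as the symbol "▁" and is implemented in C++.

```python
from collections import Counter

def learn_bpe(words, num_merges):
    """Learn BPE merge rules; each word starts as a tuple of characters."""
    corpus = Counter(tuple(w) for w in words)  # symbol tuple -> frequency
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        # Rewrite the corpus with the chosen pair fused into one symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return merges

print(learn_bpe(["abab", "abab", "abc"], 3))
```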

Table 1 summarizes the tokenization methods employed by the pretrained transformer models used in this study.

Table 1.

Tokenization methods for various pretrained transformer models.

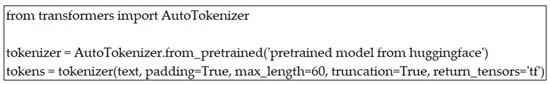

For the proposed study, we employed pretrained tokenizers alongside the corresponding models. The code snippet for invoking the tokenizer is shown in Figure 6. The maximum sequence length was set to 60 and padding was enabled.

Figure 6.

Code snippet for calling the tokenizer from the pretrained model.

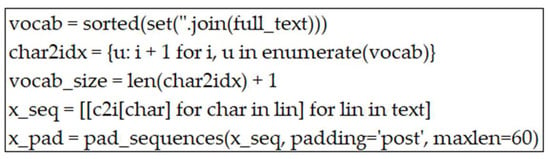

3.2.2. Character Encoding

Character encoding is the process of converting characters into a format that computers can easily access and manipulate. In this study, we addressed the complexity of the Arabic language—rich in morphological structures—by implementing character-level encoding. After removing special characters and spaces from the dataset, a vocabulary was created based on unique characters [26]. Each character was then assigned an index to form a mapping dictionary. This mapping translates every text input into a sequence of numerical indices, thus preserving distinct characters. The maximum sequence length was derived from the longest text in the dataset to ensure consistency in input size through sequence padding. This encoding method is particularly effective for managing spelling variations and noisy text, as it retains character-level patterns. The code snippet for character encoding is depicted in Figure 7.

Figure 7.

Code snippet for character encoding method used in the DL models.
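The character encoding described above can be sketched in plain Python. This is an illustrative reconstruction, not the code in Figure 7: the function names are hypothetical, index 0 is assumed to be reserved for padding, and sequences are assumed to be padded at the end (Keras's pad_sequences pads at the front unless padding="post" is passed). Diacritics, if present, are separate Unicode code points and therefore receive their own indices.

```python
def build_char_vocab(texts):
    """Map each unique character to an index; 0 is reserved for padding."""
    chars = sorted({ch for text in texts for ch in text})
    return {ch: i + 1 for i, ch in enumerate(chars)}

def encode(texts, vocab, max_len=None):
    """Turn texts into index sequences, padded with 0 at the end to max_len."""
    seqs = [[vocab[ch] for ch in text if ch in vocab] for text in texts]
    if max_len is None:
        max_len = max(len(s) for s in seqs)  # the longest text sets the length
    return [s[:max_len] + [0] * (max_len - len(s)) for s in seqs]

# Spaces are removed before building the vocabulary, as described above.
texts = [t.replace(" ", "") for t in ["قفا نبك", "من ذكرى حبيب"]]
vocab = build_char_vocab(texts)
encoded = encode(texts, vocab)
print(encoded)
```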

3.2.3. Label Encoding

Label encoding is especially efficient when the target variable comprises discrete classes, as it assigns a unique integer to each category. In this study, we used label encoding to map each of the 14 meter classes to an integer ranging from 0 to 13, enabling faster processing during model training.
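A minimal sketch of this label encoding is shown below, assuming the 14 meter names used in Section 3.1. The study does not say which encoder it used; the mapping here follows the convention of scikit-learn's LabelEncoder, which assigns integers in sorted order of the class names. The helper names are hypothetical.

```python
METERS = ["saree", "kamel", "mutakareb", "ramal", "baseet", "taweel", "wafer",
          "rajaz", "mutadarak", "munsareh", "madeed", "mujtath", "khafeef", "hazaj"]

# Assign integers 0..13 in alphabetical order of the meter names.
label_to_id = {name: idx for idx, name in enumerate(sorted(set(METERS)))}
id_to_label = {idx: name for name, idx in label_to_id.items()}

def encode_labels(labels):
    return [label_to_id[m] for m in labels]

def decode_labels(ids):
    return [id_to_label[i] for i in ids]

encoded = encode_labels(["taweel", "hazaj", "baseet"])
print(encoded, decode_labels(encoded))
```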

3.3. Model Architectures

Several models were used for classifying Arabic meters, including ARBERT, MARBERT, CAMeLBERT, Arabic-BERT, AraELECTRA, BiGRU, and BiLSTM.

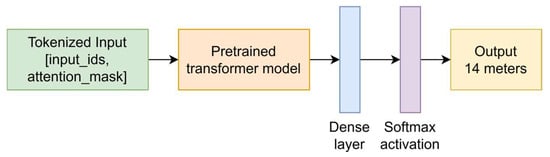

3.3.1. Transformer Models

Transformers are suitable for high-performance tasks as they can handle large volumes of data and complex structures efficiently. The workflow of the transformer model used in this study is shown in Figure 8.

Figure 8.

Transformer model’s architecture.

AraBERT is a transformer model pretrained specifically for Arabic [60]. It was developed to address the challenges of Arabic NLP, particularly the language’s intricate morphology and dialectal variations. AraBERT has demonstrated outstanding performance in various tasks, including sentiment analysis [61]. Built on the original BERT architecture, AraBERT significantly enhances the precision and efficacy of Arabic NLP applications.

AraELECTRA is another transformer model pretrained for Arabic [42], extending the ELECTRA architecture. Unlike conventional masked language models, ELECTRA trains a discriminator network to distinguish real tokens from replacement tokens produced by a generator. This approach improves training efficiency while maintaining performance.

CAMeLBERT is a robust Arabic transformer model designed to process different forms of Arabic, including dialectal Arabic, Classical Arabic, and Modern Standard Arabic (MSA) [43]. Unlike AraBERT, CAMeLBERT is trained on a more diverse corpus, enabling it to capture stylistic nuances in poetry. Its BERT-based architecture is enhanced through extensive fine-tuning for a better comprehension of poetic forms and morphological patterns.

MARBERT and ARBERT are advanced transformer models tailored for Arabic NLP tasks [62]. These models extend the BERT architecture with a bidirectional attention mechanism, allowing them to evaluate context by examining both left and right surroundings. MARBERT was trained on a large corpus comprising various Arabic dialects and MSA, while ARBERT focused primarily on MSA.

Arabic-BERT further expands the BERT architecture by focusing on Arabic-specific linguistic features, particularly those of MSA [63]. All the pretrained transformer models used in this study consist of 12 hidden layers and 12 attention heads in their architecture.

3.3.2. Deep Learning Models

Deep learning architectures such as BiLSTM and BiGRU are commonly used for handling sequential data, including natural language processing, time-series forecasting, and speech recognition. Both methods effectively capture long-term dependencies and mitigate the vanishing gradient issue inherent in conventional RNNs.

BiLSTM (Bidirectional Long Short-Term Memory) enhances contextual understanding by processing input sequences in both forward and reverse directions [14,64]. The LSTM model includes three main gates: input, output, and forget gates.

$$
f_t = \sigma\left(v_f I_t + w_f L_{t-1} + a_f\right) \tag{1}
$$

where $f_t$ is the forget gate at time step $t$, $\sigma$ is the sigmoid activation function, $v_f$ and $w_f$ denote the weight matrices for $f_t$, $I_t$ signifies the data input, $a_f$ is the bias vector, and $L_{t-1}$ denotes the hidden state from the previous time step.

$$
i_t = \sigma\left(v_i I_t + w_i L_{t-1} + a_i\right) \tag{2}
$$

where $i_t$ is the input gate, $v_i$ and $w_i$ denote the weight matrices for $i_t$, and $a_i$ is the bias vector.

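$$
\widetilde{CS}_t = \tanh\left(v_c I_t + w_c L_{t-1} + a_c\right) \tag{3}
$$

where $\widetilde{CS}_t$ is the candidate memory cell, $a_c$ is the bias vector, and $v_c$ and $w_c$ are the associated weight matrices.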

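$$
CS_t = f_t \circ CS_{t-1} + i_t \circ \widetilde{CS}_t \tag{4}
$$

where $CS_t$ is the current cell state, $CS_{t-1}$ represents the memory state from the previous time step, $\circ$ denotes element-wise multiplication, and $f_t$, $i_t$, and $\widetilde{CS}_t$ are calculated from Equations (1), (2), and (3), respectively.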

$$
O_t = \sigma\left(v_o I_t + w_o L_{t-1} + a_o\right) \tag{5}
$$

where $O_t$ is the output gate, $v_o$ and $w_o$ denote the weight matrices for $O_t$, and $a_o$ is the bias vector.

$$
L_t = O_t \circ \tanh\left(CS_t\right) \tag{6}
$$

where $L_t$ is the hidden state at time step $t$, $O_t$ is calculated from Equation (5), and $CS_t$ from Equation (4). The BiLSTM analyzes the input sequence in both directions:

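$$
\overleftrightarrow{L_t} = \overrightarrow{L_t} \odot \overleftarrow{L_t} \tag{7}
$$

where $\overrightarrow{L_t}$ is the forward output and $\overleftarrow{L_t}$ is the backward output of the LSTM, and $\overleftrightarrow{L_t}$ is their combination. The operator $\odot$ denotes an operation such as multiplication, averaging, summation, or concatenation.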
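The LSTM gate computations described above can be traced numerically with a small, dependency-free sketch. It mirrors the notation of the text (input, previous hidden state, and cell state; per-gate weight matrices v and w and bias a), stores matrices as lists of rows, and combines the two directions by concatenation, one of the options listed for the combining operator. The helper names are hypothetical and the code is for illustration only: the study's models were built with TensorFlow layers and trained weights. With all weights and biases set to zero, every gate outputs 0.5 and the candidate cell is 0, so the new cell state is simply half of the previous one, which makes the arithmetic easy to check by hand.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(m, x):
    """Matrix (list of rows) times vector (list)."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in m]

def gate(p, g, x_t, h_prev, act):
    """act(v_g I_t + w_g L_{t-1} + a_g), applied element-wise."""
    pre = [u + r + b for u, r, b in zip(matvec(p["v" + g], x_t),
                                         matvec(p["w" + g], h_prev),
                                         p["a" + g])]
    return [act(z) for z in pre]

def lstm_step(p, x_t, h_prev, c_prev):
    f = gate(p, "f", x_t, h_prev, sigmoid)            # forget gate, Eq. (1)
    i = gate(p, "i", x_t, h_prev, sigmoid)            # input gate, Eq. (2)
    c_hat = gate(p, "c", x_t, h_prev, math.tanh)      # candidate cell, Eq. (3)
    c = [ft * cp + it * ch for ft, cp, it, ch in zip(f, c_prev, i, c_hat)]  # Eq. (4)
    o = gate(p, "o", x_t, h_prev, sigmoid)            # output gate, Eq. (5)
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]  # hidden state, Eq. (6)
    return h, c

def run_lstm(p, xs, hidden):
    h, c = [0.0] * hidden, [0.0] * hidden
    outputs = []
    for x_t in xs:
        h, c = lstm_step(p, x_t, h, c)
        outputs.append(h)
    return outputs

def bilstm(p_fwd, p_bwd, xs, hidden):
    fwd = run_lstm(p_fwd, xs, hidden)
    bwd = run_lstm(p_bwd, xs[::-1], hidden)[::-1]  # re-align with forward time steps
    return [hf + hb for hf, hb in zip(fwd, bwd)]   # combine by concatenation, Eq. (7)

def zero_params(input_dim, hidden):
    """All-zero weights and biases, handy for checking the arithmetic by hand."""
    p = {}
    for g in "fico":
        p["v" + g] = [[0.0] * input_dim for _ in range(hidden)]
        p["w" + g] = [[0.0] * hidden for _ in range(hidden)]
        p["a" + g] = [0.0] * hidden
    return p

h, c = lstm_step(zero_params(2, 2), [1.0, 2.0], [0.0, 0.0], [1.0, -2.0])
print(c, h)
```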

The BiGRU is built on the gated recurrent unit (GRU), a simplified variant of the LSTM with fewer parameters and greater computational efficiency. Like the BiLSTM, it processes sequences bidirectionally [26]. The GRU includes two gates: the reset gate and the update gate.

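$$
RS_t = \sigma\left(v_r I_t + w_r G_{t-1} + a_r\right) \tag{8}
$$

where $RS_t$ is the reset gate, $v_r$ and $w_r$ are the weight matrices for $RS_t$, $I_t$ is the input at time step $t$, $G_{t-1}$ is the hidden state from the previous time step, and $a_r$ is the bias vector.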

$$
P_t = \sigma\left(v_p I_t + w_p G_{t-1} + a_p\right) \tag{9}
$$

where $P_t$ is the update gate, $v_p$ and $w_p$ denote the weight matrices, and $a_p$ is the bias vector.

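$$
\widetilde{G}_t = \tanh\left(v_h I_t + w_h \left(RS_t \circ G_{t-1}\right) + a_h\right) \tag{10}
$$

where $\widetilde{G}_t$ is the new (candidate) hidden state, $a_h$ is the bias vector, $v_h$ and $w_h$ are the weight matrices, and $\circ$ denotes element-wise multiplication. $RS_t$ is obtained from Equation (8).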

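$$
G_t = \left(1 - P_t\right) \circ G_{t-1} + P_t \circ \widetilde{G}_t \tag{11}
$$

where $G_t$ is the final updated hidden state, $P_t$ is calculated from Equation (9), and $\widetilde{G}_t$ from Equation (10).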

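$$
\overleftrightarrow{G_t} = \overrightarrow{G_t} \odot \overleftarrow{G_t} \tag{12}
$$

where $\overrightarrow{G_t}$ is the forward output and $\overleftarrow{G_t}$ is the backward output of the GRU, and $\overleftrightarrow{G_t}$ is their combination. The operator $\odot$ denotes an operation such as multiplication, averaging, summation, or concatenation.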
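A matching sketch for the GRU is given below, using the reset gate, update gate, and candidate state described above (per-gate weights v and w and bias a, previous hidden state G_{t-1}). Here the final state is taken as (1 − P_t) times the previous state plus P_t times the candidate, element-wise. That is one common convention; libraries differ (Keras and PyTorch weight the previous state by the update gate instead, and TensorFlow's default reset_after=True applies the reset gate after the recurrent matrix product). The helper names and the zero-weight check are illustrative assumptions, not the study's code.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(m, x):
    """Matrix (list of rows) times vector (list)."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in m]

def gate(p, g, x_t, h, act):
    """act(v_g I_t + w_g h + a_g), applied element-wise."""
    pre = [u + r + b for u, r, b in zip(matvec(p["v" + g], x_t),
                                         matvec(p["w" + g], h), p["a" + g])]
    return [act(z) for z in pre]

def gru_step(p, x_t, g_prev):
    rs = gate(p, "r", x_t, g_prev, sigmoid)             # reset gate, Eq. (8)
    pt = gate(p, "p", x_t, g_prev, sigmoid)             # update gate, Eq. (9)
    reset_prev = [r * g for r, g in zip(rs, g_prev)]    # reset applied to G_{t-1}
    g_hat = gate(p, "h", x_t, reset_prev, math.tanh)    # candidate state, Eq. (10)
    # Interpolate between the previous state and the candidate, Eq. (11).
    return [(1.0 - z) * gp + z * gh for z, gp, gh in zip(pt, g_prev, g_hat)]

def run_gru(p, xs, hidden):
    g = [0.0] * hidden
    outputs = []
    for x_t in xs:
        g = gru_step(p, x_t, g)
        outputs.append(g)
    return outputs

def bigru(p_fwd, p_bwd, xs, hidden):
    fwd = run_gru(p_fwd, xs, hidden)
    bwd = run_gru(p_bwd, xs[::-1], hidden)[::-1]  # re-align with forward time steps
    return [gf + gb for gf, gb in zip(fwd, bwd)]  # combine by concatenation

def zero_params(input_dim, hidden):
    """All-zero weights and biases: every gate outputs 0.5, the candidate is 0."""
    p = {}
    for g in "rph":
        p["v" + g] = [[0.0] * input_dim for _ in range(hidden)]
        p["w" + g] = [[0.0] * hidden for _ in range(hidden)]
        p["a" + g] = [0.0] * hidden
    return p

print(gru_step(zero_params(2, 2), [1.0, 2.0], [2.0, -4.0]))
```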

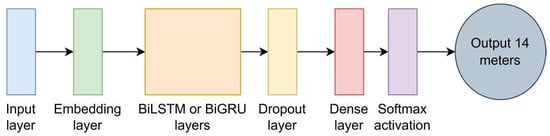

The BiLSTM and BiGRU model architecture is illustrated in Figure 9. The approach begins by converting each character sequence into a sequence of integer indices using a character encoder, where each index corresponds to a specific vocabulary character. The input layer is shaped according to the padded sequence length of the training data. The embedding layer transforms input tokens into dense vectors of a predetermined size, set to 128 in this study. The BiGRU or BiLSTM layer then processes the sequence bidirectionally, capturing dependencies on both preceding and following characters. When several recurrent layers are stacked, the return_sequences parameter is set to True for every layer except the last, so that each layer passes its full output sequence to the next. The dimensionality of the bidirectional layer is fixed at 256. To mitigate overfitting, a dropout layer with a rate of 0.2 is employed. A fully connected (dense) layer follows, mapping to the number of output classes (14 in this case). The softmax function is used as the activation function, and the final prediction corresponds to the category with the highest probability in the softmax output.

Figure 9.

The BiLSTM and BiGRU model architecture.

3.4. Model Evaluation and Parameter Tuning

The system environment used in this study is as follows:

- Windows 10 operating system

- 16 GB RAM

- Intel Core i7 processor

- GPUs—Nvidia GeForce GTX 1080 Ti

- Deep learning libraries: TensorFlow 2.7, Transformers 4.48.1

- Additional evaluation libraries: Scikit-learn 1.0, PyArabic 0.6.14, lime 0.2.0.1

The models were compiled prior to training. The Adaptive Moment Estimation (Adam) optimizer was employed due to its memory efficiency and notable effectiveness [65]. The loss function was sparse categorical cross-entropy, which is appropriate when the target labels are integer-encoded (as produced by the label encoding in Section 3.2.3) rather than one-hot encoded.
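The practical difference between sparse and ordinary categorical cross-entropy is only the label format: the sparse version reads the probability of the true class directly from an integer label, so the two give the same value. The dependency-free sketch below illustrates this; the function names are hypothetical, and in Keras the loss is available as tf.keras.losses.SparseCategoricalCrossentropy (with from_logits=False when, as here, the model already ends in a softmax). The sketch takes raw logits and applies its own softmax for self-containment. With uniform logits over the 14 meters, the loss equals ln 14.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(labels, logits_batch):
    """labels are integer class ids (0..13 for the meters); returns the batch mean."""
    losses = [-math.log(softmax(z)[y]) for y, z in zip(labels, logits_batch)]
    return sum(losses) / len(losses)

def categorical_crossentropy(one_hot, logits_batch):
    """Same loss, but expects one-hot target vectors instead of integer ids."""
    losses = [-sum(t * math.log(p) for t, p in zip(target, softmax(z)))
              for target, z in zip(one_hot, logits_batch)]
    return sum(losses) / len(losses)

labels = [0, 2]
logits = [[2.0, 1.0, 0.1], [0.5, 0.2, 3.0]]
one_hot = [[1 if k == y else 0 for k in range(3)] for y in labels]
print(sparse_categorical_crossentropy(labels, logits),
      categorical_crossentropy(one_hot, logits))
```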

The tuning parameters were model-specific. Deep learning (DL) models were optimized by varying the number of hidden layers, whereas the transformer models were tuned by varying the batch size. This study employed the EarlyStopping and ReduceLROnPlateau callbacks to mitigate overfitting: training was stopped once the monitored validation loss no longer improved, before overfitting could set in.

The EarlyStopping callback monitored the validation loss and stopped training if it did not decrease for eight consecutive epochs. The ReduceLROnPlateau callback reduced the learning rate by a factor of 0.1 if the monitored loss did not improve for two epochs.
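How the two callbacks interact with these settings can be illustrated by replaying a sequence of validation losses. In Keras they would be configured as `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=8)` and `tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.1, patience=2)`. The pure-Python function below is a simplified re-implementation of that logic for illustration (it ignores Keras's min_delta, cooldown, and min_lr options), and the loss curve and initial learning rate are hypothetical.

```python
import math

def simulate_callbacks(val_losses, lr=1e-3, es_patience=8, lr_patience=2, factor=0.1):
    """Return (stop_epoch, learning rate used in each epoch).

    An epoch counts as an improvement only if its loss is strictly below the best
    loss seen so far.
    """
    best = math.inf
    wait_es = wait_lr = 0
    lr_history = []
    for epoch, loss in enumerate(val_losses, start=1):
        lr_history.append(lr)            # learning rate used during this epoch
        if loss < best:
            best, wait_es, wait_lr = loss, 0, 0
            continue
        # ReduceLROnPlateau-style: shrink the learning rate after lr_patience bad epochs.
        wait_lr += 1
        if wait_lr >= lr_patience:
            lr *= factor
            wait_lr = 0
        # EarlyStopping-style: stop after es_patience epochs without improvement.
        wait_es += 1
        if wait_es >= es_patience:
            return epoch, lr_history
    return len(val_losses), lr_history

stop, lrs = simulate_callbacks([1.0, 0.8, 0.7] + [0.75] * 10)
print(stop, lrs)
```

In this made-up run the loss stops improving after epoch 3, so the learning rate is divided by 10 every two stagnant epochs, and training halts at epoch 11, eight epochs after the last improvement.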

Table 2 presents the parameters used in this study.

Table 2.

Parameters used in the proposed study for each model.

3.5. Performance Evaluation

The models were evaluated on the test dataset. Performance assessment included analyzing the confusion matrix and classification report for each model. For multi-class classification, this study evaluated standard metrics such as accuracy, F1-score, recall, and precision. The formulas for each metric are provided below.

A classification is considered correct when a verse is assigned to its actual meter (true positive, Tpos). For instance, if a verse written in the saree meter is predicted as saree, it constitutes a true positive for saree. A verse that does not belong to a given meter and is correctly recognized as not belonging to it is a true negative (Tneg) for that meter. Conversely, if a verse from one meter is misclassified as belonging to another, this results in a false positive (Fpos) for the predicted meter and a false negative (Fneg) for the actual meter. For instance, if a kamel meter verse is classified as madeed, it counts as an Fpos for madeed and an Fneg for kamel.

$$
\text{Accuracy} = \frac{T_{pos} + T_{neg}}{T_{pos} + T_{neg} + F_{pos} + F_{neg}}
$$

$$
\text{Precision} = \frac{T_{pos}}{T_{pos} + F_{pos}}, \qquad \text{Recall} = \frac{T_{pos}}{T_{pos} + F_{neg}}
$$

$$
\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
$$
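In the 14-class setting, these per-meter counts can be read directly off the confusion matrix. The sketch below (hypothetical helper classification_metrics, toy 3-class matrix with rows as actual and columns as predicted meters) computes per-class precision, recall, and F1, their unweighted (macro) average, and overall accuracy. For a multi-class problem, the headline accuracy is the fraction of all predictions on the diagonal, which is what scikit-learn's accuracy_score returns. Whether the study's summary F1-score is macro- or weighted-averaged is not stated, so the macro average here is an assumption.

```python
def classification_metrics(cm):
    """cm[i][j] = number of verses of actual meter i predicted as meter j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[r][k] for r in range(n)) - tp  # predicted k, actually another meter
        fn = sum(cm[k]) - tp                       # actually k, predicted another meter
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append({"precision": precision, "recall": recall, "f1": f1})
    accuracy = sum(cm[k][k] for k in range(n)) / total  # diagonal over all predictions
    macro = {key: sum(c[key] for c in per_class) / n
             for key in ("precision", "recall", "f1")}
    return accuracy, per_class, macro

cm = [[5, 1, 0],
      [2, 3, 1],
      [0, 0, 4]]
accuracy, per_class, macro = classification_metrics(cm)
print(accuracy, macro)
```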

3.6. Explainability with LIME

LIME (Local Interpretable Model-Agnostic Explanations) is an interpretability technique that uses a locally approximated interpretable model to explain the predictions of complex models [66]. It can highlight the words or characters that significantly influence the categorization decision in the classification model. LIME approximates the model’s decision in a relatively small area surrounding a specific instance by perturbing the input data and tracking how the model’s prediction changes.
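To make the perturbation idea concrete, the following self-contained sketch implements the core LIME procedure for text: randomly remove words, weight each perturbed sample by its proximity to the original with an exponential kernel on cosine distance, and fit a weighted linear surrogate whose coefficients serve as word importances. It is not the lime package the study used (lime.lime_text.LimeTextExplainer, whose default surrogate is ridge regression with its own kernel settings). The function names, the kernel width, and the toy classifier toy_meter_prob (a stand-in for a fine-tuned meter classifier, using transliterated words) are hypothetical. Because the toy model is exactly linear in word presence, the surrogate should recover its coefficients, which makes the output easy to check.

```python
import math
import random

def solve(a, b):
    """Solve the linear system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def explain(words, predict_proba, num_samples=500, kernel_width=0.25,
            ridge=1e-6, seed=0):
    """LIME-style local explanation: (word, weight) pairs, largest |weight| first."""
    rng = random.Random(seed)
    d = len(words)
    masks = [[1] * d]  # sample 0 is the unperturbed input
    for _ in range(num_samples - 1):
        dropped = set(rng.sample(range(d), rng.randint(1, d)))
        masks.append([0 if j in dropped else 1 for j in range(d)])

    ys, ws = [], []
    for z in masks:
        ys.append(predict_proba([w for w, keep in zip(words, z) if keep]))
        dist = 1.0 - math.sqrt(sum(z) / d)  # cosine distance to the all-ones mask
        ws.append(math.exp(-dist ** 2 / kernel_width ** 2))  # proximity kernel

    # Weighted ridge regression of ys on [1, z_1, ..., z_d] (intercept first).
    X = [[1.0] + [float(v) for v in z] for z in masks]
    p = d + 1
    xtwx = [[sum(w * x[i] * x[j] for w, x in zip(ws, X)) + (ridge if i == j else 0.0)
             for j in range(p)] for i in range(p)]
    xtwy = [sum(w * x[i] * y for w, x, y in zip(ws, X, ys)) for i in range(p)]
    coef = solve(xtwx, xtwy)
    return sorted(zip(words, coef[1:]), key=lambda t: -abs(t[1]))

def toy_meter_prob(kept_words):
    """Hypothetical classifier: probability of one meter given the kept words."""
    return 0.2 + 0.5 * ("qifa" in kept_words) + 0.1 * ("nabki" in kept_words)

print(explain(["qifa", "nabki", "min"], toy_meter_prob))
```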

In the context of Arabic meter classification, LIME helps identify the specific words or characters that most strongly influence the model's prediction, which helps interpret and validate the patterns learned by the transformer models. The final training parameters for each model are listed in Table 3, and the tuning parameters are listed in Table 2.

Table 3.

Training parameters used in the proposed study for each model.

4. Results

This study uses the MetRec dataset [25], which comprises 55,440 verses, that is, 110,880 half-verses. To the best of our knowledge, no previous study has applied transformer models to half-verse data. The data were split into training, validation, and test sets in a 60:20:20 ratio, and the 14 meter labels were encoded as integers from 0 to 13.
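The split and label encoding can be sketched as follows. Assumptions: each MetRec record is represented as a hypothetical `(first_half, second_half, meter)` tuple, the meter names follow a common transliteration of the 14 meters, and labels are assigned alphabetically, since the paper's actual 0-13 mapping and shuffling seed are not given. The sketch splits at the verse level before flattening, which keeps both halves of a verse in the same subset; whether the original split was done this way, or stratified by meter, is not stated.

```python
import random

# Assumed transliterations of the 14 MetRec meters; the alphabetical label
# order is illustrative only (the paper's 0-13 mapping is not stated).
METERS = sorted([
    "baseet", "hazaj", "kamel", "khafeef", "madeed", "mujtath", "munsareh",
    "mutadarak", "mutakareb", "rajaz", "ramal", "saree", "taweel", "wafer",
])
LABEL_OF = {name: idx for idx, name in enumerate(METERS)}  # meter name -> 0..13


def split_verses(verses, seed=42):
    """Shuffle whole verses and split them 60:20:20 into train/validation/test."""
    verses = list(verses)
    random.Random(seed).shuffle(verses)
    n_train = len(verses) * 6 // 10  # integer arithmetic avoids float rounding
    n_val = len(verses) * 2 // 10
    return verses[:n_train], verses[n_train:n_train + n_val], verses[n_train + n_val:]


def to_half_verses(verses):
    """Turn (first_half, second_half, meter) records into (half_verse, label) pairs."""
    return [(half, LABEL_OF[meter]) for first, second, meter in verses for half in (first, second)]


# Placeholder records standing in for the 55,440 MetRec verses.
dummy = [(f"first{i}", f"second{i}", METERS[i % 14]) for i in range(55440)]
train, val, test = (to_half_verses(part) for part in split_verses(dummy))
print(len(train), len(val), len(test))
```

For 55,440 verses, a 60:20:20 split gives 33,264 / 11,088 / 11,088 verses, that is, 66,528 / 22,176 / 22,176 half-verses, which together account for all 110,880 half-verses.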

4.1. Deep Learning Models Results Comparison

For the DL models, the text was encoded at the character level. Both the BiLSTM and BiGRU models were trained with varying numbers of hidden layers, and the complete performance results are presented in Table 4.
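A minimal sketch of character-level encoding, assuming each half-verse is mapped character by character to integer ids and padded or truncated to a fixed length before entering the embedding layer of the BiLSTM/BiGRU. The reserved ids (0 for padding, 1 for unseen characters) and the `max_len` value are assumptions; the paper does not specify them here. Arabic diacritics (harakat) are separate Unicode code points, so if they are kept in the text they become tokens of their own.

```python
def build_char_vocab(texts):
    """Assign an integer id to every distinct character (0 = padding, 1 = unknown)."""
    chars = sorted({ch for text in texts for ch in text})
    return {ch: idx for idx, ch in enumerate(chars, start=2)}


def encode_chars(text, vocab, max_len):
    """Encode a half-verse as a fixed-length list of character ids.

    Longer inputs are truncated; shorter ones are right-padded with 0.
    """
    ids = [vocab.get(ch, 1) for ch in text[:max_len]]  # unseen characters map to 1
    return ids + [0] * (max_len - len(ids))


vocab = build_char_vocab(["قفا نبك من ذكرى حبيب ومنزل"])
print(encode_chars("قفا نبك", vocab, max_len=10))
```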

Table 4.

The performance of the DL models in the proposed study.

The BiGRU model achieved its best performance at 5 layers, reaching a testing accuracy of 90.39%, with F1-score, recall, and precision scores all equal to 0.90. The BiLSTM model performed best at 6 layers, attaining a testing accuracy of 90.53%, with recall of 0.90, and both precision and F1-score reaching 0.91.

The EarlyStopping callback terminated training when the validation loss failed to decrease for eight consecutive epochs; the resulting stopping epoch for each layer configuration is reported in Table 4.

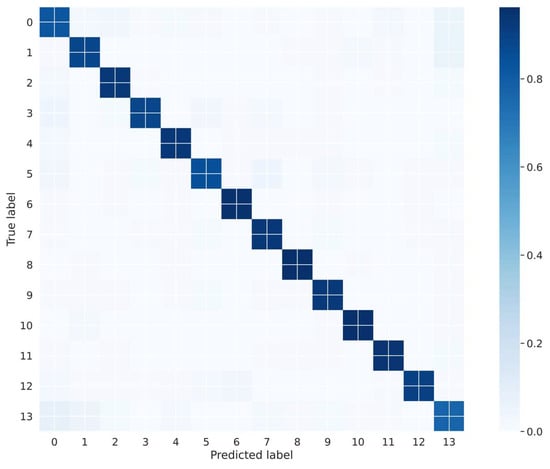

The performance per class for the BiLSTM model with 6 layers is shown in Table 5. The baseet and taweel meters achieved the highest F1-scores (0.96), while the rajaz meter recorded the lowest (0.79). The corresponding confusion matrix is illustrated in Figure 10.
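Per-class scores such as those in Table 5 follow directly from a confusion matrix. The sketch below derives one-vs-rest counts and precision, recall, and F1 for every class, plus overall accuracy and the macro-averaged F1. The 3×3 matrix in the usage example is a toy (rows and columns standing for kamel, madeed, and rajaz), not data from this study; in it, the two kamel verses predicted as madeed are false negatives for kamel and false positives for madeed, matching the convention described in Section 3.5.

```python
def per_class_report(cm):
    """Derive one-vs-rest counts and metrics per class from a confusion matrix.

    cm[i][j] is the number of half-verses whose true meter is i and whose
    predicted meter is j. Returns (per-class list, accuracy, macro F1).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    report = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # true c, predicted as another meter
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, actually another meter
        tn = total - tp - fn - fp                  # neither true nor predicted c
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report.append({"tp": tp, "fp": fp, "fn": fn, "tn": tn,
                       "precision": precision, "recall": recall, "f1": f1})
    accuracy = sum(cm[c][c] for c in range(n)) / total
    macro_f1 = sum(r["f1"] for r in report) / n
    return report, accuracy, macro_f1


toy_cm = [[8, 2, 0],   # true kamel
          [1, 9, 0],   # true madeed
          [0, 0, 10]]  # true rajaz
report, accuracy, macro_f1 = per_class_report(toy_cm)
for name, row in zip(["kamel", "madeed", "rajaz"], report):
    print(name, round(row["precision"], 3), round(row["recall"], 3), round(row["f1"], 3))
```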

Table 5.

Class-wise results for the BiLSTM model with 6 hidden layers.

Figure 10.

Confusion matrix of the BiLSTM model with 6 layers.

4.2. Transformer Models Results Comparison

For the transformer-based models, the input text was encoded with each model's own tokenizer, and the models were fine-tuned with different batch sizes. The full performance results are summarized in Table 6.
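As an illustration of what tokenizer-based encoding involves, the sketch below implements the greedy longest-match-first lookup that WordPiece tokenizers (such as CAMeLBERT's) apply to each whitespace-separated word. The tiny vocabulary is hypothetical; real tokenizers load a much larger vocabulary from the pretrained checkpoint (for example through Hugging Face's `AutoTokenizer.from_pretrained`) and also handle normalization and punctuation splitting, which this sketch omits.

```python
def wordpiece(word, vocab, unk="[UNK]", prefix="##"):
    """Greedy longest-match-first WordPiece segmentation of a single word.

    Non-initial pieces carry the continuation prefix; if any position cannot
    be matched, the whole word becomes the unknown token.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:  # try the longest remaining substring first
            piece = word[start:end]
            if start > 0:
                piece = prefix + piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]
        pieces.append(match)
        start = end
    return pieces


toy_vocab = {"ال", "##كتاب", "كتاب"}  # hypothetical toy vocabulary
print(wordpiece("الكتاب", toy_vocab))
```

Unlike the character encoding used for the DL models, this splits text into subword units learned from large corpora, so a single id can cover several characters.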

Table 6.

Performance of the transformer models in the proposed study.

The CAMeLBERT model, with a batch size of 64, delivered the best performance among the transformer models, achieving 90.62% testing accuracy along with F1-score, recall, and precision scores of 0.91.

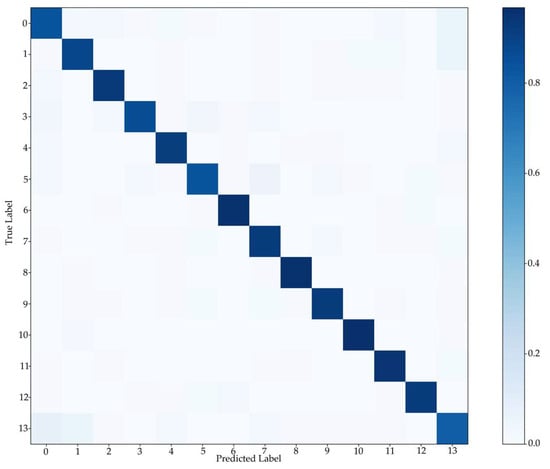

Class-wise results for CAMeLBERT with batch size 64 are provided in Table 7. Similar to the BiLSTM model, baseet and taweel attained the highest F1-score of 0.96, while rajaz had the lowest at 0.80. The confusion matrix is shown in Figure 11.

Table 7.

Class-wise results for CAMeLBERT with batch size 64.

Figure 11.

Confusion matrix of the CAMeLBERT model with batch size 64.

5. Discussion

This study analyzed pretrained transformer models and bidirectional deep learning (DL) models on half-verse data covering 14 meters. Among the tested models, CAMeLBERT achieved the highest accuracy (90.62%), marginally outperforming the BiLSTM model (90.53%).

Transformer tokenizers were also evaluated as input encoders for the BiLSTM model. Specifically, the SentencePiece (AraBERT) and WordPiece (CAMeLBERT) tokenizers were assessed with a two-layer BiLSTM, as shown in Table 8. These tokenizers did not yield significant performance improvements with BiLSTM, so character encoding was retained for the DL models.

Table 8.

Performance of the BiLSTM model using transformer tokenizers.

BiLSTM builds on the long-term memory of the LSTM by processing each sequence in both directions [67]. Compared with GRUs, LSTMs, which maintain a separate memory cell, are often considered better at capturing long-range dependencies. In related work, Al-Shathry et al. [68] used a balanced dataset of full-verse poems, randomly selecting 1000 verses for each of the 14 meters; their BiGRU model achieved 98.6% accuracy and recall, precision, and F1-scores of 0.90.
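To make the LSTM/GRU contrast concrete, the standard cell equations are given below. This is the textbook formulation; framework implementations may differ in details, and conventions differ on whether z_t or 1 − z_t multiplies h_{t−1} in the GRU. Here σ is the logistic sigmoid, ⊙ the element-wise product, and x_t the embedding of the t-th input character. The LSTM keeps a separate cell state c_t that is updated additively through the forget and input gates, whereas the GRU folds its memory into the hidden state h_t; a bidirectional layer concatenates the forward and backward hidden states.

```latex
\begin{aligned}
&\textbf{LSTM:} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f), \quad
i_t = \sigma(W_i x_t + U_i h_{t-1} + b_i), \quad
o_t = \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c), \quad
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t, \quad
h_t = o_t \odot \tanh(c_t) \\[4pt]
&\textbf{GRU:} \\
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z), \quad
r_t = \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
\tilde{h}_t &= \tanh\big(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h\big), \quad
h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t \\[4pt]
&\textbf{Bidirectional output:} \quad
h_t = \big[\,\overrightarrow{h}_t \,;\, \overleftarrow{h}_t\,\big]
\end{aligned}
```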

Transformer models have notably advanced NLP, including Arabic meter classification. They use deep contextual embeddings to capture intricate linguistic patterns specific to Arabic [34,69]. CAMeLBERT, for example, was fine-tuned and evaluated on diverse datasets, including one related to poetry, on which it achieved only 80.9% accuracy [43]. Much of the strength of transformer models is attributed to self-attention, which relates every token to every other token directly rather than through a recurrent state, easing the modeling of long-range dependencies compared with recurrent networks such as BiLSTM and BiGRU; these models are particularly adept at capturing complex linguistic characteristics [55]. In this study, however, CAMeLBERT's margin over BiLSTM was small (90.62% versus 90.53%).
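In its standard scaled dot-product form [22], self-attention is defined as follows. X ∈ ℝ^{n×d} stacks the embeddings of the n tokens of a half-verse, W^Q, W^K, and W^V are learned projections, and d_k is the key dimension. Because every row of the output is a weighted combination of all n value vectors, the first and last tokens interact within a single layer, whereas a recurrent network must carry that information through n − 1 state updates. BERT-style models such as CAMeLBERT run h such heads in parallel and concatenate their outputs.

```latex
\begin{aligned}
Q &= X W^{Q}, \qquad K = X W^{K}, \qquad V = X W^{V} \\
\operatorname{Attention}(Q, K, V) &= \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \\
\operatorname{MultiHead}(X) &= \big[\,\text{head}_1 ; \dots ; \text{head}_h\,\big]\, W^{O}, \qquad
\text{head}_i = \operatorname{Attention}\big(X W_i^{Q}, X W_i^{K}, X W_i^{V}\big)
\end{aligned}
```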

Classical Arabic poetry follows systematic metrical structures that can be represented effectively with half-verses: each verse consists of two halves (the ṣadr and the ʿajuz) composed in the same meter, so a half-verse preserves the essential rhythmic and structural features needed to identify it. Table 9 compares this study with previous research using the same half-verse dataset.

Table 9.

Comparison with previous studies using the same half-verse dataset.

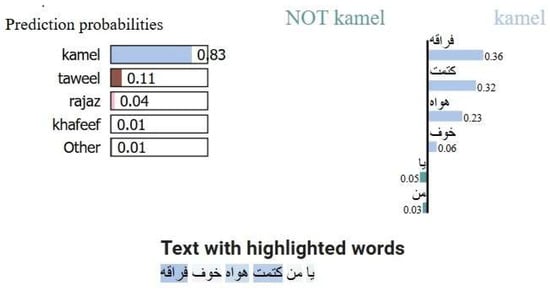

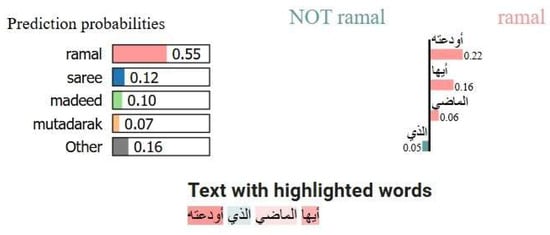

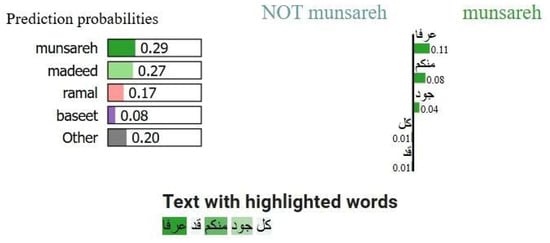

LIME explanations for three representative sample texts are visualized in Figure 12, Figure 13 and Figure 14. LIME highlights the words that most influence the model's prediction in different colors. These visualizations illustrate how particular syllables or phrases contribute to the identification of a specific meter, together with the predicted probabilities of the top-ranked meters.

Figure 12.

LIME explanation with sample text 1.

Figure 13.

LIME explanation with sample text 2.

Figure 14.

LIME explanation with sample text 3.

5.1. Practical Implications

The findings of this study have practical implications for Arabic poetry analysis and AI-driven literary tools. The high accuracy obtained on half-verse data shows that meter classification can be automated effectively without requiring full verses, which enhances its real-world applicability. Moreover, the ability of DL and transformer models to learn metrical patterns supports the development of educational tools that allow researchers and students to analyze and classify Arabic poetic meters rapidly.

This research contributes to Arabic NLP, particularly to tasks that demand rhythm-sensitive analysis. Showing that half-verses suffice for accurate classification reduces input length and processing demands, broadening access to AI-driven solutions in Arabic literary and language technologies.

5.2. Limitations and Future Work

Although the proposed approach achieves high accuracy in Arabic meter classification using half-verse data, it has certain limitations. Chief among them is its reliance on a single dataset, which may not adequately represent the diversity of Arabic poetry. Future research should expand the data to cover a wider range of poetic styles and emotions in order to assess how well the models generalize.

Additionally, exploring multimodal approaches that combine textual and audio features could provide deeper insights into Arabic poetry. Such interdisciplinary research would bridge computational linguistics, phonetics, and literary analysis, potentially leading to more nuanced and human-like poetic classification systems.

6. Conclusions

This study explored Arabic meter categorization using various deep learning and transformer models, including AraBERT, AraELECTRA, Arabic-BERT, ARBERT, MARBERT, CAMeLBERT, BiGRU, and BiLSTM models. To the best of our knowledge, no prior research has evaluated this combination of methodologies, making our contribution novel in the field.

By applying these methods, this study offers new perspectives on processing and classifying Arabic poetic meters. CAMeLBERT achieved the highest accuracy (90.62%), closely followed by BiLSTM (90.53%). These results show that transformer models can capture the complex metrical structures of Arabic poetry at least as well as strong recurrent baselines, and they open new avenues for Arabic NLP research.

Author Contributions

Conceptualization, A.M.M.; Data Curation, S.S.; Formal Analysis, S.S.; Funding Acquisition, A.M.M.; Methodology, A.M.M. and S.S.; Project Administration, A.M.M.; Resources, A.M.M.; Software, S.S.; Supervision, A.M.M.; Validation, A.M.M.; Writing—Original Draft, A.M.M. and S.S.; Writing—Review and editing, A.M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Kuwait University, grant number EO06/24.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is publicly available at [25].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yi, X.; Sun, M.; Li, R.; Li, W. Automatic poetry generation with mutual reinforcement learning. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3143–3153.

- Badawī, M.M. A Critical Introduction to Modern Arabic Poetry; Cambridge University Press: Cambridge, UK, 1975.

- Scott, H. Pegs, Cords, and Ghuls: Meter of Classical Arabic Poetry. Ph.D. Thesis, Swarthmore College, Swarthmore, PA, USA, 2010.

- Abandah, G.A.; Suyyagh, A.E.; Abdel-Majeed, M.R. Transfer learning and multi-phase training for accurate diacritization of Arabic poetry. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 3744–3757.

- Alkiyumi, M. The creative linguistic achievements of Alkhalil bin Ahmed Al-Farahidi, and motives behind his creations: A case study. Cogent Arts Humanit. 2023, 10, 2196842.

- Mohamed, M.; Al-Azani, S. Enhancing Arabic NLP Tasks through Character-Level Models and Data Augmentation. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 2744–2757.

- Kumar, S.; Sharma, S.; Megra, K.T. Transformer-enabled multi-modal medical diagnosis for tuberculosis classification. J. Big Data 2025, 12, 5.

- Kihal, M.; Hamza, L. Efficient Arabic and English social spam detection using a transformer and 2D convolutional neural network-based deep learning filter. Int. J. Inf. Secur. 2025, 24, 56.

- Azzeh, M.; Qusef, A.; Alabboushi, O. Arabic fake news detection in social media context using word embeddings and pre-trained transformers. Arab. J. Sci. Eng. 2025, 50, 923–936.

- Al Deen, M.M.S.; Pielka, M.; Hees, J.; Abdou, B.S.; Sifa, R. Improving natural language inference in Arabic using transformer models and linguistically informed pre-training. In Proceedings of the 2023 IEEE Symposium Series on Computational Intelligence (SSCI), Mexico City, Mexico, 5–8 December 2023; pp. 318–322.

- Alharbi, A.O.; Alsuhaibani, A.; Alalawi, A.A.; Naseem, U.; Jameel, S.; Kanhere, S.; Razzak, I. Evaluating large language models on health-related claims across Arabic dialects. In Proceedings of the 1st Workshop on NLP for Languages Using Arabic Script, Abu Dhabi, United Arab Emirates, 19–20 January 2025; pp. 95–103.

- Senator, F.; Lakhfif, A.; Zenbout, I.; Boutouta, H.; Mediani, C. Leveraging ChatGPT for enhancing Arabic NLP: Application for semantic role labeling and cross-lingual annotation projection. IEEE Access 2025, 13, 3707–3725.

- Al-Onazi, B.B.; ElTahir, M.M.; Alzaidi, M.S.A.; Ebad, S.A.; Alotaibi, S.D.; Sayed, A. Automating Meter Classification of Arabic Poems: A Harris Hawks optimization with deep learning perspective. Fractals 2025, 33, 2540008.

- Atassi, A.; El Azami, I. Comparison and generation of a poem in Arabic language using the LSTM, BiLSTM, and GRU. J. Manag. Inf. Decis. Sci. 2022, 25, 1–8.

- Ahmed, M.A.; Hasan, R.A.; Ali, A.H.; Mohammed, M.A. Classification of the modern Arabic poetry using machine learning. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2019, 17, 2667–2674.

- Alqasemi, F.; Salah, A.-H.; Abdu, N.A.A.; Al-Helali, B.; Al-Gaphari, G. Arabic poetry meter categorization using machine learning based on customized feature extraction. In Proceedings of the 2021 International Conference on Intelligent Technology, System and Service for Internet of Everything (ITSS-IoE), Sana′a, Yemen, 1–2 November 2021; pp. 1–4.

- Naaz, K.; Singh, N.K. A learning approach towards meter-based classification of similar Hindi poems using a proposed two-level data transformation. Digit. Scholarsh. Humanit. 2023, 38, 1166–1182.

- Ahmed, N.; Aziz, S.T.; Mojumder, M.A.N.; Mridul, M.A. Automatic classification of Meter in Bangla poems: A Machine Learning Approach. In Proceedings of the 2023 6th International Conference on Information Systems and Computer Networks (ISCON), Mathura, India, 3–4 March 2023; pp. 1–5.

- Yousef, W.A.; Ibrahime, O.M.; Madbouly, T.M.; Mahmoud, M.A. Learning meters of Arabic and English poems with recurrent neural networks: A step forward for language understanding and synthesis. arXiv 2019, arXiv:1905.05700.

- Rajan, R.; Chandrika Reghunath, L.; Varghese, L.T. POMET: A corpus for poetic meter classification. Lang. Resour. Eval. 2022, 56, 1131–1152.

- Mahmudi, A.; Veisi, H. Automatic meter classification of Kurdish poems. PLoS ONE 2023, 18, e0280263.

- Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Proceedings of the Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Rabie, E.M.; Hashem, A.F.; Alsheref, F.K. Recognition model for major depressive disorder in Arabic user-generated content. Beni-Suef Univ. J. Basic Appl. Sci. 2025, 14, 7.

- Valcamonico, D.; Baraldi, P.; Macêdo, J.B.; Moura, M.D.C.; Brown, J.; Gauthier, S.; Zio, E. A systematic procedure for the analysis of maintenance reports based on a taxonomy and BERT attention mechanism. Reliab. Eng. Syst. Saf. 2025, 257, 110834.

- Al-Shaibani, M.S.; Alyafeai, Z.; Ahmad, I. MetRec: A dataset for meter classification of Arabic poetry. Data Brief 2020, 33, 106497.

- Al-Shaibani, M.S.; Alyafeai, Z.; Ahmad, I. Meter classification of Arabic poems using deep bidirectional recurrent neural networks. Pattern Recognit. Lett. 2020, 136, 1–7.

- Zeyada, S.; Eladawy, M.; Ismail, M.; Keshk, H. A proposed system for the identification of Modem Arabic poetry meters (IMAP). In Proceedings of the 2020 15th International Conference on Computer Engineering and Systems (ICCES), Cairo, Egypt, 15–16 November 2020; pp. 1–5.

- Al-Talabani, A.K. Automatic recognition of Arabic poetry meter from speech signals using long short-term memory and support vector machine. ARO-Sci. J. Koya Univ. 2020, 8, 50–54.

- Berkani, A.; Holzer, A.; Stoffel, K. Pattern matching in meter detection of Arabic classical poetry. In Proceedings of the 2020 IEEE/ACS 17th International Conference on Computer Systems and Applications (AICCSA), Antalya, Turkey, 5 November 2020; pp. 1–8.

- Khalaf, Z.; Alabbas, M.; Ali, S. Computerization of Arabic poetry meters. UOS J. Pure Appl. Sci. 2009, 6, 41–62.

- Albaddawi, M.M.; Abandah, G.A. Pattern and poet recognition of Arabic poems using BiLSTM networks. In Proceedings of the 2021 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), Amman, Jordan, 16–17 November 2021; pp. 72–77.

- Abandah, G.A.; Khedher, M.Z.; Abdel-Majeed, M.R.; Mansour, H.M.; Hulliel, S.F.; Bisharat, L.M. Classifying and diacritizing Arabic poems using deep recurrent neural networks. J. King Saud Univ.-Comput. Inf. Sci. 2020, 34, 3775–3788.

- Talghalit, I.; Alami, H.; Ouatik El Alaoui, S. Contextual semantic embeddings based on transformer models for Arabic biomedical questions classification. HighTech Innov. J. 2024, 5, 1024–1037.

- Alosaimi, W.; Saleh, H.; Hamzah, A.A.; El-Rashidy, N.; Alharb, A.; Elaraby, A.; Mostafa, S. ArabBert-LSTM: Improving Arabic sentiment analysis based on transformer model and long short-term memory. Front. Artif. Intell. 2024, 7, 1408845.

- Karfi, I.E.; Fkihi, S.E. A combined Bi-LSTM-GPT model for Arabic sentiment analysis. Int. J. Intell. Syst. Appl. Eng. 2023, 11, 77–84.

- Xu, G.; Meng, Y.; Qiu, X.; Yu, Z.; Wu, X. Sentiment analysis of comment texts based on BiLSTM. IEEE Access 2019, 7, 51522–51532.

- Zhou, H. Research of text classification based on TF-IDF and CNN-LSTM. J. Phys. Conf. Ser. 2022, 2171, 012021.

- Refai, D.; Abu-Soud, S.; Abdel-Rahman, M.J. Data augmentation using transformers and similarity measures for improving Arabic Text Classification. IEEE Access 2022, 11, 132516–132531.

- Qarah, F. AraPoemBERT: A pretrained language model for Arabic poetry analysis. arXiv 2024, arXiv:2403.12392.

- Hossain, M.M.; Hossain, M.S.; Safran, M.; Alfarhood, S.; Alfarhood, M.; Mridha, M.F. A hybrid attention-based transformer model for Arabic news classification using text embedding and deep learning. IEEE Access 2024, 12, 198046–198066.

- Alshammari, H.; El-Sayed, A.; Elleithy, K. AI-generated text detector for Arabic language using encoder-based transformer architecture. Big Data Cogn. Comput. 2024, 8, 32.

- Antoun, W.; Baly, F.; Hajj, H. AraELECTRA: Pre-training text discriminators for Arabic language understanding. In Proceedings of the Sixth Arabic Natural Language Processing Workshop, Virtual, 19 April 2021; pp. 191–195.

- Inoue, G.; Alhafni, B.; Baimukan, N.; Bouamor, H.; Habash, N. The interplay of Variant, Size, and task type in Arabic pre-trained language models. In Proceedings of the Sixth Arabic Natural Language Processing Workshop, Virtual, 9 April 2021; pp. 92–104.

- Alzaidi, M.S.A.; Alshammari, A.; Hassan, A.Q.A.; Ebad, S.A.; Sultan, H.A.; Alliheedi, M.A.; Aljubailan, A.A.; Alzahrani, K.A. Enhanced automated text categorization via Aquila optimizer with deep learning for Arabic news articles. Ain Shams Eng. J. 2025, 16, 103189.

- Badri, N.; Kboubi, F.; Chaibi, A.H. Abusive and hate speech Classification in Arabic text using pre-trained language models and data augmentation. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 23, 155.

- Al-Shaibani, M.S.; Ahmad, I. Dotless Arabic text for Natural Language Processing. Comput. Linguist. 2025, 1–42.

- Selim, M.M.; Assiri, M.S. Enhancing Arabic text-to-speech synthesis for emotional expression in visually impaired individuals using the artificial hummingbird and hybrid deep learning model. Alex. Eng. J. 2025, 119, 493–502.

- Wang, X.; Xiangfeng, L.; Wang, X.; Yu, H. Homophilic and heterophilic-aware sparse graph transformer for financial fraud detection. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–8.

- Mustafa, A.M.; Nakhleh, S.; Irsheidat, R.; Alruosan, R. Interpreting Arabic transformer models: A study on XAI interpretability for Qur’anic semantic-search models. Jordanian J. Comput. Inf. Technol. 2024, 10.

- Alotaibi, A.; Nadeem, F. An unsupervised integrated framework for Arabic aspect-based sentiment analysis and abstractive text summarization of traffic services using transformer models. Smart Cities 2025, 8, 62.

- Alshammari, N. Bangor University at WojoodNER 2024: Advancing Arabic named entity recognition with CAMeLBERT-Mix. In Proceedings of the Second Arabic Natural Language Processing Conference, Bangkok, Thailand, 16 August 2024; pp. 880–884.

- Belbachir, F.; Soukane, A. Enhancing named entity recognition in Arabic: Leveraging transformer-based models and open data sources. In Proceedings of the 2024 2nd International Conference on Foundation and Large Language Models (FLLM), Dubai, United Arab Emirates, 26–29 November 2024; pp. 357–361.

- El Mekki, A.; El Mahdaouy, A.; Essefar, K.; El Mamoun, N.; Berrada, I.; Khoumsi, A. BERT-based multi-task model for country and province level MSA and dialectal Arabic Identification. In Proceedings of the Workshop on Arabic Natural Language Processing, Kiev, Ukraine, 19 April 2021.

- Alruily, M.; Manaf Fazal, A.; Mostafa, A.M.; Ezz, M. Automated Arabic long-tweet classification using transfer learning with BERT. Appl. Sci. 2023, 13, 3482.

- Alammary, A.S. BERT models for Arabic text classification: A systematic review. Appl. Sci. 2022, 12, 5720.

- Alammary, A.S. Investigating the impact of pretraining corpora on the performance of Arabic BERT models. J. Supercomput. 2024, 81, 187.

- Choo, S.; Kim, W. A study on the evaluation of tokenizer performance in natural language processing. Appl. Artif. Intell. 2023, 37, 2175112.

- Ersoy, A.; Yıldız, O.T.; Özer, S. ORTPiece: An ORT-based Turkish Image Captioning Network Based on transformers and WordPiece. In Proceedings of the 2023 31st Signal Processing and Communications Applications Conference (SIU), Istanbul, Turkey, 5–8 July 2023; pp. 1–4.

- Choi, Y.; Lee, K.J. Performance analysis of Korean morphological analyzer based on transformer and BERT. J. KIISE 2020, 47, 730–741.

- Antoun, W.; Baly, F.; Hajj, H. AraBERT: Transformer-based model for Arabic language understanding. In Proceedings of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools, with a Shared Task on Offensive Language Detection, Marseille, France, 11–16 May 2020; pp. 9–15.

- Hussein, H.H.; Lakizadeh, A. A systematic assessment of sentiment analysis models on Iraqi dialect-based texts. Syst. Soft Comput. 2025, 7, 200203.

- Abdul-Mageed, M.; Elmadany, A.; Nagoudi, E.M.B. ARBERT and MARBERT: Deep bidirectional transformers for Arabic. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 3 August 2021; pp. 7088–7105.

- Safaya, A.; Abdullatif, M.; Yuret, D. KUISAIL at SemEval-2020 Task 12: BERT-CNN for offensive speech identification in social media. In Proceedings of the Fourteenth Workshop on Semantic Evaluation, Online, 12–13 December 2020; pp. 2054–2059.

- Sun, Z.; Zhang, Z.; Zhang, M. BiLSTM personalized-style poetry generation algorithm. In Proceedings of the 2023 IEEE 3rd International Conference on Power, Electronics and Computer Applications (ICPECA), Shenyang, China, 29–31 January 2023; pp. 836–840.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Garreau, D.; Luxburg, U. Explaining the explainer: A first theoretical analysis of LIME. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Online, 26–28 August 2020; pp. 1287–1296.

- Verma, B.; Thomas, A.; Verma, R.K. Innovative abstractive Hindi text summarization model incorporating Bi-LSTM classifier, optimizer, and generative AI. Intell. Decis. Technol. 2025, 1–9.

- Al-Shathry, N.; Al-Onazi, B.; Hassan, A.Q.A.; Alotaibi, S.; Alotaibi, S.; Alotaibi, F.; Elbes, M.; Alnfiai, M. Leveraging hybrid adaptive sine cosine algorithm with deep learning for Arabic poem meter detection. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024.

- Kharsa, R.; Elnagar, A.; Yagi, S. BERT-based Arabic Diacritization: A state-of-the-art approach for improving text accuracy and pronunciation. Expert Syst. Appl. 2024, 248, 123416.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).