2. Related Work

Babajide et al. [5] and Weber and Achananuparp [6] focused on predicting future weight and the success of diet control based on factors such as diet management, body fat, sleep time, and physical condition. However, the methods developed in these studies cannot predict visual changes in individuals. To address this limitation, Kuzmar et al. [7] developed a technique to predict 3D body shape based on height, weight, and four other body measurements using the Skinned Multi-Person Linear (SMPL) model. Similarly, Zeng et al. [8] incorporated height, weight, and 17 other more deformable body dimensions to obtain more detailed body shape predictions. However, as these studies primarily utilized databases related to Western populations, their findings may not be applicable to Koreans. Additionally, relying solely on height and weight may not be sufficient for determining 3D body shapes similar to the subject’s, while incorporating additional human body measurements into a prediction model can be a complex process. Furthermore, 3D models based on the SMPL may not resemble the subject perfectly, as the SMPL model is based on a mannequin.

Image-to-image translation (I2I) is a computer vision technique that transforms input images into corresponding output images. This technique is particularly useful for predicting changes in images based on specific criteria. Advancements in deep learning have increased its utilization in the medical field as well. A prominent research focus within I2I is the prediction of changes in facial style. For instance, Karras et al. introduced the StyleGAN and StyleGAN2 algorithms, which enable various style transformations in facial images, such as alterations in hair color, based on human facial data [9,10]. These algorithms utilize deep learning to generate realistic and accurate transformations in facial appearance. In a related study, URSF-GAN [11] was proposed as an unsupervised framework for face frontalization that lifts single-view 2D facial images into 3D space, applies symmetric texture filling, and leverages a Pix2Pix-based network to eliminate artifacts. This approach shows that combining geometric priors such as 3D rotation with adversarial learning can produce photorealistic and identity-preserving facial outputs, even without paired training data.

Generative adversarial networks (GANs) [12] offer various capabilities, such as data augmentation and I2I, which have become increasingly important in the medical field [13,14]. Specifically, conditional GAN (cGAN) models have been used for pixel-level prediction in various I2I applications involving medical images [13,15,16]. Representative examples include the prediction of visceral and subcutaneous adipose tissue maps based on 2D body shape data obtained by projecting reconstructed 3D computed tomography (CT) data in one direction [15] and long-term predictions based on X-ray radiography that help doctors with segmentation and diagnosis by erasing the spine [16].

However, the aforementioned methods are not readily accessible to the general population because of the difficulty in acquiring appropriate data. GAN-based I2I can be used to predict postoperative appearance based on a preoperative image [17]. I2I has also been employed to predict patient appearance after orbital decompression using RGB images [13]. However, since the input image cannot be translated directly in that approach, latent vector optimization must be performed, which is a notable limitation as it requires about 20 min to complete. This problem can be overcome by employing the Pix2Pix algorithm, which takes the image itself as input and constructs a latent space from it before performing the prediction, without requiring a separate latent optimization process [18]. However, studies have reported that Pix2Pix creates blurry images [13,19,20].

In addition to the advancements in facial style prediction, Choi et al. proposed StarGAN, which is a single model that can accommodate multiple data domains, such as age, hair color, and gender [21], thereby enabling more versatile I2I across different attributes. Researchers have also explored the application of I2I techniques in the medical field. For example, Yoo et al. predicted patient appearances after orbital decompression surgery based on pre-surgery images, assisting patients in making informed decisions considering the potential surgical outcomes [13]. Similarly, Wang et al. employed I2I to predict maps of visceral fat and subcutaneous fat using 2D body shape data derived from 3D reconstructions of CT scans [15]. These studies demonstrate the potential of I2I techniques in various medical applications. However, despite active research related to facial changes in medical applications, few studies have focused on the entire human body because of the limited availability of comprehensive body shape data.

3. Materials and Methods

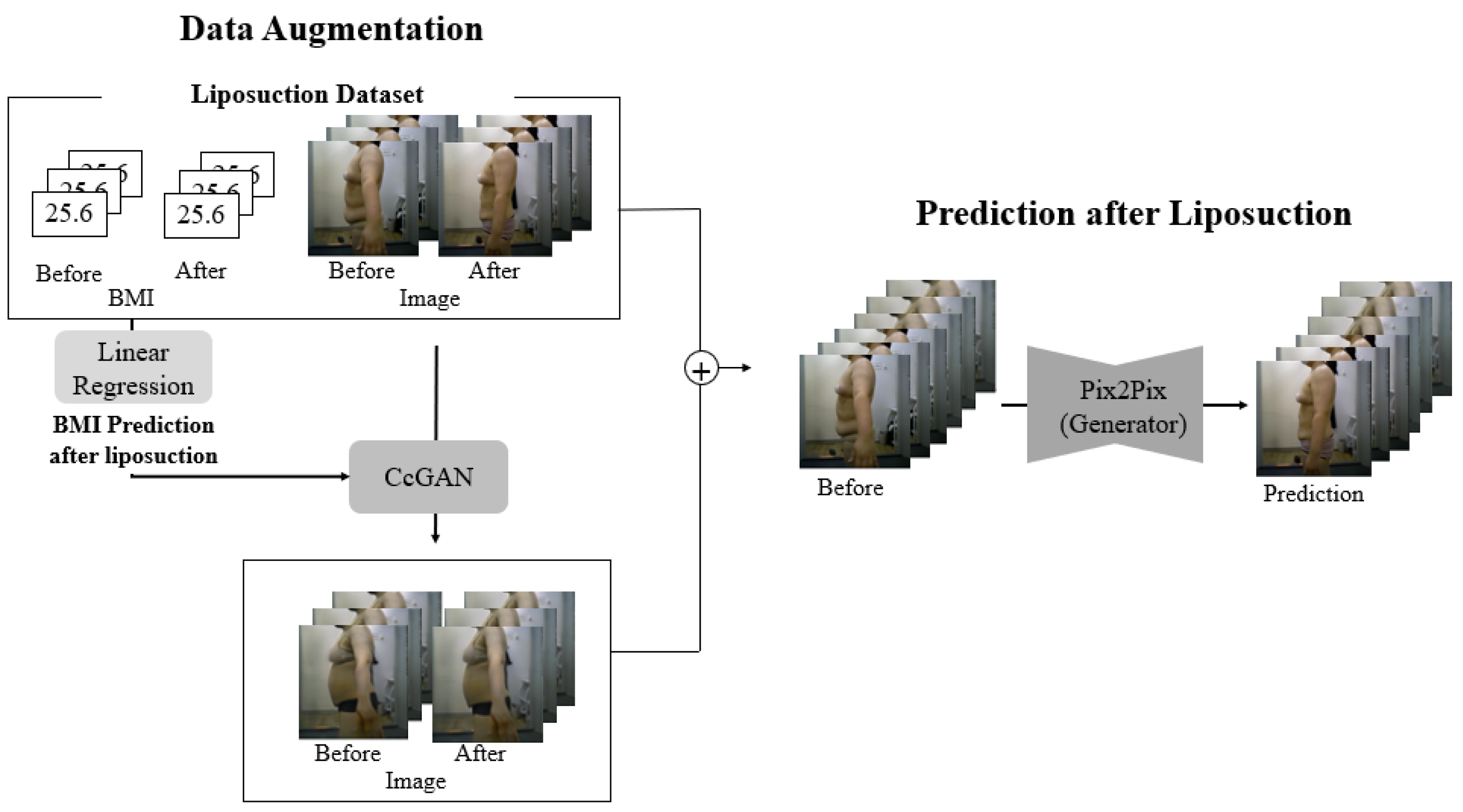

Figure 1 presents the proposed framework, which integrates data augmentation using CcGAN and image-to-image translation with Pix2Pix to predict post-liposuction body shape from preoperative RGB images. Both real and augmented paired data are used to compensate for the limited sample size.

(Data Collection) Since post-liposuction appearance must be predicted using images captured before liposuction, the collected data had to satisfy two conditions. First, to clearly determine the change in body shape, the images must depict the subject wearing only underwear. Second, image pairs depicting the same individual before and after liposuction are required. Accordingly, images of 230 patients who underwent liposuction were collected. This study’s protocol was approved by the Institutional Review Board of Dongguk University (DUIRB-202202-14), and the relevant procedures complied with the principles of the Declaration of Helsinki.

On the day of liposuction, the patient captured images of their upper and lower body in a fixed position from the front, left, right, and back; two months after liposuction, they captured the same images to record the postoperative changes. All images were captured with the camera 110 cm above the ground and 100 cm away from the heel. To ensure consistency in posture during image acquisition, all participants were instructed to stand with their arms naturally at their sides, feet shoulder-width apart, and eyes facing forward. However, due to the absence of explicit guidelines regarding arm angles or spacing between the arms and torso, individual variations in arm positioning occurred. This led to ambiguous boundaries between the abdomen and the arms in some cases. All images were captured in a closed studio environment without windows to eliminate the influence of natural light, using consistent lighting conditions and fixed camera settings. For participant safety and comfort, the entire process was supervised by medical professionals. A total of 920 images were collected, including front, back, left, and right views of the upper and lower body. Among these, 230 left-side upper body images were selected for model training and evaluation, as this viewpoint most clearly captured changes in the abdominal and flank areas and provided a distinct view of the target region for prediction.

The images were taken under controlled conditions in a clinical environment using a DSLR camera (Canon EOS 800D (Canon Inc., Tokyo, Japan), equipped with a 24.2 MP APS-C sensor). All subjects were photographed against a matte white background, and the lighting setup followed a three-point lighting system (left and right-side lights and a front-facing soft light) to minimize skin reflections and shadow artifacts. Continuous lighting with a color temperature of 5500 K was used, and no flash was applied. Additionally, before each session, subjects were instructed to remain under the lighting for five seconds to allow their skin tone to adjust to the environment. Lighting intensity and camera exposure settings were kept consistent across all sessions. These conditions were designed to ensure image consistency and allow accurate comparison of pre- and postoperative body shapes without distortion from lighting variations.

All experiments were implemented in Python 3.9.7 using PyTorch 1.13.1 and torchvision 0.14.1. Model training was performed with CUDA 11.4 on an NVIDIA RTX 2060 GPU (NVIDIA Corporation, Santa Clara, CA, USA). Body mass index (BMI) data for the patients were also collected.

(Data Augmentation) As only 230 image pairs were available, data augmentation was necessary to obtain meaningful results for analysis [22,23]. Surgical procedures such as double-eyelid surgery and orbital decompression involve a clear and consistent pattern of alteration in appearance. By contrast, although liposuction always results in weight loss, it is impossible to determine from a single image whether it depicts a pre-liposuction or post-liposuction appearance. Specifically, for slim people undergoing liposuction [24], the amount of removable fat is relatively limited, whereas for heavier individuals, removing too much in a single session could be risky [25]. Thus, images of certain individuals after liposuction could depict more fat than images of other individuals before liposuction. Therefore, conventional GAN-based data augmentation, which relies on discrete categorical labels, could not be performed. Instead, data augmentation was performed using continuous cGAN (CcGAN) [25] based on BMI [26,27], a continuous variable that reflects overall body characteristics and is commonly used to evaluate obesity.

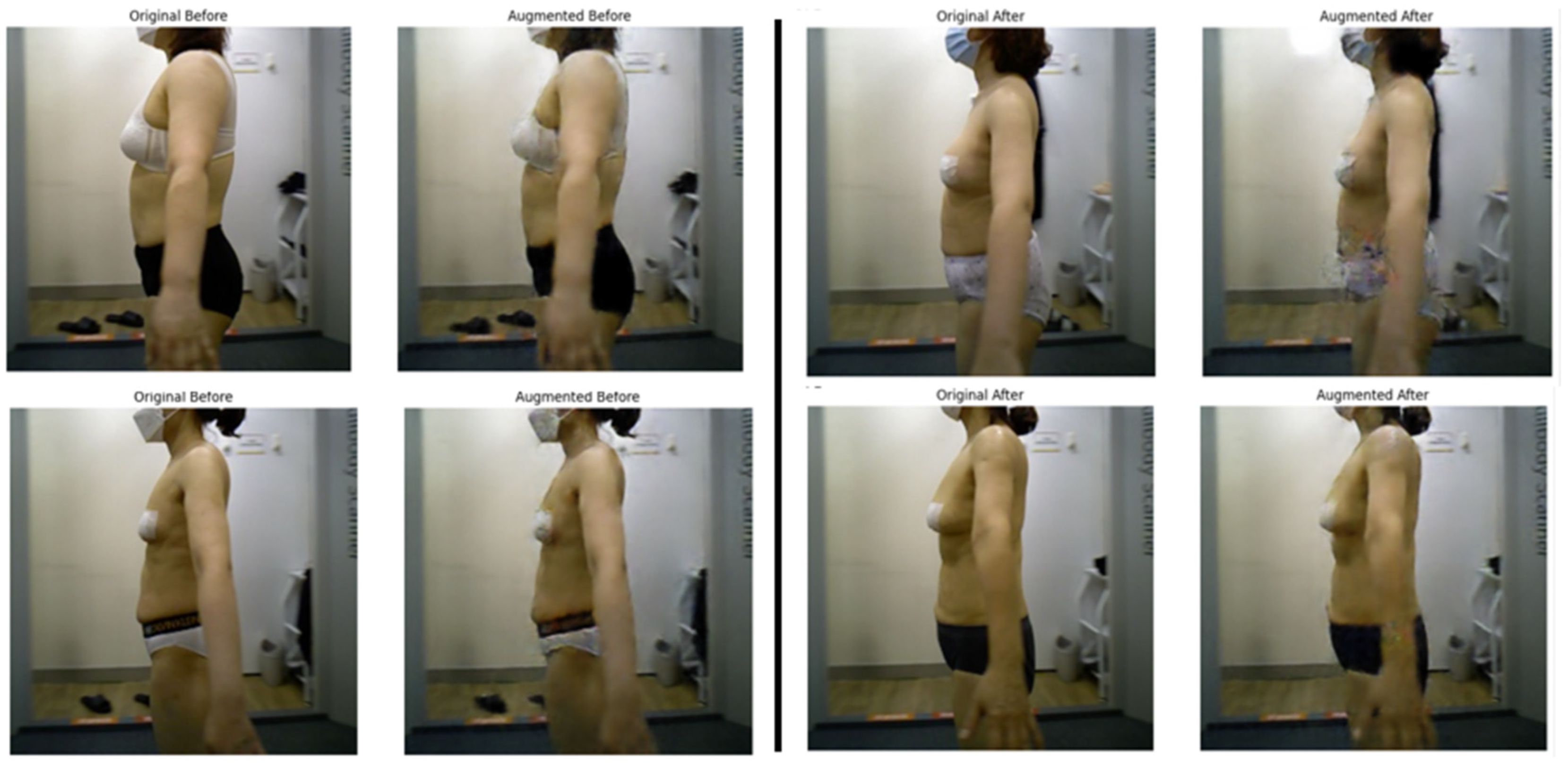

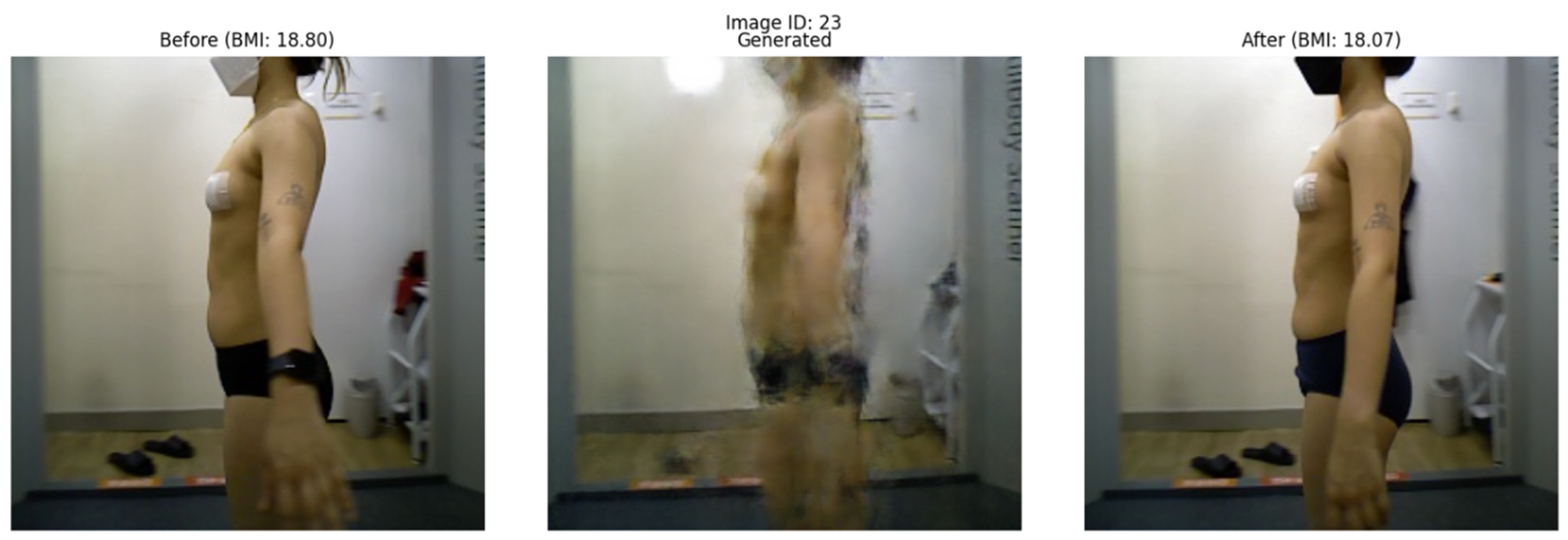

Figure 2 presents side-by-side examples of actual patient images and augmented images generated by the CcGAN. Each pair includes both real and synthetic pre- and post-liposuction images, demonstrating that the generated images realistically reflect changes in BMI.

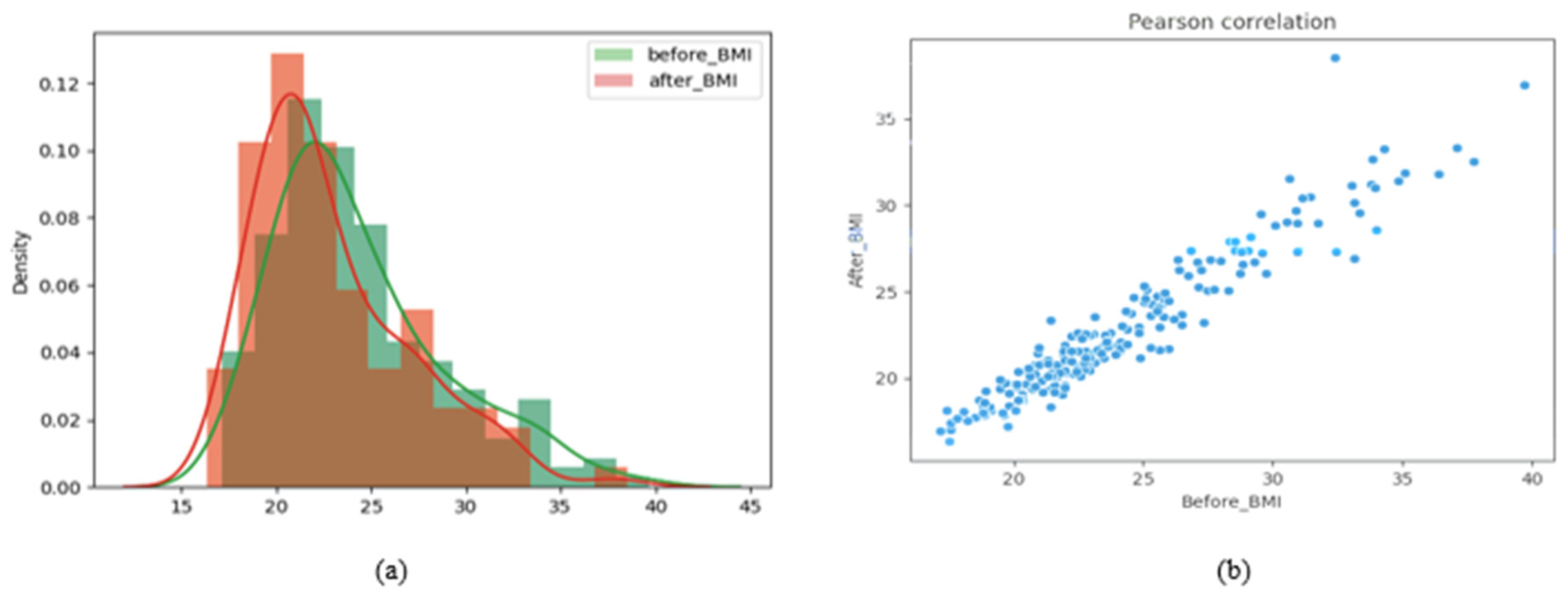

(Linear Regression) Based on the BMI distributions before and after liposuction in Figure 3a, liposuction appears to shift the BMI to the left (i.e., toward smaller values). A paired t-test on the BMI values before and after liposuction yielded a p-value of $1.0210 \times 10^{-33}$, which indicates a significant difference. A Pearson correlation analysis was also performed to determine whether the BMI values before and after liposuction share a linear relationship [28]. Pearson correlation coefficients closer to 1 indicate stronger positive linear relationships [29]. The calculated Pearson correlation coefficient in this study was 0.9552, which indicates a strong linear relationship. Figure 3b presents a distribution graph visualizing this linear relationship. Therefore, we performed linear regression to predict the BMI after liposuction based on the BMI before liposuction. Based on these predictions, paired data samples (images before and after liposuction) were generated during data augmentation.
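The correlation and regression steps described above can be illustrated with a minimal sketch. The BMI values below are hypothetical placeholders chosen for illustration, not the study data, and the function names `pearson_r` and `fit_line` are assumptions introduced here.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical pre-/post-liposuction BMI values (illustration only).
bmi_pre = [20.0, 24.0, 28.0, 32.0, 36.0]
bmi_post = [19.5, 22.9, 26.4, 29.6, 33.1]

r = pearson_r(bmi_pre, bmi_post)
slope, intercept = fit_line(bmi_pre, bmi_post)

# Predict the post-liposuction BMI for a new pre-liposuction BMI of 30.
predicted_post = slope * 30.0 + intercept
```

For a simple one-variable regression, the coefficient of determination equals the square of the Pearson coefficient, so a correlation of 0.9552 corresponds to an explanatory power of roughly 0.912.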

(CcGAN) CcGAN is a generative architecture for conditional image generation based on continuous numerical values as auxiliary information instead of discrete class labels [30]. Existing cGANs generate images mainly based on categorical labels and cannot directly utilize continuous data as labels. This limitation is addressed by CcGAN, which comprises a generator and a discriminator.

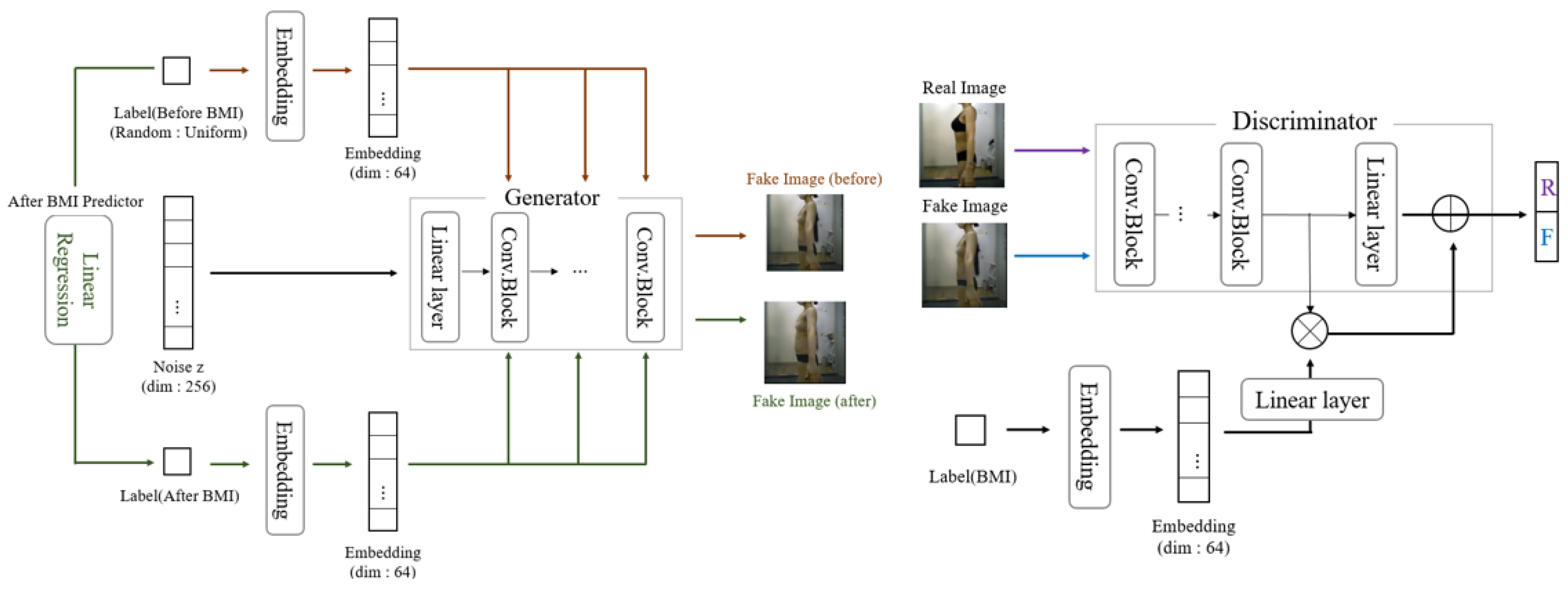

Figure 4 illustrates the CcGAN architecture used in this study. Both the generator and discriminator in CcGAN are implemented as CNNs. The generator receives a noise vector and embedded BMI value, processed through a series of convolutional layers and upsampling blocks. Specifically, the generator consists of five transposed convolutional layers with filter sizes of 512, 256, 128, 64, and 3, respectively. Each layer uses a 4 × 4 kernel, stride of 2, and padding of 1. Batch normalization and ReLU activation are applied to all layers except the last, which uses a tanh activation function to produce a 3-channel RGB image.

The discriminator evaluates the realism of the image using a CNN that processes the image and embedded label through parallel streams before concatenation and final classification. The discriminator consists of five convolutional layers with filter sizes of 64, 128, 256, 512, and 1. All layers use a 4 × 4 kernel. The first three layers apply a stride of 2, and the last two apply a stride of 1. Instance normalization is applied from the second layer onward, and LeakyReLU (slope = 0.2) is used as the activation function. The final layer uses a sigmoid function to classify real vs. fake. More detailed descriptions of the generator and discriminator structures are provided in the later section titled ‘Network Architecture: Pix2Pix’.

The generator generates images based on random noise z and a continuous label. The continuous label is transformed to a specific dimension through embedding before being used. Unlike Pix2Pix, CcGAN does not require an input image, as it synthesizes images from a random noise vector and condition value. Therefore, it does not employ an encoder–decoder structure such as U-Net. Instead, it uses a stack of transposed convolutional layers to upsample the input latent representation into a full-resolution image gradually. We performed data augmentation to create pre-liposuction and post-liposuction images for the same person.

While the noise z was fixed, different BMI values (before and after liposuction) were used as conditions to generate images of a person before and after liposuction, respectively. Fixing the noise vector z ensures that the identity-related latent features remain constant across the generated pre- and post-liposuction images. This design allows the BMI condition to modulate only the morphological aspects relevant to fat reduction, thereby enabling identity-consistent yet BMI-dependent image generation. Such a strategy aligns with prior work such as InfoGAN [31], which explicitly separates incompressible noise from semantic latent codes to disentangle identity and attribute factors in conditional generative models. This approach is particularly useful for simulating before-and-after transformations in medical imaging, where preserving individual identity is critical. Specifically, z was randomly sampled from a Gaussian distribution, and the pre-liposuction BMI was sampled from a uniform distribution bounded by the smallest (16.3176) and largest (39.7025) BMI values in the real dataset. Accordingly, pre-liposuction images were generated by combining the sampled pre-liposuction BMI with z.

Further, the BMI after liposuction was predicted via linear regression based on the BMI before liposuction, as mentioned earlier. Post-liposuction images were then generated by combining the predicted post-liposuction BMI with the same noise z. Thus, 2000 pairs of pre-liposuction and post-liposuction images were created in total. Finally, the complete dataset was separated into training and testing subsets, as outlined in Table 1.
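The pairing strategy described above (one shared noise vector, two BMI conditions) can be sketched as follows. This is only an illustration of how the generator inputs could be sampled. The latent size `Z_DIM` and the regression coefficients in `predict_post_bmi` are assumed placeholders, since neither is stated in the text, and the generator itself is omitted.

```python
import random

BMI_MIN, BMI_MAX = 16.3176, 39.7025  # BMI range reported for the real dataset
Z_DIM = 128  # assumed latent size; the text does not state it


def predict_post_bmi(bmi_pre, slope=0.85, intercept=2.0):
    # Placeholder coefficients, NOT the fitted values from the study.
    return slope * bmi_pre + intercept


def sample_pair_conditions(rng):
    """Return generator inputs for one synthetic pre/post image pair."""
    z = [rng.gauss(0.0, 1.0) for _ in range(Z_DIM)]  # shared identity noise
    bmi_pre = rng.uniform(BMI_MIN, BMI_MAX)          # pre-liposuction BMI
    bmi_post = predict_post_bmi(bmi_pre)             # regression prediction
    # The same z object is reused so that only the BMI condition differs.
    return (z, bmi_pre), (z, bmi_post)


rng = random.Random(0)
pairs = [sample_pair_conditions(rng) for _ in range(2000)]
```

Each element of `pairs` holds the two conditioning inputs that would be fed to the trained generator to produce one pre-liposuction and one post-liposuction image of the same synthetic subject.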

To confirm whether the generated images corresponded to the input BMI value, we designed a model based on a pretrained ResNet34 architecture that predicts BMI using body images as input. ResNet34 [32] offers excellent image classification performance [33] and can be used to derive feature maps reflecting the characteristics of any given input images [32]. The BMI predictor was trained using BMI data from a total of 400 pre-liposuction and post-liposuction images. For training the BMI predictor, we utilized the Adam optimizer with a learning rate of 0.001, beta values of (0.9, 0.999), and a batch size of 16. The model was trained for 160 epochs, with the mean squared error (MSE) loss between the actual BMI and predicted BMI as the optimization criterion. To prevent overfitting, we applied a weight decay of 0.0001 for regularization. The dataset was split into 80% training and 20% validation to monitor generalization performance. A learning rate scheduler reduced the learning rate by a factor of 0.1 if the validation loss did not improve for 10 consecutive epochs. The model with the smallest validation MSE loss, achieved at epoch 160 with an MSE loss of 0.0082, was selected as the final BMI predictor. The ResNet34 model was implemented using PyTorch 1.13.1 and torchvision 0.14.1. Training was performed on an NVIDIA RTX 2060 GPU (NVIDIA Corporation, Santa Clara, CA, USA), taking approximately 4 h. The input images were resized to 256 × 256 pixels and normalized using ImageNet mean and standard deviation values to align with the pretrained ResNet34 weights. This model was used to evaluate the validity of data generated by CcGAN.

(Network Architecture: Pix2Pix) As our goal was to generate a post-liposuction image based on a pre-liposuction image, we utilized I2I. Most studies on I2I have employed convolutional neural networks with encoder–decoder architectures [18,34,35,36]. Pix2Pix is a representative model that builds upon the cGAN framework [37] to create realistic images via I2I [18]. Pix2Pix comprises a generator and a discriminator, which are trained in an adversarial manner, as shown in Figure 5.

The generator of the Pix2Pix model is implemented as a Convolutional Neural Network (CNN) based on a U-Net architecture consisting of eight encoder layers and eight decoder layers. The encoder progressively reduces the spatial resolution of the input image (256 × 256 pixels) to 1 × 1, using convolutional layers with filter sizes of 64, 128, 256, 512, 512, 512, 512, and 512. Each encoder layer applies a convolution operation with a kernel size of 4 × 4, stride of 2, and padding of 1. Batch normalization and LeakyReLU activation (with a slope of 0.2) are applied to all encoder layers except the first. The decoder mirrors the encoder’s structure and restores resolution using transposed convolutions with a kernel size of 4 × 4, stride of 2, and padding of 1. Each decoder layer uses ReLU activation and batch normalization, except for the last layer, which applies a tanh activation function without normalization to produce a 3-channel RGB output. Specifically, the decoder consists of eight layers with filter sizes of 512, 512, 512, 512, 256, 128, 64, and 3, corresponding to each upsampling stage. In accordance with the original Pix2Pix implementation, dropout with a rate of 0.5 is applied to the first three decoder layers during training to improve generalization and prevent overfitting. Skip connections are implemented via channel-wise concatenation between each encoder layer and its corresponding decoder layer, allowing spatial information and fine-grained details to be preserved throughout the reconstruction process. This encoder–decoder CNN structure allows feature maps and spatial information to be effectively transmitted without loss [38,39,40].

The discriminator of the Pix2Pix model is implemented as a CNN and follows the PatchGAN architecture, which evaluates the realism of overlapping local image patches instead of assessing the entire image at once. This design is particularly effective for capturing high-frequency visual details, such as contours and textures, that are essential for generating realistic images. The discriminator receives as input a 256 × 256 × 6 tensor formed by concatenating a pre-liposuction image and either a real or generated post-liposuction image along the channel dimension. The network consists of five convolutional layers with filter sizes of 64, 128, 256, 512, and 1, respectively. All layers use a 4 × 4 kernel, with the first three layers applying a stride of 2 and the last two a stride of 1. A padding of 1 is applied to all layers to maintain spatial consistency throughout the network. Each layer uses a LeakyReLU activation function (with a slope of 0.2), and instance normalization is applied from the second layer onward to stabilize training and improve generalization. The final output layer uses a sigmoid activation function to predict the authenticity of each local patch. Unlike traditional discriminators that produce a single real/fake score for the whole image, PatchGAN outputs a probability map (for example, 30 × 30) where each value represents the likelihood that a particular image patch is real. During training, the discriminator is trained on two types of image pairs: one consisting of a pre-liposuction and a real post-liposuction image and the other consisting of a pre-liposuction and a generator-produced post-liposuction image. This patch-based discrimination encourages the generator to produce more realistic local details throughout the image [18].
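The spatial sizes implied by the layer settings above can be checked with the standard output-size formulas for convolutions and transposed convolutions. The short sketch below only performs this arithmetic. The helper names `conv_out` and `deconv_out` are introduced here for illustration.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output width/height of a convolution (floor division, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, padding=1):
    """Output width/height of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# U-Net encoder: eight stride-2 convolutions starting from 256 x 256.
encoder_sizes = [256]
for _ in range(8):
    encoder_sizes.append(conv_out(encoder_sizes[-1]))

# U-Net decoder: eight stride-2 transposed convolutions starting from 1 x 1.
decoder_sizes = [encoder_sizes[-1]]
for _ in range(8):
    decoder_sizes.append(deconv_out(decoder_sizes[-1]))

# PatchGAN discriminator: strides 2, 2, 2, 1, 1 with 4 x 4 kernels, padding 1.
patch_sizes = [256]
for stride in (2, 2, 2, 1, 1):
    patch_sizes.append(conv_out(patch_sizes[-1], stride=stride))

print(encoder_sizes)  # [256, 128, 64, 32, 16, 8, 4, 2, 1]
print(decoder_sizes)  # [1, 2, 4, 8, 16, 32, 64, 128, 256]
print(patch_sizes)    # [256, 128, 64, 32, 31, 30]
```

The last entry of `patch_sizes` reproduces the 30 × 30 probability map mentioned for the PatchGAN output.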

The objective function of Pix2Pix comprises cGAN loss and reconstruction loss. Equation (1) defines the cGAN loss, where $x$ represents the pre-liposuction image, $y$ denotes the post-liposuction image, and $G$ is the generator. Therefore, $G(x)$ is a fake image produced by the generator using the input image $x$. In Equation (1), the error is calculated using cross-entropy. Equation (2) defines the reconstruction loss, which reduces the error at the pixel level: the L1 loss is the error between the image created by the generator and the real post-liposuction image. Equation (3) represents the final objective function based on Equations (1) and (2), where λ denotes a weight.
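For reference, the standard Pix2Pix formulation that matches this description can be written as follows. This is a sketch based on the original Pix2Pix objective, written without an explicit noise term to match the $G(x)$ notation used here. The discriminator symbol $D$ and the subscripts on $\mathcal{L}$ are assumptions.

$$
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D(x, G(x))\right)\right] \tag{1}
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y}\left[\lVert y - G(x) \rVert_{1}\right] \tag{2}
$$

$$
G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\, \mathcal{L}_{L1}(G) \tag{3}
$$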

Several studies have shown that Pix2Pix produces blurry predictions [13,19,20]. As the L1 loss in Equation (2) represents the average pixel-wise difference between the predicted and real images, the model tends to produce smoothed results to minimize this average [41]. Thus, the model produces blurry predictions, specifically around high-frequency parts such as edges. To address this issue, the perceptual loss function based on VGGNet [42] has been proposed as an alternative [41,43]. Therefore, we used perceptual loss, which considers the Euclidean distance between activation-layer outputs of VGG16 pretrained on the ImageNet dataset [33], together with L1 loss to represent the overall reconstruction loss.

In Equation (4), $\phi$ represents VGG16, and $\phi_j$ denotes the feature map derived from the $j$-th activation layer in the convolution layer. We used four ReLU layers in total. As shown in Equation (5), the perceptual loss is the sum of the Euclidean distances of the feature maps corresponding to the real and predicted post-liposuction images. In Equation (4), $W$, $H$, and $C$ denote the dimensions of the feature map. Equation (6) represents the final objective function used in this study, where λ signifies a weight.
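A formulation consistent with this description, following the commonly used feature-reconstruction form of the perceptual loss, is sketched below. The per-layer normalization, the squared norm, and the weight symbols $\lambda_{1}$ and $\lambda_{2}$ are assumptions rather than the exact expressions used in the study. $\mathcal{L}_{cGAN}$ and $\mathcal{L}_{L1}$ denote the cGAN and L1 losses of Equations (1) and (2), and $D$ denotes the discriminator.

$$
\ell_{j}(G(x), y) = \frac{1}{C_{j} H_{j} W_{j}} \left\lVert \phi_{j}(G(x)) - \phi_{j}(y) \right\rVert_{2}^{2} \tag{4}
$$

$$
\mathcal{L}_{perc}(G) = \sum_{j=1}^{4} \ell_{j}(G(x), y) \tag{5}
$$

$$
G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda_{1}\, \mathcal{L}_{L1}(G) + \lambda_{2}\, \mathcal{L}_{perc}(G) \tag{6}
$$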

4. Results

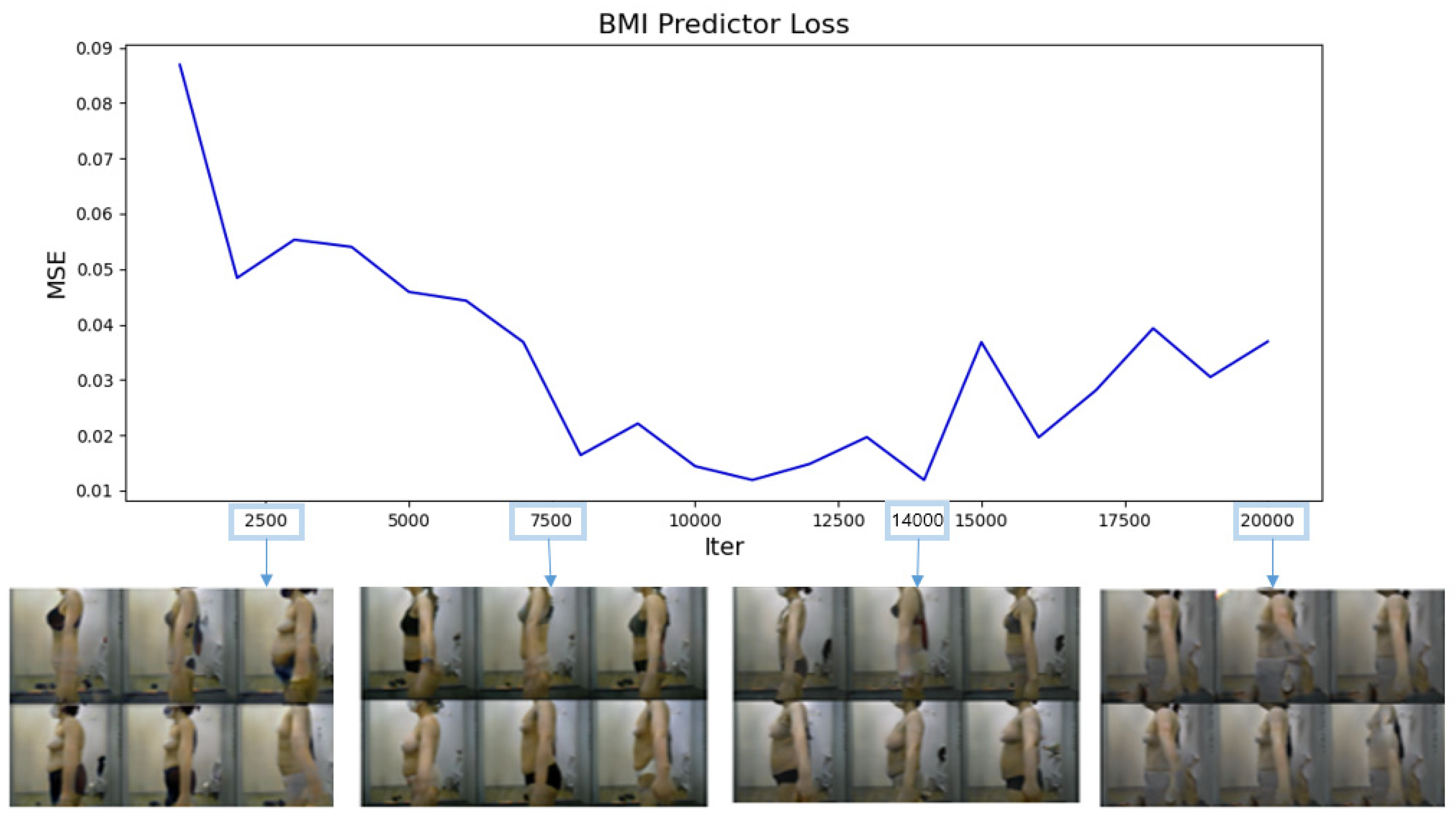

(Data Augmentation) To determine whether the CcGAN correctly generated body shape images according to the input BMI value, we used the ResNet34-based BMI predictor, as mentioned in Section 3. The CcGAN was trained over a total of 20,000 iterations, and the generator model was saved every 1000 iterations. For training the CcGAN, we used the Adam optimizer with a learning rate of 0.0002, beta values of (0.5, 0.999), and a batch size of 32, following standard GAN training practices [12]. Continuous conditional labels (BMI values) were embedded into a 128-dimensional vector to ensure stable training. The model was trained for approximately 286 epochs, equivalent to 20,000 iterations, given the dataset size and batch size. The training was conducted on an NVIDIA RTX 2060 GPU. The images generated by each saved model were evaluated for accuracy by the BMI predictor: we calculated the MSE loss between the predicted BMI and the actual (target) BMI. The closer the MSE loss is to 0, the more closely the generated image corresponds to the target BMI label.

Figure 5 presents a graph showing the MSE loss calculated every 1000 iterations, along with some of the images generated. During the initial iterations, the images were severely blurred, and the body type did not vary with BMI. The 14,000th-iteration generator model was deemed optimal as it achieved the smallest MSE loss (0.0119) and could generate appropriate body shapes for different BMI values. The 19,000th-iteration model suffered mode collapse [40] and could generate only similar images or visually abnormal images. Therefore, the 14,000th-iteration generator model was used for data augmentation.

Equation (7) presents the linear regression equation for calculating the BMI after liposuction based on the BMI before liposuction. Table 2 summarizes the metrics used to evaluate the effectiveness of the linear regression process. $R^2$ represents the explanatory power of the linear regression; the closer its value is to 1, the more accurate the prediction. The $R^2$ value was determined to be 0.912 in this study, which underscores the accuracy of our predictive model.

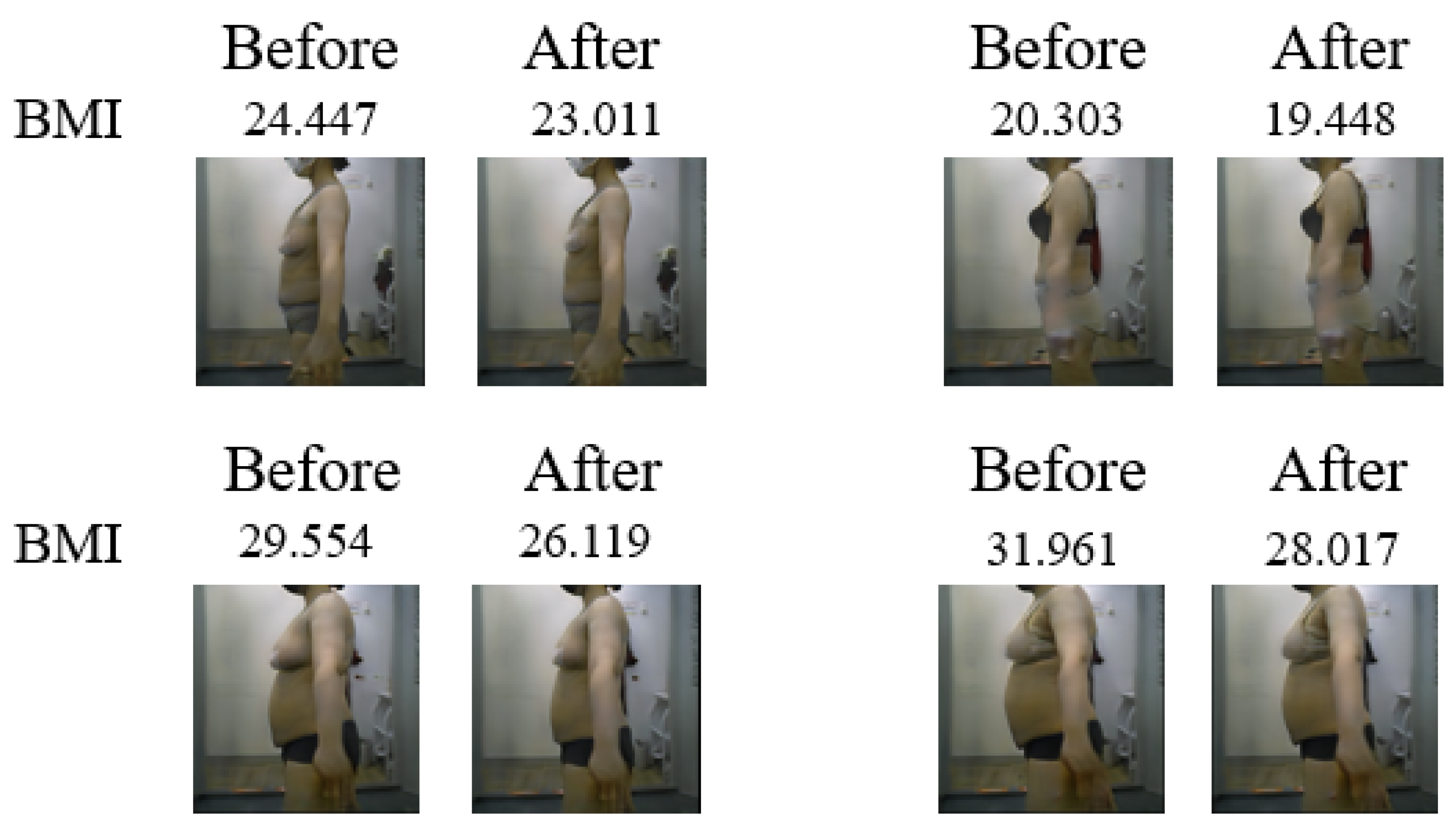

With a fixed noise z, the CcGAN generator created images of individuals before and after liposuction. Figure 6 shows the images generated for four different noise vectors z. When the BMI before liposuction was low, no significant difference could be observed between the images before and after liposuction. This occurs because the BMI values before and after liposuction share a linear relationship, whereby small BMI values are not significantly altered after liposuction. Accordingly, the larger the BMI before liposuction, the more pronounced the difference in body shape between the images before and after liposuction. The pre-liposuction and post-liposuction images in Figure 7 can be confirmed as depicting the same person for every z value.

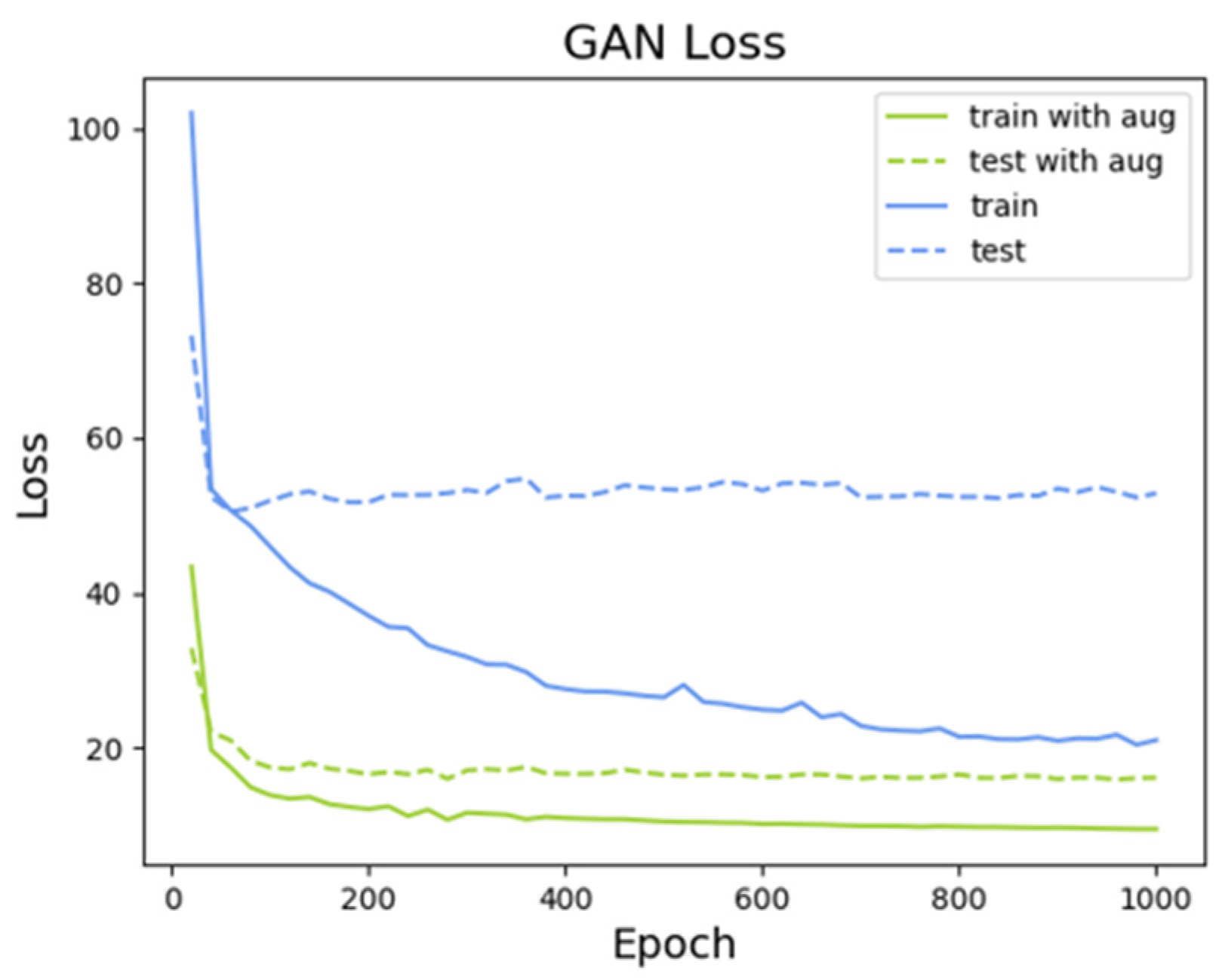

(Predicted Image after Liposuction) Figure 8 shows the reconstruction loss trend. Referring to the objective function in Equation (6), the two loss weights were set to 10 and 100 before training the model. Without data augmentation, as the epochs progressed, the model became increasingly dependent on the training data, which ultimately resulted in overfitting. Thus, when the model was evaluated on test data, its reconstruction loss showed an increasing trend, which indicates that it did not learn appropriately. Conversely, when data augmentation was performed, the model achieved stable learning.

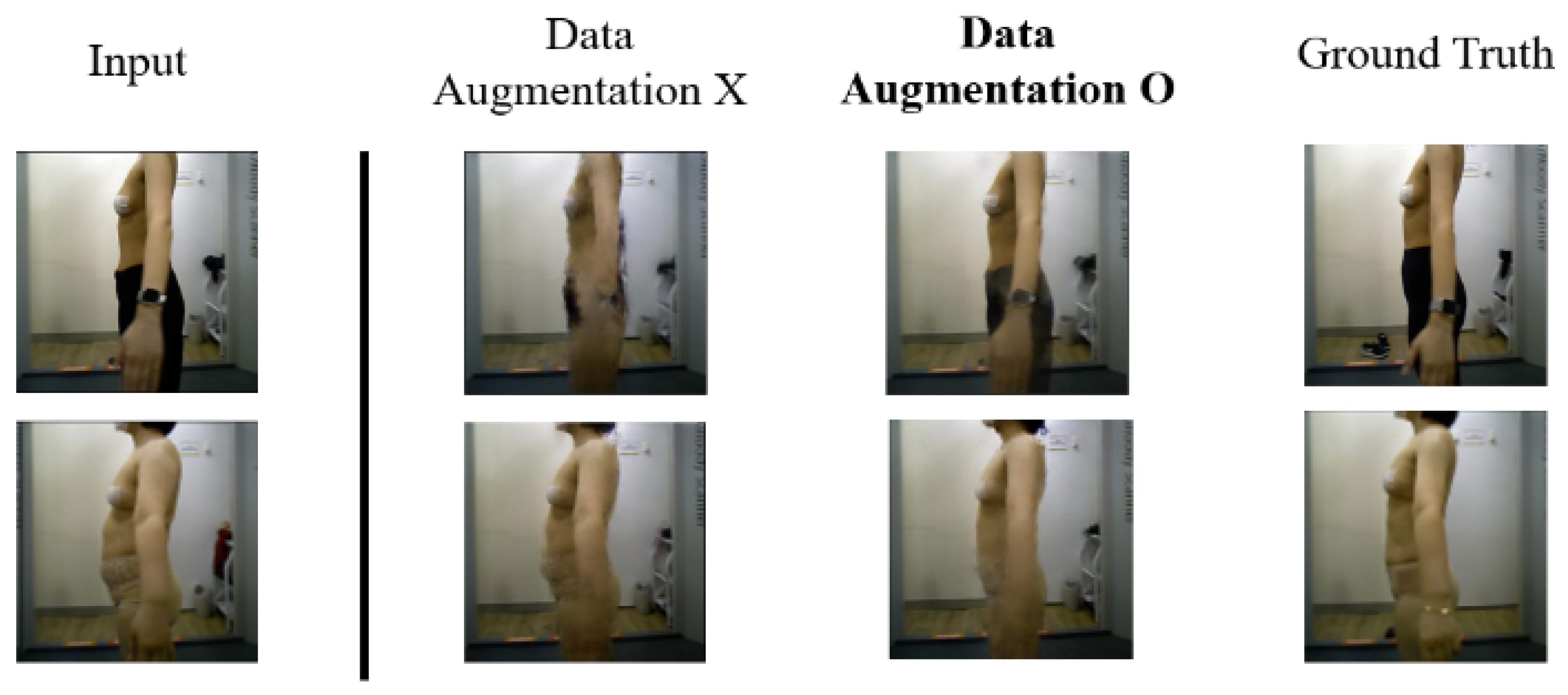

The images generated by the model without and with data augmentation were qualitatively compared. Figure 8 shows the reconstruction loss trend; the model trained for 860 epochs achieved the smallest error. Without data augmentation, the minimum error was reached early (at 200 epochs), but the model had not yet been trained properly at that point; hence, this 200-epoch model was trained for additional epochs. As seen in Figure 9, the images generated by the model without data augmentation appeared distorted; when data augmentation was performed, some blurring was still observed around the arm, but it was noticeably less than without data augmentation.

Pix2Pix was trained using the Adam optimizer with a learning rate of 0.0002 and beta values of (0.5, 0.999), following standard GAN training practices. The Adam optimizer was used as implemented in PyTorch 1.13.1. The batch size was set to 16, and the model was trained for up to 1000 epochs. The objective function combined cGAN loss, L1 loss, and perceptual loss, with the weights of the latter two terms empirically set to 100 and 10, respectively. All training was conducted on an NVIDIA RTX 2060 GPU. Input images were resized to 256 × 256 pixels and normalized using ImageNet statistics, specifically by subtracting the channel-wise mean [0.485, 0.456, 0.406] and dividing by the standard deviation [0.229, 0.224, 0.225]. These preprocessing settings ensured consistency with the pretrained VGG16 model (torchvision 0.14.1) used in the perceptual loss calculation. Together, these settings enabled stable training and ensured that the model captured both pixel-level and structural differences between pre- and post-liposuction images.
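The normalization step can be illustrated on a single RGB pixel. The sketch below assumes that 8-bit pixel values are first scaled to the range [0, 1] before the ImageNet mean and standard deviation are applied, which is the usual convention but is not stated explicitly in the text. The helper names are hypothetical.

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb_uint8):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize per channel."""
    return tuple(
        (value / 255.0 - mean) / std
        for value, mean, std in zip(rgb_uint8, IMAGENET_MEAN, IMAGENET_STD)
    )


def denormalize_pixel(normalized):
    """Invert normalize_pixel and round back to 8-bit integer values."""
    return tuple(
        round((value * std + mean) * 255.0)
        for value, mean, std in zip(normalized, IMAGENET_MEAN, IMAGENET_STD)
    )


pixel = (128, 64, 255)
normalized = normalize_pixel(pixel)
restored = denormalize_pixel(normalized)  # recovers the original pixel
```

The inverse transform is useful when saving or displaying network outputs that live in the normalized space.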

Figure 10 provides several examples of predicted post-liposuction images generated by the Pix2Pix model trained with L1 and perceptual loss.

The blurring around the arm was primarily caused by inconsistent arm placement during image acquisition. Although the camera height and distance were fixed, no explicit instructions were given regarding arm angle or position, leading to variations between subjects. As a result, the boundary between the arms and torso became ambiguous, especially in the abdominal region, reducing the clarity of the predicted contour. This represents a failure case where insufficient posture standardization during data collection negatively impacted prediction accuracy. The corresponding results are shown in Figure 11.

Another failure case was found in patients with relatively low BMI. In such cases, the physical change after liposuction is often minimal and difficult to detect visually. As a result, the model occasionally failed to generate images with noticeable differences, producing predictions nearly identical to the preoperative image. This limitation is illustrated in Figure 12.

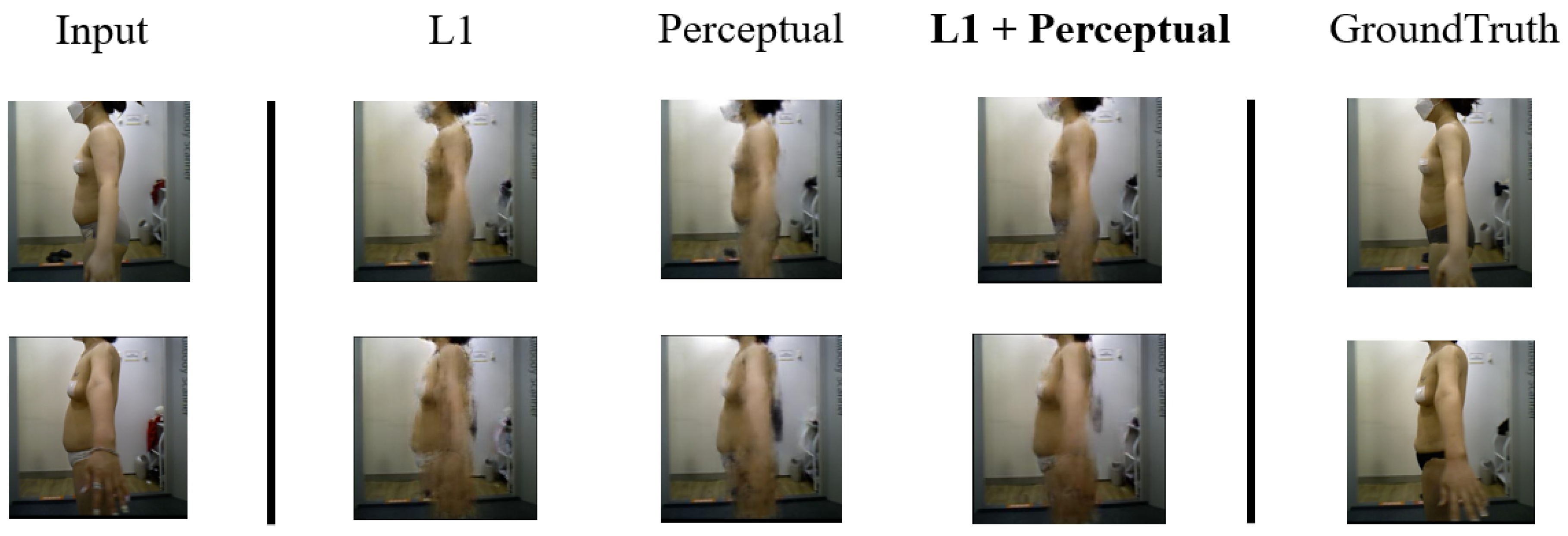

We compared the results of the models based on L1 loss and perceptual loss. The model with the smallest L1 loss was evaluated on the test dataset. As shown in Figure 9, when L1 loss was used, the images tended to be blurry. This occurred because edge details and other information were not considered, as the model focused solely on minimizing pixel-wise errors. The images generated by the model based on perceptual loss were less blurry. However, in the case of the first subject, when only perceptual loss was used, the body shape exhibited minimal change. By comparison, when L1 loss and perceptual loss were used together, the generated images were even less blurry, and body shape changes were accurately reflected. As shown in Figure 13, the comparison of predicted postoperative images generated by different loss functions demonstrates the differences clearly. The combination of L1 and perceptual loss results in more realistic and sharper predictions, better reflecting the structural and contour changes after liposuction.

(Quantitative Evaluation) We conducted four types of quantitative evaluation. First, we evaluated the accuracy of the generated images using the BMI predictor, as described earlier. This was measured by the mean squared error (MSE) between the predicted BMI and the ground truth BMI. A lower BMI MSE loss indicates higher prediction accuracy and consistency between the generated image and the actual outcome. Second, the similarity between two given images was determined based on the Learned Perceptual Image Patch Similarity (LPIPS) metric, which can mimic human evaluation. Specifically, the LPIPS metric calculates the similarity between the features of two images using AlexNet [44] pretrained with ImageNet [33]; when linear weights are incorporated, the evaluation resembles subjective judgment based on human cognition [43]. Since LPIPS measures dissimilarity, a lower LPIPS value indicates greater perceptual similarity between the generated and ground truth images and is therefore considered better. Third, we adopted the Structural Similarity Index Measure (SSIM) [45], a widely used metric in medical image translation, to evaluate the structural fidelity of the generated images. SSIM measures the similarity in structural information, luminance, and contrast between two images. A higher SSIM value (closer to 1.0) indicates better preservation of spatial structure and local textures. Fourth, we used the Peak Signal-to-Noise Ratio (PSNR) [46] to evaluate image clarity. PSNR measures the ratio between the maximum possible power of a signal and the power of corrupting noise. Higher PSNR values (typically above 30 dB) represent clearer and more accurate reconstructions with less distortion.
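Of the four metrics, MSE and PSNR are simple enough to compute by hand. The sketch below shows the standard PSNR definition, 10·log10(MAX² / MSE), on flattened lists of 8-bit pixel values. The pixel values are hypothetical, and LPIPS and SSIM are omitted because they require a pretrained network and windowed statistics, respectively.

```python
import math


def mse(reference, predicted):
    """Mean squared error between two equal-length pixel sequences."""
    return sum((r - p) ** 2 for r, p in zip(reference, predicted)) / len(reference)


def psnr(reference, predicted, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    error = mse(reference, predicted)
    if error == 0:
        return float("inf")
    return 10.0 * math.log10(max_value ** 2 / error)


# Hypothetical flattened pixel values (illustration only).
reference = [100, 120, 140, 160]
predicted = [101, 119, 141, 159]

error = mse(reference, predicted)   # every pixel is off by 1, so MSE = 1.0
score = psnr(reference, predicted)  # 10 * log10(255^2), about 48.13 dB
```

Because PSNR depends only on the MSE and the pixel range, a lower pixel-wise error always yields a higher PSNR, whereas LPIPS and SSIM can rank the same images differently.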

Table 3 lists the results of the quantitative evaluation. Among the baseline methods, the L1 + Augmentation model achieved the lowest BMI MSE loss (0.0050), indicating high consistency between predicted and actual BMI values. However, this model yielded relatively high LPIPS (0.2861) and lower SSIM and PSNR scores, suggesting that the generated images lacked visual sharpness and structural clarity—likely due to the tendency of L1 Loss to produce smooth but blurry outputs.

In contrast, Perceptual + Augmentation achieved the lowest LPIPS (0.2820) among the baselines, reflecting improved perceptual similarity to ground truth images. However, this method showed the highest BMI MSE loss (0.0118), indicating reduced consistency in predicted BMI. Although it performed best among baseline methods in terms of LPIPS, its performance was still inferior to our proposed model, which achieved an even lower LPIPS of 0.2709 while also improving structural fidelity and BMI consistency. L1 + Perceptual, trained without augmentation, showed moderate performance but the worst LPIPS (0.3145) and relatively lower PSNR.

Our proposed model, L1 + Perceptual + Augmentation, achieved a strong balance across all metrics. It yielded a low BMI MSE loss (0.0057), the lowest LPIPS (0.2709) overall, and the highest SSIM (0.7814) and PSNR (40.34), indicating that it not only maintained BMI consistency but also improved perceptual realism and image quality. This result demonstrates that combining L1 and perceptual loss with augmentation effectively integrates the advantages of both loss types while improving generalization.

5. Discussion and Conclusions

With the continued development of deep learning, I2I has progressively gained importance in the medical field, with several excellent algorithms being developed. While some methods utilize medical data, most techniques involve using CT or X-ray data, which limits their accessibility to the general public [15, 16]. This study introduced a model to predict post-liposuction appearance using RGB images, which can be easily captured by potential patients themselves. The proposed model can predict how the body shape will change after liposuction based on the subject’s body measurements. This enables intuitive predictions, which would not be possible when predicting the results for one individual based on those of other people. To the best of our knowledge, this study represents the first attempt at predicting post-liposuction appearance using body shape data. Despite being designed around liposuction, the proposed technique is expected to be useful for predicting changes caused by various other types of surgery that alter appearance and body shape. However, as few studies have sought to exploit such possibilities thus far, active research should be conducted on postoperative prediction using RGB images.

Our method combines data augmentation using a Continuous Conditional GAN (CcGAN) with a Pix2Pix-based image-to-image translation model. The effectiveness of this approach was verified through quantitative evaluation using four metrics: BMI MSE loss, LPIPS, SSIM, and PSNR. Our proposed model, which integrates both L1 and perceptual loss functions with CcGAN-based augmentation, achieved the lowest LPIPS (0.2709), highest SSIM (0.7814), and highest PSNR (40.34) among all compared methods, together with a low BMI MSE loss (0.0057) that was second only to that of the L1 + Augmentation model (0.0050) (Table 3). These results indicate that the proposed model generates images that are not only quantitatively consistent with BMI predictions but also visually realistic and structurally faithful.

Notwithstanding its benefits, the proposed method has certain limitations. For instance, the BMI predictor used to evaluate the CcGAN was based on ResNet34, and the model with the lowest error was used for data augmentation. Thus, the selection of the optimal model was entirely dependent on the BMI predictor’s evaluation. In addition, as described in Section 3, blurring was frequently observed in the abdominal region, which was primarily attributed to the inconsistency in arm positioning during image acquisition. Although the images were captured from a fixed distance and camera height, no clear instructions were provided regarding the angle, position, or spacing of the arms. As a result, subjects adopted different poses: some with arms hanging naturally close to the body and others with arms slightly spread apart. This inconsistency in arm placement made the boundary between the abdomen and the arms ambiguous, causing loss of contour clarity or distortion around the abdominal area. Such variations disrupted the model’s ability to learn the structural features of the abdomen consistently and ultimately resulted in blurring or smearing artifacts in the predicted images. To address this issue, future data collection protocols should include explicit pose guidelines, including the angle of the arms, the width between the shoulders, and the distance between the arms and torso. Providing standardized posture instructions to participants prior to image capture will help ensure greater consistency across samples, thereby enabling the model to more accurately learn and reproduce key body regions, particularly the abdominal area.

This study also faced a limitation from the use of only a single-view input—the left lateral image of the upper body. While this viewpoint effectively captures changes in the abdominal and flank areas, it is insufficient for ensuring anatomical consistency from other angles, such as frontal or dorsal views. This limitation may restrict the model’s ability to learn comprehensive morphological changes. Future studies are expected to incorporate multi-view image inputs, including front, side, and back views, to enable more coherent and spatially consistent predictions of body contour changes. Furthermore, conducting quantitative validations for each viewpoint will help improve anatomical fidelity and enhance the generalizability of the model.

A further limitation was observed in patients with relatively low BMI; in such cases, the physical changes before and after liposuction tend to be subtle and less visually noticeable. As a result, the model sometimes failed to capture these minimal differences, generating output images with little to no perceived change. This suggests that the model’s performance may be less effective in cases where the visual impact of liposuction is inherently limited, which poses a challenge for prediction based on subtle morphological cues.

Another critical factor that was not explicitly addressed in this study is the potential variation in fat distribution patterns between genders. Numerous clinical and physiological studies have shown that males and females tend to accumulate and reduce fat differently—males often in the abdominal area and females more evenly distributed across the hips, thighs, and waist. Since our model was trained using images of patients without explicitly separating by gender, these biological differences may influence both the training dynamics and prediction accuracy. Particularly, morphological changes that are subtle or anatomically different because of gender-specific fat localization may not be fully captured by a single unified model. Therefore, it is important to acknowledge that the applicability of the proposed model may vary depending on gender, and future research should consider stratifying training data or building gender-specific models to enhance accuracy and interpretability. Incorporating such considerations will also help define the boundaries of the model’s generalization and clarify its clinical utility.

In addition, the dataset used in this study does not include patients with clinically extreme BMI values (i.e., BMI ≥ 40 or BMI < 16); consequently, the model’s generalizability to such cases could not be evaluated. Future research should therefore collect data from patients who are morbidly obese or severely underweight to more rigorously assess the clinical applicability and robustness of the proposed model.

Moreover, the training dataset used in this study was composed entirely of Korean individuals. While this allows the model to learn the body characteristics and liposuction-related changes typical of this population, it also raises concerns about the model’s ability to generalize to individuals from other ethnicities and body types. Factors such as body shape distribution, fat localization, and skin tone can vary significantly across different populations, potentially reducing prediction accuracy when the model is applied outside the original data domain.

To improve the model’s generalizability and applicability, future studies should aim to collect more diverse datasets that include a broader range of ethnic groups, body shapes, and demographic characteristics. In addition, applying domain adaptation techniques or style transfer-based approaches may help bridge the distribution gap between datasets and enhance model robustness across varying populations.

Furthermore, BMI, a common index for classifying obesity [27], was used as the conditional variable for data augmentation. However, while approximate body shapes can be predicted based on BMI, its accuracy may not be sufficient because it depends solely on height and weight [26, 47, 48]. Therefore, more accurate data could be generated if body fat measurements obtained by Dual-energy X-ray Absorptiometry (DXA) [49], the gold standard for body fat mass evaluation, were used as the conditional variable instead of BMI. Finally, since I2I based on body shape has not been performed previously, we had no reference to assess whether the error of our model is within an allowable range. Therefore, we had to rely on qualitative evaluations mimicking human judgment instead.
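The limitation of BMI as a conditional variable follows directly from its definition, weight in kilograms divided by the square of height in metres. The short sketch below illustrates this; the function name `bmi` and the example values are hypothetical and are not drawn from the study's data.

```python
def bmi(weight_kg, height_m):
    """Body mass index: weight (kg) divided by height (m) squared."""
    if height_m <= 0:
        raise ValueError("height must be positive")
    return weight_kg / height_m ** 2


# Two hypothetical subjects with the same height and weight receive the
# same BMI, regardless of how their body fat is distributed.
subject_a = bmi(70.0, 1.75)
subject_b = bmi(70.0, 1.75)
print(round(subject_a, 2), subject_a == subject_b)
```

Because the formula has no term for fat mass or its location, two bodies with visibly different shapes can share one conditioning value, which is why a direct body fat measurement could provide a more informative condition.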

The Pix2Pix-based I2I model proposed herein enables patients to predict their appearance after liposuction based on their own body shape data. Building on this approach, we developed a deep learning-based prediction model trained on a paired dataset of pre- and post-liposuction RGB images. This model enables personalized appearance prediction from preoperative images, making the process more intuitive and accessible. To combat the lack of available data, we performed data augmentation using CcGAN, which employs continuous numerical values as conditional variables. The model’s predictive performance was improved by generating body shape data based on BMI values as inputs. Further, by leveraging Pix2Pix’s reconstruction loss along with L1 loss and perceptual loss, our model outputs less blurry images as it minimizes both pixel-wise errors and deviations in the overall image structure. Because our model uses only RGB images rather than professional medical data, it can be utilized by the public to predict their post-surgery appearance without having to visit a hospital or any other specialized medical facility. Therefore, the proposed method can assist people in making informed decisions about whether to undergo liposuction.

In future work, we plan to explore the applicability of advanced generative models such as StyleGAN3, diffusion-based models, and transformer-based conditional generators, which may better accommodate continuous condition variables such as BMI. Although models such as DCAN, cGAN, PConv-GAN, StyleGAN, and StyleGAN2 were not adopted in this study because of specific limitations (segmentation-focused design, support for only categorical conditions, specialization in inpainting, reliance on latent space optimization, or the need for large-scale datasets), we recognize the potential value of these architectures in related domains. In future research, we aim to revisit these models by applying dataset expansion, customized training strategies, and architectural modifications to assess whether they can be effectively adapted to our paired RGB image setting. This may lead to improvements in image realism, training stability, and generalization across diverse populations. Ultimately, by integrating more advanced generative frameworks into clinical applications, we hope to enhance the robustness, scalability, and personalized utility of postoperative prediction systems in medical practice.