Stable Variable Fixation for Accelerated Unit Commitment via Graph Neural Network and Linear Programming Hybrid Learning

Abstract

1. Introduction

1.1. Acceleration via LP Relaxation

1.2. Acceleration via Machine Learning

1.3. Graph Neural Networks for MIP and UCP

1.4. Contributions

2. Preliminaries

2.1. Three-Binary UCP Formulations

- 1. Objective function: The objective function represents the total cost of the power system, which comprises the production cost and the startup cost.

- 2. Startup cost: In the 3-bin formulation, the startup cost can be expressed with mixed-integer linear constraints. The cost is determined by comparing the time since the unit was last shut down with a threshold representing the cold-start duration. Because the UCP must account for the initial status of generating units, the classification of hot and cold starts in the early time periods may depend on residual states from the previous day. As a result, the standard rules for determining startup types cannot be applied uniformly across all time periods. In particular, when the two conditions on the initial state are satisfied, the startup in that period is treated as a hot start owing to the influence of the unit's initial state. Otherwise, the classification of the startup is determined by the summation term.

- 3. Unit generation limits

- 4. Power balance constraint

- 5. System spinning reserve requirement

- 6. Ramp rate limits

- 7. Minimum up/downtime constraints: An auxiliary time index is used to enforce the minimum up/downtime constraints. Taking Equation (9) as an example, if the unit is in the off state in the current period, then a startup in any earlier period inside the minimum-uptime window would imply that the unit shut down before completing its required minimum uptime, thereby violating the constraint.

- 8. Initial status of units

- 9. State constraints: State constraints are introduced in the 3-bin formulation to link the startup, shutdown, and commitment state variables.
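As a minimal sketch of how the state constraints and the minimum-uptime logic interact, the following Python snippet derives startup and shutdown indicators from a commitment schedule and then checks both rules. It assumes the common three-binary convention in which the change in commitment status equals startup minus shutdown; the names `u`, `v`, `w`, `u0`, and `min_up` are illustrative and are not taken from the paper, and the unit's initial online/offline history is ignored for brevity.

```python
def derive_start_stop(u, u0):
    """Derive startup (v) and shutdown (w) indicators from commitment states u.

    u0 is the (assumed) commitment state just before the first period.
    """
    v, w = [], []
    prev = u0
    for cur in u:
        v.append(1 if cur == 1 and prev == 0 else 0)
        w.append(1 if cur == 0 and prev == 1 else 0)
        prev = cur
    return v, w


def satisfies_state_constraints(u, v, w, u0):
    """Check u[t] - u[t-1] == v[t] - w[t] for every period."""
    prev = u0
    for cur, vt, wt in zip(u, v, w):
        if cur - prev != vt - wt:
            return False
        prev = cur
    return True


def satisfies_min_up(u, v, min_up):
    """After a startup in period t, the unit must stay on for min_up periods
    (truncated at the end of the horizon)."""
    horizon = len(u)
    for t in range(horizon):
        if v[t] == 1:
            if any(u[k] == 0 for k in range(t, min(horizon, t + min_up))):
                return False
    return True


u0 = 0
u = [0, 1, 1, 1, 0, 0, 1, 0]          # hypothetical 8-period schedule
v, w = derive_start_stop(u, u0)
print(v, w)
print(satisfies_state_constraints(u, v, w, u0))
print(satisfies_min_up(u, v, min_up=3))  # the second startup is shut down too early
```

In this toy schedule the unit starts in the second period and runs for three periods, which satisfies a three-period minimum uptime, but the second startup is followed by a shutdown one period later, so the check fails.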

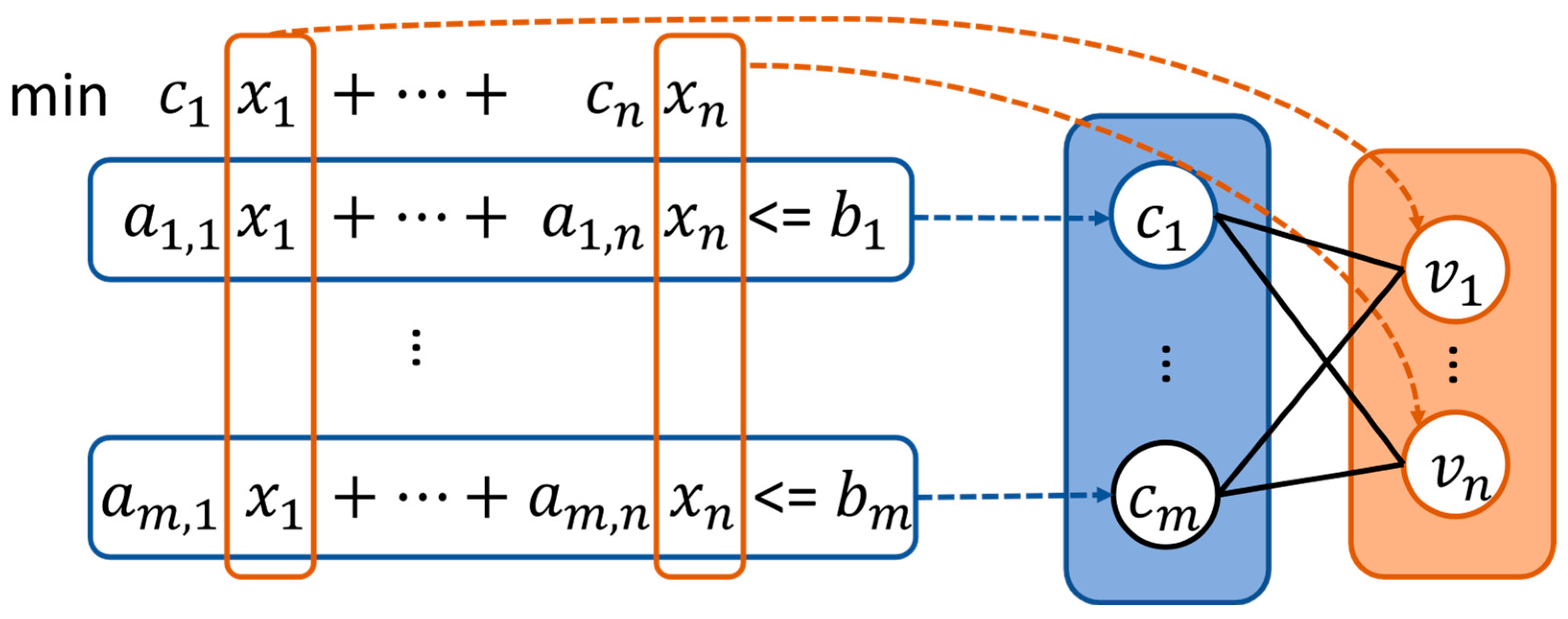

2.2. Bipartite Graph Representation for UCPs

- Variables: Each variable node carries a variable embedding and corresponds to one decision variable. The variables are partitioned into binary variables (e.g., commitment, startup, and shutdown variables) and continuous variables (e.g., power output and startup cost).

- Constraints: Each constraint node carries a constraint embedding and corresponds to one constraint, e.g., one of the operational rules formalized in Equations (2)–(12).

- Edges: An edge connects a variable node and a constraint node whenever the variable appears in the constraint with a nonzero coefficient. Each edge carries an edge embedding.
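The construction above can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' implementation: the function `build_bipartite_graph`, the dense list-of-lists matrix `A`, and the choice to normalize objective coefficients by their largest absolute value are all assumptions. The node and edge features follow the feature descriptions listed in the paper's feature table.

```python
from dataclasses import dataclass


@dataclass
class BipartiteGraph:
    var_features: list   # one feature vector per variable node
    con_features: list   # one feature vector per constraint node
    edges: list          # (constraint index, variable index, coefficient)


def build_bipartite_graph(A, b, c, is_binary, is_equality, lp_solution):
    """Build a variable-constraint bipartite graph from a small dense MIP."""
    n_con, n_var = len(A), len(c)
    # One edge per nonzero coefficient of the constraint matrix.
    edges = [(i, j, A[i][j]) for i in range(n_con) for j in range(n_var)
             if A[i][j] != 0]
    c_scale = max(abs(cj) for cj in c) or 1.0  # assumed normalization

    var_features = []
    for j in range(n_var):
        col = [a for _, jj, a in edges if jj == j]
        var_features.append([
            c[j] / c_scale,                       # normalized objective coefficient
            len(col),                             # node degree
            sum(col) / len(col) if col else 0.0,  # average coefficient
            max(col, default=0.0),                # maximum coefficient
            min(col, default=0.0),                # minimum coefficient
            1.0 if is_binary[j] else 0.0,         # binary indicator
            lp_solution[j],                       # LP relaxation value
        ])

    con_features = []
    for i in range(n_con):
        row = [a for ii, _, a in edges if ii == i]
        con_features.append([
            sum(row) / len(row) if row else 0.0,  # average coefficient
            len(row),                             # node degree
            b[i],                                 # right-hand side
            1.0 if is_equality[i] else 0.0,       # equality indicator
        ])
    return BipartiteGraph(var_features, con_features, edges)


# Hypothetical toy problem: 2 constraints, 3 variables.
A = [[1, 1, 0],
     [0, 2, -1]]
graph = build_bipartite_graph(
    A, b=[1, 0], c=[4, 2, 0],
    is_binary=[True, True, False],
    is_equality=[False, True],
    lp_solution=[0.5, 0.5, 1.0],
)
print(len(graph.edges), graph.var_features[1], graph.con_features[1])
```

For problems of the size reported in the experiments, a sparse matrix representation would be the natural replacement for the dense lists used here.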

2.3. Stable Binary Variables

3. Framework

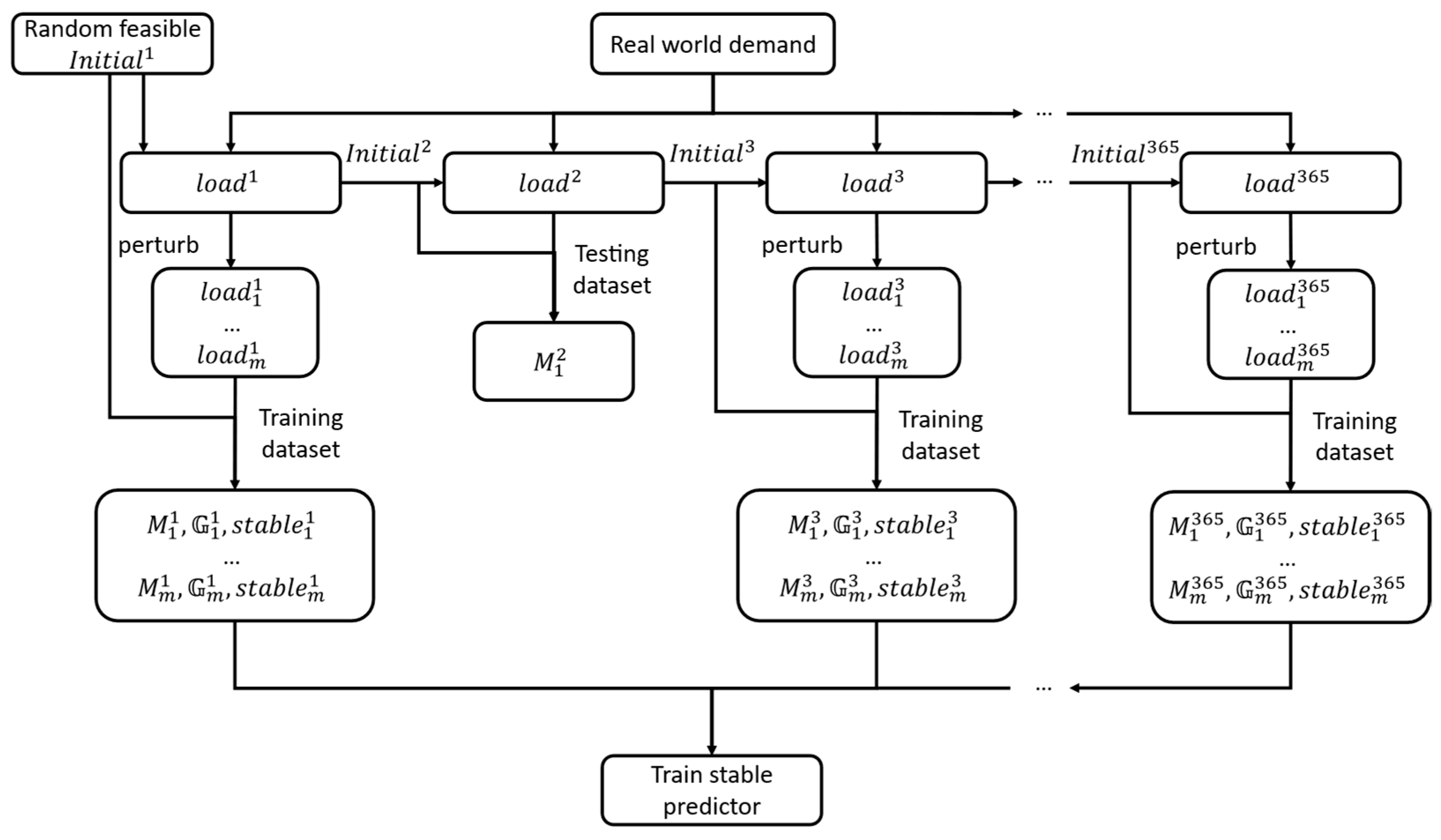

3.1. Dataset Collection

3.2. Offline Phase

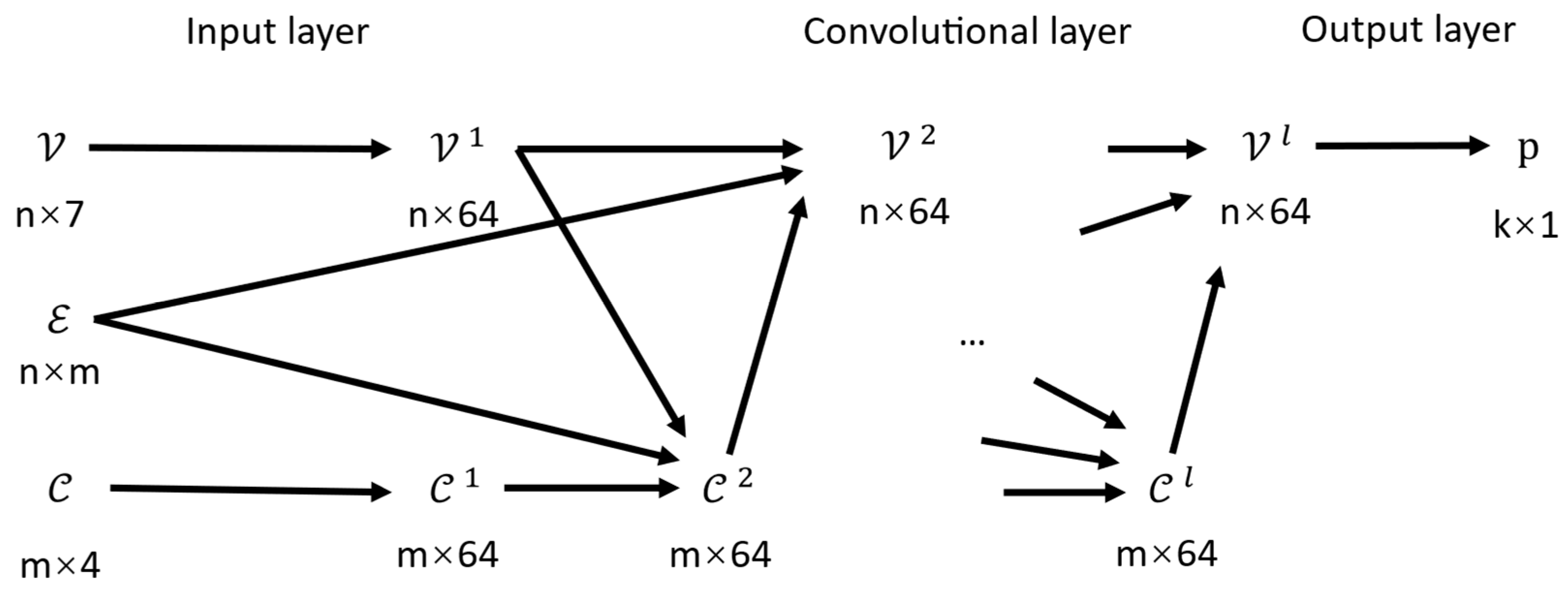

- Input layer: This layer consists of a regularization layer, fully connected layers, and activation functions. It takes the raw node embeddings as input and maps them to higher-dimensional latent vectors.

- Convolutional layer: Information is exchanged between neighboring variable and constraint nodes through multiple convolutional layers. The update functions are multilayer perceptrons (MLPs) with ReLU activation functions, combined with a layer normalization function.

- Output layer: This layer consists of fully connected layers and a sigmoid activation function, which maps the output to a scalar in the range [0, 1] representing the stability confidence of each variable. Depending on the task setting, the stability predictions of specific types of binary variables can be retained selectively (for example, only the commitment variables, or all binary variables). The remaining variables are discarded from further consideration.
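To make the three layers concrete, the snippet below sketches one round of generic bipartite message passing followed by a sigmoid output. It does not reproduce the paper's exact update rule: the single linear-plus-ReLU maps standing in for the MLPs, the placement of layer normalization on the aggregated messages, the random weights, and every name (`half_convolution`, `W_msg_c`, and so on) are assumptions made for illustration. Random latent vectors stand in for the output of the input layer.

```python
import math
import random


def linear(W, x):
    """Matrix-vector product for a weight matrix stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(x):
    return [max(0.0, xi) for xi in x]


def layer_norm(x, eps=1e-5):
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]


def half_convolution(targets, sources, edges, W_msg, W_upd):
    """Update each target node from its neighbors.

    edges holds (target index, source index, coefficient) triples.
    """
    d = len(targets[0])
    agg = [[0.0] * d for _ in targets]
    for ti, si, coef in edges:
        # Message built from both endpoint embeddings and the edge coefficient.
        msg = relu(linear(W_msg, targets[ti] + sources[si] + [coef]))
        agg[ti] = [a + m for a, m in zip(agg[ti], msg)]
    # Combine the normalized aggregate with the node's own embedding.
    return [relu(linear(W_upd, layer_norm(a) + h)) for a, h in zip(agg, targets)]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d = 4  # latent dimension (hypothetical)
var_h = [[rng.random() for _ in range(d)] for _ in range(3)]
con_h = [[rng.random() for _ in range(d)] for _ in range(2)]
con_var_edges = [(0, 0, 1.0), (0, 1, 1.0), (1, 1, 2.0), (1, 2, -1.0)]
var_con_edges = [(j, i, a) for i, j, a in con_var_edges]

W_msg_c, W_upd_c = random_matrix(d, 2 * d + 1, rng), random_matrix(d, 2 * d, rng)
W_msg_v, W_upd_v = random_matrix(d, 2 * d + 1, rng), random_matrix(d, 2 * d, rng)
w_out = [rng.uniform(-0.5, 0.5) for _ in range(d)]

# Variables -> constraints, then constraints -> variables.
con_h = half_convolution(con_h, var_h, con_var_edges, W_msg_c, W_upd_c)
var_h = half_convolution(var_h, con_h, var_con_edges, W_msg_v, W_upd_v)

# Sigmoid output: one stability confidence per variable node.
scores = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(w_out, hv))))
          for hv in var_h]
print(scores)
```

With untrained random weights the scores carry no meaning; the sketch only shows the data flow from node embeddings to a per-variable confidence.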

3.3. Online Phase

3.3.1. Learning-Guided Fixing

- the three binary variable types are the commitment, startup, and shutdown variables of unit g in time period t;

- the index ranges are given by the set of units and the set of time periods;

- the stable predictor output (stability confidence score) is defined for each index and each variable type;

- the LP relaxation value is defined for each index and each variable type;

- the stability threshold determines which variables are considered stable, based on their stability confidence score;

- the integrality tolerance threshold ensures that the LP relaxation value is sufficiently close to a binary value.

- Hard fix: The hard-fix set contains the indices of the variables that are hard-fixed. These variables are forced to a fixed value, namely the rounded LP relaxation value at the corresponding time step. For a given time period of a unit, if all three binary variables (commitment, startup, and shutdown) are stable and there are no startup or shutdown actions in the neighboring time steps, then these variables are hard-fixed to their rounded LP relaxation values. Note that a partial binary solution built from rounded LP relaxation values does not guarantee feasibility.

- Soft fix: The soft-fix set contains the indices of the variables that are soft-fixed. Each of these variables is initialized to its rounded LP relaxation value, but the solver may adjust the value during the optimization process.
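The hard-fix and soft-fix rules can be sketched as follows. This is one reading of the description above, under stated assumptions: a variable counts as stable when its confidence is at least the stability threshold and its LP value lies within the integrality tolerance of 0 or 1; the neighborhood is a symmetric window of `delta` periods that includes the period itself; and the soft-fix set is taken to be the stable variables that are not hard-fixed. The function `classify_fixes`, its parameter names, and the threshold values are hypothetical.

```python
TYPES = ("u", "v", "w")  # commitment, startup, shutdown (illustrative labels)


def rounded(x):
    """Round an LP relaxation value to the nearest binary value."""
    return 1 if x >= 0.5 else 0


def classify_fixes(conf, lp, alpha, eps, delta):
    """Split variables into hard-fixed (g, t) slots and soft-fixed variables.

    conf[k][g][t] and lp[k][g][t] hold the confidence score and the LP value
    of variable type k for unit g in period t.
    """
    units = range(len(lp["u"]))
    horizon = len(lp["u"][0])
    stable = {
        (k, g, t)
        for k in TYPES for g in units for t in range(horizon)
        if conf[k][g][t] >= alpha
        and min(lp[k][g][t], 1.0 - lp[k][g][t]) <= eps
    }
    hard = set()
    for g in units:
        for t in range(horizon):
            if not all((k, g, t) in stable for k in TYPES):
                continue
            window = range(max(0, t - delta), min(horizon, t + delta + 1))
            # Skip slots that have a startup or shutdown action nearby.
            if any(rounded(lp[k][g][s]) for k in ("v", "w") for s in window):
                continue
            hard.add((g, t))
    soft = {(k, g, t) for (k, g, t) in stable if (g, t) not in hard}
    return hard, soft


# Hypothetical single-unit, six-period example.
lp = {
    "u": [[0.0, 0.0, 1.0, 1.0, 1.0, 1.0]],
    "v": [[0.0, 0.0, 1.0, 0.0, 0.0, 0.0]],
    "w": [[0.0, 0.0, 0.0, 0.0, 0.0, 0.0]],
}
conf = {
    "u": [[0.95, 0.95, 0.95, 0.95, 0.95, 0.50]],
    "v": [[0.95] * 6],
    "w": [[0.95] * 6],
}
hard, soft = classify_fixes(conf, lp, alpha=0.9, eps=0.05, delta=1)
print(sorted(hard), len(soft))
```

With a solver such as Gurobi, a hard fix could be applied by setting a variable's lower and upper bounds to the rounded value and a soft fix by supplying the rounded value as a MIP start; this mapping is a suggestion, not a statement of how the authors implemented it.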

3.3.2. Learning-Guided Branching

4. Numerical Experiments

4.1. Case Settings

- 1.

- 2. Demand setting: There are 365 daily load profiles, covering the first to the 365th day of the year, taken from [28]. To match the loads to the target systems, the loads for the 365 days are mapped to the capacity of the corresponding system. Specifically, for each system, the upper and lower bounds of the target load interval are obtained by scaling the system's maximum generation capacity by two factors. The loads are then normalized and rescaled to this interval to fit the target system. A simple rule is applied for dataset partitioning: days ending with the digit seven are assigned to the validation set, days ending with two are assigned to the testing set, and the remaining days are assigned to the training set. Each load is additionally perturbed to expand the dataset.

- 3. Benchmark setting: Gurobi 11.0 was used with Threads set to 1 and MIPGap set to 1 × 10⁻⁶. All other settings were kept at their default values.
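The demand mapping and the dataset split can be sketched as below. The min-max form of the normalization, the function names, and in particular the scaling factors 0.4 and 0.9 are assumptions chosen for illustration; the paper's actual factors are not reproduced here, and the perturbation step is omitted.

```python
def map_loads(loads, capacity, low_factor, high_factor):
    """Rescale loads linearly into [low_factor, high_factor] * capacity."""
    lower, upper = low_factor * capacity, high_factor * capacity
    lo, hi = min(loads), max(loads)
    if hi == lo:
        raise ValueError("loads must not all be identical")
    return [lower + (x - lo) / (hi - lo) * (upper - lower) for x in loads]


def split_for_day(day):
    """Assign a day of the year (1..365) to a dataset by its last digit."""
    last_digit = day % 10
    if last_digit == 7:
        return "validation"
    if last_digit == 2:
        return "test"
    return "train"


# Hypothetical source loads and system capacity.
mapped = map_loads([100.0, 150.0, 200.0], capacity=1000.0,
                   low_factor=0.4, high_factor=0.9)
print(mapped)

counts = {"train": 0, "validation": 0, "test": 0}
for day in range(1, 366):
    counts[split_for_day(day)] += 1
print(counts)
```

Under this rule the 365 days split into 292 training, 36 validation, and 37 testing days before perturbation.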

4.2. Accuracy Comparison for Different Binary Variables

4.3. Ablation Study

5. Conclusions

- 1. Variable Stability Classification: We design a variable fixation strategy that integrates GNN-predicted stability and the LP root relaxation to guide the hard and soft fixation of binary variables. Stable variables are fixed to shrink the search space, while temporal flexibility is preserved for variables with uncertain stability. This layered fixation mechanism significantly reduces suboptimality, as confirmed by ablation studies across 50-, 100-, and 1080-unit systems.

- 2. Utilization of Multi-Type Binary Variables: Instead of focusing solely on commitment variables, we incorporate startup and shutdown variables into both the MIP formulation and the learning framework. These auxiliary variables improve the stability inference of commitment variables, which enables safer and more accurate hard fixation and thereby reduces suboptimality. They also introduce additional stable candidates for soft fixation, which accelerates the solution process by further reducing the search space. Experiments show that this joint modeling approach consistently improves primal gaps and solving times over single-variable baselines, particularly in large-scale settings.

- 3. Stability-Aware Branching Prioritization: We leverage predicted variable stability to guide the branching process in branch-and-bound. By assigning a higher branching priority to unstable variables, the solver is able to explore critical decisions earlier, tighten bounds faster, and accelerate convergence. This strategy achieved faster gap reduction and earlier convergence.
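The idea of giving unstable variables a higher branching priority can be sketched with a simple mapping from confidence to an integer priority. The linear mapping, the number of levels, and the variable names are assumptions for illustration; the paper's exact priority rule is not reproduced here.

```python
def branching_priorities(confidence, levels=10):
    """Map stability confidence in [0, 1] to an integer branching priority.

    Lower confidence (less stable) yields a higher priority, so the solver
    branches on uncertain variables first.
    """
    return {name: int(round((1.0 - c) * levels))
            for name, c in confidence.items()}


# Hypothetical confidence scores for three binary variables.
confidence = {"u[1,3]": 0.98, "u[1,4]": 0.60, "u[2,3]": 0.10}
priorities = branching_priorities(confidence)
for name in sorted(priorities, key=priorities.get, reverse=True):
    print(name, priorities[name])
```

In Gurobi, integer priorities of this kind could be passed through the `BranchPriority` variable attribute, where larger values are branched on first; whether the authors used this attribute is not stated in the text above.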

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| Indices: | |
| g | Index of unit |
| t | Index of time period |
| Sets: | |
| | Set of stable binary variables |
| | Set of hard fix binary variables |
| | Set of soft fix binary variables |
| Constants: | |
| | Total number of units |
| | Total number of time periods |
| | Coefficients of the linear production cost function of unit g |
| | Hot startup cost of units |
| | Cold startup cost of units |
| | Minimum uptime of units |
| | Minimum downtime of units |
| | Cold startup time of units |
| | Maximum power output of units |
| | Minimum power output of units |
| | System load demand in period t |
| | Spinning reserve requirement in period t |
| | Ramp-up limit of unit g |
| | Ramp-down limit of unit g |
| | Startup ramp limit of unit g |
| | Shutdown ramp limit of unit g |
| | Initial commitment state of unit g |
| | Number of periods unit g has been online (+) or offline (−) prior to the first period of the time span (end of period 0) |
| Binary variables: | |
| | Commitment status of unit g in period t, equal to 1 if unit g is online in period t and 0 otherwise |
| | Startup status of unit g in period t, equal to 1 if unit g starts up in period t and 0 otherwise |
| | Shutdown status of unit g in period t, equal to 1 if unit g shuts down in period t and 0 otherwise |
| Continuous variables: | |
| | Power output of unit g in period t |
| | Startup cost of unit g in period t |
| Binary variable states: | |
| | LP relaxation solution of binary variables |
| | Rounded LP relaxation solution of binary variables |
| | Stability confidence of binary variables |
| | GNN output of binary variables |
| | All binary variables, including commitment, startup, and shutdown status |
| Thresholds: | |
| | Stability threshold |
| | Integrality threshold |
| | Temporal flexibility threshold |

References

1. Anjos, M.F.; Conejo, A.J. Unit commitment in electric energy systems. Found. Trends Electr. Energy Syst. 2017, 1, 220–310.

2. Hua, B.; Baldick, R.; Wang, J. Representing Operational Flexibility in Generation Expansion Planning Through Convex Relaxation of Unit Commitment. IEEE Trans. Power Syst. 2017, 33, 2272–2281.

3. Ongsakul, W.; Petcharaks, N. Unit commitment by enhanced adaptive Lagrangian relaxation. IEEE Trans. Power Syst. 2004, 19, 620–628.

4. Delarue, E.; Cattrysse, D.; D’Haeseleer, W. Enhanced priority list unit commitment method for power systems with a high share of renewables. Electr. Power Syst. Res. 2013, 105, 115–123.

5. Hobbs, W.J.; Hermon, G.; Warner, S.; Shelbe, G.B. An enhanced dynamic programming approach for unit commitment. IEEE Trans. Power Syst. 1988, 3, 1201–1205.

6. Gurobi Optimization, L. Gurobi Optimizer Reference Manual. IEEE Trans. Autom. Control 2024, 28, 1–11.

7. Ibm, L. ILOG CPLEX. Available online: http://www.ilog.com/products/cplex (accessed on 1 December 2024).

8. Bendotti, P.; Fouilhoux, P.; Rottner, C. On the complexity of the unit commitment problem. Ann. Oper. Res. 2019, 274, 119–130.

9. Yang, L.; Zhang, C.; Jian, J.; Meng, K.; Xu, Y.; Dong, Z. A novel projected two-binary-variable formulation for unit commitment in power systems. Appl. Energy 2017, 187, 732–745.

10. Harjunkoski, I.; Giuntoli, M.; Poland, J.; Schmitt, S. Matheuristics for Speeding Up the Solution of the Unit Commitment Problem. In Proceedings of the 2021 IEEE PES Innovative Smart Grid Technologies Europe (ISGT Europe), Espoo, Finland, 18–21 October 2021; pp. 1–5.

11. Pourahmadi, F.; Kazempour, J. Unit Commitment Predictor with a Performance Guarantee: A Support Vector Machine Classifier. IEEE Trans. Power Syst. 2024, 40, 715–727.

12. Qu, S.; Yang, Z. Optimality Guaranteed UC Acceleration via Interactive Utilization of Adjoint Model. IEEE Trans. Power Syst. 2024, 39, 5191–5203.

13. Sayed, A.R.; Zhang, X.; Wang, G.; Wang, Y.; Shaaban, M.; Shahidehpour, M. Deep Reinforcement Learning-Assisted Convex Programming for AC Unit Commitment and Its Variants. IEEE Trans. Power Syst. 2024, 39, 5561–5574.

14. Chen, Z.; Liu, J.; Wang, X.; Yin, W. On Representing Mixed-Integer Linear Programs by Graph Neural Networks. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

15. Schmitt, S.; Harjunkoski, I.; Giuntoli, M.; Poland, J.; Feng, X. Fast Solution of Unit Commitment Using Machine Learning Approaches. In Proceedings of the 2022 IEEE 7th International Energy Conference (ENERGYCON), Riga, Latvia, 9–12 May 2022; pp. 1–6.

16. Quan, R.; Jian, J.; Mu, Y. Tighter relaxation method for unit commitment based on second-order cone programming and valid inequalities. Int. J. Electr. Power Energy Syst. 2014, 55, 82–90.

17. Ma, Z.; Zhong, H.; Xia, Q.; Kang, C.; Wang, Q.; Cao, X. A Unit Commitment Algorithm with Relaxation-Based Neighborhood Search and Improved Relaxation Inducement. IEEE Trans. Power Syst. 2020, 35, 3800–3809.

18. Huang, T.; Ferber, A.; Tian, Y.; Dilkina, B.; Steiner, B. Local branching relaxation heuristics for integer linear programs. In Proceedings of the International Conference on Integration of Constraint Programming Artificial Intelligence, and Operations Research, Nice, France, 29 May–1 June 2023; pp. 96–113.

19. Gao, Q.; Yang, Z.; Li, W.; Yu, J. Optimality-Guaranteed Acceleration of Unit Commitment Calculation via Few-shot Solution Prediction. IEEE Trans. Power Syst. 2024, 40, 1583–1595.

20. Pineda, S.; Morales, J.M. Is learning for the unit commitment problem a low-hanging fruit? Electr. Power Syst. Res. 2022, 207, 107851.

21. Xavier, Á.S.; Qiu, F.; Ahmed, S. Learning to solve large-scale security-constrained unit commitment problems. INFORMS J. Comput. 2021, 33, 739–756.

22. Yang, N.; Yang, C.; Wu, L.; Shen, X.; Jia, J.; Li, Z.; Chen, D.; Zhu, B.; Liu, S. Intelligent data-driven decision-making method for dynamic multisequence: An E-seq2seq-based SCUC expert system. IEEE Trans. Ind. Inform. 2021, 18, 3126–3137.

23. Ramesh, A.V.; Li, X. Feasibility Layer Aided Machine Learning Approach for Day-Ahead Operations. IEEE Trans. Power Syst. 2024, 39, 1582–1593.

24. Park, S.; Chen, W.; Han, D.; Tanneau, M.; Van Hentenryck, P. Confidence-Aware Graph Neural Networks for Learning Reliability Assessment Commitments. IEEE Trans. Power Syst. 2024, 39, 3839–3850.

25. Han, Q.; Yang, L.; Chen, Q.; Zhou, X.; Zhang, D.; Wang, A.; Sun, R.; Luo, X. A GNN-Guided Predict-and-Search Framework for Mixed-Integer Linear Programming. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

26. Qin, J.; Yu, N. Solve Large-scale Unit Commitment Problems by Physics-Informed Graph Learning. arXiv 2023, arXiv:2311.15216.

27. Gasse, M.; Chételat, D.; Ferroni, N.; Charlin, L.; Lodi, A. Exact Combinatorial Optimization with Graph Convolutional Neural Networks. arXiv 2019, arXiv:1906.01629.

28. Gridmod. RTS-GMLC: Repository for Grid Modernization Lab Consortium (GMLC) Real-Time Simulation Project. In GitHub. 2023. Available online: https://github.com/GridMod/RTS-GMLC (accessed on 1 December 2024).

29. Berthold, T. Primal Heuristics for Mixed Integer Programs. Master’s Thesis, Technische Universität, Berlin, Germany, 2006.

| Index | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| | 0.5 | 0.3 | 0 | 0 | 0 | 1 |
| | 1 | 0 | 0 | 1 | 0 | 1 |
| | 0 | 1 | 0 | 1 | 0 | 1 |
| | 0 | 0 | 0 | 1 | 0 | 1 |
| | 0 | 0 | 1 | 0 | 0 | 1 |
| | 0.4 | 0.48 | 0.6 | 0.2 | 1 | 1 |

| Feature | Description |
|---|---|
| Variables | Normalized coefficient of the variable in the objective function |
| | Degree of the variable node in the bipartite representation |
| | Average coefficient of the variable over all constraints |
| | Maximum value among all coefficients of the variable |
| | Minimum value among all coefficients of the variable |
| | Whether the variable is a binary variable |
| | LP solution of the variable |
| Constraints | Average of all coefficients in the constraint |
| | Degree of the constraint node in the bipartite representation |
| | Right-hand-side value of the constraint |
| | Whether the constraint is an equality constraint |
| Edges | Coefficient value |

| Case Size (Units) | Variables | Integer Variables | Constraints | Nonzeros |
|---|---|---|---|---|
| 50 | 6000 | 3600 | 10,848 | 50,678 |
| 100 | 12,000 | 7200 | 21,648 | 101,349 |
| 1080 | 129,600 | 77,760 | 233,328 | 1,109,492 |

| Variable Type | 50 | 100 | 1080 |
|---|---|---|---|
| | 0.04773 | 0.02801 | 0.06902 |
| | 0.00951 | 0.00765 | 0.02248 |
| | 0.00923 | 0.00646 | 0.02100 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, L.; Li, P.; Chen, S.; Zheng, H. Stable Variable Fixation for Accelerated Unit Commitment via Graph Neural Network and Linear Programming Hybrid Learning. Appl. Sci. 2025, 15, 4498. https://doi.org/10.3390/app15084498
