Abstract

Few-shot object detection (FSOD) based on fine-tuning is essential for analyzing optical remote sensing images. However, existing methods mainly focus on natural images and overlook the scale variations in remote sensing images, leading to feature confusion among foreground instances of different classes. Additionally, since only a subset of instances is labeled in FSOD training data, the model may mistakenly treat unlabeled instances as background, leading to confusion between foreground and background features, particularly for novel classes. These phenomena indicate that severe feature confusion in remote sensing FSOD hampers the ability of the model to accurately classify and localize instances. To address these issues, this paper proposes a two-stage FSOD framework based on transfer learning via pseudo-sample generation and feature enhancement (PSGFE), comprising a pseudo-sample generation module (PSGM) and a feature enhancement module (FEM). The former reduces the feature confusion between foreground and background by generating pseudo-samples for unannotated background areas. The latter dynamically captures and enhances multi-scale features on the region of interest (ROI) and extracts unique core information for each class to eliminate the feature confusion among foreground instances of different classes. Validated on the optical remote sensing datasets DIOR and RSOD, our method outperforms existing methods in most settings.

1. Introduction

Rapid advancement in remote sensing technology has substantially increased the volume and fidelity of optical remote sensing images. Accurate analysis and interpretation of these images are crucial tasks in geographic sciences and play important roles in areas such as environmental monitoring [1], resource exploration [2], and disaster detection [3]. Deep learning-based object detection is an important technique for analyzing and understanding optical remote sensing images [4,5], but it relies on large-scale, fully annotated datasets for training. However, the dynamic nature of the geographical environment means that novel classes that were not previously encountered may emerge in remote sensing images. Manually annotating these novel classes is both costly and time-consuming. Moreover, with limited annotated training samples, traditional detectors are prone to overfitting, which severely weakens their generalization ability.

Consequently, demand is increasing for few-shot object detection (FSOD), which aims to leverage prior knowledge acquired from a richly annotated base class dataset and a limited number of training samples from novel classes to accurately classify and locate both base and novel objects in images [6,7,8]. Its framework generally involves a two-stage training process: training on a large labeled dataset of base classes, followed by fine-tuning on a balanced set containing only a few annotated instances.

Although FSOD has shown promising results in natural scene datasets [9,10,11] with limited scale variation, optical remote sensing images present unique challenges due to their complex backgrounds. These challenges differ significantly from those encountered in natural scene images in the following aspects [12]: large variability in scale is observed across spatial resolutions, with diverse scales appearing both between different classes and within individual classes [13,14]; and increased inter-class similarity and intra-class variability [15,16]. These factors exacerbate the challenge of accurately recognizing novel class features, increasing the likelihood of misclassification between novel and base class instances, and ultimately diminishing the robustness and overall effectiveness of object detectors. Moreover, FSOD also encounters the following challenge: it is hard to distinguish between background features and foreground features, especially in the novel class [17,18]. In the initial training phase, only base class instances are annotated, while novel class instances are treated as background. In the fine-tuning phase, only a subset of instances is annotated. This will lead the model to misinterpret the features of the foreground, particularly those of the novel class foreground, as background features, impairing its ability to differentiate between the real background and foreground in subsequent detection phases. This issue can be attributed to feature confusion, whether between background features and foreground features or among different foreground classes, which significantly impairs the model performance in classification and localization.

To address the issue of feature confusion, some meta-learning methods [19,20] aggregate the features of support–query sample pairs to make the learned feature space more discriminative. FSCE [21] and MSOCL [22] conduct contrastive learning at different scales to learn multi-scale features, thereby facilitating the distinction between foreground instances and the background. Although these methods help alleviate feature confusion to some extent, they have certain limitations. Meta-learning methods require the construction of support–query sample pairs, making them operationally complex. Contrastive learning methods are susceptible to interference from potential foreground instances in the background, which are mistakenly treated as negative samples, thus hindering the learning of class-specific features. ST-FSOD [23] employs self-training to generate pseudo-samples in order to address the issue of confusion between background and foreground features. However, it relies on a limited number of novel class samples for supervision, which can easily lead to overfitting.

Therefore, it is essential to develop a method that can effectively distinguish the class-specific features of each foreground instance while differentiating them from the background features. Motivated by the aforementioned methods and in order to address these issues, we introduce MSOCL [22] as the baseline network and propose a new two-stage FSOD framework for remote sensing images based on transfer learning via pseudo-sample generation and feature enhancement called PSGFE. Specifically, we employ a pseudo-sample generation module (PSGM) to identify potential foreground instances within the background and incorporate them into training, effectively preventing confusion between foreground features and background features. Additionally, we introduce a feature enhancement module (FEM) to mitigate class confusion caused by multi-scale variations, high inter-class similarity, and significant intra-class variability in remote sensing images.

FEM combines depthwise separable convolutions [24,25] with large-kernel convolutional networks [26] to enhance the features of regions of interest (ROI). This method employs large-kernel convolutions to enlarge the receptive field and extract global features from the image, while depthwise separable convolutions are utilized to capture local features. Concurrently, convolutional layers with multiple kernel sizes dynamically capture multi-scale features of instances. This approach enables the model to focus on the most critical regions within the ROI and to learn salient classification features from complex and diverse foregrounds.

PSGM is proposed to mine potential objects within the background more effectively. It consists of two modules: the self-training-based pseudo-proposal generation network (STPPGN) and the self-training-based confident pseudo-sample generation network (STCPSGN). STPPGN generates an initial set of pseudo-proposals in the unlabeled background regions. These pseudo-proposals are then filtered and refined by STCPSGN to produce high-quality pseudo-samples, which are subsequently integrated into the training set. STPPGN focuses on the background regions and extracts high-confidence regions to supervise the generation of pseudo-proposals. This avoids the issue in ST-FSOD [23], where the student region proposal network (RPN) relies solely on a limited number of novel class samples for supervision, overfits to the scarce data, and consequently generalizes poorly. Additionally, STCPSGN separates the bounding box-head (BBH) for generating pseudo-samples from that for processing samples, thereby avoiding interference.

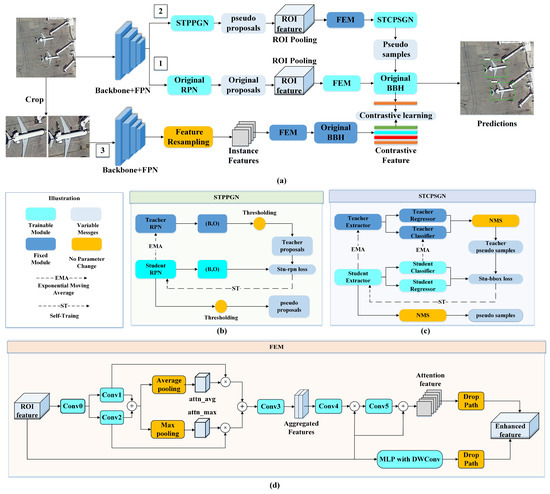

The overall architecture of PSGFE is depicted in Figure 1a, where Branch 1 is the Faster-RCNN [27] object detection branch augmented with an FEM. Branch 2 is the pseudo-sample generation branch, which is our PSGM, consisting of STPPGN and STCPSGN. Branch 3, inspired by the contrastive learning approach [22], facilitates the model in learning multi-scale features of instances by cropping labeled instance objects and conducting contrastive learning with the proposals or pseudo-samples generated by the other two branches. Our main contributions are as follows:

Figure 1.

(a) The overall framework of PSGFE. (b) The internal structure of STPPGN, composed of a set of teacher-student RPNs, used to generate pseudo-proposals. (c) The internal structure of STCPSGN, composed of a set of teacher-student box-heads, used to further screen and process the pseudo-proposals, ultimately generating pseudo-samples. (d) The internal structure of FEM, where Conv0 through Conv5 each denote a distinct convolutional group, with each group containing multiple convolutional operations that include a large kernel convolution and DWConv is depthwise separable convolution.

(1) We propose a pseudo-sample generation module called PSGM to generate pseudo-samples from unlabeled background data. This enables our method to learn the true background features, thereby distinguishing between the real background and potential novel class instances, reducing confusion between them, and enhancing the performance of PSGFE.

(2) We propose an attention mechanism-based feature enhancement module that augments the features of both the pseudo-proposals and ground truth instances within the ROI. This module enables our method to learn the core features of each class, reducing the possibility of confusion, and thereby enhancing the classification capabilities of PSGFE.

(3) Our method achieves significant performance improvements on the optical remote sensing datasets DIOR and RSOD.

The remainder of this paper is organized as follows. Section 2 reviews related work. Section 3 describes the proposed PSGFE method in detail. Section 4 reports the experimental results, including comparative and ablation studies. Section 5 concludes the paper.

2. Related Work

2.1. Few-Shot Object Detection

Currently, there are two main approaches to FSOD: meta-learning-based methods and transfer-learning-based methods.

Meta-learning methods focus on transferring prior knowledge from well-annotated base classes to sparsely annotated novel classes by simulating a series of few-shot tasks. These methods mainly consist of two branches: a query branch and a support branch. The query branch learns object detection tasks from query images containing both base and novel classes, while the support branch provides auxiliary information and feature representations for both base and novel classes, enabling the query branch to better adapt to different object classes. A common meta-learning approach involves extracting specific and representative class prototypes from query images, followed by performing specific aggregation operations, such as weighted summation, concatenation, or attention mechanisms with support vectors to improve the ability of the model to recognize novel class objects [28,29,30].

Compared to meta-learning approaches for FSOD, transfer learning methods employ relatively simpler learning strategies [10]. Fine-tuning is a commonly used transfer learning technique, involving pre-training a base-class model on a large annotated dataset, followed by fine-tuning on a small number of target-domain samples. The two-stage fine-tuning approach (TFA), which fine-tunes only the classification and regression branches of the detector while keeping the feature extractor frozen, is one of the most commonly used transfer learning methods [31]. Building on TFA, numerous methods have been proposed to address specific challenges in FSOD. Examples include FSCE [21], UP-FSOD [32], and DeFRCN [33]. However, TFA comes with its own set of limitations: (1) Fine-tuning alone can erode source-domain knowledge, which in turn diminishes the transferability of the model. (2) Object detectors find it challenging to differentiate between class-agnostic and class-specific knowledge without explicit modeling.

Generative adversarial networks (GANs) [34] have been explored for data augmentation in FSOD by synthesizing realistic samples through adversarial training. CLIP-GAN [35] generates synthetic instances from text prompts, which are then pasted into real backgrounds to enrich training data and improve novel class detection. SS-GAN [36] uses self-supervised GANs for unsupervised few-shot recognition, with the aim of improving generalization through adversarial feature learning. Although these methods effectively enhance model performance by generating new instances, they fail to address the confusion between background and foreground features, which arises from the inability to mine unlabeled foreground instances from the background. To resolve this issue, a method is needed to effectively mine potential foreground instances from the background.

2.2. Few-Shot Object Detection in Remote Sensing Images

In remote sensing, existing FSOD methods are divided into two classes: meta-learning and transfer learning.

Meta-learning methods, such as FSPDM [37] and SAAN [38], acquire knowledge that transcends individual tasks through meta-learning processes. Specifically, P-CNN [39] mitigates the sparse directional space in novel classes due to sample scarcity by employing a prototype-guided RPN. These methods rely on both query and support sets to discern inter-class relationships, a complex procedure. Thus, a simpler detector is still needed.

Transfer learning methods simplify data preparation workflows and procedures. Inspired by FsDet [40], various two-stage fine-tuning transfer learning models focusing on multi-scale feature aggregation have emerged for remote sensing images [41,42]. Inspired by the contrastive learning approach employed in FSCE [21] for clustering proposal features from RPN, AACE [43] enhances feature distinction by integrating a scale-aware search module and a contrastive ROI branch. MSOCL [22] constructs positive and negative sample pairs using proposal features and cropped instance object features, and then conducts contrastive learning. This process brings the features of positive pairs closer in the feature space while pushing those of negative pairs further apart, thereby enhancing the discriminative power of the features. However, negative sample pairs may include unannotated objects, which can interfere with feature learning and degrade performance. Pseudo-sample generation addresses this by mining potential foreground objects in the background to generate pseudo-samples and removing negative samples that highly overlap with these pseudo-samples, thus avoiding interference.

Self-training uses model predictions for unlabeled data to produce pseudo-samples, which are then integrated into the training process to boost the performance of the model on targeted tasks [44]. ST-FSOD [23] utilizes a self-training-based RPN (ST-RPN) to generate proposals for novel classes, utilizing only a limited number of novel class samples for supervision while ignoring areas in the background that are likely to contain novel class objects. In BBH, temporary labels are generated and assigned to ROIs labeled as background. This method reduces confusion between the background and the novel class foreground, thereby enhancing the detection performance.

However, supervising the ST-RPN with only a few novel class samples may lead to overfitting, and the ignored regions it generates could also contain labels. This situation may further exacerbate the confusion between the foreground features and the background features, leading to a decrease in performance. Furthermore, the shared BBH for pseudo-samples and samples may hinder the extraction of salient features. Motivated by this insight, we propose PSGM which effectively solves this issue. STPPGN focuses solely on background regions without any annotations and extracts pseudo-proposals. STCPSGN classifies and regresses pseudo-proposals to form pseudo-samples, enriching the dataset, and concurrently separates the BBH for generating pseudo-samples from that for processing samples to avoid interference.

3. Method

This section provides a detailed introduction to our method. Specifically, Section 3.1 presents the definition of the problem and objectives of FSOD, and Section 3.2 outlines the overall design of PSGFE. Section 3.3 and Section 3.4 detail the implementation of PSGM and loss functions. Section 3.5 introduces FEM, and Section 3.6 summarizes the total loss function used during the fine-tuning phase.

3.1. Problem Setting

Suppose that we have an annotated dataset $D$ whose classes are divided into two distinct cohorts: the base classes $C_{base}$ and the novel classes $C_{novel}$, with a null intersection, i.e., $C_{base} \cap C_{novel} = \emptyset$.

Here, $X$ represents an instance within the image space $I$, while $Y$ corresponds to its label in the label space $L$. $C$ is the class of instance $X$, and $B$ is the bounding box associated with $X$. $D_{base}$ refers to the dataset for the base classes, while $D_{novel}$ represents the dataset for the novel classes.

In this context, the annotated data in $D_{base}$ are abundant, whereas those in $D_{novel}$ are scarce. The few-shot scenario can be described using the N-way K-shot terminology. Here, $N$ represents the number of novel classes within the dataset $D_{novel}$, and $K$ denotes the limited number of annotated samples available for each of these novel classes. The core of FSOD lies in achieving accurate classification and localization of novel classes with a limited number of annotated samples. To achieve this aim, we adopt a two-stage training strategy. Initially, a base detector is trained on the dataset $D_{base}$, which comprises abundant annotated data from base classes. Subsequently, this base detector is fine-tuned on a balanced dataset formed by selecting $K$ instances from each class in both $D_{base}$ and $D_{novel}$. This approach yields a detector that can accurately identify novel classes.
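The construction of the balanced fine-tuning set can be sketched as follows. This is a minimal illustration, not the authors' implementation: the record layout `(image_id, class_name, bbox)`, the function name `build_balanced_set`, and the example class names are assumptions made here, and real FSOD splits usually also fix the sampled instances across runs.

```python
import random
from collections import defaultdict


def build_balanced_set(annotations, k, seed=0):
    """Select up to k annotated instances per class (base and novel alike).

    annotations: list of (image_id, class_name, bbox) tuples.
    This record layout is a hypothetical one chosen for illustration.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for record in annotations:
        by_class[record[1]].append(record)

    balanced = []
    for class_name in sorted(by_class):
        records = by_class[class_name]
        # A class with fewer than k instances contributes all it has.
        balanced.extend(rng.sample(records, min(k, len(records))))
    return balanced


# Toy data: two abundant base classes and one scarce novel class.
annotations = (
    [(f"img{i}", "airplane", (0, 0, 10, 10)) for i in range(50)]
    + [(f"img{i}", "ship", (5, 5, 20, 20)) for i in range(40)]
    + [(f"img{i}", "windmill", (1, 1, 8, 8)) for i in range(7)]
)
few_shot_set = build_balanced_set(annotations, k=5)
```

With `k=5`, every class contributes the same number of instances, so the abundant base classes no longer dominate the fine-tuning data.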

3.2. Method Architecture

To address the confusion among foreground instances of different classes, as well as the confusion between foreground features and background features in remote sensing FSOD, we propose a remote sensing image FSOD framework named PSGFE based on transfer learning, with MSOCL [22] as the baseline model. The overall architecture of this framework is shown in Figure 1a. On the one hand, the framework utilizes PSGM to mine potential foreground instances from the background, which are then filtered and refined to generate high-quality pseudo-samples for training, thereby addressing the confusion between background and foreground instance features. On the other hand, FEM is employed to enhance ROI features in order to highlight the core features of each class and enhance the classification capability of the model, thus resolving the issue of class confusion.

PSGM integrates self-training and the teacher–student paradigm. Self-training [44] leverages the pseudo-samples generated by the model itself to further train the model. The teacher–student paradigm uses the output of the teacher model to guide the training of the student model [45]. Specifically, the teacher model makes predictions on unlabeled background regions to generate a set of instructional information, which guides the student model to produce pseudo-samples for training. This approach enables the model to extract potential foreground instances from the background, thereby allowing it to learn the real background features and effectively distinguish them from the foreground features. PSGM is composed of STPPGN and STCPSGN, with their structures illustrated in Figure 1b and Figure 1c, respectively. FEM employs multiple large-kernel convolutions with different kernel sizes and depthwise separable convolutions to enhance ROI features. Large-kernel convolutions expand the receptive field to better capture global image information [46], while depthwise separable convolutions perform convolution operations on each input channel separately, helping the model capture richer local features [47]. This method helps extract distinctive core features of each class from ROIs, thereby addressing the issue of class confusion. Its structure is shown in Figure 1d.

As shown in Figure 1a, PSGFE is divided into three branches. Branch 1 is dedicated to object detection using Faster R-CNN with FEM: the RPN generates proposals, which pass through ROI pooling and FEM and finally the original bounding box-head (original BBH) for refinement and classification. Branch 2 is the pseudo-sample generation branch, comprising the backbone, STPPGN, and STCPSGN. STPPGN takes in unlabeled background data to produce pseudo-proposals, and STCPSGN performs ROI pooling, classification, and regression on them, retaining high-confidence results as high-quality pseudo-samples that are integrated into the training set. Branch 3 is the contrastive learning branch, which extracts and enhances multi-scale features for comparison with the original proposals and pseudo-samples. It is not updated by gradients and shares its weights with the corresponding components of Branch 1.

3.3. Self-Training-Based Pseudo-Proposal Generation Network

To mine potential foreground instances from the unannotated background, we introduce STPPGN. In the fine-tuning phase, for each image $i$, our method uses the multi-scale features extracted from $i$ to generate two sets of proposals. One set is the original proposals generated by the original RPN from Branch 1, as shown in Figure 1a; the other set is the pseudo-proposals generated by STPPGN, whose structure is shown in Figure 1b. Both sets of proposals have the same structure $(B, O)$, where $B$ denotes the bounding box coordinates of each proposal and $O$ is the confidence of the proposal, indicating the probability that the bounding box contains an object. As shown in Figure 1b, STPPGN consists of two submodules, a teacher RPN and a student RPN, which are structurally consistent with the original RPN. The teacher RPN is updated using the exponential moving average of the student RPN weights [48]. The original proposals output by the original RPN may belong to either novel or base classes, whereas the pseudo-proposals output by STPPGN represent potential foregrounds in the background rather than specifically novel class proposals.
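The exponential moving average update of the teacher RPN can be illustrated with a small sketch. Weights are modeled as a plain dictionary of floats rather than tensors, and the momentum value and parameter names are assumptions made for illustration; the source does not state the momentum it uses.

```python
def ema_update(teacher, student, momentum=0.999):
    """Move each teacher weight toward the matching student weight.

    teacher, student: dicts mapping parameter names to floats.
    momentum: fraction of the old teacher value that is kept (assumed value).
    """
    for name, student_value in student.items():
        teacher[name] = momentum * teacher[name] + (1.0 - momentum) * student_value
    return teacher


# Teacher and student both start from the original RPN weights.
original_rpn = {"conv.weight": 0.50, "cls.bias": -0.10}
teacher_rpn = dict(original_rpn)
student_rpn = dict(original_rpn)

# Pretend one gradient step changed a student weight.
student_rpn["conv.weight"] = 0.60
ema_update(teacher_rpn, student_rpn, momentum=0.9)
```

Because the teacher changes slowly, its proposals tend to be more stable than the student's, which is why it is a reasonable source of supervision.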

The teacher RPN generates and produces a set of high-quality teacher proposals , which have an intersection over union (IoU) of less than a threshold with all ground truth objects. The student RPN, under the guidance of the teacher RPN, produces a set of student proposals, denoted by .
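The IoU-based selection of teacher proposals that lie in unannotated regions can be sketched as below. The box format `(x1, y1, x2, y2)`, the function names, and the threshold of 0.3 are assumptions for illustration; the source does not give the threshold value here.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def background_proposals(proposals, gt_boxes, iou_threshold=0.3):
    """Keep proposals whose IoU with every ground-truth box is below the threshold."""
    return [
        p for p in proposals
        if all(iou(p, gt) < iou_threshold for gt in gt_boxes)
    ]


gt_boxes = [(10, 10, 50, 50)]
proposals = [
    (12, 12, 48, 48),      # almost coincides with the annotated object
    (100, 100, 140, 140),  # far from any annotation
    (40, 40, 80, 80),      # touches the annotation only slightly
]
kept = background_proposals(proposals, gt_boxes)
```

Only the proposals that barely overlap annotated objects survive, so the teacher's supervision is restricted to regions that would otherwise be treated as background.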

During the fine-tuning phase, since the teacher and student RPN are initialized with the weights of the original RPN, they may not produce high-quality outputs at the beginning of training. Therefore, we add the novel class instances in the corresponding images to the outputs of the teacher and student RPN. We only consider the classification loss of a proposal when it matches and has a high overlap with . The advantage of this approach is that it enables the student RPN to fully learn the class-specific features of novel instances without being distracted by their positional information. This enhances its ability to uncover potential unlabeled novel class instances. Let , signify the proposals in that are matched with . If student proposals match a certain proposal in teacher proposal , and conversely, it matches any novel instances in . The loss function of the student RPN can be expressed as follows:

Here, represents the classification loss of the student RPN, while denotes the regression loss of the student RPN. is a coefficient that indicates whether to include the classification loss for .

where denotes the IoU.

Finally, is filtered and subjected to non-maximum suppression (NMS) to generate a high-quality set of pseudo-proposals . This set of pseudo-proposals will be further processed in Section 3.4 to generate pseudo-samples.
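A minimal sketch of the filter-then-NMS step is given below. It assumes that filtering means thresholding the objectness confidence and that greedy NMS is used; both thresholds and the function names are illustrative assumptions rather than values from the source.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(proposals, score_threshold=0.7, nms_threshold=0.5):
    """proposals: list of (box, score). Returns the surviving (box, score) pairs."""
    # Step 1: drop low-confidence proposals, then sort by descending score.
    candidates = sorted(
        (p for p in proposals if p[1] >= score_threshold),
        key=lambda p: p[1],
        reverse=True,
    )
    # Step 2: greedy NMS keeps a box only if it does not overlap a kept box too much.
    kept = []
    for box, score in candidates:
        if all(iou(box, kept_box) <= nms_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


student_proposals = [
    ((100, 100, 140, 140), 0.95),
    ((102, 102, 142, 142), 0.90),  # near-duplicate of the first box
    ((200, 200, 260, 260), 0.80),
    ((300, 300, 320, 320), 0.40),  # low objectness
]
pseudo_proposals = filter_and_nms(student_proposals)
```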

3.4. Self-Training-Based Confident Pseudo-Sample Generation Network

To filter and refine generated by STPPGN and generate high-quality pseudo-samples, we proposed STCPSGN. During the fine-tuning phase, there are two sets of proposals to deal with: and . Therefore, we have two networks to handle the proposals: one is the original BBH, located in Branch 1, as shown in Figure 1a, responsible for making precise predictions for ; the other is STCPSGN, which is responsible for screening and refinement to produce high-quality pseudo-samples. These pseudo-samples are incorporated into the training set to guide the predictions of the original BBH.

Similarly to STPPGN, STCPSGN consists of two submodules: teacher bounding box-head (teacher BBH) and student bounding box-head (student BBH). Its structure is shown in Figure 1c. Teacher BBH is updated using the exponential moving average [48] of the student BBH weights. Their structure is consistent with the original BBH, including an ROI feature extraction layer, a bounding box classifier, and a regressor. undergoes RoI pooling first and then sends them to the STCPSGN to generate the final high-quality pseudo-samples that are integrated into the training set. STCPSGN has distinct parameters from the original BBH, focusing on pseudo-proposal processing, thereby resolving feature interference issues in ST-FSOD [23].

Teacher BBH generates teacher pseudo-samples with confidence above the threshold . Student BBH uses as supervision to produce student predictions for computing the loss .

where represents the classification loss of student box-head while denotes the regression loss. The coefficient is used to adjust the regression loss.

Subsequently, are subjected to a filtering and refinement process to generate pseudo-samples , is the region where the student box-head identifies as containing an instance, is its labels, where indicates the bounding box coordinates, and signifies the class label.

If the predictions of the original BBH have bounding boxes that exhibit a high IoU with the pseudo-samples, it indicates the prediction is valid and should be updated with its class label. Specifically, when a sample with a ground truth class C is predicted as the background by the original BBH and it significantly overlaps with pseudo-samples, its label is replaced with the label of the pseudo-samples, and the loss is computed. The loss function for the original BBH can be expressed as follows:

where denotes the classification loss of the original BBH, and represents the regression loss. is the original prediction of the original BBH. is the label for the background.
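The label replacement described above can be sketched as follows, under a simplified reading: an ROI that carries the background label and overlaps a pseudo-sample strongly takes over that pseudo-sample's class before the loss is computed. The data layout, the `BACKGROUND` constant, and the IoU threshold of 0.5 are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


BACKGROUND = "background"  # hypothetical name for the background label


def relabel_with_pseudo_samples(rois, pseudo_samples, iou_threshold=0.5):
    """rois: list of (box, label); pseudo_samples: list of (box, class_name)."""
    relabeled = []
    for box, label in rois:
        new_label = label
        if label == BACKGROUND:
            # Find the pseudo-sample that overlaps this ROI the most.
            best = max(pseudo_samples, key=lambda ps: iou(box, ps[0]), default=None)
            if best is not None and iou(box, best[0]) >= iou_threshold:
                new_label = best[1]
        relabeled.append((box, new_label))
    return relabeled


rois = [
    ((100, 100, 140, 140), BACKGROUND),  # overlaps a pseudo-sample
    ((10, 10, 50, 50), "airplane"),      # annotated foreground, left untouched
    ((300, 300, 340, 340), BACKGROUND),  # true background
]
pseudo_samples = [((102, 102, 142, 142), "windmill")]
training_targets = relabel_with_pseudo_samples(rois, pseudo_samples)
```

ROIs that keep the background label after this step are the ones treated as real background in the classification loss.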

Furthermore, is used to eliminate negative sample pairs with high overlap in contrastive learning, preventing their interference with feature learning. It is dynamically updated during training, with new predictions replacing old pseudo-labels for existing images.

3.5. Feature Enhancement Module

To augment the features within the ROI, allowing our method to better discern the essential features of each class and mitigate confusion, we propose FEM, an attention network that integrates path dropout [25], large-kernel convolution, and depthwise separable convolution, as depicted in Figure 1d. It can be formulated as follows:

where x is an ROI feature from either a proposal, a pseudo-proposal, or an instance. Norm, Attn, and MLP, respectively, are batch normalization, attention mechanism, and multi-layer perceptron. The layer scaling parameters and , are learnable parameters to modulate the contributions of the attention mechanism and the MLP output, respectively. The symbol ⊙ represents element-wise multiplication.
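One plausible form of this block, consistent with the components named above, is the pre-normalization residual block with layer scaling and path dropout that is common in large-kernel attention networks. The symbols $\gamma_1$, $\gamma_2$, $x'$, and $y$ are assumed names, and the placement of DropPath is also an assumption:

```latex
\begin{aligned}
x' &= x + \mathrm{DropPath}\big(\gamma_1 \odot \mathrm{Attn}(\mathrm{Norm}(x))\big), \\
y  &= x' + \mathrm{DropPath}\big(\gamma_2 \odot \mathrm{MLP}(\mathrm{Norm}(x'))\big).
\end{aligned}
```

In this form, $\gamma_1$ and $\gamma_2$ scale the attention and MLP outputs channel-wise before they are added back to the residual path, which matches the role of the layer scaling parameters described in the text.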

The attention mechanism focuses on local dependencies and saliency in input feature maps, enabling the model to extract core class information, thus mitigating the impact of inter-class similarity and improving classification accuracy. Additionally, the use of multiple convolutional kernels of varying sizes endows the model with different receptive fields, allowing it to focus on important information at various scales. The attention mechanism can be represented as follows:

where and correspond to the mean and maximum values, after applying distinct convolutional kernels to x, respectively. The notation represents large kernel convolution. ⊕ denotes vector concatenation.

MLP with depthwise separable convolutions enhances the operational efficiency of the network by reducing the number of parameters. Additionally, it strengthens the capacity of the model to capture and learn multi-scale features effectively by independently processing features of each input channel and allowing for the efficient integration of features across different channels. This can be represented as follows:

The notation signifies a depthwise separable convolution, while and denote distinct fully connected layers.
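The parameter saving from depthwise separable convolution can be checked with simple arithmetic. The sketch below counts weights only (biases are ignored), and the channel and kernel sizes are illustrative values, not those used in FEM.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights of a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


c_in, c_out, k = 256, 256, 3
standard = standard_conv_params(c_in, c_out, k)
separable = depthwise_separable_params(c_in, c_out, k)
ratio = separable / standard
```

For these sizes the separable version needs 67,840 weights instead of 589,824, roughly 11.5% of the standard convolution, and the saving grows with the kernel size.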

3.6. Overall Loss Function

To better enable the model to learn the core features of various classes on different scales, we employ contrastive learning on ROI features compared to instance objects, using the CMSP loss proposed by MSOCL [22], which is expressed as .

In summary, the overall loss function for the few-shot training process can be formulated as

where and are the loss functions for the original RPN and original BBH, respectively, and are the loss functions for student RPN and student BBH. is the contrastive loss and is its weight, setting it at 0.2.
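Under the assumption that the four detection losses are summed without additional weights, the description above corresponds to an expression of the following form. The subscripts are names chosen here for illustration; only the weight of 0.2 on the contrastive term is taken from the text:

```latex
L_{total} = L_{rpn} + L_{bbh} + L_{rpn}^{stu} + L_{bbh}^{stu} + \lambda L_{cmsp}, \qquad \lambda = 0.2
```

Here $L_{rpn}$ and $L_{bbh}$ belong to the original RPN and original BBH, $L_{rpn}^{stu}$ and $L_{bbh}^{stu}$ to the student RPN and student BBH, and $L_{cmsp}$ is the contrastive loss.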

4. Experiments

4.1. Dataset and Implementation

Two publicly available datasets are used to evaluate the method. DIOR [12] is a large optical remote sensing dataset with 5862 training, 5863 validation, and 11,738 test images, totaling 23,463 images and 192,472 instances. The images are 800 × 800 pixels with spatial resolutions from 0.5 m to 30 m and cover 20 classes. We split the classes into base and novel subsets for few-shot evaluation, as detailed in Table 1.

Table 1. Two base/novel class split settings for the DIOR dataset.

RSOD [49] is a four-class geospatial object detection dataset comprising aircraft, oil tanks, overpasses, and playgrounds. We designate overpass as the novel class and the remaining three as base classes.

The training process of our method consists of two distinct stages. In the first stage, Branch 1, which includes the Faster R-CNN and FEM components, is trained using examples from all the base classes. In the second stage, the parameters obtained in the first stage are used to initialize the RPN, FEM, and original BBH of Branch 1, as well as the STPPGN and STCPSGN of Branch 2. Additionally, the parameters of Branch 3 are shared with the corresponding components of Branch 1. PSGFE is then fine-tuned on a balanced set containing only a few annotated instances of novel classes, with the pseudo-sample generation module activated and gradient computation disabled for Branch 3.

Our method is built on the PyTorch 1.7 deep learning framework and is trained and evaluated on a single NVIDIA Tesla V100 GPU manufactured by NVIDIA Corporation, Santa Clara, CA, USA. The method uses a ResNet101 pre-trained on ImageNet as the feature extractor. The optimizer is stochastic gradient descent (SGD) with a momentum of 0.9 and a weight decay coefficient of 1 × 10⁻⁴. In the first phase, the initial learning rate is set to 0.01 with a batch size of 16, and the model is trained for 80,000 iterations on the DIOR dataset and 10,000 iterations on the RSOD dataset. In the second phase, the initial learning rate is reduced to 0.001, and the model is trained for 21,000 iterations on the DIOR dataset and 3000 iterations on the RSOD dataset.
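For reference, the stated hyperparameters can be collected in a small configuration sketch. The dictionary layout, key names, and helper function are assumptions made for illustration, and the weight decay is read as 1e-4. Settings that the text does not state, such as the fine-tuning batch size and the learning-rate schedule, are deliberately left out.

```python
TRAINING_CONFIG = {
    "backbone": "ResNet101 (ImageNet pre-trained)",
    "optimizer": {
        "type": "SGD",
        "momentum": 0.9,
        "weight_decay": 1e-4,  # assumed reading of the stated coefficient
    },
    "base_training": {
        "initial_lr": 0.01,
        "batch_size": 16,
        "iterations": {"DIOR": 80_000, "RSOD": 10_000},
    },
    "fine_tuning": {
        "initial_lr": 0.001,
        "iterations": {"DIOR": 21_000, "RSOD": 3_000},
    },
}


def iterations_for(stage, dataset):
    """Look up the number of training iterations for a stage and dataset."""
    return TRAINING_CONFIG[stage]["iterations"][dataset]


dior_total = iterations_for("base_training", "DIOR") + iterations_for("fine_tuning", "DIOR")
```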

4.2. Evaluation Metrics

We employ the mean average precision at an intersection over union (IoU) threshold of 0.5 (mAP50) as our evaluation metric. It is a comprehensive indicator of overall performance in multi-class detection tasks. A prediction is considered correct when the IoU between it and the ground truth (GT) object exceeds 0.5. In FSOD, mAP50 is obtained by calculating the average precision (AP) for each novel class at this threshold and then averaging these AP values. The specific calculation formula is as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP_c = \int_0^1 P_c(R)\, dR, \qquad mAP = \frac{1}{N} \sum_{c=1}^{N} AP_c$$

Here, TP stands for true positives, FP for false positives, and FN for false negatives. The term P refers to precision, while R refers to recall. The variable c indicates a particular class, and N denotes the total number of classes.

A higher value of mAP50 indicates that the model can more effectively balance precision and recall in the detection task, reflecting improved overall performance. The mAP values are presented in percentage form (mAP%).
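The metric described in this subsection can be sketched in a few lines of plain Python. This is a minimal illustration rather than the evaluation code used in the experiments: the box format `(x1, y1, x2, y2)`, the function names, the greedy score-ordered matching, and the all-point interpolation of the precision-recall curve are assumptions, and the toy boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, gt_boxes, iou_thr=0.5):
    """AP for one class.

    detections: list of (score, image_id, box)
    gt_boxes:   dict image_id -> list of ground-truth boxes of this class
    """
    n_gt = sum(len(boxes) for boxes in gt_boxes.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in gt_boxes.items()}
    precisions, recalls = [], []
    tp = fp = 0
    for score, img, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best, best_j = 0.0, -1
        for j, gt in enumerate(gt_boxes.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best:
                best, best_j = overlap, j
        # Correct only if IoU exceeds the threshold and the GT box is unmatched.
        if best > iou_thr and not used[img][best_j]:
            used[img][best_j] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # Make precision non-increasing in recall, then sum the area under the curve.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_ap(ap_per_class):
    """Average the per-class AP values (dict class_name -> AP)."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy example (made-up boxes): two GT objects, two hits and one false alarm.
gt = {0: [(0, 0, 10, 10)], 1: [(0, 0, 10, 10)]}
dets = [(0.9, 0, (0, 0, 10, 10)), (0.8, 0, (20, 20, 30, 30)), (0.7, 1, (1, 1, 10, 10))]
ap_overpass = average_precision(dets, gt)
print(round(100 * ap_overpass, 1))
```

In the few-shot setting, `mean_ap` would be called with the AP values of the novel classes only.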

4.3. Quantitative Results on DIOR Datasets

To confirm the efficacy of PSGFE, we compare it experimentally with several related FSOD algorithms: transfer-learning-based methods such as TFA [31], FSCE [21] and G-FSOD [50], which utilize balanced sets during the fine-tuning phase and optimize the ROI feature extractor through a specialized module to enhance adaptability to novel classes; and meta-learning-based methods such as RepMet [11], MPSR [30], FsDet [40] and P-CNN [39], which extract feature prototypes and perform feature aggregation to strengthen classification capabilities. MSOCL [22] and ST-FSOD [23] are the two best-performing algorithms on the DIOR dataset, both based on transfer learning, and MSOCL [22] is our baseline.

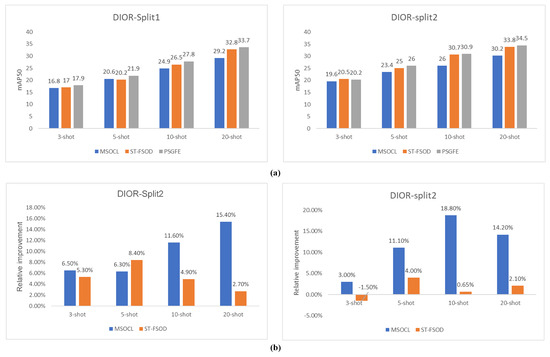

As shown in Table 2, our method achieves the best performance in every setting except Split2 3-shot, where its novel-class mAP50 is 0.3 points lower than that of ST-FSOD [23]. A likely reason is that the data complexity of Split2 is lower than that of Split1, so FEM may focus excessively on certain local features and overfit when only three training samples are available. For a more intuitive comparison, we compare our method individually with MSOCL [22] and ST-FSOD [23]. As illustrated in Figure 2a, in Split1 the novel-class mAP50 of our method is on average 1.1 points higher than that of the second-best method, with a gain of up to 1.3 points in the 5-shot setting. Compared with our baseline MSOCL [22], our method gains at least 1.1 mAP50 points, and the gain reaches 4.5 points in the 20-shot setting. In relative terms, as shown in Figure 2b, our approach improves on the second-best method by at least 2.7% and by up to 8.4%. Relative to the baseline, the improvement is at least 6.3% and reaches 15.4% in the 20-shot setting. In Split2, our method surpasses the second-best method by 0.4 mAP50 points on average and outperforms the baseline by 3.1 points on average. Moreover, it exceeds the second-best method in all settings except 3-shot and achieves an 11.8% improvement over the baseline.

Table 2.

Mean average precision (mAP) of different methods on the DIOR dataset, presented in percentage terms. The reported accuracy of each model is the mAP50 (%) for the novel classes obtained over five training sessions with random sampling. Bold values indicate the best result under each setting.

Figure 2.

(a) Comparison of the novel-class mAP50 of PSGFE, MSOCL [22], and ST-FSOD [23] on the DIOR dataset. (b) Variation in PSGFE relative to MSOCL [22] and ST-FSOD [23] on the DIOR dataset.

The results demonstrate that leveraging PSGM to mine potential foreground instances from complex backgrounds and generate high-quality pseudo-samples, combined with enhancing ROI features through FEM, is effective. PSGM mitigates the confusion between background features and foreground features, while FEM alleviates the confusion among features of different foreground instance classes. This validates the effectiveness of PSGFE in addressing the FSOD problem in remote sensing imagery.

4.4. Quantitative Results on RSOD Datasets

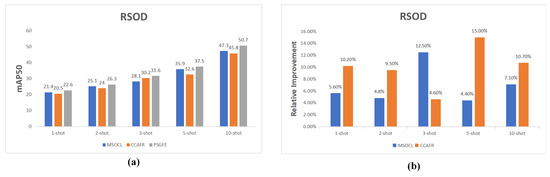

To further substantiate the performance of PSGFE, additional experiments are carried out on the RSOD dataset. We compare our model with classic transfer learning algorithms such as TFA [31], FSCE [21], and CCAFR [51], as well as classic meta-learning algorithms like Meta-RCNN [28] and SAAN [38]. In particular, CCAFR [51] and MSOCL [22] are the two best-performing algorithms on the RSOD dataset. Table 3 shows the precision of several FSOD methods under the mAP50 evaluation criteria. Our results achieve the best performance across all scenarios.

Table 3.

Mean average precision (mAP) of different methods on the RSOD dataset, presented in percentage terms. The reported accuracy of each model is the mAP50 (%) for the novel class obtained over five training sessions with random sampling. Bold values indicate the best result under each setting.

As shown in Table 3, our method achieves the best performance in all settings. Its mAP50 is at least 1.2 points higher than that of the second-best method, with the largest gain of 3.4 points in the 10-shot setting. For a more intuitive comparison, we compare PSGFE individually with the two next-best methods, MSOCL [22] and CCAFR [51], as illustrated in Figure 3. Compared with CCAFR [51], PSGFE improves by more than 9% in all settings except 3-shot, with the largest improvement of 15.0% in the 5-shot setting. Compared with MSOCL [22], PSGFE improves by more than 4% in all settings. Overall, PSGFE improves markedly on the next-best algorithms, particularly in the 5-shot and 10-shot settings, where it outperforms them by at least 4%. This indicates that PSGFE generalizes well and remains effective on the RSOD dataset.

Figure 3.

(a) Comparison of the novel-class mAP50 of PSGFE, MSOCL [22], and CCAFR [51] on the RSOD dataset. (b) Variation in PSGFE relative to MSOCL [22] and CCAFR [51] on the RSOD dataset.

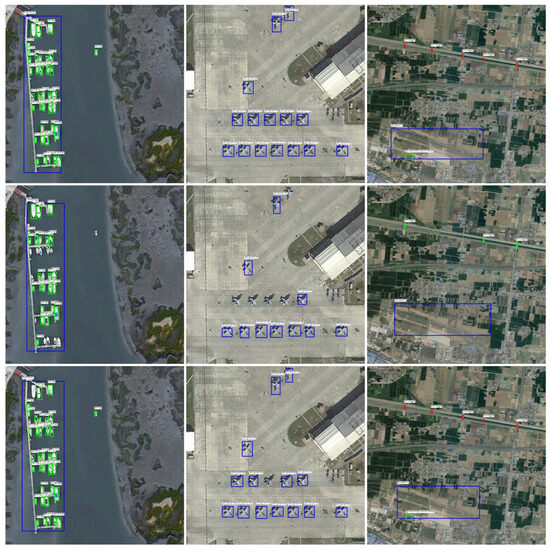

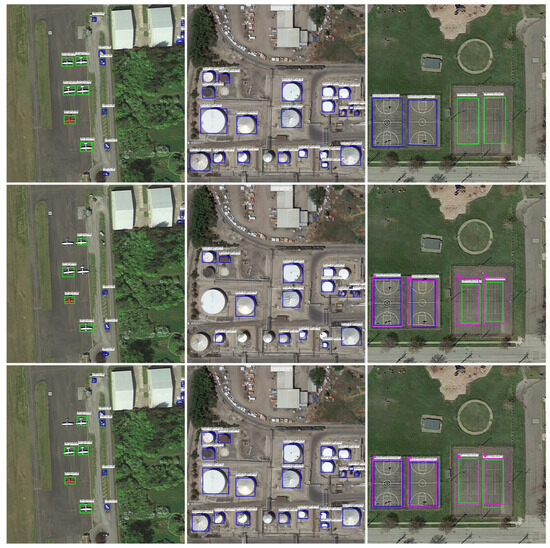

4.5. Qualitative Results

We visualize the novel-class detection results of our method and the baseline on the DIOR dataset under the Split1 20-shot and Split2 20-shot settings, as depicted in Figure 4 and Figure 5. Compared with the baseline, our method exhibits superior detection performance. In images featuring many dense, small object instances, as in columns 1–2 of Figure 4 and Figure 5, the baseline misses more objects than PSGFE. In column 1 of Figure 4, the baseline fails to detect isolated ships, some airplanes, and the small bridge over the river. In Figure 5, it fails to detect airplanes, vehicles, and storage tanks. In contrast, our method detects these instances, resulting in a lower miss rate. The reason is that during the few-shot fine-tuning phase only a few instances per class are annotated, so most instances in such dense images carry no annotations. The baseline therefore treats these potential instances as negative samples during contrastive learning, which confuses foreground and background features. Our PSGM recalls these instances, generates pseudo-samples by refining the predictions, and excludes these pseudo-samples from the negative pairs in contrastive learning, thereby improving the detection rate.
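To make the effect of removing pseudo-samples from the negative pairs concrete, the sketch below uses a generic supervised contrastive loss on toy 2-D features. It is an illustration under stated assumptions, not the loss used in PSGFE: the function `contrastive_loss`, the temperature `tau=0.2`, the feature values, and the choice to drop flagged samples from every pair (as anchors, positives, and negatives) are all hypothetical.

```python
import math


def _unit(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def _sim(u, v):
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(feats, labels, is_pseudo=None, tau=0.2):
    """Mean supervised contrastive loss over all same-label pairs.

    Samples flagged in is_pseudo are left out of every pair, so they can
    no longer act as negatives for the remaining anchors.
    """
    n = len(feats)
    if is_pseudo is None:
        is_pseudo = [False] * n
    z = [_unit(f) for f in feats]
    keep = [i for i in range(n) if not is_pseudo[i]]
    total, pairs = 0.0, 0
    for i in keep:
        others = [k for k in keep if k != i]
        denom = sum(math.exp(_sim(z[i], z[k]) / tau) for k in others)
        for j in others:
            if labels[j] == labels[i]:
                total -= math.log(math.exp(_sim(z[i], z[j]) / tau) / denom)
                pairs += 1
    return total / pairs if pairs else 0.0


# Toy features (made up): the last proposal looks like an airplane but is
# unannotated, so its assigned label is "background".
feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.95, 0.05]]
labels = ["airplane", "airplane", "background", "background", "background"]
flags = [False, False, False, False, True]

plain = contrastive_loss(feats, labels)                    # look-alike used as a negative
masked = contrastive_loss(feats, labels, is_pseudo=flags)  # look-alike excluded
print(plain > masked)
```

In this toy case the unflagged look-alike sits next to the airplane features, so treating it as background inflates the loss and pushes airplane features away from a true airplane; flagging it removes that pressure.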

Figure 4.

Visualizations of detection results for novel classes on the DIOR dataset with Split1 under the 20-shot setting. In each column, the first row displays the ground truth labels, the second row presents the baseline results, and the third row shows the results of PSGFE.

Figure 5.

Visualizations of detection results for novel classes on the DIOR dataset with Split2 under the 20-shot setting. In each column, the first row displays the ground truth labels, the second row presents the baseline results, and the third row shows the results of PSGFE.

In the detection of object instances with high similarity but different scales, as shown in column 3 in Figure 5, both PSGFE and the baseline can detect tennis courts and basketball courts. However, PSGFE yields detection results that are closer to the magenta ground truth (GT) bounding box than those of the baseline. This indicates that our proposed FEM can better distinguish the features of similar objects across different classes and extract effective features from instances of the same class at different scales, thus improving the accuracy of the bounding box.

However, our method still has some limitations. As shown in the first column of Figure 5, some airplanes are missed in our detection results. In Split2, the airplane is a base class. The possible reason for the missed detections is that our method experienced catastrophic forgetting [52] of the features of the base classes while learning the features of novel classes during fine-tuning, which led to a decrease in the detection performance of the base classes and consequently caused the misses. In future research, we will explore effective mechanisms to retain base class knowledge and reduce forgetting of base classes.

4.6. Ablation Experiment

To investigate the contributions of the proposed PSGM and FEM to the performance of the model, we conducted ablation studies on the DIOR dataset with Split1 in the 10-shot and 20-shot settings. We added each of the two modules to the baseline and quantified its individual impact on model performance. The results are presented in Table 4. Incorporating PSGM alone increases the mAP50 of the model in Split1 by 1.4 and 3.6 in the 10-shot and 20-shot settings, respectively, corresponding to relative improvements of 5.6% and 12.3%. This is because PSGM can effectively capture potential foreground information within the background, thereby helping the model distinguish between background and foreground features. Adding FEM alone increases mAP50 by 0.7 and 2.7 in the 10-shot and 20-shot settings, respectively, corresponding to relative improvements of 2.8% and 9.2%. This demonstrates that our proposed FEM can effectively enhance the features of the ROI, allowing it to better represent the multi-scale information of the proposals. With both PSGM and FEM incorporated, PSGFE achieves the best performance, significantly outperforming the model with only PSGM or only FEM. The ablation experiments demonstrate that PSGM and FEM, applied either individually or in combination, enhance model performance, thus validating the effectiveness of our proposed methods.
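The absolute gains and percentage improvements quoted in this paragraph are linked by ratio = gain / baseline. The short check below back-calculates the baseline mAP50 implied by each pair of figures. The function names are illustrative, and the implied baselines are arithmetic consequences of the rounded numbers in the text, not values read from Table 4.

```python
def relative_gain(baseline, gain):
    """Relative improvement in percent for an absolute gain over a baseline."""
    return 100.0 * gain / baseline


def implied_baseline(gain, ratio_percent):
    """Baseline value implied by an absolute gain and its relative improvement."""
    return gain / (ratio_percent / 100.0)


# (module, shots) -> (absolute mAP50 gain, relative improvement in %), as quoted in the text
reported = {
    ("PSGM", 10): (1.4, 5.6),
    ("PSGM", 20): (3.6, 12.3),
    ("FEM", 10): (0.7, 2.8),
    ("FEM", 20): (2.7, 9.2),
}

for (module, shots), (gain, pct) in reported.items():
    base = implied_baseline(gain, pct)
    print(f"{module} {shots}-shot: implied baseline mAP50 = {base:.1f}")
```

Both modules imply the same baseline within rounding for a given shot count, which is what one would expect if the gains and percentages were computed against a single baseline per setting.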

Table 4.

Ablation experiment of the main components of PSGFE. The bold parts in the tables indicate the best results under each setting.

During the few-shot fine-tuning phase, FEM can be placed in three possible positions. To verify the impact of its placement on model performance, we conducted additional ablation experiments on the position of FEM on the DIOR dataset with Split1 in the 20-shot setting, as shown in Table 5. Building on Case 1, adding FEM to Branch 2 or to Branch 3 yields performance improvements of 2.9% and 2.0%, respectively. In Case 4, where FEM is added to all branches, mAP50 increases by 1.2, a performance improvement of 3.9%. These experiments demonstrate the necessity and effectiveness of improving the ROI features of all modules through FEM.

Table 5.

Ablation experiment of the FEM position. The bold parts in the tables indicate the best results under each setting.

5. Conclusions

To tackle the problem of feature confusion, which encompasses both the confusion between foreground features and background features and the confusion between foreground features of different classes, in optical remote sensing few-shot object detection (FSOD), we propose a new two-stage FSOD framework based on transfer learning via pseudo-sample generation and feature enhancement called PSGFE, including a pseudo-sample generation module (PSGM) and feature enhancement module (FEM). PSGM mines potential foreground instances from unlabeled backgrounds and generates pseudo-samples, addressing the confusion between background features and foreground features. FEM enhances ROI features, addressing the confusion among foreground features of different classes. The experiments confirmed the efficacy of PSGM and FEM in improving FSOD performance in optical remote sensing images.

Despite the effectiveness of our method, it has certain limitations. One significant limitation is the degradation in base class performance during fine-tuning, as the model tends to forget previously learned knowledge. Additionally, challenges arise in detecting objects with arbitrary orientations, which can reduce localization performance and classification accuracy. Furthermore, our method increases computational overhead. In future research, we will focus on addressing these three areas: (1) mitigating the forgetting issue by developing a more robust feature embedding that preserves base-class knowledge while accommodating novel classes, (2) exploring rotation-invariant feature extraction techniques to enhance the detection of objects with arbitrary orientations in remote sensing applications, and (3) investigating module lightening to reduce time complexity.

Author Contributions

Conceptualization, Z.H., D.C. and C.Z.; methodology, Z.H., D.C. and C.Z.; software, Z.H.; validation, D.C. and C.Z.; formal analysis, Z.H., D.C. and C.Z.; writing—original draft, Z.H.; writing—review & editing, Z.H., D.C. and C.Z.; visualization, Z.H.; supervision, D.C.; funding acquisition, D.C and C.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Natural Science Foundation of Guangxi Province, China (2025GXNSFAA069540) and the Research Capacity Improvement Project of Young Researcher (No. 2024KY0017).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found here: the RSOD dataset at https://github.com/RSIA-LIESMARS-WHU/RSOD-Dataset- (accessed on 1 March 2025) and the DIOR dataset at https://github.com/JiekaiLab/dior (accessed on 1 March 2025).

Acknowledgments

The authors thank all anonymous reviewers for their constructive comments and also thank all editors for their careful proofreading.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Chen, K.; Li, W.; Lei, S.; Chen, J.; Jiang, X.; Zou, Z.; Shi, Z. Continuous remote sensing image super-resolution based on context interaction in implicit function space. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

2. Liu, N.; Celik, T.; Li, H.C. MSNet: A multiple supervision network for remote sensing scene classification. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

3. Anbarasan, M.; Muthu, B.; Sivaparthipan, C.B.; Sundarasekar, R.; Kadry, S.; Krishnamoorthy, S.; Dasel, A.A. Detection of flood disaster system based on IoT, big data and convolutional deep neural network. Comput. Commun. 2020, 150, 150–157.

4. Li, W.; Chen, Y.; Hu, K.; Zhu, J. Oriented reppoints for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1829–1838.

5. Zhang, J.; Xing, M.; Sun, G.C.; Li, N. Oriented Gaussian function-based box boundary-aware vectors for oriented ship detection in multiresolution SAR imagery. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

6. Zhang, S.; Wang, L.; Murray, N.; Koniusz, P. Kernelized few-shot object detection with efficient integral aggregation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19207–19216.

7. Yang, H.; Cai, S.; Sheng, H.; Deng, B.; Huang, J.; Hua, X.S.; Zhang, Y. Balanced and hierarchical relation learning for one-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7591–7600.

8. Huang, P.; Han, J.; Cheng, D.; Zhang, D. Robust region feature synthesizer for zero-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7622–7631.

9. Wu, S.; Pei, W.; Mei, D.; Chen, F.; Tian, J.; Lu, G. Multi-faceted distillation of base-novel commonality for few-shot object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 578–594.

10. Chen, H.; Wang, Y.; Wang, G.; Qiao, Y. LSTD: A low-shot transfer detector for object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

11. Karlinsky, L.; Shtok, J.; Harary, S.; Schwartz, E.; Aides, A.; Feris, R.; Bronstein, A.M. RepMet: Representative-based metric learning for classification and few-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5197–5206.

12. Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

13. Lu, X.; Sun, X.; Diao, W.; Mao, Y.; Li, J.; Zhang, Y.; Fu, K. Few-shot object detection in aerial imagery guided by text-modal knowledge. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–19.

14. Cheng, G.; Lang, C.; Wu, M.; Xie, X.; Yao, X.; Han, J. Feature enhancement network for object detection in optical remote sensing images. J. Remote Sens. 2021, 2021.

15. Liu, Y.; Pan, Z.; Yang, J.; Zhou, P.; Zhang, B. Multi-Modal Prototypes for Few-Shot Object Detection in Remote Sensing Images. Remote Sens. 2024, 16, 4693.

16. Zhou, Z.; Chen, J.; Huang, Z.; Wan, H.; Chang, P.; Li, Z.; Xing, M. FSODS: A lightweight metalearning method for few-shot object detection on SAR images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

17. Fan, Q.; Tang, C.K.; Tai, Y.W. Few-shot object detection with model calibration. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 720–739.

18. Huang, X.; He, B.; Tong, M.; Wang, D.; He, C. Few-shot object detection on remote sensing images via shared attention module and balanced fine-tuning strategy. Remote Sens. 2021, 13, 3816.

19. Gao, H.; Wu, S.; Wang, Y.; Kim, J.Y.; Xu, Y. Fsod4rsi: Few-shot object detection for remote sensing images via features aggregation and scale attention. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 4784–4796.

20. Li, J.; Tian, Y.; Xu, Y.; Hu, X.; Zhang, Z.; Wang, H.; Xiao, Y. MM-RCNN: Toward few-shot object detection in remote sensing images with meta memory. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

21. Sun, B.; Li, B.; Cai, S.; Yuan, Y.; Zhang, C. Fsce: Few-shot object detection via contrastive proposal encoding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 7352–7362.

22. Chen, J.; Qin, D.; Hou, D.; Zhang, J.; Deng, M.; Sun, G. Multiscale object contrastive learning-derived few-shot object detection in VHR imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

23. Zhang, F.; Shi, Y.; Xiong, Z.; Zhu, X.X. Few-shot object detection in remote sensing: Lifting the curse of incompletely annotated novel objects. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

24. Serre, T.; Wolf, L.; Bileschi, S.; Riesenhuber, M.; Poggio, T. Robust object recognition with cortex-like mechanisms. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 411–426.

25. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

26. Li, Y.; Hou, Q.; Zheng, Z.; Cheng, M.M.; Yang, J.; Li, X. Large selective kernel network for remote sensing object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Vancouver, Canada, 18–22 June 2023; pp. 16794–16805.

27. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

28. Yan, X.; Chen, Z.; Xu, A.; Wang, X.; Liang, X.; Lin, L. Meta R-CNN: Towards general solver for instance-level low-shot learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 16–20 June 2019; pp. 9577–9586.

29. Lu, X.; Diao, W.; Mao, Y.; Li, J.; Wang, P.; Sun, X.; Fu, K. Breaking immutable: Information-coupled prototype elaboration for few-shot object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington DC, USA, 7–14 February 2023; Volume 37, pp. 1844–1852.

30. Wu, J.; Liu, S.; Huang, D.; Wang, Y. Multi-scale positive sample refinement for few-shot object detection. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XVI. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 456–472.

31. Wang, X.; Huang, T.E.; Gonzalez, J.; Yu, F. Frustratingly simple few-shot object detection. In Proceedings of the 37th International Conference on Machine Learning (ICML), Online, 13–18 June 2020; pp. 9919–9928.

32. Wu, A.; Han, Y.; Zhu, L.; Yang, Y. Universal-prototype enhancing for few-shot object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 19–25 June 2021; pp. 9567–9576.

33. Qiao, L.; Zhao, Y.; Li, Z.; Qiu, X.; Wu, J.; Zhang, C. DefRCN: Decoupled Faster R-CNN for few-shot object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 19–25 June 2021; pp. 8681–8690.

34. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

35. Lin, S.; Wang, K.; Zeng, X.; Zhao, R. Explore the power of synthetic data on few-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 638–647.

36. Nguyen, K.; Todorovic, S. A self-supervised GAN for unsupervised few-shot object recognition. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 3225–3231.

37. Li, X.; Deng, J.; Fang, Y. Few-shot object detection on remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

38. Xiao, Z.; Qi, J.; Xue, W.; Zhong, P. Few-shot object detection with self-adaptive attention network for remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4854–4865.

39. Cheng, G.; Yan, B.; Shi, P.; Li, K.; Yao, X.; Guo, L.; Han, J. Prototype-CNN for few-shot object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–10.

40. Xiao, Y.; Lepetit, V.; Marlet, R. Few-shot object detection and viewpoint estimation for objects in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3090–3106.

41. Zhou, Y.; Hu, H.; Zhao, J.; Zhu, H.; Yao, R.; Du, W.L. Few-shot object detection via context-aware aggregation for remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

42. Li, R.; Zeng, Y.; Wu, J.; Wang, Y.; Zhang, X. Few-shot object detection of remote sensing image via calibration. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

43. Ma, S.; Hou, B.; Wu, Z.; Li, Z.; Guo, X.; Ren, B.; Jiao, L. Automatic aug-aware contrastive proposal encoding for few-shot object detection of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–11.

44. Wei, C.; Sohn, K.; Mellina, C.; Yuille, A.; Yang, F. Crest: A class-rebalancing self-training framework for imbalanced semi-supervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 10857–10866.

45. Xie, Q.; Luong, M.T.; Hovy, E.; Le, Q.V. Self-training with noisy student improves imagenet classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 16–20 June 2020; pp. 10687–10698.

46. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 11976–11986.

47. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

48. Araslanov, N.; Roth, S. Self-supervised augmentation consistency for adapting semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 15384–15394.

49. Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate object localization in remote sensing images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2486–2498.

50. Zhang, T.; Zhang, X.; Zhu, P.; Jia, X.; Tang, X.; Jiao, L. Generalized few-shot object detection in remote sensing images. ISPRS J. Photogramm. Remote Sens. 2023, 195, 353–364.

51. Miao, W.; Zhao, Z.; Geng, J.; Jiang, W. Few-shot object detection based on contrastive class-attention feature reweighting for remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 2800–2814.

52. Shmelkov, K.; Schmid, C.; Alahari, K. Incremental learning of object detectors without catastrophic forgetting. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3400–3409.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).