Mitigating Conceptual Learning Gaps in Mixed-Ability Classrooms: A Learning Analytics-Based Evaluation of AI-Driven Adaptive Feedback for Struggling Learners

Abstract

Featured Application

1. Introduction

1.1. Background and Context

1.2. Research Problem

1.3. Research Objectives

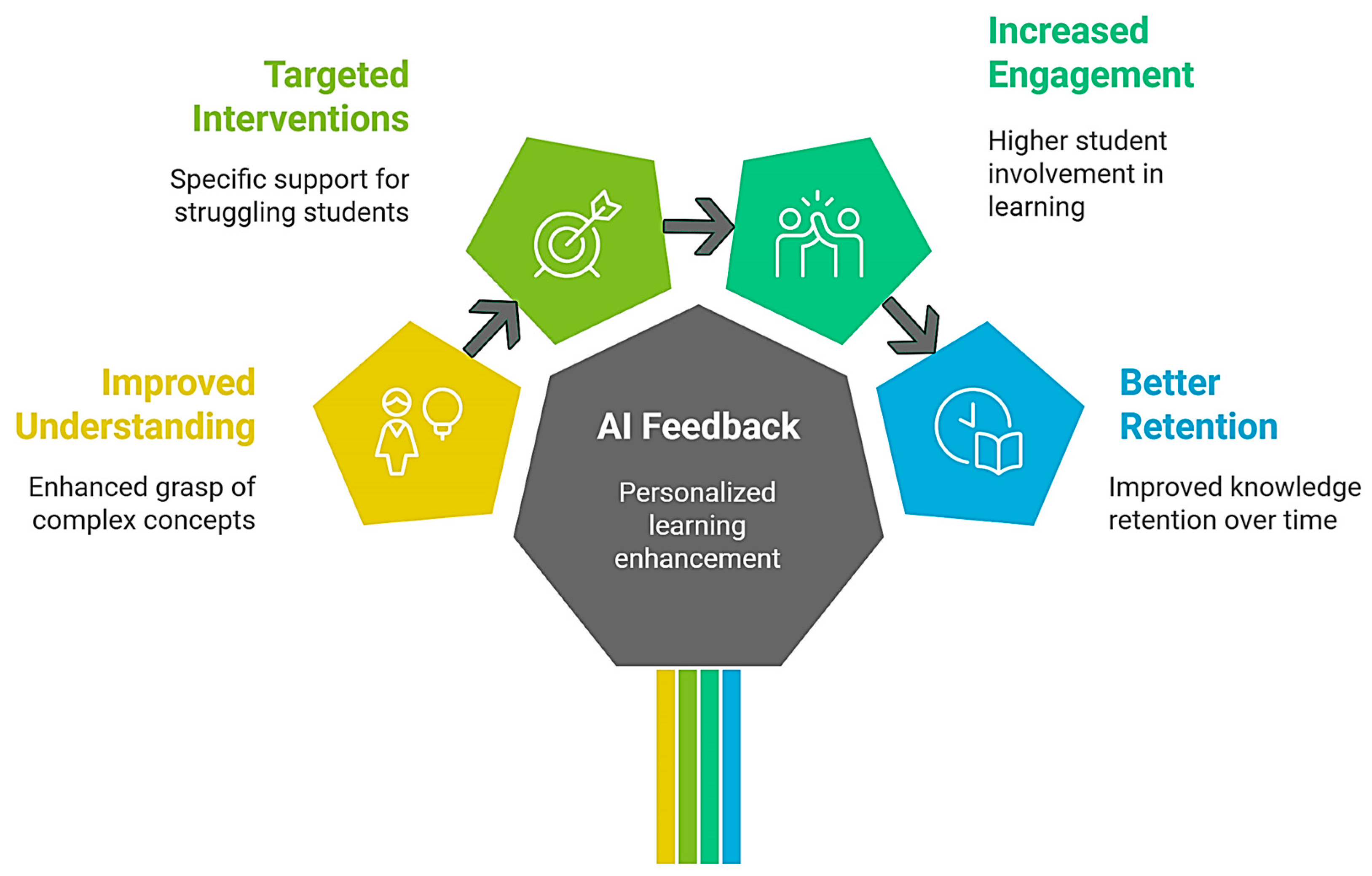

- Evaluate the effectiveness of AI-driven adaptive feedback in improving conceptual mastery among struggling learners.

- Assess the impact of AI feedback on student engagement and cognitive overload in smart learning environments.

- Analyze retention rates and long-term learning outcomes associated with AI-generated feedback interventions.

- Examine variations in feedback effectiveness across different subjects (STEM vs. language education) and student demographics.

1.4. Research Questions

- To what extent does AI-driven adaptive feedback improve conceptual mastery in mixed-ability classrooms?

- How does adaptive feedback influence student engagement and reduce cognitive overload in smart learning environments?

- What impact does AI-driven adaptive feedback have on long-term knowledge retention and course completion rates?

- Are there significant differences in feedback effectiveness based on subject domain, prior knowledge levels, and student demographics?

1.5. Significance of the Study

- Educators seeking data-driven strategies for improving student engagement and performance.

- EdTech developers designing adaptive learning platforms with AI-driven interventions.

- Policymakers aiming to implement AI-based solutions in higher education.

2. Literature Review

2.1. AI-Driven Adaptive Feedback in Education

2.2. Learning Analytics in Mixed-Ability Classrooms

2.3. Impact on Student Engagement and Retention

2.4. Challenges and Considerations

3. Methodology

3.1. Research Design

- Experimental Group (n = 350): Received AI-driven adaptive feedback.

- Control Group (n = 350): Received traditional instructor-led feedback.

3.2. Participants

3.3. AI-Driven Adaptive Feedback System

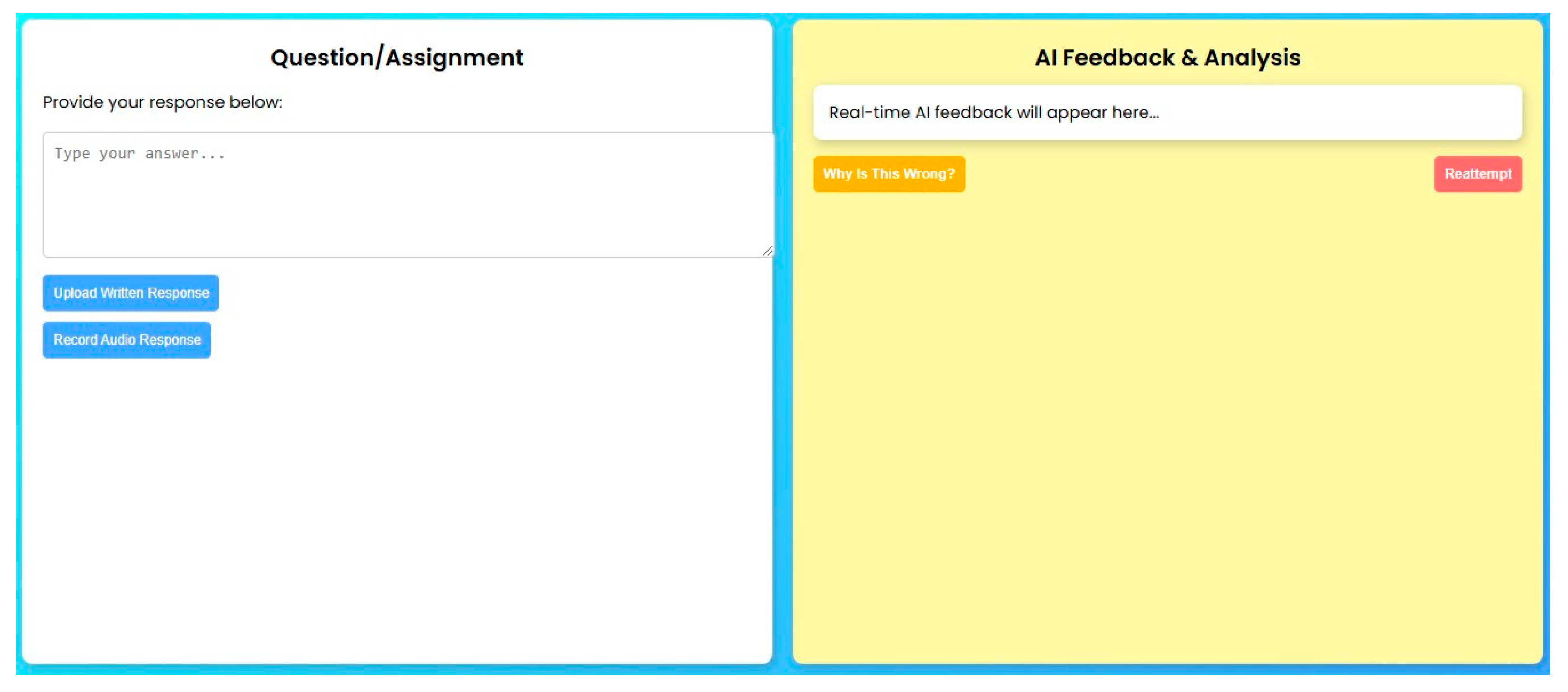

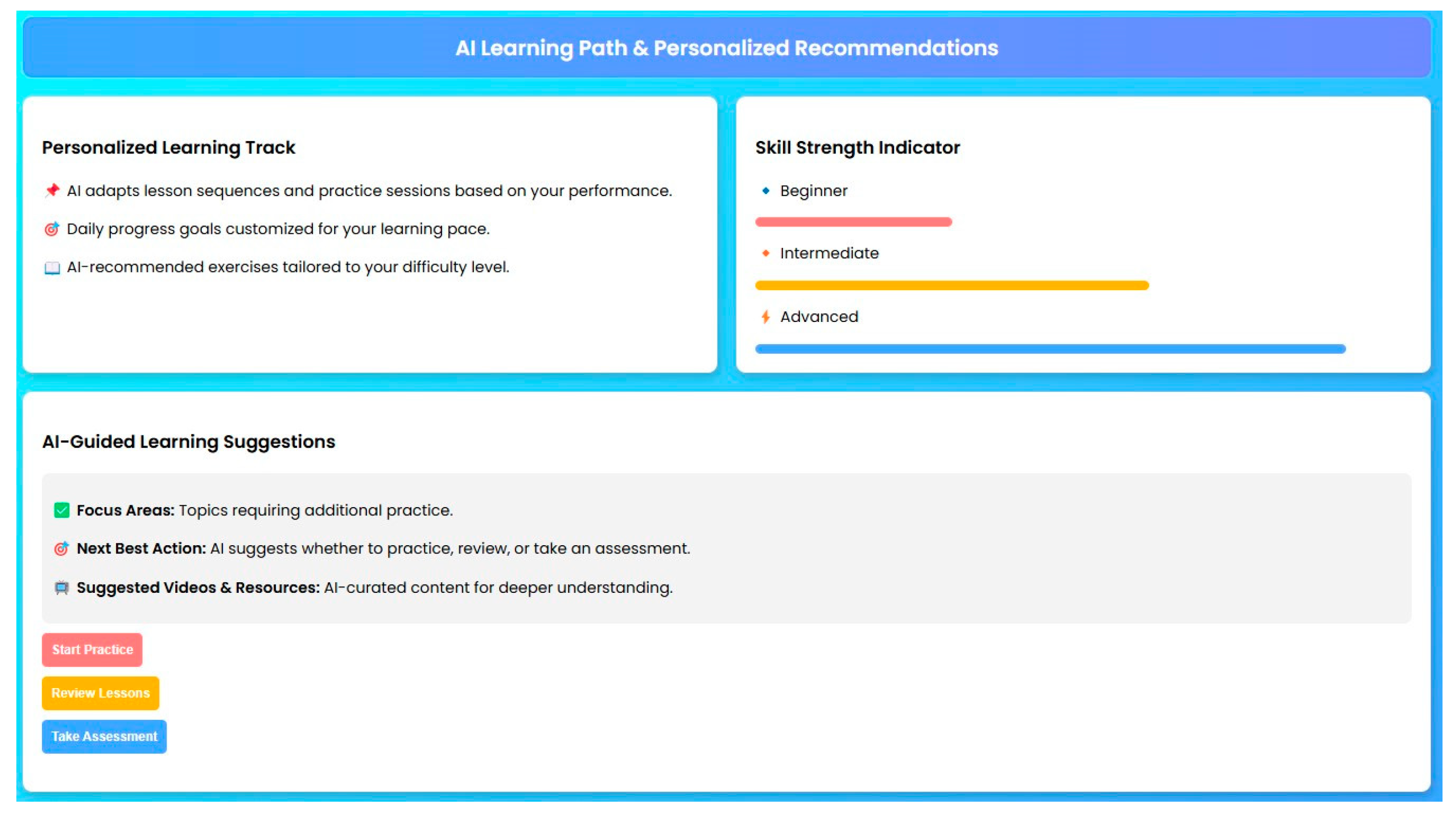

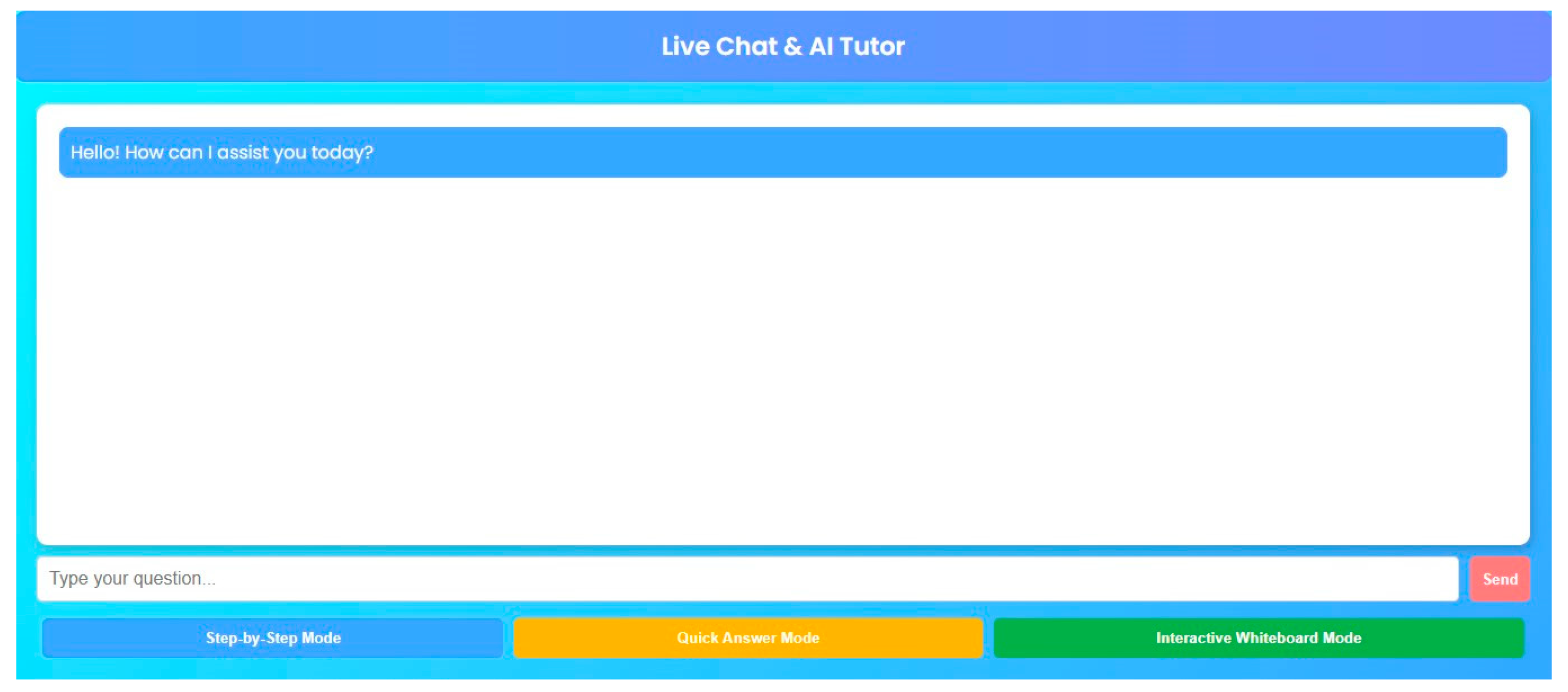

3.3.1. System Architecture and Interface Design

- AI-generated feedback score.

- Performance score based on correctness.

- Response time per question.

- Engagement level (frequency of AI interactions).

- Learning weights optimized via machine learning.
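A minimal sketch of how the quantities listed above might combine, assuming a weighted linear form. The symbols $F$ (feedback score), $P$ (performance score), $T$ (response time), $E$ (engagement level), and $w_1, w_2, w_3$ (learned weights) are illustrative assumptions, as is the linear form itself; neither is confirmed as the authors' notation or model.

```latex
% Assumed illustrative form: a weighted linear combination of the three inputs.
% The sign and scaling of the response-time term are not specified in the text.
F = w_1 P + w_2 T + w_3 E
```

Under this reading, the weights would be fitted so that $F$ tracks the level of support a learner needs. Whether longer response times raise or lower the score depends on the sign of $w_2$, which the list does not state.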

3.3.2. Implementation Using OpenAI API

3.4. Data Collection Methods

3.4.1. Participant Selection and Grouping

3.4.2. Data Sources and Collection Tools

- System-Generated Learning Logs

- Pre- and Post-Study Assessments

- Student Engagement and Feedback Surveys

- Instructor Observations and Reports

3.4.3. Ethical Consideration, Data Privacy and Data Validation

- Performance data were cross-validated between AI system logs and instructor evaluations to maintain data accuracy.

- A random audit of feedback logs verified that AI-suggested actions complied with expert standards for educational practice.

- Surveys repeated identical questions at different times to check the consistency of self-reported responses.
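The validation checks above can be sketched as follows. This is a hedged illustration, not the study's pipeline: the function names, the 10-point tolerance, and the sample scores are all hypothetical, and Pearson correlation is only one reasonable way to quantify agreement between two score sources.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / sqrt(sxx * syy)


def flag_discrepancies(ai_scores, instructor_scores, tolerance=10.0):
    """Indices where the two score sources differ by more than `tolerance` points."""
    return [
        i
        for i, (a, b) in enumerate(zip(ai_scores, instructor_scores))
        if abs(a - b) > tolerance
    ]


# Hypothetical scores for five students (not data from the study).
ai_log = [62.0, 75.0, 48.0, 90.0, 55.0]
instructor = [60.0, 78.0, 65.0, 88.0, 57.0]

agreement = pearson_r(ai_log, instructor)           # overall agreement
to_review = flag_discrepancies(ai_log, instructor)  # records needing a manual check
```

The same `pearson_r` helper could be applied to the repeated survey items, pairing each respondent's first and second answer to the same question as a simple test-retest consistency check.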

3.5. Data Analysis and Statistical Methods

3.5.1. Descriptive Statistics and Data Normalization

- Raw score.

- Mean.

- Standard deviation.

- Mean scores for pre- and post-tests.

- Pooled standard deviation.

- Sample sizes.

- Cognitive load score.

- Weight assigned to each factor (mental demand, physical demand, temporal demand, effort, frustration, performance).

- Raw rating of each factor.

- Retention rate.

- AI feedback score.

- Learning time per session.

- Engagement frequency.

- Regression coefficients.
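The first three groups of definitions above correspond to standard computations: z-score normalization, a pooled-standard-deviation effect size (Cohen's d), and a weighted cognitive load score over the six listed factors. The sketch below shows the textbook forms of each. The function names are hypothetical, and the use of the population standard deviation for z-scores is an assumption, since the text does not say which variant was used.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(raw_scores):
    """Normalize raw scores to zero mean and unit (population) standard deviation."""
    mu = mean(raw_scores)
    sigma = pstdev(raw_scores)  # assumption: population SD, not sample SD
    if sigma == 0:
        raise ValueError("z-scores are undefined when all scores are equal")
    return [(x - mu) / sigma for x in raw_scores]


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two samples."""
    return sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Standardized mean difference (second mean minus first) over the pooled SD."""
    return (mean2 - mean1) / pooled_sd(sd1, n1, sd2, n2)


def weighted_load(weights, ratings):
    """Weighted average of factor ratings; both dicts are keyed by factor name."""
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(weights[f] * ratings[f] for f in weights) / total_weight


# Hypothetical ratings for one student (not data from the study).
weights = {"mental": 5, "physical": 0, "temporal": 3,
           "effort": 4, "frustration": 2, "performance": 1}
ratings = {"mental": 70, "physical": 10, "temporal": 60,
           "effort": 80, "frustration": 50, "performance": 40}
load = weighted_load(weights, ratings)
```

The last group of definitions describes a multiple linear regression of retention rate on AI feedback score, learning time per session, and engagement frequency. It is not sketched here because fitting it requires the per-student data.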

3.5.2. AI-Generated Descriptive Statistics and Data Normalization

- Low Complexity Feedback (basic error identification).

- Moderate Complexity Feedback (hints and guided corrections).

- High Complexity Feedback (adaptive learning path suggestions).

4. Results

4.1. Improvement in Conceptual Mastery

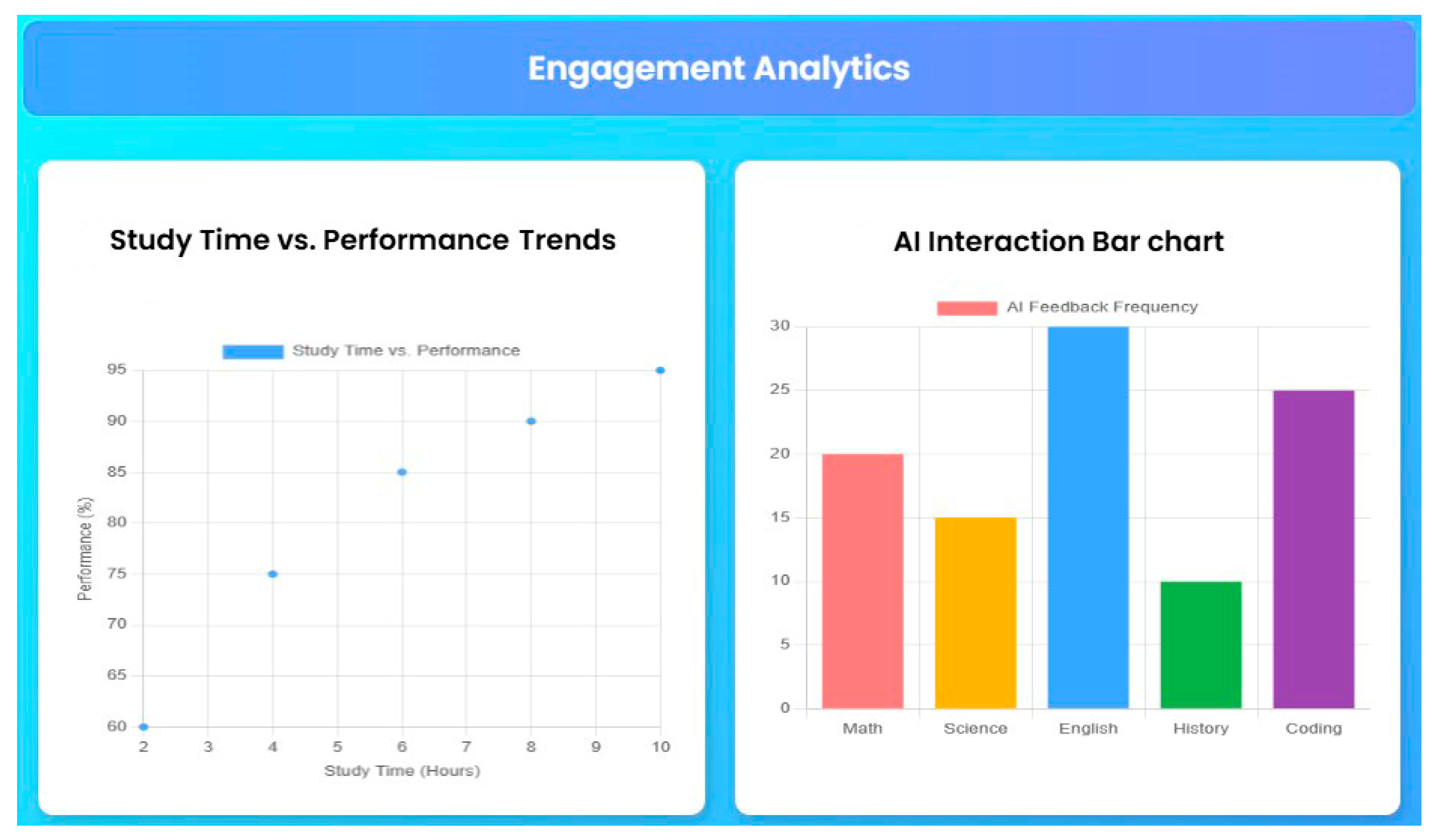

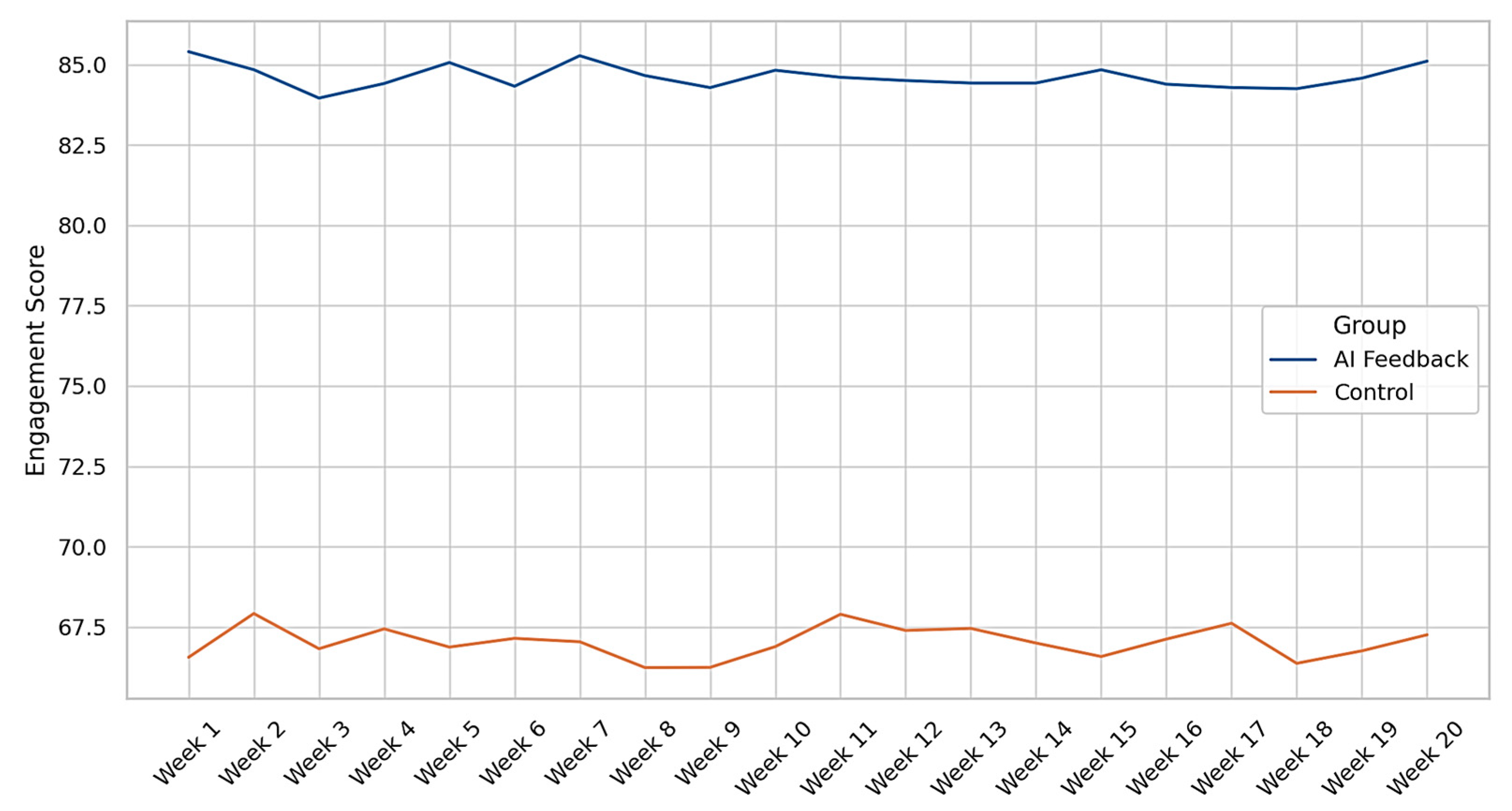

4.2. Student Engagement Trends

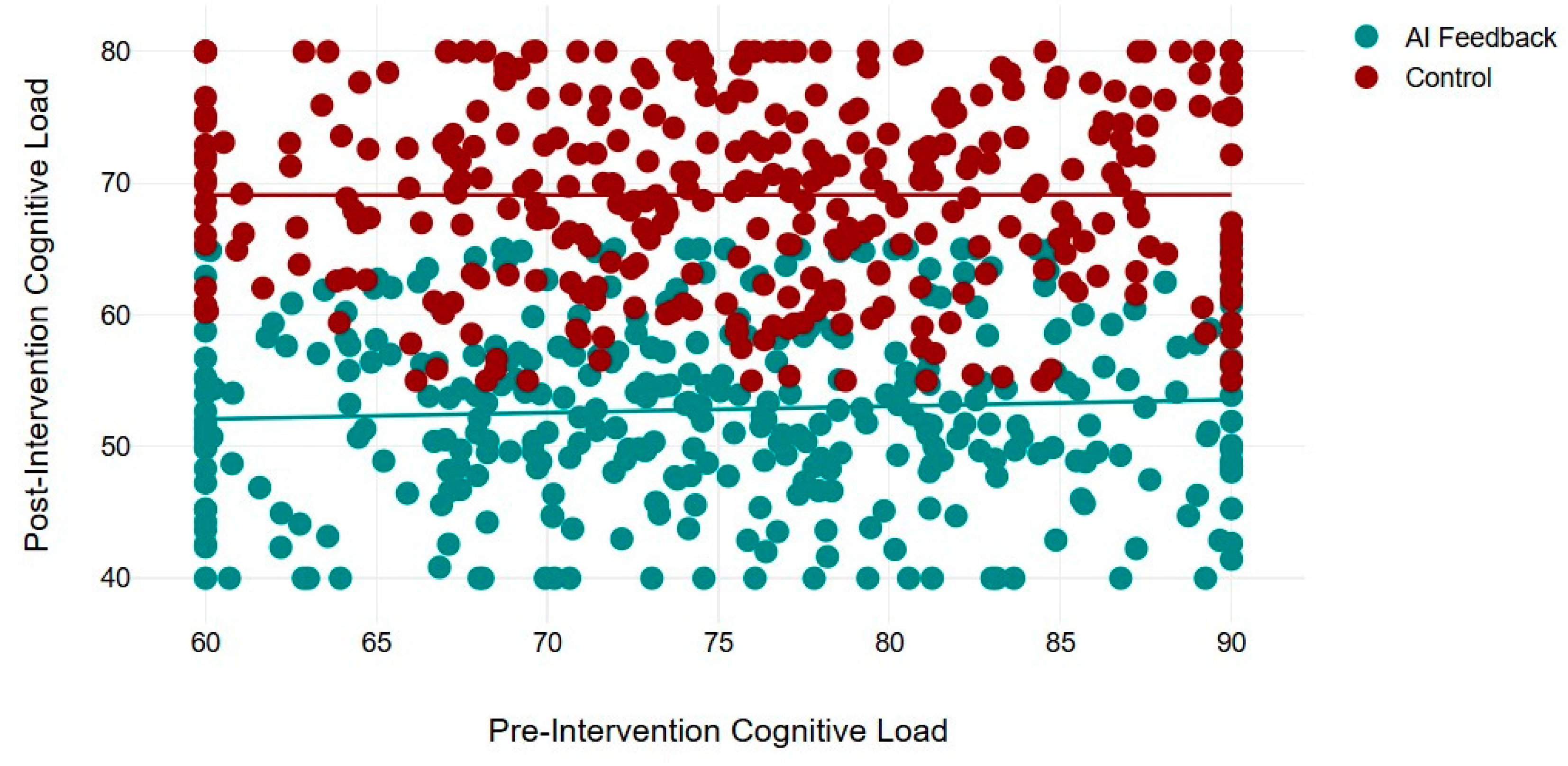

4.3. Reduction in Cognitive Overload

4.4. Knowledge Retention Rate Improvements

4.5. Variations in Feedback Effectiveness Across Subjects

5. Discussion

5.1. Adaptations in Feedback Efficiency

5.2. Enhancement of Conceptual Mastery

5.3. Reduction in Cognitive Load

5.4. Improved Retention Rates

5.5. Discipline-Specific Variations

5.6. Implications for Practice

5.7. Limitations and Future Research

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education—Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39.

2. Baker, R.S.; Inventado, P.S. Educational data mining and learning analytics. In Learning Analytics; Springer: New York, NY, USA, 2014; pp. 61–75.

3. Mane, P.A.; Jagtap, D.S. AI-Enhanced educational platform for personalized learning paths, automated grading, and real-time feedback. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–7.

4. Chigbu, B.I.; Umejesi, I.; Makapela, S.L. Adaptive intelligence revolutionizing learning and sustainability in higher education. In Implementing Interactive Learning Strategies in Higher Education; IGI Global: Hershey, PA, USA, 2024; pp. 151–176.

5. Salameh, W.A.K. Exploring the impact of AI-driven real-time feedback systems on learner engagement and adaptive content delivery in education. Int. J. Sci. Res. Arch. 2025, 14, 98–104.

6. Sotirov, M.; Petrova, V.; Nikolova-Sotirova, D. Personalized gamified education: Feedback mechanisms and adaptive learning paths. In Proceedings of the 2024 8th International Symposium on Innovative Approaches in Smart Technologies (ISAS), Istanbul, Turkey, 6–7 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

7. Sari, H.E.; Tumanggor, B.; Efron, D. Improving educational outcomes through adaptive learning systems using AI. Int. Trans. Artif. Intell. (ITALIC) 2024, 3, 21–31.

8. Mishra, M.S. Revolutionizing education: The impact of AI-enhanced teaching strategies. Int. J. Res. Appl. Sci. Eng. Technol. 2024, 12, 9–32.

9. Inthanon, W.; Wised, S. Tailoring education: A comprehensive review of personalized learning approaches based on individual strengths, needs, skills, and interests. J. Educ. Learn. Rev. 2024, 1, 35–46.

10. Tabassum, S. Managing mixed-ability classrooms. In Futuristic Trends in Social Sciences Volume 3 Book 28; Iterative International Publishers: Chikkamagaluru, India; Selfypage Developers Pvt Ltd.: Karnataka, India, 2024; pp. 34–41.

11. Singh, B.; Singh, G.; Ravesangar, K. Teacher-Focused approach to merging intelligent tutoring systems with adaptive learning environments in AI-driven classrooms. In Advances in Higher Education and Professional Development; IGI Global: Hershey, PA, USA, 2024; pp. 77–100.

12. Sajja, R.; Sermet, Y.; Cikmaz, M.; Cwiertny, D.; Demir, I. Artificial intelligence-enabled intelligent assistant for personalized and adaptive learning in higher education. Information 2024, 15, 596.

13. Ateş, H.; Gündüzalp, C. Proposing a conceptual model for the adoption of artificial intelligence by teachers in STEM education. Interact. Learn. Environ. 2025, 1–27.

14. Zhai, X. Conclusions and Foresight on AI-Based STEM Education. In Uses of Artificial Intelligence in STEM Education; Oxford University Press: Oxford, UK, 2024; pp. 581–588.

15. Tariq, R.; Aponte Babines, B.M.; Ramirez, J.; Alvarez-Icaza, I.; Naseer, F. Computational thinking in STEM education: Current state-of-the-art and future research directions. Front. Comput. Sci. 2025, 6, 1480404.

16. Mohamed, A.M.; Shaaban, T.S.; Bakry, S.H.; Guillén-Gámez, F.D.; Strzelecki, A. Empowering the faculty of education students: Applying AI’s potential for motivating and enhancing learning. Innov. High. Educ. 2024.

17. Godwin-Jones, R.; O’Neill, E.; Ranalli, J. Integrating AI tools into instructed second language acquisition. In Exploring AI in Applied Linguistics; Iowa State University Digital Press: Ames, IA, USA, 2024; pp. 9–23.

18. Fazal, N.; Tahir, M.S.; Chaudhary, M.; Abbasi, M. Effectiveness of AI integration into computer-assisted language learning (CALL) on student writing skills based on gender. Pak. J. Humanit. Soc. Sci. 2024, 12, 224–230.

19. Kenshinbay, T.; Ghorbandordinejad, F. Exploring AI-driven adaptive feedback in the second language writing skills prompt. EIKI J. Eff. Teach. Methods 2024, 2.

20. Chan, S.; Lo, N.; Wong, A. Leveraging generative AI for enhancing university-level English writing: Comparative insights on automated feedback and student engagement. Cogent Educ. 2024, 12, 2440182.

21. Addas, A.; Naseer, F.; Tahir, M.; Khan, M.N. Enhancing higher-education governance through telepresence robots and gamification: Strategies for sustainable practices in the AI-driven digital era. Educ. Sci. 2024, 14, 1324.

22. Hartman, R.J.; Townsend, M.B.; Jackson, M. Educators’ perceptions of technology integration into the classroom: A descriptive case study. J. Res. Innov. Teach. Learn. 2019, 12, 236–249.

23. Pappa, C.I.; Georgiou, D.; Pittich, D. Technology education in primary schools: Addressing teachers’ perceptions, perceived barriers, and needs. Int. J. Technol. Des. Educ. 2023, 24, 485–503.

24. Theodorio, A.O. Examining the support required by educators for successful technology integration in teacher professional development program. Cogent Educ. 2024, 11, 2298607.

25. Patidar, P.; Ngoon, T.; Vogety, N.; Behari, N.; Harrison, C.; Zimmerman, J.; Ogan, A.; Agarwal, Y. Edulyze: Learning analytics for real-world classrooms at scale. J. Learn. Anal. 2024, 11, 297–313.

26. Bhavsar, S.S.; Dixit, D.; Sthul, S.; Mane, P.; Suryawanshi, S.S.; Dhawas, N. Exploring the role of big data analytics in personalizing e-learning experiences. Adv. Nonlinear Var. Inequalities 2024, 27, 571–583.

27. Zavodna, M.; Mrazova, M.; Poruba, J.; Javorcik, T.; Guncaga, J.; Havlaskova, T.; Tran, D.; Kostolanyova, K. Microlearning: Innovative digital learning for various educational contexts and groups. Eur. Conf. E-Learn. 2024, 23, 442–450.

28. Belete, Y. The link between students’ community engagement activities and their academic achievement. Educatione 2024, 3, 61–84.

29. Ajani, O.A. Exploring the alignment of professional development and classroom practices in African contexts: A discursive investigation. J. Integr. Elem. Educ. 2023, 3, 120–136.

30. Nuangchalerm, P. AI-Driven learning analytics in STEM education. Int. J. Res. STEM Educ. 2023, 5, 77–84.

31. Jangili, A.; Ramakrishnan, S. The role of machine learning in enhancing personalized online learning experiences. Int. J. Data Min. Knowl. Manag. Process 2024, 14, 1–17.

32. Vázquez-Parra, J.C.; Tariq, R.; Castillo-Martínez, I.M.; Naseer, F. Perceived competency in complex thinking skills among university community members in Pakistan: Insights across disciplines. Cogent Educ. 2024, 12, 2445366.

33. Naseer, F.; Khan, M.N.; Tahir, M.; Addas, A.; Aejaz, S.M.H. Integrating deep learning techniques for personalized learning pathways in higher education. Heliyon 2024, 10, e32628.

34. Demartini, C.G.; Sciascia, L.; Bosso, A.; Manuri, F. Artificial intelligence bringing improvements to adaptive learning in education: A case study. Sustainability 2024, 16, 1347.

35. Alsolami, A.S. The effectiveness of using artificial intelligence in improving academic skills of school-aged students with mild intellectual disabilities in Saudi Arabia. Res. Dev. Disabil. 2025, 156, 104884.

36. Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033.

37. Kingchang, T.; Chatwattana, P.; Wannapiroon, P. Artificial intelligence chatbot platform: AI chatbot platform for educational recommendations in higher education. Int. J. Inf. Educ. Technol. 2024, 14, 34–41.

38. Hadiprakoso, R.B.; Sihombing, R.P.P. CodeGuardians: A gamified learning for enhancing secure coding practices with AI-driven feedback. Ultim. InfoSys J. Ilmu Sist. Inf. 2024, 15, 105–112.

39. Saraswat, D. AI-driven pedagogies: Enhancing student engagement and learning outcomes in higher education. Int. J. Sci. Res. (IJSR) 2024, 13, 1152–1154.

40. Kushwaha, P.; Namdev, D.; Kushwaha, S.S. SmartLearnHub: AI-Driven Education. Int. J. Res. Appl. Sci. Eng. Technol. 2024, 12, 1396–1401.

41. Henderson, J.; Corry, M. Data literacy training and use for educational professionals. J. Res. Innov. Teach. Learn. 2020. ahead-of-print.

42. Hu, J. The challenge of traditional teaching approach: A study on the path to improve classroom teaching effectiveness based on secondary school students’ psychology. Lect. Notes Educ. Psychol. Public Media 2024, 50, 213–219.

43. Eltahir, M.E.; Mohd Elmagzoub Babiker, F. The influence of artificial intelligence tools on student performance in e-learning environments: Case study. Electron. J. E-Learn. 2024, 22, 91–110.

44. Villegas-Ch, W.; Govea, J.; Revelo-Tapia, S. Improving student retention in institutions of higher education through machine learning: A sustainable approach. Sustainability 2023, 15, 14512.

45. Falebita, O.S.; Kok, P.J. Strategic goals for artificial intelligence integration among STEM academics and undergraduates in African higher education: A systematic review. Discov. Educ. 2024, 3, 151.

46. Hansen, R.; Prilop, C.N.; Alsted Nielsen, T.; Møller, K.L.; Frøhlich Hougaard, R.; Büchert Lindberg, A. The effects of an AI feedback coach on students’ peer feedback quality, composition, and feedback experience. Tidsskr. Læring Og Medier (LOM) 2025, 17.

| Study | Focus | Conceptual Mastery Improvement | Engagement Improvement | Retention Improvement | Comparison to Current Study |
|---|---|---|---|---|---|
| [13] | AI in STEM education | Significant (quantitative not specified) | Not measured | Not measured | Our study quantifies 28% improvement, extends to mixed-ability settings. |
| [12] | Personalized AI feedback | Improved (no % specified) | Increased (qualitative) | Enhanced (qualitative) | We provide specific metrics (28%, 35%, 85%) across diverse disciplines. |
| [34] | Adaptive learning case study | Not specified | Significant (metrics not detailed) | Reduced dropout rates | Our 35% engagement increase and 85% retention rate offer precise, scalable evidence. |
| [16] | AI tutors in higher education | Improved understanding (no %) | Enhanced (qualitative) | Not measured | We add cognitive load reduction (22%) and broader applicability (STEM + Language). |
| Current Study (2025) | AI feedback in mixed-ability classrooms | 28% improvement | 35% increase | 85% retention | Builds on prior work with quantified outcomes, learning analytics, and diverse student needs. |

| Variable | Experimental Group | Control Group |
|---|---|---|
| Feedback Type | AI-Driven Adaptive Feedback | Traditional Instructor Feedback |
| Number of Participants | 350 | 350 |
| Learning Duration | 20 Weeks | 20 Weeks |
| Assessment Type | AI-based adaptive quizzes, real-time feedback | Instructor-led feedback on quizzes and assignments |
| Engagement Metrics | AI-logged interactions, error corrections, adaptive suggestions | Attendance, participation logs |

| Demographic Variable | Experimental Group (n = 350) | Control Group (n = 350) | Total (N = 700) |
|---|---|---|---|
| Gender | 52% Male, 48% Female | 50% Male, 50% Female | 51% Male, 49% Female |
| Age Range (Mean ± SD) | 18–24 (20.8 ± 2.1) | 18–24 (21.2 ± 2.3) | 18–24 (21.0 ± 2.2) |
| Field of Study | 60% STEM, 40% Language | 58% STEM, 42% Language | 59% STEM, 41% Language |
| Prior AI Learning Experience | 42% Yes, 58% No | 40% Yes, 60% No | 41% Yes, 59% No |
| Average GPA (Mean ± SD) | 2.85 ± 0.6 | 2.92 ± 0.7 | 2.89 ± 0.65 |

| Group | Number of Students | Learning Method |
|---|---|---|
| AI-Driven Group | 350 | AI Adaptive Feedback System |
| Control Group | 350 | Traditional Instructor Feedback |

| Assessment Type | Number of Questions | Topics Covered | Score Range |
|---|---|---|---|
| Pre-Study Test | 50 | Baseline Conceptual Knowledge | 0–100% |
| Post-Study Test | 50 | Applied Understanding | 0–100% |

| Survey Question | Scale (1–5) | Data Type |
|---|---|---|
| “How useful was the AI feedback in improving your understanding?” | 1 (Not at all)–5 (Extremely) | Quantitative |
| “Did AI-driven feedback reduce your learning anxiety?” | 1 (Not at all)–5 (Significantly) | Quantitative |
| “Which features of the AI feedback system did you find most helpful?” | Open-ended | Qualitative |

| Group | Pre-Test Mean (SD) | Post-Test Mean (SD) | Improvement (%) |
|---|---|---|---|
| Experimental (AI-Driven Feedback, n = 350) | 52.4 (±12.3) | 80.2 (±10.4) | 28% |
| Control (Instructor Feedback, n = 350) | 51.8 (±11.7) | 65.2 (±9.8) | 14% |
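As a worked check on the improvement column, the sketch below recomputes the gain for each group from the pre- and post-test means in the table. It reports the gain in two ways: as a difference in score points on the 0–100% scale, and relative to the pre-test mean. The variable and function names are hypothetical, and reading the reported percentages as point differences is an interpretation, not something the table states.

```python
# Means copied from the table above: (pre-test mean, post-test mean).
group_means = {
    "experimental": (52.4, 80.2),
    "control": (51.8, 65.2),
}


def gains(pre_mean, post_mean):
    """Return (gain in score points, gain relative to the pre-test mean in %)."""
    points = post_mean - pre_mean
    relative = 100.0 * points / pre_mean
    return points, relative


results = {name: gains(pre, post) for name, (pre, post) in group_means.items()}

# Difference between the two groups' point gains.
extra_gain = results["experimental"][0] - results["control"][0]

for name, (points, relative) in results.items():
    print(f"{name}: +{points:.1f} points, {relative:.1f}% relative to pre-test")
print(f"additional gain with AI feedback: {extra_gain:.1f} points")
```

The point gains are 27.8 and 13.4, which round to the reported 28% and 14%, so the improvement column appears to express percentage-point differences. Relative to the pre-test means, the same gains are proportionally larger.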

| Performance Group | Average Weekly AI Interactions | Engagement Increase (%) |
|---|---|---|
| High Performers (Top 20%) | 18.2 | 35% |
| Moderate Performers (Middle 60%) | 12.4 | 22% |
| Low Performers (Bottom 20%) | 6.3 | 8% |
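Because the three tiers cover 20%, 60%, and 20% of students, a cohort-level figure can be estimated as a share-weighted mean of the tier values. The sketch below is an illustration under assumptions: the names are hypothetical, and a share-weighted mean of percentage increases equals the true cohort-wide increase only if the tiers started from comparable baselines, which the table does not report.

```python
# (share of cohort, average weekly AI interactions, engagement increase in %)
tiers = {
    "high": (0.20, 18.2, 35.0),
    "moderate": (0.60, 12.4, 22.0),
    "low": (0.20, 6.3, 8.0),
}


def weighted_mean(pairs):
    """Weighted mean of (weight, value) pairs."""
    total_weight = sum(weight for weight, _ in pairs)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(weight * value for weight, value in pairs) / total_weight


overall_interactions = weighted_mean([(share, n) for share, n, _ in tiers.values()])
overall_increase = weighted_mean([(share, inc) for share, _, inc in tiers.values()])
```

With the table's values this gives roughly 12.3 weekly interactions and an engagement increase of about 21.8% across the cohort, compared with 35% for the top tier alone.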

| Group | STEM (n = 420) | Language (n = 280) | p-Value |
|---|---|---|---|
| AI Feedback Group | 81.4 (±9.5) | 78.2 (±10.1) | p = 0.028 |
| Instructor Feedback Group | 67.2 (±8.4) | 64.8 (±9.3) | p = 0.036 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Naseer, F.; Khawaja, S. Mitigating Conceptual Learning Gaps in Mixed-Ability Classrooms: A Learning Analytics-Based Evaluation of AI-Driven Adaptive Feedback for Struggling Learners. Appl. Sci. 2025, 15, 4473. https://doi.org/10.3390/app15084473
