Abstract

Frequency spectrum allocation has been a subject of dispute in recent years. Cognitive radio dynamically allocates users to spectrum holes using various sensing techniques. Noise levels and distances between users can significantly impact the efficiency of cognitive radio systems. Designing robust communication systems requires accurate knowledge of these factors. This paper proposes a method for predicting noise levels and distances based on spectrum sensing signals using regression machine learning models. The proposed methods achieved correlation coefficients of over 0.98 and 0.82 for noise and distance predictions, respectively. Accurately estimating these parameters enables adaptive resource allocation, interference mitigation, and improved spectrum efficiency, ultimately enhancing the performance and reliability of cognitive radio networks.

1. Introduction

The frequency spectrum, essential for wireless communications, is a limited resource that has become increasingly congested [1,2,3]. Cognitive radio (CR) dynamically allocates communication for secondary users (SUs) in parts of the spectrum, known as spectrum holes, where primary users (PUs) are absent [4,5,6]. Several techniques exist for spectrum sensing, including energy detection, feature detection, Nyquist and sub-Nyquist methods, and multi-bit and one-bit compressive sensing. More recently, machine learning models have been employed to detect the presence of PUs [7,8]. Across these methods, noise can adversely affect the accurate detection of PUs, impacting the efficient use of the spectrum. In multi-user systems, the distance between users significantly influences the noise levels. Thus, predicting the noise level in the received signal and understanding user distances is fundamental for optimizing spectrum efficiency.

In communication systems, noise refers to any unwanted or random interference that compromises the quality of a transmitted signal. Noise can distort the original information being transmitted, leading to errors, diminished signal clarity, and reduced communication performance [9]. Almost all communication channels and systems encounter noise, which can originate from various sources. The noise level can be influenced by several factors, including transmitted power, path loss, shadow fading, multipath fading, and the distance between users. In addition to these factors, additive white Gaussian noise is a type of noise that exists across all frequencies [10]. This noise is characterized by its randomness and uniform energy distribution throughout the frequency spectrum. White Gaussian noise is a common model for representing random background noise in communication systems. By predicting noise levels, one can more effectively optimize system parameters and frequency allocation [11].

The distance between users is an important factor that influences signal quality and, consequently, spectrum efficiency. In spectrum sensing, the strength of the received signal depends on the distance between the transmitter and receiver. Predicting these distances helps to adjust the transmission power levels: users closer to the transmitter require lower power for reliable communication, while users farther away need higher power [12]. This power control optimizes energy efficiency and minimizes interference. Especially in systems with limited resources, such as bandwidth, predicting distances is fundamental for effective resource allocation. Users closer to the transmitter can be assigned greater bandwidth and consequently higher data rates, while those farther away might be allocated smaller bandwidths to improve the signal quality at the same transmission power level. The distance between users can also impact the performance of artificial intelligence models used to increase spectrum efficiency. Additionally, distance prediction is essential for providing location-based services: by estimating distances, services like navigation, location-based advertisements, and emergency services can be offered.

To predict noise and distances between users, regression models and deep learning architectures are recommended [13]. Regression models are a class of machine learning algorithms designed to predict continuous numerical values based on input data. These models play a crucial role in various fields, including economics, finance, healthcare, and natural sciences. They allow for the analysis and forecasting of trends, relationships, and outcomes by learning from historical data. Among the most common traditional machine learning regression approaches are support vector regression (SVR) (an extension of support vector machines to regression), decision trees and random forests [14], linear regression (one of the simplest yet most widely used regression techniques), and ridge and lasso regression (variants of linear regression). Among deep learning approaches, convolutional neural networks (CNNs), initially designed for image analysis, can be adapted for regression tasks [15]. More recently, transformer models designed for forecasting have shown promise for regression tasks [16].

In this article, we propose the use of regression models to predict noise levels and distances based on spectrum sensing signals. During our study, we generated a dataset that considers important parameters, including a wide range of noise power densities, an extensive sensing area, and power leakage from the PUs. We compared both traditional and deep learning models for prediction purposes. Furthermore, we evaluated the results using various metrics. Our proposed method has shown promising results, with a correlation coefficient exceeding 0.98 for noise and over 0.82 for distance. Additional metrics also indicate that our method is effective in predicting noise levels and distances between users. Such predictive capability can be important in designing communication systems to enhance spectrum efficiency.

This article is organized as follows: in Section 2, related works about the prediction of noise levels and distances between users in a communication system are presented; in Section 3, the proposed methodology for data generation, training, and evaluation is described; in Section 4, the experiments conducted and the results achieved are presented; the discussions related to the experiments are presented in Section 5; lastly, in Section 6, the conclusions are presented.

Contributions

The main contributions of this paper can be summarized as follows: (1) the use of the transformer model, one of the newest and most robust networks, which achieved good results for distance prediction even with a limited architecture; (2) for noise prediction, the classical regression methods presented excellent results, achieving a correlation coefficient greater than 0.98, demonstrating a high level of similarity between the predictions and real values; (3) since the current models used for spectrum sensing are very sensitive to signal quality, the proposed approach can help in designing better communication systems based on artificial intelligence models by providing prior information.

2. Related Works

This section reviews related work on predicting noise levels and distances between users in spectrum sensing.

2.1. Noise Prediction

The authors proposed a signal-to-noise ratio (SNR) estimation method based on the sounding reference signal (SRS) and a deep learning network in [17]. The proposed deep learning network, called the denoising and image restoration network (DINet), combines a denoising convolutional neural network (DnCNN) and an image restoration convolutional neural network (IRCNN) in parallel. The method was compared to other algorithms, and the results demonstrated superior performance in SNR estimation. The evaluation metric used was the normalized mean square error (NMSE) calculated over 200 test samples, yielding an NMSE value of 0.0012. This result was significantly better than the performance achieved by other algorithms.

In [11], the authors presented a method for estimating SNR in long-term evolution (LTE) systems and fifth-generation (5G) networks. They employed a combination of a CNN and long short-term memory (LSTM), known as a CNN–LSTM neural network. The CNN was utilized to extract spatial features, while the LSTM was used to extract temporal features from the input signal. Data were generated using MATLAB R2020b LTE and 5G toolboxes, taking into consideration modulation types, path delays, and Doppler shifts. The evaluation metric used was NMSE. The NMSE achieved a value of zero in the time domain for SNR ranging from to 32 dB, demonstrating very low latency. However, in the frequency domain, the proposed method exhibited lower performance.

In [18], the authors proposed a noise denoising residual network (NDR-Net), a novel neural network for channel estimation in the presence of unknown noise levels. NDR-Net comprises a noise level estimation subnet (NLES), a DnCNN, and a residual learning cascade (RLC). The NLES determines the noise interval, followed by the DnCNN, which processes the pure noise image. Subsequently, RLC is applied to extract the noiseless channel image. The evaluation metric used for assessing the model’s performance was the mean square error. The experiments conducted across different channel models—tapped delay line-A (TDL-A), TDL-B, and TDL-C—consistently demonstrated low mean square error values. However, it is worth noting that the proposed model’s performance was evaluated within an SNR range of 0 to 35 dB. This limited range does not provide a comprehensive understanding of the model’s robustness, particularly in scenarios with high levels of noise.

In Table 1, some techniques for noise prediction are summarized and compared. The methodology proposed in this article considers several variables that influence signal quality for data generation. The study incorporates noise power density across a wide range of values and explores a spectrum sensing environment where multiple users are in motion at fixed speeds over time. Several regression models are compared using various metrics to highlight the robustness of the proposed method.

Table 1.

Related works on noise prediction.

2.2. Distance Prediction

In [19], the authors proposed a deep learning approach for user equipment positioning in non-line-of-sight scenarios. The impact of variables such as the type of radio data, the number of base stations, the size of the training dataset, and the generalization of the trained models on third-generation partnership project (3GPP) indoor factory scenarios was analyzed. The model trained consisted of a customized residual neural network (ResNet) for the path gain dataset and ResNet-18 for the channel impulse response dataset. The metric used was the quantile of the cumulative distribution function of the horizontal positioning error. The authors obtained the best performance with a large number of samples for training and tuning the models.

In [20], the authors proposed a machine learning algorithm for indoor fingerprint positioning based on measured 5G signals. The dataset was created by collecting 5G signals in the positioning area and processing them to form fingerprint data. A CNN was trained to locate a 5G device in an indoor environment. The metrics used were the root mean square error and the circular error probable. The experiments were conducted in a real field and demonstrated a positioning accuracy of 96% for the proposed method.

Finally, in [21], the authors introduced a location-aware predictive beamforming approach utilizing deep learning techniques for tracking unmanned aerial vehicle communication beams in dynamic scenarios. Specifically, they designed a recurrent neural network called a location-aware recurrent network (LRNet), based on LSTM, to accurately predict unmanned aerial vehicle locations. Using the predicted location, it was possible to determine the angle between the unmanned aerial vehicle and the base station for efficient and rapid beam alignment in the subsequent time slot. This ensures reliable communication between the unmanned aerial vehicle and the base station. The simulation results demonstrate that the proposed approach achieves a highly satisfactory unmanned aerial vehicle to base station communication rate, approaching the upper limit attained by a perfect genie-aided alignment scheme.

In Table 2, some techniques for distance prediction are summarized and compared. The methodology proposed in this article considers several variables that influence signal quality for data generation. The study incorporates Euclidean distances between users across a wide range of values and explores a spectrum sensing environment where multiple users are in motion at fixed speeds over time. Several regression models are compared using various metrics to highlight the robustness of the proposed method.

Table 2.

Related works on distance prediction.

3. Methods

This section presents the proposed methods for data generation and noise and distance predictions.

3.1. System Model

We propose using generated data that simulate PU signals in spectrum sensing to predict the noise level of the sensed signal and the initial and final distances between users. The methodology is divided into three steps: (1) database generation, where the signals that represent PUs are generated [1,2]; (2) training of the proposed regression models [22,23]; and (3) evaluation of the trained models for predicting noise level and distance [13,24]. At the end of the process, the models are expected to predict the noise level and the initial and final distances between the PU and SU during the sensing period.

The dataset used for training the proposed regression models comprises signal data, noise levels, and distances between the users. Initially, the SU and PU are positioned within the sensing area and move randomly for the duration of the sensing period. During this time, signals are sensed, and data on the noise level as well as the initial and final distances are collected. These collected data are then used to train and test the proposed regression models. To validate the effectiveness of the method in predicting noise levels and distances, various metrics are calculated.

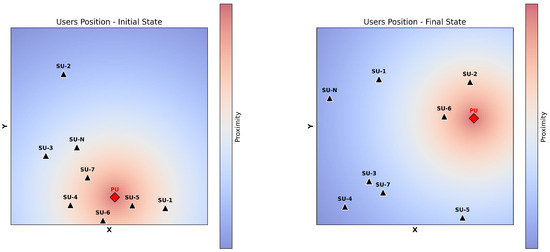

Figure 1 illustrates the environment just described. The initial state (left) is an example of how users can be positioned within the area, while the final state (right) demonstrates the change in position after the sensing period. The PU, marked by a red diamond, and the SUs, represented by black triangles, are randomly positioned within the area. In the initial state, the heatmap represents the distance of each SU from the PU, with warmer (red) areas indicating closer proximity and cooler (blue) areas showing greater distances. In the final state, we can observe that the PU and SUs have changed positions, and the distances between the SUs may also vary during the observation period.

Figure 1.

User position over time (initial state on the left; final state on the right).

The output of step (1) is expected to be the signals that represent the PU, the associated noise level for each signal, and the initial and final distances during the sensing. Using this information, two regression models are trained: one for noise level prediction and another for initial and final distance predictions. In step (2), several machine learning regression models are employed, including random forest, decision tree, extra trees, XGBoost, LightGBM, SVR, and transformer. The output of this step consists of predicted values for noise level and distances given a test dataset. Lastly, in step (3), several metrics are used to evaluate the best models for these tasks.

3.2. Data Generation

In the spectrum sensing process, the decision on the channel condition is binary, involving two hypotheses: $H_0$ and $H_1$ [25]. Here, $H_1$ represents the hypothesis in which the PU is present, while $H_0$ represents the absence of the PU [2]. For the purposes of this paper, we consider only the hypothesis in which the PU is present. We assume that multiple SUs and a single PU are moving at a speed v, with their starting positions randomly chosen within a given area. As a result, the users’ locations change over the sensing interval. Additionally, we consider a multi-channel system composed of several bands of equal bandwidth, and we assume that the PU can utilize a number of consecutive bands [1]. Therefore, the received signal of the i-th SU on the j-th band at time n can be described as

where and is the additive white Gaussian noise (AWGN), whose noise power density is , mean zero, and standard deviation . With being the proportion of power leaked to adjacent bands, represents the bands occupied by the PU and indicates the bands affected by the leaked power of the PU.

In the expression , a simplified path loss model is utilized, which can be written as follows:

where and denote the path-loss exponent and path-loss constant, respectively. Here, represents the Euclidean distance between the PU and SU i at time n. The shadow fading of the channel, indicated by , between the PU and SU i at time n in decibels (dB) can be described by a normal distribution with a mean of zero and a variance of . The term P denotes the power transmitted by the PU within a specified frequency band. Furthermore, the multipath fading factor, denoted as , is modeled as an independent zero-mean circularly symmetric complex Gaussian (CSCG) random variable. Moreover, the data transmitted at time n, represented by , have an expected value of one [1,2].
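As a rough illustration of the signal model described above, the following Python sketch generates the samples sensed by one SU on one band when the PU is present. The multiplicative form used here (transmit power times a path gain of 1/(β·d^α), a log-normal shadowing term, and a unit-variance CSCG multipath factor, plus AWGN) is an assumption made for illustration, as are the function name, the argument names, and the choice of holding the distance fixed over the sensing window.

```python
import math
import random


def received_samples(distance_m, n_samples, tx_power_dbm, noise_density_dbm_hz,
                     bandwidth_hz, alpha, beta, shadow_sigma_db,
                     leak_ratio=1.0, rng=None):
    """Simulate complex baseband samples for one SU and one band (PU present).

    All names and the exact functional form are illustrative assumptions.
    leak_ratio=1.0 models a band occupied by the PU; a value below 1.0
    models an adjacent band that only receives leaked power.
    """
    rng = rng or random.Random()
    tx_power_mw = 10 ** (tx_power_dbm / 10)                       # dBm -> mW
    noise_power_mw = 10 ** (noise_density_dbm_hz / 10) * bandwidth_hz
    path_gain = 1.0 / (beta * distance_m ** alpha)                # simplified path loss
    samples = []
    for _ in range(n_samples):
        # Shadow fading: zero-mean Gaussian in dB, converted to linear scale.
        shadow = 10 ** (rng.gauss(0.0, shadow_sigma_db) / 10)
        amplitude = math.sqrt(leak_ratio * tx_power_mw * path_gain * shadow)
        # Multipath fading: zero-mean CSCG variable with unit variance.
        g = complex(rng.gauss(0.0, math.sqrt(0.5)), rng.gauss(0.0, math.sqrt(0.5)))
        # AWGN with total power noise_power_mw split over real and imaginary parts.
        sigma = math.sqrt(noise_power_mw / 2)
        w = complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        x = 1.0  # transmitted data, taken as its expected value of one
        samples.append(amplitude * g * x + w)
    return samples
```

For example, `received_samples(100.0, 1024, 23.0, -174.0, 10e6, 4.0, 1000.0, 3.0)` would produce one 1024-sample window; the numerical values in this call are placeholders rather than the settings used in the experiments.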

3.3. Regression Models

The machine learning regression models used to predict the noise level and the distance between the PU and SUs are random forest, decision tree, extra trees, XGBoost, LightGBM, SVR, and transformer.

3.3.1. Random Forest

Random forest consists of a collection of trees denoted as $\{h(x; \theta_k),\ k = 1, \ldots, K\}$. Here, x represents an input vector of length q with an associated random vector X, while $\theta_k$ refers to independent and identically distributed random vectors. In the context of regression, assume that the observed data are drawn independently from the joint distribution of $(X, Y)$, where Y represents the numerical outcome. This dataset includes n $(q+1)$-tuples, namely $(x_1, y_1), \ldots, (x_n, y_n)$ [26]. The prediction of the random forest regression is the unweighted average over the collection:

$$\bar{h}(x) = \frac{1}{K} \sum_{k=1}^{K} h(x; \theta_k).$$

3.3.2. Decision Tree

In the context of regression, the decision tree is based on recursively partitioning the input feature space into regions and then assigning a constant value to each region. This constant value serves as the prediction for any data point that falls within that region. Assume that X is the input data, Y is the target variable, and $\theta$ represents the parameters that define the splits in the decision tree [27]. Let $f(X; \theta)$ be the predicted value for Y given input X and parameter $\theta$. Given a set of n training samples $(X_i, Y_i)$, where $i = 1, \ldots, n$, the decision tree regressor seeks the optimal splits that minimize the sum of squared differences between the predicted values and the actual target values. The prediction for a given X can be represented as

$$f(X; \theta) = \sum_{m=1}^{N} c_m \, I(X \in R_m),$$

where N is the number of leaf nodes (regions) in the tree, $c_m$ is the constant value associated with the leaf node $R_m$, and $I(X \in R_m)$ is an indicator function that equals 1 if X falls within the region $R_m$ and 0 otherwise.
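The partitioning idea can be illustrated with the smallest possible tree, a single split on one feature. The sketch below is a simplified illustration with hypothetical function names, not the implementation used in the experiments: it searches the midpoints between sorted feature values for the threshold that minimizes the sum of squared errors and assigns each of the two regions the mean of its targets.

```python
def fit_stump(xs, ys):
    """Fit a one-split regression tree on a 1-D feature.

    Returns (threshold, left_value, right_value), where each value is the
    mean target of its region and the threshold minimizes the total SSE.
    """
    pairs = sorted(zip(xs, ys))
    best = None
    for k in range(1, len(pairs)):
        if pairs[k - 1][0] == pairs[k][0]:
            continue  # no valid threshold between identical feature values
        left = [y for _, y in pairs[:k]]
        right = [y for _, y in pairs[k:]]
        c_left = sum(left) / len(left)
        c_right = sum(right) / len(right)
        sse = (sum((y - c_left) ** 2 for y in left)
               + sum((y - c_right) ** 2 for y in right))
        threshold = (pairs[k - 1][0] + pairs[k][0]) / 2
        if best is None or sse < best[0]:
            best = (sse, threshold, c_left, c_right)
    if best is None:
        raise ValueError("need at least two distinct feature values")
    return best[1:]


def predict_stump(stump, x):
    """Piecewise-constant prediction: the constant of the region containing x."""
    threshold, c_left, c_right = stump
    return c_left if x <= threshold else c_right
```

A full regression tree applies the same search recursively inside each region until a stopping criterion is met.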

3.3.3. Extra Trees

The extra trees approach follows the same step-by-step process as random forest, using a random subset of features to train each base estimator [26], although the best feature and the corresponding value for splitting the node are randomly selected [28]. Random forest uses a bootstrap replica to train the model, while, with extra trees, the whole training dataset is used to train each regression tree [29].
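The contrast between the two ensemble strategies can be sketched with one-split trees (stumps) on a single feature. This is a simplified illustration with hypothetical function names, not the scikit-learn implementation used in the experiments: the random-forest variant fits each stump on a bootstrap replica using the best split, while the extra-trees variant fits each stump on the whole dataset using one randomly drawn cut-point. Both average their members without weights.

```python
import random


def sse_of_split(data, thr):
    """Sum of squared errors when each side of thr is predicted by its mean."""
    total = 0.0
    for part in ([y for x, y in data if x <= thr], [y for x, y in data if x > thr]):
        if part:
            mean = sum(part) / len(part)
            total += sum((y - mean) ** 2 for y in part)
    return total


def stump_with_threshold(data, thr):
    """Return a predictor that outputs the mean target on each side of thr."""
    overall = sum(y for _, y in data) / len(data)
    left = [y for x, y in data if x <= thr]
    right = [y for x, y in data if x > thr]
    c_left = sum(left) / len(left) if left else overall
    c_right = sum(right) / len(right) if right else overall
    return lambda x: c_left if x <= thr else c_right


def bootstrap_replica(data, rng):
    """Sample len(data) rows with replacement."""
    return [rng.choice(data) for _ in data]


def random_threshold(values, rng):
    """Draw a cut-point uniformly between the feature's minimum and maximum."""
    return rng.uniform(min(values), max(values))


def random_forest_style(data, n_trees, rng):
    """Each stump: bootstrap replica + best (SSE-minimizing) threshold."""
    stumps = []
    for _ in range(n_trees):
        replica = bootstrap_replica(data, rng)
        xs = sorted({x for x, _ in replica})
        candidates = [(lo + hi) / 2 for lo, hi in zip(xs, xs[1:])] or xs
        thr = min(candidates, key=lambda t: sse_of_split(replica, t))
        stumps.append(stump_with_threshold(replica, thr))
    return stumps


def extra_trees_style(data, n_trees, rng):
    """Each stump: whole dataset + randomly drawn threshold."""
    xs = [x for x, _ in data]
    return [stump_with_threshold(data, random_threshold(xs, rng))
            for _ in range(n_trees)]


def ensemble_predict(predictors, x):
    """Unweighted average over the collection of trees."""
    return sum(p(x) for p in predictors) / len(predictors)
```

With several features, the extra-trees procedure would draw one random cut-point per candidate feature and keep the best of those; the single-feature case above reduces to one random draw.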

3.3.4. XGBoost

XGBoost (XGB) is a highly optimized distributed gradient boosting library. It employs a recursive binary splitting strategy to identify the optimal split at each stage, leading to the construction of the best possible model [30]. Due to its tree-based structure, XGB is robust to outliers and, like many boosting methods, is effective in countering overfitting, making model selection more manageable. The regularized objective of the XGB model during the tth training step [31] is given in Equation (5). Here, the loss quantifies the disparity between the predicted value and the corresponding ground truth.

where is the regularizer representing the complexity of the kth tree.

3.3.5. LightGBM

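LightGBM (LGBM) is a gradient boosting decision tree (GBDT) algorithm with gradient-based one-side sampling (GOSS) and exclusive feature bundling (EFB). The GOSS technique is employed within the context of gradient boosting, utilizing a training set consisting of n instances $\{x_1, \ldots, x_n\}$, where each instance $x_i$ represents an s-dimensional vector in space $\mathcal{X}^s$. In every iteration of gradient boosting, the negative gradients of the loss function relative to the model’s output are computed, resulting in $\{g_1, \ldots, g_n\}$. These training instances are then arranged in descending order, based on the absolute values of their gradients, and the top-$a \times 100\%$ instances with the largest gradient magnitudes are selected to constitute a subset A [32]. For the complementary set $A^c$, comprising the $(1-a) \times 100\%$ instances characterized by smaller gradients, a random subset B is extracted, sized at $b \times |A^c|$. The division of instances is subsequently determined by the estimated variance gain $\tilde{V}_j(d)$ over the combined subset $A \cup B$, where

$$\tilde{V}_j(d) = \frac{1}{n}\left( \frac{\left(\sum_{x_i \in A_l} g_i + \frac{1-a}{b}\sum_{x_i \in B_l} g_i\right)^2}{n_l^j(d)} + \frac{\left(\sum_{x_i \in A_r} g_i + \frac{1-a}{b}\sum_{x_i \in B_r} g_i\right)^2}{n_r^j(d)} \right),$$

where $A_l = \{x_i \in A : x_{ij} \le d\}$, $A_r = \{x_i \in A : x_{ij} > d\}$, $B_l = \{x_i \in B : x_{ij} \le d\}$, $B_r = \{x_i \in B : x_{ij} > d\}$, and $\frac{1-a}{b}$ is the coefficient used to normalize the sum of the gradients over B back to the size of $A^c$. Here, d is a candidate split point on feature j, and $n_l^j(d)$ and $n_r^j(d)$ count the instances on the left and right sides of that split.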
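The sampling step of GOSS described above can be sketched in a few lines of Python. This is an illustrative sketch only, with a hypothetical function name and argument names; it follows the description in the text (a random subset of size b times the size of the small-gradient set, reweighted by (1 − a)/b) and is not the LightGBM library code.

```python
import random


def goss_sample(gradients, a, b, rng):
    """Gradient-based one-side sampling over a list of per-instance gradients.

    Keeps the top a*100% instances by |gradient| (subset A), draws a random
    subset B of size b*|A^c| from the remaining instances, and returns the
    selected indices with their weights: 1 for A and (1 - a) / b for B.
    """
    n = len(gradients)
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    top_count = int(a * n)
    subset_a = order[:top_count]
    complement = order[top_count:]
    rand_count = min(int(b * len(complement)), len(complement))
    subset_b = rng.sample(complement, rand_count)
    amplify = (1.0 - a) / b  # rescales the small-gradient sample
    indices = subset_a + subset_b
    weights = [1.0] * len(subset_a) + [amplify] * len(subset_b)
    return indices, weights
```

The weighted gradients of the selected instances are then the only ones that enter the variance gain when candidate splits are scored, which is what reduces the cost of each boosting iteration.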

3.3.6. SVR

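Given n training data $\{(x_1, y_1), \ldots, (x_n, y_n)\} \subset \mathcal{X} \times \mathbb{R}$, $\mathcal{X}$ represents the space of the input patterns [33]. The goal of $\varepsilon$-SVR is to find a function $f(x)$ that deviates by at most $\varepsilon$ from the target values $y_i$ obtained during training while also being as flat as possible, that is, maintaining a minimal degree of variability. This function can be described as

$$f(x) = \langle w, x \rangle + b, \quad w \in \mathcal{X},\ b \in \mathbb{R},$$

where $\langle \cdot, \cdot \rangle$ denotes the dot product in $\mathcal{X}$.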
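The two ingredients of this formulation, the linear function and the tolerance around the targets, can be written out directly. The sketch below uses hypothetical function names and covers only the linear case; it shows the prediction, the ε-insensitive loss that ignores deviations smaller than ε, and the squared-norm term that measures flatness.

```python
def svr_predict(w, b, x):
    """Linear SVR function: dot product of w and x, plus the offset b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon tube, linear in the excess deviation outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def flatness_penalty(w):
    """Half the squared norm of w; smaller values mean a flatter function."""
    return 0.5 * sum(wi * wi for wi in w)
```

Training then amounts to minimizing the flatness penalty plus a weighted sum of the ε-insensitive losses over the training data; kernelized variants replace the dot product with a kernel function.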

3.3.7. Transformer

A transformer model consists of an encoder and a decoder, each composed of multiple layers of self-attention and feed-forward neural networks. The base structure of a transformer is the self-attention mechanism. Given an input sequence, the self-attention computes a weighted sum of the values. Multi-head attention is used to capture different aspects of relationships. Let the input of a transformer layer be $X \in \mathbb{R}^{n \times d}$, where n is the number of tokens and d is the dimension of each token. Then, one block layer can be a function defined by [34]

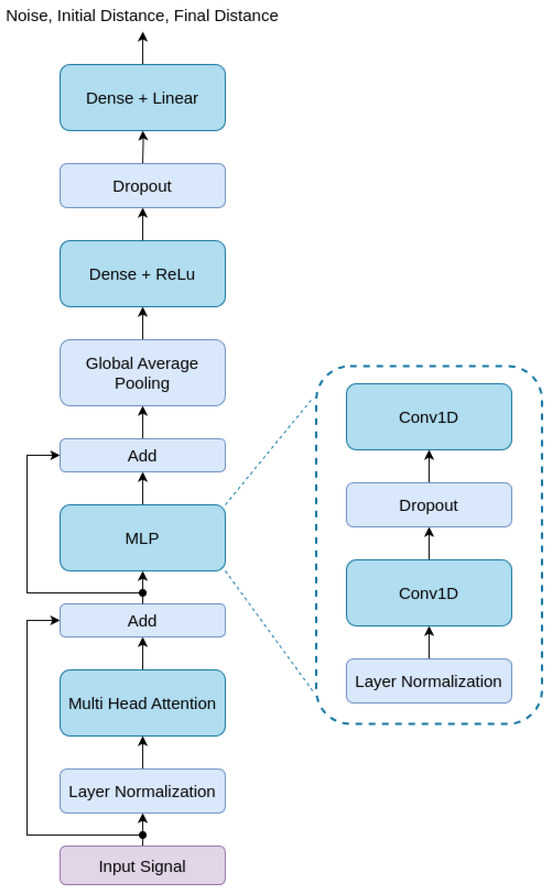

where Equations (8)–(10) refer to attention computation, and Equations (11) and (12) refer to the feed-forward network layer. is the row-wise softmax function, is the layer normalization function, and the activation function. Q, K, V, and , , , , are the training parameters in the layer [34]. In Figure 2, the architecture of the proposed transformer is displayed.

Figure 2.

Architecture of the proposed transformer.
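To make the attention computation concrete, the following pure-Python sketch implements a single attention head with a row-wise softmax. It assumes the standard scaled dot-product form, in which the scores are divided by the square root of the key dimension; the function names and the weight-matrix arguments are hypothetical, and layer normalization, multiple heads, and the feed-forward sublayer are omitted.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention over an n x d input.

    Returns (output, weights): each output row is a weighted sum of the
    value rows, with weights given by the softmax of the scaled scores.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights
```

Each row of the returned weight matrix sums to one, so every output token is a convex combination of the value vectors of all tokens in the sequence.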

3.4. Evaluation Metrics

The metrics used for evaluation of the regression models are the mean square error, mean absolute error, root mean square error, mean absolute percentage error, R-square, and correlation coefficient [35].

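- Mean square error (MSE) [35]:

  $$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2,$$

  where n is the number of data points in the dataset, $y_i$ represents the actual target value of the i-th data point, and $\hat{y}_i$ represents the predicted value of the i-th data point.

- Mean absolute error (MAE) [35]:

  $$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|,$$

  where $|\cdot|$ denotes the absolute value.

- Root mean square error (RMSE) [35]:

  $$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}.$$

- Mean absolute percentage error (MAPE) [35]:

  $$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|.$$

- R-square ($R^2$) [24]:

  $$R^2 = 1 - \frac{SS_{\mathrm{res}}}{SS_{\mathrm{tot}}}, \qquad SS_{\mathrm{res}} = \sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2, \qquad SS_{\mathrm{tot}} = \sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2,$$

  where $SS_{\mathrm{res}}$ represents the sum of squared differences between the actual target values and the predicted values, and $SS_{\mathrm{tot}}$ represents the total sum of squares, which is the sum of squared differences between the actual target values and their mean.

- Correlation coefficient [36]: The correlation coefficient is given by the Pearson correlation coefficient:

  $$r = \frac{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)}{\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)^2}},$$

  where $\bar{y}$ is the mean of the actual target values and $\bar{\hat{y}}$ is the mean of the predicted values.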
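For reference, the six metrics listed above can be computed with the short Python functions below. This is a minimal sketch using standard definitions, not the evaluation code used in the experiments; the function names are illustrative, and the MAPE variant shown here is expressed in percent and assumes that no actual target value is zero.

```python
import math


def mse(y_true, y_pred):
    """Mean square error."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(mse(y_true, y_pred))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (targets must be nonzero)."""
    return 100.0 * sum(abs((y - p) / y) for y, p in zip(y_true, y_pred)) / len(y_true)


def r_square(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot


def pearson(y_true, y_pred):
    """Pearson correlation coefficient between targets and predictions."""
    mean_y = sum(y_true) / len(y_true)
    mean_p = sum(y_pred) / len(y_pred)
    cov = sum((y - mean_y) * (p - mean_p) for y, p in zip(y_true, y_pred))
    var_y = sum((y - mean_y) ** 2 for y in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_y * var_p)
```

Note that the correlation coefficient measures only linear association: predictions that are a scaled or shifted version of the targets still reach a value of one, whereas the error-based metrics and R-square penalize such offsets.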

4. Experiments and Results

This section presents the experiments conducted and the results achieved for data generation and noise and distance predictions.

4.1. Data Generation

The first stage of the experiments involves signal generation. It is assumed that multiple SUs and a single PU are moving at a velocity of km/h, and their initial positions are randomly chosen within an area of 250 m × 250 m. As a result, the users’ positions change over a time period of s. Each occupied band has bandwidth of 10 MHz, and the PU can simultaneously use 1 to 3 bands. Additionally, dBm, , , dB, and is randomly chosen between and dBm/Hz. The ratio of leaked power to adjacent bands, , is 10 dBm, resulting in leaked power to adjacent bands being half of the PU signal power. For the experiments, 42,000 instances were generated, divided into for training and for testing. For the transformer method, the training dataset was divided into for training and for validation. A total of 1024 samples per second of the signal were generated. In Table 3, the parameters and their respective values are presented.

Table 3.

Parameters and values for data generation.
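Because the noise is specified as a power density while each band has a bandwidth of 10 MHz, it can help to convert the density into the total noise power per band. The sketch below shows this arithmetic with hypothetical helper names; the density of −174 dBm/Hz in the usage note is only an illustrative value and is not taken from the experimental settings.

```python
import math


def noise_power_dbm(noise_density_dbm_hz, bandwidth_hz):
    """Total noise power in a band: density in dBm/Hz plus 10*log10(bandwidth)."""
    return noise_density_dbm_hz + 10.0 * math.log10(bandwidth_hz)


def dbm_to_mw(power_dbm):
    """Convert a power level from dBm to milliwatts."""
    return 10 ** (power_dbm / 10)


def db_to_linear(ratio_db):
    """Convert a power ratio from dB to a linear factor."""
    return 10 ** (ratio_db / 10)
```

For instance, `noise_power_dbm(-174.0, 10e6)` gives approximately −104 dBm, since a 10 MHz bandwidth adds 70 dB to the density.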

4.2. Model Parameters

The random forest, decision tree, extra trees, and SVR models were parameterized using the default settings from the scikit-learn library without additional hyperparameter tuning. For the XGBoost (XGB) and LightGBM (LGBM) models, their specific configurations are provided in Table 4. These models were used as benchmarks to compare with the transformer-based approach.

Table 4.

XGB and LGBM parameters.

Regarding the transformer model, we explored various configurations for key hyperparameters, balancing performance and computational efficiency. Table 5 details the tested values for each parameter. Specifically, we evaluated different head sizes, number of heads, and filter dimensions to analyze their impact on the model’s performance. The transformer block was set to 1 due to computational constraints while maintaining effective learning. Additionally, a single dense layer of size 32 was included, and dropout (0.25) was applied to mitigate overfitting. We used a batch size of 32, the Adam optimizer with a learning rate of 0.001, and the loss function given the regression nature of our task. These configurations were explicitly described to ensure the clarity and reproducibility of the transformer model’s setup.

Table 5.

Transformer hyperparameter configurations.

4.3. Noise Prediction

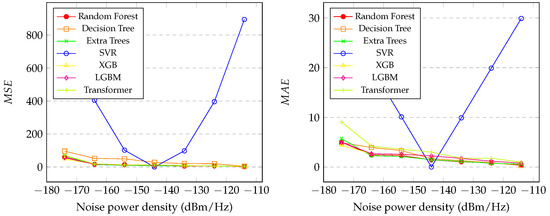

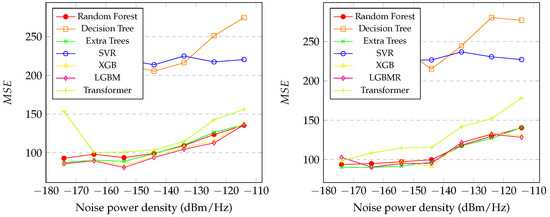

Figure 3 (left) presents the graph of the with the variation in in the proposed regression methods. It is noticeable that SVR had the worst performance, only achieving good results at an of dBm/Hz. Random forest, extra trees, XGB, LGBM, transformer, and decision tree showed similar performance, as indicated in the graph. However, random forest, XGB, LGBM, and extra trees exhibited better performance, especially at lower levels of . XGB was the best model, achieving the lowest level of , , which indicates that the predicted values are close to the actual ones.

Figure 3.

Graphic of the (left) and (right) of proposed methods with between dBm/Hz and dBm/Hz.

Figure 3 (right) presents the graph of the with the variation in in the proposed regression methods. It is also noticeable that SVR had the worst performance, only achieving good results at an of dBm/Hz. Random forest, extra trees, XGB, LGBM, transformer, and decision tree showed similar performance, as indicated in the graph. However, random forest, XGB, LGBM, and extra trees exhibited better performance, especially at levels of dBm/Hz and dBm/Hz. Random forest was the best model, achieving the lowest level of , , which indicates that the predicted values are close to the actual ones.

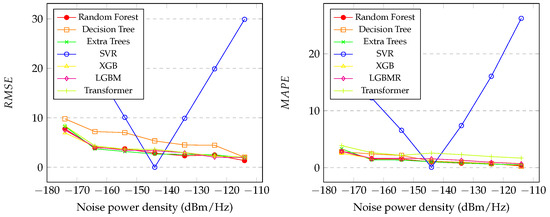

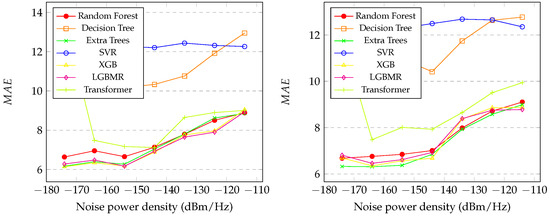

Figure 4 (left) presents the graph of the with the variation in in the proposed regression methods. It is also noticeable that SVR had the worst performance, only achieving good results at an of dBm/Hz. Random forest, XGB, LGBM, transformer, and extra trees showed similar performance, as presented in the graph. XGB was the best model, achieving the lowest level of , , which indicates that the predicted values are close to the actual ones.

Figure 4.

Graphic of the (left) and (right) of the proposed methods with between dBm/Hz and dBm/Hz.

Figure 4 (right) presents the graph of the with the variation in in the proposed regression methods. It is also noticeable that SVR had the worst performance, only achieving good results at an of dBm/Hz. Random forest, XGB, LGBM, extra trees, transformer, and decision tree showed similar performance, as indicated in the graph. However, random forest and extra trees exhibited better performance. Random forest was the best model, achieving the lowest level of , , which indicates that the predicted values are close to the actual ones.

In Table 6, the general metrics for all the proposed models are presented for noise prediction. It is worth noting that, in terms of the correlation coefficient, random forest, XGB, LGBM, transformer, and extra trees exhibited the highest and similar performance. However, random forest performed slightly better on the and metrics, while XGB performed better on the correlation coefficient, , , and . SVR presented the worst performance in the general metrics.

Table 6.

Noise regression comparison metrics of the proposed methods.

4.4. Distance Prediction

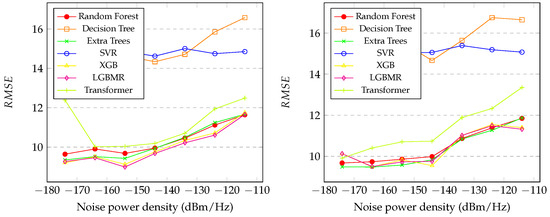

Figure 5 presents the graphics of the values of the initial and final distances between the SU and PU during the spectrum sensing with the variation in in the proposed regression methods. It is noticeable that SVR and decision tree had the worst performance at all levels of noise. Random forest, XGB, transformer, LGBM, and extra trees demonstrated similar performance, as shown in the graph. However, LGBM exhibited slightly better performance than the others, especially at the lowest level of noise, achieving . In the final distance prediction, the extra trees model exhibited slightly better performance, achieving .

Figure 5.

Graphic of the (initial distance on the left and final distance on the right) of the proposed methods with between dBm/Hz and dBm/Hz.

Figure 6 presents the graphics of the values of the initial and final distances between the SU and PU during the spectrum sensing with the variation in in the proposed regression methods. It is also noticeable that SVR and decision tree had the worst performance at all levels of noise. Random forest, XGB, LGBM, transformer, and extra trees showed similar performance, as indicated in the graph. However, XGB exhibited slightly better performance than the others, achieving . In the final distance prediction, the extra trees model exhibited slightly better performance, achieving .

Figure 6.

Graphic of the (initial distance on the left and final distance on the right) of the proposed methods with between dBm/Hz and dBm/Hz.

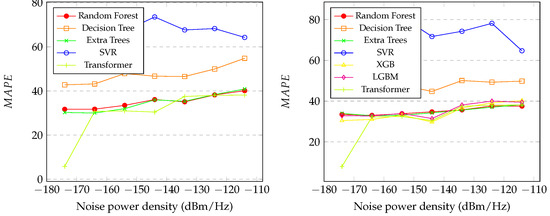

Figure 7 presents the graphics of the values of the initial and final distances between the SU and PU during the spectrum sensing with the variation in in the proposed regression methods. It is also noticeable that SVR and decision tree had the worst performance at all levels of noise. Random forest, XGB, LGBM, transformer, and extra trees showed similar performance, as indicated in the graph. However, LGBM exhibited slightly better performance than the others, achieving . In the final distance prediction, the extra trees model exhibited slightly better performance, achieving .

Figure 7.

Graphic of the (initial distance on the left and final distance on the right) of the proposed methods with between dBm/Hz and dBm/Hz.

Figure 8 presents the graphics of the values of the initial and final distances between the SU and PU during spectrum sensing with variations in using the proposed regression methods. It is also noticeable that SVR and decision tree had the worst performance levels across all levels of noise. Random forest and extra trees showed similar performance, as displayed in the graph on the left. However, transformer exhibited slightly better performance than random forest, especially at dBm/Hz, dBm/Hz, and dBm/Hz, achieving . In the graph on the right, random forest, XGB, LGBM, and extra trees showed similar performance. However, transformer exhibited slightly better performance than the others, achieving .

Figure 8.

Graphic of the (initial distance on the left and final distance on the right) of the proposed methods with between dBm/Hz and dBm/Hz.
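The per-noise-level comparison shown in Figures 6 to 8 amounts to grouping the test samples by noise level and evaluating one metric per group. The following is a minimal sketch of that grouping, not the authors' code: the function name `mae_by_noise_level`, the choice of mean absolute error as the metric, and the toy values are all assumptions made for illustration.

```python
from collections import defaultdict


def mae_by_noise_level(noise_levels, y_true, y_pred):
    """Group samples by noise level and return {level: mean absolute error}."""
    errors = defaultdict(list)
    for level, actual, predicted in zip(noise_levels, y_true, y_pred):
        errors[level].append(abs(actual - predicted))
    # Sort by level so the result can be plotted directly as a curve.
    return {level: sum(errs) / len(errs) for level, errs in sorted(errors.items())}


# Hypothetical toy data (not the paper's values):
# one noise level per sample, plus true and predicted distances.
noise = [-120, -120, -100, -100]
true_distance = [10.0, 20.0, 10.0, 20.0]
predicted_distance = [11.0, 19.0, 14.0, 26.0]

per_level = mae_by_noise_level(noise, true_distance, predicted_distance)
for level, mae in per_level.items():
    print(f"noise level {level}: MAE = {mae}")
```

Running the same function once per model yields one curve per regression method, which is the layout of the figures above.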

Table 7 presents the overall metrics of all the proposed regression models for the initial distance prediction. Across all the metrics, random forest, XGB, LGBM, transformer, and extra trees performed best and at similar levels. Transformer performed better in correlation coefficient, , and , demonstrating a strong correlation between the predicted and actual distances. In terms of and , LGBM achieved the lowest values, indicating small differences between the predictions and the actual values. The lowest values were exhibited by XGB.

Table 7.

Initial distance regression comparison metrics of the proposed methods.

In Table 8, general metrics for all the proposed regression models are presented for final distance prediction. Extra trees exhibited the best performance in all the metrics except for , , and correlation coefficient, where transformer exhibited the best performance.

Table 8.

Final distance regression comparison metrics of the proposed methods.
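To make the comparison in Table 7 and Table 8 concrete, the sketch below computes a few common regression metrics in plain Python. It is an illustration under stated assumptions rather than the evaluation code used in this work: the function name `regression_metrics`, the particular metric set (MAE, MSE, RMSE, coefficient of determination, and Pearson correlation coefficient), and the toy data are hypothetical.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return a dict of common regression metrics for two equal-length lists."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    mean_pred = sum(y_pred) / n

    ss_res = sum(r * r for r in residuals)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)     # total sum of squares
    ss_pred = sum((p - mean_pred) ** 2 for p in y_pred)
    covariance = sum((t - mean_true) * (p - mean_pred)
                     for t, p in zip(y_true, y_pred))

    mse = ss_res / n
    return {
        "mae": sum(abs(r) for r in residuals) / n,
        "mse": mse,
        "rmse": math.sqrt(mse),
        "r2": 1.0 - ss_res / ss_tot,
        "pearson": covariance / math.sqrt(ss_tot * ss_pred),
    }


# Hypothetical toy data: every prediction is offset by a constant 0.5.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.5, 3.5, 4.5]
metrics = regression_metrics(actual, predicted)
print(metrics)
```

In this toy case the Pearson correlation is 1 even though every prediction is wrong by 0.5, which is one reason to read the correlation coefficient together with the error metrics rather than on its own.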

5. Discussion

Section 4.3 presented the results for noise prediction, and several aspects deserve attention. In Figure 3 (left), the models achieved similar performance, except for decision tree and SVR. Interestingly, the best performance occurred at the highest noise levels, except for SVR, which specialized in a single noise level, dBm/Hz. Figure 3 (right) and Figure 4 (left and right) show the same behavior as Figure 3 (left). The overall results for random forest, XGB, LGBM, transformer, and extra trees are similar across all the metrics, with random forest and XGB performing particularly well, as shown in Table 6. In contrast, SVR performed poorly overall. Additionally, the correlation coefficients show that random forest, XGB, LGBM, and extra trees achieved strong correlations between the predicted and actual noise values (Table 6). Because of limited computational resources and the large amount of generated data, it was not possible to further improve the robustness of the transformer architectures. A search for optimal hyperparameter values for all the models is a natural extension of this work.
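As an illustration of the kind of hyperparameter search mentioned above, the following sketch runs a small grid search with a hold-out validation set. It is only a sketch under assumptions: a one-dimensional k-nearest-neighbour regressor stands in for the models studied here so that the example needs nothing beyond the standard library, and the helper names (`knn_predict`, `validation_mse`, `grid_search`), the candidate values, and the synthetic data are hypothetical.

```python
import random


def knn_predict(train_x, train_y, x, k):
    """Average the targets of the k training points closest to x (1-D feature)."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def validation_mse(train, valid, k):
    """Mean squared error on the validation split for a given k."""
    train_x, train_y = train
    valid_x, valid_y = valid
    errors = [(knn_predict(train_x, train_y, x, k) - y) ** 2
              for x, y in zip(valid_x, valid_y)]
    return sum(errors) / len(errors)


def grid_search(train, valid, candidates):
    """Score every candidate and return the best one with all scores."""
    scores = {k: validation_mse(train, valid, k) for k in candidates}
    best = min(scores, key=scores.get)
    return best, scores


# Synthetic data (assumption): a noisy linear relation, seeded for repeatability.
rng = random.Random(0)
xs = [rng.uniform(0.0, 10.0) for _ in range(200)]
ys = [2.0 * x + rng.gauss(0.0, 1.0) for x in xs]
train = (xs[:150], ys[:150])
valid = (xs[150:], ys[150:])

best_k, scores = grid_search(train, valid, candidates=[1, 3, 5, 9, 15])
print("best k:", best_k, "validation MSE:", scores[best_k])
```

The same loop applies to any model with tunable settings: replace `knn_predict` with the model's fit-and-predict step and the candidate list with the settings to compare. With large datasets, the cost grows with the number of candidates, which is the computational constraint noted above.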

Section 4.4 presented the results for the initial and final distance predictions, and several observations stand out. In Figure 5, extra trees and LGBM clearly achieved the highest performance. Unlike noise prediction, the best performance for both distance predictions occurred at the lowest noise levels for all the models, as expected. The behavior for the initial distance mirrors that for the final distance, as shown in Figure 5, Figure 6, Figure 7, and Figure 8. The overall results for random forest, XGB, LGBM, transformer, and extra trees are similar across all the metrics, with extra trees, transformer, and LGBM slightly ahead in almost all the metrics for both the initial and final distances, as shown in Table 7 and Table 8, respectively. In contrast, decision tree and SVR performed poorly overall. Moreover, the correlation coefficients show that the predicted distances exhibited indices greater than in relation to the real values for both the initial and final distances. Notably, in the initial distance prediction, the values for the XGB and LGBM models returned 'inf'. Further study is needed to determine the cause.

6. Conclusions

This paper proposed a method for predicting noise and distance from spectrum sensing signals using regression models. The experiments show that the proposed methods hold promise for predicting noise levels, as well as the initial and final distances between the PU and SU. The correlation coefficient for XGB is the highest and closest to one (Table 6), indicating a strong correlation between the predicted and actual noise values in the test database. The proposed methods can therefore benefit applications in telecommunications and networking by supporting the design of communication systems that meet the requirements for reliable and efficient data transfer.

Additionally, predictions of the initial and final distances between the PUs and SUs were presented. The experiments show that the proposed methods are promising for predicting distances between users. The correlation coefficients for transformer are the highest, exceeding (Table 7 and Table 8), which indicates a good correlation between the predicted and actual distances in the test database. Note that the number of possible noise levels is limited to seven ( to dBm/Hz), whereas the number of possible distances is not fixed, since each distance was chosen randomly within a certain range of the area. This may account for a difference in performance between the two prediction tasks. The proposed methods can potentially benefit numerous applications, including those involving signal attenuation and path loss, interference and frequency reuse, fading and multipath effects, localization and tracking, and power control.

Author Contributions

Conceptualization, M.V.; methodology, M.V.; software, M.V.; validation, M.V.; formal analysis, M.V.; investigation, M.V.; resources, M.V.; data curation, M.V.; writing—original draft preparation, M.V. and D.A.; writing—review and editing, M.V., D.A., A.C., C.C. and W.S.; visualization, M.V., D.A., A.C., C.C. and W.S.; supervision, M.V., A.C., C.C. and W.S.; project administration, M.V.; funding acquisition, M.V., C.C. and W.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

For this article, we generated a database as described in Section 3.2. All data are available upon request from the corresponding authors, Valadão M. and Sabino W.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Valadão, M.D.; Amoedo, D.; Costa, A.; Carvalho, C.; Sabino, W. Deep cooperative spectrum sensing based on residual neural network using feature extraction and random forest classifier. Sensors 2021, 21, 7146.

2. Lee, W.; Kim, M.; Cho, D.H. Deep cooperative sensing: Cooperative spectrum sensing based on convolutional neural networks. IEEE Trans. Veh. Technol. 2019, 68, 3005–3009.

3. Valadão, M.D.; Carvalho, C.B.; Júnior, W.S. Trends and challenges for the spectrum sensing in the next generation of communication systems. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-Taiwan), Taoyuan, Taiwan, 28–30 September 2020; pp. 1–2.

4. Valadão, M.D.; Amoedo, D.A.; Pereira, A.M.; Tavares, S.A.; Furtado, R.S.; Carvalho, C.B.; Da Costa, A.L.A.; Júnior, W.S. Cooperative spectrum sensing system using residual convolutional neural network. In Proceedings of the 2022 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 7–9 January 2022; pp. 1–5.

5. Valadão, M.D.; Júnior, W.S.; Carvalho, C.B. Trends and Challenges for the Spectrum Efficiency in NOMA and MIMO based Cognitive Radio in 5G Networks. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–12 January 2021; pp. 1–4.

6. Valadão, M.; Silva, L.; Serrão, M.; Guerreiro, W.; Furtado, V.; Freire, N.; Monteiro, G.; Craveiro, C. MobileNetV3-based Automatic Modulation Recognition for Low-Latency Spectrum Sensing. In Proceedings of the 2023 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 6–8 January 2023; pp. 1–5.

7. Gupta, M.S.; Kumar, K. Progression on spectrum sensing for cognitive radio networks: A survey, classification, challenges and future research issues. J. Netw. Comput. Appl. 2019, 143, 47–76.

8. Arjoune, Y.; Kaabouch, N. A comprehensive survey on spectrum sensing in cognitive radio networks: Recent advances, new challenges, and future research directions. Sensors 2019, 19, 126.

9. Shen, X.; Shi, D.; Peksi, S.; Gan, W.S. A multi-channel wireless active noise control headphone with coherence-based weight determination algorithm. J. Signal Process. Syst. 2022, 94, 811–819.

10. Cheon, B.W.; Kim, N.H. AWGN Removal Using Modified Steering Kernel and Image Matching. Appl. Sci. 2022, 12, 11588.

11. Ngo, T.; Kelley, B.; Rad, P. Deep learning based prediction of signal-to-noise ratio (SNR) for LTE and 5G systems. In Proceedings of the 2020 8th International Conference on Wireless Networks and Mobile Communications (WINCOM), Reims, France, 27–29 October 2020; pp. 1–6.

12. Nagah Amr, M.; ELAttar, H.M.; Abd El Azeem, M.H.; El Badawy, H. An Enhanced Indoor Positioning Technique Based on a Novel Received Signal Strength Indicator Distance Prediction and Correction Model. Sensors 2021, 21, 719.

13. Spüler, M.; Sarasola-Sanz, A.; Birbaumer, N.; Rosenstiel, W.; Ramos-Murguialday, A. Comparing metrics to evaluate performance of regression methods for decoding of neural signals. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 1083–1086.

14. Jain, P.; Choudhury, A.; Dutta, P.; Kalita, K.; Barsocchi, P. Random forest regression-based machine learning model for accurate estimation of fluid flow in curved pipes. Processes 2021, 9, 2095.

15. Wang, X.; Zhao, Y.; Pourpanah, F. Recent advances in deep learning. Int. J. Mach. Learn. Cybern. 2020, 11, 747–750.

16. Su, X.; Li, J.; Hua, Z. Transformer-based regression network for pansharpening remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5407423.

17. Yao, G.; Hu, Z. SNR Estimation Method based on SRS and DINet. In Proceedings of the 2023 15th International Conference on Computer Modeling and Simulation, Dalian, China, 16–18 June 2023; pp. 218–224.

18. Li, Y.; Bian, X.; Li, M. Denoising Generalization Performance of Channel Estimation in Multipath Time-Varying OFDM Systems. Sensors 2023, 23, 3102.

19. Chatelier, B.; Corlay, V.; Ciochina, C.; Coly, F.; Guillet, J. Influence of Dataset Parameters on the Performance of Direct UE Positioning via Deep Learning. arXiv 2023, arXiv:2304.02308.

20. Wang, C.; Xi, J.; Xia, C.; Xu, C.; Duan, Y. Indoor fingerprint positioning method based on real 5G signals. In Proceedings of the 2023 7th International Conference on Machine Learning and Soft Computing, Chongqing, China, 5–7 January 2023; pp. 205–210.

21. Conti, A.; Morselli, F.; Liu, Z.; Bartoletti, S.; Mazuelas, S.; Lindsey, W.C.; Win, M.Z. Location awareness in beyond 5G networks. IEEE Commun. Mag. 2021, 59, 22–27.

22. Yang, H.; Xie, X.; Kadoch, M. Machine learning techniques and a case study for intelligent wireless networks. IEEE Netw. 2020, 34, 208–215.

23. Arjoune, Y.; Kaabouch, N. On spectrum sensing, a machine learning method for cognitive radio systems. In Proceedings of the 2019 IEEE International Conference on Electro Information Technology (EIT), Brookings, SD, USA, 20–22 May 2019; pp. 333–338.

24. Chicco, D.; Warrens, M.J.; Jurman, G. The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation. PeerJ Comput. Sci. 2021, 7, e623.

25. Cichoń, K.; Kliks, A.; Bogucka, H. Energy-efficient cooperative spectrum sensing: A survey. IEEE Commun. Surv. Tutor. 2016, 18, 1861–1886.

26. Segal, M.R. Machine Learning Benchmarks and Random Forest Regression; UCSF: Center for Bioinformatics and Molecular Biostatistics: San Francisco, CA, USA, 2004; Available online: https://escholarship.org/uc/item/35x3v9t4 (accessed on 18 March 2025).

27. Luo, H.; Cheng, F.; Yu, H.; Yi, Y. SDTR: Soft decision tree regressor for tabular data. IEEE Access 2021, 9, 55999–56011.

28. Ahmad, M.W.; Reynolds, J.; Rezgui, Y. Predictive modelling for solar thermal energy systems: A comparison of support vector regression, random forest, extra trees and regression trees. J. Clean. Prod. 2018, 203, 810–821.

29. Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

30. Zhang, X.; Yan, C.; Gao, C.; Malin, B.A.; Chen, Y. Predicting missing values in medical data via XGBoost regression. J. Healthc. Inform. Res. 2020, 4, 383–394.

31. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

32. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 3146–3154.

33. Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Stat. Comput. 2004, 14, 199–222.

34. Min, E.; Chen, R.; Bian, Y.; Xu, T.; Zhao, K.; Huang, W.; Zhao, P.; Huang, J.; Ananiadou, S.; Rong, Y. Transformer for graphs: An overview from architecture perspective. arXiv 2022, arXiv:2202.08455.

35. Botchkarev, A. Evaluating performance of regression machine learning models using multiple error metrics in Azure Machine Learning Studio. SSRN Electron. J. 2018, 3177507.

36. Benesty, J.; Chen, J.; Huang, Y.; Cohen, I. Pearson correlation coefficient. In Noise Reduction in Speech Processing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–4.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).