1. Introduction

Spending prolonged periods in indoor environments can have an effect on people’s emotional stability, creativity, and stress levels, which are heavily dependent on the physical and environmental elements of architectural spaces [1,2,3,4]. However, traditional design processes often rely on intuition and subjective judgments to gauge users’ potential emotional experiences; thus, they cannot accurately capture unconscious and immediate emotional states [4,5]. Methods such as interviews and surveys commonly used in prior research focus mainly on conscious and verbally expressed emotions but do not fully capture subtle, instantaneous affective responses elicited by spatial stimuli [6,7]. To overcome these challenges, neuroscientific approaches have been recently introduced. In particular, electroencephalography (EEG) offers high temporal resolution and noninvasive measurement capabilities, enabling the real-time evaluation of users’ unconscious affective and cognitive states [8,9].

Among the various EEG analysis techniques, event-related potential (ERP) analysis is a precise and powerful tool for measuring immediate emotional responses to specific stimuli [7,10,11,12]. According to neuroscientific research, the ERP components N200, P300, and late positive potential (LPP) are closely associated with the emotional valence of stimuli (e.g., preference, positivity, or negativity). When users like or dislike a given spatial stimulus, measurable differences in these ERP components can be observed. Further, recent artificial intelligence research shows that hybrid models combining a convolutional neural network (CNN) with long short-term memory (LSTM), which capture both the spatial and the temporal properties of EEG data, can effectively learn subtle neural patterns to improve the accuracy of emotion classification [13]. Therefore, we hypothesize the following:

EEG patterns, particularly ERP components (e.g., N200, P300, and LPP), will significantly differ between preferred and non-preferred stimuli.

A deep learning model trained on EEG signals can effectively detect these distinctions in ERP components, thereby achieving a high accuracy in classifying affective responses (i.e., preferred vs non-preferred) to architectural spaces.

By quantitatively measuring users’ affective experiences in architectural environments using EEG and ERP analysis and integrating these findings with deep learning techniques, we propose a methodology for personalized design. This approach is expected to overcome the limitations of traditional architectural design methods while contributing to a more scientific and objective process for affective architectural design.

This paper briefly reviews the previous research on the relationships between architectural spaces, emotions, and preferences and the theoretical background of EEG-based emotion analysis. It then details the procedures used to preprocess and analyze the EEG data and design and evaluate the model. Through these steps, we aim to establish a process for quantitatively understanding users’ emotional responses to architectural spaces and building a foundational dataset for personalized space design. Ultimately, we show the potential of neurophysiological approaches in architectural practice for incorporating user experiences more deeply, contributing to developments in affective architecture and real-time adaptive space design.

2. Literature Review

2.1. Physical Characteristics of Architectural Spaces and Their Influence on Emotions and Preferences

Several studies have confirmed that the physical attributes of an architectural space significantly affect users’ psychological responses and spatial preferences (see Table 1) [14,15]. Regarding spatial form, curved structures can provide psychological comfort and lead to more positive evaluations, while an overabundance of curves sometimes creates visual confusion or stress [16,17,18,19,20]. As for lighting, natural daylight tends to reduce stress and foster positive emotional states, thereby enhancing preference, whereas artificial lighting can elicit a sense of emotional distance in certain cases [21]. High ceilings can increase openness and creativity, whereas lower ceilings may enhance concentration but risk inducing a sense of confinement [22]. Other design elements, such as color, materials, and acoustics, also deliver diverse psychological experiences to users and serve as key factors in shaping overall spatial preference [14]. For example, warm colors and natural materials often foster psychological comfort and increase preference, while cooler tones or synthetic materials may create a sense of detachment.

Such emotional responses triggered by various physical elements have a direct impact on the assessment of buildings and act more broadly as a crucial indicator of how well a space might be utilized or succeed.

These studies have relied on subjective surveys to evaluate emotions or preferences regarding space. The self-reporting approach can be limited because of biases in cognition or memory distortion, underscoring the need for a more objective, quantifiable approach to understanding how architectural environments relate to user emotions.

To address this limitation, this study proposes leveraging EEG-based bio-signal analysis. EEG captures real-time neural responses to specific architectural stimuli—such as images of spaces or virtual reality (VR) environments—thereby quantifying the user’s neurophysiological reactions. By examining ERP components, one can derive meaningful differences in emotional responses. This approach overcomes some of the shortcomings of traditional subjective evaluation methods and may help interpret systematically the causal relationship between emotional responses and the physical properties of architectural spaces.

This study aims to contribute to user-centered architectural design by measuring emotional and cognitive responses objectively and quantitatively via EEG, employing it for preference classification in architectural contexts. An approach like this can effectively integrate emotional factors—often overlooked in traditional design processes—and ultimately enhance user satisfaction and the potential success of a space.

2.2. Theoretical Background of EEG-Based Emotion Analysis

EEG is a noninvasive method for measuring the brain’s electrical activity in real time through electrodes placed on the scalp [23]. It offers a high temporal resolution on the scale of milliseconds, capturing momentary emotional and cognitive processes. EEG signals span multiple frequency bands, from delta (0.5–4 Hz) to gamma (30–100 Hz or higher), each reflecting various cognitive states [24,25]. Because of these features, EEG is widely applied in fields such as learning, decision-making, and emotion recognition. Among EEG-based methods, ERP analysis focuses on time-locking the EEG signal to the onset of specific stimuli, thereby isolating and quantifying the brain’s rapid responses to those stimuli [26]. By observing ERP components such as N100, N200, P300, and LPP following spatial stimuli, one can quantitatively infer how users perceive and evaluate those stimuli [27].
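The time-locking idea can be illustrated with a minimal sketch in plain Python. It is not the pipeline used in this study: the function name `average_erp` and its arguments are hypothetical, and it handles a single channel only. Epochs are cut around each stimulus onset and averaged sample by sample, so activity that is consistently locked to the onset is retained while unrelated fluctuations tend to cancel.

```python
def average_erp(signal, onsets, pre, post):
    """Average stimulus-locked epochs from a single-channel signal.

    signal: list of samples
    onsets: sample indices of stimulus onsets
    pre, post: number of samples kept before and after each onset
    """
    # Keep only epochs that lie fully inside the recording
    epochs = [signal[o - pre:o + post] for o in onsets
              if o - pre >= 0 and o + post <= len(signal)]
    if not epochs:
        raise ValueError("no complete epochs")
    n = len(epochs)
    # Sample-by-sample mean across epochs
    return [sum(ep[i] for ep in epochs) / n for i in range(pre + post)]


# Toy signal: two onsets, each followed immediately by a deflection
sig = [0.0] * 30
sig[10] = 2.0
sig[20] = 4.0
print(average_erp(sig, [10, 20], pre=2, post=3))  # [0.0, 0.0, 3.0, 0.0, 0.0]
```

In practice the same averaging would be applied per channel and per condition after artifact rejection.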

EEG data can be analyzed from different perspectives (time, frequency, or spatial domain), as summarized in Table 2. For instance, a time-domain analysis is well suited for ERP assessments, while a frequency-domain analysis uses specific bands (e.g., alpha and beta) to gauge the cognitive load or emotional state [28,29,30,31,32,33,34,35,36].

In the architectural domain, ERP analysis has emerged as a powerful technique for quantifying psychological responses to specific spatial elements. For example, N100 and N200 reflect the recognition of stimuli or the degree of attentional shift, P300 is associated with positive emotions and decision-making, and LPP represents sustained emotional processing over time.

Table 3 summarizes the major ERP components, their typical time ranges, main cognitive functions, and their relevance to architectural design [46,47,48,49,50,51].

Because EEG provides real-time emotional analysis and more objective data than self-reported measures, its potential has been recognized in diverse applications such as human–computer interactions and brain–computer interfaces [52,53]. For instance, EEG in gaming or VR environments can detect real-time changes in user state, adjusting interfaces or content dynamically [54,55,56]. The wide range of frequency information (delta to gamma) also enables the simultaneous interpretation of various states like stress, relaxation, or concentration [24,27,57,58].

However, EEG is sensitive to noise from eye blinks, electromyographic signals, and electrical interference, and its relatively low spatial resolution complicates pinpointing localized activations in the brain [52,53]. Moreover, even when exposed to identical stimuli, EEG patterns can vary greatly among individuals, making it challenging to construct generalized models [59]. To overcome these problems, sophisticated preprocessing methods such as independent component analysis or empirical mode decomposition are needed, possibly in tandem with multi-modality approaches (EEG plus functional magnetic resonance imaging) and large-scale data training using deep learning [60,61].

Table 4 summarizes the key advantages and limitations of EEG-based emotion analysis.

Recently, an increasing number of empirical studies in the architectural domain have applied EEG. Table 5 presents representative examples of this research and their key findings.

As shown in Table 5, EEG can quantitatively measure users’ emotional and cognitive reactions in real time, providing a systematic interpretation of how design factors in an architectural environment affect human response [74]. When combined with VR or augmented reality technologies, EEG also facilitates experiments on design scenarios during early phases of construction [64,72]. Although the field of neuro-architecture is at a relatively early stage and still requires larger sample sizes and studies across various environmental conditions, integrating EEG throughout the design process can effectively incorporate user emotional factors. This may ultimately advance architectural environments that enhance quality of life [58,75,76].

2.3. Deep Learning-Based EEG Data Analysis

Deep learning is a machine learning technique based on multilayer artificial neural networks, modeled after the structure of the human brain. It excels at learning complex, nonlinear data patterns and is particularly effective for unstructured data such as images, audio, and time series [77]. Since EEG is a complex biological signal, deep learning has recently garnered attention for its capacity to automatically extract meaningful features from such data. Deep learning models comprise an input layer, multiple hidden layers, and an output layer, and are typically trained via backpropagation. Optimizers such as stochastic gradient descent, adaptive moment estimation (Adam), and root-mean-square propagation are used to update the weights, while regularization methods such as dropout and batch normalization are used to reduce overfitting and enhance generalizability [78,79].

As outlined in Table 6, activation functions provide nonlinear transformations that help learn the complex spatiotemporal patterns in multichannel EEG data. EEG’s high temporal resolution and inter-channel spatial correlation increase the difficulty of analysis [80,81]. Through its layered architecture, deep learning can jointly learn these spatiotemporal characteristics, positioning the method as effective for EEG-based emotion recognition or cognitive state classification [61,82].

Different deep learning models are applied depending on data characteristics and analytical objectives. CNNs excel at extracting spatial features in multichannel EEG data but may be limited in modeling temporal dependencies. In contrast, recurrent neural networks and variants like LSTM are better at handling time-series continuity but can be computationally expensive [77,83,84,85]. Gated recurrent units offer a simpler structure than LSTM, reducing computational load, whereas the transformer model leverages parallel processing and attention mechanisms for large-scale data learning but demands significant computational resources [78,86].

Originally praised for their performance in image processing, CNNs have shown notable success in detecting spatial patterns across multiple EEG channels [87,88,89]. The convolutional layers in a CNN capture local features in multichannel EEG data, while pooling layers reduce spatial dimensions to mitigate noise and improve computational efficiency [77,88]. Meanwhile, LSTM, a specialized recurrent neural network architecture, addresses the challenge of long-term dependencies in sequential EEG data [85,90,91]. LSTM alleviates vanishing or exploding gradients by incorporating input, forget, and output gates, making it particularly effective for ERP interval analysis or irregular EEG pattern detection [92,93,94,95].
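For reference, the gating mechanism mentioned above is commonly written as follows. This is the standard textbook formulation rather than a description of the specific model used in this study, and the symbols are assumptions introduced here: $x_t$ is the input at time step $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication, and $W$, $U$, $b$ learned parameters.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The additive update of $c_t$ is what allows gradients to propagate across many time steps without shrinking as quickly as in a plain recurrent network.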

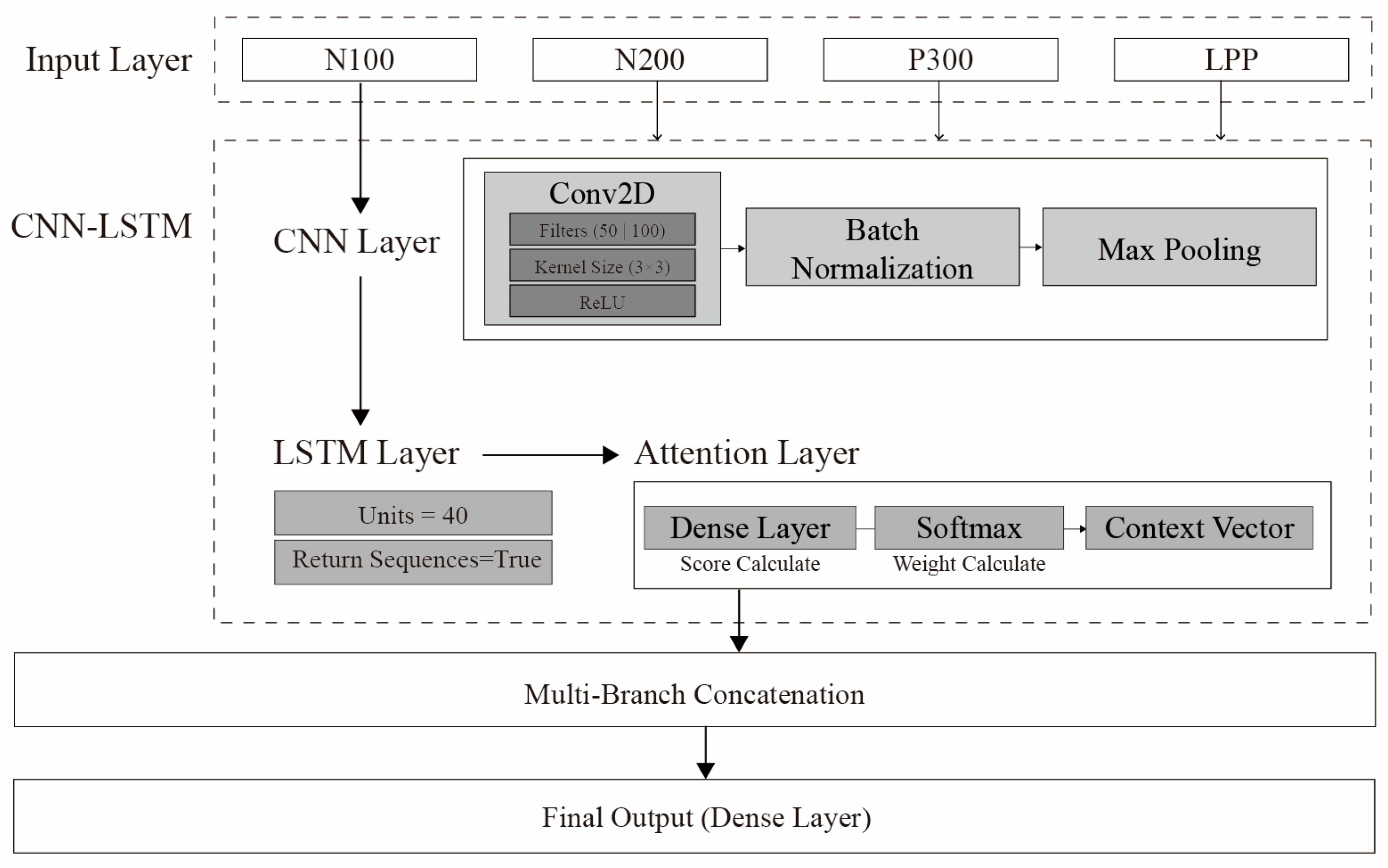

Recently, hybrid CNN-LSTM approaches, which combine the ability of CNNs to extract spatial features with the ability of LSTM to capture temporal dependencies, have shown a superior accuracy and generalization over single-model alternatives [96].

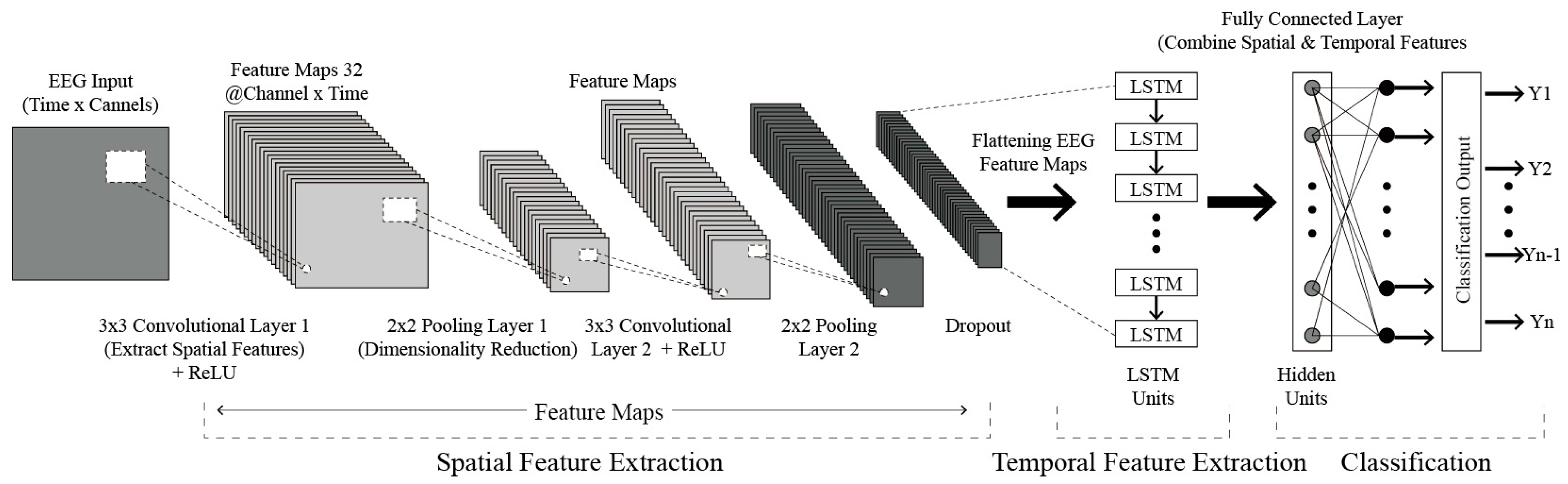

Table 7 briefly compares CNN, LSTM, and CNN-LSTM models, while Figure 1 visualizes an example CNN-LSTM architecture [96,97].

Empirical studies show that EEG-based deep learning models perform well in various tasks, including emotion recognition, motor imagery classification, seizure detection, and sleep stage classification [92,99,100,101,102]. Table 8 summarizes key studies that achieved accuracies of approximately 85–90% or higher in these tasks [91,93,99,103,104,105,106]. CNN-LSTM hybrid models have recorded over 90% accuracy in emotion recognition, significantly expanding the potential of EEG-based brain–computer interface applications [102,107].

Building on these previous works, the present study applies a CNN-LSTM hybrid model to EEG emotion analysis for classifying users’ emotional preference toward architectural stimuli. Our goal is to achieve a high classification accuracy and robust generalization, while simplifying complex preprocessing steps.

3. Materials and Methods

3.1. Experimental Setup

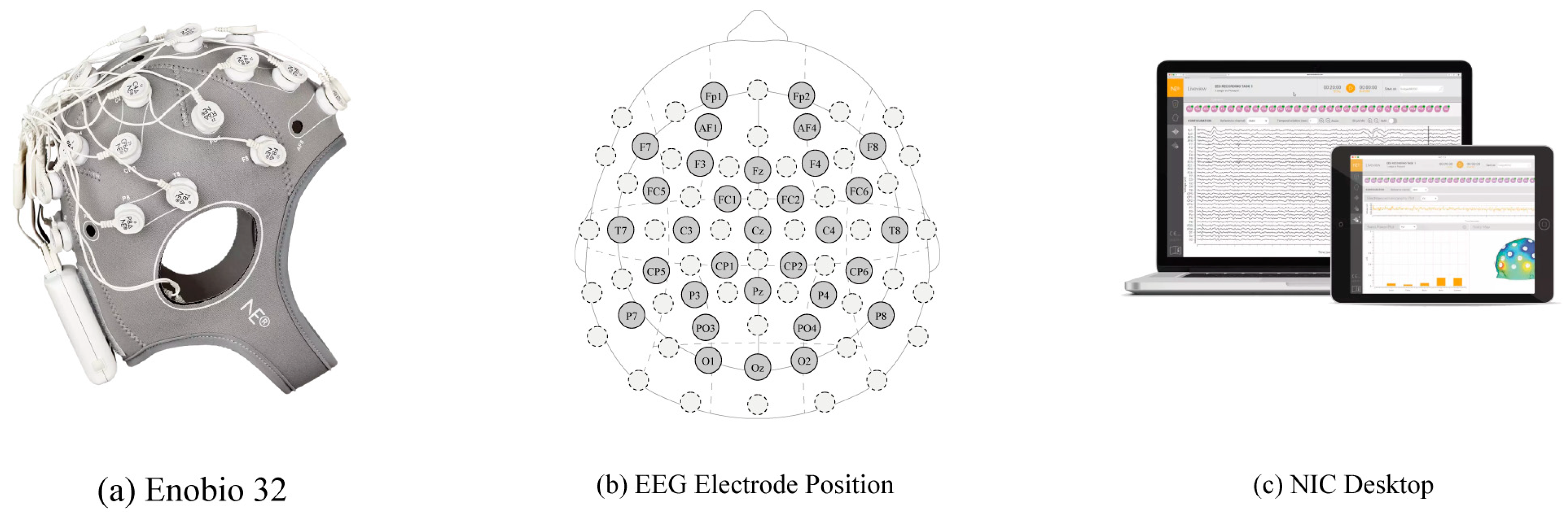

EEG data were used to quantitatively analyze user preferences and emotional responses to architectural stimuli. We employed an Enobio 32 (Neuroelectrics, Barcelona, Spain)—a 32-channel wireless EEG system—and NIC software (NIC v2.1.3.11) for precise, reproducible data collection.

Figure 2 shows the EEG device with channel positions and the NIC software platform.

The Enobio 32 noninvasively measures high-density neural signals at a 24-bit resolution [112,113]. It supports a 500 Hz sampling rate and covers key EEG frequency bands (delta, theta, alpha, beta, and gamma) [113,114]. Its electrode placement follows the international 10–20 standard, which includes frontal (Fz, F3, and F4), parietal (Pz, P3, and P4), and occipital regions, all closely tied to emotion and cognition [7,13,112,115].

The NIC software provides real-time EEG visualization, time–frequency analysis, and 3D brainwave mapping [112,114,116,117]. Data are stored in multiple formats (e.g., CSV and EDF). A 60 Hz line filter and visual bandpass filtering from 1 to 50 Hz were applied to minimize noise, focusing especially on alpha and beta bands, which are strongly associated with emotional responses [26,118,119,120]. Additionally, the wireless setup lets participants move more naturally, reducing external signal interference and user anxiety, thus improving data reliability [112,121].

For architectural stimuli, we used Python 3.9 and the google-images-search library (https://pypi.org/project/Google-Images-Search/, accessed on 25 January 2025) to collect images of 25 architectural styles (e.g., modern, contemporary, and rustic) [122]. All images had a minimum resolution of 1920 × 1080 pixels and were standardized for brightness, color, and contrast to minimize visual discrepancies [123,124].

During the experiment, each image was presented in a random order on a monitor for 5 s, followed by a 2 s rest interval to reduce participant fatigue and clearly capture EEG responses (ERP) [13,125]. After viewing each image, participants indicated their response, namely like (1), neutral (2), or dislike (3), via a keyboard input, which was synchronized with the EEG signal [126].

The NIC software’s event markers align image-presentation timing and participant-response timing with the EEG signal [127,128]. This enables precise mapping between each image’s preference rating and the corresponding EEG pattern. EEG and event data were stored in various file formats (.easy, .edf, and .nedf), ensuring a broad compatibility with different analytical tools. The header files contain participant IDs, the experiment date and time, channel information, and event information that precisely matches stimulus onsets and responses.

3.2. Pilot Experiment

Preliminary Validation

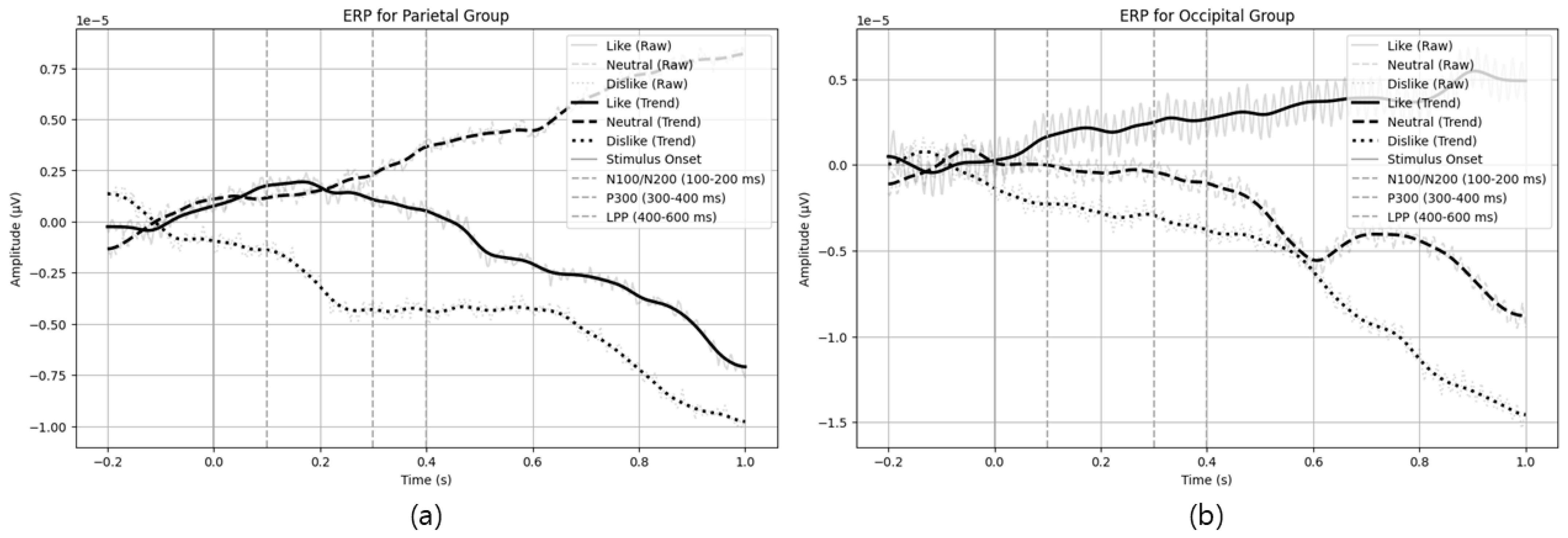

Before conducting the main experiment, we performed a pilot experiment with eight architecture majors to verify the suitability of stimulus presentation and data analysis procedures. Participants watched multiple architectural images while EEG was recorded, focusing in particular on ERP components (N100/N200, P300, and LPP) [129,130]. Figure 3 illustrates example ERP patterns in parietal and occipital channels, confirming the experimental design was appropriate for measuring emotional responses.

From the pilot experiment, parietal channels showed more negative amplitudes (N100/N200 range) and sustained positive amplitude in the LPP range for ‘like’ (preferred) stimuli, indicating robust initial sensory processing and prolonged emotional engagement. In contrast, ‘dislike’ (non-preferred) stimuli elicited a higher positive amplitude near the P300 range, suggesting a deeper cognitive processing of negative stimuli. Similar trends were observed in occipital channels, where preferred stimuli showed strong negative amplitudes in the 0.1–0.2 s interval, and non-preferred stimuli displayed positive amplitude increases around P300. These ERP differences reflect variations in visual and cognitive processing triggered by the emotional value of the stimuli.

Table 9 summarizes the observed ERP features and interpretations for each stimulus type (like, neutral, and dislike).

The pilot results indicate that this research protocol is indeed suitable for exploring emotional responses; stable EEG patterns were obtained [131,132,133,134]. Building on these insights, we further investigate the potential to predict user preference and analyze architectural elements that induce affective responses in the main experiment.

3.3. Experiment Design and Procedure

3.3.1. Participants

The main experiment involved 40 participants (20 males and 20 females, average age 23.98 years), including architecture students and practitioners. A G*Power analysis indicated an optimal sample size of around 32–34, but 40 participants were recruited to accommodate potential dropouts [135,136,137,138,139,140,141,142,143].

Table 10 summarizes the age distribution of the participants. Selection criteria accounted for the influence of architectural knowledge on spatial emotion evaluation, and participants reported no neurological or psychiatric conditions. All participants had normal or corrected-to-normal vision, and lifestyle factors that might affect EEG signals (e.g., excessive alcohol/caffeine consumption and smoking) were also controlled [26,135,136,137,138,139]. This study was approved by the Institutional Review Board (IRB) at Hanyang University (HYUIRB-202504-006), and all participants provided written informed consent [144].

3.3.2. Stimuli Selection and Categorization

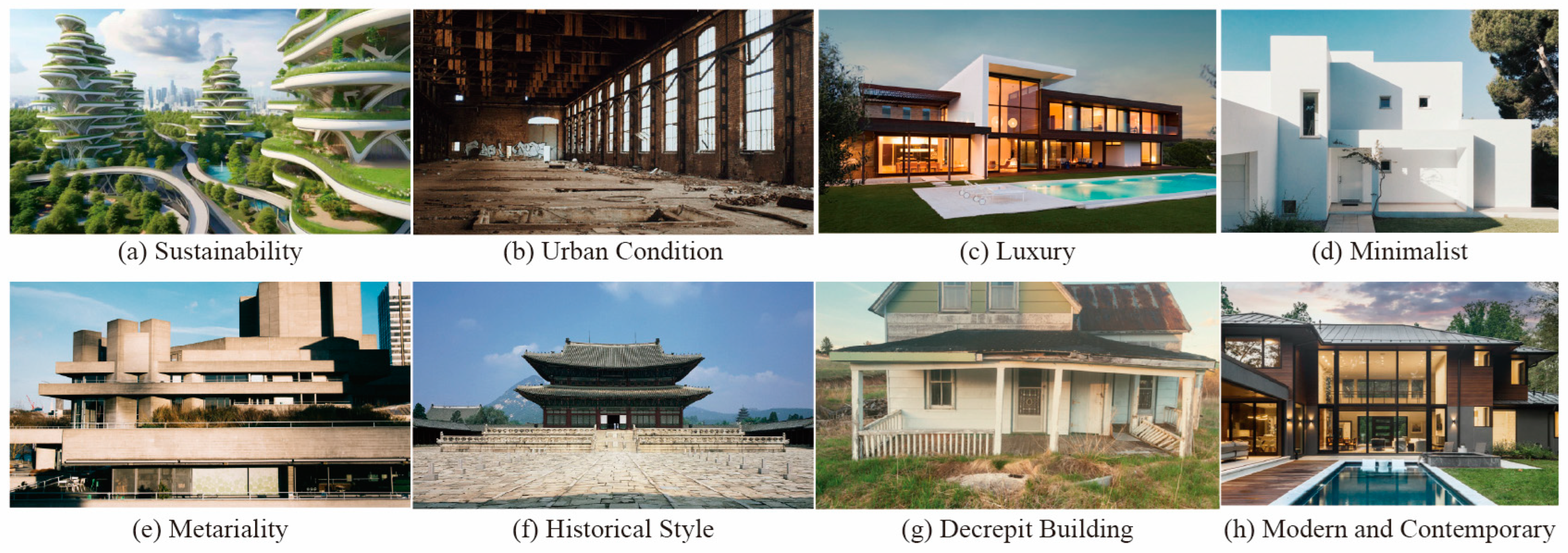

To evaluate neural responses to various architectural styles, we initially referred to 25 distinct design styles proposed in previous research [145]. These styles were chosen based on (1) diversity (covering cultural, regional, and temporal variations), (2) comparability (visually distinctive characteristics enabling comparative analysis), and (3) popularity and recognition. For each of the 25 styles, we used Google Images API (SerpApi) to crawl approximately 10 candidate images per style, resulting in an initial pool of 250 images.

To ensure the visual consistency and reliability of EEG data, we applied the following screening procedures:

Only high-resolution images of at least 2 megapixels were selected to maintain visual clarity.

Brightness, luminance, and contrast were standardized to eliminate unnecessary visual biases.

Images were presented in a random order to mitigate learning effects.

Duplicate or low-quality images were excluded to enhance data reliability.

Additionally, each image was verified by two architecture experts to ensure it accurately represented its intended category (e.g., visible eco-friendly elements for Sustainability, deteriorated facades for Urban Condition). Any image that did not clearly exhibit category-specific attributes or that showed irrelevant content was removed.

As a result, we finalized 100 images (four images per style on average) for the EEG experiment. However, for illustrative clarity, Figure 4 shows eight representative styles (Sustainability, Urban Condition, Luxury, Minimalist, Materiality, Historical Style, Decrepit Buildings, and Modern and Contemporary) along with typical features characteristic of each. By covering a broad spectrum of aesthetic and functional attributes, these styles can elicit varying degrees of liking or disliking among users, making them well suited for EEG-based affective analysis.

3.3.3. Experimental Setup and Environment

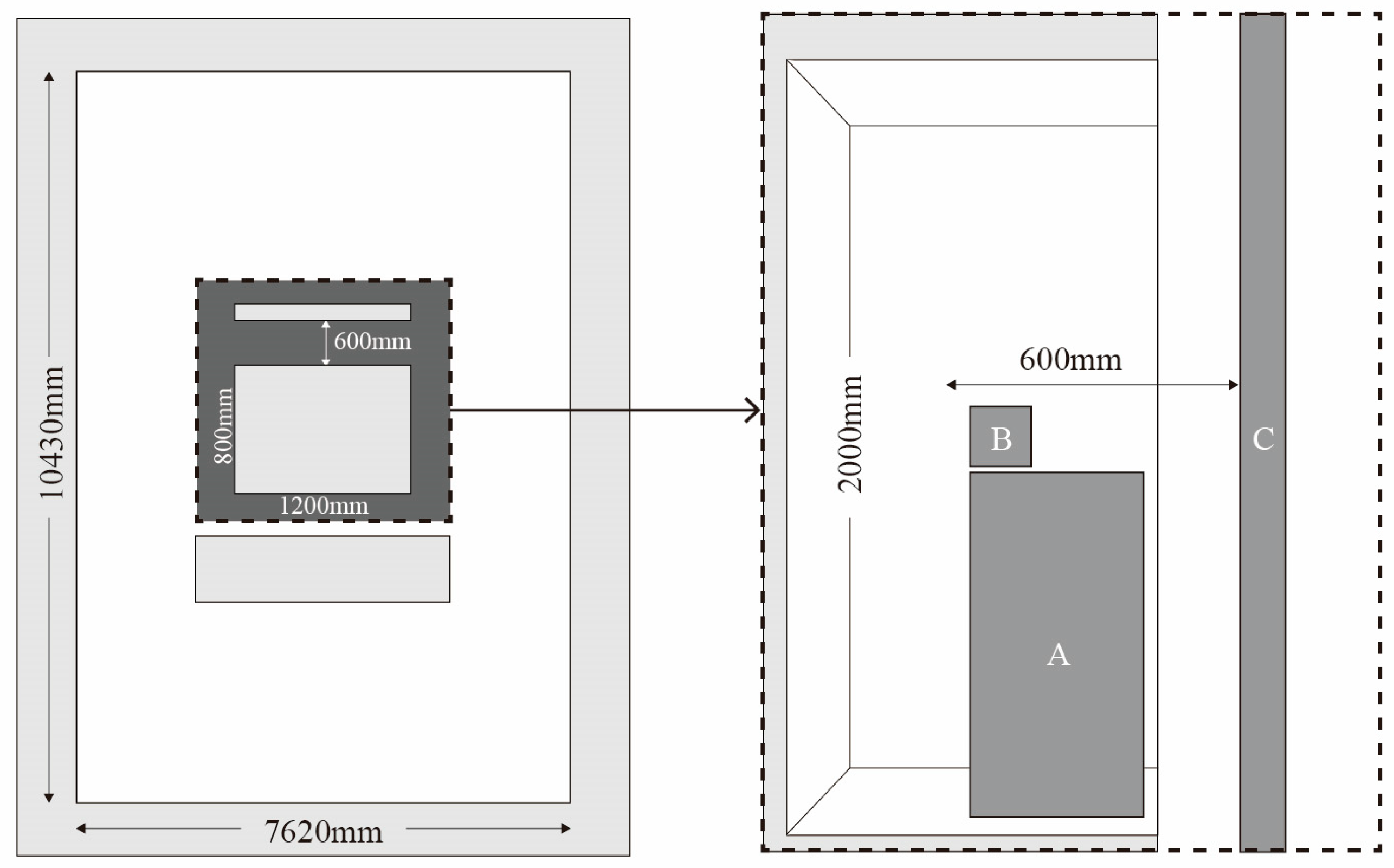

Experiments took place in Room 410 of the Design Computing Lab at Hanyang University’s Science and Technology building. The lab space measures approximately 7620 × 10,430 × 2640 mm. A booth measuring 800 × 1200 × 2000 mm was placed at the center, with interior surfaces finished in aluminum-coated film to provide uniform lighting and block external electromagnetic interference [53,146,147]. The monitor (1920 × 1080 pixels resolution) was positioned 60 cm from participants (Figure 5).

3.3.4. Experimental Procedure

Participants were seated in a noise-controlled environment. Prior to the experiment, they were given the following instructions:

Avoid excessive movement to minimize EEG artifacts.

Maintain a visual fixation on the screen during the stimulus presentation.

Rate each image based on their intuitive emotional response.

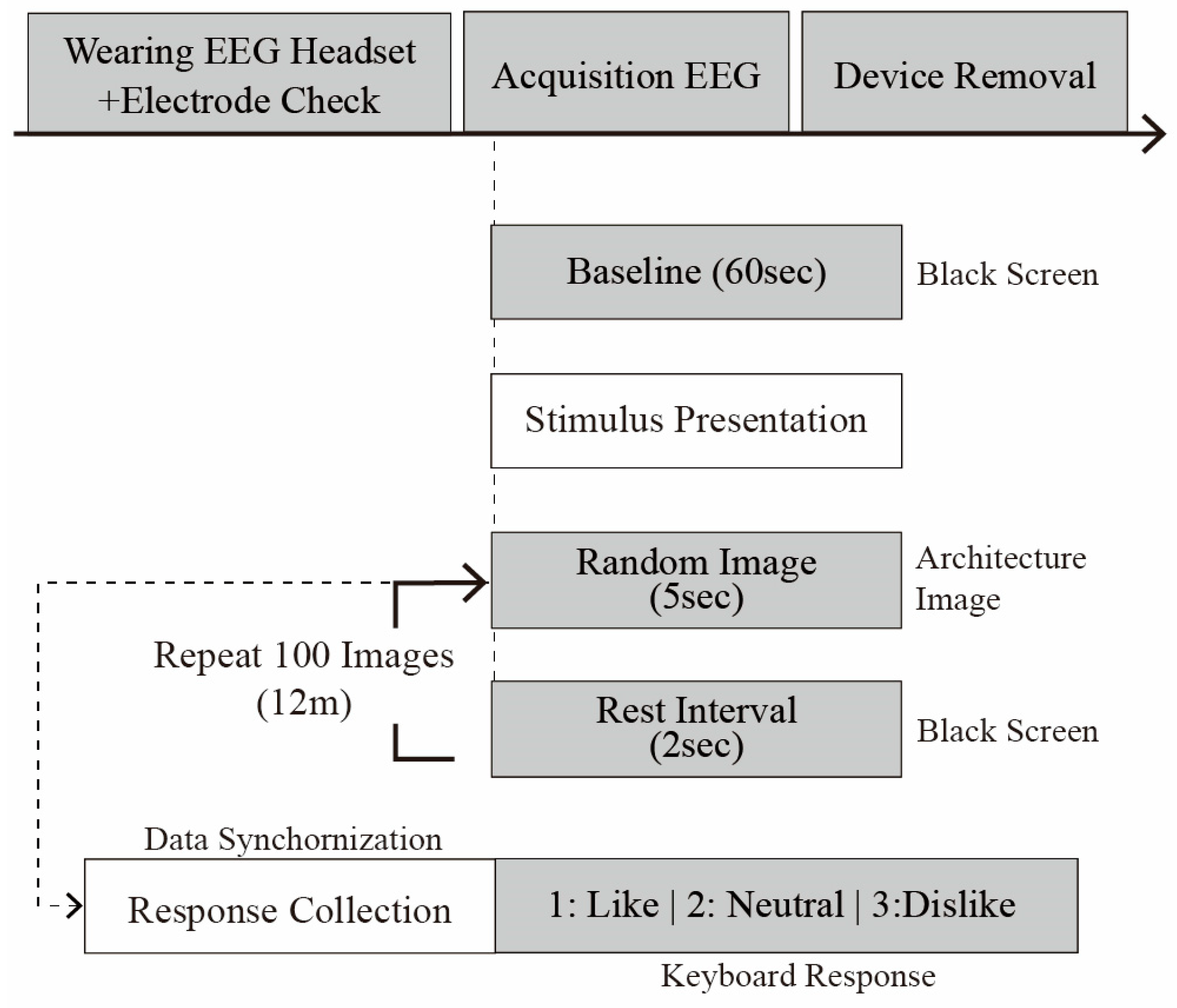

To establish baseline resting-state brain activity, participants first gazed at a black screen for 60 s while EEG signals were recorded. Subsequently, architectural images were presented sequentially, and EEG responses were continuously recorded. Each trial proceeded as follows (see Figure 6):

Stimulus Presentation (t = 0): an architectural image was presented for 5 s.

Participants rated the image using a keyboard, selecting one of three options (1: like; 2: neutral; or 3: dislike).

An interval of 2 s was introduced between each stimulus to prevent neural carryover effects.

The trial sequence was repeated 100 times for a total duration of approximately 12 min.
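The stated session length follows from the trial timing. The sketch below is an illustration under simple assumptions rather than the presentation script used in the study: it shuffles the image order, places one onset every 7 s (5 s stimulus plus 2 s interval), and assumes ratings do not add extra time. The function name `build_schedule` and the seed are hypothetical.

```python
import random

STIM_S = 5.0  # image presentation time in seconds (from the text)
REST_S = 2.0  # inter-stimulus interval in seconds (from the text)


def build_schedule(n_images, seed=None):
    """Return (order, onsets): a shuffled image order and onset times in seconds."""
    rng = random.Random(seed)
    order = list(range(n_images))
    rng.shuffle(order)  # random presentation order
    onsets = [i * (STIM_S + REST_S) for i in range(n_images)]
    return order, onsets


order, onsets = build_schedule(100, seed=42)
total_min = (onsets[-1] + STIM_S + REST_S) / 60
print(f"{total_min:.1f} min")  # 11.7 min
```

With 100 trials of 7 s each, the total is 700 s, which matches the reported duration of approximately 12 min.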

The EEG data were stored in multiple formats (.easy, .edf, .nedf, and .info). Event files recorded the timestamp for image presentation, response inputs, and the preference code (i.e., 1: like, 2: neutral, and 3: dislike).

4. Data Analysis and Model Development

4.1. EEG Data Preprocessing

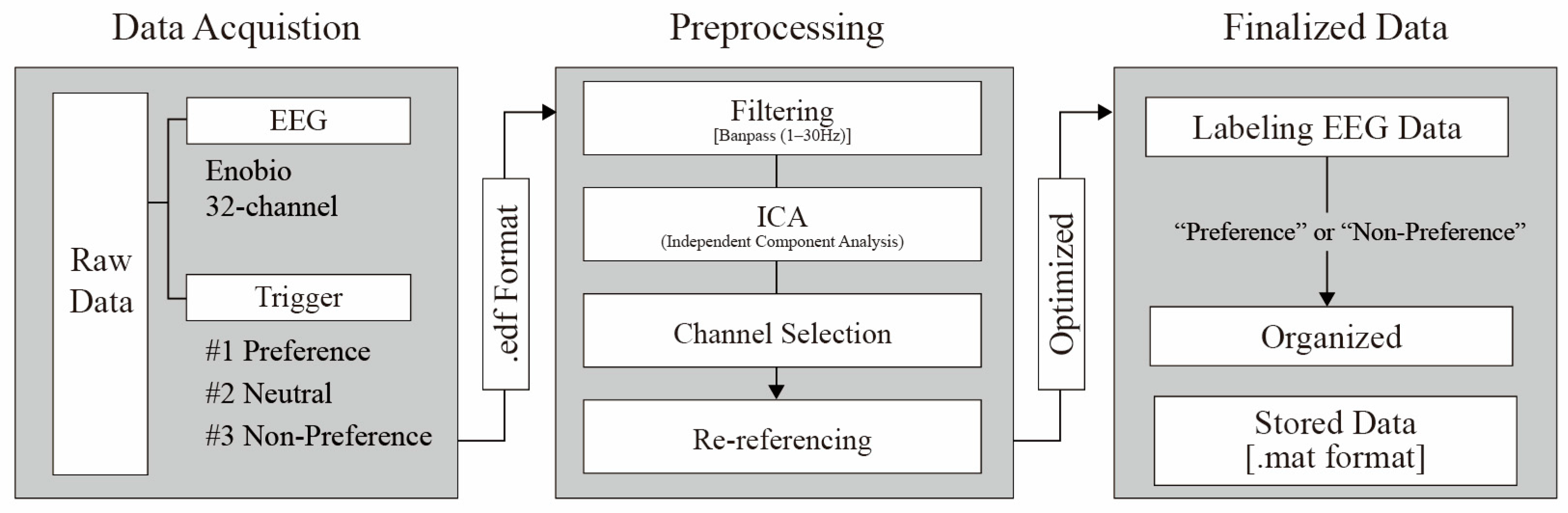

To improve signal quality and ensure accurate analysis, we conducted a multistep preprocessing procedure on the EEG data [148,149]. This included (1) filtering and artifact removal, (2) channel selection and re-referencing, and (3) final dataset construction, as shown in Figure 7.

The initial dataset comprised 4000 EEG trials collected from 40 participants, with each participant evaluating 100 architectural images. EEG signals were recorded at a sampling rate of 500 Hz using the 10–20 electrode system, with a focus on the parietal (Pz, P3, and P4) and occipital (Oz, O1, and O2) regions. Each trial included a 200 ms pre-stimulus baseline (−200 ms to 0 ms) followed by a 5-s stimulus response window (0–5 s).
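At 500 Hz, the −200 ms to 0 ms baseline corresponds to 100 samples and the 5-s response window to 2500 samples. The following sketch shows how one such trial could be cut from a continuous single-channel recording. Subtracting the mean of the baseline interval is a common convention that is assumed here, not a step stated in the text, and the function name `extract_epoch` is hypothetical.

```python
FS = 500          # sampling rate in Hz (from the text)
BASELINE_S = 0.2  # baseline interval: -200 ms to 0 ms
RESPONSE_S = 5.0  # response window: 0 to 5 s


def extract_epoch(signal, onset, fs=FS):
    """Cut one baseline-corrected epoch around a stimulus onset (sample index)."""
    n_base = round(BASELINE_S * fs)  # 100 samples at 500 Hz
    n_resp = round(RESPONSE_S * fs)  # 2500 samples at 500 Hz
    start, stop = onset - n_base, onset + n_resp
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    segment = signal[start:stop]
    # Assumed convention: subtract the mean of the pre-stimulus interval
    baseline_mean = sum(segment[:n_base]) / n_base
    return [x - baseline_mean for x in segment]


# Toy recording: constant 3.0 before the onset at sample 200, 5.0 afterwards
recording = [3.0] * 200 + [5.0] * 3000
epoch = extract_epoch(recording, onset=200)
print(len(epoch), epoch[0], epoch[100])  # 2600 0.0 2.0
```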

First, a finite impulse response filter (order = 2048, Hamming window) was applied to band-pass the EEG signals within the 1–30 Hz range [150,151]. This preserved the frequency bands most relevant to emotional and cognitive processing, namely the alpha (8–12 Hz) and beta (12–30 Hz) bands, while attenuating low- and high-frequency noise. To eliminate power line interference, a fourth-order Butterworth notch filter was applied at 60 Hz.
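To make the band-pass step concrete, the sketch below designs a Hamming-windowed sinc filter of order 2048 in plain Python and checks its gain at three frequencies. It is an illustration only and not the EEGLAB routine used in the study: the function names are hypothetical, 1 Hz and 30 Hz are treated as cutoff frequencies (an assumption, since toolboxes differ in how they define band edges), and the 60 Hz Butterworth notch is not reproduced.

```python
import cmath
import math


def design_bandpass(order, low_hz, high_hz, fs):
    """Hamming-windowed sinc band-pass FIR; returns order + 1 coefficients."""
    def sinc(x):
        return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

    mid = order / 2
    taps = []
    for n in range(order + 1):
        k = n - mid
        # Ideal band-pass = low-pass at high_hz minus low-pass at low_hz
        ideal = ((2 * high_hz / fs) * sinc(2 * high_hz / fs * k)
                 - (2 * low_hz / fs) * sinc(2 * low_hz / fs * k))
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / order)  # Hamming
        taps.append(ideal * window)
    return taps


def gain(taps, freq_hz, fs):
    """Magnitude of the filter's frequency response at one frequency."""
    w = 2 * math.pi * freq_hz / fs
    return abs(sum(h * cmath.exp(-1j * w * n) for n, h in enumerate(taps)))


taps = design_bandpass(order=2048, low_hz=1.0, high_hz=30.0, fs=500.0)
for f in (0.0, 10.0, 60.0):
    print(f"{f:5.1f} Hz gain = {gain(taps, f, 500.0):.3f}")
```

The gain should be close to 1 inside the pass band (e.g., 10 Hz, in the alpha range) and close to 0 at DC and at 60 Hz. A long filter such as this one gives a narrow transition band, which matters for the 1 Hz edge.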

Next, we conducted an independent component analysis to identify and remove physiological artifacts such as eye blinks, muscle movements, and cardiac signals. Specifically, the ‘pop_runica’ function in the EEGLAB toolbox was used to apply the extended infomax algorithm (‘extended’, 1), with default EEGLAB parameters including a maximum iteration count of 512 and an initial learning rate of 0.001 [116,152].

Following the independent component analysis decomposition, the ICLabel plugin was used to automatically classify each independent component into categories such as ‘brain’, ‘eye’, and ‘muscle’. Components with a probability of 0.9 or higher in the ‘eye’ or ‘muscle’ categories were marked for removal. This criterion was implemented using the ‘pop_icflag’ function, with the threshold set as [NaN NaN; 0.9 1; 0.9 1; NaN NaN; …], ensuring the preservation of neural signals while selectively removing artifact-related components.
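The rejection rule can be expressed compactly. The sketch below mirrors the 0.9 threshold on the ‘muscle’ and ‘eye’ classes in plain Python; it is not the EEGLAB implementation. The class order follows ICLabel's seven categories, while the function name `flag_components` and the example probabilities are hypothetical.

```python
ICLABEL_CLASSES = ["Brain", "Muscle", "Eye", "Heart",
                   "Line Noise", "Channel Noise", "Other"]


def flag_components(probabilities, threshold=0.9, reject=("Muscle", "Eye")):
    """Return indices of components whose probability for any class in
    `reject` is at or above `threshold`."""
    cols = [ICLABEL_CLASSES.index(name) for name in reject]
    return [i for i, row in enumerate(probabilities)
            if any(row[c] >= threshold for c in cols)]


# Hypothetical class probabilities for three components (each row sums to 1)
probs = [
    [0.95, 0.01, 0.01, 0.01, 0.01, 0.005, 0.005],  # clearly brain: kept
    [0.02, 0.01, 0.93, 0.01, 0.01, 0.01, 0.01],    # eye above threshold: flagged
    [0.30, 0.60, 0.02, 0.02, 0.02, 0.02, 0.02],    # muscle below threshold: kept
]
print(flag_components(probs))  # [1]
```

A high threshold such as 0.9 is conservative: ambiguous components like the third one are retained, which favors preserving neural signal over removing every possible artifact.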

In our analysis, we focused on parietal (Pz, P3, and P4) and occipital (Oz, O1, and O2) channels. Parietal channels are closely linked to spatial cognition, attention, and emotion processing, and are particularly useful for examining P300, while occipital channels are central to visual information processing [26,153,154,155].

We then applied a common average reference by subtracting the average signal across all channels from each individual channel [156,157,158]. This method reduces the common-mode noise shared among electrodes and mitigates reference bias between channels [161,162]. Specifically, common average referencing suppresses unnecessary global fluctuations (e.g., non-neural noise uniformly distributed across electrodes), thereby enhancing the relative differences between channels and improving the interpretability of ERP and other signal analyses [158,159,160].

EEG data are originally recorded with an initial reference electrode (typically represented as a zero-filled channel). By including this initial reference channel in the averaging process and subsequently removing it after re-referencing, the full-rank status of the data can be preserved. This procedure is consistent with recommendations from Makoto’s preprocessing pipeline and the EEGLAB tutorial and is crucial for preventing rank deficiency issues such as the generation of ghost independent components [156,163,164].
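The effect of including the initial reference in the average can be seen in a small numerical sketch. It is written in plain Python for illustration and is not the EEGLAB code path: the function name `common_average_reference` and the two-channel data are hypothetical. Including a zero-filled channel leaves the sum unchanged but divides by one more channel.

```python
def common_average_reference(channels, include_initial_ref=True):
    """Re-reference by subtracting the across-channel mean at each sample.

    channels: list of equal-length lists, one per electrode.
    If include_initial_ref is True, a zero-filled channel standing in for the
    original reference is counted in the mean and then dropped.
    """
    n_samples = len(channels[0])
    n = len(channels) + (1 if include_initial_ref else 0)
    means = [sum(ch[t] for ch in channels) / n for t in range(n_samples)]
    return [[ch[t] - means[t] for t in range(n_samples)] for ch in channels]


data = [[1.0, 2.0], [3.0, 6.0]]

plain = common_average_reference(data, include_initial_ref=False)
print(plain)  # [[-1.0, -2.0], [1.0, 2.0]]

with_ref = common_average_reference(data, include_initial_ref=True)
print([sum(col) for col in zip(*with_ref)])  # column sums are no longer zero
```

Without the reference channel, the re-referenced channels sum to zero at every sample, so one channel is a linear combination of the others and the data lose one rank. Counting the zero-filled reference avoids that constraint.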

During preprocessing, trials contaminated by artifacts such as motion-induced noise and excessive eye blinks were removed, leaving 3200 usable trials (80% retention rate). We then segmented the EEG signals into 5-s epochs corresponding to each stimulus and labeled them “preference” (preferred), “neutral,” or “non-preference” (disliked) before saving the data in the MATLAB (.mat) format.

Given that our primary focus was on differentiating clear preference responses, trials labeled “neutral” were excluded from the final dataset, restricting classification to the preference and non-preference categories.

Table 11 summarizes the final dataset configuration.

These preprocessing steps formed the foundation for exploring differences in neural signals between preference and non-preference stimuli, enabling a robust analysis of how users’ emotional responses varied with architectural images. The final dataset was central to the subsequent deep learning model development.

4.2. ERP Component Analysis

4.2.1. Heatmap Visualization

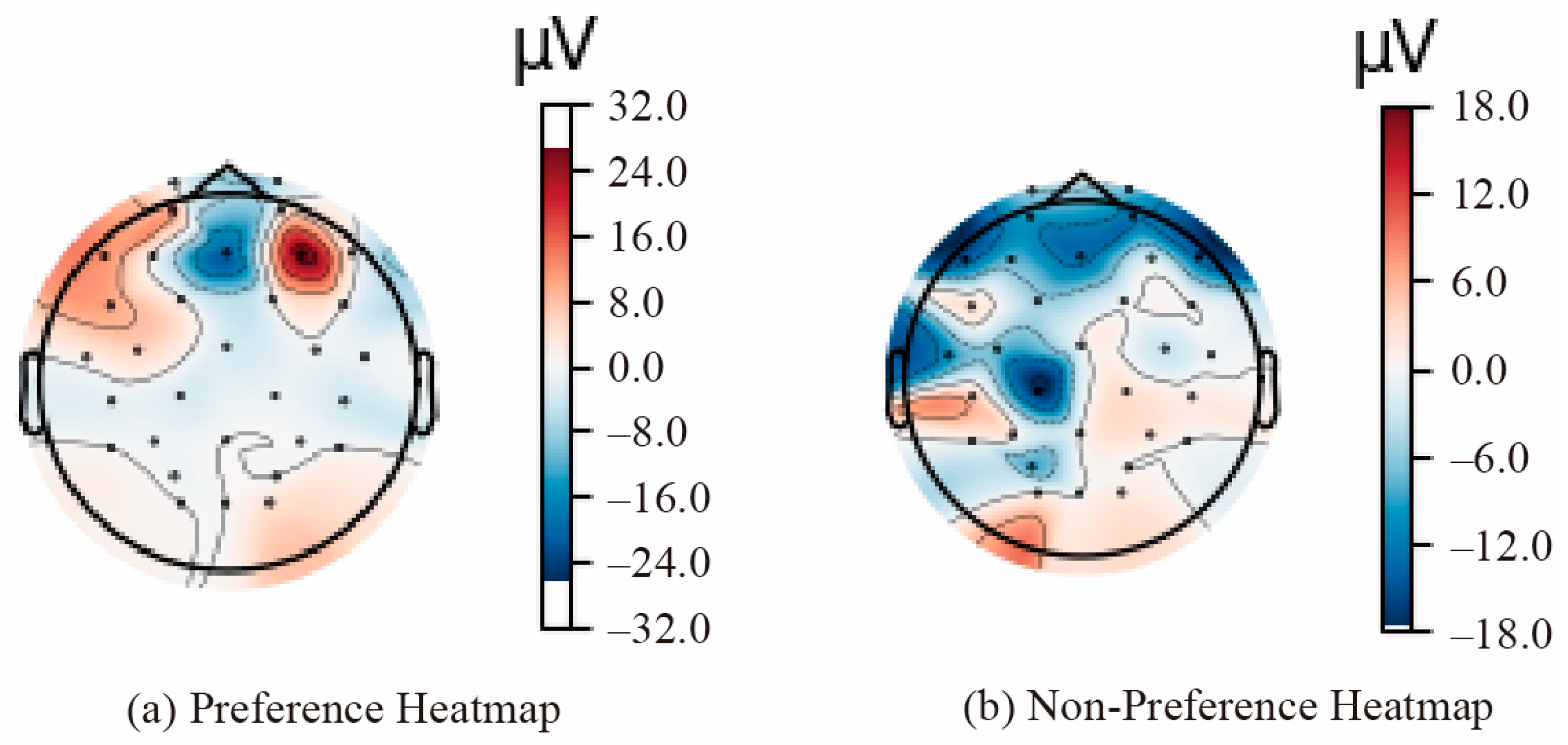

Before building our model, we visualized brainwave changes in the parietal and occipital regions for preference and non-preference stimuli as heatmaps [165]. Because the parietal region is crucial for emotion evaluation and attention, and the occipital region is key for the initial processing of visual stimuli, previous research has shown that observing ERP changes in these areas can provide insights into the neural correlates of emotional responses to architectural stimuli [166,167,168].

Figure 8 presents a heatmap of the average brain activation across all participants, illustrating the differences between preference (a) and non-preference (b) conditions. EEG signals were extracted from selected parietal (Pz, P3, and P4) and occipital (Oz, O1, and O2) channels. In the occipital area, preferred stimuli exhibited a strong positive potential, whereas non-preferred stimuli presented a broader negative potential, indicating differing neural responses to architectural stimuli.

According to Davidson [120] and related research, this pattern reflects approach tendencies for preferred stimuli (positive emotion) versus withdrawal tendencies for disliked stimuli (negative evaluation). In the occipital area, non-preference stimuli yield a pronounced negative potential, suggesting a more intensified selective attention or visual rejection response [129,168].

Table 12 summarizes the observed activation patterns and corresponding interpretations for parietal and occipital regions.

4.2.2. ERP Components (N100, N200, P300, LPP)

We used EEGLAB and FastICA in MATLAB R2024b for preprocessing and analyzed windows from 0.2 s before stimulus onset to 1.0 s after [26,169]. This interval encompasses early sensory responses (N100 and N200), resource allocation (P300), and sustained emotional processing (LPP). To reduce noise, we applied a 40-point moving average filter. Table 13 outlines each ERP component’s typical time range.
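A 40-point moving average at 500 Hz spans 80 ms. The sketch below shows one way such smoothing could be implemented in plain Python. The text does not specify whether the window is centered or trailing or how edges are handled, so the centered window and shortened edge windows are assumptions, as is the function name `moving_average`.

```python
def moving_average(samples, window=40):
    """Centered moving average; edges use only the samples that are available."""
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + window - half)
        out.append(sum(samples[lo:hi]) / (hi - lo))
    return out


# Small example with a 2-point window so the result is easy to verify by hand
print(moving_average([0.0, 0.0, 4.0, 0.0, 0.0], window=2))
# [0.0, 0.0, 2.0, 2.0, 0.0]
```

Because an 80 ms window is comparable to the width of the N100 window, smoothing of this length will attenuate sharp early peaks more than slower components such as the LPP.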

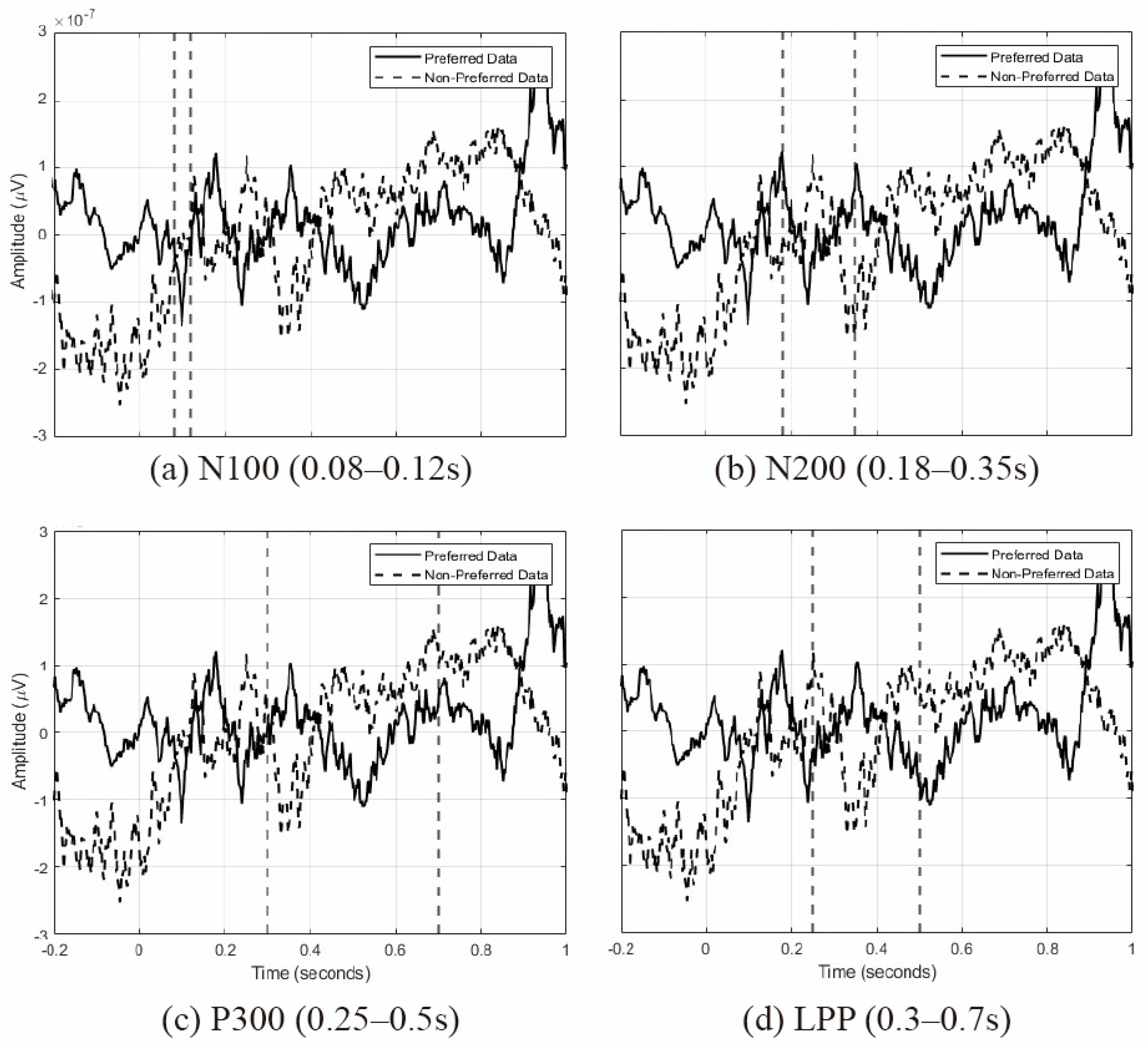

Figure 9 shows the average waveforms for each ERP component, derived from the EEG signals recorded by the parietal (Pz, P3, and P4) and occipital (Oz, O1, and O2) electrodes. These waveforms illustrate the neural responses under preference and non-preference conditions. Specifically, the following points summarize the main characteristics and implications of each ERP component’s waveform:

N100 (80–120 ms): after stimulus onset, preferred stimuli exhibit an initial negative deflection (N100) followed by a positive rebound, indicating heightened sensory attention, whereas non-preferred stimuli show a more stable negative amplitude (see Figure 9a).

N200 (180–350 ms): preferred stimuli display a sharp positive-to-negative transition, suggesting strong recognition of relevant stimuli, while non-preferred stimuli show a delayed negative amplitude, indicating mismatch detection (see Figure 9b).

P300 (250–500 ms): for preferred stimuli, a robust positive peak around 300 ms reflects the processing of significant stimuli, whereas non-preferred stimuli show an initial positive deflection followed by a negative shift (see Figure 9c).

LPP (300–700 ms): preferred stimuli maintain a continuous positive amplitude, signifying high emotional engagement, while non-preferred stimuli remain at a negative level, indicating weaker emotional processing (see Figure 9d).
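To make the component windows concrete, the following sketch computes the mean amplitude inside each window for two averaged waveforms. The window boundaries come from the list above; the sampling rate, the function names, and the Gaussian-shaped waveforms are hypothetical and serve only as stand-ins for the grand averages in Figure 9.

```python
import numpy as np

# Component windows in seconds, taken from the ranges listed above.
ERP_WINDOWS = {
    "N100": (0.080, 0.120),
    "N200": (0.180, 0.350),
    "P300": (0.250, 0.500),
    "LPP": (0.300, 0.700),
}


def window_mean_amplitude(waveform, times, window):
    """Mean amplitude of a 1-D averaged waveform inside a time window."""
    lo, hi = window
    mask = (times >= lo) & (times <= hi)
    return float(waveform[mask].mean())


def compare_conditions(preferred, non_preferred, times):
    """Return {component: (preferred mean, non-preferred mean)}."""
    return {
        name: (
            window_mean_amplitude(preferred, times, win),
            window_mean_amplitude(non_preferred, times, win),
        )
        for name, win in ERP_WINDOWS.items()
    }


# Synthetic grand-average waveforms (hypothetical data, arbitrary units).
fs = 500  # assumed sampling rate in Hz
times = np.arange(-0.2, 1.0, 1 / fs)
preferred = 2.0 * np.exp(-((times - 0.30) ** 2) / (2 * 0.05**2))
non_preferred = -1.0 * np.exp(-((times - 0.35) ** 2) / (2 * 0.08**2))
summary = compare_conditions(preferred, non_preferred, times)
```

Because the N200, P300, and LPP windows overlap, the same samples contribute to more than one component summary in this sketch.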

Collectively, these waveforms suggest that preferred stimuli evoke stronger responses across early sensory processing, resource allocation, and emotional evaluation, whereas non-preferred stimuli elicit relatively weaker and sometimes delayed responses. This pattern indicates that neural responses to architectural images differ with user preference, offering useful insights for future model training and affective design.

To examine whether ERPs were elicited in response to architectural stimuli, paired t-tests were conducted on data collected from 40 participants, with a focus on the ERP components N100, N200, P300, and LPP. The results revealed statistically significant differences between preferred and non-preferred conditions in the early ERP components (N100 and N200). These findings suggest that early attentional responses and the detection of aversive stimuli may vary depending on the type of architectural space presented.

In contrast, no significant differences were observed in the later ERP components (P300 and LPP), possibly because of the relatively short stimulus duration or individual variability. This outcome implies that late-stage cognitive and affective processing may have been less pronounced under the experimental conditions. Future studies incorporating prolonged stimulus exposure or additional environmental factors may help clarify these late ERP responses.

In summary, the findings indicate that architectural stimuli can evoke significant differences in early ERP components, suggesting their potential utility as neural indicators of the initial cognitive processing of visual and emotional aspects of architectural environments.
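The paired comparison used for each ERP component can be illustrated as follows. This is a sketch under stated assumptions: the per-participant amplitudes are synthetic, and `paired_t_statistic` is a name introduced here, not a function from the study's code.

```python
import math
import numpy as np


def paired_t_statistic(cond_a, cond_b):
    """Paired t statistic and degrees of freedom for matched samples."""
    a = np.asarray(cond_a, dtype=float)
    b = np.asarray(cond_b, dtype=float)
    if a.shape != b.shape or a.ndim != 1:
        raise ValueError("inputs must be 1-D arrays of equal length")
    diff = a - b
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / math.sqrt(n))
    return float(t), n - 1


# Hypothetical per-participant mean amplitudes (one value per participant
# and condition) for a single ERP component; NOT the study's data.
rng = np.random.default_rng(42)
n_participants = 40
non_preferred = rng.normal(loc=-1.0, scale=1.0, size=n_participants)
preferred = non_preferred + rng.normal(loc=0.8, scale=0.5, size=n_participants)

t_value, dof = paired_t_statistic(preferred, non_preferred)
```

The p-value follows from the t distribution with `dof` degrees of freedom; a statistics library such as SciPy (`scipy.stats.ttest_rel`) returns both the statistic and the p-value in one call.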

4.3. Model 1: CNN-LSTM with ERP Segments

To predict emotional responses to architectural images, we built a CNN-LSTM hybrid model capable of simultaneously learning both the spatial and temporal characteristics of EEG data. This model extracts spatial features through 2D convolutional layers and learns temporal dependencies via LSTM layers.

Table 14 describes the hyperparameters and roles of the CNN and LSTM components.

Figure 10 visually depicts the CNN-LSTM model architecture.

The final output of the LSTM layer had a hidden state dimension of 40, which was passed through a fully connected layer, leading to the final classification stage. The classification layer consisted of two output neurons with a Softmax activation function, designed to compute the probabilities for the two target classes: preference and non-preference. To address the issue of class imbalance, focal loss (α = 0.25, γ = 2.0) was employed as the loss function, and the Adam optimizer was used with a learning rate of 0.000203.
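For readers unfamiliar with focal loss, a minimal NumPy sketch of the binary form is given below, using the α and γ values reported above. It is an illustration, not the training code of the study. Two points are assumptions: label 1 is taken to denote preference, and α is applied to class 1 with (1 − α) applied to class 0, following the original focal loss formulation; some implementations apply α uniformly to both classes instead.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def focal_loss(probs, labels, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss for two-class softmax outputs.

    probs  : (n, 2) array of class probabilities
    labels : (n,) integer array of 0/1 class indices (1 = positive class)
    Assumption: alpha weights class 1 and (1 - alpha) weights class 0.
    """
    probs = np.clip(probs, eps, 1.0 - eps)
    p_t = probs[np.arange(len(labels)), labels]  # probability of true class
    alpha_t = np.where(labels == 1, alpha, 1.0 - alpha)
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


# Hypothetical logits for three samples and their true classes.
logits = np.array([[2.0, -1.0], [0.1, 0.0], [-1.5, 1.0]])
labels = np.array([0, 1, 1])
loss = focal_loss(softmax(logits), labels)
```

The factor (1 − p_t)^γ shrinks the loss of confidently correct predictions, so training gradients are dominated by samples the model still misclassifies.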

We utilized Optuna to optimize key hyperparameters such as LSTM unit count, CNN filter count, dropout rate, learning rate, and batch size. After 50 trials, the optimal combination was obtained, as shown in Table 15.

By incorporating focal loss, the model emphasized the contribution of difficult-to-classify samples (e.g., non-preferred stimuli) by assigning greater loss values to these instances. The training was conducted using a mini batch size of 16 over a total of 30 epochs. Early stopping was applied to terminate training when the validation loss failed to improve over a predefined number of iterations, thereby preventing overfitting and enhancing generalization.
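The early stopping rule can be expressed independently of any deep learning framework. The sketch below is illustrative only: the patience value and the validation-loss curve are hypothetical, since the section does not state the number of iterations used.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-based.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs; if that never
    happens, stop_epoch is the last epoch in the list.
    """
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch


# Hypothetical validation-loss curve: improves, then plateaus.
val_losses = [0.70, 0.61, 0.55, 0.52, 0.53, 0.54, 0.52, 0.55, 0.56, 0.57]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
```

In practice the weights from `best_epoch` are usually restored after stopping, so the evaluated model is the one with the lowest validation loss rather than the last one trained.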

After 30 training epochs with the focal loss settings described above (α = 0.25, γ = 2.0), the model’s performance metrics were as follows:

Recall: 98.76% (very high, rarely missing positive/preference cases);

Precision: 72.11% (relatively low; some non-preference cases misclassified as preference);

F1 score: 83.36%;

Accuracy: 77.09%.

Although precision was somewhat modest, the model’s exceptionally high recall could be advantageous for real-time emotion monitoring applications.
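The relationship among these metrics can be checked with a short calculation. The sketch below recomputes the F1 score from the reported precision and recall and shows how all four metrics derive from confusion-matrix counts; the counts in the usage example are hypothetical, because the section does not report the confusion matrix.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1_score(precision, recall),
    }


# Consistency check on the values reported above for Model 1.
reported_f1 = f1_score(precision=0.7211, recall=0.9876)
print(f"F1 from reported precision and recall: {reported_f1:.4f}")

# Hypothetical counts, only to show the function's usage.
example = classification_metrics(tp=80, fp=20, fn=10, tn=90)
```

The recomputed value rounds to 83.36%, in agreement with the reported F1 score.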

4.4. Model 2: CNN-LSTM with Significant Features (t-Test)

The second model focuses on extracting only those features from the ERP intervals (e.g., N100, N200, P300, and LPP) that show statistically significant differences between preference and non-preference stimuli. By removing noise and superfluous information, we feed only key variables (e.g., mean amplitude, peak, area under the curve, and peak latency) that directly contribute to distinguishing emotional responses, aiming for improved performance and learning efficiency.
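The four feature types named above can be computed from a single-channel waveform as sketched below. The function name, the sampling rate, the polarity switch, and the synthetic waveform are assumptions made for illustration; the exact feature definitions used in the study may differ.

```python
import numpy as np


def erp_features(waveform, times, window, polarity="positive"):
    """Mean amplitude, peak, area under the curve and peak latency.

    waveform : 1-D amplitudes for one channel
    times    : 1-D time axis in seconds, same length as waveform
    window   : (start, end) of the ERP interval in seconds
    polarity : "positive" picks the maximum, "negative" the minimum
    """
    mask = (times >= window[0]) & (times <= window[1])
    seg, seg_t = waveform[mask], times[mask]
    idx = int(np.argmax(seg)) if polarity == "positive" else int(np.argmin(seg))
    # Trapezoidal rule written out explicitly for the area under the curve.
    auc = float(np.sum((seg[1:] + seg[:-1]) / 2.0 * np.diff(seg_t)))
    return {
        "mean_amplitude": float(seg.mean()),
        "peak": float(seg[idx]),
        "auc": auc,
        "peak_latency": float(seg_t[idx]),
    }


# Synthetic single-channel waveform with a positive bump near 300 ms.
fs = 500  # assumed sampling rate in Hz
times = np.arange(600) / fs - 0.2
waveform = 3.0 * np.exp(-((times - 0.30) ** 2) / (2 * 0.04**2))
p300 = erp_features(waveform, times, window=(0.250, 0.500))
```

For negative-going components such as the N100 or N200, passing `polarity="negative"` returns the most negative value and its latency instead.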

Firstly, we performed t-tests on each ERP interval to determine which variables exhibited significant differences between preferred and non-preferred stimuli (p < 0.05) (Table 16).

Table 17 summarizes the t-test results by electrode, and Table 18 lists the final channel–feature combinations chosen based on statistical significance and neuroscientific relevance.

These selected features were processed by a CNN (Conv1D) layer to capture partial spatial patterns, followed by an LSTM layer for temporal dependency (Table 19).

We also employed SMOTEENN to address the under-representation of the minority class (non-preferred samples). SMOTEENN combines SMOTE oversampling of the minority class with Edited Nearest Neighbours (ENN) cleaning, which removes noisy or ambiguous samples.

The final classification layer, consistent with Model 1, consisted of two output neurons with a Softmax activation function for binary classification between preference and non-preference. The model was trained using focal loss and the Adam optimizer.

Using an 80–20 train–validation split and EarlyStopping (stopping within 50 epochs), the final model achieved the following:

Accuracy: 81.90%;

Precision: 85.00%;

Recall: 70.00%;

F1 score: 73.00%.

By focusing on statistically significant ERP features, Model 2 achieved an improved accuracy and precision. However, its recall was somewhat lower, indicating subtle positive responses may have been missed. Still, these outcomes provide a robust neuroscientific foundation for designing affective architectural spaces.

4.5. Model Evaluation and Comparison

We then compared the performances of Model 1 (ERP Segment CNN-LSTM) and Model 2 (t-test-based CNN-LSTM). Model 1, which learns comprehensive spatiotemporal ERP features, achieved a very high recall (98.76%), rarely missing positive/preferred cases. However, its precision (72.11%) was relatively low, leading to more false positives. Meanwhile, Model 2, using only statistically significant ERP features, improved accuracy (81.90%) and precision (85.00%) but had a lower recall (70.00%).

Table 20 summarizes each model’s strengths and weaknesses. In short, Model 1’s extensive coverage of ERP segments yields a higher recall, whereas Model 2’s targeted features lead to a better accuracy and precision.

To further evaluate the generalization performance of Models 1 and 2, a fivefold cross-validation was conducted. Given the relatively limited dataset and intersubject variability in ERP signals, some variation in accuracy was observed across folds. However, Model 2 consistently outperformed Model 1 in terms of classification accuracy. This result supports the notion that extracting meaningful ERP features and feeding them into the CNN-LSTM architecture can lead to a more stable and improved classification performance.
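A fivefold split of this kind can be sketched as follows. The helper names, the random seed, the sample count, and the placeholder callback are hypothetical; the section does not state whether folds were formed over trials or over participants, and this sketch simply shuffles sample indices.

```python
import numpy as np


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for shuffled k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx


def cross_validate(evaluate_fold, n_samples, k=5):
    """Collect one score per fold from a user-supplied callback."""
    return [evaluate_fold(tr, va) for tr, va in k_fold_indices(n_samples, k)]


# Placeholder callback (hypothetical): a real one would train a model on
# train_idx and return its accuracy on val_idx.
def dummy_evaluate(train_idx, val_idx):
    return len(val_idx) / (len(train_idx) + len(val_idx))


fold_scores = cross_validate(dummy_evaluate, n_samples=103, k=5)
```

Given the intersubject variability noted above, grouping folds by participant so that no individual appears in both the training and validation sets is a stricter alternative to a trial-level split.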

Further, a paired t-test was conducted to statistically compare the cross-validation accuracies of Models 1 and 2. The results revealed a significant difference (t = −10.4913, p = 0.0005), indicating that the ERP feature-based approach of Model 2 is more effective for EEG-based affective classification. These findings suggest that additional data collection and feature optimization could further enhance model performance.

These findings validate the feasibility of EEG-based emotion analysis for distinguishing preference versus non-preference in response to architectural stimuli. In practical terms, designers may choose or combine these models based on whether they prioritize sensitivity (high recall) or precision in capturing user preferences. The next section discusses potential real-world applications of these models and their significance for personalized affective architecture.

5. Discussion

This study shows that users’ emotional responses toward and preferences for architectural stimuli can be quantitatively evaluated using EEG. We proposed a methodology based on deep learning models to objectively classify these responses. We recruited a total of 40 participants and collected their neural responses to architectural images using a 32-channel EEG device. Thorough data preprocessing (filtering, noise removal, channel selection, and re-referencing) ensured high-quality EEG data. Subsequently, ERP components (N100, N200, P300, and LPP) were analyzed to identify spatiotemporal differences in brain responses between preferred and non-preferred stimuli. Consistent with Section 4.2.2, the results revealed significant differences in the early N100 and N200 intervals, whereas the differences in the P300 and LPP intervals did not reach significance. These results indicate that an ERP-based evaluation of architectural preferences is feasible and partially support Hypothesis 1.

The two CNN-LSTM deep learning models proposed in this research performed differently depending on the ERP features used. Model 1 (CNN-LSTM using the entire ERP) achieved a high recall of 98.76%, minimizing missed detections of emotional responses, but its precision was relatively low (72.11%). Model 2 (CNN-LSTM using t-test-selected ERP features) had an improved accuracy (81.90%) and precision (85.00%) but exhibited a lower recall (70.00%). This indicates that the entire ERP dataset can provide a broader range of emotional information but may be sensitive to noise, whereas selectively using statistically significant ERP features enhances precision but may overlook subtle neurological changes. Hence, ERP feature selection plays a crucial role in determining model performance, partially supporting Hypothesis 2.

To further validate model performance and generalizability, a fivefold cross-validation was conducted. Although both models showed some variability across folds, Model 2 consistently demonstrated a superior and more stable performance. Moreover, a paired t-test revealed a statistically significant difference in accuracy between Model 1 and Model 2 (t = −10.4913, p = 0.0005), indicating that ERP-based feature selection is more effective for EEG-based emotion classification in this dataset and that model performance varies with how the input data are constructed.

Several important limitations should be noted. Firstly, the sample size (n = 40) is relatively small, warranting caution in generalizing the results. Larger-scale follow-up studies are needed to improve reliability. Additionally, although EEG data may not fully conform to a normal distribution, we adopted the t-test for exploratory analysis. Future research should consider nonparametric approaches (e.g., the Mann–Whitney U test, or the Wilcoxon signed-rank test for paired comparisons) or multiple comparison corrections (e.g., false discovery rate) to enhance the robustness of statistical analyses. Finally, this study employed image-based stimuli. Therefore, long-term measurements in real-world or VR spaces and the integration of other physiological signals (e.g., heart rate and galvanic skin response) are warranted to ensure ecological validity.
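As an illustration of the false discovery rate correction mentioned above, the Benjamini–Hochberg step-up procedure can be sketched as follows. The p-values are hypothetical and the function name is introduced here; this correction was not applied in the present study.

```python
import numpy as np


def benjamini_hochberg(p_values, q=0.05):
    """Boolean mask of hypotheses rejected at false discovery rate q."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    thresholds = q * np.arange(1, m + 1) / m
    passed = p[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        # Reject every hypothesis up to the largest rank that passes.
        last = np.nonzero(passed)[0].max()
        reject[order[: last + 1]] = True
    return reject


# Hypothetical p-values, e.g. one per channel-feature combination.
p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205]
rejected = benjamini_hochberg(p_values, q=0.05)
```

In this example, five of the eight p-values fall below 0.05 when tested individually, but only the two smallest survive the correction, which shows how uncorrected channel-wise testing can overstate the number of significant features.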

Nevertheless, the value of the methodology proposed here is that it offers an objective approach to incorporating users’ affective responses during the early stages of architectural design. Accordingly, the findings of this study should be considered in relation to recent EEG-based research in the field of neuroarchitecture. While this study highlights the feasibility of applying EEG-based emotion analysis in architectural design, several challenges must be addressed before it can be practically applied.

6. Conclusions

Based on EEG and ERP analysis, the findings of this research demonstrate that users’ emotional preferences for architectural stimuli can be quantified. Notably, significant differences between preferred and non-preferred stimuli were identified in the early N100 and N200 components, whereas the P300 and LPP components did not differ significantly, suggesting that EEG-based emotion classification has a neurophysiological basis. When evaluating the CNN-LSTM deep learning models, Model 1, which utilized the entire ERP dataset, achieved a high recall but a relatively lower precision. In contrast, Model 2, which selectively incorporated only statistically significant ERP features, demonstrated a high accuracy and precision but a lower recall. Results from the fivefold cross-validation further confirmed the more consistent and superior performance of Model 2. A paired t-test indicated a statistically significant difference in accuracy between the two models, supporting the effectiveness of selective ERP feature extraction. These findings underscore the potential of EEG-based emotion classification as an objective and neurophysiologically valid evaluation tool while emphasizing the importance of identifying relevant ERP components.

Nonetheless, the study is limited by a small sample size, assumptions of data normality, and concerns regarding ecological validity. Future research should address these limitations by expanding the participant pool, gathering data in real-world settings, and integrating multiple physiological signals.

Moreover, this work highlights a promising approach to embedding user emotions and preferences into the architectural design process. As real-world validation expands, user diversity increases, and technical methodologies become more refined, these neurophysiological strategies may significantly contribute to the development of truly human-centric, affective architectural environments.

Author Contributions

Conceptualization, J.E.C. and H.J.J.; Methodology, J.E.C. and S.Y.K.; Software, J.E.C., S.Y.K. and Y.Y.H.; Validation, J.E.C.; Formal analysis, J.E.C. and Y.Y.H.; Data curation, J.E.C., S.Y.K. and Y.Y.H.; Writing—original draft, J.E.C.; Writing—review & editing, J.E.C., S.Y.K. and Y.Y.H.; Supervision, J.E.C. and S.Y.K.; Project administration, H.J.J.; Funding acquisition, H.J.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. NRF-2022R1A2C3011796).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Hanyang University (HYUIRB-202504-006, approved on 22 March 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study. Requests to access the datasets should be directed to the corresponding author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| Adam | Adaptive moment estimation |
| CNN | Convolutional neural network |
| CNN-LSTM | Convolutional neural network long short-term memory |
| EEG | Electroencephalography |
| ERP | Event-related potential |
| LPP | Late positive potential |
| LSTM | Long short-term memory |
| VR | Virtual reality |

References

- Ergan, S.; Radwan, A.; Zou, Z.; Tseng, H.-a.; Han, X. Quantifying Human Experience in Architectural Spaces with Integrated Virtual Reality and Body Sensor Networks. J. Comput. Civ. Eng. 2018, 33, 04018062.

- Nanda, U.; Pati, D.; Ghamari, H.; Bajema, R. Lessons from neuroscience: Form follows function, emotions follow form. Intell. Build. Int. 2013, 5, 61–78.

- Eberhard, J.P. Brain Landscape: The Coexistence of Neuroscience and Architecture; Oxford University Press: Oxford, UK, 2009; pp. 1–280.

- Bower, I.; Tucker, R.; Enticott, P.G. Impact of built environment design on emotion measured via neurophysiological correlates and subjective indicators: A systematic review. J. Environ. Psychol. 2019, 66, 101344.

- Evans, G.W.; McCoy, J.M. When Buildings Don’t Work: The Role of Architecture in Human Health. J. Environ. Psychol. 1998, 18, 85–94.

- Eastman, C.; Newstetter, W.; McCracken, M. Design Knowing and Learning: Cognition in Design Education; Elsevier: Amsterdam, The Netherlands, 2001; pp. 79–103.

- Kim, N.; Chung, S.; Kim, D.I. Exploring EEG-based Design Studies: A Systematic Review. Arch. Des. Res. 2022, 35, 91–113.

- Hu, L.; Shepley, M.M. Design Meets Neuroscience: A Preliminary Review of Design Research Using Neuroscience Tools. J. Inter. Des. 2022, 47, 31–50.

- Ball, L.J.; Christensen, B.T. Advancing an understanding of design cognition and design metacognition: Progress and prospects. Des. Stud. 2019, 65, 35–59.

- Gero, J.S.; Milovanovic, J. A framework for studying design thinking through measuring designers’ minds, bodies and brains. Des. Sci. 2020, 6, e19.

- Vieira, S.; Gero, J.S.; Delmoral, J.; Gattol, V.; Fernandes, C.; Parente, M.; Fernandes, A.A. The neurophysiological activations of mechanical engineers and industrial designers while designing and problem-solving. Des. Sci. 2020, 6, e26.

- Kim, J.; Kim, N. Quantifying Emotions in Architectural Environments Using Biometrics. Appl. Sci. 2022, 12, 9998.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Ricci, N. The Psychological Impact of Architectural Design. Bachelor’s Thesis, Claremont McKenna College, Claremont, CA, USA, 2018.

- Heydarian, A.; Pantazis, E.; Wang, A.; Gerber, D.; Becerik-Gerber, B. Towards user centered building design: Identifying end-user lighting preferences via immersive virtual environments. Autom. Constr. 2017, 81, 56–66.

- Bar, M.; Neta, M. Humans prefer curved visual objects. Psychol. Sci. 2006, 17, 645–648.

- Madani Nejad, K. Curvilinearity in Architecture: Emotional Effect of Curvilinear Forms in Interior Design. Ph.D. Thesis, Texas A&M University, College Station, TX, USA, 2007.

- Vartanian, O.; Navarrete, G.; Chatterjee, A.; Fich, L.B.; Leder, H.; Modroño, C.; Nadal, M.; Rostrup, N.; Skov, M. Impact of contour on aesthetic judgments and approach-avoidance decisions in architecture. Proc. Natl. Acad. Sci. USA 2013, 110, 10446–10453.

- Banaei, M.; Hatami, J.; Yazdanfar, A.; Gramann, K. Walking through Architectural Spaces: The Impact of Interior Forms on Human Brain Dynamics. Front. Hum. Neurosci. 2017, 11, 477.

- Roelfsema, P.R.; Scholte, H.S.; Spekreijse, H. Temporal constraints on the grouping of contour segments into spatially extended objects. Vis. Res. 1999, 39, 1509–1529.

- Zadeh, R.S.; Shepley, M.M.; Williams, G.; Chung, S.S.E. The Impact of Windows and Daylight on Acute-Care Nurses’ Physiological, Psychological, and Behavioral Health. HERD Health Environ. Res. Des. J. 2014, 7, 35–61.

- Meyers-Levy, J.; Zhu, R. The Influence of Ceiling Height: The Effect of Priming on the Type of Processing That People Use. J. Consum. Res. 2007, 34, 174–186.

- Teplan, M. Fundamentals of EEG measurement. Meas. Sci. Rev. 2002, 2, 1–11.

- Aldayel, M.S.; Ykhlef, M.; Al-Nafjan, A.N. Electroencephalogram-Based Preference Prediction Using Deep Transfer Learning. IEEE Access 2020, 8, 176818–176829.

- Zhu, L.; Lv, J. Review of Studies on User Research Based on EEG and Eye Tracking. Appl. Sci. 2023, 13, 6502.

- Luck, S.J. An Introduction to the Event-Related Potential Technique; MIT Press: Cambridge, MA, USA, 2014.

- Siuly, S.; Li, Y.; Zhang, Y. EEG Signal Analysis and Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 11, 141–144.

- Dehais, F.; Duprès, A.; Blum, S.; Drougard, N.; Scannella, S.; Roy, R.N.; Lotte, F. Monitoring Pilot’s Mental Workload Using ERPs and Spectral Power with a Six-Dry-Electrode EEG System in Real Flight Conditions. Sensors 2019, 19, 1324.

- Zhang, L.; Wade, J.; Bian, D.; Fan, J.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Cognitive Load Measurement in a Virtual Reality-Based Driving System for Autism Intervention. IEEE Trans. Affect. Comput. 2017, 8, 176–189.

- Kok, A. On the utility of P3 amplitude as a measure of processing capacity. Psychophysiology 2001, 38, 557.

- Brouwer, A.-M.; Hogervorst, M.A.; Van Erp, J.B.; Heffelaar, T.; Zimmerman, P.H.; Oostenveld, R. Estimating workload using EEG spectral power and ERPs in the n-back task. J. Neural Eng. 2012, 9, 045008.

- Kabbara, A. Brain Network Estimation from Dense EEG Signals: Application to Neurological Disorders. Ph.D. Thesis, Université de Rennes; Université Libanaise, Rennes, France, 2018.

- Wilson, G.F.; Russell, C.A. Performance Enhancement in an Uninhabited Air Vehicle Task Using Psychophysiologically Determined Adaptive Aiding. Hum. Factors 2007, 49, 1005–1018.

- Mühl, C.; Jeunet, C.; Lotte, F. EEG-based workload estimation across affective contexts. Front. Neurosci. 2014, 8, 114.

- Zarjam, P.; Epps, J.; Chen, F. Characterizing working memory load using EEG delta activity. In Proceedings of the 2011 19th European Signal Processing Conference, Barcelona, Spain, 29 August–2 September 2011; pp. 1554–1558.

- Dijksterhuis, C.; De Waard, D.; Brookhuis, K.A.; Mulder, B.L.; de Jong, R. Classifying visuomotor workload in a driving simulator using subject specific spatial brain patterns. Front. Neurosci. 2013, 7, 149.

- Zarjam, P.; Epps, J.; Chen, F.; Lovell, N.H. Estimating cognitive workload using wavelet entropy-based features during an arithmetic task. Comput. Biol. Med. 2013, 43, 2186–2195.

- Walter, C.; Schmidt, S.; Rosenstiel, W.; Gerjets, P.; Bogdan, M. Using Cross-Task Classification for Classifying Workload Levels in Complex Learning Tasks. In Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction, Geneva, Switzerland, 2–5 September 2013.

- Tian, Y.; Zhang, H.; Jiang, Y.; Li, P.; Li, Y. A Fusion Feature for Enhancing the Performance of Classification in Working Memory Load With Single-Trial Detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1985–1993.

- Roy, R.N.; Bonnet, S.; Charbonnier, S.; Campagne, A. Mental fatigue and working memory load estimation: Interaction and implications for EEG-based passive BCI. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013.

- Subha, D.P.; Joseph, P.K.; Acharya, U.R.; Lim, C.M. EEG Signal Analysis: A Survey. J. Med. Syst. 2008, 34, 195–212.

- Ma, Y.; Shi, W.; Peng, C.-K.; Yang, A.C. Nonlinear dynamical analysis of sleep electroencephalography using fractal and entropy approaches. Sleep Med. Rev. 2018, 37, 85–93.

- Dimitrakopoulos, G.N.; Kakkos, I.; Dai, Z.; Lim, J.; deSouza, J.J.; Bezerianos, A.; Sun, Y. Task-Independent Mental Workload Classification Based Upon Common Multiband EEG Cortical Connectivity. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1940–1949.

- Sakkalis, V. Review of advanced techniques for the estimation of brain connectivity measured with EEG/MEG. Comput. Biol. Med. 2011, 41, 1110–1117.

- Kakkos, I.; Dimitrakopoulos, G.N.; Gao, L.; Zhang, Y.; Qi, P.; Matsopoulos, G.K.; Thakor, N.; Bezerianos, A.; Sun, Y. Mental Workload Drives Different Reorganizations of Functional Cortical Connectivity Between 2D and 3D Simulated Flight Experiments. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1704–1713.

- Romeo, Z.; Spironelli, C. P1 Component Discloses Gender-Related Hemispheric Asymmetries during IAPS Processing. Symmetry 2023, 15, 610.

- Patel, S.H.; Azzam, P.N. Characterization of N200 and P300: Selected Studies of the Event-Related Potential. Int. J. Med. Sci. 2005, 2, 147–154.

- Goto, N.; Lim, X.L.; Shee, D.; Hatano, A.; Khong, K.W.; Buratto, L.G.; Watabe, M.; Schaefer, A. Can Brain Waves Really Tell If a Product Will Be Purchased? Inferring Consumer Preferences From Single-Item Brain Potentials. Front. Integr. Neurosci. 2019, 13, 19.

- Cacioppo, J.T. Feelings and emotions: Roles for electrophysiological markers. Biol. Psychol. 2004, 67, 235–243.

- Chen, J.; He, B.; Zhu, H.; Wu, J. The implicit preference evaluation for the ceramic tiles with different visual features: Evidence from an event-related potential study. Front. Psychol. 2023, 14, 1139687.

- Almeida, R.; Prata, C.; Pereira, M.R.; Barbosa, F.; Ferreira-Santos, F. Neuronal Correlates of Empathy: A Systematic Review of Event-Related Potentials Studies in Perceptual Tasks. Brain Sci. 2024, 14, 504.

- Johal, P.K.; Jain, N. Artifact removal from EEG: A comparison of techniques. In Proceedings of the 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), Chennai, India, 3–5 March 2016.

- Henry, J.C. Electroencephalography: Basic principles, clinical applications, and related fields. Neurology 2006, 67, 2092–2092–a.

- Liu, Y.; Sourina, O.; Nguyen, M.K. Real-Time EEG-Based Human Emotion Recognition and Visualization. In Proceedings of the 2010 International Conference on Cyberworlds, Singapore, 20–22 October 2010.

- Li, Y.; Li, X.; Ratcliffe, M.; Liu, L.; Qi, Y.; Liu, Q. A real-time EEG-based BCI system for attention recognition in ubiquitous environment. In Proceedings of the 2011 International Workshop on Ubiquitous Affective Awareness and Intelligent Interaction, Beijing, China, 18 September 2011; pp. 33–40.

- Cincotti, F.; Mattia, D.; Aloise, F.; Bufalari, S.; Astolfi, L.; Fallani, F.D.V.; Tocci, A.; Bianchi, L.; Marciani, M.G.; Gao, S.; et al. High-resolution EEG techniques for brain–computer interface applications. J. Neurosci. Methods 2008, 167, 31–42.

- Hart, S. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Human Mental Workload; Elsevier: Amsterdam, The Netherlands, 1988.

- Ramachandran, V.S.; Hirstein, W. The science of art: A neurological theory of aesthetic experience. J. Conscious. Stud. 1999, 6, 15–51.

- Gao, Z.; Wang, X.; Yang, Y.; Mu, C.; Cai, Q.; Dang, W.; Zuo, S. EEG-based spatio–temporal convolutional neural network for driver fatigue evaluation. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2755–2763.

- Ismail, L.E.; Karwowski, W. A Graph Theory-Based Modeling of Functional Brain Connectivity Based on EEG: A Systematic Review in the Context of Neuroergonomics. IEEE Access 2020, 8, 155103–155135.

- Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep learning for electroencephalogram (EEG) classification tasks: A review. J. Neural Eng. 2019, 16, 031001.

- Li, J.; Jin, Y.; Lu, S.; Wu, W.; Wang, P. Building environment information and human perceptual feedback collected through a combined virtual reality (VR) and electroencephalogram (EEG) method. Energy Build. 2020, 224, 110259.

- Erkan, İ. Examining wayfinding behaviours in architectural spaces using brain imaging with electroencephalography (EEG). Archit. Sci. Rev. 2018, 61, 410–428.

- Marín-Morales, J.; Higuera-Trujillo, J.L.; Greco, A.; Guixeres, J.; Llinares, C.; Gentili, C.; Scilingo, E.P.; Alcañiz, M.; Valenza, G. Real vs. immersive-virtual emotional experience: Analysis of psycho-physiological patterns in a free exploration of an art museum. PLoS ONE 2019, 14, e0223881.

- Zou, Z.; Yu, X.; Ergan, S. Integrating biometric sensors, VR, and machine learning to classify EEG signals in alternative architecture designs. In Proceedings of the ASCE International Conference on Computing in Civil Engineering, New Orleans, LO, USA, 11–14 May 2019; pp. 169–176.

- Vaquero-Blasco, M.A.; Perez-Valero, E.; Lopez-Gordo, M.A.; Morillas, C. Virtual Reality as a Portable Alternative to Chromotherapy Rooms for Stress Relief: A Preliminary Study. Sensors 2020, 20, 6211.

- Cruz-Garza, J.G.; Darfler, M.; Rounds, J.D.; Gao, E.; Kalantari, S. EEG-based investigation of the impact of room size and window placement on cognitive performance. J. Build. Eng. 2022, 53, 104540.

- Yeom, S.; Kim, H.; Hong, T. Psychological and physiological effects of a green wall on occupants: A cross-over study in virtual reality. Build. Environ. 2021, 204, 108134.

- Zou, Z.; Ergan, S. Evaluating the effectiveness of biometric sensors and their signal features for classifying human experience in virtual environments. Adv. Eng. Inform. 2021, 49, 101358.

- Kalantari, S.; Tripathi, V.; Kan, J.; Rounds, J.D.; Mostafavi, A.; Snell, R.; Cruz-Garza, J.G. Evaluating the impacts of color, graphics, and architectural features on wayfinding in healthcare settings using EEG data and virtual response testing. J. Environ. Psychol. 2022, 79, 101744.

- Liu, C.; Zhang, Y.; Sun, L.; Gao, W.; Zang, Q.; Li, J. The effect of classroom wall color on learning performance: A virtual reality experiment. Build. Simul. 2022, 15, 2019–2030.

- Kalantari, S.; Rounds, J.D.; Kan, J.; Tripathi, V.; Cruz-Garza, J.G. Comparing physiological responses during cognitive tests in virtual environments vs. in identical real-world environments. Sci. Rep. 2021, 11, 10227.

- Bower, I.S.; Hill, A.T.; Enticott, P.G. Functional brain connectivity during exposure to the scale and color of interior built environments. Hum. Brain Mapp. 2023, 44, 447–457.

- Taherysayah, F.; Malathouni, C.; Liang, H.-N.; Westermann, C. Virtual Reality (VR) and Electroencephalography (EEG) in Architectural Design: A systematic review of empirical studies. J. Build. Eng. 2024, 85, 108611.

- Lau-Zhu, A.; Lau, M.P.H.; McLoughlin, G. Mobile EEG in research on neurodevelopmental disorders: Opportunities and challenges. Dev. Cogn. Neurosci. 2019, 36, 100635.

- Rad, P.N.; Behzadi, F.; Yazdanfar, S.A.; Ghamari, H.; Zabeh, E.; Lashgari, R. Exploring methodological approaches of experimental studies in the field of neuroarchitecture: A systematic review. HERD Health Environ. Res. Des. J. 2023, 16, 284–309.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Vaswani, A. Attention is all you need. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017.

- Grech, R.; Cassar, T.; Muscat, J.; Camilleri, K.P.; Fabri, S.G.; Zervakis, M.; Xanthopoulos, P.; Sakkalis, V.; Vanrumste, B. Review on solving the inverse problem in EEG source analysis. J. Neuroeng. Rehabil. 2008, 5, 25.

- He, B.; Ding, L. Electrophysiological mapping and neuroimaging. In Neural Engineering; Springer: New York, NY, USA, 2012; pp. 499–543.

- Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep learning-based electroencephalography analysis: A systematic review. J. Neural Eng. 2019, 16, 051001.

- Yang, J.; Ma, Z.; Wang, J.; Fu, Y. A Novel Deep Learning Scheme for Motor Imagery EEG Decoding Based on Spatial Representation Fusion. IEEE Access 2020, 8, 202100–202110.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536. [Google Scholar] [CrossRef]

- Graves, A. Long Short-Term Memory; Springer: Singapore, 2012. [Google Scholar] [CrossRef]

- Cho, K. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 84–90. [Google Scholar] [CrossRef]

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013. [Google Scholar] [CrossRef]

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420. [Google Scholar] [CrossRef] [PubMed]

- Li, G.; Lee, C.H.; Jung, J.J.; Youn, Y.C.; Camacho, D. Deep learning for EEG data analytics: A survey. Concurr. Comput. Pract. Exp. 2020, 32, e5199. [Google Scholar] [CrossRef]

- Yang, Y.; Wu, Q.J.; Zheng, W.-L.; Lu, B.-L. EEG-based emotion recognition using hierarchical network with subnetwork nodes. IEEE Trans. Cogn. Dev. Syst. 2017, 10, 408–419. [Google Scholar] [CrossRef]

- Yin, Y.; Zheng, X.; Hu, B.; Zhang, Y.; Cui, X. EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 2021, 100, 106954. [Google Scholar] [CrossRef]

- Zhang, T.; Zheng, W.; Cui, Z.; Zong, Y.; Li, Y. Spatial–temporal recurrent neural network for emotion recognition. IEEE Trans. Cybern. 2018, 49, 839–847. [Google Scholar] [CrossRef]

- Wang, P.; Jiang, A.; Liu, X.; Shang, J.; Zhang, L. LSTM-Based EEG Classification in Motor Imagery Tasks. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2086–2095. [Google Scholar] [CrossRef] [PubMed]

- Kumar, S.; Sharma, A.; Tsunoda, T. Brain wave classification using long short-term memory network based OPTICAL predictor. Sci. Rep. 2019, 9, 9153. [Google Scholar] [CrossRef]

- Liu, T.; Yang, D. A Densely Connected Multi-Branch 3D Convolutional Neural Network for Motor Imagery EEG Decoding. Brain Sci. 2021, 11, 197. [Google Scholar] [CrossRef]

- Chambon, S.; Galtier, M.N.; Arnal, P.J.; Wainrib, G.; Gramfort, A. A deep learning architecture for temporal sleep stage classification using multivariate and multimodal time series. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 758–769. [Google Scholar] [CrossRef]

- Abdallah, M.; An Le Khac, N.; Jahromi, H.; Delia Jurcut, A. A hybrid CNN-LSTM based approach for anomaly detection systems in SDNs. In Proceedings of the 16th International Conference on Availability, Reliability and Security, Vienna, Austria, 17–20 August 2021; pp. 1–7. [Google Scholar]

- Chao, H.; Zhi, H.; Dong, L.; Liu, Y. Recognition of Emotions Using Multichannel EEG Data and DBN-GC-Based Ensemble Deep Learning Framework. Comput. Intell. Neurosci. 2018, 2018, 750904. [Google Scholar] [CrossRef]

- Tayeb, Z.; Fedjaev, J.; Ghaboosi, N.; Richter, C.; Everding, L.; Qu, X.; Wu, Y.; Cheng, G.; Conradt, J. Validating Deep Neural Networks for Online Decoding of Motor Imagery Movements from EEG Signals. Sensors 2019, 19, 210. [Google Scholar] [CrossRef]

- Dose, H.; Møller, J.S.; Iversen, H.K.; Puthusserypady, S. An end-to-end deep learning approach to MI-EEG signal classification for BCIs. Expert Syst. Appl. 2018, 114, 532–542. [Google Scholar] [CrossRef]

- Zhang, R.; Zong, Q.; Dou, L.; Zhao, X. A novel hybrid deep learning scheme for four-class motor imagery classification. J. Neural Eng. 2019, 16, 066004. [Google Scholar] [CrossRef]

- Li, J.; Zhang, Z.; He, H. Hierarchical convolutional neural networks for EEG-based emotion recognition. Cogn. Comput. 2018, 10, 368–380. [Google Scholar]

- Zeng, H.; Wu, Z.; Zhang, J.; Yang, C.; Zhang, H.; Dai, G.; Kong, W. EEG emotion classification using an improved SincNet-based deep learning model. Brain Sci. 2019, 9, 326. [Google Scholar] [CrossRef]

- Li, X.; Song, D.; Zhang, P.; Hou, Y.; Hu, B. Deep fusion of multi-channel neurophysiological signal for emotion recognition and monitoring. Int. J. Data Min. Bioinform. 2017, 18, 1–27. [Google Scholar] [CrossRef]

- Topic, A.; Russo, M. Emotion recognition based on EEG feature maps through deep learning network. Eng. Sci. Technol. Int. J. 2021, 24, 1442–1454. [Google Scholar]

- Xu, B.; Zhang, L.; Song, A.; Wu, C.; Li, W.; Zhang, D.; Xu, G.; Li, H.; Zeng, H. Wavelet transform time-frequency image and convolutional network-based motor imagery EEG classification. IEEE Access 2018, 7, 6084–6093. [Google Scholar] [CrossRef]

- Alhagry, S.; Fahmy, A.A.; El-Khoribi, R.A. Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 355–358. [Google Scholar] [CrossRef]

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adeli, H. Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 2018, 100, 270–278. [Google Scholar] [CrossRef]

- Affes, A.; Mdhaffar, A.; Triki, C.; Jmaiel, M.; Freisleben, B. A convolutional gated recurrent neural network for epileptic seizure prediction. In Proceedings of the How AI Impacts Urban Living and Public Health: 17th International Conference, ICOST 2019, New York City, NY, USA, 14–16 October 2019; pp. 85–96. [Google Scholar]

- Supratak, A.; Dong, H.; Wu, C.; Guo, Y. DeepSleepNet: A model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1998–2008. [Google Scholar] [CrossRef] [PubMed]

- Enobio. Neuroelectrics Enobio Instructions for Use; Neuroelectrics: Barcelona, Spain, 2022. [Google Scholar]

- He, C.; Chen, Y.-Y.; Phang, C.-R.; Stevenson, C.; Chen, I.-P.; Jung, T.-P.; Ko, L.-W. Diversity and Suitability of the State-of-the-Art Wearable and Wireless EEG Systems Review. IEEE J. Biomed. Health Inform. 2023, 27, 3830–3843. [Google Scholar] [CrossRef] [PubMed]

- Rocha, P.; Dagnino, P.C.; O’Sullivan, R.; Soria-Frisch, A.; Paúl, C. BRAINCODE for Cognitive Impairment Diagnosis in Older Adults: Designing a Case–Control Pilot Study. Int. J. Environ. Res. Public Health 2022, 19, 5768. [Google Scholar] [CrossRef]

- Vidyarani, K.; Dhananjaya, B.; Tejaswini, S. Driver drowsiness detection using LabVIEW. IAETSD J. Adv. Res. Appl. Sci. 2018, 5, 23–32. [Google Scholar]

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21. [Google Scholar] [CrossRef]

- Oostenveld, R.; Fries, P.; Maris, E.; Schoffelen, J.-M. FieldTrip: Open Source Software for Advanced Analysis of MEG, EEG, and Invasive Electrophysiological Data. Comput. Intell. Neurosci. 2011, 2011, 156869. [Google Scholar] [CrossRef]

- Cohen, M. Analyzing Neural Time Series Data: Theory and Practice; MIT Press: Cambridge, MA, USA, 2014. [Google Scholar]

- Balconi, M.; Mazza, G. Brain oscillations and BIS/BAS (behavioral inhibition/activation system) effects on processing masked emotional cues: ERS/ERD and coherence measures of alpha band. Int. J. Psychophysiol. 2009, 74, 158–165. [Google Scholar] [CrossRef]

- Davidson, R.J. What does the prefrontal cortex “do” in affect: Perspectives on frontal EEG asymmetry research. Biol. Psychol. 2004, 67, 219–234. [Google Scholar] [CrossRef]

- Collado-Mateo, D.; Adsuar, J.C.; Olivares, P.R.; Cano-Plasencia, R.; Gusi, N. Using a dry electrode EEG device during balance tasks in healthy young-adult males: Test–retest reliability analysis. Somatosens. Mot. Res. 2015, 32, 219–226. [Google Scholar] [CrossRef]

- Google-Images-Search Library. Available online: https://pypi.org/project/Google-Images-Search/ (accessed on 25 January 2025).

- Soleymani, M.; Asghari-Esfeden, S.; Fu, Y.; Pantic, M. Analysis of EEG Signals and Facial Expressions for Continuous Emotion Detection. IEEE Trans. Affect. Comput. 2016, 7, 17–28. [Google Scholar] [CrossRef]

- Herzog, T.R.; Bryce, A.G. Mystery and Preference in Within-Forest Settings. Environ. Behav. 2007, 39, 779–796. [Google Scholar] [CrossRef]

- Ekman, P.; Davidson, R.J. Voluntary Smiling Changes Regional Brain Activity. Psychol. Sci. 1993, 4, 342–345. [Google Scholar] [CrossRef]

- Lang, P.; Bradley, M.M. The International Affective Picture System (IAPS) in the study of emotion and attention. In Handbook of Emotion Elicitation and Assessment; Oxford University Press: Oxford, UK, 2007; Volume 29, pp. 70–73. [Google Scholar]

- Jarcho, J.M.; Berkman, E.T.; Lieberman, M.D. The neural basis of rationalization: Cognitive dissonance reduction during decision-making. Soc. Cogn. Affect. Neurosci. 2011, 6, 460–467. [Google Scholar] [CrossRef]

- Greco, A.; Valenza, G.; Scilingo, E.P. Advances in Electrodermal Activity Processing with Applications for Mental Health; Springer: Singapore, 2016. [Google Scholar] [CrossRef]

- Olofsson, J.K.; Nordin, S.; Sequeira, H.; Polich, J. Affective picture processing: An integrative review of ERP findings. Biol. Psychol. 2008, 77, 247–265. [Google Scholar] [CrossRef] [PubMed]

- Schupp, H.T.; Markus, J.; Weike, A.I.; Hamm, A.O. Emotional Facilitation of Sensory Processing in the Visual Cortex. Psychol. Sci. 2003, 14, 7–13. [Google Scholar] [CrossRef]

- Delplanque, S.; Lavoie, M.E.; Hot, P.; Silvert, L.; Sequeira, H. Modulation of cognitive processing by emotional valence studied through event-related potentials in humans. Neurosci. Lett. 2004, 356, 1–4. [Google Scholar] [CrossRef] [PubMed]

- Keil, A.; Bradley, M.M.; Hauk, O.; Rockstroh, B.; Elbert, T.; Lang, P.J. Large-scale neural correlates of affective picture processing. Psychophysiology 2002, 39, 641–649. [Google Scholar] [CrossRef]

- Hajcak, G.; Weinberg, A.; MacNamara, A.; Foti, D. ERPs and the study of emotion. In The Oxford Handbook of Event-Related Potential Components; Oxford University Press: Oxford, UK, 2012; pp. 441–474. [Google Scholar]

- Cuthbert, B.N.; Schupp, H.T.; Bradley, M.M.; Birbaumer, N.; Lang, P.J. Brain potentials in affective picture processing: Covariation with autonomic arousal and affective report. Biol. Psychol. 2000, 52, 95–111. [Google Scholar] [CrossRef]

- Franz, G.; Wiener, J.M. From Space Syntax to Space Semantics: A Behaviorally and Perceptually Oriented Methodology for the Efficient Description of the Geometry and Topology of Environments. Environ. Plan. B Plan. Des. 2008, 35, 574–592. [Google Scholar] [CrossRef]

- Nasar, J.L. Urban Design Aesthetics. Environ. Behav. 1994, 26, 377–401. [Google Scholar] [CrossRef]

- Chu, S.K.; Cha, S.H. Human Experience Using Virtual Reality for an Optimal Architectural Design. KIBIM Mag. 2024, 14, 1–10. [Google Scholar] [CrossRef]

- Banaei, M.; Ahmadi, A.; Gramann, K.; Hatami, J. Emotional evaluation of architectural interior forms based on personality differences using virtual reality. Front. Archit. Res. 2020, 9, 138–147. [Google Scholar]

- Razoumnikova, O.M. Functional organization of different brain areas during convergent and divergent thinking: An EEG investigation. Cogn. Brain Res. 2000, 10, 11–18. [Google Scholar] [CrossRef]

- Cohen, J. A Power Primer. In Methodological Issues and Strategies in Clinical Research; American Psychological Association: Washington, DC, USA, 2016. [Google Scholar]

- Faul, F.; Erdfelder, E.; Lang, A.-G.; Buchner, A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 2007, 39, 175–191. [Google Scholar] [CrossRef] [PubMed]