1. Introduction

The stability of modern artificial intelligence (AI) models is essential for various applications and devices [

1,

2,

3,

4]. Machine learning (ML) techniques are increasingly integrating additional technologies to tackle cybersecurity challenges. As new vulnerabilities arise, these threats can compromise the integrity of the ML models, which attackers specifically target [

5]. Notably, data poisoning (DP) attacks are designed to undermine the integrity of a model during both the training and testing stages. Hence, there is a pressing need to develop advanced defense strategies that bolster the resilience of these models against potential DP threats while ensuring the overall reliability of AI-based systems [

6]. AI-adapted decision systems have seen extensive development capitalizing on the availability of data to enhance their efficiency [

7]. These traffic datasets often serve as comprehensive records that encompass various attributes, features, and relevant information. The enormous volume of network traffic carrying such attributes within centralized systems makes it challenging to maintain the effectiveness of AI-driven cybersecurity models [

8,

9,

10,

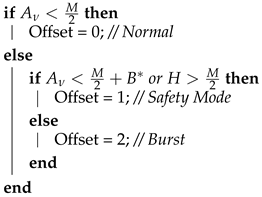

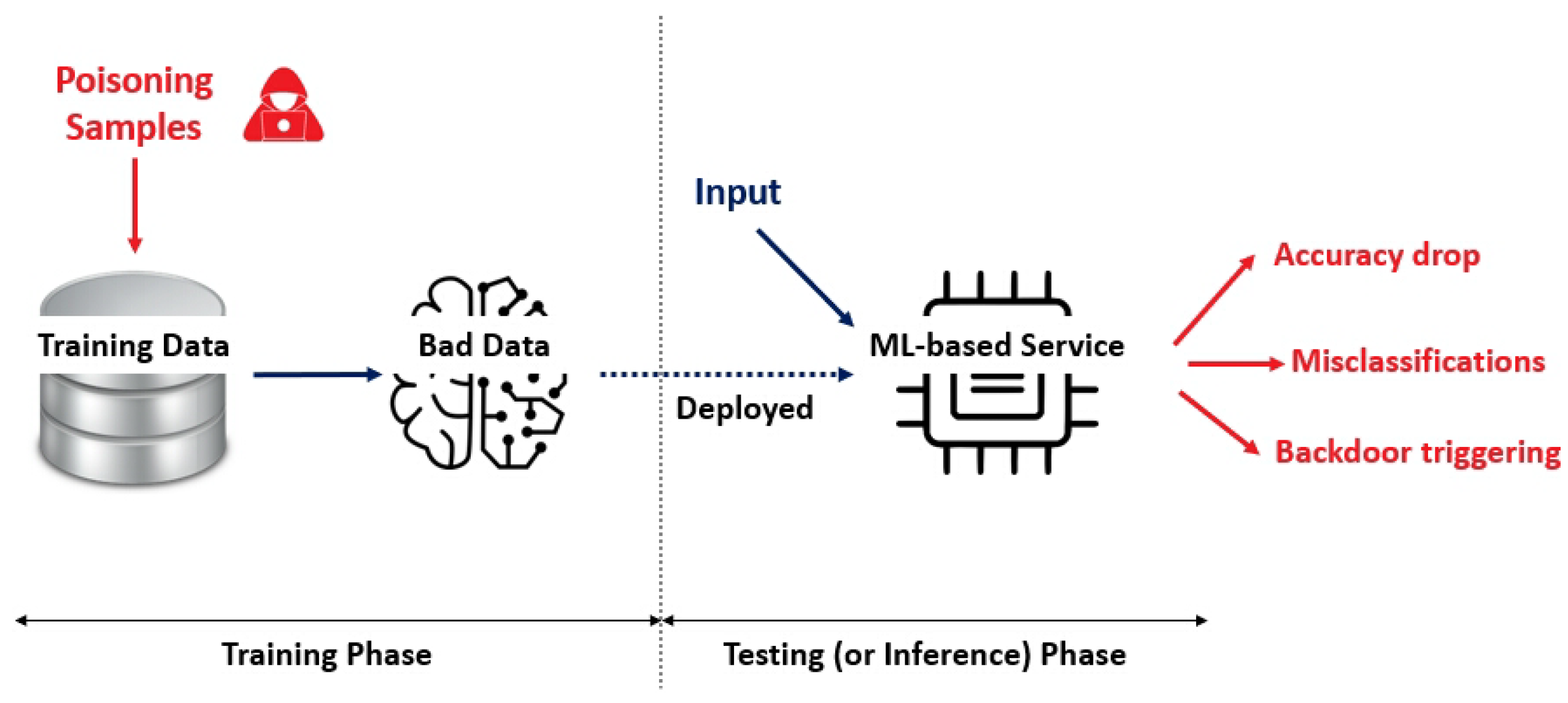

11]. Security threats posed to machine learning frameworks are generally categorized into two phases: training and testing (or inference). Importantly, data poisoning (DP) attacks can manifest during both phases, presenting significant risks to the integrity of these models (see

Figure 1). DP not only encompasses the inclusion of corrupted data samples but also involves data that lead to unauthorized alterations of hyper-parameters in AI models through illicit means [

6]. A prevalent form of DP attack includes the injection of malicious samples into the targeted training set, which disrupts either the feature values or the labels of the training examples during the training phase, thereby affecting the model reliability by modifying its hyper-parameters [

6].
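To make the label-corruption variant concrete, the following minimal Python sketch flips a chosen fraction of training labels to a different, randomly chosen class. The function name `flip_labels` and its parameters are hypothetical; the sketch only illustrates the general attack pattern described above and is not a method from this paper.

```python
import random


def flip_labels(labels, fraction, num_classes, seed=0):
    """Return a poisoned copy of `labels` and the sorted indices that were flipped.

    Hypothetical illustration of label-flipping poisoning: a fraction of the
    training labels is replaced with a different class chosen at random.
    """
    rng = random.Random(seed)
    poisoned = list(labels)
    n_poison = int(round(fraction * len(labels)))
    victims = rng.sample(range(len(labels)), n_poison)
    for i in victims:
        # pick any class other than the true one
        choices = [c for c in range(num_classes) if c != labels[i]]
        poisoned[i] = rng.choice(choices)
    return poisoned, sorted(victims)


# Usage: poison 10% of a toy binary training set
clean = [i % 2 for i in range(100)]
poisoned, flipped = flip_labels(clean, fraction=0.1, num_classes=2)
changed = sum(a != b for a, b in zip(clean, poisoned))
print(changed)  # 10
```

A model trained on `poisoned` instead of `clean` would learn from 10 corrupted examples; in practice, attackers typically choose the victims strategically rather than at random.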

DP attacks can occur not only during the training phase but also during the testing phase, where the emphasis is often on altering model parameters rather than corrupting the test samples themselves [12,13]. Many studies have found that the predominant defense strategies at the testing stage focus on protecting the integrity of the testing nodes: rather than directly filtering or securing the data, they safeguard the framework within which testing occurs [6,14]. In a related direction, a method for range ambiguity suppression in spaceborne SAR systems based on blind source separation has been proposed, contributing robust signal processing techniques applicable to DP detection [15]. Trusted Execution Environments (TEEs) have also been introduced as secure execution environments that provide substantial protection for both code and data, keeping the information they contain confidential and preserving its integrity against potential threats [14]. Although TEEs have been studied extensively within specific AI systems, the methodologies for building these secure environments apply beyond AI scenarios [6]. In addition, various non-AI-specific defense mechanisms can help protect trained ML models during the testing phase [16,17].

Blockchain-based defense mechanisms, in particular, have gained attention because they can ensure the integrity of connected nodes throughout distributed networks. They are designed not only to enhance the security of the network as a whole but also to manage the risks of data exposure and manipulation. Their key advantage is adaptability: they can be integrated into AI systems as defensive strategies against DP attacks, helping to protect AI models from vulnerabilities that could compromise their performance and reliability. Leveraging blockchain-based approaches can therefore establish a robust framework for defending against malicious DP activities and contribute to more secure and trustworthy AI deployments [6].

Blockchain technologies have been used extensively in applications far beyond cryptocurrencies. A blockchain is a cryptographically secured ledger replicated across all participating nodes, providing an infrastructure for secure transactions between entities that may not know or trust one another [18,19,20,21]. Blockchain not only secures these transactions but also helps maintain the integrity of the nodes in the network. Unlike traditional approaches, it provides a decentralized, tamper-resistant security mechanism. Its peer-to-peer distributed ledger removes the single points of failure typical of centralized data storage, which makes it more resilient against threats such as data alteration and unauthorized access. Cryptographic features uphold the integrity and authenticity of transactions and thereby increase trust among participants. Furthermore, the immutability of the ledger provides transparent audit trails that enhance accountability in sectors such as finance, healthcare, and supply chain management. Blockchain can also be integrated with AI systems, adding layers of security through defense strategies against threats such as DP. These characteristics make blockchain a strong alternative to conventional security measures for contemporary cybersecurity challenges, and it has consequently been integrated into a growing number of cybersecurity applications.
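The tamper resistance described above rests on each block storing the hash of its predecessor. The minimal Python sketch below shows that mechanism in a single process; it is a simplified illustration that omits consensus, digital signatures, and networking, and the helper names (`block_hash`, `append_block`, `verify`) are hypothetical.

```python
import hashlib
import json


def block_hash(block):
    # Deterministic SHA-256 over the block's JSON encoding (keys sorted)
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, data):
    # Link the new block to the hash of the previous one (all zeros for genesis)
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "data": data})


def verify(chain):
    # Every block must store the current hash of its predecessor
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


ledger = []
for record in ["model update A", "model update B", "model update C"]:
    append_block(ledger, record)
print(verify(ledger))  # True

# Tampering with any earlier block breaks the link stored in its successor
ledger[1]["data"] = "poisoned update"
print(verify(ledger))  # False
```

Because every node holds a replica of the ledger, a tampered copy can be detected by comparing hashes with the honest majority; this is why control of the majority becomes the critical attack surface.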
Among the most pressing concerns addressed by these emerging techniques is the potential for a 51 percent attack, a method where an entity gains control over the majority of the network, compromising its security [

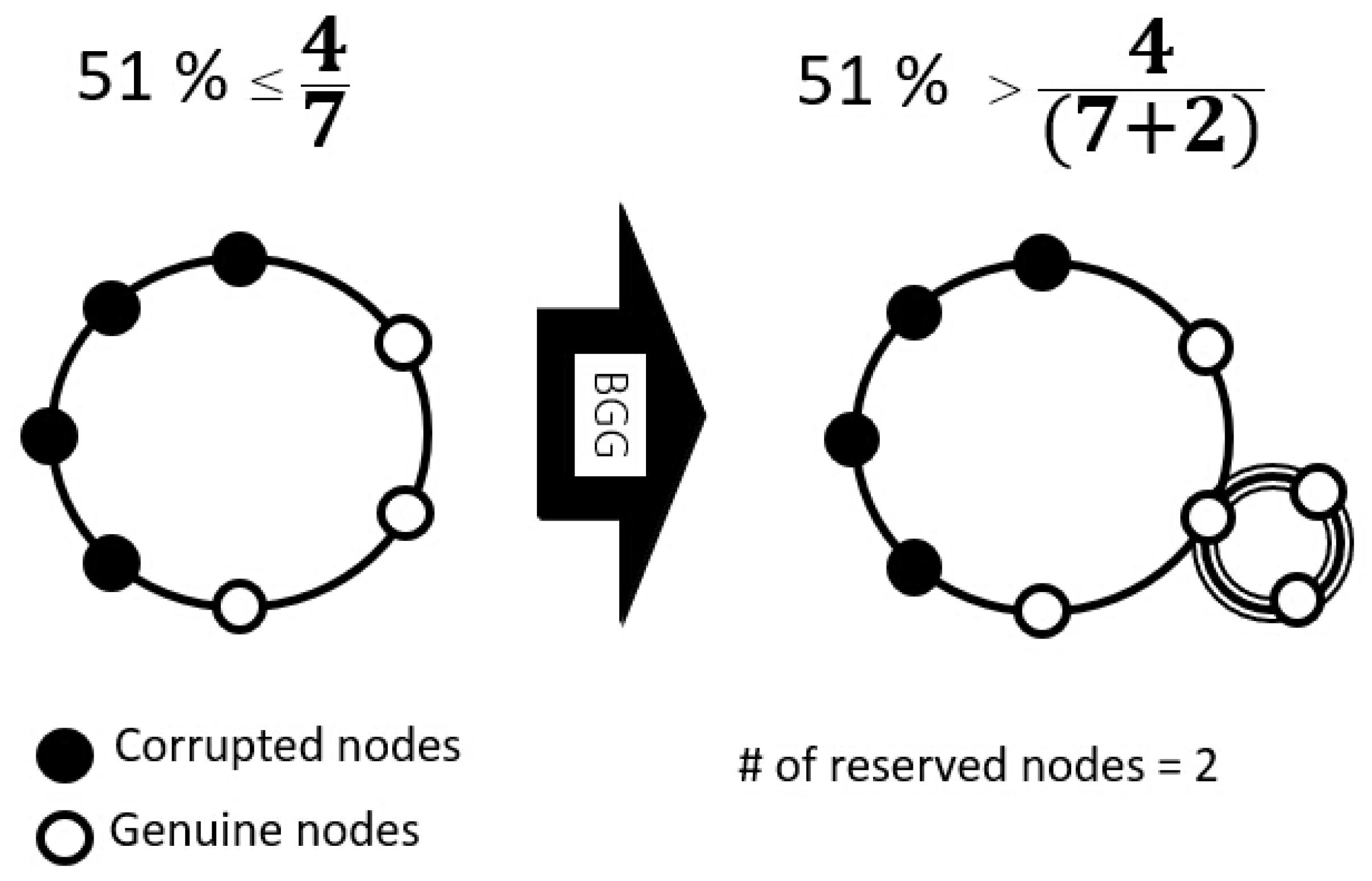

22]. Efforts to design effective countermeasures against this kind of attack reflect the growing importance of blockchain in protecting against the vulnerabilities inherent in centralized systems, thus augmenting its presence in the cybersecurity landscape [

17]. The 51 percent attack represents a significant threat in the blockchain ecosystem, as it involves a malicious entity gaining control over the majority of the computational power of networks. This type of attack can lead to the generation of fraudulent blocks containing false transaction information, effectively undermining the integrity of the blockchain. Such vulnerabilities are especially concerning, as attackers can exploit this power to affect various applications, including artificial intelligence (AI) systems, potentially compromising their operations. A significant benefit of blockchain-based networks lies in their built-in ability to withstand various conventional cyber threats which frequently impact centralized systems. By distributing information across a wide array of nodes and employing cryptographic techniques, these networks enhance their defenses and create a more secure operational environment compared to traditional setups. By distributing data across numerous nodes and utilizing cryptographic technologies, blockchain networks are better equipped to guard against attacks that could have severe repercussions in centralized environments, thereby enhancing their overall security posture in the digital landscape [

16,

17].
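Why the 50 percent threshold matters can be illustrated with the catch-up probability from the original Bitcoin whitepaper, which models the race between an attacker and the honest chain as a gambler's ruin problem in a proof-of-work setting. This is a swapped-in illustration, not part of the BGG analysis in this paper, and the function name is hypothetical.

```python
def catch_up_probability(q, z):
    """Probability that an attacker with fraction q of the computational power
    ever overtakes an honest chain that is z blocks ahead (gambler's ruin)."""
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be in [0, 1]")
    p = 1.0 - q  # honest share of the computational power
    if q >= p:
        return 1.0  # an attacker with at least half the power eventually catches up
    return (q / p) ** z  # otherwise the probability decays exponentially in z


# Usage: compare attacker shares when the honest chain is 6 blocks ahead
for q in (0.10, 0.30, 0.45, 0.51):
    print(q, catch_up_probability(q, z=6))
```

Below one half, the attacker's chance shrinks exponentially with the number of confirmations; at or above one half, it becomes certain, which is exactly the regime a 51 percent attack exploits.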

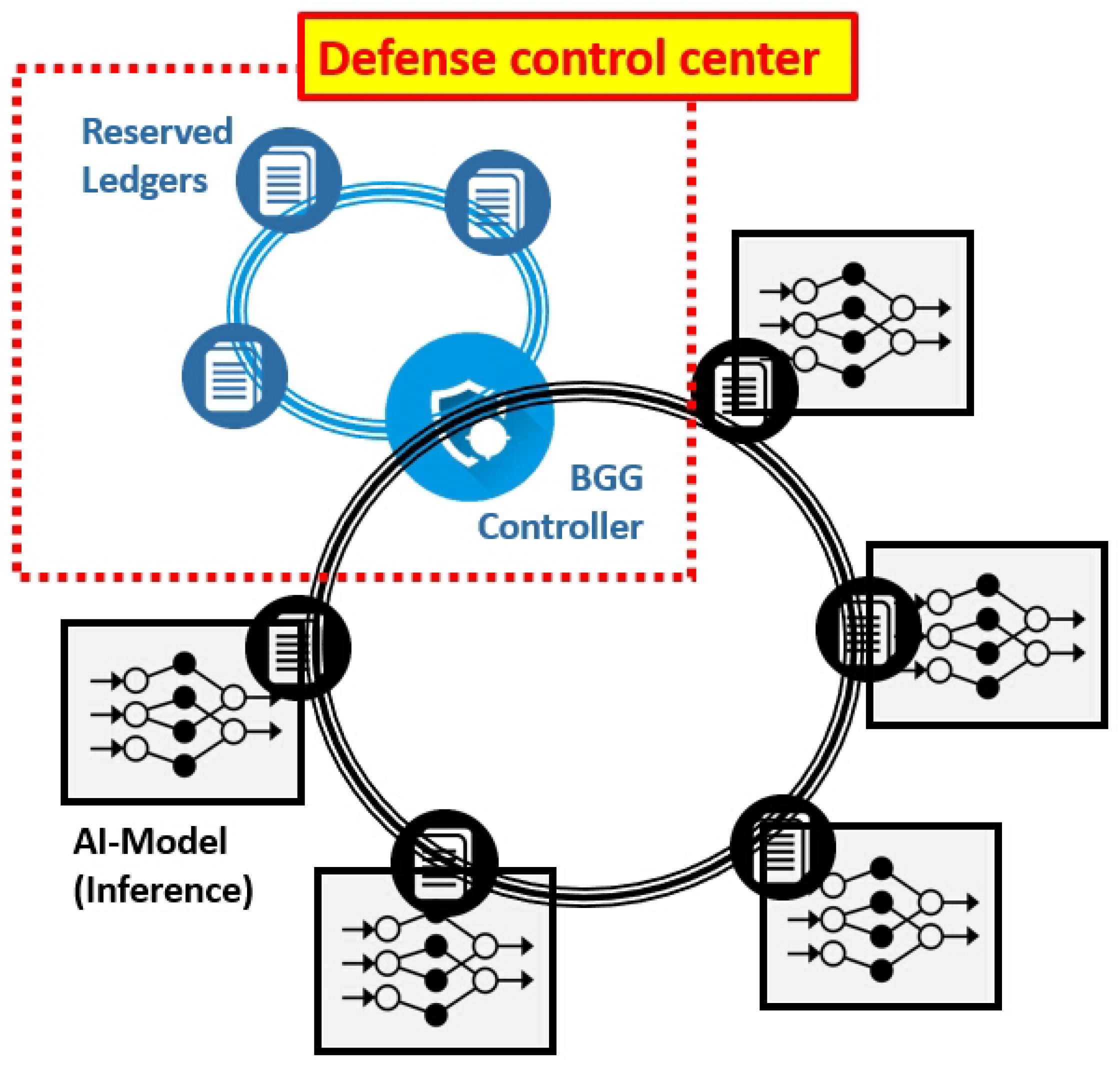

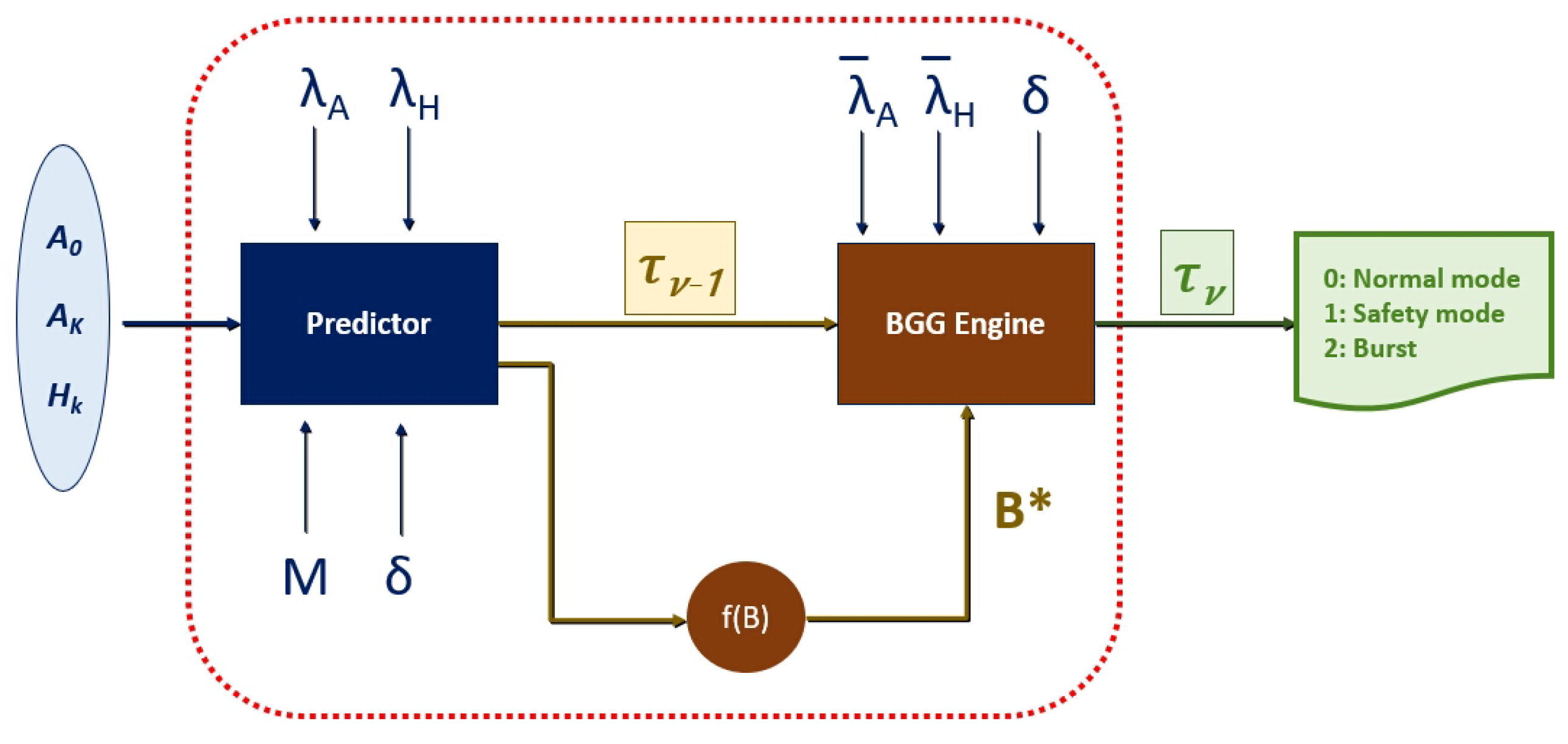

The main contribution of this paper is the integration of blockchain technology into a robust DP defense system designed for distributed collective AI model networks. First, the Blockchain Governance Game (BGG) is extended with AI methodologies to enhance its effectiveness in cybersecurity applications. Second, the framework is designed to prevent 51 percent attacks, safeguarding the integrity of the network against majority-control threats. Third, the proposed BGG controller operates at the intersection of blockchain and AI, using ML and deep learning (DL) algorithms to improve security performance. The BGG controller comprises two key components: the Predictor, which uses a convolutional neural network (CNN) to anticipate attacks by predicting when an adversary may acquire more than half of the nodes, and the BGG decision engine, which is designed based on BGG theory. The controller is intended to deliver high cybersecurity performance even with limited data collection and minimal computational power.

Unlike typical approaches that mainly concentrate on filtering damaged data or securing systems, the proposed BGG-based method takes a proactive stance by anticipating potential DP attack scenarios through the CNN-based Predictor. This enables prompt defensive maneuvers that safeguard the integrity of AI systems throughout their operational stages. In addition, blockchain strengthens data integrity and security through decentralized validation and an immutable ledger, mitigating risks that centralized systems might overlook. Combining ML algorithms with the BGG supports adaptive responses and resource optimization, which distinguishes this DP defense mechanism from traditional methods.
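The two-stage structure of the controller (predict, then decide) can be sketched as follows. This is a minimal sketch under explicit assumptions: `predict_attacked` replaces the CNN Predictor with a naive linear extrapolation, and `decide` replaces the BGG engine with a placeholder rule that adds reserved honest nodes whenever a majority takeover is predicted. Both functions, the `Decision` type, and the reserve rule are hypothetical and do not reproduce the BGG theory used in the paper.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    predicted_majority: bool  # attacker predicted to govern more than half
    nodes_to_add: int         # reserved honest nodes to deploy
    defended: bool            # False if the reserve is too small


def predict_attacked(history):
    """Hypothetical stand-in for the CNN Predictor: linearly extrapolate the
    next number of attacked nodes from the last two observations."""
    if len(history) < 2:
        return history[-1]
    return max(0, 2 * history[-1] - history[-2])


def decide(history, total_nodes, reserved_nodes):
    """Hypothetical stand-in for the BGG engine: if the attacker is predicted
    to govern more than half of the nodes, deploy just enough reserved honest
    nodes to remove that majority, provided the reserve allows it."""
    attacked = predict_attacked(history)
    if 2 * attacked <= total_nodes:
        return Decision(False, 0, True)
    # smallest k such that attacked <= (total_nodes + k) / 2
    needed = 2 * attacked - total_nodes
    if needed <= reserved_nodes:
        return Decision(True, needed, True)
    return Decision(True, 0, False)  # required response exceeds reserve capacity


# Usage: 100 nodes, attacked counts observed at two consecutive moments
print(decide([40, 48], total_nodes=100, reserved_nodes=20))
print(decide([40, 48], total_nodes=100, reserved_nodes=10))
```

The second call shows the failure mode the paper reports in testing: an attack can be predicted correctly yet remain undefended when the reserved nodes are insufficient.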

This paper is organized as follows. This section has introduced the basics of DP attacks and their defense mechanisms. Section 2 introduces the theoretical background of the BGG and the structure of the BGG-based DP defense network. Section 3 presents the BGG controller, the core component of the BGG-based DP defense network; located in the control center, it is the decision system of the machine-learning-enabled BGG, and its sub-components, the Predictor and the BGG engine, are also explained there. Section 4 provides the simulation results of the BGG controller. Finally, Section 5 concludes the paper.

4. BGG Controller Simulation Results

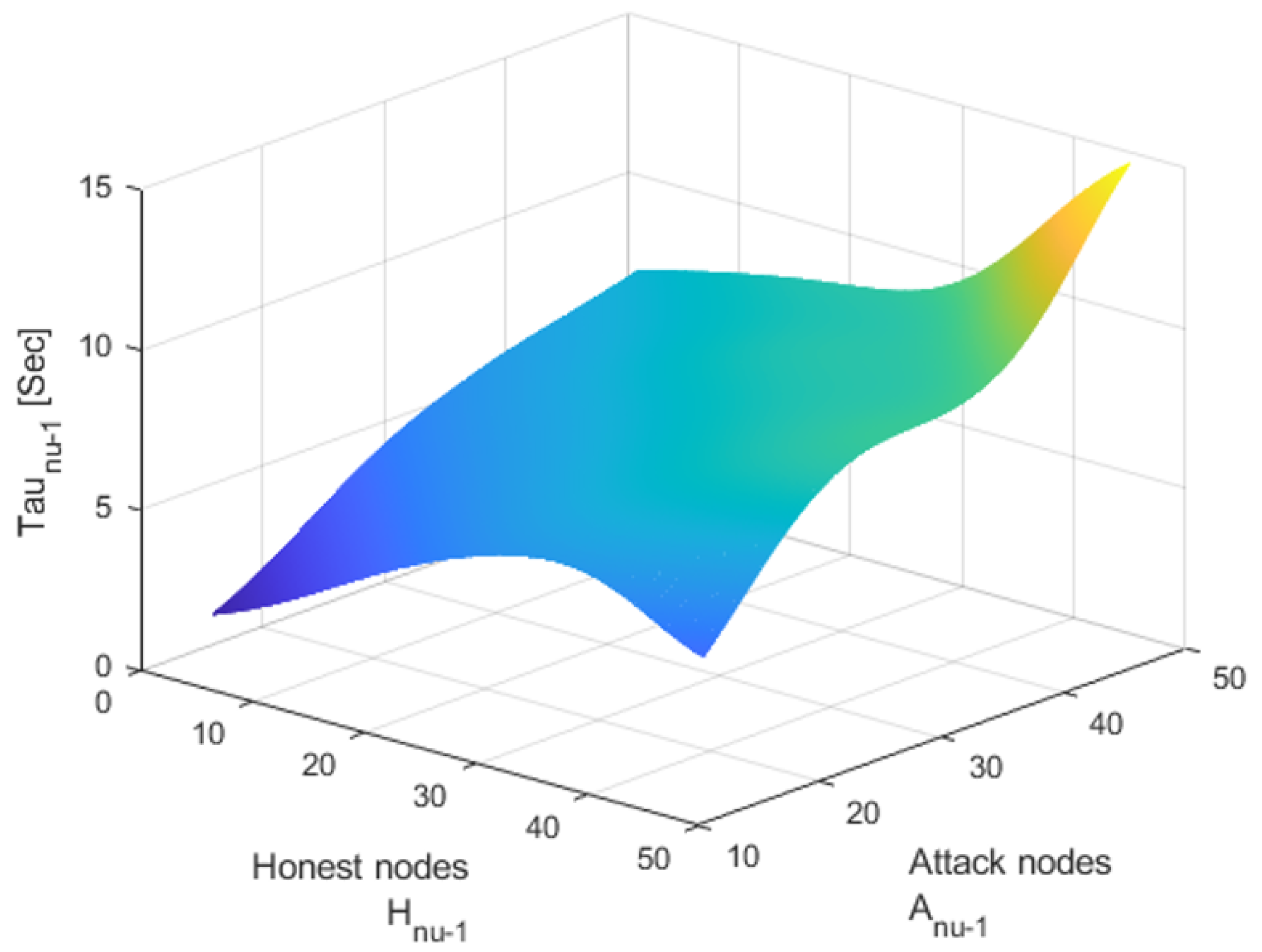

Because actual datasets for governance blocks are not currently available, the training and testing datasets for the BGG controller were generated by systematic random sampling. The generated samples mainly consist of the numbers of attacked nodes at each observation moment, which serve as the inputs for making the decision; the decision concerns choosing the best strategy for operating the DP defense mechanism. In total, 10,000 samples were created, each a simulated scenario in which nodes are attacked at specific moments. The samples are partitioned into separate sets: 70% for training, 15% for validation, and 15% for testing. The Predictor is trained to predict whether an attacker will govern more than half of the total number of nodes at the next observation moment. The progress measures of the CNN (i.e., the Predictor) are shown in Table 1. This design is intended to cover a range of attack patterns and dynamics between genuine and corrupted nodes, with the aim of letting the model generalize from these simulated conditions to real-world scenarios. Careful dataset generation and partitioning are therefore important for the controller's predictive capability against DP attacks.
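A dataset of this kind can be sketched in Python as below. The random-walk dynamics of the attacked-node count, the window of 8 observation moments, the network size of 100 nodes, and the function names are all hypothetical choices made for illustration; only the sample count, the "more than half at the next moment" label, and the 70/15/15 split come from the text above.

```python
import random


def generate_samples(n_samples, total_nodes, window, seed=0):
    """Hypothetical generator: each sample is a window of attacked-node counts
    at successive observation moments, labeled 1 if the attacker governs more
    than half of the nodes at the next moment, else 0."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        count = rng.randint(0, total_nodes // 2)
        series = []
        for _ in range(window + 1):
            # assumed dynamics: random walk clipped to [0, total_nodes]
            count = min(total_nodes, max(0, count + rng.randint(-3, 5)))
            series.append(count)
        label = int(2 * series[-1] > total_nodes)
        samples.append((series[:-1], label))
    return samples


def split(samples, train_pct=70, val_pct=15, seed=0):
    """Shuffle and partition into training / validation / test sets."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_pct // 100  # integer arithmetic avoids rounding drift
    n_val = len(shuffled) * val_pct // 100
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


data = generate_samples(10_000, total_nodes=100, window=8)
train_set, val_set, test_set = split(data)
print(len(train_set), len(val_set), len(test_set))  # 7000 1500 1500
```

Generating fresh samples with a different `seed` reproduces the trial-to-trial variation noted below, since each trial then trains on different data.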

Note that the measurements of the BGG controller may vary between trials because the training data for the machine learning are generated anew for each trial. In this trial, the best validation cross-entropy performance was reached at epoch 6, as shown in Figure 6a, and training stopped after 12 epochs based on the validation performance.
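The relationship between the best epoch (6) and the stopping epoch (12) is consistent with validation-based early stopping with a patience of six epochs. The patience value, the function name, and the loss values below are assumptions for illustration; the sketch shows the stopping rule, not the paper's training code.

```python
def early_stopping(val_losses, patience=6):
    """Return (best_epoch, stop_epoch), both 1-based.

    Training stops once the validation loss has failed to improve on its best
    value for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    fails = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch, fails = loss, epoch, 0
        else:
            fails += 1
            if fails >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses)


# Hypothetical validation cross-entropy values with their minimum at epoch 6
losses = [0.60, 0.45, 0.38, 0.33, 0.31, 0.30, 0.31, 0.32, 0.32, 0.33, 0.34, 0.35, 0.36]
print(early_stopping(losses))  # (6, 12)
```

The weights from the best epoch, not the last one, are normally kept, so the six extra epochs only serve to confirm that validation performance has stopped improving.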

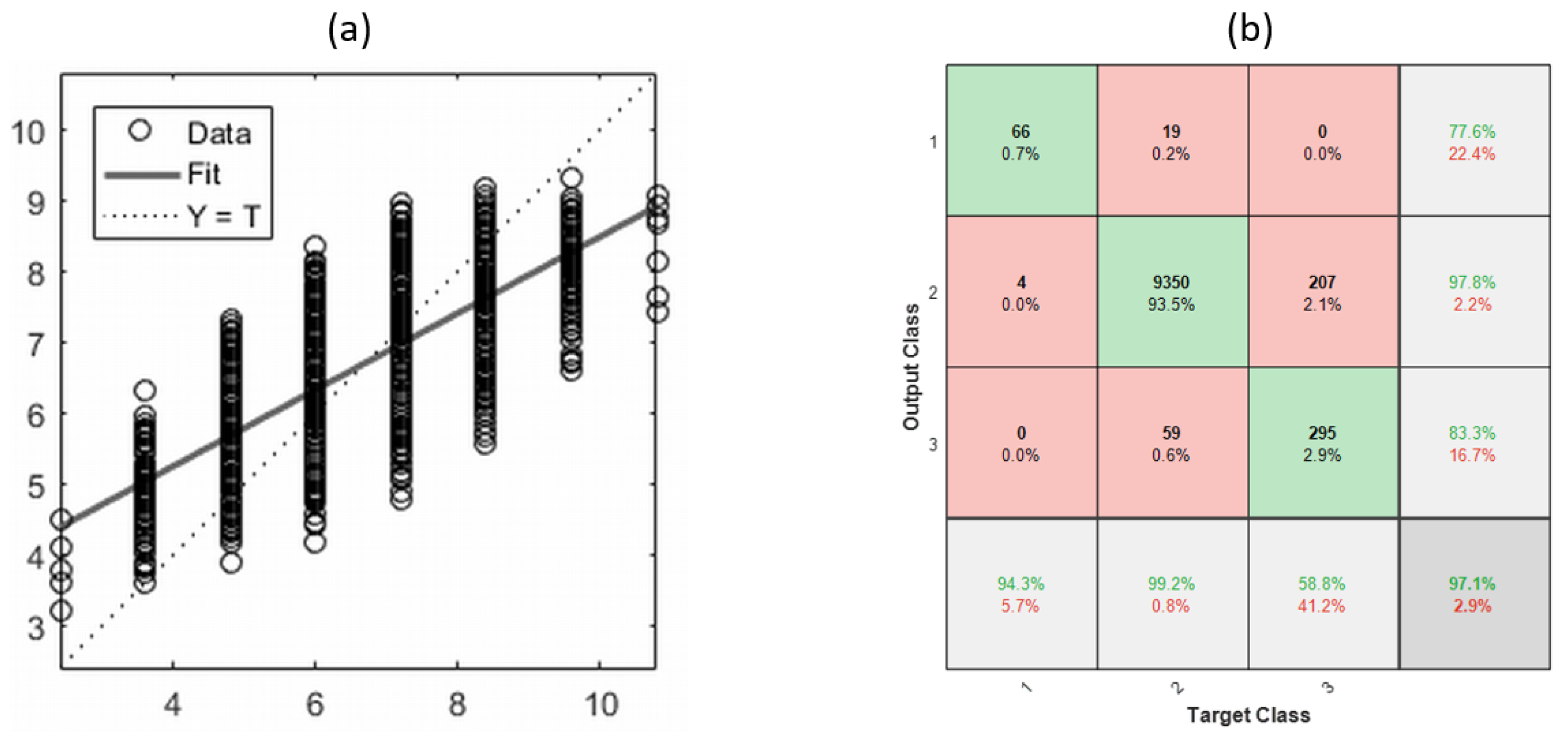

After the feed-forward CNN (i.e., the Predictor) has been trained based on 10,000 samples, an error histogram summarizes the gaps between the target values and the predicted values. Figure 6b shows this error histogram, computed with 20 bins on the simulated training data. The simulation also provides the regression plot of the Predictor training, shown in Figure 7a, which relates the target variable $T$ (the simulated ground truth) to the output variable $Y$ (the Predictor's response) through a linear regression function (23). This function indicates the linear relationship between the targets and the outputs learned during training. The training mean square error (MSE) was also computed in this simulation.
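Both diagnostics can be computed from paired targets $T$ and outputs $Y$ as in the minimal Python sketch below. It assumes errors are defined as $T - Y$, bins them into 20 equal-width bins, and fits $Y = aT + b$ by ordinary least squares; the symbols $a$ and $b$, the function names, and the sample values are illustrative assumptions, not results from the paper.

```python
def error_histogram(targets, outputs, n_bins=20):
    """Bin the errors e = t - y into n_bins equal-width bins.

    Returns (bin_centers, counts)."""
    errors = [t - y for t, y in zip(targets, outputs)]
    lo, hi = min(errors), max(errors)
    width = (hi - lo) / n_bins or 1.0  # fall back to unit width if all errors are equal
    counts = [0] * n_bins
    for e in errors:
        # the maximum error would map to index n_bins, so clamp it into the last bin
        idx = min(int((e - lo) / width), n_bins - 1)
        counts[idx] += 1
    centers = [lo + (i + 0.5) * width for i in range(n_bins)]
    return centers, counts


def fit_regression(targets, outputs):
    """Ordinary least-squares fit of Y = a*T + b, plus the MSE between T and Y."""
    n = len(targets)
    mean_t = sum(targets) / n
    mean_y = sum(outputs) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(targets, outputs))
    var_t = sum((t - mean_t) ** 2 for t in targets)
    if var_t == 0:
        raise ValueError("targets must not all be equal")
    a = cov / var_t
    b = mean_y - a * mean_t
    mse = sum((t - y) ** 2 for t, y in zip(targets, outputs)) / n
    return a, b, mse


# Hypothetical targets (1 = attacker majority at next moment) and Predictor outputs
T = [0, 0, 1, 1]
Y = [0.1, 0.0, 0.9, 1.0]
centers, counts = error_histogram(T, Y)
a, b, mse = fit_regression(T, Y)
print(sum(counts), a, b, mse)
```

A slope $a$ close to 1 and an intercept $b$ close to 0 indicate that the outputs track the targets closely, and a histogram concentrated near zero error tells the same story from a different angle.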

In the testing phase, an additional dataset of 10,000 attack samples was used to evaluate the BGG controller, in which the Predictor and the BGG engine operate together. Testing outcomes may differ between runs because the testing data are generated randomly. The confusion matrix in Figure 7b shows that the BGG controller achieves a prediction accuracy of 96%. However, for some correctly predicted attack attempts (82 out of 10,000), the BGG controller cannot defend the network because the required response would exceed the system capacity, owing to a shortage of reserved nodes. Consequently, the overall performance score of the BGG controller is 94.2%. Repeated training may also yield different simulation results because of variations in the initial conditions and in the random selection of samples for each data split.
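How a confusion matrix yields accuracy, and how undefended attacks could lower a performance score, can be sketched as follows. The score definition here (correct predictions minus correctly predicted but undefended attacks, over all samples) is an assumption made for illustration, and the counts are hypothetical rather than the values behind Figure 7b.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correct predictions in a binary confusion matrix."""
    return (tp + tn) / (tp + tn + fp + fn)


def performance_score(tp, tn, fp, fn, undefended):
    """Assumed score: a correctly predicted attack only counts if it was also
    defended (e.g. the reserve capacity was not exceeded)."""
    if not 0 <= undefended <= tp:
        raise ValueError("undefended must be between 0 and tp")
    total = tp + tn + fp + fn
    return (tp + tn - undefended) / total


# Hypothetical confusion-matrix counts for 1,000 test samples
tp, tn, fp, fn = 300, 600, 60, 40
print(accuracy(tp, tn, fp, fn))                             # 0.9
print(performance_score(tp, tn, fp, fn, undefended=20))     # 0.88
```

Under this definition, the score can never exceed the accuracy, and the gap between them measures how often correct predictions fail to translate into an effective defense.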