1. Introduction

Masonry work plays a crucial role in construction projects, directly influencing both schedules and costs [1,2]. The efficiency of masonry work is determined by several factors, including brick transportation speed, placement accuracy, and worker fatigue [3,4,5]. Given the labor-intensive nature of masonry tasks, workers are required to perform repetitive and physically demanding activities [6], which can lead to variations in productivity and an increased risk of fatigue over time [3]. These variations can cause delays in project timelines and inconsistencies in construction quality [7]. To mitigate these challenges, real-time monitoring and quantitative productivity assessment are essential for optimizing efficiency and maintaining consistency in masonry work [7,8,9].

Accordingly, computer vision-based monitoring systems have been widely adopted in construction to automate worker activity tracking [10,11,12], productivity assessment [13,14], and safety monitoring [12,15,16,17,18]. These systems leverage deep learning-based object detection and tracking algorithms to identify workers [17,19,20], analyze movement patterns [21,22], and evaluate task efficiency [23,24]. Among these, You Only Look Once (YOLO), Faster Region-based Convolutional Neural Network (Faster R-CNN), and Single Shot Multibox Detector (SSD) are commonly used models, offering real-time detection capabilities that facilitate the continuous monitoring of worker behavior in dynamic construction environments [25,26,27,28].

Computer vision technologies have been utilized in construction for various monitoring applications, including safety compliance verification [29,30,31], progress tracking [32,33,34,35,36], and automated productivity analysis [37,38]. For instance, several studies have employed YOLO-based detection systems to identify workers wearing personal protective equipment (PPE) and to monitor compliance with safety regulations [39,40]. Additionally, motion analysis and pose estimation techniques have been integrated into construction site monitoring to evaluate worker posture, fatigue levels, and ergonomic risks [41,42,43]. Other research has explored the automated recognition of construction tasks, enabling productivity assessments based on detected worker actions and movements [44,45].

Despite these advancements, existing object detection models primarily rely on frame-by-frame image processing, which presents challenges in highly dynamic and cluttered construction environments [15,46,47,48]. Workers frequently interact with materials, tools, and other workers, resulting in occlusion scenarios that disrupt tracking continuity [49,50,51]. Addressing these limitations requires more advanced tracking methodologies that can maintain persistent worker identification even in occlusion-heavy environments [52,53,54].

Occlusion is a major challenge in computer vision-based construction monitoring, particularly in masonry work [48,55]. During brick transportation, workers carrying multiple bricks may partially or fully obscure their own bodies [56], preventing conventional object detection models from maintaining continuous tracking [48]. Similarly, as the height of the masonry wall increases, parts of the worker’s body become progressively occluded by the stacked bricks, leading to tracking inconsistencies and data loss in movement analysis [57]. The inability to accurately track workers under these conditions results in gaps in productivity assessments and unreliable performance metrics. For example, in a continuous monitoring situation, if a worker is frequently occluded by construction materials, the detection model may fail to recognize their presence for extended periods [58]. As a result, the system might incorrectly register the worker as inactive, leading to inaccurate productivity measurement results [38,59]. Furthermore, the limitations of existing models can lead to misinterpretations of worker activities, inaccurate performance evaluations, and difficulties in automating construction site monitoring [15,60]. Addressing the occlusion problem is crucial for enhancing the accuracy of automated worker tracking and ensuring reliable productivity evaluations [58,61].

To overcome these challenges, SAM-based unified and robust zero-shot visual tracker with motion-aware instance-level memory (SAMURAI) has emerged as a promising solution [62]. Unlike conventional object detection models that process each frame independently, SAMURAI incorporates motion-aware memory selection mechanisms, enabling objects to be tracked continuously even under occlusion scenarios [62]. By integrating temporal continuity across multiple frames, SAMURAI significantly improves tracking accuracy in highly dynamic environments where objects frequently disappear and reappear due to physical obstructions [62,63]. SAMURAI was developed to address long-term object tracking (LTOT) challenges [62], particularly in scenarios where standard object detection models fail due to occlusion [62]. Unlike YOLO, which relies solely on frame-based detection, SAMURAI leverages previous frame information to maintain object identities, ensuring greater tracking stability [62].

SAMURAI is based on the Segment Anything Model (SAM) and its advanced version, SAM2, incorporating a motion-aware instance-level memory selection mechanism [62]. Unlike conventional object detection models, SAMURAI utilizes SAM2’s segmentation-driven framework to enhance object tracking performance, particularly in occlusion-heavy environments [62]. This approach allows SAMURAI to refine object tracking by integrating motion-aware selection and affinity-based filtering, ensuring persistent identification of occluded objects across frames [62]. Recent studies have demonstrated its potential in long-term tracking tasks, including surveillance and autonomous systems [62], suggesting that its occlusion-resistant capabilities could also be applicable to construction site monitoring.

Despite the increasing adoption of SAMURAI in other industries [62], studies investigating SAMURAI’s application in construction remain limited. To facilitate the adoption of SAMURAI in the construction sector, it is essential to evaluate whether it can provide a robust and reliable alternative to YOLO for real-time construction monitoring. While previous research has extensively explored YOLO-based worker tracking [64,65], no comprehensive study has directly compared the performance of YOLO and SAMURAI in masonry work. Since YOLO remains the most widely used detection model but continues to exhibit weaknesses in persistent worker tracking under occlusion [66,67], a direct comparative analysis with SAMURAI is necessary to determine its viability as an effective solution for construction monitoring challenges.

This study aims to bridge this gap by assessing how SAMURAI performs under occlusion conditions and its potential impact on masonry productivity monitoring. It further evaluates the applicability of occlusion-aware object tracking techniques to improve the accuracy of masonry worker productivity analysis on construction sites. By assessing the strengths and limitations of YOLO and SAMURAI in occlusion-heavy environments, this research explores methods to enhance the reliability of productivity assessments in masonry work. The comparative analysis of these models also supports the selection of appropriate monitoring techniques based on real-world construction site requirements. The findings may also support advancements in real-time construction productivity monitoring and facilitate data-driven task analysis, ultimately improving the efficiency and accuracy of worker tracking in dynamic construction environments.

2. Methodology

2.1. Research Framework

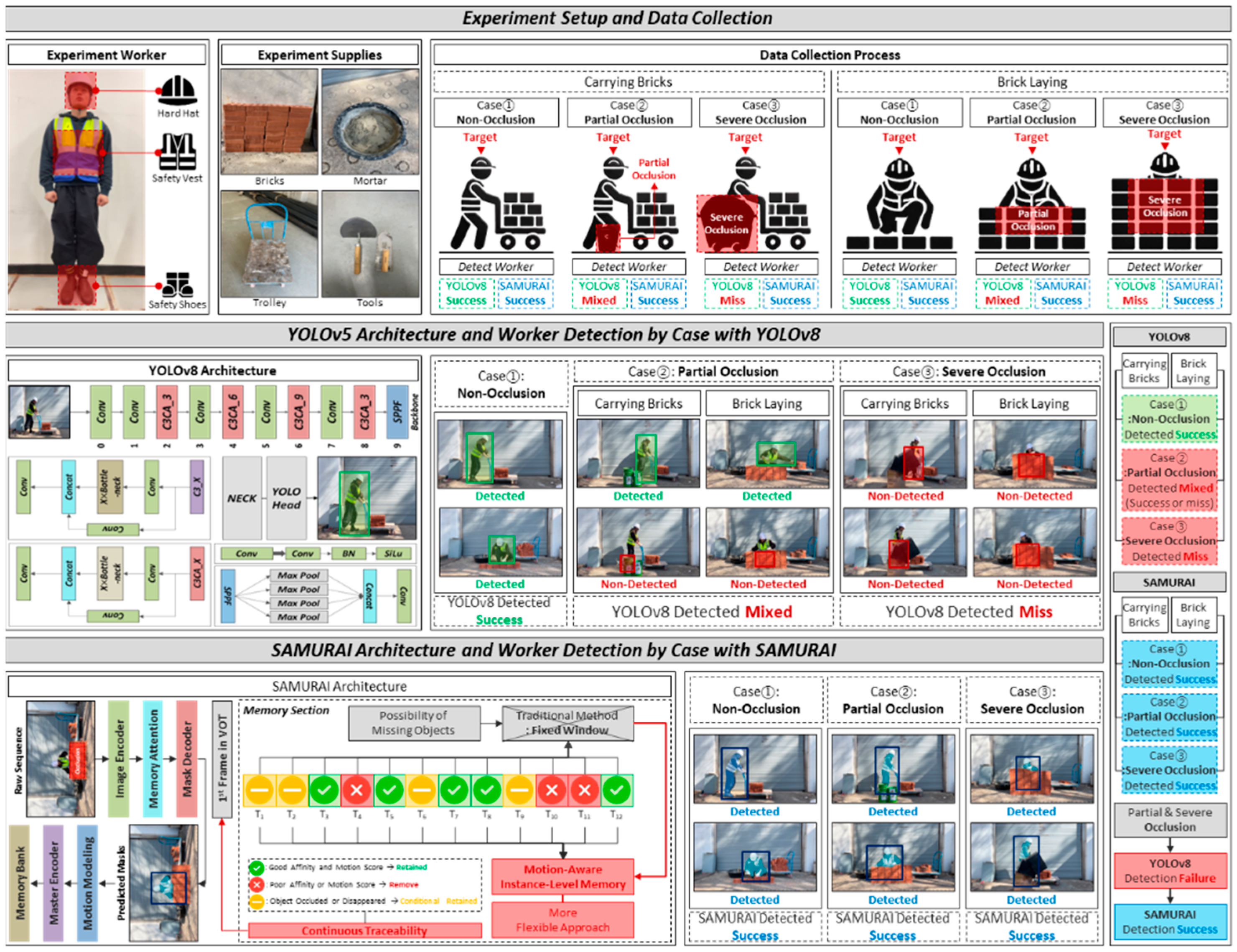

This section presents the proposed framework that integrates experimental setup and data collection and compares YOLOv8 and SAMURAI. The primary objective is to evaluate the applicability of these models for detecting construction workers during masonry tasks, focusing on their performance under different levels of occlusion.

Figure 1 illustrates the overall process. YOLOv8 and SAMURAI are applied to both brick transportation and bricklaying tasks, using datasets with labeled worker images to ensure accurate detection. Video data are collected using a single camera positioned at a fixed location, capturing worker movements and occlusion scenarios. The framework categorizes occlusion into three levels: (1) non-occlusion (no obstruction), (2) partial occlusion (partial obstruction by materials or tools), and (3) severe occlusion (significant obstruction of the worker’s body).

While YOLOv8 processes each frame independently, SAMURAI maintains temporal continuity across frames, enabling more consistent detection even when workers are partially or fully obscured. The detailed structure and methodology of each algorithm will be explained in subsequent sections.

2.2. Experiment Design and Data Collection

This section describes the experimental design and data collection process to evaluate the performance of YOLOv8 and SAMURAI in brick transportation and brick laying tasks. The experiment was conducted in an outdoor environment simulating a construction site.

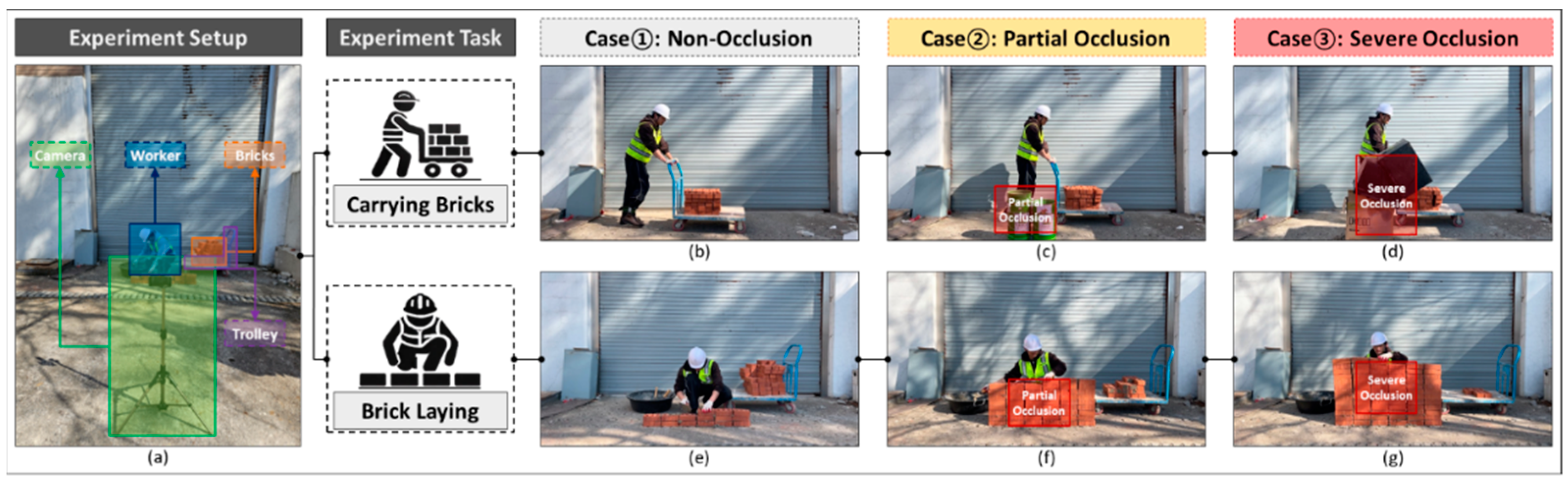

Figure 2a illustrates the experimental setup, showing the fixed camera position and the relative locations of the worker and bricks. A single fixed camera was used to capture two tasks performed by the worker: (1) brick transportation and (2) brick laying. The camera was positioned to clearly capture the worker from the front.

In the brick transportation task, the worker pushed a trolley loaded with bricks, and the level of occlusion was classified based on the degree to which the worker’s body was obstructed by an obstacle.

Figure 2b–d visually represent these occlusion levels: Case ① (non-occlusion) occurred when the worker’s entire body was visible without any obstruction; Case ② (partial occlusion) occurred when the obstacle obscured the worker below the knees; and Case ③ (severe occlusion) occurred when the obstacle obscured the worker from the waist up, significantly limiting visibility. The intersection over union (IoU) values for each occlusion level in the brick transportation task were as follows: Case ① (non-occlusion) ranged between 0.85 and 0.92, with an average IoU of 0.89; Case ② (partial occlusion) ranged between 0.67 and 0.75, with an average IoU of 0.71; and Case ③ (severe occlusion) exhibited the lowest values, ranging from 0.45 to 0.58, with an average IoU of 0.52.

In the brick laying task, the worker crouched while stacking bricks, adding one layer at a time until reaching a total of 13 layers. The levels of occlusion were classified based on the degree to which the worker’s body was obscured by the stacked bricks. As depicted in Figure 2e–g, Case ① (non-occlusion) occurred when the worker’s feet were visible (up to two to three layers of bricks); Case ② (partial occlusion) occurred when only the worker’s upper body was visible (up to seven to eight layers); and Case ③ (severe occlusion) occurred when only the worker’s face was visible (up to twelve layers). For the brick laying task, IoU values varied depending on the occlusion level: Case ① (non-occlusion) had IoU values between 0.82 and 0.90, with an average of 0.86; Case ② (partial occlusion) had values between 0.55 and 0.68, averaging 0.61; and Case ③ (severe occlusion) demonstrated the lowest IoU values, ranging from 0.38 to 0.50, with an average of 0.44.
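The IoU values reported for each occlusion level follow the standard definition: the area of overlap between two bounding boxes divided by the area of their union. The sketch below is a minimal illustration of that definition, assuming axis-aligned boxes given as (x1, y1, x2, y2) pixel coordinates. The box format, the function name, and the example coordinates are assumptions made for illustration; the text does not specify which pair of boxes was compared in the study.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical boxes: a full-body annotation and a box covering only the upper half.
full_body = (100, 50, 200, 350)
upper_half = (100, 50, 200, 200)
print(iou(full_body, upper_half))  # half of the full-body area overlaps
```

With these hypothetical boxes, the visible region covers half of the annotated body, so the IoU falls to 0.5, which is in the range the text associates with severe occlusion.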

For each task, videos were recorded until the worker reached each occlusion level. The collected video frames were annotated with bounding boxes around the worker, and the resulting dataset was used to evaluate the performance of YOLOv8 and SAMURAI, as described in the subsequent sections.

2.3. YOLOv8 and SAMURAI Architecture

This section describes the architectures of YOLOv8 and SAMURAI, outlining their core components and operational principles. YOLOv8 processes each frame independently, enabling rapid object detection [68,69]. In contrast, SAMURAI incorporates temporal dependencies through memory-based learning, facilitating continuous tracking across frames [62]. The subsequent subsections detail the structural elements of each architecture and explain the applied detection and tracking methodologies used in this study. Additionally, the differences between YOLOv8 and SAMURAI in object detection and tracking are outlined to provide a clear understanding of their respective mechanisms and implementation in this research.

2.3.1. YOLOv8 Architecture

YOLOv8 is a deep learning-based object detection model that builds upon the efficiency and accuracy of its predecessors in the YOLO algorithm [70,71]. It maintains the fundamental one-stage detection pipeline, allowing for rapid object localization and classification within a single forward pass [72,73]. YOLOv8 consists of three primary components: the backbone, neck, and head, as illustrated in Figure 3 [74,75].

Compared to YOLOv5, YOLOv8 introduces several architectural improvements. It replaces the C3 modules of YOLOv5 with C2f modules (Cross-Stage Partial connections with two convolutional layers and fusion), which improve feature fusion while reducing the number of parameters. Additionally, YOLOv8 removes the anchor box mechanism (anchor-free), simplifying the detection head and enabling more flexible object localization [76]. The C2f modules and the use of the Sigmoid Linear Unit (SiLU) activation function in place of the Leaky Rectified Linear Unit (Leaky ReLU) further contribute to better convergence and detection precision [76].

The backbone is responsible for extracting low-level to high-level spatial features from input images using convolutional layers. It employs Cross-Stage Partial (CSP) connections to enhance gradient flow and reduce computational redundancy while maintaining detection performance. The extracted features are then forwarded to the neck, which utilizes Spatial Pyramid Pooling-Fast (SPPF) layers to aggregate multi-scale features. This enhances the model’s ability to detect objects of varying sizes, making it particularly useful in dynamic environments. Finally, the YOLO head performs object classification and bounding box regression, producing the final detection results.

For this study, YOLOv8 was applied to detect construction workers performing brick transportation and bricklaying tasks. The model was trained on an annotated dataset consisting of worker images captured in an outdoor environment. The detection performance was evaluated across different occlusion conditions, ensuring a comprehensive analysis of the model’s effectiveness in real-world construction scenarios.

Figure 3 illustrates the improved architecture of YOLOv8, including updated backbone modules and anchor-free detection head, which distinguish it from previous YOLO versions and contribute to its enhanced performance.

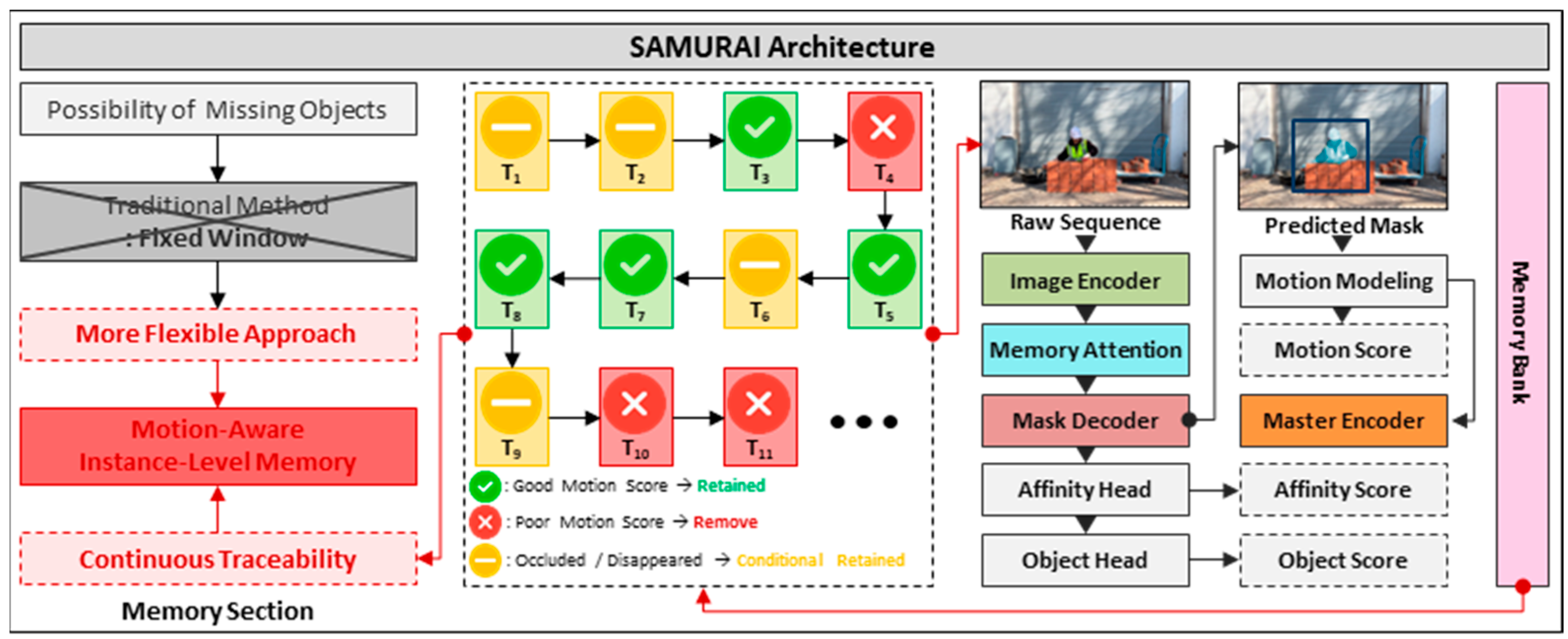

2.3.2. SAMURAI Architecture

SAMURAI is an object tracking architecture that utilizes temporal relationships between consecutive frames to track object locations [

62]. Unlike single-frame-based detection models, SAMURAI incorporates a memory-based approach to maintain object information over time [

62]. This study applies SAMURAI to analyze worker movements in a construction environment and assess detection performance under different occlusion conditions.

The SAMURAI architecture consists of key components, including an image encoder, memory attention, mask decoder, and additional modules for processing and evaluation. The image encoder processes input frames and extracts feature maps, converting visual data into structured representations. These extracted features are then used to detect objects and analyze spatial relationships. The memory attention module utilizes stored object information from previous frames to determine object locations in the current frame, facilitating tracking across sequential frames.

The mask decoder reconstructs object locations using the memory-attended information. Additionally, the motion modeling module accounts for object movement characteristics such as displacement and velocity. The master encoder further refines detection results, and two auxiliary components, the affinity head and object head, assess the confidence of detected objects based on the consistency of their features across frames.

SAMURAI includes a motion-aware memory selection mechanism, which prioritizes and utilizes relevant temporal features for each object across sequential frames. This mechanism enables continuous object tracking by dynamically selecting memory features that reflect motion patterns, even under conditions involving occlusion or scene changes.
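The idea of selecting only reliable past frames as memory can be sketched as a simple filter. The example below is an illustrative simplification, not the SAMURAI implementation: it assumes each past frame carries three scalar scores (an affinity score, an object score, and a motion score), and it keeps the most recent frames whose scores all exceed a threshold. The class name, field names, threshold values, and memory size are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FrameMemory:
    frame_idx: int
    affinity: float    # hypothetical mask-quality score (affinity head)
    objectness: float  # hypothetical object-presence score (object head)
    motion: float      # hypothetical agreement with the motion model


def select_memory(history, current_idx, max_frames=3,
                  min_affinity=0.5, min_objectness=0.5, min_motion=0.5):
    """Return up to max_frames most recent past frames that pass every threshold."""
    selected = []
    # Walk backwards in time so that the newest reliable frames are preferred.
    for mem in sorted(history, key=lambda m: m.frame_idx, reverse=True):
        if mem.frame_idx >= current_idx:
            continue  # only frames before the current one can serve as memory
        reliable = (mem.affinity > min_affinity
                    and mem.objectness > min_objectness
                    and mem.motion > min_motion)
        if reliable:
            selected.append(mem)
        if len(selected) == max_frames:
            break
    return selected


# Frame 3 is heavily occluded (low affinity), so it is skipped as memory.
history = [
    FrameMemory(0, 0.9, 0.9, 0.9),
    FrameMemory(1, 0.9, 0.8, 0.9),
    FrameMemory(2, 0.8, 0.9, 0.7),
    FrameMemory(3, 0.2, 0.6, 0.4),
    FrameMemory(4, 0.9, 0.9, 0.8),
]
print([m.frame_idx for m in select_memory(history, current_idx=5)])
```

Skipping the occluded frame means the tracker conditions on earlier, cleaner observations of the worker rather than on a frame dominated by the obstacle.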

In this study, SAMURAI is used to track construction workers performing brick transportation and bricklaying tasks. The collected data are processed through the memory module, allowing for the verification of detection consistency across frames. The detailed evaluation of detection performance will be discussed in subsequent sections.

Figure 4 illustrates the SAMURAI architecture implemented in this study.

2.3.3. Differences in Detection Between YOLOv8 and SAMURAI

This section presents the structural and operational differences between YOLOv8 and SAMURAI, focusing on their distinct detection methodologies. The primary difference between these models lies in how they process frames and track objects over time. YOLOv8 functions as a frame-based object detection model, where each image is analyzed independently without referencing previous frames. This approach involves extracting features directly from each frame using a convolutional neural network (CNN) and performing object localization and classification within that frame. As a result, YOLOv8 provides detection results based only on the current frame without incorporating temporal dependencies.

On the other hand, SAMURAI utilizes a memory-based tracking mechanism that references object information from previous frames. By integrating memory attention, SAMURAI can refine detection results based on past observations, allowing for frame-to-frame consistency in tracking. Additionally, motion modeling is employed to estimate object movement trajectories, leveraging historical motion patterns to complement detection results.
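The practical effect of this difference can be illustrated with a toy example. The sketch below is not SAMURAI's algorithm, which relies on learned memory attention over segmentation features. It only contrasts a memoryless detector with a tracker that carries the last known position forward at constant velocity during short gaps. Positions are hypothetical (x, y) centers, `None` marks a frame in which the detector found nothing, and `max_gap` is an assumed parameter.

```python
def frame_by_frame(detections):
    """A memoryless detector reports exactly what each individual frame yields."""
    return list(detections)


def with_memory(detections, max_gap=8):
    """Bridge up to max_gap consecutive misses with a constant-velocity estimate."""
    out = []
    last, velocity, missed = None, (0.0, 0.0), 0
    for det in detections:
        if det is not None:
            # Update velocity only from two consecutive real detections.
            if last is not None and missed == 0:
                velocity = (det[0] - last[0], det[1] - last[1])
            last, missed = det, 0
            out.append(det)
        elif last is not None and missed < max_gap:
            missed += 1
            last = (last[0] + velocity[0], last[1] + velocity[1])
            out.append(last)
        else:
            out.append(None)  # nothing to extrapolate from, or the gap is too long
    return out


# Hypothetical track: the worker is hidden in frames 3 and 4.
track = [(0, 0), (2, 0), (4, 0), None, None, (10, 0)]
print(frame_by_frame(track))
print(with_memory(track))
```

The memoryless output keeps the two gaps, whereas the memory-based output fills them with extrapolated positions, which is the kind of frame-to-frame consistency the text attributes to memory-based tracking.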

Table 1 summarizes the key differences between YOLOv8 and SAMURAI in object detection and data processing.

2.4. Performance Comparison Between YOLOv8 and SAMURAI

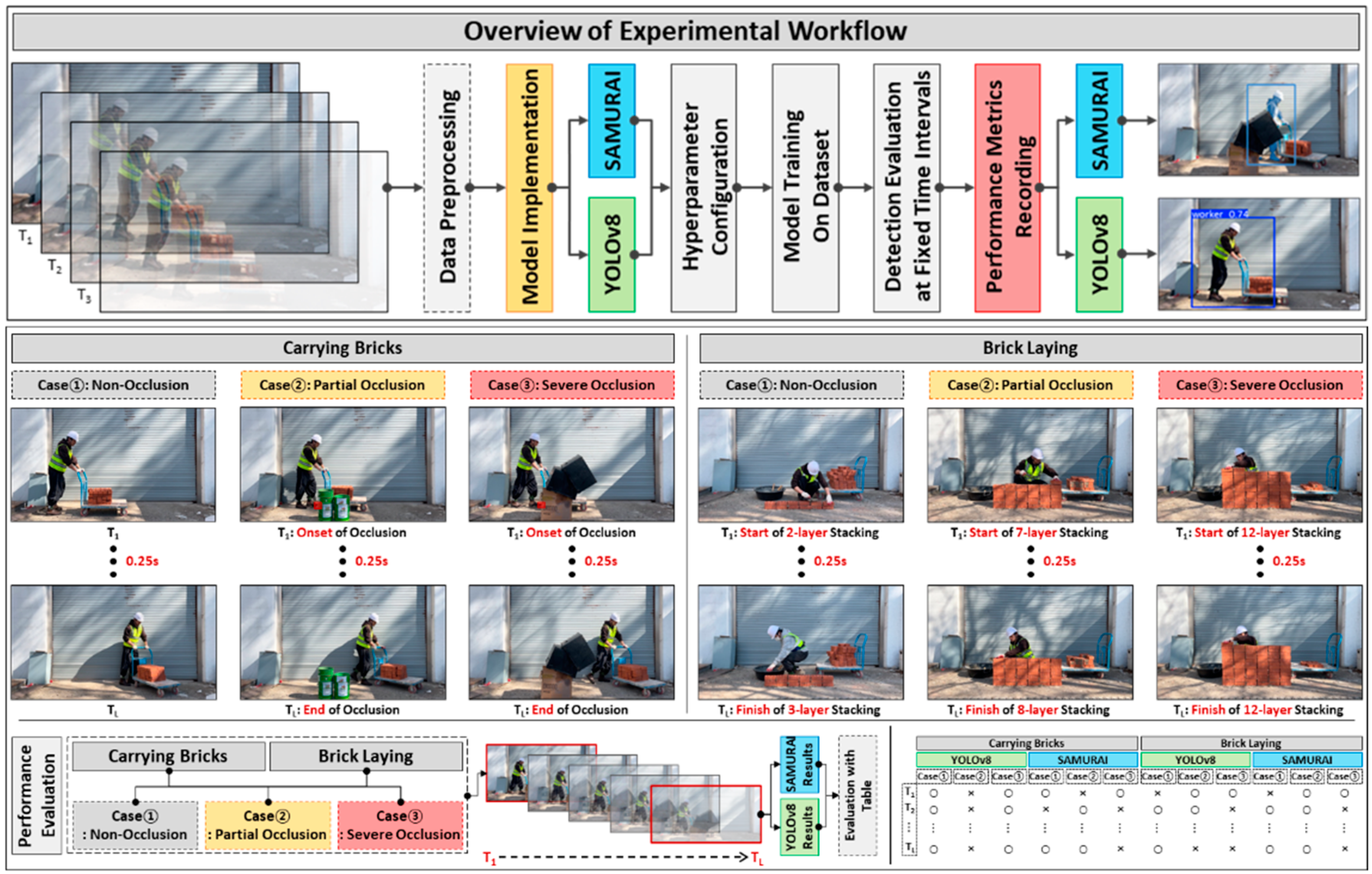

This section presents the methodology for evaluating the detection performance of YOLOv8 and SAMURAI under varying occlusion conditions. The evaluation process systematically segments video sequences into fixed temporal intervals and measures detection accuracy at each time-step. To ensure structured analysis, detection results are compiled in tabular format, allowing for a comparative assessment based on task type, applied algorithm, occlusion case, and time-based evaluation intervals.

Figure 5 visually represents the evaluation segments for each occlusion scenario, including key time points (T₁ to T_L) and occlusion severity levels. Additionally, it illustrates the overall experimental workflow, detailing the processes of data preprocessing, model implementation (YOLOv8 and SAMURAI), and systematic performance evaluation across different tasks and conditions. T₁ denotes the initial evaluation time-step for each occlusion scenario, while T_L (last time-step) represents the final evaluation time point.

For the brick transportation task, performance evaluation encompasses Case ① (non-occlusion), Case ② (partial occlusion), and Case ③ (severe occlusion). The evaluation period starts from the moment the worker enters the occlusion region until they are fully visible again. In all cases, a total duration of 15 s is analyzed, with detection results recorded at 0.25 s intervals, yielding 60 evaluation time points (T1 to T60). In Case ①, the worker remains fully visible without obstruction, providing a baseline for detection performance. In Case ②, the worker becomes partially occluded as an obstacle obstructs the lower body, and evaluation continues until visibility is fully restored. In Case ③, severe occlusion occurs when the worker’s upper body and head are significantly obscured by the obstacle, posing additional detection challenges. By dividing the evaluation into 0.25 s intervals, the study assesses how YOLOv8 and SAMURAI perform during the occlusion period.

For the brick laying task, performance evaluation is based on occlusion progression due to the increasing height of stacked bricks rather than worker movement. The non-occlusion condition (Case ①) is defined as the stage when the worker remains fully visible, corresponding to brick layers up to the second and third rows. Partial occlusion (Case ②) is observed when only the upper body of the worker is visible, occurring from the seventh to eighth brick layers. Severe occlusion (Case ③) begins when only the worker’s face or part of the upper body is visible, occurring from the twelfth brick layer onward. Since stacking two brick layers takes approximately one minute, the evaluation follows the same segmentation approach as the brick transportation task, recording detection results at 0.25 s intervals over 240 time-steps (T1 to T240).
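The number of evaluation time-steps follows directly from the clip duration and the sampling interval: 15 s / 0.25 s = 60 for brick transportation and 60 s / 0.25 s = 240 for brick laying. The sketch below shows one way such a schedule could be mapped to video frame indices. The frame rate of 30 FPS and the convention that T1 falls at the start of the clip are assumptions; neither is stated in the text.

```python
def evaluation_schedule(duration_s, interval_s=0.25, fps=30.0):
    """Return (time-step number, frame index) pairs for a clip of duration_s seconds."""
    n_steps = round(duration_s / interval_s)
    schedule = []
    for k in range(1, n_steps + 1):
        t = (k - 1) * interval_s          # assumed: T1 is sampled at t = 0 s
        schedule.append((k, int(t * fps)))  # nearest earlier frame at the assumed fps
    return schedule


transport = evaluation_schedule(15)  # brick transportation clip
laying = evaluation_schedule(60)     # brick laying clip
print(len(transport), len(laying))
print(transport[:3])
```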

By structuring the evaluation in this manner, detection trends can be analyzed across time, and the comparative performance of YOLOv8 and SAMURAI under varying occlusion levels can be assessed. The subsequent section discusses the experimental results and provides a detailed performance comparison based on the collected data.

All experimental evaluations were conducted using Python 3.10 and PyTorch 2.0 on a workstation equipped with an NVIDIA RTX 3090 GPU and Intel Core i9-12900K CPU. Both YOLOv8 and SAMURAI were implemented in the same software environment to ensure consistency in detection performance measurement across time-steps. Detection results were recorded and processed systematically at each predefined interval, supporting accurate and reproducible evaluation.

3. Experimental Evaluation

This section presents the evaluation results of YOLOv8 and SAMURAI for worker detection in brick transportation and brick laying tasks. The analysis focuses on detection performance across different occlusion levels, comparing the two models based on their ability to identify workers under varying degrees of visibility. The evaluation follows a structured time-based analysis, ensuring a comprehensive comparison of detection trends.

To systematically compare the detection performance, the evaluation framework categorizes the results into three occlusion cases: Case ① (non-occlusion), Case ② (partial occlusion), and Case ③ (severe occlusion). For each case, detection accuracy is recorded at predefined time intervals to analyze temporal detection consistency. The results are presented in a tabular format, with additional visual representations highlighting key detection outcomes at different occlusion stages.

The results are divided into two sections based on experimental tasks. Section 3.1 analyzes detection performance in brick transportation, where occlusion occurs as the worker moves behind obstacles, such as a trolley loaded with bricks. Section 3.2 evaluates detection performance in brick laying, where occlusion progressively increases as more brick layers are stacked in front of the worker. Each subsection details the detection trends observed for YOLOv8 and SAMURAI, offering insights into their object detection consistency across time and occlusion conditions. A final summary in Section 3.3 consolidates the findings and provides a comparative analysis of the detection effectiveness of both models.

3.1. Detection Performance in Brick Transportation

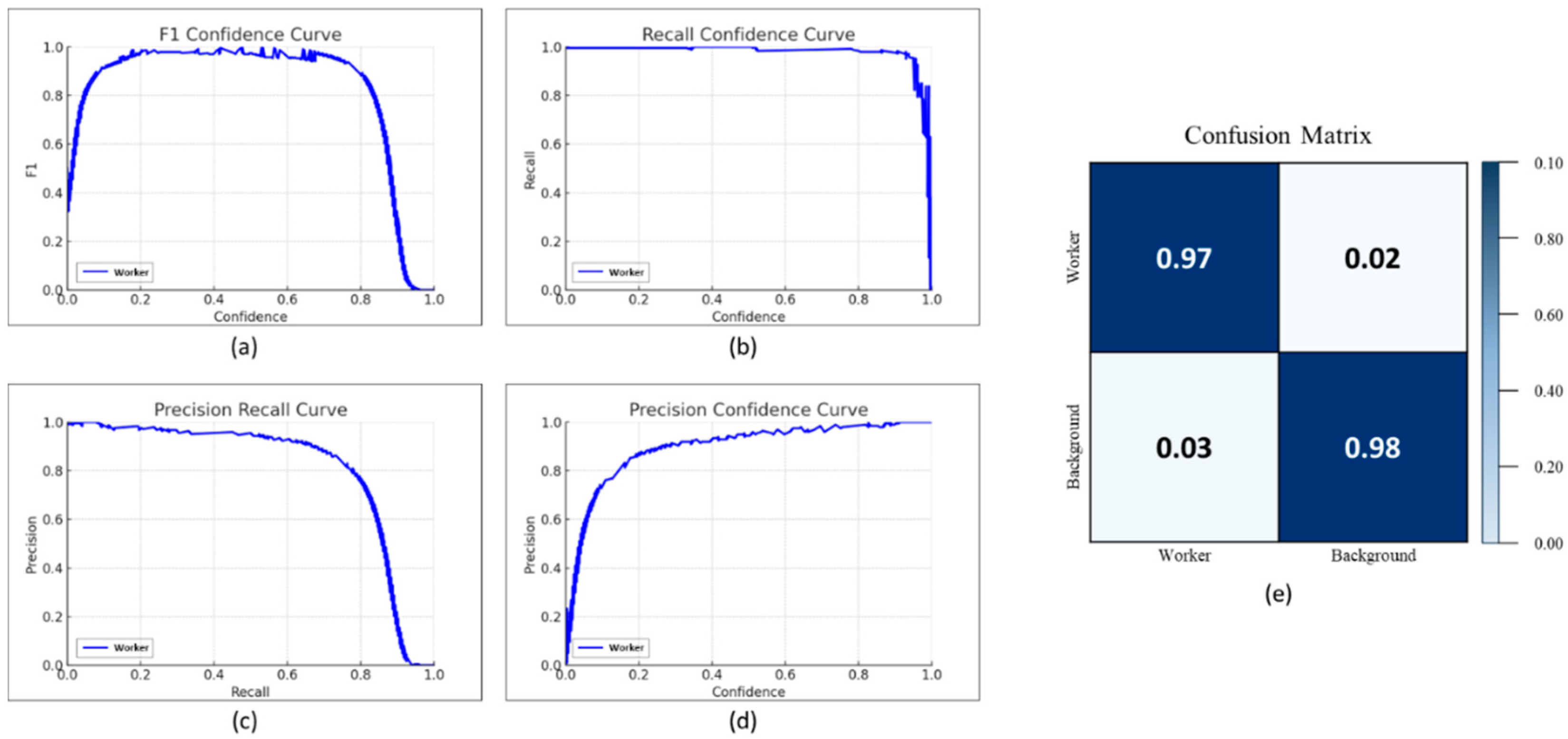

The detection performance of YOLOv8 and SAMURAI was evaluated for the brick transportation task by analyzing detection results under three occlusion conditions: non-occlusion, partial occlusion, and severe occlusion. The evaluation process involved segmenting video sequences into specific time intervals and measuring detection accuracy at each time-step. Performance assessment was conducted using multiple metrics, including the F1–confidence curve, recall–confidence curve, precision–recall curve, precision–confidence curve, and confusion matrix, as shown in Figure 6.

Detection results were analyzed over 60 evaluation time-steps, recorded at 0.25 s intervals. Detection accuracy was determined based on the classification of true positives (TPs), true negatives (TNs), false positives (FPs), and false negatives (FNs). The accuracy for each occlusion case was computed using the following equation:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Based on the values in the confusion matrix, the accuracy was calculated to be 0.975.
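As a worked illustration, accuracy defined from confusion-matrix counts is the share of correct decisions, (TP + TN) / (TP + TN + FP + FN). The counts in the sketch below are hypothetical and are not the study's confusion matrix; they only show how the four quantities combine.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correct decisions among all decisions."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return (tp + tn) / total


# Hypothetical counts chosen only for illustration.
print(accuracy(tp=90, tn=5, fp=3, fn=2))  # 95 correct decisions out of 100
```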

Each detection event was recorded at 0.25 s intervals, and detection success was systematically analyzed at each time-step. The evaluation period for each case was set to 15 s, resulting in 60 detection time-steps from T

1 to T

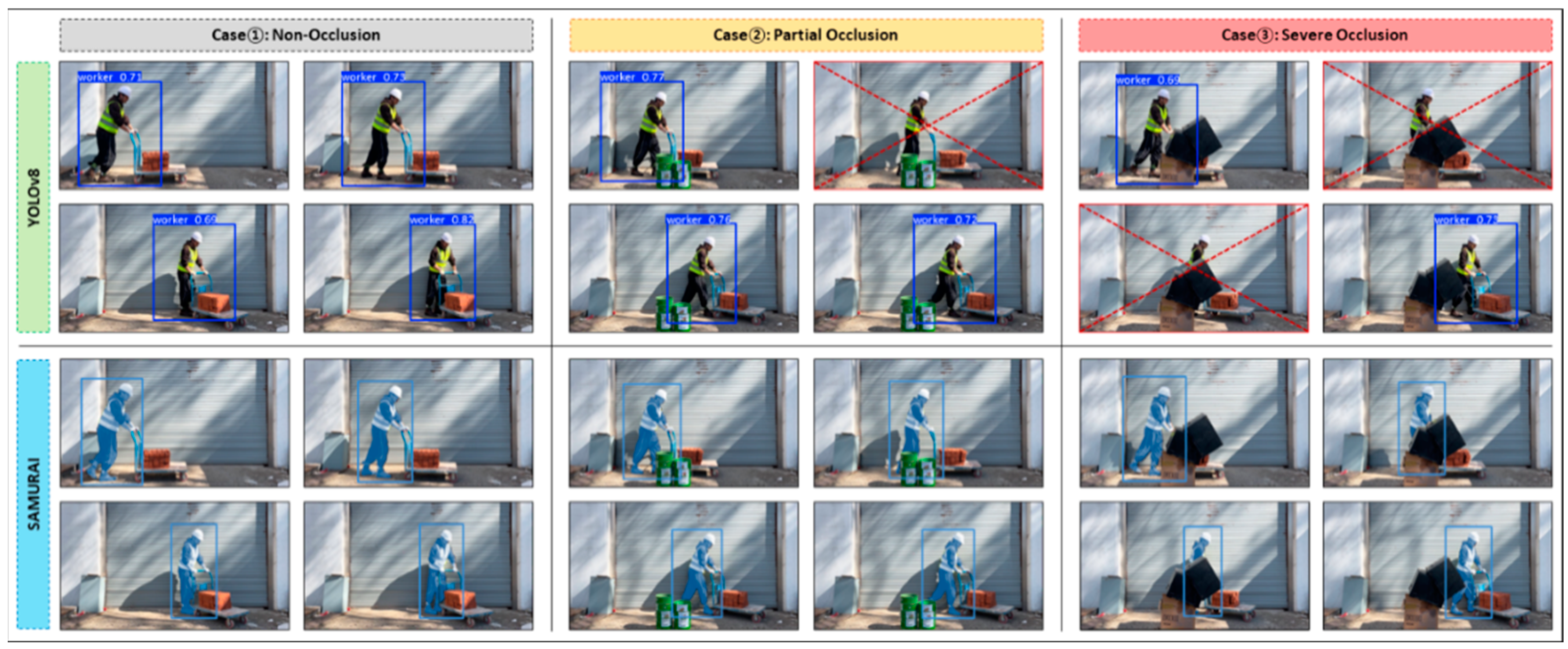

60. The dataset included sample detection images for visualization, with four representative detections per algorithm for each occlusion level, totaling twenty-four images, as shown in

Figure 7.

Detection accuracy varied across occlusion levels and algorithms. The time-series detection status for YOLOv8 and SAMURAI in brick transportation is summarized in Table 2, which provides a structured comparison of detection performance across different occlusion conditions, including accuracy values. The non-occlusion case was evaluated separately for YOLOv8 and SAMURAI. For the partial occlusion case, the analysis focused on frames where the worker was partially obstructed by an obstacle, continuing until the obstruction was fully removed. The severe occlusion case was evaluated for the period when the worker’s upper body was significantly obstructed, affecting visibility.

Table 2 provides a detailed time-step-based evaluation, outlining detection success at each recorded time-step, including accuracy values. The overall performance was computed using the following equation:

$$\text{Accuracy}\,(\%) = \frac{N_{\text{detected}}}{N_{\text{total}}} \times 100$$

where $N_{\text{detected}}$ is the number of time-steps in which the worker was detected and $N_{\text{total}}$ is the number of evaluated time-steps (60 for this task).

YOLOv8 detected 56 out of 60 time-steps in the non-occlusion case, achieving an accuracy of 93.33%. In the partial occlusion case, it detected 49 out of 60 time-steps, achieving an accuracy of 81.67%. In the severe occlusion case, it detected 41 out of 60 time-steps, resulting in an accuracy of 68.33%. Similarly, SAMURAI detected 59 out of 60 time-steps in the non-occlusion case, achieving an accuracy of 98.33%. In the partial occlusion case, it detected 57 out of 60 time-steps, achieving an accuracy of 95.00%. In the severe occlusion case, it detected 56 out of 60 time-steps, resulting in an accuracy of 93.33%.
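The reported percentages can be reproduced from the detection counts by dividing the detected time-steps by the 60 evaluated time-steps. The short sketch below does this for the brick transportation task; the dictionary layout and function name are illustrative choices, while the counts are those stated above.

```python
TOTAL_STEPS = 60  # 15 s sampled every 0.25 s

# Detected time-steps per occlusion case in brick transportation.
detected = {
    "YOLOv8": {"non-occlusion": 56, "partial": 49, "severe": 41},
    "SAMURAI": {"non-occlusion": 59, "partial": 57, "severe": 56},
}


def accuracy_pct(n_detected, n_total):
    """Share of evaluated time-steps in which the worker was detected, in percent."""
    return 100.0 * n_detected / n_total


for model, cases in detected.items():
    for case, count in cases.items():
        print(f"{model:8s} {case:14s} {accuracy_pct(count, TOTAL_STEPS):.2f}%")
```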

3.2. Detection Performance in Brick Laying

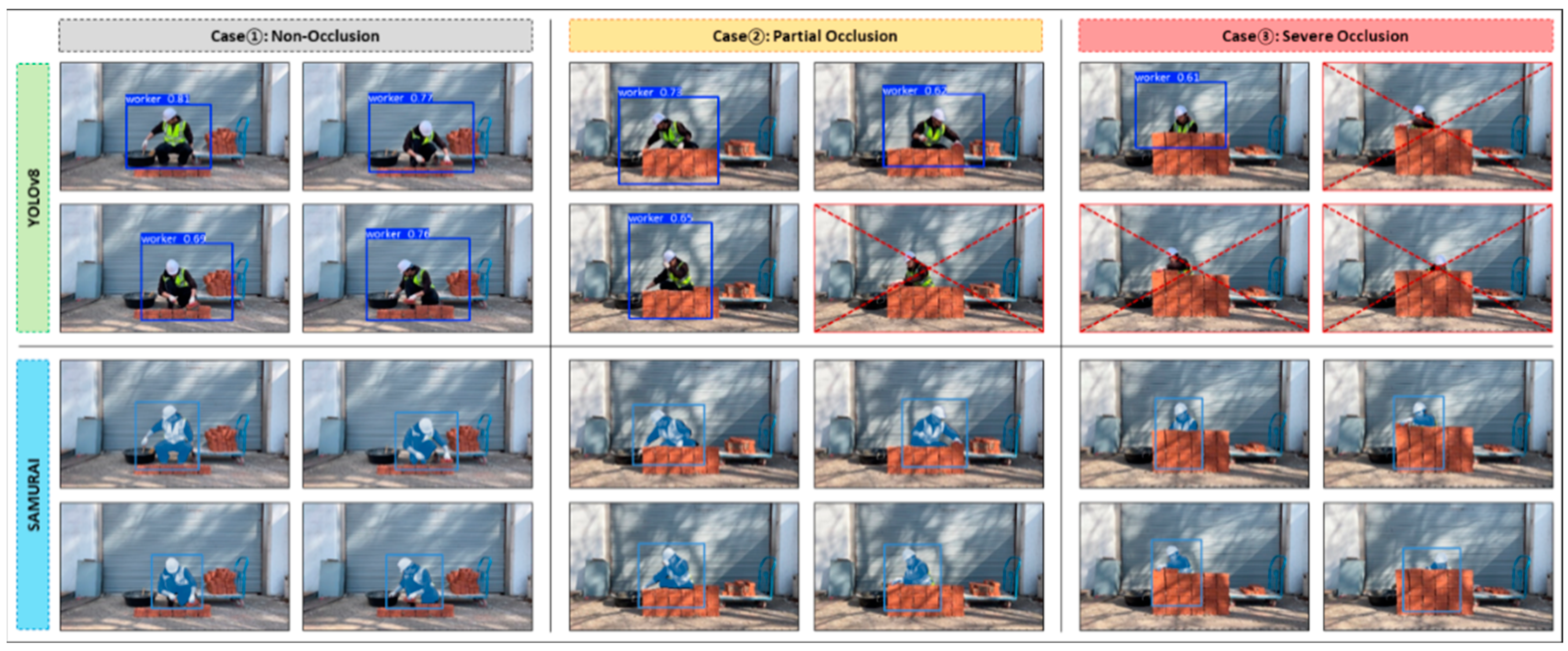

The detection performance of YOLOv8 and SAMURAI was also evaluated for the brick laying task. Unlike the brick transportation task, which used 60 evaluation time-steps, the brick laying task was analyzed over 240 time-steps. As in the previous analysis, three occlusion conditions were considered: non-occlusion, partial occlusion, and severe occlusion.

Figure 8 presents representative detection cases for each occlusion level of YOLOv8 and SAMURAI, including four cases per algorithm and per occlusion condition, totaling 24 detection examples.

Table 3 provides a structured comparison of detection performance across different occlusion conditions, summarizing the number of correctly detected time-steps for each algorithm. As in Section 3.1, detection accuracy was calculated using the previously introduced formula.

Table 3 provides a detailed breakdown of detection success at each recorded time-step for the brick laying task. YOLOv8 detected 223 out of 240 time-steps in the non-occlusion case, achieving an accuracy of 92.92%. In the partial occlusion case, it detected 185 out of 240 time-steps, achieving an accuracy of 77.08%. In the severe occlusion case, it detected 117 out of 240 time-steps, resulting in an accuracy of 48.75%. Similarly, SAMURAI detected 236 out of 240 time-steps in the non-occlusion case, achieving an accuracy of 98.33%. In the partial occlusion case, it detected 226 out of 240 time-steps, achieving an accuracy of 94.17%. In the severe occlusion case, it detected 222 out of 240 time-steps, resulting in an accuracy of 92.50%.
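One way to read these figures is as the loss in accuracy between the non-occlusion and severe occlusion cases. The sketch below computes that drop in percentage points from the brick laying counts stated above; the variable and function names are illustrative.

```python
LAYING_STEPS = 240  # 60 s sampled every 0.25 s

# (non-occlusion, severe occlusion) detected time-steps in brick laying.
counts = {"YOLOv8": (223, 117), "SAMURAI": (236, 222)}


def accuracy_drop_points(n_clear, n_severe, n_total=LAYING_STEPS):
    """Accuracy lost between Case 1 and Case 3, in percentage points."""
    return 100.0 * (n_clear - n_severe) / n_total


for model, (n_clear, n_severe) in counts.items():
    print(f"{model}: {accuracy_drop_points(n_clear, n_severe):.2f} points")
```

Under severe occlusion, YOLOv8 loses about 44 percentage points relative to its non-occlusion accuracy, while SAMURAI loses about 6.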

3.3. Comparison of the Detection Accuracy of YOLOv8 and SAMURAI

Section 3.1 and Section 3.2 presented the detection performance of YOLOv8 and SAMURAI in two different construction tasks: brick transportation and brick laying. Detection accuracy varied across occlusion conditions and tasks, with YOLOv8 and SAMURAI demonstrating different levels of robustness under various occlusion scenarios. To provide a direct comparison, the detection accuracy of each case was computed as the ratio of correctly detected time-steps to the total number of time-steps across both tasks.

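The total number of time-steps used for evaluation was 300, derived from 60 time-steps in the brick transportation task and 240 time-steps in the brick laying task. The final accuracy comparison was computed using the following equation:

$$\text{Accuracy}\,(\%) = \frac{N_{\text{detected}}^{\text{transport}} + N_{\text{detected}}^{\text{laying}}}{N_{\text{total}}^{\text{transport}} + N_{\text{total}}^{\text{laying}}} \times 100$$

where $N_{\text{detected}}$ is the number of time-steps in which the worker was detected in each task, and the denominator equals 60 + 240 = 300.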

Table 4 summarizes the comparative detection accuracy of YOLOv8 and SAMURAI for each occlusion condition across both tasks.

The results in Table 4 indicate that detection accuracy was highest in Case ① (non-occlusion), followed by Case ② (partial occlusion), and lowest in Case ③ (severe occlusion). Additionally, SAMURAI consistently achieved higher detection accuracy than YOLOv8 across all occlusion conditions. These findings highlight variations in detection robustness depending on the occlusion level and the nature of the construction task. Further analysis of these results will be discussed in subsequent sections.
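The combined accuracy for each case can be recomputed from the per-task counts reported in Sections 3.1 and 3.2 by pooling detected time-steps over the 300 evaluated time-steps. The sketch below performs this pooling; the data layout and function name are illustrative, and the counts are those given in the text.

```python
TRANSPORT_STEPS, LAYING_STEPS = 60, 240

# (brick transportation, brick laying) detected time-steps per occlusion case.
detected = {
    "YOLOv8": {"non-occlusion": (56, 223), "partial": (49, 185), "severe": (41, 117)},
    "SAMURAI": {"non-occlusion": (59, 236), "partial": (57, 226), "severe": (56, 222)},
}


def combined_accuracy_pct(n_transport, n_laying):
    """Pooled accuracy over both tasks, in percent."""
    return 100.0 * (n_transport + n_laying) / (TRANSPORT_STEPS + LAYING_STEPS)


for model, cases in detected.items():
    for case, (n_t, n_l) in cases.items():
        print(f"{model:8s} {case:14s} {combined_accuracy_pct(n_t, n_l):.2f}%")
```

Because the brick laying task contributes 240 of the 300 time-steps, the pooled values are weighted toward that task.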

4. Discussion

This section analyzes the detection performance of YOLOv8 and SAMURAI across different occlusion levels and construction tasks. The impact of occlusion severity on detection accuracy is examined, along with differences between brick transportation and brick laying tasks. Additionally, this section discusses how detection speed and accuracy affect each other, explaining the advantages and disadvantages of each model. The practical applications and limitations of YOLOv8 and SAMURAI are evaluated, and potential improvements, such as hybrid detection models, are proposed for future research.

4.1. Analysis of Detection Performance

The detection performance of YOLOv8 and SAMURAI was assessed across different occlusion conditions and construction tasks, focusing on accuracy, processing speed, and computational efficiency. The evaluation results highlight the strengths and limitations of each model in various scenarios.

Both models demonstrated high detection accuracy under non-occlusion conditions (Case ①). However, as occlusion increased, YOLOv8’s performance declined significantly. In partial occlusion (Case ②), YOLOv8 showed reduced accuracy compared to SAMURAI, and in severe occlusion (Case ③), its accuracy dropped to approximately 52.67%. In contrast, SAMURAI maintained a relatively high accuracy of 92.67%, indicating that its memory-based tracking mechanism helps maintain consistent detection results even when objects are occluded for extended periods.

The impact of occlusion also varied by task type. In the brick transportation task, which involved 15 s video clips, both models achieved relatively high accuracy due to the worker’s continuous movement, which periodically exposed them to the camera. However, in the brick laying task, which used 60 s video clips, detection accuracy decreased due to the prolonged periods in which workers remained stationary behind stacked bricks. YOLOv8 performed better in dynamic environments where occlusion was temporary, whereas SAMURAI demonstrated greater robustness in scenarios where occlusion persisted over time.

In addition to accuracy, key evaluation metrics such as precision, recall, F1-score, and IoU were analyzed to provide a comprehensive assessment of detection performance. YOLOv8 achieved a precision of 97.00%, recall of 98.00%, and F1-score of 0.97, indicating strong performance under minimal occlusion conditions. Similarly, SAMURAI recorded a precision of 96.50%, recall of 94.80%, and F1-score of 0.95, maintaining high detection quality even under occlusion-heavy scenarios.
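As a reminder of how these metrics relate, the following minimal sketch computes precision and recall from detection counts and the F1-score as their harmonic mean. The function names are illustrative and are not taken from the study's evaluation code; only the YOLOv8 precision and recall values come from the text above.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted detections that are correct."""
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that were detected."""
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    return 2 * p * r / (p + r) if (p + r) > 0 else 0.0


# Reported YOLOv8 values: precision 97.00%, recall 98.00%
yolo_f1 = f1_score(0.97, 0.98)
print(f"{yolo_f1:.2f}")  # 0.97
```

Because the F1-score is a harmonic mean, it stays close to the lower of the two inputs, which is why a model cannot obtain a high F1-score by trading recall for precision or vice versa.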

Beyond accuracy, detection speed is also a crucial factor in evaluating model performance. YOLOv8 processed frames at approximately 28–32 FPS, making it suitable for real-time applications. However, its frame-by-frame detection approach made it more susceptible to occlusion. In contrast, SAMURAI prioritized accuracy over speed, operating at approximately 9–12 FPS. While this slower speed limits its suitability for real-time applications, its ability to maintain high accuracy in occlusion-heavy environments makes it more reliable for scenarios where precise detection is required.

Table 5 provides a summary of detection accuracy, precision, recall, F1-score, processing speed, and video processing times for each task, offering a detailed comparison between the two models.

These findings indicate that YOLOv8 is approximately 2.5 to 3.5 times faster than SAMURAI in terms of frame processing speed. However, SAMURAI achieves about 1.3 times higher accuracy on average across all occlusion conditions. YOLOv8 is well-suited for real-time applications requiring fast inference, while SAMURAI provides superior accuracy, particularly under occlusion, albeit with higher computational demands. The inclusion of precision, recall, and F1-score enhances the reliability of performance evaluation, providing a more comprehensive view of each model’s detection capabilities under various site conditions.
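The speed comparison can be reproduced from the reported frame rates. The sketch below derives the ratio bounds from the 28–32 FPS and 9–12 FPS ranges and gives a rough estimate of clip processing time. The 30 fps source frame rate is an assumption made purely for illustration, so the resulting times are not the measured values in Table 5.

```python
def speed_ratio_range(fast_fps, slow_fps):
    """Return (min, max) of fast/slow for two (low, high) FPS ranges."""
    fast_lo, fast_hi = fast_fps
    slow_lo, slow_hi = slow_fps
    return fast_lo / slow_hi, fast_hi / slow_lo


def processing_time_s(clip_seconds, source_fps, model_fps):
    """Seconds needed to process a clip at a given model throughput."""
    n_frames = clip_seconds * source_fps
    return n_frames / model_fps


YOLO_FPS = (28.0, 32.0)     # reported range for YOLOv8
SAMURAI_FPS = (9.0, 12.0)   # reported range for SAMURAI

lo, hi = speed_ratio_range(YOLO_FPS, SAMURAI_FPS)
print(f"{lo:.2f}x to {hi:.2f}x")  # 2.33x to 3.56x

# Assumed 30 fps source video (hypothetical), 60 s brick laying clip
print(processing_time_s(60, 30, 10.0))  # 180.0
```

The extreme bounds (about 2.3 to 3.6) are slightly wider than, and consistent with, the approximate 2.5 to 3.5 range quoted above. Under the assumed frame rate, a model running below the source frame rate needs longer than the clip duration to process it, which is the practical meaning of limited real-time suitability.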

4.2. Contribution and Limitations

The comparative analysis of YOLOv8 and SAMURAI highlights the trade-off between detection speed and accuracy. YOLOv8 is highly effective in environments with minimal occlusion, ensuring fast detection with lower computational costs. However, its frame-by-frame processing makes it vulnerable to detection failures under occlusion. In contrast, SAMURAI excels in environments with frequent occlusions, maintaining higher accuracy even in challenging detection scenarios. Quantitatively, SAMURAI demonstrates approximately 1.3 times higher average detection accuracy than YOLOv8 across all occlusion conditions, but its processing speed is approximately 2.5–3.5 times lower. These differences suggest that a hybrid detection approach combining YOLOv8’s speed and SAMURAI’s occlusion resilience could serve as a viable alternative for optimizing performance across diverse construction environments. Moreover, by analyzing the strengths and weaknesses of both models, this comparison supports the selection of an appropriate monitoring algorithm and can guide the development of monitoring strategies tailored to the specific requirements of construction site operations.

The implementation of accurate worker tracking systems has significant implications for construction productivity and safety management [58, 59]. Enhanced detection accuracy, particularly in occlusion-heavy environments, enables more reliable productivity measurements, facilitates data-driven performance evaluations, and supports proactive safety monitoring by maintaining continuous worker visibility [15, 58]. For instance, in masonry construction, accurate tracking can help identify inefficient work patterns, optimize material handling procedures, and reduce safety risks associated with blind spots.

From a practical standpoint, integrating SAMURAI into existing monitoring systems may influence project workflows, budget allocation, and system scalability. For example, deploying SAMURAI may require more robust hardware infrastructure, such as high-performance GPUs, and increased maintenance due to its computational demands. These factors can affect cost-efficiency and scalability, especially in large or resource-constrained projects. Nonetheless, in environments where precise worker tracking is critical, the enhanced detection performance may justify the additional investment by improving safety outcomes and operational oversight.

However, this study has several limitations. The experiments were conducted in a controlled environment, meaning that real-world variations in lighting, background complexity, and unpredictable worker movements could introduce variability in detection performance. The focus on masonry work limits the generalizability of the findings to other construction tasks, such as steel frame assembly or excavation, which may present different occlusion patterns and movement dynamics. Additionally, the evaluation focused on specific occlusion cases, which may not fully capture the diversity of real-world occlusion scenarios in construction sites. Moreover, the occlusion levels in this study were defined using IoU thresholds, but IoU alone may not fully capture the dynamic nature and complexity of occlusion in real-world environments. Future studies should consider incorporating additional metrics or qualitative assessments to improve occlusion characterization. Furthermore, SAMURAI’s computational performance under high-resolution inputs or complex scenes was not evaluated in this study. Future research should assess whether SAMURAI can maintain its detection accuracy advantage in high-resolution video streams (e.g., 4K) and evaluate latency across different hardware configurations to determine practical deployment feasibility. From a practical implementation perspective, SAMURAI’s higher computational requirements present challenges for deployment in resource-constrained environments, potentially necessitating more substantial hardware investments compared to YOLOv8-based systems. The trade-off between detection accuracy and computational efficiency must be carefully considered within the context of available infrastructure and monitoring objectives. Therefore, further testing in diverse environmental conditions and various computational setups is necessary to validate the generalizability and practical feasibility of the findings.
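To make the IoU-based limitation concrete, the sketch below computes IoU for two axis-aligned boxes and maps an overlap value to a coarse occlusion label. The box format, the `occlusion_level` helper, and the threshold values passed in the example are hypothetical placeholders, not the definitions used in this study. The example also shows why a single IoU value is a static snapshot: it says nothing about how long the overlap persists.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def occlusion_level(overlap: float, partial_threshold: float,
                    severe_threshold: float) -> str:
    """Map an overlap value to a label; thresholds are supplied by the caller."""
    if overlap >= severe_threshold:
        return "severe"
    if overlap >= partial_threshold:
        return "partial"
    return "none"


worker = (0.0, 0.0, 2.0, 2.0)
brick_stack = (1.0, 1.0, 3.0, 3.0)
overlap = iou(worker, brick_stack)  # 1 / 7
# Placeholder thresholds chosen only for this example
print(occlusion_level(overlap, partial_threshold=0.1, severe_threshold=0.5))  # partial
```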

While YOLOv8 is expected to be advantageous in real-time safety monitoring applications where immediate detection is critical, SAMURAI is anticipated to offer superior accuracy in occlusion-heavy environments where long-term worker tracking is required. Based on the current technological capabilities, YOLOv8 may be more suitable for open construction sites with minimal occlusion and where rapid detection is prioritized, whereas SAMURAI would be better suited for complex, multi-level construction environments with frequent occlusions where tracking continuity is essential. Understanding the strengths and limitations of each model allows for the development of more adaptable detection systems, potentially leveraging a hybrid approach that balances speed and accuracy to optimize detection performance in construction environments.

4.3. Future Research

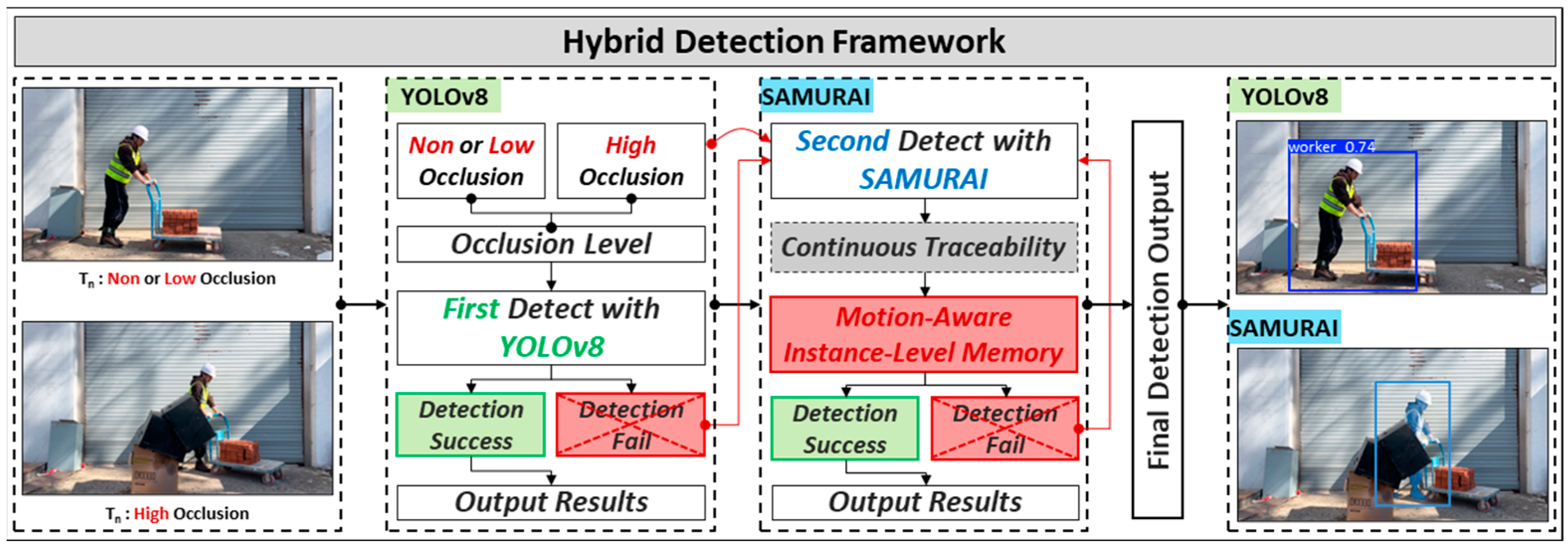

To further enhance the effectiveness of worker detection systems in construction environments, several future research directions are proposed. One direction is the development of hybrid models that leverage the strengths of both YOLOv8 and SAMURAI. By combining YOLOv8’s fast detection speed with SAMURAI’s robust occlusion handling, a more efficient and accurate detection framework could be achieved. For instance, a conceptual two-stage detection process could be implemented, where YOLOv8 serves as the primary detection system, followed by SAMURAI applying additional occlusion correction techniques. The illustrative workflow of this conceptual hybrid detection framework is shown in Figure 9.

This figure illustrates the conceptual two-stage detection process that integrates YOLOv8 and SAMURAI, highlighting the role of each model and the expected detection outcome. The first stage employs YOLOv8, which excels in fast real-time object detection. YOLOv8 is applied as the primary detection model, quickly identifying workers under non-occlusion or low-occlusion conditions. If YOLOv8 successfully detects a worker, the result is directly output as the final detection. However, in cases where occlusion is present, YOLOv8 often fails to maintain detection due to its frame-based processing.

In this conceptual framework, YOLOv8’s detection failure is inferred through predefined conditions such as low detection confidence or temporal discontinuity (e.g., missing detection in sequential frames). When these conditions are met, the system assumes potential occlusion and activates SAMURAI for secondary tracking.
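The two trigger conditions described above can be sketched as a small state machine. This is an illustrative sketch only: the class name `OcclusionTrigger` and the default values for `min_confidence` and `max_missed_frames` are hypothetical and would need to be tuned on real site footage.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OcclusionTrigger:
    """Decides when the primary detector's output should be treated as failed."""
    min_confidence: float = 0.5   # hypothetical confidence threshold
    max_missed_frames: int = 3    # hypothetical run length of missing detections
    missed: int = 0               # current run of consecutive frames with no detection

    def update(self, confidence: Optional[float]) -> bool:
        """Feed one frame's best confidence (None if nothing was detected).

        Returns True when the fallback tracker should be activated.
        """
        if confidence is None:
            # Temporal discontinuity: count consecutive missing frames
            self.missed += 1
            return self.missed >= self.max_missed_frames
        # A detection exists, so the run of missing frames ends
        self.missed = 0
        return confidence < self.min_confidence


trigger = OcclusionTrigger()
confidences = [0.9, 0.8, None, None, None, 0.3, 0.9]
flags = [trigger.update(c) for c in confidences]
print(flags)  # [False, False, False, False, True, True, False]
```

Requiring several consecutive missing frames before triggering avoids activating the slower model for a single dropped detection, at the cost of a short delay before the fallback starts.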

To handle such cases, the second stage introduces SAMURAI, which incorporates motion-aware instance-level memory to enhance detection under high-occlusion conditions. When YOLOv8 fails to detect a worker due to severe occlusion, SAMURAI is activated as a secondary detection model, leveraging its tracking mechanism to re-identify the worker. By maintaining continuous traceability across frames, SAMURAI can recover from detection failures caused by occlusion and provide more stable worker tracking in construction environments.

This proposed hybrid framework is designed to balance the trade-off between speed and accuracy by prioritizing YOLOv8 for rapid detection in clear conditions while leveraging SAMURAI for occlusion correction in challenging scenarios. The integration of both models minimizes the computational burden associated with using SAMURAI for the entire detection process while ensuring reliable worker tracking under varying occlusion levels.
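A minimal sketch of the two-stage control flow is given below. It is conceptual only: `detect` and `track` are hypothetical callables standing in for a YOLOv8 detector and a SAMURAI tracker, neither library's actual API is used, and treating the tracker as a per-frame call is a simplification. The fallback here fires on a single low-confidence or missing detection.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2)
Detection = Tuple[Box, float]             # (box, confidence)


def hybrid_track(
    frames: Iterable[object],
    detect: Callable[[object], Optional[Detection]],
    track: Callable[[object, Optional[Box]], Optional[Box]],
    min_confidence: float = 0.5,  # hypothetical threshold
) -> List[Tuple[Optional[Box], str]]:
    """Use the fast detector when it is confident, otherwise the tracker."""
    results: List[Tuple[Optional[Box], str]] = []
    last_box: Optional[Box] = None
    for frame in frames:
        det = detect(frame)
        if det is not None and det[1] >= min_confidence:
            last_box = det[0]
            results.append((last_box, "detector"))
        else:
            # Stage 2: tracker is seeded with the last known position
            box = track(frame, last_box)
            if box is not None:
                last_box = box
            results.append((box, "tracker"))
    return results


# Stub models that replay scripted confidences instead of running inference
def fake_detect(frame: dict) -> Optional[Detection]:
    conf = frame["conf"]
    return None if conf is None else ((0.0, 0.0, 10.0, 10.0), conf)


def fake_track(frame: dict, last_box: Optional[Box]) -> Optional[Box]:
    return last_box  # pretend the worker has not moved


frames = [{"conf": 0.9}, {"conf": 0.2}, {"conf": None}, {"conf": 0.8}]
sources = [src for _, src in hybrid_track(frames, fake_detect, fake_track)]
print(sources)  # ['detector', 'tracker', 'tracker', 'detector']
tracker_share = sources.count("tracker") / len(sources)
```

The share of frames routed to the tracker (`tracker_share`) gives a rough indication of how much of the slower model's cost such a scheme would actually incur on a given video.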

In addition, future research should consider benchmarking SAMURAI against other state-of-the-art tracking algorithms, such as transformer-based trackers and memory-augmented networks. Comparative evaluations with these advanced models would provide deeper insights into SAMURAI’s unique strengths and limitations, allowing for a more comprehensive understanding of its potential applications across diverse tracking scenarios.

Another crucial research direction involves evaluating performance in diverse environmental conditions. While this study was conducted in a controlled setting, future experiments should consider varied lighting conditions and complex backgrounds to assess the adaptability of YOLOv8 and SAMURAI. Additionally, testing in environments with more intricate occlusion patterns could provide further insights into SAMURAI’s robustness and limitations.

Overall, future studies should focus on enhancing hybrid detection strategies and testing in varied real-world conditions to develop more reliable and scalable worker detection models for construction environments.

5. Conclusions

This study evaluated the detection performance of YOLOv8 and SAMURAI for monitoring workers in masonry tasks, specifically in brick transportation and brick laying. The results demonstrate that YOLOv8 provides fast real-time detection, making it well-suited for dynamic environments where immediate monitoring is necessary. However, its reliance on frame-by-frame detection leads to a significant decline in accuracy under occlusion, particularly in cases of prolonged or severe obstructions. In contrast, SAMURAI maintains high detection accuracy even in occlusion-heavy conditions due to its memory-based tracking mechanism, but its higher computational demands limit its real-time applicability.

This comparative analysis shows that each model has distinct advantages and limitations depending on the work environment and occlusion conditions. YOLOv8 performs effectively when occlusion is minimal, ensuring rapid inference, while SAMURAI is more robust in environments with frequent occlusion, allowing more stable tracking of workers despite visual obstructions. These findings highlight the need to select the detection model according to the specific requirements of the construction site, balancing speed and accuracy against operational demands.

Furthermore, this study proposes a hybrid detection approach that integrates YOLOv8’s speed with SAMURAI’s robustness against occlusion. While the potential benefits of such an approach have been outlined, further research is required to develop and validate an integrated framework that optimizes both real-time performance and detection accuracy. Additionally, future studies should focus on evaluating the models under diverse environmental conditions, including varying lighting, complex backgrounds, and different types of occlusions, to ensure broader applicability in real-world construction settings.

By addressing the challenges of worker detection during masonry tasks, this study contributes to improving productivity monitoring and safety management on construction sites. The findings provide a foundation for developing more adaptive and reliable vision-based monitoring systems that remain effective in diverse and dynamic construction environments.