Abstract

Few-shot named entity recognition (NER) involves identifying specific entities using limited data. Metric learning-based methods, which compute token-level similarities between query and support sets to identify target entities, have demonstrated remarkable performance in this task. However, their effectiveness deteriorates when the distribution of the support set differs from that of the query set. To address this issue, we propose a novel approach that leverages the synergy between the large language model (LLM) and the metric learning-based few-shot NER approach. Specifically, we use the LLM to refine low-confidence predictions produced by the metric learning-based few-shot NER model, thus improving overall recognition accuracy. To make entity classification easier for the LLM, we introduce multiple label-filtering strategies that restrict the set of candidate labels. Furthermore, we explore the impact of prompt design on enhancing NER performance. Experimental results show that the proposed method increases the micro-F1 score on Few-NERD and CrossNER by 0.86% and 4.9%, respectively, compared to previous state-of-the-art methods.

1. Introduction

Named entity recognition (NER), as a core task in information extraction, aims to identify words or phrases in text that belong to specific semantic categories. Few-shot NER focuses on leveraging limited data to recognize target categories.

In recent years, studies on few-shot NER have predominantly utilized metric learning, which calculates token-level similarities between query and support samples to identify target entities. The core principle of metric learning is to learn distance or similarity functions that ensure favorable geometric properties in the particular representation space. This approach typically comprises two stages: embedding functions and metric-based classification. The embedding function maps high-dimensional vectors into a low-dimensional space, positioning entities of the same type closely together while distinguishing entities of different types. Metric-based classification then assigns labels to entities based on a chosen distance or similarity metric. Das et al. [1] propose CONTaiNER, which optimizes Gaussian distribution embeddings through contrastive learning, allowing the model to acquire more generalized feature representations and demonstrate superior performance in few-shot NER tasks. Similarly, Huang et al. [2] introduce COPNER, which implements a prompt-based contrastive learning mechanism with class-anchor words, facilitating explicit alignment of entities with their corresponding class labels. However, the metric learning paradigm exhibits a significant limitation: its reliance on token-level similarity between query and support sets can lead to substantially degraded recognition performance when their distributions diverge.

Large language models (LLMs) have recently made significant advancements in tasks such as semantic understanding, question answering, and logical reasoning [3,4,5,6]. Several studies have evaluated the performance of LLMs on NER tasks, showing that they still lag behind existing specialized methods [7,8]. Furthermore, enhancing the performance of LLMs on NER typically requires dividing the task into multiple subtasks, thereby increasing resource consumption and computational costs [9]. An alternative approach entails constructing question–answer pairs to fine-tune the LLM [10]. However, this approach escalates computational resource demands and conflicts with the constraints of few-shot learning.

To address these issues, we propose a collaborative approach that combines small and large language models to enhance entity recognition accuracy in few-shot settings. Specifically, we initially deploy the small language model (SLM) fine-tuned with metric learning for few-shot NER. This few-shot NER model then performs preliminary entity identification and classification of the query. When token-level similarity between the query and support sets is low, the query is often distant from feature clusters, resulting in reduced entity prediction accuracy. To address this issue, we utilize an LLM for secondary recognition of queries exhibiting low token-level similarity to the support set. LLMs typically exhibit higher accuracy in these scenarios by drawing on their extensive prior knowledge, contextual understanding, and capacity to incorporate provided entity-type definitions. Thus, our method effectively mitigates classification uncertainty and performance degradation stemming from low cosine similarity and limited semantic coverage in support sets, enhancing the performance of few-shot NER.

To further enhance the performance of LLMs in few-shot NER tasks, we introduce multiple label-filtering strategies. By restricting the label scope predicted by an LLM, we reduce the complexity of entity classification. We also design structured prompts to improve entity classification accuracy. Additionally, we investigate three zero-shot prompting strategies—chain-of-thought prompting [11], metacognitive prompting [12], and self-verification prompting [13]—and evaluate their impact on the performance of few-shot NER.

Overall, the domain-specific knowledge of an SLM complements the generalization capabilities of an LLM, thereby enhancing the performance of a few-shot NER task. Experimental results demonstrate that our collaborative approach, when integrated with various open-source LLMs, improves F1 scores across multiple few-shot NER benchmarks.

Our primary contributions are summarized as follows:

- We propose a hybrid framework combining the metric learning-based few-shot NER model and the LLM for improving the performance of few-shot NER, addressing limitations of metric learning when support set coverage is insufficient;

- We introduce label-filtering strategies, such as retaining top-N labels, to reduce classification complexity and enhance LLM performance;

- We design structured prompts to enhance entity classification accuracy and investigate three zero-shot prompting strategies, evaluating their impact on few-shot NER performance.

2. Related Work

2.1. Few-Shot Named Entity Recognition

Few-shot NER is the task of identifying and classifying named entities in text when only a limited amount of labeled data is available for each entity type. In recent years, metric learning frameworks such as prototypical networks and contrastive learning have seen widespread application in few-shot NER tasks, significantly improving recognition performance in the few-shot setting. For instance, Fritzler et al. [14] first apply prototypical networks to NER by generating prototypes for each entity type and classifying test samples based on their distance to these prototypes. Since the non-entity category (“O”) does not need to closely align with any specific prototype in the vector space, the similarity score is adjusted using hyperparameters and optimized through a modified softmax function to improve classification accuracy for non-entity tokens. Hou et al. [15] propose L-TapNet, which combines prototypical networks with label semantic information. L-TapNet projects both label prototypes and their semantic representations into a feature space that separates different label distributions, thereby enhancing domain adaptability and cross-domain generalization.

Despite the effectiveness of prototypical networks in optimizing distances between prototypes of entity types and samples, they often struggle to learn accurate and discriminative prototypes in the presence of noisy labels. To address this challenge, researchers have introduced contrastive learning as an alternative to traditional prototype-based methods. Contrastive learning simultaneously increases the similarity of positive instances and decreases the similarity of negative instances, thereby encouraging semantically similar entities to cluster while pushing dissimilar entities apart [16]. For example, Das et al. [1] develop CONTaiNER, which optimizes distances in the Gaussian embedding space via contrastive learning to learn more generalized representations, ultimately achieving superior performance in few-shot NER tasks. Xiao et al. [17] propose LACNER, which integrates contrastive learning with label words to enhance token representations and employs dropout-induced noise as a form of data augmentation. However, entity classification performance under metric learning frameworks heavily depends on the quality and distribution of the support set. When the support set lacks certain semantic features, the model’s performance declines significantly and becomes more vulnerable to interference from noisy samples.

Although metric learning has advanced few-shot NER on the model side, data scarcity remains a challenge. A straightforward solution is to synthesize new data. Current research on word-level transformations for NER primarily focuses on entity replacement or context (non-entity) substitution. For example, Dai et al. [18] explored simple augmentation strategies such as label-wise word replacement, synonym substitution, and random shuffling of words within predefined text segments for few-shot NER. COSINER, a cosine-similarity-based vocabulary replacement strategy, showed significant improvements over random replacement methods [19]. Nevertheless, because NER requires precise syntactic and semantic understanding, direct substitution can disrupt sentence integrity and negatively impact training outcomes.

2.2. Named Entity Recognition Based on LLM

By training on vast amounts of data, LLMs exhibit robust few-shot learning and generalization capabilities [20]. During inference, supplying a few demonstrations enables the LLM to perform tasks accurately without fine-tuning [21]. Leveraging this characteristic, some studies have applied the LLM to NER via prompt engineering. For instance, Xie et al. [7] decompose the NER task into simpler subproblems and extract entities sequentially by type. Wei et al. [9] introduce a two-stage dialogue paradigm: the first stage identifies potential element types (e.g., entities, relationships, events), while the second stage extracts specific mentions of each type. In another study, Wang et al. [22] propose GPT-NER, transforming sequence-labeling-based NER into a text generation task that requires the model to mark entity positions while copying other content verbatim. Although these works offer initial insights into integrating the LLM with NER through in-context learning, they still lag behind state-of-the-art few-shot NER methods.

2.3. Language Model Plug-Ins

In addition to their strong in-context learning abilities, LLMs can be further enhanced by integrating external tools. Several studies have explored this idea by leveraging the SLM to complement LLM capabilities in natural language understanding (NLU) tasks. For instance, Xu et al. [23] suggest using the SLM as a plug-in to improve the LLM’s NLU capabilities and mitigate instability in in-context learning. However, their work does not specifically target named entity recognition, nor does it consider a few-shot or metric-learning-based setting.

Ma et al. [24] propose an approach that integrates span-based few-shot NER models [25,26] with LLM-based re-ranking of uncertain entities. Their work primarily focuses on span-based NER, where entity spans are first identified using local models before being refined by the LLM. This approach differs fundamentally from our metric-learning-based framework, where entity recognition is formulated as a similarity computation problem rather than a span-detection task. Similarly, Zhang et al. [27] employ uncertainty estimation to facilitate collaboration between LLM and the local NER model, aiming to reduce errors in recognizing unseen entities. While effective, their framework is designed for span-based or fully supervised settings, rather than the few-shot setting we investigate.

In contrast, our work explores the integration of the SLM and the LLM in a metric learning-based few-shot NER framework, an area that remains underexplored. Metric learning-based NER presents unique challenges, such as sensitivity to distributional shifts between the support and query sets, which span-based or fully supervised models do not explicitly address. Additionally, unlike prior studies that rely heavily on manually designed chain-of-thought (CoT) prompts, our method reduces reliance on handcrafted demonstrations, making it more adaptable to different NER tasks.

3. Methodology

3.1. Method Overview

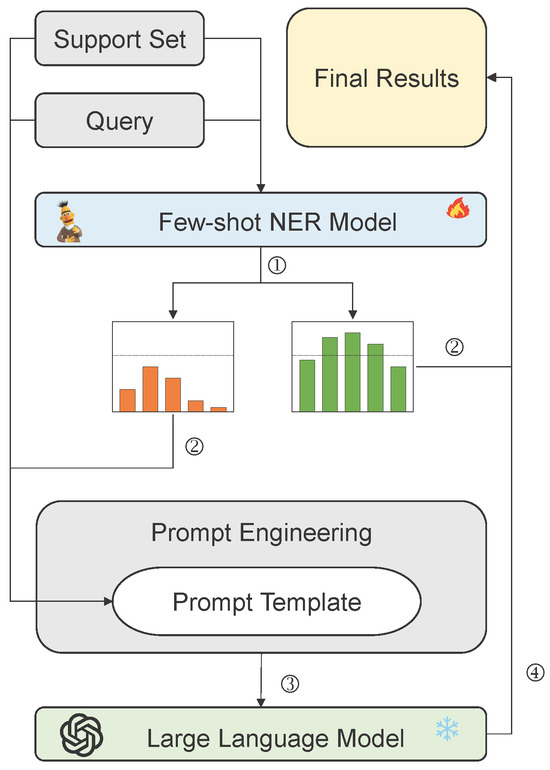

Figure 1 illustrates our proposed method, which consists of four key steps. First, we utilize the few-shot NER model to identify named entities by computing cosine similarity scores between the query and support sets. Second, we use a similarity threshold to select the words within each entity that require re-prediction, and we restrict the range of labels visible to the LLM to prevent unnecessary label predictions. Third, the filtered candidate words, label scope, type definitions, and few-shot examples are incorporated into a structured prompt template. We apply various prompt engineering techniques to process these inputs, then feed the prompts into the LLM to generate its predictions. Finally, we integrate predictions from both the few-shot NER model and the LLM to generate the final entity recognition results.

Figure 1.

The overview of our method. The different numbers correspond to the different stages described in the Method Overview.

3.2. Select the Candidate Word

In this study, we choose LACNER as the metric learning-based few-shot NER model [17]. Specifically, we are given a support set $S = \{(x_k^s, y_k^s)\}$, which consists of multiple sequences, each containing $n$ words, and a query (test) set $Q = \{x_i^q\}$, which also consists of multiple sequences, each containing $m$ words. In the few-shot NER method based on metric learning, $S$ and $Q$ are fed into the SLM to generate representations. Then, we compute the cosine similarity between $h_i^q$ and $h_k^s$. The label corresponding to the support sample with the highest similarity is assigned as the entity type of the query. Formally,

$$\hat{y}_i = y_{k^*}^s, \qquad k^* = \arg\max_{k} \cos\left(h_i^q, h_k^s\right),$$

where $(x_k^s, y_k^s)$ denotes the k-th support sample and its label, while $h_i^q$ and $h_k^s$ represent the semantic embeddings of the query and support samples, respectively.

Building on this framework, we define the confidence score of the prediction for query $x_i^q$ as the maximum cosine similarity between that query and the support sets:

$$c_i = \max_{k} \cos\left(h_i^q, h_k^s\right).$$

Meanwhile, the support samples are ranked in descending order based on their cosine similarity with the query, and the corresponding labels are preserved. Specifically,

$$L_i = \left[\, y_{\pi_i(1)}^s,\; y_{\pi_i(2)}^s,\; \ldots \,\right],$$

where $\pi_i$ represents the descending-order ranking of the support samples according to $\cos(h_i^q, h_k^s)$.

Then, the tuple of prediction result for the query is defined as follows:

$$r_i = \left(x_i^q,\; \hat{y}_i,\; c_i,\; L_i\right).$$

Finally, we select specific words that require re-prediction. Given a prediction result tuple $r_i$, if the confidence score of the query is less than or equal to the threshold $\tau$,

$$c_i \le \tau,$$

and the word is part of an entity, it is retained and passed to the LLM for further refinement.
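The selection rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not LACNER's implementation: embeddings are plain lists of floats, the support set is a list of `(embedding, label)` pairs, and the function names `cosine`, `predict_word`, and `needs_llm`, as well as the non-entity label `"O"`, are hypothetical choices made for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def predict_word(query_emb, support):
    """Nearest-neighbour prediction for one query word.

    `support` is a list of (embedding, label) pairs.
    Returns (predicted_label, confidence, ranked_labels), where the ranked
    labels follow the descending similarity order of the support samples.
    """
    scored = sorted(
        ((cosine(query_emb, emb), label) for emb, label in support),
        key=lambda pair: pair[0],
        reverse=True,
    )
    confidence, label = scored[0]
    ranked_labels = [lab for _, lab in scored]
    return label, confidence, ranked_labels


def needs_llm(label, confidence, threshold, non_entity="O"):
    """Re-predict a word only if it is low-confidence and part of an entity."""
    return confidence <= threshold and label != non_entity


# Toy usage with made-up 2-d embeddings.
support = [([1.0, 0.0], "person"), ([0.0, 1.0], "location"), ([0.6, 0.8], "location")]
label, conf, ranked = predict_word([0.8, 0.6], support)
```

Words for which `needs_llm` returns `True` would be the candidates forwarded to the LLM; all other predictions are kept as they are.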

3.3. Limit the Scope of the Labels to Be Predicted

In prompt construction, a common practice is to concatenate all entity types from the dataset before supplying them to the LLM for prediction. However, prior work shows that LLMs struggle with fine-grained NER tasks [27]. This limitation arises mainly from the large number of label categories and their fine granularity, making it challenging for the LLM to accurately differentiate labels given limited instructions and examples. Furthermore, including explanations for all entity type definitions increases both input length and computational cost. To ease the classification task for the LLM and minimize input length, we propose several strategies to limit the scope of the labels to be predicted. These strategies are designed to enhance the efficiency and accuracy of fine-grained NER tasks by reducing the complexity of label prediction.

- (1)

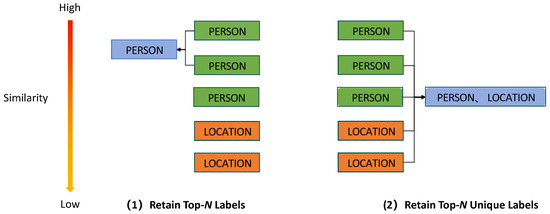

- Retain Top-N Labels

As shown on the left side of Figure 2, we retain only the top N labels with the highest similarity from the few-shot NER predictions. This approach ensures that the most relevant entity types are considered while keeping the size of the label set manageable. The steps in this process are outlined as follows:

- First, we collect all label predictions for a given query.

- These labels are then ranked based on the cosine similarity between the support set and the query.

- The top N labels are selected from this ranked list.

- To ensure completeness, the “None” label is always included if it is not already in the selected list.

- To avoid redundancy, any duplicate labels are eliminated from the final selection.

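Formally, we define the label filtering process as follows:

$$\mathcal{L}_{\text{top-}N} = \mathrm{Unique}\big(\mathrm{Top}_N(L_i)\big) \cup \{\text{None}\},$$

where $L_i$ denotes the ranked label set for the query, $\mathrm{Top}_N(\cdot)$ selects the top N labels from the ranked label set, $\mathrm{Unique}(\cdot)$ removes duplicate labels, and the inclusion of “None” ensures that non-entity predictions are always considered.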

- (2) Retain the Top-N Unique Labels

As illustrated on the right side of Figure 2, this strategy focuses on ensuring diversity within the selected labels. The primary steps are as follows:

- Start with the ranked label predictions for a given query.

- Iterate through the ranked labels and select unique ones until N unique labels are collected.

- If the “None” label is not already included, append it to the final set.

- Exclude any duplicate labels from the final selections.

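Mathematically, this process is expressed as follows:

$$\mathcal{L}_{\text{uniq-}N} = \mathrm{UniqueTop}_N(L_i) \cup \{\text{None}\},$$

where $L_i$ denotes the ranked label set for the query and $\mathrm{UniqueTop}_N(L_i)$ represents the first N unique labels selected from the ranked label set.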

- (3) Retain All Entity Labels

In this setting, no label filtering is applied, meaning that the model has access to the entire set of possible entity labels. The process can be summarized as follows:

- All entity labels appearing in the dataset are provided to the model during inference.

- There is no restriction on the number of labels.

- The “None” label is included by default to represent cases without named entities.

Figure 2.

The overview of two ways for limiting the scope of labels to be predicted.
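To make the difference between the three strategies concrete, the following Python sketch implements them over a ranked label list. The function names and the toy ranking are hypothetical, and whether “None” counts toward the N unique labels when it already appears in the ranking is an assumption of this sketch, not something the description above specifies.

```python
def top_n_labels(ranked_labels, n, none_label="None"):
    """Strategy (1): take the first N ranked labels, then drop duplicates."""
    selected = list(dict.fromkeys(ranked_labels[:n]))  # order-preserving dedup
    if none_label not in selected:
        selected.append(none_label)
    return selected


def top_n_unique_labels(ranked_labels, n, none_label="None"):
    """Strategy (2): walk the ranking until N distinct labels are collected."""
    selected = []
    for label in ranked_labels:
        if len(selected) >= n:
            break
        if label not in selected:
            selected.append(label)
    if none_label not in selected:
        selected.append(none_label)
    return selected


def all_labels(dataset_labels, none_label="None"):
    """Strategy (3): no filtering; every dataset label plus the non-entity label."""
    selected = list(dict.fromkeys(dataset_labels))
    if none_label not in selected:
        selected.append(none_label)
    return selected


# Toy ranking: labels of support samples sorted by descending similarity.
ranked = ["person", "person", "location", "organization", "None"]
scope_top3 = top_n_labels(ranked, 3)
scope_unique3 = top_n_unique_labels(ranked, 3)
```

With the same N, the first strategy can return fewer than N entity labels when the ranking contains repeats, whereas the second keeps scanning until N distinct labels are found.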

3.4. Prompt Design for LLM

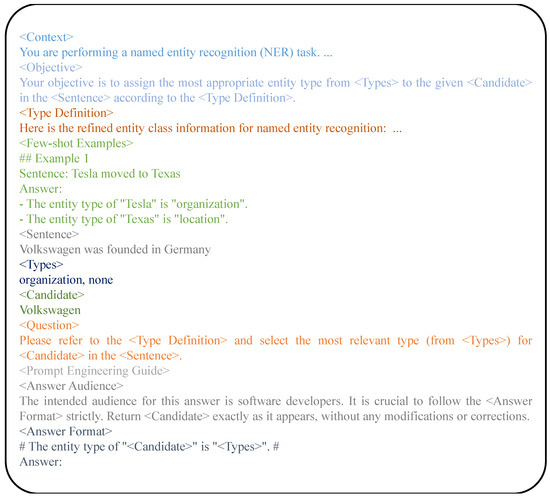

Large language models acquire knowledge from prompts by combining task descriptions with demonstrations, enabling them to identify and perform new tasks without explicit gradient updates. As illustrated in Figure 3, we design a structured prompt for the classification of candidate words. This prompt consists of eleven components: Context, Objective, Type Definition, Few-shot Examples, Sentence, Types, Candidate, Question, Prompt Engineering Guide, Answer Audience, and Answer Format. In this section, we focus on three key components: Type Definition, Few-shot Examples, and Prompt Engineering Guide.

Figure 3.

The template of candidate word classification prompt.
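As a rough illustration of how such a structured prompt might be assembled, the sketch below joins the eleven components in the order listed above. Only the component names come from the text; the `# Name` heading layout, the decision to skip empty components, and the function name `build_prompt` are assumptions of this example and do not reproduce the template in Figure 3.

```python
SECTION_ORDER = [
    "Context",
    "Objective",
    "Type Definition",
    "Few-shot Examples",
    "Sentence",
    "Types",
    "Candidate",
    "Question",
    "Prompt Engineering Guide",
    "Answer Audience",
    "Answer Format",
]


def build_prompt(sections):
    """Join the provided components in the fixed order, skipping empty ones.

    `sections` maps a component name to its text. Unknown names are rejected
    so that a typo does not silently drop a component.
    """
    unknown = [name for name in sections if name not in SECTION_ORDER]
    if unknown:
        raise ValueError(f"unknown prompt sections: {unknown}")
    parts = [
        f"# {name}\n{sections[name]}"
        for name in SECTION_ORDER
        if sections.get(name)
    ]
    return "\n\n".join(parts)


# Toy usage with placeholder text for three of the components.
prompt = build_prompt({"Sentence": "a", "Candidate": "b", "Context": "c"})
```

Fixing the order in one place keeps prompts comparable across runs, for example when the Prompt Engineering Guide is left empty.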

- (1) Type Definition

Across different datasets, entity types may share the same label but have distinct definitions. Relying solely on the LLM’s inherent knowledge may fail to capture these nuances. To mitigate this issue, we create dataset-specific entity-type definitions. Specifically, we compile official annotation guidelines, relevant examples, and entity-type details from the dataset, then utilize GPT-4o to generate descriptive definitions based on this information.

- (2) Few-shot Examples

First, we generate sentence embeddings for each sentence in the support set using a sentence embedding model. Next, we rank the sentences based on their semantic similarity to the test sample and select the top four most relevant sentences as few-shot examples. Moreover, LLM performance in few-shot learning is sensitive to positional bias, where the order of examples affects the results [28]. To mitigate this effect, examples with higher similarity to the test sample are positioned closer to it in the prompt sequence.
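The selection and ordering of few-shot examples can be sketched as follows. The sketch assumes that similarities to the test sample have already been computed by the sentence embedding model, and that the test sentence follows the examples in the prompt, so "closer to the test sample" means "later in the list". The function name `select_examples` is hypothetical.

```python
def select_examples(support_sentences, similarities, k=4):
    """Pick the k most similar support sentences, most similar one last.

    `similarities[i]` is the precomputed similarity between
    `support_sentences[i]` and the test sample.
    """
    ranked = sorted(
        zip(similarities, support_sentences),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    # Reverse so that similarity increases towards the end of the example list.
    return [sentence for _, sentence in reversed(ranked)]


# Toy usage with made-up similarity scores.
examples = select_examples(["a", "b", "c", "d", "e"], [0.1, 0.9, 0.5, 0.7, 0.3])
```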

- (3) Prompt Engineering Guide

To harness the reasoning capabilities of the LLM, we introduce three zero-shot prompt engineering techniques: CoT prompting, metacognitive prompting, and self-verification prompting. CoT prompting operates by decomposing complex tasks into a sequence of subproblems, reformulating the conventional “input-output” structure into an “input–reasoning chain–output” framework. We implement the zero-shot CoT strategy by incorporating the instruction “Answer the question step by step” into the prompt, incrementally guiding the model’s reasoning process.

While CoT prompting is highly effective for arithmetic and commonsense reasoning, capturing task semantics and implicit context is more critical for NLU tasks. To address this, we introduce metacognitive prompting, which emulates human metacognition to enhance the model’s understanding of task requirements. The metacognitive prompt is shown in Table 1. Metacognitive prompting consists of five key steps:

1. Text Interpretation: Guiding the model to understand the sentence and candidate word, including their positions and roles, similar to human understanding.

2. Preliminary Judgment: Conducting an initial classification of the candidate’s type based on type definitions and constraints, reflecting human early judgment.

3. Critical Evaluation: Reviewing the preliminary classification. If uncertainty remains, cross-checking type definitions and few-shot examples to refine the classification, akin to human self-reflection.

4. Decision: Confirming the final entity type and offering a concise rationale, paralleling human decision-making.

5. Confidence Assessment: Evaluating confidence in the final output, simulating the human process of judging reliability.

Table 1.

Metacognitive prompt.

| Section | Content |
|---|---|
| Text Interpretation | Understand the given Sentence and the Candidate. Clarify the Candidate’s position and role within the Sentence. |
| Preliminary Judgment | Based on the <Type Definition> and the given Types, make an initial guess about which entity type (if any) the Candidate should belong to. |
| Critical Evaluation | Critically reassess your preliminary judgment. If you are uncertain, carefully review the Type Definitions and Few-shot Examples to ensure you are selecting the most suitable type. If it does not fit any type, consider “none”. |
| Decision | Confirm your final decision on the Candidate’s entity type and provide a brief explanation of why you selected that type. |
| Confidence Assessment | Evaluate your confidence level (0–100%) in this classification and briefly explain why you have that level of confidence. |

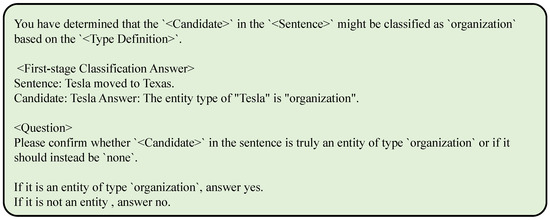

Moreover, large language models can exhibit hallucination or over-prediction, particularly in NER tasks, where they often label non-entities as valid entities [8,22]. To address this, we introduce self-verification prompting, which enables the model to validate its own predictions. An illustrative example of a self-verification prompt is shown in Figure 4. In practice, we use a multi-turn dialogue format, sequentially providing the candidate classification prompt, the prediction result, and the self-verification prompt to the LLM. If the verification outcome aligns with the initial prediction, we retain the original result; otherwise, the candidate is classified as a non-entity.

Figure 4.

Self-verification prompt.

By applying the above prompt design methods, the LLM can refine the classifications and combine these results with those generated by the few-shot NER model to produce the final predictions.
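The self-verification rule and the final merge of the two models' outputs can be sketched as below. This assumes the verification turn has already been reduced to a boolean, and that LLM refinements are keyed by word index; both representations, the function names, and the label strings are hypothetical choices for illustration.

```python
def resolve_with_verification(initial_label, verification_agrees, non_entity="None"):
    """Keep the LLM's first answer only if the verification turn confirms it."""
    return initial_label if verification_agrees else non_entity


def merge_predictions(slm_labels, llm_labels):
    """Overwrite few-shot model labels with LLM refinements where available.

    `slm_labels` is the per-word label list from the few-shot NER model;
    `llm_labels` maps a word index to the label chosen by the LLM for a
    low-confidence candidate. Untouched words keep their original label.
    """
    return [llm_labels.get(i, label) for i, label in enumerate(slm_labels)]


# Toy usage: word 1 was a low-confidence candidate re-predicted by the LLM.
refined = resolve_with_verification("organization", True)
final = merge_predictions(["O", "person", "location"], {1: refined})
```

Falling back to the non-entity label on disagreement is the conservative choice described above: it trades some recall for fewer over-predicted entities.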

4. Experiments

4.1. Datasets

We select two widely used datasets for few-shot NER tasks: Few-NERD and CrossNER. Both datasets span multiple domains and entity types, facilitating a comprehensive evaluation of model performance across diverse scenarios.

Few-NERD, introduced by Ding et al. [29], is specifically designed for few-shot NER tasks. It comprises eight coarse-grained and 66 fine-grained entity types, serving as a comprehensive benchmark for evaluating model performance in few-shot settings. Additionally, it defines two tasks with distinct granularities: INTRA and INTER. In the INTRA setting, the training, validation, and test sets contain distinct coarse-grained categories. In the INTER setting, they may share the same coarse-grained categories, but the fine-grained categories remain mutually exclusive. Few-NERD is distributed under the Creative Commons Attribution-ShareAlike 4.0 International License (CC BY-SA 4.0).

CrossNER comprises NER datasets from four domains—OntoNotes 5.0 [30], CoNLL-03 [31], WNUT-17 [32], and GUM [33]—and serves as a benchmark for evaluating model generalization in cross-domain environments. CrossNER is distributed under the Apache License 2.0.

4.2. Experimental Setup

To reduce experimental costs, we sample subsets from the test datasets. For Few-NERD, we randomly select 100 support sets and 100 query sets. For CrossNER, we select 200 support sets and 200 query sets in the 1-shot setting, and 100 support sets and 100 query sets in the 5-shot setting. In the main experiments, chain-of-thought prompting, metacognitive prompting, and self-verification prompting are not applied. Experiments related to prompt engineering are discussed in Section 4.7.

We choose LACNER as the metric learning-based few-shot NER model. In the experiments, LACNER’s hyperparameters and settings are exactly the same as those reported by Xiao et al. [17]. For LLMs, we employ various open-source models. To expedite inference, we employ vLLM and set the temperature parameter to 0 to reduce randomness in the generated results. All models, except for DeepSeek-V2.5, which is accessed via an external API, are executed on a single A100 GPU (Nvidia, Santa Clara, CA, USA).

To ensure fairness, we generate three sets of few-shot NER model predictions, each using a different random seed, and subsequently feed these predictions into the LLM to minimize randomness. Each experiment is repeated three times, and the average micro-F1 score is reported. We use BGE-large-en-v1.5 as the sentence embedding model. Furthermore, all F1 scores mentioned in this paper refer to micro-F1.
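For reference, micro-F1 pools true positives, predicted entities, and gold entities over all sentences before computing precision and recall. The sketch below assumes span-level evaluation, with each entity represented as a hypothetical `(start, end, type)` tuple; the exact matching granularity used in the experiments is not stated here, so this is an illustration of the metric rather than the evaluation script.

```python
from statistics import mean


def micro_f1(gold, pred):
    """Micro-averaged F1 over sentences.

    `gold` and `pred` are parallel lists with one set of
    (start, end, type) tuples per sentence.
    """
    true_pos = sum(len(g & p) for g, p in zip(gold, pred))
    n_pred = sum(len(p) for p in pred)
    n_gold = sum(len(g) for g in gold)
    if true_pos == 0:
        return 0.0
    precision = true_pos / n_pred
    recall = true_pos / n_gold
    return 2 * precision * recall / (precision + recall)


# Toy usage with made-up spans for two sentences.
gold = [{(0, 1, "person"), (3, 4, "location")}, {(2, 2, "organization")}]
pred = [{(0, 1, "person")}, {(2, 2, "organization"), (5, 5, "person")}]
score = micro_f1(gold, pred)

# Averaging over repeated runs (e.g., different seeds) is then a plain mean.
average = mean([score, score, score])
```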

4.3. Baseline

We select baselines from two distinct perspectives. First, we adopt the state-of-the-art method LACNER as the metric learning-based few-shot NER model. Second, we include several LLMs as baselines, which employ in-context learning to address few-shot NER tasks. Adopting the prompt design methodology outlined in [9], we partition NER into two phases, entity recognition and entity classification, and address each phase in turn via multi-turn dialogue. Furthermore, we compare with LLM Rerank, which combines the SLM and the LLM for few-shot NER tasks, leveraging the SLM for initial filtering and the LLM for re-ranking [24].

4.4. Analysis of the Impact of Thresholds on Model Performance

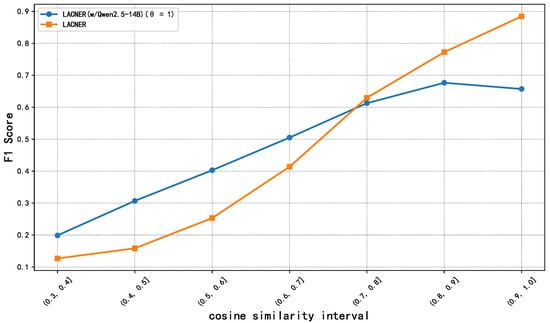

By setting the threshold appropriately, uncertain or potentially misclassified samples are redirected to the LLM for re-prediction. The process of setting the threshold is detailed in Appendix A. To validate the effectiveness of the LLM in handling low-confidence predictions, we conduct experiments on CoNLL-03 using Qwen-2.5-14B. As shown in Figure 5, we explore two threshold configurations: (1) setting the threshold to its lowest value, where all samples are classified only by LACNER, and (2) setting the threshold to its highest value, where the LLM re-predicts every entity word identified by LACNER.

Figure 5.

Comparison of micro-F1 of models with different cosine similarity intervals.

Figure 5 demonstrates that for low-confidence predictions, re-prediction by the LLM achieves a higher F1 score than LACNER alone, whereas for predictions with higher confidence, LACNER alone performs better. This shows that when the confidence of a prediction is low, the LLM leverages its extensive world knowledge and reasoning capabilities to correct recognition errors. Thus, integrating the LLM with the metric-based few-shot NER model enhances overall performance.

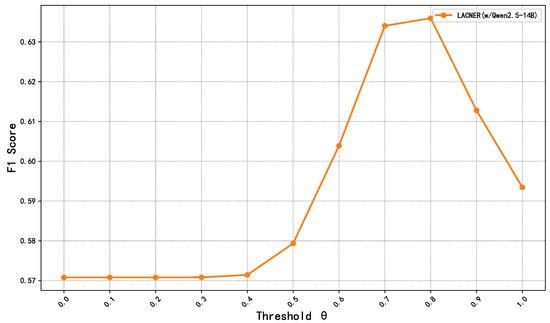

We next analyze the impact of threshold selection on model performance. As shown in Figure 6, we evaluate ten threshold points at intervals of 0.1. The results show that as the threshold increases, the F1 score initially rises before declining. At lower thresholds, the LLM corrects many misclassified samples, leading to improved overall performance. However, at higher thresholds, the LLM erroneously modifies correct predictions, resulting in performance degradation. This trend suggests that applying the LLM to high-confidence samples may introduce additional errors, diminishing overall performance. Thus, selecting an optimal threshold is crucial for maximizing the collaborative benefits of both the few-shot NER model and the LLM.

Figure 6.

Comparison of performance on CoNLL across various thresholds using the micro-F1 metric (%).

4.5. Comparison with Other Methods

In this section, we conduct experiments on various open-source LLMs with different parameter counts, comparing the performance of different methods and exploring the contributions of various LLMs to LACNER. The experimental results are shown in Table 2 and Table 3.

Table 2.

Comparison of performance on CrossNER with the micro-F1 metric (%).

Table 3.

Comparison of performance on Few-NERD with the micro-F1 metric (%).

As shown in Table 2, the performance of LLM-based few-shot NER methods improves significantly as the number of LLM parameters increases. For example, in the 1-shot setting, when the size of the Qwen2.5 series increases from 7B to 14B, the F1 score on all datasets improves. Specifically, Qwen2.5-14B achieves up to a 10.84% higher F1 score than Qwen2.5-7B. Additionally, DeepSeek-V2.5, which has the largest number of parameters, exhibits the maximum F1 improvement of 24.9% over Llama3.1-8B. This indicates that LLMs with more parameters possess richer world knowledge and stronger reasoning capabilities, leading to better generalization and few-shot learning performance in extremely low-resource settings.

However, as the number of examples increases, the performance gains of the LLM tend to plateau, meaning the benefit from more examples becomes limited. In contrast, LACNER leverages metric learning to fully capture domain-specific knowledge, and its performance gap with the LLM widens as the number of training samples grows.

Re-identifying low-confidence entity words filtered by LACNER using large models leads to significant improvements across all settings. In the 1-shot setting, compared to using LACNER alone, our method achieves an F1 improvement ranging from 2.27% to 8.99%, with an average gain of 6.73%. These results indicate that the extensive knowledge and strong reasoning capabilities of the LLM compensate for LACNER’s limitations in representation learning for certain entity types, as well as classification errors due to limited support set examples, thereby enhancing overall performance. In the 5-shot setting, as LACNER learns entity representations more effectively, fewer samples require re-identification by the LLM, leading to smaller performance gains compared to the 1-shot setting. Nevertheless, our method achieves an average F1 improvement of 3.06%.

Overall, the integration of the LLM enhances LACNER’s performance; both Qwen2.5-14B and DeepSeek-V2.5 significantly boost LACNER’s performance in the 1-shot and 5-shot settings. These findings confirm that the parameter scale and pretraining quality of the LLM significantly impact their generalization and knowledge transfer capabilities in downstream tasks.

Furthermore, compared with LLM Rerank, our method improves the average F1 score by 3.44% in the 1-shot setting and by 1.55% in the 5-shot setting. This indicates that restricting the scope of predicted labels can enhance the classification accuracy of the LLM. Additionally, the structured prompting approach proposed in this study outperforms the multiple-choice prompting used in LLM Rerank by improving the LLM’s understanding of task requirements and providing relevant background knowledge.

Compared with CrossNER, Few-NERD contains a greater variety of entity labels with finer granularity, a larger label space, and higher differentiation complexity. On CrossNER, the LLM performed well in the 1-shot setting, benefiting from its extensive prior knowledge. However, when applied independently to Few-NERD, the LLM demonstrated lower performance, particularly in the INTRA setting, where the disparity is more pronounced. This suggests that as task complexity and label granularity increase, LLMs face greater challenges.

Although integrating an LLM with LACNER continues to enhance performance, the improvements are more modest. Specifically, in the INTRA setting, the proposed method improves the average F1 score by 1.76% compared to LACNER, while in the INTER setting, it achieves a 1.52% improvement. The gains from collaboration narrow relative to those on CrossNER, suggesting that as task complexity increases, LLMs face greater challenges.

Finally, compared with LLM Rerank, the proposed method achieves an average F1 improvement of 0.95% in the INTRA setting and 0.78% in the INTER setting. Moreover, unlike LLM Rerank, it removes the need to manually create CoT examples.

4.6. Analysis of the Impact of Predicted Label Scope on Collaborative Capabilities

As shown in Table 4, the advantage of Qwen2.5-14B over LACNER gradually decreases as the number of entity types increases. In some cases, LACNER even outperforms Qwen2.5-14B. As the number of entity types grows, distinguishing fine-grained categories with subtle differences becomes more challenging. In contrast, the metric learning-based few-shot NER model, trained on diverse examples, effectively learns to differentiate these subtle nuances. Thus, implementing an appropriate label scope filtering strategy during collaboration is crucial for reducing confusion in the LLM.

Table 4.

Performance comparison of Qwen2.5-14B and LACNER across datasets with varying numbers of entity types in the 1-shot setting.

Table 5 and Table 6 present the impact of varying the scope of predicted labels on the Few-NERD and CrossNER datasets. Table 5 shows that when all entity types are provided, the classification space for the LLM becomes excessively large. Compared to the “retain top-1 label” strategy, performance drops by up to 1.72%, suggesting that limiting the label scope improves model focus. Similarly, Table 6 reveals a comparable trend in the 5-shot setting of CrossNER, where the “retain top-N labels” strategy achieves the best performance. This approach prevents the LLM from being overwhelmed by excessive predicted labels while enabling it to reference additional labels when the metric learning-based few-shot NER model’s predictions are uncertain or when test samples lie near cluster boundaries. However, in the 1-shot setting of CrossNER, the significant discrepancy between the source and target domains leads to suboptimal representations learned by LACNER. In this case, providing all predicted labels leverages the LLM’s capabilities more effectively.

Table 5.

Effects of predicted label scope on the Few-NERD with the micro-F1 metric (%).

Table 6.

Effects of predicted label scope on the CrossNER with the micro-F1 metric (%).
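The label-scope strategies compared in Tables 5 and 6 can be illustrated with a minimal sketch. It assumes that the metric learning-based model exposes one similarity score per entity type for each mention; the function name `filter_label_scope`, the strategy identifiers, the default `n = 3`, and the example scores are hypothetical and are not taken from the implementation used in this study.

```python
from typing import Dict, List


def filter_label_scope(
    similarities: Dict[str, float],
    strategy: str = "top_n",
    n: int = 3,
) -> List[str]:
    """Return the candidate labels passed to the LLM for one entity mention.

    `similarities` maps each entity type to the similarity score assigned by
    the metric learning-based model (higher means more similar).
    """
    # Rank entity types from most to least similar.
    ranked = sorted(similarities, key=similarities.get, reverse=True)
    if strategy == "top_1":
        return ranked[:1]   # retain only the best-scoring label
    if strategy == "top_n":
        return ranked[:n]   # retain the n best-scoring labels
    if strategy == "all":
        return ranked       # provide every entity type
    raise ValueError(f"unknown strategy: {strategy}")


# Hypothetical similarity scores for a single mention.
scores = {"person": 0.62, "organization": 0.58, "location": 0.21, "event": 0.05}
print(filter_label_scope(scores, "top_1"))
print(filter_label_scope(scores, "top_n", n=2))
print(filter_label_scope(scores, "all"))
```

With these scores, the top-N strategy keeps both `person` and `organization`, which is the situation where a mention lies near a cluster boundary and the LLM benefits from seeing more than one candidate.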

4.7. Analysis of the Effectiveness of Various Methods in Prompt Design

In this section, we first examine the effectiveness of incorporating type definitions and few-shot examples in our prompt. We then investigate whether prompt engineering can further enhance overall performance.

- (1) Analysis of the Effectiveness of Type Definitions and Few-Shot Examples in In-Context Learning

Table 7 presents an ablation of two strategies, type definitions and few-shot examples, on CrossNER. The results show that employing both type definitions and few-shot examples yields the best performance under both the 1-shot and 5-shot settings. Specifically, in the 1-shot setting, the combined approach outperforms the baseline (which applies neither strategy) by 1.8%, while in the 5-shot setting, it achieves a 0.52% improvement. These findings suggest that incorporating both type definitions and demonstrations enhances the model’s comprehension of the task and of the entity types, thereby improving classification accuracy. However, on OntoNotes, removing the demonstrations leads to better performance, indicating that noise in these demonstrations may hinder model understanding. When noise exists in the support set, the classification performance of large and small language models is affected to varying degrees. Large language models, which leverage extensive world knowledge, exhibit stronger robustness against noise, whereas smaller models are more prone to noise interference in few-shot settings.

Table 7.

Effect of type definitions and demonstrations on the CrossNER with the micro-F1 metric (%). TD stands for type definition, and FSE stands for few-shot examples.
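The ablation in Table 7 toggles two optional prompt components. The sketch below shows one way such a prompt could be assembled; the function `build_prompt`, the prompt wording, and the example definitions are illustrative assumptions and do not reproduce the prompt template used in this study.

```python
from typing import Dict, List, Optional, Tuple


def build_prompt(
    sentence: str,
    mention: str,
    candidate_labels: List[str],
    type_definitions: Optional[Dict[str, str]] = None,
    few_shot_examples: Optional[List[Tuple[str, str, str]]] = None,
) -> str:
    """Assemble a classification prompt with optional TD and FSE sections."""
    parts = ["Task: choose the entity type of the given mention."]
    if type_definitions:  # TD: one definition per candidate label
        parts.append("Type definitions:")
        for label in candidate_labels:
            if label in type_definitions:
                parts.append(f"- {label}: {type_definitions[label]}")
    if few_shot_examples:  # FSE: (sentence, mention, label) demonstrations
        parts.append("Examples:")
        for ex_sentence, ex_mention, ex_label in few_shot_examples:
            parts.append(f"Sentence: {ex_sentence}")
            parts.append(f"Mention: {ex_mention}")
            parts.append(f"Type: {ex_label}")
    joined_labels = ", ".join(candidate_labels)
    parts.append(f"Candidate types: {joined_labels}")
    parts.append(f"Sentence: {sentence}")
    parts.append(f"Mention: {mention}")
    parts.append("Type:")
    return "\n".join(parts)


# Hypothetical inputs: the baseline uses neither TD nor FSE.
labels = ["person", "location"]
definitions = {"person": "a named human being", "location": "a named place"}
examples = [("Marie Curie was born in Warsaw.", "Warsaw", "location")]

baseline = build_prompt("Alan Turing worked at Bletchley Park.", "Alan Turing", labels)
full = build_prompt(
    "Alan Turing worked at Bletchley Park.", "Alan Turing", labels,
    type_definitions=definitions, few_shot_examples=examples,
)
print(full)
```

Keeping both sections optional makes each row of the ablation a matter of which arguments are passed, so the remaining prompt text is identical across conditions.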

- (2) Analysis of the Effectiveness of Prompt Engineering

Prompt engineering techniques can further stimulate the model’s reasoning abilities. We evaluate how different zero-shot prompting approaches affect model performance.

As shown in Table 8, metacognitive prompting improves performance by 0.76% in the 1-shot setting and 0.23% in the 5-shot setting compared to our prompt. Emulating human metacognition enhances the model’s ability to comprehend and execute NER tasks. However, zero-shot CoT and self-verification prompting generally lead to performance degradation. CoT prompting was originally designed for tasks requiring explicit step-by-step reasoning, such as mathematical or logical problems. In contrast, NER primarily relies on textual semantics and contextual understanding, which do not align well with CoT’s strengths. For self-verification prompting, if the initial prediction is highly uncertain, the mechanism allows the model to reassess and correct errors, which explains its effectiveness on certain datasets (e.g., CoNLL). However, if the model is overly confident in an incorrect prediction, the verification process may reinforce the error by drawing upon the same underlying knowledge base and reasoning patterns. Therefore, for tasks heavily reliant on semantic understanding, such as NER, prompt designs should prioritize enhancing text comprehension.

Table 8.

Impact of different prompt engineering methods on the CrossNER with the micro-F1 metric (%).

5. Limitations of the Study

Our proposed method achieves significant improvements in few-shot NER by leveraging the collaboration between small and large language models. However, several limitations warrant consideration for future refinement.

To begin with, our approach uses cosine similarity as the confidence score to determine which predictions from the small language model require refinement by the large language model. Although cosine similarity is effective due to its scale invariance and standardized range, which make it a practical choice for metric learning frameworks, it may not fully capture the uncertainty inherent in predictions. For instance, when the support set poorly represents the query set’s distribution, cosine similarity might overestimate confidence in erroneous predictions or fail to reflect subtle uncertainties. Alternatives such as Monte Carlo Dropout [34] or Evidential Neural Networks [35] could provide richer uncertainty estimates by modeling prediction variability or distributional properties. Nevertheless, these methods require architectural or training modifications, which are beyond the scope of this study. Investigating such alternatives in future work could enhance the robustness of confidence estimation.
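The confidence-based routing discussed above can be sketched as follows. This is a simplified illustration under stated assumptions: each label is represented by a single reference vector, and a prediction is sent to the LLM when its best cosine similarity falls strictly below the threshold. The helper names and the two-dimensional vectors are hypothetical; the threshold of 0.75 matches the CoNLL value reported in Table A1.

```python
import math
from typing import Dict, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def predict_with_confidence(
    token_vec: Sequence[float],
    references: Dict[str, Sequence[float]],
) -> Tuple[str, float]:
    """Return the most similar label and its cosine similarity as confidence."""
    scores = {label: cosine_similarity(token_vec, ref) for label, ref in references.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


def needs_llm_refinement(confidence: float, threshold: float) -> bool:
    """Low-confidence predictions are handed to the LLM for re-identification."""
    return confidence < threshold


# Hypothetical reference vectors, one per label (an assumption of this sketch).
references = {"person": [1.0, 0.0], "location": [0.0, 1.0]}

clear_label, clear_conf = predict_with_confidence([0.9, 0.1], references)
vague_label, vague_conf = predict_with_confidence([0.55, 0.5], references)

print(clear_label, needs_llm_refinement(clear_conf, threshold=0.75))
print(vague_label, needs_llm_refinement(vague_conf, threshold=0.75))
```

The second query lies almost midway between the two reference vectors, so its best similarity stays below the threshold and it is routed to the LLM. The sketch also makes the limitation visible: the score only measures angular closeness to known references and carries no information about how reliable those references are.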

Moreover, the threshold, which determines which predictions are refined by the LLM, is manually selected based on validation performance. While this approach proved effective in our experiments, it is time-consuming and may not generalize across datasets or domains. Therefore, an adaptive or automated threshold-setting mechanism could improve the method’s scalability and practicality.

6. Conclusions

In this paper, we propose a few-shot NER method based on the collaboration of large and small language models. The method uses the SLM to make preliminary predictions on test samples and filters out entities with lower confidence levels, which are then re-identified and corrected by the LLM. We also design multiple label-filtering strategies that restrict the range of labels predicted by the LLM, reducing the difficulty of entity classification. Additionally, we adopt a structured approach to designing prompts and further optimize the experimental results through prompt engineering.

Experiments conducted on the Few-NERD and CrossNER datasets demonstrate that the proposed method enhances the performance of few-shot NER. In both the 1-shot and 5-shot settings, the collaborative approach outperforms the baseline models, and its versatility has been validated across various open-source LLMs. Ablation studies further confirm the effectiveness of key components such as the candidate word selection module, the label-filtering module, structured prompts, and prompt engineering.

Author Contributions

Conceptualization, Y.X. and Q.Y.; methodology, Y.X.; validation, Y.X., J.Z., and Q.Y.; formal analysis, Y.X.; investigation, Y.X.; resources, Q.Y.; data curation, Y.X.; writing—original draft preparation, Y.X.; writing—review and editing, Q.Y.; supervision, Q.Y.; project administration, Q.Y.; funding acquisition, Q.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset we used is the newest Few-NERD Arxiv V6 Version. It can be downloaded at https://ningding97.github.io/fewnerd/ (accessed on 10 February 2025). CrossNER can be downloaded at https://atmahou.github.io/attachments/ACL2020data.zip (accessed on 10 February 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| NER | Named Entity Recognition |
| LLM | Large Language Model |
| SLM | Small Language Model |
| NLU | Natural Language Understanding |
| CoT | Chain-of-Thought |

Appendix A

To establish the optimal threshold value for each setting, we perform hyperparameter tuning using the validation set. Specifically, we employ a grid search approach, testing values in the range of 0.5 to 0.8 with an increment of 0.05. For each candidate value, we evaluate the model’s performance using the micro-F1 score. The resulting optimal values for each setting are listed in Table A1 and Table A2 below. These values are then used in our experiments to obtain the results reported in Section 4.

Table A1.

Threshold settings on the CrossNER.

| 1-Shot | | | | 5-Shot | | | |
|---|---|---|---|---|---|---|---|
| CONLL | GUM | WNUT | Onto | CONLL | GUM | WNUT | Onto |
| 0.75 | 0.60 | 0.75 | 0.75 | 0.75 | 0.55 | 0.75 | 0.75 |

Table A2.

Threshold settings on the Few-NERD.

| INTRA | | | | INTER | | | |
|---|---|---|---|---|---|---|---|
| 1∼2-shot | | 5∼10-shot | | 1∼2-shot | | 5∼10-shot | |
| 5-way | 10-way | 5-way | 10-way | 5-way | 10-way | 5-way | 10-way |
| 0.50 | 0.55 | 0.65 | 0.65 | 0.55 | 0.60 | 0.60 | 0.70 |
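The grid search described in this appendix can be sketched as follows. The candidate range (0.5 to 0.8 in steps of 0.05) and the micro-F1 criterion come from the text; the function names and the toy `evaluate` callback are hypothetical stand-ins for running the full pipeline on the validation set.

```python
from typing import Callable, List, Tuple


def candidate_thresholds(start: float = 0.5, stop: float = 0.8, step: float = 0.05) -> List[float]:
    """Enumerate grid points from start to stop (inclusive)."""
    count = int(round((stop - start) / step)) + 1
    # Rounding avoids floating-point drift such as 0.6500000000000001.
    return [round(start + i * step, 2) for i in range(count)]


def micro_f1(tp: int, fp: int, fn: int) -> float:
    """Micro-F1 from true positives, false positives, and false negatives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def select_threshold(evaluate: Callable[[float], float]) -> Tuple[float, float]:
    """Return the threshold with the highest validation score and that score."""
    best_threshold, best_score = 0.0, float("-inf")
    for threshold in candidate_thresholds():
        score = evaluate(threshold)
        if score > best_score:  # ties keep the smaller threshold
            best_threshold, best_score = threshold, score
    return best_threshold, best_score


# Toy validation curve peaking at 0.6; a real callback would run the
# collaborative pipeline on the validation set and return its micro-F1.
def toy_evaluate(threshold: float) -> float:
    return 1.0 - abs(threshold - 0.6)


grid = candidate_thresholds()
best_threshold, best_score = select_threshold(toy_evaluate)
print(grid)
print(best_threshold)
```

Because the grid contains only seven points, an exhaustive search per setting is inexpensive relative to the LLM calls made during each evaluation.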

References

- Das, S.S.S.; Katiyar, A.; Passonneau, R.J.; Zhang, R. CONTaiNER: Few-Shot Named Entity Recognition via Contrastive Learning. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 6338–6353.

- Huang, Y.; He, K.; Wang, Y.; Zhang, X.; Gong, T.; Mao, R.; Li, C. Copner: Contrastive learning with prompt guiding for few-shot named entity recognition. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; pp. 2515–2527.

- Zhang, C.; Wu, S.; Zhang, H.; Xu, T.; Gao, Y.; Hu, Y.; Chen, E. Notellm: A retrievable large language model for note recommendation. In Proceedings of the Companion Proceedings of the ACM Web Conference 2024, Singapore, 13–17 May 2024.

- Jeong, S.; Baek, J.; Cho, S.; Hwang, S.J.; Park, J. Adaptive-RAG: Learning to Adapt Retrieval-Augmented Large Language Models through Question Complexity. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Mexico City, Mexico, 16–21 June 2024; Duh, K., Gomez, H., Bethard, S., Eds.; pp. 7036–7050.

- Raina, V.; Gales, M. Question-Based Retrieval using Atomic Units for Enterprise RAG. In Proceedings of the Seventh Fact Extraction and VERification Workshop (FEVER), Miami, FL, USA, 15 November 2024; Schlichtkrull, M., Chen, Y., Whitehouse, C., Deng, Z., Akhtar, M., Aly, R., Guo, Z., Christodoulopoulos, C., Cocarascu, O., Mittal, A., et al., Eds.; pp. 219–233.

- Pan, L.; Albalak, A.; Wang, X.; Wang, W. Logic-LM: Empowering Large Language Models with Symbolic Solvers for Faithful Logical Reasoning. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; pp. 3806–3824.

- Xie, T.; Li, Q.; Zhang, J.; Zhang, Y.; Liu, Z.; Wang, H. Empirical Study of Zero-Shot NER with ChatGPT. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 7935–7956.

- Han, R.; Yang, C.; Peng, T.; Tiwari, P.; Wan, X.; Liu, L.; Wang, B. An Empirical Study on Information Extraction using Large Language Models. arXiv 2023, arXiv:2305.14450.

- Wei, X.; Cui, X.; Cheng, N.; Wang, X.; Zhang, X.; Huang, S.; Xie, P.; Xu, J.; Chen, Y.; Zhang, M.; et al. Chatie: Zero-shot information extraction via chatting with chatgpt. arXiv 2023, arXiv:2302.10205.

- Zhou, W.; Zhang, S.; Gu, Y.; Chen, M.; Poon, H. Universalner: Targeted distillation from large language models for open named entity recognition. arXiv 2023, arXiv:2308.03279.

- Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large language models are zero-shot reasoners. Adv. Neural Inf. Process. Syst. 2022, 35, 22199–22213.

- Wang, Y.; Zhao, Y. Metacognitive Prompting Improves Understanding in Large Language Models. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Mexico City, Mexico, 16–21 June 2024; pp. 1914–1926.

- Kadavath, S.; Conerly, T.; Askell, A.; Henighan, T.; Drain, D.; Perez, E.; Schiefer, N.; Hatfield-Dodds, Z.; DasSarma, N.; Tran-Johnson, E.; et al. Language models (mostly) know what they know. arXiv 2022, arXiv:2207.05221.

- Fritzler, A.; Logacheva, V.; Kretov, M. Few-shot classification in named entity recognition task. In Proceedings of the 34th ACM/SIGAPP Symposium on Applied Computing, Limassol, Cyprus, 8–12 April 2019; pp. 993–1000.

- Hou, Y.; Che, W.; Lai, Y.; Zhou, Z.; Liu, Y.; Liu, H.; Liu, T. Few-shot Slot Tagging with Collapsed Dependency Transfer and Label-enhanced Task-adaptive Projection Network. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1381–1393.

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised contrastive learning. Adv. Neural Inf. Process. Syst. 2020, 33, 18661–18673.

- Xiao, Y.; Yang, Q.; Zou, J.; Zhou, S. Lacner: Enhancing few-shot named entity recognition with label words and contrastive learning. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–8.

- Dai, X.; Adel, H. An Analysis of Simple Data Augmentation for Named Entity Recognition. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 3861–3867.

- Bartolini, I.; Moscato, V.; Postiglione, M.; Sperlì, G.; Vignali, A. COSINER: Context similarity data augmentation for named entity recognition. In International Conference on Similarity Search and Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 11–24.

- Lyu, Y.; Li, Z.; Niu, S.; Xiong, F.; Tang, B.; Wang, W.; Wu, H.; Liu, H.; Xu, T.; Chen, E. Crud-rag: A comprehensive chinese benchmark for retrieval-augmented generation of large language models. ACM Trans. Inf. Syst. 2025, 43, 1–32.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Wang, S.; Sun, X.; Li, X.; Ouyang, R.; Wu, F.; Zhang, T.; Li, J.; Wang, G. Gpt-ner: Named entity recognition via large language models. arXiv 2023, arXiv:2304.10428.

- Xu, C.; Xu, Y.; Wang, S.; Liu, Y.; Zhu, C.; McAuley, J. Small Models are Valuable Plug-ins for Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 283–294.

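- Ma, Y.; Cao, Y.; Hong, Y.; Sun, A. Large Language Model Is Not a Good Few-shot Information Extractor, but a Good Reranker for Hard Samples! In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 10572–10601.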

- Fu, J.; Huang, X.J.; Liu, P. SpanNER: Named Entity Re-/Recognition as Span Prediction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Virtual, 1–6 August 2021; pp. 7183–7195.

- Chen, W.; Zhao, L.; Luo, P.; Xu, T.; Zheng, Y.; Chen, E. Heproto: A hierarchical enhancing protonet based on multi-task learning for few-shot named entity recognition. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 296–305.

- Zhang, Z.; Zhao, Y.; Gao, H.; Hu, M. Linkner: Linking local named entity recognition models to large language models using uncertainty. In Proceedings of the ACM on Web Conference 2024, Virtual, 13–17 May 2024; pp. 4047–4058.

- Zhao, Z.; Wallace, E.; Feng, S.; Klein, D.; Singh, S. Calibrate before use: Improving few-shot performance of language models. In Proceedings of the 38th International Conference on Machine Learning: ICML 2021, Virtual, 18–24 July 2021; pp. 12697–12706.

- Ding, N.; Xu, G.; Chen, Y.; Wang, X.; Han, X.; Xie, P.; Zheng, H.; Liu, Z. Few-NERD: A Few-shot Named Entity Recognition Dataset. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Virtual, 1–6 August 2021; pp. 3198–3213.

- Pradhan, S.; Moschitti, A.; Xue, N.; Ng, H.T.; Björkelund, A.; Uryupina, O.; Zhang, Y.; Zhong, Z. Towards robust linguistic analysis using ontonotes. In Proceedings of the Seventeenth Conference on Computational Natural Language Learning, Sofia, Bulgaria, 8–9 August 2013; pp. 143–152.

- Sang, E.T.K.; De Meulder, F. Introduction to the CoNLL-2003 Shared Task: Language-Independent Named Entity Recognition. In Proceedings of the Seventh Conference on Natural Language Learning at HLT-NAACL 2003, Edmonton, Canada, 31 May–1 June 2003; pp. 142–147.

- Derczynski, L.; Nichols, E.; Van Erp, M.; Limsopatham, N. Results of the WNUT2017 shared task on novel and emerging entity recognition. In Proceedings of the 3rd Workshop on Noisy User-Generated Text, Copenhagen, Denmark, 7 September 2017; pp. 140–147.

- Zeldes, A. The GUM corpus: Creating multilayer resources in the classroom. Lang. Resour. Eval. 2017, 51, 581–612.

- Gal, Y.; Ghahramani, Z. Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the 33rd International Conference on Machine Learning: ICML 2016, New York City, NY, USA, 19–24 June 2016; pp. 1050–1059.

- Zhang, Z.; Hu, M.; Zhao, S.; Huang, M.; Wang, H.; Liu, L.; Zhang, Z.; Liu, Z.; Wu, B. E-NER: Evidential Deep Learning for Trustworthy Named Entity Recognition. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2023, Toronto, ON, Canada, 9–14 July 2023; pp. 1619–1634.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).