Abstract

In recent years, the safety of vulnerable road users (VRUs), such as pedestrians, cyclists, and micro-mobility users, has become an increasingly significant concern in urban transportation systems worldwide. Reliable and accurate detection of VRUs is essential for effective safety protection. This survey explores the techniques and methodologies used to detect VRUs, ranging from conventional methods to state-of-the-art (SOTA) approaches, with a primary focus on infrastructure-based detection. This study synthesizes findings from recent research papers and technical reports, emphasizing sensor modalities such as cameras, LiDAR, and RADAR. Furthermore, the survey examines benchmark datasets used to train and evaluate VRU detection models. Alongside innovative detection models and sufficient datasets, key challenges and emerging trends in algorithm development and dataset collection are also discussed. This comprehensive overview aims to provide insights into current advancements and inform the development of robust and reliable roadside detection systems to enhance the safety and efficiency of VRUs in modern transportation systems.

1. Introduction

1.1. Motivation

The world faces critical challenges in safeguarding vulnerable road users (VRUs), including pedestrians, cyclists, and micro-mobility users, as evidenced by concerning statistics. In the US, 2021 National Highway Traffic Safety Administration (NHTSA) data showed that pedestrian fatalities rose by 13% and bicyclist fatalities by 5% [1]. Taking California as an example, in 2020 alone the state recorded 1013 pedestrian fatalities and 136 cyclist fatalities, according to data from the California Office of Traffic Safety (OTS) [2]. For the same period, the California Highway Patrol (CHP) reported that 1043 pedestrians and 153 bicyclists were killed [3]. In 2021, pedestrian fatalities rose again, increasing by 9.4% from 1013 in 2020 to 1108 [2]. These figures underscore the urgent need to address the factors contributing to road user vulnerability, such as distracted driving and unsafe interactions at intersections.

According to the Federal Highway Administration (FHWA) definition, a VRU can be a pedestrian, a bicyclist, an e-cyclist, a person using another conveyance such as a scooter or skateboard, or a highway worker on foot in a work zone; motorcyclists are not included [1]. Protecting such small and active road users in a complex traffic system is a major challenge. With advances in computer vision, increasingly capable detection methods have been proposed over the past decades, using either 2D or 3D object detection. From the perspective of traffic management departments, the increasing availability of cost-effective and highly accurate sensors in recent years presents an opportunity to enhance real-time detection and protection of vulnerable road users, in addition to the sensors’ traditional use for monitoring traffic flow. Roadside sensors are usually mounted on poles at intersections and mid-block locations with fixed positions and angles, which differs from the operating conditions of onboard sensors. With well-developed sensors and corresponding detection models, VRUs can be detected accurately, which brings the following benefits: (1) VRU safety can be assured as much as possible; (2) drivers can be made aware of the situation by receiving warning messages in advance if the vehicle or smartphone is connected to the roadside unit (RSU); and (3) the intersection can be made smarter by optimizing the priority and phase duration for different road users, especially VRUs.

Roadside detection systems have made significant strides in practical applications to safeguard Vulnerable Road Users (VRUs). Key examples include the Intelligent Roadside Unit (IRSU) developed by the University of Sydney and Cohda Wireless, which integrates camera and LiDAR sensors for precise VRU detection [4], and Commsignia’s V2X units [5], which combine cameras, LiDAR, and RADAR to enhance traffic services and VRU protection. Ouster’s BlueCity solution [6], implemented in Chattanooga, utilizes LiDAR-based traffic management to optimize signal timings and improve safety by processing real-time data [7]. These systems showcase the effectiveness of roadside detection technologies in improving VRU safety and traffic management. Additionally, the National Highway Traffic Safety Administration (NHTSA) is advancing connected vehicle systems, underscoring the continued importance of such technologies in creating safer roadways for VRUs, reducing accidents, and optimizing traffic management globally.

Unlike previous surveys that focus solely on vehicle or pedestrian detection, this paper provides a comprehensive review of VRU detection models and datasets from a roadside perspective, which is an area that has not been systematically explored in detail. This survey will explicitly answer the following questions: (1) What sensors should be used for roadside VRU detection? (2) What models should be used for each type of sensor? (3) How does it work to fuse multiple sensors? (4) Which dataset is suitable for a specific use case? Additionally, we highlight emerging challenges and opportunities, such as refining the definition of VRU subclasses and addressing the limitations of current datasets. By systematically analyzing roadside VRU detection from multiple perspectives—including sensors, models, and datasets—this work serves as a reference for researchers and practitioners developing advanced roadside safety systems.

1.2. Organization

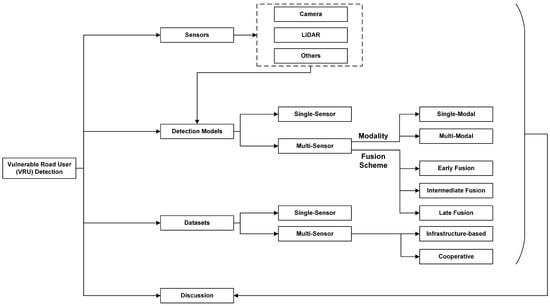

The rest of this paper is organized as follows: sensors, detection models, datasets, and discussions are introduced in turn. Section 2 lists the roadside sensors that have been used for traffic surveillance or object detection. Section 3 introduces detection approaches categorized by sensor node multiplicity and sensor modality, as well as sensor fusion methods. Given the lack of infrastructure-based VRU detection models and the insufficiency of real-world data collected from roadside sensors, this paper includes VRU detection models developed using either roadside or onboard data. Section 4 discusses infrastructure-based datasets as well as vehicle–infrastructure cooperative datasets, which include data from both roadside and onboard sources. Section 5 discusses the challenges and opportunities in detail, which may guide algorithm development and data collection. Section 6 concludes this review. The structure of this paper is depicted in Figure 1.

Figure 1. Organization of this review.

2. Typical Roadside Sensors

To develop a holistic understanding of the overall effectiveness of various sensors employed for VRU perception in transportation systems, Table 1 offers a condensed overview of those commonly utilized in traffic monitoring at intersections. Each type of sensor used alone possesses distinct capabilities and advantages/disadvantages relative to different scenarios and applications. For practical applications, cost and durability are also emphasized in the table. In the following content, camera will be used to indicate a monocular camera.

Table 1. Detection Sensors.

The commonly used infrastructure-based sensors are summarized in Table 1, with each offering distinct advantages. Cameras provide rich 2D information at a relatively low cost, making them the most widely used for roadside sensing. LiDAR, known for its precise 3D depth information and stability across various weather conditions, has gained traction in recent years. RADAR, with its ability to detect objects at long range and measure speed accurately, is often used in traffic monitoring but is less common for detailed VRU detection due to its lower spatial resolution.

Compared to previous studies, which primarily focus on onboard sensors for VRU detection, this paper emphasizes the selection of roadside sensors, where factors such as a wider field of view, environmental robustness, and long-term deployment feasibility are critical. While previous research has extensively explored camera-based roadside sensing, recent advancements in LiDAR technology have led to its increasing adoption, particularly in sensor fusion setups to enhance detection accuracy. Given these trends, this paper primarily focuses on cameras and LiDAR, as they are the most widely used and effective sensors for roadside VRU detection.

Once data have been collected by the different sensors under real-time traffic conditions, the next processing stage is detection. The following section therefore reviews the literature on VRU detection models.

3. VRU Detection Models

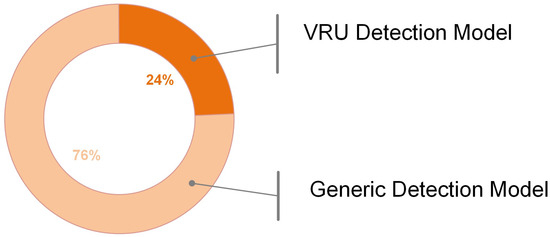

In the traffic domain, with the progress of autonomous driving, an increasing number of algorithms and datasets for vehicle detection have been proposed, and vehicle detection has become increasingly accurate. In comparison, VRU detection has received less attention from scholars. Some generic detection algorithms include pedestrians and bicyclists among the recognized object categories, but their recognition rates are generally low. For example, VoxelNet [8] achieves average precisions of 81.97%, 57.86%, and 67.17% for cars, pedestrians, and cyclists, respectively, on the easy KITTI [9] validation set. At the same time, research focusing on VRU detection models and datasets has not made systematic progress, which makes a summary of the relevant literature on VRU detection even more important. The detection-model papers we reviewed are all related to VRU detection. Some are generic detection models that include VRUs among the recognized object categories, and some focus specifically on VRU detection. The ratio of the reviewed detection models belonging to the two categories is shown in Figure 2. Specifically, the VRU detection models are developed for various explicit VRU types, including pedestrians, cyclists, skateboarders, e-scooter users, wheelchair users, stroller users, and even pedestrians using umbrellas, and this body of work is scattered rather than systematic [10,11,12,13,14,15,16]. In general, the VRU-specific detection models apply rather conventional or simple methods to a single VRU category, without building progressively on one another.

Figure 2. Ratio of detection models.

VRUs are generally small in size, have an inconsistent speed distribution, and are very dynamic. The inconsistent speed distribution means that similar-sized scooters, bicycles, and skateboards move rather fast, whereas pedestrians and wheelchairs move more slowly. The elderly, especially, move much more slowly. Dynamic changes mean that VRUs do not necessarily travel strictly in prescribed lane areas or along smooth predictable trajectories; they may rush out of blind spots at any time, and children may run across pedestrian areas. These characteristics make VRUs vulnerable in open traffic environments, highlighting the importance of accurate detection and classification of VRU subclasses. Challenging scenarios, together with the limited number and variety of VRUs in general datasets, further increase the difficulty of developing detection models.

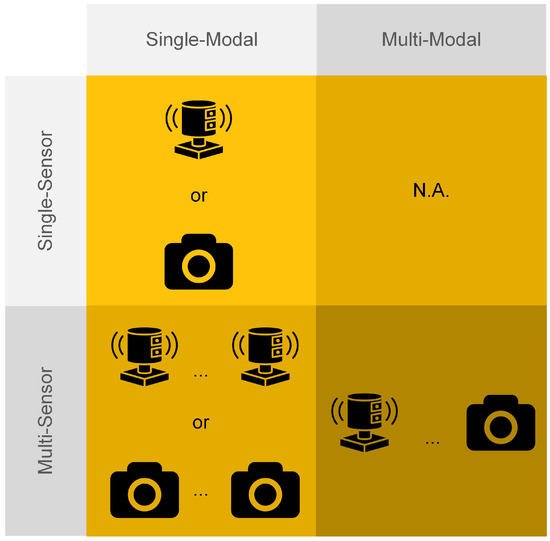

In the following introduction to VRU detection, existing studies are categorized into single-sensor and multi-sensor approaches. Multi-sensor studies are further classified based on sensor modality and fusion strategies, as shown in Figure 3. To provide a comprehensive overview, Table 2 summarizes VRU-related detection models, offering readers a clear understanding of the key methodologies and trends in the field.

Figure 3. Matrix of sensor and modality (this figure was designed using resources from Flaticon.com).

Table 2. Overview of generic deep learning (DL)-based vulnerable road user (VRU) detection methods.

3.1. Single-Sensor

Single-sensor detection methods use only one sensor for detection, such as a single camera or a single LiDAR.

3.1.1. Camera-Based Detection

The camera is the most commonly used sensor for detecting VRUs, and camera-based detection is therefore one of the earliest and most mature families of algorithms. Two decades ago, Viola and Jones [72] introduced a method using Haar-like features and the AdaBoost algorithm for real-time detection of human faces that surpassed all contemporaneous algorithms in both real-time performance and detection accuracy, without imposing any constraints. Dalal and Triggs [73] presented the Histogram of Oriented Gradients (HOG) feature descriptor, which significantly improved upon the scale-invariant feature transform and shape context. This detector has become a cornerstone in various object detection systems and has found extensive use in practical applications. For example, HOG was used for pedestrian detection, and the miss rate was under 0.01 at 10⁻⁶ False Positives Per Window (FPPW) on the original MIT pedestrian database [73,74]. The Deformable Part-based Model (DPM) is another classical 2D detector; it represents the SOTA among traditional methods and is commonly used in VRU detection [13]. In general, HOG and DPM are suitable for detecting non-rigid objects like pedestrians, with tolerance to pose and view variations.

With the development of deep learning (DL), convolutional neural networks (CNNs) [75] began to be widespread by extracting the features with data-driven methods instead of manual feature engineering. In addition, Girshick et al. [76] introduced Regions with CNN features (R-CNN) for object detection, making the detection in an image with multiple objects possible by generating region proposals. Concurrently, He et al. [77] developed Spatial Pyramid Pooling Networks (SPPNets), which achieved feature representation independent of image size and operated 20 times faster than R-CNN while maintaining accuracy. Later, many additional fast and accurate 2D image detection algorithms were proposed. Fast R-CNN [78] is over 200 times faster than R-CNN. Faster R-CNN [17] is the first end-to-end and near real-time DL detector, which is also the start of anchor-based detection models. Feature Pyramid Networks (FPNs) [20] utilized a pyramid-like structure to detect different-scale objects in the same image, which can be combined with Faster R-CNN. You Only Look Once (YOLO) [79] is the first one-stage DL detector with extremely fast speed. As of now, it has been updated to YOLO v9 [80]. Single Shot MultiBox Detector (SSD) [18] is also a one-stage detector with significantly improved accuracy, but is not suitable for small object detection. Another simple dense one-stage detector is RetinaNet [19], which addresses the detection of rare or small class objects with focal loss function. In addition, there are some anchor-free methods, such as CornerNet [25] and CenterNet [26]. In recent years, Transformers [81] incorporating attention mechanisms have emerged as the predominant trend in most object perception tasks. Vision Transformer (ViT) [27], DEtection TRansformer (DETR) [28], Swin Transformer [29], and Pyramid Vision Transformer (PVT) [30] are the milestone Transformer-based 2D detection models. 
Overall, 2D detection models are trending towards simpler structures with fewer stages and fewer manually defined components, while adopting new backbone networks.
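The focal loss mentioned above for RetinaNet can be illustrated with a few lines of code. The following is a minimal sketch of the binary form, FL(p_t) = -α_t (1 - p_t)^γ log(p_t), assuming the commonly cited settings α = 0.25 and γ = 2; the function names are illustrative and are not taken from any particular library.

```python
import math

def cross_entropy(p, y):
    """Binary cross-entropy for one prediction (p = predicted P(positive))."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)

def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one prediction.

    p: predicted probability of the positive class, in (0, 1)
    y: ground-truth label, 1 for positive and 0 for negative
    alpha, gamma: assumed default values; tune per dataset
    """
    p_t = p if y == 1 else 1.0 - p               # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha   # class-balancing weight
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

easy = focal_loss(0.95, 1)   # confident, correct prediction
hard = focal_loss(0.10, 1)   # badly misclassified positive, e.g. a small pedestrian
```

With these settings, the easy example contributes only a tiny fraction of its cross-entropy loss, so training is dominated by hard examples, which is why the loss is attractive for rare or small object classes.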

Regarding existing VRU detection research, researchers have mainly taken CNN-based 2D detectors, fine-tuned them, and trained them on class-specific datasets to detect different VRUs, not limited to pedestrians. Garcia-Venegas et al. [10] compared the performance of Faster R-CNN [17], SSD [18], and Region-based Fully Convolutional Network (R-FCN) [82] with different feature extractors in cyclist detection. SSD was chosen as the detector because of its higher Frames Per Second (FPS) for real-time implementation. Additionally, this work utilized the Kalman filter to enhance robustness against false negative detections and object occlusions. Another work used Faster R-CNN with transfer learning from diverse pre-trained architectures to detect pedestrians with infrastructure-based cameras [83], offering a way to address the occlusion challenge faced by onboard detection. Zhou et al. [84] refined the FPN with multi-scale feature fusion and attention mechanisms, which showed better performance on crowded pedestrian detection; however, computational efficiency remained a limitation. Mammeri et al. [85] explored the application of CNN-based algorithms for identifying VRUs like pedestrians, motorcyclists, and cyclists from the roadside. In their experiments, large-scale objects were detected better than small-scale objects. For example, with YOLOv3 [21] and Faster R-CNN, the mAP for small objects was about 5% lower than for large objects. Sharma et al. [86] proposed BGVP, a rare open-source vulnerable pedestrian dataset, and trained and tested five pre-trained models, including YOLOv4 [22], YOLOv5 [23], YOLOX [24], Faster R-CNN, and EfficientDet [87], of which YOLOv4 and YOLOX performed the best. In general, these methods are still limited by low computational efficiency and by the small size and heavy occlusion of the objects.
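To illustrate how a Kalman filter can bridge missed detections, the following is a minimal sketch of a one-dimensional constant-velocity filter applied to a bounding-box center coordinate. It is not the implementation used in [10]; the class name, the noise values `q` and `r`, and the initial covariance are assumptions chosen for illustration. When a frame has no detection (`None`), only the prediction step runs, so the track coasts on its estimated velocity.

```python
class ConstantVelocityKF:
    """1D constant-velocity Kalman filter; run one instance per image axis."""

    def __init__(self, pos, q=1.0, r=4.0):
        self.x = [pos, 0.0]                 # state: position, velocity
        self.P = [[r, 0.0], [0.0, 100.0]]   # covariance (velocity initially unknown)
        self.q = q                          # process noise (assumed value)
        self.r = r                          # measurement noise (assumed value)

    def predict(self, dt=1.0):
        p, v = self.x
        self.x = [p + dt * v, v]
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                # innovation covariance, H = [1, 0]
        k0, k1 = a / s, c / s         # Kalman gain
        y = z - self.x[0]             # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]

def track(measurements):
    """measurements: list of floats, with None marking a missed detection."""
    kf = ConstantVelocityKF(measurements[0])
    out = [measurements[0]]
    for z in measurements[1:]:
        kf.predict()
        if z is not None:
            kf.update(z)              # correct the prediction with the detection
        out.append(kf.x[0])           # otherwise keep the predicted position
    return out

# Cyclist center moving about 5 px per frame, occluded for two frames
centers = [0.0, 5.0, 10.0, 15.0, 20.0, None, None, 35.0]
smoothed = track(centers)
```

During the two occluded frames the filter extrapolates the position from the learned velocity, so the track is not lost even though the detector returns nothing.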

Similar to 2D detection, camera-based 3D detection methods also follow the trend from CNN-based methods to Transformer-based methods. FCOS3D [31], based on CNNs, generates 3D bounding boxes from monocular images by utilizing depth estimation. In contrast, DETR3D [32] and PETR [33] adopt Transformer-based models, combining features from multiple views to improve detection precision. In recent years, the Bird’s Eye View (BEV) feature space has been preferred because it contains rich spatial information within a global view. BEVDepth [34] improved depth estimation by adding depth supervision, a camera-aware module, and refinement. BEVHeight [35] predicted height to the ground instead of depth, enabling distance-agnostic optimization. The Calibration-free BEV Representation (CBR) [36] eliminated the need for calibration, but at the cost of a drop in AP3D|R40. Complementary-BEV (CoBEV) [37] combined depth and height with a two-stage feature selection and BEV distillation, showing superior performance.
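The geometric intuition behind predicting height instead of depth for a fixed roadside camera can be sketched as a ray-plane intersection. The example below is only a geometric illustration under simplifying assumptions (flat ground, known camera height, viewing ray already expressed in a ground-aligned frame with z pointing up); it is not the BEVHeight implementation, and the function name is hypothetical.

```python
import math

def point_from_height(cam_height, ray_dir, point_height):
    """Locate a 3D point from its viewing ray and its height above the ground.

    cam_height: camera height above flat ground in metres; camera at (0, 0, cam_height)
    ray_dir: (dx, dy, dz) viewing direction in a ground-aligned frame, z up
    point_height: predicted height of the observed point above the ground
    """
    dx, dy, dz = ray_dir
    if dz >= 0 or point_height >= cam_height:
        raise ValueError("ray must point downwards towards a point below the camera")
    # Solve cam_height + t * dz = point_height for the ray parameter t
    t = (point_height - cam_height) / dz
    return (t * dx, t * dy, point_height)

# Camera mounted 6 m high, looking 30 degrees below the horizon along x
pitch = math.radians(30.0)
ray = (math.cos(pitch), 0.0, -math.sin(pitch))

foot = point_from_height(6.0, ray, 0.0)   # pixel showing a point on the ground
head = point_from_height(6.0, ray, 1.7)   # same pixel showing a point 1.7 m high
```

For the same pixel, a point 1.7 m above the ground lies closer to the pole than a point on the ground, so a per-pixel height estimate combined with the fixed mounting geometry is enough to place the point in the BEV plane.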

Specifically, BEVHeight, CBR, and CoBEV were all developed based on roadside cameras. Thus, BEV-based 3D detection methods using cameras could become a new trend for VRU detection, as roadside cameras provide rich depth and height information, and many intersections are already equipped with them, reducing the need for upgrades. Regarding the variation in roadside cameras with different pitch angles and focal lengths, Jinrang et al. [88] proposed UniMono, which addresses this 3D detection challenge by predicting an independent normalized depth that is unaffected by these variations, making the algorithm adaptable to different camera settings.

In addition to the commonly detected VRUs, such as pedestrians and cyclists, some subclasses, like pedestrians using umbrellas, have also attracted attention from a small number of researchers. Shimbo et al. [13] proposed the Parts Selective DPM to detect pedestrians carrying umbrellas, aiming to address the umbrella occlusion issue in rainy weather. Huang et al. [89] employed a cascade decision tree to detect wheelchairs for a healthcare system. Gilroy et al. [11] focused on e-scooter detection in densely populated urban areas to avoid misclassifying e-scooters as pedestrians. Yang et al. [16] used YOLOv5 [23] to detect strollers. Sharma et al. [86] collected a VRU dataset and categorized VRUs into children, elderly individuals, and persons with disabilities. These rare subclasses of VRUs are often overlooked, yet they represent very vulnerable groups within the transportation system. The definition of VRU subclasses and corresponding detection methods remains insufficient. An explicit definition of VRU subclasses would greatly benefit research in this domain.

3.1.2. LiDAR-Based Detection

LiDAR is a remote sensing technique employing pulsed laser light to measure distances at various angles relative to the sensor (i.e., spherical coordinates of detections in the LiDAR frame of reference). LiDAR can offer detailed 3D point cloud data to accurately determine the 3D positions of traffic objects [90]. The detection methods proposed using LiDAR could be categorized into conventional methods and deep learning methods.

Conventional methods often use statistical techniques to process the point cloud data. In the early stage, researchers used manually selected point cloud features as input to classification models, which are non-deep-learning methods. Limited by the price and synchronization issues of using multiple LiDAR sensors, many researchers started with a single LiDAR to detect road users at the intersection. A skateboarder detection method using a 16-channel LiDAR was proposed by Wu et al. [15]. They utilized 3D Density Statistic Filtering (3D-DSF) [91] to filter the background, and Density-Based Spatial Clustering of Applications with Noise (DBSCAN) [92] to cluster the points. In a two-step classification method, the clustered object is first classified as a vehicle or non-vehicle object. If it is a non-vehicle object, the second step uses the object’s dynamic features, including the average speed along its historical trajectory and the standard deviation of the speed, to classify it as a skateboarder, cyclist, or pedestrian. Because skateboarders and cyclists move at similar speeds, these two classes may be confused by this method. Classical machine learning techniques such as K-Nearest Neighbors (KNN) [93], Support Vector Machine (SVM) [94], Random Forest (RF) [95], and Naïve Bayes (NB) [96] were compared in the two-step classification tasks, and RF performed best in both steps on the authors’ own dataset. To further optimize pedestrian detection using the same LiDAR, researchers developed efficient detection methods adapted to different distances [97]. The detection area was divided into three concentric sub-areas according to the distance to the LiDAR, and DBSCAN was deployed with different radii and minimum numbers of points in the different sub-areas. Taking the number of points in a cluster, the 2D distance to the LiDAR, and the direction of the clustered points’ distribution as object features, a Back Propagation Artificial Neural Network (BPANN) [98] was used to classify vehicles and pedestrians. Based on previous research, Song et al. [99] used 3D-DSF and DBSCAN to filter the background and cluster the points, respectively. Then, the overall performance of SVM, RF, BPANN, and Probabilistic Neural Network (PNN) [100] was compared, using measurements from a 16-channel LiDAR as inputs. SVM performed best, benefiting from the better nonlinear regression ability of its Gaussian kernel. In general, conventional methods are limited in scene adaptability; different methods with different hand-crafted features may suit different data.
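The distance-dependent clustering described above can be sketched as follows: points are split into concentric sub-areas around the LiDAR, and a plain DBSCAN is run in each sub-area with its own radius and minimum number of points. This is a simplified illustration on 2D points after background filtering; the ring boundaries and parameter values in `RINGS` are hypothetical and are not the values used in [97].

```python
import math

def dbscan(points, eps, min_pts):
    """Plain DBSCAN on 2D points; returns one label per point (-1 = noise)."""
    labels = [None] * len(points)

    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = neighbors(i)
        if len(nbrs) < min_pts:
            labels[i] = -1                   # provisional noise, may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster          # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nj = neighbors(j)
            if len(nj) >= min_pts:           # j is a core point: keep expanding
                queue.extend(nj)
    return labels

# (outer radius in m, eps in m, min_pts): illustrative values only
RINGS = [(15.0, 0.5, 8), (30.0, 0.9, 5), (float("inf"), 1.5, 3)]

def cluster_by_range(points):
    """Run DBSCAN separately in each concentric sub-area; LiDAR at the origin."""
    results, inner = [], 0.0
    for outer, eps, min_pts in RINGS:
        ring_pts = [p for p in points if inner <= math.hypot(*p) < outer]
        results.append((ring_pts, dbscan(ring_pts, eps, min_pts)))
        inner = outer
    return results

near = [(5.0 + 0.05 * i, 0.0) for i in range(10)]   # dense returns close to the sensor
far = [(40.0, 0.0), (41.0, 0.0), (42.0, 0.0)]       # sparse returns from a distant object
rings = cluster_by_range(near + far)
```

The distant object produces only three widely spaced points, which the near-range parameters would discard as noise; the looser far-range parameters keep it as a cluster, which is the motivation for adapting the parameters to distance.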

With the rapid advancement of deep learning, some researchers have begun leveraging it for 3D VRU detection due to its strong regression and classification capabilities, enabling the prediction of bounding boxes and object classes through feature learning. From the perspective of generic 3D object detection, the detection models can be divided into front view (FV)-based, bird’s eye view (BEV)-based, voxel-based, and point-based categories. The different categories represent different feature-extracting ways. FV-based and BEV-based methods aim to project the point clouds to the 2D plane and utilize a rather mature 2D detection method to detect the 3D objects, such as VeloFCN [38] and PIXOR [39]. In recent years, voxel-based and point-based methods have become the mainstream because of the richer information encoded. Voxel-based methods divide the 3D space into a structured grid of voxels and use CNNs to extract features, as seen in VoxelNet [8] and SECOND [40]. A variant, pillar-based methods, segment point clouds into pillars by extending voxel boundaries in the z-direction, as demonstrated in PointPillars [41]. Point-based methods directly process raw, unstructured 3D point clouds, using networks like PointNet [101] or PointRCNN [44] to capture fine-grained local and global features. For VRU detection, different feature-extracting categories have their characteristics, which are analyzed in Table 3 to show their pros and cons for VRU detection.
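The difference between voxel-based and pillar-based partitioning can be shown with a short sketch. The code below only groups points by grid cell, which is the first step before any learned feature encoder; the cell sizes and function names are assumptions for illustration and do not correspond to the settings of VoxelNet, SECOND, or PointPillars.

```python
import math
from collections import defaultdict

def voxelize(points, cell=0.5, z_cell=0.5):
    """Group (x, y, z) points by 3D grid cell."""
    voxels = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / z_cell))
        voxels[key].append((x, y, z))
    return dict(voxels)

def pillarize(points, cell=0.5):
    """Group (x, y, z) points by x-y grid cell only; each cell spans the full z range."""
    pillars = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        pillars[key].append((x, y, z))
    return dict(pillars)

# Two returns from a pedestrian (foot and head) and one from a nearby curb
cloud = [(0.1, 0.1, 0.2), (0.2, 0.3, 1.6), (1.2, 0.1, 0.3)]
voxels = voxelize(cloud)     # foot and head fall into different voxels
pillars = pillarize(cloud)   # foot and head share one pillar
```

Because a pillar keeps all points of a standing pedestrian in one cell, the subsequent network can operate on a 2D grid, whereas voxels preserve the vertical structure at the cost of a 3D grid.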

Table 3. Comparison of different LiDAR detection methods by category.

Specifically, in DL-based roadside VRU detection methods using a single LiDAR sensor, researchers have primarily enhanced conventional approaches by replacing one of the modules with a deep learning model, while maintaining the original pipeline. In the early stages, researchers simply replaced the final classification step with DL and attempted to transform the data and features from 3D to 2D. For example, in [103], after background filtering, clustering, and feature extraction, a lightweight Visual Geometry Group Network (VGGNet) was used to classify objects with hand-crafted features as inputs.

With the VGGNet and a rich set of features, the model outperformed the Random Forest (RF) by 14.4% and 11.9% in detecting pedestrians and cyclists, respectively, and surpassed the Support Vector Machine (SVM) by 10.4% and 14.3% in the same categories. Similarly, Zhang et al. [104] proposed a Global Context Network (GCNet) prototype, consisting of three stages: gridding, clustering, and classification. In the gridding stage, the LiDAR point clouds were transformed into a grid format in a plane, enabling the use of a CNN for classifying clustered objects, much like image classification in the 2D plane.

Recently, researchers have shifted towards using end-to-end deep learning networks for roadside VRU detection with LiDAR, replacing traditional clustering, feature extraction, and classification steps. Some studies focused on adapting mature models developed for onboard applications to roadside scenarios. Blanch et al. [105] analyzed key milestones of LiDAR-based detection for onboard systems and compared their performance in roadside surveillance, including SECOND [40], CenterPoint [42] with voxel encoding, CenterPoint with pillar encoding, and PillarNet [43]. Compared to anchor-based methods, center-based methods showed better performance with lower computational demands. Among the methods tested, CenterPoint using voxel representation with specific hyperparameters (distinct from onboard setups) achieved the best performance for pedestrian detection in video surveillance scenarios. Meanwhile, fewer researchers have focused on developing dedicated roadside 3D object detection models, including for VRU objects. Taking the Feature Enhancement Network (FecNet) as an example, FecNet [102] is a pillar-based feature enhancement cascade network designed for roadside object detection with LiDAR. Compared to conventional 3D detection methods, FecNet emphasizes the importance of foreground features with a foreground attention branch to reduce the similarity between objects and the background. Furthermore, multi-level feature fusion strengthens the model’s ability to detect objects at different scales, which is critical for roadside sensing. Based on their dataset, FecNet achieved a mean Average Precision (mAP) of 86.32%, and demonstrated an excellent trade-off between accuracy and efficiency with the smallest model size, outperforming models like PointPillars, SECOND, PointRCNN [44], PV-RCNN [45], PV-RCNN++ [46], and PillarNet. Shi et al. 
[106] combined voxel-based encoding, center-based proposals, and a Transformer-based detector in an innovative approach for roadside sensing purposes. Tested on the DAIR-V2X-I [107] roadside detection dataset, their method outperformed all other models in cyclist detection, including PointPillars, SECOND, Object DGCNN [108], and FSD [109]. In general, models specifically developed for roadside detection using LiDAR remain limited, and there is insufficient focus on VRU detection.

3.2. Multi-Sensor

Unlike single-sensor techniques, multi-sensor techniques use several sensors for detection at the same time, which is usually called sensor fusion. In this paper, we classify sensor fusion methods in two ways. One is by modality, which distinguishes single-modal from multi-modal fusion according to the types of sensors being fused. The other is by fusion scheme, including early fusion, intermediate fusion, and late fusion.

3.2.1. Classification by Modality

With the development of the perception research domain, multiple sensors have been utilized to detect the VRUs in traffic systems. In the early stage, some researchers utilized the combination of three low-cost sensors, i.e., Doppler microwave RADAR sensors, passive infrared (PIR), and geomagnetic sensors at the roadside to perceive if there is a pedestrian or vehicle passing [14]. With the cost of sensors going down, increasing numbers of advanced accurate sensors are being utilized at intersections. Different sensors have different advantages in detecting VRUs, which is explained in Table 1. Cameras can provide texture and color, but their detection results are significantly affected by the weather and illumination. RADAR is suitable for long-range detection and operates effectively in all weather conditions but has low resolution. LiDARs can provide depth information under various environmental conditions but with a lower density of detail than camera imagery. Infrared cameras can be effectively used to detect VRUs but can be affected by temperature. Thus, multiple sensors should be combined to detect objects cooperatively, which will fully tap the potentials of different sensors. The detection methods based on sensor modality can be classified into single-modal and multi-modal. For single-modal methods, we focus on the camera-only and the LiDAR-only methods, while for multi-modal approaches, combining the camera with LiDAR or combining the camera with RADAR are prevalent setups.

- Single-Modal

  Camera only: Multiple cameras can provide a wider field of view (FOV) and more accurate results in VRU detection, particularly in addressing issues related to illumination and occlusion. Inspired by research in the multiple-camera multi-object tracking (MOT) domain [110], cameras from different directions can be fused to detect and track objects. In this approach, objects are first detected in each camera view, and then their movement is tracked across multiple cameras, which constitutes the fusion in the temporal aspect. This paper, however, focuses only on the detection aspect, meaning that the primary goal of using multiple cameras from different directions is to solve issues of illumination and occlusion to ensure VRUs are detected, rather than performing re-identification and tracking. In this context, detections from different cameras are used to complement one another. Moreover, camera fusion can enhance the detection performance. For example, in InfraDet3D [111], a fusion method for the 3D detection results from two roadside cameras was proposed as one of the baseline approaches. In this method, the detections from two cameras are transformed to the same reference frame and matched within a threshold distance. The merging process combines matched detections by choosing the central position and yaw vector of the identified object from the nearest sensor. The dimensions of the merged detections are determined by averaging the measurements from both detectors. In general, camera-only fusion with a post-processing technique is designed to complement the detection results from different cameras and improve the detection accuracy of objects detected simultaneously, thus enhancing the safety of VRUs with greater confidence.

  In recent years, deep learning-based feature fusion methods for multi-view 3D object detection, such as DETR3D [32] and PETR [33], have been proposed using onboard-collected images. However, their potential performance in roadside detection remains underexplored.

  LiDAR only: Multiple LiDARs can improve detection accuracy by registering data from different viewpoints to obtain a higher-quality point cloud or by combining detections through late fusion. Meissner et al. [112] established a real-time pedestrian detection network of eight multilayer laser scanners in the KoPER project [113]. The collected data from different sensors are simply registered together. A real-time adaptive foreground extractor was then proposed using the Mixture of Gaussians (MoG) [114], and an improved DBSCAN [112] was obtained by projecting the points to a 2D plane and dividing the clustering area into cells to reduce computation. Because DBSCAN relies on a fixed neighborhood radius and a required minimum number of points per cluster, it is very challenging to separate two pedestrians who are very close to each other. In InfraDet3D [111], different fusion methods of two LiDARs were proposed as the baseline methods, including early fusion and late fusion. Early fusion in a LiDAR-only setup merges the point cloud scans generated by the various LiDAR sensors at time step t into a unified, dense point cloud, using Fast Global Registration [115] for the initial transformation and point-to-point Iterative Closest Point (ICP) [116] for the refinement. A late fusion baseline for LiDAR was also discussed in the paper, which reused the late fusion approach described for camera-only fusion. In this work, pedestrians and cyclists were considered in the detection but not as the main objects. Although not much attention is given to VRUs, the two fusion methods proposed are straightforward and computationally cheap, which offers some inspiration for roadside real-time VRU sensing. Additionally, feature-level fusion using multiple LiDARs has been explored. For example, PillarGrid [117] extracts features from two different LiDARs in a pillar-wise manner and fuses them in a grid-wise fashion to enhance detection performance and extend the detection range.

- Multi-Modal

  Regarding multi-modal sensor fusion, various sensor combinations can be used for detection, including but not limited to cameras and LiDAR or cameras and RADAR. This paper primarily focuses on these two common combinations for VRU detection, with other multi-modal fusion methods briefly introduced.

  Camera and LiDAR: The fusion of camera and LiDAR sensors is one of the most common multi-modal sensor combinations. Cameras provide rich features that are beneficial for classification and detection during the daytime, while LiDAR can detect objects at night and offer more accurate point cloud data without distortion. In recent years, many promising fusion methods using cameras and LiDAR have been proposed. Generic fusion methods are listed in Table 4, with fusion strategies categorized based on pairs of sensor-derived feature types. Each category combines specific types of features from LiDAR and camera sensors; for example, FV/BEV and feature map represent features derived from LiDAR’s FV/BEV and camera’s feature map.

  Table 4. Comparison of different generic camera and LiDAR fusion methods by category.

  In roadside sensor fusion applications, methods have evolved from assigning distinct functions to individual sensors for detection to integrating detections or features from multiple sensors. Shan et al. [4] designed an infrastructure-based roadside unit (IRSU) with two cameras, one LiDAR, and a Cohda Wireless RSU to detect pedestrians in the blind spots of connected and autonomous vehicles (CAVs), providing the CAVs with collision avoidance data. In this setup, the cameras were used for object detection and classification, while LiDAR was used to generate 3D dimensions and orientations. InfraDet3D [111] proposed a camera–LiDAR fusion method where LiDAR detection results were first transformed into the camera coordinate system, and then the detections from both sensors were matched based on the distance between the center points of the detected objects. In this method, the camera’s detection served as a supplement to LiDAR detection, employing a late fusion strategy. In CoopDet3D [118], Zimmer et al. extracted features with roadside data from a camera and LiDAR. The features were transformed into a bird’s eye view (BEV) representation. This method outperformed InfraDet3D on the TUMTraf dataset. VBRFusion [119] was proposed for VRU detection at medium to long ranges, based on the DAIR-V2X dataset [107]. VBRFusion enhanced the encoding of point and image feature mapping, as well as the fusion of voxel features, to generate high-dimensional voxel representations. In general, roadside fusion methods are gradually shifting from conventional function fusion approaches to feature fusion methods, aligning with generic LiDAR and camera sensor fusion techniques.

  Camera and RADAR: The combination of a camera and RADAR as a sensing unit is a cost-effective solution for detection. Similar to LiDAR-based detection models, this category includes both conventional methods and deep learning-based methods for fusing data from cameras and RADAR. Conventional methods often employ weighted averaging and clustering techniques, while deep learning approaches leverage neural networks to improve detection accuracy and robustness [120]. Bai et al. [121] developed a fusion method that capitalizes on the strengths of both cameras and RADAR to detect road users, including VRUs. RADAR detection results provide the distance and velocity of the target by measuring the time delay and phase shift of the echo signal. The camera detects the target’s angle and classification using YOLO V4 [22]. After calibrating the coordinates of both sensors, the results from the two sensors are fused using the nearest-neighbor correlation method. Similarly, in RODNet, RGB images are used to classify and localize objects, while transformed radio frequency images are utilized only for object localization [122]. Liu et al. [123] proposed methods for both single-scan and multi-scan fusion using Dempster’s combination rule to enhance evidence fusion performance. They also employed pre-calibration and the nearest-neighbor correlation method to associate detections from the camera and RADAR. Furthermore, the authors fused the detection results from multiple scans by associating detections from adjacent scans, which improved overall performance. With camera and RADAR fusion, objects in challenging scenarios, particularly those captured under poor lighting conditions, can be detected more accurately.

  Regarding the DL-based methods, CenterFusion [67] associates RADAR point clouds with image detections, concatenates features from both the camera and RADAR, and recalculates the depth, rotation, and velocity of the objects. CRAFT [68] introduced the Soft-Polar Association and Spatio-Contextual Fusion Transformer to facilitate information sharing between the camera and RADAR. In recent years, inspired by BEV-based 3D detection methods with cameras, BEV-based feature maps have also been generated in camera and RADAR fusion, leveraging RADAR’s precise occupancy information. RCBEV4d [69] extracted spatial–temporal features from RADAR and applied point fusion and ROI fusion with transformed BEV image features. CRN [70] achieved comparable performance to LiDAR detectors on the nuScenes dataset, outperforming them at long distances. RCBEVDet [71] introduced a dual-stream RADAR backbone to capture both local RADAR features and global information, along with a RADAR Cross-Section (RCS)-aware BEV encoder that scatters point features across multiple BEV pixels rather than just one. This method outperformed previous approaches on the nuScenes dataset. In general, RADAR serves as a complementary information source to camera-based 3D detection methods, providing more accurate depth information, especially in adverse weather conditions.

  Others: Another camera combination involves the fusion of RGB cameras and infrared (IR) cameras, which can significantly improve detection performance, particularly under poor visibility conditions such as adverse weather or nighttime [124,125]. Pei et al. [126] also proposed a multi-spectral pedestrian detector that combines visible-optical (VIS) and infrared (IR) images. This method achieves state-of-the-art (SOTA) performance on the KAIST dataset [127]. When the RGB and infrared cameras share the same field of view (FOV), an image fusion technique can be employed to create a single combined image, making it easier to distinguish VRUs from the background [128].

  MMPedestron [129] is a novel multi-modal model for pedestrian detection that consists of a unified multi-modal encoder and a detection head. It can fuse data from five modalities (RGB, IR, Depth, LiDAR, and Event) and is extendable to include additional modalities. The data sources can also span multiple domains, such as traffic surveillance, autonomous driving, and robotics. Trained on a unified dataset composed of five public datasets with different modalities, MMPedestron surpasses the existing SOTA models for each individual dataset. This demonstrates the potential of a unified generalist model for multi-sensor perception in pedestrian detection, outperforming sensor fusion models tailored to specific sensor modalities.
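
The object-level late fusion described for the InfraDet3D baselines (match detections from two sensors within a threshold distance, take position and yaw from the nearest sensor, and average the dimensions) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the `Detection` fields, the greedy nearest-neighbor matching, and the 1.0 m default threshold are assumptions made here for illustration, and all detections are assumed to be already expressed in a common reference frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """A 3D box in a shared reference frame (hypothetical field layout)."""
    x: float
    y: float
    yaw: float
    length: float
    width: float
    height: float
    sensor_dist: float  # distance from the detecting sensor to the object


def late_fuse(dets_a, dets_b, max_dist=1.0):
    """Greedily match two detection lists and merge the matched pairs.

    max_dist is an assumed matching threshold in meters.
    """
    fused = []
    unmatched_b = list(dets_b)
    for a in dets_a:
        # Find the closest still-unmatched detection from sensor B.
        best, best_d = None, max_dist
        for b in unmatched_b:
            d = math.hypot(a.x - b.x, a.y - b.y)
            if d <= best_d:
                best, best_d = b, d
        if best is None:
            fused.append(a)  # seen by sensor A only
            continue
        unmatched_b.remove(best)
        # Position and yaw come from the sensor nearest to the object.
        near = a if a.sensor_dist <= best.sensor_dist else best
        fused.append(Detection(
            x=near.x, y=near.y, yaw=near.yaw,
            # Dimensions are averaged over both detectors.
            length=(a.length + best.length) / 2,
            width=(a.width + best.width) / 2,
            height=(a.height + best.height) / 2,
            sensor_dist=near.sensor_dist,
        ))
    fused.extend(unmatched_b)  # seen by sensor B only
    return fused
```

Unmatched detections are kept rather than discarded, which reflects the complementary role of the second sensor: a pedestrian occluded in one view still appears in the fused output. A production system would likely replace the greedy loop with a globally optimal assignment.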

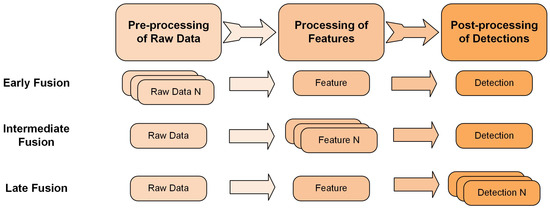
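
Dempster's combination rule, which Liu et al. [123] use for camera and RADAR evidence fusion, can be sketched in a few lines of Python. The sketch below shows only the generic rule, not the authors' pipeline: the two-class frame of discernment (`pedestrian`, `vehicle`) and the mass values are hypothetical. Each source assigns belief mass to sets of hypotheses; masses of intersecting sets are multiplied and accumulated, and the result is renormalized by the non-conflicting mass.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses to its belief mass,
    and the masses of one function are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            combined[inter] = combined.get(inter, 0.0) + mass_b * mass_c
        else:
            # Mass assigned to contradictory hypotheses.
            conflict += mass_b * mass_c
    if conflict >= 1.0:
        raise ValueError("total conflict: sources cannot be combined")
    # Renormalize by the mass that is not in conflict.
    return {hyp: mass / (1.0 - conflict) for hyp, mass in combined.items()}


# Hypothetical evidence for one associated camera/RADAR detection.
PED = frozenset({"pedestrian"})
VEH = frozenset({"vehicle"})
ANY = PED | VEH  # "unknown": mass not committed to either class

camera = {PED: 0.7, VEH: 0.1, ANY: 0.2}
radar = {PED: 0.5, VEH: 0.2, ANY: 0.3}
fused = dempster_combine(camera, radar)
```

With these illustrative masses, both sources lean toward the pedestrian hypothesis, so the fused belief in `pedestrian` is higher than either source's belief alone, while the uncommitted mass shrinks.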

3.2.2. Classification by Fusion Scheme

Regarding the phase of sensor fusion [130], as depicted in Figure 4, a multi-sensor perception system can be categorized into three main classes: (1) Early fusion (EF) involves the fusion of raw data during the pre-processing phase. (2) Intermediate fusion (IF) entails the fusion of features during the feature extraction stage. (3) Late fusion (LF) involves the fusion of perception outcomes during the post-processing phase. Each fusion scheme has its own set of advantages and drawbacks. For instance, EF and IF typically require more computational resources and more complex model structures to efficiently extract features and achieve higher accuracy. In contrast, LF generally employs conventional rule-based methods to improve final performance with greater efficiency, making it suitable for real-time applications. Therefore, different scenarios may require different fusion schemes to meet specific objectives. Moreover, various fusion schemes may be applied simultaneously within a detection model to leverage the strengths of each approach.

Figure 4. The fusion scheme.

The following section highlights some major studies classified by fusion scheme:

- Early Fusion

  Sharing the raw sensor data with other sensors to extend the perceptual range or point cloud density (multiple LiDARs) and enhance detection accuracy is a logical approach. In line with this strategy, the raw data collected from various sensors is transformed into a unified coordinate system to facilitate subsequent processing [131]. However, this kind of strategy is inevitably sensitive to issues such as sensor calibration, data synchronization, and communication capacity, which are critical considerations in real-time implementations [132]. For example, with a limited communication bandwidth, it may be viable to transmit limited-resolution image data, but it might not be practical to transmit LiDAR point cloud data in real-time: a 64-beam Velodyne LiDAR operating at 10 Hz can produce approximately 20 MB of data per second [9].

- Intermediate Fusion

  The fundamental principle behind IF can be succinctly described as employing deeply extracted features for fusion at intermediate stages within the perception pipeline. IF relies on hidden features primarily derived from deep neural networks, which exhibit greater robustness compared to the raw sensor data utilized in early fusion. Moreover, feature-based fusion approaches typically employ a single detector to produce object perception outcomes, thereby eliminating the necessity of consolidating multiple proposals as required in LF. For example, MVX-Net utilizes the IF strategy to fuse features from 2D images and 3D points or voxels, with the fused features processed by VoxelNet [8]. Bai et al. also proposed PillarGrid to fuse the extracted features of two LiDARs to improve the detection accuracy [117].

- Late Fusion

  Late fusion also adopts a natural cooperative paradigm for perception, wherein perception outcomes are independently generated and subsequently fused. Unlike EF, although LF still requires a relative positioning for merging perception results, its robustness to calibration errors and synchronization issues is significantly improved. This is primarily because object-level fusion can be established based on spatial and temporal constraints. Rauch et al. [133] employed the Extended Kalman Filter (EKF) to coherently align the shared bounding box proposals, leveraging spatiotemporal constraints. Moreover, techniques such as Non-Maximum Suppression (NMS) [134] and other machine learning-driven proposal refining methods find extensive applications in LF methodologies for object perception [135].

Thus, different fusion schemes have their advantages and disadvantages. Early fusion and late fusion are both straightforward, but early fusion requires accurate calibration and sufficient communication bandwidth, and late fusion may result in limited detection accuracy. Intermediate fusion is a balanced and relatively well-performing method because of its low communication requirements and high detection accuracy.
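
To make the early-fusion trade-off concrete, the following Python sketch transforms point clouds from two sensors into a unified coordinate system, merges them, and estimates the raw data rate that would need to be transmitted. It is a simplified illustration under stated assumptions: the extrinsic calibration is reduced to a yaw rotation plus a translation, each point is assumed to be stored as four 32-bit floats (x, y, z, intensity, i.e., 16 bytes), and the figure of 120,000 points per scan is an assumed order of magnitude for a 64-beam sensor rather than a value taken from the cited works.

```python
import math


def transform_points(points, yaw, tx, ty, tz):
    """Rotate sensor-frame points about the z-axis and translate them
    into the shared frame. Each point is (x, y, z, intensity)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz, i)
            for x, y, z, i in points]


def early_fuse(clouds_with_extrinsics):
    """Merge several point clouds into one cloud in the shared frame.

    clouds_with_extrinsics: list of (points, (yaw, tx, ty, tz)) pairs,
    where the extrinsics are assumed to come from offline calibration.
    """
    merged = []
    for points, (yaw, tx, ty, tz) in clouds_with_extrinsics:
        merged.extend(transform_points(points, yaw, tx, ty, tz))
    return merged


def data_rate_mb_per_s(points_per_scan, scan_hz, bytes_per_point=16):
    """Raw point cloud data rate in MB/s (1 MB = 1e6 bytes)."""
    return points_per_scan * scan_hz * bytes_per_point / 1e6


# Assumed workload: about 120,000 points per scan at 10 Hz.
rate = data_rate_mb_per_s(120_000, 10)
```

Under these assumptions the estimate comes to roughly 19 MB/s per LiDAR, in line with the approximately 20 MB/s figure quoted above, and it scales linearly with the number of sensors whose raw data is shared. Any error in the calibrated `yaw` or translation propagates directly into the merged cloud, which is why early fusion is sensitive to calibration quality.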

4. Datasets

In the early stages of research, because of the lack of public infrastructure-based VRU datasets, some researchers evaluated their algorithms on existing vehicle-based datasets that include pedestrians or cyclists, since these datasets are well labeled and widely accepted. In [105], the authors listed some datasets developed for autonomous driving, such as KITTI [9], Waymo [136], and nuScenes [137], and analyzed which one is more suitable for LiDAR-assisted pedestrian detection for video surveillance. The nuScenes dataset was chosen because of its abundant annotations, such as bounding boxes, orientations, and velocities of pedestrians. It also has relatively low spatial resolution and high temporal resolution, which aligns with the requirements of roadside setups in real-world situations. By integrating continuous sweeps from nuScenes, the range of distances to the sensor can be adjusted and controlled for the specific detection task, e.g., VRU detection at intersections.

In this paper, we have reviewed the existing VRU detection datasets, including single-sensor datasets and multi-sensor datasets. Within single-sensor datasets, we include both the vehicle-based datasets and infrastructure-based datasets because of the limited amount of the latter. Within multi-sensor datasets, we further divide them into infrastructure-based datasets and vehicle–infrastructure cooperative datasets.

4.1. Single-Sensor Datasets

Single-sensor datasets are primarily based on cameras, and these datasets are listed in Table 5. The table only includes datasets that focus on pedestrian detection in traffic-related scenes, and some older datasets are excluded. In the object class attribute, VRU object classes are bolded, and the “Ignore Region” refers to detected humans that do not need to be matched, such as those truncated by image boundaries or depicted as fake humans on posters.

Table 5. Infrastructure-based single-sensor datasets.

There are several factors that single-sensor datasets should consider. The image resolution and the sufficiency of both images and annotations can directly impact detection performance. The sufficiency of VRU classes is particularly important for in-depth research on VRU detection, such as detecting children or wheelchair users, which is critical for protecting these specific VRU categories. The variety of scenes is essential to ensure the successful detection of VRUs under different seasons, weather conditions, and lighting, especially in safety-critical scenes. Detailed annotations for occlusion and truncation can help researchers develop more robust detectors. Lastly, orientation labels are valuable as they allow researchers to predict both the bounding box and the orientation simultaneously, both of which are crucial for object tracking and state prediction of VRUs.

4.2. Multi-Sensor Datasets

Regarding multi-sensor datasets, both infrastructure-based and cooperative datasets will be discussed, as both types include infrastructure components, and the shared information from the vehicle side can help detect VRUs from the roadside.

In chronological order, the details of various infrastructure-based datasets are shown in Table 6. KoPER [113] is one of the earliest roadside datasets, collected using 14 eight-layer laser scanners and two monochrome Charge-Coupled Device (CCD) cameras. The sensors were mounted at a height of over 5 m and were tuned to reduce occlusion. The VRUs labeled in this dataset include pedestrians and cyclists. BAAI-VANJEE [144] is a dataset collected using a 32-line LiDAR and four cameras, mounted at 4.5 m, with partial synchronization of the data from different sensors. The VRUs in this dataset comprise pedestrians, bicycles, and tricycles. DAIR-V2X-I [107] is a roadside dataset collected across several intersections, where at least one pair of cameras and LiDAR sensors was installed at each location. The data from different sensors were fully synchronized, and the VRU objects in this dataset include cyclists, tricyclists, barrows, and pedestrians. Zimmer et al. proposed the TUMTraf Intersection Dataset, which includes pedestrians and bicycles as VRUs, along with safety-critical scenes involving complex driving maneuvers such as left/right turns, overtaking, and U-turns [118]. In LLVIP, visual and infrared cameras were used to collect pedestrian and cyclist data in low-light scenes [125]. In TUMTraf Event, a visual camera and an event-based camera were used to collect roadside detection data, especially for motion blur and low-light scenes [145]. Recently, the MMPD multi-modal dataset was released, which incorporates five public datasets with different fusion modalities and scenarios [129].

Table 6. Infrastructure-based multi-sensor datasets.

The cooperative datasets contain data from both vehicles and infrastructure, as summarized in Table 7. These datasets benefit roadside detection by sharing information from vehicles. A widely used dataset is Rope3D [146], which uses one LiDAR on a vehicle and several cameras on the roadside. Within Rope3D, various setups were explored by collecting data with different camera mounting heights, pitch angles, and focal lengths. Yu et al. [107] released the DAIR-V2X-C, which also provided cooperative tracking annotations. The TUMTraf V2X cooperative perception dataset [147] provided some corner cases such as tailgating, overtaking, U-turns, traffic violations, and occlusion scenarios; however, the duration of each case may be too short for training a robust model. An SOTA vehicle-to-everything (V2X) cooperative detection dataset is V2X-Real [148]. Based on the ego agent type, the authors propose four types of datasets: vehicle-centric, infrastructure-centric, vehicle-to-vehicle (V2V), and infrastructure-to-infrastructure (I2I), each tailored for specific research tasks. This dataset contains the most abundant frames and annotations and includes a scooter category not recorded by other datasets. Another highlight is that the number of pedestrians exceeds the number of vehicles, making it well suited for VRU detection.

Table 7. Cooperative multi-sensor datasets.

Additionally, the simulated dataset V2X-SIM [149] is worth noting, although it includes only one type of VRU: pedestrians. Simulated datasets are particularly useful for generating corner cases, such as accidents and occlusions involving VRUs, which are difficult and hazardous to capture in real-world settings. In conclusion, a comprehensive dataset that covers a wide range of VRU categories and includes diverse scenarios, including corner cases, is still lacking.

5. Discussion

5.1. Detection Models

5.1.1. Challenges

The following are challenges found in the detection models:

- Real-time Processing

  As GPU computing performance continues to advance, researchers are developing more complex models and exploring the fusion of diverse data across various modalities and scenarios. The goal is to train robust models with strong generalization capabilities that are not constrained by a single modality or scenario. However, in practical roadside assistance systems, deploying large-scale multi-modal models can be challenging due to limitations in computing performance and communication latency between sensors and the edge computer. Therefore, developing lightweight models that offer robust performance and high tolerance to variations is a significant research direction in roadside-assisted detection systems.

- Non-rigid Small 3D Object Detection

  Given the small size of VRUs, accurately detecting them in 3D is inherently more challenging due to the limited information collected by sensors. Relying on a single sensor may not provide sufficient data, making sensor fusion a more effective solution to address the missing features and enhance detection accuracy.

  Because VRUs are non-rigid and change posture (e.g., walking, running, sitting, and standing), they are more challenging to detect than rigid objects like vehicles. Compared with 2D detection, there is much less research focusing on this issue in 3D detection with LiDAR, which may result from the sparsity of points on VRUs and from the fact that LiDAR has mostly been applied to rigid object detection, such as for vehicles. However, with the advancement of LiDAR resolution and the ability to capture more detailed VRU gestures, this area presents promising opportunities for future research.

- Domain Generalization from Onboard to Roadside

  In real-world applications, many pre-trained models are directly used for practical purposes, such as roadside surveillance. However, most pre-trained detection models that use point clouds as input are primarily designed and trained for onboard applications. A key question arises: how adaptable are these pre-trained onboard detection models to roadside detection tasks? Typically, when a LiDAR-based detection model trained on onboard datasets is directly applied to roadside data, performance degradation often occurs. This issue falls under domain generalization, where the challenge is ensuring the model performs well despite domain differences between development and deployment data, with minimal additional investment.

5.1.2. Trends and Future Research

The following are potential future trends in related research:

- Low-cost Solution for Comparative Resilience to LiDAR

  An increasing number of studies are exploring multi-camera 3D detection combined with RADAR as an affordable alternative to LiDAR. RADAR provides robust performance under varying lighting conditions and is effective for depth measurement, while cameras deliver high resolution and rich semantic information. Additionally, RADAR demonstrates strong weather robustness, velocity estimation, and long-range detection, offering several advantages over LiDAR [68]. Although RADAR has limitations, such as sparsity, inaccurate measurements, and ambiguity, researchers have proposed RADAR noise filtering modules to enhance detection accuracy [150,151]. Notably, CRAFT [68] achieves a 41.1% mAP on the nuScenes test dataset, with 46.2% AP for pedestrians and 31.0% AP for bicycles. However, none of the existing methods have been specifically tailored for VRU detection. As a result, roadside VRU detection using camera and RADAR fusion remains largely unexplored, representing a promising area for future research.

- Unified Generalist Model

  Recently, some researchers have attempted to develop unified detection models that integrate multiple modalities and domains to enhance robustness across various settings. For example, MMPedestron [129] fused data from five modalities across domains, ranging from traffic surveillance to robotics. As a result, it outperforms existing state-of-the-art models on individual datasets. In this context, variations in roadside data, such as tilt angle, mounting height, and resolution, may not pose limitations for a unified model trained with diverse data.

- Tracking by Detection

  Recently, a new trend has been to combine the detection and tracking modules into an end-to-end method that solves detection and tracking at the same time. This helps address VRU occlusion issues while simplifying the training process and avoiding the complex data association process.

5.2. Datasets

5.2.1. Challenges

The following are challenges found in the related datasets:

- Unclear Definition of VRU Subclasses

  Existing datasets lack a variety of VRUs, and the VRU subclasses within these datasets are inconsistent, which increases the difficulty of developing detection models. As a result, a clear definition of VRU subclasses is missing, and some rare VRUs do not receive sufficient attention. To facilitate dataset collection, annotation, and the development of corresponding detection models, we propose a standardized classification for most VRU categories, building on previous classifications found in various datasets and the Intersection Safety Challenge hosted by the U.S. Department of Transportation [152]. The detailed VRU classifications are presented in Table 8.

- Insufficient Critical VRU Scenarios

  Most perception datasets focus on detecting main road users, such as vehicles, while often overlooking critical corner cases, such as high-risk interactions between vehicles and pedestrians [153]. This creates a strong need for real-world or synthetic datasets that adequately represent critical VRU scenarios. Although simulated datasets can generate challenging situations like accidents and occlusions, a comprehensive dataset covering diverse VRU categories and corner cases is still lacking.

5.2.2. Trends and Future Research

The following is a potential future trend in related research:

- Sensor Placement Testing: A quick and precise evaluation of roadside sensor placement before installation is crucial for effective data collection and monitoring. Placing sensors without proper evaluation can be time-consuming and may make it difficult to identify the optimal viewpoint. This is especially critical for VRU detection, where it is essential to select locations that cover sidewalks and crossings while minimizing occlusion. Therefore, evaluating how sensor placement affects both the field of view and the performance of baseline detection models is necessary to ensure optimal functionality. For instance, Cai et al. (2023) [154] conducted a simulation study comparing various LiDAR placements across multiple intersection scenarios. This approach enabled the evaluation of different placements alongside various detection algorithms, using real-world mirror scenarios for comparison. The study offers valuable insights into sensor placement testing, highlighting how small changes in sensor positioning can significantly affect VRU detection accuracy. Proper sensor placement is thus a key factor in optimizing detection performance and minimizing false negatives, making it an essential aspect of system design.
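As a rough illustration of pre-installation placement screening, the sketch below scores candidate mounting positions by the fraction of sampled sidewalk and crossing points that fall inside the sensor's range and horizontal field of view with an unobstructed line of sight. It is a simplified 2D geometric check, not the simulation pipeline of [154]; the function names, the circular occluder model, and all coordinates are assumptions made for the example.

```python
import math

def segment_hits_circle(p, q, center, radius):
    """Return True if the segment p->q passes within `radius` of `center`."""
    px, py = p
    qx, qy = q
    cx, cy = center
    dx, dy = qx - px, qy - py
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - cx, py - cy) <= radius
    # Project the circle center onto the segment and clamp to its endpoints.
    t = ((cx - px) * dx + (cy - py) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    nx, ny = px + t * dx, py + t * dy
    return math.hypot(nx - cx, ny - cy) <= radius

def coverage(sensor_xy, heading_deg, fov_deg, max_range, targets, occluders):
    """Fraction of target points that are in range, in the FOV, and unoccluded."""
    visible = 0
    for tx, ty in targets:
        dx, dy = tx - sensor_xy[0], ty - sensor_xy[1]
        if math.hypot(dx, dy) > max_range:
            continue
        bearing = math.degrees(math.atan2(dy, dx))
        # Smallest absolute angle between the bearing and the sensor heading.
        off_axis = abs((bearing - heading_deg + 180.0) % 360.0 - 180.0)
        if off_axis > fov_deg / 2.0:
            continue
        if any(segment_hits_circle(sensor_xy, (tx, ty), c, r) for c, r in occluders):
            continue
        visible += 1
    return visible / len(targets) if targets else 0.0

# Hypothetical scene: sampled crossing points and one pole-like occluder (meters).
targets = [(10.0, 0.0), (20.0, 0.0), (10.0, 20.0), (60.0, 0.0)]
occluders = [((15.0, 0.0), 1.0)]
# Hypothetical candidate placements: (position, heading in degrees).
candidates = {"pole_A": ((0.0, 0.0), 0.0), "pole_B": ((0.0, 5.0), 0.0)}

scores = {
    name: coverage(pos, heading, 90.0, 50.0, targets, occluders)
    for name, (pos, heading) in candidates.items()
}
best = max(scores, key=scores.get)
print(scores, best)
```

A geometric score of this kind only screens candidates cheaply; it does not replace running baseline detectors on simulated or recorded data from each placement, which is what the evaluation described above calls for.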

Table 8. Detailed classification of VRUs.

| Classes * | Subclasses | Reference |
|---|---|---|
| Pedestrian | | [73,83,85,86,97,105,112,129,131,138,139,141,155,156,157,158,159] |
| Wheelchair | | [12] |
| Bicycle | | [10] |
| Non-Motorized Device | | [13,16,89] |
| Scooter | | [11] |
| Skateboard | N.A. | [15] |

* The items listed refer to pedestrians or individuals using devices, such as a pedestrian using a wheelchair.
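One way to apply a standardized classification such as Table 8 is to map each dataset's raw labels onto the shared top-level classes before merging annotations. The sketch below assumes this workflow; the raw label strings in `LABEL_MAP` are hypothetical examples rather than labels taken from any particular dataset, and the assignment of each raw label to a class is an illustrative choice.

```python
from collections import Counter

# Top-level classes as listed in Table 8.
VRU_CLASSES = (
    "Pedestrian",
    "Wheelchair",
    "Bicycle",
    "Non-Motorized Device",
    "Scooter",
    "Skateboard",
)

# Hypothetical raw labels (lowercase) mapped to the standardized classes.
LABEL_MAP = {
    "person": "Pedestrian",
    "pedestrian": "Pedestrian",
    "wheelchair_user": "Wheelchair",
    "cyclist": "Bicycle",
    "bicycle": "Bicycle",
    "stroller": "Non-Motorized Device",
    "e-scooter": "Scooter",
    "skateboarder": "Skateboard",
}

def harmonize(raw_labels):
    """Split raw labels into standardized classes and labels with no mapping."""
    mapped, unmapped = [], []
    for label in raw_labels:
        key = label.strip().lower()
        if key in LABEL_MAP:
            mapped.append(LABEL_MAP[key])
        else:
            unmapped.append(label)
    return mapped, unmapped

def class_counts(mapped):
    """Count instances per standardized class, including classes with zero instances."""
    counts = Counter(mapped)
    return {cls: counts.get(cls, 0) for cls in VRU_CLASSES}

mapped, unmapped = harmonize(["Person", "cyclist", "car", " Stroller "])
print(class_counts(mapped))
print(unmapped)
```

Reporting zero counts explicitly makes under-represented VRU classes visible when datasets are combined, and the unmapped list flags labels that still need a decision.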

6. Conclusions

This paper presents a comprehensive review of VRU detection for roadside-assisted safety, covering various roadside sensors, detection models, and datasets. The review follows a progression from generic techniques to specific VRU applications, analyzing existing models and datasets while highlighting challenges and emerging trends in the field. We aim to provide researchers with a concise overview of roadside VRU detection, fostering inspiration for future advancements. However, several limitations must be acknowledged. The article selection may be biased toward studies from more accessible or well-known databases. Additionally, this review primarily focuses on LiDAR and cameras, potentially overlooking other emerging technologies. The consulted databases may also have missed relevant studies, particularly those in non-English publications or published after the review period. These factors could impact the completeness and generalizability of the findings. Future research should broaden inclusion criteria and explore a wider range of sensor technologies.

Author Contributions

Conceptualization, G.W.; information collection, Z.Z.; formal analysis, Z.Z.; investigation, Z.Z. and C.W.; writing—original draft preparation, Z.Z.; writing—review and editing, Z.Z., C.W., G.W. and M.J.B.; visualization, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the California Department of Transportation (65A1057).

Acknowledgments

The contents of this paper reflect only the views of the authors, who are responsible for the facts presented herein. The contents do not necessarily reflect the official views of the California Department of Transportation.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Sun, C.; Brown, H.; Edara, P.; Stilley, J.; Reneker, J. Vulnerable Road User (VRU) Safety Assessment; Technical Report; Department of Transportation, Construction and Materials Division: Jefferson City, MO, USA, 2023.

- California Traffic Safety Quick Stats; California Office of Traffic Safety: Elk Grove, CA, USA, 2021.

- California Quick Crash Facts; California Highway Patrol: Sacramento, CA, USA, 2020.

- Shan, M.; Narula, K.; Wong, Y.F.; Worrall, S.; Khan, M.; Alexander, P.; Nebot, E. Demonstrations of Cooperative Perception: Safety and Robustness in Connected and Automated Vehicle Operations. Sensors 2020, 21, 200.

- Commsignia. Vulnerable Road User (VRU) Detection Case Study. n.d. Available online: https://www.commsignia.com/casestudy/vru (accessed on 23 March 2025).

- Ouster. BlueCity Solution. 2025. Available online: https://ouster.com/products/software/bluecity (accessed on 23 March 2025).

- Ouster. Ouster BlueCity to Power Largest Lidar-Enabled Smart Traffic. 2025. Available online: https://investors.ouster.com/news-releases/news-release-details/ouster-bluecity-power-largest-lidar-enabled-smart-traffic (accessed on 23 March 2025).

- Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- García-Venegas, M.; Mercado-Ravell, D.A.; Pinedo-Sánchez, L.A.; Carballo-Monsivais, C.A. On the safety of vulnerable road users by cyclist detection and tracking. Mach. Vis. Appl. 2021, 32, 109.

- Gilroy, S.; Mullins, D.; Jones, E.; Parsi, A.; Glavin, M. E-Scooter Rider detection and classification in dense urban environments. Results Eng. 2022, 16, 100677.

- Beyer, L.; Hermans, A.; Leibe, B. DROW: Real-Time Deep Learning-Based Wheelchair Detection in 2-D Range Data. IEEE Robot. Autom. Lett. 2017, 2, 585–592.

- Shimbo, Y.; Kawanishi, Y.; Deguchi, D.; Ide, I.; Murase, H. Parts Selective DPM for detection of pedestrians possessing an umbrella. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 972–977.

- Wang, H.; Li, C.; Zhang, Y.; Liu, Z.; Hui, Y.; Mao, G. A scheme on pedestrian detection using multi-sensor data fusion for smart roads. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Victoria, BC, Canada, 18 November–16 December 2020; pp. 1–5.

- Wu, J.; Xu, H.; Yue, R.; Tian, Z.; Tian, Y.; Tian, Y. An automatic skateboarder detection method with roadside LiDAR data. J. Transp. Saf. Secur. 2021, 13, 298–317.

- Yang, J.; Tong, Q.; Zhong, Y.; Li, Q. Improved YOLOv5 for stroller and luggage detection. In Proceedings of the 2023 4th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 7–9 April 2023; pp. 252–257.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the 29th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 1, pp. 91–99.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Farhadi, A.; Redmon, J. Yolov3: An incremental improvement. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; Springer: Berlin/Heidelberg, Germany, 2018; Volume 1804, pp. 1–6.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Ultralytics. YOLOv5: A State-of-the-Art Real-Time Object Detection System. 2021. Available online: https://docs.ultralytics.com (accessed on 23 March 2025).

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint Triplets for Object Detection. arXiv 2019, arXiv:1904.08189.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision—ECCV 2020; Lecture Notes in Computer Science; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12346, pp. 213–229.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 10012–10022.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 568–578.

- Wang, T.; Zhu, X.; Pang, J.; Lin, D. Fcos3d: Fully convolutional one-stage monocular 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 913–922.

- Wang, Y.; Guizilini, V.C.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. Detr3d: 3d object detection from multi-view images via 3d-to-2d queries. In Proceedings of the Conference on Robot Learning, PMLR, London, UK, 8–11 November 2022; pp. 180–191.

- Liu, Y.; Wang, T.; Zhang, X.; Sun, J. Petr: Position embedding transformation for multi-view 3d object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 531–548.

- Li, Y.; Ge, Z.; Yu, G.; Yang, J.; Wang, Z.; Shi, Y.; Sun, J.; Li, Z. Bevdepth: Acquisition of reliable depth for multi-view 3d object detection. Proc. AAAI Conf. Artif. Intell. 2023, 37, 1477–1485.

- Yang, L.; Yu, K.; Tang, T.; Li, J.; Yuan, K.; Wang, L.; Zhang, X.; Chen, P. Bevheight: A robust framework for vision-based roadside 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 21611–21620.

- Fan, S.; Wang, Z.; Huo, X.; Wang, Y.; Liu, J. Calibration-free bev representation for infrastructure perception. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 9008–9013.

- Shi, H.; Pang, C.; Zhang, J.; Yang, K.; Wu, Y.; Ni, H.; Lin, Y.; Stiefelhagen, R.; Wang, K. CoBEV: Elevating Roadside 3D Object Detection with Depth and Height Complementarity. arXiv 2023, arXiv:2310.02815.

- Li, B.; Zhang, T.; Xia, T. Vehicle detection from 3d lidar using fully convolutional network. arXiv 2016, arXiv:1608.07916.

- Yang, B.; Luo, W.; Urtasun, R. Pixor: Real-time 3d object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7652–7660.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12697–12705.

- Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3d object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11784–11793.

- Shi, G.; Li, R.; Ma, C. PillarNet: Real-Time and High-Performance Pillar-Based 3D Object Detection. In Computer Vision—ECCV 2022; Lecture Notes in Computer Science; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer Nature: Cham, Switzerland, 2022; Volume 13670, pp. 35–52.

- Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 770–779.