1. Introduction

With the rapid development of mobile robotics technology, path planning, as one of the core issues in mobile-robot autonomous navigation, has garnered widespread attention [

1]. The goal of path planning is to find an optimal path from the starting point to the destination within a given environment while avoiding obstacles [

2]. Its applications span across various domains, including material handling in industrial production, intelligent transportation in logistics, robot guidance in the service industry [

3], and unmanned combat in military fields, demonstrating its broad potential for application [

4].

Currently, mobile-robot path planning algorithms can be classified into traditional path planning algorithms, sampling-based path planning algorithms, and intelligent bionic algorithms [

5]. Traditional path planning algorithms include the A* algorithm [

6], the Dijkstra algorithm [

7], the D* algorithm [

8], and the artificial potential field method [

9], while sampling-based path planning algorithms include the PRM algorithm [

10] and the RRT algorithm [

11]. Intelligent bionic path planning algorithms include neural network algorithms [

12], particle swarm optimization [

13], ant colony optimization [

14], and genetic algorithms [

15].

Genetic algorithms, as global optimization algorithms based on biological evolution principles, have gradually become effective tools for solving complex path planning problems due to their strong global search capabilities and robustness [

16]. However, the application of traditional genetic algorithms in robot path planning still has many shortcomings [

17]. On one hand, the initial population quality is low, leading to slow convergence [

18]; on the other hand, traditional selection strategies may allow exceptional individuals to dominate the population, causing the algorithm to fall into local optima and reducing population diversity and exploration ability [

19]. Furthermore, the crossover operation lacks sufficient local search capabilities and may disrupt well-formed gene combinations, while the mutation operation lacks directionality [

20], potentially damaging high-fitness individuals and making path quality difficult to control.

To address these issues, various improvement methods have been proposed in the literature. For instance, in reference [

21], a population initialization strategy based on linear ranking was proposed for the CBPRM algorithm. By assigning scores to environmental cells based on their distance to the target, linear ranking replaces the roulette selection method to determine cell selection probabilities. Starting from the initial point, the strategy excludes cells containing obstacles and sequentially selects available cells to construct a feasible path to the target point. If no available cells are found before reaching the target, path construction terminates. In reference [

22], two special lists are used to select parents: one list contains high-fitness paths chosen by elite selection, and the other contains paths with different fitness values. This approach improves the quality of offspring while allowing low-fitness parents to explore the search space. During offspring generation, multiple potential offspring are created based on feasible crossover points, and the two paths with the highest fitness are selected after sorting. In traditional mutation operations, only one node typically has the chance to mutate. Reference [

23] designs a dual AGV collaborative safety steering radius, integrating multiple factors into the global path planning algorithm to prevent steering collisions and balance the planning efficiency with map utilization. It introduces the starting- and ending-point posture information and initializes the path based on safety steering rules, thoroughly considering the potential for steering collisions between the dual AGVs at the start and end points, thus generating a safer initial path. Reference [

24] introduces a Markov chain that can predict future states based on current ones. The path is represented as a grid number, and during mutation operations, the initial number sequence is randomly truncated. From the truncation point, subsequent grid numbers are selected based on state transition probabilities to form a new sequence. Rules are set to avoid the robot from going back or remaining stationary, guiding individual changes to find better paths. Reference [

25] introduces operators for loop removal, insertion–deletion, and optimization, which are used for deleting loops, adjusting nodes, and shrinking paths.

Although these improvements have achieved significant results in some respects, they also increase the complexity of the algorithm, extend computation time, and impose higher requirements on hardware. At the same time, these improvement directions are relatively narrow and make it difficult to achieve global optimization. Therefore, this paper proposes a series of improvements, including a dichotomy-based multi-step method for population initialization, a tournament selection strategy, an adaptive crossover strategy, a two-layer encoding mutation strategy and the Bezier curve optimization of the optimal path. These improvements not only enhance the quality and diversity of the population but also strengthen the algorithm’s adaptability and search efficiency at different stages. The goal is to provide a more efficient and robust solution for robot path planning.

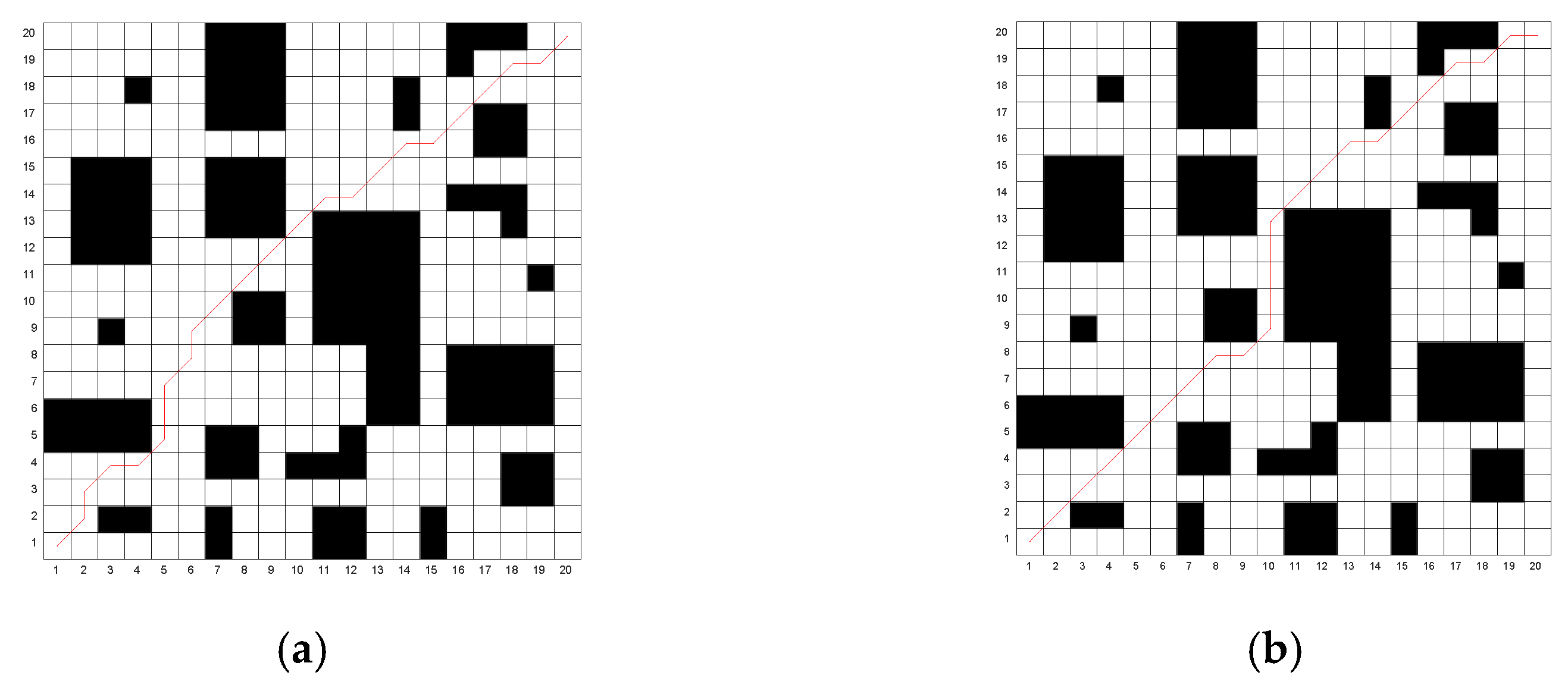

2. Environment Mapping

In the application of path planning, the grid method is a commonly used modeling approach. This method discretizes the continuous planning space into a series of equally sized grids (grid cells), simplifying complex environmental information into a simple one-dimensional array format. Each grid cell corresponds to a region in the actual environment, and the specific attributes of that region are represented by the state of the grid map. To ensure the effectiveness of path planning, the size of each grid cell is typically determined based on the robot’s own dimensions, the size of obstacles, and the characteristics of the working environment, in order to be applicable to non-grid environments.

In the grid map, black grid cells represent obstacles, while white grid cells indicate the feasible free-movement areas. Additionally, to prevent the robot from exceeding the boundaries of the map during the path planning process, it is typically assumed that the perimeter of the grid map is completely surrounded by obstacles, thus forming a safe boundary constraint, as shown in

Figure 1.

The coordinates (x, y) of each grid cell can be uniquely mapped to a grid index, and the relationship between the grid index and coordinates is given by the following formula:

where floor is the floor function, num is the grid index, row is the number of columns in the grid map, and G(x, y) is the matrix of feasible grid cells.

3. Improved Genetic Algorithm

3.1. Population Initialization

The quality of the initial population in a genetic algorithm is crucial to the solution’s outcome. The initial population generated by traditional genetic algorithms tends to be of poor quality, which in turn affects the convergence speed of the algorithm. In this study, a binary search method combined with a multi-step length approach is used for population initialization. This not only significantly enhances the diversity of the population but also effectively improves its quality.

3.1.1. Dichotomy Method

The core idea of the dichotomy method is to recursively find the midpoint between two grid cells. It is primarily implemented using two approaches: the row-based method and the column-based method.

The processing flow of the base–row system and base–column system adjusts the judgment sequence according to the base dichotomy type: the base–row system checks if the vertical coordinate difference |Y − y| is greater than one, while the base–column system checks if the horizontal coordinate difference |X − x| is greater than one. When the difference exceeds the threshold, a bisection operation is performed: the coordinate difference is divided by two and randomly rounded (either rounded up or down), with the same calculation rule applied to both horizontal and vertical coordinates. After generating the midpoint, if an obstacle is encountered, the movement direction is chosen based on the base direction type: the base–row system uses horizontal (left/right) displacement, while the base–column system uses vertical (up/down) displacement, continuing to move within the current row or column to find a feasible grid. If no valid path is found after the displacement, the algorithm is terminated, indicating that no path exists between the start and end points in the current map. The base–row system and base–column system each ensure that there is one grid per row or column. By evenly splitting the population into two base direction modes and introducing the random rounding of the half-coordinate difference and obstacle-avoiding random-direction selection mechanisms, the initial population diversity is significantly enhanced, thereby optimizing the global performance of the algorithm.

After applying the dichotomy method to generate intermediate points between the start and end points, the path is divided into two segments. For each segment, the search for intermediate points continues, further subdividing the path until adjacent rows or columns can no longer be divided. The final path generated will consist of multiple nodes that are evenly distributed.

3.1.2. The Multi-Step Method

Traditional path planning algorithms typically use the grid center points as a standard, where the path travels from the starting point through several center points to the destination. This method is simple and effective, but it has significant drawbacks: the path is constrained by the grid centers, often resulting in a stair-step or polyline shape, lacking smoothness and a natural feel. Additionally, in complex obstacle environments, excessive reliance on grid centers may prevent the discovery of an effective path, resulting in a lack of flexibility and robustness.

Since the Bresenham algorithm does not require floating-point operations, it conserves hardware resources, as its integer arithmetic avoids the high cost and inefficiency associated with complex floating-point calculations. Therefore, an improved multi-step method based on the Bresenham algorithm is proposed, which eliminates the constraint of grid centers. This method directly detects the linear connectivity between two points. If the two points are directly connected, a straight-line path is generated; if obstructed by an obstacle, intermediate nodes are dynamically inserted, prioritizing the midpoint or nearby free grid cells for adjustment, to ensure the connectivity and feasibility of the path. As shown in

Figure 2, the green dashed line represents the determination of whether the straight line passes through an obstacle based on the Bresenham algorithm; the black solid line represents the feasible path segment; P1, P2, and P3 are insertion points; and the red circles indicate locations where the straight line intersects obstacles.

However, the traditional Bresenham algorithm can only directly identify the dark-blue grid cells shown in

Figure 3, while the light-colored grid cells will be missed. To address this issue, this paper improves the Bresenham algorithm by adding a detection mechanism for light-colored grid cells, enabling it to identify all the grid cells that the line passes through. Method for detecting light-colored grid cells: taking the line with slope

and the grid cells A

and B

from

Figure 3 as an example, when the Bresenham algorithm computes the change in the y-coordinate of grid cell B, compare

with

. If

>

, the line passes through the cell above A; if

=

, the line passes through only both grid cells A and B; if

<

, the line passes through the cell below B. This is shown in

Figure 3.

The specific steps of the improved Bresenham algorithm for detecting the grid cells a straight line passes through are as follows:

Step 1: Input the coordinates of the two endpoints, (,) and (,), and initialize an empty array [X, Y].

Step 2: Calculate dx = || and ||. If dx < dy, swap the x and y coordinates to ensure stepping along the y-axis. If > , swap the start and end coordinates to ensure traversal from left to right.

Step 3: Traverse the x coordinates from left to right. Each time x moves one step forward, accumulate the error. If the error exceeds the threshold, update the y coordinate and add the updated coordinates (x, y) to the [X, Y] set (if dx < dy, swap the coordinates before adding to [X, Y]). Each time the y coordinate is updated, check for any missing grid cells. If there are any, they should be supplemented promptly, and the supplemented grid cells should also be added to [X, Y] (similarly, when dx < dy, swap the coordinates before adding to [X, Y]).

Step 4: Return the array [X, Y], which contains the coordinates of all grid cells on the path.

The improved Bresenham algorithm is shown in Algorithm 1.

| Algorithm 1 Improved Bresenham algorithm |

| 1: Input: Coordinates of two endpoints (x1,y1) and (x2,y2) |

| 2: Output: Coordinate set [X, Y] |

| 3: Initialize parameters |

| 4: dx←|x2 − x1|; dy←|y2 − y1|; steep←(dy > dx); |

| 5: if steep |

| 6: Swap x and y coordinates for both endpoints; |

| 7: end |

| 8: if x1 > x2 |

| 9: Swap both coordinates of start and end points; |

| 10: end |

| 11: error ← 0; y←y1; [X, Y]←[∅, ∅]; k←dy/dx; |

| 12: step ← sign(y2 − y1); |

| 13: for x = x1: x2 |

| 14: [a, b] ← steep ? [y, x] : [x, y]; |

| 15: X ← [X, a]; Y ← [Y, b]; |

| 16: error = error + dy; |

| 17: if 2 × error >= dx |

| 18: ideal ← (x + 0.5 − x1) × k + y1; |

| 19: diff ← ideal − (y + 0.5); |

| 20: if diff > 0 |

| 21: [a_add, b_add] ← steep ? [y + 1, x] : [x, y + 1]; |

| 22: X ← [X, a_add]; Y ← [Y, b_add]; |

| 23: elseif diff < 0 |

| 24: [a_add, b_add] ← steep ? [y, x + 1] : [x + 1, y]; |

| 25: X ← [X, a_add]; Y ← [Y, b_add]; |

| 26: end |

| 27: y ← y + step; error ← error − dx; |

| 28: end |

| 29: end |

3.1.3. Generating Initial Population Individuals

Since the path generated by the dichotomy method is discontinuous, a multi-step approach is required to connect adjacent grid cells, thereby generating a continuous path. This ensures the coherence and feasibility of each individual in the path planning process.

3.2. Improved Selection Strategy

The traditional selection method in genetic algorithms is roulette wheel selection, where the selection probability is associated with the individual’s fitness. When a few exceptional individuals dominate the population, they may be selected frequently during the selection process, causing the next generation to be quickly dominated by these individuals. As a result, the population becomes homogeneous, losing competitiveness and exploration ability, potentially falling into a local optimum. The selection probability can be expressed as

where

is the selection probability of the i-th individual in the roulette wheel selection method,

is the fitness value of the i-th individual in the population, and

is the population size, where

.

To address this issue, an improved tournament selection method is proposed. This method reduces the absolute dominance of exceptional individuals by using multiple rounds of group competitions, introducing more randomness and competitive layers. As a result, individuals with lower fitness values may also be selected, effectively preserving population diversity and avoiding convergence to a local optimum. The specific steps are as follows:

Step 1: Set the population size to n (where n is a multiple of 32).

Step 2: Randomly divide the population into G groups, where . Each group undergoes a layered competition, including preliminary rounds, semifinals, and finals. The competition selects the four individuals with the highest fitness in each group. In each round of the tournament, a total of 4G individuals are selected.

Step 3: Repeat Step 2 for eight rounds of the tournament. In the end, a total of n individuals are selected.

The improved tournament selection is shown in Algorithm 2.

| Algorithm 2 Improved Tournament Selection |

| 1: Input: Population P (size N); Fitness values F (length N) |

| 2: Output: selected_pop |

| 3: Initialize parameters |

| 4: Group size G ← 32; Groups per tournament K ← 4; Winners per group W ← 4; |

| 5: selected_pop ← ∅; |

| 6: T ← N/(K × W); |

| 7: for t = 1 : T |

| 8: I ← randperm(NP); |

| 9: Split I into K groups G1,…,G_K (each size G); |

| 10: for i = 1: K |

| 11: S1 ← []; S2 ← [] ; W_i← [] ; |

| 12: for j: 2:G_K |

| 13: (ind1, ind2) ← (G_i[j], G_i[j + 1]); |

| 14: S1 ← S1 ∪ [F[ind1] > F[ind2] ? ind1 : ind2]; |

| 15: end |

| 16: for j: 2: length(S1) |

| 17: S2 ← S2 ∪ [F[ind1] > F[ind2] ? ind1 : ind2]; |

| 18: end |

| 19: for j: 2: length(S2) |

| 20: (ind1, ind2) ← (S2[j], S2[j + 1]); |

| 21: W_i ← W_i ∪ [F[ind1] > F[ind2] ? ind1 : ind2]; |

| 22: end |

| 23: selected_pop ←selected_pop ∪ W_i; |

| 24: end |

| 25: end |

3.3. Improved Crossover Strategy

In traditional genetic algorithms, crossover operations typically involve a fixed number of crossover points and randomly selected parents. This fixed crossover point approach lacks flexibility. In the early stages of the algorithm, strong exploration capabilities are usually needed to broaden the search space, whereas in the later stages, more refined exploitation capabilities are required to fine-tune the solution. Fixed crossover points do not meet these dynamic needs. Additionally, the completely random selection of parents may lead to the crossover of individuals with significantly different fitness levels, which can dilute or even destroy superior genes with superior ones, thereby reducing the quality of new individuals and hindering the algorithm’s evolution toward the optimal solution. To address these issues, this paper proposes adaptive crossover point numbers and dynamic threshold selection.

The adaptive number of crossover points is determined by the following formula:

where P is the number of crossover points; floor is the floor function (which rounds down to the nearest integer),

is the maximum number of crossover points, t is the current iteration number, and T is the maximum number of iterations.

To promote the rapid convergence of the population and improve the quality of offspring, when selecting parents, the first parent is chosen from the top 30% of individuals based on fitness rankings, while the second parent is selected randomly. However, the difference in fitness values between these two parents must satisfy the following formula:

where

is the fitness difference between the two parents,

is the upper limit of the threshold,

is the maximum fitness difference in the current iteration,

is a function of the coefficient of variation CV, 0.7 is the base threshold to ensure that individuals with lower fitness have a chance to participate in crossover in the early stages, maintaining the diversity of the population, and 0.3 is the dynamic adjustment coefficient, which gradually tightens the selection criteria as iterations progress, avoiding premature strict selection or the participation of individuals with large fitness differences in crossover, thereby ensuring the quality of the population.

When the fitness values of the population individuals are too dispersed, it leads to a large number of individuals failing to meet

, thereby reducing population diversity. On the other hand, if the fitness is too concentrated, a large number of individuals will easily satisfy

, causing individuals with significant fitness differences to participate in crossover, which reduces the quality of the new offspring. To address this, the coefficient of variation CV is introduced in this paper to measure the degree of dispersion of the population’s fitness, and its calculation formula is

where

is the fitness value of the i-th individual,

is the average fitness value of the individuals after selection in the current iteration, and n is the number of individuals in the population.

can adjust

appropriately based on the population’s dispersion. The expression is determined by the following formula:

where K is the critical value of the dispersion coefficient, and a is the adjustment coefficient.

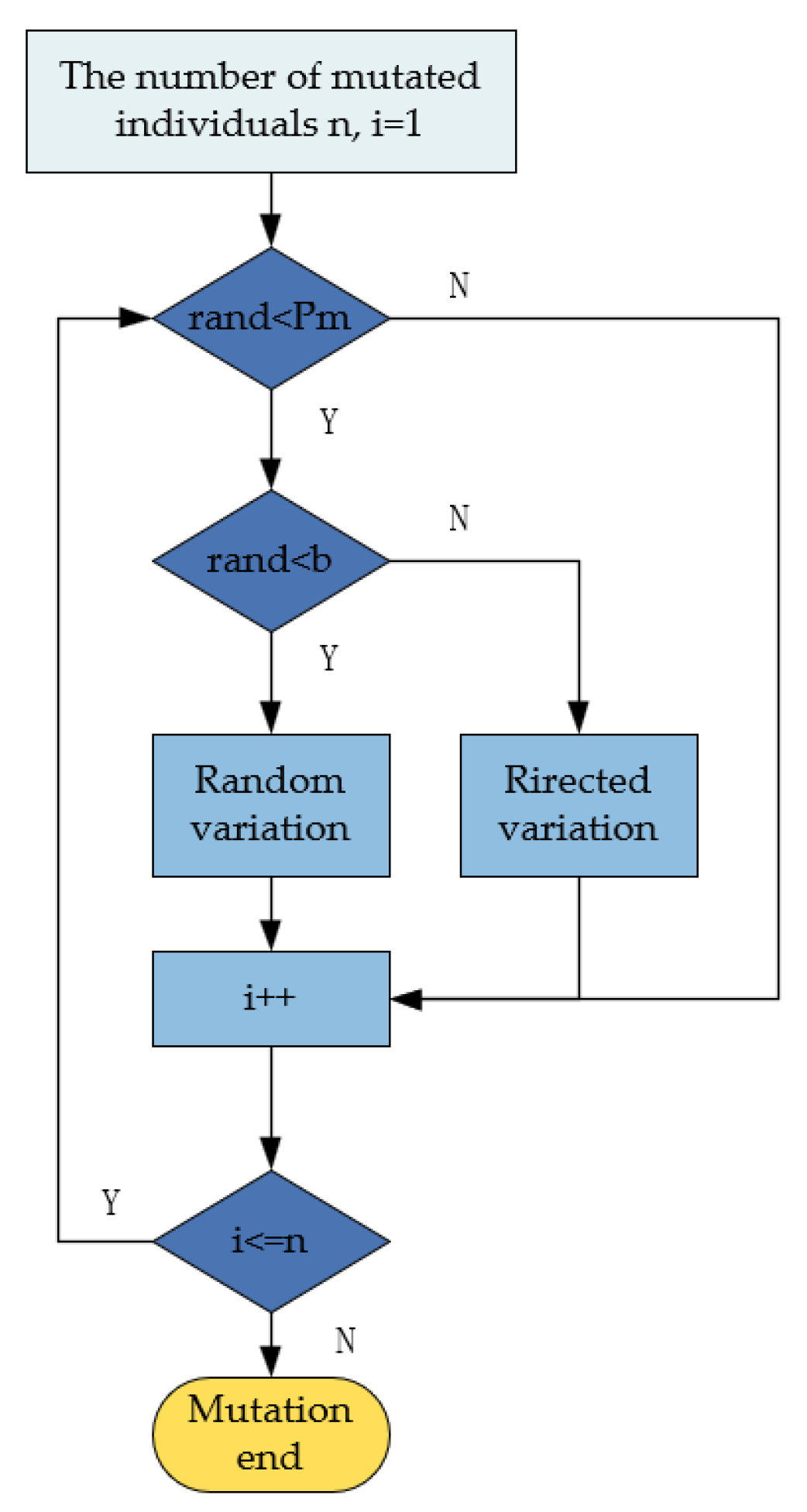

3.4. Improved Mutation Strategy

Traditional mutation methods typically rely on single random mutations, but due to the lack of directionality, they often result in difficulties in controlling the quality of the path and may even disrupt individuals with higher fitness, negatively affecting overall optimization performance. Therefore, this paper employs a dual-layer mutation approach. The first layer, random mutation, enhances population diversity, prevents premature convergence, and increases the likelihood of escaping local optima. The second layer, goal-oriented mutation, combines the current search direction to guide the solution towards better regions, thereby improving convergence speed and search efficiency. Furthermore, with the progress of iterations, the dynamic adjustment of layer selection probability enables the algorithm to focus on exploration in the early stages, avoiding premature convergence to local optima while concentrating efforts on searching for better solution regions in the later stages, thus enhancing the convergence speed. The flowchart of the improved mutation strategy is shown in

Figure 4.

As the iterations progress, the probability of random mutations should gradually reduce to avoid damaging excellent individuals and to accelerate convergence. Therefore, the layer selection probability b needs to be gradually adjusted, and its expression is given by

where B is the layer selection probability, b is the probability adjustment coefficient for selection, t is the current iteration number, and T is the maximum number of iterations.

Random mutation is performed by randomly selecting two nodes from the path as mutation nodes and connecting these two nodes using the multi-step method. In reference [

26], a target-oriented mutation method is used, where a circular area is drawn with the parent node as the center and a radius 1.4 times the straight-line distance from the parent node to its previous and next nodes. Nodes within the circle are selected as candidate mutation nodes. This results in the traversal of too many mutation nodes, consuming a large amount of hardware resources. To address this, the method in this paper is optimized. First, the two nodes before and after the parent node are connected to form a straight line L1. Then, a perpendicular line L2 is drawn from the parent node to line L1, denoted as L2. Then, the symmetric line L3 of L2 with respect to line L1 is constructed, denoted as L3. Using the improved Bresenham algorithm, we obtain all the grid cells traversed by the straight lines L2 and L3 and designate them as candidate mutation nodes. Then, we use the multi-step method to make the two nodes before and after the parent node pass through the candidate mutation nodes. The probability of selecting each candidate mutation node is given by

where

is the probability of selecting the i-th candidate mutation node and

is the fitness value of the path formed by the parent node’s adjacent nodes passing through the i-th candidate mutation node. As shown in

Figure 5, the black line represents the parental path, with the deep-blue grid P1 indicating the parent node. The deep-blue grids P2 and P3 correspond to the previous and next points relative to the parent node, respectively. The yellow solid line represents line L1, while the green dashed lines represent lines L2 and L3. The light-blue grids P4–P7 represent the candidate mutation nodes.

3.5. Bezier Curve Optimization of the Optimal Path

The optimal path generated by the genetic algorithm after iteration consists of multiple line segments, resulting in a non-smooth path. To solve this problem, Bezier curves can be used to optimize the optimal path. A Bezier curve is a curve formed by multiple control points, and the shape of the curve is determined by these control points. As shown in

Figure 6, there are three control points, A, B, and C, with

,

and K satisfying Formula (12). As

changes, the point K moves along a trajectory, which is the Bezier curve. The coordinates of K satisfy Formula (13).

3.6. Algorithm Flow

The algorithm flow of this paper is shown in

Figure 7.

5. Conclusions

This paper addresses the limitations of traditional genetic algorithms in mobile-robot path planning and proposes a path planning method based on an improved genetic algorithm. By using a dichotomy-based multi-step method for population initialization, a tournament selection strategy, an adaptive crossover strategy, a dual-layer encoding mutation strategy, and Bezier curve optimization for the optimal path, the method significantly improves path length, smoothness, iteration speed, and computational efficiency. Experimental results show that the path planning performance of the improved algorithm outperforms traditional genetic algorithms and existing improvement methods, demonstrating high practical value.

However, the improved genetic algorithm proposed in this paper still has certain limitations. Although the improved strategies effectively enhance path quality in static environments, the current experiments mainly focus on static obstacle environments, and the adaptability to moving obstacles has not been fully validated. While the algorithm has been preliminarily validated for feasibility in navigation experiments on a mobile-robot hardware platform (

Figure 11 and

Table 3), it has not yet been applied in extreme resource-constrained environments, such as low-power microcontrollers. Future research should focus on lightweight algorithm design to reduce high hardware demands and integrate the dynamic window method into the genetic algorithm to achieve dynamic obstacle avoidance.