Research on Improved Bridge Surface Disease Detection Algorithm Based on YOLOv7-Tiny-DBB

Abstract

1. Introduction

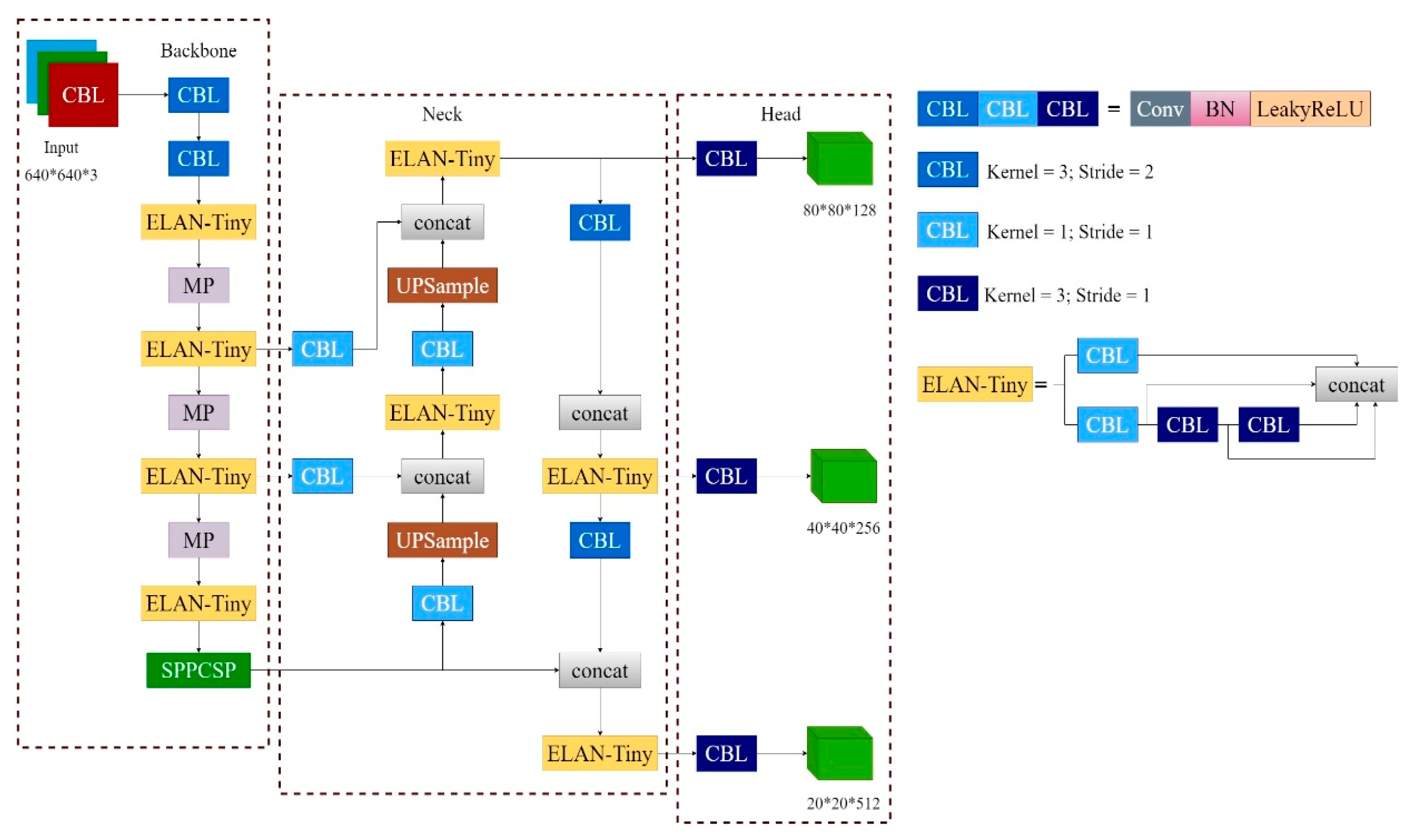

2. Principles of the YOLOv7-Tiny Algorithm

3. Algorithm Enhancement

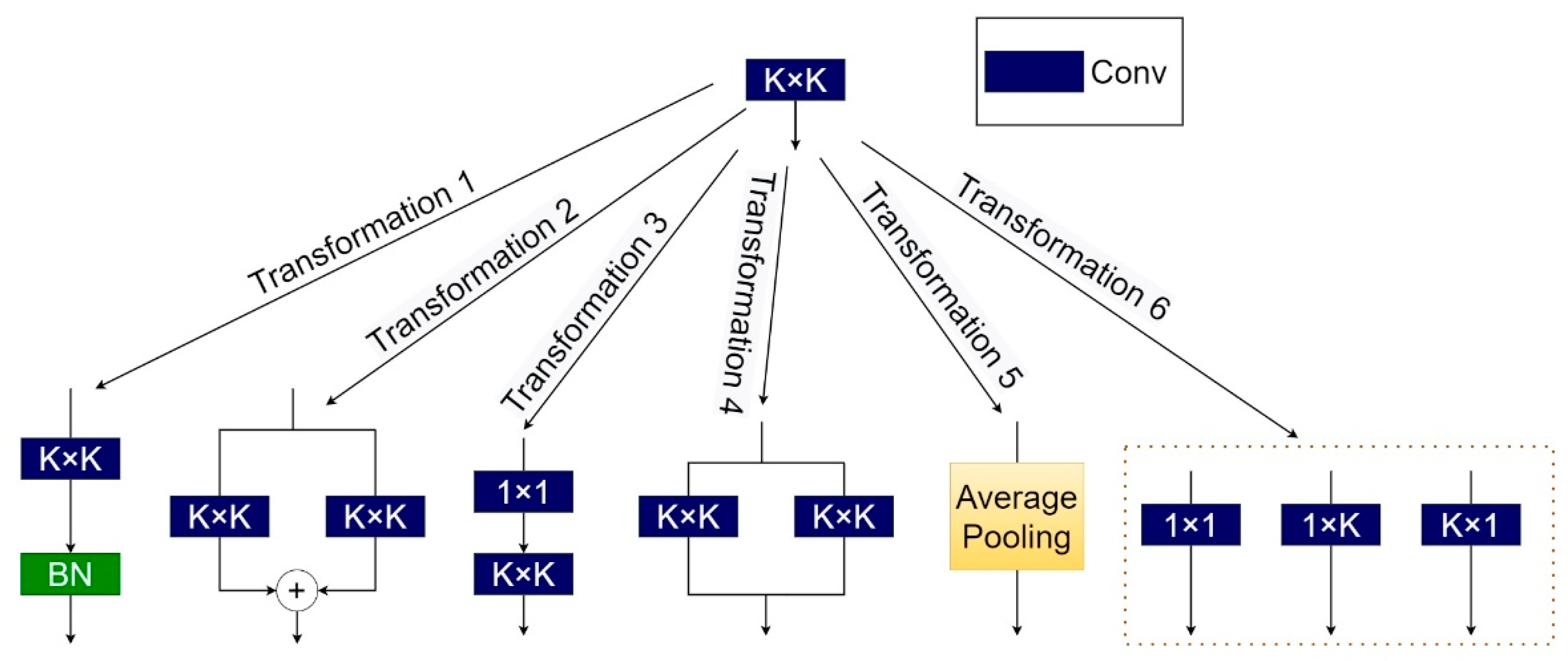

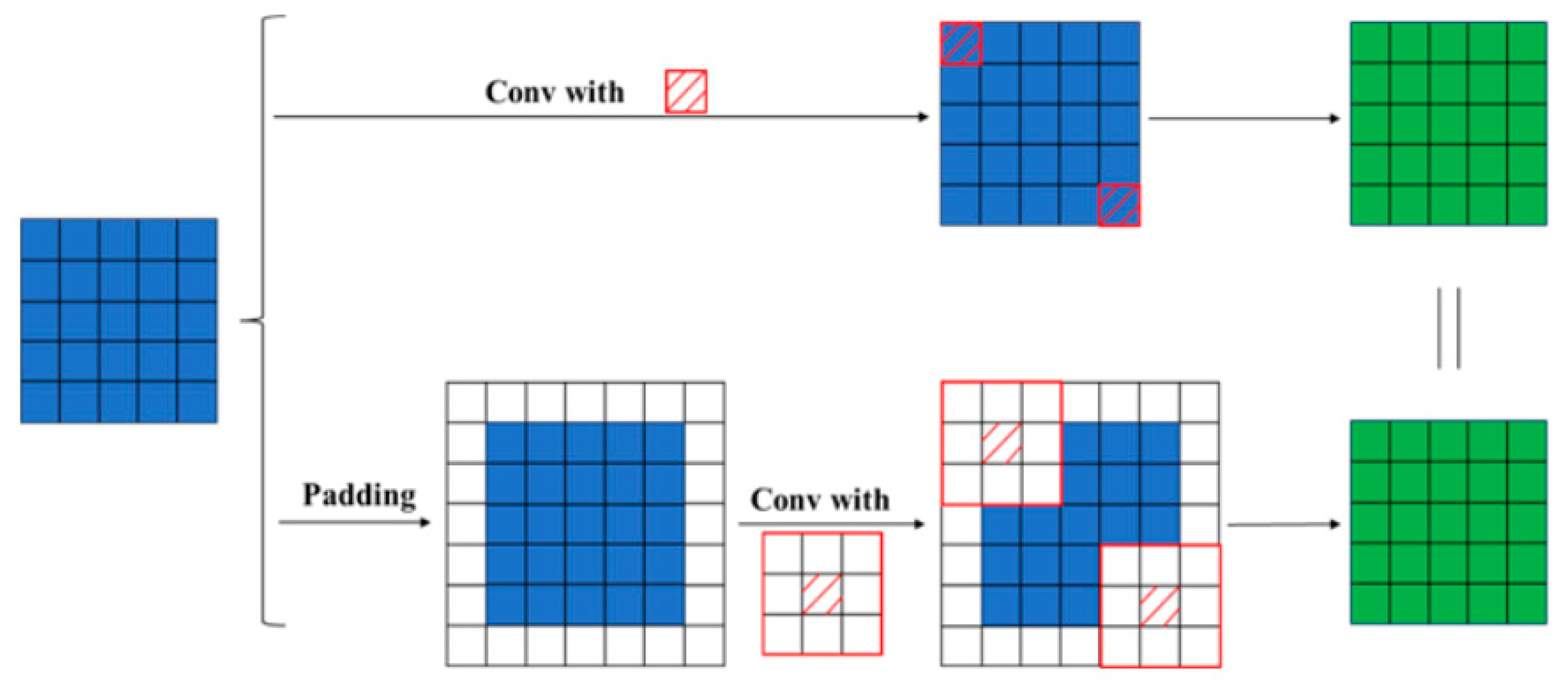

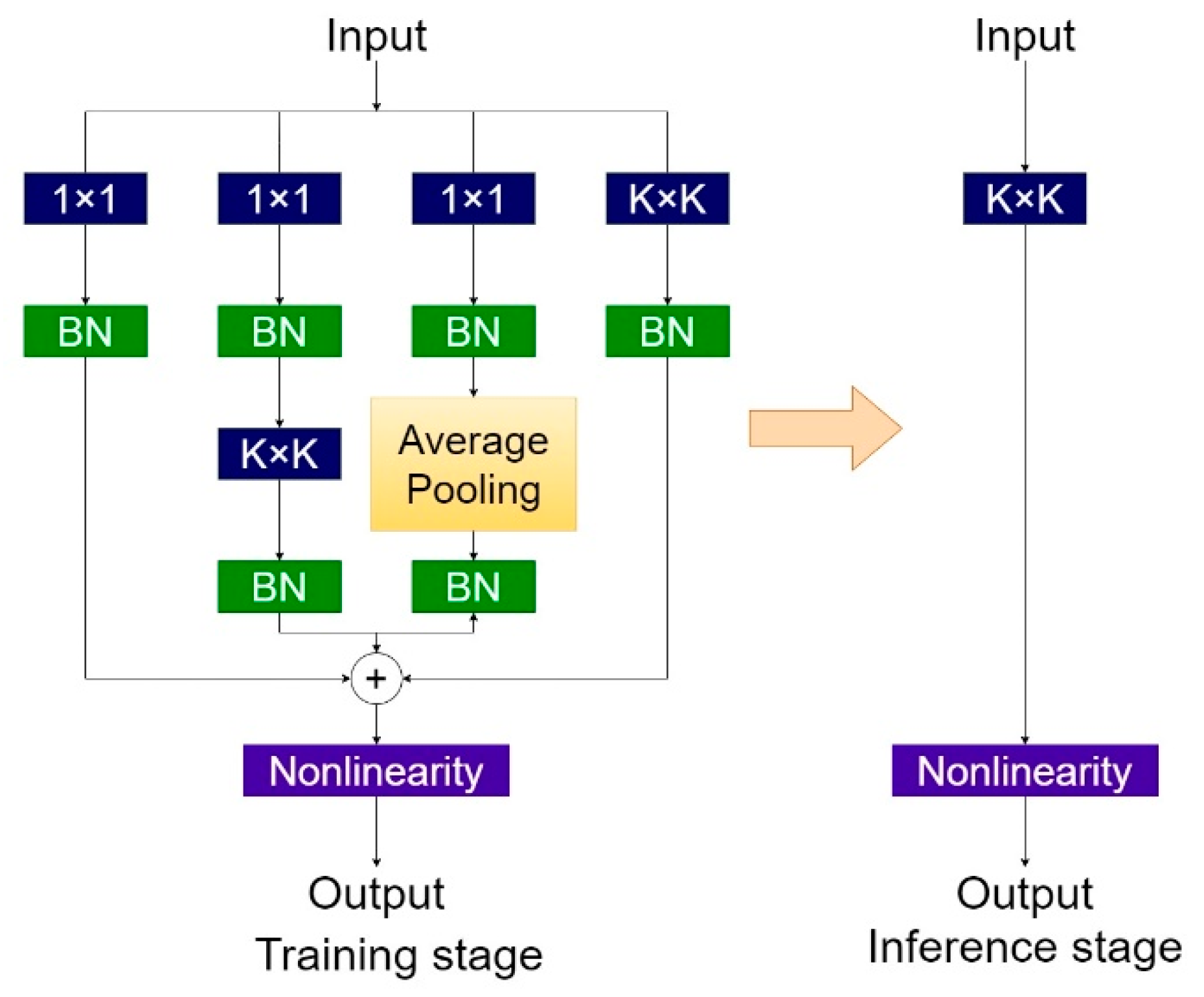

3.1. Diverse Branch Block (DBB) Module Introduction

3.2. Refinement of the Loss Function

3.3. Enhanced YOLOv7-Tiny-DBB Algorithm

4. Experimental Analysis and Validation

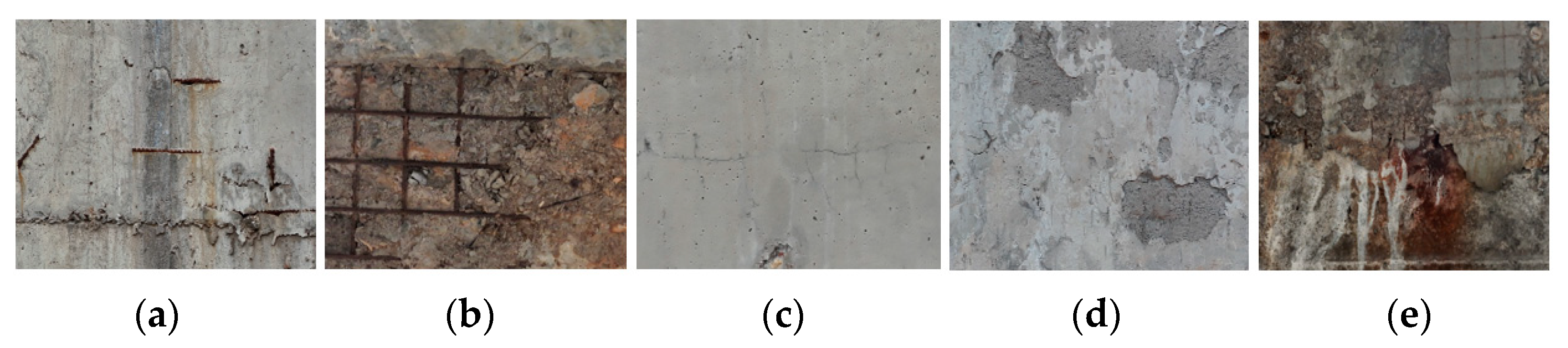

4.1. Dataset and Experimental Environment

4.2. Performance Evaluation Metrics

4.3. Experimental Results and Analysis

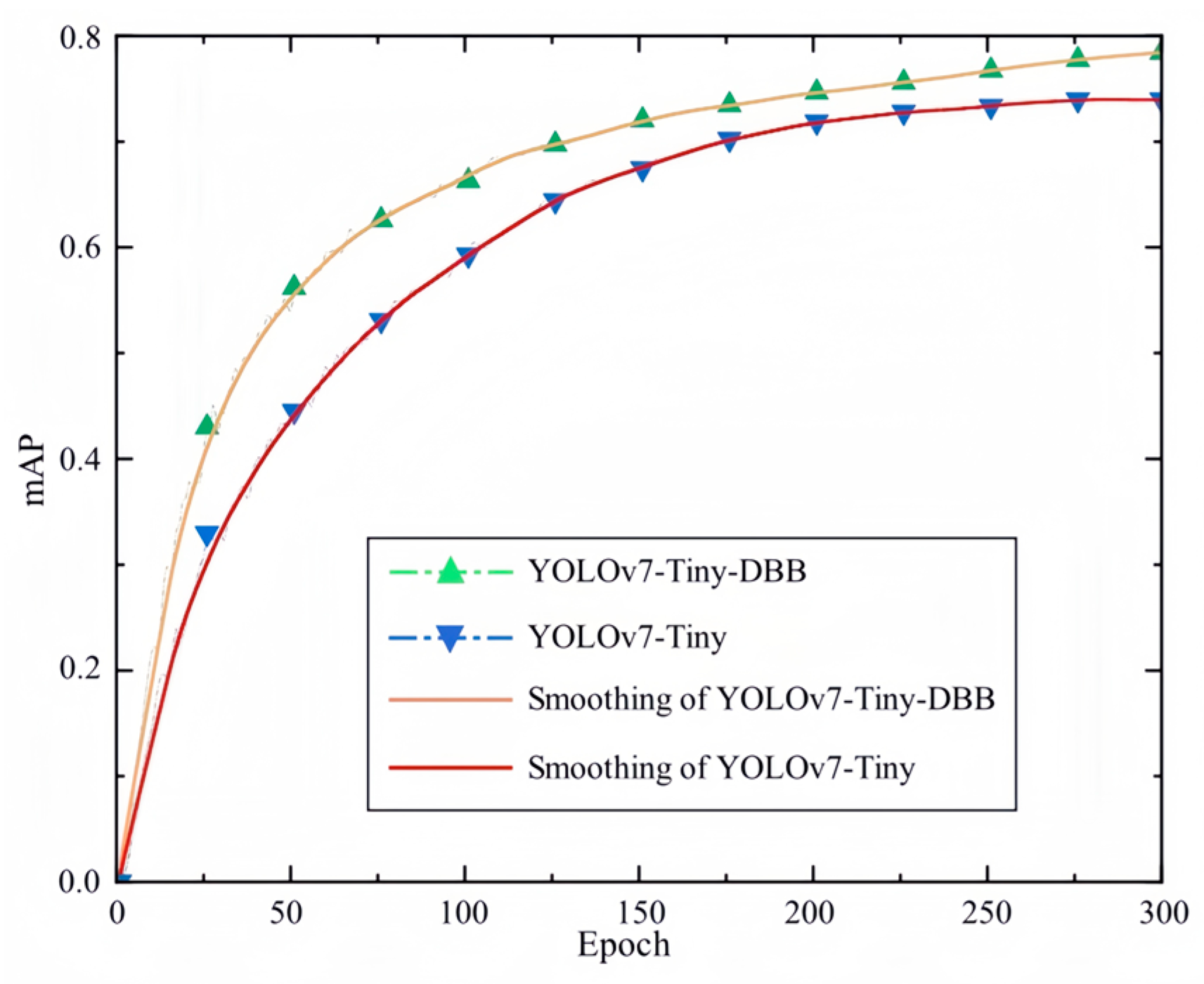

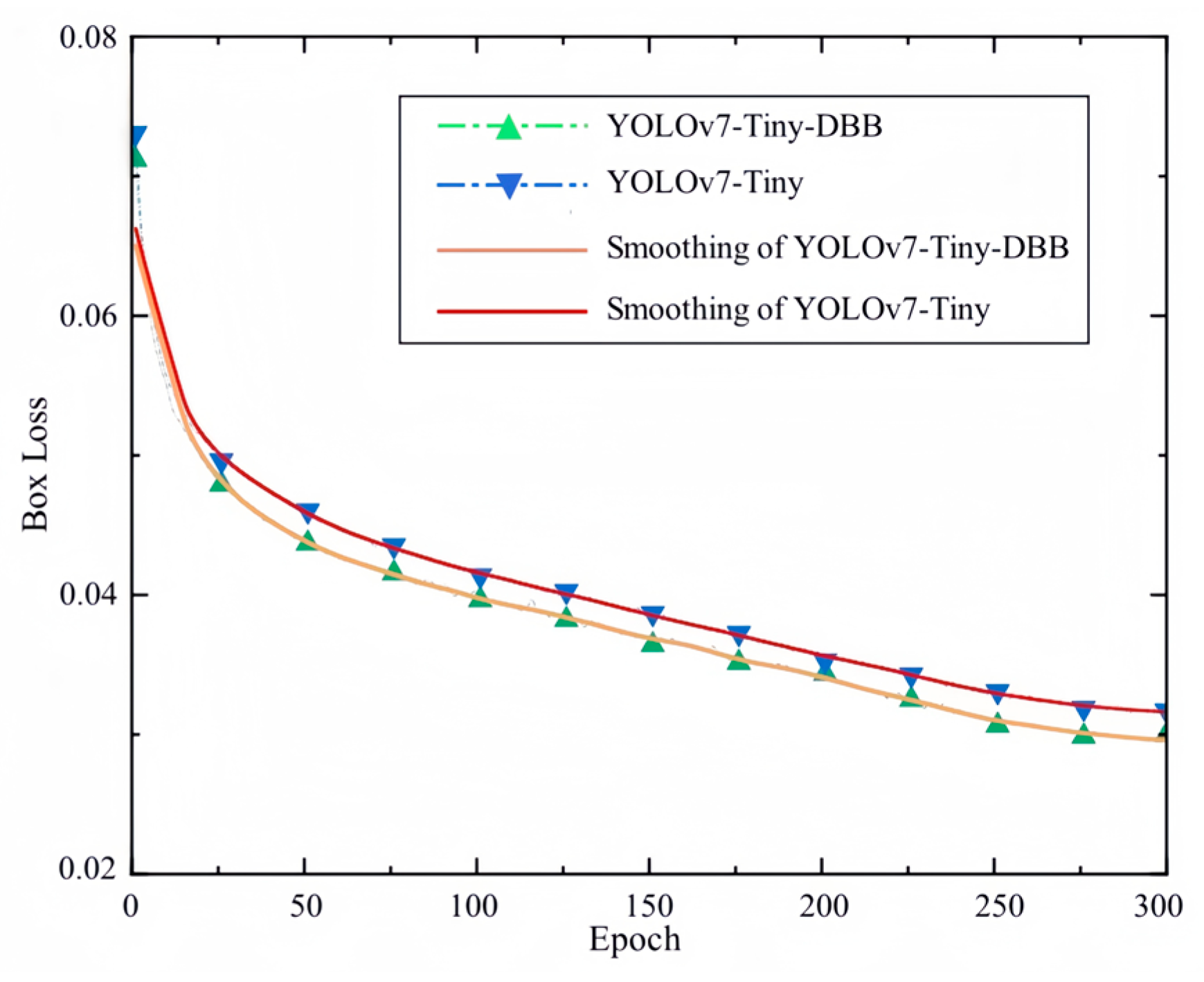

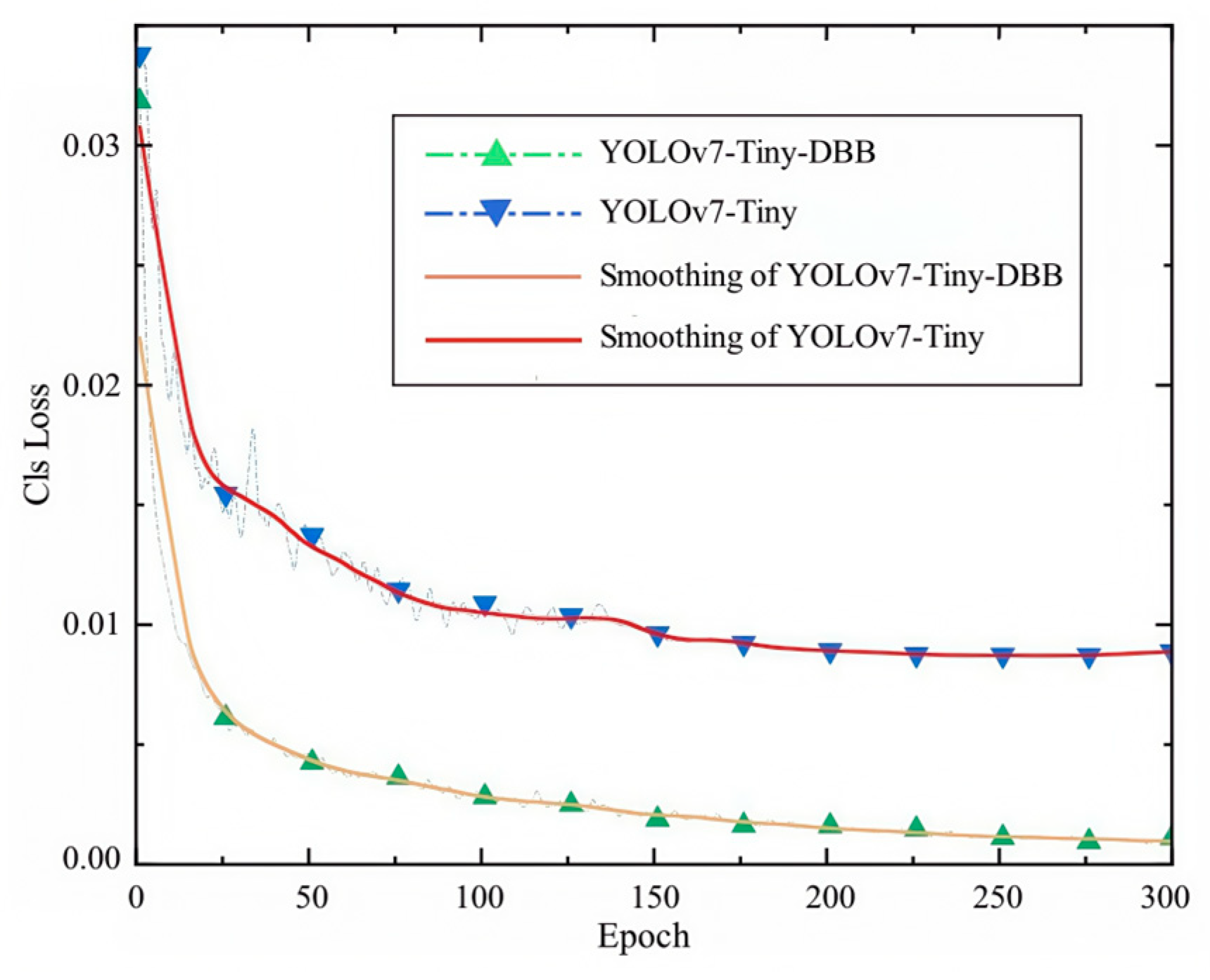

4.3.1. Analysis of the Training Results of the Improved Algorithm

4.3.2. Analysis of the Testing Results for the Improved Algorithm

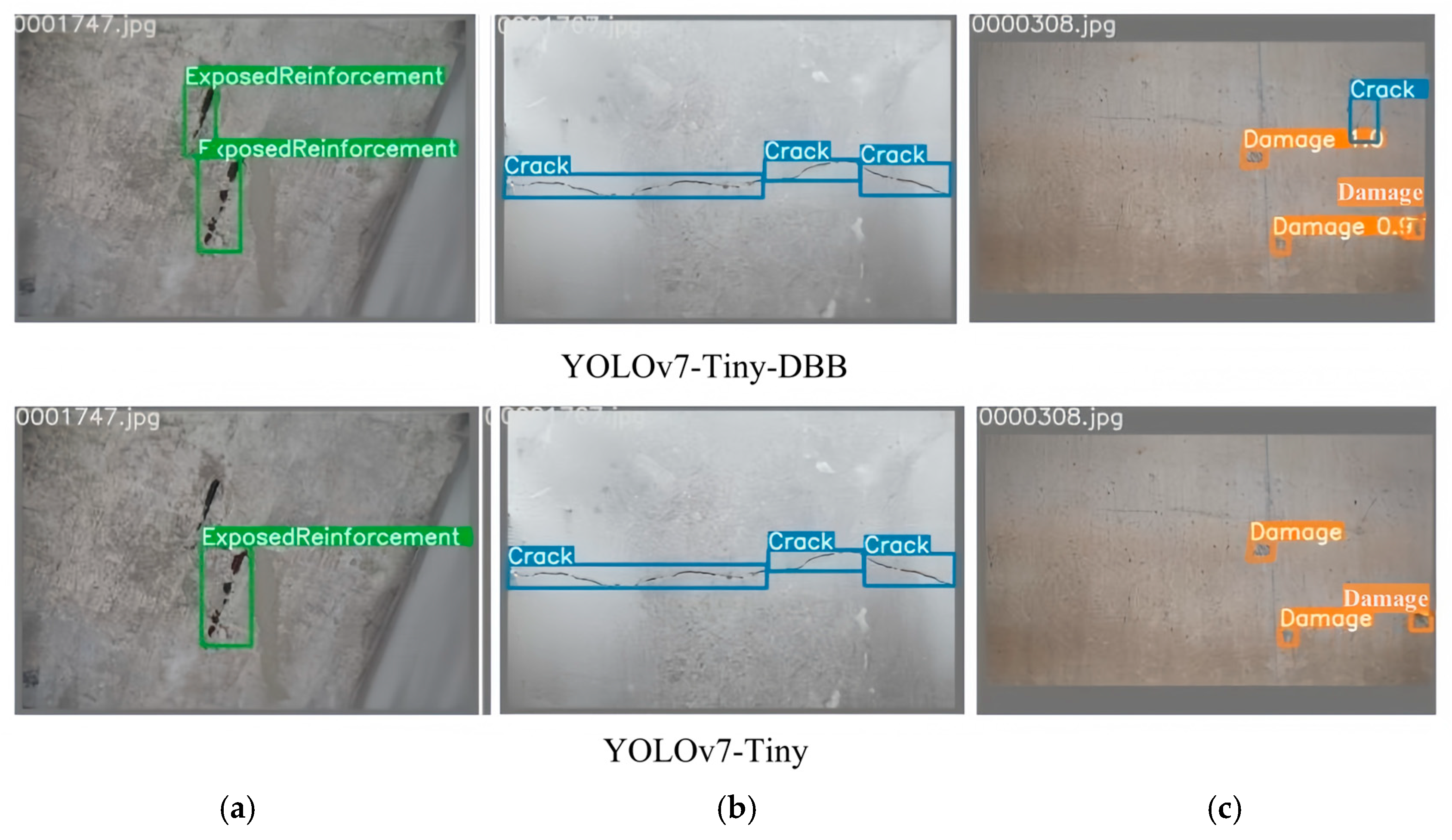

4.3.3. Visualization of Bridge Disease Detection Before and After Algorithm Improvement

5. Conclusions

- (1) Bridge surface diseases pose several detection problems: diverse target types, variable morphological characteristics, numerous small targets, and a high likelihood of missed detections. To address them, this study replaced the ELAN-Tiny module of the original YOLOv7-Tiny algorithm with a DBB module and replaced the traditional CIoU loss function with a bounding box regression loss function based on MPDIoU. On this basis, the improved YOLOv7-Tiny-DBB algorithm was proposed for identifying surface defects on bridges. This approach enriches the extracted feature information and strengthens bounding box regression, effectively reducing the missed detections observed with the original YOLOv7-Tiny algorithm.

- (2) The proposed YOLOv7-Tiny-DBB algorithm was trained and tested on a self-constructed augmented dataset. Compared with the original YOLOv7-Tiny algorithm, it improved precision by 4.2 percentage points, recall by 6.5, F1 score by 5.4, and mean Average Precision (mAP) by 7.3 percentage points, and it increased detection speed by 13.1 FPS (from 46.1 to 59.2 FPS). Ablation experiments further confirmed the efficiency and effectiveness of the proposed improvements.

- (3) At inference, the improved YOLOv7-Tiny-DBB algorithm reduced the parameter count from 6.23 M to 6.01 M and the computational cost from 13.9 to 13.0 GFLOPs, improving both detection speed and detection performance. Visualization tests indicated that the improved algorithm effectively reduced the risk of missing exposed-reinforcement and microcrack defects. This provides a new approach for deploying real-time detection of bridge surface diseases on industrial edge devices.

- (4) Future research should extend the dataset so that it captures the distribution of bridge defects under a wider range of challenging conditions. The method should also be compared with, and improved by drawing on, more advanced network models to increase its effectiveness. Building on this foundation, experimental studies on real-time detection of bridge surface defects using edge devices will be carried out.
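The MPDIoU bounding box regression loss mentioned in conclusion (1) can be illustrated with a short sketch. It follows the formulation of Ma and Xu (MPDIoU, arXiv:2307.07662, listed in the references): the IoU is penalized by the squared distance between the top-left corners and between the bottom-right corners of the predicted and ground-truth boxes, each normalized by w² + h² of the input image, and the loss is 1 − MPDIoU. The `Box` class, the (x1, y1, x2, y2) pixel format, and the function names are assumptions for illustration; this is a scalar sketch, not the authors' training code, which would operate on batched tensors.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box in pixels: (x1, y1) top-left corner, (x2, y2) bottom-right corner."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Box, b: Box) -> float:
    # Intersection rectangle; width/height clamp to 0 when the boxes do not overlap.
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def mpdiou_loss(pred: Box, gt: Box, img_w: float, img_h: float) -> float:
    """L = 1 - MPDIoU, where MPDIoU = IoU - d1^2/(w^2 + h^2) - d2^2/(w^2 + h^2)."""
    norm = img_w ** 2 + img_h ** 2
    # Squared distance between the top-left corners.
    d1_sq = (pred.x1 - gt.x1) ** 2 + (pred.y1 - gt.y1) ** 2
    # Squared distance between the bottom-right corners.
    d2_sq = (pred.x2 - gt.x2) ** 2 + (pred.y2 - gt.y2) ** 2
    return 1.0 - (iou(pred, gt) - d1_sq / norm - d2_sq / norm)


gt = Box(100, 100, 200, 200)
print(mpdiou_loss(gt, gt, 640, 640))                     # 0.0 for a perfect prediction
print(round(mpdiou_loss(Box(110, 100, 210, 200), gt, 640, 640), 4))  # 0.1821
```

Because the corner-distance terms remain non-zero even when the boxes do not overlap (IoU = 0), the loss still distinguishes near misses from far misses in that case.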

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Worley, S.B.; Ervin, E.K. Health study of reinforced concrete test bridge with pier damage. ACI Struct. J. 2017, 4, 959–968.

- [2] Jiang, Y.Y.; Liu, Y.; Zhou, X.Y. Grading diagnosis of beam bridge support separation disease within one cluster based on the difference in the probability density function of the measured data. Struct. Health Monit. 2023, 22, 3659–3676.

- [3] Gan, L.F.; Liu, H.; Yan, Y.; Chen, A.A. Bridge bottom crack detection and modeling based on faster R-CNN and BIM. IET Image Process. 2024, 18, 664–677.

- [4] Xu, W.C. Vehicle load identification using machine vision and displacement influence lines. Buildings 2024, 14, 392.

- [5] Hurtado, A.C.; Alamdari, M.M.; Kim, E.A.; Chang, K.C.; Kim, W.C. A data-driven methodology for bridge indirect health monitoring using unsupervised computer vision. Mech. Syst. Signal Process. 2024, 210, 111109.

- [6] Song, F.; Liu, B.; Yuan, G.X. Pixel-Level crack identification for bridge concrete structures using unmanned aerial vehicle photography and deep learning. Struct. Control. Health Monit. 2024, 2024, 1299095.

- [7] Phan, T.N.; Nguyen, H.H.; Ha, T.T.H.; Thai, H.T.; Le, K.H. Deep learning models for UAV-assisted bridge inspection: A YOLO benchmark analysis. In Proceedings of the 2024 International Conference on Advanced Technologies for Communications (ATC), Ho Chi Minh City, Vietnam, 17–19 October 2024; pp. 1–13.

- [8] Schlonsak, R.; Schreiter, J.P. Bridge detection in autonomous shipping: A YOLOv8 approach with autodistill and grounded SAM. J. Phys. Conf. Ser. 2024, 2867, 012019.

- [9] Ameli, Z.; Nesheli, S.J.; Landis, E.N. Deep learning-based steel bridge corrosion segmentation and condition rating using mask RCNN and YOLOv8. Infrastructures 2024, 9, 3.

- [10] Wu, Y.J.; Shi, J.F.; Ma, W.X.; Liu, B. Bridge crack recognition method based on YOLOv5 neural network fused with attention mechanism. Int. J. Intell. Inf. Technol. 2024, 20, 1–25.

- [11] Su, H.F.; Kamanda, D.B.; Han, T.; Guo, C.; Li, R.Z.; Liu, Z.L.; Su, F.Z.; Shang, L.H. Enhanced YOLO v3 for precise detection of apparent damage on bridges amidst complex backgrounds. Sci. Rep. 2024, 14, 8627.

- [12] Liu, Y.F.; Feng, C.Q.; Chen, W.L.; Fan, J.S. Review of bridge apparent defect inspection based on machine vision. China J. Highw. Transp. 2024, 37, 1–15.

- [13] Liao, Y.N.; Yao, L. Bridge disease detection and recognition based on improved YOLOX algorithm. J. Appl. Opt. 2023, 44, 792–800.

- [14] Wang, Y.L.; Zhang, Z.; Yin, H. Detection method of dense bridge disease targets based on SE-YOLOv3. J. Phys. Conf. Ser. 2020, 1544, 012141.

- [15] Yu, Z.W.; Shen, Y.G.; Shen, C.K. A real-time detection approach for bridge cracks based on YOLOv4-FPM. Autom. Constr. 2021, 122, 103514.

- [16] Zhang, Y.J.; Chen, X.; Yan, W.B. Automated intelligent detection system for bridge damages with Fractal-features-based improved YOLOv7. Signal Image Video Process. 2025, 19, 243.

- [17] Ding, H.P.; Tang, Q.L. Optimization of the road bump and pothole detection technology using convolutional neural network. J. Intell. Syst. 2024, 33, 20240164.

- [18] Carvalho, R.; Melo, J.; Graça, R.; Santos, G.; Vasconcelos, M.J.M. Deep learning-powered system for real-time digital meter reading on edge devices. Appl. Sci. 2023, 13, 2315.

- [19] Samanta, A.; Hatai, I.; Mal, A.K. A survey on hardware accelerator design of deep learning for edge devices. Wirel. Pers. Commun. 2024, 137, 1715–1760.

- [20] Ma, W.X.; Cai, Z.R.; Wang, C.; Lin, Y. Edge devices modulation recognition method based on lightweight hybrid neural network. Inf. Countermeas. Technol. 2024, 3, 83–94.

- [21] Sun, F.L.; Li, Z.X.; Liang, Y.Q.; Dong, M.M.; Ge, G.Y. Detection of illegal manning of electric vehicles based on improved YOLOv5 algorithm and edge devices. Mod. Comput. 2023, 29, 1–11.

- [22] Li, X.X.; Yuan, L.; Chen, X.L.; Chen, Y.P. Edge computing technology and system development of bridge smart detection. J. Hunan City Univ. (Nat. Sci.) 2021, 30, 1–5.

- [23] Qin, L.M.; Xu, Z.; Wang, W.H.; Wu, X.F. YOLOv7-based intelligent weed detection and laser weeding system research: Targeting veronica didyma in winter rapeseed fields. Agriculture 2024, 14, 910.

- [24] Dapinder, K.; Neeraj, B.; Akanksha; Shashi, P. A swin-YOLO: Attention—Swin Transformers in YOLOv7 for air-to-air unmanned aerial vehicle detection. In Pattern Recognition; Springer: Cham, Switzerland, 2025; pp. 159–173.

- [25] Wang, L.; Bai, J.X.; Wang, P.; Bai, Y. Research on pedestrian detection algorithm in industrial scene based on improved YOLOv7-tiny. IEEJ Trans. Electr. Electron. Eng. 2024, 19, 1203–1215.

- [26] Xie, G.B.; Lin, S.Z.; Lin, Z.Y.; Wu, C.F.; Liang, L.H. Road defect detection algorithm based on improved YOLOv7-tiny. J. Graph. 2024, 45, 987–997.

- [27] Fan, Q.; Yao, L.D.; Zhao, Y.; Li, H.M.; Chen, R.H. Lightweight traffic vehicle and pedestrian target detection algorithm based on improved YOLOv7-tiny. J. Yangzhou Univ. (Nat. Sci. Ed.) 2024, 27, 34–42.

- [28] Li, Y.F.; Li, H. A novel real-time object detection method for complex road scenes based on YOLOv7-tiny. Clust. Comput. 2024, 27, 13379–13393.

- [29] Zhang, L.; Zou, F.S.; Wang, X.F.; Wei, Z.Z.; Li, Y. Improved algorithm for YOLOX-S object detection based on diverse branch block (DBB). In Proceedings of the 2022 6th International Conference on Electronic Information Technology and Computer Engineering, Xiamen, China, 21–23 October 2022.

- [30] Budiarsa, R.; Wardoyo, R.; Musdholifah, A. Face recognition with occluded face using improve intersection over union of region proposal network on Mask region convolutional neural network. Int. J. Electr. Comput. Eng. 2024, 14, 3256–3265.

- [31] Cho, Y.J. Weighted intersection over union (wIoU) for evaluating image segmentation. Pattern Recognit. Lett. 2024, 185, 101–107.

- [32] Xiong, C.; Zayed, T.; Jiang, X.; Alfalah, G.; Abelkader, E.M. A novel model for instance segmentation and quantification of bridge surface cracks—The YOLOv8-AFPN-MPD-IoU. Sensors 2024, 24, 4288.

- [33] Wang, J.X.; Liu, M.; Su, Y.H.; Yao, J.H.; Du, Y.R.; Zhao, M.H.; Lu, D.Z. Small target detection algorithm based on attention mechanism and data augmentation. Signal Image Video Process. 2024, 18, 3837–3853.

- [34] Li, J.; Liu, S.; Chen, D.; Zhou, S.; Li, C. APD-YOLOv7: Enhancing sustainable farming through precise identification of agricultural pests and diseases using a novel diagonal difference ratio IOU loss. Sustainability 2024, 16, 8855.

- [35] Ma, S.L.; Xu, Y. MPDIoU: A loss for efficient and accurate bounding box regression. arXiv 2023, arXiv:2307.07662.

- [36] Che, A.A.; Wang, C.; Lu, K.; Tao, T.; Wang, W.Y.; Wang, B. Multi-label classification for concrete defects based on efficientnetV2. In Advanced Intelligent Computing Technology and Applications; Springer: Singapore, 2024; pp. 37–48.

- [37] Qiu, C.Q.; Tang, H.; Xu, X.X.; Liang, J.; Ji, J.; Shen, Y.J. Optimized strategies for vehicle detection in autonomous driving systems with complex dynamic characteristics. Eng. Res. Express 2025, 7, 015249.

- [38] Huang, B.Q.; Wu, S.B.; Xiang, X.J.; Fei, Z.S.; Tian, S.H.; Hu, H.B.; Weng, Y.L. An improved YOLOv5s-based helmet recognition method for electric bikes. Appl. Sci. 2023, 13, 8759.

- [39] Zhang, Y.S.; Chen, G.D.; Lin, C.G.; Mou, H.L.; Xiong, H.N.; Lin, J.X. Research on bridge damage detection method based on YOLO-Bridge. J. Jiamusi Univ. (Nat. Sci. Ed.) 2024, 42, 13–17+91.

| Parameter | Initial Learning Rate | Momentum | Weight Decay | Training Epochs | Batch Size | Image Size |
|---|---|---|---|---|---|---|
| Value | 0.01 | 0.937 | 0.0005 | 300 | 16 | 640 × 640 |
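To show where the learning rate, momentum, and weight decay in the training-parameter table enter the optimization, the sketch below applies them in a single step of plain SGD with momentum and L2 weight decay. It mirrors the update rule of PyTorch's `torch.optim.SGD` with `nesterov=False` and `dampening=0`; whether the authors used exactly this variant, Nesterov momentum, per-group weight decay, or a learning-rate schedule is not stated here, so treat it as an assumption. The function `sgd_momentum_step` is a hypothetical helper written for illustration.

```python
from typing import List, Tuple

# Values taken from the training-parameter table.
LR = 0.01
MOMENTUM = 0.937
WEIGHT_DECAY = 0.0005


def sgd_momentum_step(
    weights: List[float],
    grads: List[float],
    velocity: List[float],
    lr: float = LR,
    momentum: float = MOMENTUM,
    weight_decay: float = WEIGHT_DECAY,
) -> Tuple[List[float], List[float]]:
    """One step of plain SGD with momentum and L2 weight decay (no Nesterov, no dampening)."""
    new_w, new_v = [], []
    for w, g, v in zip(weights, grads, velocity):
        g = g + weight_decay * w   # L2 weight decay folded into the gradient
        v = momentum * v + g       # update the momentum buffer
        new_w.append(w - lr * v)   # move the parameter against the buffered gradient
        new_v.append(v)
    return new_w, new_v


w, v = sgd_momentum_step([1.0], [0.5], [0.0])
print(w, v)  # first step: w = 1 - 0.01 * (0.5 + 0.0005 * 1.0)
```

With a momentum of 0.937, the buffer behaves roughly like an average over the last 1 / (1 − 0.937) ≈ 16 gradients, which smooths the noisy gradients produced by a batch size of 16.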

| Algorithm | Precision (%) | Recall (%) | F1 (%) | AP Crack (%) | AP Damage (%) | AP Exposed Reinforcement (%) | mAP (%) |
|---|---|---|---|---|---|---|---|
| YOLOv7-Tiny | 73.0 | 69.0 | 70.9 | 85.5 | 70.4 | 64.0 | 73.3 |
| YOLOv7-Tiny-DBB | 77.2 | 75.5 | 76.3 | 88.0 | 80.1 | 73.7 | 80.6 |
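The F1 and mAP columns of the detection results table follow from the other columns: F1 is the harmonic mean of precision and recall, and mAP is the unweighted mean of the per-class AP values for crack, damage, and exposed reinforcement. The sketch below re-derives both from the reported values and reproduces the table to one decimal place. It only checks the arithmetic; the IoU threshold at which AP was computed is not stated in this section, and the helper names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs and output in %)."""
    return 2 * precision * recall / (precision + recall)


def mean_ap(per_class_ap: dict) -> float:
    """mAP as the unweighted mean of per-class AP values (in %)."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Values copied from the detection results table.
results = {
    "YOLOv7-Tiny": {"P": 73.0, "R": 69.0,
                    "AP": {"Crack": 85.5, "Damage": 70.4, "Exposed Reinforcement": 64.0}},
    "YOLOv7-Tiny-DBB": {"P": 77.2, "R": 75.5,
                        "AP": {"Crack": 88.0, "Damage": 80.1, "Exposed Reinforcement": 73.7}},
}

for name, r in results.items():
    print(f"{name}: F1 = {f1_score(r['P'], r['R']):.1f}%, mAP = {mean_ap(r['AP']):.1f}%")
# YOLOv7-Tiny: F1 = 70.9%, mAP = 73.3%
# YOLOv7-Tiny-DBB: F1 = 76.3%, mAP = 80.6%
```

The per-class view also shows where the gain comes from: the largest AP improvements are for damage (+9.7 points) and exposed reinforcement (+9.7 points), while crack AP rises by 2.5 points.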

| Algorithm | Phase | Number of Layers | Parameters (M) | Computation (GFLOPs) | mAP (%) |
|---|---|---|---|---|---|
| SSD [39] | - | - | 24.28 | - | 58.9 |
| Faster R-CNN [39] | - | - | 286.16 | - | 52.1 |
| YOLOv7 | - | 415 | 37.62 | 106.5 | - |
| YOLOv7-Tiny | - | 263 | 6.23 | 13.9 | 73.3 |
| YOLOv7-Tiny-DBB | Training phase | 355 | 8.11 | 17.9 | - |
| YOLOv7-Tiny-DBB | Inference phase | 172 | 6.01 | 13.0 | 80.6 |
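YOLOv7-Tiny-DBB has 355 layers and 8.11 M parameters in the training phase but only 172 layers and 6.01 M parameters in the inference phase. This drop comes from structural re-parameterization: DBB trains with parallel branches that are algebraically merged into a single convolution for inference. The NumPy sketch below illustrates two of the underlying transformations on random tensors: folding an inference-mode BatchNorm into its convolution, and adding a zero-padded 1×1 branch into a 3×3 kernel. It omits DBB's other branches (such as the 1×1–K×K sequential and average-pooling branches). The shapes, random values, and helper names are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def conv2d(x, w, b, pad):
    """Naive stride-1 2-D cross-correlation. x: (C_in, H, W); w: (C_out, C_in, k, k); b: (C_out,)."""
    k = w.shape[2]
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h_out, w_out = xp.shape[1] - k + 1, xp.shape[2] - k + 1
    y = np.empty((w.shape[0], h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k) receptive field
            y[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return y + b[:, None, None]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BatchNorm over the channel axis of a (C, H, W) tensor."""
    scale = gamma / np.sqrt(var + eps)
    return scale[:, None, None] * (y - mean[:, None, None]) + beta[:, None, None]


def fuse_conv_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold BatchNorm into the preceding convolution, returning the fused (weight, bias)."""
    scale = gamma / np.sqrt(var + eps)
    return w * scale[:, None, None, None], beta + (b - mean) * scale


rng = np.random.default_rng(0)
c_in, c_out = 3, 4
x = rng.standard_normal((c_in, 8, 8))


def random_bn():
    # (gamma, beta, running mean, running variance) for c_out channels
    return (rng.uniform(0.5, 1.5, c_out), rng.standard_normal(c_out),
            rng.standard_normal(c_out), rng.uniform(0.5, 2.0, c_out))


w3, bn3 = rng.standard_normal((c_out, c_in, 3, 3)), random_bn()
w1, bn1 = rng.standard_normal((c_out, c_in, 1, 1)), random_bn()
zero_bias = np.zeros(c_out)

# Training-time block: two parallel conv + BN branches whose outputs are summed.
y_train = (batch_norm(conv2d(x, w3, zero_bias, pad=1), *bn3)
           + batch_norm(conv2d(x, w1, zero_bias, pad=0), *bn1))

# Inference-time block: fold each BN, zero-pad the 1x1 kernel to 3x3, then add the branches.
w3f, b3f = fuse_conv_bn(w3, zero_bias, *bn3)
w1f, b1f = fuse_conv_bn(w1, zero_bias, *bn1)
w_merged = w3f + np.pad(w1f, ((0, 0), (0, 0), (1, 1), (1, 1)))
b_merged = b3f + b1f
y_infer = conv2d(x, w_merged, b_merged, pad=1)

print(np.allclose(y_train, y_infer))  # True: one 3x3 conv reproduces the two-branch block
```

Because the merge is exact, the extra training-time capacity costs nothing at deployment, which is consistent with the inference-phase model being slightly smaller than YOLOv7-Tiny while achieving a higher mAP.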

| Experiment | DBB Module | MPDIoU | Parameters (M) | GFLOPs | mAP (%) | Time per Image (ms) | FPS |
|---|---|---|---|---|---|---|---|
| 1 | × | × | 6.23 | 13.9 | 73.3 | 21.7 | 46.1 |
| 2 | √ | × | 6.01 | 13.0 | 79.4 | 17.0 | 58.8 |
| 3 | √ | √ | 6.01 | 13.0 | 80.6 | 16.9 | 59.2 |
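In the ablation table, the FPS column equals 1000 divided by the per-image time in milliseconds, and each row's cost and accuracy can be expressed relative to the baseline in row 1. The sketch below re-derives these figures from the reported values; it is plain arithmetic on the table, and the helper names are illustrative.

```python
def fps(ms_per_image: float) -> float:
    """Frames per second implied by a per-image latency in milliseconds."""
    return 1000.0 / ms_per_image


def pct_change(before: float, after: float) -> float:
    """Relative change in percent."""
    return 100.0 * (after - before) / before


# (row, params/M, GFLOPs, mAP %, ms per image), copied from the ablation table.
ablation = [
    (1, 6.23, 13.9, 73.3, 21.7),
    (2, 6.01, 13.0, 79.4, 17.0),
    (3, 6.01, 13.0, 80.6, 16.9),
]

base = ablation[0]
for row, params, gflops, m_ap, ms in ablation:
    print(f"Row {row}: {fps(ms):.1f} FPS, params {pct_change(base[1], params):+.1f}%, "
          f"GFLOPs {pct_change(base[2], gflops):+.1f}%, mAP {m_ap - base[3]:+.1f} points")
# Row 1: 46.1 FPS, params +0.0%, GFLOPs +0.0%, mAP +0.0 points
# Row 2: 58.8 FPS, params -3.5%, GFLOPs -6.5%, mAP +6.1 points
# Row 3: 59.2 FPS, params -3.5%, GFLOPs -6.5%, mAP +7.3 points
```

Read this way, the DBB module alone accounts for most of the gain (+6.1 mAP points and about 3.5% fewer parameters), while MPDIoU adds a further 1.2 points without changing the model size, since it only affects the training loss.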

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

An, H.; Fan, Y.; Jiao, Z.; Liu, M. Research on Improved Bridge Surface Disease Detection Algorithm Based on YOLOv7-Tiny-DBB. Appl. Sci. 2025, 15, 3626. https://doi.org/10.3390/app15073626

An H, Fan Y, Jiao Z, Liu M. Research on Improved Bridge Surface Disease Detection Algorithm Based on YOLOv7-Tiny-DBB. Applied Sciences. 2025; 15(7):3626. https://doi.org/10.3390/app15073626

Chicago/Turabian Style: An, Haichao, Ying Fan, Zhuobin Jiao, and Meiqin Liu. 2025. "Research on Improved Bridge Surface Disease Detection Algorithm Based on YOLOv7-Tiny-DBB" Applied Sciences 15, no. 7: 3626. https://doi.org/10.3390/app15073626

APA Style: An, H., Fan, Y., Jiao, Z., & Liu, M. (2025). Research on Improved Bridge Surface Disease Detection Algorithm Based on YOLOv7-Tiny-DBB. Applied Sciences, 15(7), 3626. https://doi.org/10.3390/app15073626