Abstract

This paper introduces Marker-Assisted Region Scanning for Simultaneous Localization and Mapping (MARS-SLAM), a novel approach to optimizing SLAM in unknown environments. Designed to enhance autonomous exploration in extreme conditions, MARS-SLAM ensures efficient navigation while providing a systematic method for verifying mapping completion. The approach leverages virtual markers to track unexplored regions, guiding the robot through an organized and comprehensive exploration process. Markers are placed at the LiDAR sensor’s range limit in free areas, maintaining a dynamic list of regions yet to be visited. Mapping is considered complete when no markers remain, signifying full coverage of the environment. Target marker selection is based on age (creation order) and distance (path length from the robot). The method was validated in three virtual environments of varying complexity, demonstrating superior performance compared to alternative navigation strategies, including predefined zigzag routes and routes generated by Ant Colony Optimization (ACO). Experimental results show that MARS-SLAM achieves complete and accurate mapping while significantly reducing the number of poses required. Specifically, it achieves a 64.39% reduction in poses compared to ACO and 71.07% compared to zigzag navigation, highlighting its efficiency in complex environments.

1. Introduction

The concept of Simultaneous Localization and Mapping (SLAM) [1] emerged as a fundamental problem in mobile robotics, initially addressed by probabilistic methods such as EKF-SLAM [2], FastSLAM [3], and Graph-SLAM [4], which used Kalman filters and graphs for trajectory and map estimation. In the last decade (2015 to 2025), the number of publications related to SLAM per year has increased by 292.7% [5], reflecting growing interest and advancements in the field. With advances in computing, visual approaches such as ORB-SLAM [6], LSD-SLAM [7], and DSO [8] improved real-time pose estimation. In the context of autonomous driving, sensor fusion has become essential for handling dynamic environments, leading to the development of methods such as LIO-SAM [9] and VINS-Mono [10], which integrate LiDAR and IMU data to enhance localization accuracy.

Recently, the state of the art in SLAM has incorporated deep-learning-based techniques, such as DeepVO [11] and CodeSLAM [12], which leverage neural networks to learn compact environment representations and improve the robustness of visual odometry. Additionally, innovative approaches like NeRF-SLAM [13], 3D Gaussian Splatting [14], and NICE-SLAM [15] explore implicit representations to enhance high-fidelity 3D scene reconstruction. Hybrid methods such as R3LIVE [16] and NeRF-LOAM [17] combine the advantages of classical and modern techniques to overcome challenges in unstructured environments and variable lighting conditions. The integration of SLAM with Large Language Models (LLMs) [18] and semantic perception systems, such as SuMa++ [19] and SemanticKITTI [20], has demonstrated advancements in understanding complex scenes.

In the current landscape, approaches like DL-SLOT [21] and LIO-SEGMOT [22] incorporate multi-object tracking to handle dynamic obstacles, while ClusterSLAM [23] and TwistSLAM [24] improve odometry accuracy in dense urban scenarios. These advancements show SLAM evolving to meet autonomous vehicle demands.

Despite these advancements, one of the key challenges in SLAM remains ensuring the completeness of the generated maps. SLAM allows robots to map and localize simultaneously. This capability is crucial for applications ranging from autonomous vehicles [25] to space exploration [26]. Ensuring full coverage, especially in dynamic scenarios, remains a challenge.

Traditional SLAM approaches often rely on random or exploratory navigation methods that, although capable of covering most of the environment, lack efficient mechanisms to systematically explore unmapped regions [1]. This leads to shadowed areas, redundant paths, and map gaps [27,28]. Industrial robots need efficient mapping for logistics and safety. In search-and-rescue, full coverage is vital for finding survivors. The ability to accurately detect and close unexplored areas makes MARS-SLAM particularly suitable for these domains, where incomplete maps could lead to inefficiencies or critical mission failures.

Moreover, conventional methods lack a deterministic process to verify mapping completion, often resulting in unnecessary exploration that wastes time, energy, and computational resources. To address these challenges, this research proposes MARS-SLAM, a novel approach that enhances mapping completeness through the use of virtual markers placed in unexplored regions. These markers, derived from LiDAR sensor readings, dynamically guide the robot’s exploration, ensuring systematic coverage while optimizing resource usage. The key contributions of this study are:

- 1.

- A marker-based methodology for identifying and tracking unexplored areas, enabling a structured exploration process that minimizes redundant revisits.

- 2.

- An adaptive navigation strategy that prioritizes markers based on their distance and antiquity, improving mapping efficiency.

- 3.

- An optimized path-finding system that enhances navigation efficiency when direct access to a target marker is obstructed.

- 4.

- A method for identifying mapping completion, ensuring that the exploration process effectively detects when the environment has been fully mapped.

To visually illustrate the structure of MARS-SLAM and its contributions, Figure 1 presents a mind map summarizing the main components of our methodology. This diagram provides an intuitive overview of the proposed approach, highlighting its core mechanisms and objectives. Despite its advantages, MARS-SLAM faces challenges in highly dynamic environments, where frequent and unpredictable obstacles can interfere with the correct inclusion of markers. In highly dynamic environments, the main difficulty lies in the ever-changing nature of the scenario, which can compromise the reliability of the marking system and, consequently, the accuracy of navigation. Since MARS-SLAM relies on the placement and tracking of virtual markers to guide exploration, any unexpected change in the environment can render previously registered markers obsolete. For example, if a marker is placed in an area that later becomes inaccessible due to the emergence of a moving obstacle, the robot may fail in its attempt to reach that region, requiring constant reassessments of the exploration strategy.

Figure 1.

Mind map illustrating the key components of the MARS-SLAM methodology.

Thus, the research presented in this paper aims to answer the following question: How can the use of markers to identify unexplored regions optimize the SLAM process, ensuring completeness and accuracy of mapping in unknown environments? Section 2 reviews related works, exploring existing SLAM approaches and identifying the gaps that our methodology seeks to address. In Section 3, a detailed description of MARS-SLAM is provided, including the flowchart and strategies implemented for the method’s operation. Section 4 presents the experimental results obtained in various scenarios. Finally, Section 5 concludes the paper, discussing the contributions and limitations of MARS-SLAM, as well as suggesting potential directions for future research.

2. Related Works

This section presents studies on path planning and SLAM optimization, highlighting advances and limitations. Table 1 summarizes the primary algorithms, applications, and constraints. Table 2 compares key characteristics, illustrating MARS-SLAM’s contributions to completion detection and uncertainty reduction.

Table 1.

Summary of methods related to path planning and SLAM optimization, highlighting the approaches, main algorithms, application environments, and their limitations.

Table 2.

Characteristics’s comparison addressed in related works, where ✗ indicates the absence of a feature and ✓ denotes its presence.

2.1. Path Planning Approaches

In [29], a proposal is presented that integrates inertial SLAM with trajectory planning for UAVs, focusing on maximizing the accuracy of navigation estimates in environments where GPS is unreliable. The combination of information-based measures, such as entropy, with an observability analysis offers a robust methodology to control UAV actions and improve map quality and localization estimates.

In [30], a solution for the path planning problem in the context of active SLAM is presented. The main technical contribution lies in the adaptation of the D* algorithm to handle negative edge weights, a significant challenge in the domain of pathfinding algorithms due to the presence of negative cycles. The algorithm stands out for its ability to efficiently recalculate paths in dynamic scenarios with moving obstacles, maintaining smooth robot motion and reducing localization uncertainty.

In [31], an analysis of the applicability of SLAM algorithms and path planning in indoor rescue environments is proposed. The research evaluates three widely used SLAM algorithms: GMapping, Hector-SLAM, and Cartographer, combining them with the A* algorithms for global path planning and DWA for local planning. The goal is to determine the effectiveness of these combinations in simulated, emulated, and real rescue environments.

2.2. SLAM Optimization Approaches

In [32], the authors introduce the P-SLAM algorithm, which enhances the efficiency of mobile robot exploration and mapping through the prediction of environmental structures in unexplored regions. By using an environmental structure predictor, P-SLAM generates hypotheses based on observations from neighboring explored regions and compares them with the constructed map, allowing mobile robots to decide whether or not to explore those areas, saving time. The implementation of P-SLAM with a Rao–Blackwell particle filter showed effectiveness in indoor environments, reducing exploration time and speeding up the SLAM process. The Bayesian formulation derived from P-SLAM demonstrates its viability for real-time operations.

In [33], the authors develop an approach for exploring unknown environments using an exploration EM algorithm adapted to the context of pose SLAM, without the need for explicit feature modeling. The proposed framework uses point cloud segmentation to improve place recognition and virtual map construction, allowing the prediction of the uncertainty resulting from future sensing actions. The research identifies the need to improve path primitives, which are currently limited to frontier locations, to mitigate the increase in uncertainty during exploration, pointing to promising directions for future work.

In [34], the challenges of autonomous exploration and map building for mobile robots, especially in unknown environments, are addressed. The study presents a new boundary exploration method using a Double-Layer Rapid-Exploration Random Tree (DL-RRT), combined with Affinity Propagation (AP) clustering and Bayesian Optimization (BO). The DL-RRT method enhances the exploration of boundary points both globally and locally, while AP clustering optimizes the selection of boundary points by grouping and reducing their number. BO further refines exploration by prioritizing the grouped points based on desired parameters.

In [35], an approach for 3D environment exploration by unmanned ground vehicles (UGVs) is presented. The proposed method, called FIT-SLAM, combines Fisher information and navigability estimates to optimize the exploration rate and SLAM (Simultaneous Localization and Mapping) accuracy. The method suggests the use of a global navigability map that considers environmental constraints and a goal selection and path planning method that incorporates information from the landmarks detected by SLAM. The study shows that by transforming the 3D exploration space into a 2D navigability map, FIT-SLAM significantly reduces the time to search for frontier clusters and the computational cost associated with 3D voxel mapping, while also improving the exploration rate and localization accuracy.

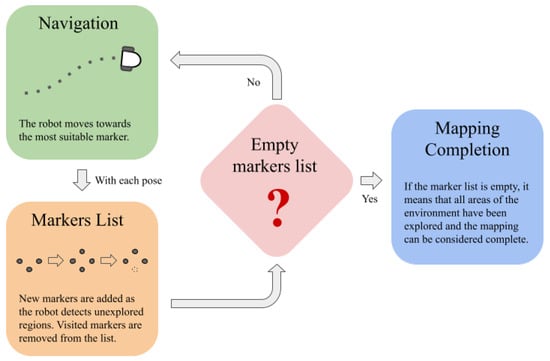

3. Marker-Assisted Region Scanning

To ensure the effective and accurate completion of mapping, a method is proposed that utilizes markers to identify and track unexplored regions on the map. The primary goal of this method is to ensure that all areas of the environment are mapped, avoiding gaps that could compromise the navigability and usefulness of the generated map. The markers play a role in this process, serving as indicators of regions that still need to be explored. As the robot progresses in building the map, it adds markers to unexplored areas based on sensor readings. These markers are stored in a list, which, over time, reflects the mapping progress. The mapping process is considered complete when the marker list is empty, indicating that no unexplored regions remain.

Initially, the robot performs a full 360° rotation, analyzing the surrounding environment and placing markers in unexplored areas, with the goal of ensuring complete coverage of the spaces around the robot. Once the full rotation is complete, the robot selects one marker as the target and moves toward that marker. The robot then chooses the next most suitable marker and repeats the process. This cycle of marker selection and navigation continues until all markers have been removed, indicating that the mapping of the environment is complete. The detailed steps of the method are described in Algorithm 1.

3.1. Update Robot State

This process continuously updates the robot’s state during navigation, determining its new pose and LiDAR measurements based on the current action and the specified target. It plays a role in maintaining the robot’s alignment with its goal, dynamically adjusting its trajectory as needed and gathering essential data to support the ongoing mapping of the environment. The algorithm plays a vital role in enabling the robot to adapt its trajectory as needed, allowing for real-time decision making that enhances its ability to navigate complex spaces.

Algorithm A1 requires the robot’s current pose (), target marker (), executed action (A), grid map (G), and path list (). It outputs the updated pose () and LiDAR measurements (L). The process involves three main steps: identifying the assigned action (rotation or movement), updating the pose and LiDAR readings, and checking for obstacles. If the path is clear, the robot moves directly to the marker. Otherwise, a path-planning algorithm determines an alternative route, storing intermediate points in to navigate around obstacles.

| Algorithm 1 Marker-Assisted Region Scanning |

Ensure: Grid map of the environment (G)

|

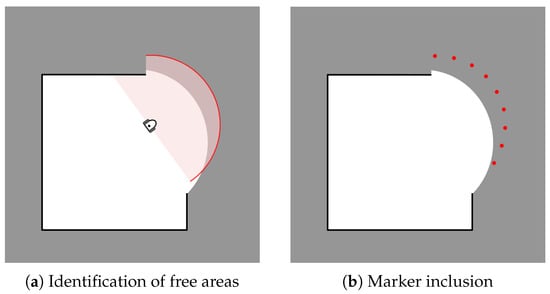

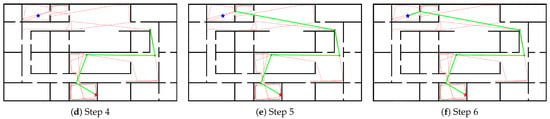

3.2. Marker Addition

Markers are created based on readings from the LiDAR, a sensor used by the robot to scan the environment. Each measurement provides the distance to the first obstacle detected along that direction. The LiDAR has a maximum range, beyond which the measurements are not considered. During the robot’s movement, at each registered pose, all LiDAR measurements are analyzed to identify potential obstacle-free areas. To ensure that markers are efficiently distributed without redundancy, the algorithm imposes a restriction: a new marker can only be added if no other marker exists within a predetermined minimum distance. This criterion is enforced by calculating the Euclidean distance between the new marker and all previously placed markers, ensuring proper spacing and preventing unnecessary overlap. Figure 2 illustrates the process of identifying free areas and adding markers.

Figure 2.

Process of identifying free areas and adding markers during the robot’s navigation. (a) shows how free areas are identified based on LiDAR readings, where the red line represents the detected free area; (b) illustrates the addition of markers in these areas, represented by red dots, ensuring a minimum distance between them.

Algorithm A2 takes the grid map (G), LiDAR measurements (L), and the existing marker list (M) as input. It checks whether a new marker can be placed by verifying if the corresponding area is still unknown (represented by a gray pixel) and if it satisfies the minimum distance requirement . If both conditions are met, a new marker is added. If a marker already exists nearby, the algorithm examines neighboring cells for unexplored areas before placing a new marker. Figure 2 illustrates this process.

3.3. Marker Selection

After the initial navigation period, the robot needs to choose a marker as a target to map an unexplored area. For this selection, the robot identifies markers that have free access, meaning it is possible to plot a direct path from the robot’s current position to the marker without encountering obstacles. Once the markers with free access are identified, the robot uses two criteria to select the target marker: age and distance. Each marker is associated with a specific pose, recorded in a pose list starting from pose 0 up to the robot’s current pose. For example, a marker created at pose 10 is considered older than one create at pose 50. The robot then selects between the oldest marker and the closest marker. If the distance to the oldest marker is more than times the distance to the closest marker, the closest marker is chosen as the target ( is a parameter adjusted based on the desired navigation behavior). Otherwise, the oldest marker is selected. When there are no markers with free access, the robot selects a target marker using the tournament method.

Algorithm A3 describes this logic in a structured way, starting with the filtering of markers with free access () and, if no such markers are found, applying the tournament selection method. After filtering the accessible markers, the algorithm then considers the distance () and the age of the oldest marker (), and compares it with the distance to the closest marker (). Based on this comparison and the parameter , the algorithm determines the target marker () that will guide the robot in its next exploration step. This efficient selection process allows the robot to balance the exploration of new areas with older markers while also considering the need to optimize the path by prioritizing closer markers when appropriate.

3.4. Marker Removal

Marker removal is a step to ensure that the map remains up to date, reflecting only the areas that still need to be explored. During the robot’s navigation, at each step, it is checked whether any of the previously added markers are located in a region that has already been mapped. Specifically, a marker is removed when its cell, as well as the neighboring cells, are no longer unknown. Figure 3 illustrates the process of map updating and the subsequent removal of markers during the robot’s navigation. Figure 3a shows the map being updated as the robot maps new areas. In these updates, previously unknown regions are converted into mapped areas, indicating that the robot has already explored these cells. Figure 3b demonstrates the marker removal process.

Figure 3.

Process of map updating and marker removal during navigation, where marker represented by red dots. (a) The map update with new information obtained by the robot. (b) The removal of markers from areas that are no longer unknown, ensuring that only unexplored regions retain markers.

Algorithm A4 takes the grid map (G) and marker list (M) as input and returns an updated list () containing only markers in unexplored regions. The process iterates through each marker’s coordinates and checks if the corresponding cell in G is still unknown. If the area has been fully mapped, the marker is discarded. If any neighboring cell remains unexplored, the marker is retained. This ensures that only markers in unknown regions are preserved, improving mapping efficiency. Figure 3 illustrates this process.

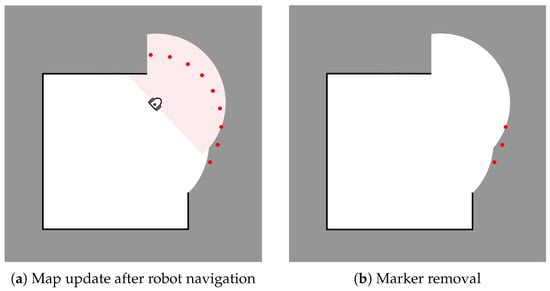

3.5. Path Finding

In the situation where the target marker does not have free access, the robot needs to return to the pose where the target marker is created to ensure a clear path to the target. To optimize the path, a method is developed to reduce the set of poses needed to reach the target marker. The approach leverages the known path recorded in the pose list to determine the smallest set of poses that connect the robot’s current position to the target marker. The method operates as follows: starting from the robot’s current position, poses are traversed at intervals defined by a previously established constant until the target marker’s pose is reached. At each step, the closest pose in the pose list index to the target marker’s pose that has a free path to the current position is identified.

The specifics of this procedure are detailed in Algorithm A5. Initially, the algorithm sets the path as an empty list and defines a search step size s. A loop continues until a valid path is found. In each iteration, the algorithm generates a set of candidate poses by calculating the indices based on the target marker’s pose index and the current pose index k, considering increments defined by the search step size. If there are no candidate poses available, the loop terminates. For each pose in the candidate set , the algorithm checks for obstacles between the current pose and the candidate pose. If a clear path is identified (i.e., no obstacles), the algorithm updates the path by adding the candidate pose and then updates the current pose index k to the candidate pose. This process repeats until a direct, obstacle-free route is established between the robot’s current position and the target marker. Figure 4 visually illustrates this path optimization process, demonstrating the stages of checking and selecting intermediate poses until the final path is defined.

Figure 4.

The set of images shows the process of locating the optimized path using the pose list. The goal is to identify a path from the red star (starting point) to the blue star (target point). The red points represent the list of posed already traversed by the robot. The green path represents the currently constructed route.

4. Simulation Platform

The MARS-SLAM simulation platform models autonomous robot navigation in complex virtual environments. The system employs a robot with a LiDAR sensor to map surroundings, detect obstacles, and explore uncharted regions using marker-based search. Developed in Python, it uses Pygame for visualization and Pandas for data management. The platform simulates a 2D environment where the robot scans and updates an occupancy map in real time.

Algorithm 2 describes the simulation’s execution flow, divided into six stages. The algorithm starts with initializing the simulation environment and instantiating the robot, with its properties specific to each map, including LiDAR range, obstacle avoidance distance, motor speed parameters, and a conversion factor for meters to pixels. During the simulation, the robot captures user events, clears the screen, updates its position, detects obstacles, updates movement control, and maps the environment. In each iteration, the robot is graphically rendered on the screen, and the map display is updated, providing a visual representation of the navigation progress.

| Algorithm 2 MARS-SLAM Simulation |

|

4.1. Simulation Environment Initialization

The virtual environment used in MARS-SLAM simulations is generated from a grayscale PNG image, with dimensions of 900 by 1600 pixels. Each pixel of the image corresponds to a unique coordinate in the simulated environment, representing a position on the map that the robot will explore. The image is loaded and processed by the simulator, which interprets the different grayscale values to define the navigability of the environment. White pixels (255, 255, 255) indicate free areas, where the robot can move without restrictions, while black pixels (0, 0, 0) represent obstacles on the map, marking regions where the robot cannot enter. Among the various mapping techniques, metric maps stand out for their detailed and precise nature [36]. Metric maps provide a detailed representation of the environment’s geometry, describing the shape, dimensions, and arrangement of objects.

Metric maps are essential in robotics for describing the environment’s geometry with high accuracy. Mathematically, a metric map is modeled as a matrix where each cell, or pixel, represents a specific state of the environment. This text describes how a metric map works, focusing on the mathematical representation and the states of the pixels. A metric map is represented as a matrix M of size , where each element represents a cell in the environment. The cell can be in one of three states:

- Free (White | 255, 255, 255): The cell is free of obstacles.

- Occupied (Black | 0, 0, 0): The cell contains an obstacle.

- Unknown (Gray | 150, 150, 150): The state of the cell has not been determined.

By using this image as a base, the simulator transforms the graphical representation into a coordinate grid, where each pixel is associated with a cell in the occupancy map. This approach allows the robot to accurately locate its position in the environment and update the map according to the explored or avoided areas. The simulation platform allows real-time graphical rendering of the environment and the robot. The map image is loaded into memory, and its visualization is configured so that the regions explored by the robot during the simulation are overlaid on the base map. Thus, the exploration progress is displayed in real time, with the path traveled and the mapped areas highlighted.

4.2. Robot Instantiation

The robot is modeled based on a simple kinematic system, where the left and right motor speeds control its direction and linear velocity. The initial speed of both motors is set as , while the maximum configured speed is . The robot’s initial position is randomly selected within the map’s boundaries. The robot is positioned with coordinates in meters, converted to pixels using a conversion rate. The initial orientation () is also randomly defined, covering the range from to .

The LiDAR sensor is simulated to take measurements in nM directions, covering a field of view of angle degrees with a configurable maximum of pixel. It is used to detect obstacles within this field of view and maximum distance. The robot is programmed to avoid obstacles that are within a distance of pixel. If an obstacle is detected within this limit, the robot adjusts its direction to avoid collisions by calculating the evasion angle based on the LiDAR readings. The evasion direction is stored in control variables, and the robot continues its path after the correction. The parameters used in the robot’s instantiation are detailed in Table 3.

Table 3.

Parameters used in robot instantiation.

4.3. Robot Position Update

The robot’s position is updated based on the left () and right () motor speeds, which determine both linear and angular movement. These speeds are used to calculate the variation in the robot’s position and orientation in space. The robot’s linear velocity (v) can be calculated as the average of the left () and right () motor velocities:

Additionally, the robot’s angular velocity (w) can be determined from the motor velocities and the distance between the robot’s wheels (L):

To calculate the variations in the robot’s x and y coordinates, as well as its orientation , the differential motion equations can be used. Suppose that during a small time interval , the robot moves with velocities v and w. The variations in the x and y coordinates and in the orientation can be calculated as follows:

These equations describe the variations in the robot’s position and orientation based on the left and right motor velocities and the time interval . They are essential for predicting the change in the robot’s pose in a 2D mobile robotics environment.

4.4. Obstacle Detection

Obstacle detection is performed by the robot’s LiDAR sensor, which checks for the proximity of objects around the robot to avoid collisions. The process focuses on analyzing a limited subsection of the LiDAR’s field of view, corresponding to the range from −25 to +25 relative to the robot’s current orientation. This choice aims to reduce processing time and concentrate resources on detecting obstacles directly in front of the robot, which are more relevant for immediate navigation.

The LiDAR sensor takes distance measurements in various directions within this range, and the data from each measurement are compared to a predefined minimum distance threshold, called mOd. If an obstacle is detected at a distance less than or equal to this value, it is identified as a potential obstacle, and its information, such as the measured distance and detection angle, is stored in an obstacle list. This process is repeated for all measurements within the defined field of view, ensuring the robot has a clear and updated view of nearby obstacles. The detection is continuous, allowing the robot to adjust its path as necessary to avoid collisions. The obstacle position information is then used by the robot’s control system to efficiently avoid obstacles.

4.5. Movement Control Update

The robot’s movement control is based on the angular speeds of the wheels: (right wheel) and (left wheel). This control is adjusted according to obstacle detection and autonomous navigation using the MARS-SLAM method. The robot alternates between avoiding obstacles and navigating toward predefined markers, depending on environmental conditions.

Initially, the algorithm checks if the mapping process has been completed. If the mapping is complete, the robot stops its movement, setting and to zero. If the mapping is still ongoing, the robot continues navigating by adjusting its movement according to the marker position and obstacles in the environment. This control mechanism allows the robot to follow the exploration strategy and avoid obstacles simultaneously, ensuring that the robot navigates smoothly through the environment while maintaining safety and efficient exploration.

4.6. Environment Mapping

During navigation, the robot uses the LiDAR sensor to scan its surrounding environment. This mapping process involves continuous data capture, which are stored in a dataframe, serving as the main repository of simulation data. Each row in the dataframe records a state of the robot at a given time instance, including information such as position, orientation, and LiDAR readings. The structure of these data allows for a detailed analysis of the robot’s behavior over time and enables precise tracking of the environment’s exploration.

The LiDAR performs measurements within a 180-degree field of view, ranging from −90 to +90 relative to the robot’s current orientation. At each simulation step, a set of distance readings is generated, where each reading corresponds to the distance to the nearest obstacle along a specific direction. These readings, along with the robot’s coordinates (x, y) and its orientation (), are stored in the dataframe, which is updated at each simulation step. The captured data include:

- Distance readings: The distances measured by the LiDAR to the detected obstacles along each scan angle.

- Coordinates (x, y): The robot’s actual position in the environment.

- Orientation (): The angle representing the robot’s direction.

- Timestamp: A time record that allows synchronization and temporal analysis of the data.

The data collected by the LiDAR are used to build a grid map of the environment. This map is composed of pixels where white pixels represent free space and black pixels represent obstacles. For each LiDAR measurement, the distance to the nearest obstacle and the corresponding scan angle (relative to the robot’s orientation) are used to calculate the position of the obstacle. The position of the obstacle is determined using the following equations:

where d is the distance measured by the LiDAR, is the scan angle, are the coordinates of the pose, is the orientation of the pose. After calculating the position of the obstacle, a line of free space is drawn from the pose’s coordinates to the obstacle. This line draws each pixel along the path as free (white). This ensures that the robot has clear information about the explored areas without obstacles. Once the free space is marked, the position of the obstacle is updated in the grid map by marking a black point at the calculated obstacle position. This point represents the detected obstacle at that particular scan angle. This process is repeated for every LiDAR measurement at each pose. As the robot moves, the map is continuously updated to reflect the current state of the environment.

5. Results

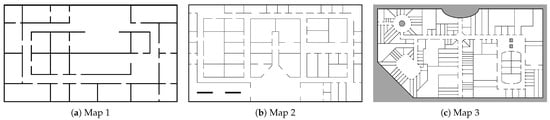

In this section, the results of the experiments conducted to evaluate the efficiency and stability of the proposed MARS-SLAM method are presented. The experiments are obtained for three distinct scenarios, aiming to analyze the performance of the approach in various contexts. The maps of the virtual environments used in the simulations are shown in Figure 5. The maps are designed with increasing levels of complexity, with Map 1 being the least complex, followed by the more challenging Map 2, and culminating in the highly complex Map 3, to thoroughly test the adaptability of the MARS-SLAM method. The simulations are performed on a computer with the following specifications: Intel(R) Core(TM) i7-10750H CPU @ 2.59 GHz, 24 GB of RAM, and Microsoft Windows 11 Home operating system (version 10.0.22631). The development environment and tools used for the implementation and simulations included Python 3.7.7 and the necessary libraries for calculations and visualization of the results.

Figure 5.

Maps of the virtual environments used in the simulations, designed with different levels of complexity to test the adaptability of the MARS-SLAM method. Map 1 presents a simple layout, Map 2 has intermediate complexity, and Map 3 is the most challenging, with narrow areas and multiple obstacles.

5.1. Experiments

To provide a robust comparison of MARS-SLAM, two alternative routes are created. The objective of these simulations is to establish a solid basis for comparison with the new proposal. To achieve this, a pragmatic approach is developed that, under the assumption of prior knowledge of the map, allows for the distribution of markers in a grid format, with a distance proportional to the LiDAR scan limit. This distribution ensures that by visiting all the markers, the mapping is complete. These routes will provide an order of magnitude for evaluating the efficiency of MARS-SLAM. Comparing MARS-SLAM with these routes will help determine whether the method maps the whole map with a similar number of poses, even without prior knowledge of the environment. If MARS-SLAM yields comparable results to the alternative routes, this would indicate that the method is efficient, as it can achieve similar results even though it has no prior knowledge of the map. To compare MARS-SLAM, two alternative routes are developed using markers distributed in a grid:

- 1.

- Standard Zigzag Route: In this route, the robot is positioned at the first marker of the first row and navigates from left to right in even rows and from right to left in odd rows, creating a zigzag pattern.

- 2.

- ACO-Optimized Route: Here, a heuristic based on the Ant Colony Optimization (ACO) algorithm [37] is applied to construct a route that is close to the optimal route.

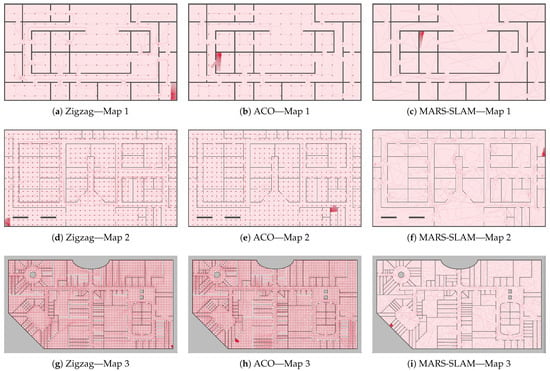

These routes are generated for the three virtual scenarios shown in Figure 5. Figure 6 presents the final mapping results (100% completion) for all three maps, comparing the performance of the Zigzag, ACO, and MARS-SLAM methods. The already-mapped areas are shaded in pink, red dots represent the markers added by the robot, and the small pink dots indicate the robot’s recorded poses throughout the mapping process.

Figure 6.

Final mapping results (100% completion) using the three methods (Zigzag, ACO, and MARS-SLAM) on three different maps. The red line represents the path taken by the robot. In the Zigzag and ACO methods, the red points indicate the markers used to construct the planned routes. To view the simulation videos, visit the YouTube playlists: Map 1 (https://www.youtube.com/playlist?list=PLJzSAmlKHHmIBUAnraCmh2obtFYAUhLN0), Map 2 (https://www.youtube.com/playlist?list=PLJzSAmlKHHmIKY1uH8QDqK_tTexXWEYEj), and Map 3 (https://www.youtube.com/playlist?list=PLJzSAmlKHHmKA6d4Yu6si1FDlAaU4POUs), all accessed on 16 March 2025.

The simulations demonstrate that MARS-SLAM successfully achieves its main objective, which is to perform a complete mapping of the environment, utilizing an efficient stopping criterion to identify the conclusion of the navigation task. Each simulation is repeated 10 times to ensure the robustness of the results. Table 4 presents the averages and standard deviations for the number of poses require for complete mapping by each method, as well as the mapping completeness. Table 5 presents the average times and standard deviations related to three time categories: preprocessing, navigation, and total simulation time. The preprocessing time refers to the initial phase before navigation, which includes setting up specific parameters for each method. Navigation time, on the other hand, represents the period in which the robot is actively exploring the environment and mapping unknown regions. To assess the efficiency of the marker selection method, three distinct versions of MARS-SLAM are tested, each using a different marker selection method: Tournament (MST), Proximity (MSP), and Age (MSA). This approach aims to determine whether the selection method impacts the effectiveness of MARS-SLAM, allowing a comparative analysis of the results obtained with each selection strategy.

Table 4.

Data showing the number of poses () and mapping completeness () used by each method in the three maps, i.e., Zigzag, ACO, and three versions of MARS-SLAM, using three different selection methods: Tournament (MST), Proximity (MSP), and Age (MSA).

Table 5.

Data showing the preprocessing time (), navigation time (), and total simulation time () used by each method in the three maps, i.e., Zigzag, ACO, and three versions of MARS-SLAM, using three different selection methods: Tournament (MST), Proximity (MSP), and Age (MSA).

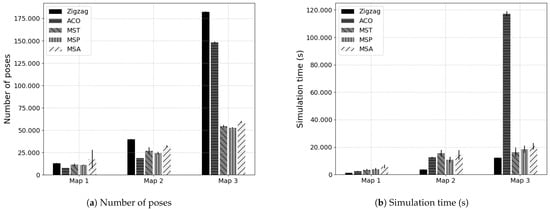

Figure 7 illustrates the comparison between the evaluated methods based on two criteria: the number of poses and the total simulation time. Figure 7a compares the number of poses used by each method across the three maps; Figure 7b compares the required simulation time. It is observed that for Maps 1 and 2, MARS-SLAM demonstrate consistency, showing a number of poses similar to the comparative methods. However, in Map 3, MARS-SLAM achieves significantly superior performance, using fewer poses, which validates its effectiveness in complex unknown environments.

Figure 7.

Comparison between the methods for the number of poses (a) and total simulation time (b) across three different maps.

Although simulation time is an important factor as it directly impacts resource costs during the SLAM process, it can be managed or even justified in many applications. On the other hand, the number of poses stands out as a more relevant indicator of the quality of the final mapping. A method that generates a complete map with a reduced number of poses demonstrates higher efficiency by avoiding redundancies and producing a more accurate and compact representation of the environment. As a result, the maps generated are more suitable for reuse and future applications since they contain sufficient data to efficiently represent the environment without compromising completeness. Thus, when evaluating the performance of MARS-SLAM, the number of poses becomes a criterion, reflecting the system’s ability to create optimized, high-quality maps while reducing unnecessary data overhead. Table 6 presents the percentage variations in the number of poses when comparing different methods across three scenarios.

Table 6.

The number of poses variation in Maps 1, 2, and 3, comparing Zigzag, ACO, MST, MSP, and MSA methods.

5.2. Discussion

Overall, MARS-SLAM yields superior results, particularly when combined with the MST strategy, which reduces the number of poses by 64.39% compare to ACO and 71.07% compare to Zigzag in Map 3. This significant reduction reflects the method’s efficiency in complex environments, such as Map 3, where the implementation of Zigzag and ACO requires the inclusion of a large number of markets. The increased number of markers made the optimization process too costly for ACO, which failed to find an efficient route within the simulation time limit. This reinforces the superiority of MARS-SLAM in handling complex environments, where its ability to reduce the number of poses translates into more efficient navigation and higher-quality mapping. A point to highlight is that MARS-SLAM is designed to operate in completely unknown environments, which significantly distinguishes it from the ACO and Zigzag methods. While these methods require prior knowledge of the map to distribute a marker grid that guides navigation, MARS-SLAM can autonomously explore and map unknown areas, adapting to real-time discoveries. This makes MARS-SLAM a more robust and versatile solution, as it does not depend on prior information or artificial setups to operate efficiently. Its ability to identify and explore unexplored regions adaptively, without the need for prior planning, positions it as a valuable tool for applications where the environment is entirely new or unpredictable.

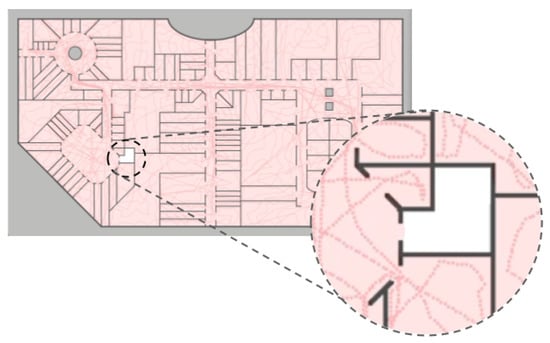

In terms of completeness, as presented in Table 4, all methods are able to fully map the environment, with minor variations in Map 3, where the complexity of the environment led to a slight reduction in mapping completeness for MST (99.8%) and MSP (99.5%). Among the 30 simulations conducted with MARS-SLAM on Map 3 (10 simulations for each strategy), in 2 of them, the method did not achieve 100% completeness. This occurs due to the specifics of the marker placement methodology, referred to as the shadow effect. As illustrated in Figure 8, the shadow effect occurs due to a combination of factors related to the LiDAR’s functionality and the marker placement methodology in the system. This effect happens when the robot, while navigating through dense or narrow regions, fails to add markers correctly in all unmapped areas. As a result, the robot perceives some unexplored areas as already mapped, since the absence of markers indicates to it that the area has been explored. This issue is partly caused by the limited reading angle of the LiDAR, which only performs measurements from −90 to 90, along with the strategy of adding new markers only at the limits of the LiDAR’s range when it detects no obstacles. In narrow environments, such as corridors or doorways, the robot may pass by a structure without successfully adding markers within the passage, as the LiDAR, depending on the robot’s orientation, may not capture the complete area (such as a narrow entrance). For example, when passing perpendicularly to a narrow doorway, the robot may only detect the doorframe and not “see” the inner space, which prevents markers from being placed in that area. This effect is also intensified in maps with dense structures, where marker dispersion is high and insufficient to cover all spaces, leading to a false perception of mapping completeness.

Figure 8.

Example of the shadow effect caused by the robot passing through narrow areas. In these situations, the LiDAR may fail to correctly detect unmapped regions, resulting in the creation of shadow zones where the robot erroneously believes the area has been fully mapped.

The shadow effect represents a critical challenge for complete mapping accuracy in MARS-SLAM, revealing the need for further investigation and improvements in the marker placement methodology. This phenomenon, which occurs mainly in narrow areas poorly detected by the LiDAR, indicates the need for adjustments in sensor configuration or complementary strategies to ensure these shadowed regions are properly mapped. Measures such as increasing marker density in more complex areas or adjusting LiDAR parameters could mitigate this effect and improve mapping completeness.

Moreover, despite the overall good performance of MARS-SLAM, particularly when compared to ACO and Zigzag, in more complex scenarios like Map 3, the challenges encountered in environments with many narrow zones or sharp angles highlight the difficulties in accurate marker placement. In these cases, the method may struggle with precise marker positioning, which in turn increases processing time and the complexity of navigation. Although MARS-SLAM still maintains a significant advantage over alternative approaches, these factors demonstrate the importance of enhancing the method to handle scenarios with low visibility or high obstacle density.

6. Conclusions

This paper proposed MARS-SLAM, a novel method that optimizes the Simultaneous Localization and Mapping process by leveraging markers to enhance navigation efficiency in unknown environments. Experimental results in three distinct virtual scenarios demonstrated that MARS-SLAM, particularly when combined with the MST strategy, significantly outperforms traditional approaches such as Zigzag and ACO. Notably, in the most complex scenario (Map 3), MARS-SLAM reduced the number of poses by 71.07% compared to Zigzag and 64.39% compared to ACO, highlighting its efficiency in minimizing redundant movements while maintaining mapping accuracy.

MARS-SLAM’s capability to autonomously explore unknown environments without requiring prior map knowledge makes it a robust and adaptable solution. Unlike Zigzag and ACO, which rely on predefined marker grids, MARS-SLAM dynamically identifies unexplored regions, ensuring efficient coverage with fewer poses. However, challenges remain in highly constrained environments, where the shadow effect from marker placement can impact mapping completeness. Future work should focus on refining sensor configurations, optimizing marker distribution, and adapting the method to dynamic environments with moving obstacles.

Additionally, real-world validation is essential to confirm the method’s applicability. Although our current implementation was developed and validated in a simulated environment, careful consideration was given to its feasibility in real-world applications. The method is already structured to handle data storage and manipulation in a manner compatible with microcontrollers, and the same control commands used in simulation can be applied to a real robotic system. However, real-time SLAM must be incorporated to ensure continuous localization during mapping. Furthermore, porting the MARS-SLAM code from Python to C++ is necessary to meet the computational constraints of embedded systems.

Another important research direction is the evaluation of alternative sensor configurations beyond LiDAR. While LiDAR provides high-precision mapping, incorporating additional sensors can improve the system’s ability. Adapting MARS-SLAM to work with RGB-D cameras, stereo vision, or radar requires refining the unmapped region detection algorithm to ensure accurate marker placement.

Author Contributions

Conceptualization, L.M.R. and N.N.; methodology, L.M.R. and N.N.; software, L.M.R.; validation, N.N. and P.V.R.d.C.; formal analysis, L.M.R. and N.N.; investigation, L.M.R. and N.N.; resources, N.N. and P.V.R.d.C.; data curation, L.M.R.; writing—original draft preparation, L.M.R. and N.N.; writing—review and editing, N.N. and P.V.R.d.C.; visualization, L.M.R.; supervision, N.N.; project administration, N.N. and P.V.R.d.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Fundação Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) grant number 88887.799125/2022-00 and Fundação de Amparo à Pesquisa do Estado do Rio de Janeiro (FAPERJ) grant number E-26/201.013/2022.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no competing interests.

Appendix A. Algorithms

| Algorithm A1 Update Robot State |

| Require: Current Pose (), Target marker (), Action (A), Grid Map (G), Path () Ensure: Updated Pose (), Updated LiDAR Measurements (L)

|

| Algorithm A2 Marker Addition |

| Require: Grid map (G), LiDAR measurements (L), Marker list (M) Ensure: Updated Marker List (M)

|

| Algorithm A3 Marker Selection |

| Require: Marker list (M), Robot’s current pose () Ensure: Target marker ()

|

| Algorithm A4 Marker Removal |

| Require: Grid map (G), Marker list (M) Ensure: Updated marker list ()

|

| Algorithm A5 Path finding |

| Require: Pose list (P), Robot’s pose index (k), Target marker’s pose index (z) Ensure: Path ()

|

References

- Aulinas, J.; Petillot, Y.; Salvi, J.; Lladó, X. The SLAM problem: A survey. In Artificial Intelligence Research and Development; IOS Press: Amsterdam, The Netherlands, 2008; pp. 363–371. [Google Scholar]

- Bailey, T.; Nieto, J.; Guivant, J.; Stevens, M.; Nebot, E. Consistency of the EKF-SLAM algorithm. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–13 October 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 3562–3568. [Google Scholar]

- Montemerlo, M.; Thrun, S. FastSLAM 1.0. FastSLAM: A Scalable Method for the Simultaneous Localization and Mapping Problem in Robotics; Springer: Berlin/Heidelberg, Germany, 2007; pp. 27–62. [Google Scholar]

- Thrun, S.; Montemerlo, M. The graph SLAM algorithm with applications to large-scale mapping of urban structures. Int. J. Robot. Res. 2006, 25, 403–429. [Google Scholar] [CrossRef]

- Wang, K.; Guo, J.; Chen, K.; Lu, J. An In-Depth Examination of SLAM Methods: Challenges, Advancements, and Applications in Complex Scenes for Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2025. early access. [Google Scholar]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar]

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 834–849. [Google Scholar]

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625. [Google Scholar] [PubMed]

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. Lio-sam: Tightly-coupled lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 5135–5142. [Google Scholar]

- Qin, T.; Li, P.; Shen, S. Vins-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar]

- Wang, S.; Clark, R.; Wen, H.; Trigoni, N. Deepvo: Towards end-to-end visual odometry with deep recurrent convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2043–2050. [Google Scholar]

- Bloesch, M.; Czarnowski, J.; Clark, R.; Leutenegger, S.; Davison, A.J. Codeslam—Learning a compact, optimisable representation for dense visual slam. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2560–2568. [Google Scholar]

- Rosinol, A.; Leonard, J.J.; Carlone, L. Nerf-slam: Real-time dense monocular slam with neural radiance fields. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 3437–3444. [Google Scholar]

- Fei, B.; Xu, J.; Zhang, R.; Zhou, Q.; Yang, W.; He, Y. 3D gaussian splatting as new era: A survey. IEEE Trans. Vis. Comput. Graph 2024. [Google Scholar] [CrossRef]

- Zhu, Z.; Peng, S.; Larsson, V.; Xu, W.; Bao, H.; Cui, Z.; Oswald, M.R.; Pollefeys, M. Nice-slam: Neural implicit scalable encoding for slam. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12786–12796. [Google Scholar]

- Lin, J.; Zhang, F. R 3 LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 10672–10678. [Google Scholar]

- Deng, J.; Wu, Q.; Chen, X.; Xia, S.; Sun, Z.; Liu, G.; Yu, W.; Pei, L. Nerf-loam: Neural implicit representation for large-scale incremental lidar odometry and mapping. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 8218–8227. [Google Scholar]

- Lyu, Z.; Zhang, J.; Lu, M.; Li, Y.; Feng, C. Tell me where you are: Multimodal llms meet place recognition. arXiv 2024, arXiv:2406.17520. [Google Scholar]

- Chen, X.; Milioto, A.; Palazzolo, E.; Giguere, P.; Behley, J.; Stachniss, C. Suma++: Efficient lidar-based semantic slam. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4530–4537. [Google Scholar]

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. Semantickitti: A dataset for semantic scene understanding of lidar sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9297–9307. [Google Scholar]

- Tian, X.; Zhu, Z.; Zhao, J.; Tian, G.; Ye, C. DL-SLOT: Tightly-coupled dynamic LiDAR SLAM and 3D object tracking based on collaborative graph optimization. IEEE Trans. Intell. Veh. 2023, 9, 1017–1027. [Google Scholar]

- Lin, Y.K.; Lin, W.C.; Wang, C.C. Asynchronous state estimation of simultaneous ego-motion estimation and multiple object tracking for LiDAR-inertial odometry. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 10616–10622. [Google Scholar]

- Huang, J.; Yang, S.; Zhao, Z.; Lai, Y.K.; Hu, S.M. Clusterslam: A slam backend for simultaneous rigid body clustering and motion estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019 2019; pp. 5875–5884. [Google Scholar]

- Gonzalez, M.; Marchand, E.; Kacete, A.; Royan, J. Twistslam: Constrained slam in dynamic environment. IEEE Robot. Autom. Lett. 2022, 7, 6846–6853. [Google Scholar]

- Takleh, T.T.O.; Bakar, N.A.; Rahman, S.A.; Hamzah, R.; Aziz, Z. A brief survey on SLAM methods in autonomous vehicle. Int. J. Eng. Technol 2018, 7, 38–43. [Google Scholar]

- Bogue, R. Robots for space exploration. Ind. Robot. Int. J. 2012, 39, 323–328. [Google Scholar]

- Blochliger, F.; Fehr, M.; Dymczyk, M.; Schneider, T.; Siegwart, R. Topomap: Topological mapping and navigation based on visual slam maps. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3818–3825. [Google Scholar]

- Zhan, Z.; Jian, W.; Li, Y.; Yue, Y. A slam map restoration algorithm based on submaps and an undirected connected graph. IEEE Access 2021, 9, 12657–12674. [Google Scholar]

- Bryson, M.; Sukkarieh, S. Active airborne localisation and exploration in unknown environments using inertial SLAM. In Proceedings of the 2006 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2006; IEEE: Piscataway, NJ, USA, 2006; p. 13. [Google Scholar]

- Maurović, I.; Seder, M.; Lenac, K.; Petrović, I. Path planning for active SLAM based on the D* algorithm with negative edge weights. IEEE Trans. Syst. Man, Cybern. Syst. 2017, 48, 1321–1331. [Google Scholar]

- Zhang, X.; Lai, J.; Xu, D.; Li, H.; Fu, M. 2D Lidar-Based SLAM and Path Planning for Indoor Rescue Using Mobile Robots. J. Adv. Transp. 2020, 2020, 8867937. [Google Scholar]

- Chang, H.J.; Lee, C.G.; Lu, Y.H.; Hu, Y.C. P-SLAM: Simultaneous localization and mapping with environmental-structure prediction. IEEE Trans. Robot. 2007, 23, 281–293. [Google Scholar]

- Wang, J.; Shan, T.; Englot, B. Virtual maps for autonomous exploration with pose SLAM. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4899–4906. [Google Scholar]

- Liu, Y.; Zhou, Z.; Sang, H.; Yu, S.; Yan, Y.; Er, M.J. Efficient Exploration of Mobile Robot Based on DL-RRT and AP-BO. IEEE Trans. Instrum. Meas. 2024, 73, 8505109. [Google Scholar]

- Saravanan, S.; Chauffaut, C.; Chanel, C.; Vivet, D. FIT-SLAM–Fisher Information and Traversability estimation-based Active SLAM for exploration in 3D environments. arXiv 2024, arXiv:2401.09322. [Google Scholar]

- Thrun, S. Learning occupancy grid maps with forward sensor models. Auton. Robot. 2003, 15, 111–127. [Google Scholar] [CrossRef]

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).