1. Introduction

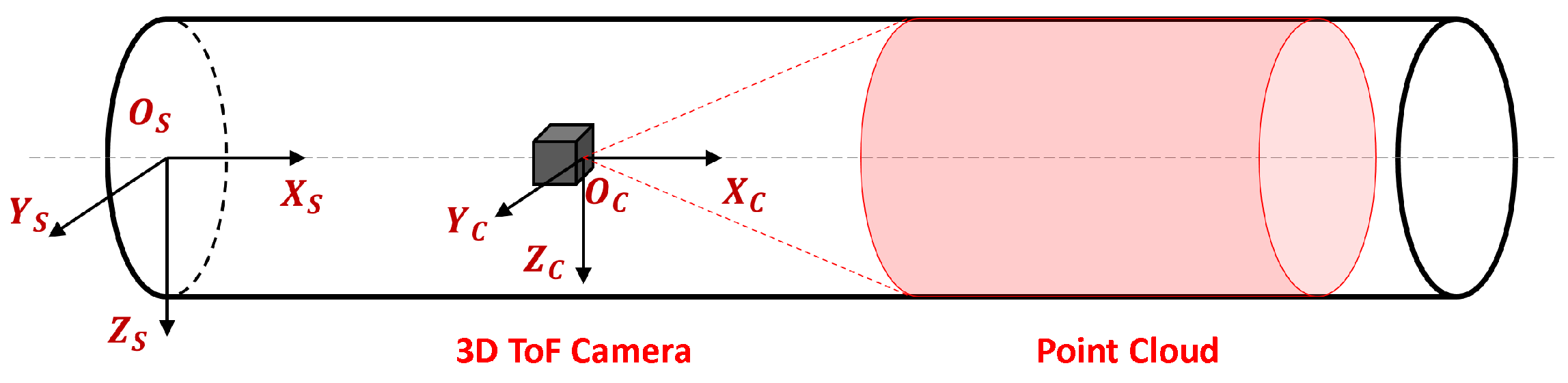

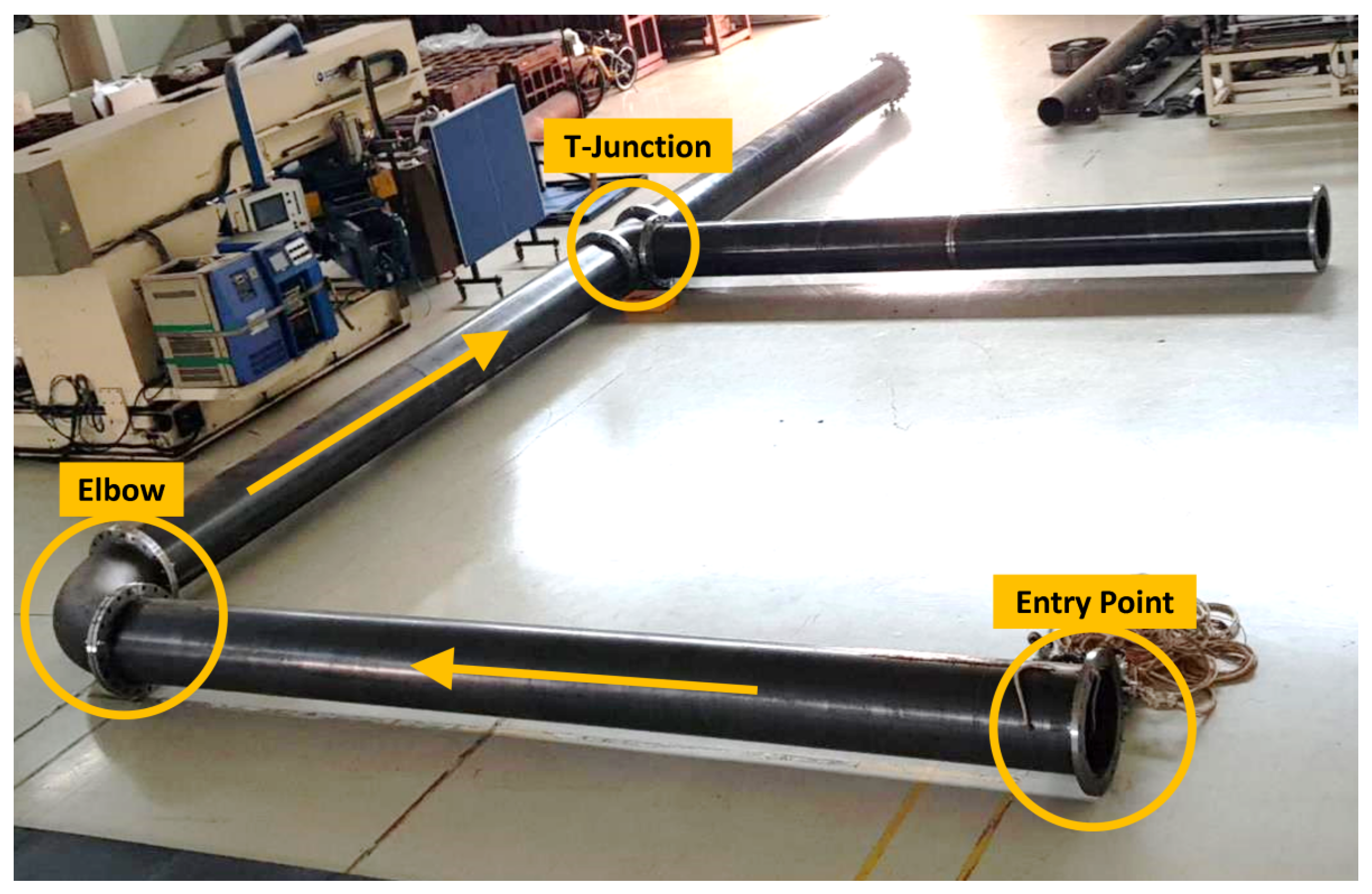

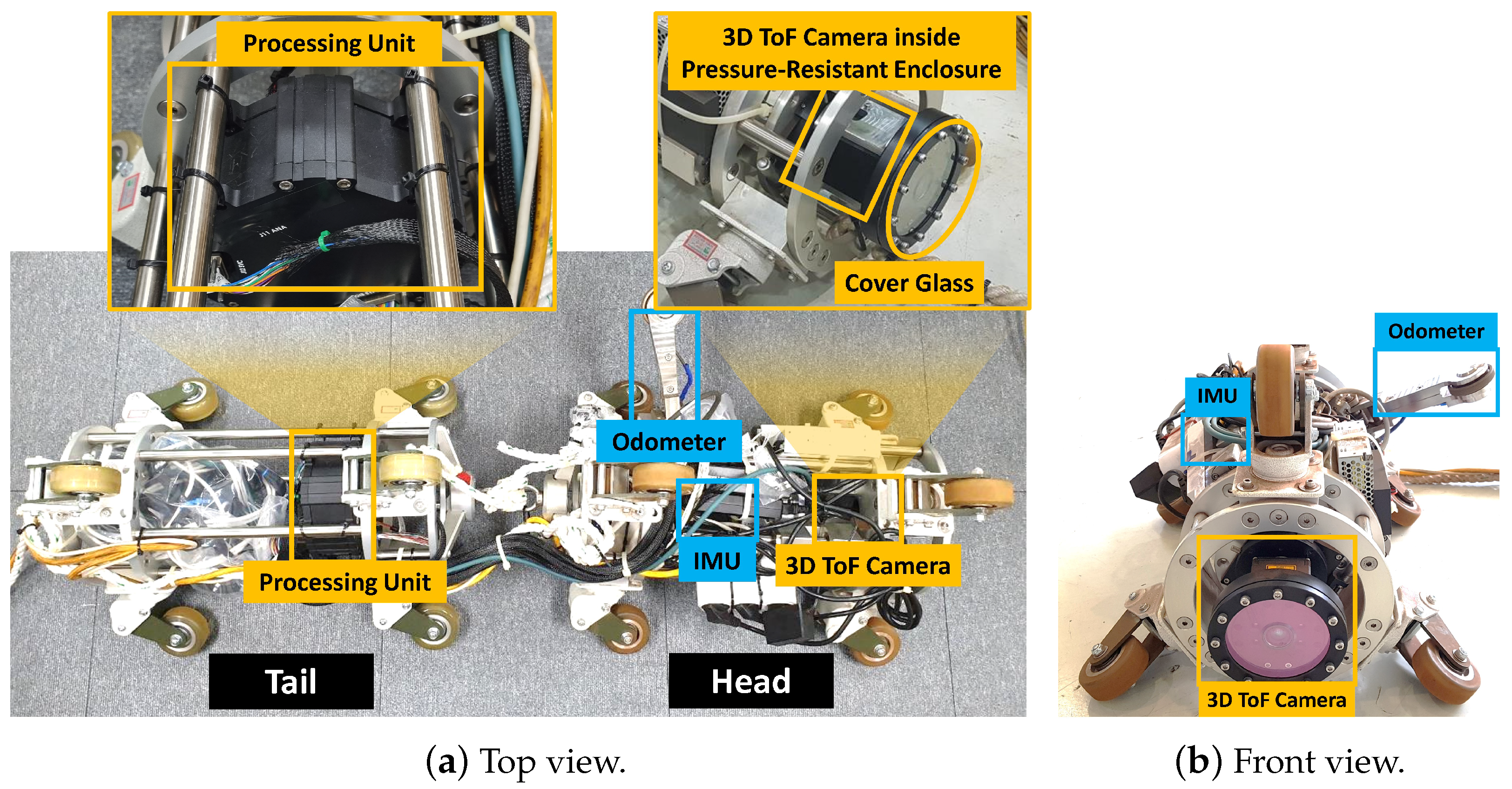

In-line inspection (ILI) plays a crucial role in pipeline management, ensuring the safety and reliability of natural gas pipelines. A pipeline inspection gauge (PIG) is a device widely used in ILI to measure irregularities in the pipe, including corrosion, cracks, and deformations [1,2,3]. In natural gas pipelines, a PIG is passively moved by the pressure of the gas flow over hundreds of kilometers, carrying various sensors, including magnetic, acoustic, and optical sensors. For efficient data collection, a PIG is required to move at a stable speed. However, features such as elbows, T-junctions, significant thickness changes, or heavy welds can cause a PIG to become stuck. Once the pressure builds up behind the stuck PIG, it can result in sudden speed surges reaching tens of meters per second [4,5]. This phenomenon, known as speed excursion, not only prevents the sensors from fully collecting data but also damages PIGs and pipelines. In lower-pressure pipelines, the speed excursion is even more severe, making PIG operation nearly impossible. To address this problem, newer PIG systems are equipped with advanced mechanisms such as pressure controls and active joints that regulate the speed so that the PIG moves smoothly through elbows, T-junctions, significant thickness changes, or heavy welds. Detecting and estimating these features along the pipeline plays a key role in the success of these control mechanisms. While significant thickness changes and heavy welds need adaptive pressure controls, elbows and T-junctions require much more complicated control maneuvers involving both pressure controls and active joints. This paper focuses on elbow and T-junction detection and estimation (ETDE).

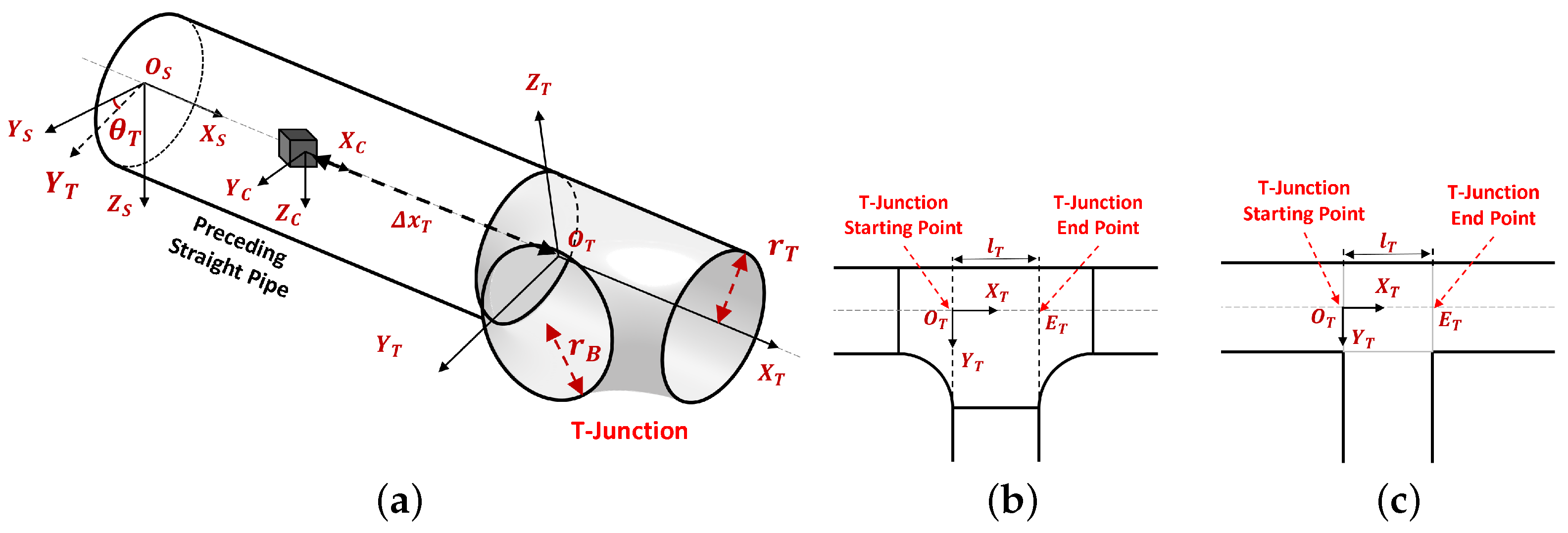

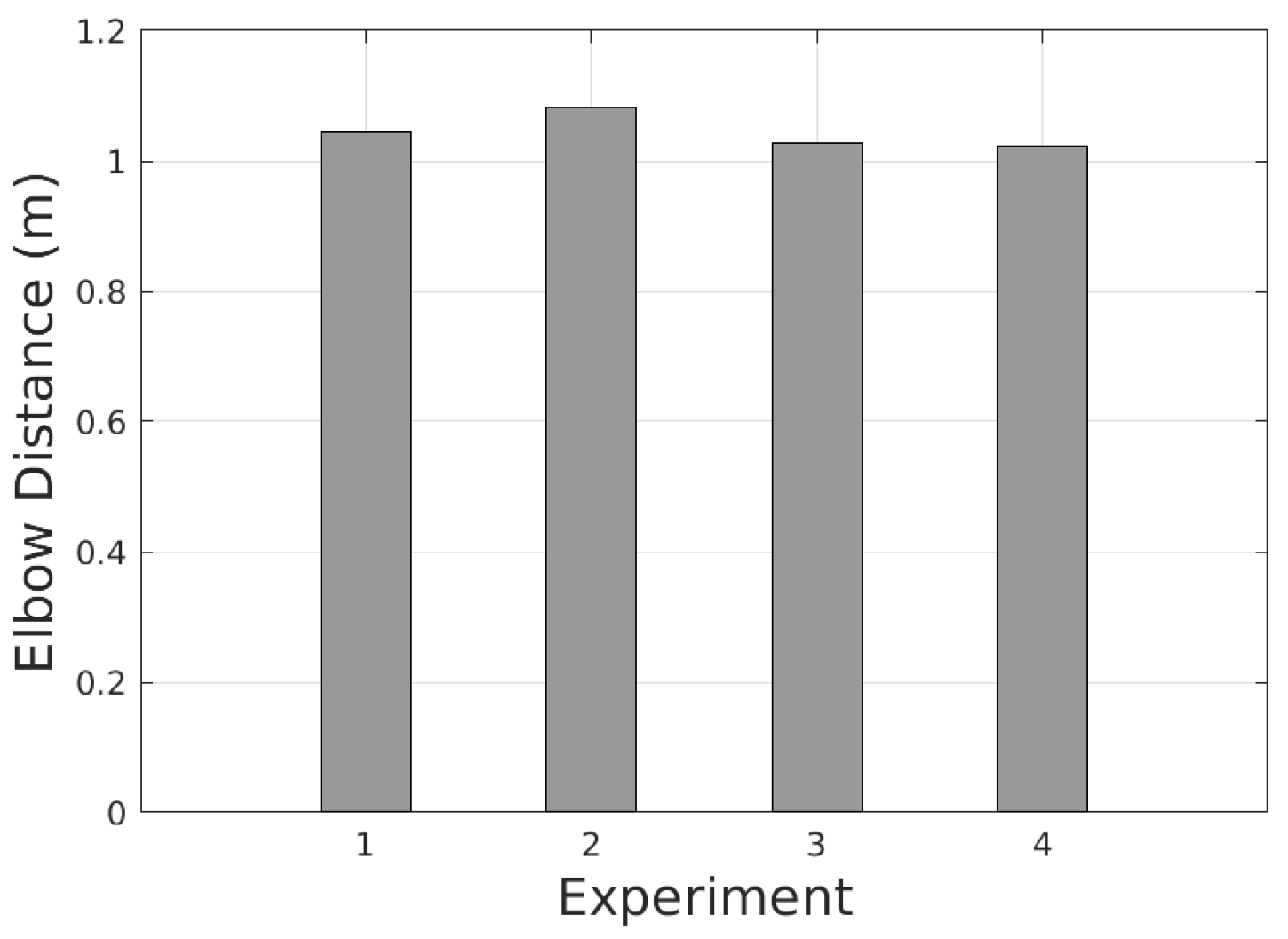

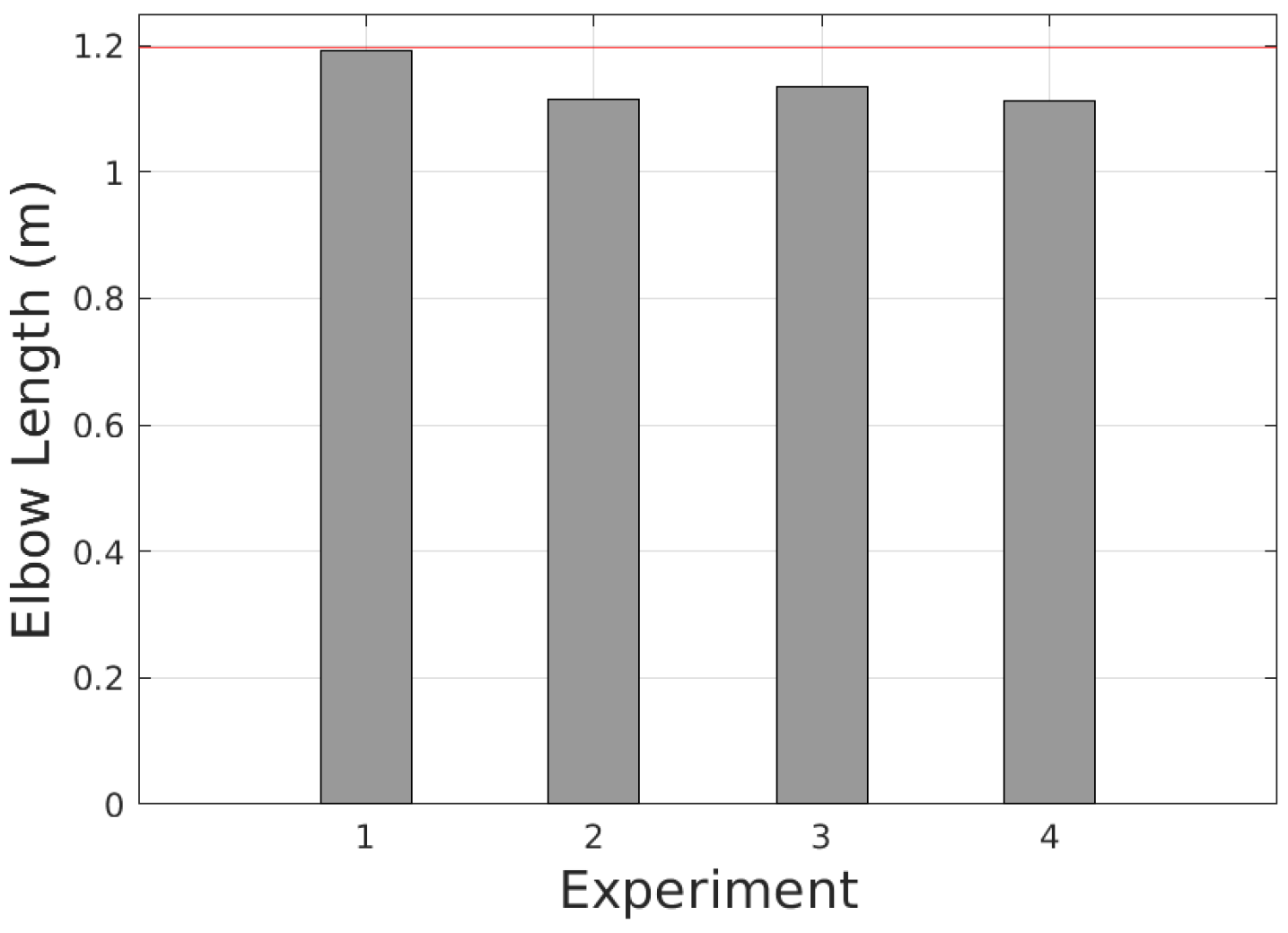

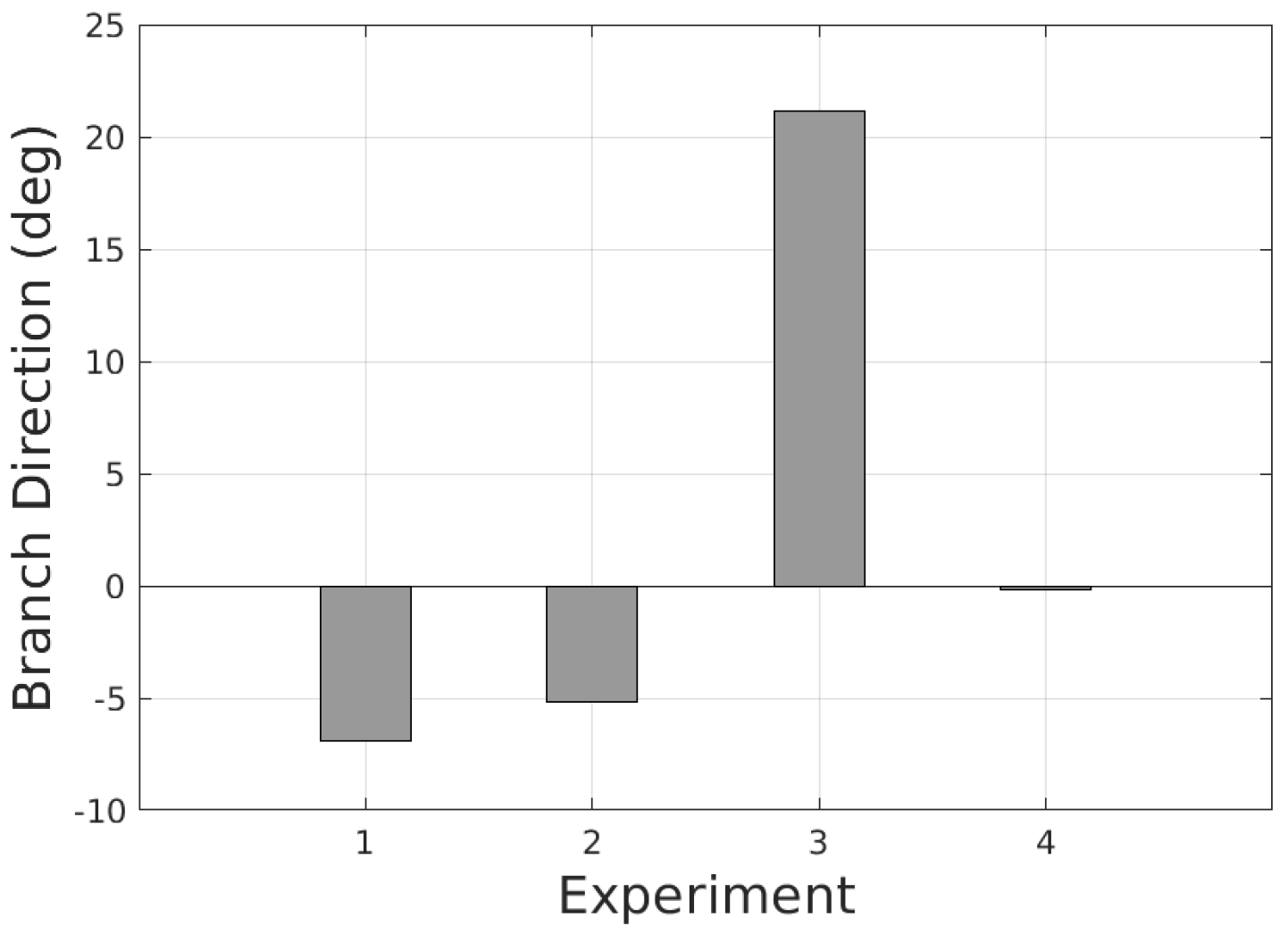

The ETDE problem involves detecting elbows and T-junctions as well as estimating their critical parameters to enable PIG devices to navigate through these features smoothly. For elbows, these parameters consist of the distance to the elbow, its direction, radius, and length. Similarly, T-junction parameters include the distance to the T-junction, the direction of its branch, the branch radius, and the length of the T-junction. To be integrated into PIG systems, ETDE must address two key constraints: limited processing power and real-time operation. ETDE runs on the PIG processing unit, whose processing power is limited by the battery capacity and the long operating distance. It must also run in real time so that the PIG can react promptly to elbows and T-junctions. These conflicting constraints present significant challenges to the ETDE problem. Given these constraints, ETDE needs to provide early and reliable detection to support maneuvering at high speed while achieving reasonable accuracy.

The existing approaches for ETDE rely on contact sensors [6,7], 2D cameras [8,9,10,11], stereo cameras [12,13], or 3D ranging sensors [14,15]. While contact-sensor approaches [6,7] offer low-complexity solutions, they are unsuitable for PIGs due to delayed detection and the potential for PIG and pipe damage at high speeds. On the other hand, 2D cameras [8,9,10,11] and stereo cameras [12,13] suffer from inherently high complexity due to image processing. The 3D ranging sensors are a promising approach for ETDE in PIG systems. However, the existing approaches [14,15] require high computation power for processing the entire point cloud, making them impractical under real-time and resource-constrained conditions. To overcome these challenges, ETDE solutions must be carefully designed and optimized to account for the unique geometric characteristics of elbows and T-junctions, ensuring both computational efficiency and real-time performance.

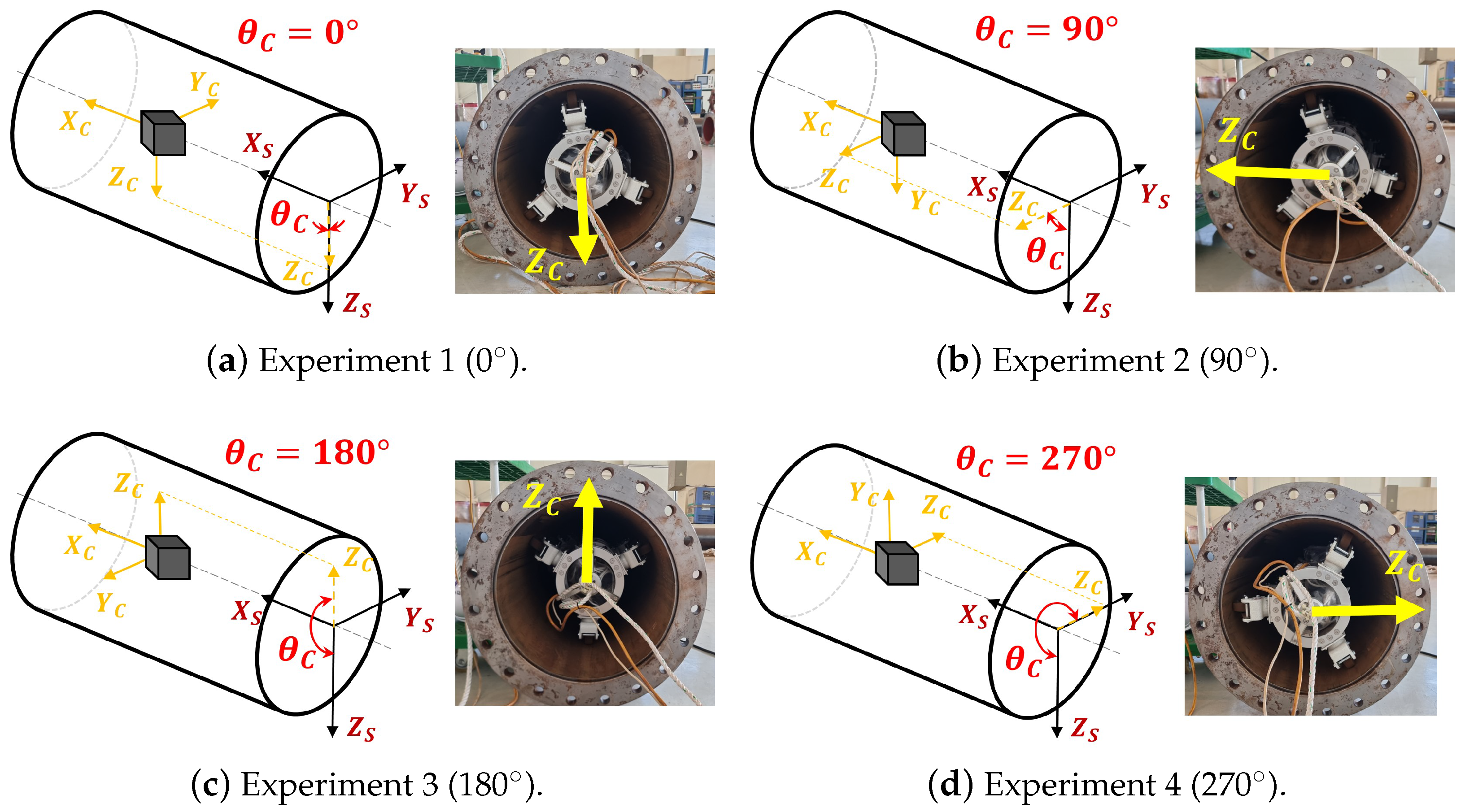

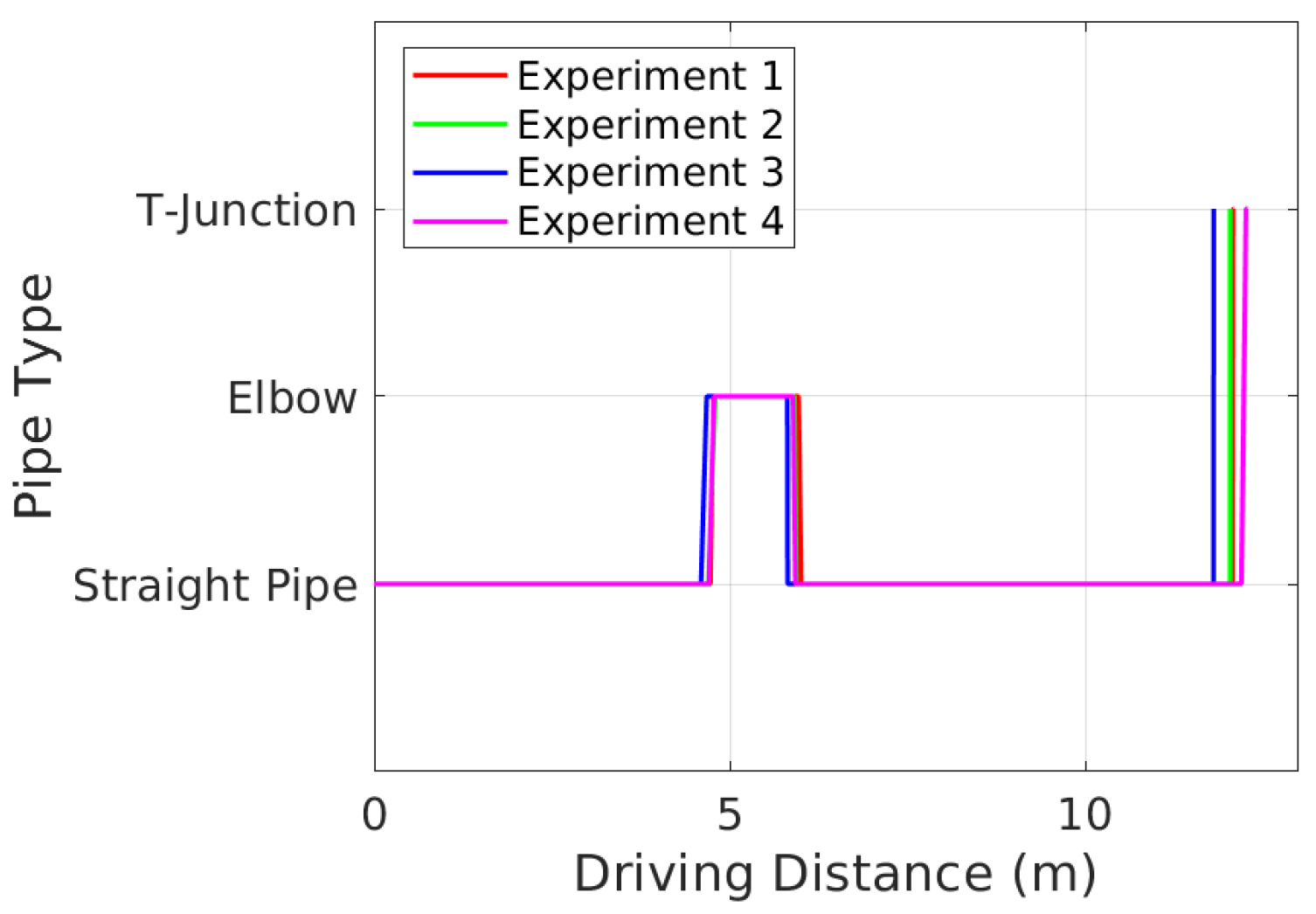

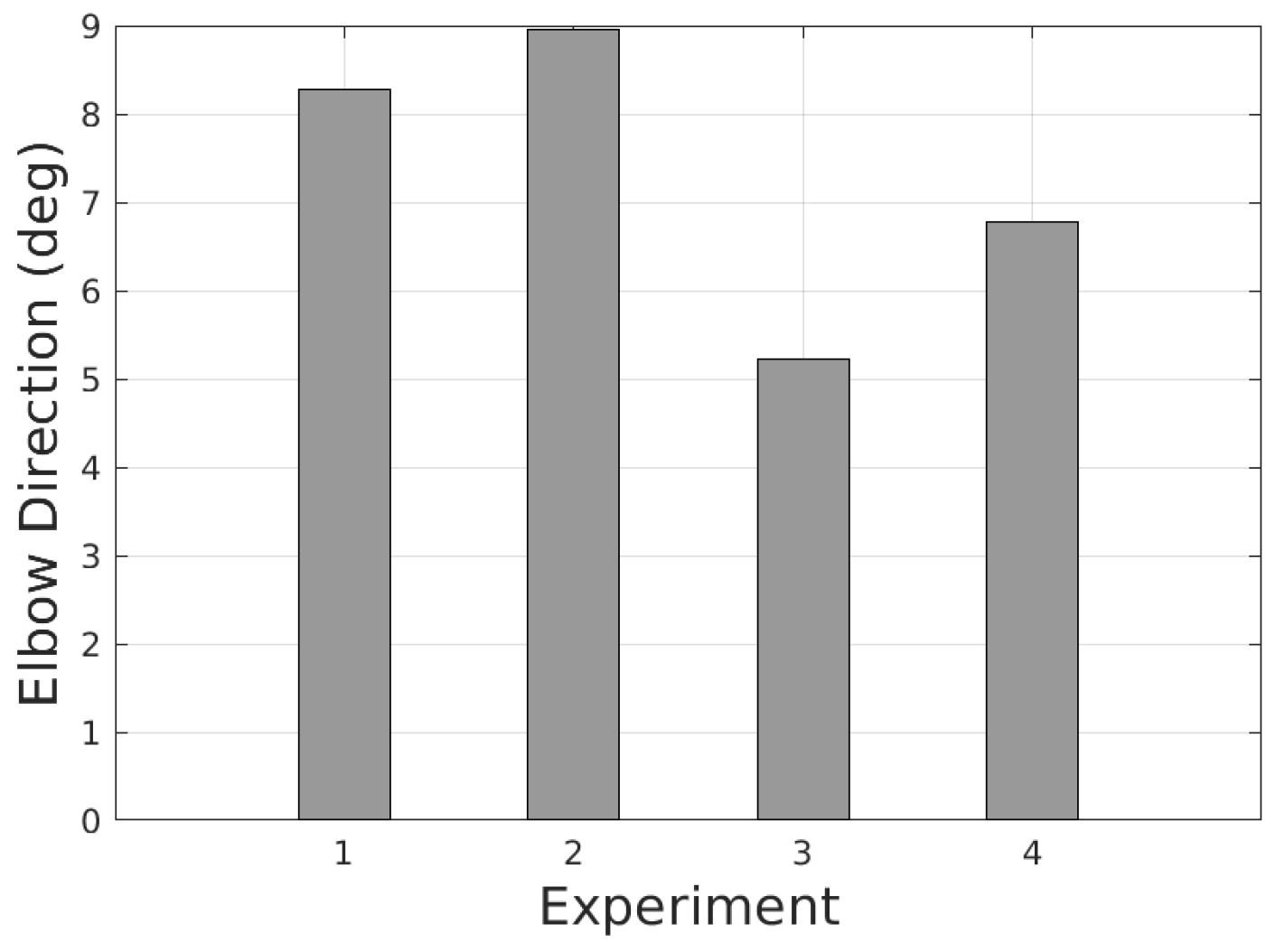

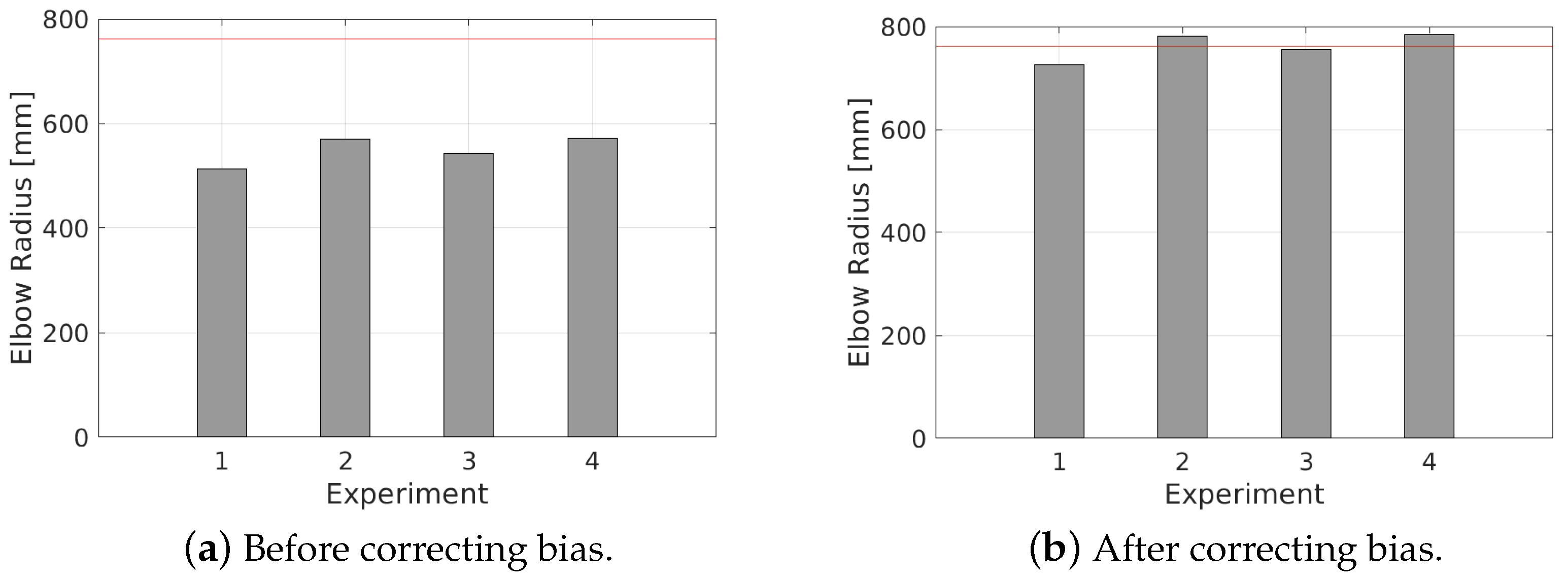

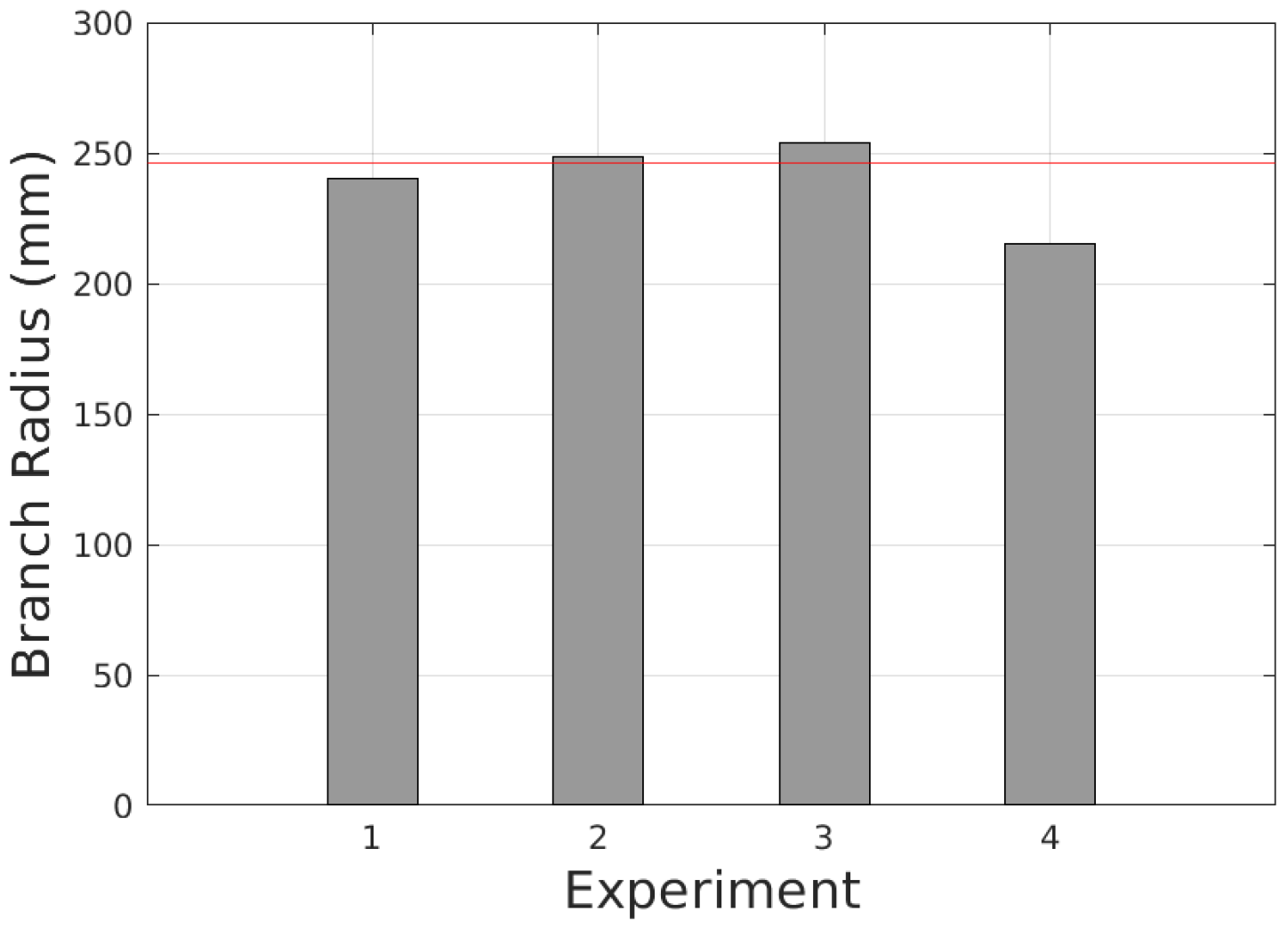

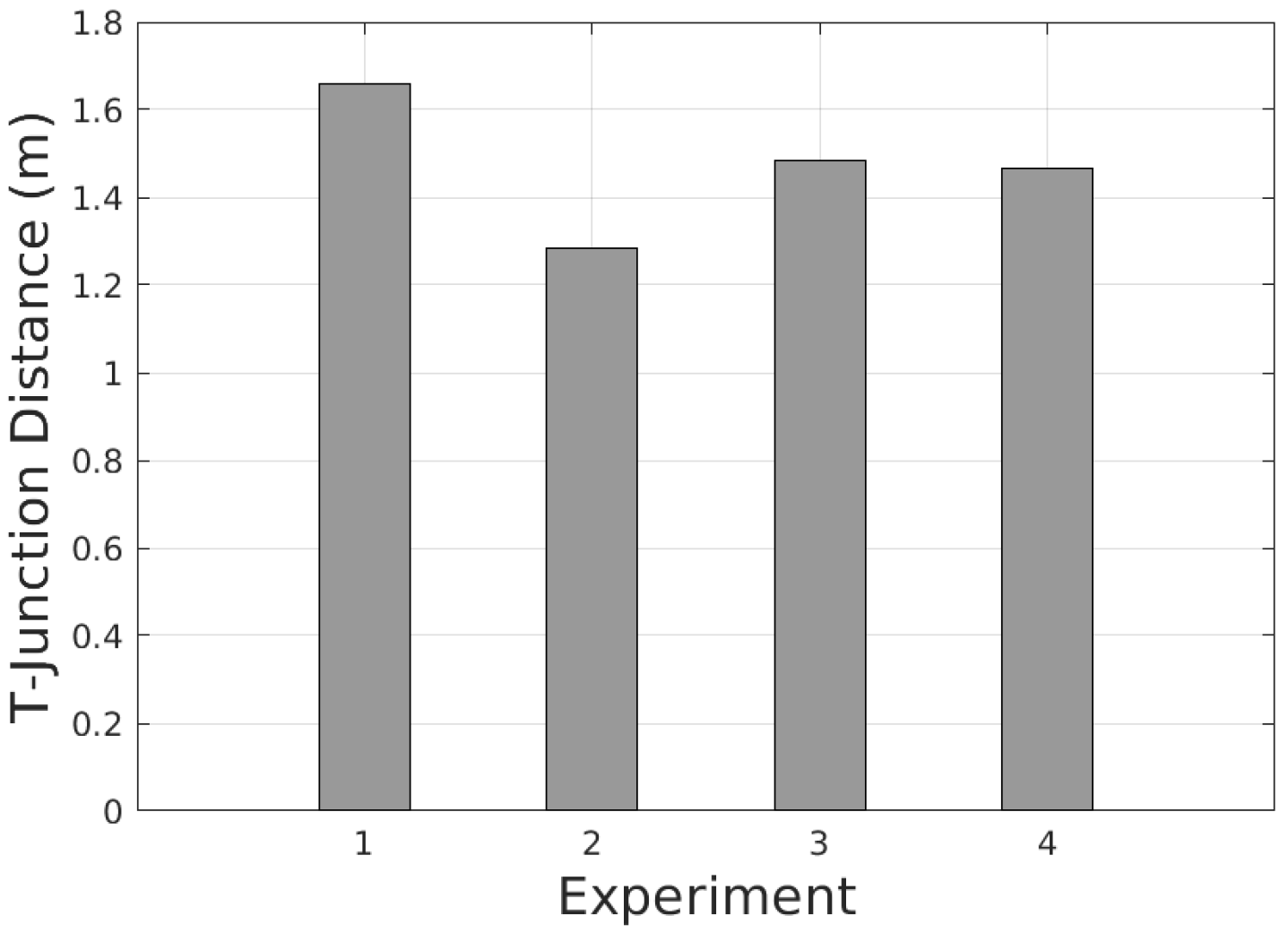

In this paper, we propose a real-time elbow and T-junction detection and estimation (RT-ETDE) framework, designed to address the challenges of computational efficiency and real-time processing in PIG systems. The RT-ETDE framework consists of a set of lightweight schemes for ETDE that rely on intelligent point cloud partition, feature extraction, and simple geometric solutions based on the characteristics of the point cloud in elbows and T-junctions. We present a linear-time sampling scheme for the camera pose and straight pipe diameter estimation. The elbow and T-junction are detected by evaluating the coverage of the point cloud in the inner and outer area from the straight pipe. The elbow direction is estimated by the deviation direction of the cloud, while the elbow radius and distance are computed via the estimation of its tangent. The elbow length is estimated by detecting the deviation of the point cloud from the elbow model. On the other hand, the branch direction of the T-junction is computed by identifying the highest intensity area. The branch radius is estimated based on the circumcircle through three points on the branch, while the T-junction distance and length are directly inferred from these points. To ensure real-time operation, several techniques are applied, including point skipping, language-dependent optimizations, and a multi-processor offloading scheme to distribute computational tasks across processors in the real PIG processing unit. The results collected with the pull-rig robot and the prototype pipeline validate that the RT-ETDE framework consistently detects and estimates the elbow and T-junction, as well as satisfying real-time constraints with a 10 Hz frame rate on the real PIG processing unit. The contribution of this paper is summarized as follows:

We propose an RT-ETDE framework which is the first solution that simultaneously achieves real-time operation, early detection, and full parameter estimation of elbows and T-junctions using 3D point clouds.

We propose a set of schemes for detecting and estimating each parameter of elbows and T-junctions in the RT-ETDE framework. Each scheme is designed to be computationally efficient and robust by relying on intelligent point cloud partition, feature extraction, and simple geometric solutions.

We present several techniques, including a multi-processor offloading scheme, to ensure the real-time constraint.

We validate the performance of the framework with real experiments. The results show that the detection and estimation are consistent.

The processing time result on the real PIG processing unit indicates that the RT-ETDE framework satisfies the 10-Hz real-time constraint.

The remainder of this paper is organized as follows. Section 2 introduces related works. Section 3 describes the system model and defines the problem. In Section 4, we present our RT-ETDE framework. The real-time adaptation techniques are presented in Section 5. The numerical results are discussed in Section 6, and finally, the conclusion of this paper is given in Section 7.

2. Related Works

ETDE under limited processing power and real-time constraints is critical for modern PIG control systems in natural gas pipelines. Existing methods for ETDE are primarily designed for short pipelines or slower-moving, non-isolated robots, where these constraints are less critical. These approaches can be categorized based on the sensors used: contact sensors [6,7], 2D cameras [8,9,10,11], stereo cameras [12,13], and 3D ranging sensors [14,15]. They are summarized in Table 1.

The first approach involves attaching contact sensors to the front of the robot to detect elbows and T-junctions by physically interacting with the inner pipe surface. In [6], three contact sensors are evenly placed circumferentially to detect elbows by observing deviations in the center point of the contact points. Consecutive center points are aggregated to estimate the direction and radius of the elbow. Similarly, ref. [7] uses four contact sensors to detect elbows, where the direction and position are computed from the two most deviated sensors. Because of their simple processing, these approaches suit real-time systems with limited processing power in general. However, they are unsuitable for PIGs in natural gas pipelines since they detect features only after partial entry into the elbow, limiting the reaction time of the control system. Moreover, at high speeds, contact sensors risk damaging both the pipelines and themselves.

The second approach employs a single 2D camera to detect the elbow and T-junction. In [8], a camera paired with an LED illuminator is used to detect elbows and T-junctions by analyzing high-reflection areas caused by small incident angles at the elbows and T-junctions. The location and shape of these areas in the image are fed directly to a fuzzy logic control algorithm. To improve robustness to lighting variations, a deep learning technique based on convolutional neural networks is introduced in [9] to detect elbows and T-junctions from 2D camera images. In [10], a rotating line-shaped laser paired with a 2D camera generates consecutive images to form a full scan of the pipe. The elbow and T-junction create a gap area in the scanning image. By analyzing the shape and position of this gap area, the elbow and T-junction can be detected, and their direction is also estimated. The approach in [11] uses a camera paired with four circle-and-dot laser pointers to detect the elbow. The circle and dot can only be seen in the image if an elbow exists in front of the robot. The direction and distance to the elbow can be inferred by comparing the distortion pattern of circles and dots to known patterns. These approaches rely on image processing, whose high computational complexity conflicts with the PIG constraints. Additionally, relying on the deformation of a pattern, which is abstract information, leads to late detection and partial parameter estimation with low granularity.

The third approach involves a stereo camera. In [12], the existence of a T-junction is detected by edge detection using one camera of the stereo pair, while stereo matching between both cameras is performed to find the distance. However, detection and distance measurement with the stereo camera are not accurate in the pipeline environment due to unclear features on the pipe wall. To address this issue, the approach in [13] additionally uses a second distance measurement mechanism based on a rotating laser profiler. When the robot is close to the T-junction detected by the stereo camera, the highly accurate laser profiler is activated to detect and measure the distance to it. These approaches share the same computational complexity issues as the 2D camera approaches.

The fourth approach utilizes 3D ranging sensors. In [

14], a pseudo-3D ranging sensor using a rotating 2D LIDAR detects elbows and T-junctions by recognizing outlier points inside or outside the straight pipe. The direction and radius of elbows are estimated by fitting circles to LIDAR data at various angles, but this scheme depends heavily on accurate calibration and is sensitive to misalignment, limiting its reliability at longer distances. In [

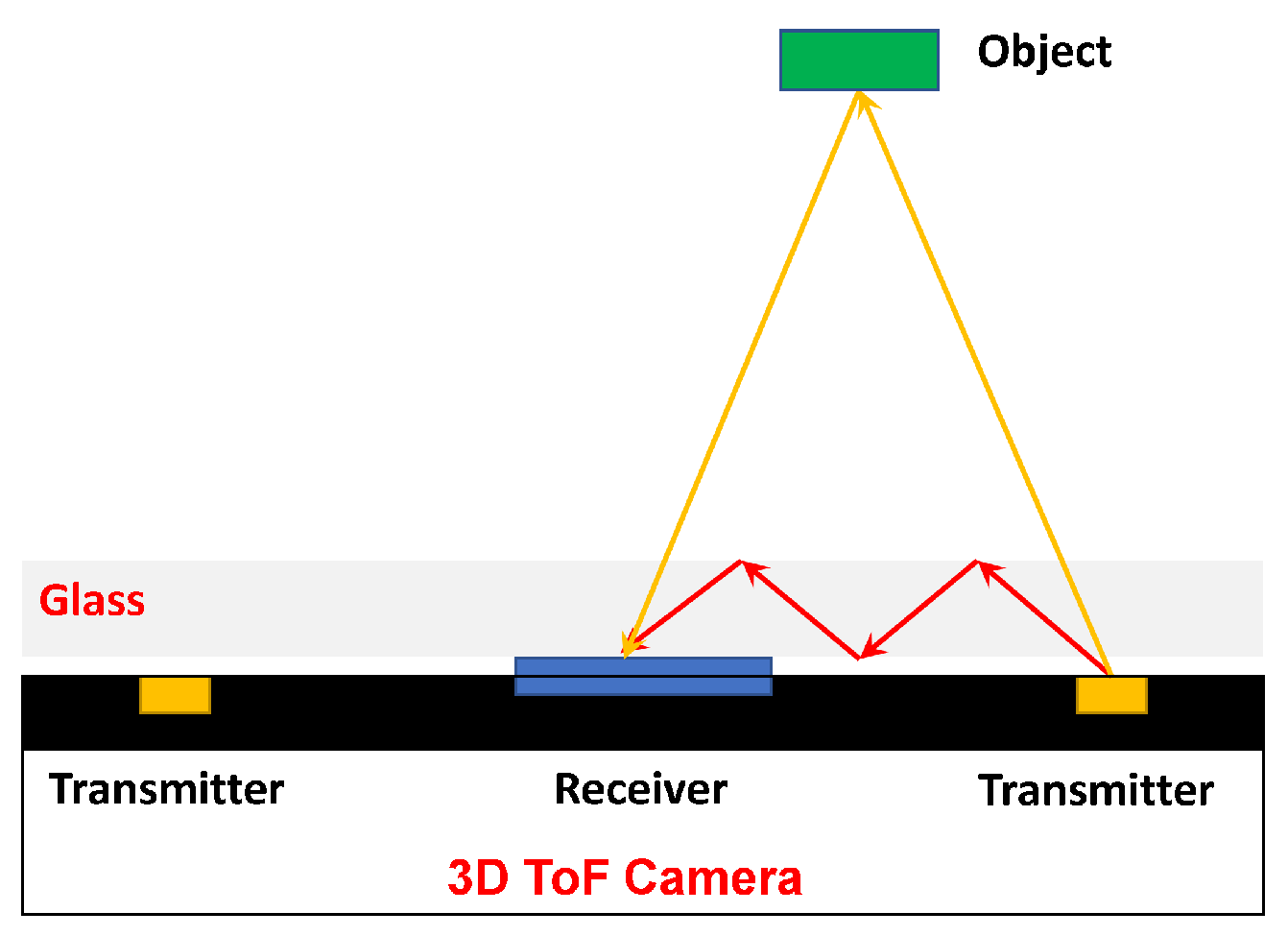

15], a 3D time-of-flight (ToF) camera is employed to model the straight pipe and identify outlier points. These points are grouped into blobs, with gradients analyzed to distinguish between elbows and T-junctions. However, both approaches require processing the entire point cloud, resulting in excessive computational complexity.

The fourth approach utilizes 3D ranging sensors to detect and estimate the elbow and T-junction. In [14], a pseudo-3D ranging sensor created by rotating a 2D LIDAR is mounted at the front of the robot. The elbow and T-junction are detected by recognizing the existence of points inside or outside of the straight pipe, respectively. Additionally, to estimate the direction and radius of the elbow, the 2D LIDAR data at each rotating angle are fitted with a circle, and the rotating angle with the smallest fitting error indicates the direction of the elbow. However, the rotation mechanism requires multiple-frame processing and careful calibration. Additionally, the elbow direction and radius estimation are sensitive to misalignment of the robot inside the pipe and are only effective at close distances. In [15], a 3D ToF camera is used to avoid these multiple-frame issues. The measured points are first fitted to find the circular cylinder model of the straight pipe. Then, the measured points that are outliers from the straight pipe model are considered candidate regions. They are first grouped into blobs of connected pixels. Gradients of points inside a blob and between blobs are used to evaluate the shape change inside a blob and between blobs, respectively. Blobs with a small internal shape change and large changes relative to the other blobs are considered to be elbows or T-junctions. Only the distance to these features is estimated in this work. In general, both approaches in [14,15] rely on processing all measured points, which has high computational complexity.

The existing approaches share three common issues when considering their application to PIG systems: (1) excessive processing complexity for real-time and resource-constrained systems, (2) late detection leaving insufficient time for corrective maneuvers, and (3) incomplete estimation of parameters. In this paper, we address these issues by proposing the RT-ETDE framework using a 3D ToF camera that consists of a set of lightweight schemes for ETDE. Unlike [14,15], RT-ETDE does not use the whole point cloud for detection and estimation but intelligently partitions the point cloud into groups and extracts only simple representative features, such as the centroid, the extreme point, or the number of points, to apply simple geometric solutions. Additionally, we propose several real-time adaptation techniques to ensure the real-time constraint. This is the first work that supports early detection and full parameter estimation of elbows and T-junctions in real time.

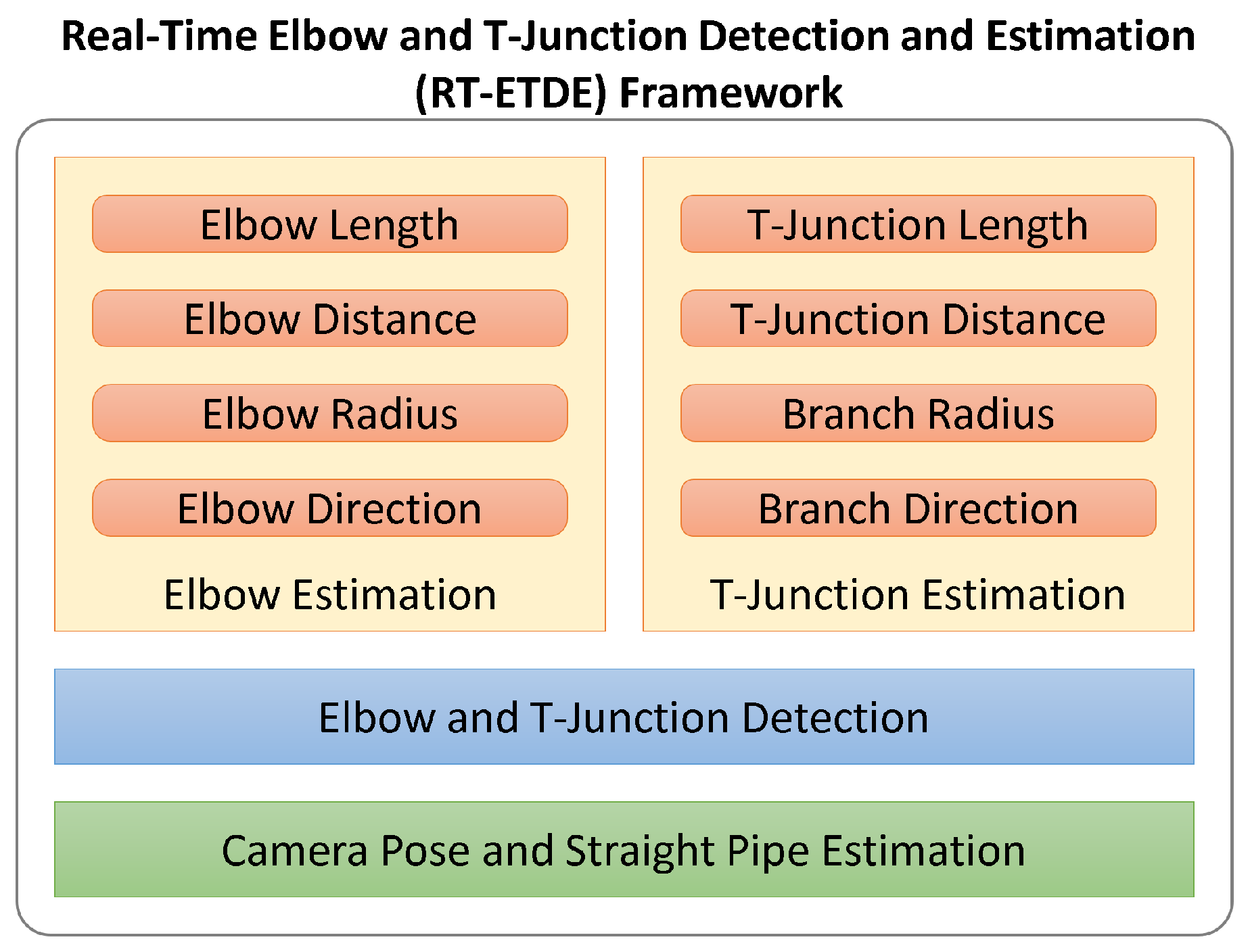

4. Real-Time Elbow and T-Junction Detection and Estimation (RT-ETDE) Framework

In this section, we propose the real-time elbow and T-junction detection and estimation (RT-ETDE) framework consisting of four blocks, as shown in Figure 4. The first block estimates the camera pose in SCS and the straight pipe model. It enables the transformation of the camera point cloud to SCS and the identification of points on the straight pipe. The second block detects the presence of the elbow and the T-junction. Upon detection, the framework activates either the elbow or T-junction estimation accordingly. When an elbow is detected, the elbow estimation block estimates the elbow direction, radius, distance from the 3D ToF camera, and length. Similarly, for a T-junction, the branch direction, radius, distance from the 3D ToF camera, and length are estimated.

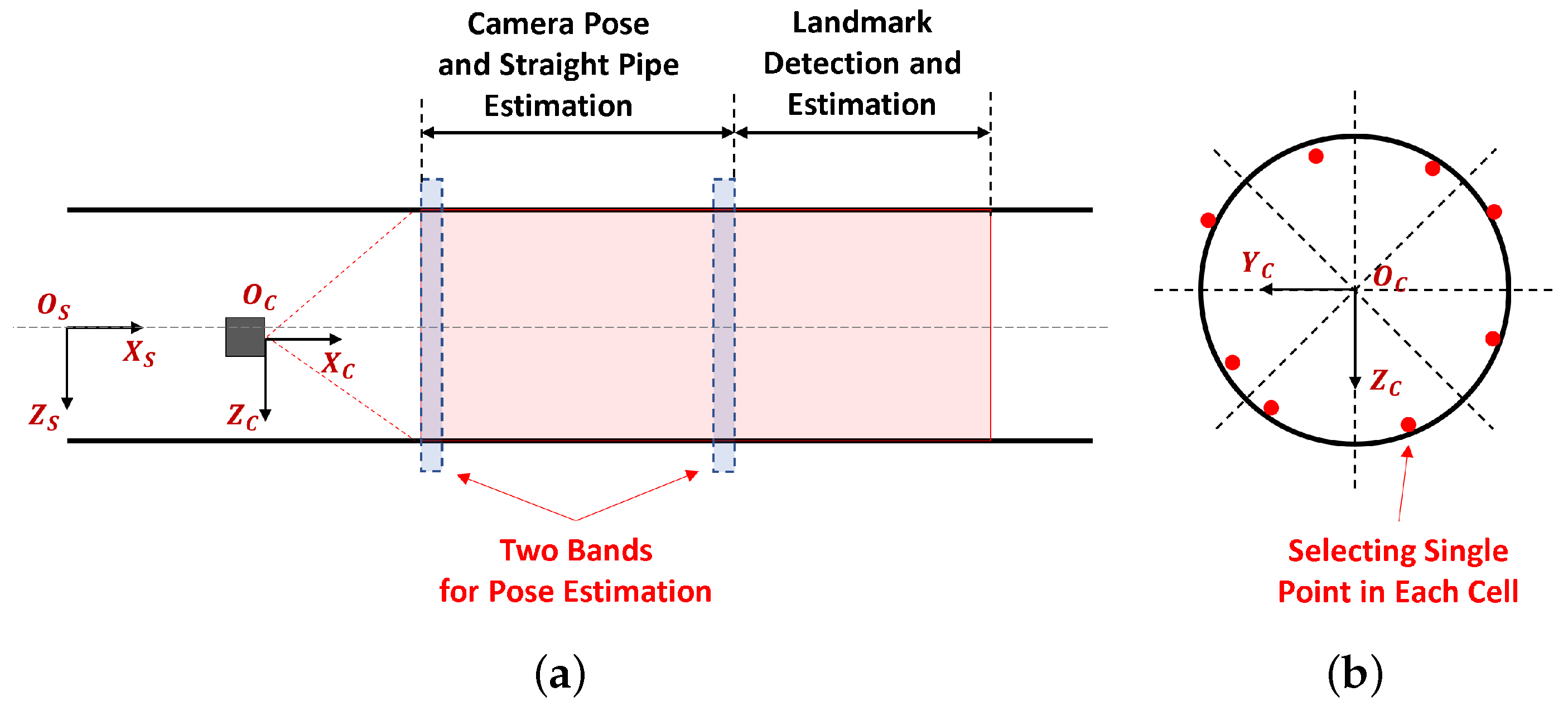

4.1. Camera Pose and Straight Pipe Estimation Based on Linear-Time Point Sampling

Camera pose and straight pipe estimation is an important preprocessing step in the RT-ETDE framework. Camera pose estimation in the straight pipe allows the transformation of the point cloud to SCS, which is necessary to remove the effect of the misalignment between the camera and the straight pipe. On the other hand, the straight pipe estimation provides the elliptic cylinder model of the pipe, which can be used to separate points on this pipe and points on the elbow and T-junction.

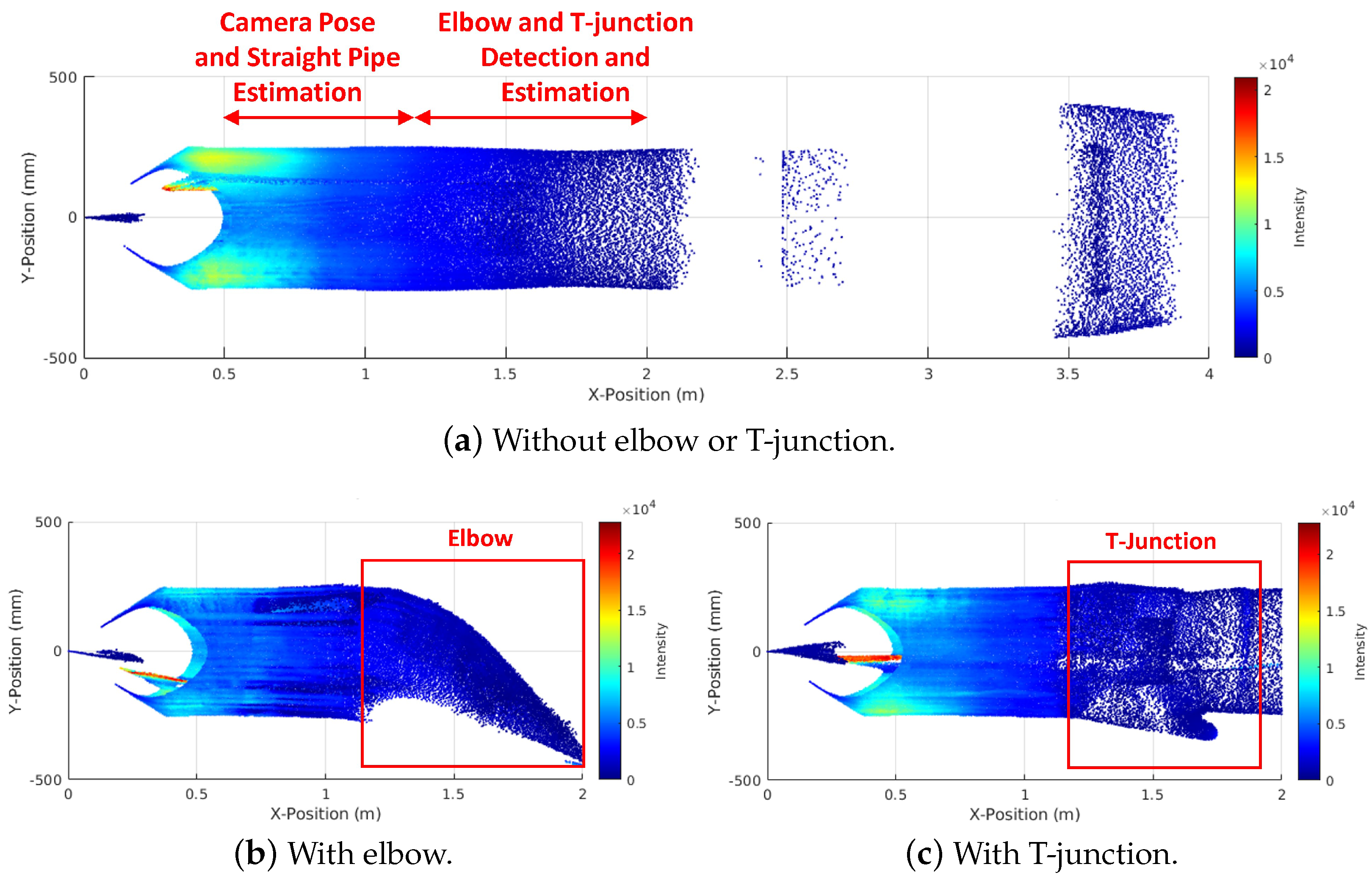

This process uses a portion of the point cloud closer to the 3D ToF camera, reserving the remainder for ETDE, as illustrated in Figure 5a. We apply the camera pose and pipe diameter estimation scheme based on the elliptic cylinder fitting in [17]. The scheme estimates a 7-tuple comprising the major and minor axes of the elliptic cylinder representing the straight pipe and the camera pose in the SCS, which consists of roll, pitch, yaw, y-displacement, and z-displacement. This scheme finds the optimal pose that transforms the point cloud to the SCS so that it fits the elliptic cylinder represented by the optimal major and minor axes.

The estimation scheme in [17] uses the entire point cloud for fitting, which is unsuitable for real-time operation. To address this issue, we present a linear-time point sampling scheme. Preliminary analysis revealed that, for the same number of points M, the optimal point selection has two properties: (1) the points lie on two parallel planes positioned farthest from each other, and (2) on each plane, the points are evenly distributed across circumferential angles around the pipe cross-section. However, selecting points closest to a plane and evenly distributed over circumferential angles requires sorting, which cannot be achieved in linear time. To overcome this, we present a near-optimal linear-time point sampling scheme. We expand each plane into a band with a fixed thickness along the plane normal, as illustrated in Figure 5a. Any point in this band is considered to belong to the plane. Then, we divide the circumferential angle into cells and select a single point in each cell, as illustrated in Figure 5b. As M increases, the points in the cells converge to an even distribution over the circumferential angles. Given the bands and cells, point sampling becomes the problem of selecting the points in M cells in two bands. To minimize the processing time, we choose the first available point in each cell during a single iteration through the point cloud. The iteration is terminated as soon as M points are selected, ensuring linear-time complexity.
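The single-pass selection described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes points are `(x, y, z)` tuples with `x` roughly along the pipe axis, and the names `sample_points`, `band_ranges`, and `cells_per_band` are hypothetical.

```python
import math

def sample_points(points, band_ranges, cells_per_band):
    """Keep the first point seen in each (band, angular cell) during one pass.

    points:         iterable of (x, y, z) tuples; x is assumed to run roughly
                    along the pipe axis (an assumption of this sketch)
    band_ranges:    list of (x_min, x_max) intervals, one per band
    cells_per_band: number of angular cells per band
    """
    target = len(band_ranges) * cells_per_band
    selected = {}
    for x, y, z in points:
        for band_idx, (x_min, x_max) in enumerate(band_ranges):
            if x_min <= x <= x_max:
                # Circumferential angle mapped to [0, 2*pi), then to a cell index.
                angle = math.atan2(z, y) % (2.0 * math.pi)
                cell = min(int(angle / (2.0 * math.pi) * cells_per_band),
                           cells_per_band - 1)
                # setdefault keeps only the first point that lands in a cell.
                selected.setdefault((band_idx, cell), (x, y, z))
                break
        if len(selected) == target:
            break  # every cell is filled, so stop early
    return list(selected.values())
```

Because each point is inspected once and the number of bands is constant, the cost grows linearly with the number of points visited, and the loop stops as soon as every cell holds a point.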

These sampled points are used for pose and straight pipe estimation with the scheme in [17], which yields the camera pose and the straight pipe model. Based on the estimated pose, each point of the 3D ToF camera point cloud is transformed from the camera coordinate system into the SCS and is then ready for detection and estimation.

4.2. Elbow and T-Junction Detection Based on Outlier Extraction

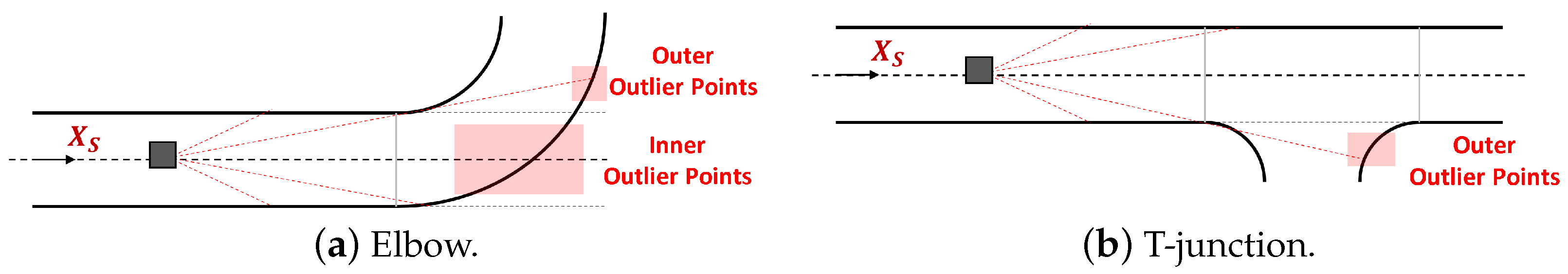

This section focuses on detecting elbows and T-junctions by analyzing outlier points that deviate from the straight pipe model within the transformed 3D point cloud in SCS. The elbow and T-junction introduce distinct patterns of outlier points, as shown in

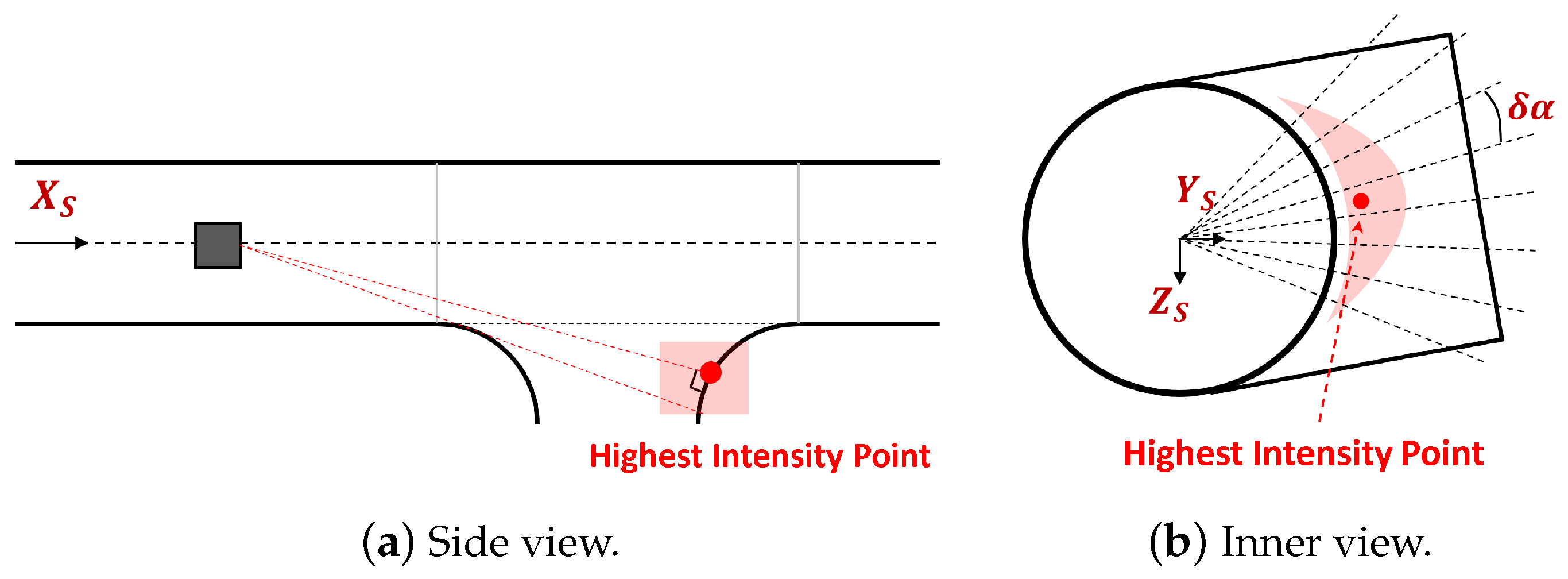

Figure 6. The elbow wall creates points in the inner and outer regions relative to the straight pipe model, which are called inner outlier points and outer outlier points, respectively. On the other hand, the branch of a T-junction only creates outer outlier points. These outlier patterns form the basis for detecting elbows and T-junctions.

To detect elbows and T-junctions, we propose a solution that relies on a simple point cloud feature. Due to multipath effects and depth errors, some outlier points may be missing, while others may not correspond to elbows or T-junctions. To address these challenges, we analyze the point coverage of inner outlier points for elbow detection and outer outlier points for T-junction detection.

We divide the point cloud into concentric annuli in the pipe cross-section plane of the SCS, with a fixed difference in radius between consecutive annuli, as shown in Figure 7. By checking whether the number of points in each annulus exceeds a threshold, we can decide if the annulus contains either the elbow or T-junction. Since the inner outlier points only exist in the elbow, we first check the inner annuli to detect the elbow. If the elbow does not exist, we check the outer annuli for T-junction detection. To determine the existence of an elbow or T-junction, the number of annuli containing them should be greater than a threshold. Once detected, the parameter estimation is triggered for the elbow or T-junction accordingly.
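The annulus-coverage test can be illustrated with the sketch below. It makes several simplifying assumptions that are not taken from the paper: the pipe is treated as circular with radius `pipe_radius` (the paper uses an elliptic cylinder), a hypothetical `margin` keeps the pipe wall itself out of both annulus sets, and all parameter names and thresholds are illustrative.

```python
import math

def occupied_annuli(points, r_start, r_step, n_annuli, min_points):
    """Count annuli starting at r_start that hold at least min_points points."""
    counts = [0] * n_annuli
    for _, y, z in points:
        # Radial distance in the cross-section plane selects the annulus.
        idx = int((math.hypot(y, z) - r_start) // r_step)
        if 0 <= idx < n_annuli:
            counts[idx] += 1
    return sum(1 for c in counts if c >= min_points)

def detect_feature(points, pipe_radius, margin, r_step,
                   n_annuli, min_points, min_annuli):
    """Return "elbow", "t_junction", or None for points given in the SCS."""
    # Inner outliers only appear for an elbow, so the inner annuli go first.
    inner_start = pipe_radius - margin - n_annuli * r_step
    if occupied_annuli(points, inner_start, r_step,
                       n_annuli, min_points) >= min_annuli:
        return "elbow"
    # No elbow found: outer outliers alone indicate a T-junction branch.
    outer_start = pipe_radius + margin
    if occupied_annuli(points, outer_start, r_step,
                       n_annuli, min_points) >= min_annuli:
        return "t_junction"
    return None
```

Counting points per annulus instead of reasoning about individual outliers is what makes the test tolerant of a few missing or spurious points.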

4.3. Elbow Estimation

Once an elbow is detected, its critical parameters must be estimated, including direction, radius, distance from the 3D ToF camera, and length. The elbow direction determines the angle at which the PIG must turn, while the elbow radius defines the sharpness of the turn. The distance indicates the point at which the turn should begin, and the elbow length provides information on how long the turning maneuver will last.

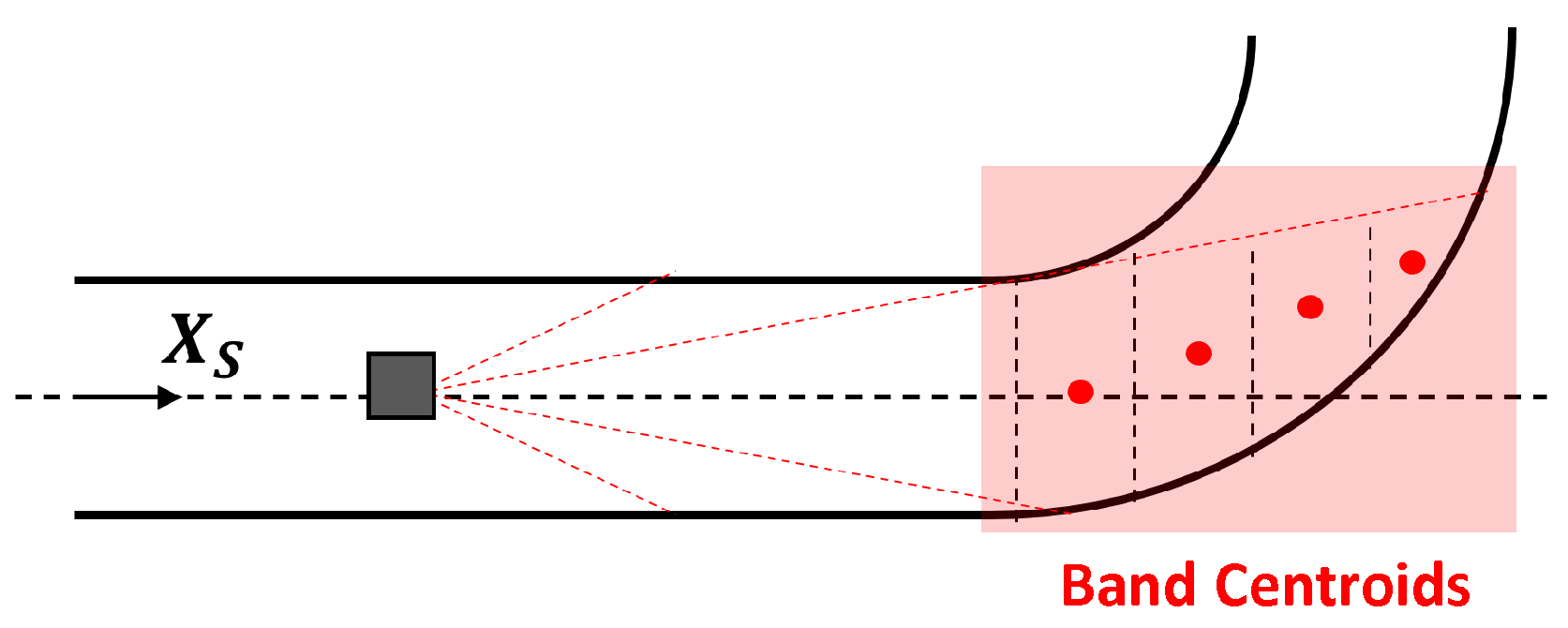

4.3.1. Elbow Direction Estimation Based on Band Centroid

The estimation of elbow direction relies on the observation that the point cloud inside the elbow deviates toward the elbow’s direction compared to the straight pipe. To evaluate this deviation reliably, we propose using

X-band and its centroid. Specifically, we classify the point cloud near the elbow area into the

X-band along the

at intervals of

, as illustrated in

Figure 8. We observe the key geometric property of the point cloud that

X-band farther from the camera has more deviation toward the elbow direction. To quantify this deviation, points within each

X-band are aggregated into a centroid. The centroid provides a robust estimate by neutralizing random errors from individual points. As the centroid is farther away from the origin, it is more reliable for direction estimation. Therefore, we compute the elbow direction using the weighted average of the direction computed by each centroid. Given

N centroids in SCS

, the elbow direction

is computed as follows:

Given

, the elbow plane can be computed. This plane contains

and creates angle

with the

-plane. The elbow plane is an important input for the elbow radius estimation in the following section.
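As a rough illustration of the band-centroid idea, the sketch below groups points into bands along the pipe axis, computes one centroid per band, and combines the per-centroid directions into a weighted average. The weighting is an assumption of this sketch: each centroid is weighted by its axial distance `x`, and the average is taken as a circular mean to avoid angle wrap-around. The paper's exact weights are not reproduced here, and all function and parameter names are hypothetical.

```python
import math

def band_centroids(points, x_start, band_width, n_bands):
    """Average the (x, y, z) points falling into each band along the x-axis."""
    sums = [[0.0, 0.0, 0.0, 0] for _ in range(n_bands)]
    for x, y, z in points:
        idx = int((x - x_start) // band_width)
        if 0 <= idx < n_bands:
            acc = sums[idx]
            acc[0] += x
            acc[1] += y
            acc[2] += z
            acc[3] += 1
    # Empty bands are skipped so that no division by zero occurs.
    return [(a[0] / a[3], a[1] / a[3], a[2] / a[3]) for a in sums if a[3] > 0]

def elbow_direction(centroids):
    """Weighted circular mean of the centroid directions in the cross-section.

    Assumed weighting: the axial distance x of each centroid, so that
    farther centroids contribute more to the estimate.
    """
    sum_cos = sum_sin = 0.0
    for x, y, z in centroids:
        angle = math.atan2(z, y)  # direction of this centroid's deviation
        sum_cos += x * math.cos(angle)
        sum_sin += x * math.sin(angle)
    return math.atan2(sum_sin, sum_cos)
```

For example, centroids that drift increasingly toward the positive z-axis yield a direction close to 90 degrees.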

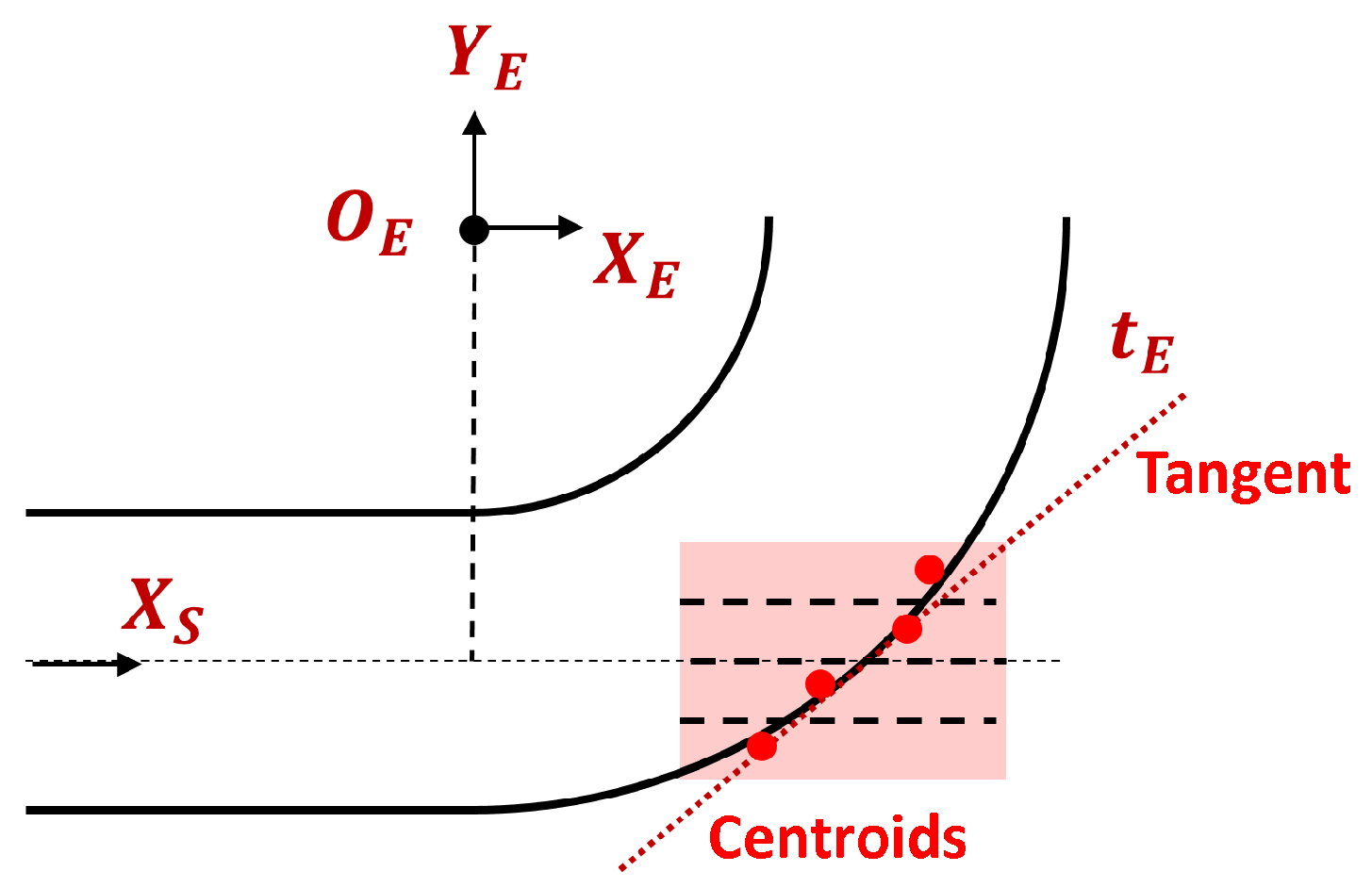

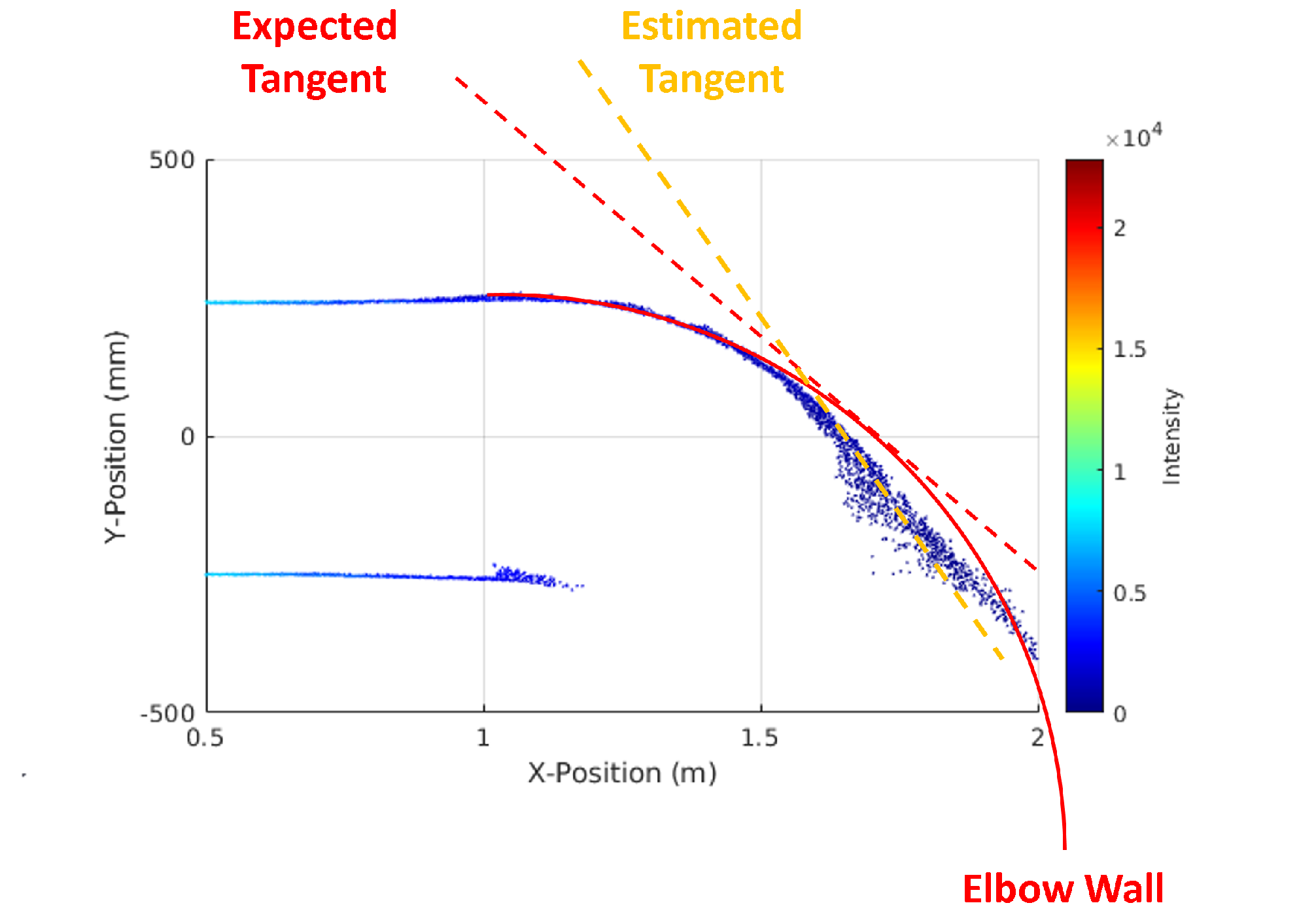

4.3.2. Elbow Radius Estimation Using Tangent Angle

The common approach to finding the elbow radius is typically based on the torus or circle fitting, but it is computationally intensive and often inaccurate due to the incomplete coverage of the torus model. To address this limitation, we propose a simple geometric approach based on the tangent of the elbow in the elbow plane, as shown in

Figure 9. Let

be the intersection between

and the larger inner wall of the elbow on the elbow plane, i.e.,

-plane. The tangent

of the elbow at

on the elbow plane creates an angle

with

.

is also equal to angle

, and

can be computed as follows:

From this equation, the elbow radius

can be computed as follows:

To estimate the tangent, we reuse the processing using the band and centroid. We first extract the points from inner outlier points within the

distance to the elbow plane. Then, the selected points are classified into

Y-bands along the

-axis of the elbow, as illustrated in

Figure 10. The centroid of each band is calculated to provide a robust representation of the points within the band. Using these centroids, the tangent is estimated, enabling the computation of the tangent angle

and the elbow radius

.

4.3.3. Elbow Distance Estimation

The elbow distance is directly derived from the tangent estimation. As shown in

Figure 9, the tangent estimation identifies the location of the tangent point

. Moreover, the distance from the elbow starting point

to tangent point

can be computed regarding

and

as follows:

Given the point

and the computed distance

, we can derive the location of

and the distance

. From this distance

together with the elbow direction

and elbow radius

, the origin

is the point on the elbow plane and is

distance away from

along the

-axis.

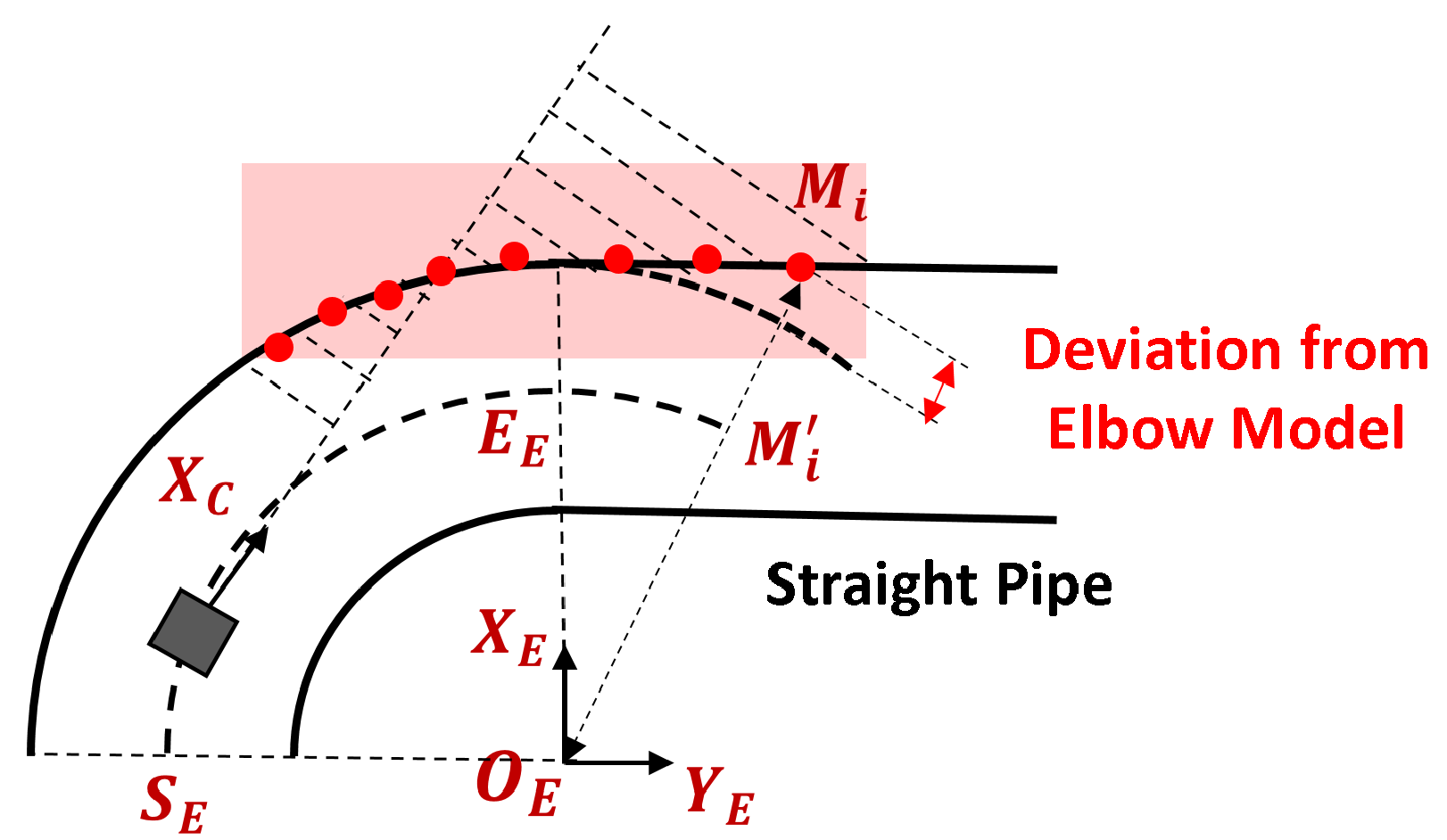

4.3.4. Elbow Length

Determining the elbow length requires identifying the start of the subsequent straight pipe. As the PIG approaches the subsequent straight pipe, portions of the point cloud begin to deviate from the torus shape characteristic of the elbow. To detect this deviation, we first extract points near the elbow plane within a distance of

as in elbow radius estimation. The points are then divided into

X-band along

-axis and find the centroid for each band, as shown in

Figure 11. If a centroid

satisfies the following condition, it is considered to deviate from the elbow torus model:

The centroid

satisfying this condition indicates the presence of the subsequent straight pipe.

is then projected on the central arc of the elbow to obtain

, as shown in

Figure 11. The elbow length

is obtained by compensating

from the length of arc

to remove the effect of the threshold

as follows:

4.4. T-Junction Estimation

Once a T-junction is detected, we need to estimate the branch direction, branch radius, distance from the 3D ToF camera, and T-junction length. Similar to the elbow, the branch direction indicates the angle at which the PIG encounters the branch, while the branch radius affects how the PIG should be controlled. The distance and length indicate where the control should be initiated and terminated.

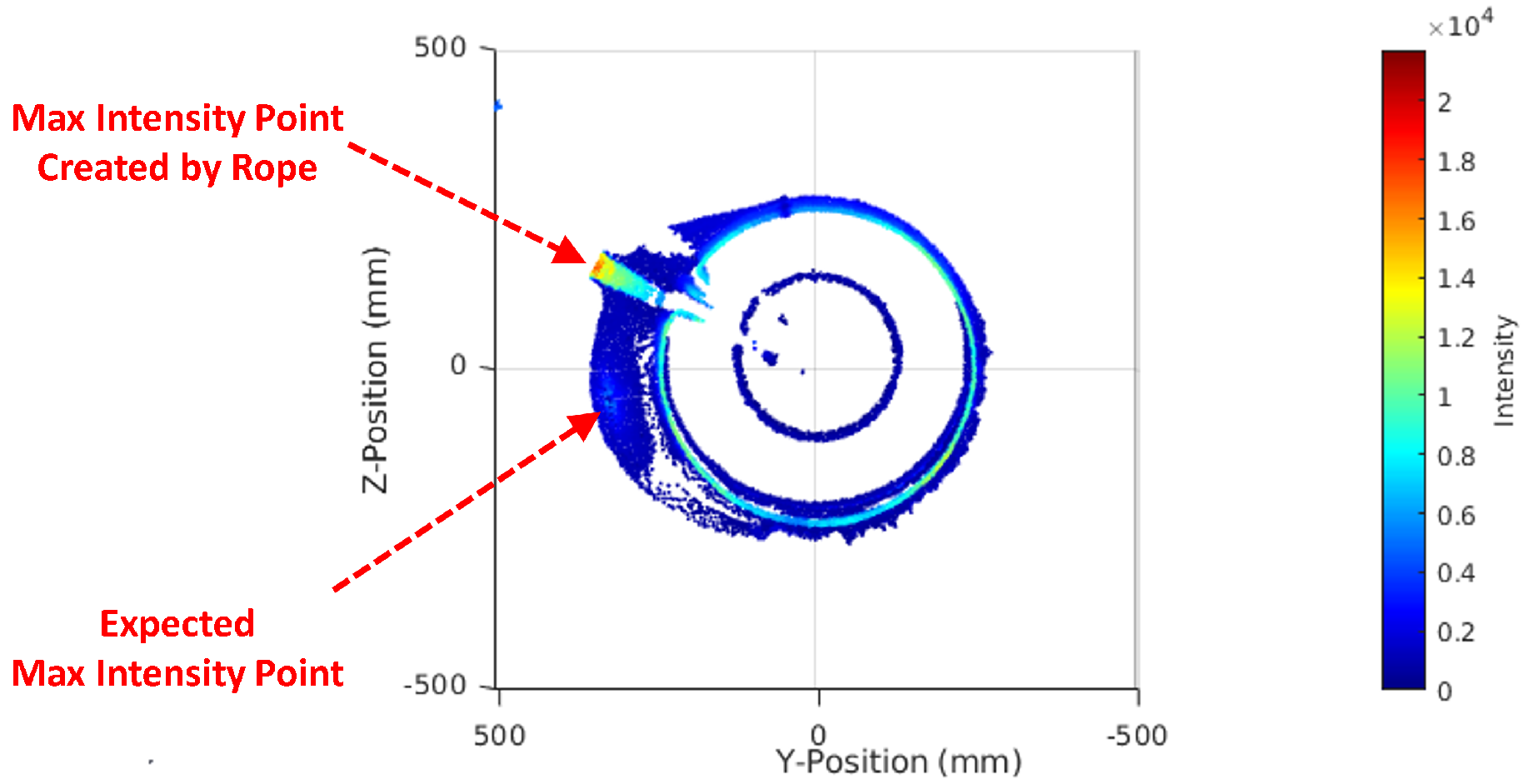

4.4.1. Branch Direction Estimation Based on Band Intensity

To detect the branch direction, we rely on the fact that points on the branch have high intensities because of their small incident angles, so the high-intensity area indicates the branch direction. The highest intensity point indicates the direction of the branch. However, relying solely on the single highest-intensity point for branch direction estimation can be unreliable due to potential intensity errors or the presence of a high-intensity defect. To improve reliability, we propose dividing the outer outlier point cloud into

C-band along the circumferential axis in the

-plane with an angular step

, as illustrated in

Figure 12b. For each band, we compute the average intensity as a feature to reduce the impact of intensity errors and random defects. We select the

C-band with the highest average intensity, and inside it we select the highest intensity point. The direction of this point is also the branch direction

. Given

, the direction of

and

are obtainable. Therefore, the

-plane is well defined even though the origin

is unknown. This plane is used to estimate the branch radius in the following section.
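The band-intensity selection can be sketched as below. The sketch assumes each outer outlier point is an `(x, y, z, intensity)` tuple in the SCS and that the circumferential angle is measured with `atan2(z, y)`. The function name `branch_direction` and the parameter `n_bands` are illustrative rather than taken from the paper.

```python
import math

def branch_direction(points, n_bands):
    """Return the angle of the brightest point in the brightest angular band.

    points:  iterable of (x, y, z, intensity) outer outlier points
    n_bands: number of angular bands covering the full circle
    """
    step = 2.0 * math.pi / n_bands
    totals = [0.0] * n_bands
    counts = [0] * n_bands
    brightest = [None] * n_bands  # per band: (intensity, angle)
    for _, y, z, intensity in points:
        angle = math.atan2(z, y) % (2.0 * math.pi)
        idx = min(int(angle / step), n_bands - 1)
        totals[idx] += intensity
        counts[idx] += 1
        if brightest[idx] is None or intensity > brightest[idx][0]:
            brightest[idx] = (intensity, angle)
    occupied = [i for i in range(n_bands) if counts[i] > 0]
    if not occupied:
        return None
    # The average intensity per band damps isolated bright defects.
    top = max(occupied, key=lambda i: totals[i] / counts[i])
    return brightest[top][1]
```

A single very bright defect in an otherwise dim band lowers that band's average, so the band holding consistently bright branch points is still selected.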

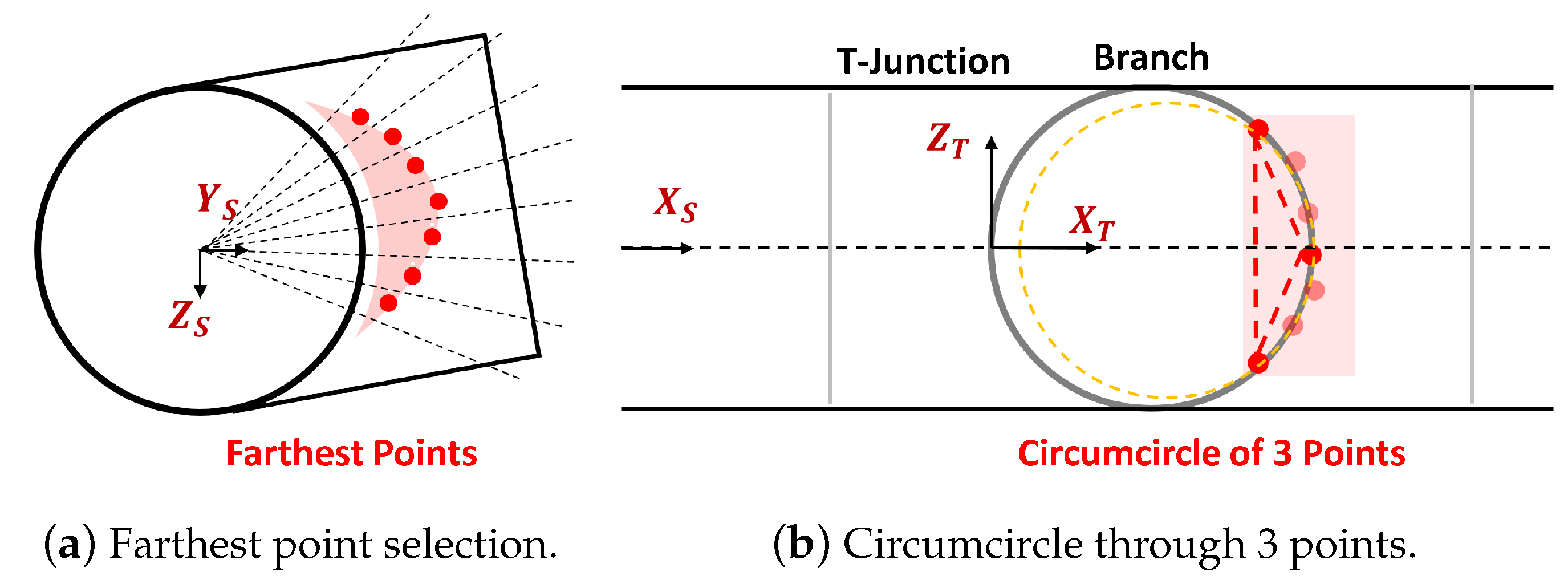

4.4.2. T-Junction Branch Radius Estimation Based on Circumcircle Estimation

We propose a simple geometric solution for branch radius estimation based on estimating the circumcircle through 3 points. It reuses the

C-band used for branch direction estimation as illustrated in

Figure 13a. We select the farthest points on

-plane of each

C-band as the feature since these points are located inside the branch. These points are projected to the

-plane and show the circle shape of the branch, as shown in

Figure 13b. To estimate the circle, we select three points from three

C-bands. The first point is in the

C-band having the highest average intensity. The remaining two points are in the

C-bands farthest away from the first

C-band. This selection ensures maximum separation of points and improves estimation accuracy. The branch radius is the radius of the circumcircle of the triangle formed by the three selected points. Let $a$, $b$, and $c$ be the edge lengths of the triangle. The radius $R$ of the circumcircle according to [18,19] is as follows:

$$R = \frac{abc}{4\sqrt{s(s-a)(s-b)(s-c)}}$$

where $s$ is the semiperimeter of the triangle and is computed as $s = (a+b+c)/2$.
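For reference, the circumradius of a triangle with edge lengths a, b, and c and semiperimeter s = (a + b + c)/2 is abc / (4·sqrt(s(s − a)(s − b)(s − c))), where the square root is the triangle area by Heron's formula. A small sketch of that computation follows. The function name and the collinearity check are illustrative additions, and `math.dist` requires Python 3.8 or later.

```python
import math

def circumradius(p1, p2, p3):
    """Radius of the circle through three points given as coordinate tuples."""
    a = math.dist(p2, p3)
    b = math.dist(p1, p3)
    c = math.dist(p1, p2)
    s = (a + b + c) / 2.0  # semiperimeter
    area_sq = s * (s - a) * (s - b) * (s - c)  # squared area (Heron's formula)
    if area_sq <= 0.0:
        raise ValueError("points are collinear; no finite circumcircle")
    return a * b * c / (4.0 * math.sqrt(area_sq))
```

Widely separated points keep the triangle area large, which makes the division better conditioned. This is consistent with choosing the three points from bands that are far apart.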

4.4.3. T-Junction Distance and Length Estimation

The T-junction distance is estimated directly based on the branch radius and points used for estimating it. As we can observe from

Figure 13, among three points used for branch radius estimation, the point in

C-band with the highest intensity can be projected to the

-axis to get the end point

. The start point

is

distance before the

on the

-axis. By knowing

, the distance

to the T-junction can be obtained as defined in

Figure 3. On the other hand, the T-junction length

is obtained directly as the branch diameter

.

5. Real-Time Adaptation

Even though the RT-ETDE framework is designed to be lightweight, further techniques need to be applied to meet the real-time constraint. We apply three techniques including point skipping, language-dependent optimization, and multi-processor offloading. Since the number of points in the point cloud can be extremely large, using all of them incurs a high processing load on the system. Therefore, we apply uniform point skipping where only one out of every points is selected for processing. Via preliminary experiments, we found that achieves the balance between detection accuracy and processing time. On the other hand, language-dependent optimization techniques include minimizing the variable allocation, maximizing the use of low-level codes, and using high-speed registers for frequently used variables.

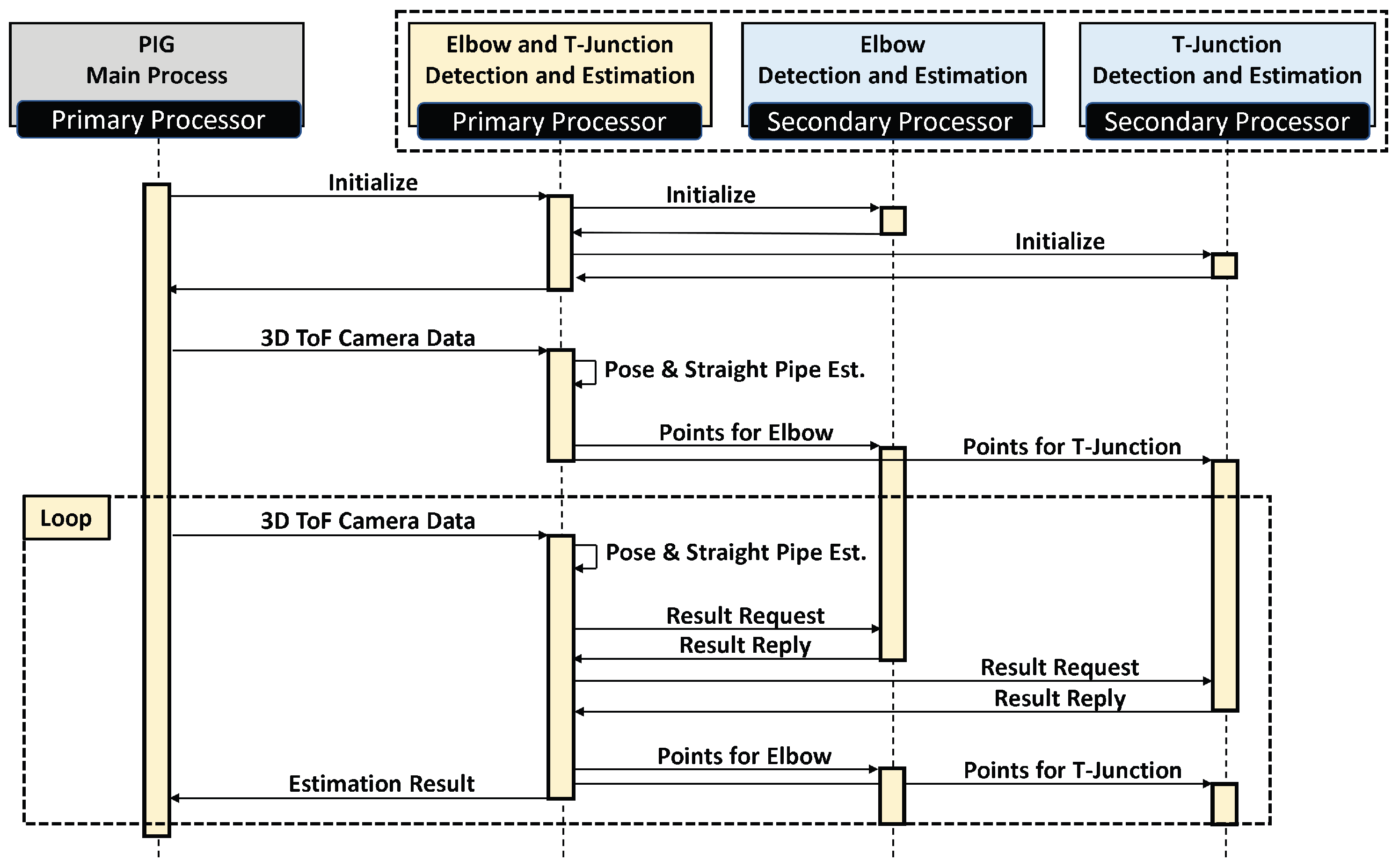

We also propose an offloading scheme for multi-processor systems. Particularly, we apply this scheme to the Xilinx Zynq 7000 processing unit which is used in the real PIG. This system consists of a dual-core ARM Cortex-A9 processor operating at 800 MHz as a primary processor and two Microblaze processors operating at 125 MHz as secondary processors. The primary processor needs to perform all tasks consisting of data collection, processing, and control. The RT-ETDE framework may interfere with these tasks. Therefore, we need to partially offload the RT-ETDE framework to the secondary processors considering their limited processing power.

The detection and estimation operations for each feature are extracted into the elbow detection and estimation (EDE) process and the T-junction detection and estimation (TDE) process, respectively. The pose and straight pipe estimation and the framework coordination are assigned to the ETDE process. The ETDE process runs on the primary processor to interact with the PIG main process, while the EDE and TDE processes are transferred to the two secondary processors.

Figure 14 shows the sequence diagram of the multi-processor offloading scheme. First, the PIG main process initializes the ETDE, EDE, and TDE processes. When a frame is available from the 3D ToF camera, it is sent to the ETDE process. The pose and straight pipe are estimated first. Then, the points for the elbow and T-junction are extracted and sent to the EDE and TDE processes, respectively. The ETDE process does not wait for the results but immediately becomes ready for new data. When the second frame arrives from the 3D ToF camera, the ETDE process again performs pose and straight pipe estimation. After that, however, it requests the detection and estimation results of the first frame from the EDE and TDE processes. Finally, it extracts the points of the second frame and sends them to the EDE and TDE processes for detection and estimation. The handling of the second frame is repeated for all following frames. Through this mechanism, the ETDE, EDE, and TDE processes run in parallel, which saves processing time.
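The one-frame-delayed hand-off in this sequence can be mimicked on a desktop machine with the sketch below. It is only an analogy: two worker threads stand in for the secondary processors, and `run_pipeline`, `preprocess`, `ede`, and `tde` are hypothetical callables, not the firmware interfaces used on the real PIG processing unit.

```python
from concurrent.futures import ThreadPoolExecutor

def run_pipeline(frames, preprocess, ede, tde):
    """Process frames with EDE/TDE work overlapped with the next frame.

    preprocess(frame) -> (elbow_points, tjunction_points)   (ETDE role)
    ede(points), tde(points) -> detection/estimation results
    """
    results = []
    pending = None  # futures for the previous frame
    with ThreadPoolExecutor(max_workers=2) as pool:
        for frame in frames:
            # Pose/straight-pipe estimation and point extraction run first.
            elbow_pts, tee_pts = preprocess(frame)
            # Only now collect the previous frame's results, so the workers
            # have been running while this frame was being preprocessed.
            if pending is not None:
                results.append((pending[0].result(), pending[1].result()))
            pending = (pool.submit(ede, elbow_pts), pool.submit(tde, tee_pts))
        if pending is not None:
            # Drain the results of the final frame.
            results.append((pending[0].result(), pending[1].result()))
    return results
```

The price of the overlap is one frame of latency: the results for a frame become available only while the next frame is being handled.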