Integrating Rapid Application Development Courses into Higher Education Curricula

Abstract

1. Introduction

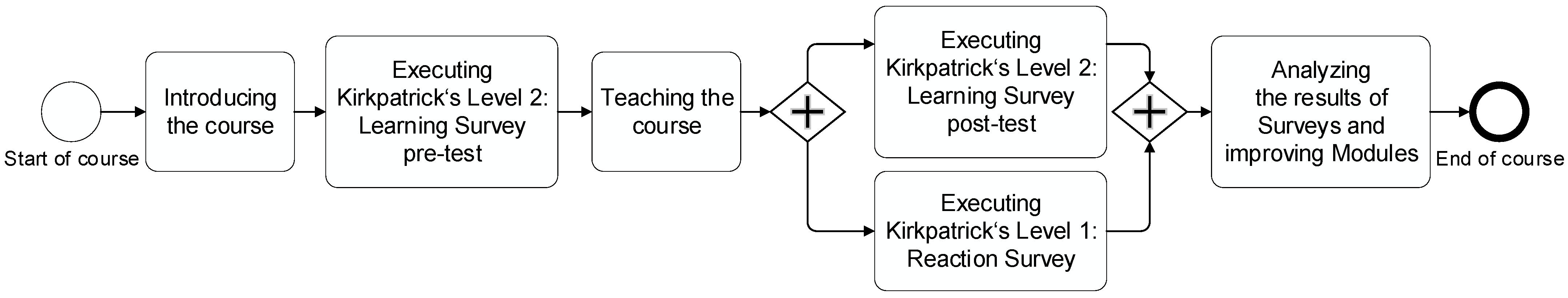

- A methodology that is sufficiently flexible to accommodate the integration of the prepared modules into a variety of study programs, with courses of different credit sizes, is proposed.

- An approach to assessing students’ knowledge and skills in database and RAD using Kirkpatrick’s Model Level 2: Learning Survey is developed.

- An approach to assessing student satisfaction with the courses delivered using Kirkpatrick’s Model Level 1: Reaction Survey is developed.

- The proposed methodology is implemented by integrating the developed modules into two study programs at VILNIUS TECH and delivering them to students.

- The efficacy of the developed courses in imparting knowledge and skills in database and RAD to students is investigated.

- The level of student satisfaction with the delivered courses is examined.

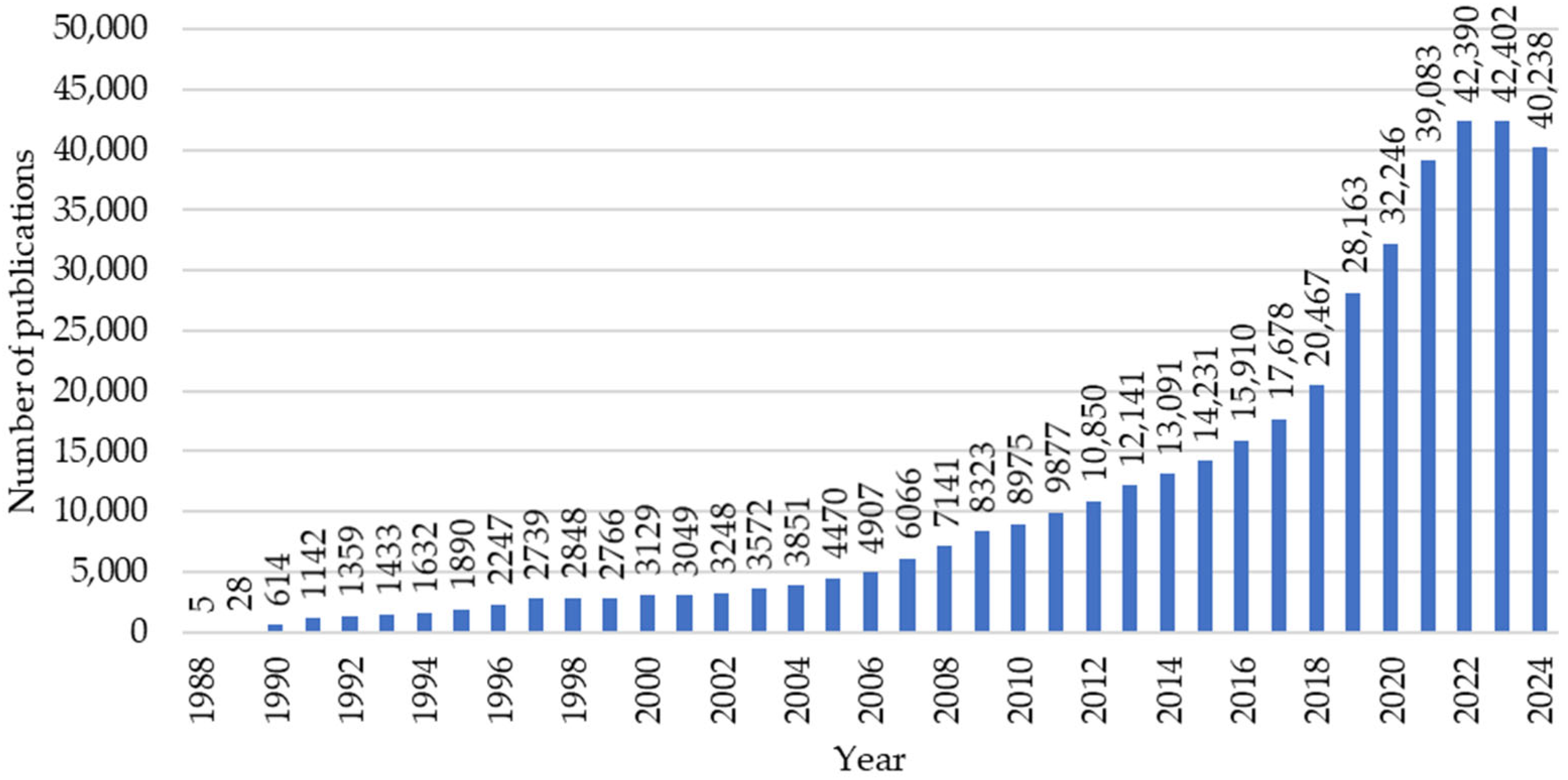

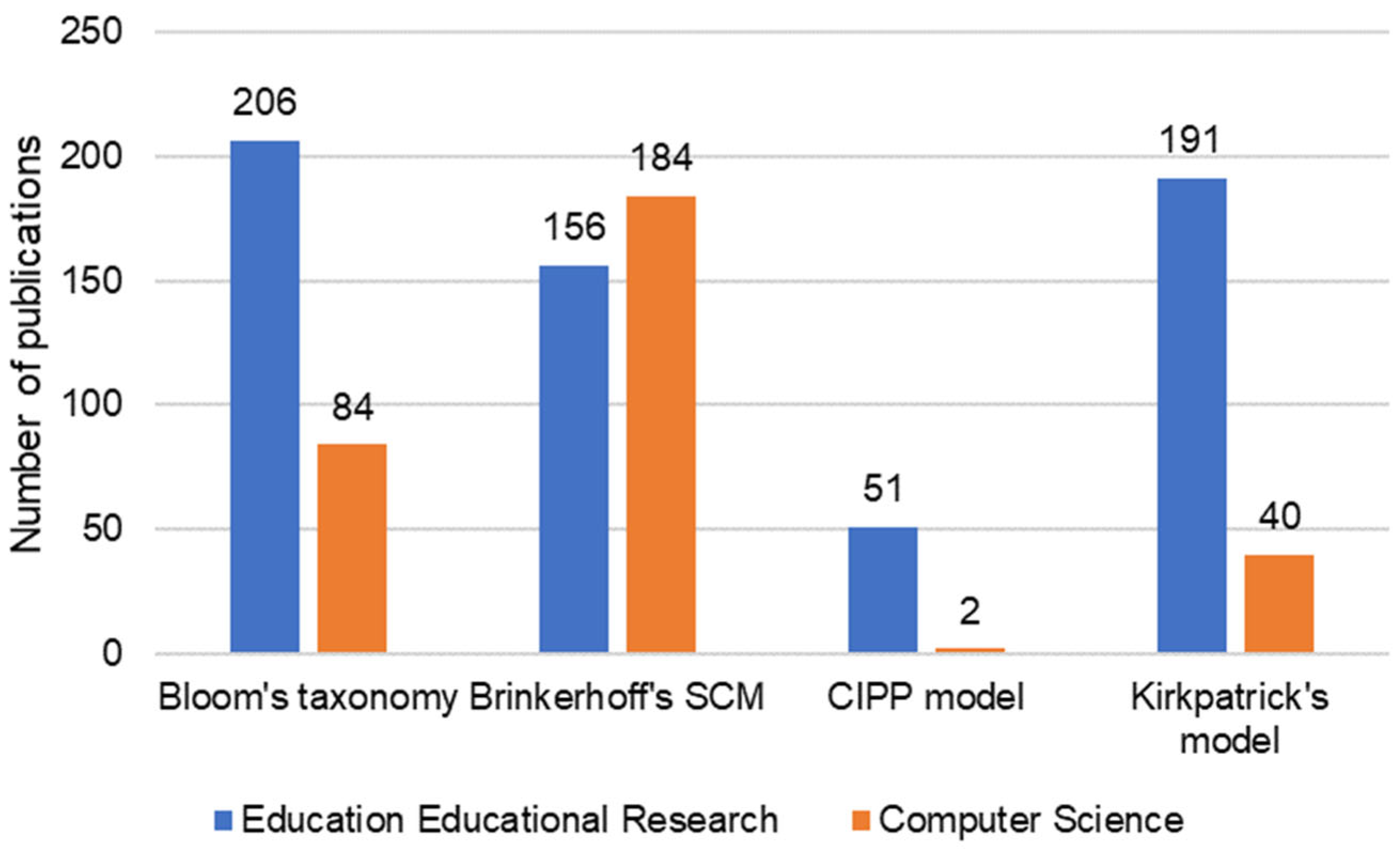

2. Related Work

3. Materials and Methods

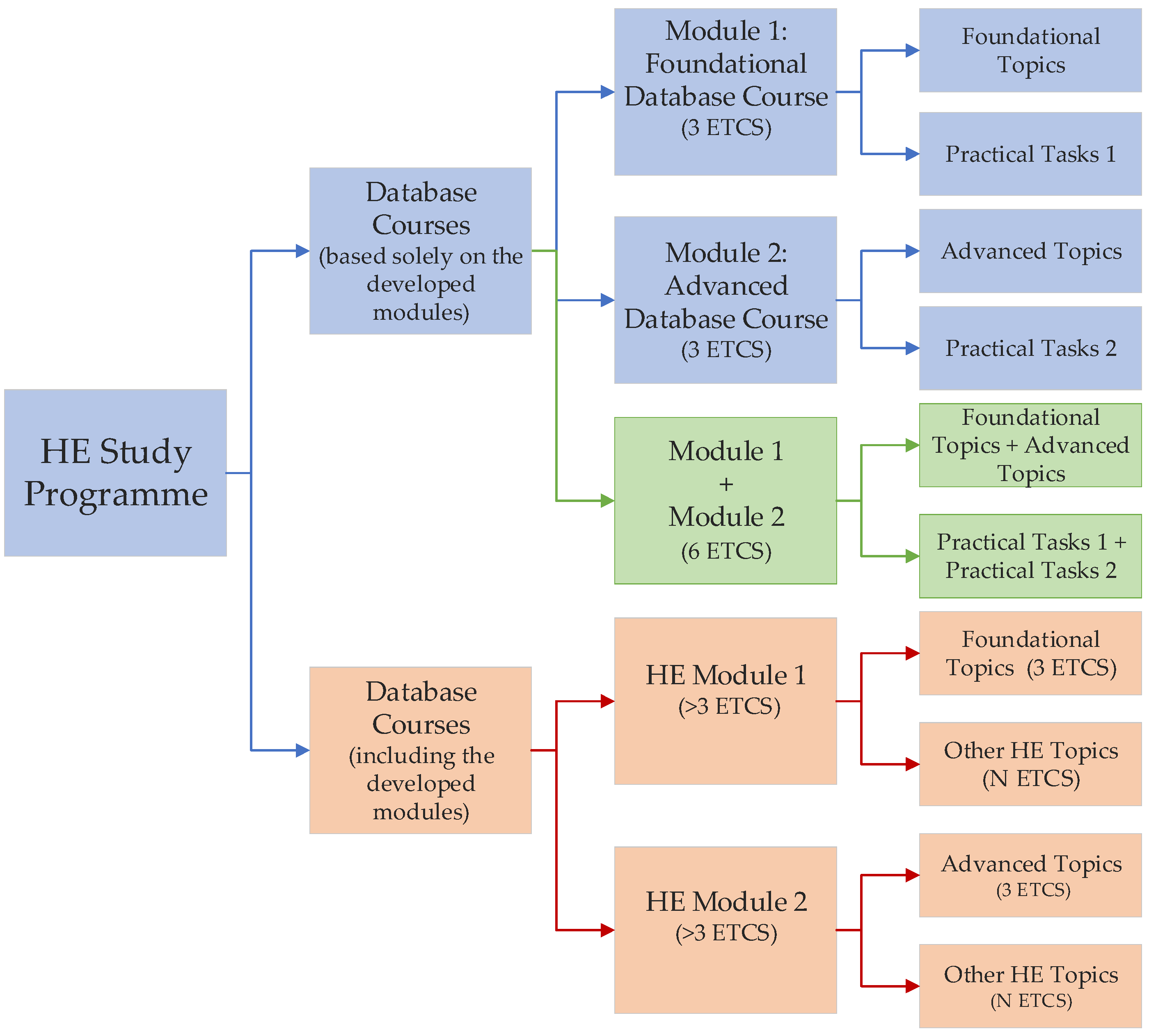

- The two developed modules are added to the study program as separate courses each of 3 ECTS (Figure 4, colored in blue). To implement this methodology, a university should support the 3 ECTS system.

- The two developed modules are added together to form one single course of 6 ECTS (Figure 4, colored in green). To implement this methodology, a university should support the 6 ECTS system.

- The two developed modules are added in conjunction with other supplementary topics to make courses of >3 ECTS (Figure 4, colored in orange). To implement this methodology, a university can use any ECTS system. This is the most flexible strategy for incorporating the modules into an existing study program. With this strategy, no new modules or courses need to be developed; instead, existing courses are modified to include the topics of the developed modules.

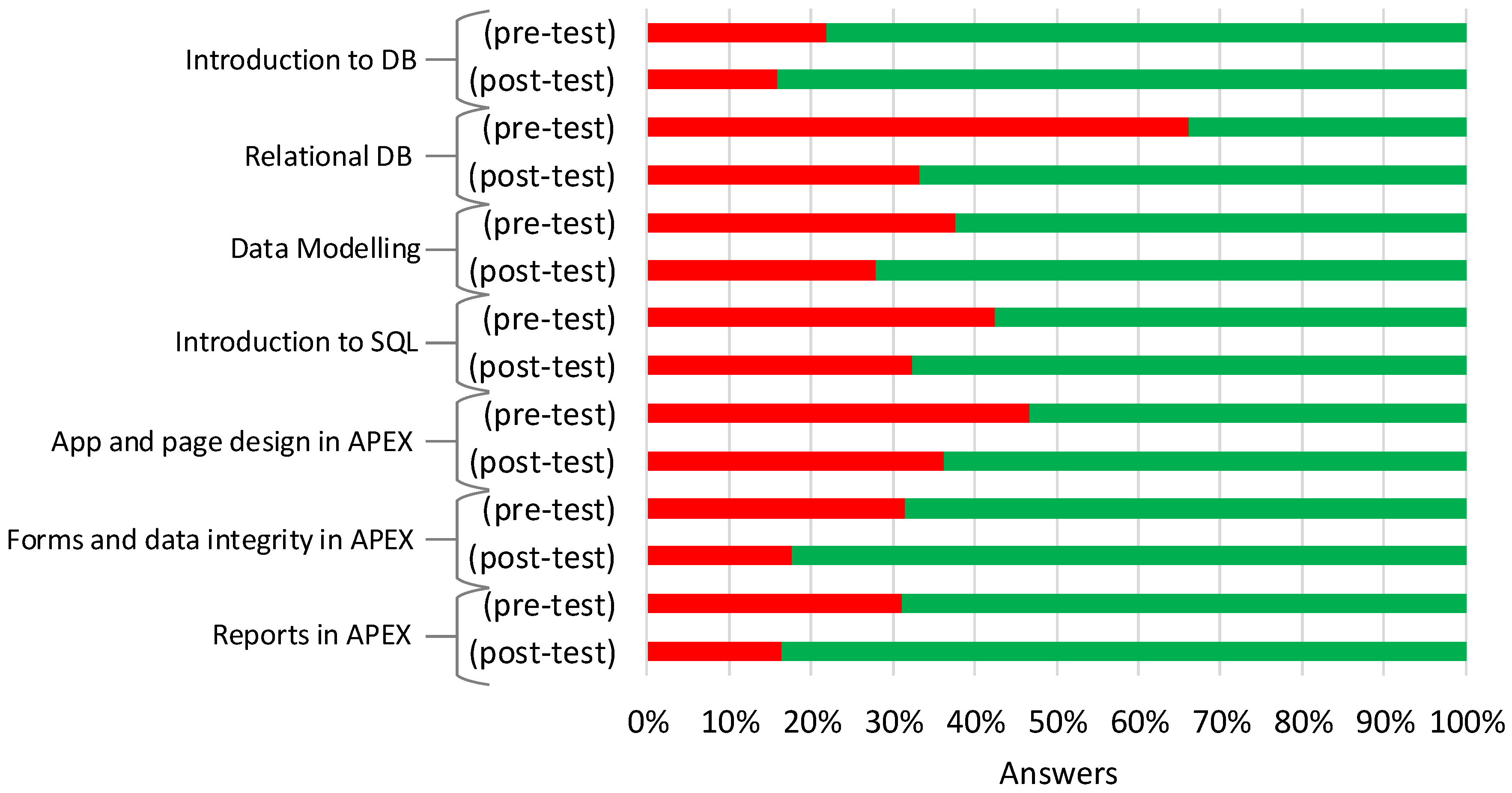

- Introduction to Databases (Module 1)

- Relational Databases (Module 1)

- Data Modelling (Module 1)

- Introduction to SQL (Module 1)

- Advanced SQL (Module 2)

- Application and page design in APEX (Module 2)

- Forms and data integrity in APEX (Module 2)

- Reports in APEX (Module 2)

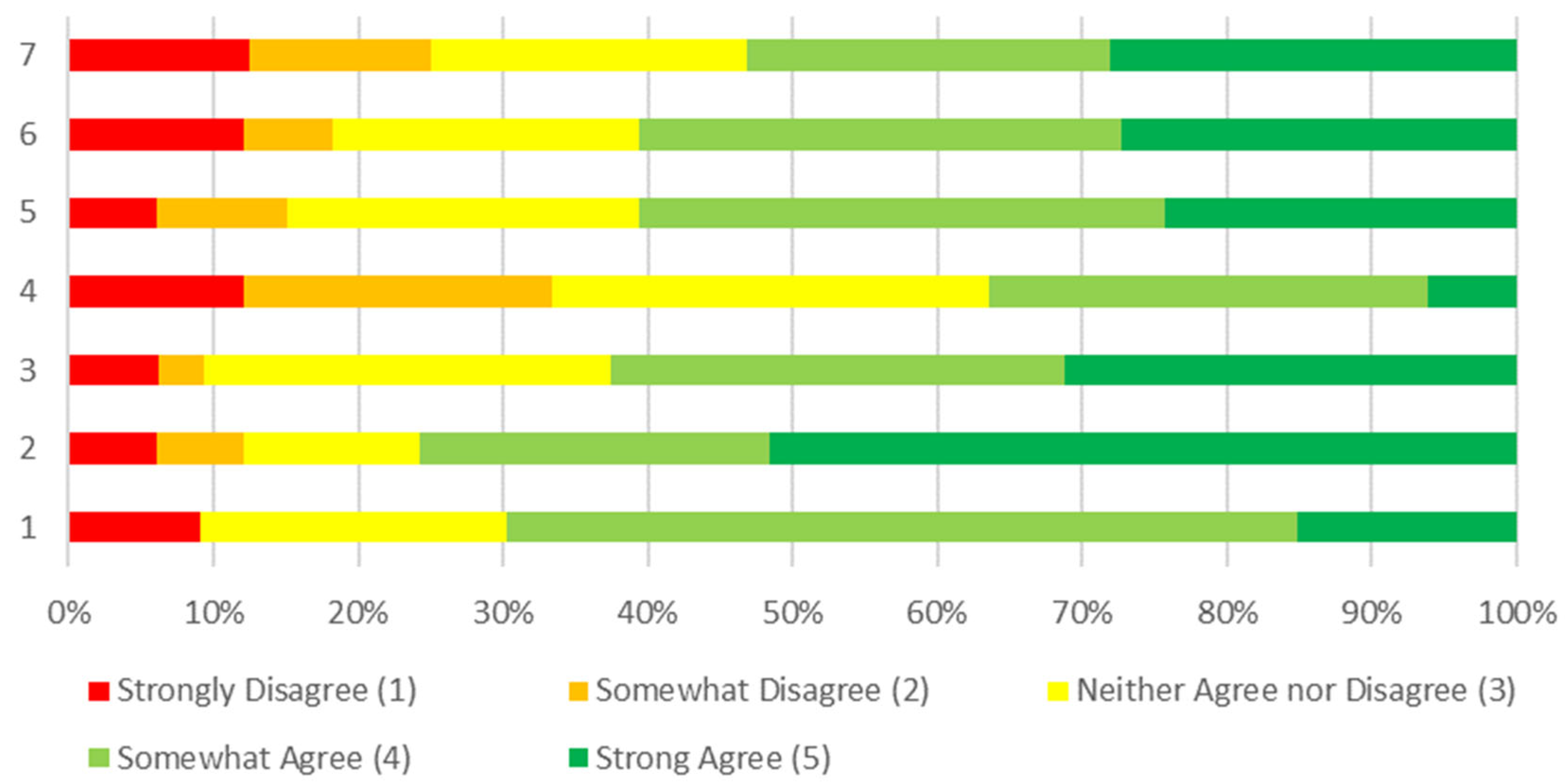

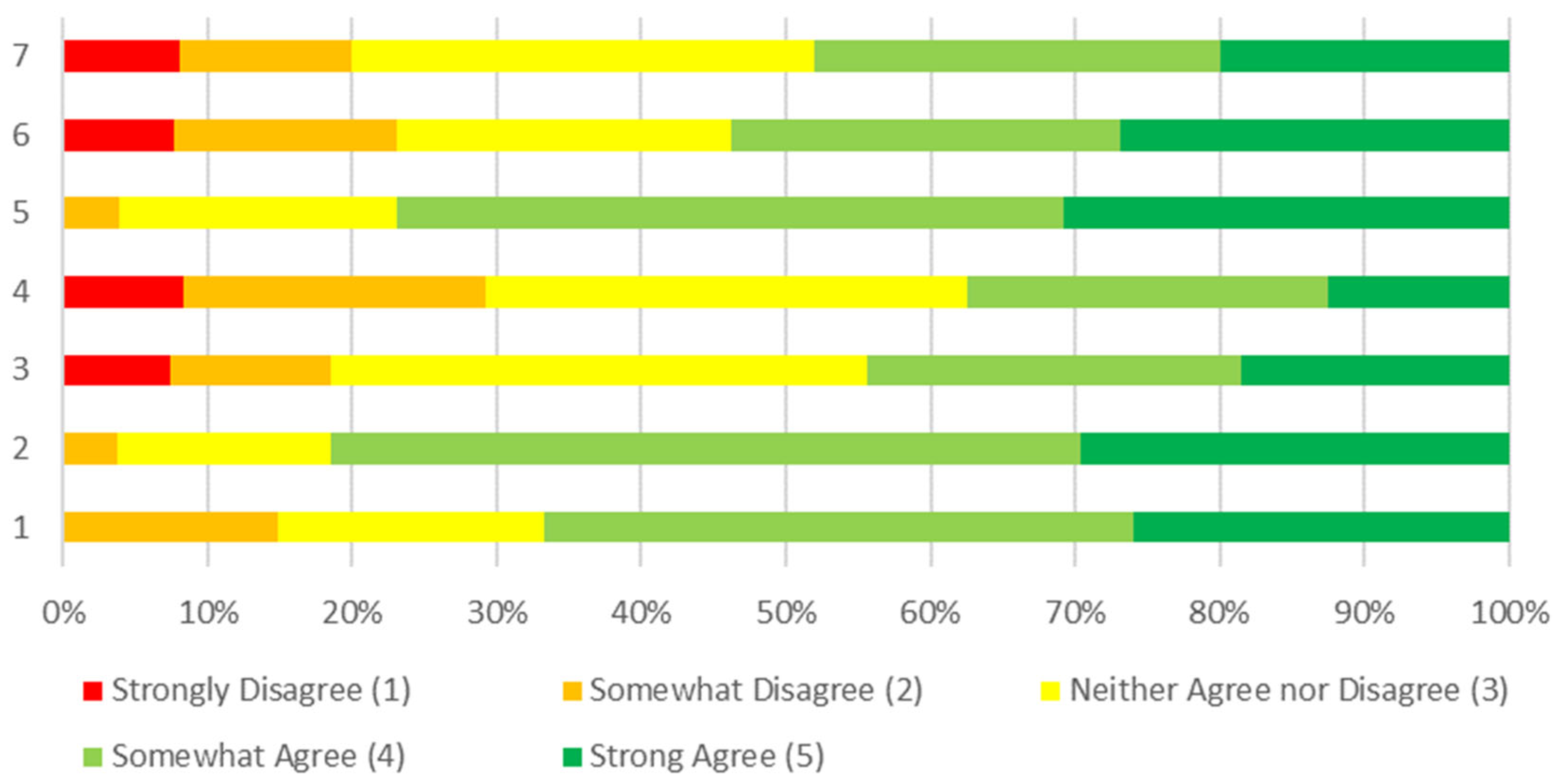

- Q1. I was satisfied with the course overall.

- Q2. This course enhanced my knowledge of the subject matter.

- Q3. The course was relevant to what I might be expected to do when developing rapid applications or when I need to develop applications rapidly.

- Q4. This course provided content that is relevant to my daily job.

- Q5. This course provided delivery methods and materials appropriately.

- Q6. I would recommend this course to others.

- Q7. This course acted as a motivator towards further learning.

- threshold level (i.e., satisfactory) when the student knows the most important theories and principles of the course and is able to convey basic information and problems;

- typical level when the student knows the most important theories and principles and is able to apply knowledge by solving standard problems, and possesses learning skills necessary for further and self-study;

- outstanding level (i.e., advanced) when the student identifies the latest sources of the course, knows the theory and principles and can create and develop new ideas.

4. Results

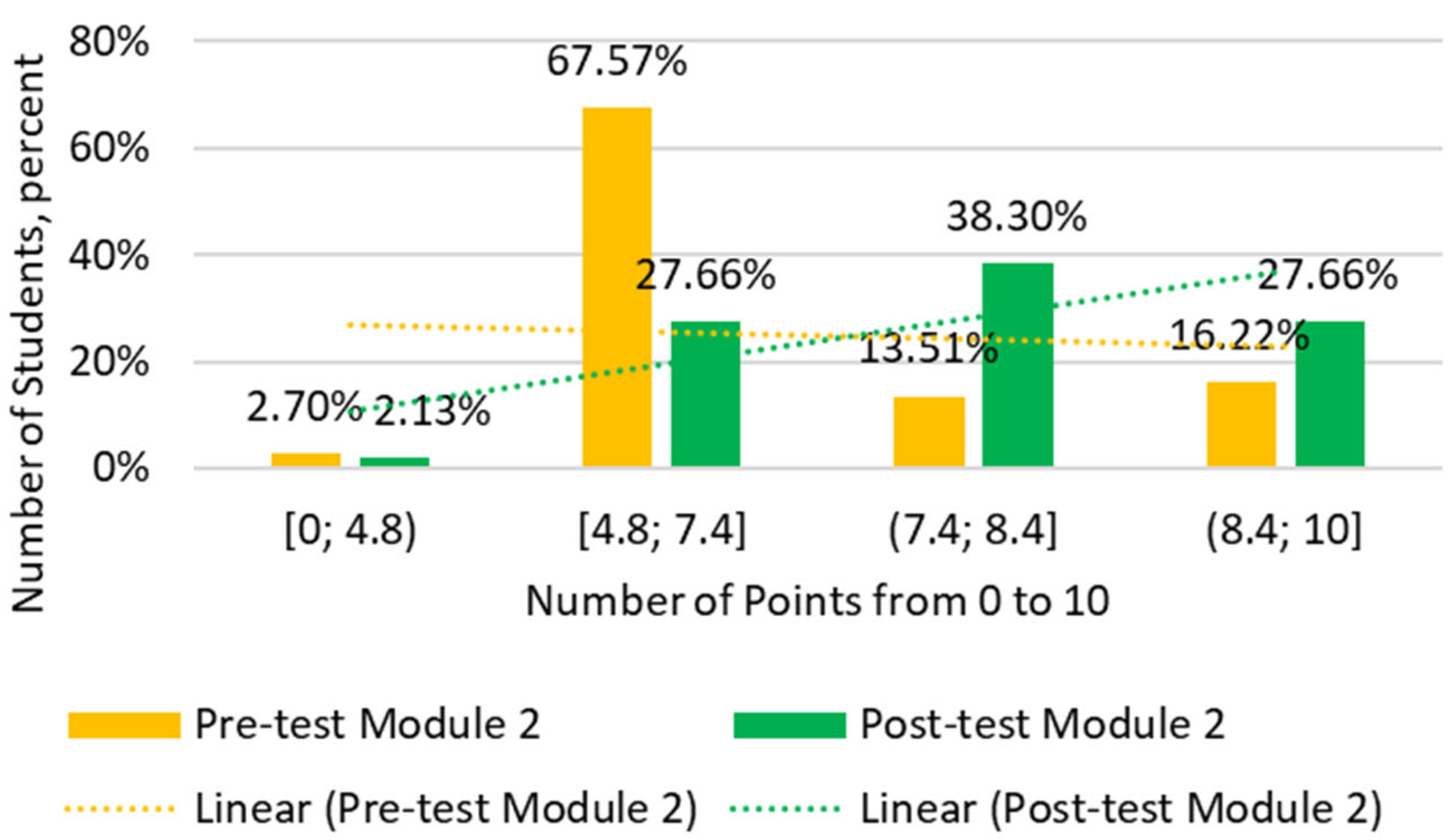

- [0; 4.8)—students who failed the test;

- [4.8; 7.4)—students who have satisfactory knowledge level;

- [7.4; 8.4)—students who have typical knowledge level;

- [8.4; 10]—students who achieved the advanced knowledge level.
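The score bands above can be expressed as a small lookup. The following is a minimal sketch, assuming scores lie on a 0 to 10 scale and that each band is closed on the left and open on the right, as the interval notation suggests. The function name `knowledge_level` and the returned labels are illustrative choices, not taken from the study.

```python
def knowledge_level(score: float) -> str:
    """Map a test score (assumed 0-10 scale) to one of the bands listed above."""
    if not 0 <= score <= 10:
        raise ValueError("score must be within [0, 10]")
    if score < 4.8:
        return "failed"        # [0; 4.8)
    if score < 7.4:
        return "satisfactory"  # [4.8; 7.4), the threshold level
    if score < 8.4:
        return "typical"       # [7.4; 8.4)
    return "advanced"          # [8.4; 10]


if __name__ == "__main__":
    for s in (3.0, 4.8, 7.4, 8.4, 10):
        print(s, knowledge_level(s))
```

Boundary scores fall into the higher band, so a score of exactly 4.8 counts as satisfactory rather than failed.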

5. Discussion

Limitations of the Study

- The developed strategy was implemented only for the courses taught for the entire semester (i.e., 16 weeks). This study has not examined the case when the courses are taught in cycles (i.e., ~4 weeks). However, the proposed methodology does not set strict time limits for teaching and could be applied without time limitations.

- Student bias in the course feedback. Kirkpatrick’s Model Level 1: Reaction Survey was constructed to obtain students’ feedback about the course and its contents, but its questions do not distinguish the content of the course from the teaching quality or from the teacher as a person. Nevertheless, the survey as performed satisfies the scope and aim of the current study, so refinement of the feedback part of the survey is left for future work.

- The pre-test and post-test questions have limitations. During the study, it was observed that a more detailed evaluation of students’ knowledge would require revising some questions or adding new ones. Nevertheless, the current set of questions satisfies the scope and aim of the study, so the extension and refinement of the questions is left for future work.

- The number of participants and the duration of the study were limited. The methodology and developed modules were integrated into existing study programs only two years ago, so only a limited number of students in two study programs at VILNIUS TECH could be investigated. However, the study is ongoing, and the effect of the newly integrated modules on students’ knowledge levels is being investigated with new student groups.

6. Conclusions

Future Research

- Deeper and more extensive investigation of the developed methodology at other partner universities.

- Investigation of the proposed methodology with different study time cycles.

- Supplementing the developed courses with the newest teaching methods, which encourage and motivate students to learn and increase the digitalization of the developed courses.

- Improvement and extension of the feedback survey so that students’ and teachers’ biases can be excluded from the results.

- Improvement and extension of the pre-test and post-test questions.

- Longitudinal survey of the integrated modules with different students over several years, i.e., collecting more test responses and conducting similar research with a larger set of respondents.

- Evaluating the teacher as a person and the teacher’s influence on the learning outcomes, as well as investigating the impact of digital technologies on the learning outcomes and students’ satisfaction.

- Conducting similar studies with other study programs and courses.

- Summarizing the results obtained across all five partner universities to highlight and generalize the best practices of RAD course implementation, teaching and course digitalization.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Digital Education Action Plan (2021–2027). Available online: https://education.ec.europa.eu/focus-topics/digital-education/action-plan (accessed on 31 October 2024).

2. Benavides, L.M.C.; Tamayo Arias, J.A.; Arango Serna, M.D.; Branch Bedoya, J.W.; Burgos, D. Digital transformation in higher education institutions: A systematic literature review. Sensors 2020, 20, 3291.

3. Deroncele-Acosta, A.; Palacios-Núñez, M.L.; Toribio-López, A. Digital Transformation and Technological Innovation on Higher Education Post-COVID-19. Sustainability 2023, 15, 2466.

4. González-Zamar, M.-D.; Abad-Segura, E.; López-Meneses, E.; Gómez-Galán, J. Managing ICT for Sustainable Education: Research Analysis in the Context of Higher Education. Sustainability 2020, 12, 8254.

5. Alenezi, M. Digital Learning and Digital Institution in Higher Education. Educ. Sci. 2023, 13, 88.

6. Alenezi, M. Deep dive into digital transformation in higher education institutions. Educ. Sci. 2021, 11, 770.

7. Mohamed Hashim, M.A.; Tlemsani, I.; Matthews, R. Higher education strategy in digital transformation. Educ. Inf. Technol. 2022, 27, 3171–3195.

8. Khan, W.; Sohail, S.; Roomi, M.A.; Nisar, Q.A.; Rafiq, M. Opening a new horizon in digitalization for e-learning in Malaysia: Empirical evidence of COVID-19. Educ. Inf. Technol. 2024, 29, 9387–9416.

9. Wang, L.; Li, W. The Impact of AI Usage on University Students’ Willingness for Autonomous Learning. Behav. Sci. 2024, 14, 956.

10. Ilić, M.P.; Păun, D.; Popović Šević, N.; Hadžić, A.; Jianu, A. Needs and Performance Analysis for Changes in Higher Education and Implementation of Artificial Intelligence, Machine Learning, and Extended Reality. Educ. Sci. 2021, 11, 568.

11. Guerrero-Osuna, H.A.; García-Vázquez, F.; Ibarra-Delgado, S.; Mata-Romero, M.E.; Nava-Pintor, J.A.; Ornelas-Vargas, G.; Castañeda-Miranda, R.; Rodríguez-Abdalá, V.I.; Solís-Sánchez, L.O. Developing a Cloud and IoT-Integrated Remote Laboratory to Enhance Education 4.0: An Approach for FPGA-Based Motor Control. Appl. Sci. 2024, 14, 10115.

12. Ruiz-Palmero, J.; Colomo-Magaña, E.; Ríos-Ariza, J.M.; Gómez-García, M. Big Data in Education: Perception of Training Advisors on Its Use in the Educational System. Soc. Sci. 2020, 9, 53.

13. Spaho, E.; Çiço, B.; Shabani, I. IoT Integration Approaches into Personalized Online Learning: Systematic Review. Computers 2025, 14, 63.

14. Petchamé, J.; Iriondo, I.; Korres, O.; Paños-Castro, J. Digital transformation in higher education: A qualitative evaluative study of a hybrid virtual format using a smart classroom system. Heliyon 2023, 9, e16675.

15. Bygstad, B.; Øvrelid, E.; Ludvigsen, S.; Dæhlen, M. From dual digitalization to digital learning space: Exploring the digital transformation of higher education. Comput. Educ. 2022, 182, 104463.

16. Nicklin, L.L.; Wilsdon, L.; Chadwick, D.; Rhoden, L.; Ormerod, D.; Allen, D.; Witton, G.; Lloyd, J. Accelerated HE digitalisation: Exploring staff and student experiences of the COVID-19 rapid online-learning transfer. Educ. Inf. Technol. 2022, 27, 7653–7678.

17. Lu, H.P.; Wang, J.C. Exploring the effects of sudden institutional coercive pressure on digital transformation in colleges from teachers’ perspective. Educ. Inf. Technol. 2023, 28, 15991–16015.

18. Cramarenco, R.E.; Burcă-Voicu, M.I.; Dabija, D.-C. Student Perceptions of Online Education and Digital Technologies during the COVID-19 Pandemic: A Systematic Review. Electronics 2023, 12, 319.

19. Akour, M.; Alenezi, M. Higher education future in the era of digital transformation. Educ. Sci. 2022, 12, 784.

20. Norabuena-Figueroa, R.P.; Deroncele-Acosta, A.; Rodríguez-Orellana, H.M.; Norabuena-Figueroa, E.D.; Flores-Chinte, M.C.; Huamán-Romero, L.L.; Tarazona-Miranda, V.H.; Mollo-Flores, M.E. Digital Teaching Practices and Student Academic Stress in the Era of Digitalization in Higher Education. Appl. Sci. 2025, 15, 1487.

21. Rahmani, A.M.; Groot, W.; Rahmani, H. Dropout in online higher education: A systematic literature review. Int. J. Educ. Technol. High. Educ. 2024, 21, 19.

22. Qolamani, K.I.B.; Mohammed, M.M. The digital revolution in higher education: Transforming teaching and learning. QALAMUNA J. Pendidik. Sos. Agama 2023, 1, 837–846.

23. Thai, D.T.; Quynh, H.T.; Linh, P.T.T. Digital transformation in higher education: An integrative review approach. TNU J. Sci. Technol. 2021, 226, 139–146.

24. Tinjić, D.; Nordén, A. Crisis-driven digitalization and academic success across disciplines. PLoS ONE 2024, 19, e0293588.

25. de Oliveira, C.F.; Sobral, S.R.; Ferreira, M.J.; Moreira, F. How does learning analytics contribute to prevent students’ dropout in higher education: A systematic literature review. Big Data Cogn. Comput. 2021, 5, 64.

26. Nurmalitasari; Awang Long, Z.; Faizuddin Mohd Noor, M. Factors influencing dropout students in higher education. Educ. Res. Int. 2023, 2023, 7704142.

27. Phan, M.; De Caigny, A.; Coussement, K. A decision support framework to incorporate textual data for early student dropout prediction in higher education. Decis. Support Syst. 2023, 168, 113940.

28. Roy, R.; Al-Absy, M.S.M. Impact of Critical Factors on the Effectiveness of Online Learning. Sustainability 2022, 14, 14073.

29. Li, C.; Herbert, N.; Yeom, S.; Montgomery, J. Retention Factors in STEM Education Identified Using Learning Analytics: A Systematic Review. Educ. Sci. 2022, 12, 781.

30. Hoca, S.; Dimililer, N. A Machine Learning Framework for Student Retention Policy Development: A Case Study. Appl. Sci. 2025, 15, 2989.

31. Guzmán Rincón, A.; Sotomayor Soloaga, P.A.; Carrillo Barbosa, R.L.; Barragán-Moreno, S.P. Satisfaction with the institution as a predictor of the intention to drop out in online higher education. Cogent Educ. 2024, 11, 2351282.

32. Gusenbauer, M.; Haddaway, N.R. Which academic search systems are suitable for systematic reviews or meta-analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Res. Synth. Methods 2020, 11, 181–217.

33. Donthu, N.; Kumar, S.; Mukherjee, D.; Pandey, N.; Lim, W.M. How to conduct a bibliometric analysis: An overview and guidelines. J. Bus. Res. 2021, 133, 285–296.

34. Kalibatienė, D.; Miliauskaitė, J. A hybrid systematic review approach on complexity issues in data-driven fuzzy inference systems development. Informatica 2021, 32, 85–118.

35. Yan, L.; Zhiping, W. Mapping the Literature on Academic Publishing: A Bibliometric Analysis on WOS. Sage Open 2023, 13.

36. Dhankhar, K. Training effectiveness evaluation models. A comparison. Indian J. Train. Dev. 2020, 3, 66–73.

37. Liu, S.; Zu, Y. Evaluation Models in Curriculum and Educational Program-A Document Analysis Research. J. Technol. Hum. 2024, 5, 32–38.

38. Ali, M.S.; Tufail, M.; Qazi, R. Training Evaluation Models: Comparative Analysis. Res. J. Soc. Sci. Econ. Rev. 2022, 3, 51–63.

39. Prasad, G.N.R. Evaluating student performance based on bloom’s taxonomy levels. In Proceedings of the Journal of Physics: Conference Series, Kalyani, India, 8–9 October 2020; IOP Publishing: Bristol, UK, 2021; Volume 1797, p. 012063.

40. Ullah, Z.; Lajis, A.; Jamjoom, M.; Altalhi, A.; Saleem, F. Bloom’s taxonomy: A beneficial tool for learning and assessing students’ competency levels in computer programming using empirical analysis. Comp. Appl. Eng. Educ. 2020, 28, 1628–1640.

41. West, J. Utilizing Bloom’s taxonomy and authentic learning principles to promote preservice teachers’ pedagogical content knowledge. Soc. Sci. Hum. Open 2023, 8, 100620.

42. Qiu, Z.; Wang, S.; Chen, X.; Xiang, X.; Chen, Q.; Kong, J. Research on the Influence of Nonmorphological Elements’ Cognition on Architectural Design Education in Universities: Third Year Architecture Core Studio in Special Topics “Urban Village Renovation Design”. Buildings 2023, 13, 2255.

43. Joseph-Richard, P.; Cadden, T. Delivery of e-Research-informed Teaching (e-RIT) in Lockdown: Case Insights from a Northern Irish University. In Agile Learning Environments amid Disruption: Evaluating Academic Innovations in Higher Education During COVID-19; Springer International Publishing: Cham, Switzerland, 2022; pp. 495–512.

44. Asghar, M.Z.; Afzaal, M.N.; Iqbal, J.; Waqar, Y.; Seitamaa-Hakkarainen, P. Evaluation of In-Service Vocational Teacher Training Program: A Blend of Face-to-Face, Online and Offline Learning Approaches. Sustainability 2022, 14, 13906.

45. Heydari, M.R.; Taghva, F.; Amini, M.; Delavari, S. Using Kirkpatrick’s model to measure the effect of a new teaching and learning methods workshop for health care staff. BMC Res. Notes 2019, 12, 338.

46. Alsalamah, A.; Callinan, C. The Kirkpatrick model for training evaluation: Bibliometric analysis after 60 years (1959–2020). Ind. Commer. Train. 2022, 54, 36–63.

47. Ghasemi, R.; Akbarilakeh, M.; Fattahi, A.; Lotfali, E. Evaluation of the Effectiveness of Academic Writing Workshop in Medical Students Using the Kirkpatrick Model. Nov. Biomed. 2020, 8, 29824.

48. Khan, N.F.; Ikram, N.; Murtaza, H.; Javed, M. Evaluating protection motivation based cybersecurity awareness training on Kirkpatrick’s Model. Comput. Secur. 2023, 125, 103049.

49. Gultom, C.S.H.; Komala, R.; Akbar, M. Flight Attendant Training Program Evaluation Based on Kirkpatrick Model. Nusant. Sci. Technol. Proc. 2021, 2021, 352–361.

50. Toosi, M.; Modarres, M.; Amini, M.; Geranmayeh, M. Context, Input, Process, and Product Evaluation Model in medical education: A systematic review. J. Educ. Health Promot. 2021, 10, 199.

51. Valarmathi, S.; Sivaranjani, E.; Sundar, J.S.; Srinivas, G.; Kalpana, S. Evaluation of Research Methodology Workshop Using CIRO Model. J. Comm. Med. Public Health Rep. 2024, 5, 14.

52. Ching, L.K.; Lee, C.Y.; Wong, C.K.; Lai, M.T.; Lip, A. Assessing the Zoom learning experience of the elderly under the effects of COVID in Hong Kong: Application of the IPO model. Interact. Technol. Smart Educ. 2023, 20, 367–384.

53. Yuan, S.; Rahim, A.; Kannappan, S.; Dongre, A.; Jain, A.; Kar, S.S.; Mukherjee, S.; Vyas, R. Success stories: Exploring perceptions of former fellows of a global faculty development program for health professions educators. BMC Med. Educ. 2024, 24, 1072.

54. Cahapay, M. Kirkpatrick model: Its limitations as used in higher education evaluation. Int. J. Assess. Tools Educ. 2021, 8, 135–144.

55. Afifah, S.; Mudzakir, A.; Nandiyanto, A.B.D. How to calculate paired sample t-test using SPSS software: From step-by-step processing for users to the practical examples in the analysis of the effect of application anti-fire bamboo teaching materials on student learning outcomes. Indones. J. Teach. Sci. 2022, 2, 81–92.

56. Fiandini, M.; Nandiyanto, A.B.D.; Al Husaeni, D.F.; Al Husaeni, D.N.; Mushiban, M. How to calculate statistics for significant difference test using SPSS: Understanding students comprehension on the concept of steam engines as power plant. Indones. J. Sci. Technol. 2024, 9, 45–108.

57. Janczyk, M.; Pfister, R. Confidence Intervals. In Understanding Inferential Statistics; Springer: Berlin/Heidelberg, Germany, 2023; pp. 69–80.

58. Lenhard, W.; Lenhard, A. Computation of Effect Sizes. Available online: https://www.psychometrica.de/effect_size.html (accessed on 10 March 2025).

59. Kraft, M.A. Interpreting Effect Sizes of Education Interventions. Educ. Res. 2020, 49, 241–253.

60. Robal, T.; Reinsalu, U.; Jürimägi, L.; Heinsar, R. Introducing rapid web application development with Oracle APEX to students of higher education. New Trends Comput. Sci. 2024, 2, 69–80.

61. Robal, T.; Reinsalu, U.; Leoste, J.; Jürimägi, L.; Heinsar, R. Teaching Rapid Application Development Skills for Digitalisation Challenges. In Digital Business and Intelligent Systems; Lupeikienė, A., Ralyté, J., Dzemyda, G., Eds.; Springer: Cham, Switzerland, 2024; Volume 2157.

62. Radvilaitė, U.; Kalibatienė, D.; Stankevič, J. Implementing a rapid application development course in higher education and measuring its impact using Kirkpatrick’s model: A case study at Vilnius Gediminas Technical University. New Trends Comput. Sci. 2024, 2, 81–90.

63. Bell, K. Increasing undergraduate student satisfaction in Higher Education: The importance of relational pedagogy. J. Furth. High. Educ. 2022, 46, 490–503.

64. Baig, M.I.; Yadegaridehkordi, E. Flipped classroom in higher education: A systematic literature review and research challenges. Int. J. Educ. Technol. High. Educ. 2023, 20, 61.

65. Yangari, M.; Inga, E. Educational Innovation in the Evaluation Processes within the Flipped and Blended Learning Models. Educ. Sci. 2021, 11, 487.

66. Colomo Magaña, A.; Colomo Magaña, E.; Guillén-Gámez, F.D.; Cívico Ariza, A. Analysis of Prospective Teachers’ Perceptions of the Flipped Classroom as a Classroom Methodology. Societies 2022, 12, 98.

67. Khaldi, A.; Bouzidi, R.; Nader, F. Gamification of e-learning in higher education: A systematic literature review. Smart Learn. Environ. 2023, 10, 10.

68. Mellado, R.; Cubillos, C.; Vicari, R.M.; Gasca-Hurtado, G. Leveraging Gamification in ICT Education: Examining Gender Differences and Learning Outcomes in Programming Courses. Appl. Sci. 2024, 14, 7933.

69. de la Peña, D.; Lizcano, D.; Martínez-Álvarez, I. Learning through play: Gamification model in university-level distance learning. Entertain. Comput. 2021, 39, 100430.

70. Kurtz, G.; Amzalag, M.; Shaked, N.; Zaguri, Y.; Kohen-Vacs, D.; Gal, E.; Zailer, G.; Barak-Medina, E. Strategies for Integrating Generative AI into Higher Education: Navigating Challenges and Leveraging Opportunities. Educ. Sci. 2024, 14, 503.

71. Khlaif, Z.N.; Ayyoub, A.; Hamamra, B.; Bensalem, E.; Mitwally, M.A.A.; Ayyoub, A.; Hattab, M.K.; Shadid, F. University Teachers’ Views on the Adoption and Integration of Generative AI Tools for Student Assessment in Higher Education. Educ. Sci. 2024, 14, 1090.

72. Nikolovski, V.; Trajanov, D.; Chorbev, I. Advancing AI in Higher Education: A Comparative Study of Large Language Model-Based Agents for Exam Question Generation, Improvement, and Evaluation. Algorithms 2025, 18, 144.

73. Huang, Q.; Lv, C.; Lu, L.; Tu, S. Evaluating the Quality of AI-Generated Digital Educational Resources for University Teaching and Learning. Systems 2025, 13, 174.

| Model | Levels | Usage | Data Collection Tool | Purpose |
|---|---|---|---|---|
| (1) | (2) | (3) | (4) | (5) |
| Bloom’s taxonomy | 1. Knowledge; 2. Comprehension; 3. Application; 4. Analysis; 5. Synthesis; 6. Evaluation [39,40,41] | Preparing assessment questions, planning learning outcomes and assessment [40,41] | Set of 30 questions, 5 for each level [39]; empirical test [40] | Determine which learning (competency) level has been achieved [40,42] |
| Brinkerhoff’s Success Case Method (SCM) | 1. Goal Setting; 2. Program Design; 3. Program Implementation; 4. Immediate Outcomes; 5. Intermediate or Usage Outcomes; 6. Impacts and Worth [38] | Online teaching for postgraduates [43] | Survey and interviews [43] | Identify the additional factors impacting success or failure |
| Kirkpatrick’s model | 1. Reaction; 2. Learning; 3. Behavior; 4. Results [36,37,44] | Training for healthcare staff [45]; medical education [46]; scientific writing workshop for medical students [47]; cybersecurity training [48]; flight attendant training program [49] | Questionnaire for reaction; pre-test and post-test for learning; observational checklist for behavior [45,48] | Effectiveness of training, learning measurement [38] |
| CIPP model | 1. Context evaluation; 2. Input evaluation; 3. Process evaluation; 4. Product evaluation [36,37,44] | Formal education systems [36,37]; medical education programs [50] | Questionnaires [50] | Improve the curriculum or the educational program [50] |
| CIRO model | 1. Context; 2. Input; 3. Reaction; 4. Output [36] | Research methodology workshop for postgraduate students from medical colleges [51] | Feedback questionnaires; follow-up test; pre- and post-test [51] | Monitor trainee’s progress before, during and after training [38] |
| IPO model | 1. Input; 2. Process; 3. Output; 4. Outcome [36] | Elderly students’ perceptions regarding their Zoom learning experiences [52] | Online survey and focus group interviews [52] | Maximize the efficiency of training while reducing its cost [38] |

| Module 1 | Module 2 |
|---|---|
| 1. Introduction to Module 1; 2. Introduction to Databases; 3. Relational Databases; 4. Database Normalization (1–3); 5. Physical Data Model; 6. Access to Oracle APEX Environment; 7. Introduction to Structured Query Language (SQL); 8. Application (App) Development in APEX (at wizard level) | 1. Introduction to Module 2; 2. APEX Course Project; 3. Advanced Data Normalization (3+ additional); 4. Advanced SQL; 5. App building in APEX: pages and reports; 6. App building in APEX: forms; 7. App building in APEX: navigation and styles; 8. Other Advanced Functions in APEX |

| Answers | Module 1 (%) | Module 2 (%) |
|---|---|---|
| Strongly Disagree (1) | 9.17 | 4.40 |
| Somewhat Disagree (2) | 8.30 | 11.54 |
| Neither Agree nor Disagree (3) | 22.71 | 25.27 |
| Somewhat Agree (4) | 33.62 | 35.16 |
| Strongly Agree (5) | 26.20 | 23.63 |
| Number of responses (count) | 33 | 27 |
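
The answer distribution in the table above can be condensed into two summary figures per module. The following is a minimal sketch, assuming the five answer rows are percentage shares of all answers on the 1 to 5 scale (each column sums to 100). The names `LIKERT_SHARES`, `mean_likert` and `agreement_share` are illustrative and do not come from the study.

```python
# Percentage shares per answer option (1 = Strongly Disagree ... 5 = Strongly Agree),
# copied from the table above.
LIKERT_SHARES = {
    "Module 1": [9.17, 8.30, 22.71, 33.62, 26.20],
    "Module 2": [4.40, 11.54, 25.27, 35.16, 23.63],
}


def mean_likert(shares):
    """Share-weighted mean score on the 1-5 scale."""
    weighted = sum(score * share for score, share in enumerate(shares, start=1))
    return weighted / sum(shares)


def agreement_share(shares):
    """Percentage of answers that are 'Somewhat Agree' or 'Strongly Agree'."""
    return 100 * (shares[3] + shares[4]) / sum(shares)


if __name__ == "__main__":
    for module, shares in LIKERT_SHARES.items():
        print(f"{module}: mean = {mean_likert(shares):.2f}, "
              f"agree = {agreement_share(shares):.1f}%")
```

Under these assumptions the weighted means come out at roughly 3.59 for Module 1 and 3.62 for Module 2, with a little under 60% of answers in the two agreeing categories for both modules.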

| Attributes | Module 1 Values | Module 2 Values |
|---|---|---|
| Variance for pre-test | 1.68 | 1.54 |
| Variance for post-test | 2.27 | 1.50 |
| Mean for pre-test | 6.05 | 6.78 |
| Mean for post-test | 7.23 | 7.56 |
| DF | 79 | 77 |
| t Stat | −3.7964 | −2.8808 |
| P(T ≤ t) two-tail | 0.00029 | 0.00514 |
| t Critical two-tail | 1.99045 | 1.99125 |
| Confidence level | 0.95 | 0.95 |
| Confidence interval | 0.378–1.29 | 0.193–1.075 |
| Effect size | 0.838 | 0.634 |
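
The effect sizes in the table above can be approximately re-derived from the reported means and variances. The following is a minimal sketch, assuming the effect size is Cohen's d computed with the square root of the average of the two variances as the pooled standard deviation. The text shown here does not state which formula was used, so this choice, like the function names `cohens_d` and `is_significant`, is an assumption.

```python
import math


def cohens_d(mean_pre, mean_post, var_pre, var_post):
    """Cohen's d with the pooled SD taken as sqrt of the mean of both variances."""
    pooled_sd = math.sqrt((var_pre + var_post) / 2)
    return (mean_post - mean_pre) / pooled_sd


def is_significant(t_stat, t_critical):
    """Two-tailed decision rule: reject H0 when |t| exceeds the critical value."""
    return abs(t_stat) > t_critical


if __name__ == "__main__":
    # Values copied from the table above (rounded as published).
    d1 = cohens_d(6.05, 7.23, 1.68, 2.27)
    d2 = cohens_d(6.78, 7.56, 1.54, 1.50)
    print(f"Module 1: d = {d1:.3f}, significant = {is_significant(-3.7964, 1.99045)}")
    print(f"Module 2: d = {d2:.3f}, significant = {is_significant(-2.8808, 1.99125)}")
```

With the rounded inputs, this formula gives values within about 0.002 of the published 0.838 and 0.634, and both t statistics exceed their critical values in absolute terms, in line with the reported two-tail p-values below 0.05.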


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Radvilaitė, U.; Kalibatienė, D. Integrating Rapid Application Development Courses into Higher Education Curricula. Appl. Sci. 2025, 15, 3323. https://doi.org/10.3390/app15063323
