Abstract

Hair loss affects over 30% of the global population, impacting psychological well-being and social interactions. Robotic hair transplantation has emerged as a pivotal solution, requiring precise hair follicle detection for effective treatment. Traditional methods utilizing horizontal bounding boxes (HBBs) often misclassify due to the follicles’ elongated shapes and varied orientations. This study introduces YOLO-OHFD, a novel YOLO-based method using oriented bounding boxes (OBBs) for improved hair follicle detection in dermoscopic images, addressing the limitations of traditional HBB approaches by enhancing detection accuracy and computational efficiency. YOLO-OHFD incorporates the ECA-Res2Block in its feature extraction network to manage occlusions and hair follicle orientation variations effectively. A Feature Alignment Module (FAM) is embedded within the feature fusion network to ensure precise multi-scale feature integration. We utilize angle classification over regression for robust angle prediction. The method was validated using a custom dataset comprising 500 dermoscopic images with detailed annotations of hair follicle orientations and classifications. The proposed YOLO-OHFD method outperformed existing techniques, achieving a mean average precision (mAP) of 87.01% and operating at 43.67 frames per second (FPS). These metrics attest to its efficacy and real-time application potential. The angle classification component particularly enhanced the stability and precision of orientation predictions, critical for the accurate positioning required in robotic procedures. YOLO-OHFD represents a significant advancement in robotic hair transplantation, providing a robust framework for precise, efficient, and real-time hair follicle detection. Future work will focus on refining computational efficiency and testing in dynamic surgical environments to broaden the clinical applicability of this technology.

1. Introduction

Hair loss is a pervasive global issue that affects more than 30% of the population, ranging from mild to severe cases [1]. Alarmingly, the prevalence of hair loss is increasing annually and affecting younger demographics, severely impacting individuals’ family lives, social opportunities, and employment prospects. Moreover, hair loss can exacerbate psychological conditions such as anxiety and depression [2]. Hair transplantation has emerged as one of the most effective treatments, enabling the transplantation of healthy hair follicles to areas of hair loss. However, this process is highly demanding, requiring significant expertise, precision, and prolonged procedural durations, which may limit its accessibility and efficiency [3].

Hair transplantation procedures have seen advancements through the integration of robotics and image processing techniques, aimed at enhancing precision and reducing human error, as shown in Figure A1. For example, Hoffmann [4] proposed using dermoscopy and automatic digital image analysis for segmenting hairs, enabling the calculation of parameters like hair diameter and density. Vallotton et al. [5] developed a method combining color space transformation with a difference of Gaussian filtering for counting and measuring hair lengths. Shih [6] introduced preprocessing techniques such as segmental linear contrast stretching and multi-scale detection methods for hair segmentation. Despite their contributions, these methods are sensitive to parameter configurations and often struggle with background interference during hair segmentation.

Deep learning approaches have shown promising improvements in hair follicle detection and classification by leveraging diverse image features. Konstantinos [7] improved segmentation accuracy using RetinaNet and SegNet algorithms to identify preoperative hair follicles, hair locations, and postoperative scar marks. Kubaisi [8] applied a multi-task deep learning method based on Mask R-CNN [9] to detect hair follicles and evaluate hair loss severity. Further, Kim et al. [10] explored the performance of EfficientDet [11], YOLOv4 [12], and DetectoRS [13] in identifying and classifying different hair follicle types. Additionally, Gu et al. [14] implemented Faster R-CNN [15] and SSD [16] for detailed scalp health analysis, while Gallucci et al. [17] employed DenseNet121 [18] and U-Net [19] for automated hair counting.

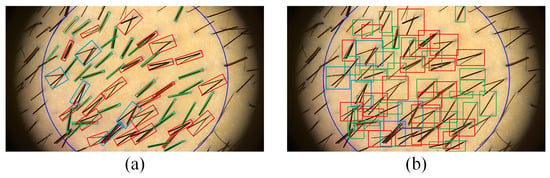

Existing methods for hair follicle detection primarily utilize horizontal bounding boxes (HBBs) for localization. However, the elongated and arbitrarily oriented nature of hair follicles in dermoscopic images poses challenges for HBB-based approaches. These methods often include excessive background information, leading to overlapping bounding boxes and increased instances of misdetections or omissions. In contrast, oriented bounding boxes (OBBs) can more accurately represent the shape, size, and direction of hair follicles, minimizing background interference. Figure 1 illustrates the superiority of OBB labeling over HBB labeling for hair follicles.

Figure 1.

Comparison of hair follicles labeled with different detection boxes. (a) HBB-labeled hair follicles. (b) OBB-labeled hair follicles.

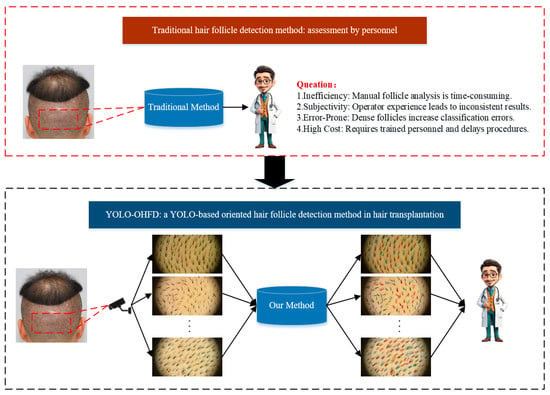

While oriented detection methods such as R2CNN [20], RRPN [21], SCRDet [22], RoI Transformer [23], and Gliding Vertex [24], among others [25,26,27,28,29], have proven effective in applications like remote sensing and text detection, their direct application to oriented hair follicle detection is limited because densely packed and overlapping follicles are harder to classify. As shown in Figure 2, this study addresses the challenges of manual follicle analysis: inefficiency due to time-consuming processes, subjectivity arising from operator-dependent variability, susceptibility to errors in dense follicle classification, and the high costs associated with the need for trained personnel and delayed procedures.

Figure 2.

Motivation of this study.

To overcome the limitations of existing approaches, this study introduces a YOLO-based oriented hair follicle detection method named YOLO-OHFD. The proposed method incorporates oriented object detection to address the challenges of detecting hair follicles with large aspect ratios and arbitrary orientations. It employs ECA-Res2Block to enhance feature focus on hair follicles, improving classification accuracy. Additionally, the Feature Alignment Module (FAM) and decoupled heads are utilized to enhance OBB localization precision. An OBB-based dataset is constructed specifically for hair follicle detection and classification, providing a robust foundation for robot-assisted hair transplantation tasks.

The main contributions of this paper are summarized as follows:

- An oriented object detection method is introduced for the hair follicle detection task, enabling precise localization of hair follicles and reducing background interference in robot-assisted hair transplantation.

- A YOLO-OHFD framework is proposed, integrating ECA-Res2Block, FAM, and decoupled heads to enhance the network’s accuracy in detecting and classifying hair follicles.

- An OBB-based oriented hair follicle detection dataset is developed, offering a benchmark for evaluating methods designed for hair follicle detection and classification in dermoscopic images.

The rest of this paper is organized as follows. Section 2 describes the framework of the proposed method and its specific details. Section 3 presents the rotating frame-based scalp hair follicle dataset, followed by the experimental results and discussion. Finally, the conclusions are presented in Section 4.

2. Materials and Methods

2.1. Proposed Method

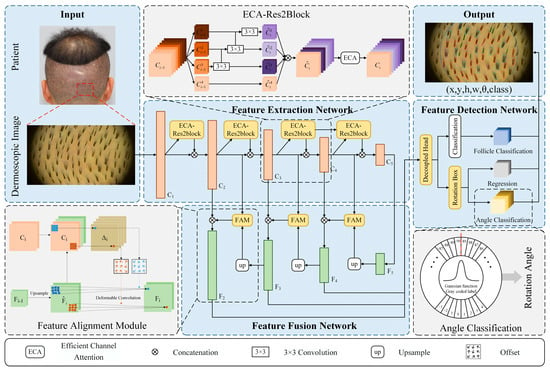

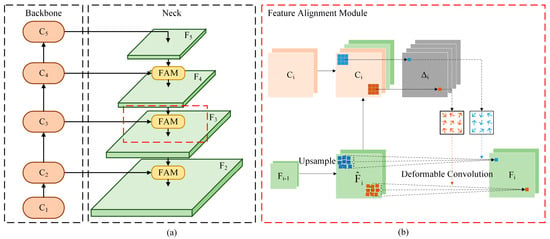

In this study, we introduce a novel oriented object detection method tailored for hair follicle identification, designated as YOLO-OHFD (You Only Look Once–Oriented Hair Follicle Detection). As depicted in Figure 3, the proposed system architecture comprises three core components: a feature extraction network, a feature fusion network, and a detection network. Initially, dermoscopic images from the posterior occipital region are processed through the feature extraction network, which integrates ECA-Res2Block to facilitate multi-scale feature extraction. This network captures feature layers at various scales (C1, C2, C3, C4, C5), essential for analyzing the orientation of hair follicles. Subsequently, these multi-scale feature layers are amalgamated in the feature fusion network, resulting in combined feature maps (F2, F3, F4, F5). The Feature Alignment Module (FAM) within this network addresses potential misalignments during the fusion of oriented object features, ensuring precise feature integration. The enriched feature maps (F2, F3, F4) are then relayed to the detection network, which predicts the position (x, y, w, h) and orientation angle θ of each follicle, alongside classifying the follicles into categories {1, 2, 3, 3+} based on the number of hairs. These classifications correspond to Class1, Class2, Class3, and Class4, representing follicles containing one, two, three, and more than three hairs, respectively. For visual clarity, Figure 1 illustrates these classifications: green boxes indicate single-hair follicles, red boxes denote two-hair follicles, blue boxes represent three-hair follicles, and purple boxes are used for follicles with more than three hairs. To enhance the precision of angle prediction, this study employs angle classification instead of regression. The subsequent sections provide a detailed description of these methodologies and their implementation.

Figure 3.

Illustration of the proposed YOLO-OHFD method. This diagram illustrates the architecture of a system designed for oriented hair follicle detection. The input image is first processed through a feature extraction network using ECA-Res2Block to capture multi-scale features (C1–C5). These features are then aligned and fused in the feature fusion network via Feature Alignment Modules (FAM), producing combined feature maps (F2–F5). Finally, the feature detection network utilizes these maps to predict the location and orientation of follicles, as well as to classify them. The output includes the follicle’s position, orientation, and classification.

2.2. Structure of YOLO-OHFD

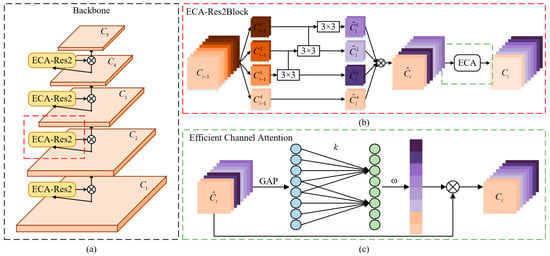

2.2.1. Feature Extraction Network Based on Adaptive Receptive Field

To address the inherent challenges of hair follicle detection, we introduce an enhanced feature extraction module named ECA-Res2Block, integrated within the CSPDarknet53 framework. CSPDarknet53 [30], widely used in oriented detection tasks, combines the Cross Stage Partial (CSP) strategy with the Darknet53 architecture, a 53-layer convolutional network known for its robust feature extraction capabilities. The CSP approach enhances training efficiency and network performance by splitting the network into two pathways and facilitating feature reuse and propagation through cross-stage connections [31].

Despite the strengths of CSPDarknet53, utilizing it directly for hair follicle detection presents two significant challenges. Firstly, hair follicles exhibit considerable variability in terms of hair type, thickness, and length across different populations, complicating feature extraction [32]. This variability tends to diminish the representation of finer hairs following successive downsampling operations. Secondly, incorporating angle prediction into the detection of oriented hair follicles, which often possess large aspect ratios, complicates the feature extraction process further.

To overcome these challenges, we integrate the ECA-Res2Block module into CSPDarknet53. This module leverages the structural benefits of Res2Block, which features multiple parallel branches of convolution and pooling layers. These branches capture a diverse range of feature scales and resolutions, enhancing the network’s ability to synthesize information across different scales. Efficient Channel Attention (ECA) is then applied to the outputs of the Res2Block. As depicted in Figure 4c, ECA adaptively increases the weights of crucial channels within the Res2Net branches, boosting the network’s ability to extract pertinent features while preserving the details needed to recognize specific hair characteristics. This integration not only mitigates the feature loss associated with downsampling but also enhances the network’s overall ability to discern and classify complex hair follicle structures accurately.

Figure 4.

Structure of feature extraction network based on adaptive receptive field. (a) Backbone is the feature extraction network named CSPDarknet53. (b) Structure of ECA-Res2Block. (c) Structure of ECA. ECA obtains aggregated features by global average pooling (GAP) and then generates channel weights by a 1D convolution with kernel size k, where k is determined adaptively from the channel dimension.
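The adaptive choice of k can be illustrated with a short sketch. The mapping below follows the rule published with ECA-Net, in which k is an odd integer derived from log2 of the channel count; the defaults `gamma=2` and `b=1`, the function names, and the fixed averaging kernel (standing in for learned 1D convolution weights) are assumptions for illustration and are not taken from this study.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size: an odd integer derived from log2(channels)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca_weights(channel_means, kernel):
    """Channel weights from GAP outputs: zero-padded 1D convolution, then sigmoid."""
    k = len(kernel)
    pad = k // 2
    padded = [0.0] * pad + list(channel_means) + [0.0] * pad
    weights = []
    for i in range(len(channel_means)):
        z = sum(kernel[j] * padded[i + j] for j in range(k))
        weights.append(1.0 / (1.0 + math.exp(-z)))
    return weights


k = eca_kernel_size(256)
# Hypothetical averaging kernel; in a trained network these weights are learned.
demo_weights = eca_weights([0.2, 1.5, -0.3, 0.8], kernel=[1.0 / 3] * 3)
```

Each input channel is then multiplied by its weight, so the attention step adds only k parameters per block.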

2.2.2. Feature Fusion Network Based on Feature Alignment

After a detailed description of the YOLO-OHFD feature extraction network structure and its core component, ECA-Res2Block, we will now focus on the construction and optimization of the feature fusion network. This section is key to understanding how the entire detection framework effectively integrates and utilizes multi-scale features.

A feature alignment module (FAM) is proposed and integrated into the feature pyramid structure (FPN) to enhance multi-scale feature fusion.

FPN builds a pyramid of feature maps at different resolutions and fuses them top-down, upsampling each coarser map before merging it with the next finer one. This helps the network process image information at different scales and improves performance on visual tasks, particularly object detection and localization. The structure of the FPN is shown in Figure 5a.

Figure 5.

Structure of feature fusion network based on FAM. (a) Neck is the feature fusion network named FPN. (b) Structure of FAM based on DCN.

However, in oriented object detection, the FPN may suffer from feature misalignment during upsampling and fusion [28]. This is especially relevant to oriented hair follicle detection, where the follicle has a large aspect ratio and can appear at any angle. If the layers of the FPN are not properly aligned, hair follicle features are expressed inconsistently across angles, which makes it difficult to identify object boundaries correctly and increases the sensitivity of the detection results to angle changes. This may reduce the stability of follicle localization in rotated detection.

To solve this problem, we propose a FAM and integrate it into the upsampling process of the FPN. The structure of the FAM is shown in Figure 5b. First, the feature map $F_{i+1}$ is upsampled to obtain $F_{i+1}^{up}$, then the offset at each pixel between $C_i$ and $F_{i+1}^{up}$ is calculated and converted into an offset in 2D space. The calculation formula is shown in Equation (1):

$$\Delta_i = f\left(\mathrm{Concat}\left(C_i, F_{i+1}^{up}\right)\right) \quad (1)$$

where $\Delta_i$ is the offset between each pixel in the two feature layers, $f(\cdot)$ is the function that transforms the pixel offset, and $\mathrm{Concat}(\cdot)$ denotes the stitching of the two feature layers.

Then, the offsets are learned by a deformable convolution network (DCN) and applied to the upsampled feature map for alignment. The calculation process is shown in Equation (2):

$$F_{i+1}^{a} = \mathrm{DCN}\left(F_{i+1}^{up}, \Delta_i\right) \quad (2)$$

where $F_{i+1}^{a}$ is the aligned feature map and $\mathrm{DCN}(\cdot)$ is the deformable convolution function.

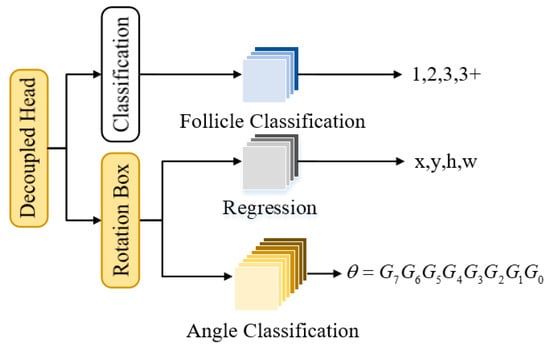

2.2.3. Feature Detection Network Based on Decoupled Heads

Decoupled heads are employed to predict the position, angle, and classification of oriented hair follicles. Additionally, angle smoothing algorithms and densely encoded labels are integrated into the angle classification prediction to supplant the conventional angle regression approach.

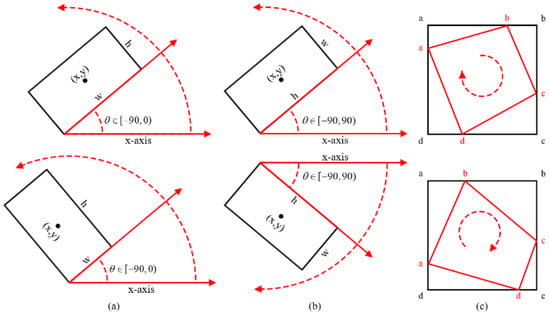

Currently, the prevalent methods for defining Oriented Bounding Boxes (OBBs) include the five-parameter and eight-parameter methods. Figure 6 illustrates these common approaches. The five-parameter method, characterized by fewer parameters and reduced computational complexity, is more apt for this study. Nonetheless, the interchangeability of edges in the OpenCV definition method can lead to irregular loss calculations. Consequently, we have chosen to utilize the long edge definition method to represent the OBB, which provides a more stable and accurate framework for our analysis [33].

Figure 6.

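OBB definition methods. (a) OpenCV definition method: $(x, y, w, h, \theta)$. (b) Long edge definition method: $(x, y, w, h, \theta)$, with the angle measured from the long edge. (c) Ordered quadrilateral definition method: $(x_1, y_1, x_2, y_2, x_3, y_3, x_4, y_4)$.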
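As a minimal sketch of the long edge definition, the helper below normalizes a five-parameter box so that the first side length is always the longer one. The function name, the use of degrees, and the angle range [-90, 90) are assumptions chosen for illustration; the source does not state the exact range it uses.

```python
def to_long_edge(x, y, w, h, theta_deg):
    """Return (x, y, long_side, short_side, theta) with theta in [-90, 90) degrees."""
    if w < h:
        # Swap the sides and rotate the reference edge by 90 degrees.
        w, h = h, w
        theta_deg += 90.0
    # Wrap the angle into [-90, 90); a box is unchanged by a 180 degree turn.
    theta_deg = (theta_deg + 90.0) % 180.0 - 90.0
    return x, y, w, h, theta_deg


box_a = to_long_edge(50, 40, 4, 20, -30)   # short side listed first
box_b = to_long_edge(50, 40, 20, 4, -30)   # already in long edge form
```

Because the long side is fixed as the reference, the two side lengths can no longer swap roles between a prediction and its ground truth, which is the irregularity the OpenCV definition can introduce into the loss.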

The parameters (x, y, w, h, θ) of the OBB are predicted directly by regression, which has worked well in many rotation detection tasks. However, angular regression suffers from a boundary discontinuity problem. It produces large loss values near the angular boundary, so targets whose angles lie close to the boundary may be misdetected or missed.

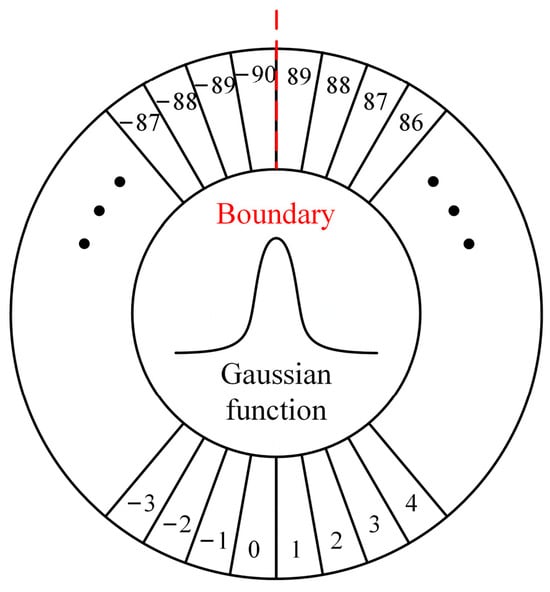

Therefore, we solve the above problem by transforming angle prediction from a regression problem into a classification problem. First, the hair angle is discretized into categories; 180 categories are defined in this article. Then, the circular smooth label (CSL) is used to ensure continuity between adjacent predicted angles [34]. The structure of CSL is shown in Figure 7. The smoothed label, against which the loss of the predicted label is calculated, is given by Equation (3):

$$\mathrm{CSL}(x) = \begin{cases} g(x), & \theta - r < x < \theta + r \\ 0, & \text{otherwise} \end{cases} \quad (3)$$

where $\theta$ is the angle of the oriented box, $g(x)$ is a Gaussian function used as the window function, and $r$ is the radius of the window function.

Figure 7.

Circular smooth label.
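The circular smooth label can be sketched in a few lines. The example below builds a 180-bin label with a Gaussian window that wraps around the angular boundary; the function name, the choice of one bin per degree, and the Gaussian standard deviation (set equal to the window radius) are assumptions for illustration, since the source does not specify them.

```python
import math


def circular_smooth_label(theta_bin, num_bins=180, radius=6, sigma=None):
    """Gaussian-windowed label centred on theta_bin, wrapping around the boundary."""
    # Assumption: the Gaussian standard deviation defaults to the window radius.
    sigma = float(radius) if sigma is None else sigma
    label = []
    for x in range(num_bins):
        d = abs(x - theta_bin)
        d = min(d, num_bins - d)          # circular distance between bins
        if d == 0:
            label.append(1.0)             # the true angle always gets full weight
        elif d < radius:
            label.append(math.exp(-(d * d) / (2.0 * sigma * sigma)))
        else:
            label.append(0.0)             # outside the window
    return label


label_at_zero = circular_smooth_label(0)
one_hot = circular_smooth_label(90, radius=0)
```

With the label centred on bin 0, bins 1 and 179 receive the same weight, so a prediction just across the boundary is penalized as lightly as one just inside it. A radius of 0 reduces the label to a one-hot vector.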

However, the classification for 180 categories leads to too thick a prediction layer, and a huge number of parameters reduce the operation efficiency. Therefore, the Gray-coded label (GCL) is proposed to reconstruct the prediction layer, which greatly reduces the parameters of the prediction layer without affecting the angular prediction error [35].
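To see why a Gray-coded label shrinks the prediction layer, note that 180 angle categories fit into 8 bits, since 2^7 = 128 < 180 ≤ 256 = 2^8. The sketch below encodes and decodes an angle bin with the standard reflected Gray code; the function names and the 8-bit width are assumptions derived from this arithmetic, not details confirmed by the source.

```python
def angle_to_gray_bits(angle_bin, num_bits=8):
    """Encode an angle bin as a list of Gray-code bits (most significant first)."""
    gray = angle_bin ^ (angle_bin >> 1)
    return [(gray >> i) & 1 for i in reversed(range(num_bits))]


def gray_bits_to_angle(bits):
    """Decode Gray-code bits (most significant first) back to the angle bin."""
    gray = 0
    for bit in bits:
        gray = (gray << 1) | bit
    value = 0
    while gray:
        value ^= gray
        gray >>= 1
    return value


codes = [angle_to_gray_bits(a) for a in range(180)]
```

Under these assumptions the angle branch needs 8 outputs instead of 180, and consecutive angle bins differ in exactly one bit.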

In addition, in order to solve the coupling problem between hair follicle position, angle, and classification, we design a decoupled head with the structure shown in Figure 8. The decoupled head distributes the regression and classification tasks through parallel convolutional layers, which can better apply the feature information focused on by different tasks for prediction, in order to further improve the accuracy of detection.

Figure 8.

Structure of decoupled head network. 1, 2, 3, 3+ denote the four classes of hair follicles. (x, y) is the center point of the follicle bounding box, and w and h are the width and height of the box. θ is the angle of the rotation box, expressed in Gray code.

2.2.4. Loss Calculation Based on Oriented Object Detection

The loss function of YOLO-OHFD includes localization loss $L_{loc}$, angle classification loss $L_{ang}$, hair follicle classification loss $L_{cls}$, and confidence loss $L_{conf}$, as shown in Equation (4).

$$L = L_{loc} + L_{ang} + L_{cls} + L_{conf} \quad (4)$$


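First, among the loss calculations, $L_{cls}$, $L_{ang}$, and $L_{conf}$ can be calculated by using binary cross entropy (BCE), as shown in Equation (5).

$$
\begin{aligned}
L_{cls} &= -\sum_{i=1}^{N}\sum_{k=1}^{N_{cls}}\left[\hat{p}_i(k)\log p_i(k) + \left(1-\hat{p}_i(k)\right)\log\left(1-p_i(k)\right)\right] \\
L_{ang} &= -\sum_{i=1}^{N}\sum_{t=1}^{N_{ang}}\left[\hat{q}_i(t)\log q_i(t) + \left(1-\hat{q}_i(t)\right)\log\left(1-q_i(t)\right)\right] \\
L_{conf} &= -\sum_{i=1}^{N}\left[\hat{s}_i\log s_i + \left(1-\hat{s}_i\right)\log\left(1-s_i\right)\right]
\end{aligned} \quad (5)
$$

where $p$ and $\hat{p}$ denote the probability distributions of the prediction box and ground truth box, $q$ and $\hat{q}$ denote the probability distributions of the predicted and actual angle, and $s$ and $\hat{s}$ denote the predicted and actual confidence levels. $N_{ang}$ indicates the number of rotation angle categories, $N_{cls}$ indicates the number of follicle categories, and $N$ indicates the number of anchor boxes.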

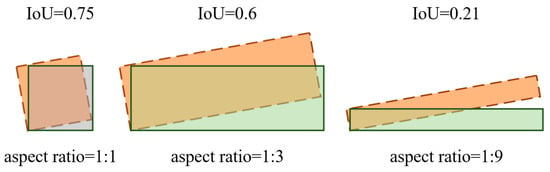

Then, the localization loss $L_{loc}$ is calculated. In oriented hair follicle detection, the hair follicle has a large aspect ratio. Traditional Intersection over Union (IoU) only measures the degree of overlap between bounding boxes, which does not reflect the actual loss of objects with large aspect ratios well and increases the difficulty of network learning. In contrast, Enhanced IoU (EIoU) adapts better to changes in detection box size by introducing effective areas, which makes it more suitable for calculating the hair follicle localization loss. The calculation of EIoU is shown in Equations (6)–(9).

$$L_{EIoU} = L_{IoU} + L_{dis} + L_{asp} \quad (6)$$

$$L_{IoU} = 1 - \mathrm{IoU} \quad (7)$$

$$L_{dis} = \frac{\rho^2\left(b, b^{gt}\right)}{C_w^2 + C_h^2} \quad (8)$$

$$L_{asp} = \frac{\rho^2\left(w, w^{gt}\right)}{C_w^2} + \frac{\rho^2\left(h, h^{gt}\right)}{C_h^2} \quad (9)$$

where $L_{IoU}$, $L_{dis}$, and $L_{asp}$ are the overlap loss, the center distance loss, and the width and height loss of the predicted box and ground truth box. $\rho(\cdot)$ is the Euclidean distance. $b$ and $b^{gt}$ are the centers of the predicted box and ground truth box. $w$ and $h$ are the width and height of the predicted box. $w^{gt}$ and $h^{gt}$ are the width and height of the ground truth box. $C_w$ and $C_h$ are the width and height of the minimum bounding rectangle that covers the predicted box and the ground truth box.
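The three terms described above (overlap, center distance, and width and height) can be sketched for the simpler axis-aligned case. This is an illustration under stated assumptions, not the authors' implementation: boxes are given as `(cx, cy, w, h)`, rotation is ignored, and the function name is hypothetical.

```python
def eiou_loss(pred, gt):
    """EIoU-style loss for two axis-aligned boxes given as (cx, cy, w, h)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt
    # Box extents along each axis.
    p_x1, p_x2, p_y1, p_y2 = px - pw / 2, px + pw / 2, py - ph / 2, py + ph / 2
    g_x1, g_x2, g_y1, g_y2 = gx - gw / 2, gx + gw / 2, gy - gh / 2, gy + gh / 2
    # Overlap term: 1 - IoU.
    inter_w = max(0.0, min(p_x2, g_x2) - max(p_x1, g_x1))
    inter_h = max(0.0, min(p_y2, g_y2) - max(p_y1, g_y1))
    inter = inter_w * inter_h
    union = pw * ph + gw * gh - inter
    l_iou = 1.0 - inter / union
    # Width and height of the smallest rectangle enclosing both boxes.
    c_w = max(p_x2, g_x2) - min(p_x1, g_x1)
    c_h = max(p_y2, g_y2) - min(p_y1, g_y1)
    # Center distance term, normalized by the squared enclosing diagonal.
    l_dis = ((px - gx) ** 2 + (py - gy) ** 2) / (c_w ** 2 + c_h ** 2)
    # Width and height term, each normalized by the enclosing extent.
    l_asp = (pw - gw) ** 2 / c_w ** 2 + (ph - gh) ** 2 / c_h ** 2
    return l_iou + l_dis + l_asp


same = eiou_loss((0, 0, 2, 2), (0, 0, 2, 2))
shifted = eiou_loss((0, 0, 2, 2), (1, 0, 2, 2))
```

For the shifted pair the IoU is 1/3 and the enclosing rectangle is 3 × 2, so the loss is 2/3 + 1/13 with no width or height penalty. Unlike plain IoU, the loss keeps a useful gradient from the distance and size terms even when overlap is small.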

3. Experiments

To validate the effectiveness of YOLO-OHFD in oriented hair follicle detection, several experiments were conducted. In this section, the experimental setup and performance evaluation metrics are described first. Then, ablation experiments are conducted to verify the effectiveness of the feature extraction module, feature fusion module, feature detection module, and loss calculation method.

This section details the comprehensive experiments conducted to evaluate the performance of the YOLO-OHFD algorithm in detecting oriented hair follicles, focusing on both its accuracy and computational efficiency.

3.1. Experimental Setup

In this study, various technical tools were utilized. A dermatoscope (FotoFinder Bodystudio ATBM, FotoFinder Systems GmbH, Bad Birnbach, Germany) was employed to capture high-resolution images of hair follicles, which allowed for detailed analysis of their morphology and distribution. The computer hardware configuration included an Intel Core i7-12700K CPU (Intel Corporation, Santa Clara, CA, USA), providing powerful processing capabilities for efficient data handling and model training, along with an NVIDIA GeForce RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA), ensuring high-performance graphics processing suitable for large-scale deep learning model training and inference. The software environment consisted of PyTorch 1.10.0, the deep learning framework used to build, train, and evaluate the models, and Python 3.8.0, the programming language used to implement the algorithms and models in the experiments. Specific experimental details and related information can be obtained from the code at https://github.com/Melonpoint/YOLO-OHFD (accessed on 8 March 2025).

3.1.1. Dataset

We introduce a novel rotating frame-based scalp hair follicle dataset, developed in collaboration with Xiangya Hospital, which serves as the cornerstone for the experiments.

In this section, we build the first rotating frame-based scalp hair follicle dataset. In collaboration with Xiangya Hospital, one of the top medical institutions in China, we obtained dermoscopic images of the scalps of patients undergoing hair transplantation and the corresponding hair analysis reports. The 500 RGB scalp images were selected after a guided assessment by a professional doctor. The resolution of the images is 1920 × 1080, and each image is annotated using roLabelImg. The annotation of each image includes the location of each group of hair follicles, its classification, and its detection difficulty. The position information of hair follicles includes the coordinates of the center point of the group’s follicle contour (x,y), the distance of the center point from both the short and long sides (w,h), and the rotation angle of the bounding box. In addition, there is no standardized medical definition of hair follicle classification, and doctors usually make judgments based on actual application scenarios and their own experience and knowledge. Therefore, following the guidance of professional doctors, we classify hair follicles into four categories based on the number of hairs in the follicle: follicles with 1 hair, 2 hairs, 3 hairs, and more than 3 hairs. The dataset is divided into a training set (80%), a validation set (10%), and a test set (10%).

3.1.2. Data Preprocessing

Data preprocessing techniques are crucial for balancing the dataset and enhancing model training, which includes Mosaic augmentation and rotation adjustments to accommodate the diverse orientations of hair follicles.

In the preprocessing phase, the dataset contains a larger number of samples for follicles with fewer hairs (1 hair and 2 hairs) and fewer samples for follicles with more hairs (3 hairs and more than 3 hairs). Mosaic augmentation is employed to address this imbalance and increase the representation of follicles with more hairs. This technique involves randomly cropping and stitching together four dermoscopic images to generate new images, thereby enhancing the diversity of the training data. Additionally, since follicles can appear at various rotation angles in oriented follicle detection tasks, multiple image rotations are applied to further augment our dataset. This approach increased the diversity of the training data and improved the training effectiveness. The rotation angles we selected were −45°, −30°, −15°, 15°, 30°, and 45°. All experiments are carried out with the PyTorch 1.10.0 deep learning framework and Python 3.8.0, on an i7-12700K CPU and an NVIDIA GeForce RTX 3090 GPU.
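When an image is rotated for augmentation, its OBB annotations have to be rotated with it. The sketch below updates only the labels, under several assumptions not stated in the source: the rotation is about the image center, the canvas size is unchanged, the box angle is measured in the same rotational sense as the augmentation angle, and angles are wrapped to [-90, 90). The function name is hypothetical.

```python
import math

# Augmentation angles in degrees, as listed in the text.
ROTATION_ANGLES = [-45, -30, -15, 15, 30, 45]


def rotate_obb(box, angle_deg, image_size):
    """Rotate an OBB label (cx, cy, w, h, theta_deg) about the image centre."""
    cx, cy, w, h, theta = box
    img_w, img_h = image_size
    ox, oy = img_w / 2.0, img_h / 2.0
    a = math.radians(angle_deg)
    dx, dy = cx - ox, cy - oy
    # Rotate the box centre; the side lengths are unchanged by a rotation.
    new_cx = ox + dx * math.cos(a) - dy * math.sin(a)
    new_cy = oy + dx * math.sin(a) + dy * math.cos(a)
    # Shift the box angle and wrap it back into [-90, 90).
    new_theta = (theta + angle_deg + 90.0) % 180.0 - 90.0
    return new_cx, new_cy, w, h, new_theta


size = (1920, 1080)
augmented = [rotate_obb((970, 540, 40, 8, 80), a, size) for a in ROTATION_ANGLES]
```

Boxes whose rotated centers fall outside the canvas would need to be dropped or clipped in a real pipeline; that step is omitted here.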

3.1.3. Performance Evaluation

To quantitatively assess the algorithm’s effectiveness, we employ specific performance metrics such as AP, mAP, and FPS, which are pivotal for evaluating both accuracy and efficiency [36,37].

In order to comprehensively evaluate the performance of YOLO-OHFD, we set up evaluation indicators in terms of the detection accuracy and detection efficiency of the algorithm.

First, AP and mAP are used to quantitatively evaluate the detection accuracy of our proposed algorithm. AP measures the detection accuracy of the algorithm for each type of hair follicle, mAP measures the overall detection performance of the algorithm by averaging the APs of all categories of hair follicles, and FPS measures the detection efficiency of the algorithm. The formulae for precision, recall, AP, and mAP are shown in Equations (10)–(13).

$$P = \frac{TP}{TP + FP} \quad (10)$$

$$R = \frac{TP}{TP + FN} \quad (11)$$

$$AP = \int_0^1 P(R)\,dR \quad (12)$$

$$mAP = \frac{1}{m}\sum_{i=1}^{m} AP_i \quad (13)$$

where TP, FP, and FN represent True Positive, False Positive, and False Negative, respectively. P represents precision and R represents recall. m is the number of hair follicle classifications.
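A minimal sketch of how AP and mAP can be computed from ranked detections follows. The area under the precision-recall curve is approximated with the all-point interpolation commonly used in detection benchmarks, which is an assumption here because the source does not state its integration scheme; the function names and the example AP values are hypothetical.

```python
def average_precision(is_tp, num_gt):
    """AP from TP/FP flags of detections sorted by descending confidence."""
    tp = fp = 0
    recalls, precisions = [0.0], [0.0]
    for flag in is_tp:
        if flag:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    recalls.append(1.0)
    precisions.append(0.0)
    # Make precision monotonically non-increasing (precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area of the rectangles under the envelope.
    ap = 0.0
    for i in range(1, len(recalls)):
        ap += (recalls[i] - recalls[i - 1]) * precisions[i]
    return ap


def mean_average_precision(ap_per_class):
    """mAP as the plain average of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


ap_example = average_precision([True, False, True], num_gt=2)
map_example = mean_average_precision([1.0, 0.5, 0.75, 0.75])  # made-up values
```

In the example, the three detections give precision 1, 1/2, and 2/3 at recall 1/2, 1/2, and 1, so the interpolated area is 1/2 + (1/2)(2/3) = 5/6.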

However, in order to efficiently characterize hair follicles with large aspect ratios, we use OBB instead of HBB. In HBB detection, TP and FP are determined only by EIoU. When Equation (14) is satisfied, the sample is defined as TP; otherwise, it is defined as FP. However, in oriented hair follicle detection, the rotation angle also affects the accuracy of hair follicle localization. Therefore, we add an angle constraint on top of EIoU, as shown in Equation (15). When both Equations (14) and (15) are satisfied, the sample is defined as TP; otherwise, it is defined as FP.

$$\mathrm{EIoU}\left(B_p, B_{gt}\right) \geq T_{EIoU} \quad (14)$$

$$\left|\theta_p - \theta_{gt}\right| \leq T_{\theta} \quad (15)$$

where $B_p$ and $B_{gt}$ are the predicted box and ground truth box, $\theta_p$ and $\theta_{gt}$ are the angles of the predicted box and ground truth box, and $T_{EIoU}$ and $T_{\theta}$ are the thresholds of EIoU and angle.
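The two-condition TP rule described above can be written as a small predicate. In this sketch the overlap score is passed in precomputed, the default angle threshold of 5° is a placeholder rather than a value from the source, and the angle difference is wrapped with a period of 180° (an assumption, so that 89° and −89° count as 2° apart).

```python
def angle_difference(theta_a, theta_b, period=180.0):
    """Smallest absolute difference between two box angles, in degrees."""
    d = abs(theta_a - theta_b) % period
    return min(d, period - d)


def is_true_positive(overlap, theta_pred, theta_gt, overlap_thr=0.5, angle_thr=5.0):
    """A detection is TP only if both the overlap and the angle constraints hold."""
    overlap_ok = overlap >= overlap_thr
    angle_ok = angle_difference(theta_pred, theta_gt) <= angle_thr
    return overlap_ok and angle_ok


near_boundary = is_true_positive(0.6, 89.0, -89.0)   # angles only 2 degrees apart
wrong_angle = is_true_positive(0.6, 0.0, 10.0)       # overlap fine, angle too far
low_overlap = is_true_positive(0.4, 0.0, 0.0)        # angle fine, overlap too low
```

A per-class `angle_thr` could be passed in to reflect that elongated follicles tolerate less angular error.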

In addition, the AP in the experiments of this paper is AP@0.5:0.95, which indicates the average of the corresponding APs for different EIoU thresholds (0.5 to 0.95 in steps of 0.05). mAP is also mAP@0.5:0.95.

As shown in Figure 9, boxes with different aspect ratios have different sensitivities to the rotation angle. The aspect ratios are larger for follicles with fewer hairs, and the angular error has a significant impact on IoU, so the angle threshold should be set smaller. Conversely, for follicles with more hairs, which have a relatively smaller aspect ratio, it is advisable to increase the angle threshold appropriately. The angle threshold is therefore set according to the aspect ratio of each type of hair follicle.

Figure 9.

IoU corresponding to different aspect ratios for the same rotation angle.
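The sensitivity of IoU to angle error can be checked numerically. The sketch below computes the IoU between a rectangle and a copy of itself rotated about the shared center, using Sutherland-Hodgman polygon clipping; the function names and the example box sizes are illustrative assumptions, not values taken from the dataset.

```python
import math


def rect_corners(w, h, angle_deg):
    """Counter-clockwise corners of a w x h rectangle centred at the origin."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    base = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(x * c - y * s, x * s + y * c) for x, y in base]


def polygon_area(poly):
    """Shoelace formula; returns 0 for an empty polygon."""
    total = 0.0
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def clip_convex(subject, clipper):
    """Sutherland-Hodgman clipping of a polygon by a convex CCW polygon."""
    output = list(subject)
    for i in range(len(clipper)):
        if not output:
            break
        ax, ay = clipper[i]
        bx, by = clipper[(i + 1) % len(clipper)]

        def side(p):
            # >= 0 when p lies on the inner (left) side of edge a -> b.
            return (bx - ax) * (p[1] - ay) - (by - ay) * (p[0] - ax)

        current, output = output, []
        for j in range(len(current)):
            p, q = current[j - 1], current[j]
            fp, fq = side(p), side(q)
            if (fp >= 0) != (fq >= 0):
                t = fp / (fp - fq)  # where segment p -> q crosses the edge line
                output.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
            if fq >= 0:
                output.append(q)
    return output


def rotated_iou(w, h, angle_deg):
    """IoU between a w x h box and the same box rotated by angle_deg."""
    inter = polygon_area(clip_convex(rect_corners(w, h, angle_deg), rect_corners(w, h, 0.0)))
    return inter / (2.0 * w * h - inter)


iou_elongated = rotated_iou(6, 1, 15)   # aspect ratio 6:1
iou_compact = rotated_iou(2, 1, 15)     # aspect ratio 2:1
```

For the same 15° error, the 6:1 box loses far more overlap than the 2:1 box, which is the behavior that motivates a tighter angle threshold for elongated follicles.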

Then, frames per second (FPS), floating-point operations (FLOPs), parameters (Paras), and mean inference time (MIT) are used to quantitatively evaluate the detection efficiency of our proposed algorithm. FPS measures the model’s ability to process images in real time, reflecting its practical applicability in time-sensitive scenarios. FLOPs provides an estimate of the computational complexity of the model, indicating the amount of computation required. Paras represents the size of the model, which directly impacts memory usage and deployment feasibility. Lastly, MIT assesses the average time the model takes to process a single image, offering insights into the model’s overall speed and responsiveness. In the experiments, FPS is used to identify the optimal balance between accuracy and computational cost for each module in the ablation experiments. Finally, by combining FLOPs, parameters, and MIT, the proposed model is compared with other object detection methods in terms of time complexity, space complexity, and real-time processing ability.

3.2. Hyperparameter Selection

3.2.1. Batch Size Selection

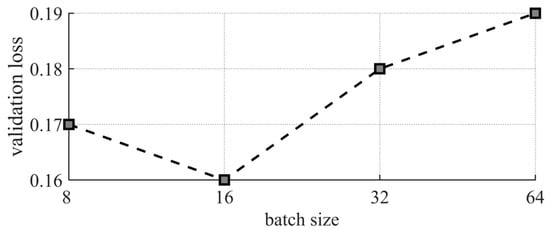

To select the optimal batch size, the grid search method was used to select parameters from the set {8, 16, 32, 64}. The processes were carried out 50 times on the validation sets, and the average of the detection accuracies was used as the selection criterion. The detection accuracy corresponding to different batch sizes is shown in Figure 10. The detection accuracy is the highest when the batch size is 16. Therefore, the optimal batch size is set to 16.

Figure 10.

Validation losses of the YOLO-OHFD under varied batch sizes.

3.2.2. Learning Rate Selection

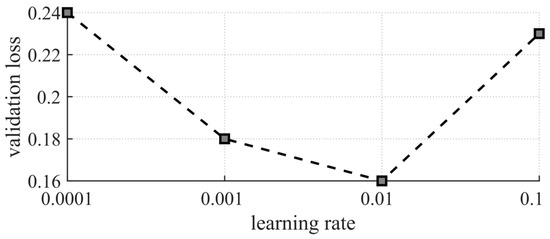

To select the optimal learning rate, the grid search method was used to select parameters from the set {0.0001, 0.001, 0.01, 0.1}. The processes were carried out 50 times on the validation sets, and the average of the detection accuracies was used as the selection criterion. The detection accuracy corresponding to different learning rates is shown in Figure 11. The detection accuracy is the highest when the learning rate is 0.01. Therefore, the optimal learning rate is set to 0.01.

Figure 11.

Validation losses of the YOLO-OHFD under varied learning rates.

3.2.3. Window Radius Selection

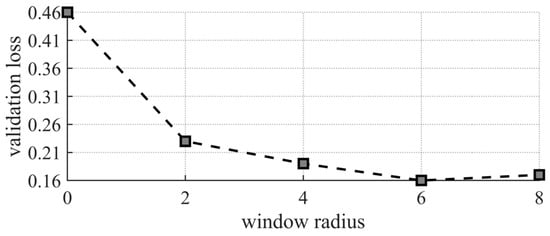

As described in Section 2.2.3, the size of the window radius affects the accuracy of the angle classification. If the window radius is too small, the learning of angle information is weakened, while a large window radius increases the deviation of angle prediction. In particular, single-stage detectors like YOLO are more sensitive to the window radius. Thus, it is a key hyperparameter of the proposed model.

To select the optimal window radius, the grid search method was used to select parameters from the set {0, 2, 4, 6, 8}. The processes were carried out 50 times on the validation sets, and the average of the detection accuracies was used as the selection criterion. The detection accuracy corresponding to different window radii is shown in Figure 12. The detection accuracy is the highest when the window radius is 6. Therefore, the optimal window radius is set to 6.

Figure 12.

Validation losses of the YOLO-OHFD under varied window radii.

3.2.4. Other Hyperparameter Selections

In addition to the hyperparameters selected above, the settings of the other hyperparameters are shown in Table 1. First, the number of epochs for training is set to 150 to ensure that the model has enough time to learn. The initial learning rate is 0.01 and the batch size is 16, which makes full use of GPU resources and ensures stable training of the model.

Table 1.

Main hyperparameters used for YOLO-OHFD training and testing.

Additionally, SGD is used as the optimizer in this model for its stability and strong generalization capabilities. The weight_decay is set to 0.0005, which modestly regularizes the model parameters during training. The Momentum is set to 0.937, which enhances the inertia of parameter update and promotes smoother convergence of the model.

Then, at the beginning of training, the warmup strategy is applied, with the warmup_epoch set to 3, which gradually increases the learning rate to avoid unstable gradient updates. The warmup_momentum is set to 0.8, ensuring stability and effectiveness during the initial training phase. For data augmentation, Mosaic augmentation is utilized with Mosaic set to 1.0, which increases the diversity of the training data by combining multiple images.

Finally, the CSL strategy is applied, with the CSL theta set to 180 and the window radius set to 6, to improve the detection accuracy of the model for the rotation angle.
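The settings listed in this subsection can be collected in a single configuration, together with a warmup schedule. The values in the dictionary are the ones stated in the text; the key names, the function `warmup_schedule`, and the linear interpolation from a zero learning rate are assumptions made for illustration, since the exact warmup curve is not specified here.

```python
# Values taken from the text; key names are illustrative only.
HYP = {
    "epochs": 150,
    "lr0": 0.01,
    "batch_size": 16,
    "optimizer": "SGD",
    "weight_decay": 0.0005,
    "momentum": 0.937,
    "warmup_epochs": 3,
    "warmup_momentum": 0.8,
    "mosaic": 1.0,
    "csl_theta": 180,
    "window_radius": 6,
}


def warmup_schedule(epoch: float, hyp: dict = HYP):
    """Return (learning_rate, momentum) at a possibly fractional epoch.

    Assumption: both quantities are interpolated linearly during warmup
    and held at their final values afterwards.
    """
    t = min(max(epoch / hyp["warmup_epochs"], 0.0), 1.0)
    lr = t * hyp["lr0"]
    momentum = hyp["warmup_momentum"] + t * (hyp["momentum"] - hyp["warmup_momentum"])
    return lr, momentum


for e in (0, 1.5, 3, 10):
    print(e, warmup_schedule(e))
```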

3.3. Ablation Experiments

3.3.1. Ablation Experiment of Feature Extraction Network

In this study, we propose a novel feature extraction network by integrating Res2Block into CSPDarknet53 and enhancing it with the Efficient Channel Attention (ECA) mechanism, aiming to improve the extraction of oriented hair follicle features. To evaluate the effectiveness of the different components within this network, we conducted grouped experiments on various modules. Specifically, ECA serves as the channel attention module. To further explore the influence of attention mechanisms, we also tested a spatial attention module, referred to as CA, and the CBAM, which combines channel and spatial attention. These modules were compared to determine their respective effects on network performance.

For the purposes of this experiment, we froze the structure and parameters of all parts of the network except for the backbone, ensuring that our focus remained solely on evaluating the modified feature extraction components.

As shown in Table 2, Res2Block performs better than ResBlock regardless of whether an attention module is added. In addition, introducing an attention mechanism increases the network's focus on hair follicle features and suppresses interference from the scalp background, which further improves the extraction of hair follicle features. Although all of the attention modules improve the detection accuracy, they also increase the complexity of the network. ECA outperforms the other attention modules, achieving the best detection results with only a slight reduction in detection speed.

The reason is that oriented hair follicles have large aspect ratios. For such objects, a spatial attention mechanism requires weighted calculations over a wide range of spatial positions of the input, which significantly increases the computational complexity, and it needs additional memory to store the spatial position information. In contrast, ECA operates only on the multi-channel decoupled data produced by Res2Block and does not weight spatial locations, so it consumes less computation and memory when handling hair follicles with large aspect ratios while maintaining accuracy. The experimental results therefore indicate that CSPDarknet53 incorporating the ECA-Res2Block module is the best choice for hair follicle feature extraction.

Table 2.

Ablation experiments on the effect of different modules in the feature extraction network on detection accuracy.
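The efficiency argument for channel attention can be illustrated with a minimal NumPy sketch of an ECA-style block: the feature map is reduced to one value per channel, neighbouring channels are mixed with a short 1D kernel, and the resulting weights rescale the channels. No per-pixel weights are computed or stored. The function name `eca` and the fixed kernel are hypothetical; in a trained network the kernel weights would be learned, and this is not the authors' implementation.

```python
import numpy as np


def eca(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Channel attention on a (C, H, W) feature map with an odd-length 1D kernel."""
    pad = len(kernel) // 2
    # Global average pooling: one descriptor per channel.
    pooled = x.mean(axis=(1, 2))
    # Zero-pad so the 1D cross-correlation keeps C outputs.
    padded = np.pad(pooled, pad)
    mixed = np.correlate(padded, kernel, mode="valid")
    # Sigmoid gate per channel.
    weights = 1.0 / (1.0 + np.exp(-mixed))
    # Broadcast the C weights over all spatial positions.
    return x * weights[:, None, None]


features = np.random.default_rng(0).normal(size=(8, 16, 64))  # elongated map
out = eca(features, kernel=np.array([0.25, 0.5, 0.25]))  # illustrative weights
print(out.shape)
```

The cost of the attention step depends on the number of channels and the kernel length only, not on the spatial extent of the feature map, which is the property the paragraph above relies on.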

3.3.2. Ablation Experiment of Feature Fusion Network

In this study, we introduce a Feature Alignment Module (FAM), which is integrated into the Feature Pyramid Network (FPN) of the YOLO-OHFD framework. This enhancement is designed to improve the fusion capability of the model across feature scales, thereby enhancing the detection accuracy and robustness of YOLO-OHFD.

In this experiment, we conducted grouped comparison experiments to verify the role of the FAM and its DCN in the feature fusion network. Here, FAM (Conv) denotes a FAM based on ordinary convolution, and FAM (DCN) denotes a FAM based on DCN.

As shown in Table 3, without the FAM the accuracy reaches 84.40% at 46.96 FPS. Adding the FAM with ordinary convolution does not significantly improve the accuracy and instead decreases the detection speed. In contrast, introducing the DCN yields a significant improvement in accuracy, although the FPS is slightly reduced. The reason is that the DCN, through its learnable offsets, eliminates the bias in the upsampling of oriented follicle features, whereas ordinary convolution merely increases the complexity of the network and leaves the bias in the fusion of features at different scales. The experimental results therefore indicate that the DCN-based FAM effectively improves the feature fusion capability of the FPN.

Table 3.

Ablation experiments on the effect of different modules in the feature fusion network on detection accuracy.
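The idea of correcting misalignment with learnable offsets can be illustrated by the sampling step that deformable operators rely on: each output location reads the feature map at its own position plus a fractional offset, using bilinear interpolation. The sketch below shows only this resampling step for a single-channel map. The function name `sample_with_offsets` is hypothetical, the offsets are supplied by hand rather than predicted by a network, and this is not the FAM used in the paper.

```python
import numpy as np


def sample_with_offsets(feat: np.ndarray, offsets: np.ndarray) -> np.ndarray:
    """Resample an (H, W) map at (row + dy, col + dx); offsets has shape (H, W, 2)."""
    h, w = feat.shape
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Shifted sampling positions, clamped to the valid range.
    y = np.clip(rows + offsets[..., 0], 0, h - 1)
    x = np.clip(cols + offsets[..., 1], 0, w - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = y - y0
    wx = x - x0
    # Bilinear interpolation between the four neighbouring cells.
    top = feat[y0, x0] * (1 - wx) + feat[y0, x1] * wx
    bottom = feat[y1, x0] * (1 - wx) + feat[y1, x1] * wx
    return top * (1 - wy) + bottom * wy


feat = np.arange(16, dtype=float).reshape(4, 4)
half_pixel_right = np.zeros((4, 4, 2))
half_pixel_right[..., 1] = 0.5
print(sample_with_offsets(feat, half_pixel_right)[0])
```

With zero offsets the function returns the input unchanged, which corresponds to ordinary sampling on a fixed grid; non-zero offsets let each location compensate for a shift between feature maps of different scales.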

3.3.3. Ablation Experiment of Feature Detection Network

This paper presents a decoupled head designed for the precise prediction of oriented hair follicle position, angle, and classification. Additionally, to enhance the accuracy of angle prediction, angle smoothing algorithms and densely coded labels are introduced into the angle classification framework, replacing traditional angle regression methods. These modifications aim to improve the precision and reliability of orientation predictions within the hair follicle detection process.

In this experiment, we conduct comparison experiments on the role of detection head coupling methods and angle prediction methods in feature detection networks. Coupled denotes coupled detector head structure, Decoupled denotes decoupled detector head structure, Reg denotes angular regression prediction, Cls denotes conventional angular classification prediction, and Cls (GCL) denotes angular classification prediction with the introduction of Gray-coded labels.

As shown in Table 4, the decoupled head achieves higher detection accuracy and higher FPS for both classification and regression of the rotation angle. This is because the follicle position regression and the rotation angle regression have different scales and optimization difficulties. Decoupling the detection heads not only makes the optimization more stable but also reduces the computational cost by computing the subtasks in parallel. In addition, when angle classification is used for angle prediction, the prediction accuracy and FPS are also significantly improved. The reason is that angle classification avoids discontinuous angle boundaries during detection and thereby reduces the false positives and missed detections they cause. Meanwhile, the GCL reduces the complexity of the network by reducing the number of predicted parameters, which allows for higher detection accuracy and operating speed. The experimental results therefore indicate that the decoupled head network based on angle classification and GCL effectively improves the feature detection capability of YOLO-OHFD.

Table 4.

Ablation experiments on the effect of different modules in the feature detection network on detection accuracy.
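The boundary discontinuity mentioned above can be seen in a small numerical example. For orientations with a period of 180 degrees, a prediction of 179 degrees against a ground truth of 0 degrees is almost perfect, yet a plain regression error treats it as nearly the worst case. The two helper functions below are illustrative names introduced for this sketch only.

```python
def linear_error(pred_deg: float, gt_deg: float) -> float:
    """Error seen by a naive angle regression loss."""
    return abs(pred_deg - gt_deg)


def circular_error(pred_deg: float, gt_deg: float, period: float = 180.0) -> float:
    """Smallest angular difference when orientations repeat every `period` degrees."""
    diff = abs(pred_deg - gt_deg) % period
    return min(diff, period - diff)


print(linear_error(179.0, 0.0))    # 179.0
print(circular_error(179.0, 0.0))  # 1.0
```

Treating the angle as a periodic classification target removes this jump, because the bins on either side of the boundary are neighbours.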

3.3.4. Ablation Experiments of IoU Methods

In this paper, we address the limitations faced by traditional IoU metrics in detecting objects with large aspect ratios, such as hair follicles. To this end, we employ the EIoU as a loss function, which is designed to more accurately reflect the actual loss associated with these challenging detection scenarios. In our experiments, we also evaluate the three most commonly used IoU variants—Generalized IoU (GIoU), Distance IoU (DIoU), and Complete IoU (CIoU)—as the loss functions within the YOLO-OHFD framework for oriented hair follicle detection.

As shown in Table 5, CIoU and EIoU are the most effective. Unlike GIoU and DIoU, CIoU and EIoU take the bounding box aspect ratio into account when calculating the loss, which suits the detection of objects with large aspect ratios such as hair follicles. Furthermore, EIoU builds on CIoU by splitting the aspect ratio term into separate width and height differences between the predicted and ground-truth boxes, so that it captures changes in the shape of the hair follicle more accurately and further improves the localization accuracy. The experimental results therefore indicate that EIoU effectively improves the loss calculation accuracy of YOLO-OHFD.

Table 5.

Effect of different IoUs on detection accuracy.
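A minimal sketch of the EIoU loss helps show how the width and height terms are separated. For simplicity it uses axis-aligned boxes given as (cx, cy, w, h); the oriented boxes used in this work would additionally require a rotated IoU computation, which is omitted here. The function name `eiou_loss` and the `eps` stabilizer are choices made for this sketch.

```python
def eiou_loss(box, gt, eps: float = 1e-9) -> float:
    """EIoU loss for two axis-aligned boxes in (cx, cy, w, h) format."""
    bx1, by1 = box[0] - box[2] / 2, box[1] - box[3] / 2
    bx2, by2 = box[0] + box[2] / 2, box[1] + box[3] / 2
    gx1, gy1 = gt[0] - gt[2] / 2, gt[1] - gt[3] / 2
    gx2, gy2 = gt[0] + gt[2] / 2, gt[1] + gt[3] / 2

    # Intersection over union.
    inter_w = max(0.0, min(bx2, gx2) - max(bx1, gx1))
    inter_h = max(0.0, min(by2, gy2) - max(by1, gy1))
    inter = inter_w * inter_h
    union = box[2] * box[3] + gt[2] * gt[3] - inter
    iou = inter / (union + eps)

    # Width and height of the smallest enclosing box.
    cw = max(bx2, gx2) - min(bx1, gx1)
    ch = max(by2, gy2) - min(by1, gy1)

    # Centre-distance term (shared with DIoU and CIoU).
    center = ((box[0] - gt[0]) ** 2 + (box[1] - gt[1]) ** 2) / (cw ** 2 + ch ** 2 + eps)
    # Separate width and height terms (the part specific to EIoU).
    width = (box[2] - gt[2]) ** 2 / (cw ** 2 + eps)
    height = (box[3] - gt[3]) ** 2 / (ch ** 2 + eps)
    return 1.0 - iou + center + width + height


gt_box = (50.0, 50.0, 10.0, 60.0)      # elongated target
too_wide = (50.0, 50.0, 20.0, 60.0)    # same centre, wrong width
print(eiou_loss(gt_box, gt_box), eiou_loss(too_wide, gt_box))
```

Because width and height errors are penalized directly, a box with the right centre but the wrong elongation still receives a clear gradient signal, which is the property highlighted for objects with large aspect ratios.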

3.4. Comparison with State-of-the-Art Oriented Object Detection Methods

In this section, we compare YOLO-OHFD with state-of-the-art oriented object detection networks. All experiments were conducted on the same PC to quantify the mAP and FPS of each algorithm in the oriented hair follicle detection task.

First, Table 6 shows that most of the detection algorithms are relatively effective in detecting follicles with fewer hairs (Class1, Class2), while their detection accuracy for follicles with more hairs (Class3, Class4) is relatively low. This is mainly because the hairs in multi-hair follicles are irregularly distributed and may obscure each other under different dermatoscopic shooting angles. As a result, multi-hair follicles can take on the appearance of follicles with fewer hairs.

Table 6.

Comparison of our method with state-of-the-art oriented object detection methods.

In terms of detection accuracy, our method achieved a mAP of 87.01% on the hair follicle detection dataset, which is 5.92% higher than that of ReDet. Most existing rotation detection methods were designed for aerial image detection or text detection in natural scene images, where the focus is on classifying and localizing different kinds of objects against the overall background. In hair follicle recognition, however, follicles must be correctly classified according to the number of hairs they contain while also being localized accurately, which is more difficult, and general models struggle to obtain good detection results. Our method fully considers the diversity and complexity of hair follicle information in hair follicle recognition and therefore achieves better detection results.

In terms of detection efficiency, our method achieved an FPS of 43.67, which is 16.42% higher than that of S2A-Net, demonstrating superior real-time processing capabilities. Additionally, our model is relatively simple, with fewer parameters and FLOPs than the other methods. This indicates that our model has lower memory requirements and higher computational efficiency, making it easier to deploy across various hardware platforms. Our model also achieves an MIT of 22.90 ms, which shows more intuitively how quickly it responds. Taken together, these metrics show that the proposed model strikes a good balance between computational complexity and speed in practical hair follicle detection tasks. The combination of a high frame rate and low latency ensures that our algorithm can respond quickly to external changes, enhancing the model's real-time performance and stability.
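The two speed figures quoted above are consistent with each other if the per-image time is taken as the reciprocal of the frame rate. The snippet below checks this arithmetic; it assumes that MIT denotes the mean inference time per image, and the function name is introduced here for illustration.

```python
def latency_ms_from_fps(fps: float) -> float:
    """Per-image time in milliseconds implied by a given frame rate."""
    return 1000.0 / fps


print(round(latency_ms_from_fps(43.67), 2))  # 22.9
```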

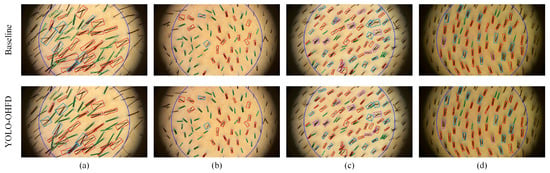

The qualitative detection results of the baseline method (i.e., YOLOv5 OBB) and our YOLO-OHFD are shown in Figure 13. When the scalp environment is relatively simple, with hair follicles being independent and minimally occluded, the baseline algorithm performs reasonably well, but there are still cases of bounding box misalignment, as shown in Figure 13b,d. However, when hair follicles are densely distributed and mutually occluded, the baseline method even mistakenly detects multiple overlapping hair follicles as a single follicle. In contrast, our method demonstrates superior performance in the localization and classification of hair follicles, allowing for more accurate detection and handling of complex scenarios, as shown in Figure 13a,c. By visualizing the experimental results, it can be intuitively seen that our method not only improves the detection accuracy but also enhances the robustness of the model in practical applications.

Figure 13.

Detection results in different hair follicle environments. In each image pair, the upper image shows the baseline (i.e., YOLOv5 OBB) method and the lower image shows the YOLO-OHFD method. (a) Hair occlusion. (b) Sparse hair distribution. (c) Complex hair types. (d) Relatively dark scalp.

3.5. Training Visualization

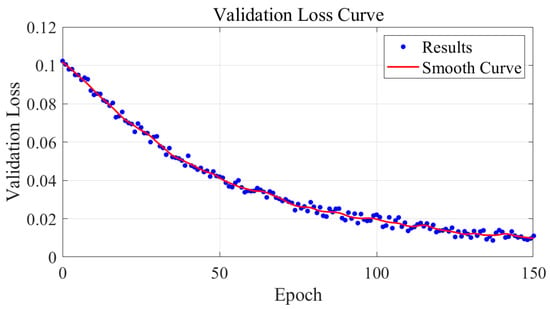

To better illustrate the training process, we present the loss curve shown in Figure 14. The validation loss curve illustrates the performance of the model throughout the training process. The x-axis represents the number of epochs, while the y-axis denotes the validation loss, which indicates how well the model generalizes to unseen data. From the curve, we observe a steady decrease in validation loss as the epochs increase, suggesting that the model is learning effectively and improving its performance over time. The blue dots represent the actual validation loss values at each epoch, while the red line is a smooth curve that provides a clearer overview of the overall trend. This smoothness indicates that the fluctuations in the loss values are minimal and that the model’s performance is stabilizing as it approaches a lower bound of the validation loss. At later epochs, the validation loss appears to plateau, signaling that the model has potentially reached its optimal performance. The rate of decrease slows down, which might indicate that further training would yield diminishing returns. This behavior is typical of models that are converging to a solution, suggesting that additional training might not result in significant improvements.

Figure 14.

Validation loss curve: epoch vs. loss.

4. Discussion

This study introduces YOLO-OHFD, a novel YOLO-based oriented hair follicle detection method that employs oriented bounding boxes (OBBs) to enhance the accuracy of hair follicle detection in robotic hair transplantation. The adoption of ECA-Res2Block in the feature extraction network significantly improves the network’s capability to handle occlusions and variations in hair follicle orientation and size. The integration of the Feature Alignment Module (FAM) within the feature fusion network ensures precise feature alignment, thus minimizing feature displacement issues typically observed with conventional feature pyramid networks.

The experimental results demonstrate the superiority of YOLO-OHFD over existing methods. With a mean average precision (mAP) of 87.01% and frames per second (FPS) of 43.67, YOLO-OHFD not only shows excellent detection accuracy but also maintains robust computational efficiency. These metrics indicate that YOLO-OHFD effectively balances high performance with computational demands, making it suitable for real-time applications.

Comparative analyses with state-of-the-art methods reveal that YOLO-OHFD excels in handling high-density and variable-orientation hair follicles, challenges that are typically problematic for other oriented object detection systems. Furthermore, the angle classification approach adopted in YOLO-OHFD, which replaces traditional angle regression, contributes to the stability and accuracy of angular orientation predictions, reducing the common boundary discontinuity problems found in other methods.

The development of robotic hair transplantation technology, as exemplified by YOLO-OHFD, is poised to revolutionize the field of dermatological surgery. As this technology continues to mature, several potential market applications and technical challenges are anticipated:

- (1) Market Expansion: The increasing prevalence of hair loss globally and the growing acceptance of cosmetic surgery are likely to drive market growth. Robotic systems that can offer high precision and reduced procedural times are particularly well-positioned to capitalize on this trend.

- (2) Integration with Other Technologies: Future developments could see the integration of YOLO-OHFD with augmented reality (AR) and virtual reality (VR) systems to enhance surgical planning and outcome visualization, providing surgeons with real-time, enhanced images of the surgical site.

- (3) Automation and Learning: Advancements in machine learning could enable these systems to improve their performance over time based on procedural data, potentially leading to fully automated systems that require minimal human oversight.

- (4) Technical Challenges: Despite significant advancements, challenges remain, particularly in handling very high-density hair follicles and those with complex orientations. Improvements in image acquisition technologies and real-time processing capabilities are necessary to address these issues.

- (5) Regulatory and Ethical Considerations: As with all medical technologies, rigorous testing and regulatory approval are crucial. There are also ethical considerations related to the automation of surgical procedures, including concerns about patient safety and the role of human surgeons.

- (6) Customization and Personalization: Future research could also explore how these technologies might be adapted for use in other types of hair, such as body hair, and for patients with varying scalp conditions. Customization to individual patients’ needs and conditions will be crucial for wider adoption.

5. Conclusions

The YOLO-OHFD method marks a significant advancement in the field of robotic hair transplantation. It addresses critical challenges such as mutual occlusion and variable orientations of hair follicles by effectively integrating oriented bounding boxes, enhanced feature extraction, and alignment techniques. The method’s high precision and efficiency demonstrate its potential to significantly improve the outcomes of hair transplantation procedures by providing reliable, rapid, and accurate hair follicle detection.

This research contributes to the broader field of medical imaging and robotic surgery by providing a framework that could be adapted for other complex recognition tasks in medical robotics. Future research will focus on optimizing the computational efficiency of YOLO-OHFD to facilitate wider adoption and integration into clinical settings. Moreover, efforts will be directed towards enhancing the robustness of the model against variations in image quality and exploring its applicability in dynamic surgical environments.

The possibility of extending this framework to classify hair follicles based on individual hair quality could also be explored. This would enable personalized treatment planning in hair transplantation, potentially extending the applicability of YOLO-OHFD beyond hair loss treatments to other dermatological and surgical practices, thus broadening the impact of this research in medical technology.

Author Contributions

X.L.: Software, Methodology, Writing—original draft. H.W.: Supervision, Validation, Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China under Grant 62173351, and in part by the Project of Innovation-Driven Plan in Central South University under Grant 2021zzts0197.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Xiangya Hospital, Central South University (protocol code 20161169 and date of approval 2016-11).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study. Requests to access the datasets should be directed to the corresponding author.

Acknowledgments

We sincerely thank Su Juan and Lu Lixia for their significant contributions to this study. Their support in providing the hair follicle imaging dataset and assisting with the categorization of hair follicles in dermoscopy images was invaluable. Their involvement in data collection and result analysis played a crucial role in the success of this research. We deeply appreciate their expertise and collaboration, which have greatly enhanced the quality and impact of this work.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendix A

To provide a clearer understanding of follicle recognition in robotic hair transplantation, Figure A1 presents an illustrative image of a robotic system. This system incorporates automated follicle recognition, enabling precise identification and localization of follicular units to assist in the transplantation process.

The system features a real-time recognition interface, which displays scalp imaging and follicle mapping to enhance accuracy and consistency. By integrating follicle recognition capabilities, the system improves detection efficiency and ensures selective extraction.

It is important to note that this image serves as a representative example and does not depict a system developed in this study. Instead, it provides a visual reference to illustrate the role of follicle recognition technology in robotic hair transplantation.

Figure A1.

A robotic hair transplantation system incorporating automated follicle recognition.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).