Artificial Empathy in Home Service Agents: A Conceptual Framework and Typology of Empathic Human–Agent Interactions

Abstract

1. Introduction

2. Theoretical Background

2.1. Home Services AI Agent Evolution and HRI Theory-Based Interactions

2.2. Artificial Empathy Theory and Applications

3. Methods

4. Results

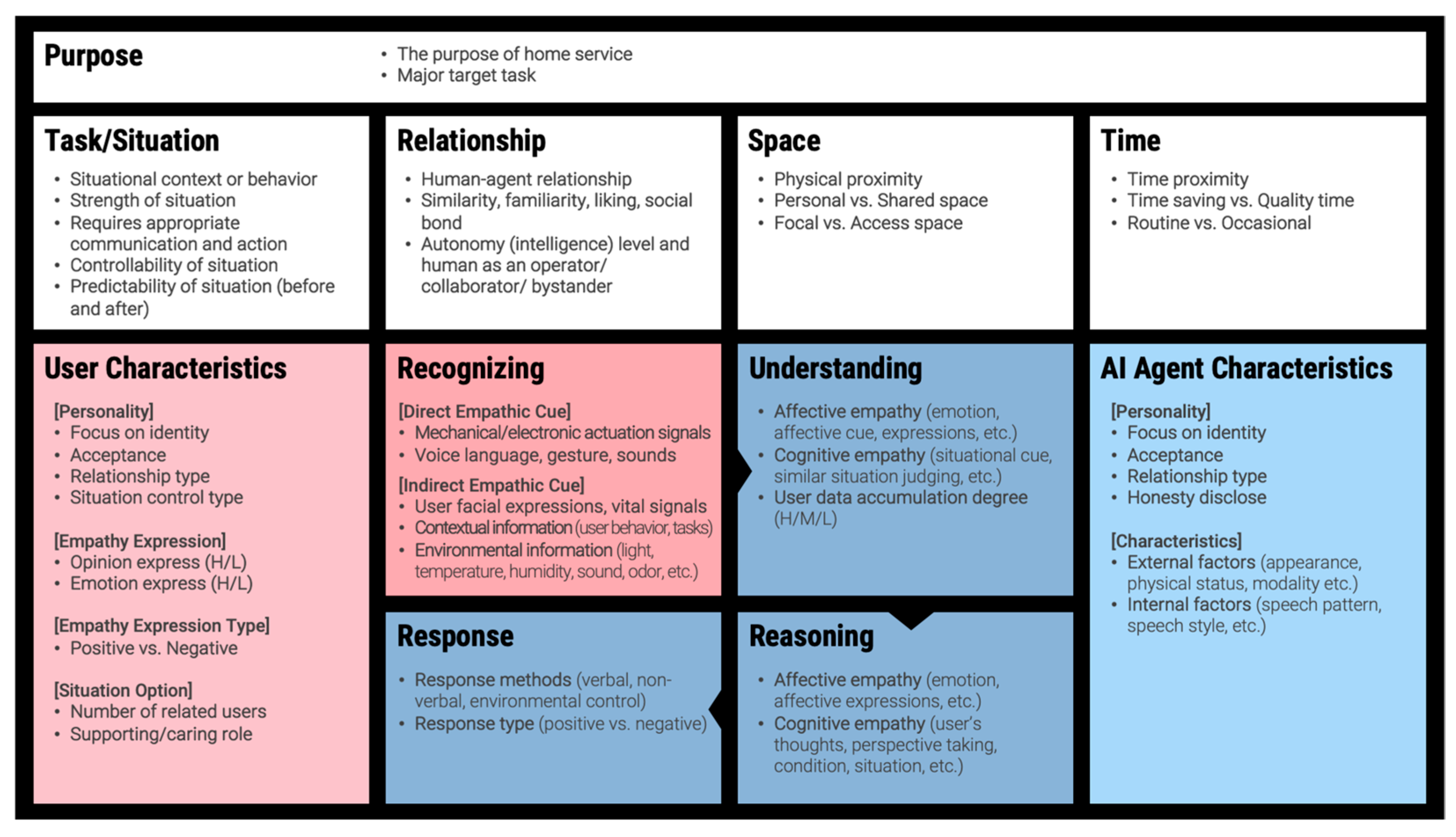

4.1. Analyzing Artificial Empathy Interaction Components and Deriving an Initial Framework

4.2. Advancing the Framework by Applying Industry Practices

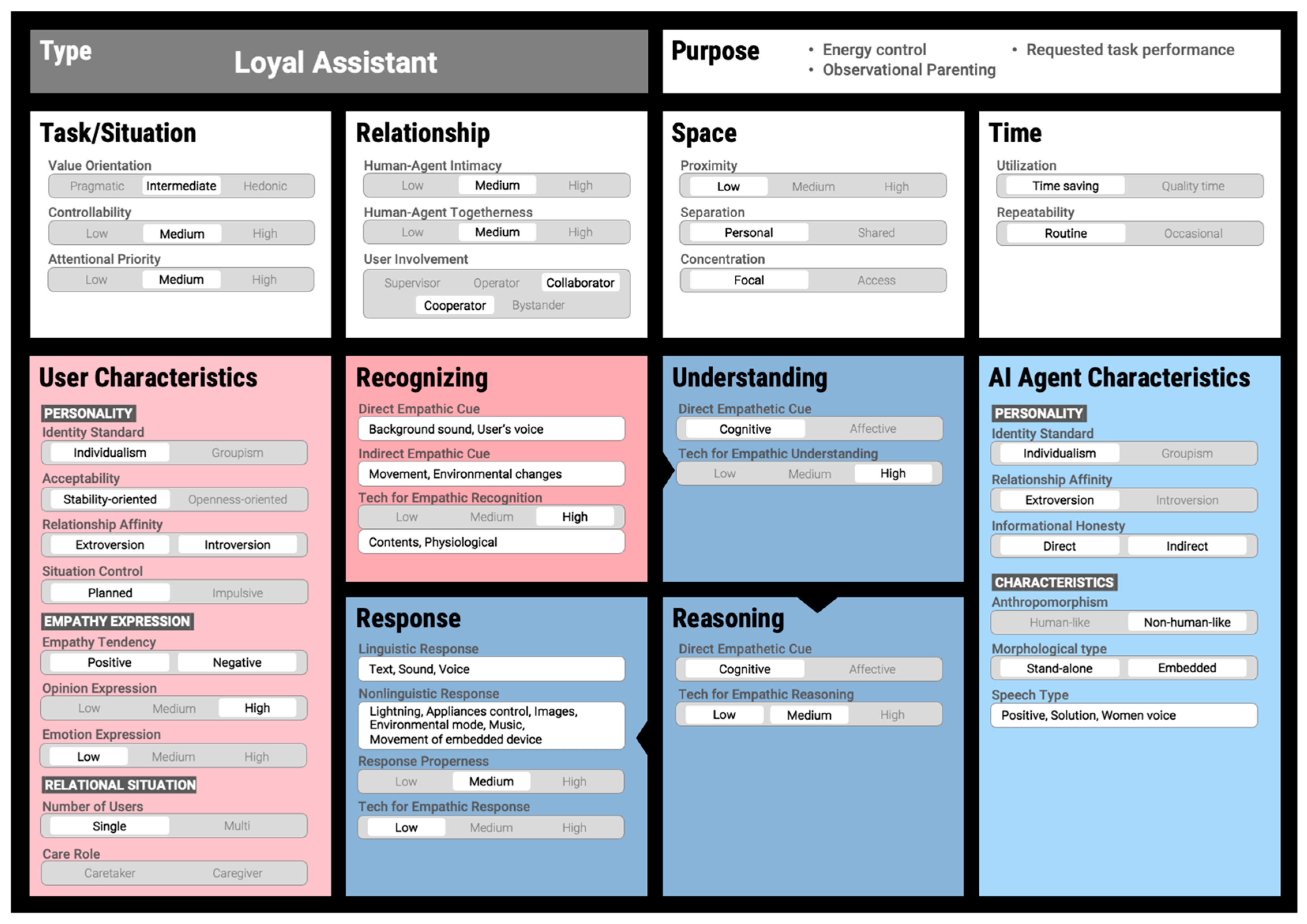

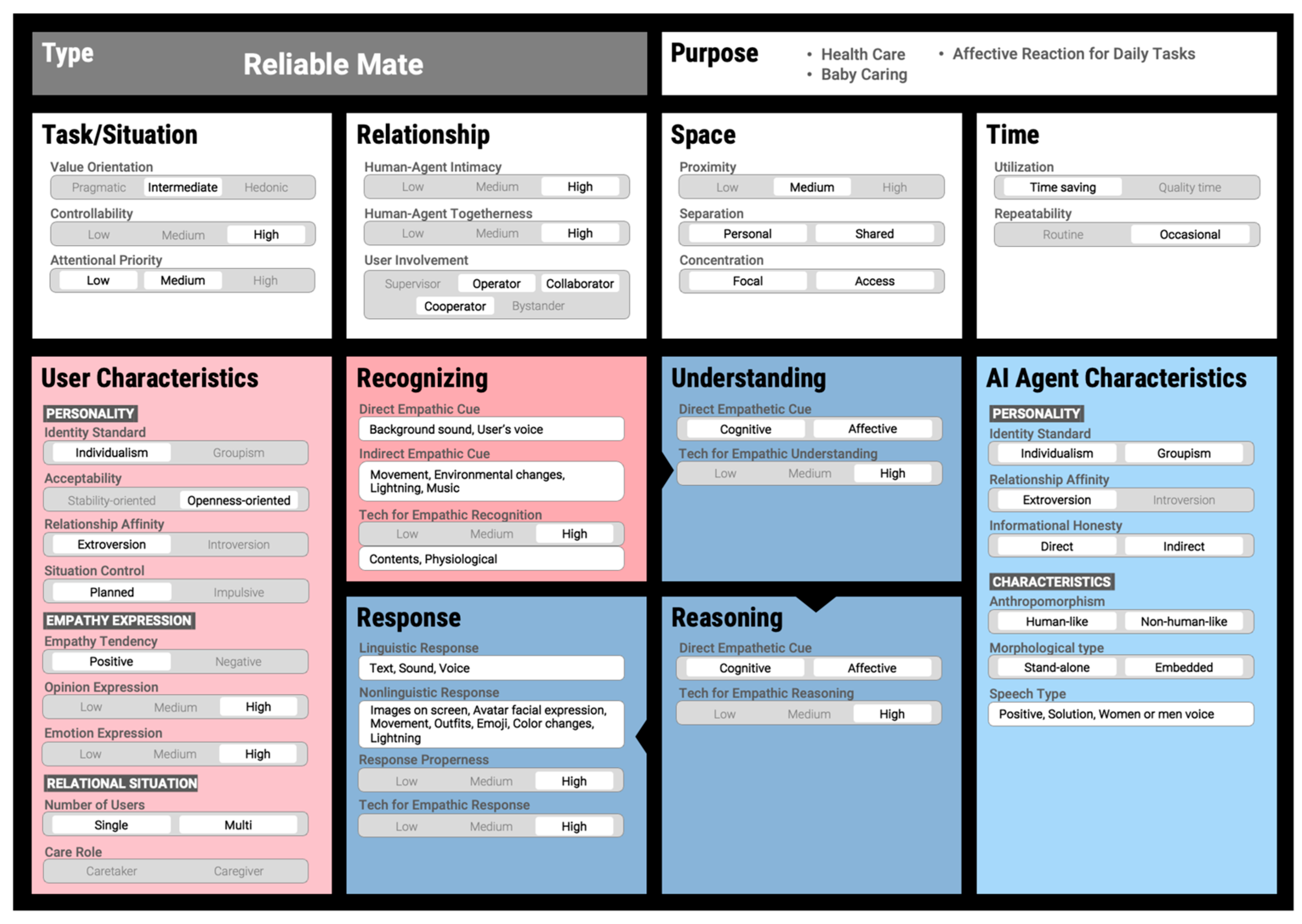

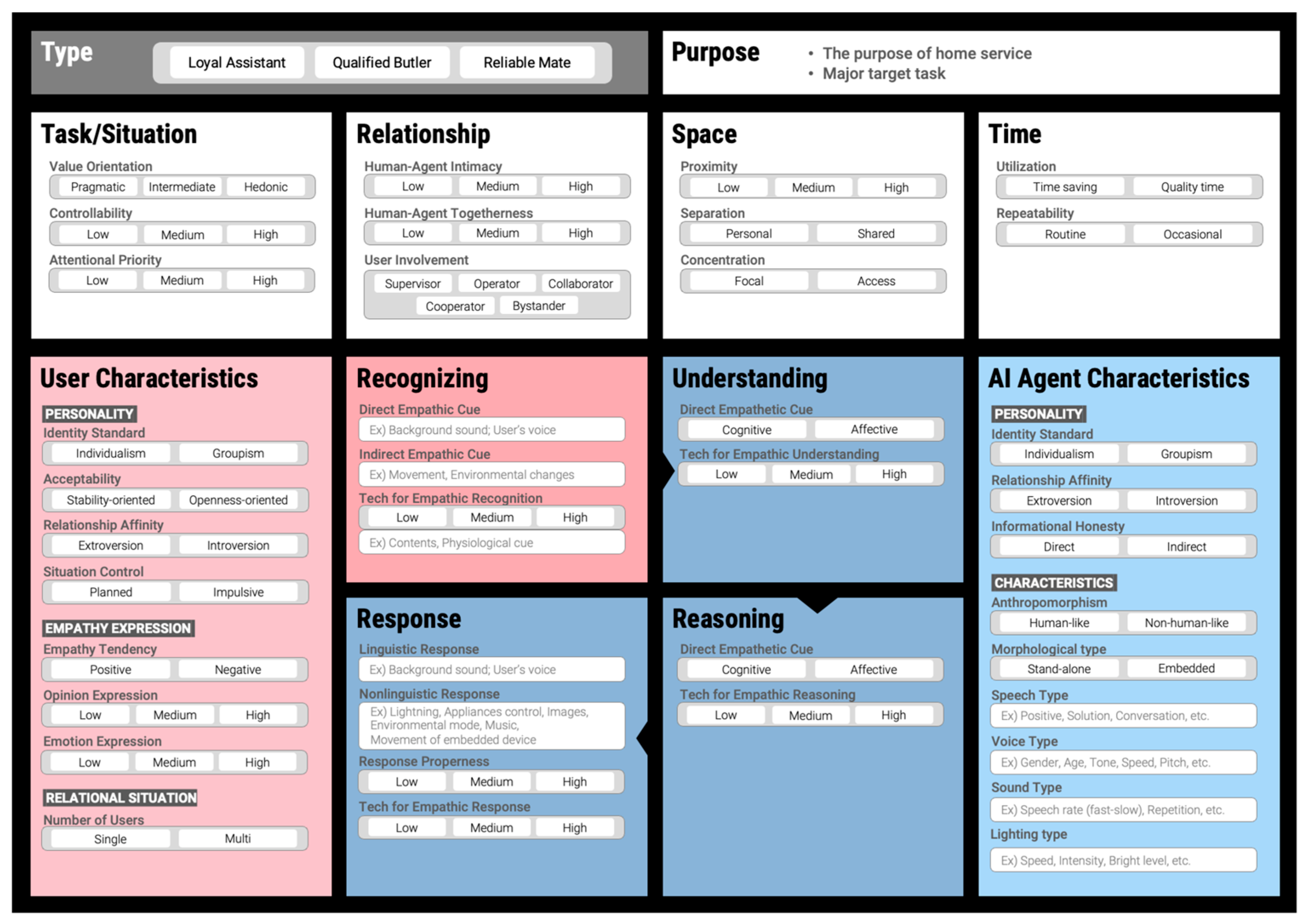

4.3. Final Framework Proposal: Empathic HAX (Human–Agent Interaction) Canvas

5. Discussion

6. Conclusions and Further Study

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zachiotis, G.A.; Andrikopoulos, G.; Gomez, R.; Nakamura, K.; Nikolakopoulos, G. A survey on the application trends of home service robotics. In Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 1999–2006.

- Reig, S.; Carter, E.J.; Kirabo, L.; Fong, T.; Steinfeld, A.; Forlizzi, J. Smart home agents and devices of today and tomorrow: Surveying use and desires. In Proceedings of the 9th International Conference on Human-Agent Interaction, Online, 9–11 November 2021; pp. 300–304.

- Guo, X.; Shen, Z.; Zhang, Y.; Wu, T. Review on the application of artificial intelligence in smart homes. Smart Cities 2019, 2, 402–420.

- Kim, H.M.; Kang, H.J. Smart Home AIoT Service Domains Derivation and Typology Based on User Involvement: Focused on Case Analysis. Des. Converg. Study 2023, 22, 1–20.

- Rialle, V.; Lamy, J.B.; Noury, N.; Bajolle, L. Telemonitoring of patients at home: A software agent approach. Comput. Methods Programs Biomed. 2003, 72, 257–268.

- Chang, Y.; Gao, Y.; Zhu, D.; Safeer, A.A. Social robots: Partner or intruder in the home? The roles of self-construal, social support, and relationship intrusion in consumer preference. Technol. Forecast. Soc. Chang. 2023, 197, 122914.

- Robert, L. Personality in the human robot interaction literature: A review and brief critique. In Proceedings of the 24th Americas Conference on Information Systems, New Orleans, LA, USA, 16–18 August 2018; Robert, L.P., Ed.; AMCIS 2018: New Orleans, LA, USA, 2018.

- Rossi, S.; Staffa, M.; de Graaf, M.M.; Gena, C. Preface to the special issue on personalization and adaptation in human–robot interactive communication. User Model. User-Adapt. Interact. 2023, 33, 189–194.

- Reich, N.; Eyssel, F. Attitudes towards service robots in domestic environments: The role of personality characteristics, individual interests, and demographic variables. Paladyn J. Behav. Robot. 2013, 4, 123–130.

- What Is an AI Agent? Available online: https://aws.amazon.com/what-is/ai-agents/ (accessed on 18 September 2024).

- Cavone, D.; De Carolis, B.; Ferilli, S.; Novielli, N. An Agent-based Approach for Adapting the Behavior of a Smart Home Environment. In Proceedings of the 12th Workshop on Objects and Agents, Rende, Italy, 4–6 July 2011; pp. 105–111.

- Kim, S.W.; Kim, H.J. A Study on Design of Smart Home Service Robot McBot II. J. Korea Acad.-Ind. Coop. Soc. 2011, 12, 1824–1832.

- Anyfantis, N.; Kalligiannakis, E.; Tsiolkas, A.; Leonidis, A.; Korozi, M.; Lilitsis, P.; Antona, M.; Stephanidis, C. AmITV: Enhancing the role of TV in ambient intelligence environments. In Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference, Corfu, Greece, 26–29 June 2018; pp. 507–514.

- Do, H.M.; Pham, M.; Sheng, W.; Yang, D.; Liu, M. RiSH: A robot-integrated smart home for elderly care. Robot. Auton. Syst. 2018, 101, 74–92.

- Garg, R.; Sengupta, S. He is just like me: A study of the long-term use of smart speakers by parents and children. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–24.

- Lin, G.C.; Schoenfeld, I.; Thompson, M.; Xia, Y.; Uz-Bilgin, C.; Leech, K. “What color are the fish’s scales?” Exploring parents’ and children’s natural interactions with a child-friendly virtual agent during storybook reading. In Proceedings of the 21st Annual ACM Interaction Design and Children Conference, Braga, Portugal, 27–30 June 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 185–195.

- Ramoly, N.; Bouzeghoub, A.; Finance, B. A framework for service robots in smart home: An efficient solution for domestic healthcare. IRBM 2018, 39, 413–420.

- Das, S.K.; Cook, D.J. Health monitoring in an agent-based smart home by activity prediction. In Proceedings of the International Conference on Smart Homes and Health Telematics, Singapore, 15 September 2004; Washington State University: Pullman, WA, USA, 2004; Volume 14, pp. 3–14.

- Sisavath, C.; Yu, L. Design and implementation of security system for smart home based on IOT technology. Procedia Comput. Sci. 2021, 183, 4–13.

- Bangali, J.; Shaligram, A. Design and Implementation of Security Systems for Smart Home based on GSM technology. Int. J. Smart Home 2013, 7, 201–208.

- Alan, A.T.; Costanza, E.; Ramchurn, S.D.; Fischer, J.; Rodden, T.; Jennings, N.R. Tariff agent: Interacting with a future smart energy system at home. ACM Trans. Comput.-Hum. Interact. 2016, 23, 1–28.

- Luria, M.; Hoffman, G.; Megidish, B.; Zuckerman, O.; Park, S. Designing Vyo, a robotic Smart Home assistant: Bridging the gap between device and social agent. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 1019–1025.

- Nijholt, A. Google home: Experience, support and re-experience of social home activities. Inf. Sci. 2008, 178, 612–630.

- Shim, H.R.; Choi, J.H. Anthropomorphic Design Factors of Pedagogical Agent: Focusing on the Human Nature and Role. J. Korea Contents Assoc. 2022, 22, 358–369.

- Sheehan, B.; Jin, H.S.; Gottlieb, U. Customer service chatbots: Anthropomorphism and adoption. J. Bus. Res. 2020, 115, 14–24.

- Bošnjaković, J.; Radionov, T. Empathy: Concepts, theories and neuroscientific basis. Alcohol. Psychiatry Res. J. Psychiatr. Res. Addict. 2018, 54, 123–150.

- Jin, H.O.; Kim, M.S.; Kim, C.S. A study on the Emotional Interface Elements of Design Products-focusing on newtro home appliances. J. Basic Des. Art 2021, 22, 477–490.

- Dai, X.; Liu, Z.; Liu, T.; Zuo, G.; Xu, J.; Shi, C.; Wang, Y. Modelling conversational agent with empathy mechanism. Cogn. Syst. Res. 2024, 84, 101206.

- Zhu, Q.; Luo, J. Toward artificial empathy for human-centered design: A framework. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Boston, MA, USA, 13–16 August 2023; Volume 87318, p. V03BT03A072.

- Alanazi, S.A.; Shabbir, M.; Alshammari, N.; Alruwaili, M.; Hussain, I.; Ahmad, F. Prediction of emotional empathy in intelligent agents to facilitate precise social interaction. Appl. Sci. 2023, 13, 1163.

- Paiva, A. Empathy in social agents. Int. J. Virtual Real. 2011, 10, 1–4.

- Yalçın, Ö.N. Evaluating empathy in artificial agents. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII), Cambridge, UK, 3–6 September 2019; pp. 1–7.

- Prendinger, H.; Ishizuka, M. The Empathic Companion: A Character-Based Interface that Addresses Users’ Affective States. Appl. Artif. Intell. 2005, 19, 267–285.

- Feine, J.; Gnewuch, U.; Morana, S.; Maedche, A. A taxonomy of social cues for conversational agents. Int. J. Hum.-Comput. Stud. 2019, 132, 138–161.

- Piumsomboon, T.; Lee, Y.; Lee, G.A.; Dey, A.; Billinghurst, M. Empathic mixed reality: Sharing what you feel and interacting with what you see. In Proceedings of the 2017 International Symposium on Ubiquitous Virtual Reality (ISUVR), Nara, Japan, 27–29 June 2017; pp. 38–41.

- Gladstein, G.A. Understanding empathy: Integrating counseling, developmental, and social psychology perspectives. J. Couns. Psychol. 1983, 30, 467.

- Kwak, S.S.; Kim, Y.; Kim, E.; Shin, C.; Cho, K. What makes people empathize with an emotional robot?: The impact of agency and physical embodiment on human empathy for a robot. In Proceedings of the 2013 IEEE RO-MAN, Gyeongju, Republic of Korea, 26–29 August 2013; pp. 180–185.

- Park, S.; Whang, M. Empathy in human–robot interaction: Designing for social robots. Int. J. Environ. Res. Public Health 2022, 19, 1889.

- Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660.

- Pelau, C.; Dabija, D.C.; Ene, I. What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry. Comput. Hum. Behav. 2021, 122, 106855.

- Ryu, Y. A Development and Validation of Cognitive Empathy Scale. Master’s Thesis, Ewha Womans University, Seoul, Republic of Korea, 2019.

- Kim, M. A Study on the Correlation between Empathy and Personal Traits. Ph.D. Thesis, Sunmoon University, Chungcheongnam-do, Republic of Korea, 2011.

- Kim, B.; Kim, Y. A Study on the Design of User Emotional Interface by Smartphone Application Type. Des. Knowl. J. 2011, 20, 181–192.

- Onnasch, L.; Roesler, E. A taxonomy to structure and analyze human–robot interaction. Int. J. Soc. Robot. 2021, 13, 833–849.

- Lee, J.H.; Kang, H.J. Design Strategies per Smart Work Types based on Multi-Layered Framework. Arch. Des. Res. 2024, 37, 431–455.

- Forlizzi, J. The product service ecology: Using a systems approach in design. In Proceedings of the Relating Systems Thinking and Design 2013 Symposium, Oslo, Norway, 9–11 October 2013.

- Hsieh, W.F.; Sato-Shimokawara, E.; Yamaguchi, T. Investigation of robot expression style in human-robot interaction. J. Robot. Mechatron. 2020, 32, 224–235.

- Clarke, C.; Krishnamurthy, K.; Talamonti, W.; Kang, Y.; Tang, L.; Mars, J. One Agent Too Many: User Perspectives on Approaches to Multi-agent Conversational AI. arXiv 2024, arXiv:2401.07123.

- Kozima, H.; Yano, H. A robot that learns to communicate with human caregivers. In Proceedings of the First International Workshop on Epigenetic Robotics, Lund, Sweden, 17–18 September 2001; Volume 2001.

- Park, M.; Kim, H.; Kim, E.; Seok, H. A Study on Scenario Based Virtual Reality Contents Design Guideline for Psychological Type Diagnosis: Focusing on Empathy Type. J. Digit. Art Eng. Multimed. 2021, 8, 73–86.

- Lee, S.; Choi, M.; Lee, J.; Son, M.; Choi, S.; Lee, J.; Kim, M.; Kim, J.; Nam, Y.; Nam, S. Effects of Sympathetic Talking and Happiness Level on Consumer Trust and Decision-making Styles. J. Consum. Policy Stud. 2022, 53, 121–147.

- Lee, S.; Yoo, S. Design of the emotion expression in multimodal conversation interaction of companion robot. Des. Converg. Study 2017, 16, 137–152.

- Surma-Aho, A.; Hölttä-Otto, K. Conceptualization and operationalization of empathy in design research. Des. Stud. 2022, 78, 101075.

- Jai, L.; Park, J. Artificial Intelligence Technology Trends and Application. J. Korea Soc. Inf. Technol. Policy Manag. 2022, 14, 2827–2832.

- Girju, R.; Girju, M. Design considerations for an NLP-driven empathy and emotion interface for clinician training via telemedicine. In Proceedings of the Second Workshop on Bridging Human–Computer Interaction and Natural Language Processing, Online, 15 July 2022; pp. 21–27.

| Area | Purpose | Agent | Interaction | Type of Interaction | Ref No. |
|---|---|---|---|---|---|
| Cleaning | Efficiency | Robot, Integrated System | Context Recognition and Control, Alert | Text, Device Movement | [12] |
| Entertainment | Immersion | Smart Appliances, Smart Furniture, Integrated System | Context Recognition and Control, Alert, Communication | Text, Voice | [13] |
| Adult Care | Support | Robot, Integrated System | Context Recognition, Information Alert | Text, Device Movement | [14] |
| Baby Care | Support | Robot, Integrated System | Reaction by Context | Voice, Images, Video on Screen, Device Movement | [15,16] |
| Healthcare | Information Delivery | Robot, Integrated System | Informative Contents Delivery | Device Movement, Voice, Images, Video on Screen | [17,18] |
| Security | Efficiency and Support | Integrated System | Context Recognition and Control, Alert | Text | [19,20] |
| Energy | Efficiency and Support | Integrated System, Application | Context Recognition and Control, Alert, Summarization | Text | [21] |
| Social and Communication | Communication | Robot, Controller, Integrated System, Application | Physical Reaction for Empathic Synchronization | Device Movement, Voice, Images, Video on Screen | [22] |
| Work at Home | Immersion | System, Virtual Application System | | | [23] |

| Domain | Category | Elements | Metrics | Ref. |
|---|---|---|---|---|
| Purpose | Purpose of Use AI Agent | Purpose of AI Agent Service | N/A | [39] |
| | | Target Goal of Tasks | N/A | |
| Context | Situation, Context, Task | Task Characteristics | Properness | [40,41] |
| | | | Accuracy | |
| | | | Controllability | |
| | | | Importance | |
| Relationship | User–AI Agent Relationship | User–AI Agent Intimacy | High-Mid-Low | [42,43] |
| | | User–AI Agent Togetherness | High-Mid-Low | |
| | | User Involvement | Supervisor/Operator/Collaborator/Cooperator/Bystander | |
| Space | Space Characteristic | Physical Proximity | High-Mid-Low | [44] |
| | | Space Separation | Personal | |
| | | | Shared | |
| | | Space Concentration | Focal | |
| | | | Access | |
| Time | Time Characteristic | Time Proximity | High-Mid-Low | |
| | | Time Value | Time Saving | |
| | | | Quality Time | |
| | | Repetition | Routine | |
| | | | Occasional | |

| Domain | Category | Elements | Metrics | Ref. |
|---|---|---|---|---|
| User | User’s Disposition | Identity Standard | Individualism | [47] |
| | | | Groupism | |
| | | Change Acceptability | Stability-oriented | |
| | | | Openness-oriented | |
| | | Affinity for Relationship | Extroversion | |
| | | | Introversion | |
| | | Situation Control | Planned | |
| | | | Impulsive | |
| | | Tendency to Express Empathy | Positive | |
| | | | Negative | |
| | User’s Situation | Number of Users | Single | [48,49] |
| | | | Multiple | |
| | | User Support Relationship Role | Caretaker | |
| | | | Caregiver | |
| AI Agent | AI Agent’s Disposition | Identity Standard | Individualism | [50] |
| | | | Groupism | |
| | | Change Acceptability | Stability-oriented | |
| | | | Openness-oriented | |
| | | Affinity for Relationship | Extroversion | |
| | | | Introversion | |
| | | Informational Honesty | Direct | |
| | | | Indirect | |
| | AI Agent Settings | External Factor | Human-like | [51,52] |
| | | | Non-human-like | |
| | | | Tangible | |
| | | | Intangible | |
| | | Potential Factor: Speech Style | N/A | |
| | | Potential Factor: Utterance Style | N/A | |

| Domain | Category | Elements | Metrics | Ref. |
|---|---|---|---|---|
| Interaction | Recognition | Empathic Cue | Direct | [29] |
| | | | Indirect | |
| | | Related Technology | High-Mid-Low | |
| | Understand | Way of Empathic Understanding | Affective | [33,53] |
| | | | Cognitive | |
| | | Related Technology | High-Mid-Low | |
| | Reasoning | Way of Empathic Reasoning | Affective | [54] |
| | | | Cognitive | |
| | | Related Technology | High-Mid-Low | |
| | Response | Way of Empathic Response | Linguistic | [34,55] |
| | | | Non-linguistic | |
| | | Related Technology | High-Mid-Low | |

| Domain | Category | Elements | Metrics | Case 1 | Case 2 | Case 3 |
|---|---|---|---|---|---|---|
| Purpose | Purpose of Use AI Agent | Purpose of AI Agent Service | N/A | Entertainment | Home Security Management | Auto Task for Healthcare |
| | | Target Goal of Tasks | N/A | Customized Settings | Overall Security Managing | Provide Health Service |
| Context | Situation, Context, Task | Task Characteristics | Accuracy | N | Y | Y |
| | | | Controllability | Y | N | Y |
| | | | Attentional Priority | L | H | M |
| Relationship | User–AI Agent Relationship | User–AI Agent Intimacy | | H | H | M |
| | | User–AI Agent Togetherness | | H | L | M |
| | | User Involvement | | Cooperator | Bystander | Operator |
| Space | Space Characteristic | Proximity | | L | L | L |
| | | Space Separation | | Shared | Both | Personal |
| | | Space Concentration | | Focal | Both | Focal |
| Time | Time Characteristic | Time Utilization Value | | Quality Time | Time Saving | Time Saving |
| | | Time Repetition | | Occasional | Occasional | Routine |
| User | User Characteristic | User’s Disposition | Identity Standard | N/A | Individualism | N/A |
| | | | Change Acceptability | Openness-oriented | Stability-oriented | Stability-oriented |
| | | | Affinity for Relationships | N/A | N/A | N/A |
| | | | Situation Control | N/A | Planned | Planned |
| | | | Tendency to Express Empathy | Positive | Positive | Positive |
| | | Empathic Expression Degree | Verbal Expression | H | L | H |
| | | | Affective Expression | H | L | H |
| | | User’s Situation | Number of Users | Single | Single | Single |
| | | | User Support Relationship Role | N/A | N/A | N/A |
| AI Agent | AI Agent Characteristic | AI Agent’s Disposition | Identity Standard | N/A | Groupism | N/A |
| | | | Affinity for Relationships | N/A | N/A | N/A |
| | | | Informational Honesty | Both | Direct | Both |
| | | AI Agent Settings | External Factor | Non-human-like | Human-like (Partially) | Human-like (Virtual Avatar) |
| | | | Potential Factor | Solution, Positive, Women | Solution, Women | Solution, Positive, Women |
| Interaction | Interaction Type and Method | Recognition | Empathic Cue | User’s Voice | Sound | User’s Voice |
| | | | Related Technology | Contents, Physiological | Contents, Physiological | Contents, Physiological |
| | | Understand | Way of Empathic Understanding | Cognitive (Affective Little) | Cognitive | Cognitive |
| | | | Related Technology | H | M | M |
| | | Reasoning | Way of Empathic Reasoning | Cognitive (Affective Little) | Cognitive | Cognitive |
| | | | Related Technology | M | M | M |
| | | Response | Way of Empathic Response | Voice, Light, Control | Image, Movement, Colors | Image, Movement |
| | | | Related Technology | H | H | H |
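
For readers who want to work with the case comparison programmatically, the following is a minimal sketch, not part of the published framework, of how a few rows of the three-case table might be encoded as records. The class name `CanvasCase`, its field names, and the helper `differing_dimensions` are hypothetical and introduced only for illustration; the cell values are copied from the table.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CanvasCase:
    """A small subset of the case table; field names are hypothetical."""
    service_purpose: str   # Purpose: Purpose of AI Agent Service
    user_involvement: str  # Relationship: User Involvement
    intimacy: str          # Relationship: User-AI Agent Intimacy (H/M/L)
    space_separation: str  # Space: Space Separation
    time_value: str        # Time: Time Utilization Value
    empathic_cue: str      # Interaction: Recognition, Empathic Cue


# Values copied from the Case 1 to Case 3 columns of the table.
CASES = {
    "Case 1": CanvasCase("Entertainment", "Cooperator", "H",
                         "Shared", "Quality Time", "User's Voice"),
    "Case 2": CanvasCase("Home Security Management", "Bystander", "H",
                         "Both", "Time Saving", "Sound"),
    "Case 3": CanvasCase("Auto Task for Healthcare", "Operator", "M",
                         "Personal", "Time Saving", "User's Voice"),
}


def differing_dimensions(a: CanvasCase, b: CanvasCase) -> list[str]:
    """Return the names of the encoded dimensions on which two cases differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


if __name__ == "__main__":
    print(differing_dimensions(CASES["Case 2"], CASES["Case 3"]))
```

Encoding each case as an immutable record keeps the comparison explicit: Cases 2 and 3, for example, share the Time Saving value but differ on the other five encoded dimensions.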

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, J.; Kang, H.-J. Artificial Empathy in Home Service Agents: A Conceptual Framework and Typology of Empathic Human–Agent Interactions. Appl. Sci. 2025, 15, 3096. https://doi.org/10.3390/app15063096
