Abstract

Mechanical, electrical, and plumbing (MEP) systems are vital in construction engineering, as their installation quality significantly affects project success. Traditional inspection methods often fail to ensure that installations comply with building information models (BIMs), and undetected deviations during construction can create safety hazards. Motivated by these concerns, this paper introduces a BIM-based pipeline construction comparison system built on computer vision. The system collects construction images in real time, uses deep learning models to segment pipelines and extract their contours, and compares the results with projections of the BIM. The proposed architecture addresses the limitations of existing techniques in handling the complexity of MEP components, and its automatic comparison and verification process detects deviations promptly, helping ensure adherence to design specifications. By integrating real-time data collection, deep learning, and an automated BIM comparison mechanism, this study improves the accuracy, efficiency, and degree of automation of pipeline installation monitoring.

1. Introduction

MEP (Mechanical, Electrical, and Plumbing) systems play a crucial role in construction engineering, and their construction progress and quality directly affect the successful delivery of the entire project. MEP engineering involves installing various subsystems, such as piping, electrical, and air-conditioning systems, which require complex construction techniques and procedures. However, traditional manual inspection and measurement methods are inefficient and cannot ensure that pipes are installed consistently with the design drawings (i.e., building information models, BIMs). During construction, environmental changes, human error, or design changes can cause the installed pipes to deviate from the original BIM. If these deviations are not promptly detected and corrected, they can complicate later operation and maintenance and may cause safety incidents.

BIM review methods based on computer vision have recently attracted widespread attention [1,2]. These methods collect real-time images and video from the construction site through cameras and other devices and compare them with the BIM to monitor construction progress and quality. Existing construction progress inspection methods based on object detection algorithms, such as YOLO [3] and Faster R-CNN [4], have significantly improved detection accuracy and efficiency. However, current methods struggle with the complexity of MEP components and fail to meet the requirements of practical engineering applications. The main shortcomings are insufficient real-time monitoring capability, which makes it difficult to capture dynamic changes during pipe installation; inadequate accuracy of the monitoring data, which therefore fails to reflect the actual installation state of the pipes; and the complex, cumbersome comparison of monitoring results with the BIM, owing to the lack of an automated comparison and verification mechanism.

This paper develops a BIM-based pipeline construction comparison system that monitors the pipe installation process in real time and automatically compares and analyzes it with the BIM to ensure its accuracy and compliance. The proposed solution integrates real-time data collection and deep learning algorithms for high-precision monitoring and automatic verification of the pipe installation status. The core objective of this study is to enhance the level of automation of pipeline installation monitoring, reduce human interference, and improve monitoring efficiency and accuracy.

2. Related Work

2.1. Summary

The advancement of intelligent pipeline inspection and edge detection has been significantly influenced by the integration of BIM, deep learning, and generative models. Traditional pipeline acceptance methods rely on manual inspections, which, despite their reliability, are time-consuming and error-prone. The adoption of BIM and deep learning has transformed this process, enabling automated defect detection and real-time monitoring. Simultaneously, edge detection techniques have evolved from conventional gradient-based methods to sophisticated deep learning models, improving accuracy and robustness. Recently, diffusion probabilistic models have gained attention for their powerful generative capabilities, extending their application beyond synthesis tasks to perception-based challenges. This section reviews key developments in these areas, highlighting their contributions, existing challenges, and future research directions.

2.2. Pipeline Construction Acceptance

BIM technology is gradually transforming traditional inspection methods in pipeline construction acceptance testing. Conventional approaches rely primarily on manual visual inspection and non-destructive testing (NDT) [5]. Although these approaches ensure pipeline quality, they are time-consuming and vulnerable to human error. In contrast, BIM provides a three-dimensional digital model of the pipeline system that precisely records and tracks every stage of the construction process [6]. Researchers have highlighted that BIM models allow inspectors to compare actual construction with design drawings in real time, promptly identifying and rectifying issues such as deviations in pipeline positioning and improper connections [7].

Recently, with the rapid advancement of deep learning, more studies have explored its application in automated pipeline acceptance testing, mainly focusing on the effectiveness of convolutional neural networks (CNNs) in identifying defects in images. For instance, ref. [8] detected and located corrosion in pipelines with a custom CNN, significantly improving the accuracy and efficiency of corrosion detection. In [9], the authors proposed PipeNet, a deep learning network that detects pipes regardless of the input data size and the target scene scale while predicting pipe centerline points along with other parameters. Furthermore, integrating sensor data with BIM and deep learning algorithms facilitates real-time monitoring and analysis of pipeline conditions. This integration enables predictive maintenance strategies that reduce downtime and maintenance costs [10]. Such an approach enhances the accuracy and efficiency of inspections and provides valuable insights for future maintenance. Hence, combining BIM and deep learning is paving the way for more intelligent construction management, enabling a shift towards more proactive pipeline maintenance [11].

Despite these advancements, current research still faces challenges related to data quality, model interpretability, and integration with existing inspection systems. Therefore, future studies should continue to investigate the potential of BIM in pipeline construction, focusing on developing datasets, algorithm optimization, and practical system integration to promote the intelligent advancement of pipeline construction acceptance testing.

2.3. Edge Detection

Edge detection identifies object contours and significant edge lines in natural images. Early edge detectors, such as Sobel [12] and Canny [13], relied primarily on local gradient changes. However, these techniques are susceptible to image noise and do not exploit global image information. With the rise of CNNs, researchers began developing methods that integrate multi-scale features, significantly improving edge detection accuracy. For instance, HED [14] first proposed a complete end-to-end edge detection framework, and RCF [15] further optimized this framework by incorporating richer hierarchical features. BDCN [16] employed a bi-directional cascade structure and trained the edge detector with layer-specific supervision signals. PiDiNet [17] introduced pixel difference convolution, offering an efficient, lightweight solution. UAED [18] handled more challenging samples by evaluating the uncertainty among multiple annotations. More recently, EDTER [19] adopted a two-stage vision transformer strategy to capture both global context and local detail.
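To make the gradient-based idea concrete, here is a minimal NumPy sketch of Sobel edge detection: two 3x3 kernels estimate horizontal and vertical intensity gradients, and pixels whose gradient magnitude exceeds a threshold are marked as edges. The function names and the fixed threshold are illustrative assumptions. Canny [13] builds on the same gradients but adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding, none of which are shown here.

```python
import numpy as np

# 3x3 Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Apply a 3x3 kernel as a cross-correlation with edge-replicated borders."""
    padded = np.pad(image.astype(float), 1, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            # Shift the padded image and accumulate the weighted neighbor values.
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def sobel_edges(image: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean edge map: True where the gradient magnitude exceeds `threshold`."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    magnitude = np.hypot(gx, gy)
    return magnitude > threshold
```

On a vertical step edge only the two columns on either side of the step respond, which shows how local these operators are and why they also react to any local intensity change, including noise.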

Although integrating cross-hierarchical features and uncertainty information has substantially improved edge detection accuracy, edges produced by learning-based methods are often too thick and require complex post-processing. Existing research has improved edge crispness through refined loss functions [20,21] and label refinement strategies [22], but an edge detector that meets both accuracy and crispness requirements without relying on post-processing is still needed.

2.4. Diffusion Probabilistic Model

Diffusion probabilistic models are a class of generative models based on Markov chains that reconstruct data samples by progressively learning to remove noise. They have performed remarkably in several fields: in computer vision [23,24,25], they have shown robust image analysis and processing capabilities; in natural language processing [26], they have improved the generative abilities of language models; and in audio generation [27], they have also achieved significant results.
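As an illustration of the noising process these models learn to invert, the sketch below uses the widely used DDPM parameterization (an assumption; the cited works differ in details). A linear $\beta$ schedule defines $\bar{\alpha}_t = \prod_{s \le t}(1 - \beta_s)$, a noisy sample is drawn in closed form as $x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1 - \bar{\alpha}_t}\,\epsilon$, and, given a noise estimate, $x_0$ can be recovered. In a real model the noise estimate comes from a trained network; here the true noise is passed in purely to show the algebra.

```python
import numpy as np


def linear_alpha_bar(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def q_sample(x0: np.ndarray, t: int, alpha_bar: np.ndarray, noise: np.ndarray) -> np.ndarray:
    """Closed-form forward (noising) step: x_t = sqrt(a) * x0 + sqrt(1 - a) * noise."""
    a = alpha_bar[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise


def predict_x0(xt: np.ndarray, t: int, alpha_bar: np.ndarray, predicted_noise: np.ndarray) -> np.ndarray:
    """Invert the forward step given a noise estimate (normally produced by a trained denoiser)."""
    a = alpha_bar[t]
    return (xt - np.sqrt(1.0 - a) * predicted_noise) / np.sqrt(a)
```

With this schedule, $\bar{\alpha}_t$ decays towards zero, so late time steps are almost pure noise; the denoiser's task is to estimate $\epsilon$ well enough that this inversion, applied step by step, yields a clean sample.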

Although diffusion models have significantly progressed in data generation, their potential in perception tasks is equally noteworthy. Specifically, diffusion models have shown their potential in accurately capturing visual information in tasks such as image segmentation [28,29] and object detection [30]. These advancements broaden the application scope of diffusion models and lay the foundation for future research and technological innovations.

3. Methods

This section proposes an automated pipeline construction monitoring system that integrates computer vision techniques, deep learning-based segmentation, and comparative analysis to improve construction accuracy and efficiency. The method begins by aligning on-site photographs with BIM projections through a camera imaging model, ensuring a consistent perspective. Pipeline segmentation is then performed using the Segment Anything Model (SAM), enabling precise identification of pipeline structures. A simulation-based detection mechanism extracts the expected pipeline model from the BIM and compares it with the as-built pipeline, providing a systematic assessment of construction accuracy. Diffusion-based contour extraction sharpens the extracted edges, improving the reliability of the segmentation-based comparison. The final stage generates results automatically: segmented masks are aligned, geometric features are analyzed, and discrepancies are visualized in comparison diagrams. By streamlining the entire workflow from image acquisition to automated analysis, this method significantly reduces manual effort, enhances real-time monitoring, and establishes a reliable framework for BIM-integrated construction quality control.

Once the pipeline segmentation results are obtained, the method applies simulation-based detection to extract both the expected pipeline model specified by the construction requirements and the pipeline model as actually built on site. Finally, the correctness of the piping is assessed by comparing the dimensions of the two structures, providing a vision-based solution for construction quality control.

3.1. Camera Imaging Model

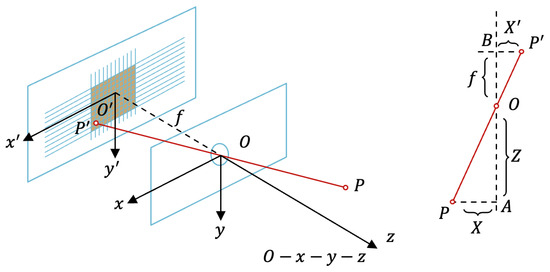

An image comprises numerous pixels, each recording the color and brightness information at its corresponding location. A core issue in camera model research is representing the three-dimensional world in a two-dimensional image. The most commonly used camera imaging model is the Pinhole Camera Model, which describes light passing through a small aperture to form an image on the imaging plane [31].

As illustrated in Figure 1, the pinhole imaging model typically establishes a right-handed Cartesian coordinate system with the camera’s optical center O as the origin. The object to be captured lies along the positive z-axis, while the imaging plane, comprising photosensitive material, lies on the negative z-axis, parallel to the xy-plane at z = −f, where f is the camera’s focal length. The z-axis intersects the imaging plane at point O’. Any light ray emitted or reflected from an arbitrary point P on the object passes through the optical center O and intersects the imaging plane at point P’. Let the coordinates of P be $(X, Y, Z)^T$ and those of P’ be $(X', Y', -f)^T$. Since P, O, and P’ are collinear, similar triangles give Equation (1), which maps any point in three-dimensional space to its corresponding point on the imaging plane:

$$\frac{Z}{f} = -\frac{X}{X'} = -\frac{Y}{Y'} \quad\Longrightarrow\quad X' = -f\,\frac{X}{Z}, \qquad Y' = -f\,\frac{Y}{Z} \tag{1}$$

The negative signs reflect that the image formed behind the aperture is inverted. By convention, the imaging plane is mirrored to the front of the camera at z = f, which removes the signs:

$$X' = f\,\frac{X}{Z}, \qquad Y' = f\,\frac{Y}{Z} \tag{2}$$

Figure 1.

Pinhole camera model.

The brightness and color of each pixel are obtained by sampling and quantizing the imaging plane. An image coordinate system is established on the imaging plane, typically with its origin at the top-left corner of the image. The positive u-direction points horizontally to the right, parallel to the camera’s x-axis, and the positive v-direction points vertically downward, parallel to the camera’s y-axis. The image coordinate system (u, v) and the imaging plane coordinate system (X’, Y’) are related by a simple scaling and translation. Let the coordinates of P’ in the image coordinate system be $(u, v)^T$, let $\alpha$ and $\beta$ be the scaling factors (pixels per unit length) along the u- and v-axes, and let $(c_x, c_y)^T$ be the translation of the origin. The relationship between the imaging plane coordinate system and the image coordinate system is:

$$u = \alpha X' + c_x, \qquad v = \beta Y' + c_y \tag{3}$$

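From Equations (1)–(3), writing $f_x = \alpha f$ and $f_y = \beta f$, the pixel coordinates of any point $P = (X, Y, Z)^T$ in three-dimensional space satisfy:

$$Z \begin{pmatrix} u \\ v \\ 1 \end{pmatrix} = \begin{pmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} X \\ Y \\ Z \end{pmatrix} = K P \tag{4}$$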

where K is the camera intrinsic matrix, which relates the coordinates of a point in three-dimensional space to the corresponding pixel in the image. K is typically fixed for a given camera, being determined by the camera’s inherent parameters. During imaging, an object’s depth information, i.e., the z-coordinate, is lost: the pixel given by Equation (4) does not change when P is scaled by any positive factor, so all points on the ray from O through P project to the same point on the image plane.
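The projection in Equation (4) and the loss of depth can be checked numerically. The minimal NumPy sketch below builds K from assumed values of $f_x$, $f_y$, $c_x$, and $c_y$ (800, 800, 320, and 240 are arbitrary placeholders, not calibration results from this study) and projects points given in camera coordinates; two points on the same ray through the optical center land on the same pixel.

```python
import numpy as np


def intrinsic_matrix(fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Camera intrinsic matrix K in the form of Equation (4)."""
    return np.array([[fx, 0.0, cx],
                     [0.0, fy, cy],
                     [0.0, 0.0, 1.0]])


def project_camera_point(K: np.ndarray, P) -> np.ndarray:
    """Map a point P = (X, Y, Z) in camera coordinates (Z > 0) to pixel (u, v)."""
    uvw = K @ np.asarray(P, dtype=float)   # equals Z * (u, v, 1)
    return uvw[:2] / uvw[2]                 # divide by the depth Z


K = intrinsic_matrix(800.0, 800.0, 320.0, 240.0)
p1 = project_camera_point(K, (0.5, 0.25, 2.0))
p2 = project_camera_point(K, (1.0, 0.5, 4.0))   # same ray, twice as far away
```

Both `p1` and `p2` evaluate to the pixel (520, 340): the division by Z is exactly where the depth information disappears.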

In real-world applications, the camera coordinate system of Figure 1 is often inconvenient for model description and subsequent processing. A world coordinate system is therefore established according to specific requirements, and it generally does not coincide with the camera coordinate system. The camera extrinsic parameters describe the transformation of any point $P_w$ in the world coordinate system to the point P in the camera coordinate system. This transformation consists of a rotation matrix R and a translation vector t:

$$P = R P_w + t \tag{5}$$

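Combining Equation (5) with Equation (4), any point $P_w$ in the world coordinate system projects to the point $p = (u, v, 1)^T$ in the image coordinate system according to Equation (6), where Z is the depth of the point in the camera coordinate system:

$$Z\,p = K\,(R P_w + t) \tag{6}$$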

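In homogeneous coordinates, this is represented by Equation (7), where T is the camera extrinsic matrix and $[R \;\, t]$ consists of its first three rows:

$$Z\,p = K \begin{pmatrix} R & t \end{pmatrix} \begin{pmatrix} P_w \\ 1 \end{pmatrix}, \qquad T = \begin{pmatrix} R & t \\ \mathbf{0}^T & 1 \end{pmatrix} \tag{7}$$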

Camera extrinsics describe the relative relationship between the camera coordinate system and the world coordinate system, which changes with the camera’s movement.
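Combining both sets of parameters, the following sketch implements Equation (7) for a batch of world points: it builds the 4x4 extrinsic matrix T from R and t, transforms the points into the camera frame, applies K, and divides by depth. The function names and the front-of-camera check are illustrative assumptions rather than part of the described method.

```python
import numpy as np


def extrinsic_matrix(R: np.ndarray, t) -> np.ndarray:
    """4x4 homogeneous extrinsic matrix T = [[R, t], [0, 1]] (world -> camera)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = np.asarray(t, dtype=float)
    return T


def project_world_points(K: np.ndarray, R: np.ndarray, t, points_world) -> np.ndarray:
    """Project N x 3 world points to N x 2 pixels using Z * p = K [R | t] (P_w, 1)."""
    pts = np.asarray(points_world, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])     # N x 4 homogeneous points
    cam = (extrinsic_matrix(R, t) @ homog.T)[:3]           # 3 x N camera coordinates
    if np.any(cam[2] <= 0):
        raise ValueError("all points must lie in front of the camera (Z > 0)")
    uvw = K @ cam                                          # rows: Z*u, Z*v, Z
    return (uvw[:2] / uvw[2]).T
```

For example, with R = I and t = (0, 0, 2), the world origin projects to the principal point $(c_x, c_y)$; rotating the camera changes only R and t, while K stays the same.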

3.2. BIM Projection

This paper uses the camera pose associated with each photograph to map the 3D structure of the BIM onto the 2D image plane of the photograph through perspective projection. This step generates virtual BIM images that match the actual scene, laying the foundation for the subsequent pipeline segmentation and comparative analysis. In this process, the extrinsic matrix determines the position and orientation of the camera, while the intrinsic matrix determines the projection properties. Both matrices can be obtained with Structure-from-Motion (SfM), a 3D reconstruction technique that estimates camera poses (extrinsic parameters) and computes the positions of points in 3D space by extracting and matching feature points across multi-view images. Feature detection algorithms (e.g., SIFT or SURF) first extract feature points from each image, and these are matched to identify correspondences between different images of the same object. Next, initial camera poses are estimated (e.g., from the epipolar geometry of an image pair, with algorithms such as PnP registering further images), and triangulation yields a sparse 3D point cloud. Bundle adjustment (BA), a nonlinear least-squares optimization, then refines the camera poses and 3D point positions across all images by minimizing the reprojection error. This process yields accurate intrinsic and extrinsic camera parameters together with the 3D structure of the scene. A commonly used open-source package for this process is COLMAP 3.12.0.dev0, which takes multiple images as input and estimates the cameras’ intrinsic and extrinsic parameters.
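COLMAP's text export (images.txt) stores each image's pose as a quaternion (QW, QX, QY, QZ) and a translation (TX, TY, TZ) that map world points into the camera frame, which matches the form of Equation (5). The sketch below, a minimal NumPy illustration rather than the authors' code, converts such a quaternion to R, recovers the camera center as $-R^T t$, and computes the mean reprojection error of known 3D points; bundle adjustment minimizes the sum of the squared versions of these residuals. Parsing the COLMAP files is omitted, and the helper names are hypothetical.

```python
import numpy as np


def quat_to_rotation(qw: float, qx: float, qy: float, qz: float) -> np.ndarray:
    """Rotation matrix from a quaternion (w, x, y, z); the quaternion is normalized first."""
    q = np.array([qw, qx, qy, qz], dtype=float)
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def camera_center(R: np.ndarray, t) -> np.ndarray:
    """Camera position in world coordinates for a world-to-camera pose (R, t)."""
    return -np.asarray(R).T @ np.asarray(t, dtype=float)


def reprojection_error(K, R, t, points_world, observed_pixels) -> float:
    """Mean pixel distance between projected 3D points and their observed 2D positions."""
    cam = np.asarray(R) @ np.asarray(points_world, dtype=float).T
    cam += np.asarray(t, dtype=float).reshape(3, 1)          # Equation (5): P = R P_w + t
    uvw = K @ cam                                             # Equation (4) for every point
    projected = (uvw[:2] / uvw[2]).T                          # N x 2 pixel coordinates
    residuals = projected - np.asarray(observed_pixels, dtype=float)
    return float(np.mean(np.linalg.norm(residuals, axis=1)))
```

Once R, t, and K are known for a photograph, the same projection can be applied to the BIM geometry to render the virtual view used for comparison.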

3.3. Segmentation Mask Comparison

This study employs the Segment Anything Model (SAM), a large-scale, general-purpose segmentation model, to extract pipe segmentation masks from two sources: images projected from the BIM and photographs captured on site. SAM has demonstrated robust segmentation performance, isolating various construction elements within an image, such as pipes, brackets, and associated structures. Notably, SAM does this without additional training, making it a convenient and efficient solution for construction and engineering applications.

For each viewpoint, SAM produces two segmentation masks: one from the BIM projection and one from the on-site photograph. These masks are a critical step in the proposed architecture because they allow the planned and as-built conditions to be compared directly: the BIM-based mask serves as a reference for the intended design, while the on-site mask reflects the actual state of construction. SAM handles complex segmentation tasks with minimal intervention; instead of requiring extensive manual labeling or retraining for specific contexts, it autonomously separates the objects in a scene, which saves time and keeps the segmentation results consistent and accurate. The generated masks are then processed and analyzed to assess the alignment, discrepancies, and overall agreement between the BIM design and the real-world installation. This comparison is critical for evaluating construction accuracy, identifying potential issues, and ensuring that the as-built environment conforms to the original design specifications.
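The mask comparison can be sketched as follows, assuming the masks are available as boolean arrays. The segment-anything package's `SamAutomaticMaskGenerator.generate` returns a list of records whose `"segmentation"` entry is a boolean mask; `union_mask` merges such records (assumed to be already filtered to pipes, a step the text does not detail), and `compare_masks` reports intersection over union (IoU) together with "missing" and "extra" pixels. IoU is an illustrative agreement measure chosen for this sketch, not the metric reported in this study.

```python
import numpy as np


def union_mask(sam_masks, shape) -> np.ndarray:
    """Merge SAM-style mask records (dicts with a 'segmentation' array) into one boolean mask."""
    merged = np.zeros(shape, dtype=bool)
    for record in sam_masks:
        merged |= np.asarray(record["segmentation"], dtype=bool)
    return merged


def compare_masks(bim_mask: np.ndarray, site_mask: np.ndarray):
    """Return IoU plus masks of pixels missing on site and pixels present only on site."""
    bim = bim_mask.astype(bool)
    site = site_mask.astype(bool)
    union = np.logical_or(bim, site).sum()
    iou = np.logical_and(bim, site).sum() / union if union else 1.0
    missing = bim & ~site   # designed in the BIM but not found in the photograph
    extra = site & ~bim     # found in the photograph but absent from the design
    return float(iou), missing, extra
```

Keeping the "missing" and "extra" maps separate, rather than a single difference map, makes it possible to tell an omitted pipe segment from an unplanned one.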

3.4. Contour Extraction

Diffusion models are increasingly used for contour extraction, and this paper employs the DiffusionEdge model for this task. DiffusionEdge uses a decoupled diffusion structure and takes the input image as an auxiliary condition. It applies an adaptive Fast Fourier Transform (FFT) filter to separate edge information from noise components in the frequency domain and discard the unwanted components. Specifically, given the encoder features $F$, DiffusionEdge first applies a 2D FFT and denotes the transformed features $F_c$. It then multiplies $F_c$ element-wise by a learnable weight map $W$ to achieve adaptive spectral filtering. Because each weight acts on one frequency component across the whole feature map, this filtering adjusts specific frequencies globally, and the learned weights adapt to the frequency content of the target distribution, which benefits training. After the useless components are adaptively discarded, the Inverse Fast Fourier Transform (IFFT) projects the features back from the frequency domain to the spatial domain, and a residual connection from $F$ retains useful information:

$$F' = \mathrm{IFFT}\big(W \odot F_c\big) + F, \qquad F_c = \mathrm{FFT}(F)$$
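A simplified, single-channel NumPy version of this frequency-domain filter is shown below. In DiffusionEdge the weight map is learned and applied to multi-channel encoder features inside the network; here it is simply passed in as a fixed array, which is an assumption made for illustration. With `numpy.fft.rfft2`, a real feature map of shape (rows, cols) has a spectrum of shape (rows, cols // 2 + 1), so the weight map must match that shape.

```python
import numpy as np


def adaptive_spectral_filter(features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Reweight a 2-D feature map in the frequency domain and add a residual connection.

    features: real array of shape (rows, cols)
    weights:  array of shape (rows, cols // 2 + 1), a fixed stand-in for the learnable map W
    """
    spectrum = np.fft.rfft2(features)                   # F_c: 2-D FFT of the features
    filtered = spectrum * weights                       # element-wise spectral reweighting
    back = np.fft.irfft2(filtered, s=features.shape)    # IFFT back to the spatial domain
    return back + features                              # residual connection from F
```

Setting every weight to one doubles the input (filtered copy plus residual), setting all weights to zero returns the residual alone, and keeping only the zero-frequency coefficient adds the feature mean, which makes the roles of the filter and the residual path easy to see.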

DiffusionEdge addresses the imbalance between edge and non-edge pixels by optimizing a weighted binary cross-entropy (WCE) loss. The WCE loss measures the difference between the ground-truth edge probability $E_i$ of the i-th pixel and the value $p_i^j$ of the i-th pixel in the j-th predicted edge map, ignoring uncertain pixels, i.e., those whose nonzero edge probability falls below a threshold. This prevents network confusion, stabilizes training, and improves performance. RCF [15] further improved this loss to handle uncertainty among multiple annotators.
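The weighting can be sketched as follows, following the RCF-style formulation (an assumption about the exact variant used): pixels with $E_i > \eta$ count as edges, pixels with $E_i = 0$ as non-edges, and pixels in between are ignored. Edge pixels are weighted by the fraction of non-edge pixels and non-edge pixels by $\lambda$ times the fraction of edge pixels, so the rare edge class is not swamped. The defaults $\eta = 0.3$ and $\lambda = 1.1$ are placeholder values.

```python
import numpy as np


def weighted_bce(pred: np.ndarray, label: np.ndarray, eta: float = 0.3,
                 lam: float = 1.1, eps: float = 1e-7) -> float:
    """RCF-style weighted binary cross-entropy for one predicted edge map.

    label holds the annotator-averaged edge probability E_i in [0, 1];
    pixels with 0 < E_i <= eta are treated as uncertain and ignored.
    """
    pred = np.clip(pred, eps, 1.0 - eps)      # avoid log(0)
    pos = label > eta                          # confident edge pixels
    neg = label == 0                           # confident non-edge pixels
    n_pos, n_neg = pos.sum(), neg.sum()
    total = n_pos + n_neg
    if total == 0:
        return 0.0
    alpha = lam * n_pos / total                # weight on non-edge pixels
    beta = n_neg / total                       # weight on edge pixels
    loss = -beta * np.log(pred[pos]).sum() - alpha * np.log(1.0 - pred[neg]).sum()
    return float(loss)
```

For several predicted edge maps (index j), the per-map losses would be summed, e.g. `sum(weighted_bce(p, label) for p in side_outputs)`.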

However, applying the WCE loss in the latent space is challenging, because latent codes follow an approximately normal distribution whose range differs from that of edge probabilities. DiffusionEdge therefore introduces an uncertainty distillation loss that optimizes gradients directly in the latent space, avoiding the negative effect of back-propagating gradients through the autoencoder and reducing GPU memory cost. This strategy makes DiffusionEdge effective for contour extraction while maintaining computational efficiency.

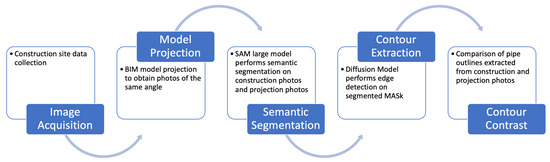

3.5. Comparison Results Output and Automated Process

Based on the segmentation masks generated by the automated segmentation network, this study introduces an analysis architecture that produces pipeline construction monitoring comparison diagrams. As Figure 2 illustrates, the architecture comprises mask alignment and comparison, geometric feature extraction, feature difference calculation, visual representation, comparison diagram generation, and reporting and feedback, with each step designed to provide actionable insight into the construction process. The comparison diagrams visualize discrepancies between the BIM and the construction progress: overlaying and aligning the segmentation masks highlights areas of misalignment or deviation from the design. Geometric features, such as pipe orientation, dimensions, and position, are extracted and compared to identify specific inconsistencies, and the calculated differences are shown in the comparison diagrams so that stakeholders can quickly grasp the nature and extent of any issues.
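One possible way to extract and compare the geometric features named above (position, orientation, and dimensions) from two aligned binary masks is sketched below. Orientation is taken from second-order central moments, $\theta = \tfrac{1}{2}\operatorname{atan2}(2\mu_{11}, \mu_{20} - \mu_{02})$, and dimensions from the bounding box; these feature definitions and the function names are assumptions for illustration, not the exact features used in the architecture of Figure 2.

```python
import numpy as np


def mask_geometry(mask: np.ndarray) -> dict:
    """Centroid (x, y), major-axis orientation (radians), and bounding-box size of a binary mask."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        raise ValueError("empty mask")
    cx, cy = xs.mean(), ys.mean()
    mu20 = ((xs - cx) ** 2).mean()
    mu02 = ((ys - cy) ** 2).mean()
    mu11 = ((xs - cx) * (ys - cy)).mean()
    theta = 0.5 * np.arctan2(2 * mu11, mu20 - mu02)   # angle of the major axis
    width = int(xs.max() - xs.min() + 1)
    height = int(ys.max() - ys.min() + 1)
    return {"centroid": (cx, cy), "orientation": theta, "size": (width, height)}


def geometry_difference(bim_mask: np.ndarray, site_mask: np.ndarray) -> dict:
    """Hypothetical deviation summary: centroid offset (px), orientation gap (rad), size change (px)."""
    a, b = mask_geometry(bim_mask), mask_geometry(site_mask)
    offset = float(np.hypot(b["centroid"][0] - a["centroid"][0],
                            b["centroid"][1] - a["centroid"][1]))
    gap = abs(b["orientation"] - a["orientation"])
    gap = float(min(gap, np.pi - gap))                 # axis angles are only defined modulo pi
    size_change = (b["size"][0] - a["size"][0], b["size"][1] - a["size"][1])
    return {"centroid_offset": offset, "orientation_gap": gap, "size_change": size_change}
```

A pipe installed three pixels lower than designed then shows up as a pure centroid offset, while a pipe installed vertically instead of horizontally shows up as an orientation gap of about $\pi/2$.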

Figure 2.

Automatic comparison process between pipeline design and construction.

This paper also introduces an automated segmentation and comparison process comprising several interconnected stages: image acquisition, BIM projection, pre-processing, mask generation, and error analysis. The workflow runs without manual intervention, automatically performing BIM projection and semantic segmentation comparison and outputting the results. First, images are acquired from the BIM and from on-site sources, ensuring consistent input data. The BIM projection is then aligned with the real-world images to enable meaningful comparisons. A pre-processing step improves input data quality and prepares it for segmentation. SAM then generates high-precision masks that capture the geometric and semantic details needed for downstream analysis. Finally, the error analysis phase identifies and quantifies deviations between the BIM projection and the on-site results. This iterative approach continuously improves the accuracy and reliability of the comparison process. By automating these stages, the proposed methodology significantly increases the efficiency of construction monitoring, reducing time and labor costs while improving the precision of the results. Ultimately, this process provides a robust framework for integrating BIM with on-site construction practice, bridging the gap between digital models and real-world implementation.
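The stage sequence above can be wired together as in the following sketch. Each stage is an injected callable, so the BIM renderer, pre-processing, SAM-based segmenter, and error analysis can be swapped without changing the control flow; the class and its field names are hypothetical and only illustrate how the stages feed into each other without manual intervention.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List, Tuple

import numpy as np


@dataclass
class ComparisonPipeline:
    """Hypothetical wiring of the automated stages; every stage is a user-supplied callable."""
    project_bim: Callable[[Any], np.ndarray]                       # camera pose -> rendered BIM image
    preprocess: Callable[[np.ndarray], np.ndarray]                 # e.g. denoising or resizing
    segment: Callable[[np.ndarray], np.ndarray]                    # image -> boolean pipe mask
    analyze: Callable[[np.ndarray, np.ndarray], Dict[str, Any]]    # (BIM mask, site mask) -> report

    def run(self, site_image: np.ndarray, camera_pose: Any) -> Dict[str, Any]:
        bim_image = self.project_bim(camera_pose)                 # BIM projection for this view
        bim_mask = self.segment(self.preprocess(bim_image))       # as-designed reference mask
        site_mask = self.segment(self.preprocess(site_image))     # as-built mask
        return self.analyze(bim_mask, site_mask)                  # error analysis

    def run_batch(self, items: Iterable[Tuple[np.ndarray, Any]]) -> List[Dict[str, Any]]:
        """Process (site_image, camera_pose) pairs one after another."""
        return [self.run(image, pose) for image, pose in items]
```

For example, passing a renderer, an identity pre-processor, a thresholding segmenter, and an IoU-based analysis function yields one report per (image, pose) pair from `run_batch`.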

4. Results

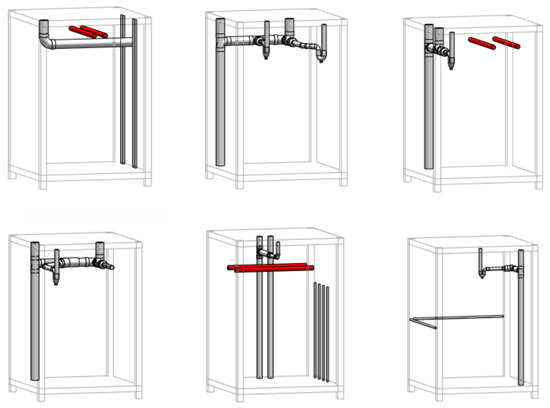

The proposed BIM-based pipeline construction monitoring system was evaluated through a series of experiments involving six BIMs and real-world construction scenarios. A dataset of on-site images was collected and used to validate the BIM projection method, confirming that the projections are consistent with the photographs in viewing angle and scale. Using SAM, pipeline structures were accurately segmented from both the BIM projections and the construction site images, enabling precise comparative analysis. Edge detection and contour extraction were then applied to assess the alignment between the as-built and design models, with performance evaluated using the F-score. A comparative study against existing edge detection methods showed that the proposed approach achieves higher accuracy and less noise. Additionally, Hu moment-based shape matching was employed to quantify discrepancies and identify construction deviations. The proposed method achieved 95.4% accuracy in detecting inconsistencies, highlighting its effectiveness for construction quality assessment and compliance verification. These findings confirm the system’s potential as a reliable, automated solution for pipeline construction monitoring, offering technical support for quality control and engineering management.

4.1. Dataset

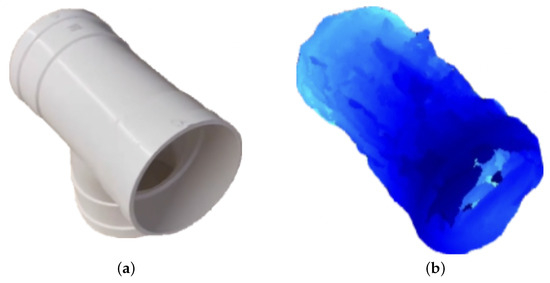

The effectiveness of the proposed BIM-based pipeline construction comparison system was evaluated with the following experimental design. First, we created six BIMs (Figure 3) representing different construction scenarios, using components of different specifications and sizes, such as PVC pipes, elbows, drainage tees, concentric reducers, flange connections, and P-traps. These components are common parts of plumbing and drainage systems, which makes them suitable for validating our method. The models were then built in real construction scenarios to simulate the real-world pipeline installation process. During construction, we photographed the installed pipeline models, creating an image dataset. This dataset was used to assess the accuracy and reliability of the proposed method, i.e., its ability to monitor and compare the consistency between actual construction and the BIMs.

Figure 3.

MEP component models.

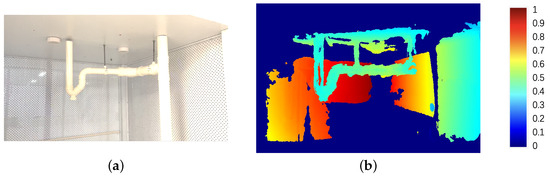

4.2. Projection Results

After photographing the construction models, we projected the six BIMs using the BIM projection method described above. Figure 4 shows the projection results: Figure 4a depicts the pipeline at the construction site, and Figure 4b presents the image obtained by projecting the BIM with the camera’s intrinsic and extrinsic parameters at the time of capture. Comparing the two images shows that the BIM projection is consistent with the construction site photograph in angle and scale. This consistency validates the accuracy of the BIM projection method and provides a reliable foundation for subsequent construction monitoring and comparative analysis. Precise BIM projection allows deviations during construction to be accurately identified and quantified, offering visual support for quality control and assessment in construction processes.

Figure 4.

Projection results. (a) Construction; (b) Projected.

4.3. Segmentation Results

Once the BIM projection results are obtained, a segmentation step extracts the pipelines from both the construction photographs and the projected images. The segmentation results are shown in Figure 5.

Figure 5a displays the pipelines segmented from the photographs taken at the construction site, while Figure 5b presents the pipelines segmented from the BIM projection results. Segmentation allows the pipeline structures to be accurately identified and extracted, laying the foundation for the subsequent comparative analysis.

Figure 5.

Segmentation results. (a) Construction mask; (b) Projected mask.

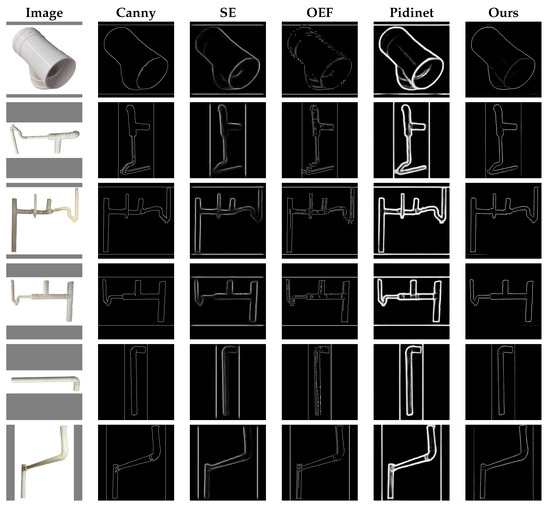

4.4. Contour Extraction

After the pipeline segmentation results are obtained from both the construction site photographs and the BIM projections, contours are extracted from both sets of results. This step is critical for comparing the two segmentation outcomes and for validating whether the on-site pipelines align with the BIMs designed during planning. The performance of the edge detection algorithms is evaluated using the F-score, defined as $F = \frac{2PR}{P + R}$, where precision (P) is the proportion of correctly detected edges among all predicted edges and recall (R) is the proportion of correctly detected edges among all ground-truth edges. The F-score balances precision and recall, giving a comprehensive measure of edge detection performance (Table 1). To further validate the proposed method, we compare it against the methods in [13,17,32,33]. Figure 6 shows examples of contour extraction, with each subfigure presenting the results of a different method. This visual comparison shows that the edge maps obtained with the proposed method are more accurate and clearer, with significantly less noise.
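The F-score above can be computed on binary edge maps as in the following sketch. Benchmark protocols such as BSDS match predicted and ground-truth edge pixels one-to-one within a distance tolerance and sweep thresholds (ODS/OIS); this simplified version, an assumption for illustration, counts a pixel as correct if it lies within a square window of radius r pixels around an edge in the other map.

```python
import numpy as np


def dilate(mask: np.ndarray, radius: int) -> np.ndarray:
    """Binary dilation with a (2r+1) x (2r+1) square window, using array shifts only."""
    if radius == 0:
        return mask.copy()
    h, w = mask.shape
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    out = np.zeros_like(mask, dtype=bool)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def edge_f_score(pred: np.ndarray, gt: np.ndarray, radius: int = 1):
    """Precision, recall, and F = 2PR / (P + R) with a pixel-distance tolerance."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp_pred = (pred & dilate(gt, radius)).sum()   # predicted pixels near a true edge
    tp_gt = (gt & dilate(pred, radius)).sum()     # true edge pixels near a prediction
    precision = tp_pred / pred.sum() if pred.sum() else 0.0
    recall = tp_gt / gt.sum() if gt.sum() else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(precision), float(recall), float(f)
```

The tolerance matters: an edge that is off by a single pixel scores F = 1 with r = 1 but F = 0 with exact matching (r = 0), which is why edge benchmarks never use strict pixel equality.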

Table 1.

Quantitative results on our dataset.

Figure 6.

Contour comparison.

4.5. Contour Comparison

Section 4.4 showed that the pipeline contours extracted from the construction photographs and the BIM projections clearly capture the shape and structure of the pipelines. This section compares the two sets of extracted contours to reveal discrepancies between the actual construction and the design model. We used Hu moments to describe the shapes of the extracted contours and to quantify their differences: the smaller the difference, the higher the consistency. Based on this analysis, we assessed the construction quality, verified whether the construction results complied with the design specifications of the BIM, and provided technical support for construction monitoring and quality assurance.

In image processing and computer vision, Hu moments [34] are a set of feature descriptors that describe the shape and contour of image regions. Hu moments are invariant to translation, scaling, and rotation, which makes them suitable for shape and pattern recognition. There are seven Hu invariant moments, derived through mathematical transformations from an image’s second- and third-order normalized central moments. Specifically, given a binary image $I(x, y)$, the central moments are:

$$\mu_{pq} = \sum_{x}\sum_{y} (x - \bar{x})^{p}\,(y - \bar{y})^{q}\, I(x, y)$$

where $\bar{x}$ and $\bar{y}$ are the coordinates of the image’s centroid, computed from the raw moments $m_{pq} = \sum_{x}\sum_{y} x^{p} y^{q}\, I(x, y)$:

$$\bar{x} = \frac{m_{10}}{m_{00}}, \qquad \bar{y} = \frac{m_{01}}{m_{00}}$$

Based on the central moments, the normalized central moments are calculated as follows:

$$\eta_{pq} = \frac{\mu_{pq}}{m_{00}^{\,1 + (p+q)/2}}, \qquad p + q \geq 2$$

where $m_{00}$ is the zero-order moment (the total mass, i.e., the area, of the binary image).
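The moment definitions above translate directly into code. The sketch below computes the centroid, the normalized central moments $\eta_{pq}$, and Hu's seven invariants (using their standard published formulas) for a binary mask, plus a log-scaled distance modelled on OpenCV's `CONTOURS_MATCH_I1` method, which skips invariants whose magnitude is below a small epsilon. Whether this particular distance matches the one used in the experiments is an assumption.

```python
import numpy as np


def hu_moments(mask: np.ndarray) -> np.ndarray:
    """Seven Hu invariant moments of a binary image, computed from normalized central moments."""
    ys, xs = np.nonzero(mask)
    m00 = float(xs.size)                       # zero-order moment (area)
    if m00 == 0:
        raise ValueError("empty mask")
    dx, dy = xs - xs.mean(), ys - ys.mean()    # offsets from the centroid (m10/m00, m01/m00)

    def eta(p: int, q: int) -> float:
        mu = np.sum(dx ** p * dy ** q)         # central moment mu_pq
        return mu / m00 ** (1 + (p + q) / 2)   # normalized central moment eta_pq

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2) + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2) - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ])


def hu_distance(h1: np.ndarray, h2: np.ndarray, eps: float = 1e-5) -> float:
    """Sum of |1/m_A - 1/m_B| with m = sign(h) * log10|h|, skipping near-zero invariants."""
    d = 0.0
    for ha, hb in zip(h1, h2):
        if abs(ha) > eps and abs(hb) > eps:
            ma = np.sign(ha) * np.log10(abs(ha))
            mb = np.sign(hb) * np.log10(abs(hb))
            d += abs(1.0 / ma - 1.0 / mb)
    return float(d)
```

A translated copy of a rectangle gives a distance of essentially zero, a uniformly scaled copy a small value (discretization makes scale invariance only approximate), and a rectangle with a different aspect ratio a much larger one.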

This study used Hu moment matching to identify discrepancies between design drawings and actual construction, applying it to the previously extracted contour features. To make the experiment broadly applicable and representative, we constructed and analyzed five different models with 50 on-site construction photographs each, extracting approximately 1000 pipe contours from a total of 250 images.

The experimental results indicate that, with a Hu moment difference threshold of 0.01, the matching algorithm attains an accuracy of up to 95.4%, confirming the effectiveness of Hu moment matching in identifying construction deviations. Hence, the proposed strategy provides a reliable technical means for construction quality control and monitoring: it accurately assesses the consistency between construction results and the original design, offering data support for subsequent engineering management and decision-making.
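The reported accuracy can be read as the fraction of contour pairs for which the thresholded Hu moment difference agrees with a reference label. The sketch below makes that reading explicit; the strict comparison (a difference below 0.01 means "consistent") and the existence of per-pair ground-truth labels are assumptions, since the text does not define the accuracy computation in detail.

```python
import numpy as np


def match_decisions(distances, threshold: float = 0.01) -> np.ndarray:
    """A contour pair is judged consistent with the BIM when its Hu distance is below the threshold."""
    return np.asarray(distances, dtype=float) < threshold


def decision_accuracy(distances, is_consistent, threshold: float = 0.01) -> float:
    """Fraction of contour pairs whose thresholded decision agrees with the reference label."""
    predicted = match_decisions(distances, threshold)
    return float(np.mean(predicted == np.asarray(is_consistent, dtype=bool)))
```

For instance, distances of 0.002, 0.02, 0.005, and 0.3 against labels (consistent, deviating, deviating, deviating) give three correct decisions out of four, i.e., an accuracy of 0.75.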

5. Discussion

This study presents an innovative BIM-integrated automated pipeline detection framework that leverages SAM for segmentation, DiffusionEdge for contour extraction, and Hu moment matching for structural comparison. Compared with conventional inspection methods that rely on manual assessments and on earlier edge extractors, whether handcrafted (e.g., Canny [13]) or learned (e.g., HED [14]), the proposed approach significantly enhances detection robustness, automation, and adaptability to complex construction environments. While prior deep learning-based methods such as PipeNet [9] have improved the automated reconstruction of as-built piping, they remain computationally expensive and data-dependent. This study advances the field by integrating BIM projection refinement to ensure precise spatial alignment between as-built and as-designed conditions. Applying SAM to MEP component detection improves segmentation accuracy, while DiffusionEdge outperforms traditional edge detection techniques, achieving a 3–5% F-score improvement. Furthermore, Hu moment-based shape matching refines the comparison process. Because Hu moments are invariant to translation, scale, and rotation, it mitigates the residual geometric inconsistencies that affected comparisons based on earlier edge detectors such as SE [32] and OEF [33].
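
For reference, the F-score cited above is the harmonic mean of precision and recall. Edge detection benchmarks typically compute it after matching predicted edge pixels to ground-truth edges within a small distance tolerance, and they report it at the optimal dataset scale (ODS) or optimal image scale (OIS). The minimal sketch below assumes that this matching has already produced true-positive, false-positive, and false-negative counts and only shows the final arithmetic. The function names are illustrative.

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (F-measure with beta = 1)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def f_score_from_counts(tp, fp, fn):
    """F-score from matched edge-pixel counts (true/false positives, false negatives)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return f_score(precision, recall)


print(round(f_score(0.80, 0.75), 4))  # 0.7742
```

Because it is a harmonic mean, F is pulled toward the smaller of precision and recall. A detector that finds many edges at the cost of many false ones is therefore not rewarded.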

Despite these advancements, the proposed framework has several limitations that warrant further research. The accuracy of BIM projection and SAM-based segmentation depends strongly on input image quality, making the system susceptible to occlusions, lighting variations, and camera misalignment. Future studies should explore multi-view fusion and 3D sensing techniques, such as structured-light scanning or SLAM-based spatial reconstruction, to mitigate these data quality constraints. Additionally, the computational overhead of deep learning-based segmentation and contour extraction remains a concern, particularly for large-scale projects requiring real-time processing. Lightweight models such as PiDiNet [17] offer a potential solution, but optimizing the trade-off between efficiency and accuracy remains a challenge. Another limitation is the narrow scope of application, as this study focuses primarily on pipeline monitoring. Expanding the framework to other MEP components, such as HVAC ducts and electrical conduits, and to non-MEP elements such as structural reinforcement would enhance its practical applicability. Furthermore, while the current system effectively identifies static deviations, dynamic construction monitoring requires time-series analysis and predictive modeling to track temporal changes and enable real-time anomaly detection and proactive intervention.

Future research should focus on three key areas to further enhance the system’s capabilities: multi-modal sensor fusion, adaptive calibration mechanisms, and real-time monitoring frameworks. Integrating heterogeneous sensor data (e.g., LiDAR, infrared thermography, and ultrasonic scanning) with deep learning could improve detection robustness and adaptability to diverse environments. Additionally, automated camera calibration using Structure-from-Motion (SfM) techniques would allow for dynamic parameter estimation, enhancing flexibility in varying construction conditions. Finally, transitioning from post-hoc analysis to real-time monitoring via reinforcement learning or adaptive filtering techniques could enable intelligent decision-making and proactive intervention. By advancing BIM-integrated computer vision methodologies, the construction industry can achieve higher levels of precision, automation, and intelligence in project management, ultimately setting new benchmarks for efficiency, quality assurance, and sustainability.

Our study focuses on detecting MEP components and verifying their consistency with BIMs; it does not identify or track individuals. Privacy protection techniques (e.g., blurring, masking, or encrypting sensitive image regions) are applied to support compliance with data privacy regulations. To further mitigate privacy risks, we primarily use synthetic data derived from BIMs or images from controlled environments (e.g., unoccupied construction sites) for training and testing. This approach reduces the risk of privacy breaches and enhances the model's generalization across construction environments.

6. Conclusions

The proposed BIM-based pipeline construction monitoring system represents a significant step forward in modern construction management practices. The system bridges the gap between digital BIMs and real-world construction processes by integrating cutting-edge technologies, such as the Segment Anything Model (SAM), with advanced comparative analysis methods. This integration enables precise, efficient, and automated construction progress monitoring, addressing traditional challenges such as inefficiency, human error, and the lack of real-time capabilities.

The experimental results validate our system’s effectiveness in detecting and addressing deviations from design specifications, significantly enhancing construction quality control. Furthermore, the system’s automation reduces the reliance on manual inspections, allowing construction teams to allocate resources more effectively while ensuring adherence to project timelines and quality standards. Despite its success, the system faces challenges in handling complex construction environments and maintaining robustness under varying input conditions. Future research should improve the system’s adaptability and scalability, incorporate advanced preprocessing techniques, and expand its application to more complex MEP components and larger-scale projects.

In conclusion, the proposed BIM-based pipeline construction monitoring system offers a transformative approach to enhancing construction quality control and management efficiency. By leveraging real-time data collection, deep learning algorithms, and automated comparative analysis, the system effectively minimizes errors, optimizes resource allocation, and ensures compliance with design specifications. The experimental validation confirms its capability to detect deviations with high accuracy, reinforcing its potential as a practical tool for modern construction practices. While challenges remain in adapting to complex environments and large-scale projects, ongoing advancements in data processing, model robustness, and system integration will further enhance its applicability. Future research should focus on refining its adaptability, extending its functionalities to a broader range of MEP components, and integrating with emerging technologies to establish a more intelligent and resilient construction monitoring framework.

Author Contributions

Conceptualization, D.Z.; methodology, X.Y.; software, X.Y.; validation, all authors; formal analysis, G.W.; investigation, G.W.; writing—original draft preparation, G.W.; writing—review and editing, all authors; supervision, D.Z. and X.Y.; project administration, D.Z.; funding acquisition, D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the 2022 Stable Support Plan Program for Shenzhen-based Universities (20220815150554000).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Son, H.; Kim, C. 3D structural component recognition and modeling method using color and 3D data for construction progress monitoring. Autom. Constr. 2010, 19, 844–854.

2. Kim, H.; Kim, K.; Kim, H. Vision-based object-centric safety assessment using fuzzy inference: Monitoring struck-by accidents with moving objects. J. Comput. Civ. Eng. 2016, 30, 04015075.

3. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

4. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015; Volume 28.

5. Kot, P.; Muradov, M.; Gkantou, M.; Kamaris, G.S.; Hashim, K.; Yeboah, D. Recent advancements in non-destructive testing techniques for structural health monitoring. Appl. Sci. 2021, 11, 2750.

6. Santos, R.; Costa, A.A.; Silvestre, J.D.; Pyl, L. Informetric analysis and review of literature on the role of BIM in sustainable construction. Autom. Constr. 2019, 103, 221–234.

7. Azhar, S. Building information modeling (BIM): Trends, benefits, risks, and challenges for the AEC industry. Leadersh. Manag. Eng. 2011, 11, 241–252.

8. Bastian, B.T.; Jaspreeth, N.; Ranjith, S.K.; Jiji, C.V. Visual inspection and characterization of external corrosion in pipelines using deep neural network. NDT E Int. 2019, 107, 102134.

9. Xie, Y.; Li, S.; Liu, T.; Cai, Y. As-built BIM reconstruction of piping systems using PipeNet. Autom. Constr. 2023, 147, 104735.

10. Huang, F.; Wang, N.; Fang, H.; Liu, H.; Pang, G. Research on 3D Defect Information Management of Drainage Pipeline Based on BIM. Buildings 2022, 12, 228.

11. Pan, Y.; Zhang, L. Integrating BIM and AI for smart construction management: Current status and future directions. Arch. Comput. Methods Eng. 2023, 30, 1081–1110.

12. Kittler, J. On the accuracy of the Sobel edge detector. Image Vis. Comput. 1983, 1, 37–42.

13. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

14. Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

15. Liu, Y.; Cheng, M.-M.; Hu, X.; Wang, K.; Bai, X. Richer convolutional features for edge detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3000–3009.

16. He, J.; Zhang, S.; Yang, M.; Shan, Y.; Huang, T. Bi-directional cascade network for perceptual edge detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3828–3837.

17. Su, Z.; Liu, W.; Yu, Z.; Hu, D.; Liao, Q.; Tian, Q.; Pietikäinen, M.; Liu, L. Pixel difference networks for efficient edge detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 5117–5127.

18. Zhou, C.; Huang, Y.; Pu, M.; Guan, Q.; Huang, L.; Ling, H. The treasure beneath multiple annotations: An uncertainty-aware edge detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 15507–15517.

19. Pu, M.; Huang, Y.; Liu, Y.; Guan, Q.; Ling, H. EDTER: Edge detection with transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 23–28 June 2022; pp. 1402–1412.

20. Deng, R.; Shen, C.; Liu, S.; Wang, H.; Liu, X. Learning to predict crisp boundaries. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 562–578.

21. Huan, L.; Xue, N.; Zheng, X.; He, W.; Gong, J.; Xia, G.-S. Unmixing convolutional features for crisp edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6602–6609.

22. Ye, Y.; Yi, R.; Gao, Z.; Cai, Z.; Xu, K. Delving into Crispness: Guided Label Refinement for Crisp Edge Detection. IEEE Trans. Image Process. 2023, 32, 4199–4211.

23. Nichol, A.; Dhariwal, P.; Ramesh, A.; Shyam, P.; Mishkin, P.; McGrew, B.; Sutskever, I.; Chen, M. GLIDE: Towards photorealistic image generation and editing with text-guided diffusion models. arXiv 2021, arXiv:2112.10741.

24. Avrahami, O.; Lischinski, D.; Fried, O. Blended diffusion for text-driven editing of natural images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 18208–18218.

25. Gu, S.; Chen, D.; Bao, J.; Wen, F.; Zhang, B.; Chen, D.; Yuan, L.; Guo, B. Vector quantized diffusion model for text-to-image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 10696–10706.

26. Austin, J.; Johnson, D.D.; Ho, J.; Tarlow, D.; Van Den Berg, R. Structured denoising diffusion models in discrete state-spaces. Adv. Neural Inf. Process. Syst. 2021, 34, 17981–17993.

27. Popov, V.; Vovk, I.; Gogoryan, V.; Sadekova, T.; Kudinov, M. Grad-TTS: A diffusion probabilistic model for text-to-speech. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8599–8608.

28. Brempong, E.A.; Kornblith, S.; Chen, T.; Parmar, N.; Minderer, M.; Norouzi, M. Denoising pretraining for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 4175–4186.

29. Wu, J.; Fu, R.; Fang, H.; Zhang, Y.; Yang, Y.; Xiong, H.; Liu, H.; Xu, Y. MedSegDiff: Medical image segmentation with diffusion probabilistic model. In Proceedings of the Medical Imaging with Deep Learning, Toronto, ON, Canada, 6–8 May 2024; pp. 1623–1639.

30. Chen, S.; Sun, P.; Song, Y.; Luo, P. DiffusionDet: Diffusion model for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 19830–19843.

31. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

32. Dollar, P.; Zitnick, C.L. Fast edge detection using structured forests. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1558–1570.

33. Hallman, S.; Fowlkes, C.C. Oriented edge forests for boundary detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1732–1740.

34. Hu, M.-K. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 1962, 8, 179–187.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).