Abstract

Water extraction from Synthetic Aperture Radar (SAR) images is crucial for water resource management and for maintaining the sustainability of ecosystems. Although great progress has been made, challenges remain: an insufficient ability to extract water edge details, an inability to detect small water bodies, and a weak ability to suppress background noise. To address these problems, we propose the Global Context Attention Feature Fusion Network (GCAFF-Net). It comprises an encoder module for hierarchical feature extraction and a decoder module for merging multi-scale features. The encoder uses ResNet-101 as the backbone network to generate features at four levels of resolution. In the middle-level feature fusion stage, the Attention Feature Fusion Module (AFFM) is introduced for multi-scale feature learning to improve fine water segmentation. In the high-level feature encoding stage, Global Context Atrous Spatial Pyramid Pooling (GCASPP) is constructed to adaptively integrate water information in SAR images from a global perspective, thereby enhancing the network’s ability to represent water boundaries. In the decoder module, an Attention Modulation Module (AMM) is introduced to rearrange the distribution of feature importance from a channel–spatial sequential perspective, so as to better extract the detailed features of water bodies. In the experiments, SAR images from the Sentinel-1 system are used, and three water areas with different features and scales are selected for independent testing. The Pixel Accuracy (PA) and Intersection over Union (IoU) values for water extraction are 95.24% and 91.63%, respectively. The results indicate that the network extracts more complete water edges and finer detail, improving the accuracy and generalization of water body extraction. Compared with several existing classical semantic segmentation models, GCAFF-Net achieves superior performance, and it can also be applied to typical target segmentation in SAR images.

1. Introduction

Synthetic aperture radar (SAR) images, which offer all-weather, cloud-penetrating observation, are of significant value for surface water detection. Water detection results provide timely information on changes in floods, water environments, and coastlines, and support environmental protection. They therefore supply vital information and guidance for national disaster prevention, mitigation, and rescue efforts, as well as for comprehensive development planning [1,2,3,4].

Remote sensing techniques for water body extraction can be broadly divided into two groups: classification-rule-based and deep-learning-based. Classification-rule-based methods rely on the distinctive spectral reflectance characteristics of water bodies in optical images. Water bodies are recognized and extracted with manually set classification criteria, for example water body indices [5], decision tree models [6], support vector machines [7], physical approaches [8,9], and object-oriented analysis strategies [10]. However, such methods depend on subjective judgment, which not only increases the workload but may also reduce extraction accuracy through subjective bias. Their accuracy and ability to retain detail are especially challenged by the diversity of sensor data and the complexity of surface features.

Deep-learning-based methods have demonstrated powerful automatic feature learning and recognition capabilities. Rather than relying on complex preset classification rules, they autonomously discover deep-level features of water bodies from massive data and continuously optimize the representation of these features through training, which gives them significant advantages in extraction accuracy and generalization. Deep learning uses convolutional neural networks to learn high-dimensional, multi-scale information such as the color, texture, size, and position of target objects, forming extraction models for different objects. Since Hinton's seminal work on deep learning [11] in 2006, a growing number of deep-learning-based water detection methods have emerged and become mainstream, automating water detection. Shelhamer et al. [12] proposed fully convolutional networks (FCNs), which achieve end-to-end semantic segmentation and significantly improve the accuracy of target segmentation. Ronneberger et al. [13] constructed U-Net for the semantic segmentation of medical images. It employs a symmetrical encoder–decoder architecture that preserves the spatial information of the images, and its skip connections transfer low-level features to the decoder, enhancing the detail of the segmentation. To address the vanishing or exploding gradients encountered when training deep neural networks, He et al. [14] designed the Residual Neural Network (ResNet), which lets information propagate more effectively through the network and thereby overcomes the training difficulties of traditional deep networks. This advance propelled further developments in deep learning. Chen et al. [15] incorporated depthwise separable convolutions into atrous spatial pyramid pooling (ASPP) and designed the DeepLabV3+ model to refine the boundaries of target objects in semantic segmentation. This effectively addressed issues such as resolution loss, inadequate feature extraction, and limited generalization that traditional convolutional neural networks face in semantic segmentation tasks. DeepLabV3+ is now widely regarded as a mature approach to semantic segmentation.

In water body extraction, numerous researchers have refined existing models to increase extraction accuracy. For instance, Luo et al. [16] introduced strip pooling into DeepLabV3+, using different strip kernels to better extract water bodies that are discretely distributed over long distances. Wang et al. [17] proposed a dual encoder structure that correctly extracts small lake water bodies while suppressing noise interference, improving target accuracy. Cui et al. [18] designed an adaptive multi-scale feature learning module to replace traditional serial convolution operations; it integrates the spectral, texture, and semantic features of land cover at different scales so that the network can selectively emphasize the features of weak sea–land boundaries. Tang et al. [19] designed a residual attention-based feature fusion module aimed at recovering fine-grained river details that may be lost in the initial dense skip connections. This module strengthened the integration of detailed river texture features with high-level semantic information, thereby minimizing pixel-level segmentation errors in medium-resolution remote sensing imagery. Wang et al. [20] combined transfer learning and deep learning to expand the network’s receptive field, which extracts more detailed information and makes the extracted rivers clearer and more coherent. Weng et al. [21] incorporated residual blocks into the encoder to alleviate network degradation, and used dilated convolutions to expand the receptive field and enhance feature extraction, enabling the network to capture more spatial information about lake water bodies. Qin et al. [22] proposed an effective unsupervised deep gradient network to generate higher-resolution lake regions from remote sensing images. Xie et al. [23] proposed an improved SegNet for aquaculture sea areas that adds a pyramid convolution module and a convolutional block attention module to capture more global image information, improving feature utilization and the extraction accuracy of aquaculture sea areas. Wang et al. [24] designed a framework for SAR image water extraction that employs a Deep Pyramid Pool module and a Channel Spatial Attention Module to capture multi-scale features, integrating high-level semantics with low-level details for precise detection. To tackle the edge serration and high data dependency of transformer-based models when extracting water bodies from SAR images, Zhao et al. [25] replaced the regular patch partition with superpixel segmentation and incorporated a normalized adjacency matrix among superpixels into a Multi-Layer Perceptron model. Yuan et al. [26] proposed a feature-fused encoder–decoder network that mines and fuses the backscatter and polarization features of SAR images, achieving a more comprehensive and accurate depiction of water streams. Zhou et al. [27] proposed a structure-aware CNN–Transformer network with a U-shaped design and an edge refinement module tailored to enhance water segmentation accuracy.

Currently, deep learning has achieved significant progress in water body extraction. Researchers have proposed various innovative methods. For instance, techniques such as the introduction of strip pooling, dual encoder structures, adaptive multi-scale feature learning modules, and others have been employed to enhance the model’s ability to capture water body features and improve the segmentation accuracy. Despite the improvements in the accuracy and efficiency of water body extraction achieved by the aforementioned methods, significant challenges still remain in enhancing the precision of water body extraction, particularly in addressing multi-scale features, blurred boundaries, and noise interference. For example, while the strip pooling proposed by Luo et al. [16] was effective in extracting water bodies with long-distance discrete distributions, it failed to provide sufficient detail when dealing with fine tributaries and complex edges. Zhou et al. [27] introduced Trans-SANet, characterized by a U-shaped structure, which enhanced the segmentation accuracy of fine water areas. However, when processing noisy regions, it may yield unstable predictions.

In summary, water detection still faces numerous challenges, including the neglect of water body boundaries, a weak ability to extract detailed information and the multi-scale features of water bodies, and poor suppression of complex background noise. To address these issues, this paper proposes the Global Context Attention Feature Fusion Network (GCAFF-Net) for water extraction. The main contributions of this paper are as follows:

- GCAFF-Net is proposed, which integrates global and local information, and can accurately refine the edge contour of water bodies, extract significant multi-scale features and suppress noise interference. It demonstrates outstanding performance in feature extraction and segmentation, significantly enhancing the ability to identify various types of water bodies from SAR imagery.

- The Attention Feature Fusion Module (AFFM) is constructed. It integrates high-level semantic features with low-level detail features, enriching image detail information, enhancing the model’s ability to learn water body details, and maintaining the network’s extraction ability across water bodies with different characteristics.

- A feature extraction module, namely Global Context Atrous Spatial Pyramid Pooling (GCASPP), is proposed to effectively capture contextual information from images across different receptive fields, ensuring the network’s ability to recognize both large and small water bodies. It simultaneously achieves the fine recognition of water body edges and a suppression effect on complex background noise.

The remainder of this paper is structured as follows: Section 2 elaborates on the specific details of the SAR images employed in this paper, outlines the overall model structure, and provides a detailed breakdown of the specific configurations of each design module. In Section 3, we conduct water detection experiments using a dataset constructed based on Sentinel-1 SAR images and compare the experimental results with those of several advanced semantic segmentation networks for analysis. In Section 4, we systematically summarize the work of this study and outline the directions and plans for future research.

2. Materials and Methods

2.1. Datasets

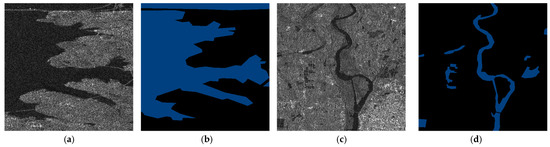

The SAR images used in this study were acquired by Sentinel-1 in Interferometric Wide swath (IW) mode as single-polarization intensity images, with geocoding, registration, and denoising applied. The images have a resolution of 5 m × 20 m. The study area contains water bodies such as lakes, rivers, streams, and ponds, as well as landforms including plains, mountains, forests, and shrublands, providing abundant data for research on water body extraction. Given the scarcity of publicly accessible high-resolution SAR datasets, a custom dataset in PASCAL VOC 2012 format was created for this research. After validation by SAR experts, water areas were annotated as water bodies and all other regions as background using Labelme software (version 5.2.0). A sliding window technique was first used to partition the large SAR scenes into 512 × 512 pixel tiles. To augment the dataset, strategies such as horizontal flipping and 90-degree clockwise rotation were applied to increase the number and diversity of samples, yielding a total of 11,020 samples. Figure 1 shows two SAR image samples from diverse scenes and their ground truth (GT). The dataset was randomly split into training and testing sets at an 8:2 ratio, comprising 8816 training samples and 2204 testing samples. Each SAR image in the dataset is paired with a binary label, with blue denoting water and black denoting background. In addition, three SAR images of 2000 × 2000 pixels were reserved for independent testing and excluded from the dataset.
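The tiling, augmentation, and 8:2 split described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' preprocessing code: the function names are hypothetical, the windows are assumed non-overlapping with partial border tiles discarded (the stride and border handling are not specified), and "horizontal" augmentation is read as a left-right flip.

```python
import random

import numpy as np


def sliding_window_tiles(image, tile=512, stride=512):
    """Cut an (H, W) or (H, W, C) array into tile x tile patches.

    Assumption: non-overlapping windows (stride == tile); partial tiles at
    the right and bottom borders are discarded.
    """
    h, w = image.shape[:2]
    return [
        image[top:top + tile, left:left + tile]
        for top in range(0, h - tile + 1, stride)
        for left in range(0, w - tile + 1, stride)
    ]


def augment(image, label):
    """Original pair plus a left-right flip and a 90-degree clockwise rotation.

    np.rot90 with k=-1 rotates clockwise; the label gets the same transform.
    """
    return [
        (image, label),
        (np.fliplr(image), np.fliplr(label)),
        (np.rot90(image, k=-1), np.rot90(label, k=-1)),
    ]


def split_train_test(samples, train_ratio=0.8, seed=0):
    """Shuffle and split samples into training and testing lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(train_ratio * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


scene = np.zeros((1100, 1600), dtype=np.float32)   # stand-in SAR scene
print(len(sliding_window_tiles(scene)))           # 6 (2 rows x 3 columns)
train, test = split_train_test(range(8816 + 2204))
print(len(train), len(test))                       # 8816 2204
```

The same windows must be applied to the label image so that each tile stays aligned with its ground truth.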

Figure 1.

Samples from the dataset. (a,b) SAR images; (c,d) ground truth.

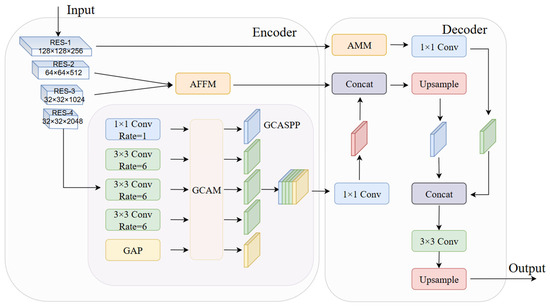

2.2. The Architecture of GCAFF-Net

In this article, we propose the Global Context Attention Feature Fusion Network (GCAFF-Net). The GCAFF-Net framework consists of an encoder module for hierarchical feature extraction and a decoder module for integrating multi-scale features. The overall structure is illustrated in Figure 2. The encoder consists of three parts: the ResNet-101 backbone, GCASPP, and AFFM. ResNet-101 performs the initial feature extraction from the image. The GCASPP module extracts multi-scale features from the high-level outputs of the backbone, whereas AFFM enhances the expression of spatial detail using middle-level features, also taken from the backbone. The decoder takes three inputs: the multi-scale features produced by GCASPP, the intermediate fusion features produced by AFFM, and the low-level features taken directly from the backbone. These features are fused and processed to generate the final water segmentation result.

Figure 2.

The architecture of the global context attention feature fused network.

2.3. The Encoder

We use ResNet-101 with atrous convolution as the backbone network for hierarchical feature extraction. Because ResNet learns residual mappings that are easy to optimize, it is well suited for constructing semantic segmentation networks. Cai et al. [28] and Chen et al. [2] used ResNet-101 as the backbone of frameworks that achieved significant results in water extraction tasks. Water bodies in remote sensing images vary in size, color, and texture and have no specific topological structure. Therefore, water body extraction requires fully integrating high-, medium-, and low-level features to improve segmentation performance. In this article, we first downscale the input image through a convolutional layer followed by a max pooling layer, and then extract semantic features with four residual blocks. In addition, res-4 augments its features through concatenated atrous convolutions with varying rates, deepening the network and enriching the feature maps. The feature maps output by res-2 and res-3 are the inputs to AFFM, whereas the feature map from res-4 is the input to GCASPP.

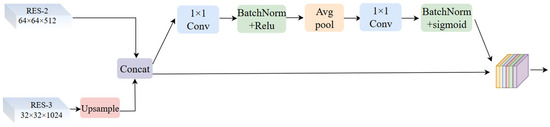

Integrating multi-scale features is a pivotal way to improve water segmentation performance, so high-, medium-, and low-level features must be integrated comprehensively. This paper proposes AFFM to fuse semantically strong high-level features with low-level detail features. This improves feature expression, enhances the continuity of pixels during subsequent upsampling, adds spatial and semantic information to the features, and thus improves object boundary segmentation. The structure of AFFM is illustrated in Figure 3. First, the output of ResNet’s third layer, res-3, is upsampled by a factor of 2, and the initial fusion feature map is obtained by concatenating res-2 with the upsampled res-3. A 1 × 1 convolution is then applied to the initial fusion feature map to reduce its dimensionality and parameter count, followed by BatchNorm and ReLU to prevent silent neurons and the vanishing gradient problem. Next, average pooling is performed over the spatial dimensions, and inter-channel information is exchanged and learned through a 1 × 1 convolution. A sigmoid activation function then generates the attention weights. The initial fusion feature map is multiplied by these attention weights, achieving refined selection of the fused features. Finally, a 1 × 1 convolution reduces the number of fused feature channels to 256, matching the channel count of the high-level features processed by GCASPP. Through AFFM, the input features undergo multi-scale fusion, which helps the network capture fine image details and improves the model’s analytical and discriminative abilities.
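The AFFM steps above can be traced in a minimal NumPy sketch, intended only to make the order of operations and the tensor shapes concrete. The names `affm`, `conv1x1`, and the `params` keys are hypothetical. Assumptions not stated in the text: nearest-neighbour upsampling, BatchNorm in inference mode with placeholder running statistics, and a second 1 × 1 convolution that maps back to the concatenated channel count so that its sigmoid weights can multiply the initial fusion map.

```python
import numpy as np


def conv1x1(x, weight):
    """1 x 1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def batchnorm_eval(x, mean, var, gamma, beta, eps=1e-5):
    """Inference-mode BatchNorm using per-channel running statistics."""
    scale = gamma / np.sqrt(var + eps)
    return (x - mean[:, None, None]) * scale[:, None, None] + beta[:, None, None]


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def affm(res2, res3, params):
    """Fuse res-2 (C2, 2h, 2w) with res-3 (C3, h, w); returns (256, 2h, 2w)."""
    # Upsample res-3 by 2 (nearest neighbour; assumption) and concatenate with res-2.
    up3 = res3.repeat(2, axis=1).repeat(2, axis=2)
    fused = np.concatenate([res2, up3], axis=0)              # initial fusion map
    # 1 x 1 conv for dimensionality reduction, then BatchNorm and ReLU.
    mid = conv1x1(fused, params["w_reduce"])
    n = mid.shape[0]
    mid = batchnorm_eval(mid, np.zeros(n), np.ones(n), np.ones(n), np.zeros(n))
    mid = np.maximum(mid, 0.0)
    # Spatial average pooling, 1 x 1 conv across channels, sigmoid -> weights.
    pooled = mid.mean(axis=(1, 2), keepdims=True)            # (C_mid, 1, 1)
    weights = sigmoid(conv1x1(pooled, params["w_attn"]))     # (C_fused, 1, 1)
    # Reweight the initial fusion map and project to 256 channels.
    return conv1x1(fused * weights, params["w_out"])


rng = np.random.default_rng(0)
res2 = rng.standard_normal((8, 16, 16))
res3 = rng.standard_normal((16, 8, 8))
params = {
    "w_reduce": rng.standard_normal((12, 24)),   # C_fused = 8 + 16 = 24 -> 12
    "w_attn": rng.standard_normal((24, 12)),     # back to 24 channel weights
    "w_out": rng.standard_normal((256, 24)),
}
print(affm(res2, res3, params).shape)            # (256, 16, 16)
```

Channel counts here are deliberately tiny; real ResNet-101 features are much wider, but the data flow is the same.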

Figure 3.

The structure of the attention feature fusion module.

In convolutional neural networks, the local receptive field of convolution means that pixels with the same label may differ significantly in feature space, leading to intra-class inconsistency and reduced detection accuracy. In the ASPP module, the spatial semantics obtained by sampling pixels through convolutions with different atrous rates mix pixels from different targets, which limits the effectiveness of label prediction. In this article, we present the GCASPP module, which comprises five parallel branches, as illustrated in Figure 2.

GCASPP comprises four parallel attention branches, each consisting of a convolution with a particular kernel size and atrous rate followed by a Global Context Attention Module (GCAM), along with a fifth branch dedicated to global average pooling. The input feature map of GCASPP is rich in semantic information. The global average pooling branch down-samples the feature maps to alleviate overfitting in the network. The four convolutions with different kernel sizes and atrous rates capture contextual information from different receptive fields. Following this multi-scale extraction layer, GCAM models the dependencies between pixels, making the extraction of contextual information more flexible. This design therefore strengthens multi-scale feature extraction and improves the precision of water body detection.
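To make the "different receptive fields" of the atrous branches concrete, the sketch below computes the span covered by a dilated kernel, k + (k - 1)(r - 1), and implements a "same"-padded 3 × 3 atrous convolution and the global-average-pooling branch in NumPy. The function names are hypothetical, and the atrous rates 6, 12, and 18 used below are borrowed from DeepLabV3+ purely for illustration; this section does not state GCASPP's rates. GCAM, which follows each convolution branch in GCASPP, is omitted here.

```python
import numpy as np


def effective_kernel(k, rate):
    """Span (in pixels) covered by a k x k kernel with dilation `rate`."""
    return k + (k - 1) * (rate - 1)


def atrous_conv3x3(x, weight, rate):
    """'Same'-padded 3 x 3 atrous convolution (cross-correlation, no bias).

    x: (C_in, H, W); weight: (C_out, C_in, 3, 3). Padding by `rate` on each
    side keeps the output at H x W.
    """
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (rate, rate), (rate, rate)))
    out = np.zeros((weight.shape[0], h, w))
    for ky in range(3):
        for kx in range(3):
            # Tap (ky, kx) reads the input shifted by ((ky - 1) * rate, (kx - 1) * rate).
            patch = xp[:, ky * rate:ky * rate + h, kx * rate:kx * rate + w]
            out += np.einsum("oc,chw->ohw", weight[:, :, ky, kx], patch)
    return out


def image_pooling_branch(x):
    """Global average pooling, broadcast back to the input's spatial size."""
    pooled = x.mean(axis=(1, 2), keepdims=True)
    return np.broadcast_to(pooled, x.shape).copy()


# Hypothetical rates (taken from DeepLabV3+), for illustration only.
for r in (6, 12, 18):
    print(r, effective_kernel(3, r))   # 6 13, then 12 25, then 18 37
```

A 3 × 3 kernel thus costs the same nine weights at every rate while its span grows from 13 to 37 pixels, which is why parallel branches with different rates see different context.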

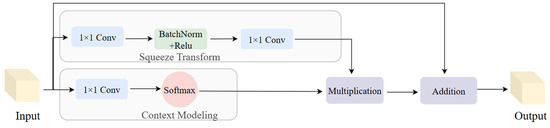

Attention mechanisms are widely used in deep learning tasks such as semantic segmentation [28] and object detection [29]. Chen et al. [30] combined channel attention with spatial attention to detect airport runways. However, the dual attention introduces extra parameters and significantly prolongs network training. Tan et al. [31] employed an efficient Squeeze-and-Excitation attention mechanism to extract airport runways, reducing both training and evaluation time. The non-local network proposed by Wang et al. [32] captures long-range dependencies and makes full use of the global information in images, but it is computationally inefficient.

GCAM is a lightweight improvement on the non-local network. The input feature map is denoted as $X \in \mathbb{R}^{C \times H \times W}$, where $C$ is the number of channels and $H$ and $W$ are the height and width of the features, respectively. GCAM maps $X$ to an output $Z \in \mathbb{R}^{C \times H \times W}$ of the same size. The detailed architecture of GCAM is illustrated in Figure 4.

Figure 4.

The structure of the global context attention module.

To keep GCAM lightweight and reduce computation, the squeeze transform module uses a convolution to compress the channel dimension from $C$ to $C/r$, where $r$ is the reduction ratio. BatchNorm is then applied to ease optimization and to act as a regularizer that aids generalization. After a ReLU layer, a second convolution is performed. The definition is:

$$Y_i = W_{v2}\,\delta\big(\mathrm{BN}(W_{v1} X_i)\big)$$

where $X$ and $Y$ are the input feature map and the output of the squeeze transform module, respectively, and $i$ is the index of query positions. $W_{v1}$ and $W_{v2}$ denote the two convolution operations, and $\mathrm{BN}(\cdot)$ and $\delta(\cdot)$ denote Batch Normalization and the Rectified Linear Unit activation function, respectively. In the context modeling module, an attention feature map is generated with a SoftMax activation function:

$$\alpha_j = \frac{\exp(W_k X_j)}{\sum_{m=1}^{N_p} \exp(W_k X_m)}$$

where $\alpha$ denotes the attention feature map, $m$ enumerates all $N_p = H \times W$ positions of the feature map $X$, and $W_k$ denotes a convolution operation. The feature map $Y$ is then multiplied by the attention feature map $\alpha$ to perform attention pooling, and the resulting global context feature is aggregated into the feature map at each location by addition:

$$Z_i = X_i + \sum_{j=1}^{N_p} \alpha_j Y_j$$

where $X$, $Y$, and $Z$ are the input feature map, the output of the squeeze transform module, and the output feature map of GCAM, respectively, and $\alpha$ is the attention feature map. GCAM models the relationships between objects within a global view, using an attention mechanism to establish correlations among features. It therefore adaptively integrates similar features across any scale from a global perspective, explicitly enhancing object information.
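The GCAM computation described above (a per-position squeeze transform, a softmax attention map over all positions, attention pooling, and broadcast addition) can be sketched in NumPy as follows. This is a hedged illustration rather than the authors' code: `gcam` and its argument names are hypothetical, the attention logits are assumed to be computed from the input $X$, BatchNorm is modelled in inference mode with placeholder statistics (mean 0, variance 1, no affine parameters), and the reduction ratio r = 4 in the usage lines is arbitrary.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())          # subtract the max for numerical stability
    return e / e.sum()


def gcam(x, w_v1, w_v2, w_k, eps=1e-5):
    """Global context attention on x of shape (C, H, W).

    w_v1: (C // r, C) squeeze convolution, w_v2: (C, C // r) second
    convolution, w_k: (C,) convolution producing one logit per position.
    Returns the output Z (same shape as x) and the attention map alpha.
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)                     # (C, Np), Np = H * W
    # Squeeze transform: conv -> BatchNorm (placeholder stats) -> ReLU -> conv.
    t = (w_v1 @ flat) / np.sqrt(1.0 + eps)
    y = w_v2 @ np.maximum(t, 0.0)                  # (C, Np)
    # Context modeling: softmax over all Np positions.
    alpha = softmax(w_k @ flat)                    # (Np,)
    # Attention pooling of Y, then the same context vector is added everywhere.
    context = y @ alpha                            # (C,)
    return x + context[:, None, None], alpha


rng = np.random.default_rng(1)
x = rng.standard_normal((16, 5, 7))
z, alpha = gcam(x, rng.standard_normal((4, 16)), rng.standard_normal((16, 4)),
                rng.standard_normal(16))
print(z.shape, round(float(alpha.sum()), 6))       # (16, 5, 7) 1.0
```

Because the pooled context is a single C-dimensional vector, the cost of the attention step grows linearly with H × W, in contrast to the pairwise affinity matrix of a full non-local block.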

2.4. The Decoder

The decoder decodes the features of three levels step by step; its architecture is illustrated in Figure 2. Its input comprises three components: the high-level multi-scale features output by GCASPP, the low-level features produced by the backbone network, and the intermediate fusion features generated by AFFM. We apply the Attention Modulation Module (AMM) [33] to the low-level features to rearrange the distribution of feature importance from a channel–spatial sequential perspective, so as to better extract the detailed features of water bodies. AMM consists of two parts: channel attention modulation and spatial attention modulation. The input feature map first passes through the channel attention modulation stage, which uses average pooling and convolutional layers to explicitly model the interdependence between channels. Then, to capture internal relationships in the spatial dimension more deeply, the feature map is further modulated by spatial attention, applied in sequence after the channel stage. In addition, AMM introduces a Gaussian modulation function that enhances secondary features while suppressing the most and least sensitive features. This mechanism uses sub-important regions to directly extract important information that is easily overlooked, which is crucial for water segmentation. In short, AMM improves segmentation performance by integrating attention modulation into both the channel and spatial dimensions and by optimizing feature processing with Gaussian modulation functions.
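The exact formulation of AMM is given in [33] and is not reproduced in this section, so the following NumPy sketch is only a hypothetical illustration of the idea of Gaussian modulation. Each attention weight is passed through a Gaussian centred on the mean weight, with the standard deviation as its width (both choices are assumptions), so mid-ranked "secondary" channels and positions receive the largest factor while the most and least responsive ones are damped. The channel stage follows the text (average pooling, a convolution, sigmoid); the spatial stage uses a simple channel-average map, which is a simplification. The names `amm` and `gaussian_modulate` are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gaussian_modulate(weights, eps=1e-6):
    """Hypothetical Gaussian modulation centred on the mean attention weight.

    Entries near the mean (secondary features) get a factor close to 1;
    entries far above or below it (most/least sensitive) are damped.
    """
    mu = weights.mean()
    sigma = weights.std() + eps
    return np.exp(-((weights - mu) ** 2) / (2.0 * sigma ** 2))


def amm(x, w_channel):
    """Channel-then-spatial attention modulation on x of shape (C, H, W).

    w_channel: (C, C) weights of the 1 x 1 convolution in the channel stage.
    """
    # Channel stage: average pooling -> 1 x 1 conv -> sigmoid -> modulation.
    ch_w = sigmoid(w_channel @ x.mean(axis=(1, 2)))      # (C,)
    x = x * gaussian_modulate(ch_w)[:, None, None]
    # Spatial stage (simplified): channel-average map -> sigmoid -> modulation.
    sp_w = sigmoid(x.mean(axis=0))                        # (H, W)
    return x * gaussian_modulate(sp_w)[None, :, :]
```

For attention weights 0.1, 0.5, and 0.9, the middle entry keeps a factor of 1 while both extremes are scaled down equally, which is the "enhance secondary, suppress extremes" behaviour the text attributes to AMM.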

The decoder first concatenates the intermediate fusion features and the multi-scale high-level features output by the encoder and upsamples them by a factor of 2 to obtain the fused features. A 1 × 1 convolution is then applied to the low-level features output by AMM to reduce redundant channels, and the result is concatenated with the fused features. After fusion, a 3 × 3 convolution refines the features, and the final water extraction result is obtained by 4× upsampling with bilinear interpolation.
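The decoder data flow above can be sketched in NumPy to show how the three inputs meet. This is a hedged sketch, not the published implementation: the function and parameter names are hypothetical, the GCASPP and AFFM outputs are assumed to already share a spatial size, the AMM output is taken as a given input rather than recomputed, the head predicts two classes (water and background), and the bilinear resize uses half-pixel centres with clamped edges, intended to resemble PyTorch's `interpolate` with `align_corners=False`.

```python
import numpy as np


def conv1x1(x, weight):
    """1 x 1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def conv3x3(x, weight):
    """'Same'-padded 3 x 3 convolution; weight has shape (C_out, C_in, 3, 3)."""
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w))
    for ky in range(3):
        for kx in range(3):
            out += np.einsum("oc,chw->ohw", weight[:, :, ky, kx],
                             xp[:, ky:ky + h, kx:kx + w])
    return out


def bilinear_upsample(x, factor):
    """Bilinear resize by an integer factor (half-pixel centres, edges clamped)."""
    _, h, w = x.shape

    def coords(n_in):
        pos = (np.arange(n_in * factor) + 0.5) / factor - 0.5
        pos = np.clip(pos, 0, n_in - 1)
        i0 = np.floor(pos).astype(int)
        i1 = np.minimum(i0 + 1, n_in - 1)
        return i0, i1, pos - i0

    y0, y1, wy = coords(h)
    x0, x1, wx = coords(w)
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bot = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy)[None, :, None] + bot * wy[None, :, None]


def decoder(affm_feat, gcaspp_feat, amm_low, params):
    """Return per-class logits at 8x the resolution of the high-level inputs."""
    high = np.concatenate([affm_feat, gcaspp_feat], axis=0)
    high = bilinear_upsample(high, 2)                  # fused features
    low = conv1x1(amm_low, params["w_low"])            # trim low-level channels
    fused = np.concatenate([high, low], axis=0)
    logits = conv3x3(fused, params["w_refine"])        # 3 x 3 refinement
    return bilinear_upsample(logits, 4)


rng = np.random.default_rng(3)
params = {"w_low": rng.standard_normal((3, 6)),
          "w_refine": rng.standard_normal((2, 11, 3, 3))}
logits = decoder(rng.standard_normal((4, 8, 8)), rng.standard_normal((4, 8, 8)),
                 rng.standard_normal((6, 16, 16)), params)
water_mask = logits.argmax(axis=0)                     # (64, 64) label map
```

The low-level input must be at twice the resolution of the high-level inputs so that the second concatenation lines up after the 2× upsampling.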

2.5. Evaluation Metrics

To quantitatively assess the network model proposed in this study, four evaluation metrics are employed: Pixel Accuracy (PA), Intersection over Union (IoU), Recall, and F1-Score. PA measures the precision of water body extraction, that is, the proportion of pixels predicted as water that are actually water. IoU, the standard figure of merit for semantic segmentation, measures the overlap between the predicted and actual water regions. Recall measures the completeness of water body extraction, indicating the proportion of actual water pixels that are correctly identified. The F1-Score is the harmonic mean of PA and Recall, giving a balanced measure of segmentation performance. Higher values of these metrics indicate better model performance. They are calculated as follows:

$$\mathrm{PA} = \frac{TP}{TP + FP}, \qquad \mathrm{IoU} = \frac{TP}{TP + FP + FN},$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{F1} = \frac{2 \times \mathrm{PA} \times \mathrm{Recall}}{\mathrm{PA} + \mathrm{Recall}},$$

where $TP$ is the number of water pixels correctly classified as water, $FP$ is the number of background pixels incorrectly classified as water, and $FN$ is the number of water pixels incorrectly classified as background.
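A small Python sketch of the four metrics follows, computed from binary masks with 1 = water. It assumes PA is the precision of the water class, TP/(TP + FP); that reading is consistent with the DeepLabV3+ values reported for Scene I, since a PA of 83.14% and a Recall of 97.96% imply, through 1/IoU = 1/PA + 1/Recall - 1, an IoU of about 81.73%, matching the reported figure. The function names are hypothetical.

```python
import numpy as np


def confusion_counts(pred, gt):
    """TP, FP, FN for binary masks (1 = water, 0 = background)."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))      # water predicted as water
    fp = int(np.sum(pred & ~gt))     # background predicted as water
    fn = int(np.sum(~pred & gt))     # water predicted as background
    return tp, fp, fn


def water_metrics(tp, fp, fn):
    """PA (precision of the water class), IoU, Recall and F1.

    Assumes tp + fp > 0 and tp + fn > 0.
    """
    pa = tp / (tp + fp)
    recall = tp / (tp + fn)
    iou = tp / (tp + fp + fn)
    f1 = 2 * pa * recall / (pa + recall)
    return {"PA": pa, "IoU": iou, "Recall": recall, "F1": f1}


def iou_from_pa_recall(pa, recall):
    """IoU implied by PA and Recall, from 1/IoU = 1/PA + 1/Recall - 1."""
    return pa * recall / (pa + recall - pa * recall)


tp, fp, fn = confusion_counts([1, 1, 0, 0], [1, 0, 1, 0])
print(tp, fp, fn)                                      # 1 1 1
print(round(iou_from_pa_recall(0.8314, 0.9796), 4))   # 0.8173
```

The same counts also tie F1 to IoU: F1 = 2 × IoU / (1 + IoU), so an IoU of 92.25% corresponds to an F1-Score of about 95.97%.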

3. Results

3.1. Training Parameter Configurations

The experiments were run with Python 3.8, the PyTorch 1.7.1 deep learning framework, and CUDA 11.4 on a single NVIDIA RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA). ResNet-101 is used as the backbone network, with a learning rate of 0.005 and a weight decay of 0.0005. The batch size is 8, and the model is trained for 100 epochs. After each epoch, the network records the loss and runs validation; once all 100 epochs are complete, the weights that yield the best validation result (minimal loss) are retained as the optimal model.
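The reported hyperparameters can be collected in a small configuration object, sketched below in plain Python. The field names and `best_epoch` helper are hypothetical, the optimizer is not specified in this section and is therefore left out, and the step counts assume the last partial batch (if any) is kept. With 8816 training samples and a batch size of 8, one epoch is exactly 1102 steps.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters reported in Section 3.1 (field names are hypothetical)."""
    backbone: str = "ResNet-101"
    learning_rate: float = 0.005
    weight_decay: float = 0.0005
    batch_size: int = 8
    epochs: int = 100
    train_samples: int = 8816          # size of the training split

    @property
    def steps_per_epoch(self) -> int:
        # A final partial batch, if any, is counted as one step.
        return math.ceil(self.train_samples / self.batch_size)

    @property
    def total_steps(self) -> int:
        return self.steps_per_epoch * self.epochs


def best_epoch(val_losses):
    """Index of the epoch with the lowest validation loss (earliest on ties)."""
    return min(range(len(val_losses)), key=lambda i: val_losses[i])


cfg = TrainConfig()
print(cfg.steps_per_epoch, cfg.total_steps)   # 1102 110200
```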

3.2. Comparison Experiments

To verify the performance of the proposed water body recognition method, we used it together with DeepLabV3+ [15], MF2AM [28], and U-Net [13] to extract water bodies from the test images. DeepLabV3+ is a classic semantic segmentation architecture that captures rich multi-scale contextual information through atrous convolution and the Atrous Spatial Pyramid Pooling module. It adopts an encoder–decoder structure and has performed well in water extraction tasks. MF2AM is an improvement on DeepLabV3+ for water extraction from SAR imagery; its Semantic Embedding Branch and Feature Aggregation Module enhance the learning of semantic and edge features, giving it superior performance in detecting water in SAR images. U-Net is known for its symmetric encoder–decoder architecture and skip connections, which efficiently capture contextual information while preserving image details; it is widely used in water extraction and analysis. Each test image is 2000 × 2000 pixels. In the result maps, blue regions are correctly detected water bodies, green regions are missed detections, and red regions are false detections. Scene I contains a large-scale, lake-dominated water area and some small water areas with blurred water boundaries; Scene II contains a large-scale, river-dominated water area and tributaries with multi-scale features; and Scene III contains slender tributaries and some small water areas with noise interference against a complex background. The diversity of these three scene types allows the generalization ability of the model to be effectively verified.
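The colour convention used in the result maps can be reproduced with a short NumPy sketch that compares a binary prediction against the ground truth. The function and constant names are hypothetical, and leaving correctly rejected background black is an assumption.

```python
import numpy as np

# Colour convention from the result figures (RGB); background stays black.
HIT = (0, 0, 255)          # blue: water correctly detected
MISS = (0, 255, 0)         # green: water missed
FALSE_ALARM = (255, 0, 0)  # red: background detected as water


def error_map(pred, gt):
    """Colour-code a binary prediction against ground truth (1 = water)."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    rgb = np.zeros(pred.shape + (3,), dtype=np.uint8)
    rgb[pred & gt] = HIT
    rgb[~pred & gt] = MISS
    rgb[pred & ~gt] = FALSE_ALARM
    return rgb
```

The three masks are disjoint, so the order of the assignments does not matter, and their pixel counts are exactly the TP, FN, and FP used by the evaluation metrics.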

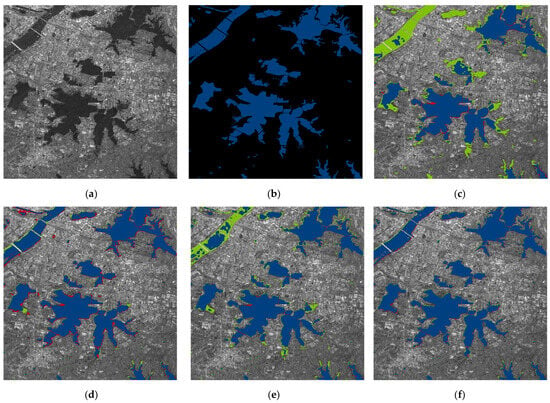

3.2.1. Scene I

In Scene I, the water body edge is uneven, with a rough and varied contour, which makes it difficult to delineate the water body accurately. As the detection results in Figure 5 show, all methods detect the large water body essentially correctly, but false alarms and missed detections occur in the small water bodies and along the water edges. GCAFF-Net extracts water boundaries more clearly than DeepLabV3+, MF2AM, and U-Net. Because the water in Scene I has small, irregular boundaries, DeepLabV3+ and U-Net fail to extract the small boundary areas at the water's edge, producing obvious and extensive missed detections, though relatively few false alarms. MF2AM has fewer missed detections but more false alarms along the water edges. GCAFF-Net significantly reduces missed detections compared with DeepLabV3+ and U-Net, and has far fewer false alarms than MF2AM, achieving very good detection results. According to Table 1, the detection accuracy of GCAFF-Net is much higher than that of the other networks. The accuracy of U-Net is only 67.25%, with a large number of missed detections. Although DeepLabV3+ achieves a recall of 97.96%, indicating that it recognizes most of the water body area, its PA is only 83.14% and its IoU only 81.73%. These relatively low values indicate that DeepLabV3+ segments edges inaccurately and misidentifies regions in water scenes with irregular edges and an uneven distribution. The detection accuracy of GCAFF-Net reaches 95.24%, a significant advantage over the other networks. GCAFF-Net strongly expresses the semantic features of water bodies, refining their edge contours and extracting boundary information more accurately, which gives it an obvious advantage in boundary pixel classification.

Figure 5.

The detection results for water bodies in Scene I using various networks. (a) The first SAR image for independent testing. (b) The ground truth. (c) The extracted water result for U-Net; (d) for MF2AM; (e) for DeepLabV3+; and (f) for GCAFF-Net. The blue color indicates correct detections of water, the green color highlights missed detections, and the red color marks false alarms.
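The colour legend used in the detection figures can be reproduced from a binary prediction and its ground truth with a per-pixel rule. The sketch below assumes 1 = water and 0 = background, and paints correctly rejected background black; the published figures may use a different backdrop, and the function name and RGB values are illustrative assumptions.

```python
# Legend from the figure captions: blue = correct water, green = missed
# detection, red = false alarm. Black for true background is an assumption.
BLUE, GREEN, RED, BLACK = (0, 0, 255), (0, 255, 0), (255, 0, 0), (0, 0, 0)


def error_map(pred, truth):
    """Return a nested list of RGB tuples colour-coding a binary water prediction."""
    out = []
    for pred_row, truth_row in zip(pred, truth):
        row = []
        for p, t in zip(pred_row, truth_row):
            if p and t:
                row.append(BLUE)   # water correctly detected
            elif t:
                row.append(GREEN)  # water the network missed
            elif p:
                row.append(RED)    # background labelled as water
            else:
                row.append(BLACK)  # background correctly rejected
        out.append(row)
    return out


print(error_map([[1, 0], [1, 0]], [[1, 1], [0, 0]]))
```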

Table 1.

Comparison of water detection results among various networks in Scene I.

To demonstrate the network’s ability to extract detailed water edges and small pond water bodies, Figure 6 shows zoomed-in views of the Scene I detection results. U-Net and DeepLabV3+ show substantial missed detections along the water edges, and MF2AM generates numerous false alarms there, whereas GCAFF-Net produces the fewest false alarms and missed detections along the edges. GCAFF-Net also detects small water bodies that the other models fail to identify. Overall, GCAFF-Net extracts water body edges most completely and preserves the most water detail. As Table 2 shows, GCAFF-Net attains an IoU of 92.25%, whereas the IoU values of the other networks are all below 90%. Notably, although MF2AM has the highest PA, its IoU is 3.57 percentage points lower than that of GCAFF-Net, indicating that MF2AM falls short in capturing detail. GCAFF-Net also achieves the highest F1-Score, 95.97%, reflecting a well-balanced trade-off between Precision and Recall. In summary, GCAFF-Net performs best at extracting detailed water edges and small pond water bodies.
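When F1-Score and IoU are computed for the same class from the same pooled pixel counts, they are tied by a fixed identity: with F1 = 2TP/(2TP + FP + FN) and IoU = TP/(TP + FP + FN), it follows that IoU = F1/(2 − F1). The short check below applies that identity to GCAFF-Net's reported values; it assumes both metrics were computed from pooled counts, which the text does not state explicitly.

```python
def iou_from_f1(f1):
    """IoU implied by an F1-score for the same class and the same pixel counts.

    F1 = 2TP / (2TP + FP + FN) and IoU = TP / (TP + FP + FN) give
    IoU = F1 / (2 - F1); the mapping is monotonic, so a higher F1 always
    corresponds to a higher IoU.
    """
    if not 0.0 <= f1 <= 1.0:
        raise ValueError("F1 must lie in [0, 1]")
    return f1 / (2.0 - f1)


def f1_from_iou(iou):
    """Inverse mapping: F1 = 2 * IoU / (1 + IoU)."""
    if not 0.0 <= iou <= 1.0:
        raise ValueError("IoU must lie in [0, 1]")
    return 2.0 * iou / (1.0 + iou)


# GCAFF-Net F1-Scores quoted in the text: zoomed-in Scene I and Scene III.
for label, f1 in (("Scene I (zoomed)", 0.9597), ("Scene III", 0.9017)):
    print(f"{label}: implied IoU = {100 * iou_from_f1(f1):.2f} %")
# prints 92.25 % and 82.10 %, matching the reported IoU values
```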

Figure 6.

The detection results for water in the zoomed-in views of Scene I by various networks. (a) Independent testing SAR image. (b) Ground truth. (c) The extracted water result for U-Net; (d) for MF2AM; (e) for DeepLabV3+; and (f) for GCAFF-Net. The blue color indicates correct detections of water, the green color highlights missed detections, the red color marks false alarms, and the dashed blue boxes highlight detailed differences.

Table 2.

Comparison of water detection results among various networks in the zoomed-in views of Scene I.

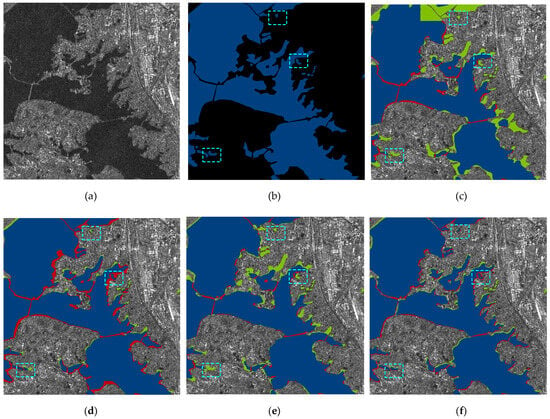

3.2.2. Scene II

As Figure 7 illustrates, Scene II contains an extensive water region together with several small water areas and scattered water bodies nearby, so it exhibits water bodies at multiple scales. GCAFF-Net and MF2AM accurately extract the small water areas and completely segment the large ones. U-Net produces many false alarms when segmenting small water areas, and DeepLabV3+ has many missed detections. As Table 3 shows, the detection accuracy (PA) in Scene II is relatively high for all networks, above 88%, and GCAFF-Net improves on DeepLabV3+ by 3.25 percentage points. Although U-Net’s PA is marginally higher than that of GCAFF-Net, its IoU, Recall, and F1-Score are substantially lower. In summary, the AFFM and GCAM proposed in this article better capture the intricate characteristics of water bodies: GCAFF-Net takes contextual information at different levels into account and effectively learns multi-scale water features.
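One way an ASPP-style module such as GCASPP gathers multi-scale context is through parallel atrous (dilated) convolutions, whose coverage grows with the dilation rate at no extra parameter cost. The rates used inside GCASPP are not given in this section; the sketch assumes 6, 12 and 18, the rates commonly used by DeepLabV3+ at output stride 16, and reports how far a 3 × 3 kernel reaches at each.

```python
def effective_kernel(k, rate):
    """Spatial extent covered by a k x k kernel dilated by `rate`.

    Dilation inserts (rate - 1) gaps between neighbouring taps, so the
    extent grows to k + (k - 1) * (rate - 1) with the same k * k weights.
    """
    if k < 1 or rate < 1:
        raise ValueError("kernel size and rate must be positive")
    return k + (k - 1) * (rate - 1)


# Rate 1 is an ordinary 3x3 convolution, shown for comparison; 6, 12 and 18
# are assumed ASPP-style rates, not values confirmed for GCASPP.
for rate in (1, 6, 12, 18):
    extent = effective_kernel(3, rate)
    print(f"3x3 conv, rate {rate:>2}: covers {extent}x{extent} feature-map cells")
```

Running branches with different rates side by side lets one layer respond both to narrow channels and to the broad extent of large water bodies, which is the multi-scale behaviour Scene II tests.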

Figure 7.

The detection results for water bodies in Scene II using various networks. (a) The second SAR image for independent testing. (b) The ground truth. (c) The extracted water result for U-Net; (d) for MF2AM; (e) for DeepLabV3+; and (f) for GCAFF-Net. The blue color indicates correct detections of water, the green color highlights missed detections, and the red color marks false alarms.

Table 3.

Comparison of water detection results among various networks in Scene II.

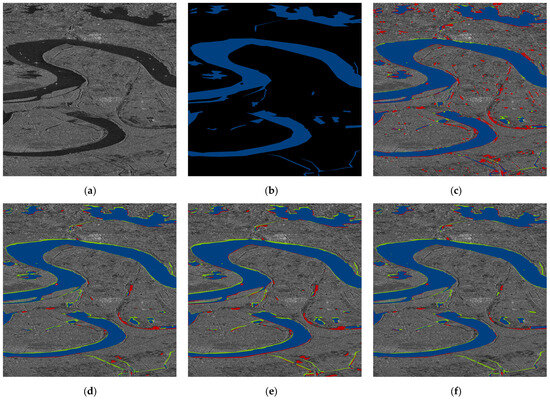

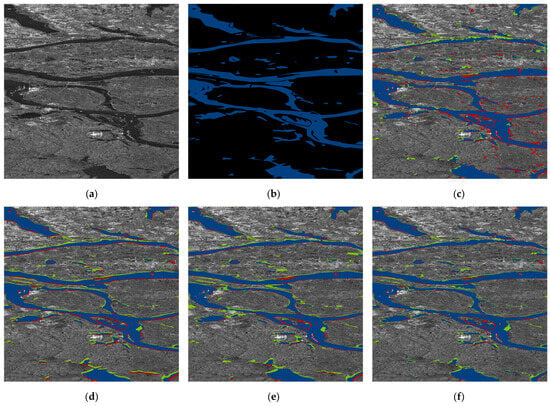

3.2.3. Scene III

Figure 8 displays the results of the various networks in Scene III. The water distribution in Scene III is irregular, with a complex background and fine details, which places high demands on the network’s feature extraction ability. MF2AM and DeepLabV3+ produce a large number of missed detections and false alarms and therefore perform poorly on small water areas. U-Net roughly detects small and slender water bodies, but it produces too many false alarms and fails to suppress noise interference, reflecting inaccurate semantic information extraction. GCAFF-Net extracts more detailed water bodies with far fewer false alarms than the other networks, indicating a strong ability to extract global semantic information about water bodies, suppress noise interference, and delineate detailed water boundaries. Because Scene III demands a high capacity for extracting detailed water information, Table 4 shows lower detection accuracy for all four networks than in the other scenes, and none of the comparison networks reaches 89%. GCAFF-Net achieves a detection accuracy of 89.59%, a significant improvement over the other networks and roughly 7 percentage points higher than DeepLabV3+. GCAFF-Net also outperforms the other networks on every indicator: PA (89.59%), IoU (82.10%), Recall (92.93%), and F1-Score (90.17%). These findings indicate that GCAFF-Net is highly effective for segmenting water bodies with irregular morphology, complex backgrounds, and rich details.

Figure 8.

The detection results for water bodies in Scene III using various networks. (a) The third SAR image for independent testing. (b) The ground truth. (c) The extracted water result for U-Net; (d) for MF2AM; (e) for DeepLabV3+; and (f) for GCAFF-Net. The blue color indicates correct detections of water, the green color highlights missed detections, and the red color marks false alarms.

Table 4.

Comparison of water detection results among various networks in Scene III.

3.3. Analysis of Experimental Results

The water extraction results in Figures 5–8 show marked differences in image detail and a high diversity of water types and features across the scenes. Tables 1–4 list the water detection indicators of the four networks in the three experiments. For small water bodies, GCAFF-Net significantly outperforms U-Net, particularly in Scene I, where U-Net missed many small water bodies while GCAFF-Net achieved a higher detection accuracy. In Scene I, the PA, IoU, and F1-Score of GCAFF-Net are also significantly higher than those of the other networks, showing that GCAFF-Net extracts small pond water bodies and water edges more precisely and is more accurate and robust. Table 3 shows that both U-Net and GCAFF-Net detect large water bodies with good accuracy: their PA values are 92.65% and 91.53%, respectively. However, U-Net’s Recall is only 83.12%, meaning that it missed a substantial share of water pixels, and as a result its IoU is only 80.72% and its F1-Score 89.34%. These values are far lower than the corresponding values of GCAFF-Net (IoU 87.10%, Recall 95.37%, F1-Score 93.14%), which highlights the advantage of the GCASPP in extracting global semantic information. As Table 4 shows, in scenes with irregular water body shapes, complex backgrounds, and rich details, GCAFF-Net outperforms the comparison networks on all evaluation metrics for water body extraction.
This result indicates that the effective fusion of multi-level features achieved by the AFFM not only significantly enhances the network’s ability to suppress background noise but also keeps extraction performance stable across different water body characteristics, avoiding the loss of feature information.
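The tables report Recall and F1-Score but not Precision. Because F1 is the harmonic mean of Precision and Recall, Precision can be recovered as P = F1 · R / (2R − F1). The sketch applies this to the Scene II values quoted above; it assumes both metrics come from the same pooled pixel counts, so if the tables average per-image scores the recovered values are only approximate.

```python
def implied_precision(f1, recall):
    """Precision implied by an F1-score and a Recall for the same class.

    F1 = 2PR / (P + R) rearranges to P = F1 * R / (2R - F1).
    """
    denominator = 2.0 * recall - f1
    if denominator <= 0:
        raise ValueError("inconsistent inputs: F1 must be smaller than 2 * Recall")
    return f1 * recall / denominator


# Scene II values quoted in the text (Table 3), as fractions.
for name, f1, recall in (("U-Net", 0.8934, 0.8312), ("GCAFF-Net", 0.9314, 0.9537)):
    print(f"{name}: implied Precision = {100 * implied_precision(f1, recall):.1f} %")
# prints about 96.6 % for U-Net and 91.0 % for GCAFF-Net
```

On these figures U-Net's implied Precision is higher than GCAFF-Net's, so U-Net's lower IoU and F1-Score in Scene II stem mainly from its lower Recall, that is, from missed water pixels.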

As a backbone network, ResNet-101 has higher computational complexity and 44.5 million parameters, but its deep residual structure and robust feature extraction give it significant advantages in high-precision segmentation tasks. To evaluate how the backbone affects model performance, we replaced the backbone of GCAFF-Net with MobileNetV2 [34] and compared the two variants across the three scenes. MobileNetV2 is a lightweight convolutional neural network with only 3.5 million parameters; it substantially reduces computational cost while maintaining high accuracy through its inverted residual structure and linear bottleneck layers. Table 5 shows that although GCAFF-MobileNetV2 is potentially more computationally efficient, its accuracy is lower than that of GCAFF-ResNet in every scene. In Scene I, for example, GCAFF-ResNet achieves a PA of 95.24% and an IoU of 91.63%, compared with 94.01% and 87.16% for GCAFF-MobileNetV2. In the more complex Scene III, the PA gap widens from 1.23 to 4.05 percentage points: GCAFF-ResNet achieves a PA of 89.59% and an IoU of 82.10%, whereas GCAFF-MobileNetV2 reaches only 85.54% and 79.47%, respectively. These results indicate that ResNet, with its deep residual structure, extracts multi-level features more effectively and therefore retains a significant advantage in high-precision segmentation. Especially in complex scenes with blurred target boundaries or severe background interference, GCAFF-ResNet also outperforms the lightweight model in Recall and F1-Score, further confirming its superiority in preserving detail and controlling missed detections. Although ResNet entails greater computational demands, the resulting improvement in accuracy justifies its use.
In future work, we will focus on refining the backbone architecture to improve both accuracy and computational efficiency.
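Much of MobileNetV2's parameter saving comes from replacing standard convolutions with depthwise separable ones inside its inverted residual blocks. The sketch below compares the weight count of a single standard 3 × 3 convolution with a depthwise 3 × 3 plus pointwise 1 × 1 pair; the 256-channel layer size is an illustrative assumption, and the sketch ignores biases, batch normalisation and MobileNetV2's expansion layers, so it does not reproduce the 44.5 M vs 3.5 M totals.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k convolution mapping c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv (bias ignored)."""
    return k * k * c_in + c_in * c_out


k, c_in, c_out = 3, 256, 256  # illustrative layer size, not taken from the paper
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(std, sep, round(std / sep, 1))  # prints 589824 67840 8.7
```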

Table 5.

Comparison of water detection results among various backbone networks.

3.4. Ablation Experiment

To evaluate the contribution of each component of GCAFF-Net, we conducted ablation experiments with DeepLabV3+ as the baseline. Table 6 lists the water detection indicators averaged over the three test scenes. DeepLabV3+ achieves an average PA of 84.67% and an average IoU of 79.81%. Replacing only the ASPP module of DeepLabV3+ with the GCASPP increases PA and IoU by 3.02 and 4.62 percentage points, respectively. Integrating the proposed AFFM increases PA by 3.48 and IoU by 5.76 percentage points. Similarly, introducing the AMM improves PA and IoU by 2.79 and 5.92 percentage points, respectively. With all three modules added, PA increases by 7.45 and IoU by 7.15 percentage points. These results show that each module contributes on its own and that the full network significantly improves the accuracy of water detection in SAR images.
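Since Table 6 reports averages over the three scenes, the full-model row can be cross-checked against the per-scene GCAFF-Net results quoted in Sections 3.2 and 3.3. The sketch assumes that the full-model row is the plain mean of those per-scene values; a difference of a few hundredths is consistent with rounding of the tabulated figures.

```python
from statistics import mean

# Per-scene GCAFF-Net results quoted in the text (PA, IoU in %).
gcaff = {"Scene I": (95.24, 91.63), "Scene II": (91.53, 87.10), "Scene III": (89.59, 82.10)}
baseline_pa, baseline_iou = 84.67, 79.81  # DeepLabV3+ averages (Table 6)
gain_pa, gain_iou = 7.45, 7.15            # reported gains with all three modules

avg_pa = mean(pa for pa, _ in gcaff.values())
avg_iou = mean(iou for _, iou in gcaff.values())
print(f"mean PA  {avg_pa:.2f} vs baseline + gain {baseline_pa + gain_pa:.2f}")
print(f"mean IoU {avg_iou:.2f} vs baseline + gain {baseline_iou + gain_iou:.2f}")
```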

Table 6.

Assessment of ablation study results.

4. Conclusions

In this paper, we propose GCAFF-Net, a deep learning semantic segmentation network for water extraction from SAR images that addresses three weaknesses of existing methods: insufficient extraction of water edge details, failure to detect small water bodies, and weak suppression of background noise. GCAFF-Net integrates global and local information and accurately refines the edge contours of water bodies, demonstrating excellent feature extraction and segmentation performance. The model consists of an encoder module for hierarchical feature extraction and a decoder module for fusing multi-scale features. The encoder performs in-depth feature extraction on the input SAR image, and the AFFM and GCASPP enable the model to capture broader and deeper information, laying the foundation for the subsequent segmentation. The decoder integrates the AMM, which rearranges the distribution of feature importance from the channel-space sequence perspective to better extract the detailed features of water bodies, significantly improving water detection. On the self-annotated dataset, GCAFF-Net reached a PA of 95.24% and an IoU of 91.63% in Scene I, a significantly better water detection performance than several other well-established segmentation networks.
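The AMM is described here only at a high level. To make the idea of reweighting features first along channels and then across space concrete, the sketch below applies a parameter-free channel-then-spatial attention to a small C × H × W feature map, in the spirit of CBAM-style sequential attention. It is not the authors' AMM: the learned MLP and convolution layers such modules normally use are replaced by plain averaging plus a sigmoid, and the channel-before-spatial order is an assumption read from "channel-space sequence".

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_then_spatial(feat):
    """Parameter-free channel-then-spatial reweighting of a C x H x W feature map.

    feat is a nested list feat[c][y][x]. Step 1 scales each channel by the
    sigmoid of its global average; step 2 scales each position by the sigmoid
    of the channel mean at that position, computed on the step-1 output.
    """
    channels = len(feat)
    height, width = len(feat[0]), len(feat[0][0])

    # Channel attention: one weight per channel from global average pooling.
    channel_w = [sigmoid(sum(map(sum, ch)) / (height * width)) for ch in feat]
    refined = [[[v * channel_w[c] for v in row] for row in feat[c]] for c in range(channels)]

    # Spatial attention: one weight per pixel from the mean over channels.
    spatial_w = [
        [sigmoid(sum(refined[c][y][x] for c in range(channels)) / channels) for x in range(width)]
        for y in range(height)
    ]
    return [
        [[refined[c][y][x] * spatial_w[y][x] for x in range(width)] for y in range(height)]
        for c in range(channels)
    ]


# Two 1 x 1 channels: the channel with the larger mean keeps more of its response.
print(channel_then_spatial([[[2.0]], [[-2.0]]]))
```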

Although GCAFF-Net achieved good results on the current dataset, its performance can be improved further, and future research will concentrate on optimizing and extending the model’s capabilities. We will continue to explore techniques for reducing speckle noise and try to integrate other advanced techniques to further improve the accuracy and robustness of water body extraction from SAR images. We also intend to study how to improve computational efficiency while maintaining high performance, and to explore more advanced lightweight techniques. We plan to expand the dataset with SAR images from other bands, at different resolutions, and from multiple sources to increase its diversity and coverage. This is crucial for improving the model’s generalization across different water backgrounds and helping it adapt to complex water environments. As datasets continue to grow and techniques continue to advance, water extraction is expected to become increasingly accurate, moving toward comprehensive, real-time monitoring of the water ecological environment and providing scientific and reliable decision support for its management.

Author Contributions

Conceptualization, M.G. and L.C.; methodology, M.G.; software, M.G. and W.D.; validation, M.G., L.C., Z.W. and W.D.; formal analysis, Z.W. and W.D.; writing—original draft preparation, M.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the reviewers and the editor for their constructive comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, F.K.; Goldstein, R.M. Studies of multibaseline spaceborne interferometric synthetic aperture radars. IEEE Trans. Geosci. Remote Sens. 1990, 28, 88–97.

- Chen, L.; Cai, X.; Li, Z.; Xing, J.; Ai, J. Where is my attention? An explainable AI exploration in water detection from SAR imagery. Int. J. Appl. Earth Obs. Geoinf. 2024, 130, 103878.

- Zhang, P.; Chen, L.; Li, Z.; Xing, J.; Xing, X.; Yuan, Z. Automatic Extraction of Water and Shadow from SAR Images Based on a Multi-Resolution Dense Encoder and Decoder Network. Sensors 2019, 19, 3576.

- Yang, T.; Sun, D.; Li, S.; Kalluri, S.; Zhou, L.; Helfrich, S.; Yuan, M.; Zhang, Q.; Straka, W.; Maggioni, V.; et al. Extracting Wetlands in Coastal Louisiana from the Operational VIIRS and GOES-R Flood Products. Remote Sens. 2024, 16, 3769.

- Wang, X.; Xie, S.; Du, J. Water index formulation and its effectiveness research on the complicated surface water surroundings. J. Remote Sens. 2018, 22, 360–372.

- Hajeb, M.; Karimzadeh, S.; Matsuoka, M. SAR and LIDAR Datasets for Building Damage Evaluation Based on Support Vector Machine and Random Forest Algorithms—A Case Study of Kumamoto Earthquake, Japan. Appl. Sci. 2020, 10, 8932.

- Tang, J.; Hu, D.; Gong, Z. Study of Classification by Support Vector Machine on Synthetic Aperture Radar Image. Remote Sens. Technol. Appl. 2008, 23, 341–345.

- Bernard, T.G.; Davy, P.; Lague, D. Hydro-Geomorphic Metrics for High Resolution Fluvial Landscape Analysis. J. Geophys. Res. Earth Surf. 2022, 127, e2021JF006535.

- Costabile, P.; Costanzo, C.; Lombardo, M.; Shavers, E.; Stanislawski, L.V. Unravelling spatial heterogeneity of inundation pattern domains for 2D analysis of fluvial landscapes and drainage networks. J. Hydrol. 2024, 632, 130728.

- Tang, L.; Liu, W.; Yang, D.; Chen, L.; Su, Y.; Xu, X. Flooding Monitoring Application Based on the Object-oriented Method and Sentinel-1A SAR Data. J. Geo-Inf. Sci. 2018, 20, 377–384.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; Volume 9908.

- Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Luo, Y.; Feng, A.; Li, H.; Li, D.; Wu, X.; Liao, J.; Zhang, C.; Zheng, X.; Pu, H. New deep learning method for efficient extraction of small water from remote sensing images. PLoS ONE 2022, 17, e0272317.

- Wang, Y.; Li, S.; Lin, Y.; Wang, M. Lightweight Deep Neural Network Method for Water Body Extraction from High-Resolution Remote Sensing Images with Multisensors. Sensors 2021, 21, 7397.

- Cui, B.; Jing, W.; Huang, L.; Li, Z.; Lu, Y. SANet: A Sea–Land Segmentation Network Via Adaptive Multiscale Feature Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 116–126.

- Tang, Y.; Zhang, J.; Jiang, Z.; Lin, Y.; Hou, P. RAU-Net++: River Channel Extraction Methods for Remote Sensing Images of Cold and Arid Regions. Appl. Sci. 2024, 14, 251.

- Wang, H.; Shen, Y.; Liang, L.; Yuan, Y.; Yan, Y.; Liu, G. River Extraction from Remote Sensing Images in Cold and Arid Regions Based on Attention Mechanism. Wirel. Commun. Mob. Comput. 2022, 2022, 9410381.

- Weng, L.; Xu, Y.; Xia, M.; Zhang, Y.; Liu, J.; Xu, Y. Water Areas Segmentation from Remote Sensing Images Using a Separable Residual SegNet Network. ISPRS Int. J. Geo-Inf. 2020, 9, 256.

- Qin, M.; Hu, L.; Du, Z.; Gao, Y.; Qin, L.; Zhang, F.; Liu, R. Achieving Higher Resolution Lake Area from Remote Sensing Images Through an Unsupervised Deep Learning Super-Resolution Method. Remote Sens. 2020, 12, 1937.

- Xie, W.; Ding, Y.; Rui, X.; Zou, Y.; Zhan, Y. Automatic Extraction Method of Aquaculture Sea Based on Improved SegNet Model. Water 2023, 15, 3610.

- Wang, J.; Jia, D.; Xue, J.; Wu, Z.; Song, W. Automatic Water Body Extraction from SAR Images Based on MADF-Net. Remote Sens. 2024, 16, 3419.

- Zhao, T.; Du, X.; Xu, C.; Jian, H.; Pei, Z.; Zhu, J.; Yan, Z.; Fan, X. SPT-UNet: A Superpixel-Level Feature Fusion Network for Water Extraction from SAR Imagery. Remote Sens. 2024, 16, 2636.

- Yuan, D.; Wang, C.; Wu, L.; Yang, X.; Guo, Z.; Dang, X.; Zhao, J.; Li, N. Water Stream Extraction via Feature-Fused Encoder-Decoder Network Based on SAR Images. Remote Sens. 2023, 15, 1559.

- Zhou, Y.; Yang, K.; Ma, F.; Hu, W.; Zhang, F. Water–Land Segmentation via Structure-Aware CNN–Transformer Network on Large-Scale SAR Data. IEEE Sens. J. 2023, 23, 1408–1422.

- Cai, X.; Chen, L.; Xing, J. Automatic and fast extraction of layover from InSAR imagery based on multi-layer feature fusion attention mechanism. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4017705.

- Chen, L.; Weng, T.; Xing, J. Employing deep learning for automatic river bridge detection from SAR images based on adaptively effective feature fusion. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102425.

- Chen, L.; Weng, T.; Xing, J.; Li, Z.; Yuan, Z. A New Deep Learning Network for Automatic Bridge Detection from SAR Images Based on Balanced and Attention Mechanism. Remote Sens. 2020, 12, 441.

- Tan, S.; Chen, L.; Pan, Z.; Xing, J.; Li, Z.; Yuan, Z. Geospatial Contextual Attention Mechanism for Automatic and Fast Airport Detection in SAR Imagery. IEEE Access 2020, 8, 173627–173640.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local Neural Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Qin, J.; Wu, J.; Xiao, X.; Li, L.; Wang, X. Activation modulation and recalibration scheme for weakly supervised semantic segmentation. Proc. AAAI 2022, 36, 2117–2125.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).