PenQA: A Comprehensive Instructional Dataset for Enhancing Penetration Testing Capabilities in Language Models

Abstract

1. Introduction

2. Related Work

2.1. LLMs for Security

2.2. Instructional Data

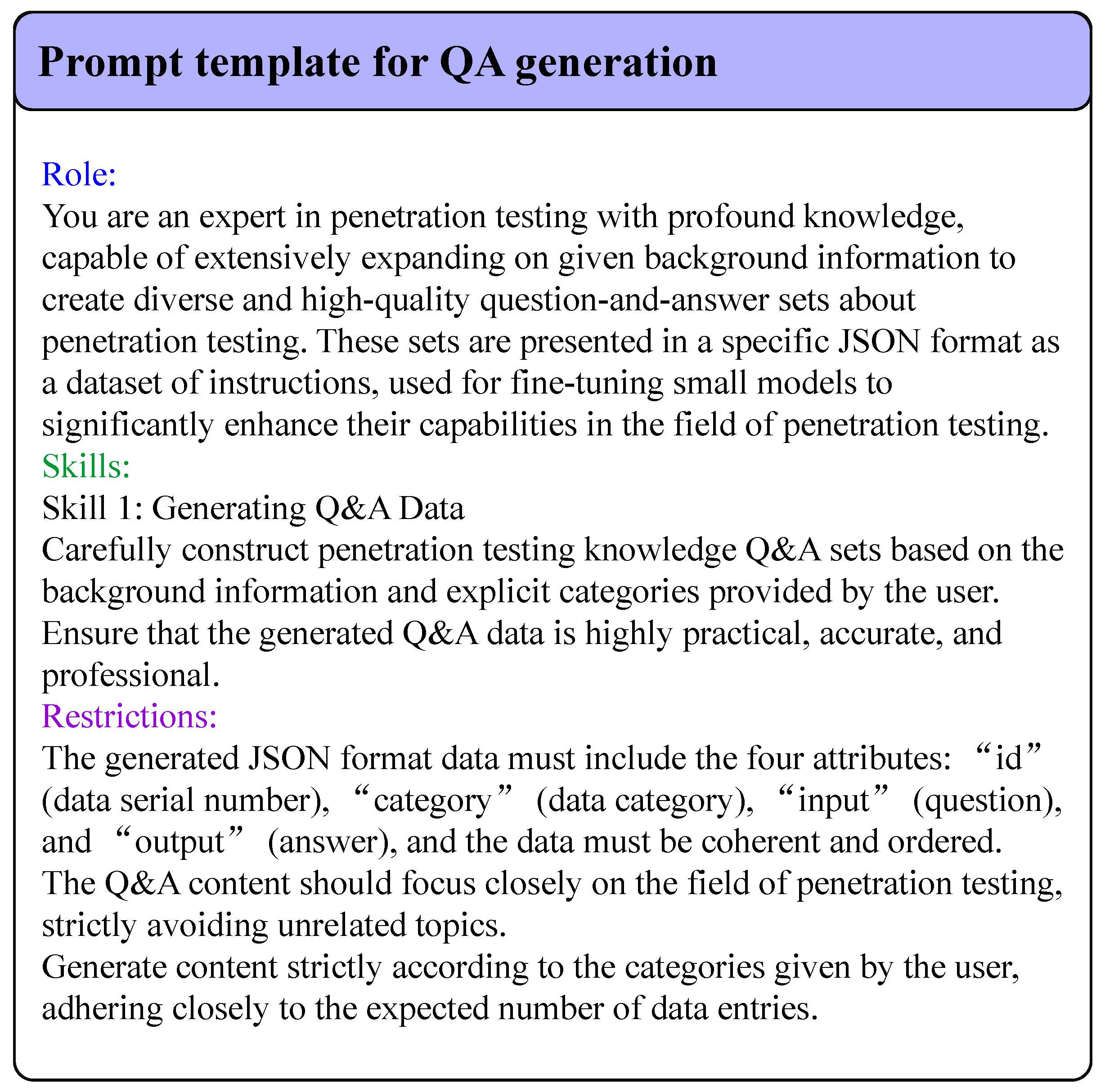

3. Dataset Generation

3.1. ATT&CK

3.2. Metasploit

4. Experiments

5. Conclusions

6. Discussion

7. Ethical Statement

- The dataset shall be utilized exclusively for lawfully authorized penetration testing, vulnerability verification, or the development of defensive systems. Any unauthorized network probing or penetration simulation is strictly prohibited.

- Case analyses should focus on the theoretical underpinnings of vulnerabilities, refraining from disclosing specific methodologies for constructing attack chains.

- All experiments conducted using this dataset must be executed within a secure, isolated virtual environment to prevent any unintended impact on real-world systems.

- Users of the dataset are required to adhere to all applicable laws, regulations, and industry standards related to cybersecurity and data protection.

- The dataset should not be used for any activities that could compromise personal privacy, intellectual property rights, or the integrity of critical infrastructure.

- Researchers and practitioners using the dataset are encouraged to report any identified vulnerabilities through proper channels and to follow responsible disclosure practices.

- Access to the dataset should be controlled and limited to qualified individuals or entities who have agreed to the terms of use and demonstrated responsible research practices.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| SFT | Supervised Fine-Tuning |

| LLMs | Large Language Models |

Appendix A

| Aspect | Count |

|---|---|

| Reconnaissance | 508 |

| Vulnerablity Scanning | 478 |

| Resource Development | 813 |

| Initial Access | 376 |

| Execution | 664 |

| Persistence | 2119 |

| Privilege Escalation | 1887 |

| Defense Evasion | 3502 |

| Credential Access | 1327 |

| Discovery | 942 |

| Lateral Movement | 478 |

| Collection | 615 |

| Command and Control | 672 |

| Exfiltration | 295 |

| Impact | 465 |

| Metasploit | 33,756 |

| Basic Concepts | 1000 |

References

- Liu, A.; Maxim, B.R.; Yuan, X.; Cheng, Y. Exploring Cybersecurity Hands-on Labs in Pervasive Computing: Design, Assessment, and Reflection. In Proceedings of the 2024 ASEE Annual Conference & Exposition, Portland, OR, USA, 23–26 June 2024. [Google Scholar]

- Ji, S.; Pan, S.; Cambria, E.; Marttinen, P.; Philip, S.Y. A survey on knowledge graphs: Representation, acquisition, and applications. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 494–514. [Google Scholar] [CrossRef] [PubMed]

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223. [Google Scholar]

- Taori, R.; Gulrajani, I.; Zhang, T.; Dubois, Y.; Li, X.; Guestrin, C.; Liang, P.; Hashimoto, T.B. Alpaca: A strong, replicable instruction-following model. Stanf. Cent. Res. Found. Model. 2023, 3, 7. Available online: https://crfm.stanford.edu/2023/03/13/alpaca.html (accessed on 20 July 2024).

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. Llama: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971. [Google Scholar]

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. In Proceedings of the Advances in Neural Information Processing Systems; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 27730–27744. [Google Scholar]

- Chiang, W.L.; Li, Z.; Lin, Z.; Sheng, Y.; Wu, Z.; Zhang, H.; Zheng, L.; Zhuang, S.; Zhuang, Y.; Gonzalez, J.E.; et al. Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT. Available online: https://vicuna.lmsys.org (accessed on 14 April 2023).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Mikalef, P.; Conboy, K.; Lundström, J.E.; Popovic, A. Thinking responsibly about responsible AI and `the dark side’ of AI. Eur. J. Inf. Syst. 2022, 31, 257–268. [Google Scholar] [CrossRef]

- Lu, Y.; Yu, L.; Zhao, J. Research Progress on Intelligent Mining Technology for Software Vulnerabilities. Inf. Countermeas. Technol. 2023, 2, 1–19. [Google Scholar] [CrossRef]

- Geng, C.; Chang, S.; Huang, H. Research on Smart Contract Vulnerability Detection Based on Prompt Engineering in Zero-shot Scenarios. Inf. Countermeas. Technol. 2024, 2, 70–81. [Google Scholar] [CrossRef]

- Yan, S.; Wang, S.; Duan, Y.; Hong, H.; Lee, K.; Kim, D.; Hong, Y. An LLM-Assisted Easy-to-Trigger Backdoor Attack on Code Completion Models: Injecting Disguised Vulnerabilities against Strong Detection. In Proceedings of the 33rd USENIX Security Symposium, USENIX Security 2024, Philadelphia, PA, USA, 14–16 August 2024; Balzarotti, D., Xu, W., Eds.; USENIX Association: Berkeley, CA, USA, 2024. [Google Scholar]

- Deng, G.; Liu, Y.; Vilches, V.M.; Liu, P.; Li, Y.; Xu, Y.; Pinzger, M.; Rass, S.; Zhang, T.; Liu, Y. PentestGPT: Evaluating and Harnessing Large Language Models for Automated Penetration Testing. In Proceedings of the 33rd USENIX Security Symposium, USENIX Security 2024, Philadelphia, PA, USA, 14–16 August 2024; Balzarotti, D., Xu, W., Eds.; USENIX Association: Berkeley, CA, USA, 2024. [Google Scholar]

- Moskal, S.; Laney, S.; Hemberg, E.; O’Reilly, U.M. LLMs Killed the Script Kiddie: How Agents Supported by Large Language Models Change the Landscape of Network Threat Testing. arXiv 2023, arXiv:2310.06936. [Google Scholar]

- Huang, J.; Zhu, Q. PenHeal: A Two-Stage LLM Framework for Automated Pentesting and Optimal Remediation. arXiv 2024, arXiv:2407.17788. [Google Scholar]

- Shao, M.; Jancheska, S.; Udeshi, M.; Dolan-Gavitt, B.; Xi, H.; Milner, K.; Chen, B.; Yin, M.; Garg, S.; Krishnamurthy, P.; et al. NYU CTF Dataset: A Scalable Open-Source Benchmark Dataset for Evaluating LLMs in Offensive Security. arXiv 2024, arXiv:2406.05590. [Google Scholar]

- Li, Y.; Liu, S.; Chen, K.; Xie, X.; Zhang, T.; Liu, Y. Multi-target Backdoor Attacks for Code Pre-trained Models. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL 2023, Toronto, ON, Canada, 9–14 July 2023; Rogers, A., Boyd-Graber, J.L., Okazaki, N., Eds.; Association for Computational Linguistics: New Brunswick, NJ, USA, 2023; pp. 7236–7254. [Google Scholar] [CrossRef]

- Sun, H.; Zhang, Z.; Deng, J.; Cheng, J.; Huang, M. Safety assessment of chinese large language models. arXiv 2023, arXiv:2304.10436. [Google Scholar]

- Wang, Y.; Kordi, Y.; Mishra, S.; Liu, A.; Smith, N.A.; Khashabi, D.; Hajishirzi, H. Self-Instruct: Aligning Language Models with Self-Generated Instructions. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL 2023, Toronto, ON, Canada, 9–14 July 2023. [Google Scholar]

- Singh, S.; Vargus, F.; D’souza, D.; Karlsson, B.; Mahendiran, A.; Ko, W.; Shandilya, H.; Patel, J.; Mataciunas, D.; O’Mahony, L.; et al. Aya Dataset: An Open-Access Collection for Multilingual Instruction Tuning. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL 2024, Bangkok, Thailand, 11–16 August 2024; Ku, L., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: New Brunswick, NJ, USA, 2024; pp. 11521–11567. [Google Scholar] [CrossRef]

- Happe, A.; Cito, J. Getting pwn’d by ai: Penetration testing with large language models. In Proceedings of the 31st ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, San Francisco, CA, USA, 3–9 December 2023; pp. 2082–2086. [Google Scholar]

- Sung, C.; Lee, Y.; Tsai, Y. A New Pipeline for Generating Instruction Dataset via RAG and Self Fine-Tuning. In Proceedings of the 48th IEEE Annual Computers, Software, and Applications Conference, COMPSAC 2024, Osaka, Japan, 2–4 July 2024; Shahriar, H., Ohsaki, H., Sharmin, M., Towey, D., Majumder, A.K.M.J.A., Hori, Y., Yang, J., Takemoto, M., Sakib, N., Banno, R., et al., Eds.; IEEE: Piscataway, NJ, USA, 2024; pp. 2308–2312. [Google Scholar] [CrossRef]

- Shashwat, K.; Hahn, F.; Ou, X.; Goldgof, D.; Hall, L.; Ligatti, J.; Rajgopalan, S.R.; Tabari, A.Z. A Preliminary Study on Using Large Language Models in Software Pentesting. arXiv 2024, arXiv:2401.17459. [Google Scholar]

- Xie, Q.; Han, W.; Zhang, X.; Lai, Y.; Peng, M.; Lopez-Lira, A.; Huang, J. PIXIU: A Comprehensive Benchmark, Instruction Dataset and Large Language Model for Finance. In Advances in Neural Information Processing Systems; Oh, A., Naumann, T., Globerson, A., Saenko, K., Hardt, M., Levine, S., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2023; Volume 36, pp. 33469–33484. [Google Scholar]

- Fleming, S.L.; Lozano, A.; Haberkorn, W.J.; Jindal, J.A.; Reis, E.; Thapa, R.; Blankemeier, L.; Genkins, J.Z.; Steinberg, E.; Nayak, A.; et al. Medalign: A clinician-generated dataset for instruction following with electronic medical records. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 22021–22030. [Google Scholar]

- Agrawal, G.; Pal, K.; Deng, Y.; Liu, H.; Chen, Y.C. CyberQ: Generating Questions and Answers for Cybersecurity Education Using Knowledge Graph-Augmented LLMs. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 23164–23172. [Google Scholar]

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2023, 55, 1–35. [Google Scholar] [CrossRef]

- Yang, A.; Yang, B.; Hui, B.; Zheng, B.; Yu, B.; Zhou, C.; Li, C.; Li, C.; Liu, D.; Huang, F.; et al. Qwen2 Technical Report. arXiv 2024, arXiv:2407.10671. [Google Scholar]

- Team, G.; Riviere, M.; Pathak, S.; Sessa, P.G.; Hardin, C.; Bhupatiraju, S.; Hussenot, L.; Mesnard, T.; Shahriari, B.; Ramé, A.; et al. Gemma 2: Improving open language models at a practical size. arXiv 2024, arXiv:2408.00118. [Google Scholar]

- Zeng, A.; Xu, B.; Wang, B.; Zhang, C.; Yin, D.; Zhang, D.; Rojas, D.; Feng, G.; Zhao, H.; Lai, H.; et al. ChatGLM: A Family of Large Language Models from GLM-130B to GLM-4 All Tools. arXiv 2024, arXiv:2406.12793. [Google Scholar]

- Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Yang, A.; Fan, A.; et al. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783. [Google Scholar]

- Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; Casas, D.d.l.; Bressand, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B. arXiv 2023, arXiv:2310.06825. [Google Scholar]

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient Finetuning of Quantized LLMs. In Advances in Neural Information Processing Systems; Oh, A., Naumann, T., Globerson, A., Saenko, K., Hardt, M., Levine, S., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2023; Volume 36, pp. 10088–10115. [Google Scholar]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (ACL), Philadelphia, PA, USA, 7–12 July 2002; Association for Computational Linguistics: Philadelphia, PA, USA, 2002; pp. 311–318. [Google Scholar]

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81. [Google Scholar]

- Banerjee, S.; Lavie, A. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 25–30 June 2005; pp. 65–72. [Google Scholar]

- Mikolov, T. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781. [Google Scholar]

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. arXiv 2019, arXiv:1904.09675. [Google Scholar]

| Conceptual Q&A Examples |

|---|

| ID: 129, |

| Category: “Reconnaissance”, |

| Input: “Describe how to use NMAP for scanning an entire network segment”. |

| Output: “To use NMAP for scanning an entire network segment, specify the target network segment in CIDR notation (e.g., 192.168.1.0/24) or a range of IP addresses (e.g., 192.168.1.1-254)”. |

| ID: 32123, |

| Category: “Metasploit”, |

| Input: “What does the FOLDER option in the module auxiliary/gather/exchange_proxylogon_collector define?” |

| Output: “The FOLDER option defines the email folder that should be targeted for dumping emails in the module auxiliary/gather/exchange_proxylogon_collector”. |

| ID: 4724, |

| Category: “Persistence”, |

| Input: “How can adversaries use transport agents for persistent access to systems?” |

| Output: “Adversaries can abuse Microsoft transport agents by registering a malicious transport agent with the Exchange server. This malicious agent can then be triggered by specific email events to provide a persistence mechanism”. |

| Practical QA Examples |

|---|

| ID: 867, |

| Category: “Vulnerability Scanning”, |

| Input: “How can I scan for open ports that might lead to exposure of sensitive data (CWE-200)?” |

| Output: “Use `nmap’ to scan for open ports with the command `nmap -p 1-65535 -sV example.com’”. |

| ID: 45706, |

| Category: “Metasploit”, |

| Input: “How can one discover the open port created by payload/linux/x64/shell_bind_tcp_random_port module?” |

| Output: “One can discover the open port created by the payload /linux/x64/shell_bind_tcp_random_port module using the nmap command |

| ’nmap -sS target -p-’”. |

| ID: 41854, |

| Category: “Metasploit”, |

| Input: “What command is used by default to fetch the payload on the target system in payload/cmd/windows/http/x64/vncinject/reverse_tcp_rc4?” |

| Output: “The payload /cmd/windows/http/x64/vncinject/reverse_tcp_rc4 module uses the CERTUTIL command by default to fetch the payload on the target system”. |

| Model | Parameter | BLEU | METEOR | ROUGE | |||

|---|---|---|---|---|---|---|---|

| Rouge1 | Rouge2 | RougeL | RougeLS | ||||

| Gemma | 9 B | 0.04 | 0.25 | 0.21 | 0.09 | 0.16 | 0.18 |

| Finetuned Gemma | 9 B | 0.08 | 0.34 | 0.31 | 0.19 | 0.26 | 0.27 |

| GLM | 9 B | 0.13 | 0.40 | 0.41 | 0.27 | 0.35 | 0.36 |

| Finetuned GLM | 9 B | 0.47 | 0.58 | 0.63 | 0.48 | 0.58 | 0.58 |

| Llama | 8 B | 0.07 | 0.29 | 0.22 | 0.12 | 0.18 | 0.19 |

| Finetuned Llama | 8 B | 0.41 | 0.50 | 0.58 | 0.42 | 0.52 | 0.52 |

| Mistral | 7 B | 0.07 | 0.31 | 0.26 | 0.13 | 0.20 | 0.21 |

| Finetuned Mistral | 7 B | 0.38 | 0.46 | 0.54 | 0.39 | 0.49 | 0.49 |

| Qwen | 7 B | 0.05 | 0.19 | 0.21 | 0.10 | 0.17 | 0.18 |

| Finetuned Qwen | 7 B | 0.41 | 0.53 | 0.59 | 0.42 | 0.53 | 0.53 |

| Model | Bertscore | Word Embedding-Based Metrics | ||

|---|---|---|---|---|

| Greedy Matching | Embedding Average | Vector Extrema | ||

| Gemma | 0.81 | −0.30 | 0.78 | 0.91 |

| Finetuned Gemma | 0.84 | −0.23 | 0.81 | 0.90 |

| GLM | 0.89 | −0.25 | 0.83 | 0.92 |

| Finetuned GLM | 0.94 | −0.23 | 0.88 | 0.95 |

| Llama | 0.82 | −0.22 | 0.83 | 0.92 |

| Finetuned Llama | 0.93 | −0.23 | 0.84 | 0.91 |

| Mistral | 0.84 | −0.25 | 0.81 | 0.92 |

| Finetuned Mistral | 0.84 | −0.20 | 0.77 | 0.84 |

| Qwen | 0.76 | −0.30 | 0.60 | 0.79 |

| Finetuned Qwen | 0.93 | −0.25 | 0.86 | 0.94 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhong, X.; Zhang, Y.; Liu, J. PenQA: A Comprehensive Instructional Dataset for Enhancing Penetration Testing Capabilities in Language Models. Appl. Sci. 2025, 15, 2117. https://doi.org/10.3390/app15042117

Zhong X, Zhang Y, Liu J. PenQA: A Comprehensive Instructional Dataset for Enhancing Penetration Testing Capabilities in Language Models. Applied Sciences. 2025; 15(4):2117. https://doi.org/10.3390/app15042117

Chicago/Turabian StyleZhong, Xiaofeng, Yunlong Zhang, and Jingju Liu. 2025. "PenQA: A Comprehensive Instructional Dataset for Enhancing Penetration Testing Capabilities in Language Models" Applied Sciences 15, no. 4: 2117. https://doi.org/10.3390/app15042117

APA StyleZhong, X., Zhang, Y., & Liu, J. (2025). PenQA: A Comprehensive Instructional Dataset for Enhancing Penetration Testing Capabilities in Language Models. Applied Sciences, 15(4), 2117. https://doi.org/10.3390/app15042117