Abstract

In tasks such as intelligent surveillance and human–computer interaction, developing fast and effective models for human action recognition is crucial. Graph Convolution Networks (GCNs) are currently widely used for skeleton-based action recognition, but they face two main issues: (1) they capture global joint responses insufficiently, which makes it difficult to exploit the correlations among all joints; and (2) existing models tend to be over-parameterized. In this paper, we therefore propose an Efficient Hierarchical Co-occurrence Graph Convolution Network (EHC-GCN). Using a simple and practical hierarchical co-occurrence framework that adjusts the degree of feature aggregation on demand, we first use spatial graph convolution to learn the local features of joints and then aggregate the global features of all joints. Second, we introduce depth-wise separable convolution layers to reduce the number of model parameters. Additionally, we apply a two-stream branch and an attention mechanism to further extract discriminative features. On two large-scale datasets, the proposed EHC-GCN achieves better or comparable performance to state-of-the-art methods on both 2D and 3D skeleton data, with fewer parameters and lower computational complexity, which makes it well suited to robot platforms with limited computing resources.

1. Introduction

In recent years, human action recognition technology has shown strong momentum in practical applications, gradually being applied in various fields such as real-time monitoring and video retrieval, robotics and industrial automation, and in healthcare applications (patient monitoring, rehabilitation assistance, etc.). These specific real-world applications, especially those on devices with limited computational resources, pose higher demands on the recognition accuracy and computational efficiency of models. Additionally, collaboration between humans and robots has greatly enhanced industrial automation and production efficiency. Among the key technologies of human–robot collaboration, rapidly and effectively perceiving human behavior information is a challenge [1,2,3,4,5]. Deep learning techniques for recognizing actions based on skeleton data have garnered considerable interest due to their resilience in adapting to changing environments [6,7,8]. Figure 1 shows a visualization of the human skeletal sequence.

Figure 1.

Human skeletal sequence. Taking off clothes (top) and putting on shoes (bottom).

Skeletal data are typically acquired from RGB video using human pose estimation algorithms or directly from depth sensors. Research indicates that representing skeleton data merely as a sequence of vectors processed by Recurrent Neural Networks (RNNs), or as 2D/3D maps analyzed by Convolutional Neural Networks (CNNs), fails to capture the intricate spatio-temporal relationships between body joints. Inspired by graph-based methods, many human action recognition methods have been proposed. Yan et al. [9] pioneered the use of spatial–temporal Graph Convolutional Networks (ST-GCNs) for skeleton-based action recognition. Following this work, an increasing number of GCN-based studies [10,11,12,13] have been proposed. However, the receptive fields of the spatial graph are usually predefined; although related methods make the adjacency matrices learnable, their expressive power is still limited by the regular spatial graph structure.

Additionally, to learn more discriminative features from skeleton data, state-of-the-art (SOTA) methods usually rely on a large number of learnable parameters to enhance the representational capability of the network [14,15]. This makes model training more complex and computationally expensive. Recently, transformer-based methods have shown great potential in capturing long-range dependencies, but they come with a high computational cost, which makes them difficult to deploy in real-world applications. Reducing model complexity therefore remains a significant challenge.

To enable robots to understand the actions of their human companions quickly and effectively, this paper proposes an efficient hierarchical co-occurrence GCN model. Similar to CNNs, GCN operations on skeleton data can be seen as local feature aggregation in the spatial domain combined with global feature aggregation across channels. Because the spatial aggregation is local, they may fail to capture interactions between distant joints, such as those involved in putting on shoes. Inspired by the HCN algorithm [16], we capture the global response of all joints through a hierarchical co-occurrence approach to exploit the correlations between different joints.

Since the output of a convolution is the global response of all input channels, treating each body joint as a channel allows the convolution layer to easily learn the co-occurrence of all joints. This leads us to adopt a simple and practical method that adjusts the degree of aggregation on demand. First, the local spatial features of each joint are learned through graph convolution operations. Then, a transpose operation moves the joint dimension into the channel dimension of the input, so that CNN operations aggregate the global features of all joints. Furthermore, we introduce separable convolution layers, commonly employed in lightweight CNN architectures, to reduce the number of model parameters. Additionally, the proposed model uses a two-stream framework and a channel attention mechanism to enhance the extraction of discriminative features.

To summarize, this paper contributes the following:

- We design a hierarchical co-occurrence feature learning module that improves performance with a minimal number of additional parameters. First, SGC operations learn the local features of body joints. Then, after transposing the joint and channel dimensions, CNN operations aggregate the global features of all joints.

- By introducing depth-wise separable convolution layers into the model, we further improve its efficiency.

- Our method achieves higher accuracy with fewer parameters and lower computational complexity on the NTU RGB+D 60 and 120 datasets. Compared with SOTA methods, it achieves a better balance between accuracy and efficiency.

2. Related Work

2.1. Skeleton-Based Action Recognition

Li et al. [16] proposed a CNN-based co-occurrence feature learning method that uses hierarchical aggregation to learn point-level and frame-level skeleton information. However, CNN-based methods do not consider the natural spatial structure of the skeleton. Inspired by GNN-based methods, Yan et al. [9] first proposed ST-GCN to model the spatial and temporal structure of skeleton data simultaneously. Building on this work, Li et al. [11] exploited latent physical dependencies between joints to model global relationships within the skeleton. Shi et al. [12] introduced a two-stream adaptive GCN that uses bone length and orientation information to improve the model’s feature discrimination. Ye et al. [17] proposed a GNN based on context encoding that models global dependencies to learn the skeleton topology automatically. Liu et al. [15] designed MS-G3D, a large and complex model that combines multi-scale context aggregation with unified spatio-temporal dependency modeling. Si et al. [18] adopted a residual GNN to hierarchically capture dependencies between body joints and combined it with multiple skip-clip LSTMs to model the dynamics of skeleton sequences. Subsequently, Si et al. [19] proposed an attention-enhanced graph convolutional LSTM network to extract discriminative features. To make action recognition robust to noisy/incomplete skeleton data, the methods in [20,21] extract non-local features from joint position data. Song et al. [10,13] proposed multi-stream Graph Convolution Networks that explore more discriminative features, achieving more robust recognition with incomplete skeletons. Li et al. [22] introduced a pose refinement module to recover joints/poses that are occluded or inaccurately detected.

In recent years, transformer-based methods have received extensive attention for their effectiveness in capturing long-range dependencies. Plizzari et al. [23] proposed a two-stream transformer self-attention network that models the spatial and temporal correlations of joints separately. Liu et al. [24] combined GCN and transformer operations to capture the spatial–temporal features of joints. Additionally, multi-modal learning exploits the complementarity between different types of data, enabling a more comprehensive understanding of action context. Moon et al. [25] fused the features of RGB and pose streams in a pose-driven manner, achieving good recognition accuracy. Guo et al. [26] proposed an asynchronous fusion strategy to address temporal consistency and spatial complementarity in multi-modal fusion. However, these algorithms improve performance at the cost of a large number of learnable parameters, which makes training more difficult and increases computational complexity.

2.2. Efficient Models

Considerable research has addressed lightweight CNN design, but work on reducing the complexity of skeleton-based networks is relatively scarce. Cheng et al. [27] constructed a lightweight skeleton-based CNN, but its accuracy is lower than that of GCN models. Zhang et al. [28] proposed a simple and effective Semantic Guidance Network (SGN), which enhances the network’s feature expression capability by introducing high-level semantics. Li et al. [22] proposed an efficient model that integrates skeletal motion and spatial information. Cheng et al. [14] proposed Shift-GCN, which provides flexible receptive fields for spatial and temporal graphs by grouping and shifting the input channels before convolution, but the shift is a relatively time-consuming memory operation. Song et al. [29] built a family of efficient Graph Convolution Network (EfficientGCN) baselines by embedding separable convolution layers into multi-stream networks. Hedegaard et al. [30] proposed a continual-inference version of ST-GCN with frame-by-frame prediction, which significantly reduces the complexity of online inference. Chen et al. [4] captured global and local features with the Multi-Scale Video Longformer network and used local window attention to reduce redundancy and computational cost. Wu et al. [31] proposed the multi-grain contextual focus (MCF) and temporal discrimination focus (TDF) modules to enhance model performance. Shi et al. [32] proposed a lightweight transformer-based model, but its action recognition accuracy is lower than that of other SOTA models.

3. Efficient Hierarchical Co-Occurrence Graph Convolution Networks

3.1. Model Architecture

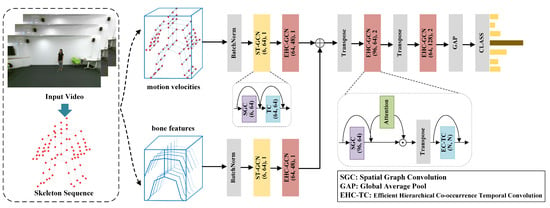

Figure 2 illustrates the structure of the EHC-GCN. First, data preprocessing yields two input branches of identical size: motion velocity and bone features. Each input sequence can be represented by a tensor $X \in \mathbb{R}^{C \times T \times N}$, where C, T, and N represent the numbers of channels, frames, and joints, respectively. The two inputs are fed into the network as separate streams, and both branches share the same architecture. Each input branch consists of a BatchNorm layer, an initial ST-GCN module [7], and the efficient hierarchical co-occurrence Graph Convolution Network (EHC-GCN) module proposed in this paper; the module is detailed in the following subsections. After the input branches, the feature maps of the two branches are concatenated. The main branch then applies two further EHC-GCN modules. Finally, the output feature maps are globally averaged into a feature vector, and a fully connected layer classifies the action category.

Figure 2.

The overview of the proposed EHC-GCN model.

3.2. Data Preprocessing

By preprocessing the skeleton data [12], two sets of distinct input features are obtained: motion velocity and bone features. They are defined as follows:

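- Motion velocity consists of fast motion $v^{f}$ and slow motion $v^{s}$:

$$v^{f}_{t,i} = x_{t+2,i} - x_{t,i}, \qquad v^{s}_{t,i} = x_{t+1,i} - x_{t,i} \qquad (1)$$

- Bone features consist of skeletal length $l$ and skeletal angle $a$:

$$l_{t,i} = x_{t,i} - x_{t,i_{adj}}, \qquad a_{t,i,w} = \arccos\left(\frac{l_{t,i,w}}{\sqrt{l_{t,i,x}^{2} + l_{t,i,y}^{2} + l_{t,i,z}^{2}}}\right), \quad w \in \{x, y, z\} \qquad (2)$$

where $i_{adj}$ represents the adjacent joint of the i-th joint and $x_{t,i}$ represents the 3D coordinates of joint i in frame t.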
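The two preprocessing streams can be illustrated with a minimal plain-Python sketch. It assumes the following definitions: fast motion as the difference between frames t + 2 and t, slow motion as the difference between frames t + 1 and t, a bone vector as a joint's coordinates minus those of its adjacent joint, and bone angles as the per-axis arccos of the normalized bone vector. The function names, the hypothetical `parent` list encoding each joint's adjacent joint, and the zero-filling at sequence boundaries are illustrative assumptions, not the authors' code.

```python
import math

def motion_velocity(x):
    """Fast and slow motion for a clip x[t][i] = (x, y, z).

    Frames without a successor at the required offset stay zero
    (an assumed boundary convention).
    """
    num_frames, num_joints = len(x), len(x[0])
    zero = (0.0, 0.0, 0.0)
    fast = [[zero] * num_joints for _ in range(num_frames)]
    slow = [[zero] * num_joints for _ in range(num_frames)]
    for t in range(num_frames):
        for i in range(num_joints):
            if t + 2 < num_frames:  # fast motion: x_{t+2} - x_t
                fast[t][i] = tuple(a - b for a, b in zip(x[t + 2][i], x[t][i]))
            if t + 1 < num_frames:  # slow motion: x_{t+1} - x_t
                slow[t][i] = tuple(a - b for a, b in zip(x[t + 1][i], x[t][i]))
    return fast, slow

def bone_features(x, parent):
    """Bone vectors and per-axis bone angles; parent[i] is the adjacent joint of joint i."""
    lengths, angles = [], []
    for frame in x:
        frame_len, frame_ang = [], []
        for i, joint in enumerate(frame):
            bone = tuple(a - b for a, b in zip(joint, frame[parent[i]]))
            norm = math.sqrt(sum(c * c for c in bone))
            # Clamp for floating-point safety; a zero-length bone (e.g. the root) gets zero angles.
            ang = tuple(math.acos(max(-1.0, min(1.0, c / norm))) if norm > 0 else 0.0
                        for c in bone)
            frame_len.append(bone)
            frame_ang.append(ang)
        lengths.append(frame_len)
        angles.append(frame_ang)
    return lengths, angles

# Toy clip: 3 frames, 2 joints; joint 1 moves along x, joint 0 is the root.
clip = [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
        [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
        [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0)]]
fast, slow = motion_velocity(clip)
lengths, angles = bone_features(clip, parent=[0, 0])
```

For joint 1, the fast motion at frame 0 is (3, 0, 0) while the slow motion is (1, 0, 0), so the two streams encode displacement at two temporal scales.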

3.3. Spatio-Temporal Graph Convolution

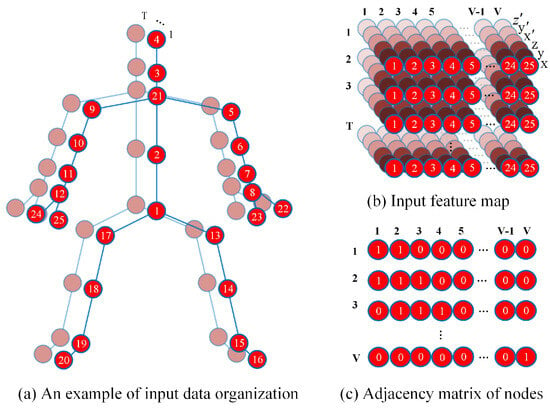

A skeleton sequence can be regarded as a graph of all body joints in each frame, as shown in Figure 3a. Because the structure and function of body joints are fixed, the ST-GCN algorithm [9] extracts joint features by constructing a spatio-temporal graph. Specifically, it consists of a spatial graph convolution (SGC) and a temporal convolution (TC). Figure 3b shows the input feature map of the SGC operation, $f_{in} \in \mathbb{R}^{C_{in} \times T \times N}$. Figure 3c shows the adjacency matrix between joints, A + I, where A is the connection matrix given by the natural structure of the body joints and I represents the connection of each joint to itself. Moreover, since each edge between joints has a different importance, a learnable edge weight matrix M is introduced. The SGC operation is expressed in Equation (3):

$$f_{out} = \sum_{d=0}^{D} W_d \, f_{in} \left( \Lambda_d^{-\frac{1}{2}} A_d \Lambda_d^{-\frac{1}{2}} \odot M_d \right) \qquad (3)$$

where D is the predefined maximum spatial graph distance (set to 2 in this paper), $A_d$ is the adjacency matrix connecting joints at graph distance d (so that $A_0 = I$ and $A_1 = A$), $\Lambda_d$ is the diagonal degree matrix used to normalize $A_d$, $M_d$ is the corresponding learnable edge weight matrix, $\odot$ denotes element-wise multiplication, and $W_d$ represents the weight parameters. After the SGC operation, the resulting output feature map is $f_{out} \in \mathbb{R}^{C_{out} \times T \times N}$.
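A simplified, single-adjacency version of the SGC in Equation (3) can be sketched in plain Python. This sketch assumes one adjacency term, the self-looped matrix A + I normalized as $\Lambda^{-1/2}(A + I)\Lambda^{-1/2}$, rather than the full sum over graph distances; the function names and the list-of-lists tensor layout are hypothetical.

```python
import math

def normalized_adjacency(edges, num_joints):
    """Lambda^{-1/2} (A + I) Lambda^{-1/2} for an undirected skeleton graph."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)] for i in range(num_joints)]
    for i, j in edges:  # natural bone connections
        a[i][j] = a[j][i] = 1.0
    degree = [sum(row) for row in a]  # diagonal entries of Lambda
    return [[a[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(num_joints)]
            for i in range(num_joints)]

def spatial_graph_conv(f_in, adj, mask, weight):
    """f_out = W f_in (adj ⊙ mask) for one adjacency term.

    f_in: [C_in][T][N], adj and mask: [N][N], weight: [C_out][C_in].
    """
    c_in, num_frames, n = len(f_in), len(f_in[0]), len(adj)
    a_m = [[adj[i][j] * mask[i][j] for j in range(n)] for i in range(n)]
    # Spatial aggregation: each joint j sums the features of its neighbours i.
    g = [[[sum(f_in[c][t][i] * a_m[i][j] for i in range(n)) for j in range(n)]
          for t in range(num_frames)] for c in range(c_in)]
    # Channel mixing with W (equivalent to a 1 x 1 convolution).
    return [[[sum(weight[o][c] * g[c][t][j] for c in range(c_in)) for j in range(n)]
             for t in range(num_frames)] for o in range(len(weight))]

# Chain of 3 joints (0-1-2), one input channel, one frame, all-ones edge-weight mask.
adj = normalized_adjacency([(0, 1), (1, 2)], num_joints=3)
ones = [[1.0] * 3 for _ in range(3)]
out = spatial_graph_conv([[[1.0, 0.0, 0.0]]], adj, ones, weight=[[2.0]])
```

A feature placed only on joint 0 spreads to its direct neighbour (joint 1) but not to joint 2, which illustrates why a single SGC layer aggregates only locally.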

Figure 3.

Spatial graph convolution operation representation.

To capture the temporal information of joints, convolution operations are applied along the temporal dimension. Since the features of the same joint across all frames naturally form a sequence, a $K_t \times 1$ convolution is applied to the output feature map of the SGC operation, where $K_t$ is the size of the TC kernel (set to 5 in this paper).
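A $K_t \times 1$ temporal convolution can be sketched for a single channel as follows. The sketch assumes zero padding so that the number of frames is preserved, and, as in common deep-learning libraries, implements the "convolution" as cross-correlation; the function name is hypothetical.

```python
def temporal_conv(x, kernel):
    """K_t x 1 temporal convolution on one channel.

    x: [T][N] features of one channel; kernel: K_t weights (K_t odd).
    Each joint is filtered independently along time, with zero padding so
    the output keeps T frames (computed as cross-correlation).
    """
    k = len(kernel)
    pad = k // 2
    num_frames, num_joints = len(x), len(x[0])
    out = [[0.0] * num_joints for _ in range(num_frames)]
    for t in range(num_frames):
        for j in range(num_joints):
            for s in range(k):
                src = t + s - pad
                if 0 <= src < num_frames:
                    out[t][j] += kernel[s] * x[src][j]
    return out

# 6 frames, 2 joints; joint 0 moves linearly, joint 1 is static at zero.
x = [[float(t), 0.0] for t in range(6)]
smoothed = temporal_conv(x, kernel=[0.2] * 5)  # K_t = 5 moving average
```

Because the kernel only slides along the time axis, each output value depends on one joint in $K_t$ consecutive frames, never on other joints.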

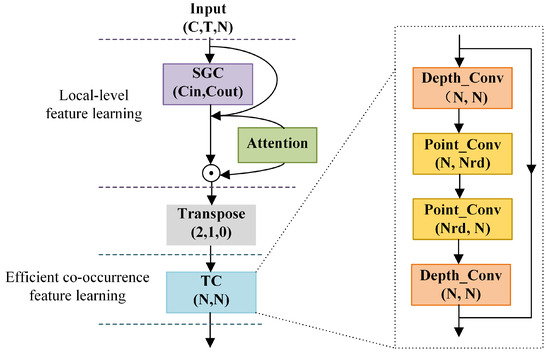

3.4. Efficient Hierarchical Co-Occurrence Convolution

Similar to CNNs, a GCN can be seen as local feature aggregation in the spatial domain of skeleton data combined with global feature aggregation across channels. Because the spatial aggregation is local, it may be difficult to capture interactions between distant joints. As the output of a convolution is the global response of all input channels, transposing the joint and channel dimensions allows the convolution layer to easily learn the co-occurrence of all joints. We therefore adopt a hierarchical co-occurrence framework to adjust the degree of feature aggregation. First, we learn the local features of joints through SGC operations. After transposing the joint and channel dimensions, CNN operations learn the global features of all joints while the convolution kernels also extract temporal features, as shown in Figure 4 (left), where $C_{in}$ and $C_{out}$ represent the numbers of input and output channels, respectively, and N represents the total number of body joints.
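The effect of the transposition can be shown with a toy example. In this hypothetical sketch, the CNN stage is reduced to a single 1 × 1 convolution (the module in Figure 4 also uses temporal kernels): once the N joints are moved into the channel axis, every output channel becomes a learned weighted combination of all joints, which is how the convolution sees the co-occurrence of all joints at once.

```python
def transpose_joints_to_channels(f):
    """[C][T][N] -> [N][T][C]: every joint becomes an input channel."""
    c_len, num_frames, n = len(f), len(f[0]), len(f[0][0])
    return [[[f[c][t][j] for c in range(c_len)] for t in range(num_frames)]
            for j in range(n)]

def pointwise_conv(x, weight):
    """1 x 1 convolution: x is [C_in][T][W], weight is [C_out][C_in]."""
    c_in, num_frames, width = len(x), len(x[0]), len(x[0][0])
    return [[[sum(weight[o][c] * x[c][t][k] for c in range(c_in)) for k in range(width)]
             for t in range(num_frames)] for o in range(len(weight))]

# Local features after SGC: C = 2 channels, T = 1 frame, N = 3 joints.
local = [[[1.0, 2.0, 3.0]],
         [[10.0, 20.0, 30.0]]]
joint_channels = transpose_joints_to_channels(local)            # shape [3][1][2]
global_feat = pointwise_conv(joint_channels, [[1.0, 1.0, 1.0]])  # one output channel
```

With all-ones weights the single output channel is simply the sum over the three joints; learned weights would instead emphasize whichever joint combinations co-occur in an action.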

Figure 4.

Hierarchical co-occurrence module (left) and efficient co-occurrence temporal convolution layers (right).

To further improve efficiency and reduce computational cost, an advanced separable convolution architecture [33] is applied, as shown in Figure 4 (right). This architecture replaces the standard TC with a depth-wise convolution layer followed by a point-wise convolution layer, and introduces residual connections to ease model training. Additionally, a visualization chart (see Appendix B) illustrates the step-by-step transformation of skeleton data through the different network layers.

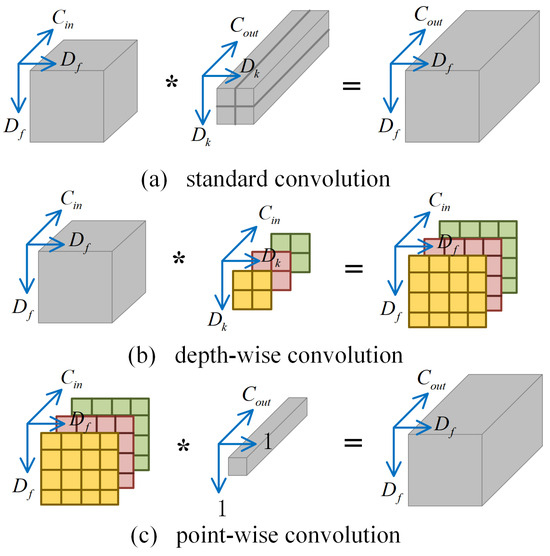

A separable convolution consists of two parts: depth-wise convolution (D_Conv) and point-wise convolution (P_Conv). D_Conv convolves each input channel separately (a group convolution with one group per channel), and P_Conv uses 1 × 1 convolution kernels to combine the outputs of all channels. Figure 5 compares standard and separable convolution visually. Assuming that the input features have a spatial size of $H \times W$, the kernel size is $K \times K$, and the numbers of input and output channels are $C_{in}$ and $C_{out}$, respectively, the computational cost of standard convolution is $H \cdot W \cdot K^2 \cdot C_{in} \cdot C_{out}$, as depicted in Figure 5a. In contrast, the cost of the separable convolution in Figure 5b,c is $H \cdot W \cdot K^2 \cdot C_{in} + H \cdot W \cdot C_{in} \cdot C_{out}$, which is a fraction $\frac{1}{C_{out}} + \frac{1}{K^2}$ of the standard cost. Replacing standard convolution with separable convolution layers therefore effectively reduces the computational cost of the model.
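The cost comparison above can be checked numerically. The sketch below counts multiply-accumulate operations for a temporal layer on a 300-frame, 25-joint feature map with a 5 × 1 kernel; the channel width of 64 is a hypothetical value chosen for illustration, not a setting reported for EHC-GCN, and the function name is likewise hypothetical.

```python
def conv_costs(h, w, k_h, k_w, c_in, c_out):
    """Multiply-accumulate counts of a standard vs. a depth-wise separable convolution.

    Assumes stride 1 and 'same' padding, so the output is also h x w.
    """
    standard = h * w * k_h * k_w * c_in * c_out
    depthwise = h * w * k_h * k_w * c_in  # one k_h x k_w filter per input channel (D_Conv)
    pointwise = h * w * c_in * c_out      # 1 x 1 convolution mixing channels (P_Conv)
    return standard, depthwise + pointwise

# Temporal layer on a 300-frame, 25-joint map with a 5 x 1 kernel and 64 channels.
standard, separable = conv_costs(h=300, w=25, k_h=5, k_w=1, c_in=64, c_out=64)
ratio = separable / standard  # equals 1/c_out + 1/(k_h * k_w)
```

Here the separable layer needs 33.12 M multiply-accumulates versus 153.6 M for the standard layer, roughly 22% of the cost; for a non-square kernel the $1/K^2$ term becomes $1/(k_h k_w)$.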

Figure 5.

Comparison between standard convolution and separable convolution.

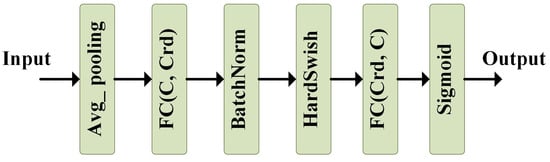

3.5. Channel Attention Module

The hierarchical co-occurrence framework effectively extracts local and global joint information, implicitly acting as an attention mechanism over the joint dimension. To further model the relationships between channel features, we implement an attention module based on the SENet [34] structure that automatically learns the importance of each channel. As shown in Figure 6, the spatial dimensions are first compressed by adaptive average pooling to obtain a global receptive field. Two fully connected (FC) layers then reduce and restore the channel dimension, which introduces a nonlinear transformation while keeping the computational load low. Finally, the resulting score for each channel is applied to the corresponding channel by multiplicative weighting.
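The squeeze, excitation, and scaling steps can be sketched as follows. Following the original SENet [34] design, this sketch assumes a ReLU between the two FC layers and a sigmoid that produces the channel scores; these activation choices, the omitted biases, and the function name are assumptions for illustration.

```python
import math

def se_channel_attention(x, w_reduce, w_expand):
    """SENet-style channel attention on a [C][T][N] feature map.

    w_reduce: [C // r][C] weights of the reduction FC layer,
    w_expand: [C][C // r] weights of the expansion FC layer (biases omitted).
    """
    # Squeeze: global average pooling over the T x N dimensions.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one score per channel.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w_reduce]
    scores = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
              for row in w_expand]
    # Scale: multiply every value of channel c by its score.
    out = [[[scores[c] * v for v in row] for row in x[c]] for c in range(len(x))]
    return out, scores

# C = 2 channels, T = 1, N = 2, reduction ratio r = 2.
feat = [[[1.0, 1.0]], [[2.0, 2.0]]]
out, scores = se_channel_attention(feat, w_reduce=[[1.0, 1.0]], w_expand=[[0.0], [1.0]])
```

The sigmoid keeps every score in (0, 1), so the module can only rescale channels relative to each other, never flip their sign.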

Figure 6.

Channel attention module.

3.6. Loss Function

Given the logit vector $z \in \mathbb{R}^{Q}$ produced by the final fully connected layer of the model, where Q is the total number of action categories, the classification probability of the input sample is computed with the Softmax function:

$$p_i = \frac{\exp(z_i)}{\sum_{j=1}^{Q} \exp(z_j)}$$

where $z_i$ represents the i-th component of z. A cross-entropy loss then serves as the objective function for optimizing the model:

$$\mathcal{L} = -\sum_{i=1}^{Q} y_i \log p_i$$

where $y \in \{0, 1\}^{Q}$ is the one-hot vector indicating the ground-truth action class.
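The softmax and cross-entropy can be computed in a numerically stable way as in the generic sketch below (function names hypothetical). Because the target is one-hot, the sum in the loss collapses to the negative log-probability of the ground-truth class, so the sketch takes an integer class index instead of the full vector y.

```python
import math

def softmax(z):
    """Softmax over logits z; subtracting max(z) avoids overflow."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(z, label):
    """Cross-entropy -sum_i y_i log p_i for a one-hot target with y[label] = 1.

    Computed as logsumexp(z) - z[label], which equals -log softmax(z)[label].
    """
    m = max(z)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in z))
    return log_sum_exp - z[label]

logits = [1.0, 2.0, 3.0]
probs = softmax(logits)
loss = cross_entropy(logits, label=2)
```

With uniform logits over Q = 60 classes the loss equals log 60, the value of a classifier that has learned nothing.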

4. Experiments

4.1. Datasets and Evaluation Metrics

The NTU RGB+D 60 dataset [35] is one of the most widely used large-scale action recognition datasets. It contains 56,880 videos of 60 action types performed by 40 subjects aged between 10 and 35, captured by three Microsoft Kinect v2 cameras. The actions fall into three groups: (1) 40 daily actions; (2) 9 health-related actions; and (3) 11 interactive actions. The dataset defines two benchmarks: (1) Cross-Subject (X-Sub), which splits the 40 subjects into two groups, yielding 40,320 training samples and 16,560 testing samples; and (2) Cross-View (X-View), which uses videos from cameras 2 and 3 for training (37,920 videos) and videos from camera 1 for testing (18,960 videos). A total of 302 samples with missing or incomplete skeleton data are ignored [15]. The dataset provides RGB images, depth images, and skeleton information simultaneously. In our experiments, we used the 3D skeleton sequences provided by the Kinect v2 cameras and 2D human poses extracted from the RGB videos. The 2D poses were obtained with a Faster R-CNN [36] detector with a ResNet50 backbone followed by the HRNet-w32 [37] single-person pose estimator.

The NTU RGB+D 120 dataset [38] is presently the most extensive dataset available for indoor action recognition and extends the NTU RGB+D 60 dataset. It comprises 114,480 videos in 120 action classes. The dataset also defines two benchmarks: (1) Cross-Subject (X-Sub), with 63,026 training samples and 50,922 evaluation samples; and (2) Cross-Setup (X-Set), with 54,471 training videos and 59,477 evaluation videos, divided according to the camera setup (the distance and height between the subject and the camera). Additionally, 532 samples with missing or incomplete skeleton data are excluded from the dataset.

Evaluation metrics: We report Top-1 accuracy on both datasets. We also measure model efficiency by the number of trainable parameters (Params) and floating-point operations (FLOPs). A higher FLOPs count means that more computation is needed during inference, which usually implies greater computational complexity and longer execution time. In the ablation studies and comparative experiments on the NTU RGB+D 60 dataset, we include Params and FLOPs as additional evaluation metrics.

4.2. Implementation Details

Skeleton data can be obtained from motion capture systems (such as the Kinect v2 camera) or through pose estimation algorithms. The NTU 2D and 3D skeleton data that we used have the following similarities and differences:

- Each NTU video clip has at most 300 frames. To unify the input length, skeleton sequences with fewer than 300 frames were zero-padded. The skeletons of at most two individuals were selected for each action sequence; if fewer than two individuals were present, the missing skeletons were zero-padded.

- The NTU RGB+D 3D skeleton data were captured by the Kinect v2 camera, which senses the 3D positions of 25 body joints. Each skeleton sequence therefore contains the coordinates of 25 body joints over 300 frames, with 3 input channels consisting of the 3D camera-space coordinates (x, y, z).

- The NTU RGB+D 2D skeleton data were obtained with the HRNet-w32 [37] pose estimation algorithm, which provides 2D coordinates for 17 body joints. Each skeleton sequence therefore contains the coordinates of 17 body joints over 300 frames. Following [11], we use three input channels consisting of the 2D pixel coordinates (x, y) and the confidence score.

- Because the two acquisition methods yield different numbers of body joints and different adjacency relationships, the adjacency matrix between joints used by the GCN operations (as shown in Figure 3c) must be customized for each data source so that it reflects the current body joint connectivity.
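A minimal sketch of the padding step is shown below, assuming clips are stored as nested Python lists (a hypothetical layout) and that the first two persons are kept when more are present; the text does not say how the two individuals are selected, so that rule is an assumption, as is the function name.

```python
def pad_skeleton(clip, max_frames=300, max_persons=2, num_joints=25, channels=3):
    """Zero-pad a clip to shape [max_persons][max_frames][num_joints][channels].

    clip: list over persons -> list over frames -> list of joint tuples.
    Keeping the first `max_persons` persons is an assumed selection rule.
    """
    zero_joint = (0.0,) * channels
    padded = []
    for p in range(max_persons):
        frames = clip[p][:max_frames] if p < len(clip) else []
        person = [list(frame) for frame in frames]
        # Append all-zero frames until the clip reaches max_frames.
        person += [[zero_joint] * num_joints for _ in range(max_frames - len(frames))]
        padded.append(person)
    return padded

# One detected person, 2 frames of 25 Kinect v2 joints with (x, y, z) values.
clip = [[[(1.0, 1.0, 1.0)] * 25 for _ in range(2)]]
data = pad_skeleton(clip)
```

For the 2D HRNet-w32 data, the same call with `num_joints=17` applies, with the three channels then holding (x, y, confidence).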

The experiments were conducted on two RTX 2080 Ti (11 GB) GPUs with an Intel(R) Xeon(R) Platinum 8255C CPU @ 2.50 GHz. The model was trained for 70 epochs. The initial learning rate was set to 0.1, with a warm-up strategy [39] applied during the first 10 epochs and cosine decay thereafter. Training used the SGD optimizer with a momentum of 0.9 and a weight decay of 0.0001.
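The learning-rate schedule can be sketched as a function of the epoch index. The text specifies only a 10-epoch warm-up followed by a cosine schedule over 70 epochs; the linear shape of the warm-up and the decay towards zero at epoch 70 are assumptions, and the function name is hypothetical.

```python
import math

def learning_rate(epoch, base_lr=0.1, warmup_epochs=10, total_epochs=70):
    """Learning rate for a 0-indexed epoch.

    Assumes a linear warm-up over the first `warmup_epochs` epochs, followed by
    cosine decay from base_lr towards zero over the remaining epochs.
    """
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))

schedule = [learning_rate(e) for e in range(70)]
```

The rate ramps from 0.01 to 0.1 over the warm-up, reaches 0.05 halfway through the cosine phase (epoch 40), and approaches zero by the final epoch.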

4.3. Ablation Study

To verify the effectiveness of each module, we conducted ablation studies on the proposed EHC-GCN model using the NTU RGB+D 60 dataset with the X-Sub benchmark. First, we validated the effectiveness of the hierarchical co-occurrence (HC) framework and the attention module. As shown in Table 1, when only the hierarchical co-occurrence framework is removed (Non-HC), the model’s recognition accuracy decreases by 1.6% compared to the proposed EHC-GCN. Similarly, when only the attention module is removed (Non-Attention), the model’s recognition accuracy decreases by 0.9% compared to the EHC-GCN. Additionally, as seen in Table 1, the HC structure and attention module can effectively improve the performance of the model, while the model parameters and FLOPs only increase slightly.

Table 1.

The effectiveness of HC and the attention module.

Next, to verify the necessity of each input branch, we compared models with different input branches. As shown in Table 2, the two-stream structure significantly improves model performance, which demonstrates the effectiveness of the data preprocessing.

Table 2.

The effectiveness of data preprocessing.

Finally, we investigated the impact of the basic convolution layer versus the depth-wise separable convolution layer structure on the performance. In previous studies, separable convolution layers have proven their effectiveness and robustness [40]. As shown in Table 3, by adopting the advanced depth-wise separable structure, we can achieve performance comparable to that of the basic convolution layer with significantly reduced computational cost.

Table 3.

The impact of separable layers on model performance.

Additionally, the efficient temporal convolution module uses a convolution kernel of a fixed size to extract temporal features. Since the kernel size determines the receptive field, kernels of different sizes differ in their ability to extract features. To find the optimal temporal kernel size, we compared kernels of various sizes on the NTU RGB+D 60 dataset under the X-Sub benchmark. As shown in Table 4, the model performs best when the temporal convolution layer uses a 5 × 1 kernel; hence, the temporal kernel size is set to 5 × 1.

Table 4.

The impact of different temporal convolution kernel sizes (%).

The model in this paper consists of L EHC-GCN modules, and the number of network layers strongly affects recognition accuracy. Too few layers may fail to extract sufficiently discriminative features, while too many layers can lead to overfitting, and the increased computational cost can also hinder practical deployment. As shown in Table 5, we conducted experiments with different numbers of EHC-GCN modules. With L = 3, the model achieved a recognition accuracy of 88.5% on the X-Sub benchmark, with 2.09 GFLOPs and 0.16 M parameters. Increasing the number of EHC-GCN modules further did not improve performance.

Table 5.

The impact of different numbers of EHC-GCN modules.

4.4. Comparisons with SOTA Methods

We compare the EHC-GCN model with SOTA methods on the NTU 60 [35] and NTU 120 [38] datasets; the results are shown in Table 6 and Table 7. These tables include methods based on 2D skeletons extracted from RGB data and on 3D skeletons obtained from Kinect cameras. Our method achieves better or competitive accuracy on both data forms. For 3D skeleton-based action recognition, compared to the CNN-based HCN algorithm [16], EHC-GCN improves Top-1 accuracy by 2.0% and 2.3% on the X-Sub and X-View benchmarks, respectively. Among the graph-based methods, three models are particularly noteworthy. The first is ST-GCN [9], the pioneering graph convolution model. Compared to ST-GCN, our model improves recognition accuracy by 7.0% on X-Sub and 5.1% on X-View, while reducing FLOPs by a factor of 7.8 and parameters by a factor of 19.4. The second is 2s-AGCN [12], whose recognition accuracy is similar to ours but which requires about 17.9 times the FLOPs and 43.4 times the parameters of our model. The third is MS-G3D [15], a high-accuracy model proposed in recent years. Its accuracy is higher than that of our method, but it is complex and over-parameterized.

Table 6.

Comparison experiment on the NTU 60 dataset.

Table 7.

Comparison experiment on the NTU 120 dataset.

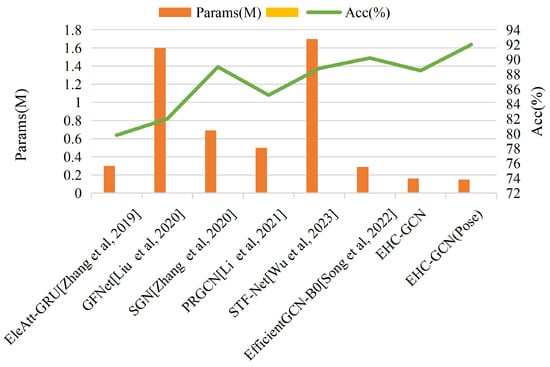

In particular, we focus on several lightweight action recognition models [22,28,29,30,31,44,45], which are marked with an asterisk (*) in Table 6 and Table 7. Figure 7 shows the Params (M) and accuracy (%) of these lightweight models. The horizontal axis lists the model names; the left vertical axis shows Params (M) as orange bars, and the right vertical axis shows accuracy (%) as a green line. Compared to EleAtt-GRU [44], GFNet [45], and PRGCN [22], our model achieves higher accuracy with fewer parameters. Compared to SGN [28], our approach has slightly lower accuracy on the NTU 60 dataset but performs better on the NTU 120 dataset with fewer parameters. Compared to the most advanced lightweight models (CoST-GCN, CoAGCN, and CoS-TR [30]), our model achieves similar performance with fewer parameters. Note that these models make frame-by-frame predictions, which significantly reduces their FLOPs, whereas our method and the other compared algorithms operate on the entire skeleton sequence. EfficientGCN-B0 [29] is generally more accurate than our method, but our method achieves slightly higher accuracy on the X-Set benchmark of NTU 120, with fewer parameters and lower computational complexity. Moreover, for 2D skeleton-based action recognition using pose estimation, our method achieves superior results in both accuracy and efficiency. In summary, our model has low computational resource requirements and performs well.

Figure 7.

Comparison of model complexity between the EHC-GCN and competing methods. (EleAtt-GRU [44], GFNet [45], SGN [28], PRGCN [22], STF-Net [31], EfficientGCN-B0 [29]).

4.5. Speed

In the preceding subsections, we reported the FLOPs required for model inference. FLOPs (floating-point operations) measure the amount of computation, and hence the overall complexity, of a model. For example, the inference time can be roughly estimated by dividing the model's inference FLOPs by the product of the GPU's peak FLOPS (floating-point operations per second) and its utilization rate. For a given device, inference time therefore generally increases with FLOPs, although memory access and parallelism also affect the actual speed.
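The rough estimate described above can be written as a one-line function. The 10 TFLOPS peak and 50% utilization below are hypothetical placeholders, not measured properties of the hardware used in this paper; the 2.09 GFLOPs figure is the value reported for EHC-GCN with L = 3, and the function name is hypothetical.

```python
def estimated_latency_s(model_flops, peak_flops_per_s, utilization):
    """Rough inference-time estimate: FLOPs / (peak FLOPS x utilization).

    Ignores memory traffic, kernel-launch overhead, and data loading, so it is
    only a first-order approximation.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return model_flops / (peak_flops_per_s * utilization)

# 2.09 GFLOPs per sample on a hypothetical device with a 10 TFLOPS peak
# running at 50% utilization.
latency = estimated_latency_s(2.09e9, 10e12, 0.5)
```

Under these placeholder values the estimate is about 0.42 ms per sample. Because the formula ignores memory traffic, measured throughput (Table 8) remains the more reliable indicator.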

To give a more intuitive picture of real-time efficiency, we report model inference times on different hardware (CPU and GPU) for the NTU 60 dataset. We used an Intel(R) Xeon(R) Platinum 8255C CPU @ 2.50 GHz and an RTX 2080 Ti (11 GB) GPU. For CPU inference, we processed individual samples (batch size 1). For GPU inference, we used a batch size of 16 to exploit parallel processing, at which point GPU utilization was close to 100%.

As shown in Table 8, “Frames per pred” is the number of frames in each skeleton clip sample, and “Throughput (preds/s)” is the number of samples processed per second on the CPU or GPU. The EHC-GCN model processes more than 12.1 sequences per second on the CPU and over 210 sequences per second on the GPU. Since each skeleton sequence typically contains 300 frames (10 s of video at the Kinect v2 frame rate of 30 fps), even the CPU throughput corresponds to more than 3,600 frames per second, which is sufficient for real-time processing.

Table 8.

Real-time efficiency on different hardware setups (CPU, GPU).

5. Discussion

5.1. The Application of the EHC-GCN Model in Human–Computer Interaction Scenarios

Human–robot collaboration (HRC) is increasingly becoming a key element of manufacturing automation, meeting the demand for higher productivity and flexibility. In assembly tasks, for instance, components may be placed far from the human worker, and robots can assist by picking up and placing parts and tightening screws. HRC enables dynamic interaction between humans and robots in shared spaces based on continuously changing real-time situations, so both humans and robots should be able to recognize each other’s tasks and intentions. The central focus of this paper is to perceive human behavior rapidly and effectively, implicitly helping robots understand human intentions. After recognizing a human behavior, the robot can use a specific action as a trigger to predict the next behavior and act accordingly, which eliminates the need for workers to command the robot directly and makes human–robot collaboration more natural and enjoyable. Currently, the deployment of human perception systems on robot platforms is not yet mature, because such systems require human detection, tracking, and action recognition models that must all run in real time. Reducing model complexity is therefore necessary, and our EHC-GCN model achieves good recognition accuracy and efficiency, which facilitates its deployment on robot platforms.

5.2. Discussion on Noisy/Incomplete Skeleton Data

Noisy or incomplete skeletons are common in real-world applications. Handling occluded pose data is a challenge in computer vision, especially in tasks such as human pose estimation and skeleton-based action recognition. We believe this issue can be addressed from two directions. The first is to obtain more accurate and complete skeleton data through strategies such as data augmentation, model design [37], pose correction [22], contextual information, and fusion of multi-modal data [44]. The second is to improve the robustness of action recognition models to noisy or incomplete data [10,13,20,21]. For example, Song et al. [13] constructed an occlusion dataset for human actions by setting the occluded joints to zero and achieved good performance by exploiting more discriminative features of the skeleton data.
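The occlusion protocol described for Song et al. [13], in which occluded joints are set to zero, is easy to reproduce on any skeleton sequence for robustness testing. The following is a minimal plain-Python sketch. The list-of-frames layout, the function name `occlude`, and the choice of joints and frames are illustrative assumptions rather than the exact protocol of [13].

```python
from typing import Iterable, List, Optional, Sequence, Tuple

Joint = Tuple[float, float, float]
Frame = List[Joint]
ZERO: Joint = (0.0, 0.0, 0.0)


def occlude(sequence: Sequence[Sequence[Joint]],
            joint_ids: Iterable[int],
            frame_ids: Optional[Iterable[int]] = None) -> List[Frame]:
    """Return a copy of `sequence` with the given joints set to zero.

    `sequence` is a list of frames, each a list of (x, y, z) joints.
    If `frame_ids` is None, the joints are hidden in every frame;
    otherwise only in the listed frames (a temporary occlusion).
    The input sequence is left unchanged.
    """
    hidden = set(joint_ids)
    frames = set(range(len(sequence))) if frame_ids is None else set(frame_ids)
    return [
        [ZERO if (t in frames and v in hidden) else joint
         for v, joint in enumerate(frame)]
        for t, frame in enumerate(sequence)
    ]


# Toy sequence: 3 frames, 4 joints; hide joint 2 in frames 1 and 2 only.
toy = [[(float(t), float(v), 0.0) for v in range(4)] for t in range(3)]
occluded = occlude(toy, joint_ids=[2], frame_ids=[1, 2])
print(occluded[0][2], occluded[1][2])
```

Evaluating a trained model on such copies, with different joint sets (for example, one arm) and frame ranges, gives a simple robustness check.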

In our setting, skeleton data can still be obtained under occlusion from a depth camera or an existing pose estimation algorithm. Although such data may be imprecise and some joints may be occluded (set to zero), several strategies can make action recognition more robust to them. By preprocessing the skeleton data and feeding motion-velocity and bone features to the network as input streams, the model extracts discriminative features that help it cope with noisy or inaccurate joints. Thanks to its hierarchical structure, EHC-GCN handles features at different levels: it first focuses on local joint features and then integrates them to capture the global structure. If joints are inaccurately located or missing at the local level, the global information and more discriminative features may help compensate for these errors, because the relationships between joints are then modeled from a broader perspective. This suggests that the proposed approach has potential for handling noisy or incomplete skeleton data.
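The motion-velocity and bone inputs mentioned above (listed in Table A1 as fast/slow motion and skeleton length/angle, each with 6 channels) can be computed from raw joint coordinates. The sketch below is one plausible construction under stated assumptions: slow motion is the one-frame difference x[t+1] - x[t], fast motion is the two-frame difference x[t+2] - x[t], “skeleton length” is the bone vector from a joint’s parent, and “skeleton angle” is the per-axis angle arccos(b_i / |b|). These definitions, the zero padding at the end of the sequence, and the toy three-joint parent list are assumptions, not the paper’s exact preprocessing.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
ZERO: Vec3 = (0.0, 0.0, 0.0)


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def motion_features(seq: Sequence[Sequence[Vec3]]) -> List[List[Tuple[float, ...]]]:
    """Per frame and joint: slow motion (x[t+1]-x[t]) + fast motion (x[t+2]-x[t]).

    Differences that would run past the last frame are zero-padded, so the
    output keeps T frames with 6 values per joint.
    """
    T = len(seq)
    out = []
    for t, frame in enumerate(seq):
        row = []
        for v, x in enumerate(frame):
            slow = sub(seq[t + 1][v], x) if t + 1 < T else ZERO
            fast = sub(seq[t + 2][v], x) if t + 2 < T else ZERO
            row.append(slow + fast)          # tuple concatenation -> 6 values
        out.append(row)
    return out


def bone_features(seq: Sequence[Sequence[Vec3]],
                  parents: Sequence[int]) -> List[List[Tuple[float, ...]]]:
    """Per frame and joint: bone vector to the parent + per-axis bone angles."""
    out = []
    for frame in seq:
        row = []
        for v, x in enumerate(frame):
            b = sub(x, frame[parents[v]])
            norm = math.sqrt(sum(c * c for c in b))
            # Clamping guards against rounding just outside [-1, 1]; the root joint
            # (its own parent) has a zero bone and gets zero angles.
            angle = tuple(math.acos(max(-1.0, min(1.0, c / norm))) if norm > 0 else 0.0
                          for c in b)
            row.append(b + angle)
        out.append(row)
    return out


# Hypothetical chain of 3 joints (0 is the root) translating along x by 1 unit per frame.
parents = [0, 0, 1]
seq = [[(float(t), 0.0, 0.0), (t + 1.0, 0.0, 0.0), (t + 1.0, 1.0, 0.0)] for t in range(4)]
M = motion_features(seq)
B = bone_features(seq, parents)
print(M[0][0])   # slow (1, 0, 0) followed by fast (2, 0, 0)
print(B[0][1])   # bone (1, 0, 0) followed by angles (0, pi/2, pi/2)
```

Stacking the 6 values per joint over T frames and V joints yields the 6 × T × V tensors listed for each stream in Table A1.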

5.3. Future Work

In future work, we plan to explore the following directions:

- Fine-grained data augmentation: Integrating more detailed hand-joint features would benefit the recognition of finger-level action categories. Adding face keypoints could also help identify the emotional states of individuals as they perform actions.

- Multi-modal learning: Integrating multi-modal data (such as skeleton data, RGB videos, depth information, and audio signals) lets the different data types complement one another. This allows a more comprehensive understanding of the context of actions, thereby improving recognition accuracy.

- Deployment in practical applications: To transition the action recognition model from experimental simulation to real-world application, it is necessary to test and validate it in actual human–robot interaction scenarios. This includes integration with industrial robot systems, on-site trials, and performance evaluation.

6. Conclusions

In practical applications of human action recognition, balancing recognition speed and accuracy has always been a central research focus. This paper proposes an efficient hierarchical co-occurrence Graph Convolution Network that adjusts the degree of feature aggregation on demand through a simple and practical method and adopts depth-wise separable convolution layers to reduce the number of model parameters. In addition, the model uses a two-stream architecture and a channel attention mechanism. Compared with the ST-GCN baseline, EHC-GCN improves recognition accuracy by 7.0% and 5.1% on the cross-subject (CS) and cross-view (CV) benchmarks, respectively, while reducing FLOPs by 7.8%. Compared with state-of-the-art models, it achieves better or comparable accuracy with fewer parameters and lower computational cost, which makes it better suited to deployment on embedded platforms with limited computational resources.
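The parameter savings from separable temporal convolutions can be estimated directly from the EC-TC configuration in Table A1: a depth-wise (5, 1) convolution, a point-wise reduction to C/2 channels, a point-wise expansion back to C, and a second depth-wise (5, 1) convolution. This ordering resembles the sandglass block of Zhou et al. [33]. The sketch compares its weight count with a dense (5, 1) temporal convolution of the same width, such as the TC layer listed in Table A1. Ignoring biases and batch normalization, and the function names, are our simplifying assumptions.

```python
def standard_tc_params(channels: int, kernel: int = 5) -> int:
    """Weights of a dense (kernel, 1) temporal conv mapping C -> C channels (no bias)."""
    return kernel * channels * channels


def ec_tc_params(channels: int, kernel: int = 5, reduction: int = 2) -> int:
    """Weights of the EC-TC stack listed in Table A1 (no biases or BatchNorm):
    depth-wise (k, 1) -> point-wise C -> C/r -> point-wise C/r -> C -> depth-wise (k, 1).
    """
    mid = channels // reduction
    depthwise = kernel * channels            # one (k, 1) filter per channel
    pointwise = channels * mid + mid * channels
    return 2 * depthwise + pointwise


for c in (48, 64, 128):
    dense, sep = standard_tc_params(c), ec_tc_params(c)
    print(f"C={c}: dense {dense}, EC-TC {sep}, ratio {dense / sep:.1f}x")
```

Under these assumptions, an EC-TC layer needs roughly a quarter of the weights of a dense temporal convolution. The saving grows with channel width, because the dense cost scales as 5C² while the EC-TC cost scales as C² + 10C.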

Author Contributions

Conceptualization, H.W. and Y.B.; methodology, Y.B. and J.X.; validation, Y.B. and J.X.; investigation, D.Y.; data curation, D.Y. and L.X.; writing—original draft preparation, Y.B. and H.W.; writing—review and editing, D.Y.; visualization, L.X.; supervision, D.Y. and H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Liaoning Province Key Research and Development Program Project (2024JH2/102400062).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. The NTU RGB+D datasets are available at https://rose1.ntu.edu.sg/dataset/actionRecognition/ (accessed on 20 December 2024). The code for this research is available at https://github.com/baiying1812/EHC-GCN.git (accessed on 1 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

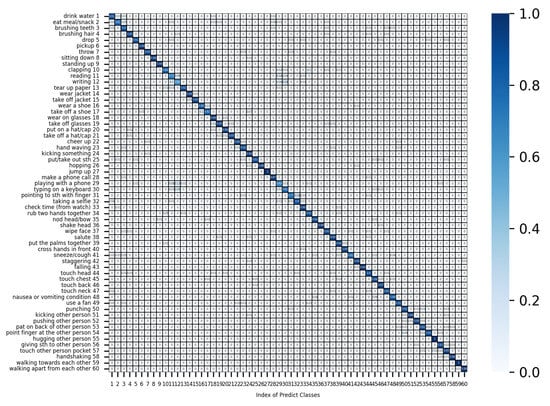

Appendix A. Confusion Matrix on the NTU 60 Dataset

Figure A1 visualizes the confusion matrix for action recognition on the NTU 60 dataset. The recognition accuracy of the EHC-GCN model remains relatively low for actions such as writing, reading, typing on a keyboard, and using a smartphone. These actions consist mainly of slight movements of both hands, and their features are highly similar in both the spatial and temporal dimensions. Because the NTU RGB+D skeletons contain few finger joints, hand information is generally insufficient, which significantly limits recognition accuracy for these classes. Likewise, putting on shoes and taking off shoes are similar in the spatial dimension and differ mainly in their temporal order, so distinguishing these actions requires further improvement.
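Confused pairs such as those discussed above can be extracted automatically from a confusion matrix by normalizing each row and ranking the off-diagonal entries. The plain-Python sketch below shows one way to do this. The function name and the toy three-class counts are hypothetical and do not correspond to Figure A1.

```python
from typing import List, Sequence, Tuple


def top_confusions(matrix: Sequence[Sequence[int]],
                   labels: Sequence[str],
                   k: int = 3) -> List[Tuple[str, str, float]]:
    """Return the k largest off-diagonal rates as (true label, predicted label, rate).

    Rows are true classes and columns are predictions. Each row is normalized by
    its total, so a rate is the fraction of that class's samples that were
    assigned to the other class.
    """
    pairs = []
    for i, row in enumerate(matrix):
        total = sum(row)
        if total == 0:
            continue                      # class absent from the evaluation set
        for j, count in enumerate(row):
            if i != j and count > 0:
                pairs.append((labels[i], labels[j], count / total))
    pairs.sort(key=lambda p: p[2], reverse=True)
    return pairs[:k]


# Hypothetical counts for three classes (rows: true, columns: predicted).
toy = [[8, 2, 0],
       [3, 6, 1],
       [0, 0, 10]]
for true, pred, rate in top_confusions(toy, ["A", "B", "C"], k=2):
    print(f"{true} -> {pred}: {rate:.0%}")
```

Ranking row-normalized rates rather than raw counts keeps classes with fewer test samples from being under-represented in the list.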

Figure A1.

Confusion matrix.

Appendix B. Network Architecture

To clarify the model architecture, especially the hierarchical co-occurrence framework, Table A1 details the step-by-step transformation of the skeleton features as they pass through the network layers.

Table A1.

Network architecture of the EHC-GCN.

| Stage | Layer | Motion velocities (M) | Bone features (B) | Output |
|---|---|---|---|---|
| Input | | Fast motion; slow motion | Skeleton length; skeleton angle | M: 6 × 300 × 25; B: 6 × 300 × 25 |
| Norm | | Batch Norm | Batch Norm | M: 6 × 300 × 25; B: 6 × 300 × 25 |
| STGCN Block | SGC | Adjacency matrix; D = 2; (1, 1), 64, stride (1, 1) | Adjacency matrix; D = 2; (1, 1), 64, stride (1, 1) | M: 64 × 300 × 25; B: 64 × 300 × 25 |
| | TC | (5, 1), 64, stride (1, 1) | (5, 1), 64, stride (1, 1) | M: 64 × 300 × 25; B: 64 × 300 × 25 |
| EHC-GCN Block | SGC | Adjacency matrix; D = 2; (1, 1), 48, stride (1, 1) | Adjacency matrix; D = 2; (1, 1), 48, stride (1, 1) | M: 48 × 300 × 25; B: 48 × 300 × 25 |
| | Attention | Channel attention module | Channel attention module | M: 48 × 300 × 25; B: 48 × 300 × 25 |
| | Transpose | (2, 1, 0) | (2, 1, 0) | M: 25 × 300 × 48; B: 25 × 300 × 48 |
| | EC-TC | D_conv: (5, 1), 48, stride (1, 1); P_conv: (1, 1), 24, stride (1, 1); P_conv: (1, 1), 48, stride (1, 1); D_conv: (5, 1), 48, stride (1, 1) | D_conv: (5, 1), 48, stride (1, 1); P_conv: (1, 1), 24, stride (1, 1); P_conv: (1, 1), 48, stride (1, 1); D_conv: (5, 1), 48, stride (1, 1) | M: 25 × 300 × 48; B: 25 × 300 × 48 |
| Concatenate | | Concatenate, dim = 2 | | 25 × 300 × 96 |
| Transpose | | (2, 1, 0) | | 96 × 300 × 25 |
| EHC-GCN Block | SGC | Adjacency matrix; D = 2; (1, 1), 64, stride (1, 1) | | 64 × 300 × 25 |
| | Attention | Channel attention module | | 64 × 300 × 25 |
| | Transpose | (2, 1, 0) | | 25 × 300 × 64 |
| | EC-TC | D_conv: (5, 1), 64, stride (1, 1); P_conv: (1, 1), 32, stride (1, 1); P_conv: (1, 1), 64, stride (1, 1); D_conv: (5, 1), 64, stride (2, 1) | | 25 × 150 × 64 |
| Transpose | | (2, 1, 0) | | 64 × 150 × 25 |
| EHC-GCN Block | SGC | Adjacency matrix; D = 2; (1, 1), 128, stride (1, 1) | | 128 × 150 × 25 |
| | Attention | Channel attention module | | 128 × 150 × 25 |
| | Transpose | (2, 1, 0) | | 25 × 150 × 128 |
| | EC-TC | D_conv: (5, 1), 128, stride (1, 1); P_conv: (1, 1), 64, stride (1, 1); P_conv: (1, 1), 128, stride (1, 1); D_conv: (5, 1), 128, stride (2, 1) | | 25 × 75 × 128 |
| Pool | Transpose | (2, 1, 0) | | 128 × 75 × 25 |
| | Global average pooling | | | 128 |
| Classifier | | Fully connected layer | | Number of classes |

After concatenation, the motion and bone streams are fused into a single stream, so each subsequent configuration is listed once.
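The shape bookkeeping in Table A1 can be reproduced with a few lines of plain Python, which is a convenient sanity check when modifying the architecture. The sketch assumes that every (5, 1) temporal convolution uses “same” padding of (5 - 1)/2 = 2 frames, so a stride of 2 halves the number of frames, that SGC and attention layers change only the channel count, and that the classifier targets 60 classes (as on NTU RGB+D 60). The function names are hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, ...]


def temporal_len(frames: int, stride: int, kernel: int = 5) -> int:
    """Output length of a (kernel, 1) temporal conv with 'same' padding."""
    pad = (kernel - 1) // 2
    return (frames + 2 * pad - kernel) // stride + 1


def permute_210(shape: Shape) -> Shape:
    """Shape after a (2, 1, 0) transpose: (C, T, V) <-> (V, T, C)."""
    a, b, c = shape
    return (c, b, a)


def ehc_block(shape: Shape, out_channels: int, t_stride: int) -> List[Tuple[str, Shape]]:
    """SGC + channel attention -> transpose -> EC-TC, returning each output shape."""
    _, frames, joints = shape
    after_sgc = (out_channels, frames, joints)          # attention keeps the shape
    v, t, c = permute_210(after_sgc)
    after_tc = (v, temporal_len(t, t_stride), c)        # only the stride changes T
    return [("SGC + attention", after_sgc),
            ("transpose", (v, t, c)),
            ("EC-TC", after_tc)]


def trace(frames: int = 300, joints: int = 25, num_classes: int = 60) -> List[Tuple[str, Shape]]:
    steps: List[Tuple[str, Shape]] = []
    # Per-stream part (motion M and bone B have identical shapes).
    stream: Shape = (6, frames, joints)
    steps.append(("input + BatchNorm", stream))
    stream = (64, temporal_len(frames, 1), joints)      # STGCN block: SGC + TC
    steps.append(("STGCN block", stream))
    per_stream = ehc_block(stream, 48, 1)
    steps += per_stream
    # Fuse the two streams along dim 2 (channels in the V x T x C layout).
    v, t, c = per_stream[-1][1]
    shape: Shape = permute_210((v, t, 2 * c))
    steps += [("concatenate", (v, t, 2 * c)), ("transpose", shape)]
    for out_channels in (64, 128):
        block = ehc_block(shape, out_channels, 2)
        steps += block
        shape = permute_210(block[-1][1])
        steps.append(("transpose", shape))
    steps.append(("global average pooling", (shape[0],)))
    steps.append(("classifier", (num_classes,)))
    return steps


for name, shape in trace():
    print(f"{name:24s} {' x '.join(map(str, shape))}")
```

Each intermediate shape produced by `trace()` matches the Output column of Table A1; passing `num_classes=120` gives the classifier size for NTU RGB+D 120.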

References

1. Yu, X.; Zhang, X.; Xu, C.; Ou, L. Human–robot collaborative interaction with human perception and action recognition. Neurocomputing 2024, 563, 126827.

2. Gammulle, H.; Ahmedt-Aristizabal, D.; Denman, S.; Tychsen-Smith, L.; Petersson, L.; Fookes, C. Continuous human action recognition for human-machine interaction: A review. ACM Comput. Surv. 2023, 55, 272.

3. Moutinho, D.; Rocha, L.F.; Costa, C.M.; Teixeira, L.F.; Veiga, G. Deep learning-based human action recognition to leverage context awareness in collaborative assembly. Robot. Comput.-Integr. Manuf. 2023, 80, 102449.

4. Chen, C.; Zhang, C.; Dong, X. A Multi-Scale Video Longformer Network for Action Recognition. Appl. Sci. 2024, 14, 1061.

5. Delamou, M.; Bazzi, A.; Chafii, M.; Amhoud, E.M. Deep learning-based estimation for multitarget radar detection. In Proceedings of the 2023 IEEE 97th Vehicular Technology Conference (VTC2023-Spring), Florence, Italy, 20–23 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

6. Du, Y.; Fu, Y.; Wang, L. Skeleton based action recognition with convolutional neural network. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; IEEE: New York, NY, USA, 2015; pp. 579–583.

7. Sun, Z.; Ke, Q.; Rahmani, H.; Bennamoun, M.; Wang, G.; Liu, J. Human action recognition from various data modalities: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3200–3225.

8. Kong, Y.; Fu, Y. Human action recognition and prediction: A survey. Int. J. Comput. Vis. 2022, 130, 1366–1401.

9. Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

10. Song, Y.F.; Zhang, Z.; Wang, L. Richly activated graph convolutional network for action recognition with incomplete skeletons. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: New York, NY, USA, 2019; pp. 1–5.

11. Li, M.; Chen, S.; Chen, X.; Zhang, Y.; Wang, Y.; Tian, Q. Actional-structural graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3595–3603.

12. Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-stream adaptive graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12026–12035.

13. Song, Y.F.; Zhang, Z.; Shan, C.; Wang, L. Richly activated graph convolutional network for robust skeleton-based action recognition. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 1915–1925.

14. Cheng, K.; Zhang, Y.; He, X.; Chen, W.; Cheng, J.; Lu, H. Skeleton-based action recognition with shift graph convolutional network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 183–192.

15. Liu, Z.; Zhang, H.; Chen, Z.; Wang, Z.; Ouyang, W. Disentangling and unifying graph convolutions for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 143–152.

16. Li, C.; Zhong, Q.; Xie, D.; Pu, S. Co-occurrence feature learning from skeleton data for action recognition and detection with hierarchical aggregation. arXiv 2018, arXiv:1804.06055.

17. Ye, F.; Pu, S.; Zhong, Q.; Li, C.; Xie, D.; Tang, H. Dynamic GCN: Context-enriched topology learning for skeleton-based action recognition. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 55–63.

18. Si, C.; Jing, Y.; Wang, W.; Wang, L.; Tan, T. Skeleton-based action recognition with hierarchical spatial reasoning and temporal stack learning network. Pattern Recognit. 2020, 107, 107511.

19. Si, C.; Chen, W.; Wang, W.; Wang, L.; Tan, T. An attention enhanced graph convolutional LSTM network for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1227–1236.

20. Wang, X.; Han, T.X.; Yan, S. An HOG-LBP human detector with partial occlusion handling. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 32–39.

21. Weinland, D.; Özuysal, M.; Fua, P. Making Action Recognition Robust to Occlusions and Viewpoint Changes. In Proceedings of the Computer Vision—ECCV 2010, Heraklion, Greece, 5–11 September 2010; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 635–648.

22. Li, S.; Yi, J.; Farha, Y.A.; Gall, J. Pose refinement graph convolutional network for skeleton-based action recognition. IEEE Robot. Autom. Lett. 2021, 6, 1028–1035.

23. Plizzari, C.; Cannici, M.; Matteucci, M. Spatial temporal transformer network for skeleton-based action recognition. In Proceedings of the Pattern Recognition. ICPR International Workshops and Challenges, Virtual Event, 10–15 January 2021; Proceedings, Part III. Springer: Berlin/Heidelberg, Germany, 2021; pp. 694–701.

24. Liu, Y.; Zhang, H.; Xu, D.; He, K. Graph transformer network with temporal kernel attention for skeleton-based action recognition. Knowl.-Based Syst. 2022, 240, 108146.

25. Moon, G.; Kwon, H.; Lee, K.M.; Cho, M. IntegralAction: Pose-driven feature integration for robust human action recognition in videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 3339–3348.

26. Guo, F.; Jin, T.; Zhu, S.; Xi, X.; Wang, W.; Meng, Q.; Song, W.; Zhu, J. B2C-AFM: Bi-directional co-temporal and cross-spatial attention fusion model for human action recognition. IEEE Trans. Image Process. 2023, 32, 4989–5003.

27. Cheng, Q.; Ren, Z.; Cheng, J.; Zhang, Q.; Yan, H.; Liu, J. Skeleton-based action recognition with multi-scale spatial-temporal convolutional neural network. In Proceedings of the 2021 IEEE International Conference on Real-time Computing and Robotics (RCAR), Xining, China, 15–19 July 2021; IEEE: New York, NY, USA, 2021; pp. 957–962.

28. Zhang, P.; Lan, C.; Zeng, W.; Xing, J.; Xue, J.; Zheng, N. Semantics-guided neural networks for efficient skeleton-based human action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1112–1121.

29. Song, Y.F.; Zhang, Z.; Shan, C.; Wang, L. Constructing stronger and faster baselines for skeleton-based action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1474–1488.

30. Hedegaard, L.; Heidari, N.; Iosifidis, A. Continual spatio-temporal graph convolutional networks. Pattern Recognit. 2023, 140, 109528.

31. Wu, L.; Zhang, C.; Zou, Y. SpatioTemporal focus for skeleton-based action recognition. Pattern Recognit. 2023, 136, 109231.

32. Shi, F.; Lee, C.; Qiu, L.; Zhao, Y.; Shen, T.; Muralidhar, S.; Han, T.; Zhu, S.C.; Narayanan, V. STAR: Sparse transformer-based action recognition. arXiv 2021, arXiv:2107.07089.

33. Zhou, D.; Hou, Q.; Chen, Y.; Feng, J.; Yan, S. Rethinking bottleneck structure for efficient mobile network design. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part III 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 680–697.

34. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

35. Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. NTU RGB+D: A large scale dataset for 3D human activity analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

36. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

37. Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

38. Liu, J.; Shahroudy, A.; Perez, M.; Wang, G.; Duan, L.Y.; Kot, A.C. NTU RGB+D 120: A large-scale benchmark for 3D human activity understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2684–2701.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

40. Guo, J.; Li, Y.; Lin, W.; Chen, Y.; Li, J. Network decoupling: From regular to depthwise separable convolutions. arXiv 2018, arXiv:1808.05517.

41. Shahroudy, A.; Ng, T.T.; Gong, Y.; Wang, G. Deep multimodal feature analysis for action recognition in RGB+D videos. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1045–1058.

42. Luvizon, D.C.; Picard, D.; Tabia, H. 2D/3D pose estimation and action recognition using multitask deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5137–5146.

43. Baradel, F.; Wolf, C.; Mille, J.; Taylor, G.W. Glimpse clouds: Human activity recognition from unstructured feature points. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 469–478.

44. Zhang, P.; Xue, J.; Lan, C.; Zeng, W.; Gao, Z.; Zheng, N. EleAtt-RNN: Adding attentiveness to neurons in recurrent neural networks. IEEE Trans. Image Process. 2019, 29, 1061–1073.

45. Liu, H.; Zhang, L.; Guan, L.; Liu, M. GFNet: A lightweight group frame network for efficient human action recognition. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 2583–2587.

46. Li, L.; Wang, M.; Ni, B.; Wang, H.; Yang, J.; Zhang, W. 3D human action representation learning via cross-view consistency pursuit. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4741–4750.

47. Peng, W.; Hong, X.; Zhao, G. Tripool: Graph triplet pooling for 3D skeleton-based action recognition. Pattern Recognit. 2021, 115, 107921.

48. Nikpour, B.; Armanfard, N. Spatio-temporal hard attention learning for skeleton-based activity recognition. Pattern Recognit. 2023, 139, 109428.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).