Abstract

Few-shot multimodal sentiment analysis (FMSA) has garnered substantial attention due to the proliferation of multimedia applications, especially because large quantities of training samples are often difficult to obtain. Previous works have directly incorporated the vision modality into a pre-trained language model (PLM) and then leveraged prompt learning, showing effectiveness in few-shot scenarios. However, these methods struggle to align the high-level semantics of different modalities due to their inherent heterogeneity, which degrades sentiment analysis performance. In this paper, we propose a novel framework called Multi-task Supervised Alignment Pre-training (MSAP) to enhance modality alignment and consequently improve the performance of multimodal sentiment analysis. Our approach uses multi-task training (image classification, image style recognition, and image captioning) to extract modality-shared information and stronger semantics that improve the visual representation. We employ task-specific prompts to unify these diverse objectives into a single Masked Language Modeling (MLM) objective, which forms the foundation of MSAP and strengthens the alignment of the visual and textual modalities. Extensive experiments on three datasets demonstrate that our method achieves a new state of the art on the FMSA task.

1. Introduction

The proliferation of multimedia applications has propelled multimodal sentiment analysis (MSA) on image and text to the forefront of research. MSA has wide applicability, from dialogue systems to human–computer interaction and beyond [1]. However, early MSA research depended on large-scale manual annotation, requiring careful labeling of a large number of samples with sentiment tags, which is costly and time-consuming [2,3]. Few-shot multimodal sentiment analysis (FMSA), in contrast, aims to achieve accurate sentiment analysis by efficiently leveraging a small number of samples. For this reason, FMSA is increasingly being explored as a feasible approach for resource-poor real-world scenarios.

Prompt learning has demonstrated effectiveness for few-shot learning in natural language processing (NLP) by reformulating the downstream task as the pre-training task, so that the pre-trained language model (PLM) requires only minimal training data to adapt to the downstream task [4]. PVLM [5] leverages prompt learning in multimodal few-shot scenarios and directly incorporates the vision modality into the PLM. However, the challenges brought by heterogeneity cannot be ignored: the fundamental difference between the visual and language modalities impedes the PLM from directly parsing and comprehending visual information. A key research question for FMSA is therefore how to effectively align the visual and language modalities to obtain consistent visual–language representations.

Inspired by recent studies on the Multimodal Large Language Models (MLLM) [6,7,8], we recognize that alignment pre-training is a potent way to tackle this issue. Implementing this strategy would aid in enhancing the PLM’s flexible adaptability to the visual modality. UP-MPF [9], the previous state-of-the-art method for FMSA, leverages alignment pre-training to address the challenge of modality alignment in FMSA. To achieve alignment pre-training, UP-MPF freezes the PLM and feeds a substantial quantity of images along with text prompts into the PLM to predict the direction of image rotation in textual format, thereby enabling the model to learn the relationship between low-level visual features and language features, effectively enhancing the alignment between these modalities. However, UP-MPF exposes two main issues in practice: (1) The task of predicting the direction of image rotation, a well-known self-supervised pretext task in computer vision, primarily models low-level visual features and lacks the complex semantics that include human emotions [10]. These supervision signals, which are absent in this task, are crucial for aligning the learned visual features with strongly semantic language features. (2) UP-MPF only uses a single task during the pre-training stage. Focusing solely on one pre-training task can limit a model’s visual understanding by exposing it to only a narrow range of contexts and patterns, making it less adept at comprehensively analyzing diverse visual information. This could hinder the model’s effective learning of shared information between visual and language modalities, thereby presenting an obstacle to effective modality alignment.

In summary, the enhancement of few-shot multimodal sentiment analysis performance hinges on the optimization of the alignment pre-training stage. This optimization aims to bolster the model’s visual grounding capabilities and foster a more comprehensive multimodal understanding, thereby empowering downstream sentiment analysis tasks. By doing so, the pre-trained model can acquire robust sentiment analysis proficiency through a limited number of training samples in data-scarce scenarios. Current alignment pre-training approaches exhibit deficiencies in rich semantic information and modal sharing, which are crucial elements for few-shot multimodal sentiment analysis tasks. Consequently, our research focuses on addressing these limitations to improve model performance in few-shot multimodal sentiment analysis contexts. By refining the alignment pre-training process, we aim to bridge the gap between visual and textual modalities, enabling more effective knowledge transfer and generalization in low-resource settings. This approach has the potential to significantly advance the field of multimodal sentiment analysis, particularly in scenarios where labeled data are scarce.

To address the above issues, we propose Multi-task Supervised Alignment Pre-training (MSAP) for the FMSA task. To address the lack of rich semantic information, we exploit the fact that large-scale labeled datasets designed for tasks such as image classification or image captioning have become easily accessible [11]: our method combines these datasets for supervised pre-training [12], which provides explicit semantic signals such as image categories, styles, or descriptions. This makes it possible to align visual and language modalities by incorporating stronger semantic information into the visual representation. Regarding the lack of modality-shared information, we recognize that relying on a single pre-training task during the alignment stage may lead to overfitting and hinder the model’s ability to learn generalized multimodal representations. The model may become overly biased toward that task, compromising its ability to capture information shared across modalities. We therefore employ multi-task learning [13], a paradigm that enables the model to synthesize knowledge acquired from diverse tasks, thereby mitigating the risk of overfitting to any single task [14]. Moreover, multi-task learning facilitates the extraction of shared information across modalities, enhancing the model’s ability to leverage cross-modal relationships and yielding more balanced, generalizable representations.

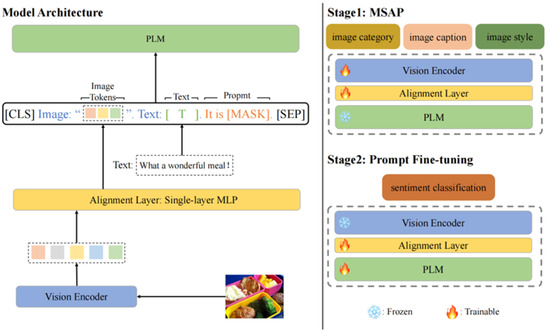

Specifically, we use a vision encoder to extract visual features and a simple single-layer MLP as the alignment layer to align visual features with language features. We then feed the aligned visual tokens and language tokens into the pre-trained language model. For the Multi-task Supervised Alignment Pre-training (MSAP) stage, we first select three large image datasets, each annotated for a different task, and combine them into a comprehensive dataset containing approximately 900,000 images. As shown in Figure 1, our strategy is to freeze the pre-trained language model (PLM) and update the weights of the vision encoder and alignment layer. By transforming diverse visual tasks (image classification, image style recognition, and image captioning) into a unified Masked Language Modeling (MLM) paradigm, we integrate supervised pre-training with multi-task learning. Doing so not only improves the overall learning effect through the complementarity of the tasks but also allows the visual features to acquire richer semantic information. In the prompt fine-tuning stage, we freeze the vision encoder and fine-tune the PLM with prompts to complete the FMSA task. This stage reuses the visual features, rich in semantic and modality-shared information, obtained in the first stage, so fine-tuning remains lightweight and efficient while preserving predictive performance.

Figure 1.

Our model architecture and training paradigm. The left side of the figure illustrates the unified model architecture for both training stages, while the right side of the figure depicts the two-stage training strategy and training tasks for each stage. “Frozen” indicates that a module’s parameters remain unchanged during the training stage, whereas “Trainable” means the module’s parameters will be updated in the training stage.

Our main contributions can be summarized as follows:

- We propose a novel Multi-task Supervised Alignment Pre-training (MSAP) framework to address the challenges in few-shot multimodal sentiment analysis (FMSA). This approach enhances modality alignment and improves the overall performance of few-shot multimodal sentiment analysis tasks.

- We design a multimodal supervised semantic alignment training paradigm that unifies diverse visual tasks, including image classification, image style recognition, and image captioning, into a cohesive Masked Language Model (MLM). This methodology enables the incorporation of rich semantic and modality-shared information into visual features, thereby facilitating more effective modality alignment.

- We integrate three distinct labeled image datasets to create a comprehensive pre-training corpus. Extensive experiments with models pre-trained on this consolidated corpus demonstrate that our proposed method achieves state-of-the-art performance across three benchmark datasets for the FMSA task, substantiating the efficacy of our approach.

2. Related Work

In this section, we provide a brief overview of the related work of multimodal sentiment analysis, multi-task learning, and supervised pre-training.

Multimodal Sentiment Analysis. Multimodal sentiment analysis has extensive applications, such as intelligent interaction, advertising and marketing, and social media analysis [15]. Ref. [16] employed attention mechanisms and Recurrent Neural Networks (RNNs) for sentiment analysis and mood detection; the attention mechanism helps the network focus on important parts of the sequence, thereby improving performance on both tasks. Ref. [17] considered the necessity of uniformly processing text, audio, and image data; employed a deep Long Short-Term Memory (LSTM) model; and combined the audio and visual modalities for multimodal sentiment analysis. Ref. [18] established a model for information fusion in sentiment analysis tasks that takes into account the varying importance of the information provided by different modalities. Ref. [19] proposed a deep multi-level attention network for multimodal sentiment analysis, which uses semantic attention to model the relationship between the image and text modalities. Despite the sophistication of these methods, most of them rely on large-scale annotated data, which is costly to obtain. Therefore, we focus on efficiently using a small number of samples to achieve accurate sentiment analysis in resource-limited settings. UP-MPF, an advanced method in this field, handles text–image input well and requires only unlabeled images rather than handcrafted text–image pairs to perform task-aligned multimodal pre-training for few-shot MSA. Building on this method, we use multi-task learning and supervised pre-training to solve the two main problems that UP-MPF currently faces.

Multi-task Learning. Multi-task learning is a machine learning paradigm in which a model learns multiple related tasks simultaneously. Its fundamental principle is to leverage shared information across tasks, thereby enhancing the model’s generalization capabilities and overall performance [20]. Multi-task learning can effectively improve the performance of multimodal sentiment analysis [21]. In multimodal sentiment analysis, multi-task learning optimizes multiple tasks jointly, thereby achieving information complementarity between tasks. Because the tasks share the same base model, they can learn shared representations, extracting features common to the various modalities. When the model handles multiple tasks, the likelihood of overfitting is reduced due to the increase in training data and the constraints the tasks impose on one another. Ref. [22] jointly trains multimodal and unimodal tasks, learning consistency and differences, respectively. In addition, ref. [22] designed a weight adjustment strategy to balance the learning progress of the different subtasks during training. Ref. [23] studied multi-task learning for multimodal sentiment analysis and explored a contextual multimodal attention framework, defining three different multi-task settings with two tasks each. Therefore, we utilize multi-task learning, enabling the model to “synthesize” the knowledge learned from multiple tasks and preventing it from overly focusing on any single task, which addresses the second issue of UP-MPF. Specifically, in the MSAP stage, we perform an image classification task and an image style recognition task; in addition, we add an image caption matching task. These tasks provide clearer semantic signals, enriching the embedded semantic information.

Supervised Pre-training. Supervised pre-training plays roles in promoting feature learning and transfer learning, enhancing system performance, and improving model robustness in multimodal sentiment analysis [24]. Supervised pre-training can learn high-order features and complex abstract patterns, which are vital for sentiment recognition. The application of supervised pre-training in multimodal sentiment analysis can indeed reduce the risk of model overfitting to a certain extent. In addition, the pre-trained model can be fine-tuned more effectively, thus improving the accuracy of sentiment analysis tasks. Ref. [25] performed supervised learning on the labels of multimodal tweets and designed three pre-training tasks that include text, visual, and multimodal elements. In order to conduct sentiment analysis, ref. [26] utilized as much multimodal information as possible. During the pre-training process, ref. [26] randomly masked the input tokens and then made predictions. Ref. [27] utilized the batch contrastive approach in the multimodal sentiment analysis system, transitioning from a fully supervised manner to a self-supervised one. Ref. [28] utilized domain-specific self-supervised graph convolution for multimodal sentiment analysis. By pre-training on a large amount of labeled data, the model can learn the shared features and patterns of multimodal data, providing strong support for subsequent sentiment analysis tasks. In addition, the pre-trained model can be transferred to specific sentiment analysis tasks, fully utilizing the knowledge learned by the model during the pre-training stage, which reduces the cost of starting training from scratch on a new task. Therefore, we address the lack of semantic signals in UP-MPF through supervised pre-training. 
Incorporating multi-task learning on the basis of UP-MPF helps the model better understand and utilize the correlation between data from different modalities, achieving better modality alignment and improving model performance. Supervised pre-training, in turn, provides richer label information, which enhances the learned representations and improves the model’s generalization ability.

3. Methods

Task Definition. In this paper, we denote the multimodal sentiment analysis dataset as $D$. For the MSA task that mainly focuses on text and images, each sample is $x = (T, I) \in D$, where $T$ denotes the sentence and $I$ denotes the corresponding image that $T$ describes. $y \in Y$ denotes the predicted sentiment label, where $Y$ is a predefined label set of sentiments (e.g., {positive, neutral, negative}). We assume access to a pre-trained language model $M$ and use it to recognize the sentiment $y$ based on the image–text pair $x$. In the FMSA task, only a limited number of training samples can be accessed. Additionally, the sizes of the development set and training set are equal, i.e., $|D_{\text{dev}}| = |D_{\text{train}}|$. This keeps the amount of data used for model selection equal to the amount used for training, giving a fair framework for assessing the model in a genuinely few-shot setting.

3.1. Model Architecture

In this section, we introduce our model in detail. Formally, we are given a template (e.g., “Image: $Z_I$. Text: $T$. It is [MASK].”) that contains an image–text pair and a designed text prompt with a [MASK] token, where $Z_I$ denotes the image tokens obtained from the image $I$. We align the semantic information in the image to the text through the alignment layer and then predict what may appear at [MASK], with the aim of helping the model learn the multimodal sentiment information in both text and image forms.

As illustrated in Figure 1, the overall model architecture comprises a vision encoder $f_v$, a pre-trained foundation language model $M$, and a single-layer MLP as the alignment layer $f_a$. We employ the vision encoder and alignment layer to extract semantic information from the image and transform it into image tokens that align with the text $T$. For the image modality, we first employ NF-ResNet [29] as the vision encoder $f_v$ to extract the vision representation $h_I$ of the input image $I$, a set of features that captures the essential visual elements of the image. Subsequently, the alignment layer $f_a$ bridges two different spaces: the vision embedding space and the language embedding space. As a lightweight linear network, it maps $h_I$ to a vision–language representation, denoted as $Z_I$, which can also be viewed as a sequence of image tokens. Formally, the image tokens of the input image $I$ are obtained as

$$Z_I = f_a(h_I) = f_a(f_v(I)),$$

where $Z_I \in \mathbb{R}^{K \times d}$; $d$ is the dimension of the language embedding space in the pre-trained language model; and $K$ is a hyper-parameter representing the length of the image token sequence, which controls how much visual information these tokens carry.
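The shape bookkeeping of the alignment layer can be illustrated with a small sketch. It assumes, purely for illustration, that the vision encoder yields one pooled feature vector per image and that a single linear map produces all K tokens at once; the function names `linear` and `align` and the toy sizes are hypothetical and not taken from our implementation.

```python
import random

def linear(x, weight, bias):
    """Compute y = W x + b for one input vector x (pure-Python matrix-vector product)."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weight, bias)]

def align(vision_feature, weight, bias, num_tokens, embed_dim):
    """Project one vision feature vector to num_tokens image tokens of size embed_dim."""
    flat = linear(vision_feature, weight, bias)  # length K * d
    assert len(flat) == num_tokens * embed_dim
    # Reshape the flat output into K consecutive token vectors of dimension d.
    return [flat[k * embed_dim:(k + 1) * embed_dim] for k in range(num_tokens)]

# Toy sizes (assumptions); a real model would use far larger dimensions.
rng = random.Random(0)
feat_dim, K, d = 16, 3, 8
weight = [[rng.uniform(-0.1, 0.1) for _ in range(feat_dim)] for _ in range(K * d)]
bias = [0.0] * (K * d)
vision_feature = [rng.random() for _ in range(feat_dim)]  # stands in for the encoder output

image_tokens = align(vision_feature, weight, bias, K, d)
print(len(image_tokens), len(image_tokens[0]))  # 3 8
```

The resulting K vectors live in the language embedding space, so they can be concatenated with the text token embeddings before being passed to the PLM.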

Furthermore, as a key component integrating visual and language understanding, the alignment layer not only aligns the modalities but also plays a pivotal role in filtering out fine-grained visual information. It essentially performs downsampling, reducing the dimensionality of the larger image features produced by the vision encoder and substantially lowering the computational cost. Once we have obtained $Z_I$, we combine the text $T$, the designed text prompt with a [MASK] token (e.g., It is [MASK]), and the image tokens $Z_I$ to create a multimodal prompt template $\mathcal{T}(x)$. An example of $\mathcal{T}(x)$ is provided below:

Image: $Z_I$. Text: $T$. It is [MASK].

We then feed the embedding of $\mathcal{T}(x)$ into the pre-trained language model $M$ to predict the token with the highest probability at the [MASK] position. Additionally, we define a verbalizer, denoted as $v$, that maps each sentiment label to a token in the vocabulary. The mapping can be described as $v: Y \rightarrow V$, where $V$ is the vocabulary of $M$. For each label $y \in Y$, $v(y)$ is its corresponding token in $V$. Formally, the predicted label $\hat{y}$ for the image–text pair $x$ is obtained as

$$\hat{y} = \arg\max_{y \in Y} P_M\big([\text{MASK}] = v(y) \mid \mathcal{T}(x)\big).$$
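As a minimal sketch of the verbalizer-based prediction described above, the snippet below selects the label whose verbalizer token is most probable at the [MASK] position. The label words in `VERBALIZER` and the logits are made-up placeholders; in practice the logits would come from the MLM head of the PLM.

```python
import math

# Hypothetical verbalizer: each sentiment label maps to one vocabulary token.
VERBALIZER = {"positive": "good", "neutral": "okay", "negative": "bad"}

def softmax(scores):
    """Turn a dict of token -> logit into a dict of token -> probability."""
    m = max(scores.values())
    exps = {tok: math.exp(s - m) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def predict_label(mask_logits, verbalizer=VERBALIZER):
    """Return the label whose verbalizer token has the highest probability at [MASK]."""
    probs = softmax(mask_logits)
    return max(verbalizer, key=lambda label: probs[verbalizer[label]])

# Made-up logits over a tiny vocabulary, standing in for the PLM output at [MASK].
logits = {"good": 2.1, "okay": 0.3, "bad": -1.0, "the": 0.9}
print(predict_label(logits))  # positive
```

Only the tokens named by the verbalizer compete in the final argmax, so tokens such as "the" never become predictions even when their probability is high.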

3.2. Multi-Task Supervised Alignment Pre-Training

To enhance the performance of multimodal prompt learning in the case of few shots, we utilize our MSAP method to promote the alignment of visual and language modes.

To enrich the semantic information embedded within vision features in the MSAP stage, we chose to perform the image classification task and the image style recognition task. As displayed in Table 1, the image classification task aims to classify the given images using a set of categories drawn from ImageNet. Concurrently, the image style recognition task is engaged in identifying the specific style that a given image exhibits. Additionally, we implemented an image caption matching task to improve visual–textual alignment. This task challenges the model to associate images with their correct captions, fostering a deeper understanding of cross-modal relationships. By including this task, we aim to enhance the model’s ability to capture subtle semantic connections between modalities, ultimately improving its multimodal sentiment analysis performance. These tasks provide explicit semantic signals by offering information on image categories, styles, and fine-grained image content. As shown in Figure 1, to acquire more generalized modality-shared information, we unify these tasks into an MLM task for multi-task learning by employing prompts.

Table 1.

Multimodal templates for pre-training tasks. $Z_I$ denotes the image tokens from the image input $I$. $T$ denotes the caption text corresponding to the image $I$.

To make the best use of modality-shared information, we employ a single MLM task to achieve the effects of multi-task learning. This provides a scalable and unified multi-task learning paradigm in which diverse prompt templates encapsulate the knowledge derived from multiple visual tasks within the learned image tokens. By consolidating varied visual objectives into one framework through carefully crafted prompts, we enable the model to distill and integrate visual information across domains. This approach enhances the model’s representational capacity and cross-modal understanding, and adding or changing a task only requires a new prompt. Table 1 presents the multimodal prompt templates set up for each task. Throughout the MSAP stage, the PLM is kept frozen, so its learned knowledge is exploited without modification, while the vision encoder and alignment layer are trainable. In simpler terms, the MSAP method integrates semantic and modality-shared information into the visual features and aligns them with the language space, thereby improving the model’s performance on the downstream task.
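The idea of casting several visual tasks as one MLM objective can be sketched as follows. The template strings below are illustrative placeholders only and are not the templates of Table 1; `IMAGE_SLOT`, `TEMPLATES`, and `build_prompt` are hypothetical names.

```python
# Placeholder marking where the K image-token embeddings would be spliced in.
IMAGE_SLOT = "<image_tokens>"

# Hypothetical templates: every task ends in a single [MASK] to be filled by the PLM.
TEMPLATES = {
    "classification": "Image: {img}. The category of the image is [MASK].",
    "style": "Image: {img}. The style of the image is [MASK].",
    "caption_matching": "Image: {img}. Text: {text}. The image and the text are [MASK].",
}

def build_prompt(task, caption=""):
    """Fill the template of the given task; the caption is only used by caption matching."""
    return TEMPLATES[task].format(img=IMAGE_SLOT, text=caption)

for task in TEMPLATES:
    print(build_prompt(task, caption="a dog running on the beach"))
```

Because each prompt contains exactly one [MASK], the same frozen MLM head serves all three tasks, and the supervision differs only in which token is expected at that position.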

3.3. Downstream Prompt Fine-Tune

To better adapt the PLM to the downstream task, it is vital to fine-tune it. In the fine-tuning stage, we adopt the same model architecture as in the pre-training stage. We freeze the vision encoder to preserve the coherent vision–language representations learned during pre-training. Simultaneously, we update the weights of the alignment layer and the PLM to maximize performance on the downstream task. The prompt we employ is as follows:

Image: $Z_I$. Text: $T$. It is [MASK].

where $Z_I$ denotes the image tokens of the image input $I$ and $T$ denotes the corresponding text.
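The two-stage schedule of frozen and trainable modules can be summarized in a small configuration sketch. The dictionary keys and the helper `trainable_modules` are hypothetical names; in a deep learning framework, each flag would correspond to enabling or disabling gradient updates for that module.

```python
# Which modules receive gradient updates in each stage (True = trainable, False = frozen).
TRAINABLE = {
    "msap_pretraining": {"vision_encoder": True, "alignment_layer": True, "plm": False},
    "prompt_finetuning": {"vision_encoder": False, "alignment_layer": True, "plm": True},
}

def trainable_modules(stage):
    """Return the sorted names of the modules updated during the given stage."""
    return sorted(name for name, flag in TRAINABLE[stage].items() if flag)

print(trainable_modules("msap_pretraining"))   # ['alignment_layer', 'vision_encoder']
print(trainable_modules("prompt_finetuning"))  # ['alignment_layer', 'plm']
```

The alignment layer is the only module updated in both stages, which is consistent with its role as the bridge between the two embedding spaces.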

4. Experiments

4.1. Datasets and Data Split

Pre-training Dataset. We adopt a different dataset for each of the three tasks in the MSAP stage. For the image classification task, we choose 371,362 images from 283 categories in ImageNet [30]. For the image style recognition task, we filter 222,253 images from three styles in the DomainNet dataset [31]. Additionally, we select 300,733 image–text pairs from the SBU Captions dataset [32] to design the image caption matching task. For each task, we split the data at a 9:1 ratio, preserving class balance, to form the training set and development set of the alignment pre-training stage.
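As a quick check of the corpus size, the three subsets sum to 894,348 samples, which matches the figure of approximately 900,000 images. The sketch below also shows a plain 9:1 split per task; it does not stratify by class, and the helper name `split_9_to_1` is hypothetical.

```python
# Subset sizes as reported above.
PRETRAIN_SIZES = {
    "image_classification (ImageNet)": 371_362,
    "style_recognition (DomainNet)": 222_253,
    "caption_matching (SBU Captions)": 300_733,
}

def split_9_to_1(n):
    """Return (train_size, dev_size) for a 9:1 split of n samples."""
    train = n * 9 // 10
    return train, n - train

total = sum(PRETRAIN_SIZES.values())
print(total)  # 894348

for name, n in PRETRAIN_SIZES.items():
    train, dev = split_9_to_1(n)
    print(f"{name}: train={train}, dev={dev}")
```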

Benchmark Datasets. We evaluate our MSAP method on three MSA datasets: MVSA-S, MVSA-M [2], and TumEmo [3]. MVSA-S and MVSA-M share the same structure: each row contains an image and its corresponding text description, with a sentiment label from {Positive, Neutral, Negative}. The TumEmo dataset has the same structure but provides fine-grained emotion labels from {Angry, Bored, Calm, Fear, Happy, Love, Sad}. Because the amount of data differs considerably across labels, the sampling strategy is crucial for limiting the impact of data imbalance. We therefore keep the test set unchanged and randomly sample 1% of the training and development sets to form our few-shot dataset (because TumEmo is much larger, we select only 497 samples from it). The statistics of the benchmark datasets are shown in Table 2.
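The few-shot subsets can be drawn with a seeded random sample, as in the following sketch. The function name `sample_few_shot`, the seed, and the toy pool are assumptions for illustration; the actual sampling code may differ, for example in how it handles class balance.

```python
import random

def sample_few_shot(examples, fraction=0.01, seed=0):
    """Draw a reproducible random subset containing `fraction` of the examples."""
    rng = random.Random(seed)
    k = max(1, round(len(examples) * fraction))
    return rng.sample(examples, k)

# Toy pool standing in for a full training split.
pool = [{"id": i} for i in range(5000)]
few_shot_train = sample_few_shot(pool, fraction=0.01, seed=0)
print(len(few_shot_train))  # 50
```

Fixing the seed makes the few-shot split reproducible, which matters when results are averaged over several runs.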

Table 2.

Statistics on the benchmark datasets.

4.2. Implementation Details

We employ NF-ResNet50 as the vision encoder and BERT-base [33] as the PLM during both training stages. Additionally, we employ an early stopping strategy. During the MSAP stage, we optimize the model with a learning rate of . To incorporate more visual information, we set the number of image tokens to seven. We select the checkpoint with the highest validation performance for the downstream task.

In the downstream FMSA stage, we use a learning rate of . We search over the number of image tokens from one to seven, allowing the model to find the most suitable amount of visual information to incorporate. We run each experiment five times with different random seeds, discard the highest and lowest results, and report the average of the middle three.
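The reporting protocol, which drops the best and worst of five runs and averages the rest, can be written as a short helper. The scores below are invented for illustration and are not experimental results; `middle_three_mean` is a hypothetical name.

```python
def middle_three_mean(scores):
    """Average the middle three of five run scores (drop the max and the min)."""
    if len(scores) != 5:
        raise ValueError("expected exactly five run scores")
    ordered = sorted(scores)
    middle = ordered[1:-1]
    return sum(middle) / len(middle)

# Made-up accuracies from five runs with different seeds.
runs = [60.1, 62.3, 61.0, 59.5, 63.2]
print(round(middle_three_mean(runs), 2))  # 61.13
```

Compared with a plain mean, this trimmed mean is less sensitive to a single unusually good or bad seed, which is common with very small training sets.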

4.3. Experimental Results

In our experiments, we compare several multimodal methods and evaluate their performance using two metrics: standard accuracy (Acc) and weighted F1 measure (Weighted-F1). Among the baselines, MVAN [3] and MGNNS [34] are multimodal fusion methods, and LXMERT [35] is a classic pre-trained multimodal model. PF [36] and LM-BFF [37] employ prompt learning for text. PVLM [5] directly feeds vision features into the PLM for multimodal prompt learning. UP-MPF [9], the previous state-of-the-art model, utilizes alignment pre-training to align modalities. From the results shown in Table 3, we can conclude the following:

Table 3.

Performance comparisons with standard deviations for few-shot experiments on three benchmark datasets.

(1) Prompt-based learning methods such as PF and LM-BFF consistently outperform conventional multimodal fusion methods across the three datasets. This further supports the effectiveness of prompt-based methods, which may be attributed to their flexibility: they can adapt to new tasks by tailoring prompts rather than requiring the extensive structural modifications that multimodal fusion methods may need.

(2) Methods like UP-MPF utilize vision–language alignment pre-training to help the model better understand the semantic information included in the image–text pair. This, in turn, enhances performance in the FMSA task compared to other methods, except our MSAP method.

(3) Our MSAP outperforms existing methods on all three datasets, achieving a new state-of-the-art performance on the FMSA task. Our MSAP approach distinguishes itself by garnering unique semantic information from a variety of tasks, along with the accomplishment of multimodal alignment between language and vision. This results in an enhanced performance in FMSA tasks as compared to the existing methods.

As shown in Table 3, our MSAP method outperforms existing methods on all three datasets, achieving a new state-of-the-art performance on the FMSA task.

In addition, as shown in Table 4, we comprehensively compare the performance of the MSAP method with classic baselines including UP-MPF on the fine-grained MSA task. We find that MSAP significantly outperforms existing methods on all three datasets, further verifying its effectiveness in this task.

Table 4.

Performance comparisons with standard deviations for fine-grained experiments on three benchmark datasets.
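For reference, the two metrics used in this section can be computed as in the sketch below. Weighted-F1 averages the per-class F1 scores, weighting each class by its number of true samples. The labels are toy values, not data from the benchmarks.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights proportional to each class's support."""
    support = Counter(y_true)
    total = 0.0
    for label, n in support.items():
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = n - tp
        # F1 = 2TP / (2TP + FP + FN); the denominator is positive because n >= 1.
        total += n * (2 * tp / (2 * tp + fp + fn))
    return total / len(y_true)

y_true = ["pos", "pos", "neu", "neg"]
y_pred = ["pos", "neu", "neu", "neg"]
print(accuracy(y_true, y_pred))               # 0.75
print(round(weighted_f1(y_true, y_pred), 4))  # 0.75
```

The support weighting keeps frequent classes from being under-represented in the average, which is relevant for datasets with imbalanced labels.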

4.4. Ablation Experiment

4.4.1. Ablation of Fine-Tuning Strategies

To make the best use of the models pre-trained in our MSAP stage, we further investigate fine-tuning strategies. As shown in Table 5, both fine-tuning the vision encoder and freezing the alignment layer lead to a decline in performance, and fine-tuning the vision encoder degrades performance more than freezing the alignment layer does. When very little data is available for the downstream task, updating the vision encoder can lead to severe overfitting, thereby distorting the representations learned during pre-training. Updating the alignment layer, however, is feasible since it has far fewer parameters than the vision encoder. These experiments demonstrate that freezing the vision encoder and fine-tuning the alignment layer is the optimal fine-tuning strategy, allowing the model to retain coherent vision–language features while transferring knowledge to downstream tasks.

Table 5.

Performance comparisons of different training strategies for downstream tasks. $f_v$ is the vision encoder, $f_a$ is the alignment layer, “+” denotes the trainable module, and “−” denotes the frozen module.

4.4.2. Ablation of Prompt Templates

To assess the impact of prompt template variations on model performance, we conducted ablation experiments on the coarse-grained and fine-grained MSA tasks, as shown in Table 6. In these experiments, we modified the prompt templates of the downstream tasks, generating multiple variants to evaluate their effects.

Table 6.

Performance comparisons of different prompt templates on downstream tasks.

Our findings indicate that while prompt design in few-shot learning influences performance, its overall impact remains limited. Despite structural variations, our approach exhibits strong robustness, enabling the model to effectively adapt to diverse downstream task requirements.

5. Conclusions

We propose a novel Multi-task Supervised Alignment Pre-training (MSAP) method for the few-shot multimodal sentiment analysis (FMSA) task. By incorporating richer semantic information and modality-shared information into vision features, MSAP further facilitates modality alignment, achieving a new state-of-the-art performance on three datasets for the FMSA task. In future work, we will explore more data-centric methods for alignment pre-training.

Author Contributions

Conceptualization, J.Y.; Methodology, J.Y.; Software, J.Y.; Validation, C.D.; Resources, C.D.; Data curation, C.D.; Writing—original draft, J.Y.; Writing—review & editing, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article. The data presented in this study are available in Section 4.1 of the article.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Kaur, R.; Kautish, S. Multimodal sentiment analysis: A survey and comparison. In Research Anthology on Implementing Sentiment Analysis Across Multiple Disciplines; IGI Global: Hershey, PA, USA, 2022; pp. 1846–1870.

- Niu, T.; Zhu, S.; Pang, L.; El Saddik, A. Sentiment analysis on multi-view social data. In Proceedings of the MultiMedia Modeling: 22nd International Conference, MMM 2016, Miami, FL, USA, 4–6 January 2016; Proceedings, Part II; Springer: Berlin/Heidelberg, Germany, 2016; pp. 15–27.

- Yang, X.; Feng, S.; Wang, D.; Zhang, Y. Image-text multimodal emotion classification via multi-view attentional network. IEEE Trans. Multimed. 2020, 23, 4014–4026.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2023, 55, 1–35.

- Yu, Y.; Zhang, D. Few-shot multi-modal sentiment analysis with prompt-based vision-aware language modeling. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. MiniGPT-4: Enhancing vision-language understanding with advanced large language models. arXiv 2023, arXiv:2304.10592.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. Adv. Neural Inf. Process. Syst. 2024, 36.

- Ye, Q.; Xu, H.; Xu, G.; Ye, J.; Yan, M.; Zhou, Y.; Wang, J.; Hu, A.; Shi, P.; Shi, Y.; et al. mPLUG-Owl: Modularization empowers large language models with multimodality. arXiv 2023, arXiv:2304.14178.

- Yu, Y.; Zhang, D.; Li, S. Unified multi-modal pre-training for few-shot sentiment analysis with prompt-based learning. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 189–198.

- Gidaris, S.; Singh, P.; Komodakis, N. Unsupervised representation learning by predicting image rotations. arXiv 2018, arXiv:1803.07728.

- Liu, Y. Multilingual denoising pre-training for neural machine translation. arXiv 2020, arXiv:2001.08210.

- Feng, Y.; Jiang, J.; Tang, M.; Jin, R.; Gao, Y. Rethinking supervised pre-training for better downstream transferring. arXiv 2021, arXiv:2110.06014.

- Collobert, R.; Weston, J. A unified architecture for natural language processing: Deep neural networks with multitask learning. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 160–167.

- He, H.; Choi, J.D. The stem cell hypothesis: Dilemma behind multi-task learning with transformer encoders. arXiv 2021, arXiv:2109.06939.

- Gandhi, A.; Adhvaryu, K.; Poria, S.; Cambria, E.; Hussain, A. Multimodal sentiment analysis: A systematic review of history, datasets, multimodal fusion methods, applications, challenges and future directions. Inf. Fusion 2023, 91, 424–444.

- Wang, R.; Geng, Z. Wa-icp algorithm for tackling ambiguous correspondence. In Proceedings of the IEEE 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 76–80.

- Sindhu, P. The future of transport networks. In Proceedings of the Optical Fiber Communication Conference, Los Angeles, CA, USA, 22–26 March 2015; Optica Publishing Group: Washington, DC, USA, 2015.

- Wang, B.; Dong, G.; Zhao, Y.; Li, R.; Cao, Q.; Chao, Y. Non-uniform attention network for multi-modal sentiment analysis. In International Conference on Multimedia Modeling; Springer: Berlin/Heidelberg, Germany, 2022; pp. 612–623.

- Yadav, A.; Vishwakarma, D.K. A deep multi-level attentive network for multimodal sentiment analysis. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–19.

- Ruder, S. An overview of multi-task learning in deep neural networks. arXiv 2017, arXiv:1706.05098.

- Yang, B.; Wu, L.; Zhu, J.; Shao, B.; Lin, X.; Liu, T.-Y. Multimodal sentiment analysis with two-phase multi-task learning. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 2015–2024.

- Yu, W.; Xu, H.; Yuan, Z.; Wu, J. Learning modality-specific representations with self-supervised multi-task learning for multimodal sentiment analysis. AAAI Conf. Artif. Intell. 2021, 35, 10790–10797.

- Akhtar, M.S.; Chauhan, D.S.; Ekbal, A. A deep multi-task contextual attention framework for multi-modal affect analysis. ACM Trans. Knowl. Discov. Data 2020, 14, 1–27.

- Zadeh, A.B.; Liang, P.P.; Poria, S.; Cambria, E.; Morency, L.-P. Multimodal language analysis in the wild: CMU-MOSEI dataset and interpretable dynamic fusion graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1: Long Papers, pp. 2236–2246.

- Ling, Y.; Yu, J.; Xia, R. Vision-language pre-training for multimodal aspect-based sentiment analysis. arXiv 2022, arXiv:2204.07955.

- Kim, K.; Park, S. AOBERT: All-modalities-in-one BERT for multimodal sentiment analysis. Inf. Fusion 2023, 92, 37–45.

- Mai, S.; Zeng, Y.; Zheng, S.; Hu, H. Hybrid contrastive learning of tri-modal representation for multimodal sentiment analysis. IEEE Trans. Affect. Comput. 2022, 14, 2276–2289.

- Zeng, Y.; Li, Z.; Tang, Z.; Chen, Z.; Ma, H. Heterogeneous graph convolution based on in-domain self-supervision for multimodal sentiment analysis. Expert Syst. Appl. 2023, 213, 119240.

- Brock, A.; De, S.; Smith, S.L. Characterizing signal propagation to close the performance gap in unnormalized ResNets. arXiv 2021, arXiv:2101.08692.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Peng, X.; Bai, Q.; Xia, X.; Huang, Z.; Saenko, K.; Wang, B. Moment matching for multi-source domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1406–1415.

- Ordonez, V.; Kulkarni, G.; Berg, T. Im2Text: Describing images using 1 million captioned photographs. Adv. Neural Inf. Process. Syst. 2011, 24.

- Devlin, J. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Yang, X.; Feng, S.; Zhang, Y.; Wang, D. Multimodal sentiment detection based on multi-channel graph neural networks. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 1–6 August 2021; Volume 1: Long Papers, pp. 328–339.

- Tan, H.; Bansal, M. LXMERT: Learning cross-modality encoder representations from transformers. arXiv 2019, arXiv:1908.07490.

- Schick, T.; Schütze, H. Exploiting cloze questions for few shot text classification and natural language inference. arXiv 2020, arXiv:2001.07676.

- Gao, T.; Fisch, A.; Chen, D. Making pre-trained language models better few-shot learners. arXiv 2020, arXiv:2012.15723.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).