Abstract

Increasing traffic density in cities exacerbates air pollution, threatens human health and worsens the global climate crisis, so sustainable and eco-friendly solutions for urban transportation are urgently needed. Artificial intelligence, and Deep Reinforcement Learning (DRL) in particular, can play a crucial role in reducing fuel consumption and emissions. This study presents a DRL-based approach that minimizes waiting times at traffic lights and thereby reduces fuel consumption and emissions. DRL can evaluate complex traffic scenarios and learn optimal solutions. Unlike studies that focus solely on optimizing traffic light durations, this research selects the optimal vehicle acceleration based on current traffic conditions. This approach provides safer, more comfortable travel while lowering emissions and fuel consumption. Simulations of various scenarios demonstrate the success of the Deep Q-Network (DQN) algorithm in adjusting vehicle speed according to traffic lights. All of the DRL algorithms tested were effective in reducing fuel consumption and emissions. However, DQN outperformed the others in complex city traffic scenarios, reducing fuel consumption, emissions and waiting times at traffic lights the most. The method therefore contributes to a more sustainable environment.

1. Introduction

Cities are among the largest and most complex structures in human history. While these densely populated urban areas facilitate life, they also bring significant challenges. One such challenge is the environmental pollution caused by transportation, an essential component of modern life. According to the International Energy Agency (IEA), the share of global energy consumption of the transportation and industrial sectors was approximately 30%, yet together these sectors accounted for approximately 46% of total carbon emissions in 2021. Interestingly, while overall energy consumption and emission trends have remained relatively stable since 2011, the impact of the transportation sector has continued to increase [1].

Air pollution is directly linked to heavy vehicle traffic in urban areas. Harmful particles and gases in vehicle exhaust contribute to respiratory diseases, cardiovascular disorders and even cancer. These emissions include the greenhouse gas carbon dioxide (CO2) as well as pollutants such as nitrogen oxides (NOx) and carbon monoxide (CO). Together they exacerbate climate change and disrupt the environmental balance. The constant stop-and-go movement of cars and other vehicles further amplifies emission levels, and prolonged time spent in traffic exacerbates the problem. The environmental pollution and emission issues associated with urban traffic are becoming increasingly urgent. Efforts to develop alternative, cleaner energy sources continue to grow [2]. However, addressing the existing fleet of vehicles and equipment is also crucial for significantly reducing environmental pollution. In their 2023 experimental study, Yigit and Karabatak examined how sudden changes in travel time and vehicle speed affect fuel consumption and environmental pollution in real-world traffic scenarios [3]. They found that waiting times in traffic account for approximately 50% of fuel consumption and environmental pollution.
Consequently, traffic congestion is not only a daily inconvenience; managing it is critical for the sustainability and livability of cities.

Technological advances, including artificial intelligence (AI), offer new perspectives on these challenges. DRL, a subfield of AI, has gained significant attention for its potential to deliver eco-friendly solutions in traffic management and transportation systems. Guided by data and environmental feedback, DRL algorithms adapt, learn and optimize real-time traffic control strategies [4,5,6].

In 2022, Liu et al. proposed an adaptable speed-planning method for connected and autonomous vehicles [7]. Their trained DRL agent adapted to variable traffic light scenarios and quickly computed an approximately optimal speed trajectory. A 2020 study applied the existing Markovian traffic assignment framework to new traffic control strategies [8]. The authors first performed classical static Markovian assignments and then moved to dynamic traffic assignment using the Simulation of Urban Mobility (SUMO) program. Their work focused on learning routing behavior at intersections and highlighted the scalability of Markov chain theory to larger networks. A 2021 thesis used DRL to optimize urban signal networks [5], and the proposed approach outperformed the classical DQN algorithm. Another 2020 study used machine learning to recommend vehicle speeds [9]. The researchers trained a supervised linear regression model and showed that their algorithm achieved better accuracy and performance than existing state-of-the-art algorithms.

Ensuring optimal speed control is vital for enhancing road safety, minimizing traffic congestion and improving fuel efficiency. Traditional vehicle speed control systems rely on rule-based algorithms or classical control methods built on explicit information and predefined rules. However, these approaches often struggle to adapt to dynamic and complex driving scenarios that require real-time learning and responsiveness. Researchers have therefore turned to machine learning techniques, particularly DRL, to overcome the limitations of traditional control methods. DQN [7,10], a prominent DRL algorithm, has attracted significant interest because it can learn optimal policies directly from raw sensory input. It was initially proposed by Mnih et al. for playing Atari games [11]. DQN combines deep neural networks with Q-learning, enabling complex behaviors to be learned directly from high-dimensional inputs. Researchers applying this approach aim to develop systems capable of autonomously regulating vehicle speed across various driving scenarios.

Several recent studies illustrate this line of work:

- Zhou et al. (2024) addressed traffic congestion using 6G communication technologies and DRL [12]. They reported a 28.2% reduction in travel time.
- Zhang et al. (2024) proposed a DRL-based method to improve the evacuation of people and vehicles from parking lots during emergencies [13]. They reported a 7.75% reduction in evacuation time.
- Wang et al. (2024) proposed a traffic management method that combines reinforcement learning with vehicle-to-vehicle (V2V) communication [14]. They reported better performance than human drivers in terms of safety, efficiency, comfort and fuel consumption.
Hua and Fan (2024) proposed a DRL-based method to relieve congestion in mixed traffic consisting of connected autonomous vehicles and human-driven vehicles [15]. They reported that it significantly reduces non-recurrent congestion on highways. Gao et al. (2024) used DRL to develop a congestion-avoidance driving strategy for connected autonomous vehicles in mixed traffic with human drivers [16].

Numerous studies in the literature have proposed optimal speed recommendations for vehicles. The majority of these studies were conducted using the green light optimal speed advisory (GLOSA) algorithm. Many models have been developed for autonomous driving using AI in recent years. Some published studies, their methods and their objectives are shown in Table 1.

Table 1.

Reviewed articles.

Among the studies in Table 1, [9] used linear regression to calculate the optimum vehicle speed. DRL methods, which have become popular in recent years, are being used for autonomous driving models with successful results [17,18,19,20,22]. Another common application of DRL is the development of autonomous driving policies for vehicles on highways [17,18,19,20,21,22,23]. The studies listed in Table 1 target connected vehicles or vehicles with autonomous driving capability.

Urban traffic management plays a pivotal role in reducing fuel consumption and CO2 emissions and in improving driving conditions in cities, all of which contribute significantly to environmental sustainability. Traditional traffic control methods often struggle with the complexity of modern urban transportation networks, leading to inefficiencies, congestion and growing environmental impacts. In recent years, DRL has shown promise in optimizing traffic flow and addressing environmental challenges because it can learn optimal control policies in dynamic, complex environments.

The primary objective of this research is to assess the effectiveness of DRL algorithms in urban traffic management and their role in fostering environmental sustainability while enhancing the driving experience. In this context, the study seeks to address the following research questions:

- How can DRL algorithms, particularly the DQN-based model, be applied to optimize fuel consumption, reduce emissions and improve driving comfort and safety across various traffic scenarios?

- To what extent can the proposed DQN-based model produce effective results using existing traffic data and how adaptable is it to different traffic conditions, in terms of both environmental impact and user experience?

- How does the performance of the DQN-based model compare to other methods in the existing literature in terms of fuel consumption, emissions, driving comfort and safety?

- Can the proposed method be applied to vehicles driven by human drivers and what is its potential to improve traffic flow, reduce fuel consumption, enhance driving comfort and increase safety in real-world traffic conditions?

In response to these research questions, the following hypotheses are proposed:

- Hypothesis 1: The DQN-based model can effectively optimize fuel consumption, reduce CO2 emissions and improve driving comfort and safety by learning optimal driving policies in various traffic scenarios.

- Hypothesis 2: The DQN-based model, when trained with existing traffic data, can produce reliable results in optimizing fuel consumption, reducing emissions and improving driving comfort and safety, and it can be generalized to various urban traffic conditions.

- Hypothesis 3: The DQN-based model will outperform other DRL methods or traditional traffic optimization techniques in terms of fuel consumption reduction, environmental impact and enhancements in driving comfort and safety.

- Hypothesis 4: The proposed DQN-based method can be applied to vehicles with human drivers, improving traffic flow, reducing congestion, decreasing fuel consumption and enhancing driving comfort and safety in real-world driving situations.

This study aims to explore the impact and significance of the proposed DQN-based method for urban traffic management, emphasizing its potential to foster sustainable urban mobility, improve driving comfort and safety and contribute to a more efficient transportation system.

The main contributions of the study can be summarized as follows:

- The performance of the proposed method in reducing fuel consumption, emissions and environmental pollution was demonstrated across different scenarios.

- The popular DRL algorithms DQN, Double Deep Q-Network (DDQN), Proximal Policy Optimization (PPO) and Advantage Actor-Critic (A2C) were trained on the same scenarios. Their results were compared in terms of fuel consumption, environmental pollution and emissions. The results indicate that the proposed DQN-based method is the most successful of these and can contribute to this area.

- A method that can be applied not only to autonomous vehicles but also to existing vehicles is proposed.

2. Materials and Methods

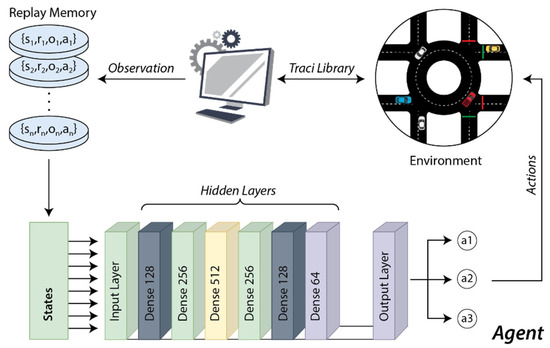

Urban traffic flow is a highly complex system whose dynamics can change instantaneously. Many traffic parameters change in real time, for example road conditions, traffic density, vehicle speed, the status and timing of traffic lights and the number of vehicles waiting at lights. Among machine learning methods, DRL algorithms are naturally suited to handling such real-time changes. They learn through trial and error without prior knowledge of the environment, receiving rewards and penalties and aiming to maximize the cumulative reward. Because these variables interact, determining the optimal vehicle speed in traffic is inherently complex. A block diagram of the system, which suggests accelerations to drivers while taking these variables into account, is shown in Figure 1.

Figure 1.

Block diagram of the method used.

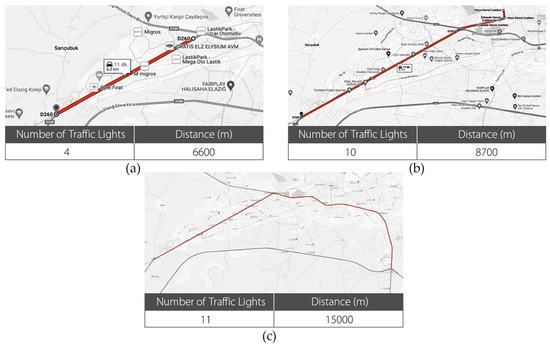

In Figure 1, Environment represents the traffic simulation environment provided by SUMO. At each simulation step, the observations obtained through SUMO's Traffic Control Interface (TraCI) were recorded in the replay memory. Each observation stored in the replay memory is an array of 11 elements. Batches of samples drawn from the replay memory were given as input to the network. The output layer of the network has three outputs, corresponding to the actions "Accelerate", "Maintain speed" and "Slow down". The selected action was sent back to the simulation environment and applied, and new observations were obtained.

The map used in the study covers the city of Elazığ and was obtained from the open-source OpenStreetMap project [25]. Three different routes were established on this map. These routes were selected for three reasons:

- they carry heavy traffic;
- they contain many traffic lights and intersections;
- the municipality provides the input data required by the system.

Each route has four lanes in total, two in each direction. The routes were imported into SUMO with NETEDIT, where traffic light information was added and the necessary adjustments were made to create the simulation environment [26,27,28]. The study was implemented in Python with the TraCI library [29], and all software used is open source. Three scenarios were created to compare the performance of DRL algorithms in finding the optimum speed for vehicles in urban traffic. The algorithms were applied to each scenario and the results were analyzed.
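The replay memory in this loop can be pictured with a short sketch. The Python class below is a minimal illustration that assumes uniform sampling from a fixed-capacity buffer, as in the original DQN. The class name, capacity and batch size are hypothetical and are not taken from the study's code.

```python
import random
from collections import deque
from typing import Deque, List, Sequence, Tuple

# One stored transition: (state, action, reward, next_state, done)
Transition = Tuple[Sequence[float], int, float, Sequence[float], bool]


class ReplayMemory:
    """Fixed-capacity experience replay buffer (illustrative sketch)."""

    def __init__(self, capacity: int = 10_000) -> None:
        # A bounded deque silently drops the oldest transition when full.
        self.buffer: Deque[Transition] = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done) -> None:
        """Store one simulation step (observation, chosen action, reward, ...)."""
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size: int) -> List[Transition]:
        """Return a uniformly random mini-batch, sampled without replacement."""
        return random.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


# Usage: a capacity-3 buffer keeps only the three most recent steps.
memory = ReplayMemory(capacity=3)
for step in range(5):
    memory.push([float(step)], step % 3, -1.0, [float(step + 1)], False)
batch = memory.sample(2)
print(len(memory), len(batch))  # 3 2
```

Sampling uniformly from past transitions breaks the correlation between consecutive simulation steps, which is the usual motivation for experience replay in DQN.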
The routes used for the three different scenarios created for network training are shown in Figure 2.

Figure 2.

Routes used in experimental studies: (a) First scenario. (b) Second scenario. (c) Third scenario.

A total of 25 traffic lights were added to the maps shown in Figure 2 through the NETEDIT application to be used in the study. The phase durations of the traffic lights used in the study are expressed in Table 2.

Table 2.

Phase durations of traffic lights used in the experimental study.

The phase times expressed in Table 2 are the traffic light phase values used in real-world conditions, taken from the city traffic control center.

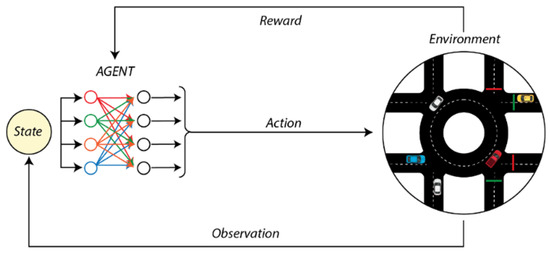

2.1. Deep Reinforcement Learning

Reinforcement learning refers to the process in which an agent observes the state of its environment, selects an action appropriate to that state, executes it and receives a reward or penalty from the environment in response [30]. The agent uses this feedback to learn and develops better action strategies over time. The primary goal is a strategy (policy) that maximizes the total long-term reward.

Q-learning is a model-free reinforcement learning technique that uses trial and error to explore complex and stochastic environments. It learns a value for each state–action pair from positive or negative rewards. In tabular Q-learning, these values are stored in a table (the Q-table) indexed by the agent's state and action, and they are updated at each step. DQN combines reinforcement learning and deep learning: it replaces the table with a deep neural network that serves as a function approximator for the Q-values of actions.

In this study, the DQN algorithm was trained on data generated by traffic simulations using a reward-based learning method. During training, the model learned appropriate acceleration or deceleration actions for a variety of situations by observing each step in the simulated traffic environments. After training, the DQN model is not used to predict future traffic features. Instead, it recommends the optimal action under current conditions. For instance, it suggests whether to increase or maintain vehicle speed based on data such as the current traffic light status and vehicle density. The DQN model is therefore intended not as a predictive tool but as a decision support mechanism that applies learned policies. The working principle of DRL is shown in Figure 3.

Figure 3.

Deep Reinforcement Learning.
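To make the Q-value update concrete, the sketch below shows two pieces:

- one tabular Q-learning step;
- the corresponding batched target toward which DQN regresses its network.

It is a generic textbook form written in Python with NumPy. The learning rate `alpha`, the discount `gamma` and the toy table sizes are illustrative assumptions, not values from Table 5.

```python
import numpy as np


def q_learning_update(q_table, state, action, reward, next_state, done,
                      alpha=0.1, gamma=0.99):
    """Apply one tabular Q-learning step in place and return the TD error."""
    # Bellman target: r + gamma * max_a' Q(s', a'), or just r at the end of an episode.
    target = reward if done else reward + gamma * np.max(q_table[next_state])
    td_error = target - q_table[state, action]
    q_table[state, action] += alpha * td_error
    return td_error


def dqn_targets(rewards, next_q_target, dones, gamma=0.99):
    """Batched DQN targets y = r + gamma * (1 - done) * max_a' Q_target(s', a')."""
    return rewards + gamma * (1.0 - dones) * next_q_target.max(axis=1)


# Usage: 4 toy states x 3 actions (accelerate, decelerate, maintain speed).
q = np.zeros((4, 3))
q[1] = [0.0, 2.0, 1.0]
err = q_learning_update(q, state=0, action=2, reward=-1.0, next_state=1,
                        done=False, alpha=0.5, gamma=0.9)
# target = -1 + 0.9 * 2.0 = 0.8, so Q[0, 2] moves halfway there: 0.4
y = dqn_targets(np.array([1.0, -1.0]),
                np.array([[0.0, 3.0, 1.0], [5.0, 0.0, 0.0]]),
                np.array([0.0, 1.0]), gamma=0.5)
# y = [1 + 0.5 * 3.0, -1.0] = [2.5, -1.0]; the second transition is terminal
```

In DQN, the table lookup `q_table[next_state]` is replaced by a forward pass of a separate target network. The network is then trained to minimize the squared difference between its prediction and `y`.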

PPO, developed by OpenAI in 2017, is a family of model-free reinforcement learning algorithms widely used to optimize the policies of autonomous agents in dynamic environments [31,32]. PPO improves the stability of training by limiting the size of each policy update, which prevents sudden, disruptive policy changes. It does so by optimizing a surrogate objective that keeps the updated policy "close" to the current one, ensuring a smooth learning process. PPO iteratively collects data from interactions with the environment and uses them to update the policy so as to maximize cumulative reward, balancing exploration and exploitation. Its versatility and robustness have made it a preferred choice for training agents in fields such as robotics.

A2C is a reinforcement learning algorithm that combines policy gradient methods with value function estimation to train agents for sequential decision-making tasks. An A2C agent maintains two components simultaneously: an actor that learns the policy for selecting actions and a critic that estimates the value of the current state. The critic provides the actor with feedback about the quality of the selected actions. A2C guides policy updates with advantage values, which measure how much better a particular action is than the average action in a given state. This reduces the variance of the policy gradient estimates and improves the stability and efficiency of training. A2C is also well suited to parallelized environments.

DDQN is a variant of DQN that addresses the overestimation bias of Q-values in standard DQN. DDQN uses two networks: the online network selects the best next action, and the target network evaluates the value of that action. Decoupling action selection from action evaluation reduces the overestimation of Q-values and leads to more stable and accurate learning. DDQN combines Double Q-learning with DQN, so it retains DQN's experience replay and target network while learning more reliable action value estimates. The technique has been successful in training DRL agents in fields such as game playing and robotic control.
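The three update rules can be compared side by side in a minimal NumPy sketch of their standard textbook forms:

- PPO's clipped surrogate objective;
- A2C's one-step advantage;
- the Double DQN target.

The function names, the clipping range `eps = 0.2` and the discount values are illustrative assumptions rather than the study's settings.

```python
import numpy as np


def ppo_clip_objective(ratio, advantage, eps=0.2):
    """PPO clipped surrogate mean(min(r*A, clip(r, 1-eps, 1+eps)*A)), to be maximized."""
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps)
    return np.mean(np.minimum(ratio * advantage, clipped * advantage))


def a2c_advantage(reward, value, next_value, done, gamma=0.99):
    """One-step advantage A = r + gamma * V(s') - V(s), estimated with the critic."""
    return reward + gamma * (1.0 - done) * next_value - value


def double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.99):
    """Double DQN: the online net picks a', the target net evaluates Q_target(s', a')."""
    best_actions = np.argmax(next_q_online, axis=1)
    evaluated = next_q_target[np.arange(len(best_actions)), best_actions]
    return rewards + gamma * (1.0 - dones) * evaluated


obj = ppo_clip_objective(np.array([1.5, 0.5]), np.array([1.0, -1.0]))
# min(1.5, 1.2) = 1.2 and min(-0.5, -0.8) = -0.8, so the mean is 0.2
adv = a2c_advantage(reward=1.0, value=2.0, next_value=3.0, done=0.0, gamma=0.5)
# 1.0 + 0.5 * 3.0 - 2.0 = 0.5
online = np.array([[2.0, 1.0, 0.0]])   # online net prefers action 0
target = np.array([[0.5, 3.0, 0.0]])   # target net rates action 0 at only 0.5
y_double = double_dqn_targets(np.array([0.0]), online, target, np.array([0.0]), gamma=1.0)
# y_double = [0.5]; a plain DQN max over the target net would give 3.0
```

The last example shows the decoupling described above. A plain DQN target would take the target network's own maximum (3.0). Double DQN instead evaluates the action chosen by the online network, which dampens overestimation.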

2.1.1. Reward Function Design

In the DRL method, the reward function is a critical component that evaluates the success of an agent’s actions in response to a given situation. The reward function guides the agent to make the right decisions to achieve the goal. This function provides the feedback that the agent receives after performing a certain action and this feedback can be positive or negative. For example, in a traffic management system, in order to minimize the waiting time of vehicles at traffic lights, a high reward is given for short waiting times and a low or negative reward for long waiting times. Thus, the agent constantly adjusts and learns its strategies to achieve the best results. Careful and problem-oriented design of the reward function can greatly improve the performance and efficiency of the DRL algorithm.

2.1.2. Neural Network Development

In the DRL method, network creation involves the design and training of deep neural networks that enable an agent to learn different situations and actions. These networks usually have a layered structure. The input layer receives state information from the environment, the hidden layers process this information to extract features, and the output layer produces the estimates used to select the best action for the agent. The network is optimized through a specific reward function, and the agent refines its strategies over time to obtain the highest reward. Correct configuration of neural networks and careful tuning of hyperparameters greatly increase the efficiency and success of DRL algorithms.

3. Experimental Results

3.1. Experimental Setups

Three scenarios were designed for the experimental studies to evaluate the generalizability and performance of the developed DRL algorithm in environments of varying complexity:

- Scenario 1 uses a 6600 m route with four traffic lights.
- Scenario 2 uses a more complex 8700 m route with 10 traffic lights.
- Scenario 3 uses the longest (15,000 m) and most complex route.

The scenarios were designed to represent different aspects of urban traffic management. The first simulates a low-density traffic environment, while the other two model real-world conditions with increasing traffic density and more complex road structures. This selection makes it possible to evaluate the model under different traffic situations and provides a general basis for a wide range of applications.

The generalizability of the scenarios depends on how well the simulation parameters match real city data. For this reason, variables such as traffic light phase durations, vehicle densities and road structures were chosen to mimic real traffic conditions, which suggests that the method can be applied to scenarios in other cities or traffic systems. To support the generalizability of the results, the scenarios included different traffic densities and vehicle types, and the model was trained to provide optimum speed control in each situation. The proposed method is thus intended to be applicable not only to a specific scenario but also to more complex and dense traffic environments.
In future studies, the generalizability of this model can be further expanded with real-time traffic data and data obtained from different geographical regions. Detailed parameter information for these scenarios is provided in Table 3.

Table 3.

Parameters of the scenarios used in the study.

The vehicle mix in Table 3 is dominated by cars and buses because the routes lie in urban traffic. Although these roads are closed to heavy vehicles, a small share of trucks was included because a few are nevertheless present in practice.

3.1.1. Development of the Reward Function in the Study

Before training on the scenarios defined by the parameters in Table 3, the reward function must be designed carefully. Its primary goal is for the vehicle to complete its route through city traffic as quickly as possible while choosing acceleration actions that minimize waiting time at traffic lights. Within this goal, improving safety and comfort during the trip and keeping fuel consumption to a minimum are also important. The reward is built from the following terms:

- a penalty of −1 at each step;
- a further penalty equal to the waiting time multiplied by −1;
- a reward equal to the distance covered per unit of fuel consumed at each step;
- a reward equal to the distance covered per unit of emissions at each step.

The penalties discourage the vehicle from choosing undesirable acceleration actions and help it maintain optimal performance. The reward function is expressed in Equation (1).

In Equation (1), $r_i$ denotes the total reward at step $i$, $d_i$ the distance traveled up to step $i$, $ca_i$ the amount of carbon dioxide emitted at step $i$, $fe_i$ the fuel consumed at step $i$, $wt$ the waiting time and $bgrw$ the grand reward given at the end of the episode. The formula for the grand reward is presented in Equation (2).
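Equation (1) itself is not reproduced here, so the following Python function is only a sketch of one plausible reading of the verbal description. It assumes five terms are simply summed:

- the −1 step penalty;
- the waiting-time penalty;
- the two distance-per-resource terms;
- the grand reward.

The function name and the small `eps` guard against division by zero are assumptions.

```python
def step_reward(distance, fuel, co2, waiting_time, grand_reward=0.0, eps=1e-6):
    """Per-step reward following one reading of Equation (1); all terms simply summed."""
    step_penalty = -1.0                          # fixed penalty at every step
    waiting_penalty = -1.0 * waiting_time        # waiting time multiplied by -1
    fuel_term = distance / (fuel + eps)          # distance per unit of fuel
    emission_term = distance / (co2 + eps)       # distance per unit of CO2
    # grand_reward is non-zero only at the final step of an episode
    return step_penalty + waiting_penalty + fuel_term + emission_term + grand_reward


r = step_reward(distance=10.0, fuel=2.0, co2=5.0, waiting_time=3.0)
# about -1 - 3 + 5 + 2 = 3.0 (slightly less because of eps)
```

The `eps` guard matters in simulation because a decelerating or stationary vehicle can report zero fuel use or emissions for a step.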

In Equation (2), ‘steps’ is the number of steps taken from the start until the termination condition is met, ‘max_steps’ is the maximum number of steps, ‘length’ is the length of the route and ‘distance’ is the distance traveled until the termination condition is met. The grand reward is assigned as follows:

- If the vehicle completes the entire route on time, it is awarded 1000 points.
- If it cannot complete the route on time, it receives a penalty equal to the remaining distance.
- If it finishes ahead of time, it receives a reward equal to the remaining time.

The value of 1000 points was chosen to correspond to completing the route in the optimal travel time with ideal fuel consumption. Speed was restricted to Vmax = 50 km/h, the speed limit for city traffic.
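The grand reward can be sketched in the same way from the description of Equation (2). This Python function makes two assumptions, both interpretations of the text rather than the published formula:

- time is counted in simulation steps;
- finishing early earns the remaining steps on top of the 1000-point bonus.

```python
def grand_reward(steps, max_steps, length, distance, completion_bonus=1000.0):
    """End-of-episode reward following one reading of Equation (2)."""
    if distance < length:
        # Route not completed in time: penalty equal to the remaining distance.
        return -float(length - distance)
    # Route completed: 1000-point bonus plus the unused steps (assumed additive).
    return completion_bonus + max(max_steps - steps, 0)


early = grand_reward(steps=900, max_steps=1000, length=6600, distance=6600)  # 1100.0
late = grand_reward(steps=1000, max_steps=1000, length=6600, distance=6000)  # -600.0
```

The route length of 6600 m in the usage lines matches Scenario 1. The step counts are illustrative.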

3.1.2. Development of the Neural Network in the Study

The neural network built for this study consists of an input layer with 14 neurons, two hidden layers with 128 neurons each and an output layer. The 14 input neurons correspond to the 14-dimensional state received from the environment. The output layer covers three actions: 0: accelerate, 1: decelerate and 2: maintain speed. The state variables ts, q, tlsc and de were obtained from sensors at the traffic lights. The 14-dimensional state provided to the input layer is expressed in Equation (3).

In Equation (3), the state variables are defined as follows:

- s: instantaneous speed of the vehicle;
- t: time required for the vehicle to pass through a green light at the next traffic signal on its route;
- c: current position of the vehicle;
- ts: status of the traffic light (red, green or yellow);
- tlsc: cycle value of the traffic light;
- d: distance traveled by the vehicle so far;
- q: number of vehicles queued at the traffic light;
- de: vehicle density at the traffic light;
- a: previous action;
- f: fuel consumption;
- ca: carbon dioxide emission.

The design of the neural network used in the study is given in Table 4.
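A minimal NumPy sketch of the described 14–128–128–3 network is shown below. It adds epsilon-greedy action selection and a speed update capped at Vmax = 50 km/h. The following choices are assumptions, not the study's settings:

- ReLU activations;
- He initialization;
- a 1 m/s² acceleration step and a 1 s time step;
- the function names.

In a SUMO setup, the resulting speed could be passed to `traci.vehicle.setSpeed`.

```python
import numpy as np

N_STATE, N_HIDDEN, N_ACTIONS = 14, 128, 3
ACCELERATE, DECELERATE, MAINTAIN = 0, 1, 2    # action indices as defined in the text
V_MAX = 50 / 3.6                              # 50 km/h speed limit in m/s


def init_params(rng):
    """He-initialized weights for a 14-128-128-3 fully connected Q-network (assumed ReLU)."""
    sizes = [N_STATE, N_HIDDEN, N_HIDDEN, N_ACTIONS]
    return [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def q_values(params, state):
    """Forward pass: ReLU on hidden layers, linear output giving one Q-value per action."""
    x = np.asarray(state, dtype=float)
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x


def select_action(params, state, epsilon, rng):
    """Epsilon-greedy: explore with probability epsilon, otherwise take argmax Q."""
    if rng.random() < epsilon:
        return int(rng.integers(N_ACTIONS))
    return int(np.argmax(q_values(params, state)))


def next_speed(speed, action, accel=1.0, dt=1.0):
    """Map an action to a new speed in m/s, clipped to [0, V_MAX]; accel and dt are assumed."""
    delta = {ACCELERATE: accel * dt, DECELERATE: -accel * dt, MAINTAIN: 0.0}[action]
    return float(np.clip(speed + delta, 0.0, V_MAX))


rng = np.random.default_rng(0)
params = init_params(rng)
state = np.zeros(N_STATE)
action = select_action(params, state, epsilon=0.1, rng=rng)
q = q_values(params, state)              # shape (3,)
capped = next_speed(13.5, ACCELERATE)    # about 13.89 m/s, the 50 km/h limit
stopped = next_speed(0.5, DECELERATE)    # 0.0, speed cannot go negative
```

Clipping at `V_MAX` enforces the restrictive parameter from the reward design regardless of what the network recommends. This keeps the suggested acceleration within the legal limit even early in training, when actions are mostly exploratory.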

Table 4.

Neural network layer parameters.

The parameters used in designing the neural network model shown in Table 4 were directly employed without modification for the other three algorithms that will be used for performance comparisons in the study. The additional model parameters used in the study are presented in Table 5.

Table 5.

Model parameters used in the study.

Table 5 lists the parameters of the four methods described in Section 2. Each method uses one or more of them.

Training was performed on a computer with mid-range specifications: an AMD Ryzen 5 processor (AMD, Santa Clara, CA, USA), 32 GB of RAM and a graphics card with a 192-bit memory bus and 12 GB of memory.

3.2. Results

This section first analyzes the results of the DQN algorithm trained on the three scenarios. It then compares them with the other three algorithms run on the same scenarios.

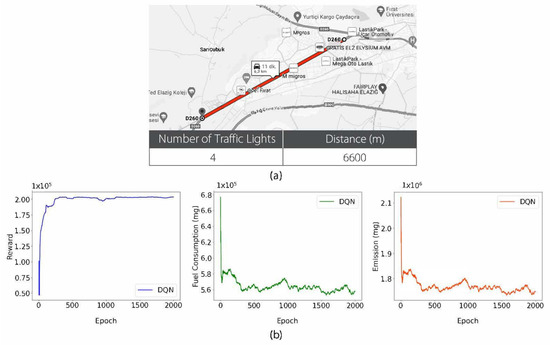

Overall, successful results were obtained for all four methods compared in the study. The training was conducted over 2000 steps. Graphs depicting the average reward, fuel consumption and emission values obtained from the training sessions of the DQN algorithm based on route characteristics for the first scenario are shown in Figure 4.

Figure 4.

For the first scenario: (a) Route properties. (b) DQN algorithm rewards, fuel consumption and emission values with respect to the number of steps.

The graph in Figure 4 shows that the DQN algorithm learned within a short period. Fuel consumption and emission values were collected at each step through the TraCI library during training. The average reward quickly reached its maximum, indicating that learning converged and fuel consumption was minimized. The emission values likewise reached their minimum within a short time. Average fuel consumption and average emissions differ in magnitude, yet their curves look identical because emissions are derived directly from the amount of fuel consumed. This result demonstrates that learning proceeded in line with the objective.
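The per-step collection of fuel and emission values can be sketched as below. `getFuelConsumption` and `getCO2Emission` are existing TraCI vehicle getters. The helper names, the vehicle ID `"ego"` and the configuration file name are hypothetical, and units follow whatever the installed SUMO version reports. A stand-in object replaces a live SUMO connection so the logic can be checked offline.

```python
from types import SimpleNamespace


def collect_step_metrics(conn, veh_id, log):
    """Append one step's fuel consumption and CO2 emission for `veh_id` to `log`."""
    log["fuel"].append(conn.vehicle.getFuelConsumption(veh_id))
    log["co2"].append(conn.vehicle.getCO2Emission(veh_id))


def episode_averages(log):
    """Mean per-step fuel consumption and CO2 emission over one episode."""
    n = max(len(log["fuel"]), 1)   # avoid division by zero for an empty log
    return sum(log["fuel"]) / n, sum(log["co2"]) / n


# With SUMO installed, `conn` would be the traci module itself, e.g.:
#   import traci
#   traci.start(["sumo", "-c", "scenario1.sumocfg"])   # hypothetical config name
#   while traci.simulation.getMinExpectedNumber() > 0:
#       traci.simulationStep()
#       if "ego" in traci.vehicle.getIDList():          # hypothetical vehicle ID
#           collect_step_metrics(traci, "ego", log)
#   traci.close()

# Offline check with a stand-in that mimics the two TraCI getters used above.
fake = SimpleNamespace(vehicle=SimpleNamespace(
    getFuelConsumption=lambda vid: 2.0,
    getCO2Emission=lambda vid: 5.0))
log = {"fuel": [], "co2": []}
for _ in range(3):
    collect_step_metrics(fake, "ego", log)
avg_fuel, avg_co2 = episode_averages(log)   # (2.0, 5.0)
```

Because SUMO's emission models compute CO2 from the same driving state that determines fuel use, the two logged series tend to move together. This is consistent with the near-identical curves described above.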

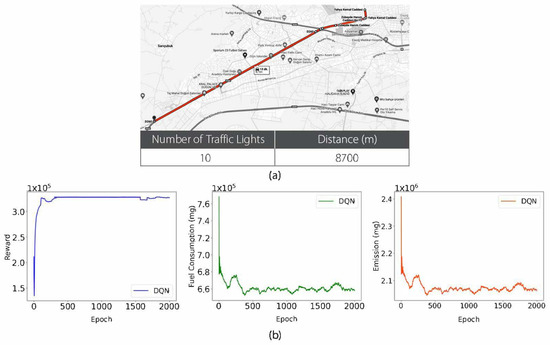

For the second scenario, the route used, the characteristics of the route and the graph of the values obtained as a result of the training study conducted using this route are shown in Figure 5.

Figure 5.

For the second scenario: (a) Route properties. (b) DQN algorithm rewards, fuel consumption and emission values with respect to the number of steps.

The route in Figure 5 is 8700 m long and includes 10 traffic lights. Figure 5 shows that learning was accomplished in line with the objective: the average reward was maximized, while average fuel consumption and average emissions were minimized. This indicates that the DQN algorithm succeeded in achieving optimum speed on the route and in reducing environmental impacts. It also shows that speed adjustments learned for a specific route can improve traffic flow on that route. The third route used in the study, its parameters and the graph obtained from training on it are shown in Figure 6.

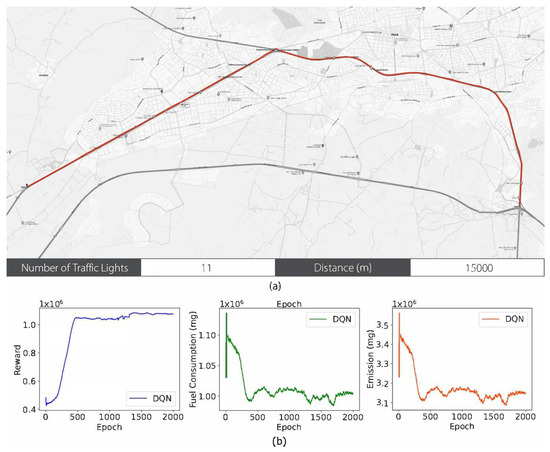

Figure 6.

For the third scenario: (a) Route properties. (b) DQN algorithm rewards, fuel consumption and emission values with respect to the number of steps.

The graph presented in Figure 6 shows that the model also completed the learning process successfully on the third route, maximizing the reward while simultaneously minimizing fuel consumption and emission release.

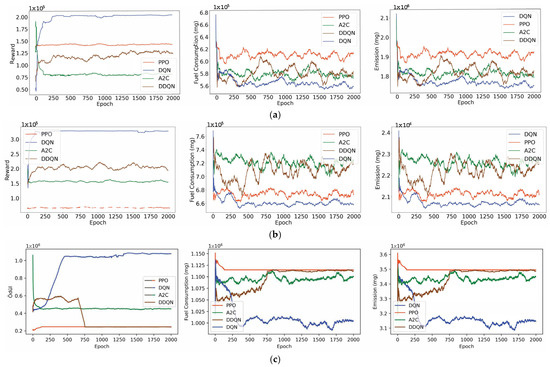

During training, the popular DRL methods PPO, A2C and DDQN were applied alongside the DQN method in all three scenarios, and the results were examined. Figure 7 compares the average reward, average fuel consumption and emission values obtained at the end of training. These graphs are used to visually compare the performance of the DRL methods under the different scenarios and thus to evaluate their efficiency comprehensively.

Panels a, b and c of Figure 7 clearly show that the DQN method is more successful than the other methods, reaching its maximum reward in fewer than 1000 steps. Although the other DRL algorithms used in the study also learned, they did not perform as well as DQN: in the same scenario, DQN yields lower fuel consumption and emission release. Taken together, the results show that fuel consumption increases as the time spent in traffic increases, but that raising the vehicle speed to shorten the driving time also increases fuel consumption. Recommending the most suitable acceleration, which balances these two effects, therefore keeps fuel consumption to a minimum.

Figure 7.

Reward, fuel consumption and emission values obtained as a result of the training. (a) First scenario. (b) Second scenario. (c) Third scenario.
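Saying that DQN "reaches its maximum reward in fewer than 1000 steps" presupposes a convergence criterion. One way to make such a criterion explicit and comparable across methods is sketched below. The trailing moving-average window and the 2% relative tolerance are hypothetical defaults, not values reported in the study.

```python
from typing import List, Optional


def moving_average(values: List[float], window: int) -> List[float]:
    """Trailing moving average; early points average over the samples seen so far."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed: List[float] = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # drop the sample that left the window
        smoothed.append(running / min(i + 1, window))
    return smoothed


def steps_to_converge(rewards: List[float], window: int = 10, tol: float = 0.02) -> Optional[int]:
    """First step at which the smoothed reward is within `tol` (relative) of its maximum."""
    if not rewards:
        return None
    smoothed = moving_average(rewards, window)
    best = max(smoothed)
    threshold = best - tol * abs(best)  # also valid when rewards are negative penalties
    for step, value in enumerate(smoothed):
        if value >= threshold:
            return step
    return None  # unreachable: the maximum itself always meets the threshold
```

Using `abs(best)` keeps the threshold below the maximum even when the reward is a negative fuel penalty, which is common in eco-driving formulations.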

4. Discussion

This study presents a DQN model designed to optimize traffic flow and reduce environmental impacts in urban traffic systems. The findings support the proposed hypotheses. Unlike predictive traffic models, the DQN approach relies on real-time data describing current traffic conditions, together with simulation, to make an optimal speed management decision in each situation. This methodology shows considerable potential for minimizing CO2 emissions and fuel consumption across various scenarios. Compared with the other DRL methods, the DQN algorithm consistently achieved lower emission levels, highlighting its robustness in complex traffic conditions. By reducing stop-and-go movements, the proposed model improves traffic flow and contributes to sustainable urban mobility objectives: it produces smoother speed transitions and fewer abrupt accelerations or decelerations, which indirectly improves driving comfort and safety. Although the study did not directly measure driving comfort and safety, the observed reduction in traffic congestion suggests qualitative benefits in these areas. Additionally, the economic savings from reduced fuel consumption, together with the environmental advantages of lower emissions, underscore the broader impact of this method. For real-world application, integrating the model with cloud-based data from urban traffic control systems, such as traffic light phase durations, traffic density and queue lengths, would be essential. Since many urban traffic systems already store such data, sharing it via APIs could streamline deployment. Furthermore, equipping vehicles with onboard trip computers containing Global System for Mobile Communications (GSM) modules would enable real-time data reception and optimization.
However, implementing such systems introduces challenges, including the need for data sharing between traffic management centers and vehicles and the additional cost of installing trip computers in non-autonomous, human-operated vehicles. The target audience for this model is vehicles that lack autonomous driving capabilities and vehicle-to-vehicle communication. Despite these limitations, the findings demonstrate the potential of a DRL-based approach to improve traffic flow, reduce emissions and offer a safer and more comfortable driving experience. Future research could extend the model with time series forecasting to enable predictive optimization; integrating forecasting methods with the DQN algorithm could further improve traffic flow and environmental outcomes. While the study underscores the contribution of DRL algorithms to determining optimal speeds, it also highlights the need to overcome implementation challenges, such as cost and infrastructure requirements, to fully realize their potential. Ultimately, this study represents an important step toward cleaner, more efficient and sustainable urban traffic systems.
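Because the approach chooses a vehicle acceleration rather than a signal timing, each real-time decision reduces to picking an action from the Q-values of the current state. A minimal sketch of the standard epsilon-greedy rule commonly used with DQN follows; it is not necessarily the authors' exact exploration schedule. The acceleration set, the 1 s step and the Q-values are hypothetical. In deployment, the Q-values would come from the trained network evaluated on a state built from data such as the phase durations, density and queue lengths discussed above, with epsilon set to 0.

```python
import random
from typing import Sequence, Tuple

# Hypothetical discrete action set (accelerations in m/s^2), not the study's actual set.
ACCELERATIONS: Tuple[float, ...] = (-3.0, -1.5, 0.0, 1.0, 2.0)


def select_acceleration(q_values: Sequence[float], epsilon: float, rng: random.Random) -> float:
    """Epsilon-greedy choice over Q(s, a), returned as an acceleration command."""
    if len(q_values) != len(ACCELERATIONS):
        raise ValueError("expected one Q-value per acceleration")
    if rng.random() < epsilon:
        index = rng.randrange(len(ACCELERATIONS))  # explore: uniform random action
    else:
        index = max(range(len(q_values)), key=lambda i: q_values[i])  # exploit: argmax_a Q(s, a)
    return ACCELERATIONS[index]


def next_speed(speed: float, accel: float, dt: float = 1.0) -> float:
    """Apply the chosen acceleration for one step of length dt, never going below 0 m/s."""
    return max(0.0, speed + accel * dt)


if __name__ == "__main__":
    rng = random.Random(42)
    q = [0.1, 0.5, 0.9, 0.2, -1.0]  # made-up Q-values for one traffic state
    a = select_acceleration(q, epsilon=0.0, rng=rng)  # greedy, as at deployment time
    print(a, next_speed(10.0, a))  # 0.0 10.0
```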

5. Conclusions

This study explored the viability of DRL methods, particularly DQN, for improving urban traffic flow and reducing environmental impacts. Trained on real-world traffic data in simulation environments, the DQN method outperformed the other DRL methods, namely PPO, DDQN and A2C, in three scenarios with different levels of complexity and traffic density.
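Of the methods compared, DQN and DDQN differ only in how the bootstrap target of the Q-update is formed. The standard formulations are sketched below for a single transition; the discount factor and Q-values are illustrative, not the study's settings.

```python
from typing import Sequence


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def dqn_target(reward: float, next_q_target: Sequence[float], gamma: float, done: bool) -> float:
    """DQN: y = r + gamma * max_a' Q_target(s', a'); no bootstrap at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def ddqn_target(
    reward: float,
    next_q_online: Sequence[float],
    next_q_target: Sequence[float],
    gamma: float,
    done: bool,
) -> float:
    """Double DQN: the online network picks a', the target network scores it."""
    if done:
        return reward
    return reward + gamma * next_q_target[argmax(next_q_online)]


if __name__ == "__main__":
    q_online = [3.0, 0.0, 1.0]  # made-up Q-values of s' from the online network
    q_target = [1.0, 2.0, 0.5]  # made-up Q-values of s' from the target network
    print(round(dqn_target(-1.0, q_target, 0.9, False), 3))  # 0.8
    print(round(ddqn_target(-1.0, q_online, q_target, 0.9, False), 3))  # -0.1
```

Decoupling action selection from evaluation is what lets DDQN reduce the overestimation bias of the plain max operator.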

The findings of this study are as follows:

- Fuel Consumption Reduction: In the most complex traffic conditions, those of Scenario 3, the DQN algorithm consumed up to 15% less fuel than the other DRL methods.

- Emission Reduction: Across all scenarios, the DQN method reduced CO2 emissions by an average of 13% compared with the baseline methods.

- Improved Traffic Flow: The proposed method minimized stop-and-go movements and optimized vehicle acceleration, leading to a 22% decrease in the average time spent at traffic lights.
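The percentages above are relative reductions, and an "average" reduction across scenarios can be computed in two ways that generally give different numbers. A minimal sketch of both follows, using made-up values rather than the study's data; which aggregation underlies the 13% figure is not stated in this section, so neither should be read as the authors' procedure.

```python
from typing import Sequence, Tuple


def percent_reduction(baseline: float, proposed: float) -> float:
    """Relative reduction of `proposed` against `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (baseline - proposed) / baseline


def mean_of_reductions(pairs: Sequence[Tuple[float, float]]) -> float:
    """Average of per-scenario reductions: every scenario weighs the same."""
    return sum(percent_reduction(b, p) for b, p in pairs) / len(pairs)


def pooled_reduction(pairs: Sequence[Tuple[float, float]]) -> float:
    """Reduction of the totals: scenarios with larger values weigh more."""
    return percent_reduction(sum(b for b, _ in pairs), sum(p for _, p in pairs))


if __name__ == "__main__":
    # Made-up (baseline, DQN) totals for two scenarios; not data from the study.
    pairs = [(100.0, 96.0), (200.0, 150.0)]
    print(mean_of_reductions(pairs))  # 14.5
    print(pooled_reduction(pairs))  # 18.0
```

Reporting which of the two is used makes figures such as the 13% average and the 22% waiting-time decrease directly reproducible.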

While this study is promising, it has limitations. The simulation-based approach may not capture real-world complexity, such as traffic incidents or variation in human driving behavior. Its reliance on accurate traffic data further restricts its applicability in areas where such data are unavailable. In addition, the lack of integration with multi-modal transportation systems prevents it from serving as a complete optimization solution.

Future research could focus on incorporating real-time traffic data, investigating full multi-modal systems and adding predictive capabilities that would allow the most likely traffic bottleneck scenarios to be explored.

Author Contributions

Conceptualization, M.K.; methodology, Y.Y.; software, Y.Y.; validation, M.K. and Y.Y.; formal analysis, M.K.; investigation, Y.Y.; resources, Y.Y.; data curation, Y.Y.; writing—original draft preparation, Y.Y.; writing—review and editing, Y.Y. and M.K.; visualization, Y.Y.; supervision, M.K.; project administration, M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Castillo, O.; Álvarez, R.; Domingo, R. Opportunities and Barriers of Hydrogen–Electric Hybrid Powertrain Vans: A Systematic Literature Review. Processes 2020, 8, 1261.

2. Rawat, A.; Garg, C.P.; Sinha, P. Analysis of the key hydrogen fuel vehicles adoption barriers to reduce carbon emissions under net zero target in emerging market. Energy Policy 2024, 184, 113847.

3. Yiğit, Y.; Karabatak, M. Akıllı Şehirler ve Trafik Güvenliği için Sürüş Kontrolü Uygulaması [Driving Control Application for Smart Cities and Traffic Safety]. Fırat Üniversitesi Mühendislik Bilim. Derg. 2023, 35, 761–770.

4. Li, J.; Wu, X.; Fan, J.; Liu, Y.; Xu, M. Overcoming driving challenges in complex urban traffic: A multi-objective eco-driving strategy via safety model based reinforcement learning. Energy 2023, 284, 128517.

5. Çeltek, S.A. Şehir Içi Trafik Sinyal Ağinin Takviyeli Öğrenme Algoritmalari Ve Nesnelerin Interneti Tabanli Kontrolü [Reinforcement Learning Algorithms and Internet of Things Based Control of the Urban Traffic Signal Network]; Konya Teknik Üniversitesi: Konya, Türkiye, 2021.

6. Liu, Z.; Hu, J.; Song, T.; Huang, Z. A Methodology Based on Deep Reinforcement Learning to Autonomous Driving with Double Q-Learning. In Proceedings of the 2021 7th International Conference on Computer and Communications, Chengdu, China, 10–13 December 2021; pp. 1266–1271.

7. Liu, B.; Sun, C.; Wang, B.; Sun, F. Adaptive Speed Planning of Connected and Automated Vehicles Using Multi-Light Trained Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2022, 71, 3533–3546.

8. Cabannes, T.; Li, J.; Wu, F.; Dong, H.; Bayen, A.M. Learning Optimal Traffic Routing Behaviors Using Markovian Framework in Microscopic Simulation. In Proceedings of the Transportation Research Board Annual Meeting, Washington, DC, USA, 12–16 January 2020.

9. Bhuiyan, M.; Kabir, M.A. Vehicle Speed Prediction based on Road Status using Machine Learning. Adv. Res. Energy Eng. 2020, 2, 1–9.

10. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

11. Mnih, V. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

12. Zhou, S.; Chen, X.; Li, C.; Chang, W.; Wei, F.; Yang, L. Intelligent Road Network Management Supported by 6G and Deep Reinforcement Learning. IEEE Trans. Intell. Transp. Syst. 2024, PP, 1–9.

13. Zhang, Z.; Fei, Y.; Fu, D. A Deep Reinforcement Learning Traffic Control Model for Pedestrian and Vehicle Evacuation in the Parking Lot. Physica A Stat. Mech. Appl. 2024, 646, 129876.

14. Wang, T.; Qu, D.; Wang, K.; Dai, S. Deep Reinforcement Learning Car-Following Control Based on Multivehicle Motion Prediction. Electronics 2024, 13, 1133.

15. Hua, C.; Fan, W. Safety-oriented dynamic speed harmonization of mixed traffic flow in nonrecurrent congestion. Physica A Stat. Mech. Appl. 2024, 634, 129439.

16. Gao, C.; Wang, Z.; Wang, S.; Li, Y. Mitigating oscillations of mixed traffic flows at a signalized intersection: A multiagent trajectory optimization approach based on oscillation prediction. Physica A Stat. Mech. Appl. 2024, 635, 129538.

17. Makantasis, K.; Kontorinaki, M.; Nikolos, I. A Deep Reinforcement Learning Driving Policy for Autonomous Road Vehicles. arXiv 2019, arXiv:1907.05246.

18. Li, J.; Fotouhi, A.; Pan, W.; Liu, Y.; Zhang, Y.; Chen, Z. Deep reinforcement learning-based eco-driving control for connected electric vehicles at signalized intersections considering traffic uncertainties. Energy 2023, 279, 128139.

19. Pérez-Gil, Ó.; Barea, R.; López-Guillén, E.; Bergasa, L.M.; Gómez-Huélamo, C.; Gutiérrez, R.; Díaz-Díaz, A. Deep reinforcement learning based control for Autonomous Vehicles in CARLA. Multimed. Tools Appl. 2022, 81, 3553–3576.

20. EL Sallab, A.; Abdou, M.; Perot, E.; Yogamani, S. Deep Reinforcement Learning framework for Autonomous Driving. Electron. Imaging 2017, 29, 70–76.

21. Zhang, Z. Autonomous Car Driving Based on Deep Reinforcement Learning. In Proceedings of the 2022 International Conference on Economics, Smart Finance and Contemporary Trade (ESFCT 2022), Xi’an, China, 22–24 July 2022; Atlantis Press: Amsterdam, The Netherlands, 2022; pp. 835–842.

22. Ke, P.; Yanxin, Z.; Chenkun, Y. A Decision-making Method for Self-driving Based on Deep Reinforcement Learning. AIACT 2020 IOP Publ. J. Phys. Conf. Ser. 2020, 1576, 012025.

23. Zhao, J.; Qu, T.; Xu, F. A Deep Reinforcement Learning Approach for Autonomous Highway Driving. IFAC-PapersOnLine 2020, 53, 542–546.

24. Zou, Y.; Ding, L.; Zhang, H.; Zhu, T.; Wu, L. Vehicle Acceleration Prediction Based on Machine Learning Models and Driving Behavior Analysis. Appl. Sci. 2022, 12, 5259.

25. Haklay, M.; Weber, P. OpenStreetMap: User-Generated Street Maps. IEEE Pervasive Comput. 2008, 7, 12–18.

26. Codeca, L.; Cahill, V. Using Deep Reinforcement Learning to Coordinate Multi-Modal Journey Planning with Limited Transportation Capacity. SUMO Conf. Proc. 2022, 2, 13–32.

27. Reichsöllner, E.; Freymann, A.; Sonntag, M.; Trautwein, I. SUMO4AV: An Environment to Simulate Scenarios for Shared Autonomous Vehicle Fleets with SUMO Based on OpenStreetMap Data. SUMO Conf. Proc. 2022, 3, 83–94.

28. Boz, C.; Gülgen, F. Sumo Trafik Simülasyonu Kullanılarak Trafik Düzenlemelerinin Etkilerinin Gözlenmesi [Observing the Effects of Traffic Regulations Using SUMO Traffic Simulation]. In Proceedings of the VII. Uzaktan Algılama-CBS Sempozyumu (UZAL-CBS 2018), Eskişehir, Türkiye, 18–21 September 2018; Volume 1, pp. 467–471.

29. Wegener, A.; Piórkowski, M.; Raya, M.; Hellbrück, H.; Fischer, S.; Hubaux, J.P. TraCI: An Interface for Coupling Road Traffic and Network Simulators. In Proceedings of the 11th Communications and Networking Simulation Symposium, Ottawa, ON, Canada, 14–17 April 2008; Association for Computing Machinery: New York, NY, USA, 2008.

30. Hua, C.; Fan, W. Dynamic speed harmonization for mixed traffic flow on the freeway using deep reinforcement learning. IET Intell. Transp. Syst. 2023, 17, 2519–2530.

31. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

32. Anwar, M.; Wang, C.; De Nijs, F.; Wang, H. Proximal Policy Optimization Based Reinforcement Learning for Joint Bidding in Energy and Frequency Regulation Markets. In Proceedings of the 2022 IEEE Power & Energy Society General Meeting (PESGM), Denver, CO, USA, 17–21 July 2022; IEEE.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).