Abstract

This study presents a novel approach to inclusive education that integrates extended reality (XR) and generative artificial intelligence (AI) into an immersive and adaptive learning platform for students with special educational needs. Building on existing solutions, the approach combines XR and generative AI to provide personalized, accessible, and interactive learning experiences tailored to individual requirements. The framework pairs an intuitive Unity XR-based interface with a generative AI module that enables near real-time customization of content and interactions. The study also examines related generative AI initiatives that promote inclusion through enhanced communication tools, educational support, and customizable assistive technologies. The motivation for this work is the pressing need to address the limitations of traditional educational methods, which often fail to meet the diverse needs of learners with special educational requirements. Integrating XR and generative AI has transformative potential for creating adaptive, immersive, and inclusive learning environments. The approach aims to adapt in near real time to each learner’s progress and accessibility needs, addressing critical barriers in existing systems such as static content and a lack of inclusivity. The research outlines a pathway toward more inclusive and equitable education, with the potential to enhance opportunities for learners with diverse needs and to contribute to broader social integration and equity in education.

1. Introduction

The educational domain is continually evolving, seeking new methodologies and technologies to create more engaging and effective learning environments. One of the most notable recent developments in education has been the integration of extended reality (XR) technologies. XR, which includes virtual reality (VR), augmented reality (AR), and mixed reality (MR), blends physical and digital environments to varying degrees, providing immersive and interactive experiences that go beyond what traditional methods can offer. These shifts are part of a broader transformation across sectors as we transition from the fourth to the emerging fifth industrial revolution, which promises to integrate digital technologies further into human environments [1]. As e-learning and m-learning continue to gain momentum, particularly through bring-your-own-device (BYOD) strategies supported by robust cybersecurity policies, the role of XR technologies in reshaping education becomes increasingly pronounced [2].

Inclusive education is essential to ensure equitable learning opportunities for all students, yet traditional methods often fail to address the complex needs of learners with disabilities. Advances in XR technologies and AI offer an opportunity to narrow this gap by creating personalized, accessible, and interactive learning environments. This educational approach seeks to address the diverse needs of learners, including those with disabilities, different cultural backgrounds, and varying learning styles. The goal is to provide equitable access to quality education, ensuring that every student can participate fully in the learning process while promoting social integration, reducing discrimination, and increasing the sense of belonging and respect among students.

The intersection of XR technologies and inclusive education holds significant promise. XR can offer personalized and adaptable learning experiences, making education more accessible and engaging for all students, regardless of their individual challenges. For example, VR can simulate real-world scenarios for students with mobility issues, AR can provide real-time translations for non-native speakers, and MR can offer interactive, hands-on experiences for students with different learning preferences. These capabilities enable educators to build inclusive classrooms that embrace the challenges and strengths of their students.

Recent educational reforms have emphasized inclusive practices, prioritizing equitable access and participation for learners with special needs. Foundational studies, such as those by Ainscow et al. (2006), highlight the importance of adaptive teaching strategies and assistive technologies in addressing diverse learner needs [3]. These principles have been further advanced by research advocating the integration of inclusive practices with emerging educational technologies [4]. Through the application of XR technologies, these principles can be put into practice, improving educational access and engagement [3,5]. XR technologies offer transformative potential for inclusive education by delivering immersive, adaptive, and personalized learning experiences. They can help address sensory, cognitive, and mobility challenges, allowing learners to engage interactively in meaningful, controlled settings. XR’s ability to create multisensory environments and provide personalized simulations for learners with disabilities enhances skill development and cognitive engagement in a low-risk, engaging manner [6,7].

While XR technologies are gaining attention, other approaches, such as e-learning platforms, gamified tools, and assistive technologies, have also contributed to advancing inclusive education. E-learning platforms like Moodle and Canvas support inclusivity through features such as adaptive learning paths, accessibility tools, and integration with assistive technologies, including screen readers, speech-to-text software, and adaptive keyboards [8]. Similarly, gamified tools like Kahoot! and Quizlet enhance engagement by catering to diverse cognitive styles with interactive quizzes and rewards [9]. However, despite these advancements, such tools lack the immersive and multisensory capabilities inherent to XR technologies.

By integrating XR with generative AI, our proposed framework aims to address these limitations through near real-time customization and adaptability, filling critical gaps in existing approaches. The framework extends the scope of inclusivity by providing personalized, interactive, and immersive learning environments that better support the diverse needs of learners, including those with disabilities.

Compared to traditional approaches, XR technologies combine immersive environments with real-time adaptability, offering highly personalized and engaging learning experiences that promote inclusion through collaborative, gamified, and multisensory settings [9]. However, challenges such as high costs, limited accessibility, and the need for teacher training constrain XR’s integration into mainstream education, whereas traditional tools like e-learning platforms and gamified systems remain more affordable and user-friendly [10].

The use of extended reality (XR) technologies and generative artificial intelligence (AI) can provide opportunities for development and learning for people with special educational needs. Recent studies have highlighted the efficacy of XR technologies in compensating for specific difficulties experienced by individuals with special educational needs. The immersive nature of these technologies proves instrumental in addressing cognitive deficits, attention disorders, and sensory processing challenges. Romero-Ayuso et al. [11] illustrate the progress made in using VR-based interventions to enhance sustained attention, particularly among children with attention-deficit/hyperactivity disorder (ADHD). These interventions showed improvements in measures of attentional vigilance, pointing to new directions for enhancing cognitive function.

In this article, we will explore the current state of inclusive education, delve into the various XR technologies, and examine their potential in addressing the challenges faced by traditional educational methods. Through case studies and real-world examples, we will illustrate the transformative impact of XR in creating more inclusive and effective learning environments. We will also discuss the technological and pedagogical considerations for implementing XR in schools, the challenges and limitations of these technologies, and the prospects of XR in education.

The structure of the paper is as follows. The Introduction outlines the motivation for integrating XR technologies and generative AI in inclusive education, emphasizing their potential to address challenges faced by students with special needs. The Related Work section reviews existing approaches, identifying gaps that the proposed framework addresses. The XR Technologies and Inclusive Education section explores the benefits, applications, and challenges of XR in supporting inclusion. The Immersive Environment Architecture section presents the proposed architecture that uses extended reality (XR) technologies and generative artificial intelligence (AI) to create an adaptive, inclusive educational platform tailored to children with special needs. The Methodology and Metrics section details the evaluation approach and criteria for assessing effectiveness. The Discussion highlights the framework’s innovations and compares it to existing solutions, while the Conclusions summarize the findings and propose recommendations for advancing XR adoption in inclusive education.

2. Related Work

Immersive technologies have shown significant potential in compensating for specific difficulties experienced by people with special educational needs, including through the use of XR technologies for learning or skill training. For example, XR technology can be used to improve cognitive functioning (e.g., attention) among children with attention-deficit/hyperactivity disorder (ADHD). While many studies focus on XR technologies for educational purposes, the contributions and limitations of these technologies have rarely been examined in context.

The study by Romero-Ayuso et al. [11] showed that VR-based interventions are effective in improving sustained attention in children with ADHD. Improvements were observed in measures of attentional vigilance, with an increase in correct responses and a decrease in errors of omission. No improvements were observed in measures of impulsivity.

Corrigan et al. [12] aimed to evaluate the impact of immersive VR-based interventions compared to control conditions on measures of cognition. The findings revealed substantial effect sizes favoring VR-based interventions concerning global cognitive functioning, attention, and memory outcomes.

These studies also have limitations. The VR-based interventions examined by Romero-Ayuso et al. (2021) [11] improved sustained attention in children with ADHD, but their scope was limited to attentional vigilance, without addressing emotional or behavioral outcomes. Similarly, Corrigan et al. (2023) highlighted improvements in global cognitive functioning through immersive VR but noted challenges related to user adaptability and cost-efficiency in large-scale implementations [12].

Chen et al. (2022) provided robust evidence for XR and telehealth interventions in enhancing outcomes for children and adolescents with autism spectrum disorder (ASD) [14]. Their results demonstrated improvements across multiple areas, including social interaction, communication and speech, emotion recognition and control, daily living skills, attention, and anxiety symptom reduction. Additionally, benefits were observed in acceptance, engagement, problem behavior reduction, pretend play, contextual processing, and insomnia control. However, the study highlighted the need for more dynamic, AI-driven content generation to address diverse sensory and cognitive profiles. This underscores the need for systems capable of real-time content personalization, a gap that our proposed XR and generative AI integration aims to address.

Hutson’s study [13] highlights the beneficial role of VR technology and the metaverse in opening new avenues of communication and collaboration for people with ASD. Hutson and McGinley [15] argue that XR technologies can provide a supportive and accommodating environment for people with sensory processing disorders (SPD) by focusing on four key factors: indirect social engagement, digital communication preferences, sensory sensitivity, and avatar-based communication.

Many educational institutions and programs have also integrated XR technologies to enhance inclusive education, demonstrating the practical applications and benefits of XR in real-world settings. Related initiatives use generative AI to support the inclusion of people with special needs, focusing on:

- Communication aids, such as augmentative and alternative communication tools created with the help of generative AI to generate speech for non-verbal children [16];

- Educational support, such as adaptive learning platforms that use generative AI to personalize interactive educational content to each learner’s needs [17];

- Emotional support, such as virtual companions that adapt to children’s behavior and emotional state and interact with them in a positive way [18];

- Social skills training, such as interactive social scenarios and avatars, where generative AI can help children practice and improve their social skills by simulating social situations [19];

- Speech and language therapy, such as interactive language exercises with the help of generative AI. In this way, children with speech difficulties can practice pronunciation and grammar and can improve their vocabulary [20];

- Cognitive skill enhancement, such as personalized brain training for children that can stimulate their cognitive abilities [21,22];

- Sensory integration activities that can include personalized sensory experiences where generative AI can create virtual environments corresponding to the children’s sensory sensitivities [23];

- Behavioral therapy support, through interactive behavior modification tools developed with generative AI that offer a safe and controlled environment in which children can practice and strengthen positive behaviors [24];

- Customizable assistive technologies [25], such as adaptable interfaces that use integrated generative AI to adjust to the child’s specific needs and capabilities.

At the national level, we have not identified relevant studies or applications, which underlines the need to create specialized applications for children with special needs. The main novelty and advantage of the virtual environment proposed in this article is the integration of XR technologies with generative AI to improve attention and the understanding of abstract knowledge among children with special needs.

Our approach uniquely combines XR and generative AI technologies to overcome limitations identified in existing works. Unlike traditional XR platforms, which often rely on static content, our system dynamically adjusts learning environments to each learner’s progress and preferences. Moreover, by incorporating accessibility standards such as WCAG 2 and the W3C XR Accessibility User Requirements, our framework aims to ensure inclusivity for users with diverse abilities, addressing a critical limitation of many existing systems.

High implementation costs and the need for extensive teacher training are recurring challenges across XR initiatives. For example, Hutson (2022) emphasized the accessibility of VR for individuals with ASD but highlighted barriers to scalability and affordability [13]. Our proposed system aims to address these issues by using cost-effective devices and user-friendly interfaces, so that it remains a viable solution for underfunded educational institutions.

The proposed framework is designed to overcome the limitations identified in related works and to raise the level of inclusivity and adaptability in education. By situating its contributions within the landscape of XR technologies, this study highlights the framework’s potential to mitigate the limitations of existing methodologies.

3. XR Technologies and Inclusive Education

3.1. How Can XR Technologies Increase Inclusion?

Extended reality (XR) technologies offer innovative solutions to the most important challenges faced in inclusive education. By creating immersive and interactive learning environments, XR technologies can address the diverse needs of all students, making education more accessible and engaging.

XR technologies can create tailored learning experiences that adapt to individual students’ needs. For example, VR can simulate real-world scenarios that help students with disabilities practice life skills in a controlled environment. AR can provide real-time translations for non-native speakers, while MR can offer interactive lessons that adjust to different learning styles and paces. Additionally, ontology-based frameworks can further enhance these adaptive capabilities by providing structured knowledge representation. Ontologies enable the mapping of learner profiles to specific educational content, ensuring that the learning experience aligns closely with individual needs and goals. Studies such as Băjenaru [26] have explored how ontology-driven approaches can support adaptive learning and personalized educational environments.
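To make the ontology-based mapping more concrete, the following is one possible way to link learner profiles to content, written in Python. It is an illustration under simplifying assumptions, not the approach of Băjenaru [26] or of this project: the ontology is reduced to a plain dictionary instead of an OWL/RDF model, and the need labels, adaptation names, and the functions `required_adaptations` and `match_content` are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical toy ontology: each learner need is related to the content
# adaptations that support it (a flat stand-in for OWL/RDF class relations).
NEED_TO_ADAPTATIONS: dict[str, set[str]] = {
    "visual_impairment": {"audio_narration", "high_contrast"},
    "hearing_impairment": {"captions", "visual_cues"},
    "attention_deficit": {"short_segments", "reduced_distractors"},
}


@dataclass
class LearnerProfile:
    learner_id: str
    needs: set[str]
    goals: set[str] = field(default_factory=set)


@dataclass
class ContentItem:
    item_id: str
    topic: str
    adaptations: set[str]


def required_adaptations(profile: LearnerProfile) -> set[str]:
    """Union of all adaptations linked to the learner's needs (unknown needs are ignored)."""
    required: set[str] = set()
    for need in profile.needs:
        required |= NEED_TO_ADAPTATIONS.get(need, set())
    return required


def match_content(profile: LearnerProfile, catalogue: list[ContentItem]) -> list[ContentItem]:
    """Keep items that serve a learner goal and provide every required adaptation."""
    required = required_adaptations(profile)
    return [
        item
        for item in catalogue
        if item.topic in profile.goals and required <= item.adaptations
    ]
```

For example, a learner with an attention deficit whose goal is fractions would only be offered fraction content that provides both short segments and reduced distractors. A real ontology would additionally support class hierarchies and inference (for instance, a subtype of a need inheriting its adaptations), which this flat mapping does not capture.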

Also, the immersive nature of XR can significantly boost student engagement and motivation. Students are more likely to participate actively and retain information when they can interact with 3D models, virtual simulations, and augmented content. This is particularly beneficial for students with attention deficits or those who struggle with traditional learning methods.

XR technologies can make education more accessible for students with physical, sensory, or cognitive disabilities. For instance, VR can provide virtual field trips for students who cannot physically visit certain locations. AR can overlay digital information onto real-world objects, aiding students with visual impairments. MR can create interactive environments that facilitate hands-on learning for students with various disabilities.

3.2. Case Studies and Examples of XR in Educational Settings

The transformative potential of XR technologies in inclusive education is best demonstrated through real-world applications and good practices. These case studies show how various institutions have implemented XR to address diverse learning needs, enhance engagement, and improve educational outcomes. From social skills training for students with autism to augmented reality for language learning and mixed reality for STEM (Science, Technology, Engineering, and Mathematics) education, these examples illustrate the broad contribution of XR technologies to a more inclusive and effective educational environment. The following case studies provide detailed insights into how XR is being used to break down barriers and create new opportunities for learners of all abilities.

One of the most promising applications of VR in inclusive education is its use in social skills training for students with autism spectrum disorder (ASD). VR allows these students to practice social interactions in a safe and controlled environment, where they can make mistakes without real-world consequences.

At the University of Texas at Dallas, researchers have developed a VR program called “Bravemind” to help students with ASD improve their social skills. The program immerses students in various social scenarios, such as job interviews, classroom interactions, and casual conversations. Through repeated practice in these simulated environments, students can build confidence and improve their social competencies. Research has found that participants who used the VR program made significant improvements in social engagement and communication skills compared to those who did not use the program [27].

AR applications have proven to be effective tools for language learning, providing real-time translations and contextual information that enhance comprehension and engagement for non-native speakers. In this regard, the Smithsonian Institution has integrated AR into its exhibits to support language learning. Visitors can use their smartphones or tablets to scan objects and receive information in multiple languages. This initiative not only makes the exhibits more accessible to non-English speakers but also enhances their learning experience by providing additional context and interactive content. According to a report, this AR application has increased visitor engagement and improved language comprehension among diverse audiences [28].

VR can provide experiential learning opportunities that are otherwise impossible due to physical, financial, or safety constraints. Stanford University’s Virtual Human Interaction Lab (VHIL) has developed a series of VR simulations designed to teach empathy and social justice. One notable program, “Becoming Homeless”, immerses users in the experience of losing their job and home, helping them understand the challenges faced by homeless individuals. Research shows that participants who completed the VR simulation were more likely to exhibit empathetic behavior and support policies aimed at helping the homeless [29].

Extended reality (XR) technologies are not only improving accessibility but are also transforming instructional approaches in STEM education. For example, the XR-Ed framework serves as a comprehensive guide for designing learner-centered XR systems, highlighting key principles such as physical accessibility, social interactivity, and real-time assessment strategies [30].

A notable application of XR lies in its ability to address barriers to accessibility in STEM education. Initiatives such as the development of XR-based modular learning packages at the University of South-Eastern Norway illustrate how educators can integrate theoretical knowledge with practical, workplace-relevant skills. These modules encourage collaboration between academic staff and industry professionals, enabling real-time interactions and immersive, hands-on training in XR environments [31].

Similarly, the integration of XR into physics education highlights its ability to make abstract scientific concepts more understandable. By providing virtual simulations of complex physical phenomena, XR improves the engagement and accessibility of science education [32].

These case studies highlight the significant potential of XR technologies in creating more inclusive and effective educational environments. By meeting diverse learning needs and increasing student engagement, XR can substantially enhance educational outcomes for all learners. As technology advances, the adoption of XR in education is expected to grow, providing even more innovative solutions to support inclusivity.

3.3. Benefits of XR Technologies for Students with Disabilities

XR technologies offer numerous benefits for students with disabilities, helping to create a more inclusive and supportive learning environment. XR can provide multisensory experiences that cater to different sensory needs. For example, VR can offer visual and auditory stimuli that help students with sensory processing disorders engage more fully in learning activities. AR can enhance visual information with auditory cues, aiding students with visual impairments.

XR allows for flexible and customizable learning environments that can be adapted to suit individual needs. Students can learn at their own pace, revisit challenging concepts, and engage with content in ways that best suit their learning styles. This flexibility is particularly beneficial for students with learning disabilities who may require additional time and support.

XR technologies can promote greater independence among students with disabilities. For instance, VR can simulate real-world tasks, such as navigating public transportation or shopping, allowing students to practice and gain confidence in their abilities. AR can provide step-by-step guidance for complex tasks, enabling students to complete activities independently.

XR can create rich visual experiences that enhance understanding and retention for visual learners. 3D models, virtual simulations, and augmented content can bring abstract concepts to life, making them more tangible and easier to grasp. Also, XR can integrate auditory elements, such as narrated content and sound effects, to support auditory learners. For example, VR environments can include verbal explanations and audio cues that reinforce learning.

XR can provide interactive and hands-on experiences that are ideal for kinesthetic learners. MR allows students to manipulate virtual objects and engage in physical activities within a digital environment, promoting active learning and engagement.

In summary, XR technologies hold immense potential in promoting inclusive education by addressing the diverse needs of all students. Through personalized learning experiences, enhanced engagement, and improved accessibility, XR can create more inclusive and effective educational environments.

3.4. Challenges and Limitations of XR in Inclusive Education

While XR technologies hold great promise for promoting inclusive education, they also present several challenges and limitations that must be addressed to fully realize their potential. These challenges include high costs, technological limitations, potential health effects, and the need for teacher training and curriculum integration.

One of the most significant barriers to the widespread adoption of XR technologies in education is the high cost of purchasing and maintaining the necessary equipment. VR headsets, AR glasses, and MR devices can be prohibitively expensive for many educational institutions, especially those in underfunded districts. Additionally, there are costs related to developing or purchasing XR content, upgrading infrastructure to support XR applications, and ongoing maintenance and technical support. To reduce hardware costs, we use widely available devices: Meta Quest 2 and Meta Quest 3 headsets, tablets, and smartphones. A further limitation is that existing solutions are frequently fragmented, focusing on isolated aspects of learning rather than offering a comprehensive, integrated platform. Moreover, developing high-quality, diverse, and culturally relevant XR content is time-consuming and resource-intensive, and the lack of such content could limit the effectiveness of XR platforms for inclusive education.

Despite rapid advancements, XR technologies still face several technical challenges. Issues such as limited field of view, latency, and the need for high computational power can reduce the effectiveness and usability of XR applications. Additionally, there are concerns about the quality and accuracy of the virtual content, which can impact the learning experience. Connectivity issues and the need for robust Internet infrastructure are also significant challenges, particularly in rural or underserved areas [33].

Frequent use of XR technologies can have potential health implications, including eye strain, motion sickness, and other discomforts. VR can cause “cybersickness”, a condition similar to motion sickness, which can deter students from engaging with the technology. There are also concerns about the long-term effects of prolonged exposure to XR environments on vision and posture [34].

Effective integration of XR technologies in the classroom requires comprehensive teacher training. Educators need to be proficient in using XR tools and designing XR-based learning activities that align with curriculum standards. Many teachers may lack the necessary skills or confidence to implement XR technologies effectively, and ongoing professional development is essential to ensure successful adoption.

Integrating XR technologies into existing curricula can be challenging. Educational content must be carefully designed to ensure that it aligns with learning objectives and enhances, rather than distracts from, the educational experience. There is a need for more research and development of high-quality XR educational content that is pedagogically sound and accessible to all students.

Ensuring equitable access to XR technologies is a major concern. Students from low-income backgrounds or schools with limited resources may not have the same opportunities to benefit from XR as their more affluent peers. Additionally, XR technologies need to be accessible to students with disabilities, which requires thoughtful design. Not all students, particularly those with physical or cognitive disabilities, may be able to fully use XR devices. For instance, students prone to motion sickness or with sensory sensitivities may find immersive environments uncomfortable, and the limited availability of content adapted to specific disabilities may restrict the usability of XR platforms. To address this, we follow both the WCAG 2 standards [35] and the W3C XR Accessibility User Requirements [36] when designing and developing XR environments, in order to address multiple user needs.
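As an example of how one WCAG 2 requirement can be checked automatically, the sketch below implements the WCAG 2 contrast-ratio formula (the relative luminance of sRGB colors and the ratio (L1 + 0.05) / (L2 + 0.05)) together with the thresholds of success criterion 1.4.3, Contrast (Minimum): 4.5:1 for normal text and 3:1 for large text. Applying it to text shown on XR interface panels is an assumption made for illustration: WCAG 2 was written for web content, and in a headset the perceived contrast also depends on rendering and background conditions.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, as defined in WCAG 2."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of the linearized R, G, B channels (0 = black, 1 = white)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the luminance of the lighter color."""
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def meets_wcag_aa(fg: tuple[int, int, int], bg: tuple[int, int, int],
                  large_text: bool = False) -> bool:
    """SC 1.4.3: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

With these functions, black on white gives the maximum ratio of 21:1, whereas mid-grey #777777 on white reaches only about 4.48:1 and therefore narrowly fails AA for normal text while still passing for large text.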

XR platforms often require complex technical infrastructure, including high-speed Internet, powerful computing devices, and skilled technical staff. This slows broad adoption and raises scalability concerns. The EU Digital Decade program aims to have all European households covered by a gigabit network by 2030 [37].

The collection of user data, such as interaction logs and personal preferences, raises concerns about data security and compliance with privacy regulations. Even though regulations such as the General Data Protection Regulation (GDPR) and the Artificial Intelligence Act (AI Act) are in place in the EU, many XR solutions and devices are produced outside the EU. Improper handling of sensitive data can lead to breaches or misuse, undermining trust in the technology. In our research, we strictly comply with privacy laws and ethical regulations.
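One common technique for reducing the privacy risk of interaction logs is pseudonymisation, as defined in Article 4(5) of the GDPR. The sketch below is a minimal illustration, assuming a hypothetical allow-list of log fields and a secret key stored separately from the logs: it replaces the user identifier with a keyed HMAC-SHA256 hash and discards every other field not on the list. It does not describe the project’s actual data pipeline, and pseudonymised data is still personal data under the GDPR.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only raw fields kept in analysis logs.
ALLOWED_FIELDS = {"event", "scene", "timestamp", "score"}


def pseudonymize_id(user_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): stable per user; re-linking requires the secret key."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_log_record(raw: dict, secret_key: bytes) -> dict:
    """Drop fields outside the allow-list and replace the user ID with a pseudonym."""
    record = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    record["subject"] = pseudonymize_id(raw["user_id"], secret_key)
    return record
```

Because the same user always maps to the same pseudonym, progress can still be tracked across sessions, while linking a record back to a specific child requires access to the key.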

As the recent widespread adoption of generative AI has shown, there is also a major risk of over-reliance on AI and XR platforms, which could reduce human interaction and displace traditional teaching methods. This may affect students who benefit from more direct, personal engagement with educators. To mitigate this risk, we adopt blended learning, which combines traditional methods with XR technologies. In this way, our approach fills some of the existing gaps and sets a foundation for future advancements in inclusive education.

Despite these challenges and limitations, the potential benefits of XR technologies in promoting inclusive education are substantial. Addressing these barriers requires coordinated efforts from educators, policymakers, technologists, and researchers to ensure that XR can be effectively and equitably integrated into educational environments.

4. Immersive Environment Architecture

Connectivity and accessibility are key features of XR technologies: they determine whether users can access virtual environments regardless of the devices they use or the skills they have. Providing the necessary level of connectivity for XR environments requires a combination of technologies and infrastructure components, such as high-speed Internet connections, low-latency network infrastructure, and robust compute and storage capabilities. Interfaces to virtual environments should include accessibility features, making experiences inclusive and adaptable for users with disabilities.

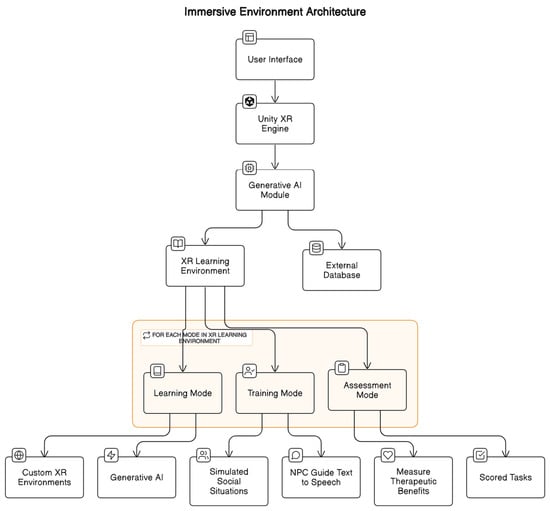

The proposed architecture (Figure 1) incorporates extended reality (XR) technologies and generative artificial intelligence (AI) to establish an adaptive, inclusive educational platform for children with special needs. This system is designed to provide a transformative learning experience by dynamically adjusting to each user’s unique requirements and progress.

Figure 1.

The immersive environment architecture.

The software is still under development; the current version (0.6-alpha) incorporates XR technologies and generative AI to provide adaptive, inclusive learning environments. The key components of the architecture are the User Interface (UI), the Unity XR Engine, the Generative AI Module, the XR Learning Environment, and the External Database.

In addition, the following approaches are proposed for developing the inclusive XR environment:

- Integration of XR technologies and generative AI—utilizing XR to create immersive learning environments tailored to children with special needs, alongside generative AI to personalize the learning experience based on individual progress and preferences.

- Adaptive learning environments to dynamically adjust learning scenarios, ensuring they are aligned with each child’s learning pace.

- Data-driven development and evaluation to generate reports for parents, educators, and healthcare professionals, allowing for real-time monitoring and adjustment of the learning modules based on the child’s development.

The User Interface serves as the gateway through which users access a variety of XR learning experiences. The Unity XR Engine acts as the backbone, creating the custom environments within which the Generative AI Module operates. This module, powered by the OpenAI API (openai-python v1.52.1), dynamically tailors the environments delivered by the XR Learning Environment component.
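To make the role of the Generative AI Module more concrete, the following is a minimal Python sketch of how a learner profile could be turned into a content request, assuming the chat completions interface of openai-python v1.x. The `LearnerProfile` fields, the helper names, the prompt wording, and the default model name are illustrative assumptions rather than the project's implementation; only `OpenAI()` and `client.chat.completions.create` come from the library.

```python
from dataclasses import dataclass


@dataclass
class LearnerProfile:
    """Hypothetical subset of the data the External Database might hold."""
    user_id: str
    age: int
    needs: list[str]          # e.g. ["ASD"] or ["ADHD"]
    preferred_modality: str   # e.g. "visual" or "auditory"
    current_level: int        # assumed scale: 1 (easiest) to 5 (hardest)


def build_content_messages(profile: LearnerProfile, topic: str) -> list[dict]:
    """Build a chat-completions message list asking for one adapted exercise."""
    system = (
        "You generate short, inclusive learning exercises for an XR classroom. "
        "Use simple sentences and one instruction per step."
    )
    user = (
        f"Topic: {topic}. Learner age: {profile.age}. "
        f"Support needs: {', '.join(profile.needs) or 'none reported'}. "
        f"Preferred modality: {profile.preferred_modality}. "
        f"Difficulty level: {profile.current_level} of 5."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def request_content(messages: list[dict], model: str = "gpt-4o-mini") -> str:
    """Send the request; requires the openai package and OPENAI_API_KEY.

    The model name is a placeholder; the study does not state which model is used.
    """
    from openai import OpenAI  # imported lazily so prompt building works offline

    client = OpenAI()
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

Keeping prompt construction separate from the network call lets the prompt logic be tested offline. We assume the Unity client would reach such a function through a backend service rather than storing the API key on the headset; the architecture description does not specify this.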

The virtual environment developed within the project serves three main purposes: learning, training, and assessment. The Learning Mode provides customized experiences addressing specific special needs, while the Training Mode immerses users in simulated social scenarios guided by a dynamically adjusting NPC that uses text-to-speech technology. In the Assessment Mode, therapeutic benefits are quantified through measured improvements in motor skills and coordination, and the results are stored in the External Database for later analysis. Beyond assessment outcomes, this data storage component also holds user progress and preferences, providing a comprehensive foundation for a transformative and inclusive learning experience for children with special needs. These components are highlighted within the orange area of Figure 1.

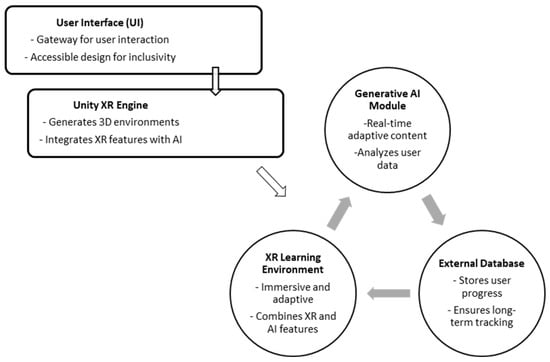

A schematic representation for the integration of the Unity XR Engine with AI modules is presented in Figure 2.

Figure 2.

Integration of Unity XR with AI modules.

The following subsections analyze the components of the inclusive immersive environment architecture in detail, together with the relationships between them and the modes of operation.

4.1. User Interface (UI)

The User Interface (UI) is the gateway through which users interact with the immersive learning environment. Designed with inclusivity and accessibility in mind, the UI plays a crucial role in ensuring that children with special educational needs can engage with XR-based activities seamlessly. This component is crafted to support a wide range of abilities and learning preferences, providing intuitive access to educational content and enhancing the user’s overall experience.

The UI’s features include intuitive navigation, adaptive accessibility tools, and visual cues to enhance user interaction. These characteristics are intended to support engagement for children with varying levels of technical familiarity or with disabilities [34].

4.2. Unity XR Engine

The Unity XR Engine underpins the system, generating 3D environments and integrating virtual and augmented reality features. The engine’s robust graphical capabilities support immersive simulations that adapt dynamically to users’ interactions, enhancing real-time feedback and responsiveness [38]. Unity’s XR framework is a versatile toolset that allows developers to create cross-platform applications for various XR hardware devices, such as VR headsets, AR glasses, and mobile devices.

The Unity XR Engine acts as the core hub, integrating with the Generative AI Module to provide personalized learning experiences. Through AI-powered APIs, Unity can dynamically adjust virtual environments, NPC (non-player character) behaviors, and scenario difficulty in real time, as follows:

- Dynamic content generation: Using natural language processing (NLP) via the OpenAI API (openai-python v1.52.1), the system generates contextual dialogue or instructions based on user input.

- Interactive NPCs: AI-driven NPCs guide users through training and assessment scenarios, simulating social interactions or real-world tasks [16].

- Adaptation to feedback: Data collected during interactions are fed back into the AI module, which modifies subsequent tasks to better align with the user’s developmental needs.
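The feedback path in the last item can be illustrated with a small message schema. The sketch below assumes the XR client reports each completed task to the AI module as a JSON object; the `InteractionEvent` class and its fields are hypothetical and chosen only to mirror the quantities mentioned in this section (mode, success, response time, hints).

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

VALID_MODES = {"learning", "training", "assessment"}


@dataclass
class InteractionEvent:
    """One interaction record sent from the XR client to the AI module (hypothetical schema)."""
    user_id: str
    mode: str               # one of VALID_MODES
    task_id: str
    success: bool
    response_time_s: float
    hints_used: int = 0
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize all fields, including the UTC timestamp, to a JSON string."""
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(raw: str) -> "InteractionEvent":
        """Parse a JSON string and reject unknown modes before building the event."""
        data = json.loads(raw)
        if data["mode"] not in VALID_MODES:
            raise ValueError(f"unknown mode: {data['mode']}")
        return InteractionEvent(**data)
```

Validating the mode on receipt keeps malformed client messages from reaching the adaptation logic.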

4.3. Generative AI Module

Powered by machine learning models, this module personalizes educational content by analyzing user data such as preferences, progress, and challenges. For example, text-to-speech and natural language processing (NLP) algorithms allow for real-time adaptive interactions, empowering users to progress at their own pace.

The generative AI module dynamically creates educational content based on the user’s developmental stage, learning pace, and individual challenges. For instance, it can generate custom visual aids or interactive exercises to address specific cognitive or sensory deficits, such as those encountered by children with autism spectrum disorder (ASD) or attention-deficit/hyperactivity disorder (ADHD). It also integrates text-to-speech (TTS) and speech-to-text (STT) functionalities to ensure accessibility for non-verbal users or those with language processing challenges [20].

Using real-time feedback from user actions, the module adjusts the difficulty, pacing, and structure of tasks. For example, if a user struggles with a specific activity, the module simplifies the task or offers step-by-step guidance, enhancing confidence and engagement. Conversely, for users who excel at tasks, the system introduces more complex scenarios to challenge them and stimulate their cognitive growth.
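A rule-based version of this adjustment can be sketched as follows. It is a simple stand-in, not the module's actual logic: the level range, window size, and the 0.4/0.8 success-rate thresholds are assumptions chosen for illustration, and a generative model would act on the resulting level rather than replace it.

```python
from collections import deque


class DifficultyController:
    """Rule-based difficulty adjustment over a sliding window of task outcomes.

    All numeric defaults are illustrative assumptions, not values from the study.
    """

    def __init__(self, level=3, min_level=1, max_level=5, window=5,
                 lower=0.4, upper=0.8):
        self.level = level
        self.min_level, self.max_level = min_level, max_level
        self.outcomes = deque(maxlen=window)  # most recent successes/failures
        self.lower, self.upper = lower, upper

    def record(self, success: bool) -> int:
        """Store one outcome and return the (possibly updated) difficulty level."""
        self.outcomes.append(success)
        if len(self.outcomes) < self.outcomes.maxlen:
            return self.level  # not enough evidence yet
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate < self.lower and self.level > self.min_level:
            self.level -= 1        # struggling: simplify the task
            self.outcomes.clear()
        elif rate > self.upper and self.level < self.max_level:
            self.level += 1        # excelling: introduce more complexity
            self.outcomes.clear()
        return self.level
```

The thresholds are strict, so a success rate of exactly 0.8 keeps the current level; clearing the window after each change prevents the level from moving again before new evidence accumulates.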

Generative AI enables the creation of diverse, context-rich scenarios for training and skill development. Social interaction modules, for example, simulate conversations with NPCs, allowing users to practice communication skills in a controlled environment. The module ensures that scenarios reflect real-world variability, preparing users for practical applications of learned skills.

The generative AI module is an important element of the immersive environment architecture, allowing artificial intelligence to create inclusive, personalized, and engaging learning experiences. Its ability to dynamically adapt to individual needs and provide real-time feedback emphasizes its potential to revolutionize education for children with special needs.

4.4. External Database

The External Database is the component of the immersive environment architecture that provides robust data management capabilities to store, analyze, and retrieve critical information about user interactions, progress, and preferences. It enables adaptive learning, personalized feedback, and longitudinal tracking of developmental outcomes.

The database is designed to track individualized data securely, ensuring that all information is linked to specific user profiles while maintaining privacy and compliance with ethical standards. The modular design allows customization of data fields based on the specific educational, therapeutic, or behavioral objectives of each user.
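One possible layout for such profile-linked storage is sketched below with Python's built-in `sqlite3` module. The table and column names are hypothetical; storing goals and metrics as JSON text is one way to realize the customizable data fields described above without changing the schema for each user.

```python
import json
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS users (
    user_id     TEXT PRIMARY KEY,   -- pseudonymous identifier, no real names
    birth_year  INTEGER,
    objectives  TEXT NOT NULL       -- JSON: per-user educational or therapeutic goals
);
CREATE TABLE IF NOT EXISTS sessions (
    session_id  INTEGER PRIMARY KEY AUTOINCREMENT,
    user_id     TEXT NOT NULL REFERENCES users(user_id),
    mode        TEXT NOT NULL CHECK (mode IN ('learning', 'training', 'assessment')),
    started_at  TEXT NOT NULL,
    metrics     TEXT NOT NULL       -- JSON: mode-specific measurements
);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and create the tables if they do not exist."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # enforce the users -> sessions link
    conn.executescript(SCHEMA)
    return conn


def add_user(conn, user_id: str, birth_year: int, objectives: dict) -> None:
    conn.execute("INSERT INTO users VALUES (?, ?, ?)",
                 (user_id, birth_year, json.dumps(objectives)))


def add_session(conn, user_id: str, mode: str, started_at: str, metrics: dict) -> None:
    conn.execute(
        "INSERT INTO sessions (user_id, mode, started_at, metrics) VALUES (?, ?, ?, ?)",
        (user_id, mode, started_at, json.dumps(metrics)),
    )


def sessions_for(conn, user_id: str) -> list[tuple]:
    """Return (mode, started_at, metrics) for one user in chronological order."""
    rows = conn.execute(
        "SELECT mode, started_at, metrics FROM sessions "
        "WHERE user_id = ? ORDER BY started_at",
        (user_id,),
    )
    return [(mode, ts, json.loads(m)) for mode, ts, m in rows]
```

Because foreign keys are enforced, a session cannot be stored for a profile that does not exist.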

By storing historical data, the database enables longitudinal study of a user’s progress over time. Patterns in cognitive, emotional, or behavioral development can be identified, providing educators and therapists with actionable insights to refine interventions. Long-term tracking supports evidence-based decision-making for educational and therapeutic program adjustments. The incorporation of predictive analytics could enable early identification of learning challenges or developmental delays. Similarly, AI-powered dashboards could provide stakeholders with tailored recommendations based on historical and real-time data.
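The predictive analytics envisioned here are not specified further. As a deliberately simple placeholder, not a predictive model, the sketch below fits an ordinary least-squares slope to a user's scores across sessions and flags flat or declining trends for human review; the function names and the zero-slope threshold are assumptions.

```python
from statistics import mean


def trend_slope(sessions: list[int], scores: list[float]) -> float:
    """Ordinary least-squares slope of score against session index."""
    if len(sessions) != len(scores) or len(sessions) < 2:
        raise ValueError("need at least two paired observations")
    mx, my = mean(sessions), mean(scores)
    sxx = sum((x - mx) ** 2 for x in sessions)
    if sxx == 0:
        raise ValueError("session indices must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(sessions, scores))
    return sxy / sxx


def flag_for_review(sessions: list[int], scores: list[float],
                    min_slope: float = 0.0) -> bool:
    """Hypothetical early-warning rule: flag when scores are flat or declining."""
    return trend_slope(sessions, scores) <= min_slope
```

A flag is meant to prompt an educator or therapist to look at the data, not to trigger an automatic intervention.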

4.5. The Learning Mode

The Learning Mode is designed to support the acquisition of new knowledge and skills in an engaging, exploratory manner. This mode leverages custom XR environments and generative AI to provide users with highly personalized and adaptive learning experiences. By immersing users in dynamic and interactive virtual spaces, the Learning Mode creates opportunities for deeper understanding, active participation, and meaningful engagement.

Overall, the Learning Mode focuses on helping users build foundational knowledge. The custom XR environments are crafted to support a wide range of cognitive and sensory needs, offering visually rich, interactive simulations that make abstract concepts more accessible. For example, users might explore a 3D model of a solar system, interact with virtual objects to understand physics principles, or navigate through historical reconstructions to enhance their understanding of specific events. The design of these environments ensures inclusivity, supporting different learning styles by integrating visual, auditory, and tactile elements.

The integration of generative AI further enhances the learning experience by adapting content to the user’s progress and comprehension in real time. The AI system continuously monitors user interactions and dynamically generates supplemental explanations, examples, or challenges tailored to individual needs. For example, when a user encounters difficulties with a particular concept, the AI system can adjust by simplifying the task or offering additional contextual support through an interactive guide. On the other hand, when a user demonstrates advanced proficiency, the system adaptively increases task complexity to sustain engagement and further growth.

An essential aspect of the Learning Mode is its emphasis on a growth-oriented mindset. The system adopts a structured approach to concept introduction, gradually increasing complexity to provide users with appropriate support as they build confidence. Early tasks are deliberately simplified, offering clear instructions and immediate feedback to facilitate initial success and foundational learning. As users progress, the system reduces guidance, encouraging independent problem-solving and critical thinking.
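The gradual withdrawal of support described above is often called scaffolding or guidance fading. The sketch below assumes four discrete guidance levels and a rule of fading one level after three consecutive successes and restoring one level after a failure; both the levels and the rule are illustrative, not taken from the system.

```python
from enum import IntEnum


class Guidance(IntEnum):
    """Support levels, from most to least scaffolding (assumed categories)."""
    NONE = 0            # independent problem-solving
    FEEDBACK_ONLY = 1   # only immediate feedback after each attempt
    HINTS = 2           # hints available on request
    STEP_BY_STEP = 3    # explicit instructions for every step


class GuidanceScheduler:
    """Fade support after a streak of successes; restore it after a failure."""

    def __init__(self, fade_after: int = 3):
        self.level = Guidance.STEP_BY_STEP  # early tasks get the most support
        self.fade_after = fade_after
        self.streak = 0

    def update(self, success: bool) -> Guidance:
        if success:
            self.streak += 1
            if self.streak >= self.fade_after and self.level > Guidance.NONE:
                self.level = Guidance(self.level - 1)  # withdraw one layer of support
                self.streak = 0
        else:
            self.streak = 0
            if self.level < Guidance.STEP_BY_STEP:
                self.level = Guidance(self.level + 1)  # restore one layer of support
        return self.level
```

Separating the guidance level from task difficulty lets the system keep a task's content fixed while changing only how much help is offered.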

The Learning Mode also encourages curiosity and exploration by allowing users to interact with the XR environment at their own pace. Unlike traditional educational settings, where rigid structures often constrain learning, this mode gives users the freedom to experiment and discover within a safe virtual space. For example, a user learning about ecosystems might observe how altering one variable, such as the number of predators, impacts the entire system. Such hands-on experiences facilitate deeper comprehension by linking theoretical knowledge with practical applications.

In addition, the Learning Mode incorporates collaborative elements to enhance social learning. Users can engage in group activities or interact with AI-powered virtual peers, simulating real-world collaboration. This not only improves communication and teamwork skills but also creates opportunities for shared problem-solving and peer feedback.

4.6. The Training Mode

The Training Mode is a dynamic component of the immersive environment architecture, emphasizing the practical application and development of skills. This mode builds on the knowledge gained in the Learning Mode, transitioning users from passive understanding to active performance within realistic, scenario-driven virtual settings. By offering a structured and supportive platform for practice, the Training Mode develops confidence and proficiency in specific competencies, making it especially valuable for users needing repeated exposure to real-world challenges in a controlled and adaptable environment.

In essence, the Training Mode is designed to simulate real-life situations where users can apply their knowledge and practice specific skills. These simulations are powered by highly interactive environments that replicate realistic scenarios, such as workplace tasks, social interactions, or therapeutic activities. For example, a user might practice responding to customer inquiries in a virtual retail setting, rehearse effective communication strategies in a simulated team meeting, or engage in role-playing activities designed to build emotional regulation and conflict resolution skills. The emphasis is on creating a safe space where users can experiment, make mistakes, and learn without fear of real-world consequences.

One of the most important features of the Training Mode is its integration of AI-powered non-player characters (NPCs). These virtual agents act as interactive guides, facilitators, or role-playing partners, adapting their behavior based on user performance. Using advanced text-to-speech and natural language processing technologies, NPCs can simulate realistic dialogues, provide instructions, or offer constructive feedback in real time. For instance, in a training scenario focused on social interaction, an NPC might adjust its tone or responses depending on the user’s communication style, allowing the user to practice different approaches and develop their interpersonal skills.
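The dialogue side of such an NPC can be sketched as a conversation buffer that is turned into role/content messages of the kind chat-style language model APIs accept. The `NPCDialogue` class, the persona text, and the style heuristic are hypothetical placeholders; a real system would likely use the language model itself or a dedicated classifier to infer communication style, and would pass the reply to a text-to-speech engine, which is not shown.

```python
from dataclasses import dataclass, field

STYLE_GUIDANCE = {
    "brief": "Reply in one short sentence and ask one simple question.",
    "agitated": "Stay calm, acknowledge the feeling, and slow the pace.",
    "conversational": "Reply naturally in two or three sentences.",
}


@dataclass
class NPCDialogue:
    """Running conversation for one AI-driven NPC (hypothetical design)."""
    persona: str
    history: list[dict] = field(default_factory=list)
    max_turns: int = 10  # keep only recent exchanges to bound prompt size

    def observe_style(self, utterance: str) -> str:
        """Crude placeholder heuristic for the user's communication style."""
        if utterance.isupper() or utterance.count("!") >= 2:
            return "agitated"
        if len(utterance.split()) <= 3:
            return "brief"
        return "conversational"

    def build_messages(self, utterance: str) -> list[dict]:
        """Append the user turn and return system + recent history messages."""
        guidance = STYLE_GUIDANCE[self.observe_style(utterance)]
        self.history.append({"role": "user", "content": utterance})
        self.history = self.history[-2 * self.max_turns:]
        system = {"role": "system", "content": f"{self.persona} {guidance}"}
        return [system, *self.history]

    def record_reply(self, reply: str) -> None:
        """Store the NPC's reply so later turns keep the context."""
        self.history.append({"role": "assistant", "content": reply})
```

Rebuilding the system message on every turn is what allows the NPC's tone to shift as the user's style changes.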

The Training Mode is particularly effective in developing practical competencies through iterative practice. Tasks within this mode are structured with progressive difficulty levels, ensuring that users are gradually challenged as they improve. Initial exercises might involve simple, guided tasks to build familiarity with the environment, while later stages require independent problem-solving and more complex decision-making. This progression ensures sustained engagement while preventing the frustration or boredom that can result from tasks that are either too easy or too difficult.

Real-time feedback is another central element of the Training Mode. As users engage with the environment, the system continuously monitors their actions and provides immediate insights into their performance. This feedback is delivered through various channels, including visual cues, verbal prompts from NPCs, or performance dashboards. For example, a user practicing a vocational task might receive feedback on their speed, accuracy, or efficiency, helping them identify areas for improvement.

In addition to individual practice, the Training Mode incorporates collaborative and cooperative elements. Users can engage in group activities within the virtual environment, working alongside peers or AI-driven agents to complete tasks that require teamwork. These collaborative exercises are designed to enhance communication, negotiation, and coordination skills, preparing users for real-world scenarios that demand group interaction.

4.7. The Assessment Mode

The Assessment Mode is another significant component of the immersive environment architecture, designed to evaluate the user’s progress, competencies, and outcomes in a comprehensive and objective manner. It acts as a reflective phase, where the system measures the effectiveness of learning and training interventions while providing detailed feedback for both users and stakeholders, such as educators or therapists. By combining structured evaluation tasks with advanced analytics, this mode ensures that the immersive environment serves not only as a tool for skill development but also as a reliable mechanism for monitoring growth and identifying areas for improvement.

Evaluation tasks are central to the Assessment Mode, forming the basis of performance measurement. These tasks are carefully designed to test specific knowledge and skills acquired during the Learning and Training Modes. They encompass a variety of formats, ranging from interactive problem-solving activities to scenario-based simulations. For instance, a user might be tasked with resolving a conflict between virtual characters in a social–emotional training program or completing a procedural task in a simulated workplace. Each task is scored against predefined criteria such as accuracy, response time, and efficiency, ensuring an objective measurement of user performance. The scoring system is transparent, providing users with clear benchmarks to understand how their actions align with expected outcomes.
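As an illustration of scoring against predefined criteria, the sketch below combines accuracy, response time, and efficiency into a weighted score between 0 and 1. The normalization (capping time and efficiency at 1 so that beating the target earns no bonus) and the default weights are assumptions, not the criteria used in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criteria:
    """Predefined scoring criteria for one task (illustrative values)."""
    target_time_s: float      # response time regarded as fully on target
    optimal_steps: int        # fewest actions that complete the task
    w_accuracy: float = 0.5
    w_time: float = 0.25
    w_efficiency: float = 0.25


def score_task(c: Criteria, correct: int, attempted: int,
               time_s: float, steps: int) -> dict:
    """Return each component in [0, 1] and their weighted total."""
    if attempted <= 0 or time_s <= 0 or steps <= 0:
        raise ValueError("attempted, time_s and steps must be positive")
    accuracy = correct / attempted
    time_score = min(1.0, c.target_time_s / time_s)   # no bonus for being faster
    efficiency = min(1.0, c.optimal_steps / steps)     # fewer wasted actions score higher
    total = (c.w_accuracy * accuracy + c.w_time * time_score
             + c.w_efficiency * efficiency)
    total /= c.w_accuracy + c.w_time + c.w_efficiency  # normalise the weights
    return {"accuracy": accuracy, "time": time_score,
            "efficiency": efficiency, "total": round(total, 3)}
```

For example, with a 30 s target and 4 optimal steps, a user answering 8 of 10 items in 60 s using 5 steps scores 0.8 for accuracy, 0.5 for time, and 0.8 for efficiency, giving a weighted total of 0.725. Returning the components alongside the total supports the transparent breakdown described above.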

Another key aspect of the Assessment Mode is its ability to measure therapeutic or developmental benefits. Beyond evaluating task-specific skills, the Assessment Mode tracks broader cognitive, emotional, and behavioral improvements resulting from interactions within the immersive environment. For example, a user participating in a rehabilitation program might have their progress monitored through metrics such as reaction times, emotional stability during stress-inducing scenarios, or changes in engagement levels over time.

Feedback in the Assessment Mode is both immediate and detailed, providing users with actionable insights into their performance. The system delivers feedback through multiple channels, such as visual summaries, verbal reports, or interactive dashboards. For example, a user might receive a detailed breakdown of their strengths and weaknesses after completing a simulation, with recommendations for areas requiring further practice. This feedback loop not only helps users track their progress but also motivates them to continue improving by highlighting their achievements.

Additionally, the Assessment Mode incorporates qualitative and quantitative metrics to provide a holistic view of user performance. While scored tasks and system-generated data offer objective measures, qualitative insights—such as user reflections, behavioral observations, or recorded interactions—add depth to the evaluation. This combination ensures that the assessment captures not only measurable outcomes but also the nuanced aspects of user engagement and behavior.

5. Methodology and Metrics

To evaluate the effectiveness of our framework and its impact on executive functioning in students with special educational needs, we will employ the Behavior Rating Inventory of Executive Function, Second Edition (BRIEF-2) [39] as the primary benchmark. BRIEF-2 is a validated tool designed to assess executive functioning behaviors across multiple domains, including working memory, task completion, planning, and emotional regulation. This instrument is particularly suitable for capturing the nuanced executive function challenges faced by children and adolescents in educational settings. The evaluation is supported by a formal agreement with a specialized school for children with special needs. The instrument’s multidimensional approach will allow us to build profiles of students’ self-regulatory strengths and weaknesses, yielding insights closely aligned with the goals of our framework. Its indexes for behavior regulation, emotion regulation, and cognitive regulation will allow us to quantitatively measure improvements in the areas targeted by the XR platform.
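BRIEF-2 results are reported as T-scores (mean 50, standard deviation 10) in which higher values indicate more reported executive function difficulty, so improvement appears as a decrease. The sketch below assumes raw scores have already been converted to T-scores with the publisher's normative tables and only computes pre/post changes for the Behavior Regulation (BRI), Emotion Regulation (ERI), and Cognitive Regulation (CRI) indexes and the Global Executive Composite (GEC). The 5-point cut-off (half a standard deviation) is an illustrative assumption, not a clinical criterion.

```python
# Behavior, emotion, and cognitive regulation indexes plus the global composite.
BRIEF2_INDEXES = ("BRI", "ERI", "CRI", "GEC")


def index_changes(pre: dict[str, float], post: dict[str, float]) -> dict[str, float]:
    """Post minus pre T-score for each BRIEF-2 index.

    Higher BRIEF-2 T-scores mean more reported difficulty, so a negative
    change is the direction of improvement.
    """
    missing = [k for k in BRIEF2_INDEXES if k not in pre or k not in post]
    if missing:
        raise KeyError(f"missing indexes: {missing}")
    return {k: post[k] - pre[k] for k in BRIEF2_INDEXES}


def improved(changes: dict[str, float], min_drop: float = 5.0) -> list[str]:
    """Indexes whose T-score fell by at least `min_drop` points (assumed cut-off)."""
    return [k for k, d in changes.items() if d <= -min_drop]
```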

For the evaluation, we will use structured experiments, incorporating control groups and longitudinal studies, to validate the framework’s effectiveness.

- Control groups: Participants will be divided into two groups, those using the XR-enhanced learning platform and those engaging with traditional learning methods. By comparing outcomes such as task completion rates, learning success, and executive function improvements between these groups, we aim to isolate and quantify the contribution of the XR framework.

- Longitudinal studies: We will measure changes over time in key metrics, such as working memory performance and task completion consistency, to assess the framework’s sustainability. Data collection will occur at predefined intervals, allowing us to analyze trends in learning retention and skill development over extended periods.
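The between-group comparison can be summarized with a standardized effect size. The sketch below computes each participant's gain (post minus pre) and Cohen's d with a pooled standard deviation, d = (mean_A − mean_B) / s_p, where s_p² = ((n_A − 1)s_A² + (n_B − 1)s_B²) / (n_A + n_B − 2). It is only a sketch, since the planned statistical analysis is not specified: a full evaluation would add significance testing and, for the longitudinal data, repeated-measures models. For measures where lower is better, such as BRIEF-2 T-scores, the sign of d should be read in reverse.

```python
from math import sqrt
from statistics import mean, stdev


def gains(pre: list[float], post: list[float]) -> list[float]:
    """Per-participant change (post minus pre); inputs must be paired."""
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired per participant")
    return [b - a for a, b in zip(pre, post)]


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Standardized mean difference (a - b) using the pooled standard deviation."""
    na, nb = len(group_a), len(group_b)
    if na < 2 or nb < 2:
        raise ValueError("each group needs at least two observations")
    pooled_var = (((na - 1) * stdev(group_a) ** 2 + (nb - 1) * stdev(group_b) ** 2)
                  / (na + nb - 2))
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)
```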

Table 1 lists the specific metrics, grouped by functionality, that will be used in the future implementation and testing of our system.

Table 1.

Metrics for evaluating the implementation and functionality of the proposed system.

Future iterations of this research will involve the collection of interaction and performance data from students with special educational needs. These data will be gathered in collaboration with schools and therapy centers, ensuring diversity in user demographics and learning profiles.

To promote transparency and reproducibility, the datasets that we will collect during future evaluations will be anonymized and made available through open data platforms, abiding by ethical guidelines and privacy regulations.
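Before publication, records could be pseudonymized and stripped of direct identifiers. The sketch below uses HMAC-SHA256 from Python's standard library so that the same child maps to the same token across sessions while re-identification requires the secret key. The field names are hypothetical. Under the GDPR, pseudonymized data generally remains personal data; genuine anonymization would additionally require discarding the key and reviewing indirect identifiers such as age or school.

```python
import hashlib
import hmac
import secrets

# Assumed names of fields that directly identify a child.
DIRECT_IDENTIFIERS = {"name", "email", "school", "birth_date"}


def new_key() -> bytes:
    """Generate a random 256-bit secret key, to be stored separately from the data."""
    return secrets.token_bytes(32)


def pseudonymize(user_id: str, key: bytes) -> str:
    """Keyed hash of the user ID, truncated to 16 hex characters."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def prepare_record(record: dict, key: bytes) -> dict:
    """Drop direct identifiers and replace the user ID before export."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    out["user_id"] = pseudonymize(record["user_id"], key)
    return out
```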

6. Discussion

Our findings highlight the transformative potential of extended reality (XR) technologies in enhancing educational inclusivity. XR tools such as VR, AR, and MR have proven effective in creating engaging and accessible environments that cater to a wide array of learning needs, supporting the integration of digital and physical realms in education.

The proposed system introduces several innovative features that set it apart from other XR-based educational systems. From a technological framework perspective, the proposed system combines the Unity XR Engine with generative AI, creating dynamic, immersive environments that evolve based on user interactions [16]. Unlike other XR platforms, such as those applied in physics education that rely on static setups, our system offers real-time, AI-driven adjustments, ensuring adaptive and personalized learning experiences.

One of the key strengths of the proposed system is its adaptation and personalization capabilities. The generative AI module dynamically adjusts task complexity based on the user’s proficiency level, making it highly targeted and effective for learners with special needs. This feature is comparable to the XR-Ed framework, which emphasizes instructional design and user-centered experiences. However, unlike XR-Ed, our system directly integrates these adjustments into specific XR tools, tailoring them to meet the unique needs of learners with sensory and cognitive challenges.

The system also excels in delivering real-time feedback through interactive non-player characters (NPCs) and data-driven insights. This distinguishes it from initiatives such as the modular XR learning packages developed at the University of South-Eastern Norway, which emphasize collaboration between educators and industry professionals but do not prioritize interactive feedback as a core feature [31]. Additionally, the modular XR packages focus on predefined learning modules, whereas our framework offers distinct learning, training, and assessment modes. This multi-mode structure provides a holistic approach to education, enabling users to transition seamlessly from skill acquisition to practical application and evaluation [32].

The proposed system also addresses critical limitations observed in existing frameworks. Both XR-Ed and the modular XR packages encounter challenges in scalability and adaptability. Our architecture overcomes these issues by utilizing cost-effective, widely available devices (e.g., Meta Quest and tablets) and employing cloud-based solutions for computationally intensive tasks. This design ensures broader accessibility while maintaining high performance. Furthermore, unlike these systems, our framework adheres to WCAG 2 and the W3C XR Accessibility User Requirements, ensuring inclusivity for diverse user groups, including those with sensory and cognitive disabilities.

Despite its strengths, the proposed system could incorporate more collaborative elements, as seen in the modular XR learning packages from the University of South-Eastern Norway, which emphasize cooperation between learners and professionals. Additionally, while it excels in addressing specific challenges in special-needs education, the system’s applicability could be broadened to encompass general educational contexts, as seen with the XR-Ed framework and ISHS initiatives.

By focusing on addressing these limitations, the proposed system could achieve greater versatility and impact while maintaining its commitment to inclusivity and adaptive learning. This positions it as a potential benchmark for the future of XR-based education.

Broader Implications and Future Directions

The implications of XR technologies extend beyond the educational sector into areas like healthcare, where they can similarly enhance patient education and rehabilitation. Looking ahead, future research should focus on the long-term impacts of XR across different age groups and cultural contexts to better understand the scalability and effectiveness of these technologies. Additionally, exploring advanced features like AI-driven personalization and the integration of biometric data within XR platforms could further tailor educational experiences to individual learner needs. A cloud-based or HPC-based architecture would enable our system to perform computationally intensive tasks off-site, improving front-end performance on less powerful devices.

In summary, XR technologies hold significant promise for inclusive education, but their broader adoption and impact require continued exploration and thoughtful implementation.

7. Conclusions

The integration of XR technologies in inclusive education necessitates several key strategies to maximize their benefits. Significant investment in infrastructure and resources is essential to address the cost barriers associated with XR technologies. Comprehensive teacher training and professional development programs are required to equip educators with the necessary skills and knowledge to effectively integrate XR into their teaching practices. Additionally, thoughtful curriculum design and content development are crucial to ensure that XR applications align with curriculum standards and learning objectives. Ongoing research and evaluation are necessary to assess the effectiveness of XR in various educational contexts and for different student populations.

Looking ahead, advancements in XR technology are expected to enhance usability, reduce costs, and address technical limitations. These technological innovations will enable personalized learning experiences tailored to individual student needs and preferences. Global collaboration and accessibility efforts are essential to ensure equitable access to XR technologies, particularly in underserved communities and developing countries. Addressing ethical considerations and privacy concerns is critical to protect student welfare and rights as XR becomes more integrated into educational settings. Interdisciplinary research, involving collaboration between educators, technologists, psychologists, and policy experts, will enable innovative approaches in using XR for inclusive education.

In conclusion, while challenges exist, XR technologies offer immense potential to transform inclusive education by making learning more engaging, accessible, and effective for all students. Through collaboration, investment in research and development, and a focus on equity and ethical considerations, stakeholders can harness the full potential of XR to create inclusive educational environments that prepare students for the challenges of the future.

Author Contributions

Conceptualization, M.B., D.-D.I., I.P. and D.-C.B.; methodology, M.B., D.-D.I. and I.P.; software, D.-D.I. and I.P.; validation, M.B., D.-D.I., I.P., D.-C.B. and L.B.; formal analysis, D.-D.I. and I.P.; investigation, M.B., D.-D.I., I.P., D.-C.B. and L.B.; writing—original draft preparation, M.B., D.-D.I., I.P., D.-C.B. and L.B.; writing—review and editing, M.B., D.-D.I., I.P., D.-C.B. and L.B.; visualization, M.B., D.-D.I., I.P., D.-C.B. and L.B.; supervision, D.-D.I. and I.P. All authors have read and agreed to the published version of the manuscript.

Funding

This study is supported by the project “Advanced research based on emerging and disruptive technologies—support for the society of the future—FUTURE TECH” (funded by the Romanian Core Program within the National Research Development and Innovation Plan 2022–2027 of the Ministry of Research and Innovation), project no. 23380601.

Data Availability Statement

The data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Petcu, I.; Radu, A.F.; Barbu, D.C.; Golea, D.G.; Anghel, M. Shaping the future: Between opportunities and challenges of the ongoing 4th and the forthcoming 5th industrial revolution. In Proceedings of the 16th International Scientific Conference eLearning and Software for Education, Bucharest, Romania, 30 April–1 May 2020; pp. 91–97. [Google Scholar]

- Anghel, M.; Pereteanu, G.C.; Cîrnu, C.E. Emerging trends in elearning and mlearning from a BYOD perspective and cyber security policies. In Proceedings of the 16th International Scientific Conference eLearning and Software for Education, Bucharest, Romania, 23–24 April 2020; Volume 1. [Google Scholar] [CrossRef]

- Ainscow, M.; Booth, T.; Dyson, A. Improving Schools, Developing Inclusion; Routledge: Abingdon, UK, 2006; ISBN 9781134193455. [Google Scholar]

- Ibrahim, U. Integration of Emerging Technologies in Teacher Education for Global Competitiveness. Int. J. Educ. Life Sci. 2024, 2, 127–138. [Google Scholar] [CrossRef]

- Alnagrat, A.; Che Ismail, R.; Syed Idrus, S.Z.; Abdulhafith Alfaqi, R.M. A Review of Extended Reality (XR) Technologies in the Future of Human Education: Current Trend and Future Opportunity. J. Hum. Centered Technol. 2022, 1, 81–96. [Google Scholar] [CrossRef]

- Liarokapis, F.; Milata, V.; Ponton, J.L.; Pelechano, N.; Zacharatos, H. XR4ED: An Extended Reality Platform for Education. IEEE Comput. Graph. Appl. 2024, 44, 79–88. [Google Scholar] [CrossRef] [PubMed]

- Meccawy, M. Creating an Immersive XR Learning Experience: A Roadmap for Educators. Electronics 2022, 11, 3547. [Google Scholar] [CrossRef]

- Aresh, B.; Vichare, P.; Dahal, K.; Leslie, T.; Gilardi, M.; Lovska, A.; Vatulia, G. Integration of Extended Reality (XR) in Non-Native Undergraduate Programmes. In Proceedings of the 2023 15th International Conference on Software, Knowledge, Information Management and Applications (SKIMA), Kuala Lumpur, Malaysia, 8–10 December 2023; pp. 285–292. [Google Scholar]

- Aguayo, C.; Eames, C. Using Mixed Reality (XR) Immersive Learning to Enhance Environmental Education. J. Environ. Educ. 2023, 54, 58–71. [Google Scholar] [CrossRef]

- Hawkinson, E.; Alizadeh, M.; Anesa, P.; Figueroa, R., Jr.; Hall, W.; Barr, M.; Ryszawy, J.; Esguerra, J.; Klaphake, J. Narrative Inquiry into the Challenges of Implementing XR Technologies in Education: Voices from Future Hub 2023. Together Res. 2024, 2024, 1–48. [Google Scholar] [CrossRef]

- Romero-Ayuso, D.; Toledano-González, A.; Rodríguez-Martínez, M.d.C.; Arroyo-Castillo, P.; Triviño-Juárez, J.M.; González, P.; Ariza-Vega, P.; Del Pino González, A.; Segura-Fragoso, A. Effectiveness of Virtual Reality-Based Interventions for Children and Adolescents with ADHD: A Systematic Review and Meta-Analysis. Children 2021, 8, 70. [Google Scholar] [CrossRef]

- Corrigan, N.; Păsărelu, C.-R.; Voinescu, A. Immersive Virtual Reality for Improving Cognitive Deficits in Children with ADHD: A Systematic Review and Meta-Analysis. Virtual Real. 2023, 27, 3545–3564. [Google Scholar] [CrossRef]

- Hutson, J. Social Virtual Reality: Neurodivergence and Inclusivity in the Metaverse. Societies 2022, 12, 102.

- Chen, Y.; Zhou, Z.; Cao, M.; Liu, M.; Lin, Z.; Yang, W.; Yang, X.; Dhaidhai, D.; Xiong, P. Extended Reality (XR) and Telehealth Interventions for Children or Adolescents with Autism Spectrum Disorder: Systematic Review of Qualitative and Quantitative Studies. Neurosci. Biobehav. Rev. 2022, 138, 104683.

- Hutson, J.; McGinley, C. Neuroaffirmative Approaches to Extended Reality: Empowering Individuals with Autism Spectrum Condition through Immersive Learning Environments. Int. J. Technol. Educ. Sci. 2023, 7, 400–414.

- Yang, B.; Kristensson, P.O. Designing, Developing, and Evaluating AI-Driven Text Entry Systems for Augmentative and Alternative Communication Users and Researchers. In Proceedings of the 25th International Conference on Mobile Human-Computer Interaction, Athens, Greece, 26–29 September 2023; ACM: New York, NY, USA, 2023; pp. 1–4.

- Gligorea, I.; Cioca, M.; Oancea, R.; Gorski, A.-T.; Gorski, H.; Tudorache, P. Adaptive Learning Using Artificial Intelligence in E-Learning: A Literature Review. Educ. Sci. 2023, 13, 1216.

- Cao, L.; Dede, C. Navigating a World of Generative AI: Suggestions for Educators; The Next Level Lab, Harvard Graduate School of Education: Cambridge, MA, USA, 2023.

- Nadeem, M. Generative Artificial Intelligence [GAI]: Enhancing Future Marketing Strategies with Emotional Intelligence [EI], and Social Skills? Br. J. Mark. Stud. 2024, 12, 1–15.

- Deka, C.; Shrivastava, A.; Abraham, A.K.; Nautiyal, S.; Chauhan, P. AI-Based Automated Speech Therapy Tools for Persons with Speech Sound Disorder: A Systematic Literature Review. Speech Lang. Hear. 2024, 1–22.

- Doshi, A.R.; Hauser, O.P. Generative AI Enhances Individual Creativity but Reduces the Collective Diversity of Novel Content. Sci. Adv. 2024, 10, eadn5290.

- Jeun, Y.J.; Nam, Y.; Lee, S.A.; Park, J.-H. Effects of Personalized Cognitive Training with the Machine Learning Algorithm on Neural Efficiency in Healthy Younger Adults. Int. J. Environ. Res. Public Health 2022, 19, 13044.

- Bodison, S.C.; Parham, L.D. Specific Sensory Techniques and Sensory Environmental Modifications for Children and Youth With Sensory Integration Difficulties: A Systematic Review. Am. J. Occup. Ther. 2018, 72, 7201190040p1–7201190040p11.

- Serpa-Andrade, L.; Vélez, R.G.; Serpa-Andrade, G. Iteration of Children with Attention Deficit Disorder, Impulsivity and Hyperactivity, Cognitive Behavioral Therapy, and Artificial Intelligence. In Human Interaction, Emerging Technologies and Future Systems V. IHIET 2021. Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2022; pp. 91–99.

- Bandi, A.; Adapa, P.V.S.R.; Kuchi, Y.E.V.P.K. The Power of Generative AI: A Review of Requirements, Models, Input–Output Formats, Evaluation Metrics, and Challenges. Future Internet 2023, 15, 260.

- Băjenaru, L.; Smeureanu, I.; Balog, A. An Ontology-Based E-Learning Framework for Healthcare Human Resource Management. Stud. Inform. Control. 2016, 25, 100.

- Bellani, M.; Fornasari, L.; Chittaro, L.; Brambilla, P. Virtual Reality in Autism: State of the Art. Epidemiol. Psychiatr. Sci. 2011, 20, 235–238.

- Smithsonian AR Experiences. Available online: https://www.si.edu/spotlight/ar-experiences (accessed on 23 December 2024).

- Herrera, F.; Bailenson, J.; Weisz, E.; Ogle, E.; Zaki, J. Building Long-Term Empathy: A Large-Scale Comparison of Traditional and Virtual Reality Perspective-Taking. PLoS ONE 2018, 13, e0204494.

- Yang, K.; Zhou, X.; Radu, I. XR-Ed Framework: Designing Instruction-Driven and Learner-Centered Extended Reality Systems for Education. arXiv 2020, arXiv:2010.13779.

- Ferreira, J.M.M.; Qureshi, Z.I. Use of XR Technologies to Bridge the Gap between Higher Education and Continuing Education. In Proceedings of the 2020 IEEE Global Engineering Education Conference (EDUCON), Porto, Portugal, 27–30 April 2020; pp. 913–918.

- Yavoruk, O. The Study of Observation in Physics Classes through XR Technologies. In Proceedings of the 2020 the 4th International Conference on Digital Technology in Education, Busan, Republic of Korea, 15–17 September 2020; ACM: New York, NY, USA, 2020; pp. 58–62.

- Erica, S.; Buchanan, R.; Cividino, C.; Shane, S.; Graham, E.; Shamus, P.S.; Bergin, C.; Kilham, J.; Summerville, D.; Scevak, J. Research—Highly Immersive Virtual Reality. Scan J. Educ. 2018, 37, 130–143.

- Rebenitsch, L.; Owen, C. Review on Cybersickness in Applications and Visual Displays. Virtual Real. 2016, 20, 101–125.

- W3C Web Content Accessibility Guidelines (WCAG) International Standard. Available online: https://www.w3.org/WAI/standards-guidelines/wcag/ (accessed on 23 December 2024).

- W3C XR Accessibility User Requirements. Available online: https://www.w3.org/TR/xaur/#immersive-semantics-and-customization (accessed on 23 December 2024).

- European Parliament. Decision (EU) 2022/2481 of the European Parliament and of the Council of 14 December 2022 Establishing the Digital Decade Policy Programme 2030. Available online: https://eur-lex.europa.eu/eli/dec/2022/2481/oj/eng (accessed on 23 December 2024).

- Aggarwal, S.; Chugh, N. Signal Processing Techniques for Motor Imagery Brain Computer Interface: A Review. Array 2019, 1–2, 100003.

- Gioia, G.A.; Isquith, P.K.; Guy, S.C.; Kenworthy, L. Behavior Rating Inventory of Executive Function® Second Edition. APA PsycTESTS 2015.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).