3.1. Main Concepts

In the fields of computer science, artificial intelligence, and mathematical optimization, heuristics are indispensable tools for expediting problem-solving processes. They are particularly valuable when traditional methods are computationally prohibitive or when seeking exact solutions is infeasible due to the problem’s inherent complexity. It is important to note, however, that heuristics do not guarantee the discovery of the optimal solution, categorizing them as approximate algorithms. These algorithms are adept at rapidly and efficiently generating solutions that closely approximate the optimal one. In some instances, they may even achieve the exact optimal solution, but they remain classified as heuristics until their output is formally proven to be optimal [

18].

Expanding on this concept, metaheuristics provide flexible and adaptive frameworks for designing heuristics, enabling them to address a wide variety of combinatorial and global optimization problems [

19]. Metaheuristics generalize the heuristic approach, introducing higher-level strategies to explore the solution space more effectively and avoid pitfalls such as premature convergence to suboptimal solutions.

An optimization problem can be formally described as follows:

where

represents the ambient solution space encompassing all potential solutions,

denotes the feasible subset of where solutions satisfy predefined constraints,

x is an individual feasible solution within , and

f is the real-valued objective function to be minimized.

In the context of MSSC (

1), the feasible solution space simplifies to

, reflecting the continuous multidimensional nature of the clustering problem.

Variable Neighborhood Search (VNS), introduced by Mladenovic in 1997 [

4], is a modern metaheuristic framework that provides a flexible and robust approach to solving combinatorial and continuous nonlinear global optimization problems. The strength of VNS lies in its systematic exploitation of neighborhood changes, enabling both descent to local minima and escape from the valleys containing them [

4,

19,

20]. This method strategically explores distant neighborhoods of the current solution and moves to a new solution only when such movement yields an improvement in the objective function.

VNS serves as a powerful tool for constructing innovative global search heuristics by integrating existing local search methods. Its effectiveness is grounded in the following foundational principles:

- Fact 1:

A solution that is a local optimum under one neighborhood structure may not remain optimal when assessed using a different neighborhood.

- Fact 2:

A globally optimal solution is also a local optimum for all possible neighborhood structures.

- Fact 3:

For many optimization problems, local optima across one or multiple neighborhoods tend to be located in close proximity to one another.

Fact 1 establishes the basis for using diverse and complex moves to uncover local optima across multiple neighborhood structures. Fact 2 suggests that incorporating a larger variety of neighborhoods into the search process increases the likelihood of discovering a global optimum, particularly when the current local optima are suboptimal. Together, these insights propose an intriguing strategy: a solution that is a local optimum across several neighborhood structures has a higher probability of being globally optimal compared to one that is optimal within a single neighborhood [

21].

Fact 3, which arises primarily from empirical observations, underscores that local optima often provide valuable insights into the characteristics of the global optimum. In essence, local optima in optimization problems tend to share structural similarities. This phenomenon highlights the utility of thoroughly exploring the neighborhoods of a given local optimum. For instance, if we aggregate all local solutions

of the MSSC problem as defined in Equation (

1) into a single set, it is likely that a significant subset of these locally optimal centroids will be clustered close to each other, while a few may deviate. This pattern suggests that the global optimum shares overlaps in certain variables (specific coordinates of the vector representations) with those found in local optima.

However, identifying these overlapping variables in advance is inherently difficult. Consequently, a methodical exploration of neighborhoods surrounding the current local optimum remains prudent, continuing until a superior solution emerges. This systematic neighborhood search not only enhances the likelihood of escaping suboptimal solutions but also leverages the structural tendencies of the problem to converge more efficiently toward the global optimum.

Variable Neighborhood Search (VNS) operates through two fundamental phases: the improvement phase, which refines the current solution by descending to the nearest local optimum, and the shaking (or perturbation) phase, which seeks to escape local minima traps. These phases are alternated with a neighborhood change step, and the process repeats until predefined stopping criteria are satisfied. The VNS framework revolves around three primary, iterative steps:

a shaking procedure, which introduces a controlled perturbation to the current solution to explore new areas of the solution space;

an improvement procedure, which applies local search techniques to refine the perturbed solution, potentially reaching a local optimum;

a neighborhood change step, which alters the neighborhood structure, allowing exploration of different regions of the solution space.

To better understand the VNS framework, let us define some essential concepts:

The incumbent solution is the current best-known solution, denoted as x, which minimizes the objective function value among all solutions examined thus far.

For a given solution

x, its neighborhood comprises the set of solutions that can be directly obtained from

x by applying a specific local change. Neighborhoods are typically defined using a metric (or quasi-metric) function, denoted as

. For a non-negative distance threshold

, the neighborhood of

x is formally defined as

Examples of neighborhoods:

In continuous optimization problems over , a neighborhood like might represent an Euclidean ball of radius 1 centered at x, while could include solutions obtained by modifying exactly three coordinates of x.

In the Traveling Salesman Problem (TSP), a common neighborhood involves all tours generated by reversing a subsequence of the current tour, an operation known as the “2-opt” move.

Neighborhoods play a critical role in both the shaking and improvement phases, enabling VNS to effectively explore the solution space and navigate complex optimization landscapes. By iteratively combining these steps, VNS achieves a balance between diversification (escaping local optima) and intensification (refining toward better solutions), making it a powerful tool for solving global optimization problems.

A neighborhood structure, denoted as

, is defined as an ordered collection of operators:

where each operator

maps a solution

to a predefined set of neighboring solutions

within the feasible solution space

. Here,

represents the power set of

, and

indexes the individual neighborhoods. This hierarchical arrangement of neighborhoods is a cornerstone of the VNS framework. When local search within one neighborhood fails to uncover an improved solution, the algorithm systematically transitions to the next neighborhood in the sequence, thereby expanding the search scope and increasing the likelihood of finding superior solutions. By dynamically adjusting the neighborhood structures during the search process, VNS can effectively navigate complex solution landscapes and escape local optima. Throughout this discussion, the terms “neighborhood structure” and “neighborhood” are used interchangeably to refer to both the collection of operators

and the set of neighborhoods

associated with a given solution

x.

Each neighborhood structure employs a unique method to define the relationship between a solution and its neighbors. For example, in the context of the Traveling Salesman Problem (TSP), a neighborhood structure can be constructed using “k-opt” moves. In this case, k edges are removed from the current tour, and the resulting segments are reconnected in a new configuration to generate alternative solutions.

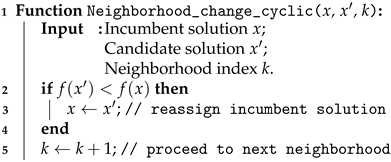

To distinguish between the neighborhood structures used in different phases of the VNS algorithm, we introduce two separate notations: is reserved for the neighborhood structures utilized during the shaking phase, which introduces perturbations to the incumbent solution, while N is used for the neighborhoods explored during the improvement phase, where local search is conducted to refine the solution. This distinction ensures clarity and emphasizes the complementary roles of shaking and local search in the VNS process.

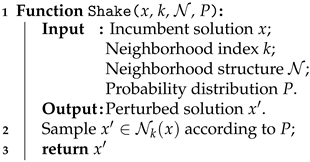

3.2. The Shaking Procedure

The shaking procedure is a critical step in the VNS framework, designed to help the algorithm escape local optima traps by introducing controlled perturbations to the current solution.

The simplest form of a shaking procedure involves randomly selecting a solution from the neighborhood , where k, the shaking power, is a predetermined index that defines the scope of the neighborhood. The shaking power k determines the degree of perturbation applied to the current solution during the shaking step. It controls how far the algorithm explores the search space by systematically increasing the “intensity” or “distance” of the perturbation.

While effective in many cases, a purely random jump within the k-th neighborhood can occasionally result in excessively aggressive perturbations, particularly for problems where the objective function is highly sensitive to changes in the solution. To address this, alternative approaches such as intensified shaking may be employed, where the perturbation considers the sensitivity of the objective function to small variations in the decision variables. Such methods allow for more focused and deliberate perturbations.

However, for the purposes of this work, we adopt a straightforward random-selection shaking approach, which is both effective and computationally efficient. In this method, the next solution is chosen at random from the

k-th neighborhood

based on a predefined probability distribution. This ensures sufficient exploration of the solution space while maintaining simplicity. The pseudocode for this procedure is presented in Algorithm 1.

| Algorithm 1: Shaking Procedure |

![Applsci 15 01032 i001]() |