1. Introduction

Over the past few decades, human activity recognition (HAR) based on wearable sensors has garnered significant attention due to its wide applications in health monitoring, sports training, and medical rehabilitation. In the field of health monitoring, HAR technology enables continuous tracking of daily activities for the elderly and patients, providing early warnings for abnormal behaviors and ensuring their health and safety [1,2,3]. In sports training, HAR can deliver precise, quantitative feedback by analyzing athletes’ movement patterns, thereby enhancing training efficiency and preventing sports injuries [4,5,6]. Furthermore, in medical rehabilitation, HAR is widely applied in the control of prosthetics and assistive devices for patients with motor disabilities by detecting muscle activity and hand movements [7,8,9].

Early wearable sensor-based HAR methods primarily relied on manually extracted time-domain features (such as mean, variance, and standard deviation) and frequency-domain features (such as centroid frequency and average frequency), followed by traditional machine learning algorithms (such as Support Vector Machines, Decision Trees, and K-Nearest Neighbors) for classification [10,11]. However, these methods exhibit limited performance when dealing with complex sensor data. On the one hand, manually extracted features fail to fully capture the rich information in the raw signals, which constrains recognition accuracy. On the other hand, feature engineering depends heavily on expert knowledge, making it time-consuming, labor-intensive, and difficult to adapt to diverse application scenarios and complex sensor data [12,13,14].

With the rapid development of deep learning, significant breakthroughs have been made in fields such as image recognition [15] and natural language processing [16], and these techniques are now widely applied in HAR [17]. Deep learning models, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), can automatically learn high-level, abstract features from raw sensor data through their multi-layered structures. This greatly reduces the reliance on manual feature engineering and significantly improves the accuracy of activity recognition.

Although deep learning-based HAR models have achieved remarkable progress, traditional architectures still encounter several technical challenges when dealing with long-term time-series sensor data. Models such as CNNs and RNNs have difficulty capturing long-range temporal dependencies inherent in long-term sequences. In addition, as the network depth increases, the extracted features tend to include a large amount of redundant information, which degrades the overall recognition efficiency.

To address the above challenges, this paper proposes an attention-based CNN–Bidirectional Gated Recurrent Unit (BiGRU)–Transformer hybrid deep learning model, aiming to more effectively extract key features from long-term time-series sensor data and significantly improve the accuracy and generalization of human activity recognition. The main contributions of this work can be summarized as follows:

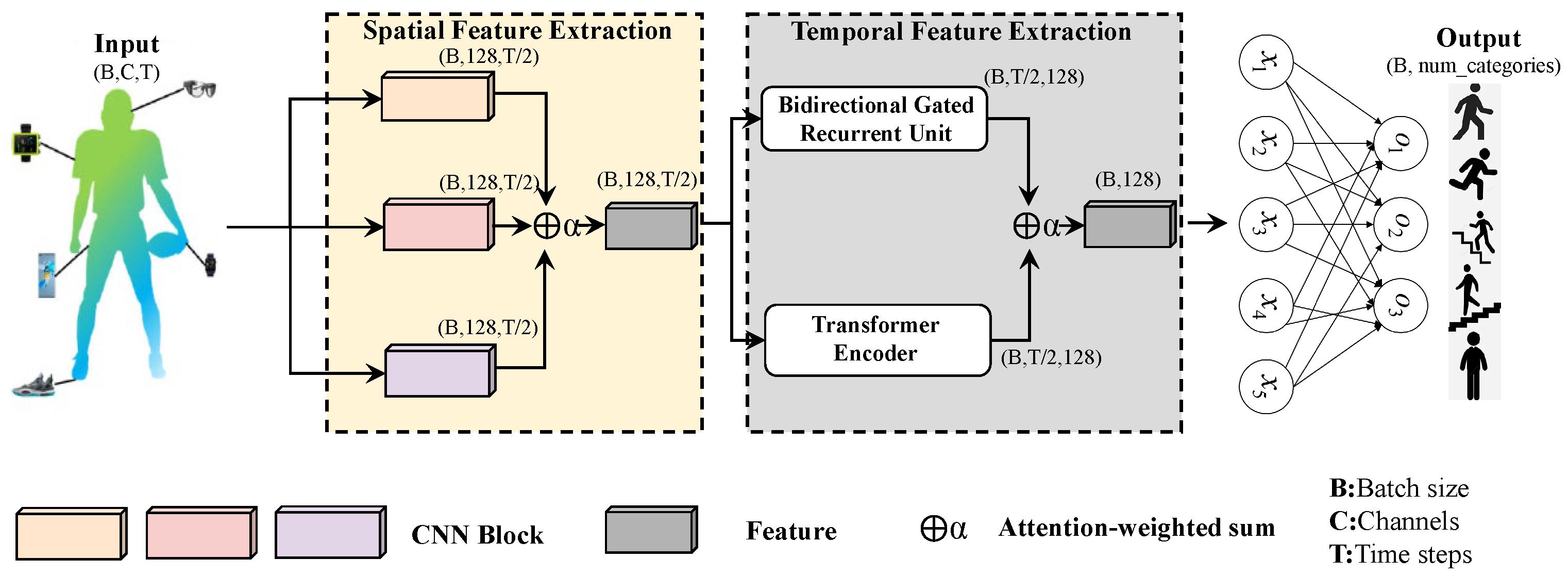

To comprehensively extract spatial features, a multi-branch Convolutional Neural Network module is employed. Unlike previous models, which typically use a single convolution kernel, this model uses three convolutional kernels of different sizes to capture multi-scale local spatial features. This multi-kernel approach enhances the model’s ability to capture a broader range of spatial patterns from the input data.

To effectively extract temporal features from long-term time-series data, the model integrates the strengths of the BiGRU module in modeling short-range dependencies and the Transformer module in capturing long-range dependencies. This hybrid architecture, combining RNN and Transformer, distinguishes it from prior models that typically rely solely on RNNs. The inclusion of the Transformer enables the model to better capture complex, long-term temporal relationships in sensor data, improving its temporal modeling capabilities.

To reduce feature redundancy and emphasize critical information, an attention mechanism is introduced to dynamically weight the extracted features. Unlike prior models that often rely on simple channel stacking, which may result in redundant feature representations, our attention mechanism suppresses unnecessary information and emphasizes the most important features. This approach not only reduces data redundancy but also enhances the classification performance by focusing on the most relevant aspects of the data.

The effectiveness and superiority of the proposed model were evaluated using the WISDM, PAMAP2, and UCI-HAR datasets.

The subsequent sections of the paper are structured as follows: Section 2 reviews related work; Section 3 provides a detailed description of the proposed CNN-BiGRU-Transformer model; Section 4 describes the model setup, including dataset description, data preprocessing, evaluation metrics, and hyperparameter settings; Section 5 presents the evaluation results and provides a detailed analysis; Section 6 discusses the ablation study in detail; finally, Section 7 concludes the paper and outlines possible directions for future research.

2. Related Work

The research methods in the field of HAR have continuously evolved with advancements in technology. This section reviews research related to wearable sensor-based HAR, with a particular focus on deep learning-based approaches and their progress and challenges in feature extraction. Early wearable sensor-based HAR studies primarily employed traditional machine learning methods. Researchers manually designed and extracted time-domain and frequency-domain features from sensor signals, and then used classifiers such as Support Vector Machine, Decision Tree, or K-Nearest Neighbor for activity classification. However, these methods heavily relied on manual feature design, had limited feature representation capabilities, and struggled to fully exploit the deep information within complex sensor data.

With the rise of deep learning, the Convolutional Neural Network was introduced to the HAR field due to its powerful local feature extraction capabilities. Sena et al. [18] proposed a CNN-based approach for human activity recognition, which achieved significant improvements compared to traditional machine learning methods. Wan et al. [19] developed a CNN model for activity classification, and results showed that CNN surpassed Multi-layer Perceptrons and Support Vector Machine in overall recognition accuracy. However, CNN mainly focuses on local spatial features, and its ability to model temporal dependencies in sequential data remains limited.

To address the limitations of CNN in modeling temporal dependencies in sequential data, researchers have incorporated RNN and its variants, such as the Long Short-Term Memory network (LSTM) and the Gated Recurrent Unit (GRU). Wang et al. [20] proposed an LSTM-based HAR classification algorithm, which achieved high recognition accuracy on the UCI-HAR dataset. Li et al. [21] proposed a deep learning model based on residual blocks and Bidirectional Long Short-Term Memory (BiLSTM), which was evaluated on two public datasets, WISDM and PAMAP2.

Simultaneously, researchers have proposed various CNN-RNN hybrid architecture models, which effectively combine the advantages of CNN in spatial feature extraction and RNN in modeling temporal dependencies. In these models, CNN is typically used to extract high-level spatial features and may also perform sequence dimensionality reduction, while RNN is employed to process the reduced feature sequence, modeling the temporal dependencies within the sequence and extracting temporal features. The CNN-LSTM model proposed by Xia et al. [22] demonstrated superior performance on several benchmark HAR datasets. Challa et al. [23] explored the combination of multi-branch CNN and BiLSTM, utilizing CNN branches with different convolution kernel sizes to extract multi-scale local features and employing BiLSTM to extract temporal features from the sequence. Khan et al. [24] proposed a hybrid model combining 1D-CNN and LSTM for the task of transition activity recognition. Albogamy [25] used a hybrid LSTM–GRU architecture and achieved excellent performance on multiple datasets. Although LSTM and GRU are capable of modeling dependencies within sequential data to some extent, their modeling ability and computational efficiency still have considerable room for improvement when dealing with longer data sequences.

In recent years, the Transformer model [16] has achieved tremendous success in the field of natural language processing. Its architecture, based on self-attention mechanisms and parallel computation, has demonstrated remarkable capabilities in modeling long-range dependencies within sequential data. The Transformer has been widely applied in various fields, including computer vision [26], speech processing [27], and time-series analysis [28]. In the context of HAR, the Transformer offers new insights for addressing the challenge of modeling dependencies in long-term time-series data. However, directly applying the standard Transformer to high-dimensional sensor data may lead to prohibitively high computational costs. Some studies have attempted to combine a Transformer with a CNN for dimensionality reduction. For instance, Al-Qaness et al. [29] proposed the PCNN-Transformer model for fall detection.

Furthermore, in order to optimize feature representation and reduce information redundancy, attention mechanisms have been increasingly applied to HAR models [30,31,32]. The attention mechanism assigns different weights to different feature dimensions or time steps, enabling the model to focus on the most important information for the task at hand. Khan et al. [33] enhanced the feature selection capability of the CNN model by incorporating the Squeeze-and-Excitation (SE) attention module. Khatun et al. [34] combined the self-attention mechanism with the CNN-LSTM model, achieving high recognition accuracy on the H-Activity dataset, thereby validating the effectiveness of attention mechanisms in enhancing HAR performance.

Based on existing research, this paper proposes an innovative CNN-BiGRU-Transformer hybrid model that integrates the strengths of each module to more effectively address the human activity recognition problem in long-term wearable sensor time-series data.

3. Proposed Model

The CNN-BiGRU–Transformer model proposed in this paper consists of three main modules. The first module is the multi-branch CNN module, which extracts local spatial features from multi-sensor signals and captures the interrelationships between different sensors, such as changes in acceleration and angular velocity. These patterns effectively represent the local dynamic features of the motion state. The second module is the BiGRU–Transformer, responsible for modeling the dependencies in the temporal data and extracting temporal features. Finally, a fully connected layer is used for classification. This model integrates the advantages of CNN, BiGRU, and Transformer in feature extraction, resulting in more comprehensive feature information, which improves the accuracy and generalization ability of the model. The overall structure of the CNN-BiGRU–Transformer model is shown in Figure 1.

3.1. Spatial Feature Extraction Module

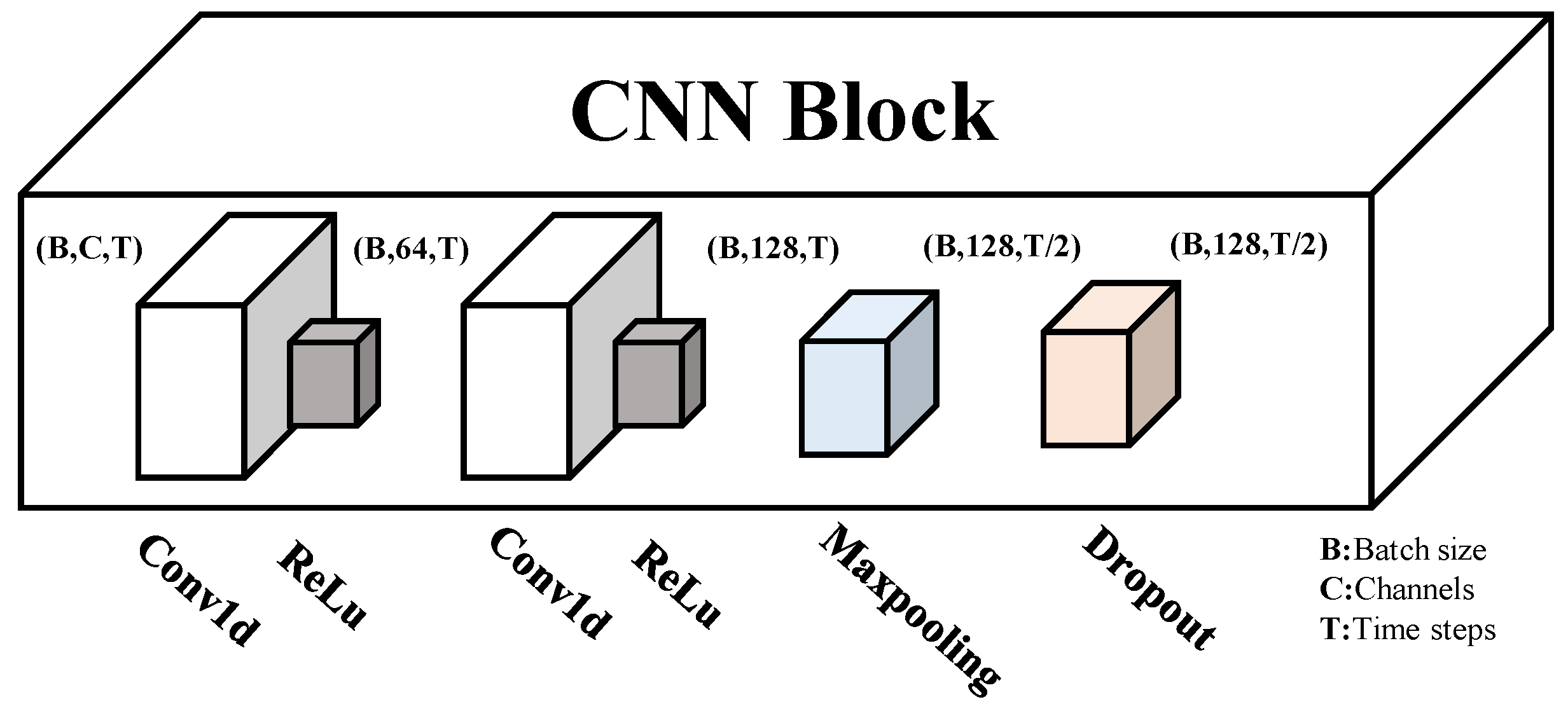

To accommodate different application scenarios, convolutional layers of varying dimensions have been developed, with one-dimensional convolutional layers primarily used for processing time-series data [35]. The proposed model incorporates one-dimensional convolutional layers with three kernel sizes (3, 5, and 7), enabling it to capture features at multiple scales. The CNN block architecture used in this paper is shown in Figure 2. In each branch, two consecutive convolutional operations are applied to the input tensor, followed by max-pooling to reduce the temporal dimensionality of the time-series data. The calculation process is shown in Equations (1)–(3) [36].

Considering the inconsistency of input parameters across the WISDM, UCI-HAR, and PAMAP2 datasets, the proposed model is designed as an adaptive framework capable of handling different input dimensions. These datasets vary in the number and types of sensor channels, ranging from simple tri-axial accelerometer signals to multimodal inertial measurements. To ensure compatibility, all data are uniformly represented as a tensor of shape $T \times C$, where $T$ is the time window length and $C$ the number of sensor channels. All available channels are utilized in the CNN stage, and multi-kernel convolutions (3, 5, 7) are applied to extract multi-scale features. This adaptive design effectively mitigates input inconsistency across datasets and enhances the generalization ability of the model.
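The multi-branch idea can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: it assumes a single channel, fixed averaging kernels in place of learned weights, zero padding that preserves the window length, one convolution per branch rather than two, and a pooling size of 2. The helper names `conv1d_same`, `max_pool1d`, and `multi_branch_features` are hypothetical.

```python
def conv1d_same(signal, kernel):
    """1-D cross-correlation with zero padding so the output length equals the input length."""
    k = len(kernel)
    pad = k // 2  # valid for odd kernel sizes such as 3, 5, and 7
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(padded[t + j] * kernel[j] for j in range(k))
        for t in range(len(signal))
    ]


def max_pool1d(signal, size=2):
    """Non-overlapping max pooling; a trailing remainder shorter than `size` is dropped."""
    return [max(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]


def multi_branch_features(signal, kernel_sizes=(3, 5, 7)):
    """Run one branch per kernel size: convolution, ReLU, then max pooling."""
    branches = {}
    for k in kernel_sizes:
        kernel = [1.0 / k] * k  # stand-in for learned weights (assumption)
        conv = conv1d_same(signal, kernel)
        relu = [max(0.0, v) for v in conv]
        branches[k] = max_pool1d(relu, size=2)
    return branches


toy_window = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]  # T = 8, C = 1
features = multi_branch_features(toy_window)
```

Because every branch keeps the same temporal length after padding and pooling, the branch outputs can later be fused element-wise regardless of kernel size.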

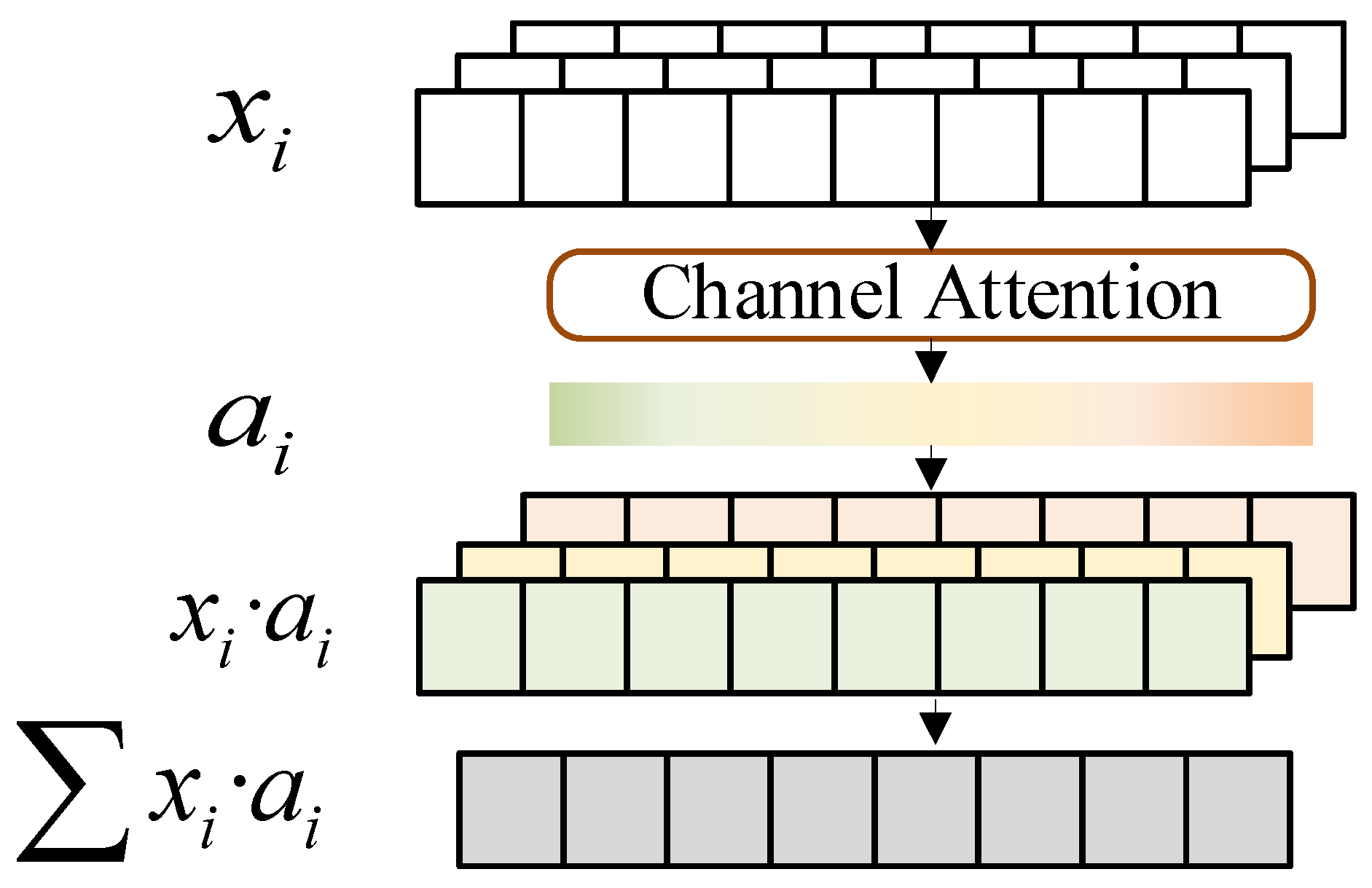

An attention mechanism is employed to perform weighted fusion of features from different branches, allowing the network to adjust weights based on the contributions of each branch. By using different convolutional kernels to capture distinct features, the network leverages weighted fusion to effectively utilize these diverse features, thereby enhancing the model’s overall performance. The application of the channel attention mechanism is shown in Figure 3.

First, average pooling is applied to the features from the three branches to extract the global features of each branch. A fully connected network is then used to generate attention weights for the three branches, which are normalized using the Softmax function. The features of the three branches are subsequently weighted and summed according to the attention weights, emphasizing the contributions of the most important branches. The final calculation process is as follows (Equation (4) [37]):

$$F = \sum_{i=1}^{3} \alpha_i F_i \tag{4}$$

Here, $F_i$ denotes the features of branch $i$, and $\alpha_i$ represents the attention weight corresponding to branch $i$.
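The three steps described above (global average pooling, scoring followed by Softmax, weighted summation) can be sketched as follows. This is an illustrative simplification, not the paper's network: the fully connected scoring network is reduced to one hypothetical scalar weight and bias per branch (`w`, `b`), and the function name `fuse_branches` is an assumption.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_branches(branches, w, b):
    """Fuse equally shaped branch features (each a list of T rows with C floats).

    `w` and `b` are hypothetical per-branch scalars standing in for the
    fully connected scoring network.
    """
    # 1) Global average pooling: one scalar descriptor per branch.
    pooled = [sum(sum(row) for row in f) / (len(f) * len(f[0])) for f in branches]
    # 2) Score each branch and normalise the scores with softmax.
    alphas = softmax([w_i * p + b_i for w_i, p, b_i in zip(w, pooled, b)])
    # 3) Weighted sum of the branch features.
    steps, channels = len(branches[0]), len(branches[0][0])
    fused = [
        [sum(a * f[t][c] for a, f in zip(alphas, branches)) for c in range(channels)]
        for t in range(steps)
    ]
    return fused, alphas


# Toy features for the kernel-3, kernel-5, and kernel-7 branches (T = 2, C = 2).
f3 = [[1.0, 1.0], [1.0, 1.0]]
f5 = [[2.0, 2.0], [2.0, 2.0]]
f7 = [[3.0, 3.0], [3.0, 3.0]]
fused, alphas = fuse_branches([f3, f5, f7], w=[0.0, 0.0, 0.0], b=[0.0, 0.0, 0.0])
```

With all scoring weights set to zero, every branch receives the same attention weight and the fusion reduces to a plain average, which makes a convenient sanity check.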

3.2. Temporal Feature Extraction Module

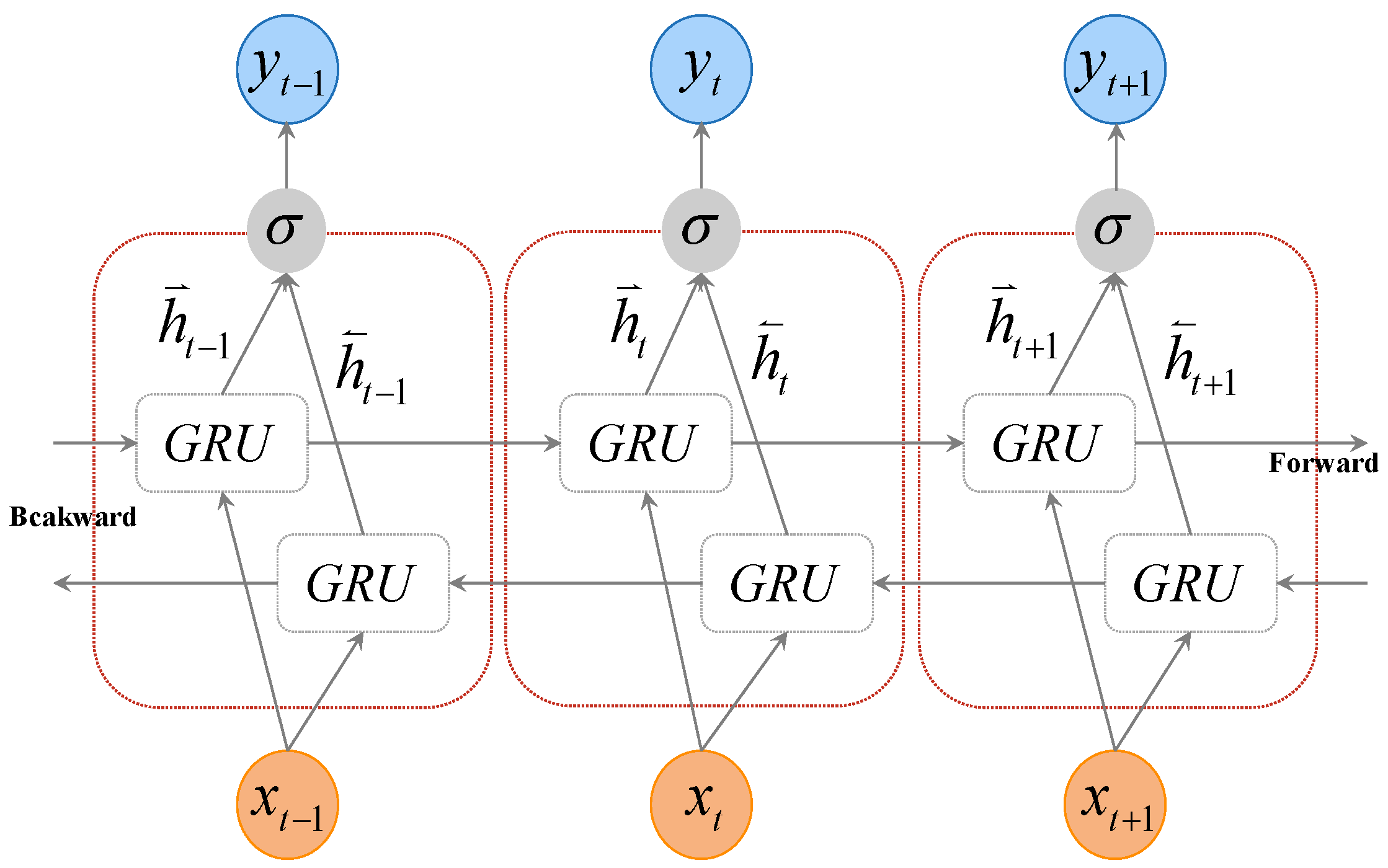

Human activity data is a form of time-series data, for which considering the temporal context is crucial for accurate activity recognition. The BiGRU, as a simplified and efficient variant of Recurrent Neural Networks, offers a more compact structure along with faster computation and training. In this study, BiGRU is employed to model human activity time-series data and capture contextual information within sequences.

By leveraging its bidirectional structure, BiGRU can simultaneously process forward and backward information within sequences, significantly enhancing classification performance. The framework of the BiGRU is shown in Figure 4.
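For reference, a GRU cell is commonly written as shown below. These equations are not listed in the source, so the notation is an assumption: $x_t$ is the input at time step $t$, $h_t$ the hidden state, $W$, $U$, and $b$ are learned parameters, $\sigma$ is the sigmoid function, and $\odot$ is the element-wise product. Some references and libraries swap the roles of $z_t$ and $1 - z_t$ in the state update. The last line shows the usual bidirectional output, which concatenates the forward and backward hidden states.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) \\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t \\
h_t^{\mathrm{bi}} &= \left[\overrightarrow{h}_t \,;\, \overleftarrow{h}_t\right]
\end{aligned}
```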

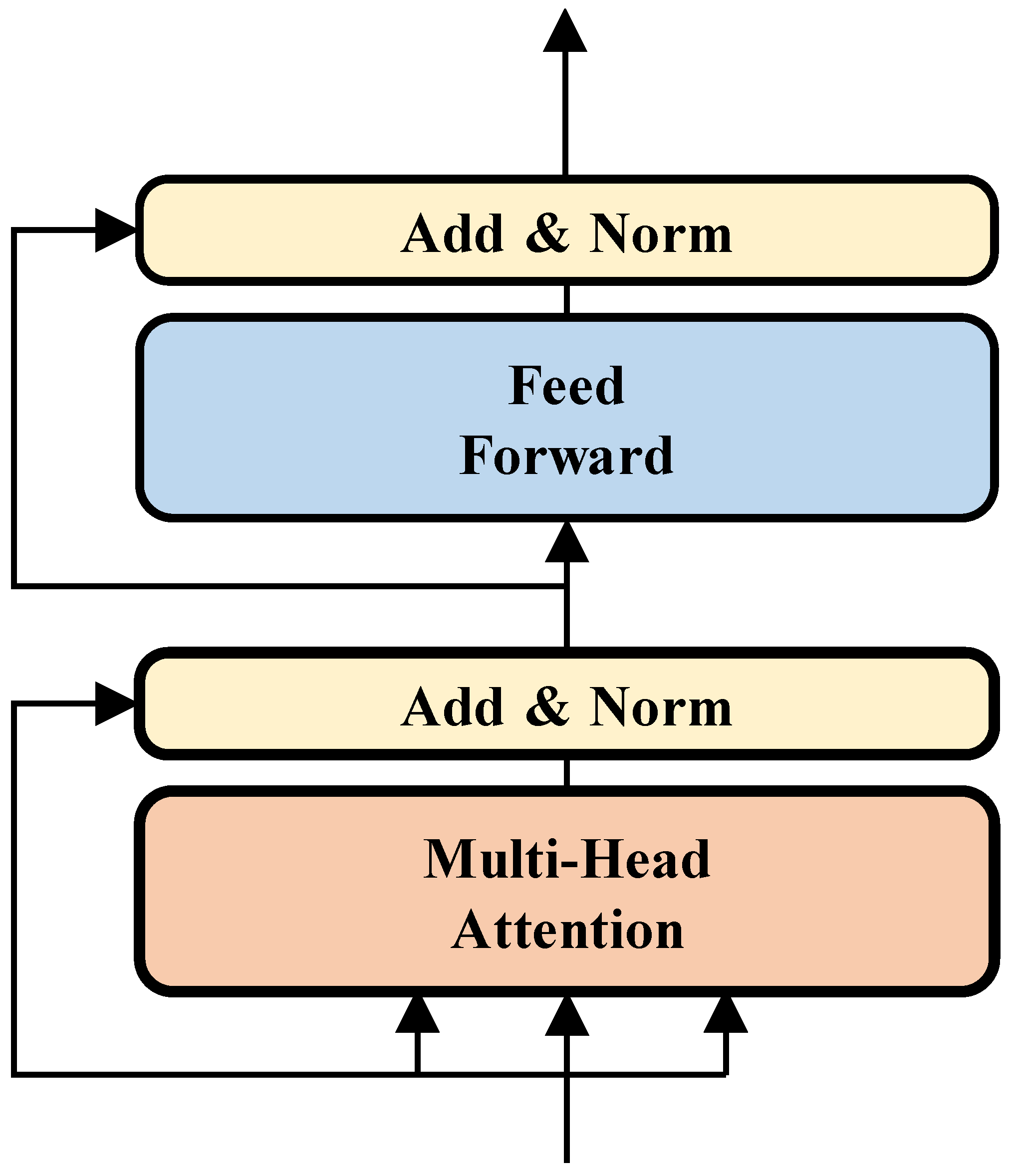

The Transformer model is widely used in natural language processing. However, due to differences in data types and task objectives, certain modifications are necessary when applying it to human activity recognition tasks. In this study, the Encoder module of the Transformer model is employed to construct a time-series model, enabling the extraction of temporal features from human activity data. The design of the Transformer Encoder is shown in Figure 5.

The self-attention mechanism is the core component of the Transformer model. The computation process is as follows (Equation (5) [16]):

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V \tag{5}$$

Here, $Q$, $K$, and $V$ represent the query matrix, key matrix, and value matrix, respectively, while $d_k$ denotes the dimensionality of each vector in the input tensor. The attention matrix is obtained by computing the dot product between $Q$ and $K$, scaling the result, and then applying the Softmax function to calculate the weight of each vector in the input tensor. The multi-head self-attention mechanism is expressed as follows (Equation (6) [16]):

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}\left(\mathrm{head}_1, \ldots, \mathrm{head}_h\right)W^{O} \tag{6}$$

The calculation for each head is as follows (Equation (7) [16]):

$$\mathrm{head}_i = \mathrm{Attention}\left(QW_i^{Q}, KW_i^{K}, VW_i^{V}\right) \tag{7}$$

where $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ represent the linear transformation matrices for each head, and a linear transformation matrix $W^{O}$ is applied after the multi-head concatenation.
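To make the scaled dot-product computation concrete, the following pure-Python sketch evaluates softmax(Q Kᵀ / √d_k) V for a toy sequence. It covers a single head only and uses plain lists instead of a tensor library; the function names are illustrative and do not come from the paper.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(m):
    """Apply a numerically stable softmax to each row."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def scaled_dot_product_attention(q, k, v):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


# Toy self-attention: three time steps, d_k = 2, and Q = K = V = X.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = scaled_dot_product_attention(x, x, x)
```

Each row of `weights` sums to 1 and states how strongly one time step attends to every other time step, which is what allows distant parts of a window to interact in a single layer.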

Another core component of the Transformer encoder is the Feed-Forward Network (FFN), which applies a nonlinear mapping with dimensional expansion to its input. This strengthens the encoder’s ability to capture features of the input sequence, a design that has proved highly effective in natural language processing tasks.
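The source does not give the FFN formula. As a point of reference, the position-wise feed-forward network of the original Transformer [16] has the form below, where $W_1$ expands the feature dimension, $W_2$ projects it back, and the ReLU activation is the choice made in that work; whether the proposed model uses the same activation and inner dimension is an assumption.

```latex
\mathrm{FFN}(x) = \max\left(0,\; xW_1 + b_1\right)W_2 + b_2
```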

The parallel architecture of the Transformer and BiGRU accelerates the model’s training process by improving computational efficiency. Similar to the feature fusion approach used in the multi-branch CNN, features extracted by the Transformer and BiGRU are fused using an attention mechanism. This mechanism selects the optimal feature representations, which improves the final classification performance.

3.3. Classification Module

At the final stage of the model, a fully connected layer is used to integrate the extracted features and perform the final classification. The fully connected layer combines features through linear transformations and nonlinear activation functions. The computation is defined as follows (Equation (8) [38]):

$$z = f\left(Wx + b\right) \tag{8}$$

Here, $x$ is the input feature vector, $f$ is the activation function, $W$ represents the weight matrix, and $b$ denotes the bias vector. At the final classification stage, the output $z$ is passed through the Softmax function to compute the probability of each class; the calculation formula is as follows (Equation (9) [39]):

$$\mathrm{Softmax}(z_i) = \frac{e^{z_i}}{\sum_{k=1}^{K} e^{z_k}} \tag{9}$$

Here, $z_i$ represents the $i$-th element of the output, and $K$ denotes the number of classes.

In the final step, the model provides results based on the highest predicted probability. The calculation process is as follows (Equation (10) [36]):

$$\hat{y} = \arg\max_{i} \, \mathrm{Softmax}(z_i) \tag{10}$$
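The classification stage can be sketched in a few lines of pure Python. The weights, bias, and input below are made-up toy values, the affine layer omits the nonlinear activation for brevity, and the names `dense`, `softmax`, and `predict` are illustrative rather than taken from the paper.

```python
import math


def dense(x, weights, bias):
    """Affine map: one output (logit) per row of `weights`, without activation."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(z):
    """Numerically stable softmax that turns logits into class probabilities."""
    peak = max(z)
    exps = [math.exp(v - peak) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, weights, bias):
    """Return the index of the most probable class and the full probability vector."""
    probs = softmax(dense(x, weights, bias))
    label = max(range(len(probs)), key=probs.__getitem__)
    return label, probs


# Toy example with two input features and three classes.
toy_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
toy_bias = [0.0, 0.0, 0.0]
label, probs = predict([1.0, 2.0], toy_weights, toy_bias)
```

Since Softmax is monotonic, taking the arg max of the probabilities gives the same label as taking the arg max of the logits; the probabilities are still useful when a confidence estimate is needed.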

4. Model Setup

The proposed CNN–BiGRU–Transformer model was implemented in Python using PyTorch and executed on a workstation equipped with an Intel Core i5 CPU and an NVIDIA RTX 4060 Ti GPU. The model was trained and evaluated on the WISDM, PAMAP2, and UCI-HAR datasets. The detailed software and hardware configurations are summarized in Table 1.

4.1. Dataset Description

WISDM [40]: The WISDM dataset was created by the Department of Computer and Information Science at Fordham University, New York, NY, USA, to support research in human activity recognition. The dataset contains 1,098,207 samples, with the proportions of each activity category shown in Table 2. It includes data from 36 participants, each of whom carried a smartphone, and the data were collected using the built-in accelerometer at a sampling frequency of 20 Hz. The WISDM dataset records six distinct activities: walking, jogging, walking upstairs, walking downstairs, sitting, and standing. Walking accounts for the largest proportion at 39%, while standing represents the smallest proportion at 4%. The dataset samples are labeled according to activity type, facilitating human activity classification and recognition tasks. This dataset is widely used in fields such as human activity recognition and health monitoring.

PAMAP2 [41]: The PAMAP2 dataset was developed at the German Research Center for Artificial Intelligence (DFKI), Kaiserslautern, Germany, with the aim of providing high-quality, multidimensional data for human activity recognition research. The dataset records activity data from nine participants using multiple wearable sensors, including a heart rate monitor, accelerometers, and gyroscopes, at a sampling frequency of 100 Hz. PAMAP2 includes 18 different daily activities, such as walking, running, stair climbing, and cycling. The sample distribution for each activity is shown in Table 3.

UCI-HAR [42]: The UCI-HAR dataset is distributed through the Machine Learning Repository of the University of California, Irvine. It contains sensor data recorded from a smartphone placed on the waist of 30 volunteers performing daily activities. The dataset includes a total of 10,299 samples, and the distribution of activity categories is shown in Table 4. The signals were recorded using a built-in accelerometer and gyroscope at a sampling frequency of 50 Hz. The dataset comprises six activities: walking, walking upstairs, walking downstairs, sitting, standing, and lying. Among these categories, lying accounts for the highest proportion at 19.5%, while walking downstairs exhibits the lowest proportion at 13.7%. The dataset provides carefully labeled activity samples and is widely used for human activity recognition, wearable computing research, and mobile health monitoring applications.

4.2. Dataset Preprocessing

The datasets used in this study were collected in real-world scenarios and contain incomplete, inconsistent, imbalanced, and noisy data as well as outliers, so preprocessing is required before further analysis. The preprocessing pipeline mainly includes handling missing values, data segmentation, and normalization.

The sensor time series data from all three datasets are segmented using a sliding-window approach. For the WISDM and PAMAP2 datasets, window sizes of 200 and 171 sample points are used, respectively, whereas the UCI-HAR dataset follows its standard protocol with a window size of 128 sample points. A 50% overlap is applied across all datasets to improve data utilization and preserve temporal continuity at window boundaries. After segmentation, the samples are divided into training, validation, and test sets according to a 7:2:1 ratio. Specifically, the WISDM dataset yields 10,709 samples (7496 training, 2142 validation, 1071 testing), the PAMAP2 dataset yields 6038 samples (4227 training, 1207 validation, 604 testing), and the UCI-HAR dataset yields 10,299 samples (7209 training, 2060 validation, 1030 testing). The training set is used for model optimization, the validation set for hyperparameter tuning and ablation experiments, and the test set for final model evaluation, ensuring a balanced distribution and sufficient samples for reliable performance assessment.
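The segmentation and split sizes described above can be sketched as follows. This is an illustration under stated assumptions: incomplete trailing windows are dropped, the split function only computes set sizes (the source does not say whether the split is random or stratified), and the rounding rule, with the validation set receiving the remainder, is chosen because it reproduces the sample counts reported in the text. The function names are hypothetical.

```python
def sliding_windows(samples, window_size, overlap=0.5):
    """Split a sequence into fixed-length windows; incomplete tails are dropped."""
    step = max(1, int(window_size * (1 - overlap)))
    return [
        samples[start:start + window_size]
        for start in range(0, len(samples) - window_size + 1, step)
    ]


def split_counts(n, ratios=(7, 2, 1)):
    """Return (train, val, test) sizes; the validation set absorbs rounding leftovers."""
    total = sum(ratios)
    train = round(n * ratios[0] / total)
    test = round(n * ratios[2] / total)
    return train, n - train - test, test


# Toy signal of 10 samples, window of 4 samples, 50% overlap (step of 2).
windows = sliding_windows(list(range(10)), window_size=4)

# Split sizes for the window counts reported for WISDM, PAMAP2, and UCI-HAR.
sizes = {name: split_counts(n) for name, n in
         [("WISDM", 10709), ("PAMAP2", 6038), ("UCI-HAR", 10299)]}
```

When windows overlap, neighbouring windows share raw samples, so splitting after segmentation can place near-duplicate windows in different sets; splitting by subject or by recording is a common way to avoid this if stricter evaluation is needed.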

Because the data originate from heterogeneous sensors with different measurement ranges, normalization is performed to scale all features to a consistent range. This step is crucial for stabilizing and accelerating the training of deep learning models, as it improves the efficiency and convergence behavior of gradient-based optimization algorithms.
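The source does not state which normalization is used. The sketch below assumes per-channel min-max scaling to [0, 1], with the statistics fitted on the training set only and then reused for the validation and test sets; z-score standardization would be an equally plausible choice. The function names are hypothetical.

```python
def fit_min_max(train_rows):
    """Compute per-channel (min, max) from training rows of equal length."""
    columns = list(zip(*train_rows))
    return [(min(col), max(col)) for col in columns]


def apply_min_max(rows, stats):
    """Scale each channel with the training statistics; constant channels map to 0."""
    scaled = []
    for row in rows:
        scaled.append([
            (v - lo) / (hi - lo) if hi > lo else 0.0
            for v, (lo, hi) in zip(row, stats)
        ])
    return scaled


# Two channels with very different ranges, e.g. acceleration and heart rate.
train = [[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]]
stats = fit_min_max(train)
train_scaled = apply_min_max(train, stats)
```

Values in the validation or test set that fall outside the training range will map outside [0, 1]; this is expected when the statistics are fitted on the training data alone.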

4.3. Evaluation Metrics

The activity classes in the datasets are imbalanced, with certain activities having significantly higher proportions than others. Therefore, relying solely on overall recognition accuracy cannot fully reflect the algorithm’s performance. To address this, multiple evaluation metrics, including accuracy, precision, recall, F1 score, and the confusion matrix, were used to comprehensively evaluate the model. The calculation formulas are shown in Equations (11)–(14) [43].
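As an illustration of how these metrics relate to the confusion matrix, the sketch below computes accuracy together with macro-averaged precision, recall, and F1 score from integer class labels. Macro averaging is an assumption, since the source does not state the averaging scheme, and the function name is hypothetical.

```python
def classification_metrics(y_true, y_pred, num_classes):
    """Return accuracy and macro-averaged precision, recall, and F1."""
    # confusion[i][j] counts samples of true class i predicted as class j.
    confusion = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        confusion[t][p] += 1

    precisions, recalls, f1s = [], [], []
    for c in range(num_classes):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(num_classes)) - tp
        fn = sum(confusion[c]) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    correct = sum(confusion[c][c] for c in range(num_classes))
    return {
        "accuracy": correct / len(y_true),
        "precision": sum(precisions) / num_classes,
        "recall": sum(recalls) / num_classes,
        "f1": sum(f1s) / num_classes,
        "confusion": confusion,
    }


# Toy example with two classes and one misclassified sample.
metrics = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
```

Macro averaging weights every class equally, so a rare activity such as standing in WISDM influences the score as much as a frequent one such as walking.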

4.4. Hyperparameter Evaluation

Hyperparameters play a crucial role in the performance of deep learning models. Identifying the optimal set of hyperparameters resembles searching for the best solution among numerous possibilities, which is often challenging. Thus, an iterative approach is adopted in this study, adjusting various hyperparameter configurations to determine the optimal combination. The evaluation of hyperparameters is based on the WISDM dataset. The model is first trained on the training set, and then evaluated using the validation set to obtain corresponding results. Based on these results, the model’s hyperparameters are assessed, with a focus on the following key aspects.

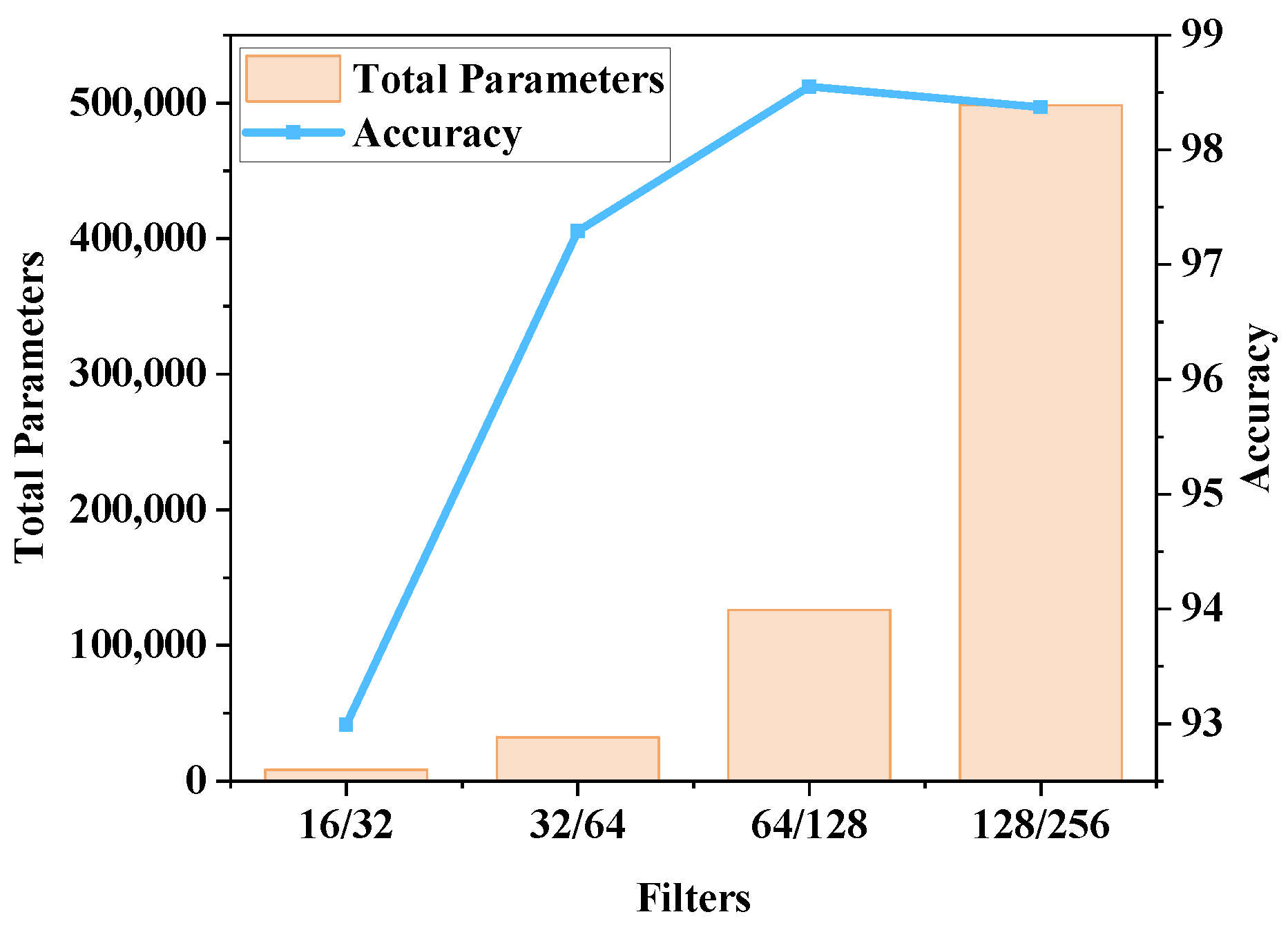

4.4.1. Number of Filters in Convolutional Layers

The number of filters in the convolutional layers determines the model’s feature extraction ability. If the number of filters is too small, the model cannot extract sufficient features, leading to poor recognition performance. Conversely, if the number of filters is too large, the feature extraction ability improves, but the model’s complexity increases. Therefore, finding a balanced number of filters is crucial to ensuring high performance while minimizing unnecessary computational overhead. In this study, four filter configurations were tested for the first and second convolutional layers: 16/32, 32/64, 64/128, and 128/256. By comparing the parameter count and recognition accuracy for each configuration, the optimal combination was identified. As shown in Figure 6, the 64/128 combination achieves high recognition accuracy while maintaining relatively low model complexity.
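The growth in complexity can be estimated with a short calculation. The sketch below counts only the weights and biases of the convolutional layers, assuming three branches (kernel sizes 3, 5, and 7), two stacked convolutions per branch, and three input channels as in a tri-axial accelerometer. It ignores the BiGRU, Transformer, attention, and classifier parameters, so the totals are not the figures reported in Figure 6.

```python
def conv1d_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of a standard 1-D convolution layer."""
    return in_channels * out_channels * kernel_size + out_channels


def cnn_module_params(in_channels, filters, kernel_sizes=(3, 5, 7)):
    """Total parameters of the branches, each with two stacked convolutions."""
    first, second = filters
    return sum(
        conv1d_params(in_channels, first, k) + conv1d_params(first, second, k)
        for k in kernel_sizes
    )


configs = [(16, 32), (32, 64), (64, 128), (128, 256)]
counts = {filters: cnn_module_params(3, filters) for filters in configs}
for filters, total in counts.items():
    print(f"{filters[0]}/{filters[1]} filters: {total} convolution parameters")
```

Because the second layer's weight count scales with the product of both filter counts, doubling the filters roughly quadruples the convolutional parameters, which is why the largest configuration is costly relative to its accuracy gain.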

4.4.2. Batch Size

The batch size determines the amount of data used for each model update. A smaller batch size may lead to unstable updates, whereas a larger batch size can slow down convergence and increase training difficulty. To identify the optimal batch size for the model, evaluations were conducted using different settings (32, 64, 128, and 256). The evaluation results are presented in Figure 7, where it can be observed that a batch size of 128 achieves the highest recognition accuracy.

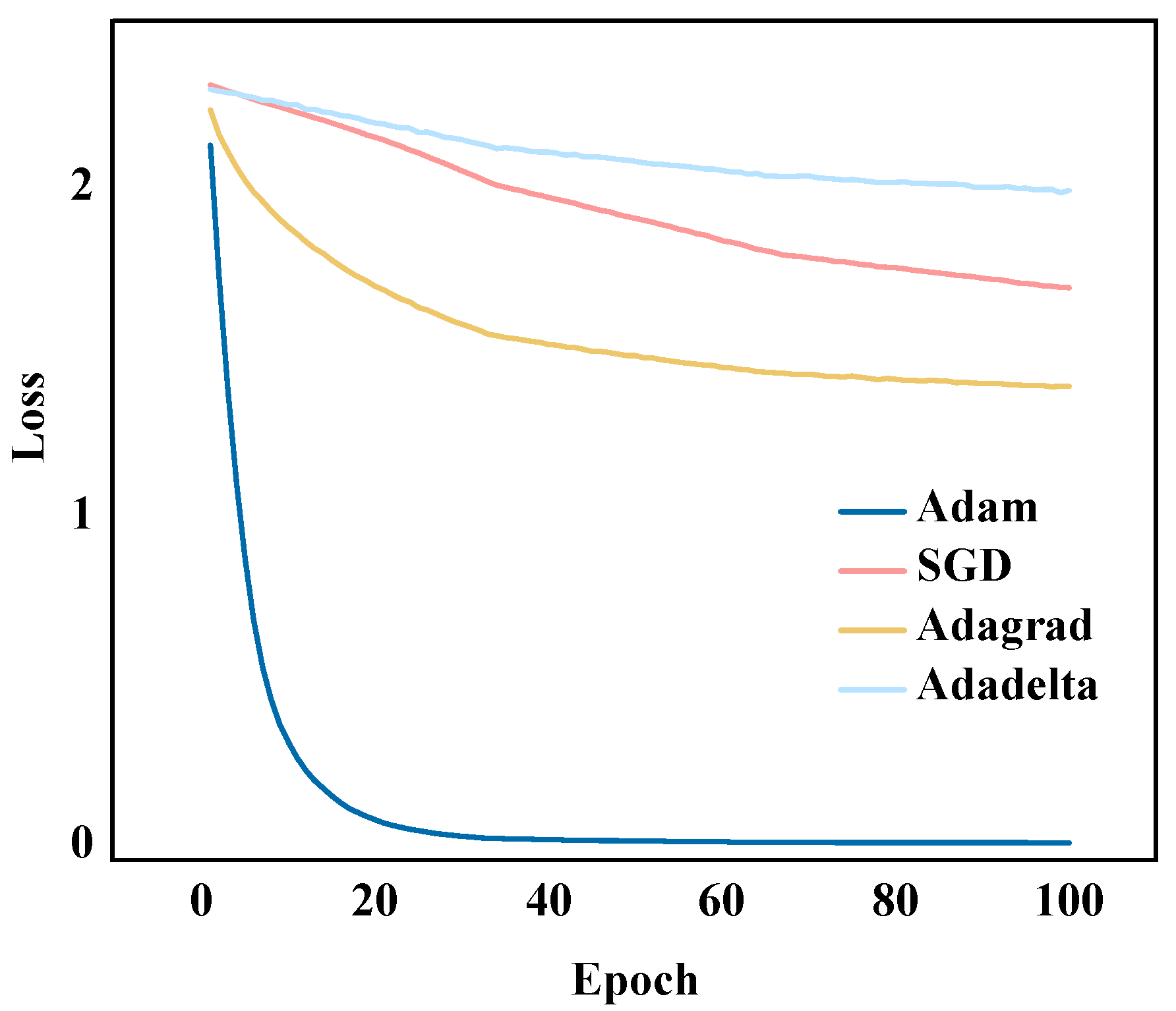

4.4.3. Choice of Optimizer

The optimizer is a crucial factor affecting both the training performance and convergence speed of the model. Different optimizers (such as Adam, SGD, and Adagrad) can have a significant impact on model performance. In this study, commonly used optimizers were evaluated and compared to determine the most suitable choice for the model. The evaluation results are presented in Figure 8, where it can be observed that the Adam optimizer achieves the highest training efficiency.

The final set of hyperparameters adopted in this study is summarized in Table 5. Based on these settings, we further evaluate the computational efficiency of the proposed model. As shown in Table 6, the CNN–BiGRU–Transformer model achieves high performance with a relatively small number of trainable parameters and floating-point operations (FLOPs) while maintaining millisecond-level inference times per sample on a PC-grade GPU across the benchmark datasets. These results demonstrate the high computational efficiency of the model and suggest that it has promising potential for real-time human activity recognition in practical applications.

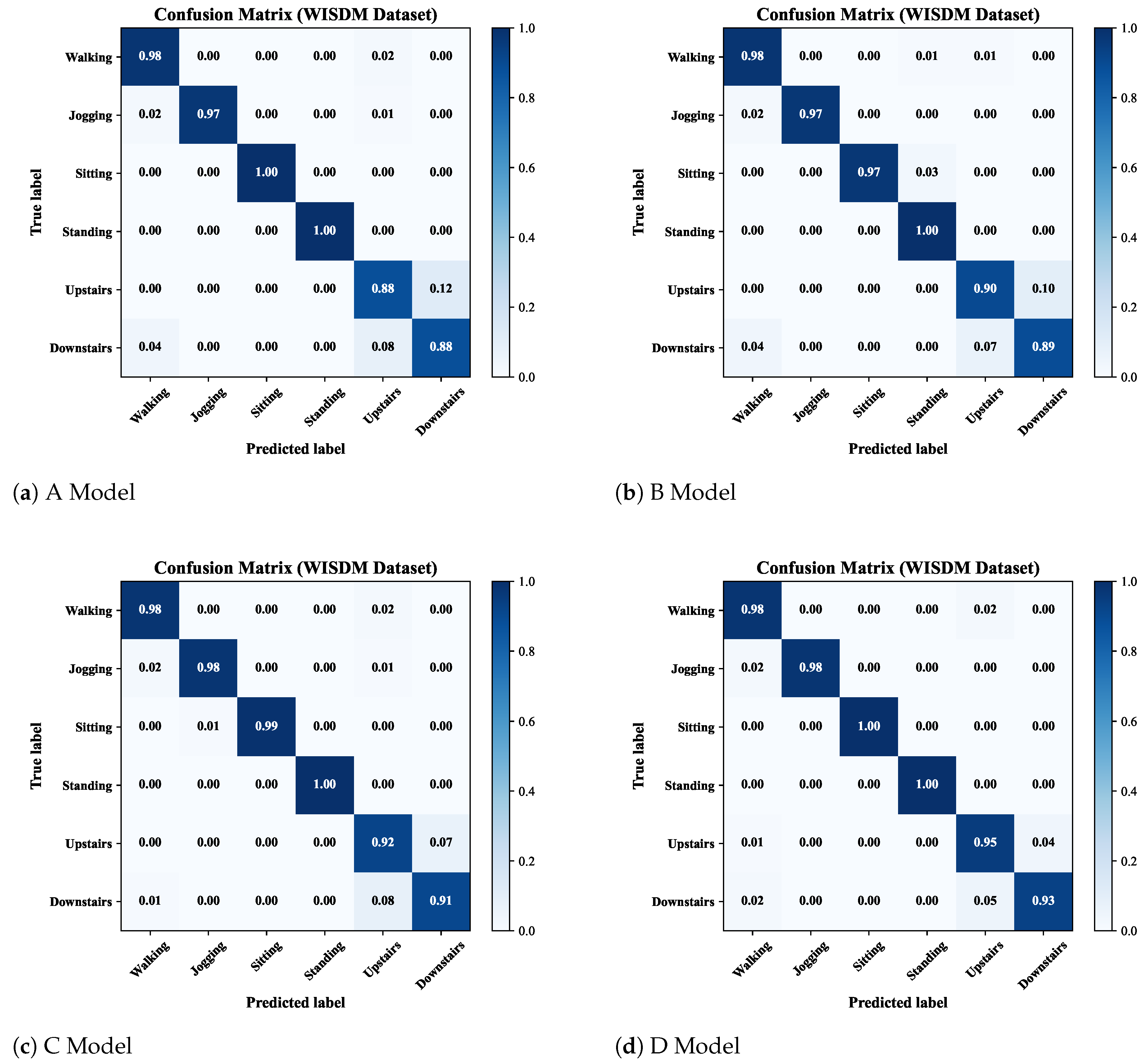

6. Ablation Study

To evaluate the impact of different modules on the overall model performance, four comparative models were designed and compared with the final proposed CNN-BiGRU–Transformer model. All models were trained on the training set of the WISDM dataset and evaluated on the validation set. Specifically, Model A contains only the three-branch CNN for spatial feature extraction, without any temporal modeling. Model B extends Model A by introducing a BiGRU module to capture local temporal dependencies. Model C incorporates a Transformer encoder on top of the CNN to capture global temporal dependencies, without including the BiGRU module or attention mechanism. Model D further extends Model B by combining both BiGRU and Transformer modules to jointly capture short- and long-term temporal dependencies, but without the attention mechanism. Finally, the proposed model integrates all modules, including the attention mechanism, to adaptively fuse the extracted spatial and temporal features.

As shown in Table 10, different combinations of modules have a significant impact on model performance. In this table, Acc., Prec., Rec., and F1 denote accuracy, precision, recall, and F1-score, respectively. Model A, which relies solely on CNN for spatial feature extraction without temporal modeling, achieves an accuracy of 94.35%, demonstrating relatively limited performance. With the addition of the BiGRU module, Model B effectively captures local temporal dependencies, improving the accuracy to 94.48% and the F1 score to 94.51%. Model C, which incorporates the Transformer to model global temporal dependencies but excludes both the BiGRU and attention mechanisms, achieves further improvements, reaching an accuracy of 96.61% and an F1 score of 96.65%. Moreover, Model D combines the BiGRU and Transformer modules, enabling the network to jointly capture both short- and long-term dependencies, achieving an accuracy of 97.51% and an F1 score of 97.53%. Finally, the proposed CNN–BiGRU–Transformer model, equipped with an attention mechanism, attains the best overall performance, with an accuracy of 98.56% and an F1 score of 98.47%, confirming that the integration of multi-branch CNN, BiGRU, Transformer, and attention effectively enhances both spatial–temporal feature representation and classification robustness.

To further verify the generalizability of the module design, the same ablation experiments were also repeated on the PAMAP2 dataset, and the detailed results are provided in the Supplementary Materials (see Figure S1 and Table S1). For the UCI-HAR dataset, since its activity categories and data characteristics are highly similar to those of the WISDM dataset, the ablation experiments were not repeated.

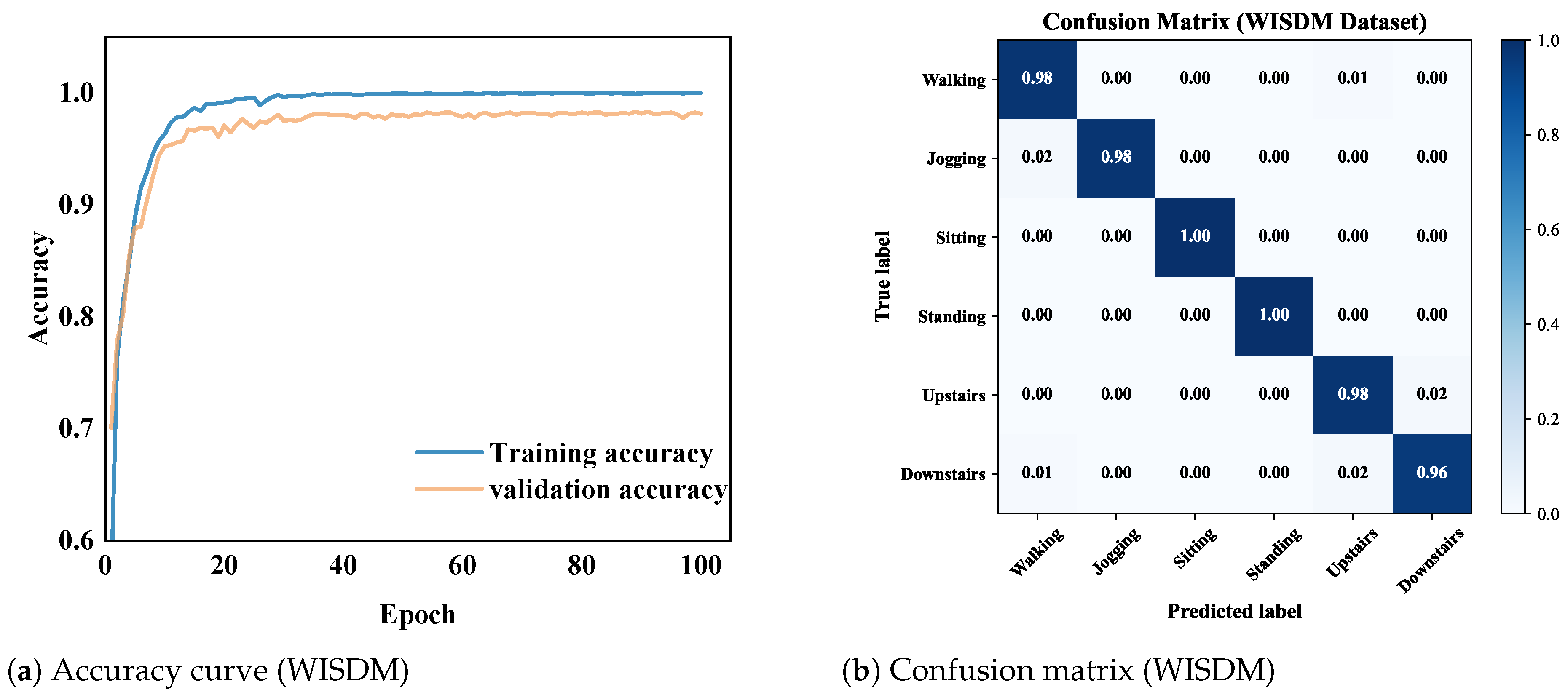

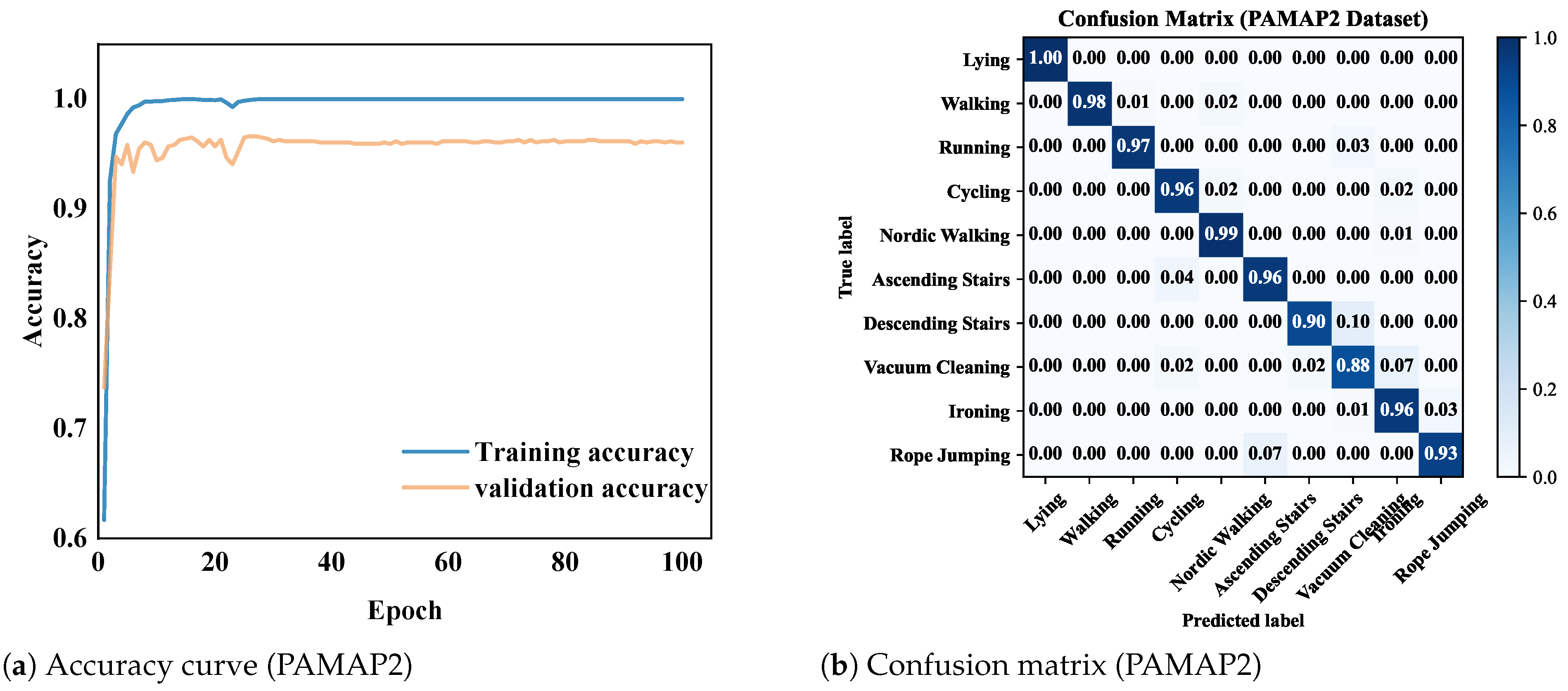

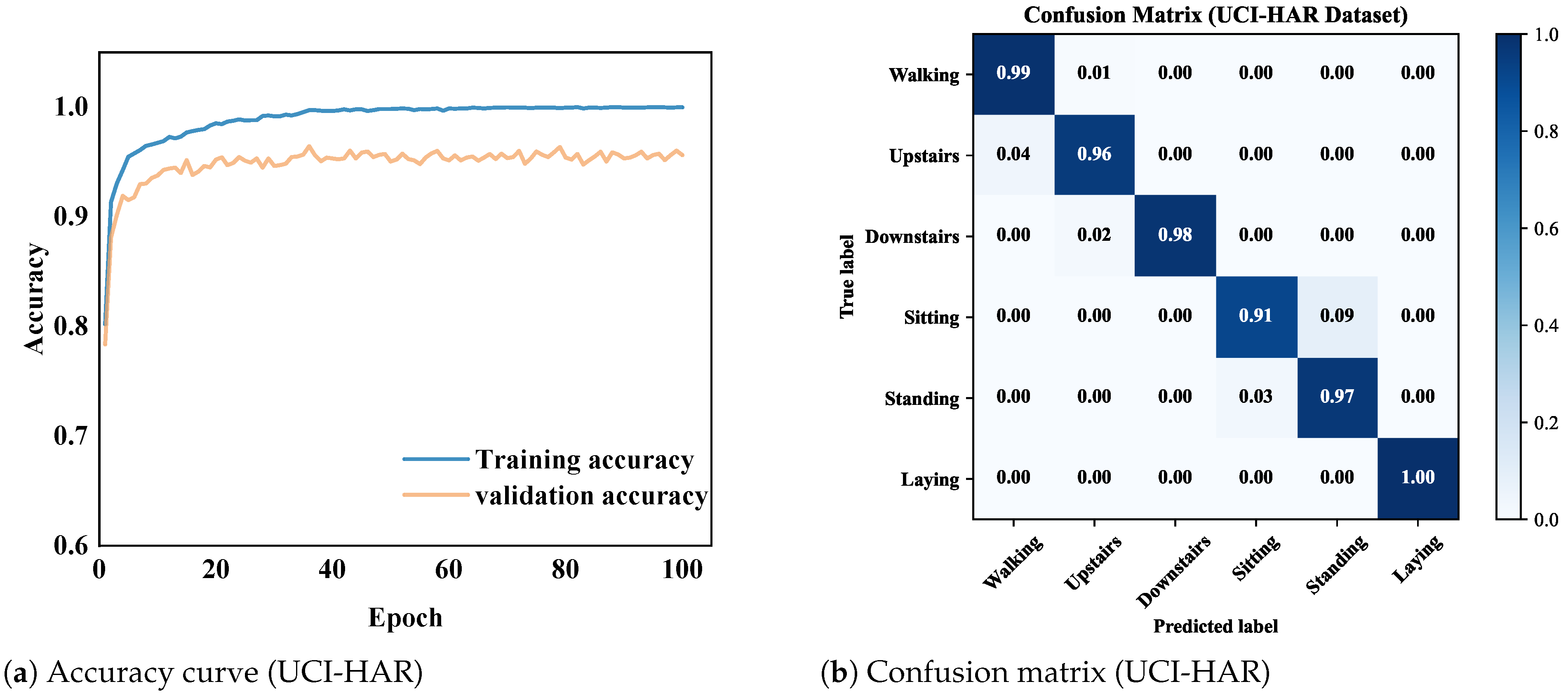

The classification performance of each model can be further observed from the confusion matrices in Figure 12. Model A performs reasonably well in static activities such as sitting and standing but exhibits significant confusion in dynamic activities such as upstairs and downstairs, with an accuracy of only 88%. After introducing the BiGRU module, Model B improves the recognition accuracy for upstairs and downstairs activities to 90% and 89%, respectively, demonstrating enhanced capability in modeling local temporal dependencies. Model C, which incorporates the Transformer to capture global temporal dependencies, further improves the recognition accuracy for upstairs and downstairs to 92% and 91%, respectively. Model D, which combines both the BiGRU and Transformer modules, captures both local and global temporal dependencies, resulting in an even higher accuracy for upstairs and downstairs activities at 95% and 93%, respectively. Finally, our proposed model, which incorporates attention into the outputs of the BiGRU and Transformer, achieves the best overall performance, with accuracies reaching 98% for upstairs and 96% for downstairs, demonstrating the effectiveness of attention in enhancing both spatial–temporal feature representation and classification robustness.

7. Conclusions

This paper proposes an attention-based CNN–BiGRU–Transformer model for human activity recognition using wearable sensor data. By combining CNN for local spatial feature extraction with BiGRU and Transformer for modeling short- and long-range temporal dependencies, and further incorporating an attention mechanism to emphasize informative patterns, the model effectively exploits the spatial–temporal structure of multichannel time-series signals.

Experiments on three benchmark datasets demonstrate the effectiveness of the proposed approach. The CNN–BiGRU–Transformer model achieves recognition accuracies of 98.41% on WISDM, 95.62% on PAMAP2, and 96.74% on UCI-HAR. Compared with traditional machine learning methods, it substantially reduces the need for manual feature engineering by automatically learning discriminative representations from raw data. In comparison with existing deep learning models, it provides competitive or superior accuracy while maintaining a relatively small number of trainable parameters, moderate FLOPs, and millisecond-level inference times per sample on a PC-grade GPU.

Future work will focus on developing lightweight and deployable variants of the proposed model for wearable and mobile platforms, for example, through model compression, pruning, and knowledge distillation, as well as incorporating personalized data and adaptive learning strategies to improve robustness across different users and real-world environments. In addition, although this study concentrates on human activity recognition, the underlying spatial–temporal modeling framework is also relevant to other domains that involve complex temporal and spatial patterns, such as grazing behavior recognition in agricultural cyber–physical systems based on triaxial accelerometer data [51], sustainable vehicle routing under uncertainty using time-dependent data-driven models [52], and few-shot industrial defect detection with multi-scale feature representations and memory mechanisms [53]. Exploring these directions would further validate the generality of the proposed architecture and extend its applicability to a broader range of engineering and industrial applications.

Moreover, differences in input parameter configurations across datasets (such as variations in sensor modalities, channel dimensionality, and sampling characteristics) can influence the model’s performance and generalization behavior. Since the proposed framework processes all available sensor channels in a unified end-to-end manner and employs attention mechanisms to adaptively highlight informative features, it is inherently capable of handling such heterogeneity. Nonetheless, conducting a more systematic examination of how different input parameter settings affect model robustness would be a meaningful direction for future research, particularly when deploying the framework across diverse sensing platforms and real-world environments.